Abstract

Tea is an important but vulnerable economic crop in East Asia, highly impacted by climate change. This study attempts to interpret tea land use/land cover (LULC) using very high resolution WorldView-2 imagery of central Taiwan with both pixel and object-based approaches. A total of 80 variables derived from each WorldView-2 band with pan-sharpening, standardization, principal components and gray level co-occurrence matrix (GLCM) texture indices transformation, were set as the input variables. For pixel-based image analysis (PBIA), 34 variables were selected, including seven principal components, 21 GLCM texture indices and six original WorldView-2 bands. Results showed that support vector machine (SVM) had the highest tea crop classification accuracy (OA = 84.70% and KIA = 0.690), followed by random forest (RF), maximum likelihood algorithm (ML), and logistic regression analysis (LR). However, the ML classifier achieved the highest classification accuracy (OA = 96.04% and KIA = 0.887) in object-based image analysis (OBIA) using only six variables. The contribution of this study is to create a new framework for accurately identifying tea crops in a subtropical region with real-time high-resolution WorldView-2 imagery without field survey, which could further aid agriculture land management and a sustainable agricultural product supply.

1. Introduction

In recent years, due to global warming, many regions of the Earth have suffered from drastic climate change, which has increased the frequency of extreme climate events. Not only does this cause certain inconveniences for the lives of human beings, but it also threatens our lives and property. The growth of crops has an intimate relationship with the climate, so any climatic change could impact the amount and the quality of crop production [1,2,3,4,5,6,7,8,9,10,11]. In the past, in order to control the production surface area and the amount of production, governments would generally monitor the sensitive crops with prices that fluctuate according to the weather, disasters, or public preferences so as to prevent price collapses, which could cause financial damage to farmers. Therefore, it is important to have a comprehensive understanding of the acreage of crop production and the market situation of various crops in order to propose important contingency measures in response to the economic losses and potential food crises caused by extreme climate phenomena. In the sub-tropical region in Taiwan, for example, tea is a sensitive crop of high economic value and a featured export product. About 3306 tons of teas of various special varieties are exported annually, and the number is still growing. According to the United Nations Food and Agriculture Organization (FAO), the global production of tea grew from 3.059 million tons in 2001 to 4.162 million tons in 2010, while the global area planted with tea grew from 2.41 million hectares in 2001 to 3.045 million hectares in 2010. This is certainly an amazing growth rate, and many studies conducted in different countries found that global warming would result in tea production losses [12,13,14,15], so adopting effective measures for productivity control is necessary.

Timely and accurate LULC interpretation and dynamic agriculture structure monitoring are the key points of agricultural productivity control to ensure a sustainable food supply [16,17,18,19,20,21,22]. In actual operation, on-site investigation and orthogonal photograph interpretation are two methods widely used, especially by government agencies, to obtain information about tree characteristics and crop species composition. However, on-site investigation requires a lot of time and manpower and even though the working process is very detailed and precise, the ineffective investigation results in incomplete, unstable and sporadic LULC products. Aerial photograph interpretation and vector digitizing on GIS platforms (e.g., ArcGIS, MapInfo, QGIS) is also time-consuming and expensive for large crop mapping and classification. Besides, it is easier for the researchers to obtain extensive LULC information with high temporal resolution by using satellite imagery rather than aerial photographs or other methods. This information is fundamental for agricultural monitoring because the farmer compensation and the recognition of production losses are highly time-dependent. Therefore, daily transmission satellite imagery could be a very useful tool for LULC mapping.

However, with moderate-resolution satellite imagery (e.g., SPOT 4 and SPOT 5 provided by Airbus Defence and Space), the ability to accurately interpret individual stands in highly heterogeneous farms, or the shape characteristics of single canopies, is limited by the relatively low spatial resolution [23,24,25,26,27,28,29,30,31]. Over the last few years, the spectral, spatial and temporal resolution of satellite imagery has improved rapidly and substantially (e.g., Formosat-2 offers multispectral images at 8 m resolution and panchromatic images at 2 m resolution). For some urban and flat areas, individual stands, such as a tree or a shrub, can be easily distinguished by using very high resolution (VHR) imagery [32,33,34,35]. This provides opportunities to differentiate farm and crop types. High spatial resolution satellite imagery has thus become a cost-effective alternative to aerial photography for distinguishing individual crops or tree shapes [36,37,38,39,40,41,42,43,44,45,46]. For example, WorldView-2 images and a support vector machine (SVM) classifier have been used to identify three dominant tree species and canopy gap types (accuracy = 89.32.1%) [47]. A previous study applied a reproducible geographic object-based image analysis (GEOBIA) methodology to locate and delineate tree crowns in urban areas using high resolution imagery, with identification rates of 70% to 82% [36]. QuickBird image classification with GEOBIA has also proved useful for identifying urban LULC classes, with a high overall accuracy (90.40%) [32].

Apart from the impact of satellite imagery resolution, image segmentation methods and classification algorithms also play essential roles in LULC classification workflows. In early years, many studies focused on developing pixel-based image analysis (PBIA) by taking advantage of VHR image characteristics [48,49,50,51,52,53,54], but recent studies mapping vegetation composition or individual features have demonstrated the advantages of object-based image analysis (OBIA) [55,56,57,58,59,60,61,62]. In OBIA, researchers first convert the original pixel image into object units based on specific arrangements or image characteristics, which is called image segmentation, and then assign a category to every object unit by using classifiers. OBIA can overcome deficiencies of PBIA, such as the salt and pepper effect, and has therefore become one of the most popular workflows. For example, Ke and Quackenbush classified five tree species (spruce, pine, hemlock, larch and deciduous) in a sampled area of New York (USA) by applying object-based image analysis to QuickBird multispectral imagery and obtained an average accuracy of 66% [63]. Pu and Landry reported a significantly higher classification accuracy for five vegetation types (including broad-leaved, needle-leaved and palm trees) using object-based IKONOS image analysis [39]; the results were much better than those of pixel-based approaches. Kim et al. obtained an overall accuracy 13% higher with object-based image analysis by using an optimal segmentation of IKONOS image data to identify seven tree species/stands [64]. Ghosh and Joshi compared several classification algorithms for mapping bamboo patches using very high resolution WorldView-2 imagery, and achieved 94% producer accuracy with an object-based SVM classifier [65].

Based on the abovementioned reasons and background, the main objectives of this study are: (1) to investigate the classification accuracy of a combined workflow applied to WorldView-2 imagery to identify tea crop LULC; (2) to compare the capabilities of several common LULC classification algorithms (maximum likelihood (ML), logistic regression (LR), random forest (RF) and support vector machine (SVM)) in the pixel-based and object-based domains for identifying tea LULC; and (3) to explore the potential of WorldView-2 imagery with high spatial, spectral and temporal resolution for tea crop LULC classification; the details of image segmentation and feature extraction are also discussed. The main content of this paper is divided into four major sections. Section 1 and Section 2 introduce the study, the on-site investigation and satellite imagery data sets, and the feature extraction and classification frameworks developed for this study. Section 3 contains the main findings and breakthroughs of this study, followed by their discussion. The final part consists of the conclusions.

2. Materials and Methods

2.1. Study Area and Workflow

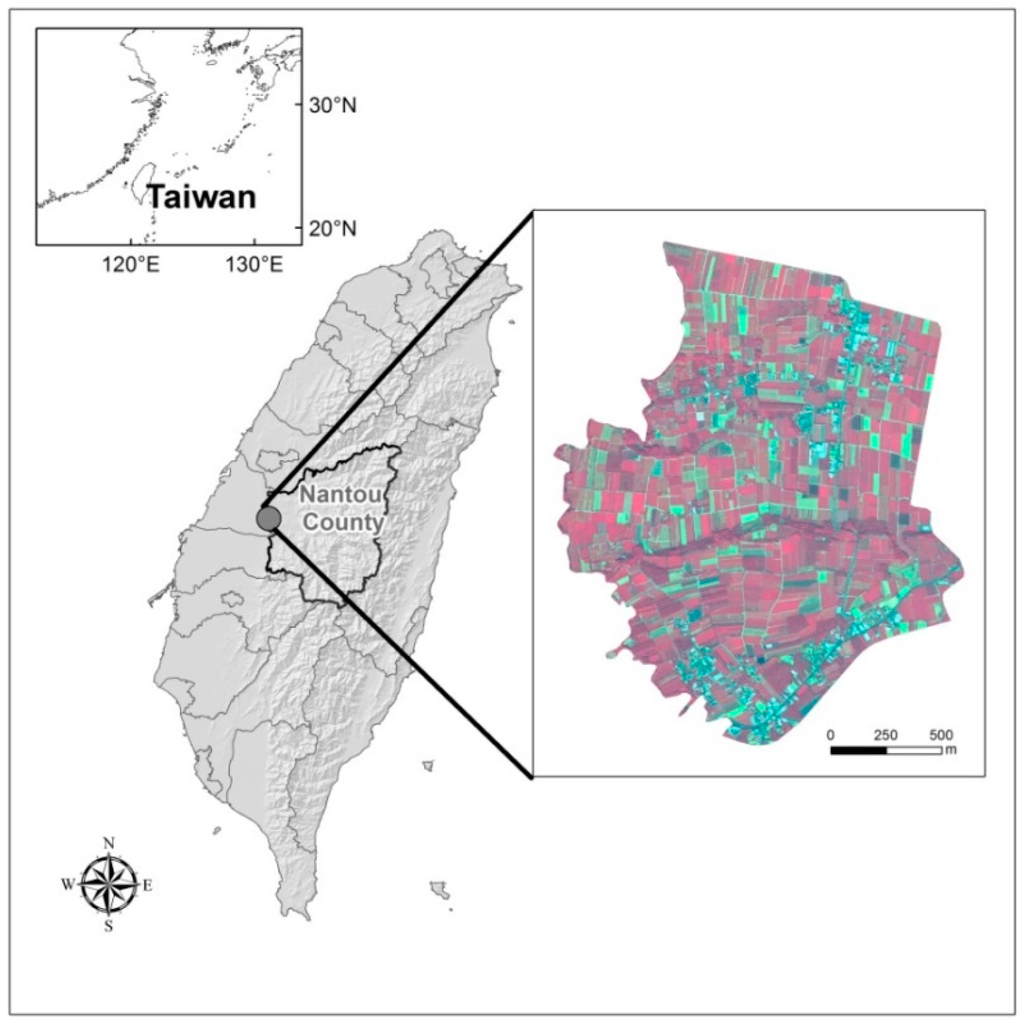

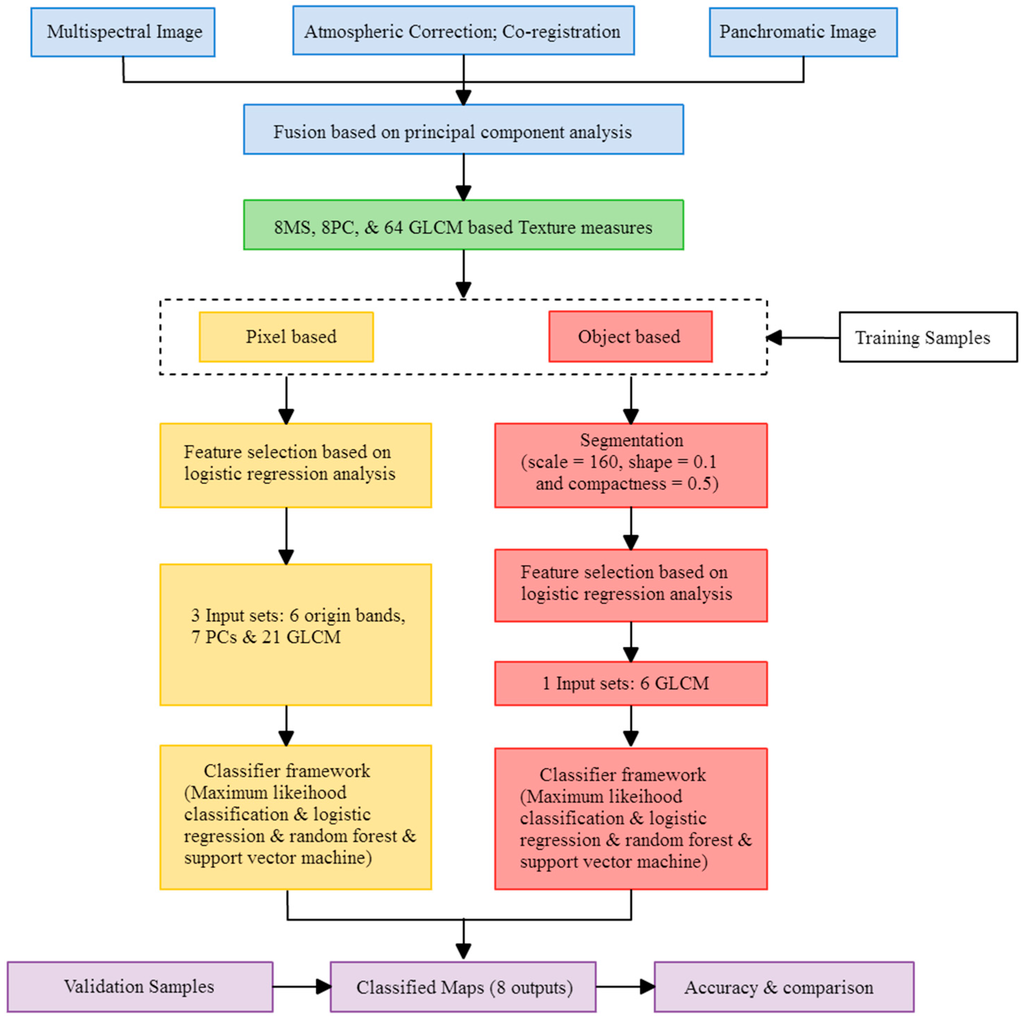

The study area is located in part of Nantou County in central Taiwan (between 120.620°E to 120.683°E and 23.8492°N to 23.8894°N). The total area is about 263 hectares. According to the 2014 agricultural statistic data of Taiwan, the annual tea production of this area is about 2.3% of the total output. The climate of this region is sub-tropical, with average temperatures of 22~25 °C and an annual rainfall of 1500~2100 mm. The terrain is mainly a flat plain near low slope hills. This region contains typical agricultural villages with abundant and minimally changed Camellia sinensis (L.) O. Ktze. Var. Sinensis and Camellia sinensis (L.) O. Ktze. Var. Assamica (Mast.) Kitam. f. Assamica tea crops and pineapple LULC. The study area is shown in Figure 1. The methodological flowchart for mapping the tea cultivation area is shown in Figure 2.

Figure 1.

The location of study area in Nantou, Taiwan and the WorldView-2 imagery used to map tea cultivation area in this study.

Figure 2.

Methodological flowchart for mapping tea cultivation area.

2.2. Data Sets

2.2.1. Collection of Ground Reference Data

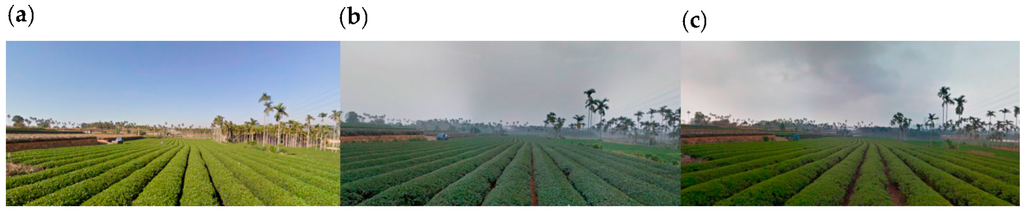

We surveyed the exact plantation location of all tea and other non-tea LULC during 4–5 March 2015 (Figure 3). A Garmin global positioning system (GPS) and ArcGIS mobile device were used as the tools for semi-automatic site mapping with a scale of 1:2500. The LULC includes five types: (1) tea (Camellia sinensis (L.) O. Ktze. Var. Sinensis and Camellia sinensis (L.) O. Ktze. Var. Assamica (Mast.) Kitam. f. Assamica); (2) other crops (e.g., pineapple and betel palm (Areca catechu)); (3) water and ponds; (4) buildings and roads; and (5) forest (evergreen broadleaf forest). Other crops in the study area were mainly pineapple and betel nut. We re-categorized these five LULC types into “tea” and “non-tea” LULC by integrating all land features except the tea areas. The results of the field survey showed that there are around 33 hectares of tea and 230 hectares of non-tea regions in our study area.

Figure 3.

Tea crops (Camellia sinensis (L.) O. Ktze. Var. Sinensis and Camellia sinensis (L.) O. Ktze. Var. Assamica (Mast.) Kitam. f. Assamica) of three different seasons located in study area. (a) December 2014; (b) March 2015; (c) August 2015.

2.2.2. WorldView-2 Imagery

The main LULC of the study area are tea crops and pineapples, but manual interpretation was not easy even with VHR orthogonal aerial photographs due to the similar arrangements of the plants. For this reason, WorldView-2 imagery with both high spatial and spectral resolution was used as the main material. WorldView-2 (DigitalGlobe, Inc., Westminster, CO, USA) is a commercial imaging satellite with eight multispectral bands and very high resolution. The average revisit time for the exact same location is 1.1 days, and the spatial resolution is 0.46~0.52 m (panchromatic band, 450~800 nm) and 1.84~2.08 m (multispectral bands). The eight multispectral bands are coastal blue (400~450 nm), blue (450~510 nm), green (510~580 nm), yellow (585~625 nm), red (630~690 nm), red edge (705~745 nm), NIR1 (770~895 nm) and NIR2 (860~1040 nm). The added spectral diversity of WorldView-2, such as the coastal blue, yellow and red edge bands, supports more detailed environmental and landscape change detection than other satellite images (e.g., Formosat-2, SPOT6, Kompsat) and DMC aerial photographs with four bands or fewer. We used imagery of the study area with less than 5% cloud cover as the original material. The original digital number (DN) values of the imagery were converted to top-of-atmosphere spectral reflectance [66]. The imagery was also ortho-rectified and co-registered to the two degree zone Transverse Mercator projection coordinate system with a root mean square error of less than 0.5 pixels per image. The image was acquired on 22 February 2015, close to the date of the ground reference data (4–5 March 2015). It is worth mentioning that tea crops within the study area are stable in LULC and farm shape arrangement, and show no seasonal leaf-out across years (Figure 4). Therefore, as long as the tea LULC remains, image acquisition and interpretation within a given period are not limited or affected by season or date, owing to the stable vegetation spectral dynamics. This is beneficial to ground verification and long term monitoring.

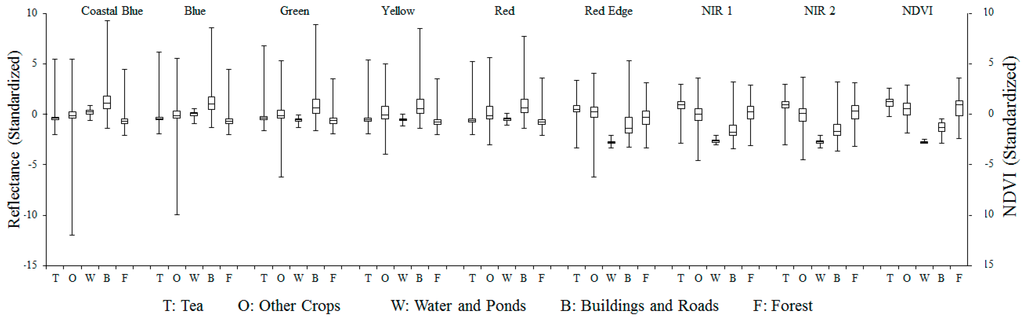

Figure 4.

Distribution of standardized reflectance of different LULC across 8 multi-spectral bands and NDVI.

As for the resolution of WorldView-2 imagery, the intervals between every tea plantation were not clear in the 2 m resolution MS bands in this study. Therefore, a pan-sharpening process was added to improve the spatial resolution of the MS bands from 2 m to 0.5 m. The pan-sharpened archives had enhanced interpretability in inferring forms and shapes of tea crop LULC. Each band of the pan-sharpened imagery was then standardized by using mean and standard deviation. The reason is that the reflectance value range of the WorldView-2 image varies between each band and makes the input variables contribute unequally to the PBIA and OBIA.
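The per-band standardization described above can be illustrated with a minimal sketch. It assumes a band is available as a flat list of reflectance values and that the population standard deviation is used; the function name `standardize_band` and the sample values are hypothetical, and the study does not state which software or variance estimator was used for this step.

```python
from statistics import mean, pstdev

def standardize_band(values):
    """Return z-scores: (value - band mean) / band standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # assumption: population standard deviation
    if sigma == 0:
        # A constant band carries no contrast; map every pixel to 0.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

# Hypothetical reflectance values for two bands with different ranges.
blue = [0.08, 0.10, 0.12]
nir1 = [0.30, 0.45, 0.60]
print(standardize_band(blue))
print(standardize_band(nir1))
```

After this transformation every band has zero mean and unit standard deviation, so bands with a wide reflectance range no longer dominate bands with a narrow one.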

2.3. Spectral Variability Analysis

A common scientific challenge of mapping vegetation with very high spatial resolution imagery is distinguishing between vegetation classes whose spectral signatures overlap. In order to test the LULC classification capability of the eight MS bands of WorldView-2 imagery, and the difference or similarity of tea crops in different seasons, spectral separability analysis was undertaken as the initial step. Figure 4 presents box-whisker plots of the spectral variability of the training pixels of the five basic LULC classes. The reflectance of buildings and roads in the NIR1, NIR2 and coastal blue wavelengths, and the reflectance of water and ponds in the red edge wavelengths, were significantly different from those of the other classes. In contrast, tea LULC, forest canopies, and other crops (pineapple and betel palm) showed similar spectral variability in the blue, green, yellow and red bands and in the normalized difference vegetation index (NDVI, which ranges from −1 to +1), and only small differences in the NIR1 and NIR2 bands. In other words, tea farm patches cannot be definitely distinguished from non-tea crops if we only use the eight MS bands and NDVI without other information.

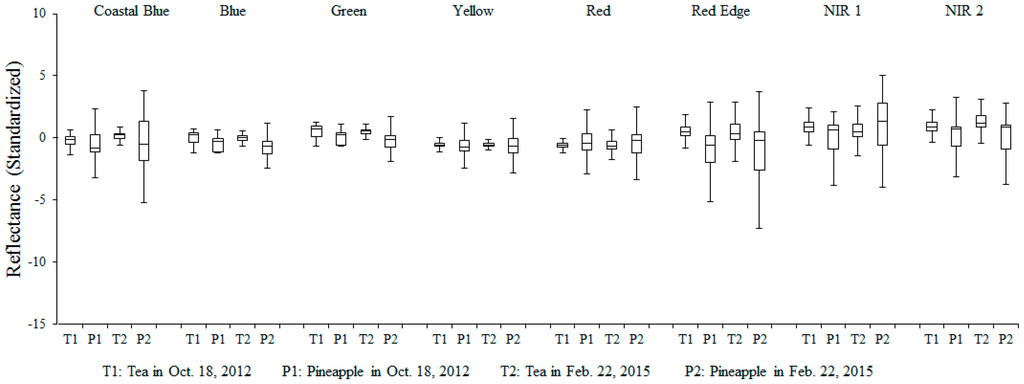

As for the evaluation of tea spectral dynamics, usually, when plants are harvested, they have a physiological response characterized by intensive vegetation growth and high primary production to restore the area that was eliminated. This frequently translates into a displacement of the red edge position towards longer wavelengths. However, Figure 5 shows that the mean standardized reflectances of tea were almost the same in each band between 18 October 2012 and 22 February 2015. The same appearance was noted for pineapple as well. For this reason, we believed that the tea LULC and tea spectral dynamics are relatively stable.

Figure 5.

Standardized reflectance of tea crops (Camellia sinensis (L.) O. Ktze. Var. Sinensis and Camellia sinensis (L.) O. Ktze. Var. Assamica (Mast.) Kitam. f. Assamica) and pineapple in different dates across eight multi-spectral bands.

2.4. Generation of Other Input Variables

This study assumed that the classification accuracy of tea and non-tea LULC separation would increase if more sub-class bands or indices were involved in the procedure. Thus, a total of 80 input variables were used: eight WorldView-2 bands treated with pan-sharpening and standardization, 64 GLCM texture indices derived from these eight bands, and eight principal components. For the spectral variables, the eight-band multispectral images and the single-band panchromatic image of WorldView-2 were first fused using hyperspherical color space (HCS) pan-sharpening [67]. The fused bands were then standardized, which is more convenient for the subsequent feature selection with logistic regression (LR) analysis; these are ORIGIN 1–8 in Table 1.

Table 1.

Description of the input variables used for the classification.

In addition, PC 1–8 were generated from ORIGIN 1–8 by principal component analysis. For the spatial characteristics, the GLCM texture indices adopted in this study were mean, variance, contrast, dissimilarity, homogeneity, correlation, entropy and angular second moment. These indices are expected to help distinguish the structural differences between tea and other crop fields, such as pineapple and betel palm fields. With regard to plant structure, the canopies of tea trees are more compact than those of other crops. In addition, although the tea trees and other crops in the study area are mostly planted line by line, the canopies of tea trees usually cover most of the soil surface. Therefore, tea crop fields show a smoother texture than other crop fields. The relationship between this ecological meaning and the GLCM indices, along with the formula of each index, is shown in Table 1. In these formulas, i is the row number and j is the column number; pi,j is the normalized frequency at which two neighboring pixels separated by a constant shift occur in the WorldView-2 imagery; N is the number of gray levels present in the imagery.
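To make the GLCM quantities concrete, the following is a minimal sketch that builds the normalized co-occurrence matrix for one constant pixel shift and derives several of the texture indices named above. The formulas are the commonly used textbook definitions and may differ in detail from those in Table 1; the function names, the shift of one pixel to the right, and the tiny test images are all assumptions for illustration. Correlation is omitted to keep the sketch short.

```python
import math

def glcm(image, levels, dx=1, dy=0):
    """Normalized frequencies p[i][j] of gray-level pairs (i, j) for
    pixels separated by the constant shift (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    total = 0
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
                total += 1
    return [[n / total for n in row] for row in counts]

def texture_indices(p):
    """Texture indices from a normalized GLCM (common definitions)."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, _, v in cells)
    return {
        "mean": mean,
        "variance": sum((i - mean) ** 2 * v for i, _, v in cells),
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "dissimilarity": sum(abs(i - j) * v for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "second_moment": sum(v * v for _, _, v in cells),
    }

# Hypothetical 2-level images: a smooth patch and a striped patch.
smooth = [[0, 0, 0], [0, 0, 0]]
striped = [[0, 1, 0, 1], [0, 1, 0, 1]]
print(texture_indices(glcm(smooth, 2)))
print(texture_indices(glcm(striped, 2)))
```

The smooth patch yields zero contrast and maximal homogeneity, while the striped patch yields higher contrast and lower homogeneity, which mirrors the expectation that closed tea canopies appear smoother than row crops with exposed soil.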

To test whether increasing the number of “sub-categories” or “factors” could enhance the LULC classification, this study adopted four spectral distance measurements, namely Euclidean distance, divergence, transformed divergence (TD) and Jeffries-Matusita (J-M) distance, to calculate the degree of spectral separation between the training samples of tea and non-tea (forest, buildings and roads, water bodies and ponds, and other non-tea LULC). For TD, a value of 2000 indicates the best class separability, and values above 1900 generally indicate good separability. The closer the TD of the selected training samples is to 0, the worse the class separation, in which case the training data should be re-selected. J-M is interpreted in a similar way; the closer the value is to 1414, the better the separability (Table 2). The results showed that the more sub-categorical bands or indicators were added, the greater the separability between tea and non-tea farmland. This study subsequently investigated which sub-categorical band was the most helpful.
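The two bounded measures can be sketched for the simplest case of a single variable with Gaussian class distributions. This is an assumption made for brevity: the study does not list its formulas, and in practice the multivariate forms with class mean vectors and covariance matrices would be used. The scaling to 2000 (TD) and 1414 (J-M) follows a commonly used convention that matches the ranges quoted above. All function names and input values are hypothetical.

```python
import math

def divergence(mean1, var1, mean2, var2):
    """Divergence between two univariate Gaussian classes."""
    diff2 = (mean1 - mean2) ** 2
    return (0.5 * (var1 - var2) * (1 / var2 - 1 / var1)
            + 0.5 * (1 / var1 + 1 / var2) * diff2)

def transformed_divergence(mean1, var1, mean2, var2):
    """TD, saturating at 2000 for fully separable classes."""
    d = divergence(mean1, var1, mean2, var2)
    return 2000 * (1 - math.exp(-d / 8))

def bhattacharyya(mean1, var1, mean2, var2):
    """Bhattacharyya distance between two univariate Gaussian classes."""
    avg_var = (var1 + var2) / 2
    return ((mean1 - mean2) ** 2 / (8 * avg_var)
            + 0.5 * math.log(avg_var / math.sqrt(var1 * var2)))

def jeffries_matusita(mean1, var1, mean2, var2):
    """J-M distance, saturating at 1000 * sqrt(2), about 1414."""
    b = bhattacharyya(mean1, var1, mean2, var2)
    # max() guards against tiny negative values from rounding.
    return 1000 * math.sqrt(max(0.0, 2 * (1 - math.exp(-b))))

# Hypothetical class statistics (mean, variance) of one standardized band.
print(transformed_divergence(0.2, 0.5, 1.8, 0.6))
print(jeffries_matusita(0.2, 0.5, 1.8, 0.6))
```

Both measures are 0 for identical class distributions and approach their upper bounds as the class means move apart relative to the class variances.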

Table 2.

Various spectral distance measurements between tea and non-tea (including non-tea LULC, forest, water and ponds, buildings and roads) training area.

2.5. Feature Selection-LR Analysis

We applied LR analysis, a log-linear model suitable for quantitative analysis, in two different stages of this research. In the feature selection stage, LR analysis served as a filter to reduce the dimension of the data from 80 variables to a smaller set of significant ones. In the classification stage, the LR model was one of the classifiers compared to find the best LULC classifier.

LR analysis is useful when the dependent variable is categorical (e.g., yes or no, presence or absence) and the explanatory (independent) variables are categorical, numerical, or both [68,69,70]. Generally speaking, an LR model can be expressed in the following forms [71]:

$$P(Y = 1 \mid X) = \frac{\exp(\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_n x_n)}{1 + \exp(\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_n x_n)} \tag{1}$$

In Equation (1), $P(Y = 1 \mid X)$ is the conditional probability of the event (here, tea versus non-tea occurrence); $X = (x_1, x_2, \ldots, x_n)$ is the vector of independent variables; if $p = P(Y = 1 \mid X)$, the above equation can be revised to:

$$\ln\left(\frac{p}{1 - p}\right) = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_n x_n \tag{2}$$

In Equation (2), $p$ is the occurrence probability; $\beta_0$ is the intercept; and $\beta_1, \beta_2, \ldots, \beta_n$ are the regression coefficients of the input variables $x_1, x_2, \ldots, x_n$. To interpret the occurrence probability, each coefficient is used as the exponent of the natural base e: the result, $e^{\beta_i}$, is the effect of the corresponding independent variable on the odds (tea and non-tea occurrence), and $\beta_i = 0$ means that the odds do not change. In this way, we can sum up the main factors related to the land cover. Finally, the Cox & Snell test, the Omnibus test, the Hosmer and Lemeshow test and the odds ratio were used to assess the explanatory power of the factors and the fit of the LR model. We used SPSS to calculate the LR model, and all input raw data (.txt) were generated from the original layer files (.grd) by using the conversion tools of ArcGIS software.
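A minimal sketch of how the coefficients of a fitted LR model translate into an occurrence probability and odds ratios, assuming the usual logistic form p = 1 / (1 + e^(−z)), where z is the intercept plus the weighted sum of the input variables. The function names and coefficient values are hypothetical and are not taken from the tables of this study, which fitted the model in SPSS.

```python
import math

def occurrence_probability(intercept, coefficients, values):
    """Occurrence probability p from the linear predictor z."""
    z = intercept + sum(b * x for b, x in zip(coefficients, values))
    if z >= 0:
        return 1 / (1 + math.exp(-z))
    # Equivalent form that avoids overflow for very negative z.
    e = math.exp(z)
    return e / (1 + e)

def odds_ratios(coefficients):
    """exp(B) for each coefficient: multiplicative effect on the odds."""
    return [math.exp(b) for b in coefficients]

# Hypothetical intercept, coefficients and standardized inputs.
b0 = -0.4
b = [1.2, -0.7, 0.0]
x = [0.5, 1.1, 2.0]
print(occurrence_probability(b0, b, x))
print(odds_ratios(b))  # the third ratio is 1.0: that variable leaves the odds unchanged
```

A coefficient of zero gives an odds ratio of exactly 1, which is the "odds will not change" case described above.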

2.6. Classification Procedure

The classification in this study used both PBIA and OBIA, mainly because the high spatial resolution of the pan-sharpened 0.5 m WorldView-2 images may present high spectral heterogeneity of the surface features, and the LULC classification may be affected if only PBIA is used [72]. OBIA differs from PBIA in that the image is segmented into objects before classification; image segmentation mainly uses the heterogeneity, shape and boundary of surface features to define an object. This study segmented the images with multi-scale segmentation, following the concept of the eCognition image processing software. During image segmentation, scale, shape and compactness were the three parameters adjusted to produce the most reasonable results. A reasonable result was defined in this study as one in which: (1) the object boundaries delineate the tea plantations in the study area; and (2) each object remains complete and does not include more than one LULC class after segmentation. This study used the ORIGIN variable layers as the basis for image segmentation. The best parameters obtained for the study area were a scale of 160, a shape of 0.1 and a compactness of 0.5. After reasonable segmentation results were obtained, the LULC of the segmented images was classified. PBIA classifies LULC based on the value of each single pixel, whereas OBIA assigns LULC classes based on the characteristics of homogeneous segmented features.

In both PBIA and OBIA, the aforementioned 80 variables would place a great computational burden on the system. For this reason, the LR model was applied to reduce the data dimensions before the variables were put into the ML, LR, RF, and SVM classifiers. The training samples for tea and non-tea covered 6.2 and 16.1 hectares, respectively, for the PBIA classifiers, and 36 and 132 segments, respectively, for the OBIA classifiers. The following sections provide a detailed description of tea classification with RF and SVM.

2.6.1. Classification Procedure-RF Classifier

We employed a newly developed RF algorithm as one of the LULC classifiers in this study. The RF algorithm is based on non-parametric decision trees, and it accepts various kinds of inputs with different scales and types, including data sources recorded on different measurement scales [73]. This advantage allows us to use three different sets of variables: the original spectral bands and their derived GLCM and PCA variables. The RF process starts by drawing many bootstrap samples (with replacement) from the original data. A single classification tree is grown from each bootstrap sample, but only a random subset of the predictor variables is considered for the binary partition at each node. The observations left out of the bootstrap sample of each decision tree are called the “out-of-bag” (OOB) observations. The error rate of the entire RF classifier was obtained by comparing the observed classification with the predicted classification of all observations derived from the out-of-bag vote tallies [74]. We thus built a separate RF model for three sets of predictors:

- Two control parameters were set: one for the amount of the decision trees (ntree), while the other was for the number of characteristic parameters (mtry);

- The original training samples were drawn with replacement using the bootstrap method; the sample Xi for each decision tree (i = iteration number) was randomly drawn from the original dataset X. About one third of X was left out of each bootstrap sample, and these left-out elements were called OOB;

- Every decision tree would have a complete growth without pruning. This step differs from a traditional decision tree;

- In every bootstrap iteration, the tree was used to predict the OOB data that were not used to grow it, and the results generated from all the decision trees were averaged;

- Once all OOB data had been used in generating the decision trees, the importance of each factor could be assessed from the percentage increase in RMSE; thus, the relationships between these factors and the dependent variable could be established;

- Finally, a vote across the trees assigned each classification result to tea or non-tea.
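The bootstrap and voting steps above can be sketched as follows. This is not a full random forest: it only illustrates drawing one bootstrap sample with replacement, identifying the out-of-bag observations, and combining per-tree labels by majority vote. The function names and the sample size are hypothetical.

```python
import random
from collections import Counter

def bootstrap_split(n_samples, rng):
    """Draw n_samples indices with replacement; the indices never
    drawn form the out-of-bag (OOB) set for this tree."""
    in_bag = [rng.randrange(n_samples) for _ in range(n_samples)]
    drawn = set(in_bag)
    oob = [i for i in range(n_samples) if i not in drawn]
    return in_bag, oob

def majority_vote(labels):
    """Final class for one sample from the labels voted by the trees."""
    return Counter(labels).most_common(1)[0][0]

rng = random.Random(0)
in_bag, oob = bootstrap_split(1000, rng)
# The OOB share is expected to be roughly one third (about 1/e).
print(len(oob) / 1000)
print(majority_vote(["tea", "non-tea", "tea"]))
```

In a complete implementation, each tree would be grown on its in-bag sample, with a random subset of mtry variables tried at each node, and the OOB observations would supply the error estimate.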

2.6.2. Classification Procedure-SVM Classifier

The theoretical concept of SVM was basically developed from the optimal conditions of the hyperplane for linear or non-linear separability. The optimal hyperplane not only correctly separates two types of samples but also has the largest class intervals. For a linearly separable binary classification, if the training data with k samples is represented as {Xi, yi}, i = 1, …, k, where Xi ∈ R^N is a vector in an N-dimensional space and yi ∈ {−1, +1} is a class label with two classes, the SVM classifier can be created by a hyperplane given by:

(W · X) + b = 0

where W is a vector perpendicular to the linear hyperplane and b is a scalar showing the offset of the discriminating hyperplane from the origin. For the two classes y, two hyperplanes can be used to discriminate the data points in the respective classes. The two hyperplanes can be expressed as:

(W · Xi) + b ≥ +1 for all y = + 1, i.e., class 1, and

(W · Xi) + b ≤ −1 for all y = − 1, i.e., class 2

For a nonlinear classification, the input data is mapped onto a higher dimensional feature space using a non-linear mapping function. The classification is then carried out in the mapped space rather than the input feature space. In order to increase the computational efficiency, the kernel trick is usually used, which requires defining a kernel function. In this study, we chose the kernel function that gave the best tea and non-tea LULC classification from four basic options: linear, polynomial, radial and sigmoid. In addition, two crucial parameters, the cost of constraints violation (C) and sigma (σ), were also tuned to create the best SVM classifier. C controls the over-fitting of the model while σ decides the shape of the hyperplane [65].
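The linear decision rule and a radial kernel can be illustrated with a minimal sketch. It assumes a weight vector W and offset b that are already known, rather than trained, and a radial kernel written as exp(−gamma · ||x1 − x2||²); how gamma relates to the σ mentioned above depends on the software convention and is not specified here. All names and values are hypothetical.

```python
import math

def linear_decision(w, b, x):
    """Class label from the sign of (W . X) + b."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1

def margin_width(w):
    """Distance between the hyperplanes (W . X) + b = +1 and -1."""
    return 2 / math.sqrt(sum(wi * wi for wi in w))

def rbf_kernel(x1, x2, gamma):
    """Radial kernel: similarity decays with squared distance."""
    sq_dist = sum((a - c) ** 2 for a, c in zip(x1, x2))
    return math.exp(-gamma * sq_dist)

# Hypothetical hyperplane and feature vectors.
w, b = [3.0, 4.0], -1.0
print(linear_decision(w, b, [1.0, 1.0]))
print(linear_decision(w, b, [-1.0, 0.0]))
print(margin_width(w))
print(rbf_kernel([0.0, 0.0], [1.0, 0.0], gamma=0.1))
```

A larger gamma makes the kernel fall off faster with distance, producing a more flexible boundary, while C (not shown) trades margin width against training errors.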

2.7. Accuracy Assessment

For accuracy assessment of PBIA results, we converted the ground reference data from vector to grid file format with a cell size of 0.5 m (i.e., the same cell size as the spatial resolution of pan-sharpened WorldView-2 images) and calculated the areas classified correctly and incorrectly, while for OBIA we calculated the number of segmented objects rather than the areas with correct and incorrect classification results. Indices including producer’s accuracy (PA), user’s accuracy (UA), overall accuracy (OA) and kappa index of agreement (KIA) were then applied to evaluate the PBIA and OBIA results. The KIA measures the difference between the actual agreement and the chance agreement in the error matrix [75,76]. The KIA is computed as:

$$\mathrm{KIA} = \frac{N \sum_{i=1}^{r} x_{ii} - \sum_{i=1}^{r} x_{i+}\, x_{+i}}{N^{2} - \sum_{i=1}^{r} x_{i+}\, x_{+i}}$$

where r is the number of rows in the error matrix; xii is the number of observations in row i and column i (on the major diagonal); xi+ is the total of observations in row i; x+i is the total of observations in column i; N is the total number of observations included in the matrix.
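As a worked illustration, the sketch below computes OA, KIA, UA and PA from an error matrix using the standard kappa formula expressed with the quantities defined above. It assumes that rows hold the classified results and columns hold the reference data, which determines which ratio is UA and which is PA; the function name and the matrix values are hypothetical and are not taken from the tables of this study.

```python
def accuracy_indices(matrix):
    """OA, KIA, UA and PA from a square error matrix.
    Assumption: rows = classified classes, columns = reference classes."""
    r = len(matrix)
    n = sum(sum(row) for row in matrix)
    diagonal = sum(matrix[i][i] for i in range(r))
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(r)) for j in range(r)]
    chance = sum(row_totals[i] * col_totals[i] for i in range(r))
    return {
        "OA": diagonal / n,
        "KIA": (n * diagonal - chance) / (n * n - chance),
        "UA": [matrix[i][i] / row_totals[i] for i in range(r)],
        "PA": [matrix[i][i] / col_totals[i] for i in range(r)],
    }

# Hypothetical two-class (tea, non-tea) error matrix.
example = [[40, 10],
           [5, 45]]
print(accuracy_indices(example))
```

For this hypothetical matrix, 85 of 100 observations lie on the diagonal, so OA is 0.85, while KIA is lower because part of that agreement would be expected by chance.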

To assess whether a statistically significant difference exists between the results of PBIA and OBIA, McNemar’s test based on the standardized normal test statistic was used in this study. The difference in accuracy between two approaches is statistically significant if |z| > 1.96, and the sign of z indicates which approach is more accurate [77,78]. The z-score is computed as:

$$z = \frac{f_{GP} - f_{PG}}{\sqrt{f_{GP} + f_{PG}}}$$

where fGP indicates the number of samples correctly classified by OBIA and incorrectly classified by PBIA and fPG indicates the number of samples correctly classified by PBIA and incorrectly classified by OBIA.
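A minimal sketch of the test statistic, assuming the common form of McNemar’s z in which the difference between the two discordant counts is divided by the square root of their sum. The function names and the counts are hypothetical.

```python
import math

def mcnemar_z(f_gp, f_pg):
    """z-score from the two discordant counts.
    f_gp: correct with OBIA only; f_pg: correct with PBIA only."""
    total = f_gp + f_pg
    if total == 0:
        return 0.0  # the two approaches never disagree
    return (f_gp - f_pg) / math.sqrt(total)

def is_significant(z, threshold=1.96):
    """Two-sided test at the 5% level."""
    return abs(z) > threshold

# Hypothetical discordant counts.
z = mcnemar_z(30, 10)
print(z, is_significant(z))
```

Only the samples on which the two approaches disagree enter the statistic; samples that both approaches classify correctly, or both classify incorrectly, carry no information about which approach is better.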

3. Results

In this section, we describe the feature selection results, followed by the classification ones. When discussing the results, we mostly focus on the tea cultivation area.

3.1. Feature Selection

Although both PBIA and OBIA had 80 input variables, their “basic-unit values” were different; thus, the LR model filter was applied separately to select the best variables for the pixel-based and object-based approaches.

For feature selection in the pixel-based approach, the eight multispectral bands, the eight principal components and the 64 GLCM textures were entered into the LR analysis to identify the variables with a significant impact. The Cox & Snell R square was 0.481. The p-value of the Omnibus test was 0.000; p < 0.01 means that the variance explained by the independent variables was significantly greater than the unexplained variance. It also means that at least one independent variable can effectively explain and predict the dependent variable. The p-value of the Hosmer and Lemeshow test was 0.511; this insignificant p-value indicated that the LR model fit well. The 34 significant variables listed in Table 3 achieved the best classification accuracy (87.5%) on the training data.

Table 3.

Feature selection results of PBIA with LR model.

Among them, the 1st to the 21st are the texture variables. MEAN2 denotes the MEAN texture of the second band of the WorldView-2 fusion image, and the other names follow the same pattern. The ENTROPY and SECOND MOMENT textures were not significant in any band, so both were removed from the classifiers. MEAN7 has the highest B coefficient among all texture variables, which implies that it is the most beneficial index for differentiating tea from non-tea LULC. PC1 denotes the first principal component. Except for PC6, all principal components were significant. ORIGIN, the original bands of the WorldView-2 fusion image, was not significant for the first and fifth bands; thus, only the other six bands were kept. The variables in Table 3 were the input variables of the four pixel-based classifiers.

In the feature selection step of OBIA, the Cox & Snell R square value was 0.547, meaning that both the strength of the correlation between the input variables and the dependent variable and the predictive power of the model are good. The p-value of the Omnibus test was 0.000, meaning that the explained variance was significantly greater than the unexplained variance and that at least one input variable can effectively explain and predict the dependent variable. The p-value of the Hosmer and Lemeshow test was 0.977, which indicated that the LR model fit well. The six significant variables listed in Table 4 achieved the best classification accuracy (94.7%) on the training data.

Table 4.

Feature selection results of OBIA using LR model.

OBIA differed from PBIA in that the variables related to spectral characteristics, such as MEAN, ORIGIN and PC, were not significant; the two approaches were similar in that CONTRAST, DISSIMI and CORREL were significant in both. This means that when classification was carried out with OBIA, the spatial characteristics were more informative than the spectral characteristics. This property could mitigate the high spectral heterogeneity found in high spatial resolution images [72]. The variables in Table 4 were taken as the input variables of the four object-based classifiers.

3.2. Classification with the Selected Input Variables

The following two sub-sections present the results generated by the ML, LR, RF and SVM classifiers using the 34 and six selected variables for the pixel-based and object-based approaches, respectively.

3.2.1. Pixel-Based Results

The study evaluated the classification accuracies of the ML, LR, RF and SVM classifiers separately in PBIA. First, in the LR analysis, we used Equation (2) and the B coefficients in Table 3 to derive the occurrence probability p; pixels with p < 0.5 were assigned to the tea class and pixels with p ≥ 0.5 to the non-tea class. The RF classifier used ntree = 500 and mtry = 11 as the final parameter setting. The SVM classifier obtained its best classification results with a radial kernel function and a gamma value of 0.1. The best results obtained with these classifiers are shown in Table 5 and Figure 6.
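The LR assignment rule described above can be sketched as follows. This is only an illustration: it assumes that Equation (2) has the standard logistic form, and the intercept, coefficients and variable values are hypothetical rather than taken from Table 3.

```python
import math

def lr_probability(intercept, coefficients, values):
    """Logistic model: p = 1 / (1 + exp(-(b0 + sum(b_i * x_i))))."""
    z = intercept + sum(b * x for b, x in zip(coefficients, values))
    return 1.0 / (1.0 + math.exp(-z))

def assign_class(p, threshold=0.5):
    """Rule stated in the text: p < 0.5 -> tea, otherwise non-tea."""
    return "tea" if p < threshold else "non-tea"

# Hypothetical intercept, B coefficients and standardized values for one pixel.
b0 = 0.2
b = [1.5, -0.8, 0.4]
x = [-1.0, 0.5, 0.3]

p = lr_probability(b0, b, x)
label = assign_class(p)
print(label)
```

The same two functions would apply unchanged to objects in OBIA, with the six object-level variables in place of the 34 pixel-level ones.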

Table 5.

Error matrix for pixel-based classification with 34 selected variables; the unit is pixel number.

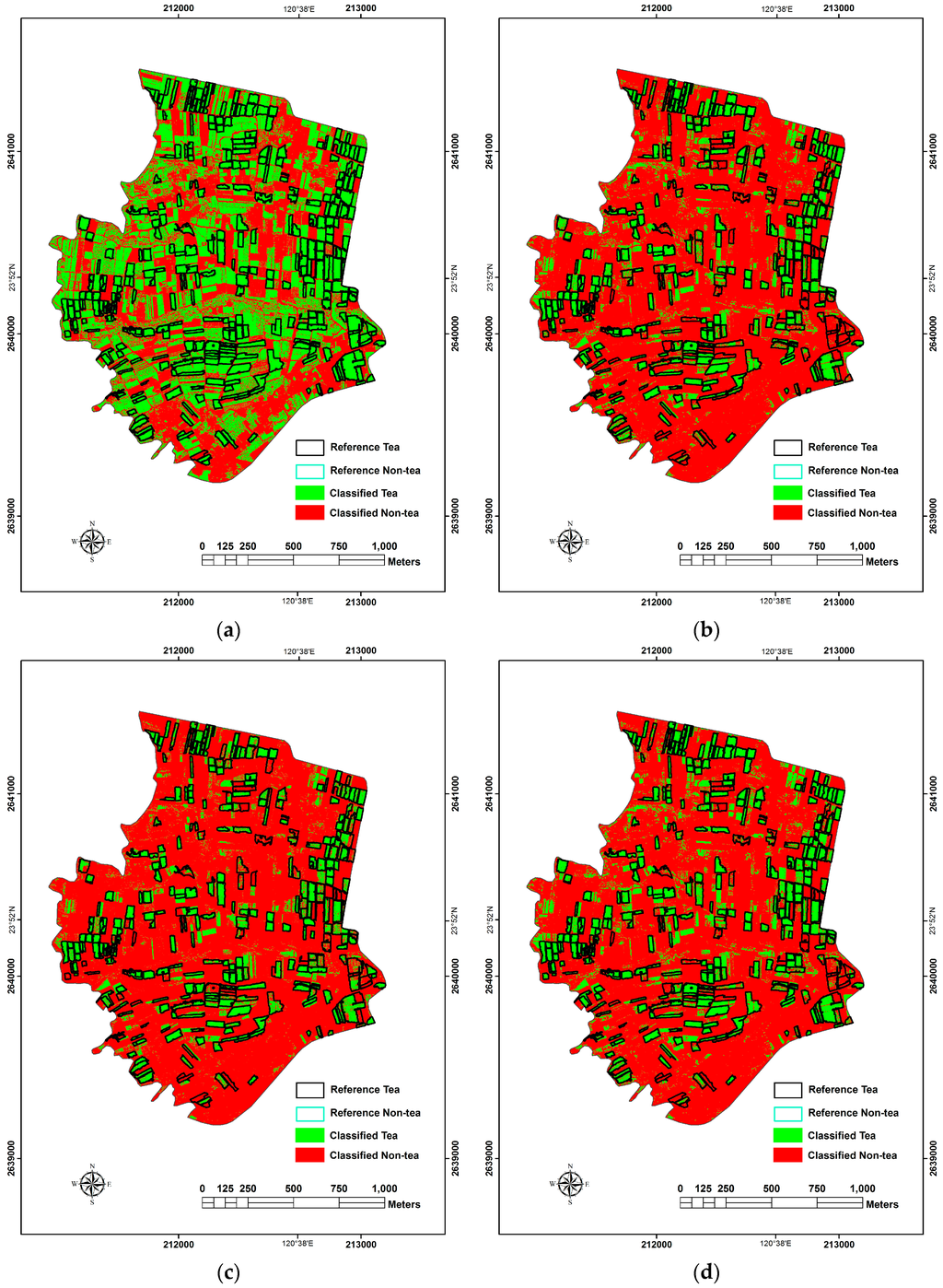

Figure 6.

The results of PBIA classification. (a) ML; (b) LR; (c) RF; (d) SVM.

In terms of classification accuracy, SVM was the highest (OA = 84.70% and KIA = 0.690), followed by RF, ML and LR. According to McNemar’s test, the accuracy of SVM, the best classifier, was significantly higher than that of the second-best RF (|z| = 35.961), while LR was significantly the lowest (|z| = 72.109 compared with ML).
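As a reminder of how such |z| values arise, the sketch below computes the McNemar statistic in the form commonly used for comparing two classifications of the same validation samples. The disagreement counts are hypothetical; the counts behind the values reported here are not given in the text.

```python
import math

def mcnemar_z(f12: int, f21: int) -> float:
    """McNemar statistic z = (f12 - f21) / sqrt(f12 + f21).

    f12 -- samples classified correctly by classifier 1 but wrongly by classifier 2
    f21 -- samples classified wrongly by classifier 1 but correctly by classifier 2
    """
    return (f12 - f21) / math.sqrt(f12 + f21)

# Hypothetical disagreement counts between two classifiers.
z = mcnemar_z(f12=900, f21=400)
significant = abs(z) > 1.96  # two-sided test at the 5% level
print(round(z, 3), significant)
```

Only the samples on which the two classifiers disagree enter the statistic, so with very large pixel counts even modest accuracy differences can yield large |z| values.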

Looking in more detail at the PA and UA values of each classifier, we found that the PA of the tea class with the ML classifier was much higher than with the others (Figure 6a), since over 50% of the study area was interpreted as tea tree LULC. This clearly reduced the omission error in tea tree classification (i.e., 1 − PA); however, it also caused several non-tea areas to be committed to the tea class, which decreased the UA of the tea class and the PA of the non-tea class.
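The relationship between OA, KIA, PA and UA can be made concrete with a small sketch that derives all four from an error matrix. The 2 × 2 matrix below is hypothetical and is not Table 5; rows are taken to be classified classes and columns reference classes, which is an assumption about the table layout.

```python
def accuracy_metrics(matrix):
    """Compute OA, kappa (KIA), PA and UA from a square error matrix.

    matrix[i][j] -- number of samples classified as class i (row)
                    whose reference class is j (column).
    """
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_tot = [sum(row) for row in matrix]
    col_tot = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    diag = [matrix[i][i] for i in range(k)]

    oa = sum(diag) / total
    # Agreement expected by chance, from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_tot, col_tot)) / total ** 2
    kappa = (oa - expected) / (1 - expected)
    pa = [diag[j] / col_tot[j] for j in range(k)]  # 1 - omission error
    ua = [diag[i] / row_tot[i] for i in range(k)]  # 1 - commission error
    return oa, kappa, pa, ua

# Hypothetical matrix; class order is (tea, non-tea).
m = [[80, 30],
     [20, 170]]
oa, kappa, pa, ua = accuracy_metrics(m)
print(round(oa, 4), round(kappa, 4), pa, ua)
```

In this toy matrix, labelling more samples as tea would raise the PA of tea while lowering its UA, which is the trade-off described for the ML classifier.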

3.2.2. Object-Based Results

In the LR analysis, as in the pixel-based approach, objects with p < 0.5 were assigned to the tea class and objects with p ≥ 0.5 to the non-tea class. The RF classifier obtained the best classification results when ntree = 500 and mtry = 3. The SVM classifier obtained the best classification results with a radial kernel function and a gamma value of 0.1. The best results obtained with these classifiers are shown in Table 6 and Figure 7.
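To indicate what the gamma value controls, the sketch below evaluates a radial basis function kernel in its usual form, exp(−γ‖x − y‖²). It assumes that the gamma reported here follows this common definition; the two six-variable feature vectors are hypothetical.

```python
import math

def rbf_kernel(x, y, gamma=0.1):
    """Radial basis function kernel: exp(-gamma * ||x - y||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)

# Two hypothetical objects described by six standardized variables.
obj_a = [0.2, -1.1, 0.5, 0.0, 1.3, -0.4]
obj_b = [0.1, -0.9, 0.7, 0.2, 1.0, -0.5]

similarity = rbf_kernel(obj_a, obj_b)
print(round(similarity, 4))
```

A smaller gamma makes the kernel decay more slowly with distance, so each training sample influences a wider region of the feature space.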

Table 6.

Error matrix for object-based classification with six selected variables; the unit is object number.

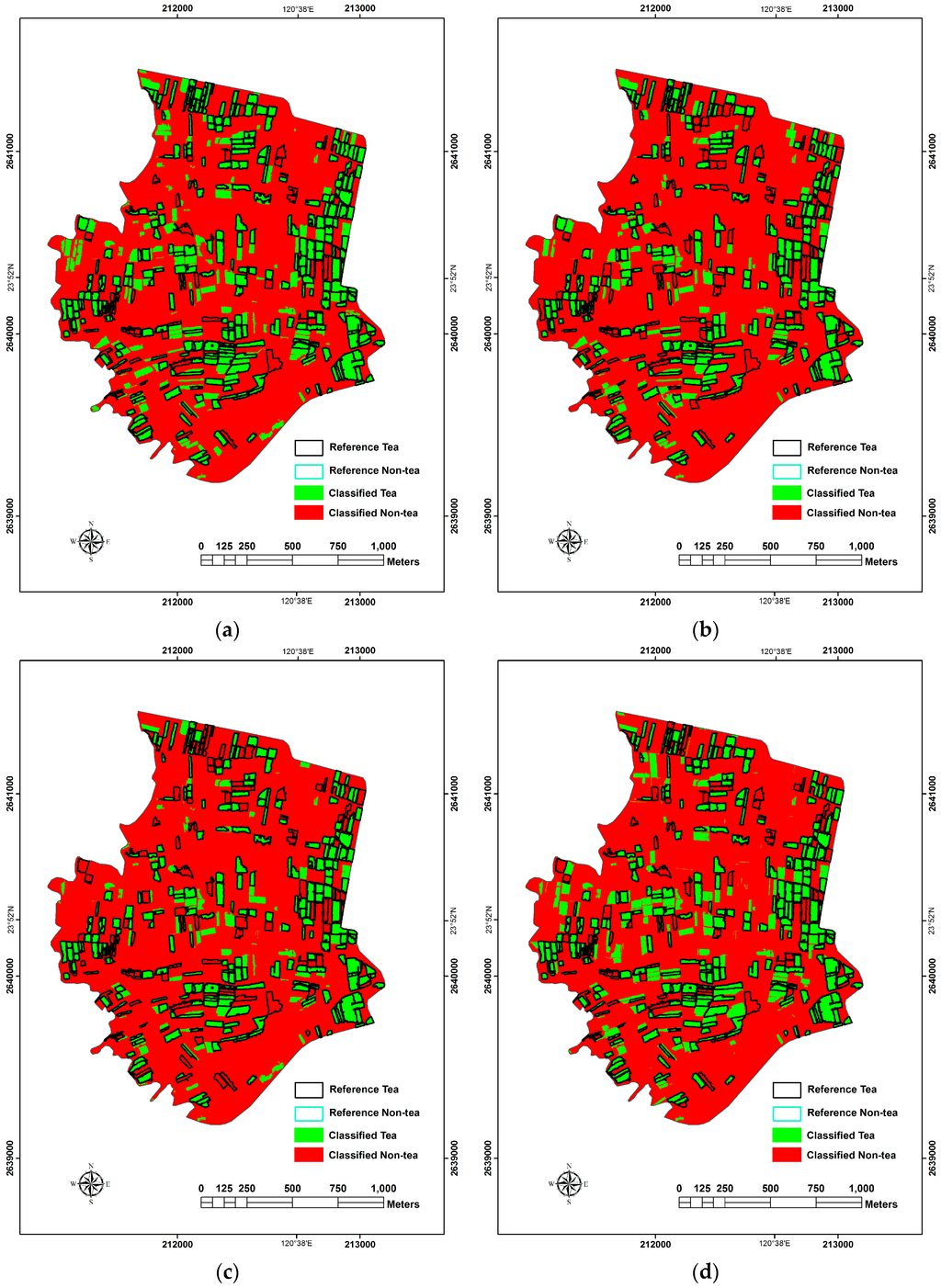

Figure 7.

The results of OBIA classification. (a) ML; (b) LR; (c) RF; (d) SVM.

In terms of classification accuracy, ML was the highest in OBIA, with OA and KIA reaching 96.04% and 0.887, respectively, followed by LR, SVM and RF. RF had the lowest accuracy, with OA and KIA of 91.20% and 0.733, respectively. McNemar’s test showed that the accuracy of ML, the best classifier, was significantly higher than that of the second-best LR (|z| = 138.082), while the accuracy of SVM was significantly higher than that of RF (|z| = 38.051). Regarding the PA and UA of the classifiers, the PA of tea tree with ML was higher than with the other classifiers, which was the main reason it gave the best overall classification results. The PA of tea tree and the UA of non-tea tree with RF were not high, meaning that many tea tree areas were classified as non-tea tree areas, which was the main reason it gave the worst results among the classifiers.

3.2.3. Overall Comparison

Comparing the results of PBIA and OBIA classifier by classifier, ML and LR were the more accurate classifiers in OBIA, whereas SVM and RF were the more accurate ones in PBIA. Although McNemar’s test indicated that the PBIA and OBIA results differed significantly for every classifier, the |z| values for the ML and LR classifiers were larger than those for RF and SVM. Overall, the results obtained with OBIA were better than those obtained with PBIA in both OA and KIA (Table 7).

Table 7.

Comparison of the accuracy assessment of pixel- and object-based approaches.

Notably, the accuracy of OBIA + ML was not only higher than that of PBIA + ML but also the best among all PBIA and OBIA results. In addition, in the ML results of both approaches, the PA of tea tree LULC was the highest among all classifiers, mainly because ML, in both PBIA and OBIA, tended to classify more areas as tea tree LULC and thereby reduced omission errors. The performance of the classifiers across the PBIA and OBIA approaches was not stable: OA varied widely, between 82.97% and 96.04%. The RF results in this study also differed from those of previous studies of other LULC classifications, in which RF showed the highest OA among the classifiers [65,79]. We speculate that, because the experimental design of this study provided only two categories (tea and non-tea LULC), RF could not show its advantage in classification.

4. Discussion

In the feature selection stage, adding variables such as GLCM layers would increase the capability to interpret land use/land cover, but it requires considerable time and effort. This study therefore tried to find the most useful variables so as to improve the efficiency of interpretation at the lowest cost. As shown in Figure 2, the feature selection stage based on the LR model identified 34 useful variables in PBIA and six GLCM variables in OBIA. All selected variables were significant factors in the LR function (p < 0.05; the B coefficients can be regarded as weights of importance). This means that only 34 variables need to be input to the PBIA classifiers and six variables to the OBIA classifiers. We consider this important for saving money if the government (e.g., Council of Agriculture) needs to lower the cost of crop cultivation surveys.

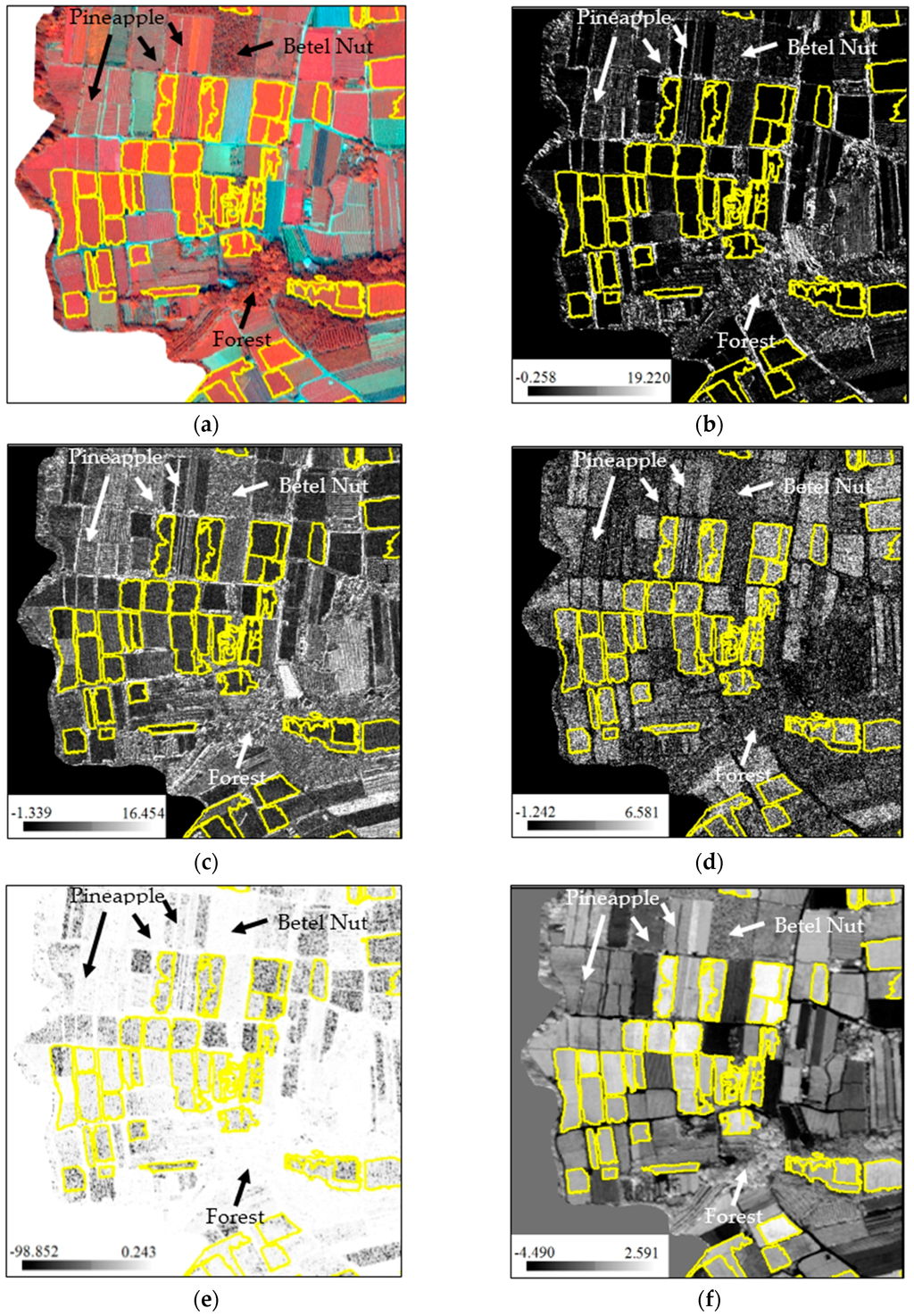

Previous studies have already discussed the use of the four newly available spectral bands (coastal, yellow, red edge and near-infrared 2) for the classification of tree species [39,80]. In this research, the feature selection results of the LR analysis showed that band 6 (red edge) and band 4, through their derived texture indices, significantly influenced the tea classification. Figure 8 shows the signature comparison between tea, betel nut, pineapple and forest fields using input variables with different band combinations. The visual differences became clear when standardized CONTRAST6, DISSIMI6, HOMO2, CORREL6 and PC2 were included in the classification process. This means that the red edge spectrum and some texture indices were useful for distinguishing tea from non-tea LULC, which supports the conclusions of previous studies on vegetation classification [39,81]. The red edge spectrum is advantageous because it reflects the chlorophyll concentration and the physiological status of crops [82], and it can therefore also be helpful for land cover classification [81].

In addition, principal component transformation is commonly considered a good method for reducing data dimensionality and extracting useful information. After transformation of the 8-band WorldView-2 imagery, most of the information is concentrated in the first PC (PC1), and the information content decreases gradually to the last PC (PC8). According to the LR analysis, however, PC2 to PC8, except PC6, were still helpful for discriminating tea from non-tea LULC. This implies that every component may need to be considered when conducting binary classification. The LR analysis also indicates the importance of each variable. Taking tea, betel nut, pineapple fields and forest as examples, Figure 8 shows that input variables with higher absolute B coefficients differentiate these LULC types more clearly than those with lower B coefficients.

Figure 8.

Signature comparison of tea, betel nut, pineapple and forest fields using input variables with higher B coefficient. (a) is the original image displayed with band 7, 3 and 2; while (b–f) are standardized CONTRAST6, DISSIMI6, HOMO2, CORREL6 and PC2 respectively. The yellow boundaries delineate the tea crop fields.

As for textural information, the LR analysis in feature selection showed that GLCM MEAN and DISSIMI were the two most dominant variables for tea LULC classification. GLCM MEAN represents the local mean value within the processing window and is closely related to the original spectral information. The processing window, also called the moving window, is the basic unit for calculating grid layer features: the value of each pixel is calculated by shifting the window one pixel at a time until all pixels have been processed. The moving window in this study was 3 × 3 pixels. The B coefficients of MEAN, which were higher than those of the other variables, suggested that the spectral information was more useful than the structural characteristics of the WorldView-2 imagery. Nevertheless, the dissimilarity measures, which were based on empirical estimates of the feature distribution, were also critical for enhancing the structural difference between tea and non-tea LULC.
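The two dominant texture measures can be illustrated for a single position of the 3 × 3 moving window. The sketch below is a simplified pure-Python version and not the software used in this study; the horizontal pixel offset, the symmetric co-occurrence counting and the quantization to four gray levels are assumptions made for the example.

```python
def glcm_features(window, offset=(0, 1)):
    """Return (GLCM mean, GLCM dissimilarity) for one moving-window position.

    window -- 2-D list of integer gray levels (e.g. a 3 x 3 neighbourhood)
    offset -- (row, column) displacement between paired pixels; the
              horizontal neighbour used by default is an assumption
    """
    rows, cols = len(window), len(window[0])
    dr, dc = offset
    counts = {}
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = window[r][c], window[r2][c2]
                # Count each pair in both directions (symmetric GLCM).
                counts[(i, j)] = counts.get((i, j), 0) + 1
                counts[(j, i)] = counts.get((j, i), 0) + 1
    total = sum(counts.values())
    mean = sum(i * n for (i, j), n in counts.items()) / total
    dissimilarity = sum(abs(i - j) * n for (i, j), n in counts.items()) / total
    return mean, dissimilarity

# Hypothetical windows with gray levels quantized to 0..3.
uniform = [[2, 2, 2], [2, 2, 2], [2, 2, 2]]   # homogeneous cover
striped = [[0, 3, 0], [0, 3, 0], [0, 3, 0]]   # row-like structure

print(glcm_features(uniform))
print(glcm_features(striped))
```

A texture layer is obtained by evaluating this function at every pixel, with the window centred on that pixel. The homogeneous window yields zero dissimilarity, whereas the striped window yields a high one, which is the kind of structural contrast that DISSIMI is meant to capture.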

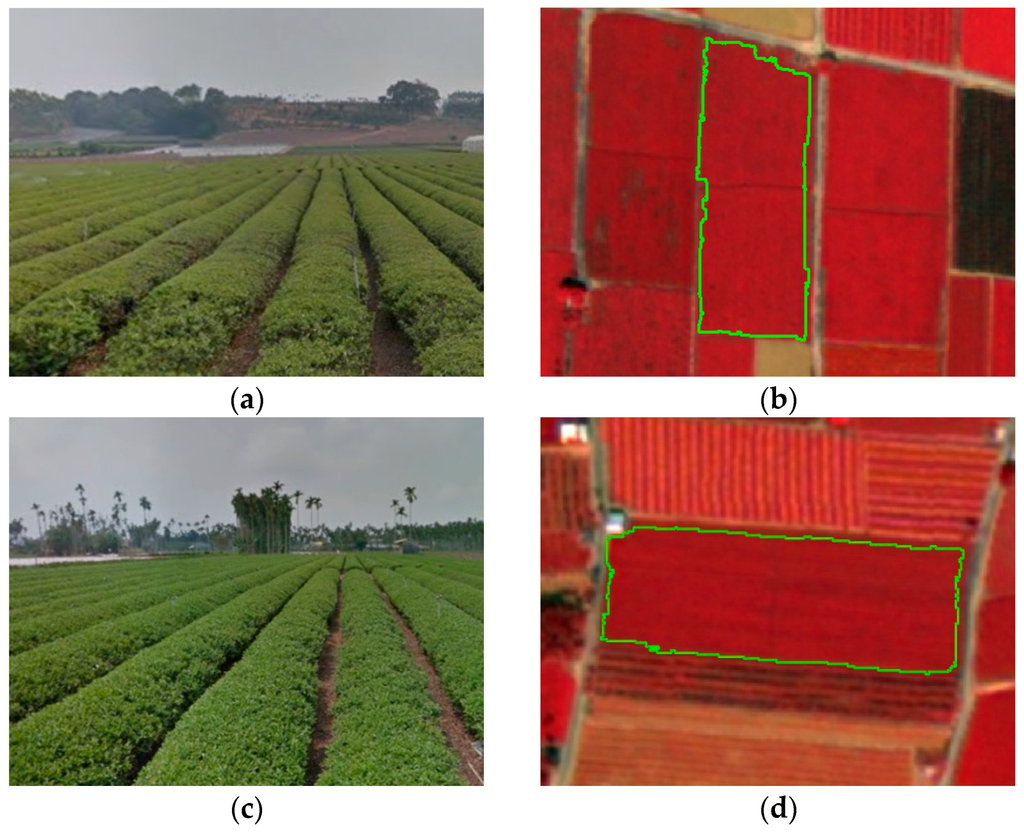

Though previous studies seldom used MEAN and DISSIMI for tea classification, these two measures have been proven effective in improving classification accuracy for agricultural and forest areas [65,83], which indirectly supports the findings of this study. The tea trees in the study area were planted in rows, so their structure and arrangement differed completely from those of the other crops observed on the ground. However, in Table 3 the B coefficients of the spectral variables were still larger overall than those of the structural variables in the pixel-based approach. This means that in pixel-based classification the spectral variables were more effective than the structural variables in distinguishing tea tree from non-tea tree ground cover. This can be explained with the two examples in Figure 9. Even in the pan-sharpened WorldView-2 imagery with a spatial resolution of 0.5 m, not all characteristics of the row-by-row formation could be clearly presented because of the narrow interval between tea trees. For this reason, it is much harder for the structural variables to play to their strengths.

Figure 9.

Comparison of field photographs of tea trees (left) and the corresponding WorldView-2 imagery (right). (a) Photograph taken at 120.6341°E, 23.8684°N on 4 March 2015; (b) the corresponding WorldView-2 image acquired on 22 February 2015; (c) photograph taken at 120.6292°E, 23.8615°N on 5 March 2015; (d) the corresponding WorldView-2 image acquired on 22 February 2015.

As for reducing data dimensionality and the number of input variables, the comparison between PBIA and OBIA in this study showed that OBIA was more effective and sensitive than PBIA in the variable filtering process. The strength of OBIA was attributed to the smaller number of samples and the reduction of spectral heterogeneity within each variable layer when objects are produced from pixels. In this study, this means that with OBIA one could spend less time generating input variables than with PBIA and still achieve satisfactory results. However, it is worth noting that OBIA demands more computational resources, requires specialized software (i.e., eCognition Suite) and is hard to apply to a large study area, even though the number of objects is much smaller than the total number of pixels. These factors often limit the use of OBIA.

One parametric classifier (ML) and three non-parametric classifiers (LR, SVM and RF) were applied in this study. According to the accuracy assessment of the PBIA and OBIA approaches, all OA values were 82.97% or higher. OBIA + ML clearly obtained the best OA among the OBIA approaches, but PBIA + ML was not the best among the PBIA approaches. The main reason why the overall accuracy of the ML classifier was lower than that of the non-parametric approaches (SVM or RF) in PBIA is the bimodal data distribution of the tea-farm training samples, caused by the alternating arrangement of tea plants and corridors. This arrangement might cause tea farms to be divided into two LULC types in PBIA when the ML classifier was applied. In OBIA, however, the basic unit was a polygon containing many WorldView-2 image pixels. Averaging within the tea farm training samples would decrease the difference between basic units, eliminate the spectral heterogeneity within each tea LULC patch, and make the sample distribution closer to a normal distribution. Because the ML classifier performs well on normally distributed data, the classification result of OBIA + ML was much better than that of PBIA + ML.
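The averaging argument above can be illustrated with a small simulation. The sketch is purely illustrative: the reflectance values for tea plants and corridors, the noise level and the object size of 40 pixels are all hypothetical, and real segmentation produces irregular objects rather than fixed-size groups.

```python
import random
import statistics

rng = random.Random(42)  # fixed seed so the sketch is repeatable

def simulate_pixels(n_pixels):
    """Alternate tea-plant and corridor pixels with hypothetical reflectances."""
    pixels = []
    for k in range(n_pixels):
        centre = 0.6 if k % 2 == 0 else 0.2  # plant row vs. corridor
        pixels.append(rng.gauss(centre, 0.03))
    return pixels

def share_near_mean(values, centre, tol=0.05):
    """Fraction of values lying within tol of centre."""
    return sum(abs(v - centre) <= tol for v in values) / len(values)

pixels = simulate_pixels(4000)
# Group consecutive pixels into "objects" of 40 pixels and average each one.
object_means = [statistics.mean(pixels[i:i + 40])
                for i in range(0, len(pixels), 40)]

overall_mean = statistics.mean(pixels)
pixel_share = share_near_mean(pixels, overall_mean)
object_share = share_near_mean(object_means, overall_mean)
print(pixel_share, object_share)
```

At pixel level almost no sample lies near the class mean, because the values cluster around two separate modes; at object level nearly all samples do. A single Gaussian per class, as assumed by the ML classifier, therefore describes the object-level samples far better than the pixel-level ones.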

The minimum distance classifier has been commonly used to classify remote sensing imagery [84,85]. This classifier works well when the distance between class means is large compared to the spread (or randomness) of each class with respect to its mean; moreover, it is a very good approach for dealing with non-normally distributed data. However, this study did not include it among the methods in the PBIA and OBIA approaches. The reason is that the non-tea class spreads much more widely in the feature space, so the distance between the means of the non-tea and tea classes is not large compared to the spread of the non-tea class. In this case, if an unclassified sample lies near the boundary between the non-tea and tea classes in the feature space, the classification result may not be ideal. The ML classifier, in contrast, uses the occurrence frequencies of the non-tea and tea classes to aid the classification process. Without this frequency information, the minimum distance classifier can yield biased classifications. Although the salt and pepper effect may influence the normality of the data distribution, the OBIA approach can decrease the likelihood of this effect. Therefore, we think the ML classifier still has an advantage, especially in the OBIA approach.
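The difference between the two decision rules can be sketched in one dimension. The class means, standard deviations and occurrence frequencies below are hypothetical, and the ML rule is written as a Gaussian likelihood weighted by class frequency, which is an assumption about how the frequencies enter the classifier.

```python
import math

def min_distance_class(x, means):
    """Assign x to the class whose mean is nearest."""
    return min(means, key=lambda c: abs(x - means[c]))

def ml_class(x, means, sds, priors):
    """Assign x to the class with the highest prior-weighted Gaussian likelihood."""
    def score(c):
        z = (x - means[c]) / sds[c]
        # Log of prior * Gaussian density, dropping the constant term.
        return math.log(priors[c]) - math.log(sds[c]) - 0.5 * z * z
    return max(means, key=score)

# Hypothetical one-dimensional feature: non-tea spreads much more widely.
means = {"tea": 0.0, "non-tea": 2.0}
sds = {"tea": 0.5, "non-tea": 2.0}
priors = {"tea": 0.3, "non-tea": 0.7}

x = 0.9  # a sample near the class boundary
print(min_distance_class(x, means), ml_class(x, means, sds, priors))
```

The sample is closer to the tea mean, so the minimum distance rule labels it tea, while the rule that accounts for the wide spread and higher frequency of the non-tea class labels it non-tea.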

As for the SVM classifier, its strong performance in high-dimensional feature spaces, its capability to process non-linear data, its resistance to overfitting and underfitting, and its stability in reducing the salt and pepper effect made it the best classifier in PBIA. However, it was not the best algorithm in OBIA. We inferred two likely reasons: (1) the OBIA image segmentation stage in this study made the sample data resemble a normal distribution, decreased the likelihood of the salt and pepper effect, and improved the performance of the ML algorithm; (2) OBIA image segmentation could merge tea farms and pineapple farms because both tea trees and pineapples are arranged in rows with variable intervals. Although the advantages of SVM with a soft margin were not evident in the application of OBIA, the OA and Kappa values were still good.

As for the LULC monitoring frequency, monitoring tea trees with high spatial resolution commercial satellite imagery is much easier than monitoring many herbaceous crops because tea trees are long-lived perennials. The relatively stable production cycle of tea in Taiwan often starts in the third year after the saplings are planted, followed by one to two harvests every season for at least 30 years. Therefore, images do not need to be collected as frequently as for monitoring herbaceous crops. Nevertheless, this study still has some limitations. First, the study area was limited to flat and gently sloping terrain, yet some tea farms are located in high mountains, where terrain would certainly have an influence through the shadow effect in satellite images. For this reason, we suggest that users apply our workflow in flat or gently sloping areas. Second, in some places physiological changes in non-tea plants might influence the spectral reflectance in satellite images, so we consider our workflow suitable for sub-tropical places where most plants are evergreen.

Finally, to achieve efficient and low-cost tea production prediction or evaluation, we suggest that governments apply the methodology used in this study as a standardized framework, with field surveys and manual re-investigation serving as a backup option for calibration or supplementation when commission and omission errors must be eliminated entirely. The classification method in this study can be further applied in two ways. First, it can benefit countries with the largest tea planting areas (e.g., China, India, Kenya, Sri Lanka, and Vietnam). Second, it can be applied in classification studies of other crops, such as banana, pineapple, sugar cane, and watermelon, that have particular plantation types and farming arrangements.

5. Conclusions

Traditional field surveys or orthophotograph interpretation for the monitoring of sensitive crops are time-consuming and expensive. High spatial and spectral resolution imagery has been proven useful for mapping several crops and helpful in agricultural land management and sustainable agricultural production. However, there is a gap in the research on mapping tea LULC in intensive and heterogeneous agricultural areas due to spectral and spatial resolution restrictions. This study investigated the characteristics of WorldView-2 imagery, the most significant variables and the classification approaches, and used them to develop a stepwise classification framework for tea tree mapping in Taiwan. The results show that the eight original MS bands of WorldView-2 alone are not enough for tea and non-tea crop classification; advanced variables such as GLCM texture indices are necessary. Logistic regression analysis was helpful in feature selection, and the OBIA approach filtered out more insignificant variables than the PBIA approach. In other words, the OBIA approach costs less but generated additional variables.

To achieve higher accuracy in tea tree classification, one parametric classifier (ML) and three non-parametric classifiers (LR, SVM and RF) were applied. All classifiers achieved overall accuracies ranging from 82.97% to 96.04%. The parametric ML classifier showed significantly higher accuracy in OBIA, while its lower accuracy in PBIA was due to the spectral heterogeneity within the tea tree samples. We suggest that the OBIA + ML approach offers the best combination of efficiency and stability in tea tree classification. Overall, the applicability of remote sensing imagery for the evaluation and monitoring of tea trees was well confirmed.

Acknowledgments

We thank the Taiwan Agricultural Research Institute for providing the satellite images. We also thank the many research assistants and colleagues at Feng Chia University who assisted with the ground surveys.

Author Contributions

Yung-Chung Matt Chuang and Yi-Shiang Shiu conceived and designed the experiments; Yi-Shiang Shiu performed the experiments; Yung-Chung Matt Chuang and Yi-Shiang Shiu analyzed the data and contributed reagents/materials/analysis tools; Yung-Chung Matt Chuang wrote the paper.

Conflicts of Interest

The founding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, and in the decision to publish the results.

References

- Barlow, K.M.; Christy, B.P.; O’Leary, G.J.; Riffkin, P.A.; Nuttall, J.G. Simulating the impact of extreme heat and frost events on wheat crop production: A review. Field Crop. Res. 2015, 171, 109–119.

- Kou, N.; Zhao, F. Effect of multiple-feedstock strategy on the economic and environmental performance of thermochemical ethanol production under extreme weather conditions. Biomass Bioenergy 2011, 35, 608–616.

- Leblois, A.; Quirion, P.; Sultan, B. Price vs. weather shock hedging for cash crops: Ex ante evaluation for cotton producers in Cameroon. Ecol. Econ. 2014, 101, 67–80.

- Van Bussel, L.G.J.; Müller, C.; van Keulen, H.; Ewert, F.; Leffelaar, P.A. The effect of temporal aggregation of weather input data on crop growth models’ results. Agric. For. Meteorol. 2011, 151, 607–619.

- Van Oort, P.A.J.; Timmermans, B.G.H.; Meinke, H.; Van Ittersum, M.K. Key weather extremes affecting potato production in The Netherlands. Eur. J. Agron. 2012, 37, 11–22.

- Gbegbelegbe, S.; Chung, U.; Shiferaw, B.; Msangi, S.; Tesfaye, K. Quantifying the impact of weather extremes on global food security: A spatial bio-economic approach. Weather Clim. Extrem. 2014, 4, 96–108.

- Huang, J.; Jiang, J.; Wang, J.; Hou, L. Crop Diversification in Coping with Extreme Weather Events in China. J. Integr. Agric. 2014, 13, 677–686.

- Iizumi, T.; Ramankutty, N. How do weather and climate influence cropping area and intensity? Glob. Food Sec. 2015, 4, 46–50.

- Silva, J.A.; Matyas, C.J.; Cunguara, B. Regional inequality and polarization in the context of concurrent extreme weather and economic shocks. Appl. Geogr. 2015, 61, 105–116.

- Van Wart, J.; Grassini, P.; Yang, H.; Claessens, L.; Jarvis, A.; Cassman, K.G. Creating long-term weather data from thin air for crop simulation modeling. Agric. For. Meteorol. 2015, 209–210, 49–58.

- Zhang, W.; Zheng, C.; Song, Z.; Deng, A.; He, Z. Farming systems in China: Innovations for sustainable crop production. In Crop Physiology: Applications for Genetic Improvement and Agronomy; Elsevier: Amsterdam, The Netherlands, 2014.

- Wijeratne, M.A. Vulnerability of Sri Lanka tea production to global climate change. In Climate Change Vulnerability and Adaptation in Asia and the Pacific; Springer: New York, NY, USA, 1996; pp. 87–94.

- Azapagic, A.; Bore, J.; Cheserek, B.; Kamunya, S.; Elbehri, A. The global warming potential of production and consumption of Kenyan tea. J. Clean. Prod. 2016, 112, 4031–4040.

- Smit, B.; Cai, Y. Climate change and agriculture in China. Glob. Environ. Chang. 1996, 6, 205–214.

- Liu, Z.-W.; Wu, Z.-J.; Li, X.-H.; Huang, Y.; Li, H.; Wang, Y.-X.; Zhuang, J. Identification, classification, and expression profiles of heat shock transcription factors in tea plant (Camellia sinensis) under temperature stress. Gene 2016, 576, 52–59.

- Croce, K.-A. Latino(a) and Burmese elementary school students reading scientific informational texts: The interrelationship of the language of the texts, students’ talk, and conceptual change theory. Linguist. Educ. 2015, 29, 94–106.

- Easterling, W.E. Adapting North American agriculture to climate change in review. Agric. For. Meteorol. 1996, 80, 1–53.

- Hansen, M.C.; Egorov, A.; Potapov, P.V.; Stehman, S.V.; Tyukavina, A.; Turubanova, S.A.; Roy, D.P.; Goetz, S.J.; Loveland, T.R.; Ju, J.; et al. Monitoring conterminous United States (CONUS) land cover change with Web-Enabled Landsat Data (WELD). Remote Sens. Environ. 2014, 140, 466–484.

- McCullum, C.; Benbrook, C.; Knowles, L.; Roberts, S.; Schryver, T. Application of Modern Biotechnology to Food and Agriculture: Food Systems Perspective. J. Nutr. Educ. Behav. 2003, 35, 319–332.

- Gollapalli, M.; Li, X.; Wood, I. Automated discovery of multi-faceted ontologies for accurate query answering and future semantic reasoning. Data Knowl. Eng. 2013, 87, 405–424.

- Baker, E.W.; Niederman, F. Integrating the IS functions after mergers and acquisitions: Analyzing business-IT alignment. J. Strateg. Inf. Syst. 2014, 23, 112–127.

- Milenov, P.; Vassilev, V.; Vassileva, A.; Radkov, R.; Samoungi, V.; Dimitrov, Z.; Vichev, N. Monitoring of the risk of farmland abandonment as an efficient tool to assess the environmental and socio-economic impact of the Common Agriculture Policy. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 218–227.

- Wilson, B.T.; Lister, A.J.; Riemann, R.I. A nearest-neighbor imputation approach to mapping tree species over large areas using forest inventory plots and moderate resolution raster data. For. Ecol. Manag. 2012, 271, 182–198.

- Vegas Galdos, F.; Álvarez, C.; García, A.; Revilla, J.A. Estimated distributed rainfall interception using a simple conceptual model and Moderate Resolution Imaging Spectroradiometer (MODIS). J. Hydrol. 2012.

- Sheeren, D.; Bonthoux, S.; Balent, G. Modeling bird communities using unclassified remote sensing imagery: Effects of the spatial resolution and data period. Ecol. Indic. 2014, 43, 69–82.

- Li, W.; Li, H.; Zhao, L. Estimating Rice Yield by HJ-1A Satellite Images. Rice Sci. 2011, 18, 142–147.

- Setiawan, Y.; Lubis, M.I.; Yusuf, S.M.; Prasetyo, L.B. Identifying Change Trajectory over the Sumatra’s Forestlands Using Moderate Image Resolution Imagery. Procedia Environ. Sci. 2015, 24, 189–198.

- Leinenkugel, P.; Kuenzer, C.; Oppelt, N.; Dech, S. Characterisation of land surface phenology and land cover based on moderate resolution satellite data in cloud prone areas—A novel product for the Mekong Basin. Remote Sens. Environ. 2013, 136, 180–198.

- Saadat, H.; Adamowski, J.; Tayefi, V.; Namdar, M.; Sharifi, F.; Ale-Ebrahim, S. A new approach for regional scale interrill and rill erosion intensity mapping using brightness index assessments from medium resolution satellite images. CATENA 2014, 113, 306–313.

- Zhang, Z.; Wang, X.; Zhao, X.; Liu, B.; Yi, L.; Zuo, L.; Wen, Q.; Liu, F.; Xu, J.; Hu, S. A 2010 update of National Land Use/Cover Database of China at 1:100000 scale using medium spatial resolution satellite images. Remote Sens. Environ. 2014, 149, 142–154.

- Bridhikitti, A.; Overcamp, T.J. Estimation of Southeast Asian rice paddy areas with different ecosystems from moderate-resolution satellite imagery. Agric. Ecosyst. Environ. 2012, 146, 113–120.

- Myint, S.W.; Gober, P.; Brazel, A.; Grossman-Clarke, S.; Weng, Q. Per-pixel vs. object-based classification of urban land cover extraction using high spatial resolution imagery. Remote Sens. Environ. 2011, 115, 1145–1161.

- Soares Machado, C.A.; Knopik Beltrame, A.M.; Shinohara, E.J.; Giannotti, M.A.; Durieux, L.; Nóbrega, T.M.Q.; Quintanilha, J.A. Identifying concentrated areas of trip generators from high spatial resolution satellite images using object-based classification techniques. Appl. Geogr. 2014, 53, 271–283.

- Mora, B.; Wulder, M.A.; White, J.C. Segment-constrained regression tree estimation of forest stand height from very high spatial resolution panchromatic imagery over a boreal environment. Remote Sens. Environ. 2010, 114, 2474–2484.

- Wania, A.; Kemper, T.; Tiede, D.; Zeil, P. Mapping recent built-up area changes in the city of Harare with high resolution satellite imagery. Appl. Geogr. 2014, 46, 35–44.

- Ardila, J.P.; Bijker, W.; Tolpekin, V.A.; Stein, A. Context-sensitive extraction of tree crown objects in urban areas using VHR satellite images. Int. J. Appl. Earth Obs. Geoinf. 2012, 15, 57–69.

- Diaz-Varela, R.A.; Zarco-Tejada, P.J.; Angileri, V.; Loudjani, P. Automatic identification of agricultural terraces through object-oriented analysis of very high resolution DSMs and multispectral imagery obtained from an unmanned aerial vehicle. J. Environ. Manag. 2014, 134, 117–126.

- Bunting, P.; Lucas, R.M.; Jones, K.; Bean, A.R. Characterisation and mapping of forest communities by clustering individual tree crowns. Remote Sens. Environ. 2010, 114, 2536–2547.

- Pu, R.; Landry, S. A comparative analysis of high spatial resolution IKONOS and WorldView-2 imagery for mapping urban tree species. Remote Sens. Environ. 2012, 124, 516–533.

- Fan, Y.; Koukal, T.; Weisberg, P.J. A sun–crown–sensor model and adapted C-correction logic for topographic correction of high resolution forest imagery. ISPRS J. Photogramm. Remote Sens. 2014, 96, 94–105.

- Zarco-Tejada, P.J.; Diaz-Varela, R.; Angileri, V.; Loudjani, P. Tree height quantification using very high resolution imagery acquired from an unmanned aerial vehicle (UAV) and automatic 3D photo-reconstruction methods. Eur. J. Agron. 2014, 55, 89–99.

- Gomez, C.; Mangeas, M.; Petit, M.; Corbane, C.; Hamon, P.; Hamon, S.; De Kochko, A.; Le Pierres, D.; Poncet, V.; Despinoy, M. Use of high-resolution satellite imagery in an integrated model to predict the distribution of shade coffee tree hybrid zones. Remote Sens. Environ. 2010, 114, 2731–2744.

- Zhou, J.; Proisy, C.; Descombes, X.; le Maire, G.; Nouvellon, Y.; Stape, J.-L.; Viennois, G.; Zerubia, J.; Couteron, P. Mapping local density of young Eucalyptus plantations by individual tree detection in high spatial resolution satellite images. For. Ecol. Manag. 2013, 301, 129–141.

- Garrity, S.R.; Allen, C.D.; Brumby, S.P.; Gangodagamage, C.; McDowell, N.G.; Cai, D.M. Quantifying tree mortality in a mixed species woodland using multitemporal high spatial resolution satellite imagery. Remote Sens. Environ. 2013, 129, 54–65.

- Gärtner, P.; Förster, M.; Kurban, A.; Kleinschmit, B. Object based change detection of Central Asian Tugai vegetation with very high spatial resolution satellite imagery. Int. J. Appl. Earth Obs. Geoinf. 2014, 31, 110–121.

- Dons, K.; Smith-Hall, C.; Meilby, H.; Fensholt, R. Operationalizing measurement of forest degradation: Identification and quantification of charcoal production in tropical dry forests using very high resolution satellite imagery. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 18–27.

- Cho, M.A.; Malahlela, O.; Ramoelo, A. Assessing the utility WorldView-2 imagery for tree species mapping in South African subtropical humid forest and the conservation implications: Dukuduku forest patch as case study. Int. J. Appl. Earth Obs. Geoinf. 2015, 38, 349–357.

- Kayitakire, F.; Hamel, C.; Defourny, P. Retrieving forest structure variables based on image texture analysis and IKONOS-2 imagery. Remote Sens. Environ. 2006, 102, 390–401.

- Radoux, J.; Defourny, P. A quantitative assessment of boundaries in automated forest stand delineation using very high resolution imagery. Remote Sens. Environ. 2007, 110, 468–475.

- Silva, T.S.F.; Costa, M.P.F.; Melack, J.M. Spatial and temporal variability of macrophyte cover and productivity in the eastern Amazon floodplain: A remote sensing approach. Remote Sens. Environ. 2010, 114, 1998–2010.

- Lamonaca, A.; Corona, P.; Barbati, A. Exploring forest structural complexity by multi-scale segmentation of VHR imagery. Remote Sens. Environ. 2008, 112, 2839–2849.

- Weiers, S.; Bock, M.; Wissen, M.; Rossner, G. Mapping and indicator approaches for the assessment of habitats at different scales using remote sensing and GIS methods. Landsc. Urban Plan. 2004, 67, 43–65.

- Sawaya, K.E.; Olmanson, L.G.; Heinert, N.J.; Brezonik, P.L.; Bauer, M.E. Extending satellite remote sensing to local scales: Land and water resource monitoring using high-resolution imagery. Remote Sens. Environ. 2003, 88, 144–156.

- Delenne, C.; Durrieu, S.; Rabatel, G.; Deshayes, M. From pixel to vine parcel: A complete methodology for vineyard delineation and characterization using remote-sensing data. Comput. Electron. Agric. 2010, 70, 78–83.

- Antonarakis, A.S.; Richards, K.S.; Brasington, J. Object-based land cover classification using airborne LiDAR. Remote Sens. Environ. 2008, 112, 2988–2998.

- Laliberte, A.S.; Rango, A.; Herrick, J.E.; Fredrickson, E.L.; Burkett, L. An object-based image analysis approach for determining fractional cover of senescent and green vegetation with digital plot photography. J. Arid Environ. 2007, 69, 1–14.

- Jacquin, A.; Misakova, L.; Gay, M. A hybrid object-based classification approach for mapping urban sprawl in periurban environment. Landsc. Urban Plan. 2008, 84, 152–165. [Google Scholar] [CrossRef]

- Conchedda, G.; Durieux, L.; Mayaux, P. An object-based method for mapping and change analysis in mangrove ecosystems. ISPRS J. Photogramm. Remote Sens. 2008, 63, 578–589. [Google Scholar] [CrossRef]

- Bock, M.; Xofis, P.; Mitchley, J.; Rossner, G.; Wissen, M. Object-oriented methods for habitat mapping at multiple scales – Case studies from Northern Germany and Wye Downs, UK. J. Nat. Conserv. 2005, 13, 75–89. [Google Scholar] [CrossRef]

- Zhang, L.; Huang, X. Object-oriented subspace analysis for airborne hyperspectral remote sensing imagery. Neurocomputing 2010, 73, 927–936. [Google Scholar] [CrossRef]

- Ouma, Y.O.; Josaphat, S.S.; Tateishi, R. Multiscale remote sensing data segmentation and post-segmentation change detection based on logical modeling: Theoretical exposition and experimental results for forestland cover change analysis. Comput. Geosci. 2008, 34, 715–737. [Google Scholar] [CrossRef]

- Castillejo-González, I.L.; López-Granados, F.; García-Ferrer, A.; Peña-Barragán, J.M.; Jurado-Expósito, M.; de la Orden, M.S.; González-Audicana, M. Object- and pixel-based analysis for mapping crops and their agro-environmental associated measures using QuickBird imagery. Comput. Electron. Agric. 2009, 68, 207–215. [Google Scholar] [CrossRef]

- Ke, Y.; Quackenbush, L.J. Forest species classification and tree crown delineation using Quickbird imagery. In Proceedings of the ASPRS 2007 Annual Conference, Tampa, FL, USA, 7–11 May 2007.

- Kim, S.-R.; Lee, W.-K.; Kwak, D.-A.; Biging, G.S.; Gong, P.; Lee, J.-H.; Cho, H.-K. Forest Cover Classification by Optimal Segmentation of High Resolution Satellite Imagery. Sensors 2011, 11, 1943–1958. [Google Scholar] [CrossRef] [PubMed]

- Ghosh, A.; Joshi, P.K. A comparison of selected classification algorithms for mappingbamboo patches in lower Gangetic plains using very high resolution WorldView 2 imagery. Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 298–311. [Google Scholar] [CrossRef]

- Updike, T.; Comp, C. Radiometric use of WorldView-2 imagery. Tech. Note 2010, 1–17. [Google Scholar]

- Padwick, C.; Deskevich, M.; Pacifici, F.; Smallwood, S. Worldview-2 pan-sharpening. In Proceedings of the ASPRS 2010 Annual Conference, San Diego, CA, USA, 26–30 April 2010.

- Fienberg, S. The Analysis of Cross-Classified Categorical Data, 2nd ed.; MIT Press: Cambridge, MA, USA, 1985. [Google Scholar]

- Agresti, A. Building and Applying Logistic Regression Models. In Categorical Data Analysis; John Wiley & Sons, Inc.: New York, NY, USA, 2003; pp. 211–266. [Google Scholar]

- Menard, S.W. Applied Logistic Regression Analysis. In Applied Logistic Regression Analysis; SAGE Publishing: Thousand Oaks, CA, USA, 2002. [Google Scholar]

- Ohlmacher, G.C.; Davis, J.C. Using multiple logistic regression and GIS technology to predict landslide hazard in northeast Kansas, USA. Eng. Geol. 2003, 69, 331–343. [Google Scholar] [CrossRef]

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16. [Google Scholar] [CrossRef]

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Cutler, D.R.; Edwards, T.C.; Beard, K.H.; Cutler, A.; Hess, K.T.; Gibson, J.; Lawler, J.J. Random forests for classification in ecology. Ecology 2007, 88, 2783–2792. [Google Scholar] [CrossRef] [PubMed]

- Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46. [Google Scholar] [CrossRef]

- Congalton, R.G.; Green, K. Assessing the Accuracy of Remotely Sensed Data: Principles and Practices; Lewis Publishers: Boca Raton, FL, USA, 1999. [Google Scholar]

- Foody, G.M. Thematic map comparison: Evaluating the statistical significance of differences in classification accuracy. Photogramm. Eng. Remote Sens. 2004, 70, 627–633. [Google Scholar] [CrossRef]

- Ratle, F.; Camps-Valls, G.; Weston, J. Semisupervised Neural Networks for Efficient Hyperspectral Image Classification. Geosci. IEEE Trans. Remote Sens. 2010, 48, 2271–2282. [Google Scholar] [CrossRef]

- Clark, M.L.; Roberts, D.A. Species-Level Differences in Hyperspectral Metrics among Tropical Rainforest Trees as Determined by a Tree-Based Classifier. Remote Sens. 2012, 4, 1820. [Google Scholar] [CrossRef]

- Immitzer, M.; Atzberger, C.; Koukal, T. Tree species classification with Random forest using very high spatial resolution 8-band worldView-2 satellite data. Remote Sens. 2012, 4, 2661–2693. [Google Scholar] [CrossRef]

- Schuster, C.; Förster, M.; Kleinschmit, B. Testing the red edge channel for improving land-use classifications based on high-resolution multi-spectral satellite data. Int. J. Remote Sens. 2012, 33, 5583–5599. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Viña, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, 1–4. [Google Scholar] [CrossRef]

- Pathak, V.; Dikshit, O. A new approach for finding an appropriate combination of texture parameters for classification. Geocarto Int. 2010, 25, 295–313. [Google Scholar] [CrossRef]

- Zhong, Y.; Zhang, L. An Adaptive Artificial Immune Network for Supervised Classification of Multi-/Hyperspectral Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2012, 50, 894–909. [Google Scholar] [CrossRef]

- Yang, C.; Odvody, G.N.; Fernandez, C.J.; Landivar, J.A.; Minzenmayer, R.R.; Nichols, R.L. Evaluating unsupervised and supervised image classification methods for mapping cotton root rot. Precis. Agric. 2014, 16, 201–215. [Google Scholar] [CrossRef]

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).