1. Introduction

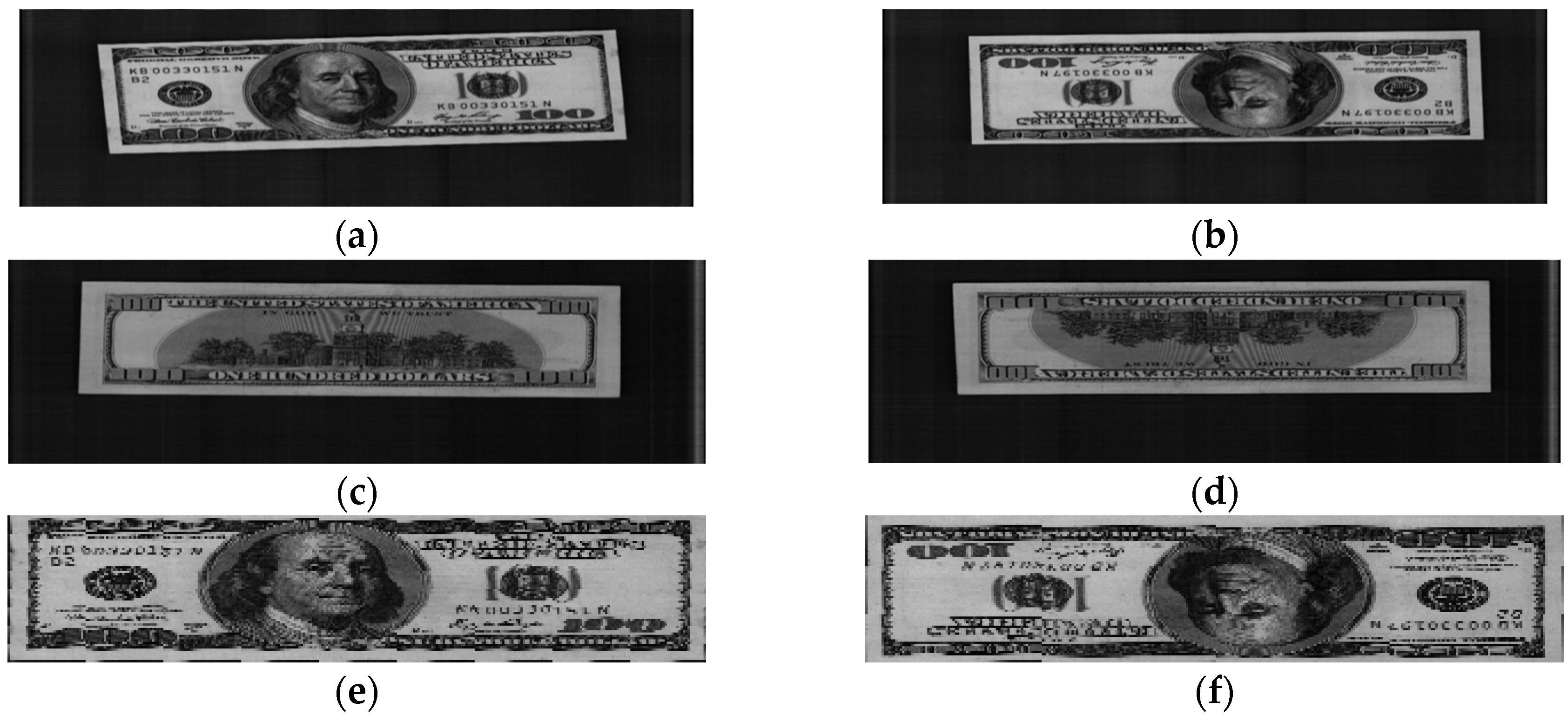

The accurate and reliable recognition of banknotes plays an important role in the growing popularity of payment facilities such as ATMs and currency-counting machines. There have been many studies on this classification functionality that have been based on the optical characteristics of banknotes. Most studies on classification of banknotes by denomination (e.g., $1, $5, $10, etc.) have been based on images of banknotes captured by visible-light sensors. In general, a banknote can appear in four directions on two sides, i.e., the forward and reverse images of the front and back sides, and the captured images of these input directions are used in recognition of banknotes.

Previous studies using visible-light images of banknotes can be divided into those that used whole banknote images for recognition [1,2,3,4,5,6] and those that used certain regions of banknote images [7,8,9,10,11,12]. Wu et al. [1] proposed a banknote orientation recognition method that feeds the average brightness of eight uniform rectangles on a banknote image into a three-layer back-propagation (BP) network classifier. However, their experiments only focused on orientation recognition of one type of Chinese banknote, the Renminbi (RMB) 100 Yuan note. A Chinese banknote recognition method using a three-layer neural network (NN) was proposed by Zhang et al. [2]. This method uses linear transforms of gray images to reduce the effect of noise and uses the edge characteristics of the transformed image as the input vectors to the NN classifier. This method was applied to Sri Lankan banknote recognition in [3]. A BP network was used as the classifier in research by Gai et al. [4]. In this research, recognition features were extracted by applying generalized Gaussian density (GGD) to capture the statistical characteristics of quaternion wavelet transform (QWT) coefficients on banknote images. To recognize multiple banknotes, Hassanpour and Farahabadi considered the texture characteristics of paper currencies as a random process and used a hidden Markov model (HMM) for classification [6]. The Indian banknote recognition method proposed by Sharma et al. [5] uses a local binary pattern (LBP) operator to extract features from banknote images and classifies banknote types using Euclidean distances to template images.

In studies concerning regions of banknotes, not all the image data that a banknote provides have been used for recognition; only certain areas on banknote images have been selected and used. This helps to reduce the amount of input data required and puts the focus on regions of banknotes that have high degrees of discrimination. A Bangladeshi banknote recognition system was proposed using axis symmetric masks to select regions of banknote images before feeding information into a multilayer perceptron network to reduce the network size and adapt it to banknote flipping [7]. Axis-symmetrical masks were also applied to the neuro-recognition system proposed by Takeda and Nishikage [8] for analysis of Euro currency using two image sensors. Takeda et al. also proposed a mask optimization technique using a genetic algorithm (GA) [10] that could be used to select good masks using the sum of the pixels uncovered by masks, called the slab value [9], as the input to the recognition neural network. Component-based banknote recognition with speeded-up robust features (SURF) was proposed by Hasanuzzaman et al. [11]. In this method, components that provide specific information about banknotes, such as denomination numbers, portraits, and building textures, are cropped and considered to be reference regions. In the multi-currency classification method proposed by Youn et al. [12], multi-template correlation matching is used to determine the discriminant areas of each banknote that are highly correlated among banknotes of the same types and poorly correlated among those of different types.

Another approach to extracting classification features involves using statistical procedures such as principal component analysis (PCA) [13,14,15,16] or canonical analysis (CA) [17] to reduce the size of the feature vector. In research using learning vector quantization (LVQ) as the classifier, input feature vectors have been extracted by PCA from banknote data acquired by various types of sensors, such as sensors of various wavelengths [13], or point and line sensors [14]. The banknote recognition method proposed by Rong et al. [15] for a rail transit automatic fare collection system employs PCA to extract features and build matching templates from banknote data acquired by various sensors, such as magnetic, ultraviolet (UV) fluorescence and infrared (IR) sensors. In the hierarchical recognition method proposed by Park et al. [16], United States dollar (USD) banknotes were classified by front or back side and by forward or backward direction using a support vector machine (SVM) and then recognized by denomination ($1, $2, $5, etc.) using a K-means algorithm based on the PCA features extracted from sub-sampled banknote images. In research by Choi et al. [17], CA was used for size reduction and to increase the discriminating power of features extracted from Korean won images using a wavelet transform.

There have also been studies combining both of the above feature extraction approaches. The Indian currency recognition method proposed by Vishnu and Omman [18] selects five regions of interest (ROI): the textures of center numerals, shapes, Reserve Bank of India (RBI) seals, latent images, and micro letters on scanned banknote images. PCA is subsequently used for dimensionality reduction of the extracted features. Finally, the recognition results are validated using a classifier implemented with WEKA software [18]. Texture-based analysis is also used in the Indian banknote recognition method proposed by Verma et al. [19]. In this method, linear discriminant analysis (LDA) is applied to ROIs containing textures on banknote images for feature reduction, and SVM is applied for classification. Here, the ROI selection is conducted with the help of a set of external tools called Mazda. In the smartphone-based US banknote recognition system proposed by Grijalva et al. [20], regions of interest are located in the right parts of banknote images. From these regions, weight vectors are extracted using PCA and are compared with those of a training set using the Mahalanobis distance to determine the denomination of an input banknote. Although ROIs were defined in [20], it is uncertain whether the selected areas on banknote images are indeed those with the highest discriminating power for recognition purposes.

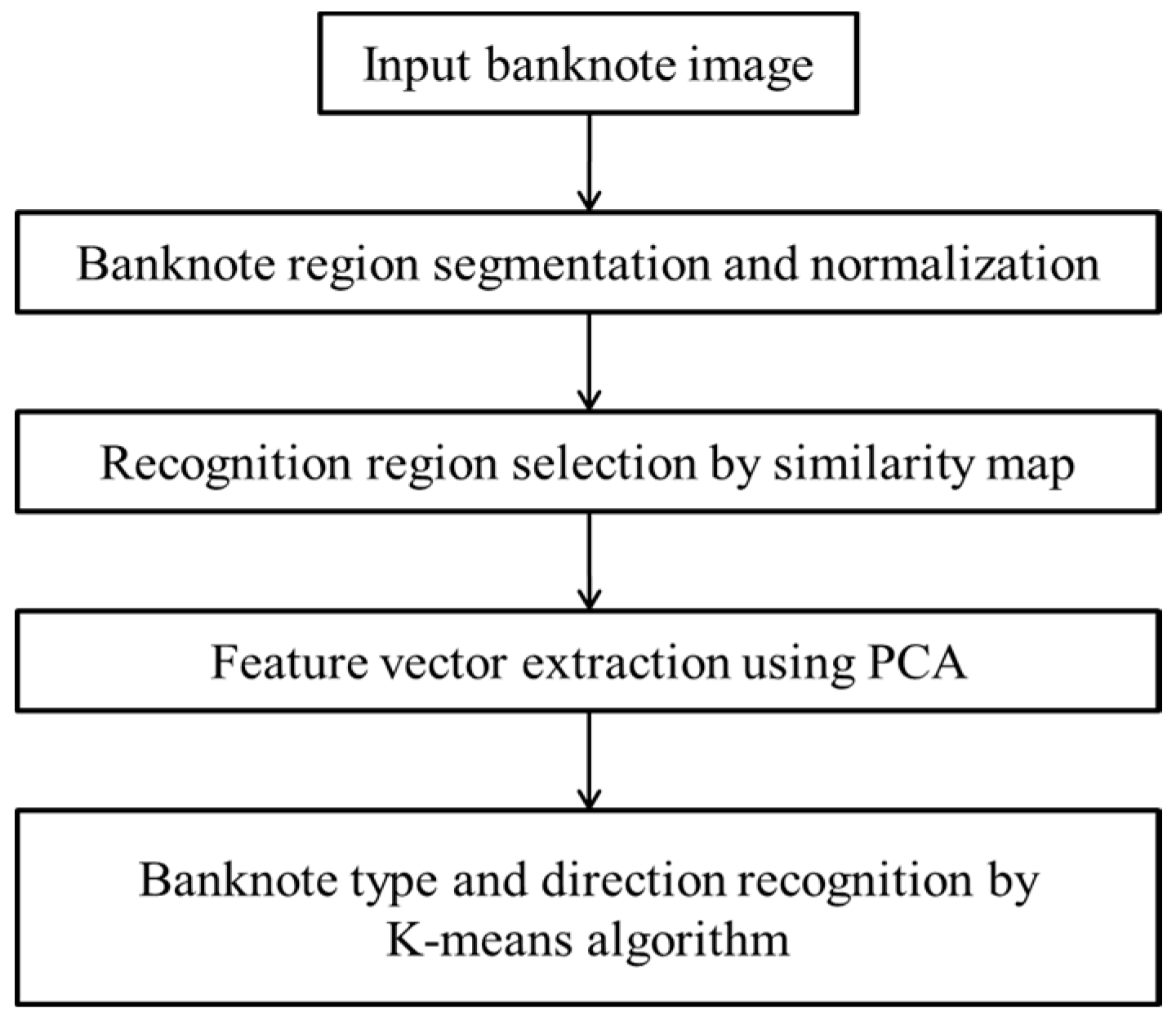

To overcome these limitations, we propose a banknote recognition method that uses a combination of both of the feature extraction approaches mentioned above. From the sub-sampled banknote images, we select areas that have high degrees of similarity among banknotes in the same class and high degrees of difference among those in different classes. The discriminant features are then extracted from the selected data using PCA, and the banknote type is determined by a classifier based on the K-means algorithm. Our method is novel in the following respects:

- (1) Using the sub-sampled banknote region from the captured image, the local areas that have high discriminating power are selected using a similarity map. This map is obtained based on the ratio of correlation map values, considering between-class and in-class dissimilarities among banknote images.

- (2) Optimally reduced features for recognition are obtained from the selected local areas based on PCA, which reduces both the noise components and the processing time.

- (3) The performance of our method has been measured using both normal circulated banknotes and test notes, and the effectiveness of our method has been confirmed in the harsh testing environment of banknote recognition.

- (4) Through experiments with various types of banknotes, namely US dollars (USD), South African rand (ZAR), Angolan kwanza (AOA) and Malawian kwacha (MWK), we have confirmed that our method can be applied irrespective of the type of banknote.

Table 1 presents a comparison between our research and previous studies. The remainder of this paper is organized as follows: Section 2 presents the details of the proposed banknote recognition method. Experimental results are presented in Section 3, and conclusions drawn from the results are presented in Section 4.

3. Experimental Results

In this study, we used a database consisting of 99,236 images captured from both sides of 49,618 USD banknotes. The images in the database include the four directions of 17 types of banknotes: $1, $2, $5, recent $5, most recent $5, $10, recent $10, most recent $10, $20, recent $20, most recent $20, $50, recent $50, most recent $50, $100, recent $100 and most recent $100. The number of images in each banknote class is shown in Table 2. In our experimental database of USD banknote images, both the number of images and the number of classes are larger than those in previous studies, as shown in Table 3.

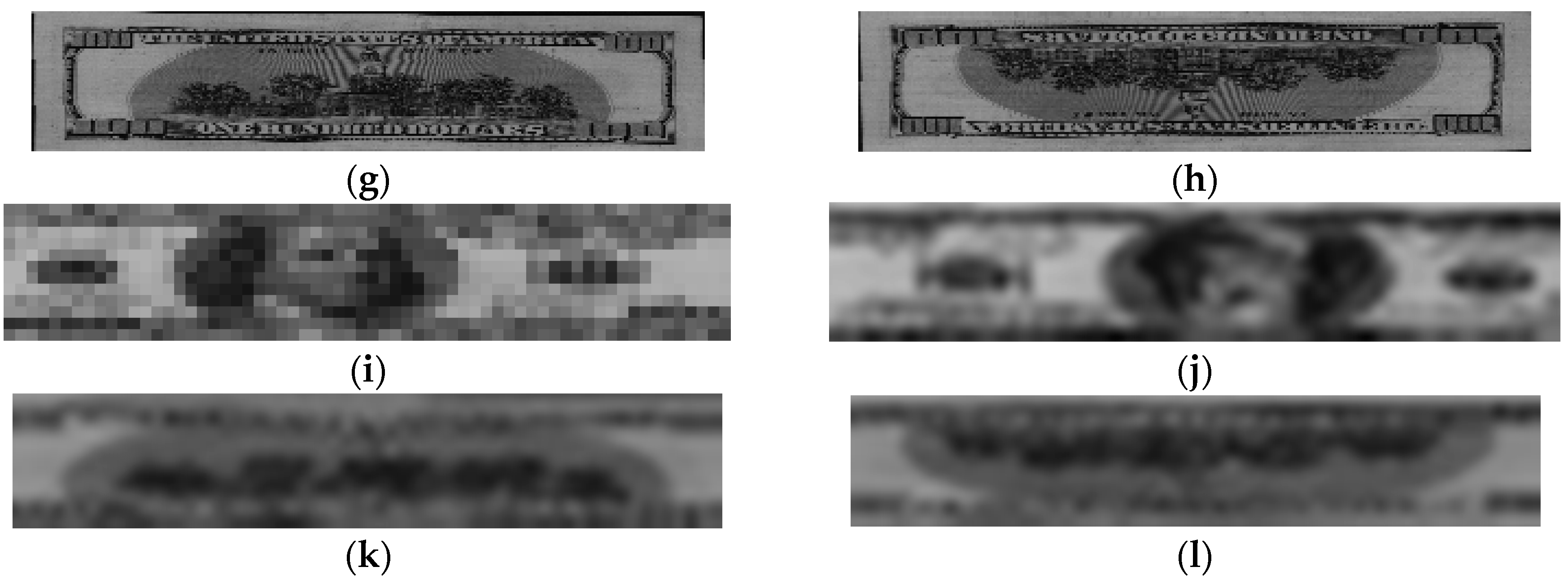

First, we calculated the similarity map and applied half-histogram thresholding to obtain the mask for selecting the discriminative areas in a 64 × 12-pixel sub-sampled banknote image. Using the resulting binary mask shown in Figure 5, we selected 388 gray values of the pixels corresponding to the white areas of the mask from the sub-sampled image for banknote classification.
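The mask-based selection step can be illustrated with a minimal sketch. It assumes the sub-sampled image and the binary mask are stored as nested lists of equal shape; the function name `select_masked_pixels` and the toy image and mask are hypothetical and do not reproduce the actual mask of Figure 5 (which keeps 388 pixels).

```python
# Minimal sketch of mask-based pixel selection (illustrative only).
# WIDTH and HEIGHT follow the 64 x 12 sub-sampled size stated in the text;
# the image and mask below are toy data, not the mask shown in Figure 5.
WIDTH, HEIGHT = 64, 12


def select_masked_pixels(image, mask):
    """Return the gray values at positions where the mask is non-zero (white),
    scanned row by row."""
    if len(image) != len(mask) or any(
        len(img_row) != len(mask_row) for img_row, mask_row in zip(image, mask)
    ):
        raise ValueError("image and mask must have the same shape")
    return [
        pixel
        for img_row, mask_row in zip(image, mask)
        for pixel, keep in zip(img_row, mask_row)
        if keep
    ]


# Toy gray image: value depends on the pixel position.
image = [[(x + y) % 256 for x in range(WIDTH)] for y in range(HEIGHT)]
# Toy mask: keep the left half of every row (32 * 12 = 384 pixels).
mask = [[1 if x < 32 else 0 for x in range(WIDTH)] for y in range(HEIGHT)]

features = select_masked_pixels(image, mask)
print(len(features))  # number of selected gray values
```

The resulting one-dimensional list plays the role of the input vector that is subsequently passed to PCA.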

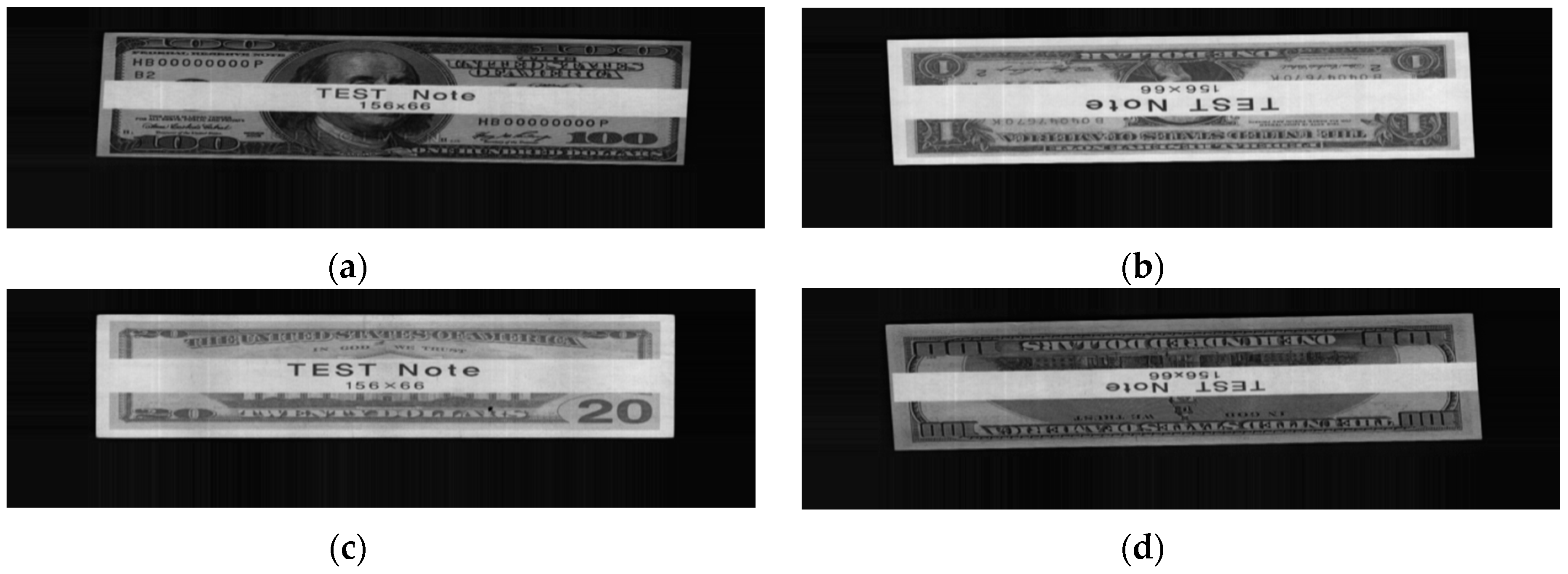

From the selected banknote image data, we extracted the classification features using PCA. In this step, the reliability of the classification results is affected by the dimensionality of the extracted PCA feature vector, as explained in

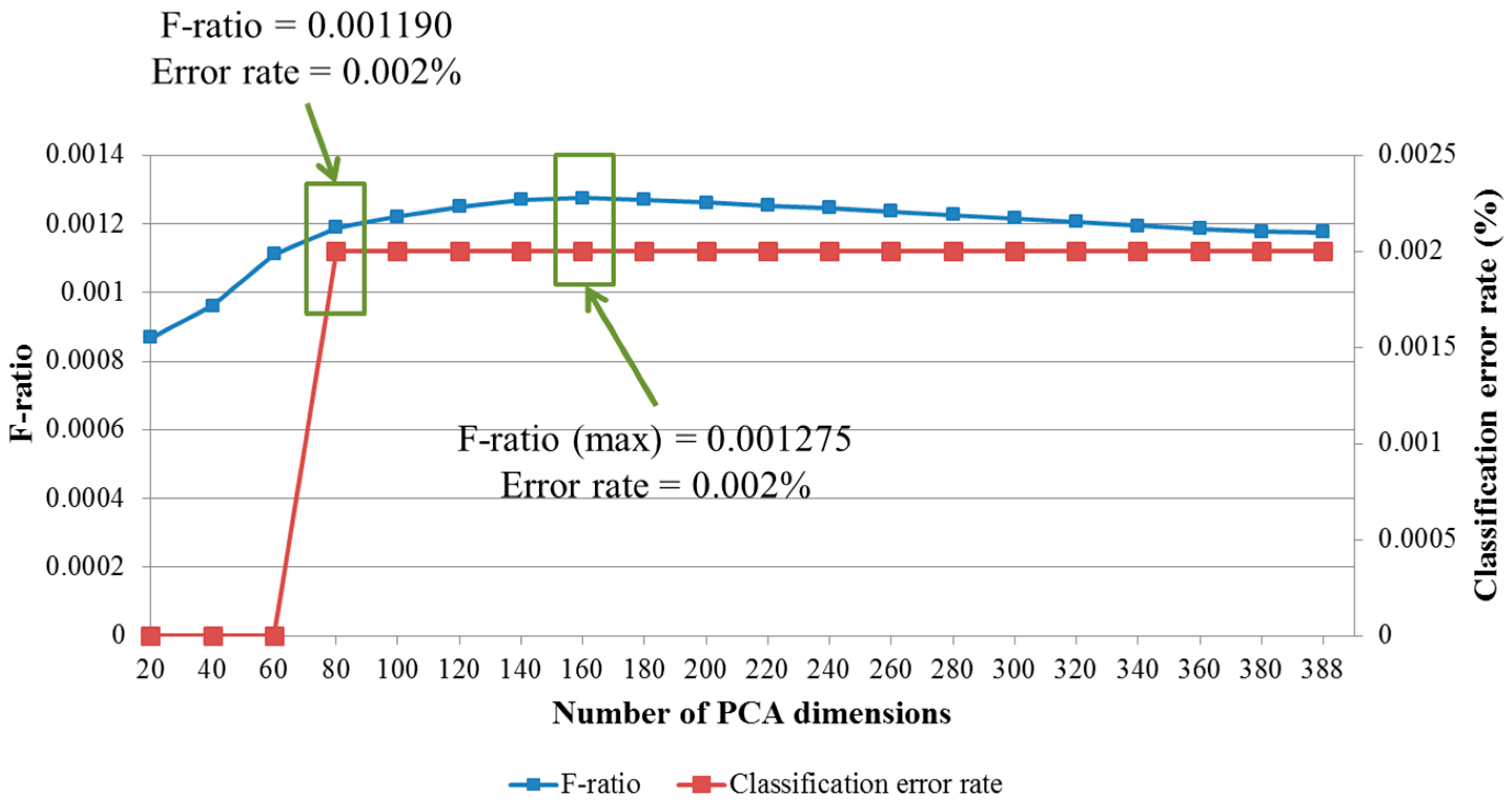

Section 2.4. Therefore, in subsequent experiments, we determined the optimal PCA dimensionality that yields the best classification accuracy and reliability in term of maximization of the F-ratio given by Equation (9). A USD test note database consisting of 2794 images was collected for our rejection test experiments. We considered test notes and false accepted cases of banknotes to belong to the same distribution, such that the remaining distribution consists of only genuine accepted cases. The experimental results for the F-ratio calculation and classification accuracy for various numbers of PCA dimensions are shown in

Figure 8. The error rate was calculated based on unsupervised K-means clustering for 68 classes in the USD banknote database.

It can be seen from the

Figure 8 that although there were no classification errors in the cases in which 20, 40, or 60 PCA dimensions were used for feature extraction, the separations between the distributions of genuine accepted cases and rejected cases were not good in terms of low ratios between each distribution’s scatter measures. The scatter plot of the matching distances when 20 PCA dimensions were used is shown in

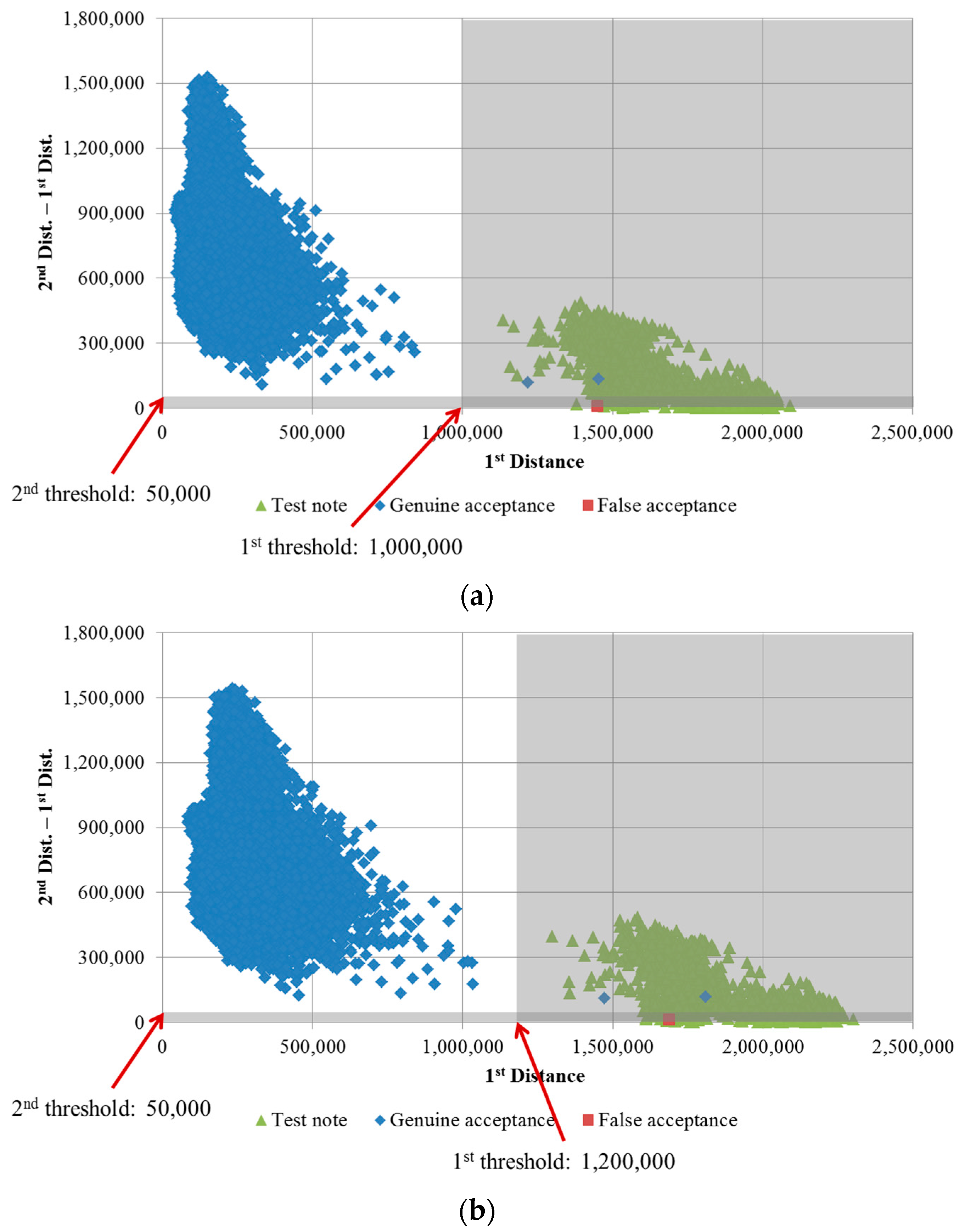

Figure 6a. The F-ratio reached a maximum value of 0.001275 at a dimensionality of 160. As the number of extracted features increases, much more processing time is required. Therefore, we considered two cases—the use of 80 or 160 PCA dimensions for feature extraction—in subsequent experiments conducted to evaluate the recognition accuracy achieved. Scatter plots of the distances for the cases of extraction of 80 and 160 PCA dimensions for banknote and test note matching tests are shown in

Figure 9.

With the parameters for banknote feature extraction determined, we evaluated the accuracy of the proposed recognition method in comparison to the accuracy reported for methods used in previous studies, as shown in Table 4. When we used 80 or 160 PCA dimensions for feature extraction, there were no changes in the error rates and rejection rates, which were 0.002% and 0.004%, respectively. The rejected cases correspond to the banknotes for which the 1st matching distances were higher than the 1st threshold and for which the differences between the 1st and 2nd distances were lower than the 2nd threshold. The 2nd threshold is used to ensure that the recognition result is reliable, as explained in Section 2.4.2. In Figure 9, the positions of rejected cases on the scatter plots are in the gray areas. Because the total error and false rejection rate was 0.006%, the correct recognition rate of our method was 99.994%.
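The two-threshold rejection rule can be sketched as follows. This is only an illustration under stated assumptions: matching distances are taken to be Euclidean distances to class centroids, and a banknote is rejected when either condition holds (1st distance above the 1st threshold, or the gap between the 1st and 2nd distances below the 2nd threshold), which is one possible reading of the description above. The function names, centroids, and threshold values are hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify_with_rejection(feature, centroids, t1, t2):
    """Nearest-centroid decision with a two-threshold rejection test.

    centroids: dict mapping class label -> centroid vector (at least two classes).
    t1: threshold on the 1st (smallest) matching distance.
    t2: threshold on the difference between the 2nd and 1st distances.
    Returns (label, accepted, d1, d2).
    Assumption: either failing condition causes rejection.
    """
    ranked = sorted((euclidean(feature, c), label) for label, c in centroids.items())
    d1, label = ranked[0]
    d2, _ = ranked[1]
    rejected = d1 > t1 or (d2 - d1) < t2
    return label, not rejected, d1, d2


# Toy two-class example with hypothetical centroids and thresholds.
centroids = {"A": [0.0, 0.0], "B": [10.0, 0.0]}
print(classify_with_rejection([1.0, 0.0], centroids, t1=3.0, t2=2.0))
print(classify_with_rejection([4.8, 0.0], centroids, t1=3.0, t2=2.0))
```

In the first call the sample lies close to class A and far from class B, so it is accepted; in the second it lies almost midway between the two centroids and fails both tests.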

It can be seen from Table 4 that although the numbers of banknote images and classes in our USD database were greater than in other studies, the recognition accuracy of our method was higher than that in previous studies in terms of low false recognition rates and rejection rates. Consequently, we can confirm that our proposed method outperforms the previously proposed methods for USD banknote recognition.

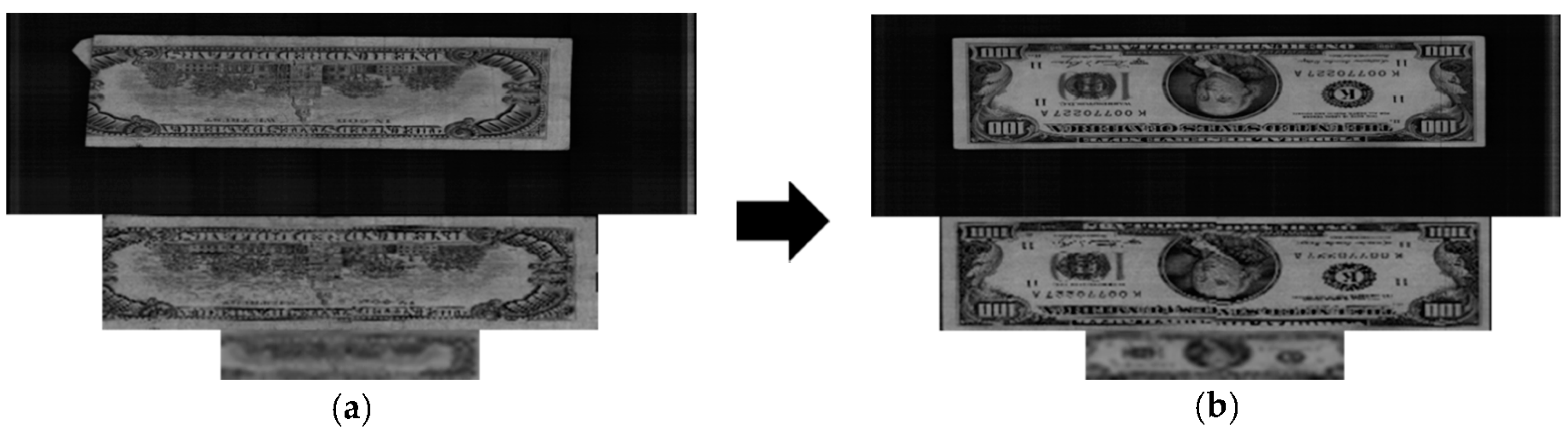

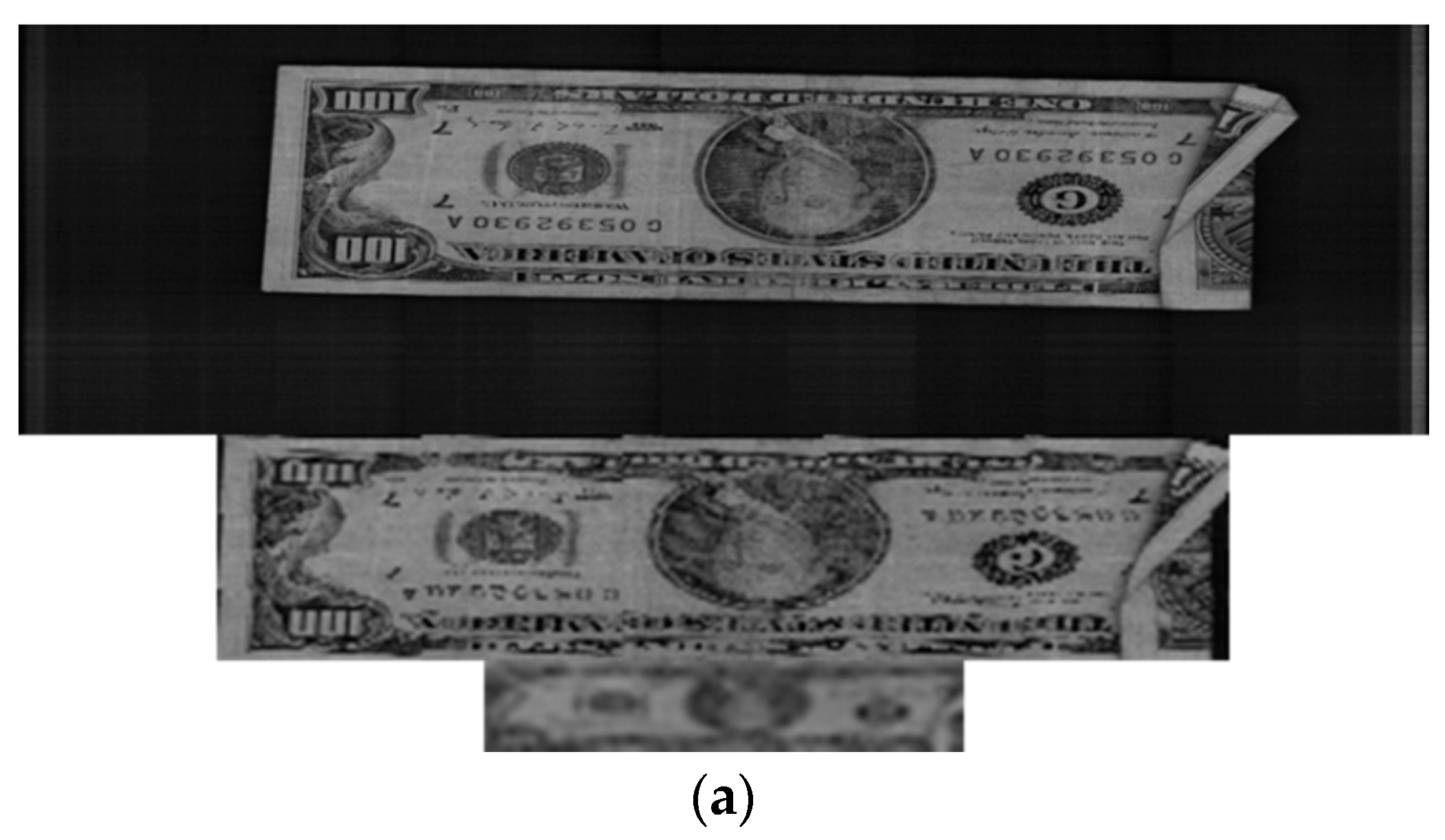

The false recognition case for our method is shown in Figure 10, in which the uppermost image is the original banknote image and the middle and lower images are the deskewed image and the 64 × 12-pixel sub-sampled image of the upper image, respectively. The banknote on the left was misclassified as belonging to the class of the banknote on the right. This error case occurred because the image was captured from a folded banknote, as seen in Figure 10.

Figure 11 presents illustrations of rejection cases in our experiments in which the images are arranged in the same manner as in Figure 10. Although these input banknote images were correctly recognized, their matching distances were too high, so their positions on the scatter plots in Figure 9 were in the rejection region, where the 1st distances are greater than the 1st threshold. It can be seen in Figure 11 that the upper case corresponded to images of a folded banknote, similar to the false recognition case in Figure 10. The remaining rejected images were captured from a severely damaged banknote with a tear, folded corner, and writing patterns. This damage caused the 1st distances between the features of these banknotes and their genuine classes to exceed the 1st rejection threshold, and the images were consequently rejected by the system.

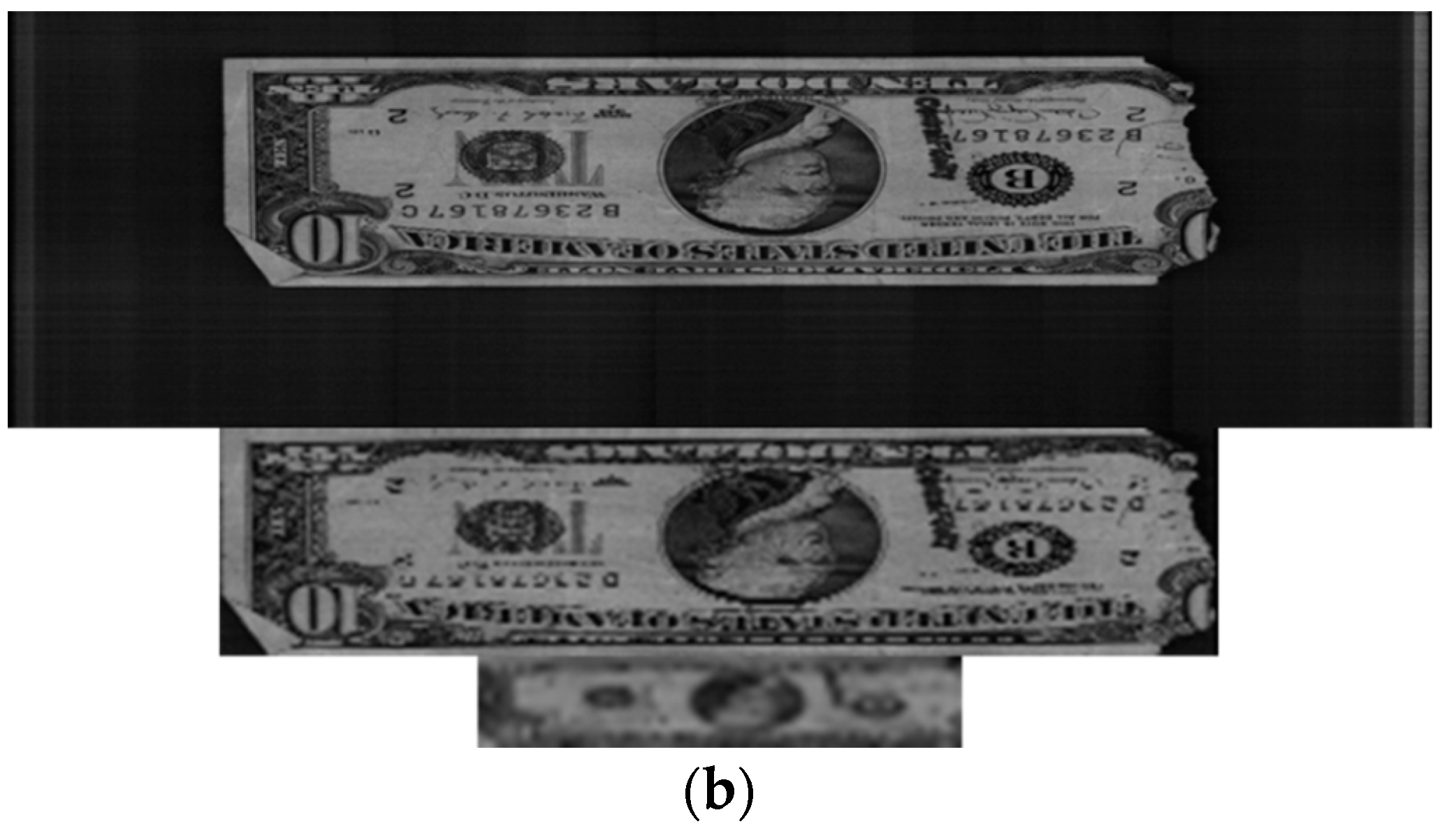

In subsequent experiments, we applied the proposed method to other countries’ banknote image databases to confirm the performance of our method for different types of paper currency. The banknotes used in these experiments were South African rand (ZAR), Angolan kwanza (AOA) and Malawian kwacha (MWK). The numbers of banknote images and classes in the experimental databases are shown in Table 5. Figure 12 shows some examples of banknote images for each type of currency.

Because test notes similar to those used in the USD experiments were not available for the AOA, MWK and ZAR currencies, we tested the recognition accuracy on these databases using the same parameters as those for USD recognition. In addition, because there has been no previous research on recognition of paper banknotes from Angola, Malawi, or South Africa, we were not able to compare the accuracy of our method with any methods applied to these currencies in previous studies. Our proposed method correctly recognized 100% of the banknote images in the AOA and ZAR databases and 99.675% of the banknotes in the MWK database. The experimental results for the similarity maps and the recognition error rates for the AOA, MWK and ZAR databases are given in Table 6.

As the similarity maps and the resulting masks in Table 6 show, most of the selected areas for recognition in the MWK and ZAR banknote images were on the two sides, whereas the recognition area in the AOA banknote images was located in the middle of the images. The reason for these results is that the patterns on these banknotes, such as numbers, seals, photos, and portraits, are asymmetrically distributed. In the cases of MWK and ZAR, the photo and portrait patterns are printed toward the two sides of the banknotes, while on AOA banknotes, the feature patterns differ more in the middle areas, depending on the banknotes’ denominations and directions. As a result, the high-discriminating-power regions for AOA, MWK, and ZAR banknote images were determined as shown in Table 6.

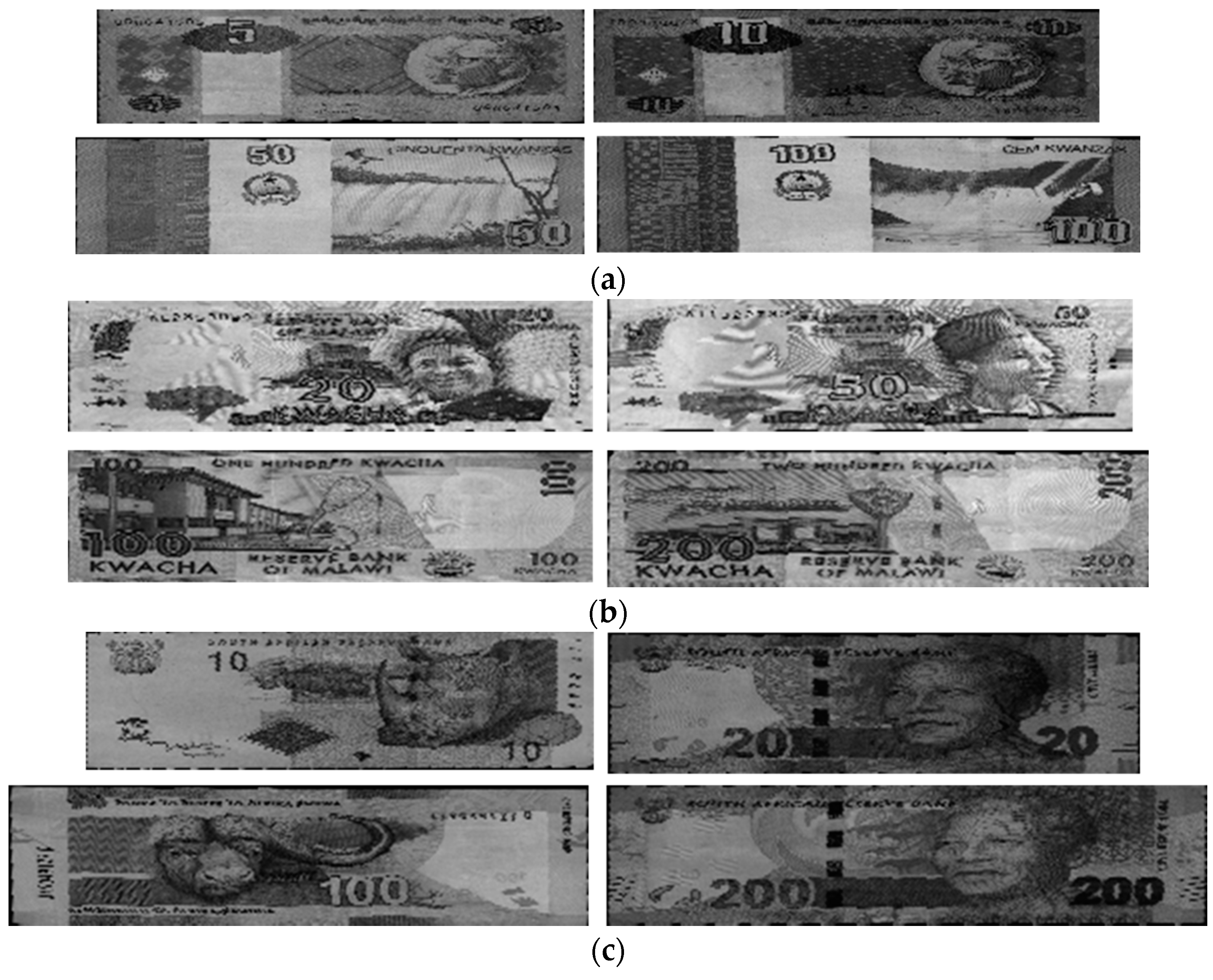

Examples of error cases in the MWK database are shown in Figure 13. In each pair, the smaller lower image was sub-sampled from the upper banknote image. In this figure, the MK100 banknote images of the front side and forward back side shown in the left column were misclassified as MK500 banknotes in the same direction, examples of which are shown in the right column. It can be seen from Figure 13 that in the areas selected for recognition by the map in Table 6, the shapes of the textures in the sub-sampled images of the MK100 and MK500 banknotes are somewhat similar to each other. This resulted in misclassification in these cases. However, the banknote images in the AOA and ZAR databases were correctly recognized with error rates of 0%, so we can confirm that our proposed method can be applied to paper currencies from various countries and achieve good performance in terms of matching accuracy.

We also measured the processing time for our recognition method. This experiment was conducted using the USD banknote image database and a desktop personal computer (PC) with a 2.33 GHz CPU and 4 GB of memory. In these experiments, we compared the time required when using 80 and 160 PCA dimensions for the feature extraction process. When 160 PCA dimensions were used, the system was able to process approximately 442 images/s (1000/2.26 ms). When the number of features extracted by PCA was reduced by half, the processing speed increased to approximately 568 images/s (1000/1.76 ms). Therefore, we used 80 PCA dimensions for final decisions using our banknote recognition method. The average processing times are shown in Table 7.
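The throughput figures quoted above follow directly from the per-image processing times. The short sketch below reproduces that arithmetic; the function name `images_per_second` is introduced here only for illustration, and the only inputs are the 2.26 ms and 1.76 ms values stated in the text.

```python
def images_per_second(ms_per_image):
    """Convert an average per-image processing time (ms) into a throughput (images/s)."""
    return 1000.0 / ms_per_image


# Per-image times reported in the text for 160 and 80 PCA dimensions.
timings_ms = {160: 2.26, 80: 1.76}

for dims, ms in timings_ms.items():
    print(f"{dims} PCA dimensions: {ms} ms/image, about {int(images_per_second(ms))} images/s")

# Relative speed-up obtained by halving the number of PCA dimensions.
speedup = timings_ms[160] / timings_ms[80]
print(f"speed-up: {speedup:.2f}x")
```

Halving the number of PCA dimensions therefore yields a speed-up of roughly 1.28 times rather than 2 times, which suggests that part of the per-image time does not depend on the feature dimensionality.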

In a previous study [16] in which a higher-powered PC (3.5-GHz CPU, 8 GB of memory) was used, up to 5.83 ms was required to recognize an image. Our proposed method required less processing time for the following reasons. First, because we used deskewed banknote images of smaller sizes (up to 400 × 120 pixels, compared to 1212 × 246 in [16]), the sub-sampling time was reduced. Second, the number of extracted features used for recognition in our method was smaller (80 dimensions, compared to 192 in [16]). Therefore, the feature extraction and matching processes required less time than with the method used in [16].

In addition, we measured the processing time of a banknote-counting machine using a Texas Instruments (TI) digital media processor (chip). This machine required approximately 1 ms to process and recognize one input banknote using our proposed method with 80 PCA features extracted from a visible-light banknote image.

We also calculated the total memory usage required to employ our proposed method with a counting machine with limited resources. The measurement details are given in Table 8. The total memory usage was 931,924 bytes for our method. Our proposed method outperformed the USD recognition method described in [16] in terms of both processing time (15.6 ms) and memory usage (1.6 MB).