Representing Geospatial Environment Observation Capability Information: A Case Study of Managing Flood Monitoring Sensors in the Jinsha River Basin

Abstract

1. Introduction

1.1. Discovery of Earth Environmental Sensors under the Sensor Web Environment

1.2. Representation of Observation Capability Information

1.3. Representation Requirements of Geospatial Environmental Observation Capability Information

2. Representation Model of Geospatial Environmental Observation Capability Information

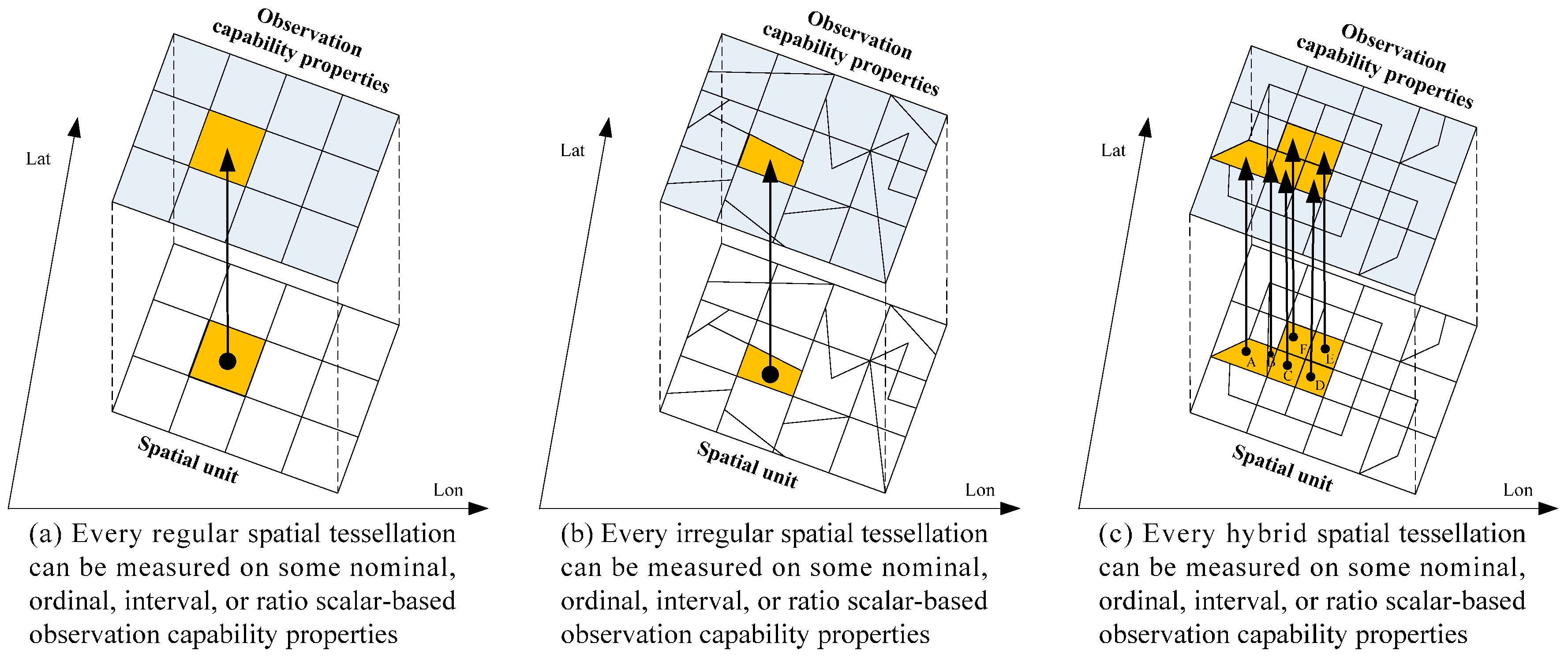

2.1. Space Abstraction in the Model

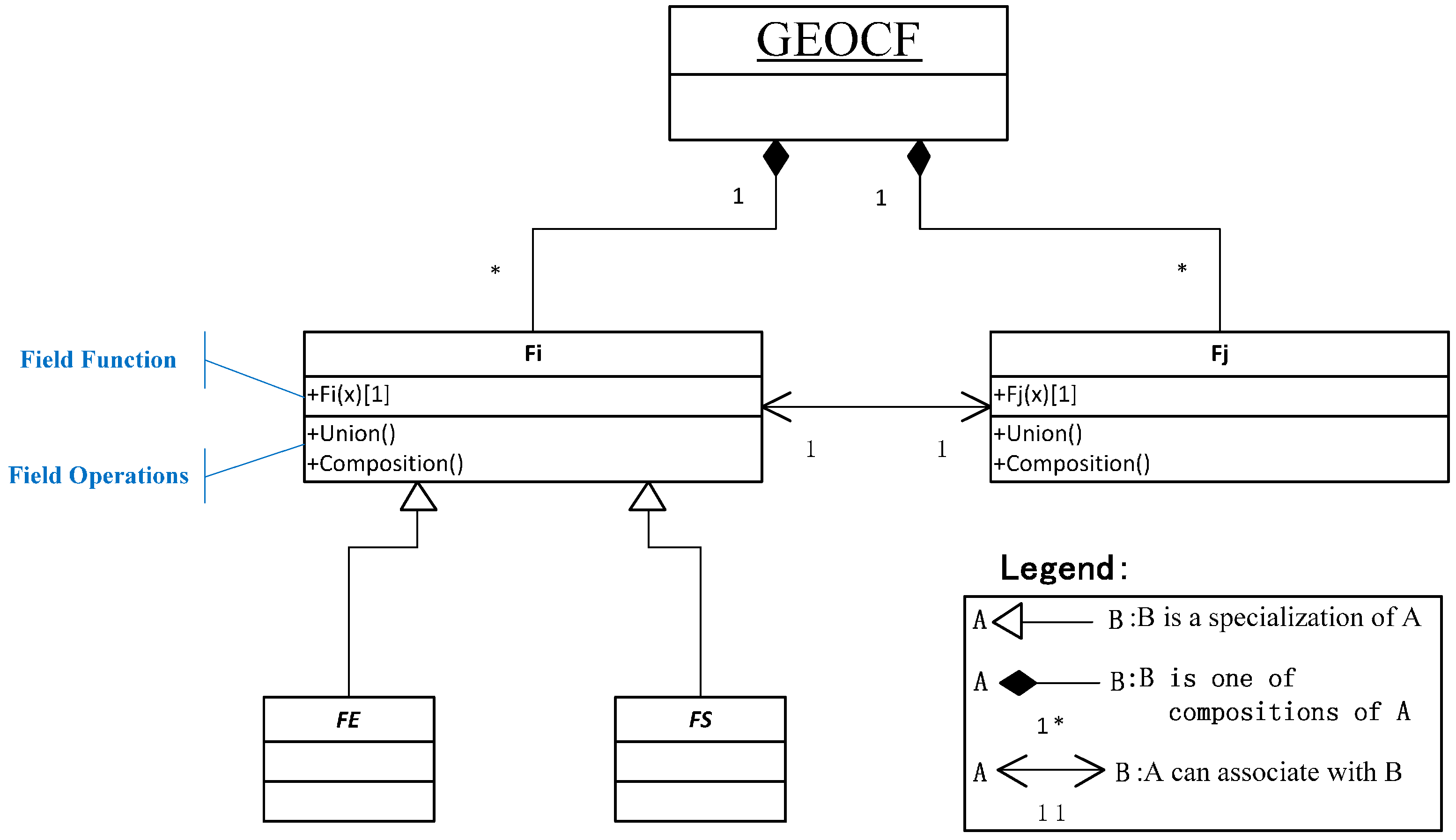

2.2. Framework of GEOCF

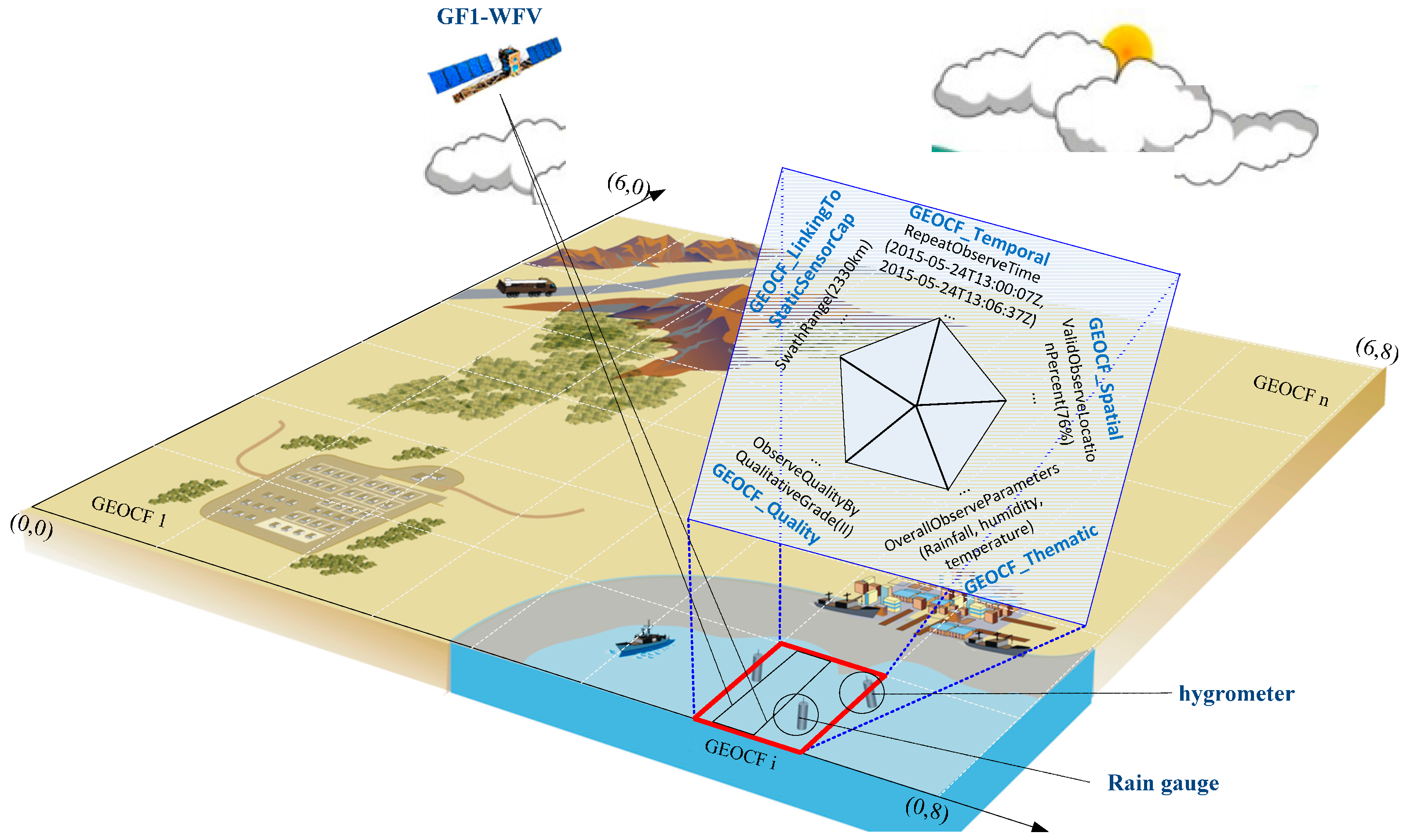

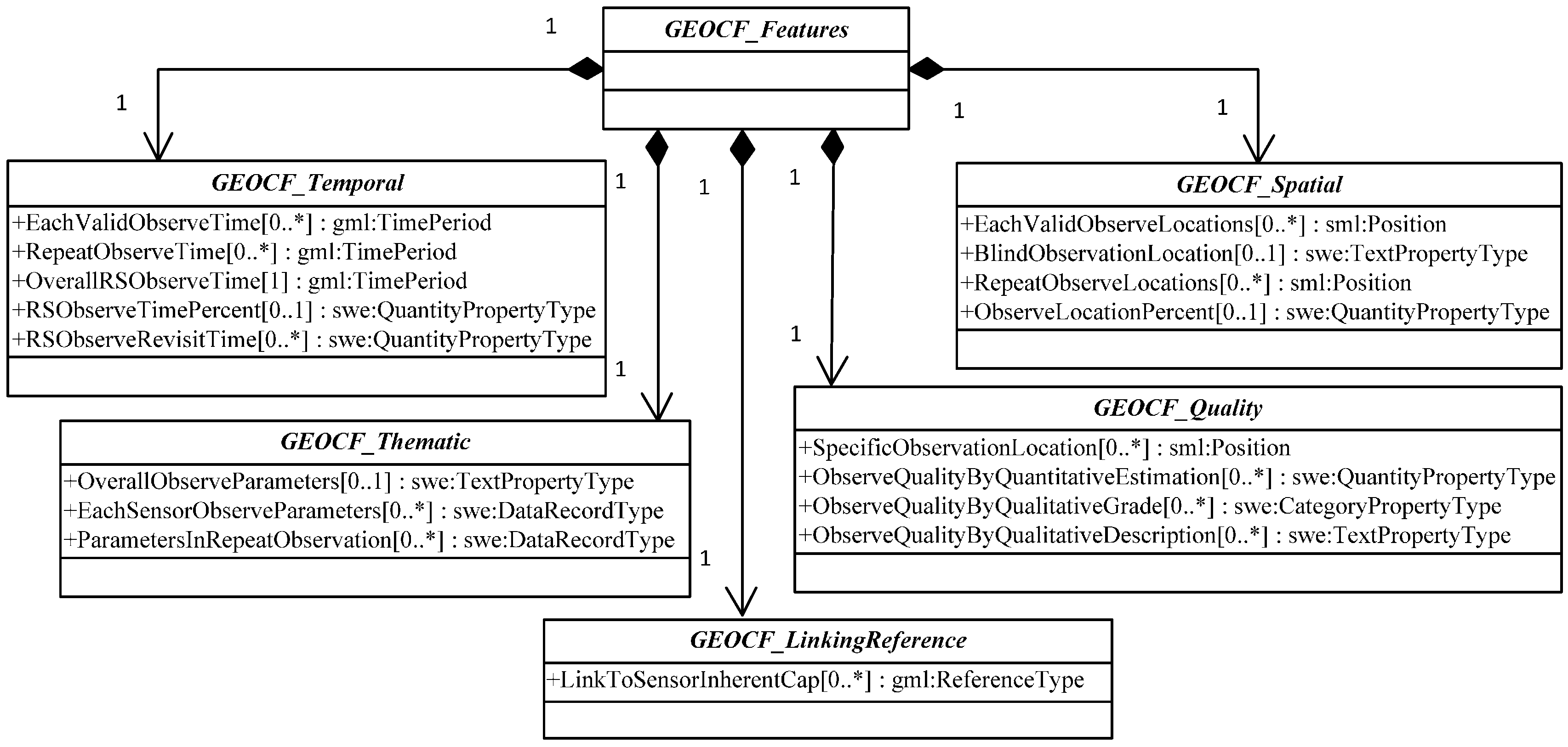

2.3. Feature Components of GEOCF Information Representation Model

- (1)

- GEOCF_Temporal: This feature dimension identifies the period when a certain environmental disaster occurs, and sensor observation planning decisions are needed. The features regarded as GEOCF_Temporal features include EachValidObserveTime, RepeatObserveTime, OverallRSObserveTime, and RSObserveTimePercent.

- (2)

- GEOCF_Spatial: This feature dimension refers to the spaces where an environmental disaster occurs and may include a valid, repeatedly observed, or blind observation location. Therefore, GEOCF_Spatial features include EachValidObserveLocations, BlindObservationLocation, RepeatObserveLocations, SensorsObserveCoverageInterlinkedLocations, and ValidObserveLocationPercent.

- (3)

- GEOCF_Thematic: This feature dimension presents the intended applications of the available environmental sensors, including OverallObserveParameters, EachSensorObserveParameters, ParametersInRepeatObservationLocations, and ParametersInInterlinkedObserveCoverageLocations.

- (4)

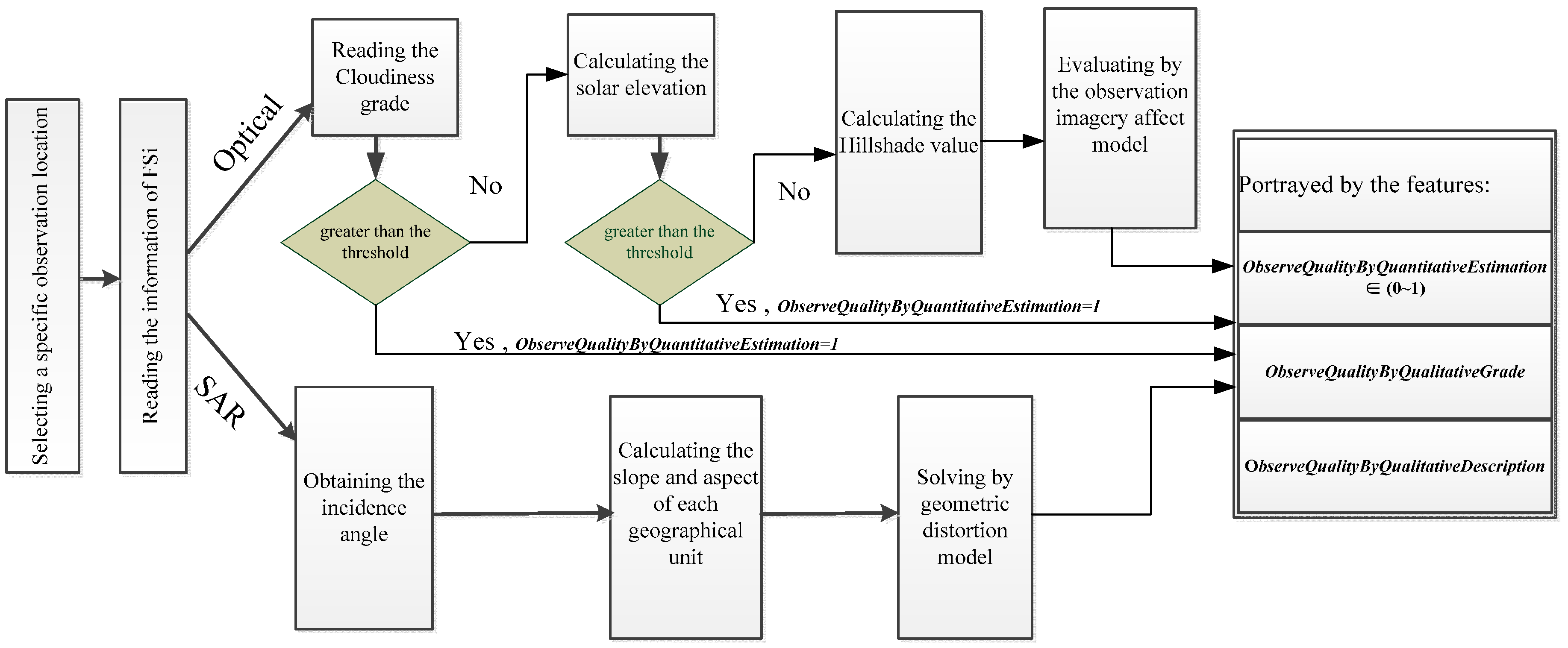

- GEOCF_Quality: This feature dimension is used to quantitatively and qualitatively illustrate the observation quality of the sensors, which may be affected by the geospatial environmental features in a specific geospatial unit. The features of this dimension include ObserveQualityByQuantitativeEstimation, ObserveQualityByQualitativeGrade, and ObserveQualityBy QualitativeDescription.

- (5)

- GEOCF_LinkingReference: In addition to the dynamic observation capability features, the features of sensor-inherent static observation capabilities should be included, such as SwathRange, BandsCategory, BandCharacteristics, and NadirResolution. These features are linked from our previous representation model of Earth observation sensor static observation capability information [11].
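To make the temporal and spatial feature dimensions more concrete, the sketch below derives several of the listed features from per-sensor inputs. It is a minimal illustration under stated assumptions, not the authors' implementation. The assumptions are: each sensor's valid observation time is a list of non-overlapping `(start, end)` windows in hours; each sensor's valid observation locations are cells of a hypothetical grid over the study area; OverallRSObserveTime is read as the union of all windows within the requested period; and RepeatObserveTime / RepeatObserveLocations are read as time and cells observed by at least two sensors. The helper names `temporal_features` and `spatial_features` are hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple

Interval = Tuple[float, float]  # (start, end) in hours; the unit is an assumption
Cell = Tuple[int, int]          # (row, col) of a hypothetical grid over the study area


def _coverage_lengths(windows: Dict[str, List[Interval]], period: Interval) -> Tuple[float, float]:
    """Sweep over window endpoints within the requested period.

    Returns (time observed by >= 1 sensor, time observed by >= 2 sensors).
    Assumes each sensor's own windows do not overlap one another.
    """
    p0, p1 = period
    events: List[Tuple[float, int]] = []
    for intervals in windows.values():
        for start, end in intervals:
            start, end = max(start, p0), min(end, p1)  # clip to the requested period
            if start < end:
                events.append((start, +1))
                events.append((end, -1))
    events.sort()
    covered = repeated = 0.0
    depth = 0                      # number of sensors observing between events
    prev: Optional[float] = None
    for t, delta in events:
        if prev is not None:
            span = t - prev
            if depth >= 1:
                covered += span
            if depth >= 2:
                repeated += span
        depth += delta
        prev = t
    return covered, repeated


def temporal_features(windows: Dict[str, List[Interval]], period: Interval) -> Dict[str, float]:
    """Hypothetical mapping onto the GEOCF_Temporal feature names."""
    covered, repeated = _coverage_lengths(windows, period)
    return {
        "RepeatObserveTime": repeated,
        "OverallRSObserveTime": covered,
        "RSObserveTimePercent": 100.0 * covered / (period[1] - period[0]),
    }


def spatial_features(cells: Dict[str, Set[Cell]], requested: Set[Cell]) -> Dict[str, object]:
    """Hypothetical mapping onto the GEOCF_Spatial feature names."""
    counts: Dict[Cell, int] = {}
    for sensor_cells in cells.values():
        for cell in sensor_cells & requested:  # ignore cells outside the requested area
            counts[cell] = counts.get(cell, 0) + 1
    observed = set(counts)
    return {
        "RepeatObserveLocations": {c for c, n in counts.items() if n >= 2},
        "BlindObservationLocation": requested - observed,
        "ValidObserveLocationPercent": 100.0 * len(observed) / len(requested),
    }
```

For example, with two sensors whose windows are `(0, 4)` and `(2, 6)` over a requested period `(0, 10)`, the sketch reports 2 h of repeat observation, 6 h of overall observation, and 60% temporal coverage. The sweep-line approach avoids discretizing time, while the grid-cell representation keeps the spatial set operations trivial. A real system would more likely use polygon geometry.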

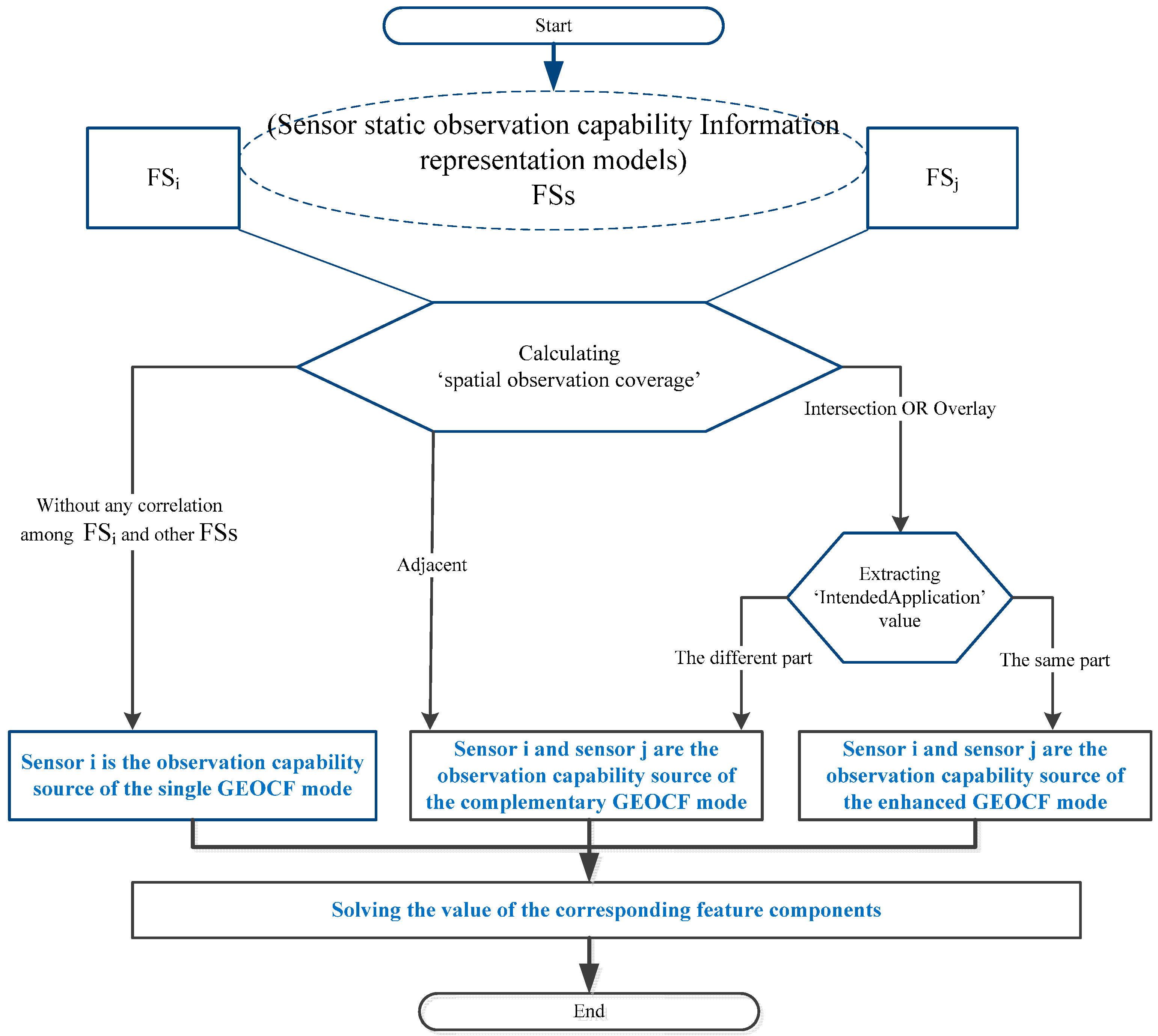

2.4. Operation Workflows of the GEOCF Information Representation Model

- (1) If the spatial observation coverage of sensor i has no spatial relationship with the observation coverage of any other sensor during the requested period, sensor i is classified as an observation capability source in the single GEOCF mode.

- (2) If the spatial observation coverage of sensor i has a spatial relationship with that of another sensor j, such as intersection or overlap, the values of the “Sensor_designed_applications” property of sensors i and j are compared. These values can be extracted from the SensorML-based static sensor observation capability information representation model. Let $FS_i$ and $FS_j$ denote these value sets. For the parameters the two sensors share, $\mathrm{Same\_ObservP} = \{P_k \mid P_k \in FS_i,\ P_k \in FS_j\}$, sensors i and j can be combined in their intersecting or overlapping observation areas into an enhanced GEOCF mode for the observation parameters in Same_ObservP.

- (3) For the parameters that differ between the two sensors, $\mathrm{Diff\_ObservP} = \{P_k, P_h \mid P_k \in FS_i,\ P_h \in FS_j\}$, sensors i and j are combined into a complementary GEOCF mode for the observation themes in Diff_ObservP.

- (4) In the special case where the spatial observation coverage of sensor i is adjacent to that of sensor j, sensors i and j are likewise categorized into the complementary GEOCF mode.
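The four workflow rules above can be sketched as a pairwise classification. This is a minimal illustration, not the authors' implementation, and it rests on several assumptions. Each sensor's coverage during the requested period is approximated by a longitude/latitude bounding box. "Intersection or overlay" means the boxes overlap with positive area, and "adjacent" means they share part of an edge. Diff_ObservP is read as the symmetric difference of the two parameter sets. For adjacent pairs, all parameters of both sensors are attached, which the text does not specify. The class `Sensor`, the functions `spatial_relation` and `classify_geocf_modes`, and the parameter names used in testing are hypothetical.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import FrozenSet, List, Set, Tuple

BBox = Tuple[float, float, float, float]        # (min_lon, min_lat, max_lon, max_lat)
Pairing = Tuple[str, str, str, FrozenSet[str]]  # (sensor i, sensor j, GEOCF mode, parameters)


@dataclass(frozen=True)
class Sensor:
    sensor_id: str
    coverage: BBox          # assumption: coverage in the requested period as a bounding box
    params: FrozenSet[str]  # FS_i: values of the Sensor_designed_applications property


def spatial_relation(a: BBox, b: BBox) -> str:
    """Return 'overlap', 'adjacent' (shared edge), or 'disjoint'."""
    dx = min(a[2], b[2]) - max(a[0], b[0])  # width of the longitude intersection
    dy = min(a[3], b[3]) - max(a[1], b[1])  # height of the latitude intersection
    if dx > 0 and dy > 0:
        return "overlap"
    if (dx > 0 and dy == 0) or (dx == 0 and dy > 0):
        return "adjacent"  # corner-only contact is treated as disjoint (assumption)
    return "disjoint"


def classify_geocf_modes(sensors: List[Sensor]) -> Tuple[List[str], List[Pairing]]:
    related: Set[str] = set()
    pairings: List[Pairing] = []
    for si, sj in combinations(sensors, 2):
        rel = spatial_relation(si.coverage, sj.coverage)
        if rel == "disjoint":
            continue
        related.update((si.sensor_id, sj.sensor_id))
        if rel == "overlap":
            same = si.params & sj.params  # rule 2: Same_ObservP -> enhanced mode
            diff = si.params ^ sj.params  # rule 3: Diff_ObservP (assumed symmetric difference)
            if same:
                pairings.append((si.sensor_id, sj.sensor_id, "enhanced", same))
            if diff:
                pairings.append((si.sensor_id, sj.sensor_id, "complementary", diff))
        else:
            # Rule 4: adjacent coverage -> complementary mode (attached parameters are an assumption)
            pairings.append((si.sensor_id, sj.sensor_id, "complementary", si.params | sj.params))
    # Rule 1: sensors related to no other sensor are single-mode capability sources
    single = [s.sensor_id for s in sensors if s.sensor_id not in related]
    return single, pairings
```

Comparing every pair is quadratic in the number of sensors. That is acceptable for a basin-scale sensor inventory, but a spatial index would be the natural replacement for large catalogs.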

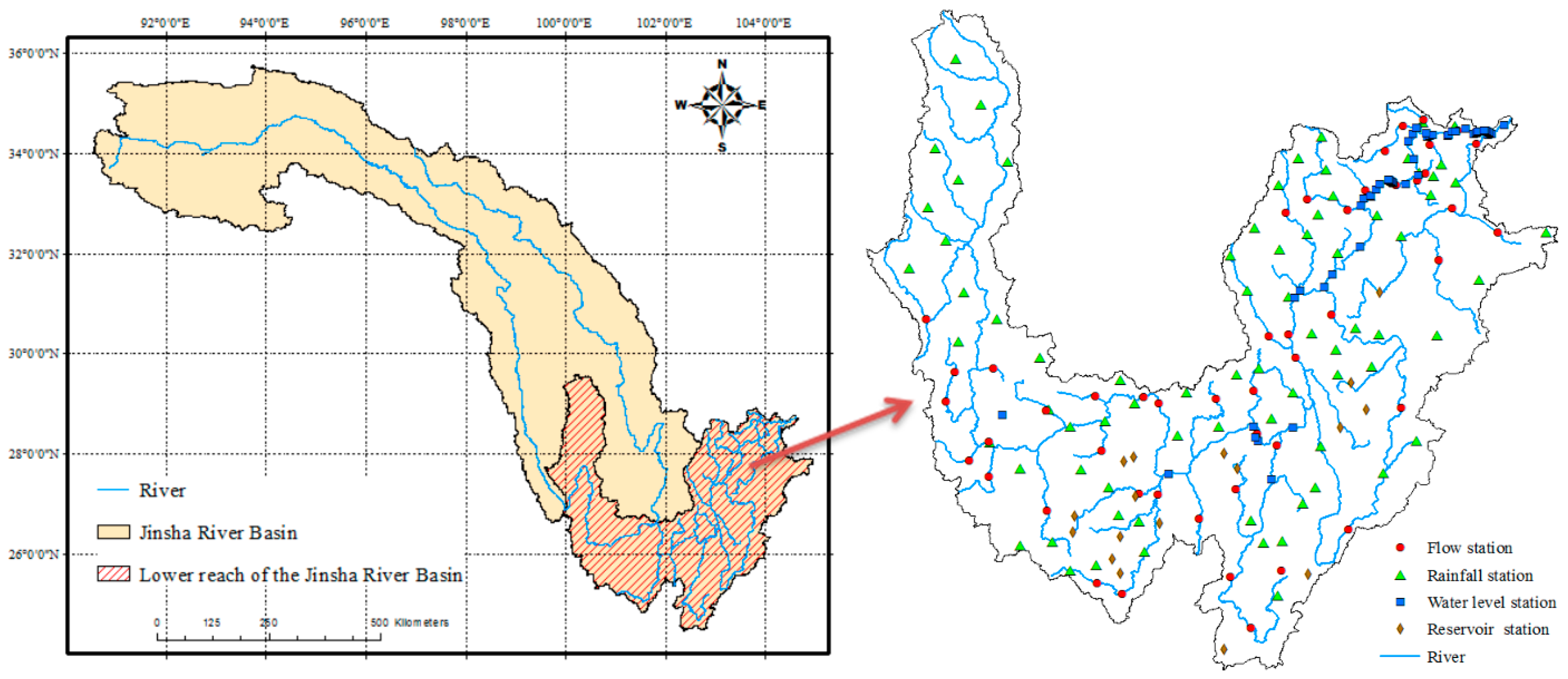

3. Sensor Discovery and Planning Experiment of Flood Observation in the Jinsha River Basin

3.1. Flood Observation of the Jinsha River Basin

3.1.1. Flood Observation Requirement

3.1.2. Existing Sensor Resources

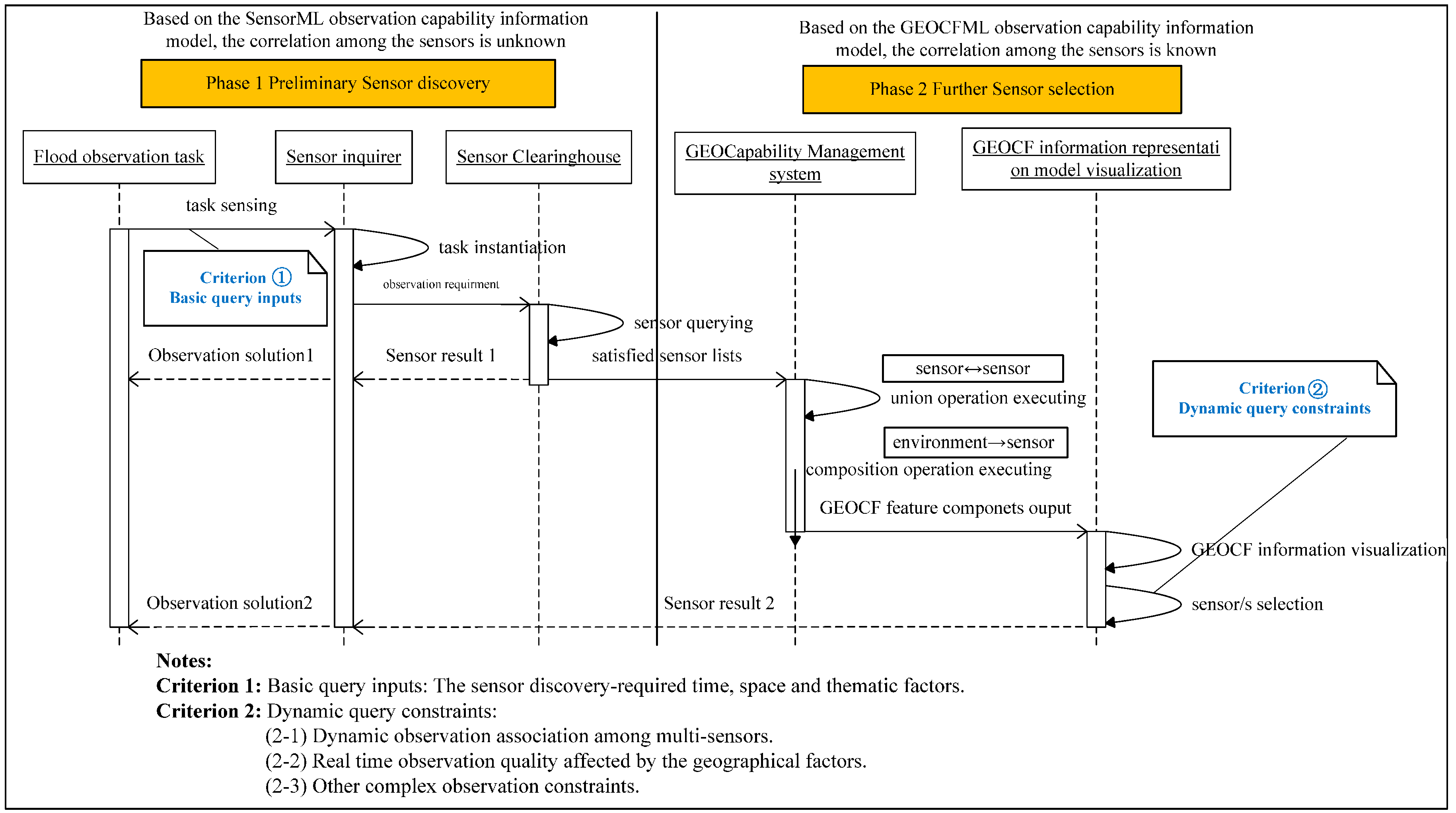

3.2. Realistic Problem before Using the GEOCF as the Information Foundation

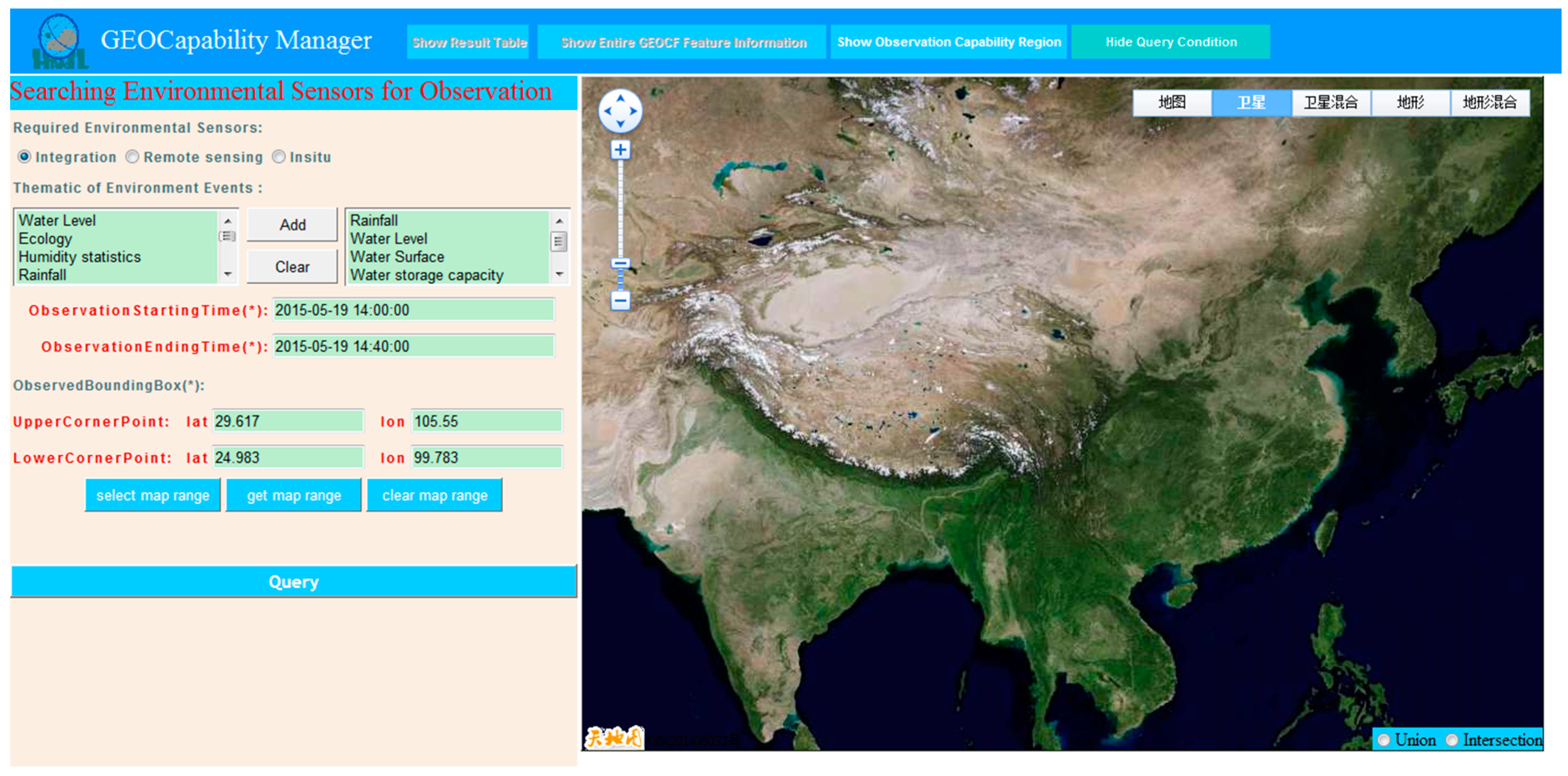

3.3. Flood Sensor Discovery and Planning in GEOCapabilityManager

3.3.1. Basic Flood Observation Query

3.3.2. Considering Multi-Sensors’ Union

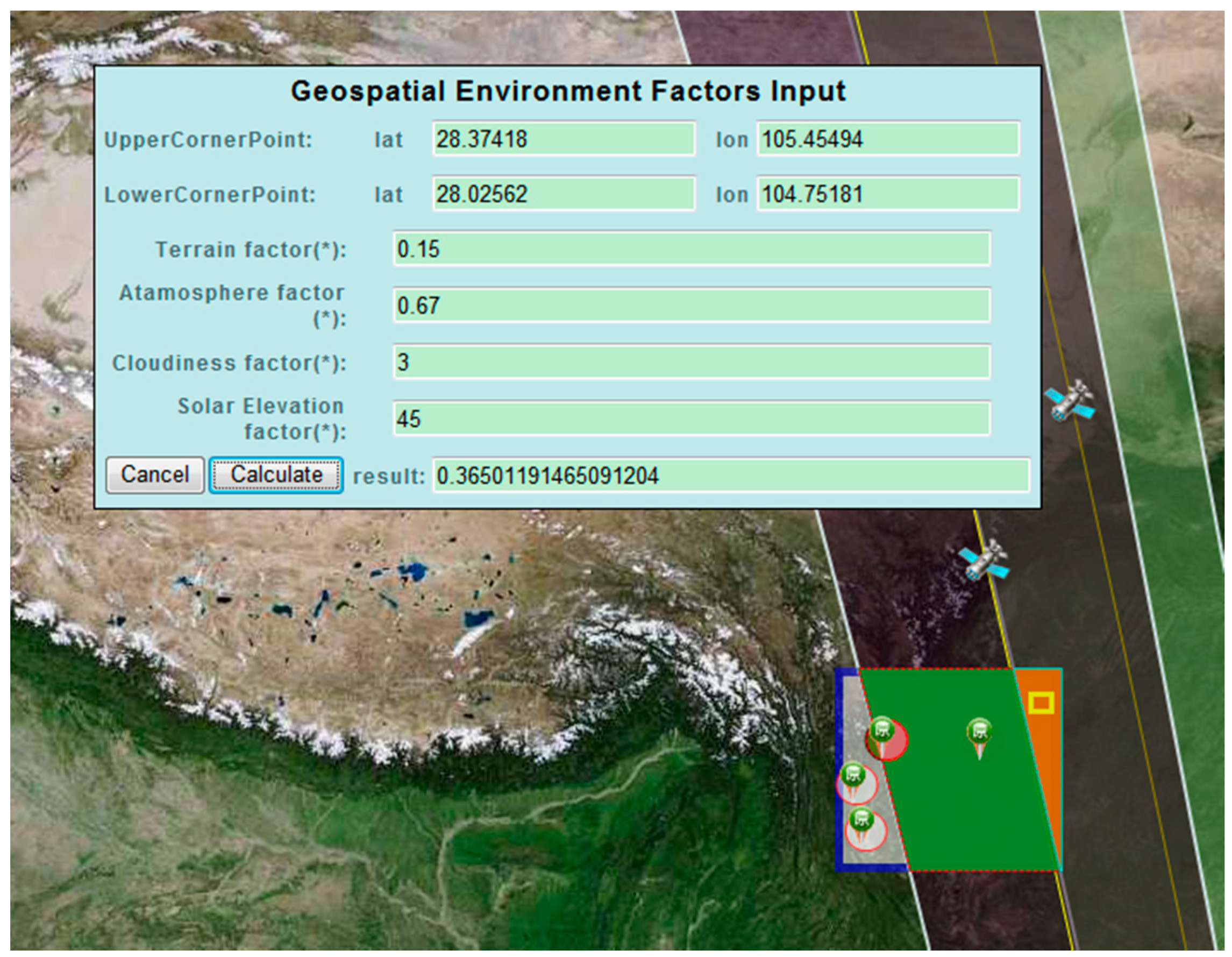

3.3.3. Considering the Effect of Geospatial Environmental Feature Factors

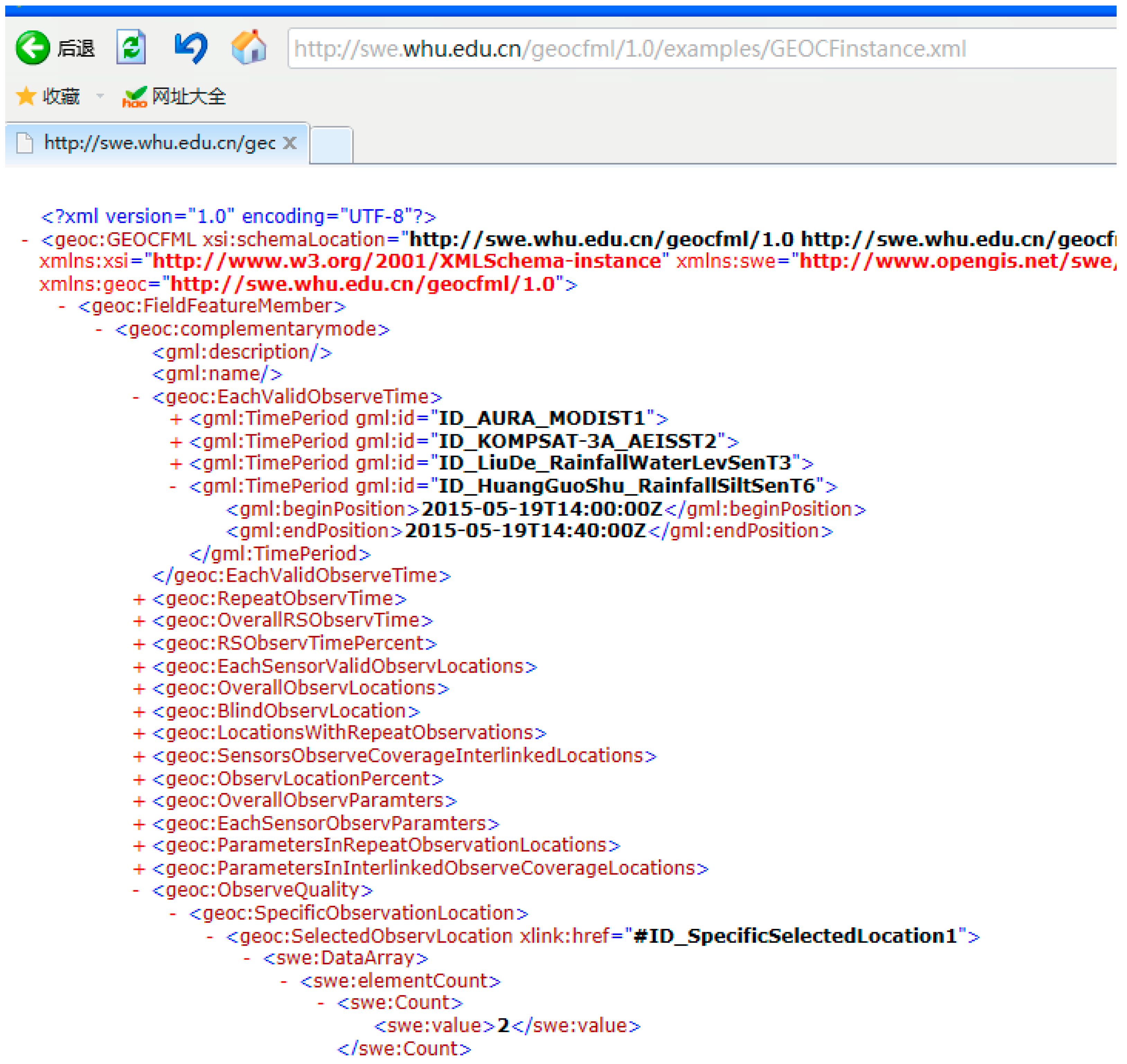

3.3.4. Entire GEOCF Represented in a Uniform Information Model

4. Discussion

4.1. Comparison to the SWE Sensor Observation Capability Information Model

4.2. Comparison with Existing Sensor Discovery and Planning Systems

4.3. Extension to Other Environmental Observation Applications

5. Conclusions and Outlook

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Kussul, N.; Skakun, S.; Shelestov, A.Y.; Kussul, O.; Yailymov, B. Resilience aspects in the sensor Web infrastructure for natural disaster monitoring and risk assessment based on Earth observation data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 3826–3832.

- Mandl, D.; Frye, S.W.; Goldberg, M.D.; Habib, S.; Talabac, S. Sensor webs: Where they are today and what are the future needs? In Proceedings of the Second IEEE Workshop on Dependability and Security in Sensor Networks and Systems, Columbia, MD, USA, 24–28 April 2006; pp. 65–70.

- Bröring, A.; Echterhoff, J.; Jirka, S.; Simonis, I.; Everding, T.; Stasch, C.; Liang, S.; Lemmens, R. New generation sensor web enablement. Sensors 2011, 11, 2652–2699.

- Botts, M.; Percivall, G.; Reed, C.; Davidson, J. OGC® sensor web enablement: Overview and high level architecture. In International Conference on GeoSensor Networks; Springer: Berlin/Heidelberg, Germany, 2006; pp. 175–190.

- Zyl, T.V. Sensor web technology underpins GEOSS objectives: Applications. CSIR Sci. Scope 2008, 3, 42–43.

- Granell, C.; Díaz, L.; Gould, M. Service-oriented applications for environmental models: Reusable geospatial services. Environ. Model. Softw. 2010, 25, 182–198.

- Díaz, L.; Bröring, A.; McInerney, D.; Liberta, G.; Foerster, T. Publishing sensor observations into Geospatial Information Infrastructures: A use case in fire danger assessment. Environ. Model. Softw. 2013, 48, 65–80.

- Mandl, D. Experimenting with sensor webs using earth observing 1. In Proceedings of the IEEE Aerospace Conference, Big Sky, MT, USA, 1–7 March 2014; pp. 176–183.

- Chen, N.; Hu, C. A sharable and interoperable meta-model for atmospheric satellite sensors and observations. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1519–1530.

- Fan, H.; Li, J.; Chen, N.; Hu, C. Capability representation model for heterogeneous remote sensing sensors: Case study on soil moisture monitoring. Environ. Model. Softw. 2015, 70, 65–79.

- Fleischer, J.; Häner, R.; Herrnkind, S.; Kloth, A.; Kriegel, U.; Schwarting, H.; Wächter, J. An integration platform for heterogeneous sensor systems in GITEWS–Tsunami Service Bus. Nat. Hazards Earth Syst. Sci. 2010, 10, 1239–1252.

- Klopfer, M.; Simonis, I. SANY—An Open Service Architecture for Sensor Networks; The Open Geospatial Consortium: Wayland, MA, USA, 2010.

- Tacyniak, D.; Ghirardelli, Y.; Perez, O.; Perez, R.F.; Gonzalez, E.; Bobis, J.M. OSIRIS: Combining Pollution Measurements with GPS Position Data for Air Quality Monitoring in Urban Environments Making Use of Smart Systems and SWE Technologies; International Workshop Sensing a Changing World, Kooistra, L., Ligtenberg, A., Eds.; Wageningen University and Research Centre: Wageningen, The Netherlands, 2008; pp. 75–78.

- Mandl, D.; Frye, S.; Cappelaere, P.; Handy, M.; Policelli, F.; Katjizeu, M.; Silva, J.A.; Aubé, G.; Sohlberg, R.; Kussul, N.; et al. Use of the earth observing one (EO-1) satellite for the Namibia sensor web flood early warning pilot. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 298–308.

- Kussul, N.; Mandl, D.; Moe, K.; Mund, J.P.; Post, J.; Shelestov, A.; Skakun, S.; Szarzynski, J.; Van Langenhove, G. Interoperable infrastructure for flood monitoring: Sensor Web, grid and cloud. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1740–1745.

- Mandl, D.; Cappelaere, P.; Frye, S.; Sohlberg, R.; Ong, L.; Chien, S.; Tran, D.; Davies, A.; Sullivan, D.; Falke, S.; et al. Sensor Web 2.0: Connecting Earth’s Sensors via the Internet. In Proceedings of the NASA Earth Science Technology Conference 2008, Columbia, MD, USA, 27–30 May 2008; pp. 24–26.

- Simonis, I.; Echterhoff, J. GEOSS and the Sensor Web. In GEOSS Sensor Web Workshop Report; Open Geospatial Consortium, Inc.: Geneva, Switzerland, 2008.

- Di, L. Geospatial sensor web and self-adaptive Earth predictive systems (SEPS). In Proceedings of the Earth Science Technology Office (ESTO)/Advanced Information System Technology (AIST) Sensor Web Principal Investigator (PI) Meeting, San Diego, CA, USA, 13–14 February 2007; pp. 1–4.

- Chien, S.; Sherwood, R.; Tran, D.; Cichy, B.; Rabideau, G.; Castano, R.; Davis, A.; Mandl, D.; Trout, B.; Boyer, D.; et al. Using autonomy flight software to improve science return on Earth Observing One. J. Aerosp. Comput. Inf. Commun. 2005, 2, 196–216.

- Jirka, S.; Bröring, A.; Stasch, C. Discovery mechanisms for the sensor web. Sensors 2009, 9, 2661–2681.

- Liang, S.H.; Huang, C.Y. GeoCENS: A geospatial cyberinfrastructure for the world-wide sensor web. Sensors 2013, 13, 13402–13424.

- Kontoes, C.; Sykioti, O.; Paronis, D.; Harisi, A. Evaluating the performance of the space-borne SAR sensor systems for oil spill detection and sea monitoring over the south-eastern Mediterranean Sea. Int. J. Remote Sens. 2005, 26, 4029–4044.

- Hadria, R.; Duchemin, B.; Lahrouni, A.; Khabba, S.; Er-Raki, S.; Dedieu, G.; Chehbouni, A.; Olioso, A. Monitoring of irrigated wheat in a semi-arid climate using crop modelling and remote sensing data: Impact of satellite revisit time frequency. Int. J. Remote Sens. 2006, 27, 1093–1117.

- Chen, N.; Zhang, X. A Dynamic Observation Capability Index for Quantitatively Pre-Evaluating Diverse Optical Imaging Satellite Sensors. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 515–530.

- Hu, C.; Guan, Q.; Chen, N.; Li, J.; Zhong, X.; Han, Y. An Observation Capability Metadata Model for EO Sensor Discovery in Sensor Web Enablement Environments. Remote Sens. 2014, 6, 10546–10570.

- Malewski, C.; Simonis, I.; Terhorst, A.; Bröring, A. StarFL—A modularised metadata language for sensor descriptions. Int. J. Digit. Earth 2014, 7, 450–469.

- Compton, M.; Barnaghi, P.; Bermudez, L.; García-Castro, R.; Corcho, O.; Cox, S.; Graybeal, J.; Hauswirth, M.; Henson, C.; Herzog, A.; et al. The SSN ontology of the W3C semantic sensor network incubator group. Web Semant. 2012, 17, 25–32.

- Zyl, T.V.; Simonis, I.; McFerren, G. The sensor web: Systems of sensor systems. Int. J. Digit. Earth 2009, 2, 16–30.

- Balazinska, M.; Deshpande, A.; Franklin, M.J.; Gibbons, P.B.; Gray, J.; Hansen, M.; Liebhold, M.; Szalay, A.; Tao, V. Data management in the worldwide sensor web. IEEE Pervasive Comput. 2007, 6, 30–40.

- Liu, Y.; Goodchild, M.F.; Guo, Q.; Tian, Y.; Wu, L. Towards a General Field model and its order in GIS. Int. J. Geogr. Inf. Sci. 2008, 22, 623–643.

- Worboys, M.F. GIS: A Computing Perspective; CRC Press: Boca Raton, FL, USA, 1995.

- Cova, T.J.; Goodchild, M.F. Extending geographical representation to include fields of spatial objects. Int. J. Geogr. Inf. Sci. 2002, 16, 509–532.

- Goodchild, M.F. Geographical data modeling. Comput. Geosci. 1992, 18, 401–408.

- Bian, L. Object-oriented representation of environmental phenomena: Is everything best represented as an object? Ann. Assoc. Am. Geogr. 2007, 97, 267–281.

- Giles, P.T.; Franklin, S.E. Comparison of derivative topographic surfaces of a DEM generated from stereoscopic SPOT images with field measurements. Photogramm. Eng. Remote Sens. 1996, 62, 1165–1170.

- Stefanidis, A.; Nittel, S. GeoSensor Networks; CRC Press: Boca Raton, FL, USA, 2008.

- Park, T.W.; Chang, S.M.; Lee, B. Benchmark tests for CFD codes for the analysis of wind field in the forest. J. Comput. Fluids Eng. 2012, 17, 11–20.

- Kjenstad, K. On the integration of object-based models and field-based models in GIS. Int. J. Geogr. Inf. Sci. 2006, 20, 491–509.

- Gross, N. The Earth Will Don an Electronic Skin. Business Week Online, 1999. Available online: http://www.businessweek.com (accessed on 28 July 2015).

- Butler, D. 2020 computing: Everything, everywhere. Nature 2006, 440, 402–405.

- Shekhar, S.; Chawla, S. Spatial Databases: A Tour; Prentice Hall: Upper Saddle River, NJ, USA, 2003.

- Kramer, H.J. Observation of the Earth and Its Environment: Survey of Missions and Sensors; Springer: Berlin/Heidelberg, Germany, 2012.

- GEOCFML Schema. Available online: http://swe.whu.edu.cn/geocfml/1.0/GEOCFML.xsd (accessed on 3 September 2016).

- Static Sensor Observation Capability Information Representation Model Instance. Available online: http://gsw.whu.edu.cn:8080/MODIS-SensorML2.0.xml (accessed on 3 September 2016).

- Yu, J.; Chen, H.; Li, J. Influence pre-evaluation technique of geography factors on satellite imaging. Remote Sens. Inf. 2011, 5, 104–108.

- Chen, N.C.; Wang, K.; Xiao, C.J.; Gong, J.Y. A heterogeneous sensor web node meta-model for the management of a flood monitoring system. Environ. Model. Softw. 2014, 54, 222–237.

- Committee on Earth Observation Satellites (CEOS) System Database. Available online: http://ceos-sysdb.com/CEOS/db_includes/sp_flood.php (accessed on 28 July 2015).

- Hu, C.L.; Chen, N.C.; Li, J. Geospatial web-based sensor information model for integrating satellite observation: An example in the field of flood disaster management. Photogramm. Eng. Remote Sens. 2013, 79, 915–927.

- Menasce, D.A.; Dowdy, L.W.; Almeida, V.A.F. Performance by Design: Computer Capacity Planning by Example; Prentice Hall PTR: Upper Saddle River, NJ, USA, 2004.

- Open Weather Map Website. Available online: http://openweathermap.org/city (accessed on 28 July 2015).

- GEOCF instance. Available online: http://swe.whu.edu.cn/geocfml/1.0/examples/GEOCFinstance.xml (accessed on 3 September 2016).

- The NASA Global Change Master Directory Retrieval Portal. Available online: http://gcmd.gsfc.nasa.gov/KeywordSearch/Freetext.do?KeywordPath=&Portal=GCMD&Freetext=hyperion&MetadataType=0 (accessed on 28 July 2015).

- The Remote Sensing Planning Tool. Available online: http://ww2.rshgs.sc.edu/pg_Predict.aspx (accessed on 28 July 2015).

- The CEOS Database. Available online: http://database.eohandbook.com/database/instrumenttable.aspx (accessed on 28 July 2015).

- The World Meteorological Organization Observing Systems Capability Analysis and Review Tool. Available online: http://www.wmo-sat.info/oscar/ (accessed on 28 July 2015).

- Geosensor. Available online: http://gsw.whu.edu.cn:9002/SensorWebPro (accessed on 28 July 2015).

- 52° North Open Source Package. Available online: http://52north.org/downloads/sensor-web (accessed on 28 July 2015).

- The Sensor Instance Registry. Available online: https://wiki.52north.org/SensorWeb/SensorInstanceRegistry (accessed on 28 July 2015).

The Entire GEOCF Features Info: feature components of the GEOCF information representation model across the GEOCF modes (√: applicable; ×: not applicable)

| Feature Dimension | Basic GEOCF Feature Component | Complementary Mode | Enhanced Mode | Single Mode |
|---|---|---|---|---|
| Temporal | EachSensorValidObserveTime | √ | √ | √ |
| | RepeatObserveTime | √ | √ | × |
| | OverallRSObserveTime | √ | √ | √ |
| | RSObserveTimePercent | √ | √ | √ |
| Spatial | EachSensorValidObserveLocations | √ | √ | √ |
| | BlindObservationLocation | √ | √ | √ |
| | LocationsWithRepeatObservation | √ | √ | × |
| | SensorsObserveCoverageInterlinkedLocations | √ | × | × |
| | OverallObserveLocations | √ | √ | √ |
| | ObserveLocationPercent | √ | √ | √ |
| Thematic | OverallObserveParameters | √ | √ | √ |
| | EachSensorObserveParameters | √ | √ | √ |
| | ParametersInRepeatObservationLocations | √ | √ | × |
| | ParametersInInterlinkedObserveCoverageLocations | √ | × | × |
| Quality | SpecificObservationLocation | √ | √ | √ |
| | ObserveQualityByQuantitativeEstimation | | | |
| | ObserveQualityByQualitativeGrade | | | |
| | ObserveQualityByQualitativeDescription | | | |
| LinkingReference | LinkToSensorInherentCap | √ | √ | √ |
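As a minimal sketch, assuming the extracted table is read so that each row lists the Complementary, Enhanced, and Single marks in that order, the mode/component matrix above can be encoded as a lookup. A client could then ask which feature components to populate for a given GEOCF mode. Only rows with explicit √/× marks are transcribed. The Quality sub-components whose cells are empty in the table are left out. The names `GEOCF_MODE_TABLE` and `components_for` are hypothetical and are not part of GEOCFML.

```python
from typing import Dict, List, Tuple

MODES = ("complementary", "enhanced", "single")

# (complementary, enhanced, single) flags transcribed from the rows with explicit marks
GEOCF_MODE_TABLE: Dict[str, Tuple[bool, bool, bool]] = {
    "EachSensorValidObserveTime": (True, True, True),
    "RepeatObserveTime": (True, True, False),
    "OverallRSObserveTime": (True, True, True),
    "RSObserveTimePercent": (True, True, True),
    "EachSensorValidObserveLocations": (True, True, True),
    "BlindObservationLocation": (True, True, True),
    "LocationsWithRepeatObservation": (True, True, False),
    "SensorsObserveCoverageInterlinkedLocations": (True, False, False),
    "OverallObserveLocations": (True, True, True),
    "ObserveLocationPercent": (True, True, True),
    "OverallObserveParameters": (True, True, True),
    "EachSensorObserveParameters": (True, True, True),
    "ParametersInRepeatObservationLocations": (True, True, False),
    "ParametersInInterlinkedObserveCoverageLocations": (True, False, False),
    "SpecificObservationLocation": (True, True, True),
    "LinkToSensorInherentCap": (True, True, True),
}


def components_for(mode: str) -> List[str]:
    """Return the feature components marked applicable for a GEOCF mode."""
    idx = MODES.index(mode)  # raises ValueError for an unknown mode name
    return [name for name, flags in GEOCF_MODE_TABLE.items() if flags[idx]]
```

Under this reading, the single mode lacks the repeat-observation and interlinked-coverage components. This matches the workflow rule that a single-mode sensor shares no coverage with any other sensor.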

| Station ID | Station Name | Longitude (°E) | Latitude (°N) | Observed Parameters | Administrative Department |
|---|---|---|---|---|---|
| 60405250 | DeZe | 103.598889 | 25.993333 | Water Quality | Yunnan Provincial Hydrology Bureau |
| 60407110 | HengJiangQiao | 104.411585 | 28.613476 | Evaporation | South Central Survey and Design Institute |
| 60426800 | QingNian | 103.015833 | 25.203889 | Reservoir silt | Yunnan Provincial Hydrology Bureau |
| 60102525 | WuDongDe | 102.622822 | 26.299007 | Flow, flow rate | Changjiang Water Resources Commission |
| 60224950 | LiuDe | 101.0063889 | 26.484444 | Rainfall | Yunnan Provincial Hydrology Bureau |
| …… | | | | | |
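The in-situ station records above carry both a location and the observed parameters. A basic observation query over such records therefore reduces to an attribute filter combined with a spatial filter. The sketch below illustrates only that idea, using the five rows listed in the table. It is not the query logic of GEOCapabilityManager. The keyword matching (case-insensitive substring), the bounding-box filter, and the names `Station` and `query_stations` are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Station:
    station_id: str
    name: str
    lon: float         # degrees east
    lat: float         # degrees north
    parameters: str    # free-text "Observed Parameters" column
    department: str


# The rows listed in the table above; the remaining rows are elided there.
STATIONS = [
    Station("60405250", "DeZe", 103.598889, 25.993333, "Water Quality", "Yunnan Provincial Hydrology Bureau"),
    Station("60407110", "HengJiangQiao", 104.411585, 28.613476, "Evaporation", "South Central Survey and Design Institute"),
    Station("60426800", "QingNian", 103.015833, 25.203889, "Reservoir silt", "Yunnan Provincial Hydrology Bureau"),
    Station("60102525", "WuDongDe", 102.622822, 26.299007, "Flow, flow rate", "Changjiang Water Resources Commission"),
    Station("60224950", "LiuDe", 101.0063889, 26.484444, "Rainfall", "Yunnan Provincial Hydrology Bureau"),
]


def query_stations(stations: Iterable[Station], keywords: Iterable[str],
                   bbox: Tuple[float, float, float, float]) -> List[str]:
    """Return IDs of stations inside bbox whose parameters mention any keyword."""
    min_lon, min_lat, max_lon, max_lat = bbox
    keys = [k.lower() for k in keywords]
    hits: List[str] = []
    for s in stations:
        inside = min_lon <= s.lon <= max_lon and min_lat <= s.lat <= max_lat
        if inside and any(k in s.parameters.lower() for k in keys):
            hits.append(s.station_id)
    return hits
```

For instance, with the hypothetical keywords "flow" and "rainfall" and a box from 100°E to 105°E and 25°N to 30°N, the sketch returns the WuDongDe and LiuDe stations.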

Tools or systems for sensor planning and discovery management:

| Aspect | GCMD | Google/Yahoo | RESPT | WMO/CEOS | Geosensor | SIR | GEOCapability Manager |
|---|---|---|---|---|---|---|---|
| Sensor object | Remote sensing & in-situ sensors | All types of sensors | Remote sensing satellite sensors | Remote sensing sensors | Remote sensing & in-situ sensors | In-situ sensors | Remote sensing & in-situ sensors |
| Main usage | Sensor searching | Sensor researching | Sensor planning | Sensor capability review | Sensor observation discovery & service | Sensor discovery | Sensor discovery & planning |
| Sensor modeling mode | Single & static sensor | Single & static sensor | Single & dynamic sensor | Single & static sensor | Single & static sensor | Single & static sensor | Multiple & dynamic sensors |
| Representation format | HTML text | N/A | N/A | Text | SensorML | SensorML | GEOCFML |
| Supports multi-sensor collaboration | No | No | No | No | No | No | Yes |
| Considers geospatial environment features | No | No | No | No | No | No | Yes |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Hu, C.; Guan, Q.; Li, J.; Wang, K.; Chen, N. Representing Geospatial Environment Observation Capability Information: A Case Study of Managing Flood Monitoring Sensors in the Jinsha River Basin. Sensors 2016, 16, 2144. https://doi.org/10.3390/s16122144
