1. Introduction

The three-dimensional modeling of an object begins with the data acquisition required to reconstruct its geometry and ends with the formation of a virtual 3D model that can be viewed interactively on a computer [1]. The information provided by these models supports a range of uses [2], such as the inspection of elements, navigation, the identification of objects and animation, which makes them particularly useful in fields such as artificial intelligence [3], criminology [4], forestry [5,6], the study of natural disasters [7,8], the analysis of structural deformation [9,10], geomorphology [11,12] and cultural heritage conservation [13,14].

In particular, the generation of point clouds and 3D models has important applications in Building Information Modeling (BIM). This digital representation of the physical and functional characteristics of buildings serves as an information repository for the design and construction processes, encouraging the use of 3D visualizations [15]. In the future, devices could include different types of sensors to capture all kinds of information for BIM applications. In addition, important technological advances in automated data acquisition have led to the production of more specific models tailored to Historic Building Information Modeling (HBIM) for the preservation of historical or artistic heritage [16,17].

In recent years, different techniques have been developed to acquire data [18]. On the one hand, there are active measurement techniques, in which modeling is based on scans (range-based modeling). These use instruments equipped with sensors that emit light with a defined structure, which is known to a second sensor that captures it [19]. On the other hand, there are passive measurement techniques, in which modeling is based on images (image-based modeling). These use optical or optical-electronic capture systems to extract the geometric information needed to build 3D models [19]. The former uses different types of laser scanners, while the latter employs photogrammetric or simple conventional cameras. In each case, specific software is used for data processing.

One of the most important geometric aspects is verifying the accuracy and reliability of the measurements with which data are acquired and the resulting 3D models are obtained. Given the tolerances and maximum permissible errors required for certain models, for example in a BIM working environment, the final accuracy and reliability obtained with a specific device determine its suitability for certain works [20]. Many studies have carried out such analyses for active measurement techniques [21,22,23] as well as for passive measurement techniques [4,24,25]. In the first case, for medium-format objects measured with handheld laser scanners, an accuracy of up to 0.1 mm can be achieved [26]; in the second case, using automated digital photogrammetry, precision is of the order of 5 mm [27], but at a lower economic cost.

There are instruments equipped with low-cost sensors on the market: the David laser scanner [28], the Microsoft Kinect v1 and v2 sensors, and RGB-D cameras. These cameras are easy to operate and are used for applications that require a precision of about 5 mm at a measured distance of 2 m [29]. There are also mid-cost devices based on structured light technology, such as the DPI-8 Handheld Scanner (from DotProduct LLC, Boston, MA, USA) and the FARO Freestyle 3D Scanner (FARO, Lake Mary, FL, USA).

Nowadays, new projects are trying to enter the market with instruments based on a smartphone or a tablet that include a range imaging camera and a special vision sensor, and that are user-friendly, affordable and accurate enough for a wide range of applications. These include Google’s Tango project from 2014, the Structure Sensor from Occipital from 2015 and EyesMap (EM), developed by e-Capture Research and Development, from 2014.

Nonetheless, one of the main problems encountered when performing 3D modeling is determining the accuracies obtained with these devices, especially when taking into account the rate of information capture and the intended product. Normally, the two products sought are geometric models and 3D point clouds. The first describes the shape of an object by means of an analytical, mathematical and abstract model. The second provides very dense and elaborate coordinate data points for the surfaces of a physical object [30,31]. For this reason, one objective of this paper is to analyze the accuracy of the EM in two modes of data capture: (1) Photogrammetry, to obtain 3D point coordinates; and (2) Photomodeling, to obtain a 3D point cloud and the color of the observed object.

This accuracy was evaluated by comparing the EM measurements with the data acquired by a Total Station. Operator error, in turn, was estimated by comparing the coordinates of symmetrical target centers measured by EM and by a Scanstation. Finally, to investigate the feasibility of coordinate, measurement and point cloud acquisition from a BIM perspective, a further evaluation was performed with reference to the guidelines of the GSA for BIM Guide for 3D Imaging [32].

2. Materials and Methods

This study was conducted with an EM tablet from e-capture Research & Development Company. It has dimensions of 303 × 194 × 56 mm

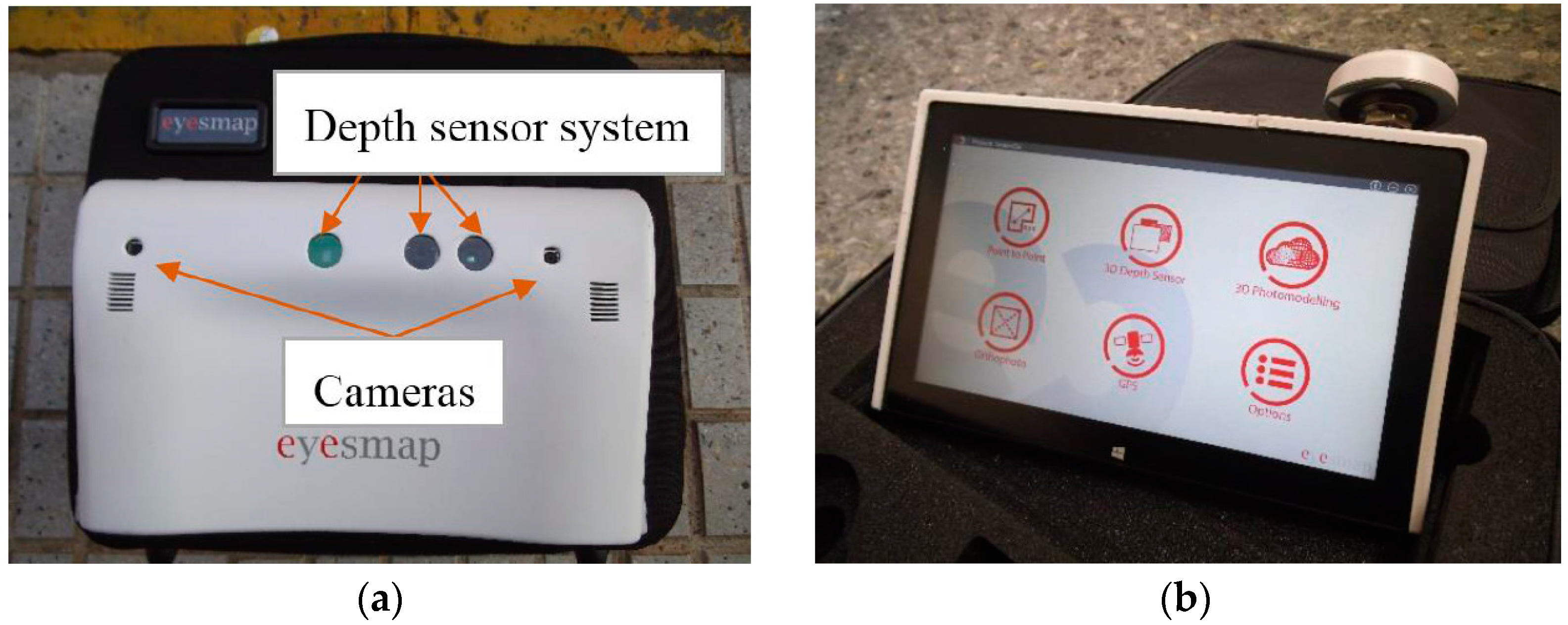

3, a weight of 1.9 kg and a screen of 11.6 inches. The device has a processor Intel Core i7, 16 gigabytes of RAM and runs on the Windows 8 operating system. It has an Inertial Measurement Unit and a GNSS system, which allow for measuring speed, orientation and gravitational forces, as well as the positioning of the instrument in real time. To capture the three-dimensional information, on the back of the tablet (

Figure 1), there is a depth sensor and two cameras with a focal length of 2.8 mm and a 13 megapixel resolution, that form a base line of 230 mm, with a field of view up to 67°.

The beta version of EM costs around €9500. Its operation is based on photogrammetry techniques, which reconstruct a scene in real time. The precisions indicated by the manufacturer for both measurement modes are given in Table 1.

To achieve the precisions expressed in Table 1, the manufacturer's recommendations for the Photogrammetry measurement are: (1) take at least two pictures; (2) maintain an 80% overlap between the two pictures; and (3) capture in parallel or convergent geometry. For measurement by 3D Photomodeling, the same recommendations apply, but at least five pictures must be taken instead of two. EM uses a computer vision approach based on the general method of Photogrammetry [33].
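The overlap recommendation can be turned into a rough spacing between camera stations. The following is a minimal sketch, not the EM software's own planning logic. It assumes a pinhole camera pointing straight at a flat object, parallel shots, and that the 67° field of view applies along the direction in which the camera is shifted. The function names are hypothetical.

```python
import math

def footprint_width(distance_m, fov_deg=67.0):
    """Width of the scene covered by one picture at the given distance.

    Assumes a pinhole camera facing a flat object head-on; fov_deg is
    taken as the field of view along the shift direction (an assumption).
    """
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)

def max_station_spacing(distance_m, overlap=0.80, fov_deg=67.0):
    """Largest sideways shift between two parallel shots that keeps `overlap`."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return (1.0 - overlap) * footprint_width(distance_m, fov_deg)

def capture_plan_ok(n_pictures, mode):
    """Check the minimum picture count recommended for each mode."""
    minimum = {"photogrammetry": 2, "photomodeling": 5}[mode]
    return n_pictures >= minimum

# Example: spacing between stations when shooting from 3 m.
spacing_at_3m = max_station_spacing(3.0)
```

Under these assumptions the allowed spacing grows linearly with the distance to the object, so closer shots need proportionally smaller shifts between pictures.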

In this sense, the coordinates of the points are computed by Digital Image Correlation (DIC). In this way, very dense 3D point clouds are obtained from the surface of the studied object, together with color information (RGB). The coordinates of the points that compose the cloud are calculated from a pair of oriented pictures by triangulation [34].
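As an illustration of triangulation from an oriented pair, the sketch below handles only the simplest configuration: two parallel cameras separated by a baseline (the "normal case" of stereo photogrammetry). It is not the algorithm implemented in EM, which is not documented here. Image coordinates are assumed to be in millimeters on the sensor, measured from the principal point, and the disparity precision passed to `depth_sigma` is a hypothetical input.

```python
def triangulate_normal_case(x_left, y_left, x_right, focal_mm, baseline_mm):
    """Return (X, Y, Z) in mm in the left-camera frame for a parallel stereo pair.

    The right camera is assumed to sit at X = +baseline_mm with the same
    orientation as the left one.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = focal_mm * baseline_mm / disparity
    x = x_left * z / focal_mm
    y = y_left * z / focal_mm
    return x, y, z

def depth_sigma(z_mm, focal_mm, baseline_mm, sigma_disparity_mm):
    """First-order depth uncertainty: sigma_Z = Z^2 / (f * B) * sigma_d."""
    return z_mm ** 2 / (focal_mm * baseline_mm) * sigma_disparity_mm

# Example with the focal length (2.8 mm) and baseline (230 mm) quoted for EM.
point = triangulate_normal_case(0.14, -0.07, -0.182, focal_mm=2.8, baseline_mm=230.0)
```

In this simplified model the depth uncertainty grows with the square of the distance, which is one reason accuracy is usually reported per capture distance.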

The algorithms that perform DIC have evolved continuously and now reach very high levels of precision and automation. Currently, the most effective are Structure from Motion (SFM) and the dense 3D reconstruction algorithms known as Digital Multi-View 3D Reconstruction (DMVR), which produce 3D models of high precision and photorealistic quality from an unordered collection of pictures of a scene or object taken from different points of view [35].

2.1. EM Workflow

The processes of calibration and orientation of cameras are implemented in the EM software. The orientation of pictures can be done in three ways: (1) automatic orientation, matching homologous points that the system finds in both pictures; (2) manual orientation, in which the user chooses at least 9 points in common in both pictures; and (3) automatic orientation by means of targets, which requires at least 9 asymmetrical targets in common. The last option offers greater precision but requires more processing time. The information obtained can also be viewed at real scale by means of the target named the Stereo target. EM offers the following options: Photogrammetry, 3D Photomodeling, 3D Modeling with Depth Sensor and Orthophoto. Photogrammetry allows for measuring the coordinates of points, and distances and areas between them, as well as exporting the coordinates in different formats (*.txt and *.dxf) for use in computer aided design programs. 3D Photomodeling and 3D Modeling with Depth Sensor produce 3D point clouds of an object with XYZ and color information (PLY and RGB formats respectively). However, modeling with the depth sensor is restricted to indoor work and offers less precise results than 3D Photomodeling. The last option, Orthophoto, gives an orthophotograph of the work area.

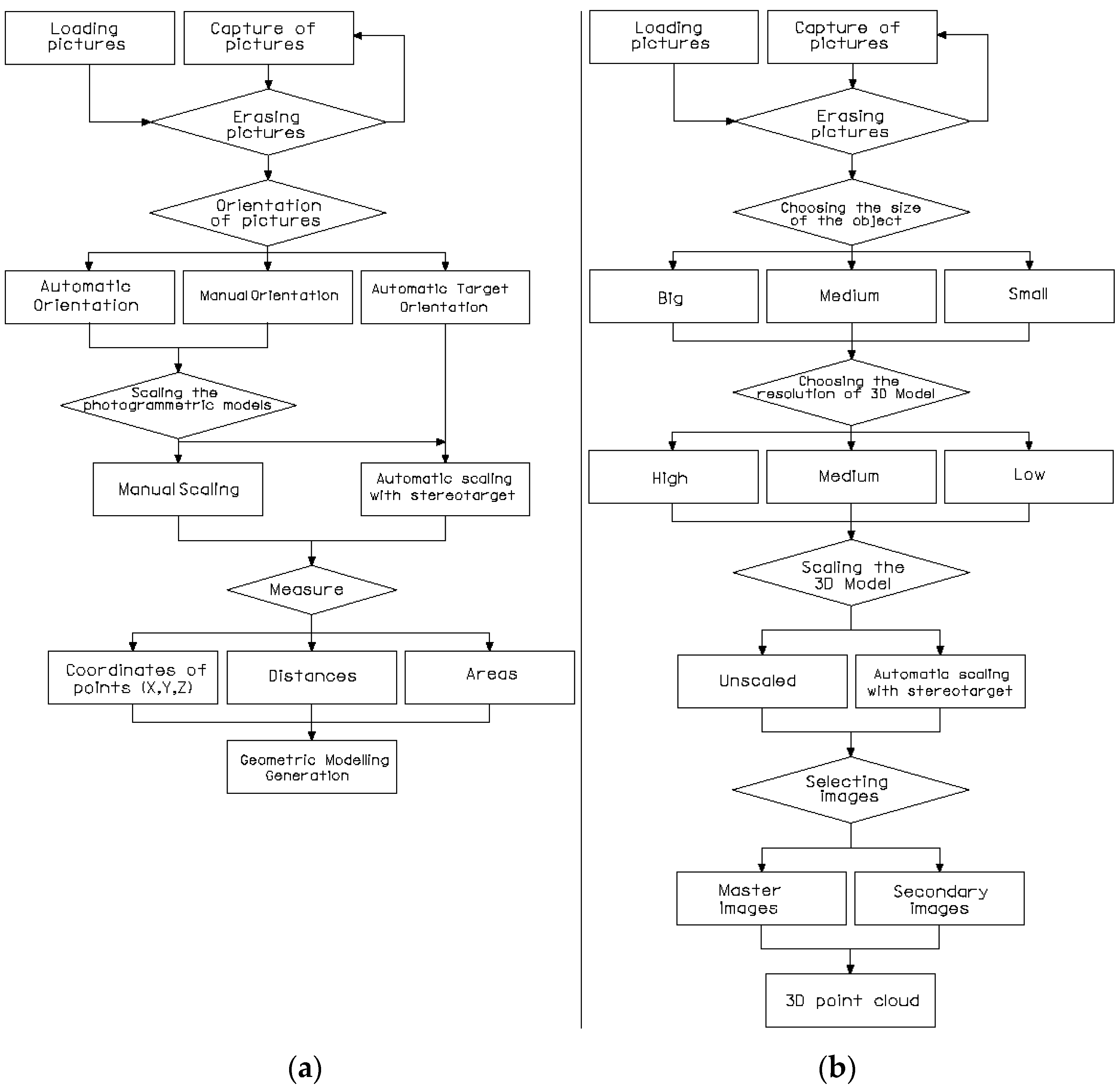

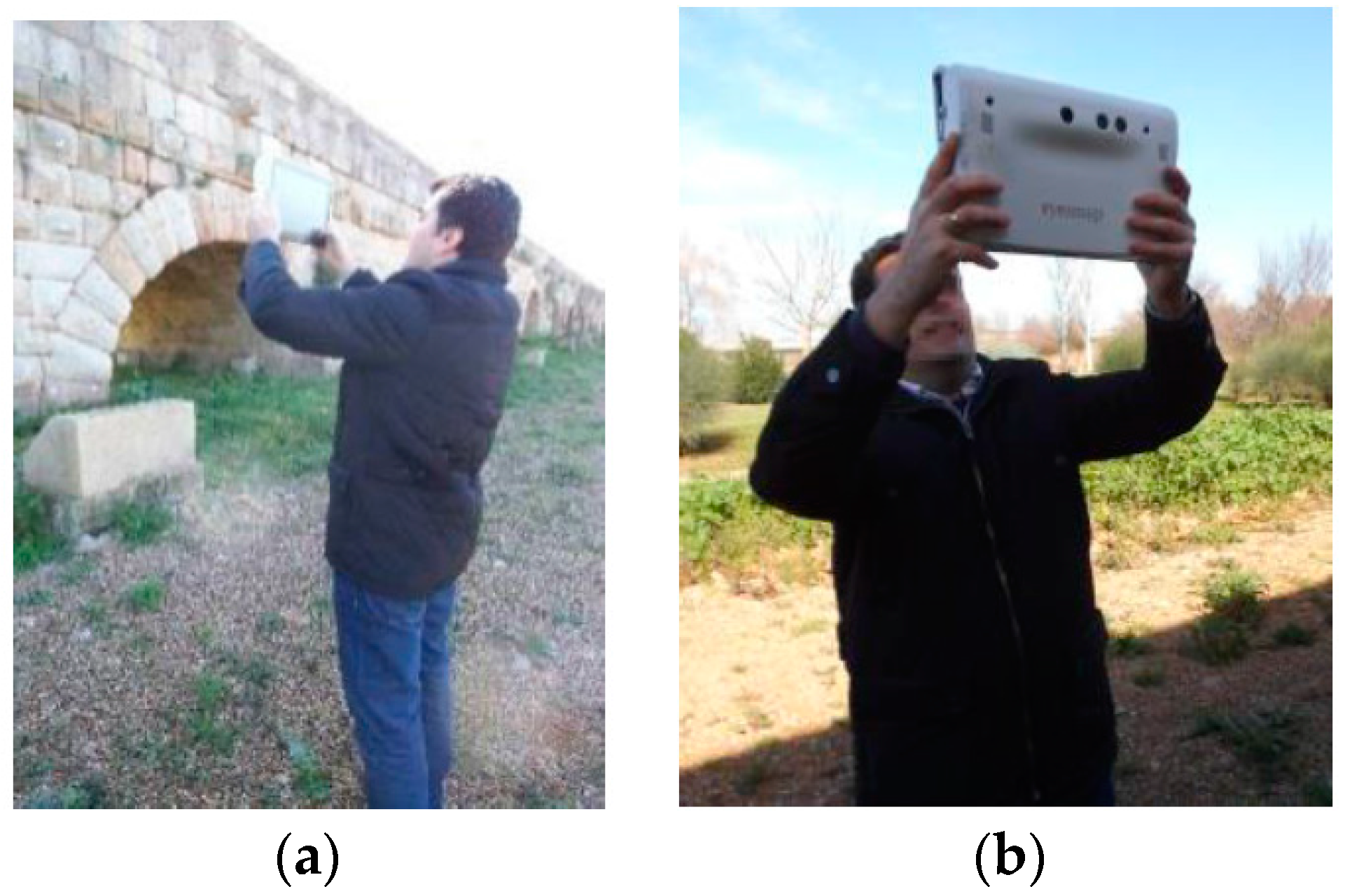

In Photogrammetry (Figure 2a), pictures are first either captured or loaded. Secondly, the pictures are managed and the desired ones are selected. Thirdly, the relative orientation of the pictures is achieved by choosing: (1) automatic orientation; (2) manual orientation; or (3) automatic target orientation. When automatic target orientation and the Stereo target are used, the model is scaled automatically. After this, the following measurements can be obtained: (1) coordinates of points; (2) distances; or (3) areas. Finally, the geometric model is obtained.

In 3D Photomodeling (Figure 2b), pictures are managed in the same way as in Photogrammetry. Secondly, the size of the object to be measured is selected: small if its dimensions are less than one meter, medium-sized if they are below 10 m and large otherwise. Accordingly, high, medium or low resolution must be selected. The final model will be scaled or unscaled depending on whether the Stereo target is used. After this, the master picture can be selected.

In each of these four options, different working procedures are followed, depending on capture methodology, shooting planning, and the size and characteristics of the object to measure.

Figure 2 shows the two options that were used in this study.

2.2. Data Acquisition Systems

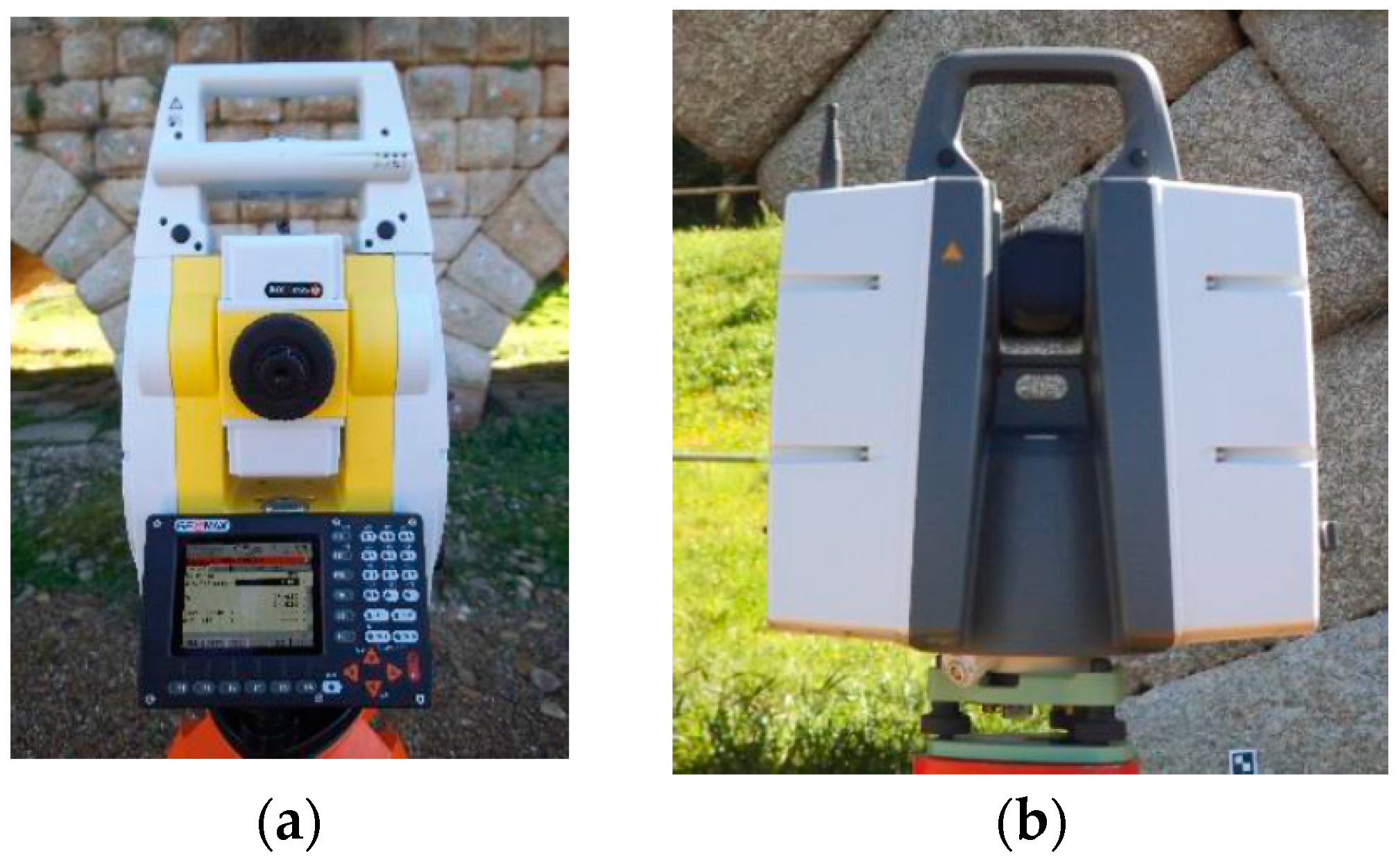

This work aims to determine the instrumental errors of EM for two of the available measurement options: (1) Photogrammetry; and (2) 3D Photomodeling. To this end, two other, more precise, measurement instruments were used [28,31,35]: the Geomax Zoom 80 (GeoMax AG, Widnau, Switzerland) high precision Total Station, with a standard deviation of 2″ (0.6 mgon) for angular measurements and 2 mm ± 2 ppm for distance measurements (Figure 3a), and the Scanstation Leica P30 (Leica Geosystems AG, Heerbrugg, Switzerland), with a standard deviation in the 3D position of 3 mm (Figure 3b).

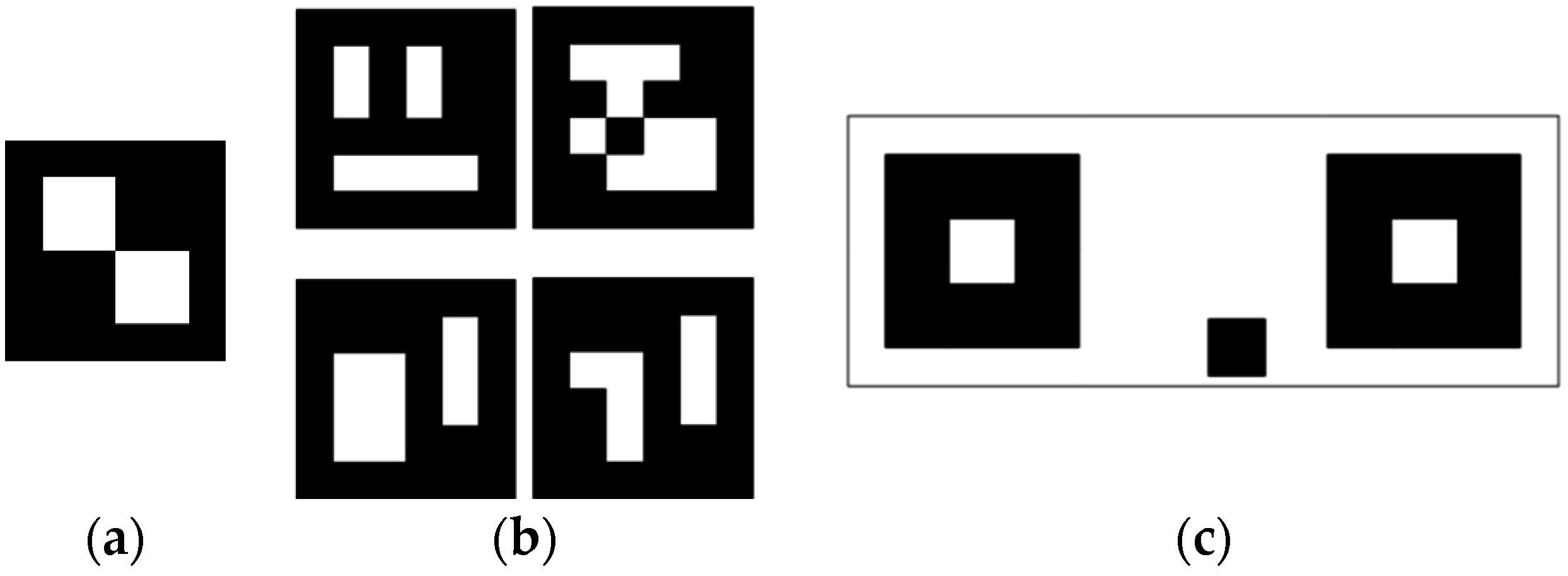

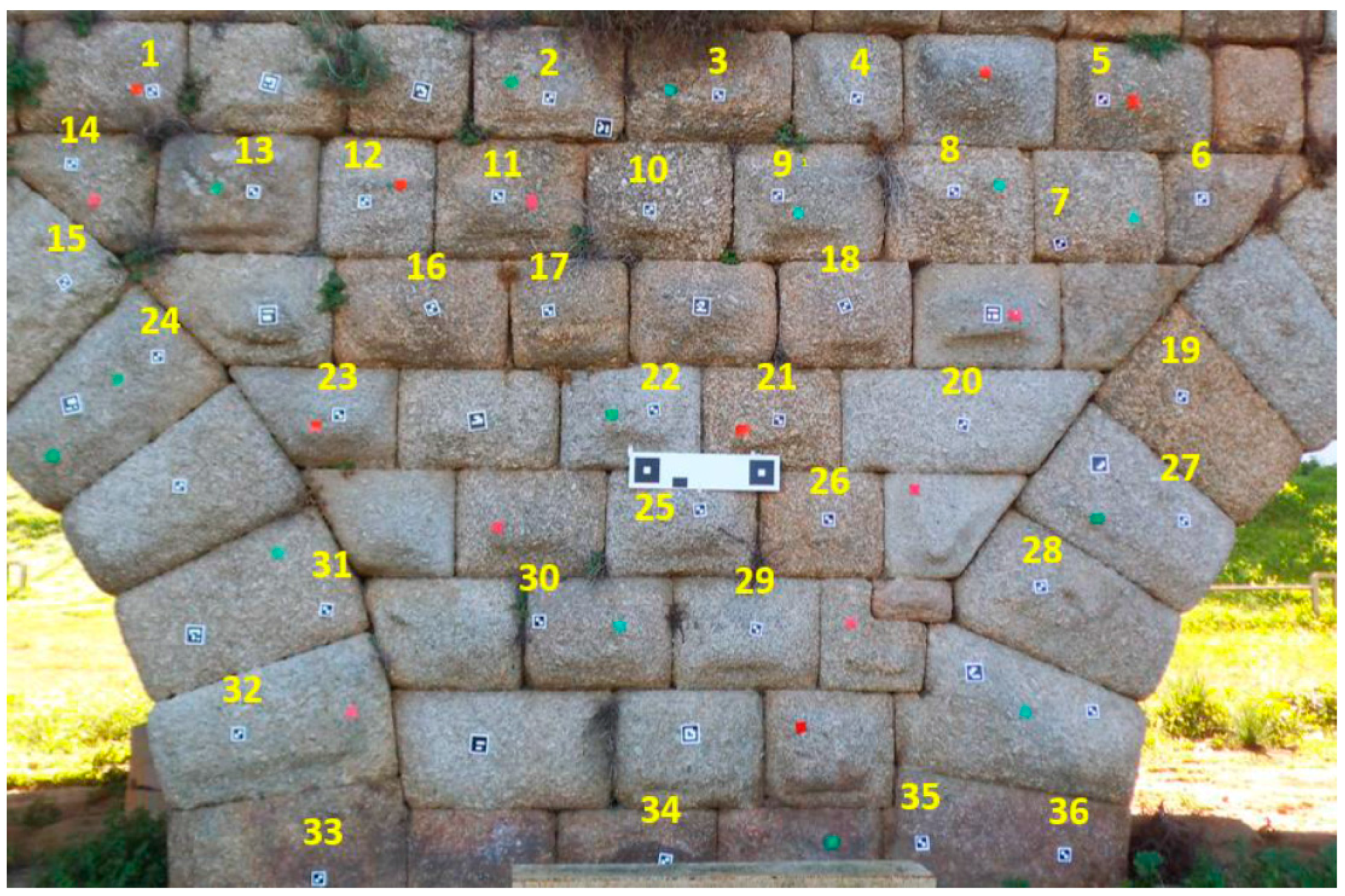

Regarding Photogrammetry, the coordinates of the centers of the symmetrical targets (Figure 4) were measured by EM on a canvas from distances of 1, 2, 3, 4, 5 and 6 m. Subsequently, these measurements were compared with those obtained by means of the high precision Total Station.

Symmetrical targets were used with asymmetric targets and the Stereo target. The asymmetric targets served for the automatic orientation of the stereoscopic pairs, because this is the most accurate way according to the manufacturer. The Stereo target was also used to scale the obtained measurements.

Regarding the measurement by 3D Photomodeling, high-resolution point clouds of the canvas were obtained by EM from distances of 1–6 m. Subsequently, the centers of the symmetrical targets were measured in the point clouds by means of CloudCompareV2 and compared with the coordinates obtained by the high precision Total Station. Since no point of the clouds obtained by EM coincides exactly with the center of a target, it is necessary to locate and measure the point closest to this center (not the real center) using CloudCompareV2. In addition, only the coordinates of the targets that could be correctly identified were measured.
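Picking the cloud point closest to a target center can be expressed as a nearest-neighbor search. The sketch below is only an illustration of that idea. In the study the point was located interactively in CloudCompareV2, and the function name and the brute-force search are assumptions made here for clarity.

```python
import math

def closest_point(cloud, target_center):
    """Return (point, distance) for the cloud point nearest to target_center.

    `cloud` is a sequence of (x, y, z) tuples; a brute-force scan is used,
    which is adequate for a small neighborhood cropped around one target.
    """
    if not cloud:
        raise ValueError("empty point cloud")
    best = min(cloud, key=lambda p: math.dist(p, target_center))
    return best, math.dist(best, target_center)

# Example: three cloud points near an approximate target center.
cloud = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (0.1, 0.0, 0.0)]
point, offset = closest_point(cloud, (0.12, 0.0, 0.0))
```

The returned offset is the distance between the selected point and the intended center, which is part of what the later operator error estimate has to absorb.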

2.3. Data Processing

The coordinates measured by EM (x, y, z) and those obtained by the Total Station and the Scanstation (X, Y, Z) are georeferenced in different coordinate systems. To be able to compare them, the coordinates obtained by EM were transformed to the coordinate system provided by the Total Station. The transformation used was the so-called Helmert or 7-parameter transformation. Its three steps are: (1) three rotation angles (Ω, Φ, Κ); (2) three translations (Tx, Ty, Tz); and (3) a change of scale (λ). All except the last step were calculated using the EM coordinate system. Through the rotations, both systems of coordinates become parallel. Through the translations, both systems have the same origin of coordinates. Finally, through the scale factor, both systems have the same measurement units. Nonetheless, the application of the scale factor may alter the measurements [36], and for this reason it was not applied.

where:

The equations were linearized for a point P by means of the development in Taylor series to the first term:

On the basis of the linearized equations of general expression and knowing the coordinates in both systems of at least two points, the following equations were formed:

Expressing the system of equations in matrix form:

Applying a least squares adjustment, the system of equations was solved and 6 transformation parameters were obtained (Ω, Φ, Κ, Tx, Ty, and Tz). For this adjustment, only half of the measured coordinates of the centers of the symmetrical targets were used. These were called Transformation Points. Subsequently, with the transformation parameters obtained, the other half of the measured target-center coordinates were transformed from the EM coordinate system to the Total Station coordinate system. The resulting Validation Points have two sets of coordinates in the coordinate system established by the Total Station: (1) coordinates transformed to the Total Station coordinate system from the measurements performed by EM; and (2) reference coordinates directly measured by the Total Station.
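Once the six parameters are known, applying them to the Validation Points is straightforward. The following sketch shows that step only, not the least squares estimation. It assumes the rotation order R = Rz(Κ)·Ry(Φ)·Rx(Ω) with angles in radians, which is one common convention and may differ from the one used in the study. The alternating split into Transformation and Validation Points is likewise a hypothetical choice, since the text only states that half of the points were used for each.

```python
import math

def rotation_matrix(omega, phi, kappa):
    """Build R = Rz(kappa) * Ry(phi) * Rx(omega); angles in radians (assumed convention)."""
    co, so = math.cos(omega), math.sin(omega)
    cp, sp = math.cos(phi), math.sin(phi)
    ck, sk = math.cos(kappa), math.sin(kappa)
    return [
        [ck * cp, ck * sp * so - sk * co, ck * sp * co + sk * so],
        [sk * cp, sk * sp * so + ck * co, sk * sp * co - ck * so],
        [-sp, cp * so, cp * co],
    ]

def helmert(point, rotation, translation, scale=1.0):
    """Apply X = T + scale * R * x; scale stays 1.0 because it was not applied."""
    return tuple(
        translation[i] + scale * sum(rotation[i][j] * point[j] for j in range(3))
        for i in range(3)
    )

def split_points(pairs):
    """Alternate point pairs into Transformation Points and Validation Points."""
    return pairs[0::2], pairs[1::2]

# Example: a 90 degree rotation about Z followed by a translation.
R = rotation_matrix(0.0, 0.0, math.pi / 2.0)
transformed = helmert((1.0, 0.0, 0.0), R, (10.0, 20.0, 30.0))
```

Keeping the Validation Points out of the adjustment means the errors computed on them are not biased by the fit itself.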

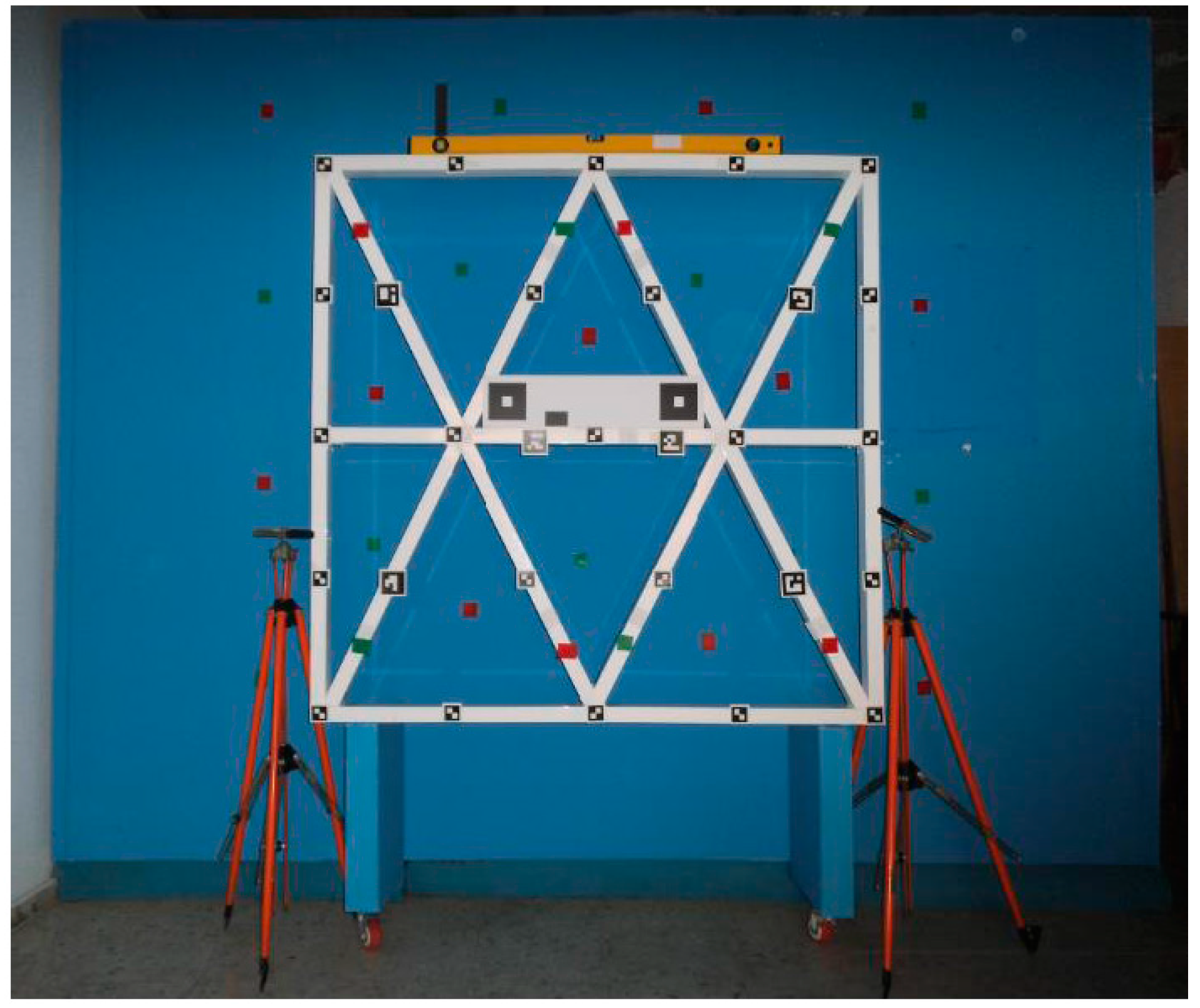

2.4. Uncertainty Assessment

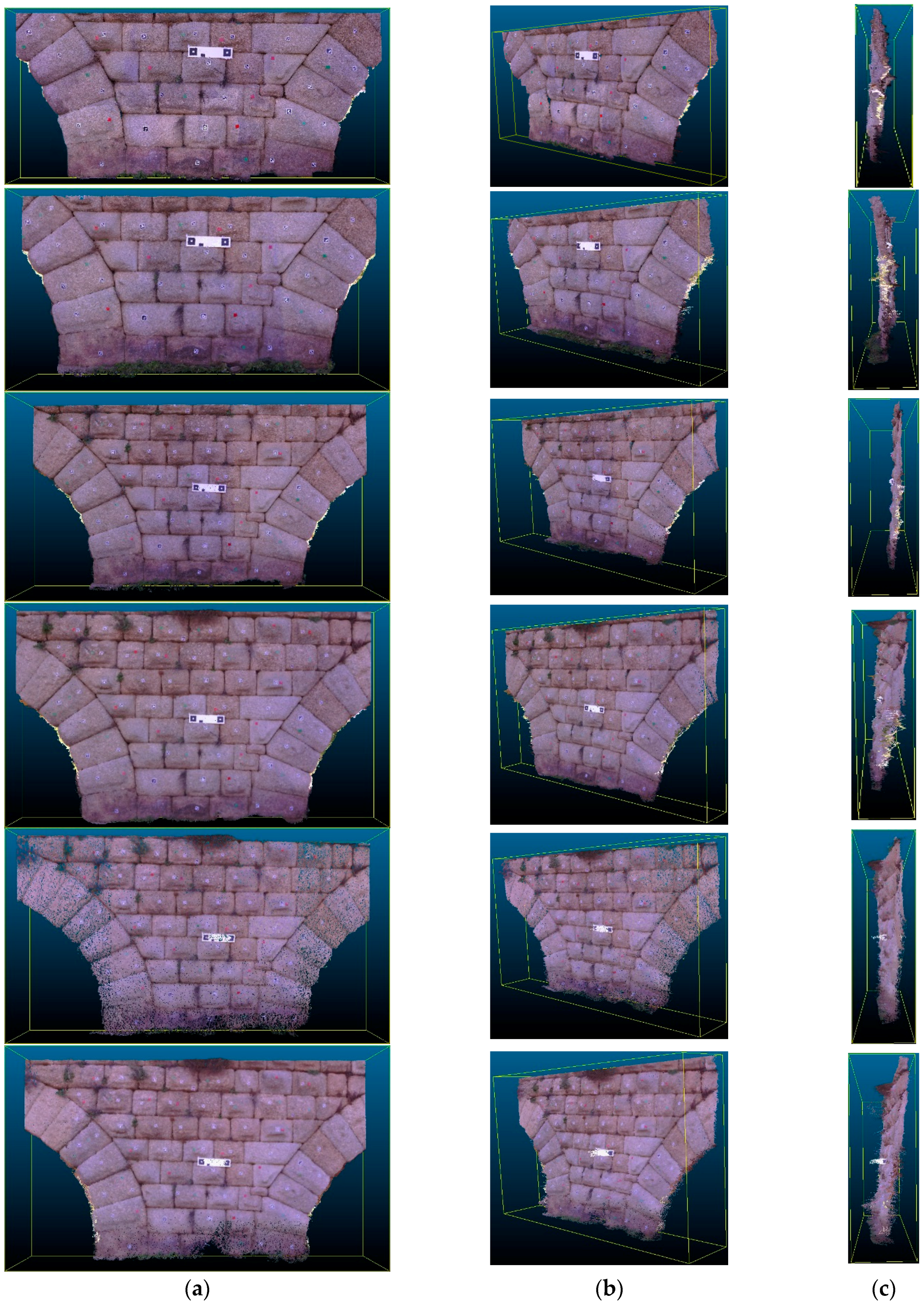

The measurements were made at the Roman Bridge in Merida (Spain), on a canvas of approximately 6 × 5 m² (Figure 5 and Figure 6). This bridge, being of granite, presents an optimal texture for the automatic correlation of images. EM was evaluated according to how correctly it measured elements placed at different depth levels.

The metric quality of the measurements obtained by EM was evaluated using the method proposed by Hong et al. [31]. The three-dimensional coordinate measurements and point clouds obtained by EM (Figure 7) were compared to a set of Total Station point measurements used as reference points. In the mapping accuracy assessment, comparisons were based on well-distributed and clearly identifiable target centers spread across the canvas. A number of reference points were measured for each test side. In addition, using as a reference the tolerances established in the guidelines of the GSA for BIM Guide for 3D Imaging [32], the viability and acceptability of this measurement device for BIM generation was determined. According to [32], tolerance is the dimensional deviation allowed as error from the true value in the specified coordinate frame. The true value is a measurement obtained by other means.

Firstly, the precision of the measurements made by EM by Photogrammetry and 3D Photomodeling is evaluated through the Euclidean average distance error ($\delta_{avg}$):

$$\delta_{avg} = \frac{1}{n}\sum_{i=1}^{n}\left| R\,a_i + T - b_i \right| \qquad (10)$$

where $a_i$ is the measurement carried out by EM for the $i$-th check point, in one case by Photogrammetry and in the other by 3D Photomodeling, $b_i$ is the measurement made for the same point by the Total Station, and $n$ is the number of check points. In addition, the rotation and translation parameters of the 3D Helmert transformation are $R$ and $T$, respectively. Note that scale was not considered in this transformation [37].

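Secondly, the corresponding average error is calculated, together with the error vectors in the x, y and z directions. The Root Mean Square Error (RMSE) is then calculated to assess the quality of the points captured by EM and measured by means of Photogrammetry and 3D Photomodeling:

$$RMSE = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left( a_i^{t} - b_i \right)^{2}}$$

where $a_i^{t}$ is the $i$-th point transformed to the coordinates of the Total Station, $b_i$ is the same point measured by the Total Station, and $n$ is the number of check points.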

Thirdly, the quality of the points measured by EM is also assessed by calculating the Spherical Accuracy Standard (SAS). The SAS, which represents the spherical radius of a 90% probability sphere [38], is defined as

$$SAS = 2.5 \times 0.3333 \times \left( RMSE_x + RMSE_y + RMSE_z \right)$$

This represents the positional accuracy of the coordinates obtained by Photogrammetry and of the point cloud obtained by 3D Photomodeling at a 90% confidence level. The calculation of errors was repeated for the measurements carried out by EM by Photogrammetry at distances of 1–6 m from the measured object.
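The three quality measures described in this section can be computed from the Validation Points as sketched below. This is an illustrative implementation rather than the authors' code. It assumes the EM points have already been transformed into the Total Station system, that coordinates are in millimeters, and that the SAS takes the widely quoted form 2.5 × 0.3333 × (RMSE_x + RMSE_y + RMSE_z). All function names are hypothetical.

```python
import math

def average_error(transformed, reference):
    """Mean Euclidean distance between transformed EM points and reference points."""
    return sum(math.dist(a, b) for a, b in zip(transformed, reference)) / len(reference)

def rmse_per_axis(transformed, reference):
    """Root mean square error along x, y and z, returned as a 3-tuple."""
    n = len(reference)
    return tuple(
        math.sqrt(sum((a[k] - b[k]) ** 2 for a, b in zip(transformed, reference)) / n)
        for k in range(3)
    )

def spherical_accuracy_standard(rmse_xyz):
    """90% spherical accuracy, assuming SAS = 2.5 * 0.3333 * (RMSEx + RMSEy + RMSEz)."""
    return 2.5 * 0.3333 * sum(rmse_xyz)

# Example with two made-up check points (values are illustrative only).
em_points = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]
ts_points = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
avg = average_error(em_points, ts_points)
rmse = rmse_per_axis(em_points, ts_points)
sas = spherical_accuracy_standard(rmse)
```

Reporting the per-axis RMSE alongside the average error makes it easier to see whether the error is dominated by one direction, for example depth.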

3. Results

Results were tabulated (Table 2 and Table 3) and shown in graphs. Different points were chosen in each case (3D Photomodeling and Photogrammetry modes) depending on the correct identification of target centers by the operator.

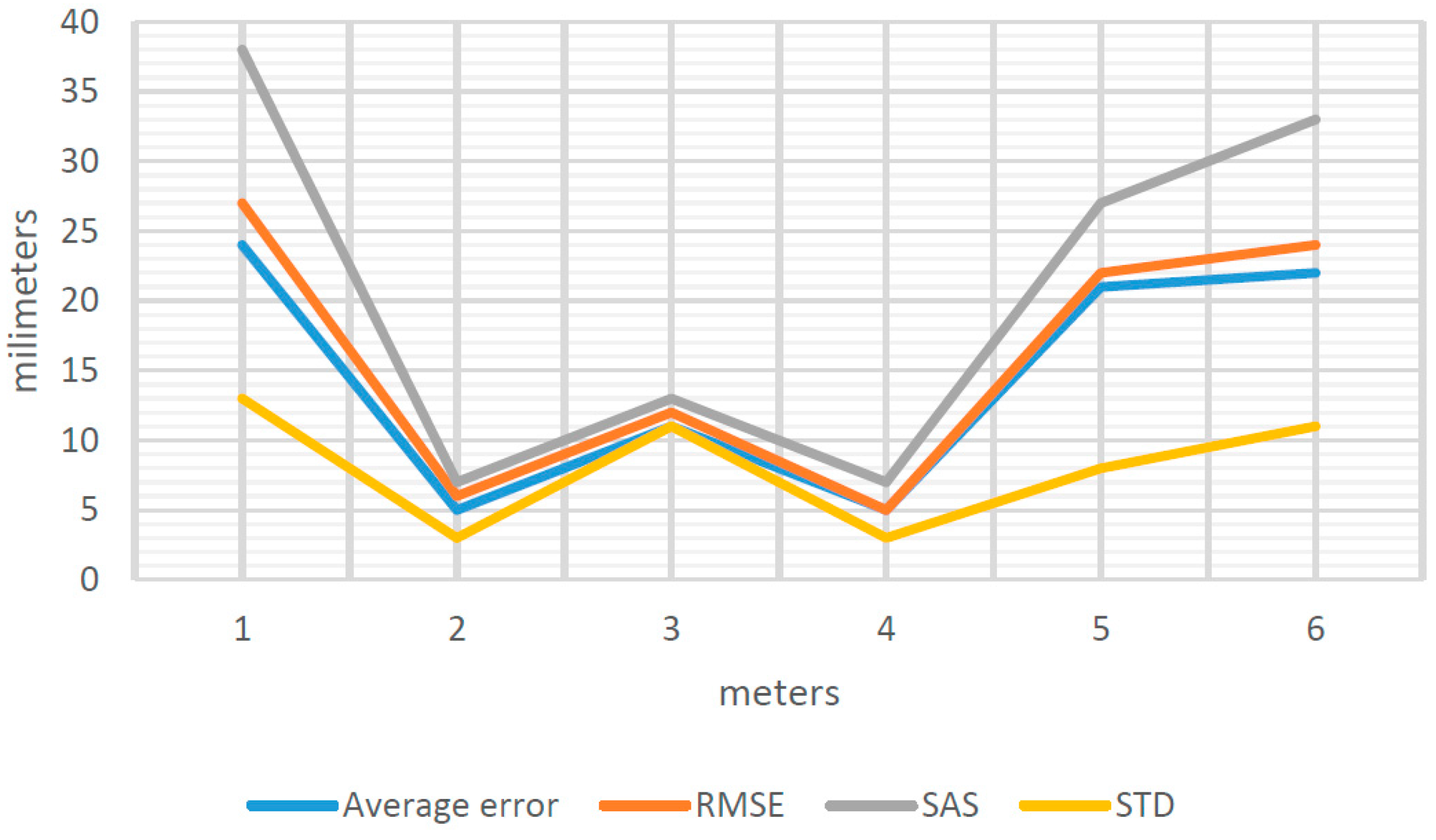

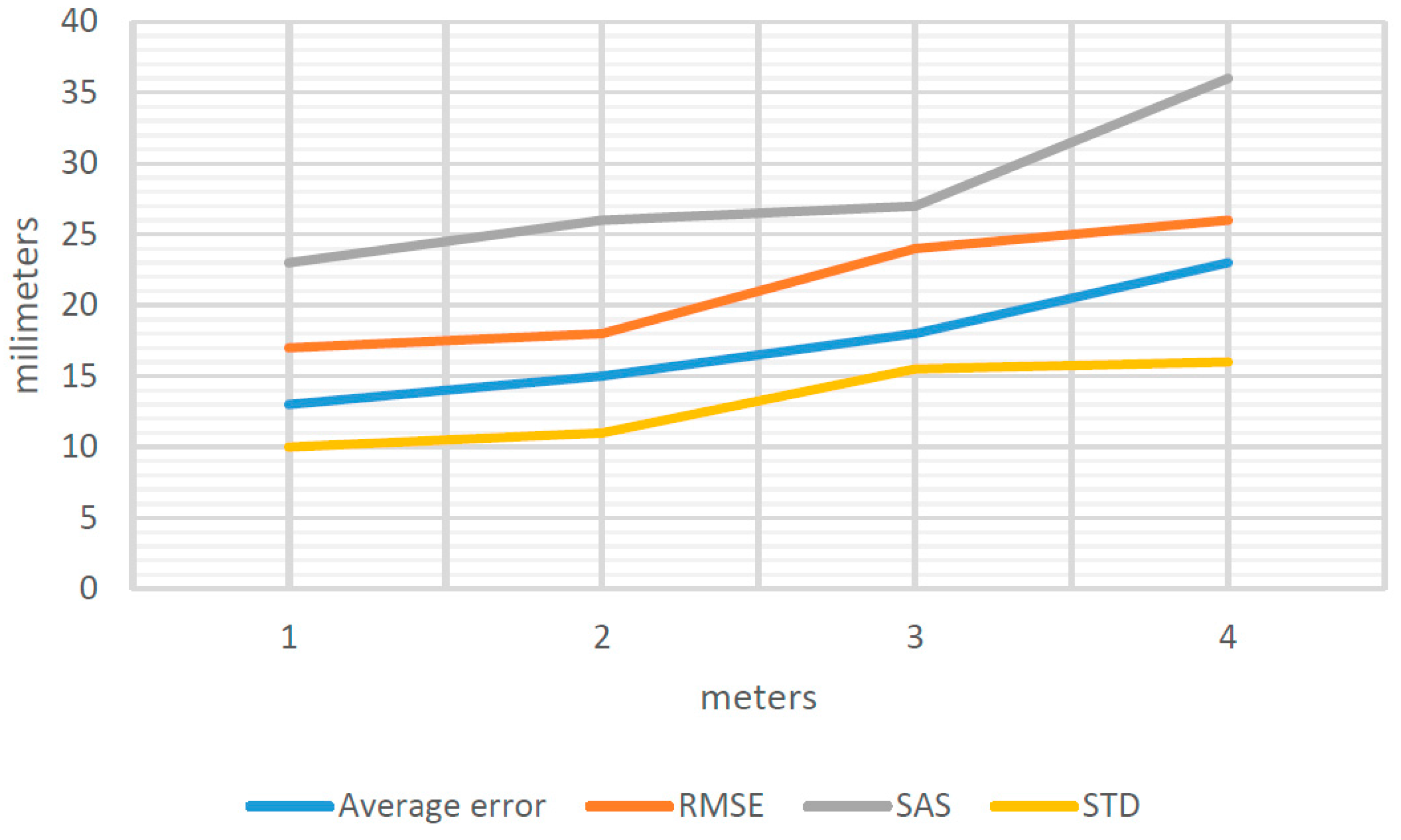

Similarly, estimates were obtained for observation distances from 1 to 6 m, giving the general results shown in Figure 8.

The values of the Average Error, RMSE, SAS and STD (Figure 8) varied depending on the separation distance between the object to be measured and the position from which data were captured by means of EM.

Nonetheless, as shown in Figure 8, error does not increase progressively as the separation distance increases. In fact, the optimum separation distances are 2, 3 and 4 m and not 1 m, as might be supposed. At 1, 5 and 6 m, the errors increase considerably.

The results obtained demonstrate that geometric models measured from between 2 and 4 m from the object satisfy the requirements of the GSA for BIM Guide for 3D Imaging [32] (Section 2.3, types of deliverables from 3D data) for projects in urban design, architectural design, room space measurement, historic documentation, renovation (level 2) and above ceiling condition capture. Subsequently, the quality of the point clouds obtained by EM by Photomodeling was evaluated. The point clouds were obtained from 1–6 m from the measured object. However, it was not possible to obtain errors for 5 and 6 m, since the low density of the mesh does not allow the centers of the symmetrical targets to be correctly identified. As a result, it was impossible to measure the coordinates of these targets.

As before, the measurements made by EM by 3D Photomodeling are evaluated through the Euclidean average distance error (see Equation (10)).

Similarly, estimates were obtained for observation distances from 1 to 4 m, producing the general results shown in Figure 9.

The values of the Average Error, RMSE, SAS and STD (Figure 9) vary depending on the separation distance between the object to be measured and the position from which data are captured by EM.

As shown in Figure 9, error increases as the separation distance from the object being measured increases. Therefore, the most appropriate distance for taking measurements is 1 m.

These errors show that point clouds captured between 1 and 4 m from the measured object satisfy the requirements of the GSA for BIM Guide for 3D Imaging [32] for level 1 projects, urban design and historic documentation.

Nonetheless, errors for measurements obtained by both Photogrammetry and 3D Photomodeling are influenced by operator error. This error is due to the limited visual acuity of the operator when identifying a point that appears in each picture. Points of this type are identified for different purposes, such as the adjustment of photographic pairs and the generation of geometric models. Estimating this error allows its influence on the previously obtained errors to be evaluated. To estimate it, the centers of the symmetrical targets were identified in the 3D point clouds obtained by the Scanstation (considering only targets with a point measured close to their centers), and their coordinates were measured and compared with the coordinates measured by the Total Station, since these data are taken as correct and their associated errors are known.

The differences between coordinates are used to calculate the error for the vectors x, y, z. The error for each target is measured. In this case, we use the average separation distance from the measured object, 3 m, to determine the standard deviation for the point cloud (Table 4). In this manner, the standard deviation of the measurements for the targets is equal to 11 mm.

In addition, this standard deviation is related to: (1) the standard deviation for the Scanstation, $STD_{SC}$ = ±3 mm in the X, Y, Z coordinates; (2) the standard deviation for the Total Station, $STD_{ST}$ = 2 mm ± 2 ppm and 2″ (0.6 mgon), also supplied by the manufacturer; and (3) the standard deviation of the operator when the operator measures the targets.

The estimated error committed by the operator in identifying the targets is, in this case, equal to 10 mm (Table 5). Likewise, comparing the standard deviations for measurements by Photogrammetry and Photomodeling (Figure 8 and Figure 9) with the operator error estimated previously shows (Table 5) that this error has a very large influence on the measurements carried out.

In this respect, the estimated error of the operator is roughly 91% of the total error measured by Photogrammetry and 62% when we measure with 3D Photomodeling. Note that the color of targets should be in black and white, since when we carried out tests with red and green targets, the error estimate for the operator was even higher.
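The operator error quoted above is consistent with separating independent error sources in quadrature. The sketch below reproduces that arithmetic under stated assumptions: the three contributions are treated as independent, and the Total Station term is taken as 2 mm, since the ppm and angular parts are negligible at a range of about 3 m. The function name is hypothetical and the combination rule is an assumption, not a formula confirmed by the text.

```python
import math

def operator_std(std_targets_mm, std_scanner_mm, std_total_station_mm):
    """Operator standard deviation left after removing instrument terms in quadrature.

    Assumes std_targets^2 = std_scanner^2 + std_total_station^2 + std_operator^2.
    """
    residual = std_targets_mm ** 2 - std_scanner_mm ** 2 - std_total_station_mm ** 2
    if residual < 0:
        raise ValueError("instrument terms exceed the observed standard deviation")
    return math.sqrt(residual)

# Values from the text: 11 mm observed, 3 mm Scanstation, 2 mm Total Station.
std_op = operator_std(11.0, 3.0, 2.0)  # sqrt(108), about 10.4 mm
```

With these inputs the result is about 10.4 mm, in line with the 10 mm reported in Table 5.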

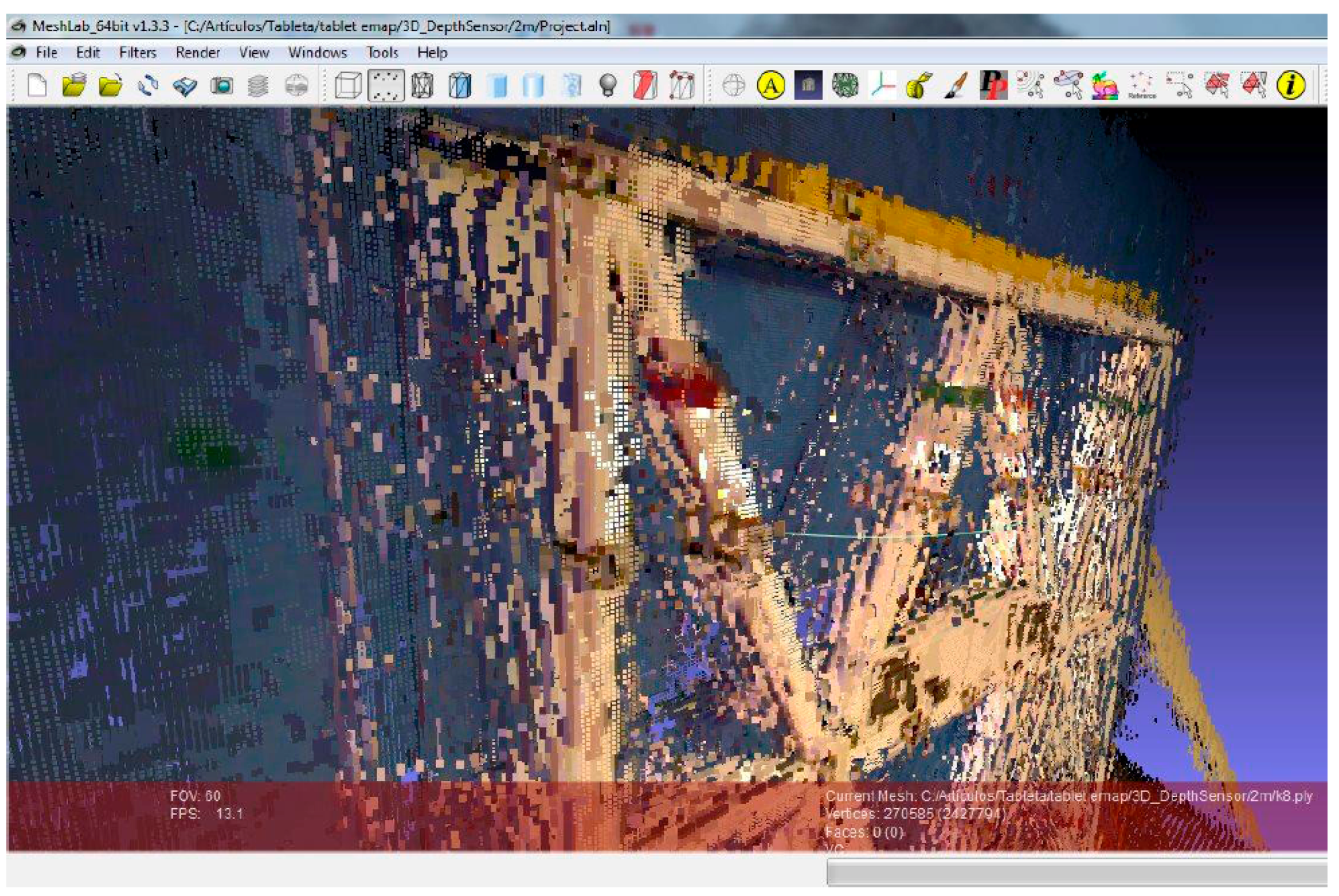

4. 3D Modeling of Indoor Environments with EM

The instrument under study (EM) can produce 3D models of the interiors of buildings and structures (indoor). For this, the manufacturer recommends working with the depth sensor system of the instrument (Figure 1), which creates a complete and scaled point cloud of an indoor environment in real time, with an object-to-device distance of less than 4 m.

To analyze the accuracy of the EM in this mode of data capture, two experiments were designed. The first was conducted inside a historic building that presents an optimal texture for the automatic correlation of images (Figure 10). The second, intended to check the operation of the equipment in unfavorable working conditions, was carried out in premises where an aluminum structure with a smooth, bright, white surface was placed 0.5 m in front of a smooth blue wall (Figure 11).

Table 6 and the models of Figure 12 show the results obtained in the first experiment.

However, the tests conducted in the second experiment were not successful, because the resulting models are not acceptable (Figure 13) under these working conditions.

5. Conclusions

The tests show that the precisions indicated by the EM manufacturer are better than those obtained. Likewise, errors for 3D Photomodeling could not be quantified for measurements taken more than four meters from the object, as it was impossible to identify the centers of the symmetrical targets.

Errors vary with the separation distance when capturing data by means of EM, which is a key factor in the precision of measurements. Evaluated against the requirements of the GSA for BIM Guide for 3D Imaging [32], the errors obtained show that measurements by Photogrammetry are suitable for urban design projects, room space measurement, historical documentation, renovation and above ceiling condition. The measurements obtained by 3D Photomodeling (outdoor) and 3D Modeling with Depth Sensor (indoor) are suitable for level 1 projects for urban design and historical documentation.

Nonetheless, to reduce operator error, we propose adding to the software an algorithm for the automatic recognition of the centers of symmetrical targets or of singular homologous points used for taking measurements. In this way, the estimated error produced by the operator would be minimized.

In addition, an error report that comments on the adjustment of photogrammetric models is recommended prior to obtaining the coordinates by Photogrammetry or the cloud points using 3D Photomodeling. Thus, the user would know whether the dimension of error in the photogrammetric adjustment is acceptable when performing a particular task.

Furthermore, it would be convenient for EM to report the parameter values used for the internal, relative and absolute orientation of each picture once the adjustment has been made. EM should also allow the precise values of these parameters to be entered. Thus, a user could resume a working session without having to repeat the entire process of adjusting each picture. Users could even work with historical pictures whose orientation parameters are known.

Finally, the convenient portability of EM and its calculation of error make it complementary to the Scanstation, particularly with measurements difficult to obtain by the latter device.