PIMR: Parallel and Integrated Matching for Raw Data

Abstract

1. Introduction

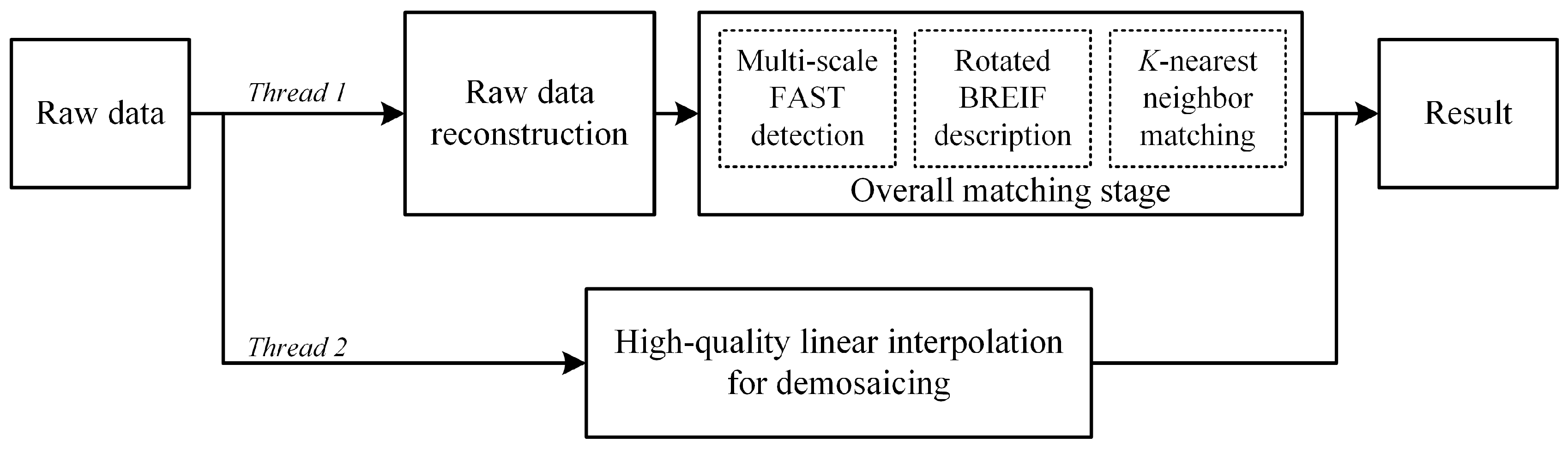

2. Parallel and Integrated Matching for Raw Data

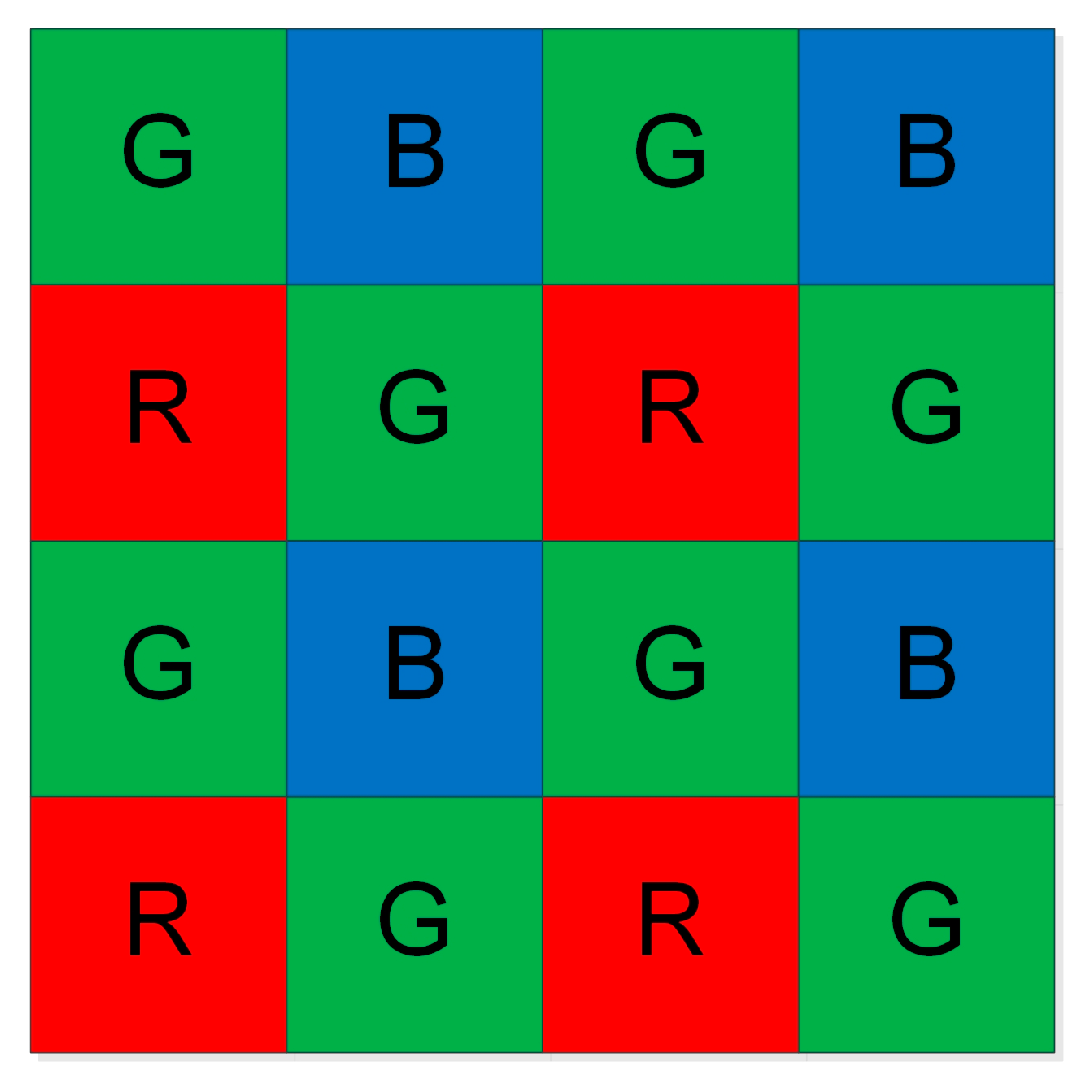

2.1. Raw Data

2.2. Reconstruction for Raw Data

2.3. Parallel and Integrated Framework

3. Performance Evaluation

3.1. Experimental Details

3.2. Accuracy

3.3. Time-Cost

| Methods | Demosaicing (s) | Raw Data Reconstruction (s) | Overall Matching (s) | Total (s) |
|---|---|---|---|---|
| ORB | 0.009 | - | 0.040 | 0.049 |
| BRIEF | 0.009 | - | 0.049 | 0.058 |
| BRISK | 0.009 | - | 0.225 | 0.234 |
| FREAK | 0.009 | - | 0.231 | 0.240 |
| PIMR | - | 0.004 | 0.030 | 0.034 |
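The Total column is the sum of the per-stage times, so the table can be checked mechanically, and dividing each baseline's total by PIMR's total gives its relative cost. The Python sketch below does both using only the figures reported in the table. Treating a "-" entry as zero seconds is an assumption, and the names `TIMINGS`, `check_totals` and `speedups` are hypothetical helpers for illustration, not part of PIMR.

```python
# Per-stage timings (seconds) copied from the Time-Cost table.
# A stage a method does not use ("-" in the table) is recorded as 0.0 (assumption).
TIMINGS = {
    "ORB":   {"demosaic": 0.009, "raw_recon": 0.0,   "matching": 0.040, "total": 0.049},
    "BRIEF": {"demosaic": 0.009, "raw_recon": 0.0,   "matching": 0.049, "total": 0.058},
    "BRISK": {"demosaic": 0.009, "raw_recon": 0.0,   "matching": 0.225, "total": 0.234},
    "FREAK": {"demosaic": 0.009, "raw_recon": 0.0,   "matching": 0.231, "total": 0.240},
    "PIMR":  {"demosaic": 0.0,   "raw_recon": 0.004, "matching": 0.030, "total": 0.034},
}


def check_totals(timings, tol=1e-9):
    """Return the methods whose reported total differs from the sum of their stages."""
    inconsistent = []
    for name, t in timings.items():
        stage_sum = t["demosaic"] + t["raw_recon"] + t["matching"]
        if abs(stage_sum - t["total"]) > tol:
            inconsistent.append(name)
    return inconsistent


def speedups(timings, reference="PIMR"):
    """Ratio of each method's total time to the reference method's total time."""
    ref_total = timings[reference]["total"]
    return {
        name: t["total"] / ref_total
        for name, t in timings.items()
        if name != reference
    }


if __name__ == "__main__":
    print("inconsistent totals:", check_totals(TIMINGS))
    for name, ratio in speedups(TIMINGS).items():
        print(f"{name}: total time is {ratio:.2f}x that of PIMR")
```

Because the script only re-derives the reported numbers rather than taking new measurements, the ratios inherit whatever hardware and test conditions produced the table.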

3.4. Matching Samples with PIMR

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, Z.; Yang, J.; Zhao, J.; Han, P.; Chai, Z. PIMR: Parallel and Integrated Matching for Raw Data. Sensors 2016, 16, 54. https://doi.org/10.3390/s16010054


Li, Zhenghao, Junying Yang, Jiaduo Zhao, Peng Han, and Zhi Chai. 2016. "PIMR: Parallel and Integrated Matching for Raw Data" Sensors 16, no. 1: 54. https://doi.org/10.3390/s16010054
