An Efficient Data Compression Model Based on Spatial Clustering and Principal Component Analysis in Wireless Sensor Networks

Abstract

1. Introduction

- We propose a model based on spatial clustering and principal component analysis (PCA) to compress the data transmitted in wireless sensor networks. The novelty lies in taking the strong correlation among sensor data into account during the PCA process.

- We propose an adaptive strategy to guarantee the error bound of each sensor node, ensuring the precision of our compression model.

- We extend the cluster head selection strategy of [12] to reduce energy consumption further.

- We verify the performance of our proposed model through computer simulations.

2. Background

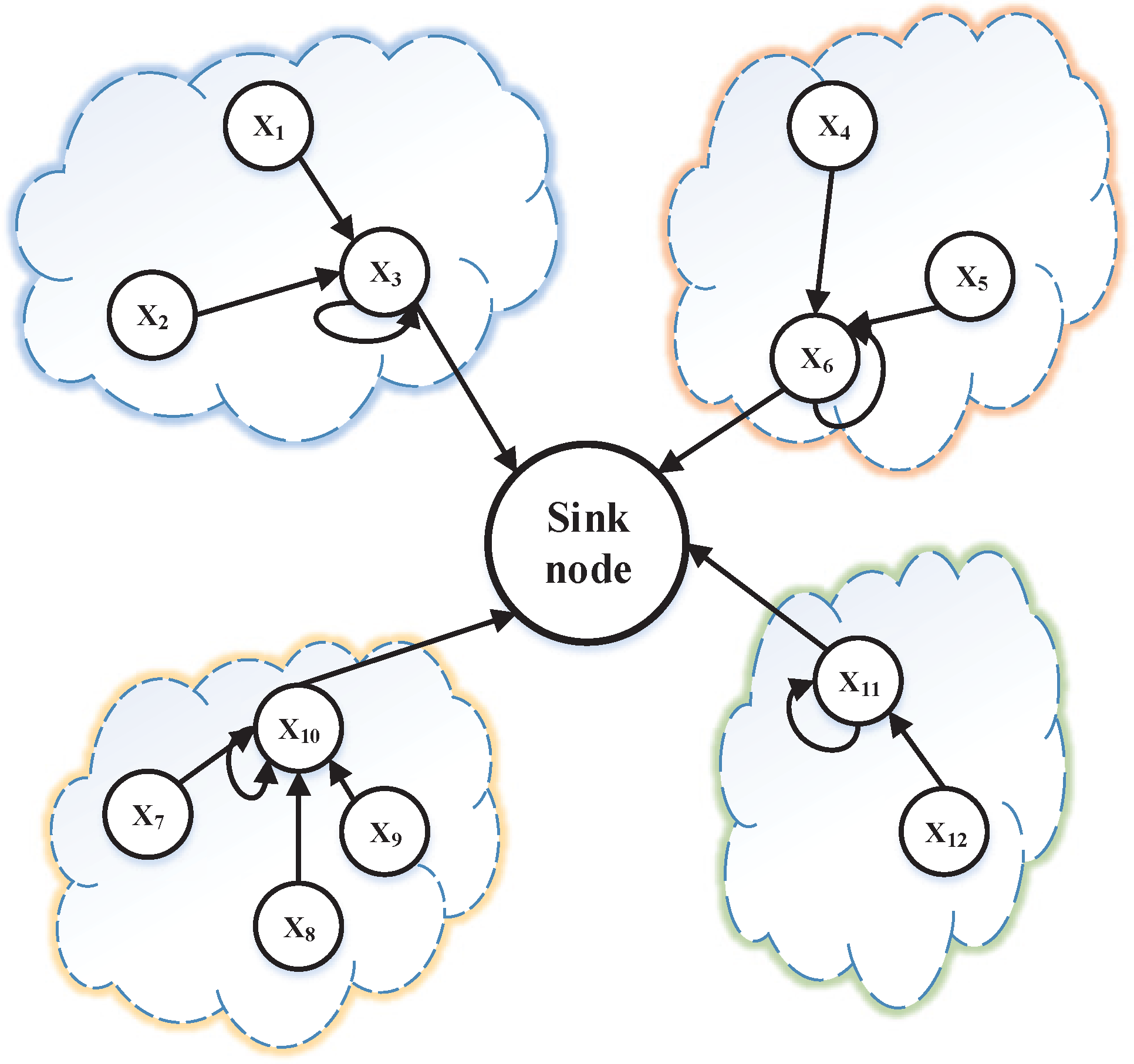

2.1. Spatial Clustering and Autoregressive Model

2.2. Principal Component Analysis

3. The Cluster Head Selection Strategy

4. System Model

4.1. Notations and Formalization

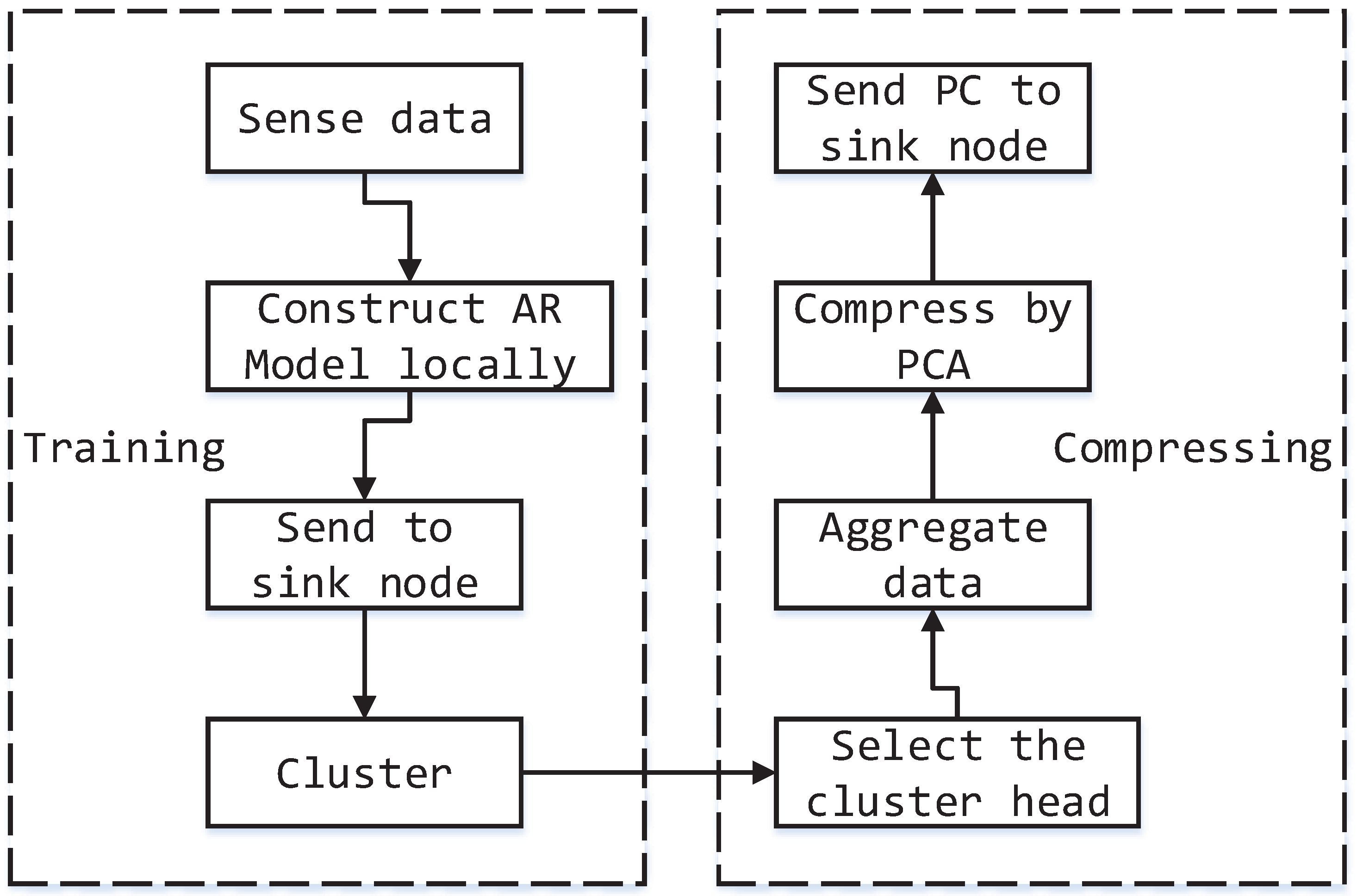

4.2. Compression Model

- First, a set of historical data of each sensor is collected and processed into a matrix by the method proposed in Section 2.1, i.e., using the latest n − 1 readings as the input and the n-th reading as the output. The n readings can be regarded as one observation, and m observations are obtained in the same way. The data of each sensor can thus be represented by a matrix of size m × n.

- Then, to avoid the cost of transmitting data to the sink node, we construct an autoregressive (AR) model for each sensor locally, based on the data matrix acquired by the above process. The learning phase of the AR model can be treated as a linear regression, a standard technique in machine learning. The parameters are estimated by minimizing the mean square error between the real data and the predicted data through gradient descent. For more details, refer to [21].

- Next, the AR parameters and a sequence of sensor readings of each node are sent to the sink node. The power cost of this transmission can be ignored, because the temporal-spatial correlation does not change frequently and therefore needs to be updated only at long intervals. After all of the data have been gathered at the sink node, we use a clustering algorithm to group the sensor nodes into different clusters [22]. Clustering reveals the underlying pattern and correlation among the sensor nodes. In our model, we use the k-means clustering algorithm, which aims to partition the m observations into k collections $S = \{S_1, S_2, \dots, S_k\}$ so as to minimize the sum of the squared distances between samples and the corresponding cluster centroid, which can be formalized by:

  $$\underset{S}{\arg\min} \sum_{i=1}^{k} \sum_{x \in S_i} \lVert x - \mu_i \rVert^2$$

  where $\mu_i$ is the mean of the points in $S_i$. Now, the training stage has come to an end.

- Further, the clustering result is distributed to each sensor node, so that the correlation between sensor nodes is known throughout the network. Then, the cluster head selection strategy described in Section 3 is applied at each transmission epoch to determine the head of each cluster, and all of the data of each cluster are gathered at the cluster head and then compressed by principal component analysis, i.e., each sensor node transmits a k-bit message synchronously. Suppose that there are m sensor nodes in the current cluster; the head of the current cluster then obtains a data matrix, from which we compute the covariance matrix Σ. The eigenvector matrix W of the covariance matrix Σ can be calculated through eigenvalue decomposition. Following this, we obtain the transformation matrix by selecting the first p columns of the eigenvector matrix W, where p is the number of PCs. The value of p is decided by Equation (5) to guarantee the error bound of our model. To elaborate, each cluster head node evaluates the quantity in Equation (5) and sets p to the minimum value that satisfies the corresponding inequality, where δ is a predefined value that measures the error bound the system can tolerate. Afterwards, the data matrix is transformed into a new space by multiplying it on the right by the transformation matrix. (This form differs slightly from Equation (3) only because the original data are laid out differently: in Equation (3), observations are arranged by columns, whereas they are arranged by rows here.) Due to the strong temporal-spatial correlation between different nodes in the same cluster, we can use fewer PCs to transform the original data while retaining a considerable share of the variance. Thus, compression is achieved at a lower cost.

- Finally, the compressed data matrix and the transformation matrix are sent to the sink node, where the data matrix can be reconstructed by multiplying the compressed matrix by the transpose of the transformation matrix. Thereafter, we calculate the mean square reconstruction error to evaluate the accuracy of the compression model, which serves as a reference for tuning parameters.
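
The clustering, compression and reconstruction steps above can be sketched roughly as follows. This is a minimal NumPy sketch under stated assumptions, not the implementation used in the paper: the function names (`kmeans`, `pca_compress`, `pca_reconstruct`, `mse`) are illustrative, the farthest-first seeding is chosen only to keep the example deterministic, the data are mean-centred before PCA, and p is chosen with a retained-variance criterion as a stand-in for Equation (5), which is not reproduced in this text.

```python
import numpy as np

def kmeans(features, k, iterations=100):
    """Lloyd's k-means over per-node feature vectors; returns (labels, centroids)."""
    features = np.asarray(features, dtype=float)
    # Assumption: farthest-first seeding, a deterministic illustrative choice.
    centroids = [features[0]]
    for _ in range(1, k):
        dist = np.min([np.linalg.norm(features - c, axis=1) for c in centroids], axis=0)
        centroids.append(features[np.argmax(dist)])
    centroids = np.array(centroids)
    for _ in range(iterations):
        # Assign each node to its nearest centroid.
        dist = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
        labels = np.argmin(dist, axis=1)
        # Recompute each centroid as the mean of its members (keep it if empty).
        updated = np.array([
            features[labels == i].mean(axis=0) if np.any(labels == i) else centroids[i]
            for i in range(k)
        ])
        if np.allclose(updated, centroids):
            break
        centroids = updated
    return labels, centroids

def pca_compress(X, delta):
    """Compress X (rows = observations, columns = nodes of one cluster).

    Assumption: p is the smallest number of PCs whose retained variance
    fraction is at least 1 - delta. Returns (Z, W_p, mean).
    """
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    Xc = X - mean                                # centre each column
    cov = Xc.T @ Xc / len(X)                     # covariance matrix
    eigvals, W = np.linalg.eigh(cov)             # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]            # reorder to descending
    eigvals, W = np.clip(eigvals[order], 0.0, None), W[:, order]
    total = eigvals.sum()
    p = 1
    while p < len(eigvals) and eigvals[:p].sum() < (1.0 - delta) * total:
        p += 1
    W_p = W[:, :p]                               # first p columns of W
    return Xc @ W_p, W_p, mean                   # rows are observations

def pca_reconstruct(Z, W_p, mean):
    """Map the compressed matrix back to the original space."""
    return Z @ W_p.T + mean

def mse(X, X_hat):
    """Mean square reconstruction error over all entries."""
    return float(np.mean((np.asarray(X, dtype=float) - X_hat) ** 2))
```

In this sketch the cluster head would send `Z`, `W_p` and `mean` to the sink; when the nodes of a cluster are strongly correlated, `p` stays small and `Z` has far fewer columns than the original data matrix.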

4.3. Cluster Maintenance

5. Performance Evaluation

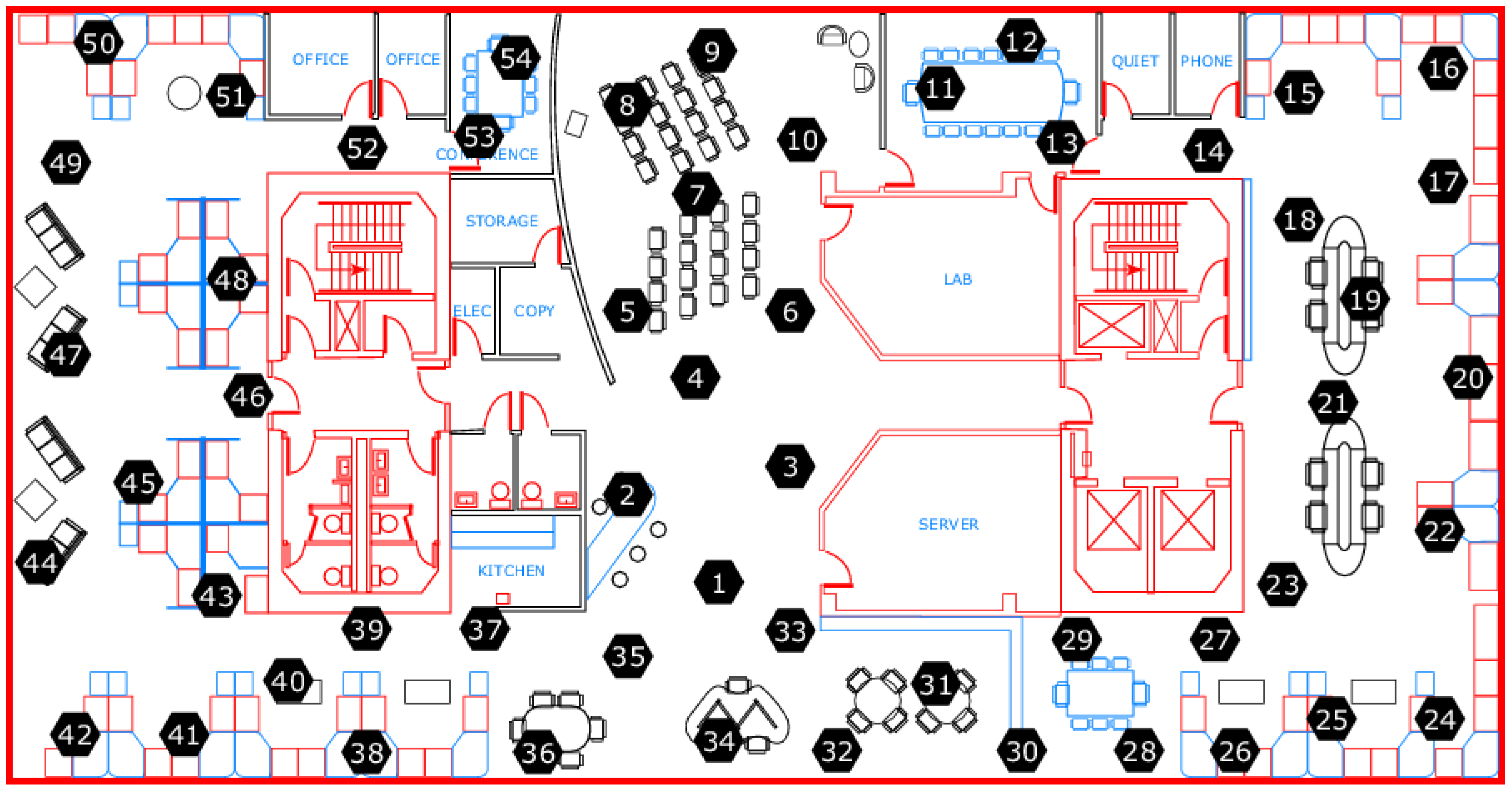

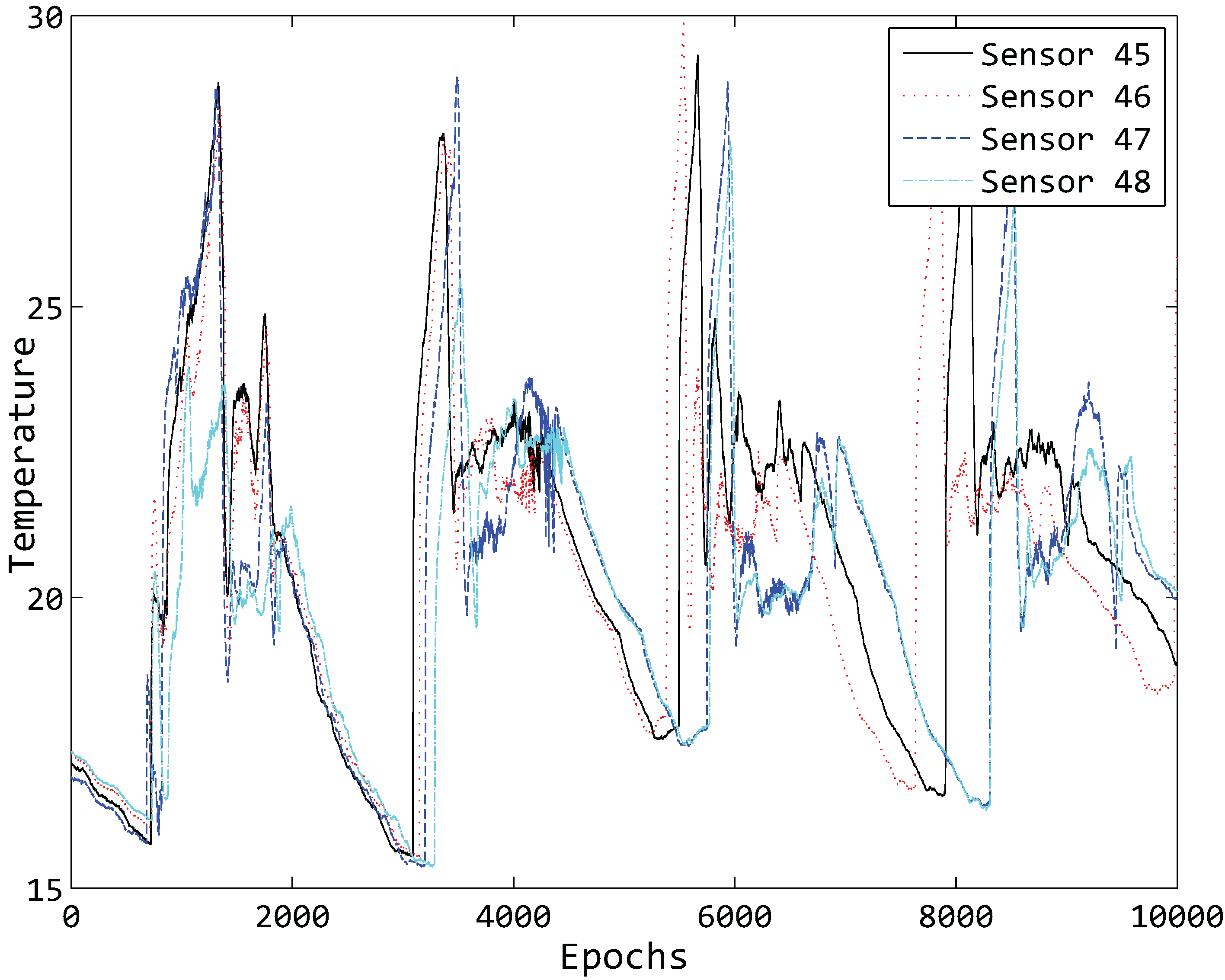

5.1. Data

5.2. Parameters Setting

| Parameter | Value |
|---|---|
| Number of AR model parameters | 50 |
| Size of the training sample set | |
| Number of iterations in batch gradient descent | 5000 |
| Step size of each iteration | |
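
As an illustration of how these settings enter the AR training step of Section 4.2, the following is a minimal sketch, assuming a sliding-window construction of the observation matrix and plain batch gradient descent on the mean square error. The names `build_observations` and `fit_ar` are hypothetical, and the default `step` is a placeholder, since the step size is not given in the table above.

```python
import numpy as np

def build_observations(readings, n):
    """Slide a window of length n over a 1-D series; each row is one observation.

    The first n - 1 columns are the AR inputs, the last column is the target.
    """
    readings = np.asarray(readings, dtype=float)
    m = len(readings) - n + 1
    return np.stack([readings[i:i + n] for i in range(m)])

def fit_ar(obs, iterations=5000, step=0.01):
    """Estimate AR coefficients by batch gradient descent on the mean square error.

    `step` is a placeholder value, not one taken from the paper.
    """
    X, y = obs[:, :-1], obs[:, -1]
    theta = np.zeros(X.shape[1])
    for _ in range(iterations):
        residual = X @ theta - y
        # Gradient of mean((X @ theta - y) ** 2) with respect to theta.
        theta -= step * (2.0 / len(y)) * (X.T @ residual)
    return theta
```

With 50 AR parameters, as in the table, each observation would hold n = 51 readings; in practice the readings may need to be scaled so that the chosen step size keeps the descent stable.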

| Parameter | Value |
|---|---|
| Energy dissipation | nJ/bit |
| Radio amplifier | pJ/bit/m |
| Number of bits in each packet | 1000 bits |
| Radio range of sensor nodes | 10 m |
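
To show how parameters of this kind are typically combined, here is a minimal sketch assuming the first-order radio model of [19], in which the amplifier cost grows with the square of the distance. The constants `E_ELEC` and `EPS_AMP` are placeholder values commonly paired with that model; they are assumptions, not values read from the table above, and the function names are illustrative.

```python
# Placeholder constants (assumptions, not taken from the table above).
E_ELEC = 50e-9      # electronics energy per bit, in J/bit
EPS_AMP = 100e-12   # amplifier energy, in J/bit/m^2 (squared path loss assumed)

def tx_energy(bits, distance_m):
    """Energy in joules to transmit `bits` over `distance_m` metres."""
    return E_ELEC * bits + EPS_AMP * bits * distance_m ** 2

def rx_energy(bits):
    """Energy in joules to receive `bits`."""
    return E_ELEC * bits

# One 1000-bit packet sent over the 10 m radio range, then received.
packet_cost = tx_energy(1000, 10.0) + rx_energy(1000)
```

Under these assumed constants, sending fewer packets through compression reduces both terms, while shorter hops reduce only the amplifier term.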

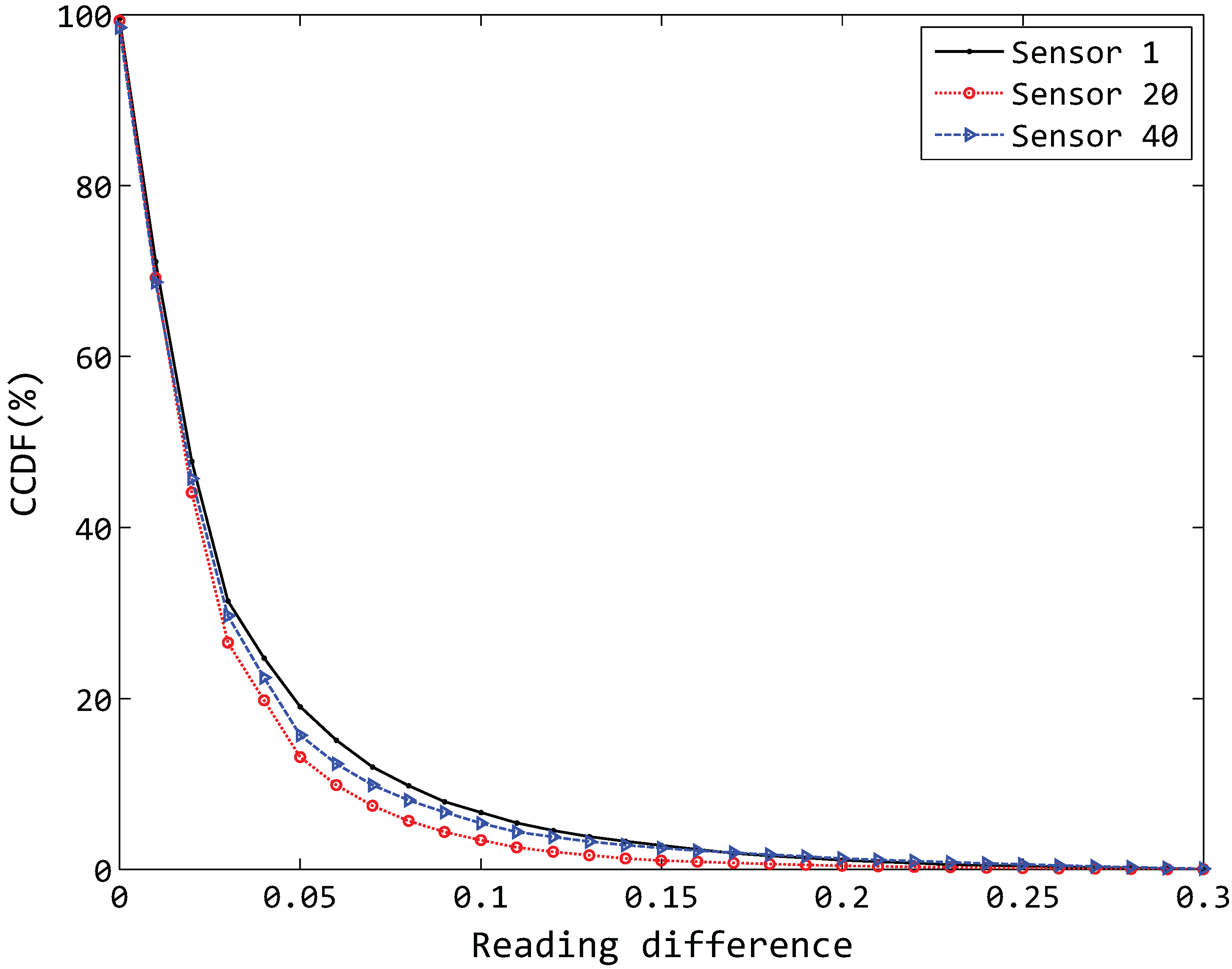

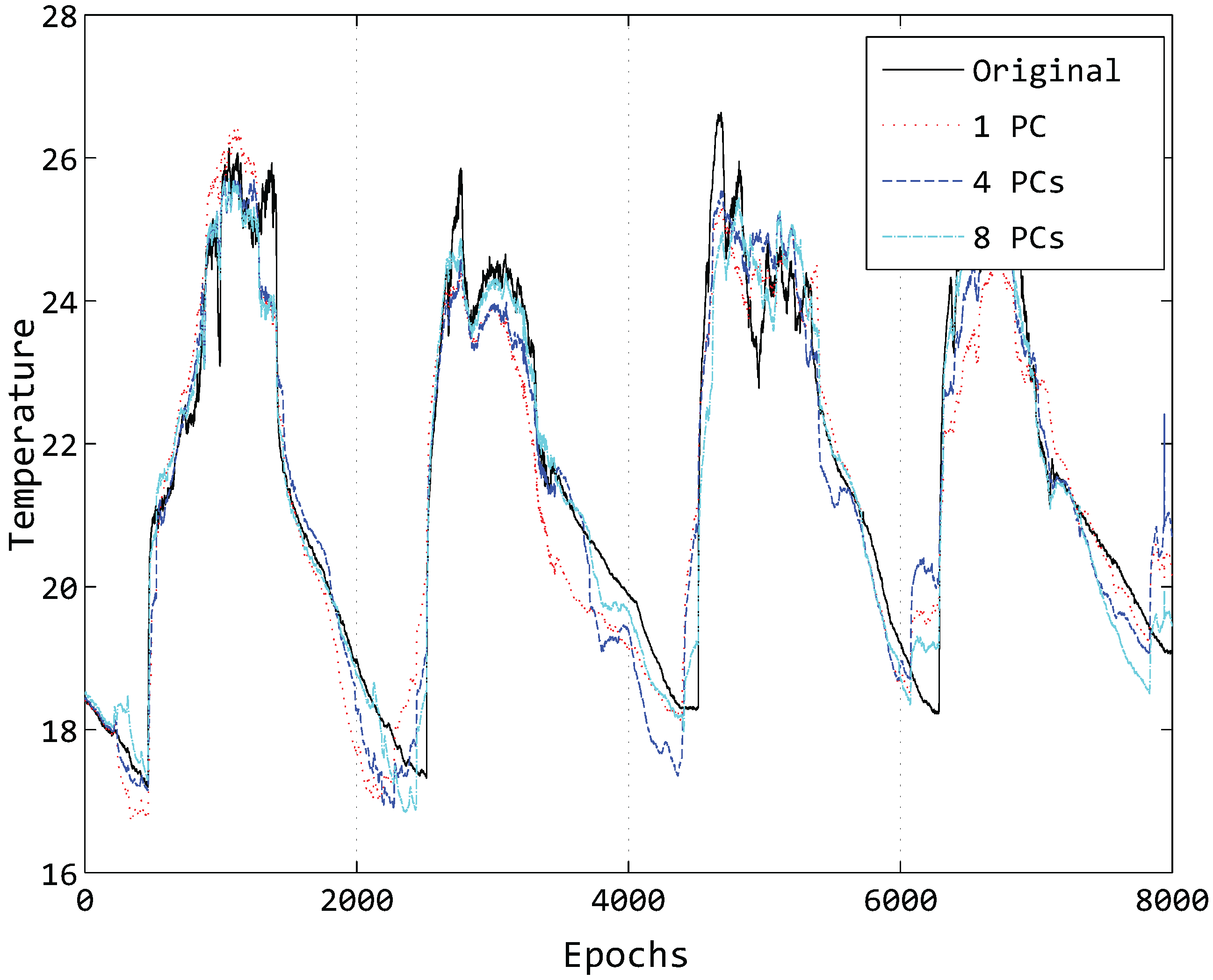

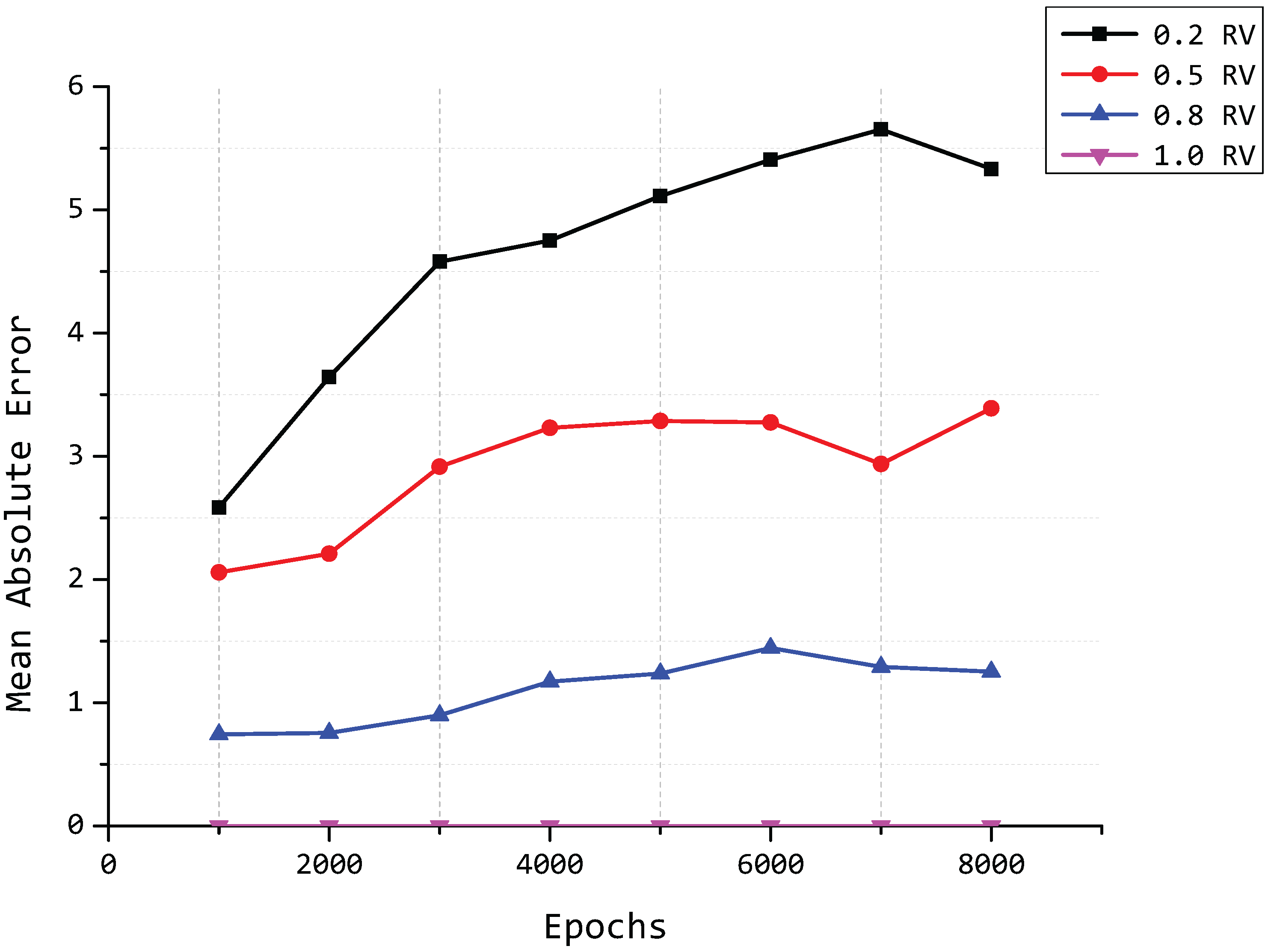

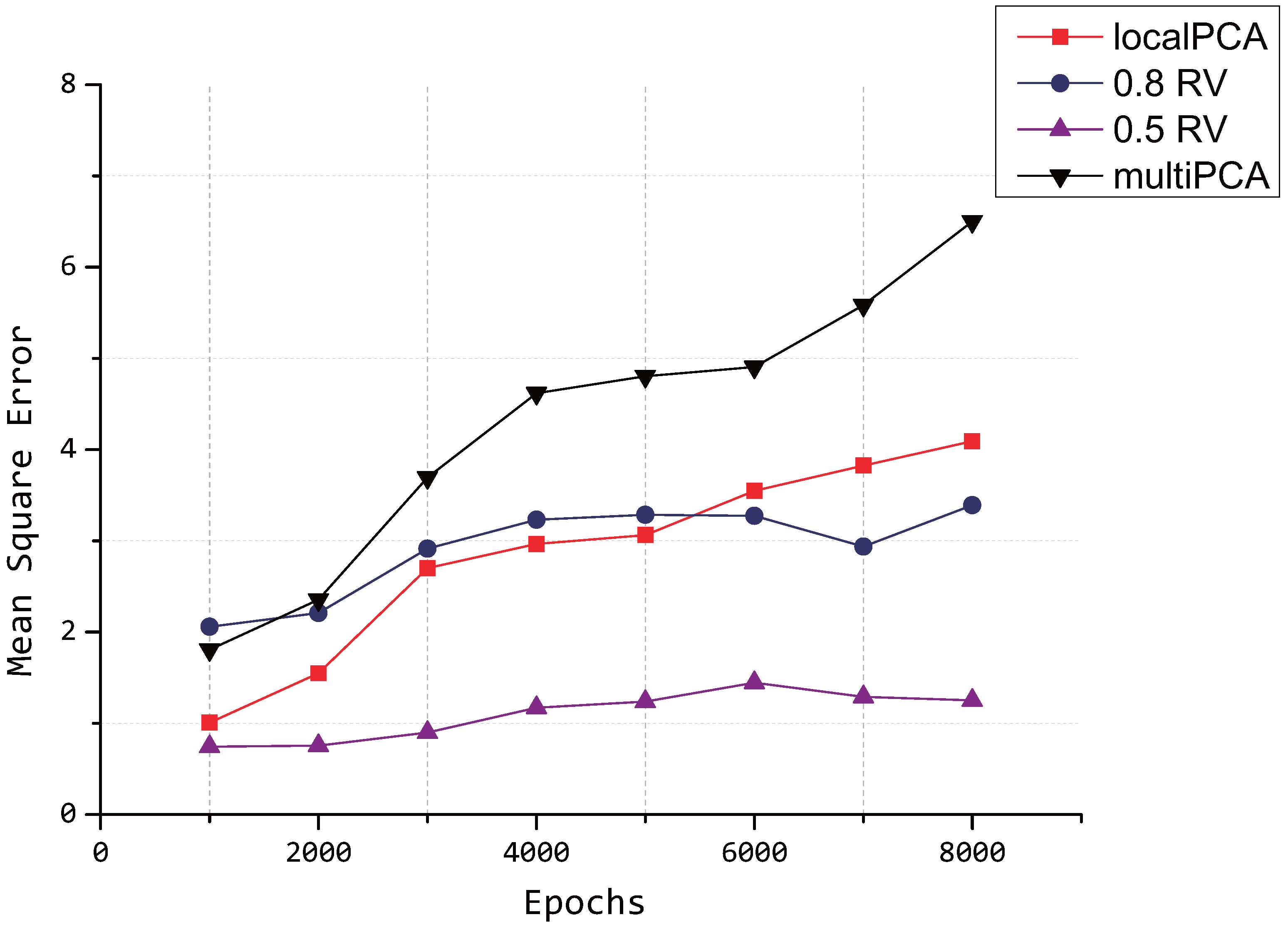

5.3. Compression Accuracy

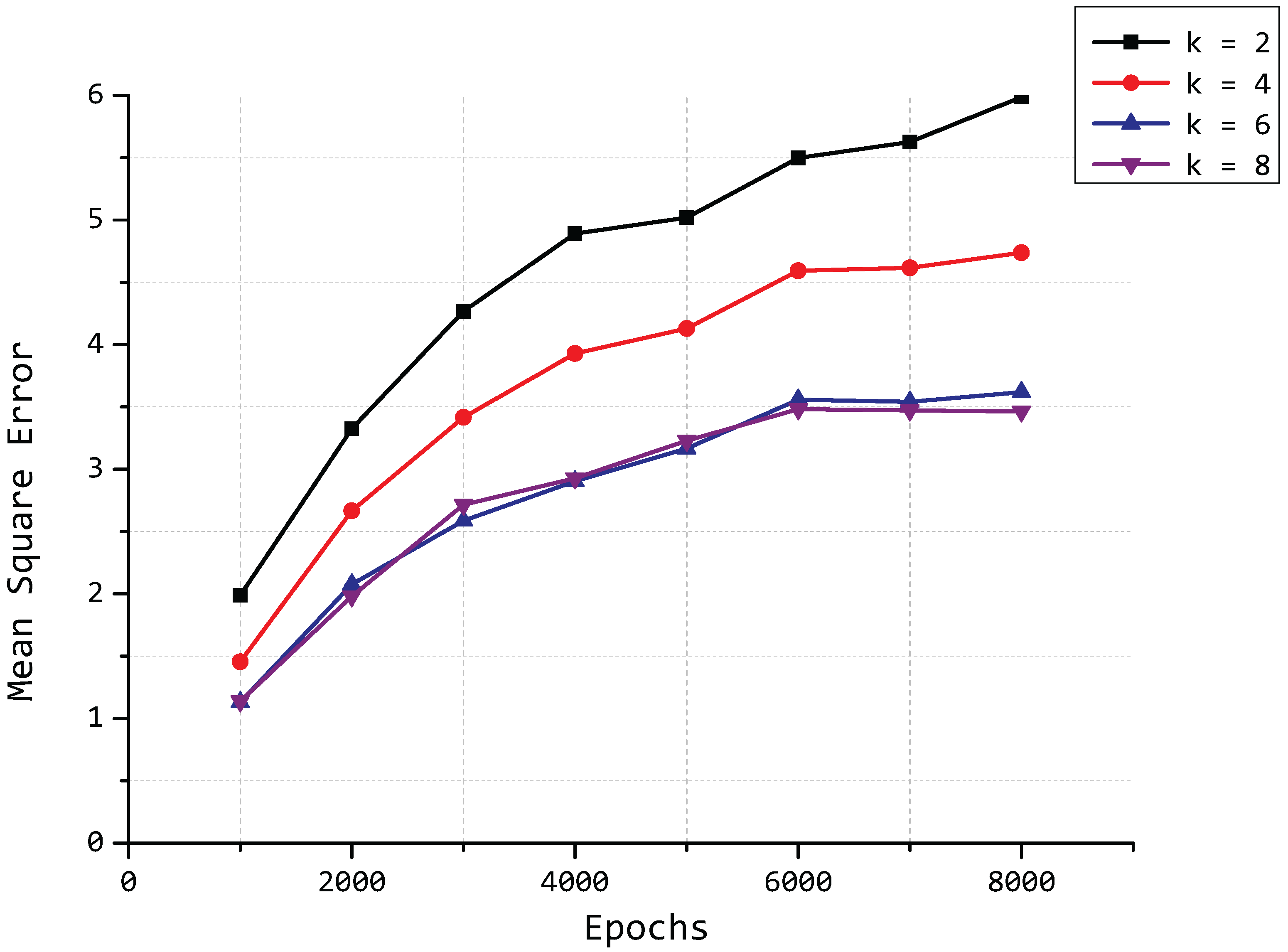

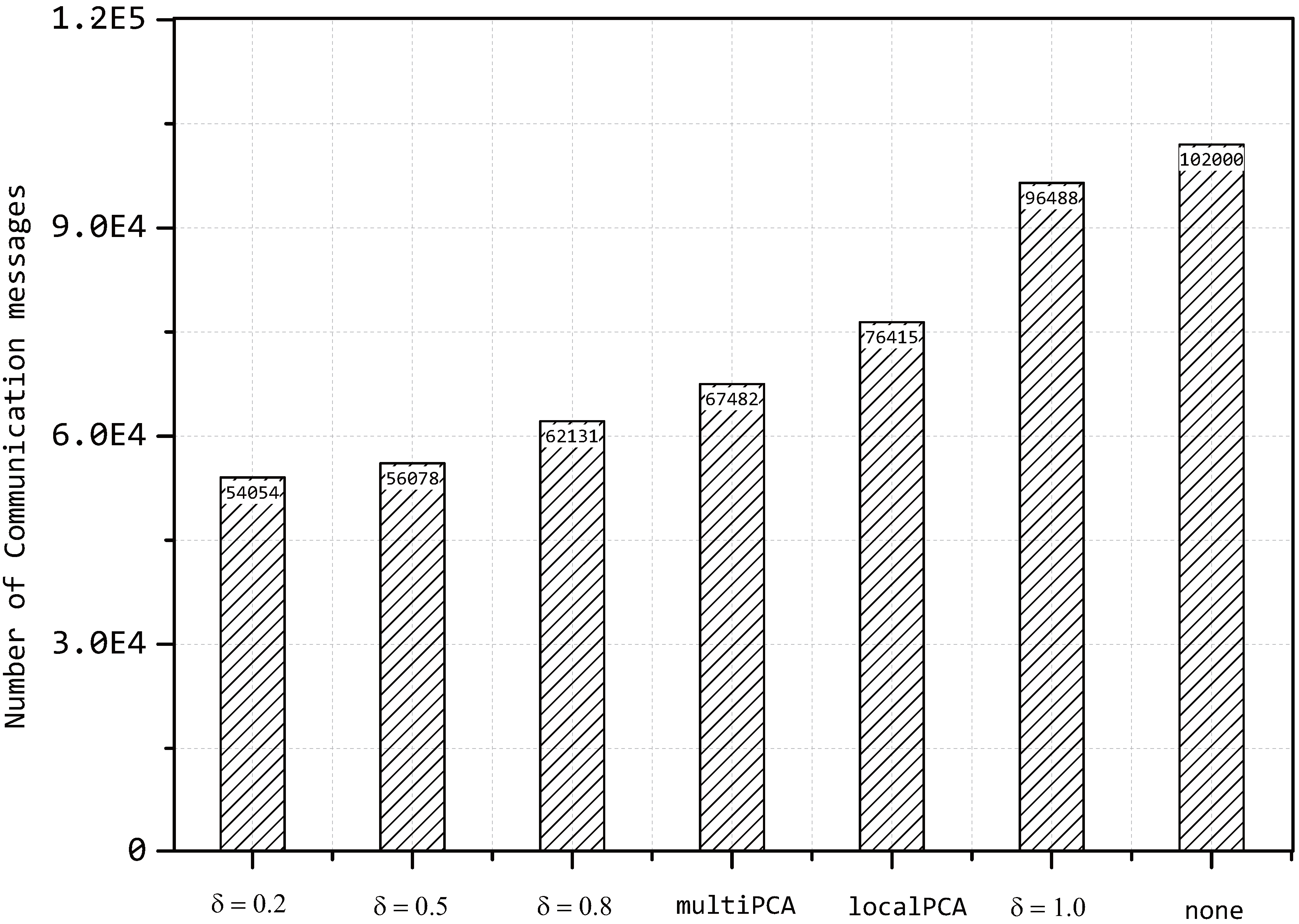

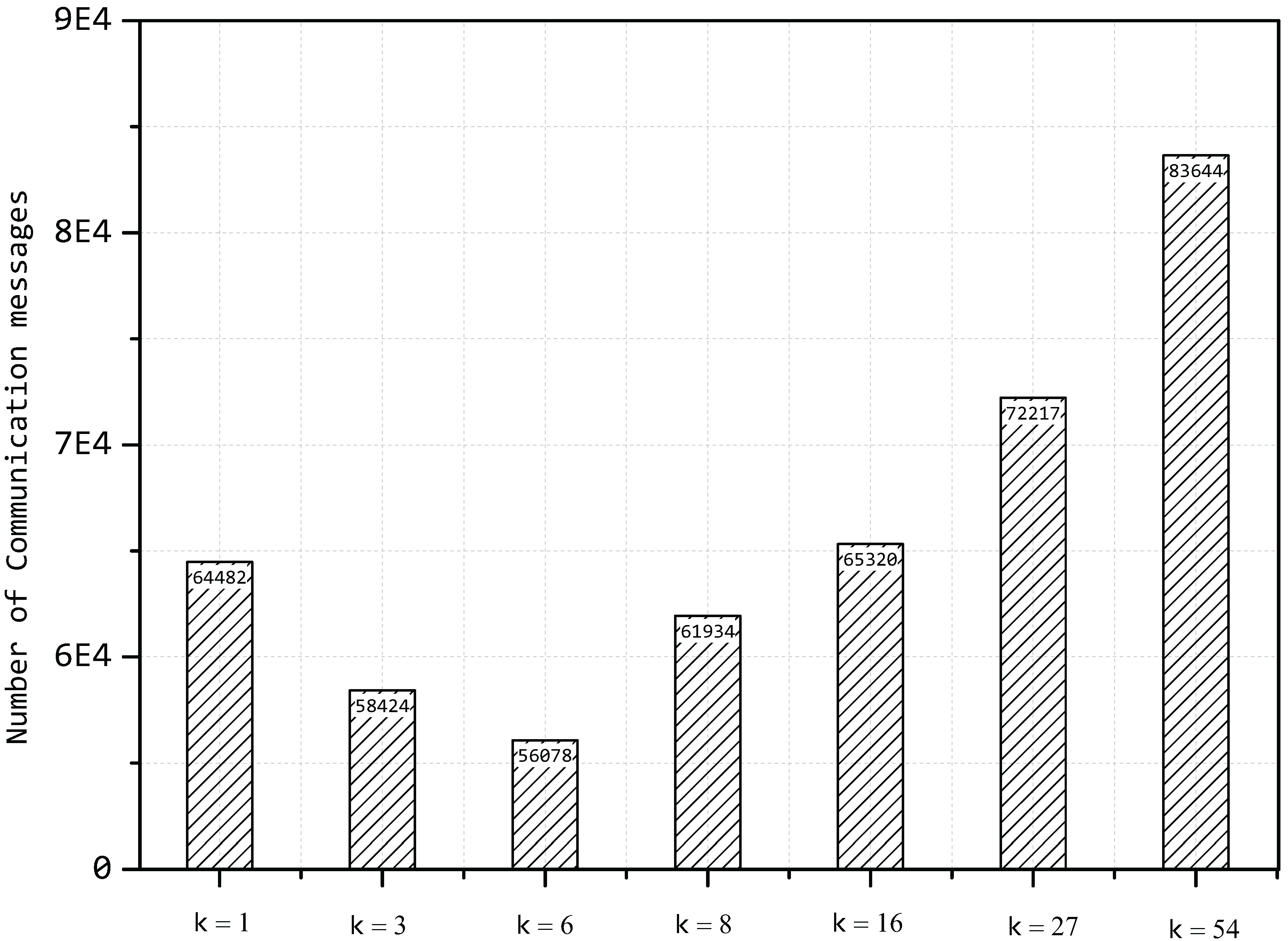

5.4. Compression Ratio

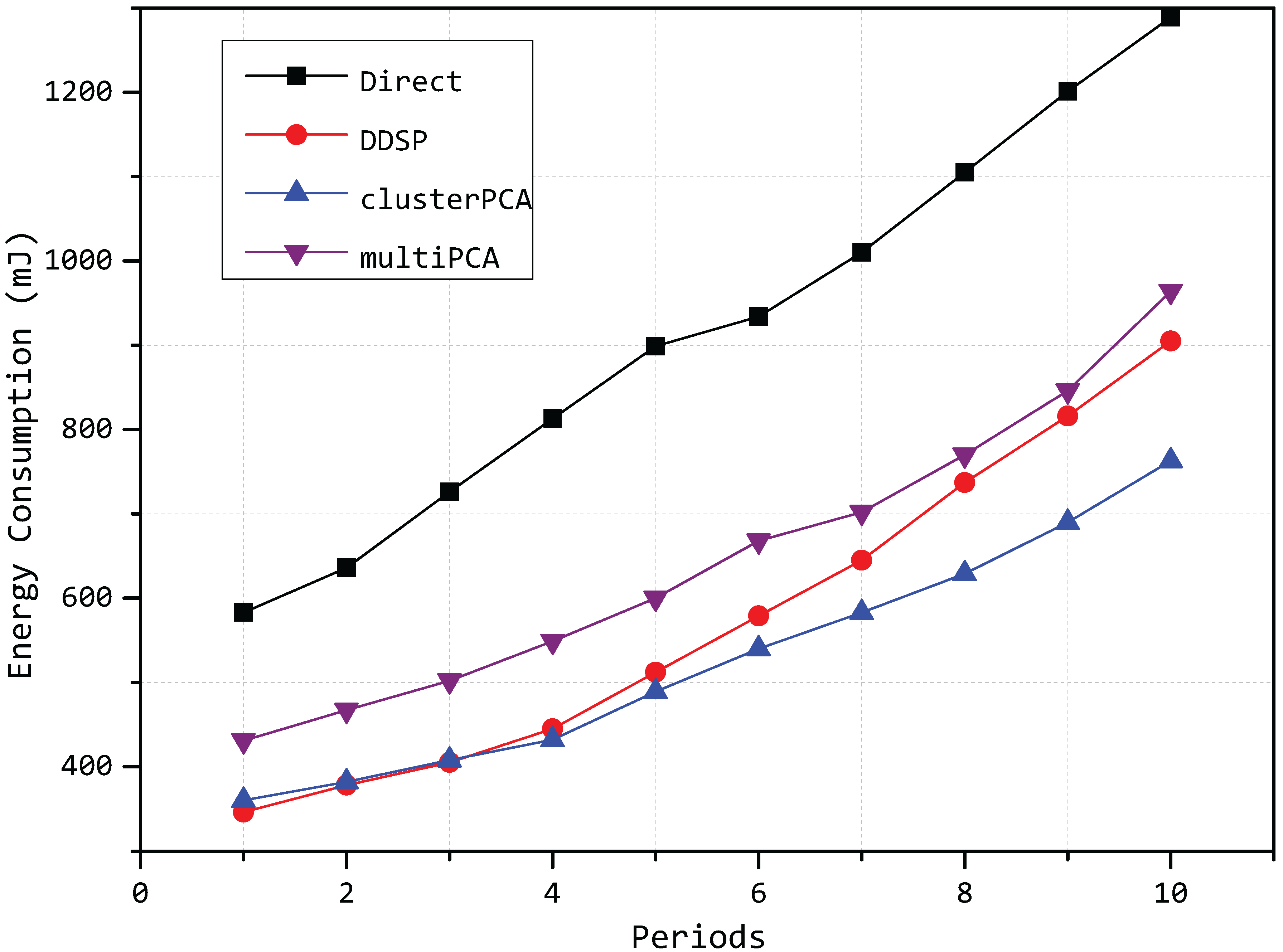

5.5. Energy Efficiency

| Model | Periods |
|---|---|
| Our proposed model | 14 |
| Same model with DDSP cluster head selection strategy | 11 |
| Multi-PCA model | 9 |
| Based on a multi-hop route tree | 7 |

6. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Srisooksai, T.; Keamarungsi, K.; Lamsrichan, P.; Araki, K. Practical data compression in wireless sensor networks: A survey. J. Netw. Comput. Appl. 2012, 35, 37–59.

- Safa, H.; Moussa, M.; Artail, H. An energy efficient Genetic Algorithm based approach for sensor-to-sink binding in multi-sink wireless sensor networks. Wirel. Netw. 2014, 20, 177–196.

- Jolliffe, I. Principal Component Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2002.

- Anagnostopoulos, C.; Hadjiefthymiades, S. Advanced Principal Component-Based Compression Schemes for Wireless Sensor Networks. ACM Trans. Sens. Netw. 2014, 11, 1–34.

- Anagnostopoulos, C.; Hadjiefthymiades, S.; Georgas, P. PC3: Principal Component-based Context Compression: Improving energy efficiency in wireless sensor networks. J. Parallel Distrib. Comput. 2012, 72, 155–170.

- Borgne, Y.L.; Bontempi, G. Unsupervised and Supervised Compression with Principal Component Analysis in Wireless Sensor Networks. In Proceedings of the 13th ACM International Conference on Knowledge Discovery and Data Mining Workshop on Knowledge Discovery from Data, San Jose, CA, USA, 12–15 August 2007; pp. 94–103.

- Borgne, Y.L.; Raybaud, S.; Bontempi, G. Distributed Principal Component Analysis for Wireless Sensor Networks. Sensors 2008, 8, 4821–4850.

- Rooshenas, A.; Rabiee, H.R.; Movaghar, A.; Naderi, M.Y. Reducing the data transmission in wireless sensor networks using the principal component analysis. In Proceedings of the 2010 Sixth International Conference on Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP), Brisbane, Australia, 7–10 December 2010; pp. 133–138.

- Chen, F.; Li, M.; Wang, D.; Tian, B. Data Compression through Principal Component Analysis over Wireless Sensor Networks. J. Comput. Inf. Syst. 2013, 9, 1809–1816.

- Liu, Z.; Xing, W.; Wang, Y.; Lu, D. Hierarchical Spatial Clustering in Multihop Wireless Sensor Networks. Int. J. Distrib. Sens. Netw. 2013, 2013.

- Bandyopadhyay, S.; Coyle, E.J. An Energy Efficient Hierarchical Clustering Algorithm for Wireless Sensor Networks. In Proceedings of the 22nd Annual Joint Conference of the IEEE Computer and Communications Societies, San Francisco, CA, USA, 30 March–3 April 2003.

- Dardari, D.; Conti, A.; Buratti, C. Mathematical evaluation of environmental monitoring estimation error through energy-efficient wireless sensor networks. IEEE Trans. Mob. Comput. 2007, 6, 790–802.

- Hung, C.; Peng, W.; Lee, W. Energy-Aware Set-Covering Approaches for Approximate Data Collection in Wireless Sensor Networks. IEEE Trans. Knowl. Data Eng. 2012, 24, 1993–2007.

- Bahrami, S.; Yousefi, H.; Movaghar, A. DACA: Data-Aware Clustering and Aggregation in Query-Driven Wireless Sensor Networks. In Proceedings of the 21st International Conference on Computer Communications and Networks, ICCCN 2012, Munich, Germany, 30 July–2 August 2012; pp. 1–7.

- Meka, A.; Singh, A.K. Distributed Spatial Clustering in Sensor Networks. In Proceedings of the Advances in Database Technology - EDBT 2006, 10th International Conference on Extending Database Technology, Munich, Germany, 26–31 March 2006; pp. 980–1000.

- Abdi, H.; Williams, L.J. Principal component analysis. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 433–459.

- Available online: http://scipy-lectures.github.io/packages/scikit-learn/ (accessed on 15 November 2013).

- Jeong, D.H.; Ziemkiewicz, C.; Ribarsky, W. Understanding Principal Component Analysis Using a Visual Analytics Tool; Charlotte Visualization Center: Charlotte, NC, USA, 2009.

- Heinzelman, W.R.; Chandrakasan, A.; Balakrishnan, H. Energy-Efficient Communication Protocol for Wireless Microsensor Networks. In Proceedings of the 33rd Annual Hawaii International Conference on System Sciences (HICSS-33), Maui, HI, USA, 4–7 January 2000.

- Ghazisaidi, N.; Assi, C.M.; Maier, M. Intelligent wireless mesh path selection algorithm using fuzzy decision making. Wirel. Netw. 2012, 18, 129–146.

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006.

- Jurek, A.; Nugent, C.; Bi, Y.; Wu, S. Clustering-Based Ensemble Learning for Activity Recognition in Smart Homes. Sensors 2014, 14, 12285–12304.

- Jiang, P.; Li, S. A Sensor Network Data Compression Algorithm Based on Suboptimal Clustering and Virtual Landmark Routing Within Clusters. Sensors 2010, 10, 9084–9101.

- Intel Lab Data webpage. Available online: http://db.csail.mit.edu/labdata/labdata.html (accessed on 2 June 2004).

- Zordan, D.; Quer, G.; Zorzi, M. Modeling and Generation of Space-Time Correlated Signals for Sensor Network Fields. In Proceedings of the Global Telecommunications Conference (GLOBECOM 2011), IEEE, Houston, TX, USA, 5–9 December 2011; pp. 1–6.

- Arthur, D.; Vassilvitskii, S. K-Means++: The Advantages of Careful Seeding. In Proceedings of the Eighteenth Annual ACM-SIAM Symposium on Discrete Algorithms, SODA 2007, New Orleans, LA, USA, 7–9 January 2007; pp. 1027–1035.

- Liang, J.; Wang, J.; Cao, J.; Chen, J.; Lu, M. An Efficient Algorithm for Constructing Maximum lifetime Tree for Data Gathering Without Aggregation in Wireless Sensor Networks. In Proceedings of the INFOCOM 2010 29th IEEE International Conference on Computer Communications, Joint Conference of the IEEE Computer and Communications Societies, San Diego, CA, USA, 15–19 March 2010; pp. 506–510.

- Quer, G.; Masiero, R.; Pillonetto, G. Sensing, compression, and recovery for WSNs: Sparse signal modeling and monitoring framework. IEEE Trans. Wirel. Commun. 2012, 11, 3447–3461.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Yin, Y.; Liu, F.; Zhou, X.; Li, Q. An Efficient Data Compression Model Based on Spatial Clustering and Principal Component Analysis in Wireless Sensor Networks. Sensors 2015, 15, 19443-19465. https://doi.org/10.3390/s150819443