1. Introduction

In recent decades, the rapid advancement of automated and noninvasive techniques has transformed the methods employed for species and habitat monitoring, and these techniques have swiftly become standard tools in ecology [1]. These approaches allow researchers to expand both the spatial and temporal scales of their investigations, facilitating the collection of substantial datasets. Nevertheless, the large datasets often generated through acoustic monitoring present challenges for human surveyors because manual processing of such data is time-consuming, laborious, and difficult. To circumvent these challenges, machine learning algorithms have emerged as powerful solutions for efficiently processing large acoustic datasets [2,3,4].

Among the techniques for biomonitoring is passive acoustic monitoring, which has proven to be a useful tool for monitoring various vocally active groups, including anurans, mammals, insects, and birds [5,6]. One of the main disadvantages of passive acoustic monitoring surveys is that they can easily generate a vast number of recordings, which makes visual inspection of or manual listening to the audio files very challenging or even impossible (but see [7]). The analysis of audio recordings can be automated via machine learning algorithms, which have become essential for managing large volumes of acoustic data [4,8]. State-of-the-art machine learning models can provide highly accurate audio recognition [4,9]. However, the complexity of developing such models, for example convolutional neural networks [4], can deter their implementation by ecologists, managers, and the public owing to the significant level of informatics and engineering background needed [9]. Fortunately, a new generation of user-friendly and readily accessible machine learning approaches has recently emerged, potentially enhancing the efficacy of automated audio recognition and opening the door to automated sound recognition for managers and researchers with limited machine learning backgrounds (e.g., [10,11,12,13,14]).

Among these advances is BirdNET, which is a user-friendly and a ready-to-use machine learning tool that can provide multispecies-labeled output [

15] (for a review of its applications, see [

16]). BirdNET employs a deep neural network for the automated detection and classification of wildlife vocalizations [

15], and the last updated version (v2.4) includes sound recognizers for more than 6500 wildlife species. BirdNET can be easily accessed through various user-friendly interfaces, including a mobile application (see applications of the BirdNET App in [

17]) and a web-based platform (BirdNET-API; see [

18]). Scientists usually run batch analyses via BirdNET on a GUI interface (e.g., Windows) or via Python through the BirdNET Analyzer [

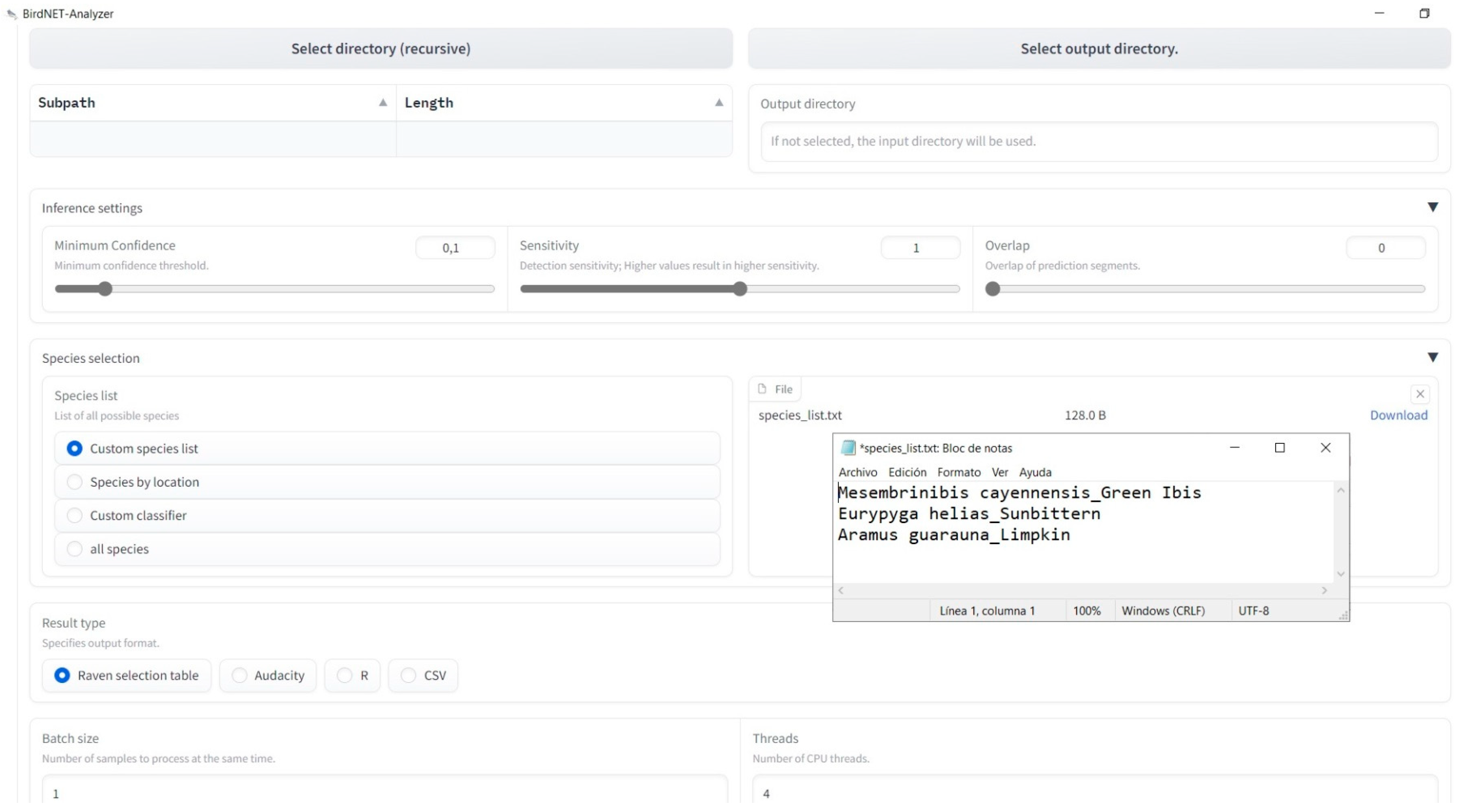

19,

20], which is openly accessible on GitHub (

https://github.com/kahst/BirdNET-Analyzer, 28 February 2025). The last version of BirdNET also allows users of Raven Pro, an audio software developed by the Cornell Lab of Ornithology [

21], to run BirdNET from that software.

In BirdNET, audio recordings are divided into 3-s segments, and multispecies predictions of wildlife species can be made for each segment [15]. BirdNET predictions are accompanied by a quantitative confidence score that ranges from 0 to 1, which reflects the model’s confidence that a given prediction is correct [22]. Users can select a threshold value so that BirdNET outputs are filtered at a desired confidence score. Setting a high confidence score threshold increases the proportion of true positives (correct identifications) in the output but at the cost of reducing the number of predictions reported. Our current knowledge of how the confidence score threshold impacts BirdNET performance is limited to a few species and biomes (see [15]; reviewed by [16]), but it is known that the optimal threshold varies greatly between species and study areas [14,19,23,24].
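The thresholding step described above can be sketched as follows. This is a minimal illustration and not BirdNET's own code: the `Detection` record, the `filter_by_threshold` helper, the per-species thresholds, and the fallback value of 0.5 are all hypothetical and chosen only for the example.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One prediction for one 3-s segment (hypothetical record layout)."""
    recording: str     # recording identifier
    start_s: float     # segment start within the recording, in seconds
    species: str       # predicted species label
    confidence: float  # confidence score in [0, 1]


def filter_by_threshold(detections, thresholds, fallback=0.5):
    """Keep detections whose score reaches the species-specific threshold.

    `thresholds` maps species name -> minimum confidence score; species
    without an entry use `fallback` (an arbitrary value for this sketch).
    """
    return [
        d for d in detections
        if d.confidence >= thresholds.get(d.species, fallback)
    ]


# Illustrative values only; these are not results from the study.
detections = [
    Detection("rec_001.wav", 0.0, "Limpkin", 0.35),
    Detection("rec_001.wav", 3.0, "Limpkin", 0.92),
    Detection("rec_001.wav", 3.0, "Sunbittern", 0.61),
    Detection("rec_002.wav", 12.0, "Green Ibis", 0.48),
]
thresholds = {"Limpkin": 0.80, "Sunbittern": 0.60}

kept = filter_by_threshold(detections, thresholds)
print(len(kept), "of", len(detections), "detections kept")
```

Raising a threshold can only shrink the retained set, which is the trade-off noted above: fewer false positives, but also fewer predictions reported.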

BirdNET is a promising tool, but its effectiveness for bird monitoring has yet to be extensively assessed (see [25]). For example, a recent review revealed that BirdNET studies (including gray literature) have thus far focused on species inhabiting North America or Europe [16], likely because the first version of the software included only species from these regions [15]. However, the latest update of BirdNET (v2.4, June 2023) includes several species from the Southern Hemisphere, which offers new opportunities for expanding the use of BirdNET to the monitoring of tropical bird species. The effectiveness of BirdNET in correctly identifying a few tropical bird species has been recently assessed (see [26]), but its ability to detect the presence of tropical birds has never been assessed.

Here, we aimed to (i) assess the ability of BirdNET to detect the presence of the three target species in sound recordings, (ii) estimate the precision of BirdNET in correctly identifying bird vocalizations, and (iii) determine the optimal confidence score threshold of each species, which may be used as a reliable criterion for considering only BirdNET detections with a high probability of being correct. Additionally, we estimated the computing time needed for scanning a large acoustic dataset collected over a complete annual cycle at three different stations (24,681 15-min recordings analyzed) and the amount of human time needed for verifying the output. Finally, we employed BirdNET detections above the optimal confidence score threshold to (iv) describe diel and seasonal changes in the vocal activity of the three considered species over a year and, therefore, improve our knowledge of the life history of these little-studied species. Our goal was to stimulate further research using BirdNET or other automated audio processing software and to better understand diel and annual variations in the vocal activity of tropical birds, an aspect that has been rarely studied (but see [10,27,28,29]).

4. Discussion

In this study, we validated the use of BirdNET with default values for detecting the presence of the three target species in sound recordings: for all three species, more than 80% of the recordings with known presences were also annotated by BirdNET. These values are similar to those reported for temperate bird species (e.g., [14]). Although we were unable to provide a robust assessment of the ability of BirdNET to detect vocalizations by the target species, our experience from reviewing the test dataset allows us to share some insights as to when BirdNET more frequently failed to detect the presence of the species. Most of the false negatives (recordings with known presences that were not detected by BirdNET) were recordings with few vocalizations by the target species or with individuals vocalizing far from the recorder (according to the sound level of the recorded vocalizations). Both factors may reduce the ability of BirdNET to detect and correctly identify bird vocalizations (see [18,24]). Overall, BirdNET showed high precision, with a limited number of mislabeled recordings (i.e., false positives; Table 1). We are aware that the extended recording length (15 min) used in the study may have biased the percentage of presences detected using our technique. The percentage of presences detected would likely have been lower with shorter recordings, because the probability of detecting at least a single vocalization is larger in longer recordings. In addition, our findings revealed, for the first time, that BirdNET has a compelling ability to detect the presence of tropical bird species in single recordings, making it suitable for describing bird communities, for performing occupancy modeling studies, for which detection is usually needed only at hourly or daily scales [19], or for describing seasonal patterns of vocal activity. Here, as a preliminary assessment, we evaluated the ability of BirdNET to detect a species’ presence and to obtain new ecological insights regarding the target species (see also [26]). The approach we followed was appropriate for reaching our goals with the three species considered, but further research may require the use of different approaches to assess the performance of BirdNET under different circumstances. These approaches may require assessing the recall rate, defined as the percentage of vocalizations automatically detected by BirdNET. Such validations may expand the use of BirdNET beyond simple presence/absence monitoring.
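The effect of recording length mentioned above can be illustrated with a simple calculation. The sketch below assumes, purely for illustration, that each 3-s segment independently yields a detection of the species with the same probability; real vocalizations are usually clustered in time, so this is only a rough intuition. The function name and the per-segment probability of 0.01 are hypothetical.

```python
def prob_at_least_one(p_segment, recording_s, segment_s=3.0):
    """Probability that at least one segment in a recording yields a detection.

    Assumes (for illustration) that each segment independently yields a
    detection with the same probability `p_segment`.
    """
    if not 0.0 <= p_segment <= 1.0:
        raise ValueError("p_segment must be in [0, 1]")
    n_segments = int(recording_s // segment_s)
    return 1.0 - (1.0 - p_segment) ** n_segments


# Hypothetical per-segment probability; compare 1-, 5-, and 15-min recordings.
for minutes in (1, 5, 15):
    p = prob_at_least_one(0.01, minutes * 60)
    print(f"{minutes:>2} min: {p:.2f}")
```

Under this assumption, the probability of registering a presence rises steadily with the number of segments, which is why presence-detection percentages obtained from 15-min recordings are not directly comparable with those from shorter files.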

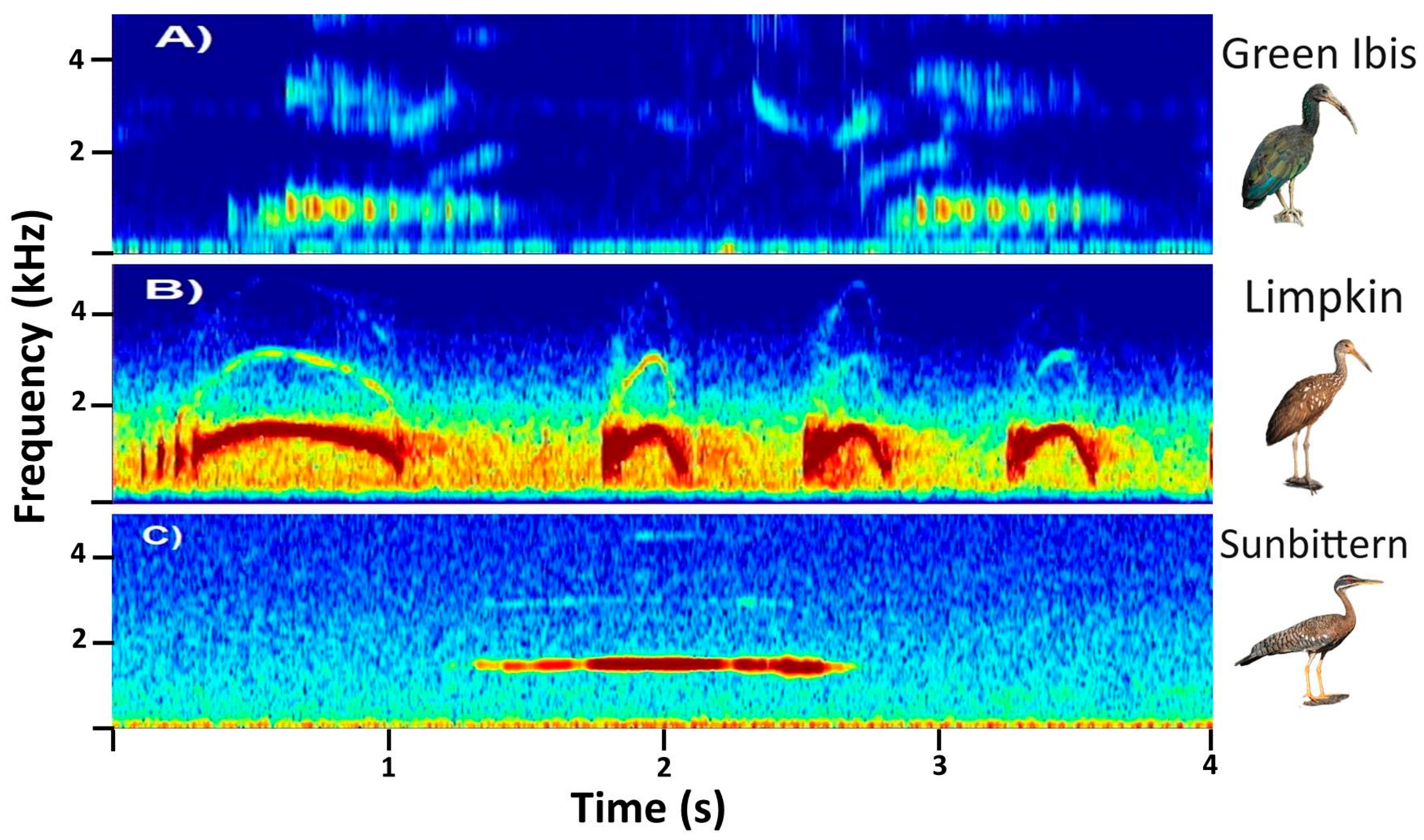

In this study, we demonstrated the ability of BirdNET to correctly identify the vocalizations of three Neotropical bird species from soundscape recordings (collected with omnidirectional microphones; precision > 77% for all species). Surprisingly, the mean precision reported in our study (86.2%) surpassed the overall precision reported for 984 European and North American bird species via focal (i.e., species-specific) recordings (mean precision of 79.0% [15]). The distinctive, relatively simple, and relatively unvaried vocalizations of the selected species may partly contribute to the high ability of BirdNET to correctly identify such vocalizations. Nonetheless, it is worth highlighting that the precision values reported here are very similar to those reported for BirdNET in a prior study with three other tropical passerine birds [26]. We found variation among species, with precisions ranging from 77.6% for the Limpkin to 97.9% for the Sunbittern. These findings are consistent with previous studies stating that BirdNET’s precision may vary significantly among species and even within species between studies. For example, the BirdNET precision for correctly identifying the Common Raven (Corvus corax) was 0.29 in one study [19], 0.66 in another [45], and 0.94 in a third [46].
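For readers less familiar with the metric, precision is the share of verified detections that were correct, TP / (TP + FP). The sketch below shows the calculation with made-up verification counts and placeholder species names; none of the numbers come from the study, and the unweighted mean across species is only one possible way of summarizing per-species values.

```python
def precision(true_positives, false_positives):
    """Share of verified detections that were correct identifications."""
    total = true_positives + false_positives
    if total == 0:
        raise ValueError("no verified detections")
    return true_positives / total


# Hypothetical verification counts (correct, incorrect) per species.
verified = {
    "species_a": (90, 10),
    "species_b": (45, 5),
    "species_c": (70, 30),
}
per_species = {name: precision(tp, fp) for name, (tp, fp) in verified.items()}

# Unweighted mean across species (an assumption of this sketch).
mean_precision = sum(per_species.values()) / len(per_species)

for name, value in per_species.items():
    print(f"{name}: {value:.1%}")
print(f"mean: {mean_precision:.1%}")
```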

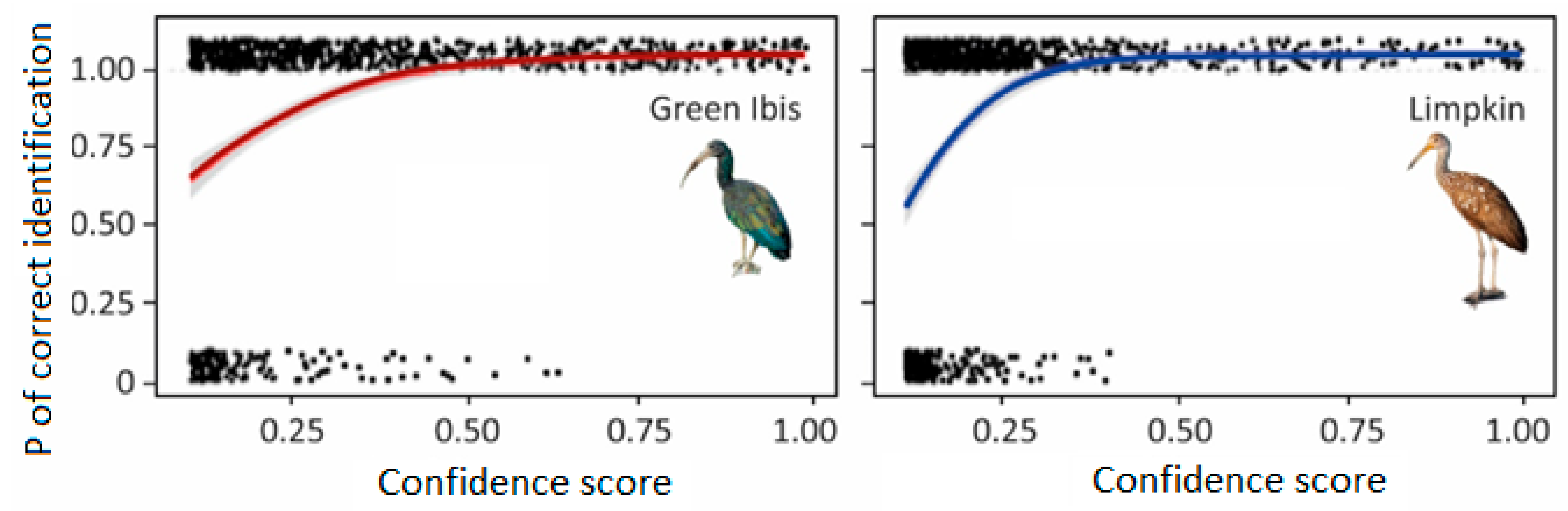

In this study, we corroborated the ability of BirdNET to scan large acoustic datasets and to provide valuable insights into ecological processes. To achieve this, we estimated the optimal confidence score threshold for each of the three monitored species (following [13]; see a similar approach for birds in [14,22]). This approach allowed us to consider only predictions with a high probability (95%) of being correct to describe the diel and annual patterns of vocal behavior for the three target species. Nonetheless, the use of high confidence score thresholds decreases the percentage of presences and vocalizations detected; therefore, future studies should further examine the impact of using variable confidence score thresholds to detect bird vocalizations (see case studies for two and three bird species in [14,26]).
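One common way to derive a threshold tied to a target probability of correctness is to fit a logistic regression of verification outcome (correct or incorrect) on confidence score and then solve for the score at which the fitted probability reaches the target. The sketch below illustrates that idea under stated assumptions; it is not necessarily the exact procedure of [13] or of this study. It regresses on the raw score (some authors transform the score first), uses a plain Newton fit, and runs on invented verification data. All function names are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic(scores, correct, n_iter=50):
    """Fit P(correct) = sigmoid(b0 + b1 * score) by Newton's method."""
    b0 = b1 = 0.0
    for _ in range(n_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(scores, correct):
            p = sigmoid(b0 + b1 * x)
            w = p * (1.0 - p)
            g0 += y - p          # gradient w.r.t. intercept
            g1 += (y - p) * x    # gradient w.r.t. slope
            h00 += w
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        if det <= 1e-12:         # degenerate data (e.g., perfect separation)
            break
        d0 = (h11 * g0 - h01 * g1) / det
        d1 = (h00 * g1 - h01 * g0) / det
        b0 += d0
        b1 += d1
        if abs(d0) + abs(d1) < 1e-10:
            break
    return b0, b1


def threshold_for(target_prob, b0, b1):
    """Score at which the fitted probability of correctness equals target_prob."""
    return (math.log(target_prob / (1.0 - target_prob)) - b0) / b1


# Invented verification data: confidence score and whether the detection was correct.
scores = [0.10, 0.15, 0.20, 0.25, 0.30, 0.35, 0.40,
          0.45, 0.50, 0.55, 0.60, 0.70, 0.80, 0.90]
correct = [0, 0, 0, 0, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1]

b0, b1 = fit_logistic(scores, correct)
threshold_95 = threshold_for(0.95, b0, b1)
print(f"score threshold for 95% probability of being correct: {threshold_95:.2f}")
```

If the computed threshold falls above 1, no attainable score reaches the target probability for that species, and either a lower target or more verification data would be needed.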

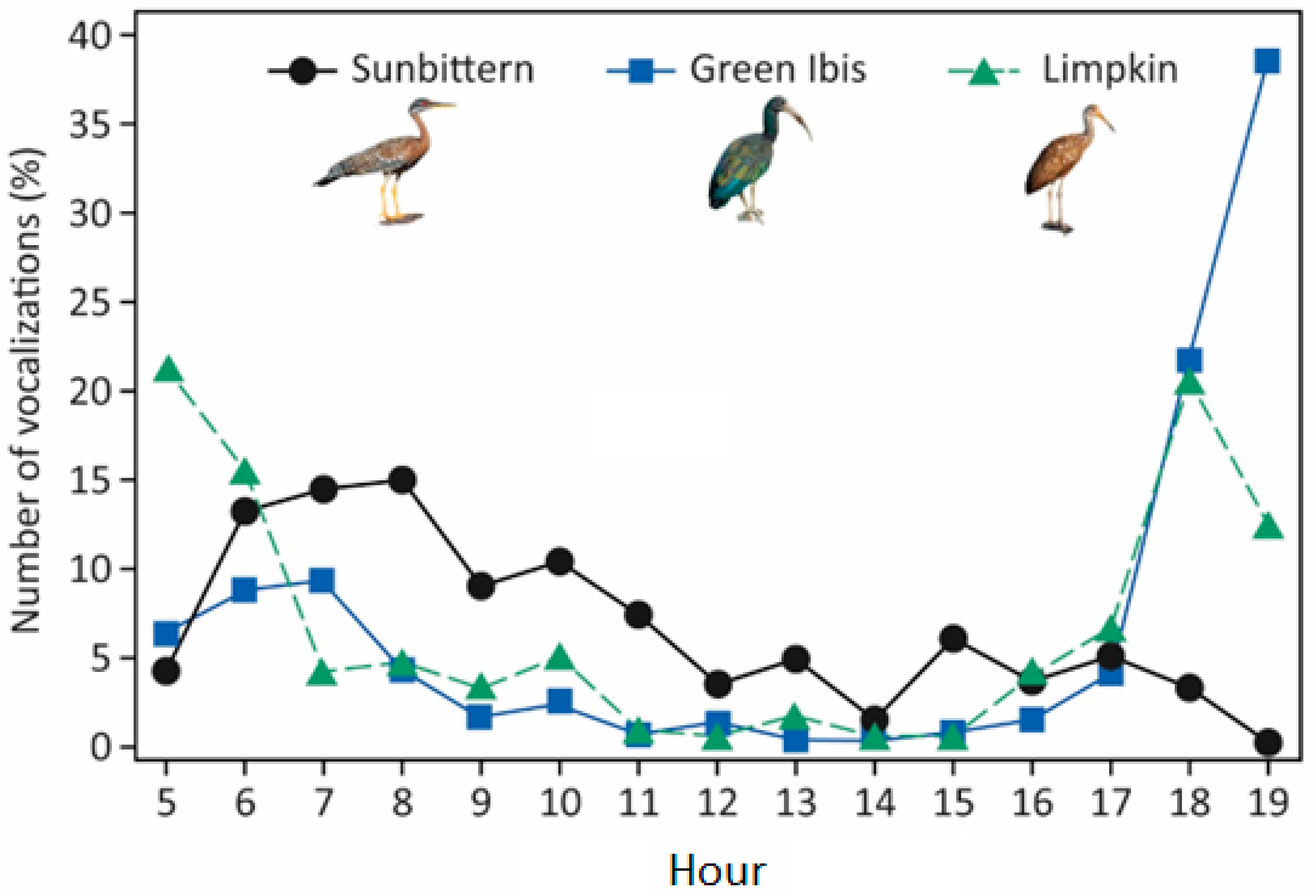

The Green Ibis and the Limpkin exhibited concentrated vocal activity during the crepuscular periods, with limited output during the day, whereas the Sunbittern showed pronounced vocal activity around sunrise, which remained relatively constant during the day and decreased toward sunset. The number of vocalizations detected would likely be greater if we had extended the study into the night, since the crepuscular patterns found for most species suggest that some of them may also vocalize at night, as has already been found for other nonpasserine diurnal species in the study area (see [27]). The described patterns of vocal activity align with anecdotal descriptions in field guides. For example, Hilty [35] described the Green Ibis as being mostly silent while foraging during the day but as vocalizing loudly at sunset when the birds fly into or leave the roost, potentially explaining the crepuscular vocal behavior found in our study. Similarly, Ingalls [47] described the Limpkin as calling more often in the early morning and evening, with vocal activity reduced at midday. Finally, the diel pattern found for the Sunbittern also corroborates prior descriptions, such as that by Stiles and Skutch [48], who reported that this species is most frequently heard in the morning. Further research should explore the function of vocalizations for the studied species and investigate the relationship between daily vocal activity and climate conditions, an aspect that remains relatively understudied for tropical birds (but see, e.g., [27,49,50]).
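Diel and seasonal patterns of this kind are typically obtained by binning the retained detections by hour of day and by month. The sketch below shows one way to do that; the input layout (recording start time plus segment offset), the function name, and the timestamps are hypothetical. In practice, counts would also need to be standardized by recording effort per hour and per month, which the sketch does not do.

```python
from collections import Counter
from datetime import datetime, timedelta


def vocal_activity(detections):
    """Count detections per hour of day and per month.

    `detections` is an iterable of (recording_start, offset_s) pairs, where
    `recording_start` is a datetime and `offset_s` is the segment start
    within the recording, in seconds (hypothetical input layout).
    """
    by_hour, by_month = Counter(), Counter()
    for recording_start, offset_s in detections:
        t = recording_start + timedelta(seconds=offset_s)
        by_hour[t.hour] += 1
        by_month[t.month] += 1
    return by_hour, by_month


# Made-up timestamps, only to show the input and output shapes.
detections = [
    (datetime(2021, 3, 14, 5, 50), 120.0),
    (datetime(2021, 3, 14, 5, 50), 897.0),  # falls in the 06:00 hour
    (datetime(2021, 7, 2, 17, 30), 33.0),
]
by_hour, by_month = vocal_activity(detections)
print(sorted(by_hour.items()))
print(sorted(by_month.items()))
```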

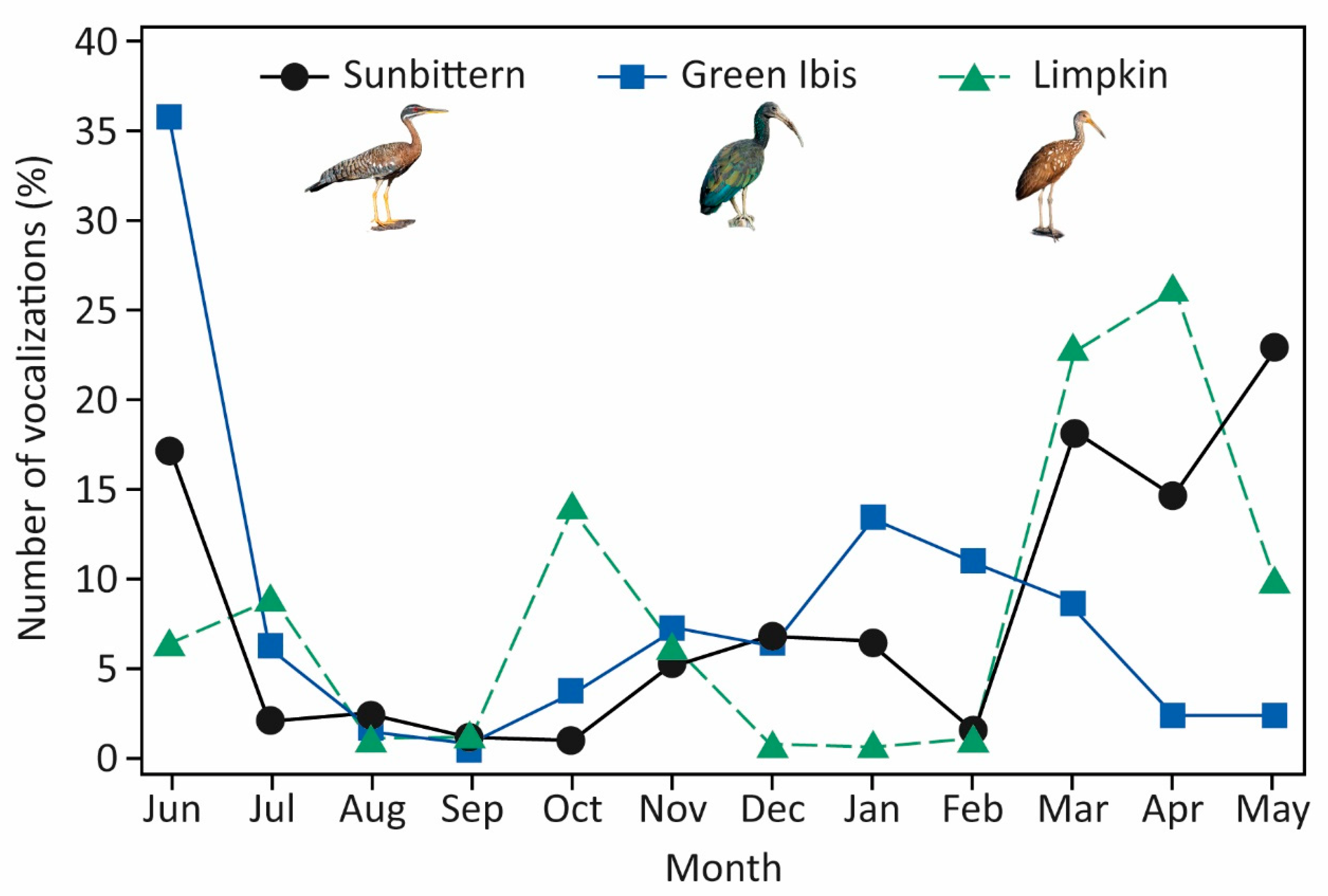

Despite seasonal changes in vocal activity, the three species were detected in every month in the study area, which suggests that they might be residents of the Brazilian Pantanal. Nonetheless, further research using more appropriate methods (e.g., GPS devices) to study the seasonal movements of the species in the study area could improve our knowledge regarding their migratory behavior. Our study also sheds light on the breeding schedules of the three monitored species in the Brazilian Pantanal, providing valuable insights into their natural history, knowledge of which is very limited (but see [33,36]). Previous research has suggested that limpkins vocalize primarily during pair bonding and territorial defense [36], whereas sunbitterns vocalize more frequently during nest defense interactions [41]; therefore, seasonal changes in their vocal activity may provide insight into their breeding periods. The breeding seasons for the Limpkin and the Sunbittern in the Brazilian Pantanal were similar and, according to the seasonal changes in vocal activity, seemed to occur between March and June, a period that corresponds to the receding season (April–June), when the water level starts to decline [51]. Seasonal changes in the vocal activity of the Green Ibis, which exhibited two periods of vocal activity (January–February, during the flooded period, and July, toward the end of the receding season), suggest that the species may have reproduced twice in the study area, since peaks of vocal activity are commonly associated with breeding attempts (i.e., mate attraction and territory defense) in birds. Nonetheless, we lack observational data to confirm whether the Green Ibis is indeed a double-brooded species, and further field studies are necessary to verify our assumption. The hypothesized breeding periods align with records from the study area, such as the two records of copulations of sunbitterns in the Pantanal wetland, which occurred during the dry season (May–August [52]), and from nearby regions, such as the breeding period hypothesized for the Green Ibis in Colombia and Panama (February–April [53]).

The annual patterns of vocal activity observed in our study differed significantly from those described in the Brazilian Pantanal for various insectivorous (e.g., [10,27,31]) and frugivorous bird species (e.g., [10,31]). The vocal activity of insectivorous and frugivorous species peaked at the beginning of the rainy season (September–October), coinciding with a period of abundant insects and fruits in the Brazilian Pantanal [54,55] driven by the onset of rainfall (in September of the studied year; Figure 2). Our findings suggest that aquatic birds in seasonally flooded ecosystems, such as the Brazilian Pantanal, may exhibit a delayed breeding phenology to mitigate the risk of nest damage caused by flood pulses during the rainy period, as has been observed for the Sunbittern [56]. Hancock et al. [53] proposed that the Green Ibis breeding season typically begins a few months after the onset of the rainy season. Breeding during the receding season, when there are still significant water bodies but at reduced levels, may enhance the foraging success of aquatic species and provide abundant food for chicks, as well as accessible mud for nest construction [41].