1. Introduction

Inflammation is a complex immune response to infection, injury, and other harmful stimuli that not only initiates certain biological processes but also facilitates interactions among various cells and molecules [1]. Acute inflammation serves as a vital physiological defense mechanism, whereas persistent inflammation accelerates cellular damage, disrupts tissue homeostasis, and contributes to the progression of major diseases, including cancer, cardiovascular diseases, autoimmune disorders, and neurodegenerative conditions [2]. This has prompted extensive investigation into alternative anti-inflammatory approaches with enhanced selectivity, and a deeper understanding of inflammation will facilitate the development and refinement of such strategies.

Pro-inflammatory peptides (PIPs) are crucial in the initiation, progression, and maintenance of inflammation. PIPs can induce cells to secrete multiple pro-inflammatory cytokines, such as TNF-α, IL-1, and IL-6 [3,4]. These cytokines form a positive feedback loop at inflammatory sites, further exacerbating the inflammatory response. Deeper research into the biological functions and mechanisms of PIPs is therefore important for the development of alternative anti-inflammatory therapeutic strategies [5], which makes the accurate prediction and identification of PIPs a critical research priority.

Algorithm-driven identification and prediction approaches can be cost-effective, time-efficient, and accurate. Several computational tools have been developed for predicting PIPs, primarily using sequence-based features. ProInflam implements SVM-based algorithms with hybrid features that combine dipeptide composition (DPC) with motif information derived from the Betts–Russell algorithm [6]. PIP-EL employs an ensemble of 50 independent random forest (RF) models [7]; its feature set includes amino acid composition (AAC), DPC, composition–transition–distribution (CTD), physicochemical properties (PCP), and amino acid index (AA index). ProIn-Fuse uses RF models to generate probabilistic scores from eight sequence encoding schemes: k-mer composition from profile (Kmer-pr), profile-based composition of the amino acid (PKA), k-mer composition of the amino acid (Kmer-ac), k-space amino acid pairs (KSAP), binary encoding (BE), amino acid index properties (AIP), N-terminal 5 and C-terminal 5 dipeptide composition (C5N5-DC), and structural features (SF) [8]. MultiFeatVotPIP integrates AAC, DPC, AA index, dipeptide deviation from expected mean (DDE), and grouped dipeptide composition (GDPC) features in a soft voting ensemble with five core learners (AdaBoost, XGBoost, RF, GBDT, and LightGBM) [9]. Other feature encoding schemes have also been evaluated, including composition of k-spaced amino acid group pairs (CKSAAGP), the composition (CTDC) and transition (CTDT) descriptors of CTD, grouped tripeptide composition (GTPC), and pseudo amino acid composition (PAAC). Notably, AA index and DDE features show superior discriminative capability for PIP identification. Detailed analyses have revealed distinct compositional and positional preferences of amino acid residues between PIPs and non-PIPs, highlighting the importance of sequence information for PIP identification. Various other machine learning algorithms have also been assessed, including extremely randomized trees (ERT), naïve Bayes (NB), k-nearest neighbors (KNN), logistic regression (LR), stochastic gradient descent (SGD), linear discriminant analysis (LDA), convolutional neural networks (CNN), long short-term memory (LSTM), BiLSTM, Transformer, Bert_CNN, and LSTM Attention, but they were ultimately excluded from the final models owing to inferior predictive performance.

These approaches have advanced PIP prediction and showcase the potential of machine learning-driven innovation in bioinformatics. Nevertheless, the predictive performance of existing models remains suboptimal, leaving room for further refinement. The ESM-2 protein language model [10] extracts global contextual and structural features from peptide sequences and has been empirically validated as an effective tool for predicting peptide functional activities [11]. Integrating advanced learning methods with such enhanced feature representations may therefore improve prediction performance.

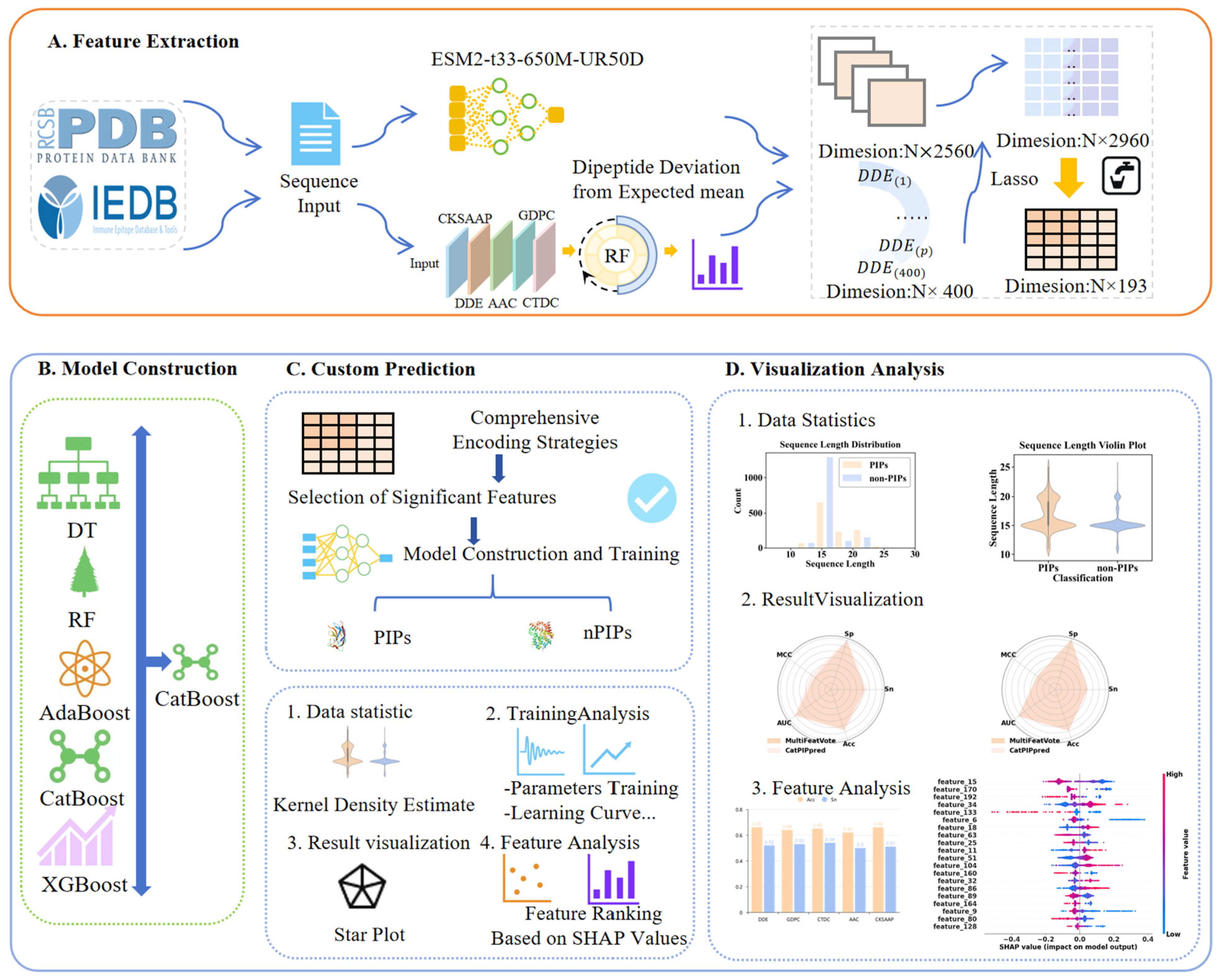

In this work, we propose Cat-PIPpred, a computational framework for improved prediction of PIPs. Our approach involves two steps. (1) Dual encoding of PIP and non-PIP sequences using a protein language model (ESM-2) and an evolutionary feature representation (DDE): ESM-2 captures high-level structural features of peptides, while DDE encoding represents implicit evolutionary relationships. (2) Integration and refinement of the hybrid feature vectors for final classification: features are selected and refined before being passed to a CatBoost classifier for PIP identification. Different feature refinement strategies and classification algorithms are systematically evaluated to optimize model performance. In both cross-validation and independent testing, the final model, Cat-PIPpred, outperforms existing PIP-targeted predictors and state-of-the-art peptide functional classifiers. These results highlight the effectiveness of our hybrid feature representation scheme and the strength of the CatBoost classifier for PIP recognition. The overall workflow of this study is illustrated in Figure 1.

3. Discussion

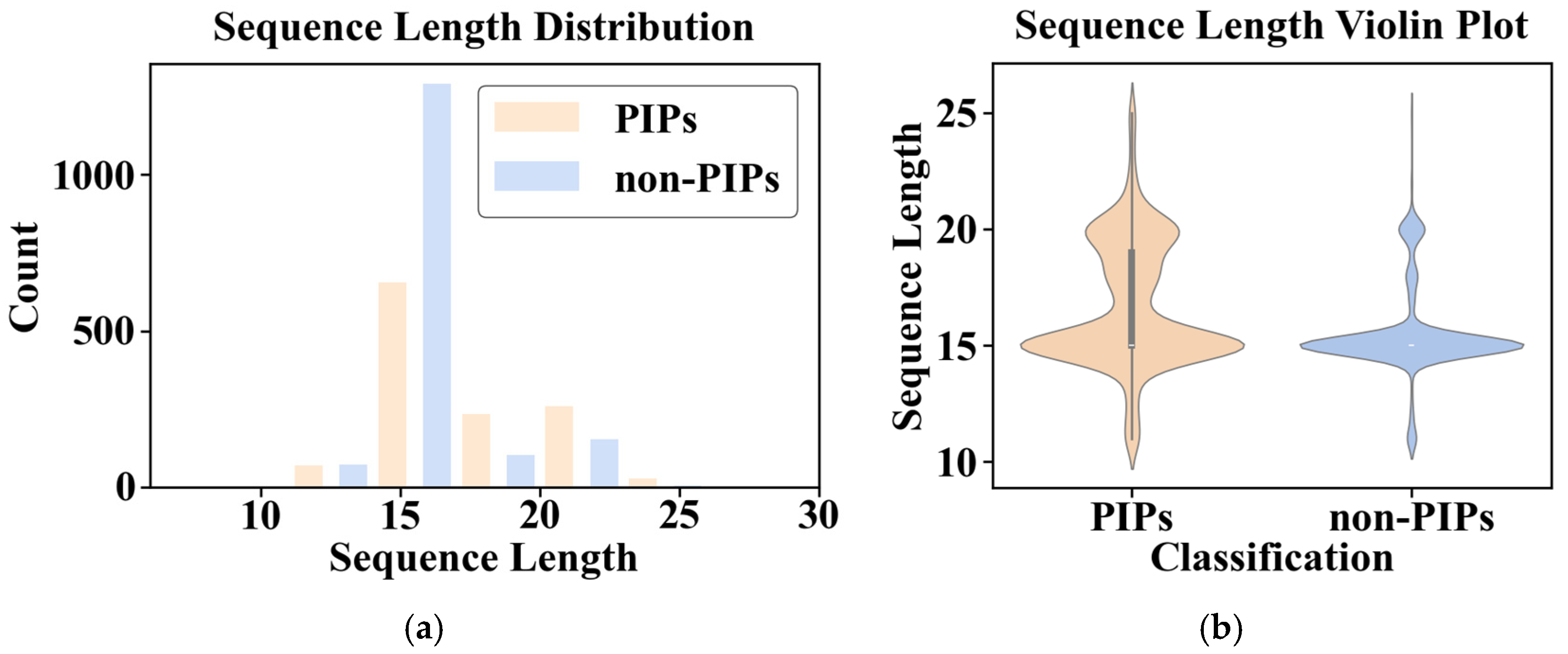

The statistical analysis reveals that PIP and non-PIP sequences exhibit similar length distributions (primarily 10–22 aa; median of 15 aa) and overall amino acid composition patterns, with minor but notable variations, as shown in Figure 4. Specifically, PIP sequences exhibit enrichment in leucine (L), arginine (R), and serine (S), while showing depletion in aspartic acid (D), glycine (G), proline (P), and threonine (T).
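A minimal sketch of how such enrichment and depletion can be quantified: compute the amino acid composition of each class and take the difference. The toy sequences below are hypothetical, and pooling residues across all peptides of a class (rather than averaging per-peptide compositions) is an assumption; the exact procedure behind Figure 4 may differ.

```python
from collections import Counter

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

def pooled_aac(seqs):
    """Fraction of each standard residue, pooled over all peptides in a class."""
    counts = Counter(res for s in seqs for res in s if res in AMINO_ACIDS)
    total = sum(counts.values())
    return {aa: counts[aa] / total for aa in AMINO_ACIDS}

def composition_difference(pos_seqs, neg_seqs):
    """Positive values mean the residue is enriched in pos_seqs (e.g. PIPs)."""
    pos, neg = pooled_aac(pos_seqs), pooled_aac(neg_seqs)
    return {aa: pos[aa] - neg[aa] for aa in AMINO_ACIDS}

# Hypothetical toy peptides, for illustration only.
pips = ["LRSLL", "SRLQL"]
non_pips = ["DGPTG", "GTDPA"]
diff = composition_difference(pips, non_pips)
print({aa: round(v, 2) for aa, v in diff.items() if v != 0})
```

Because both compositions sum to one, the differences sum to zero: every enrichment is balanced by depletion elsewhere.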

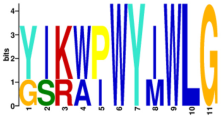

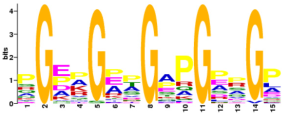

Comparative analysis reveals clear differences in sequence architecture between PIPs and non-PIPs. We identified motifs ranging from 4 to 25 amino acids in both groups, as shown in Table 8. Further motif analysis indicates that their immunomodulatory functions are closely associated with motif-specific physicochemical properties, such as residue composition, charge distribution, and amphipathicity, reflecting distinct structural mechanisms and functional orientations of the two classes. This differentiation may provide a theoretical foundation for the rational design of peptide-based drugs with precise immunomodulatory activities.

In PIPs, two characteristic motifs are identified, both displaying strong targeting propensity and interaction tendencies. Pro-inflammatory Motif 1 is enriched with glutamine (Q) clusters. The side-chain amide groups of glutamine serve as excellent hydrogen bond donors and acceptors, forming a polar, neutral, flexible, and hydrogen bond-rich “interaction platform” that likely facilitates specific recognition of and binding to pro-inflammatory receptors. For instance, hydrophilic interactions dominate the buried interface of the IL-18/IL-18Rα complex, a pattern that is broadly conserved among IL-1 family members [14]. The presence of phenylalanine (F) and leucine (L) introduces hydrophobic patches, potentially facilitating initial peptide anchoring at the binding interface. Pro-inflammatory Motif 2 is rich in hydrophobic and aromatic amino acids, whose dense side chains constitute an interaction hotspot that may guide binding to cytokines or receptors. The central tryptophan (W) may anchor into the receptor pocket via hydrophobic and cation–π interactions, while tyrosine (Y) can contribute to hydrogen-bonding networks, a key force in protein interactions. Although proline (P) is not generally enriched across PIPs, its strategic placement can introduce turns within the framework and reorient adjacent residues, which is critical for shaping specific tertiary structures and binding interfaces. Moreover, variations at certain positions are tolerated or even favored, enabling fine-tuning of affinity and specificity toward different pro-inflammatory receptors across varying physiological environments.

In contrast, the motifs identified in non-PIPs follow entirely different structural principles. Non-pro-inflammatory Motif 3 features a glycine (G) and proline (P) backbone, with glycine recurring regularly and specific positions accommodating other amino acids such as glutamic acid (E) and alanine (A). This motif has previously been reported as a typical anti-inflammatory peptide motif [15]. In non-pro-inflammatory Motif 4, positively charged arginine (R), lysine (K), and histidine (H) are positioned on one side, and negatively charged glutamic acid (E) is located centrally. These charged residues potentially influence interactions through electrostatic repulsion or competitive binding.

DDE encoding incorporates the expected occurrence probabilities of amino acids derived from the genetic code, offering a representation that accounts for codon degeneracy (the number of codons encoding each amino acid), unlike simple frequency-based methods such as AAC or dipeptide composition. By standardizing the deviation of observed dipeptide frequencies from their expected values, DDE captures evolutionary and translational constraints inherent in peptide sequences. In contrast to standard dipeptide composition methods such as GDPC, which merely report empirical frequencies, DDE provides a biologically informed, statistically grounded, and computationally efficient representation of compositional properties.
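To make the DDE computation concrete, the following is a minimal Python sketch of DDE as it is commonly formulated (for example, in the iFeature toolkit); the exact implementation used for Cat-PIPpred may differ. For each of the 400 dipeptides ij in a peptide of length L, the observed composition D_c(ij) is the dipeptide count divided by L − 1; the expected mean is T_m(ij) = (C_i/C_N)(C_j/C_N), where C_i is the number of codons encoding residue i and C_N = 61 sense codons; the expected variance is T_v(ij) = T_m(ij)(1 − T_m(ij))/(L − 1); and DDE(ij) = (D_c(ij) − T_m(ij))/√T_v(ij). The function name `dde` is illustrative, and not counting dipeptides that contain non-standard residues is an assumption.

```python
import math
from itertools import product

# Codons per amino acid in the standard genetic code (61 sense codons).
CODONS = {
    "A": 4, "C": 2, "D": 2, "E": 2, "F": 2, "G": 4, "H": 2, "I": 3,
    "K": 2, "L": 6, "M": 1, "N": 2, "P": 4, "Q": 2, "R": 6, "S": 6,
    "T": 4, "V": 4, "W": 1, "Y": 2,
}
TOTAL_CODONS = sum(CODONS.values())  # 61
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
DIPEPTIDES = ["".join(p) for p in product(AMINO_ACIDS, repeat=2)]  # 400, "AA" first

def dde(seq: str) -> list[float]:
    """Return the 400-dimensional DDE vector of a peptide sequence."""
    n_pairs = len(seq) - 1
    if n_pairs < 1:
        raise ValueError("sequence must contain at least two residues")
    counts = dict.fromkeys(DIPEPTIDES, 0)
    for i in range(n_pairs):
        pair = seq[i:i + 2]
        if pair in counts:  # dipeptides with non-standard residues are not counted
            counts[pair] += 1
    features = []
    for dp in DIPEPTIDES:
        dc = counts[dp] / n_pairs  # observed dipeptide composition
        tm = (CODONS[dp[0]] / TOTAL_CODONS) * (CODONS[dp[1]] / TOTAL_CODONS)  # expected mean
        tv = tm * (1 - tm) / n_pairs  # expected variance
        features.append((dc - tm) / math.sqrt(tv))
    return features

vec = dde("GLFDIVKKVV")  # arbitrary example peptide
print(len(vec))  # 400
```

Each peptide thus yields 400 values; a positive entry marks a dipeptide observed more often than codon degeneracy alone would predict.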

Complementarily, ESM-2 captures high-level evolutionary and structural features through its deep Transformer architecture, enabling the modeling of contextual and fold-specific information. The attention patterns of ESM-2 have been shown to correspond to residue–residue contacts in protein tertiary structure, indicating that structural information is encoded in the learned representations. Trained with masked language modeling (MLM), ESM-2 captures meaningful semantic relationships and long-range dependencies between amino acids.

The fusion of DDE features and ESM-2 embeddings therefore integrates compositional attributes with semantic representations, enriching the feature space and enhancing discriminative capability. Building upon this hybrid representation, the global refinement strategy further strengthens feature integration by facilitating cross-modal alignment between DDE features and ESM-2 embeddings. This approach mitigates the information loss associated with premature feature pruning while achieving a high compression ratio of 15.34 (2960 dimensions reduced to 193), which improves computational efficiency and reduces memory footprint.
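The dimensions quoted above can be checked with a short sketch of early fusion by column-wise concatenation, assuming (as the 2960 total implies) 2560 ESM-2 dimensions plus the 400 DDE dimensions. The random arrays are placeholders rather than real features, and the global refinement step that keeps 193 features is not reproduced, since its details are not given here.

```python
import numpy as np

ESM_DIM, DDE_DIM, KEPT_DIM = 2560, 400, 193

rng = np.random.default_rng(0)
n_peptides = 8
esm_features = rng.normal(size=(n_peptides, ESM_DIM))  # placeholder ESM-2 embeddings
dde_features = rng.normal(size=(n_peptides, DDE_DIM))  # placeholder DDE vectors

# Early fusion: one row per peptide, ESM-2 columns followed by DDE columns.
fused = np.hstack([esm_features, dde_features])
compression_ratio = fused.shape[1] / KEPT_DIM  # 2960 / 193
print(fused.shape, round(compression_ratio, 2))  # (8, 2960) 15.34
```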

Assessing how well models generalize in practical applications requires rigorous evaluation on an independent test set. On the independent test set, model performance generally declined relative to cross-validation, underscoring the importance of generalization capability. The synergy between feature refinement methods and algorithms critically determines model performance, so generalization must be stringently validated through independent testing. Models using dual-channel features generally degraded in performance, indicating weaker generalization capability.

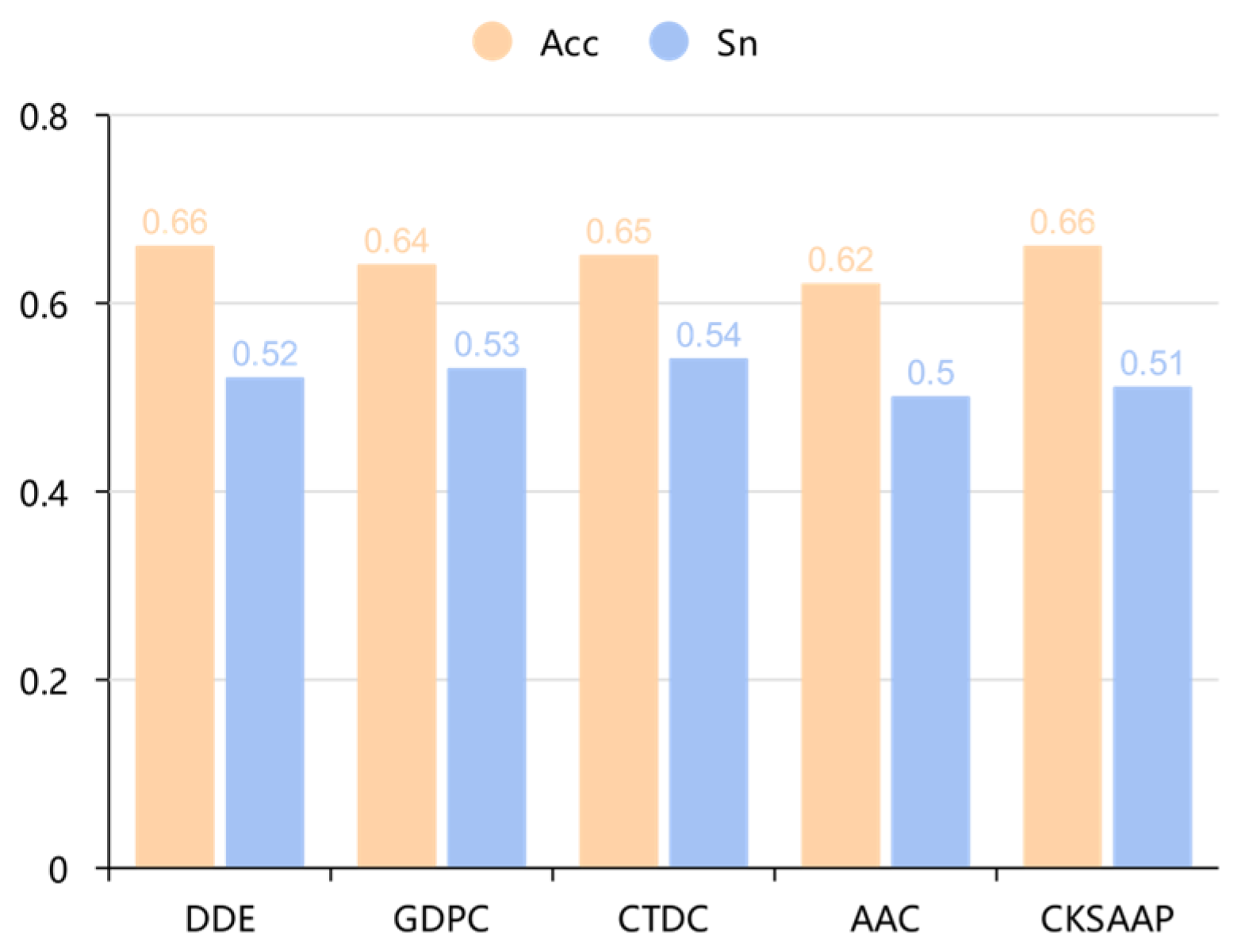

The comparison between DDE and composition of k-spaced amino acid pairs (CKSAAP) provided important insights into feature encoding selection. While CKSAAP-based models performed better in cross-validation (higher sensitivity and AUC), DDE-based models generalized substantially better in independent testing. This divergence shows that marginal differences in initial feature evaluation can be amplified through the modeling pipeline, and that the direction of amplification depends on the evaluation context. The greater stability of DDE across datasets suggests that it captures more robust and transferable patterns for PIP prediction. The 0.01 sensitivity difference observed in the initial feature evaluation was amplified into substantial performance differences in the final models, underscoring the importance of evaluating features within the complete modeling pipeline rather than in isolation. This observation, that subtle differences in feature encoding can lead to divergent model behaviors, has broader implications for methodology in computational biology.

Importantly, the highest-dimensional, unrefined feature set (ESM + DDE, 2960 dimensions) did not outperform the refined feature sets across algorithm combinations, indicating that feature dimensionality alone does not determine performance. In particular, the combination of unrefined ESM + DDE features with a decision tree (DT) performed worst (Acc = 0.602, MCC = 0.205), consistent with the risk of overfitting in high-dimensional feature spaces. Decision trees are interpretable and require little data preprocessing, but they are prone to overfitting, a limitation that motivated the development of ensemble methods to enhance robustness and accuracy.
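For reference, Acc and MCC, together with sensitivity (Sn) and specificity (Sp), follow the standard confusion-matrix definitions. The helper below is a hypothetical sketch, and the counts in the usage line are invented for illustration, not results from this study. MCC uses all four cells, which is why it drops much faster than Acc for a weak classifier (Acc = 0.602 corresponds to only MCC = 0.205 above).

```python
import math

def binary_metrics(tp: int, tn: int, fp: int, fn: int) -> dict[str, float]:
    """Accuracy, sensitivity, specificity, and MCC from confusion-matrix counts."""
    acc = (tp + tn) / (tp + tn + fp + fn)
    sn = tp / (tp + fn) if tp + fn else 0.0  # recall on the positive (PIP) class
    sp = tn / (tn + fp) if tn + fp else 0.0  # recall on the negative class
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # By convention, MCC is reported as 0 when any marginal total is zero.
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"Acc": acc, "Sn": sn, "Sp": sp, "MCC": mcc}

# Hypothetical counts, for illustration only.
print(binary_metrics(tp=40, tn=35, fp=15, fn=10))
```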

Across all feature spaces, tree-based ensemble models (particularly Random Forest (RF), XGBoost, and CatBoost) significantly outperformed the single Decision Tree (DT) and AdaBoost, highlighting the effectiveness of ensemble learning. RF combines two strategies, bagging and random feature selection, that enhance generalization and robustness against overfitting. In contrast to bagging, which builds trees independently, boosting trains learners sequentially, each correcting the errors of its predecessors. Modern gradient boosting libraries add optimizations to traditional gradient boosting that improve computational efficiency and scalability. CatBoost is one such framework; it processes categorical features automatically, reducing the need for manual preprocessing. Critically, CatBoost builds symmetric (oblivious) trees, in which all nodes at the same depth share one split condition, and uses ordered boosting to reduce the gradient bias (prediction shift) of classical gradient boosting. These design choices act as regularizers, making CatBoost well suited to high-dimensional data and resistant to overfitting. Together with its native categorical feature handling, this training scheme allows CatBoost to achieve strong predictive accuracy with minimal hyperparameter tuning.
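The symmetric-tree idea can be illustrated with a small NumPy sketch: because every level of an oblivious tree applies the same (feature, threshold) test, evaluating a tree of depth d reduces to building a d-bit leaf index. This is a didactic sketch under simplifying assumptions (no feature quantization, no ordered boosting, hypothetical tree parameters and function names), not CatBoost's internal implementation.

```python
import numpy as np

def oblivious_tree_predict(X, split_features, thresholds, leaf_values):
    """Evaluate one symmetric (oblivious) tree of depth d on the rows of X.

    All nodes at a given depth share one (feature, threshold) test, so each
    sample's d binary outcomes form a d-bit index into 2**d leaf values.
    """
    X = np.asarray(X, dtype=float)
    depth = len(split_features)
    if len(thresholds) != depth or len(leaf_values) != 2 ** depth:
        raise ValueError("need one threshold per level and 2**depth leaf values")
    leaf_idx = np.zeros(X.shape[0], dtype=np.int64)
    for level, (feature, threshold) in enumerate(zip(split_features, thresholds)):
        bit = (X[:, feature] > threshold).astype(np.int64)
        leaf_idx |= bit << level  # set bit `level` when the test is true
    return np.asarray(leaf_values, dtype=float)[leaf_idx]

def ensemble_raw_score(X, trees, base_score=0.0):
    """Boosted ensemble: raw score is the base score plus the sum of tree outputs."""
    return base_score + sum(oblivious_tree_predict(X, *tree) for tree in trees)

# Hypothetical depth-2 tree: level 0 tests feature 0, level 1 tests feature 1.
X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
tree = ([0, 1], [0.5, 0.5], [10.0, 20.0, 30.0, 40.0])
print(oblivious_tree_predict(X, *tree))  # [10. 20. 30. 40.]
```

In a boosted binary classifier, the summed raw score would typically be passed through a sigmoid to obtain a probability. The shared split per level keeps each tree balanced and cheap to evaluate, which is part of why the structure acts as a regularizer.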

The experimental results indicate that both the CNN and Att-BiLSTM architectures were outperformed by the CatBoost classifier, despite their acknowledged representational power. Several interrelated factors may explain this outcome.

Deep learning models, such as CNN and Att-BiLSTM, generally require large-scale datasets to fully learn underlying feature representations and avoid overfitting. In contrast, our benchmark dataset is of small-to-medium size, a regime in which CatBoost is known for its efficiency and robustness. Moreover, the feature space used in this work is inherently tabular, especially after feature selection and refinement, and gradient boosting decision tree (GBDT) methods such as CatBoost are well suited to such data. CNNs, on the other hand, excel with data possessing spatial or topological structure (e.g., images, sequences), and Att-BiLSTM is primarily effective at capturing long-range sequential dependencies. These inductive biases may make them suboptimal for generic tabular data in which feature relationships are neither sequential nor spatial. The use of ESM-2 for feature extraction provides a further explanation. Because ESM-2 is built on the Transformer architecture, its self-attention mechanisms are designed to capture complex, long-range dependencies and global context in protein sequences. Consequently, applying additional deep neural networks (CNNs or Att-BiLSTMs) to these pre-processed representations may yield only marginal improvements, as the most salient contextual patterns have already been encoded. CatBoost avoids this redundancy through its tree-based inductive bias, which is fundamentally different from that of neural networks. This methodological complementarity allows CatBoost to use the high-quality embeddings more effectively for tabular prediction, achieving superior results without redundant deep learning layers. Additionally, the performance of deep learning models is highly sensitive to hyperparameter configurations. Although we searched over key parameters, the scope of the search was necessarily limited. A more exhaustive hyperparameter optimization or more advanced architectures might improve their performance. Nevertheless, CatBoost achieved strong performance with minimal hyperparameter tuning, demonstrating its advantage as a readily deployable and effective solution for PIP prediction.

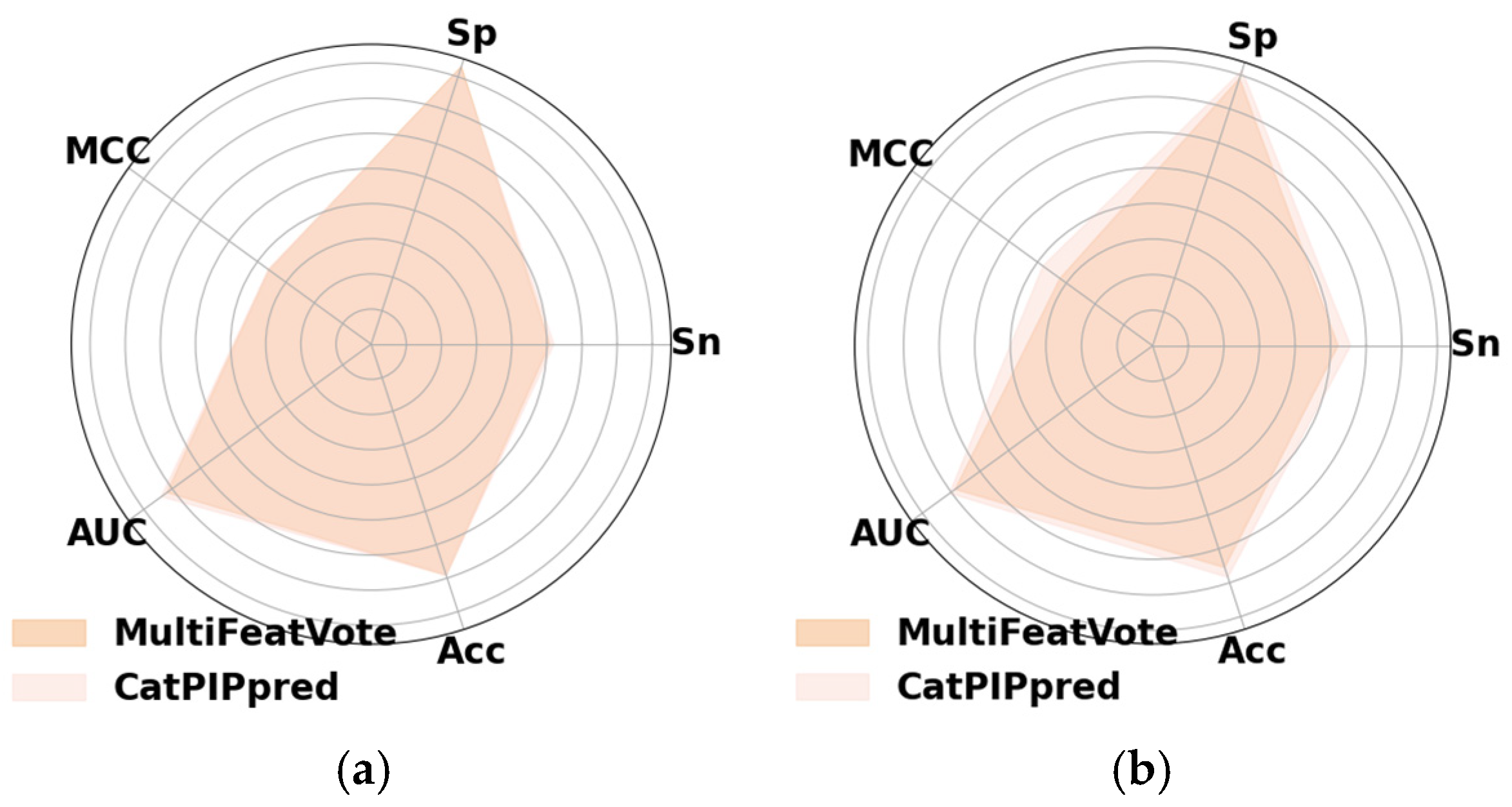

Cat-PIPpred offers advantages in computational efficiency, predictive stability, and overall performance, addressing critical requirements in contemporary proteomic research. When benchmarked against existing methods, Cat-PIPpred demonstrates that incorporating structural insights from ESM-2 and leveraging advanced machine learning architectures yields significant gains in predictive performance. Deep_AMPpred and AMPpred_MFA are general-purpose predictors and thus show considerable limitations in accurately detecting PIPs, underscoring the need for specialized predictors such as Cat-PIPpred and MultiFeatVotPIP (the latter relying on handcrafted compositional features).

Cat-PIPpred outperforms current PIP prediction methods owing to two principal innovations with methodological and biological implications.

First, our framework employs a cross-modal feature fusion technique that benefits substantially from pre-trained ESM-2 features. In contrast, existing PIP predictors such as MultiFeatVotPIP depend predominantly on conventional compositional features. Although ProIn-Fuse incorporates structural features (SF), it is limited to eight handcrafted encoding schemes, whereas our model incorporates high-dimensional evolutionary and structural representations derived from ESM-2. ESM-2 is a state-of-the-art large-scale protein language model whose representations capture fundamental principles governing protein evolution, structure, and function. The 2560-dimensional ESM-2 embedding used in this study provides a comprehensive, context-sensitive representation of each peptide. By combining the ESM-2 embedding with traditional features, Cat-PIPpred builds a more integrated and informative feature set that better characterizes PIPs and enables more precise identification. This also supports the biological view that pro-inflammatory functionality is intrinsically linked to the compositional, structural, and evolutionary attributes of peptide sequences.
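This section does not state how per-residue ESM-2 outputs are reduced to one 2560-dimensional vector per peptide. A common choice, and the one shown in the fair-esm README, is to average the final-layer representations over residue positions, excluding the beginning-of-sequence (BOS) and end-of-sequence (EOS) tokens; the sketch below assumes that choice. A 2560-dimensional hidden size matches the 3B-parameter ESM-2 model (esm2_t36_3B_UR50D), for which fair-esm returns the per-residue tensor as `results["representations"][36]` when called with `repr_layers=[36]`. The helper name and the random placeholder input are hypothetical.

```python
import numpy as np

def mean_pool_embedding(token_reprs, seq_len):
    """Average per-residue vectors, skipping BOS (row 0) and EOS/padding rows."""
    token_reprs = np.asarray(token_reprs, dtype=float)
    if token_reprs.shape[0] < seq_len + 2:
        raise ValueError("expected rows for BOS + residues + EOS")
    return token_reprs[1 : seq_len + 1].mean(axis=0)

# Placeholder for one 15-residue peptide (BOS + 15 residues + EOS, 2560 dims);
# random numbers stand in for real ESM-2 output.
reprs = np.random.default_rng(0).normal(size=(17, 2560))
embedding = mean_pool_embedding(reprs, seq_len=15)
print(embedding.shape)  # (2560,)
```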

Second, Cat-PIPpred uses the CatBoost classifier, which excels at handling categorical features and mitigating overfitting. The gradient boosting framework of CatBoost provides strong generalization and robustness. In contrast, ProIn-Fuse linearly combines eight random forest models, each built on a single encoding, while MultiFeatVotPIP employs a soft voting ensemble of five base learners (AdaBoost, XGBoost, RF, GBDT, and LightGBM). The marginal improvement of MultiFeatVotPIP over its individual components suggests limited diversity among its constituent models.
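Soft voting, as described for MultiFeatVotPIP, averages the class probabilities predicted by the base learners. The sketch below (function name and probabilities are hypothetical) also illustrates the diversity argument: when the base learners produce nearly identical probabilities, the averaged output differs little from any single member, so the ensemble adds little.

```python
import numpy as np

def soft_vote(prob_matrix, weights=None, threshold=0.5):
    """Average P(PIP) over base learners; prob_matrix has shape (n_models, n_samples)."""
    probs = np.asarray(prob_matrix, dtype=float)
    avg = np.average(probs, axis=0, weights=weights)
    return avg, (avg >= threshold).astype(int)

# Hypothetical outputs of three diverse base learners on two peptides.
diverse = [[0.9, 0.2], [0.6, 0.4], [0.3, 0.7]]
avg, labels = soft_vote(diverse)
print(avg.round(3), labels)

# Three identical learners: the vote reproduces each member's output
# (up to floating-point rounding), so no ensemble gain is possible.
same = [[0.7, 0.3]] * 3
avg_same, _ = soft_vote(same)
print(avg_same.round(3))
```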

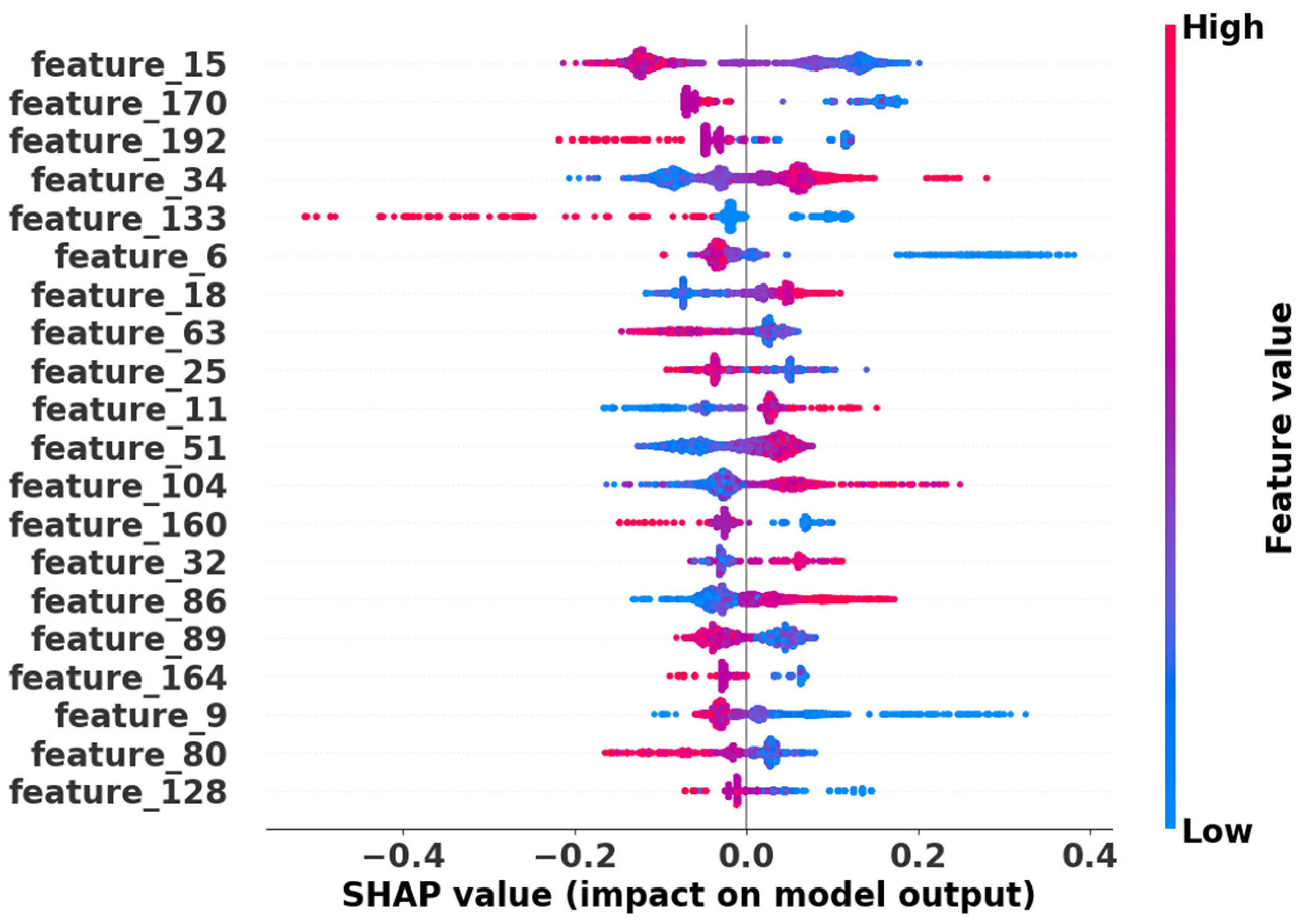

Figure 5 presents the SHAP (SHapley Additive exPlanations) summary plot of the top 20 features among the retained 193 features in Cat-PIPpred. The features are ordered by their mean absolute SHAP values, representing their overall importance in the model. This ranking reflects the average magnitude of feature contributions across all predictions in the dataset. The results demonstrate that these selected features significantly influence the model’s predictions, with higher absolute SHAP values indicating greater feature importance.
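The ranking used in Figure 5 can be expressed in a few lines, given a SHAP matrix of shape (n_samples, n_features). For a CatBoost model, such a matrix can be obtained with the shap library's `TreeExplainer`; the function below is a hypothetical helper that works on any such matrix, and the toy values are illustrative.

```python
import numpy as np

def rank_by_mean_abs_shap(shap_values, top_k=20):
    """Return indices and scores of the top_k features by mean |SHAP| over samples."""
    shap_values = np.asarray(shap_values, dtype=float)
    importance = np.abs(shap_values).mean(axis=0)  # one score per feature
    order = np.argsort(importance)[::-1][:top_k]   # descending importance
    return order, importance[order]

# Toy SHAP matrix: 2 samples x 3 features (illustrative values).
toy = [[1.0, -3.0, 0.0], [-1.0, 3.0, 0.5]]
order, scores = rank_by_mean_abs_shap(toy, top_k=2)
print(order, scores)
```

Taking absolute values before averaging is what makes this a magnitude ranking: feature 1 ranks first even though its SHAP values cancel out on average.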

The color of the points relative to their position reveals the direction of each feature’s impact, while the horizontal spread of points along the SHAP value axis indicates the magnitude and consistency of that impact across samples, with wider distributions signifying stronger and more variable influence on predictions.

Our analysis identified distinct patterns of feature contributions. For features showing a positive correlation (e.g., Features 34, 11, and 86), red points (high feature values) cluster on the right (positive SHAP values) and blue points on the left, indicating that higher feature values increase the predicted probability of a peptide being a PIP. Conversely, for features showing a negative correlation (e.g., Features 192, 133, and 160), red points concentrate on the left while blue points lie on the right, suggesting that higher feature values decrease the prediction score.
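The red-right/blue-left pattern can be summarized numerically by correlating each feature's values with its SHAP values: a positive correlation corresponds to the positively correlated features described above and a negative one to the negatively correlated features. This is a hypothetical helper using Pearson correlation, and it assumes the SHAP values are computed for the PIP (positive) class.

```python
import numpy as np

def shap_direction(X, shap_values):
    """Pearson correlation between each feature's values and its SHAP values.

    Positive: high values push predictions toward the PIP class;
    negative: away from it. Constant columns get 0.
    """
    X = np.asarray(X, dtype=float)
    S = np.asarray(shap_values, dtype=float)
    r = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        if X[:, j].std() > 0 and S[:, j].std() > 0:
            r[j] = np.corrcoef(X[:, j], S[:, j])[0, 1]
    return r

# Toy data: feature 0 pushes predictions up, feature 1 pushes them down.
X = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
S = [[-1.0, 1.0], [0.0, 0.0], [1.0, -1.0]]
print(shap_direction(X, S).round(2))
```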

The clear separation of positive and negative impacts across features suggests that the model captures biologically relevant determinants for predicting PIPs. This interpretability analysis supports the biological plausibility of our predictions and may offer mechanistic insights into the factors driving pro-inflammatory activity.

Taken together, these results indicate that this framework establishes a new paradigm for specialized peptide function prediction by combining pre-trained language models with evolution-aware feature engineering. The architecture explicitly accounts for the computational complexity of the refinement process while maintaining robust predictive performance.