Artificial Intelligence-Assisted Breeding for Plant Disease Resistance

Abstract

1. Introduction

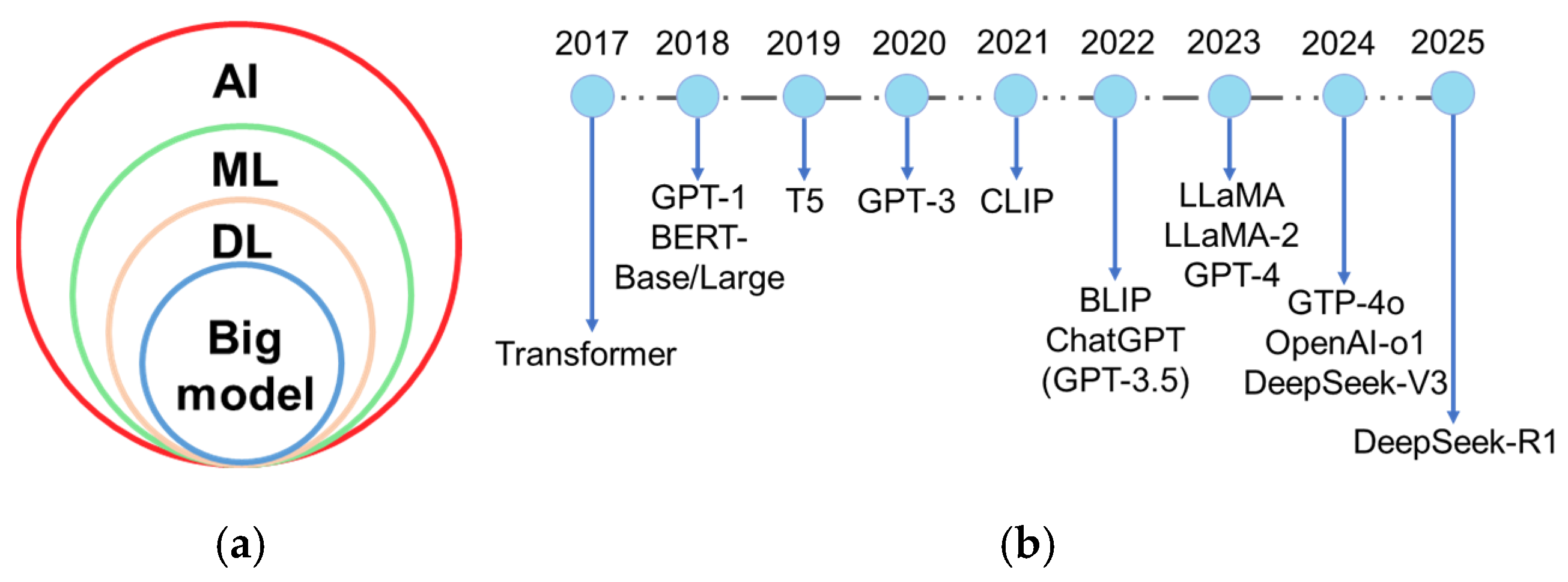

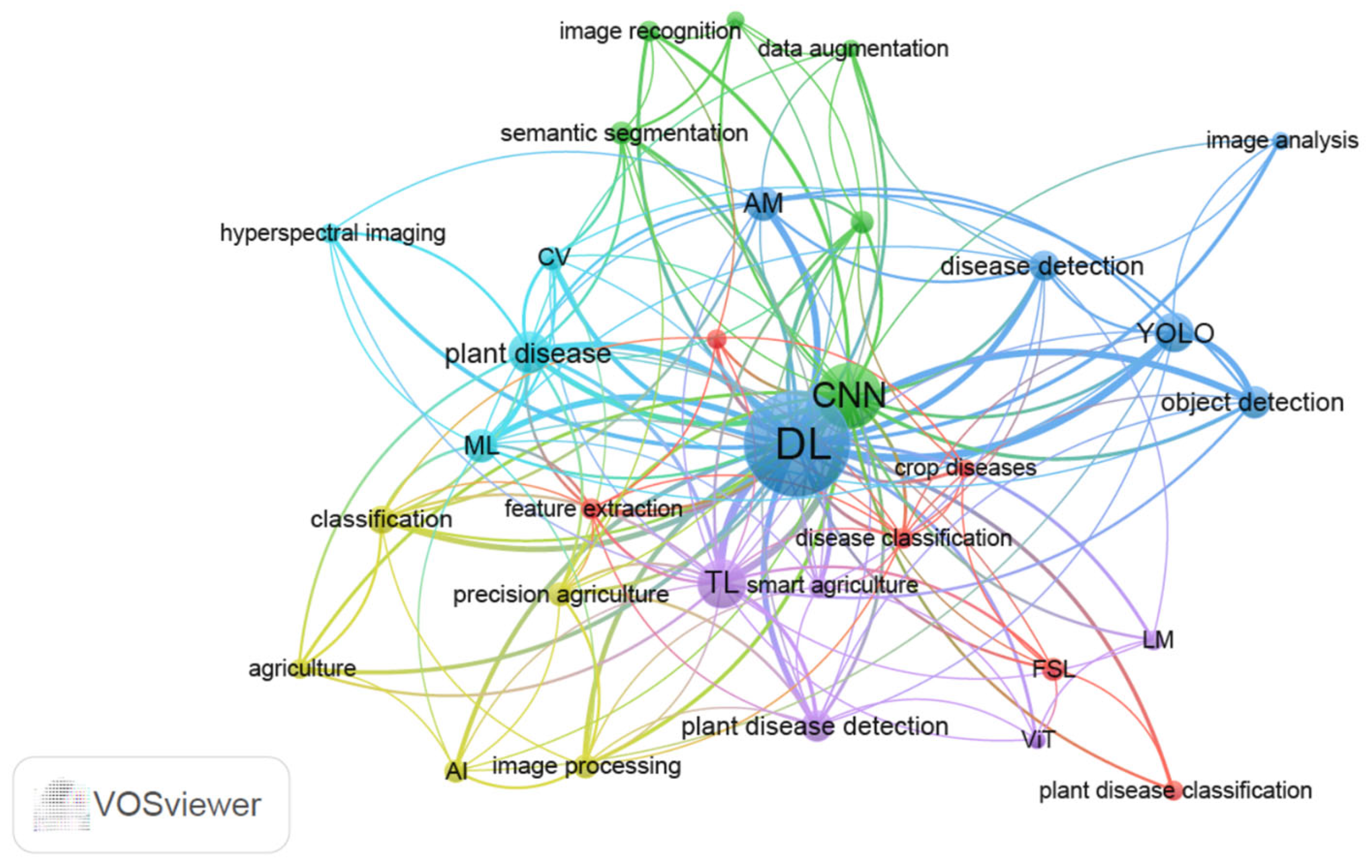

2. AI-Assisted Plant Disease Detection Based on Bibliographic Analysis

3. Big Model in Plant Disease Detection

4. AI-Driven Genomic Selection for Enhanced Disease Resistance

5. Leveraging AI for Phenomic Selection of Disease Resistance Phenotypes

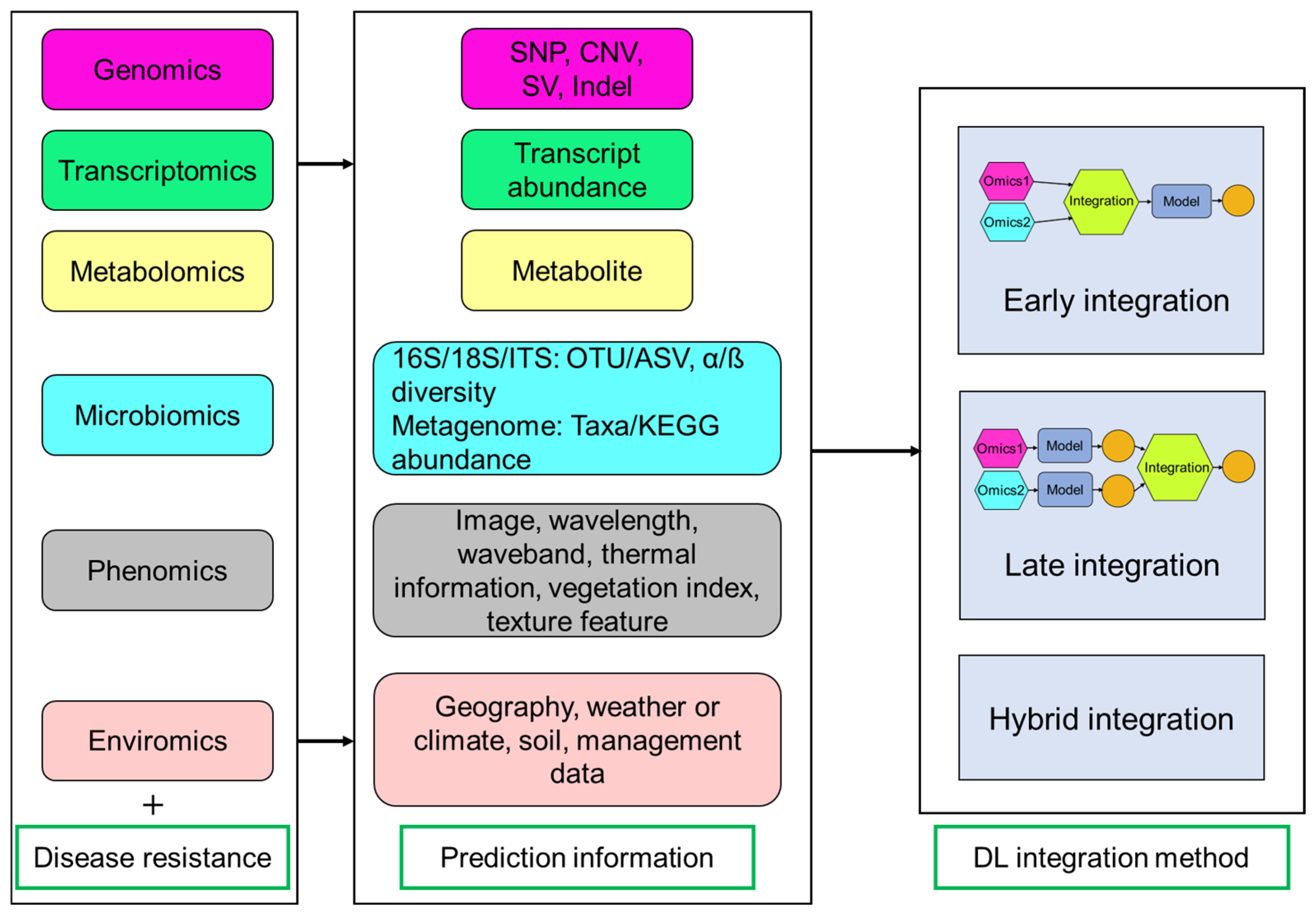

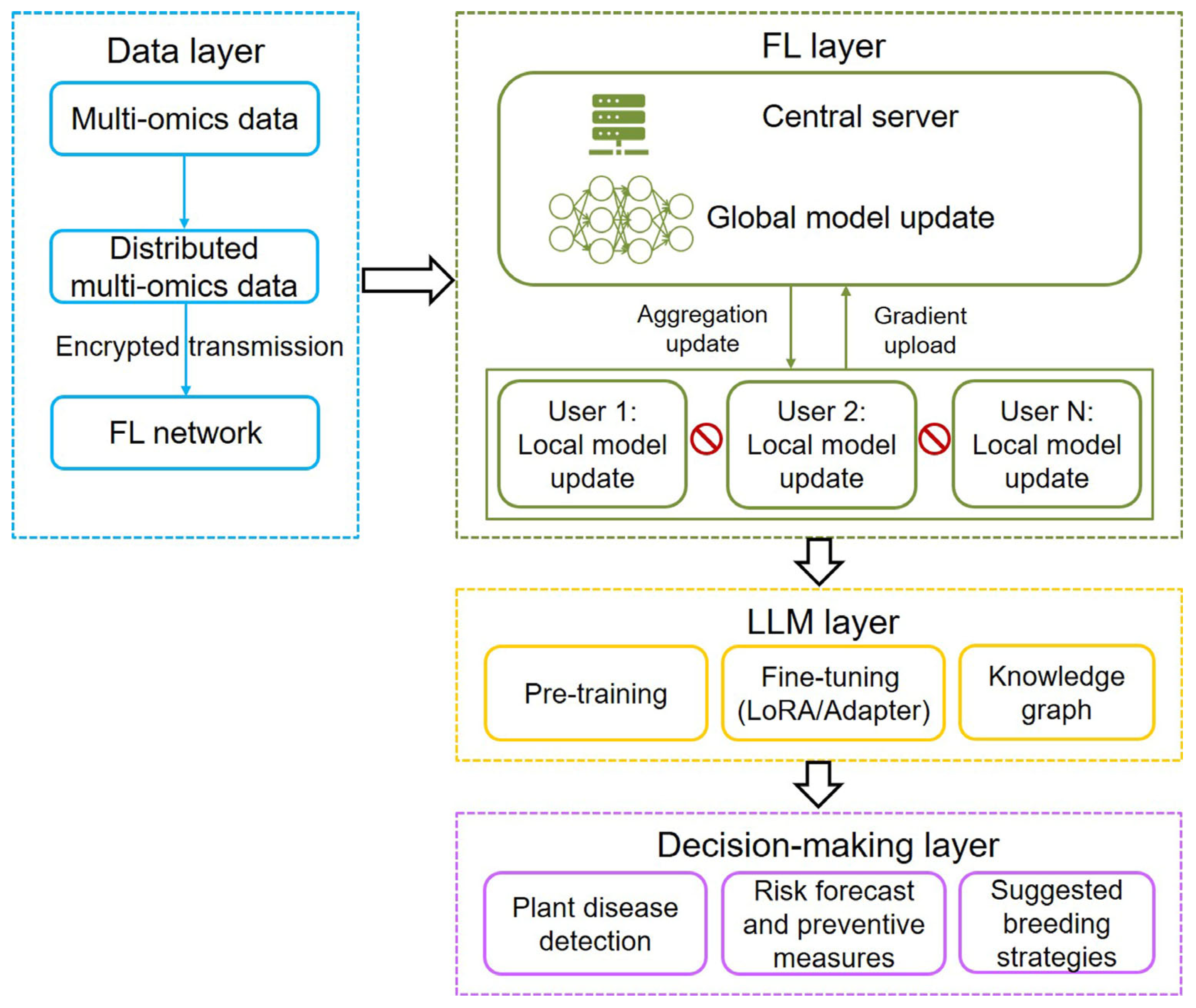

6. Leveraging AI to Align Multi-Omics Signatures with Disease Resistance Phenotypes

7. Challenges and Perspectives

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zhu, H.; Lin, C.; Liu, G.; Wang, D.; Qin, S.; Li, A.; Xu, J.L.; He, Y. Intelligent agriculture: Deep learning in UAV-based remote sensing imagery for crop diseases and pests detection. Front. Plant Sci. 2024, 15, 1435016.

2. Wallace, J.G.; Rodgers-Melnick, E.; Buckler, E.S. On the road to Breeding 4.0: Unraveling the good, the bad, and the boring of crop quantitative genomics. Annu. Rev. Genet. 2018, 52, 421–444.

3. Farooq, M.A.; Gao, S.; Hassan, M.A.; Huang, Z.; Rasheed, A.; Hearne, S.; Prasanna, B.; Li, X.; Li, H. Artificial intelligence in plant breeding. Trends Genet. 2024, 40, 891–908.

4. Wang, K.; Abid, M.A.; Rasheed, A.; Crossa, J.; Hearne, S.; Li, H. DNNGP, a deep neural network-based method for genomic prediction using multi-omics data in plants. Mol. Plant 2023, 16, 279–293.

5. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

6. Wang, Y.; Zhang, Y.; Liu, Y.; Yang, Z.; Xiong, H.; Wang, Y.; Lin, J.; Yang, Y. From instructions to intrinsic human values: A survey of alignment goals for big models. arXiv 2023, arXiv:2308.12014.

7. Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the opportunities and risks of foundation models. arXiv 2022, arXiv:2108.07258.

8. Cao, Y.Y.; Chen, L.; Yuan, Y.; Sun, G.L. Cucumber disease recognition with small samples using image-text-label based multi-modal language model. Comput. Electron. Agric. 2023, 211, 107993.

9. Qing, J.; Deng, X.; Lan, Y.; Li, Z. GPT-aided diagnosis on agricultural image based on a new light YOLOPC. Comput. Electron. Agric. 2023, 213, 108168.

10. Sahin, Y.S.; Gençer, N.S.; Şahin, H. Integrating AI detection and language models for real-time pest management in tomato cultivation. Front. Plant Sci. 2025, 15, 1468676.

11. Holzinger, A.; Saranti, A.; Angerschmid, A.; Retzlaff, C.O.; Gronauer, A.; Pejakovic, V.; Medel-Jimenez, F.; Krexner, T.; Gollob, C.; Stampfer, K. Digital transformation in smart farm and forest operations needs human-centered AI: Challenges and future directions. Sensors 2022, 22, 3043.

12. Zhang, W.; Boyle, K.; Brule-Babel, A.; Fedak, G.; Gao, P.; Djama, Z.R.; Polley, B.; Cuthbert, R.; Randhawa, H.; Graf, R.; et al. Evaluation of genomic prediction for Fusarium head blight resistance with a multi-parental population. Biology 2021, 10, 756.

13. Semagn, K.; Iqbal, M.; Jarquin, D.; Crossa, J.; Howard, R.; Ciechanowska, I.; Henriquez, M.A.; Randhawa, H.; Aboukhaddour, R.; McCallum, B.D.; et al. Genomic predictions for common bunt, FHB, stripe rust, leaf rust, and leaf spotting resistance in spring wheat. Genes 2022, 13, 565.

14. Butoto, E.N.; Brewer, J.C.; Holland, J.B. Empirical comparison of genomic and phenotypic selection for resistance to Fusarium ear rot and fumonisin contamination in maize. Theor. Appl. Genet. 2022, 135, 2799–2816.

15. Islam, M.S.; McCord, P.H.; Olatoye, M.O.; Qin, L.; Sood, S.; Lipka, A.E.; Todd, J.R. Experimental evaluation of genomic selection prediction for rust resistance in sugarcane. Plant Genome 2021, 14, e20148.

16. Pincot, D.D.A.; Hardigan, M.A.; Cole, G.S.; Famula, R.A.; Henry, P.M.; Gordon, T.R.; Knapp, S.J. Accuracy of genomic selection and long-term genetic gain for resistance to Verticillium wilt in strawberry. Plant Genome 2020, 13, e20054.

17. Liu, Q.; Zuo, S.-M.; Peng, S.; Zhang, H.; Peng, Y.; Li, W.; Xiong, Y.; Lin, R.; Feng, Z.; Li, H.; et al. Development of machine learning methods for accurate prediction of plant disease resistance. Engineering 2024, 40, 100–110.

18. Jubair, S.; Tucker, J.R.; Henderson, N.; Hiebert, C.W.; Badea, A.; Domaratzki, M.; Fernando, W.G.D. GPTransformer: A transformer-based deep learning method for predicting Fusarium related traits in barley. Front. Plant Sci. 2021, 12, 761402.

19. Thapa, S.; Gill, H.S.; Halder, J.; Rana, A.; Ali, S.; Maimaitijiang, M.; Gill, U.; Bernardo, A.; St Amand, P.; Bai, G.; et al. Integrating genomics, phenomics, and deep learning improves the predictive ability for Fusarium head blight-related traits in winter wheat. Plant Genome 2024, 17, e20470.

20. Merrick, L.F.; Lozada, D.N.; Chen, X.; Carter, A.H. Classification and regression models for genomic selection of skewed phenotypes: A case for disease resistance in winter wheat (Triticum aestivum L.). Front. Genet. 2022, 13, 835781.

21. Chen, C.; Bhuiyan, S.A.; Ross, E.; Powell, O.; Dinglasan, E.; Wei, X.; Atkin, F.; Deomano, E.; Hayes, B. Genomic prediction for sugarcane diseases including hybrid Bayesian-machine learning approaches. Front. Plant Sci. 2024, 15, 1398903.

22. Montesinos-López, O.A.; Montesinos-López, J.C.; Singh, P.; Lozano-Ramirez, N.; Barrón-López, A.; Montesinos-López, A.; Crossa, J. A multivariate Poisson deep learning model for genomic prediction of count data. G3 Genes|Genomes|Genet. 2020, 10, 4177–4190.

23. González-Camacho, J.M.; Ornella, L.; Pérez-Rodríguez, P.; Gianola, D.; Dreisigacker, S.; Crossa, J. Applications of machine learning methods to genomic selection in breeding wheat for rust resistance. Plant Genome 2018, 11, 170104.

24. Rincent, R.; Charpentier, J.P.; Faivre-Rampant, P.; Paux, E.; Le Gouis, J.; Bastien, C.; Segura, V. Phenomic selection is a low-cost and high-throughput method based on indirect predictions: Proof of concept on wheat and poplar. G3 Genes|Genomes|Genet. 2018, 8, 3961–3972.

25. Galán, R.J.; Bernal-Vasquez, A.M.; Jebsen, C.; Piepho, H.P.; Thorwarth, P.; Steffan, P.; Gordillo, A.; Miedaner, T. Integration of genotypic, hyperspectral, and phenotypic data to improve biomass yield prediction in hybrid rye. Theor. Appl. Genet. 2020, 133, 3001–3015.

26. Maggiorelli, A.; Baig, N.; Prigge, V.; Bruckmüller, J.; Stich, B. Using drone-retrieved multispectral data for phenomic selection in potato breeding. Theor. Appl. Genet. 2024, 137, 70.

27. Jackson, R.; Buntjer, J.B.; Bentley, A.R.; Lage, J.; Byrne, E.; Burt, C.; Jack, P.; Berry, S.; Flatman, E.; Poupard, B.; et al. Phenomic and genomic prediction of yield on multiple locations in winter wheat. Front. Genet. 2023, 14, 1164935.

28. DeSalvio, A.J.; Adak, A.; Murray, S.C.; Wilde, S.C.; Isakeit, T. Phenomic data-facilitated rust and senescence prediction in maize using machine learning algorithms. Sci. Rep. 2022, 12, 7571.

29. Adak, A.; Kang, M.; Anderson, S.L.; Murray, S.C.; Jarquin, D.; Wong, R.K.W.; Katzfuß, M. Phenomic data-driven biological prediction of maize through field-based high-throughput phenotyping integration with genomic data. J. Exp. Bot. 2023, 74, 5307–5326.

30. Adak, A.; DeSalvio, A.J.; Arik, M.A.; Murray, S.C. Field-based high-throughput phenotyping enhances phenomic and genomic predictions for grain yield and plant height across years in maize. G3 Genes|Genomes|Genet. 2024, 14, jkae092.

31. Togninalli, M.; Wang, X.; Kucera, T.; Shrestha, S.; Juliana, P.; Mondal, S.; Pinto, F.; Govindan, V.; Crespo-Herrera, L.; Huerta-Espino, J.; et al. Multi-modal deep learning improves grain yield prediction in wheat breeding by fusing genomics and phenomics. Bioinformatics 2023, 39, btad336.

32. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

33. Kaushal, S.; Gill, H.S.; Billah, M.M.; Khan, S.N.; Halder, J.; Bernardo, A.; Amand, P.S.; Bai, G.; Glover, K.; Maimaitijiang, M.; et al. Enhancing the potential of phenomic and genomic prediction in winter wheat breeding using high-throughput phenotyping and deep learning. Front. Plant Sci. 2024, 15, 1410249.

34. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

35. Kang, M.; Ko, E.; Mersha, T.B. A roadmap for multi-omics data integration using deep learning. Brief. Bioinform. 2022, 23, bbab454.

36. Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

37. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

38. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

39. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

40. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

41. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

42. Choi, S.R.; Lee, M. Transformer architecture and attention mechanisms in genome data analysis: A comprehensive review. Biology 2023, 12, 1033.

43. Mi, Z.; Zhang, X.; Su, J.; Han, D.; Su, B. Wheat stripe rust grading by deep learning with attention mechanism and images from mobile devices. Front. Plant Sci. 2020, 11, 558126.

44. Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

45. Gu, Y.H.; Yin, H.; Jin, D.; Park, J.H.; Yoo, S.J. Image-based hot pepper disease and pest diagnosis using transfer learning and fine-tuning. Front. Plant Sci. 2021, 12, 724487.

46. Krishnamoorthy, N.; Prasad, L.N.; Kumar, C.P.; Subedi, B.; Abraha, H.B.; Easwaramoorthy, S.V. Rice leaf diseases prediction using deep neural networks with transfer learning. Environ. Res. 2021, 198, 111275.

47. Shahoveisi, F.; Taheri Gorji, H.; Shahabi, S.; Hosseinirad, S.; Markell, S.; Vasefi, F. Application of image processing and transfer learning for the detection of rust disease. Sci. Rep. 2023, 13, 5133.

48. Kini, A.S.; Prema, K.V.; Pai, S.N. Early stage black pepper leaf disease prediction based on transfer learning using ConvNets. Sci. Rep. 2024, 14, 1404.

49. Shafik, W.; Tufail, A.; De Silva Liyanage, C.; Apong, R.A.A.H.M. Using transfer learning-based plant disease classification and detection for sustainable agriculture. BMC Plant Biol. 2024, 24, 136.

50. Wu, Q.; Ma, X.; Liu, H.; Bi, C.; Yu, H.; Liang, M.; Zhang, J.; Li, Q.; Tang, Y.; Ye, G. A classification method for soybean leaf diseases based on an improved ConvNeXt model. Sci. Rep. 2023, 13, 19141.

51. Xu, M.; Yoon, S.; Jeong, Y.; Park, D.S. Transfer learning for versatile plant disease recognition with limited data. Front. Plant Sci. 2022, 13, 1010981.

52. Wu, X.; Deng, H.; Wang, Q.; Lei, L.; Gao, Y.; Hao, G. Meta-learning shows great potential in plant disease recognition under few available samples. Plant J. 2023, 114, 767–782.

53. Li, Y.; Chao, X. Semi-supervised few-shot learning approach for plant diseases recognition. Plant Methods 2021, 17, 68.

54. Lin, H.; Tse, R.; Tang, S.K.; Qiang, Z.P.; Pau, G. Few-shot learning approach with multi-scale feature fusion and attention for plant disease recognition. Front. Plant Sci. 2022, 13, 907916.

55. Lin, H.; Qiang, Z.; Tse, R.; Tang, S.K.; Pau, G. A few-shot learning method for tobacco abnormality identification. Front. Plant Sci. 2024, 15, 1333236.

56. Li, G.; Wang, Y.; Zhao, Q.; Yuan, P.; Chang, B. PMVT: A lightweight vision transformer for plant disease identification on mobile devices. Front. Plant Sci. 2023, 14, 1256773.

57. Zhang, E.; Zhang, N.; Li, F.; Lv, C. A lightweight dual-attention network for tomato leaf disease identification. Front. Plant Sci. 2024, 15, 1420584.

58. Prince, R.H.; Mamun, A.A.; Peyal, H.I.; Miraz, S.; Nahiduzzaman, M.; Khandakar, A.; Ayari, M.A. CSXAI: A lightweight 2D CNN-SVM model for detection and classification of various crop diseases with explainable AI visualization. Front. Plant Sci. 2024, 15, 1412988.

59. Mazumder, M.K.A.; Mridha, M.F.; Alfarhood, S.; Safran, M.; Abdullah-Al-Jubair, M.; Che, D. A robust and light-weight transfer learning-based architecture for accurate detection of leaf diseases across multiple plants using less amount of images. Front. Plant Sci. 2024, 14, 1321877.

60. Pan, P.; Guo, W.; Zheng, X.; Hu, L.; Zhou, G.; Zhang, J. Xoo-YOLO: A detection method for wild rice bacterial blight in the field from the perspective of unmanned aerial vehicles. Front. Plant Sci. 2023, 14, 1256545.

61. Gómez, D.; Selvaraj, M.G.; Casas, J.; Mathiyazhagan, K.; Rodriguez, M.; Assefa, T.; Mlaki, A.; Nyakunga, G.; Kato, F.; Mukankusi, C.; et al. Advancing common bean (Phaseolus vulgaris L.) disease detection with YOLO driven deep learning to enhance agricultural AI. Sci. Rep. 2024, 14, 15596.

62. Yan, C.; Liang, Z.; Yin, L.; Wei, S.; Tian, Q.; Li, Y.; Cheng, H.; Liu, J.; Yu, Q.; Zhao, G.; et al. AFM-YOLOv8s: An accurate, fast, and highly robust model for detection of sporangia of Plasmopara viticola with various morphological variants. Plant Phenomics 2024, 6, 0246.

63. Wang, X.; Liu, J. TomatoGuard-YOLO: A novel efficient tomato disease detection method. Front. Plant Sci. 2025, 15, 1499278.

64. Zhu, H.; Shi, W.; Guo, X.; Lyu, S.; Yang, R.; Han, Z. Potato disease detection and prevention using multimodal AI and large language model. Comput. Electron. Agric. 2025, 229, 109824.

65. Guo, R.; Zhang, R.; Zhou, H.; Xie, T.; Peng, Y.; Chen, X.; Yu, G.; Wan, F.; Li, L.; Zhang, Y.; et al. CTDUNet: A multimodal CNN-transformer dual U-shaped network with coordinate space attention for Camellia oleifera pests and diseases segmentation in complex environments. Plants 2024, 13, 2274.

66. Nanavaty, A.; Sharma, R.; Pandita, B.; Goyal, O.; Rallapalli, S.; Mandal, M.; Singh, V.K.; Narang, P.; Chamola, V. Integrating deep learning for visual question answering in agricultural disease diagnostics: Case study of wheat rust. Sci. Rep. 2024, 14, 28203.

67. Chen, C.; Feng, X.; Li, Y.; Lyu, L.; Zhou, J.; Zheng, X.; Yin, J. Integration of large language models and federated learning. Patterns 2024, 5, 101098.

68. Zeng, S.; Wang, D.; Jiang, L.; Xu, D. Parameter-efficient fine-tuning on large protein language models improves signal peptide prediction. Genome Res. 2024, 34, 1445–1454.

69. Kainer, D. The effectiveness of large language models with RAG for auto-annotating trait and phenotype descriptions. Biol. Methods Protoc. 2025, 10, bpaf016.

70. Xie, C.; Gao, J.; Chen, J.; Zhao, X. PotatoG-DKB: A potato gene-disease knowledge base mined from biological literature. PeerJ 2024, 12, e18202.

71. Azimi, I.; Qi, M.; Wang, L.; Rahmani, A.M.; Li, Y. Evaluation of LLMs accuracy and consistency in the registered dietitian exam through prompt engineering and knowledge retrieval. Sci. Rep. 2025, 15, 1506.

72. Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.L.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training language models to follow instructions with human feedback. arXiv 2022, arXiv:2203.02155.

73. Pérez-Rodríguez, P.; Flores-Galarza, S.; Vaquera-Huerta, H.; Del Valle-Paniagua, D.H.; Montesinos-López, O.A.; Crossa, J. Genome-based prediction of Bayesian linear and non-linear regression models for ordinal data. Plant Genome 2020, 13, e20021.

74. Montesinos-López, O.A.; Montesinos-López, J.C.; Salazar, E.; Barron, J.A.; Montesinos-López, A.; Buenrostro-Mariscal, R.; Crossa, J. Application of a Poisson deep neural network model for the prediction of count data in genome-based prediction. Plant Genome 2021, 14, e20118.

75. Ren, Y.; Wu, C.; Zhou, H.; Hu, X.; Miao, Z. Dual-extraction modeling: A multi-modal deep-learning architecture for phenotypic prediction and functional gene mining of complex traits. Plant Commun. 2024, 5, 101002.

76. Dallinger, H.G.; Löschenberger, F.; Bistrich, H.; Ametz, C.; Hetzendorfer, H.; Morales, L.; Michel, S.; Buerstmayr, H. Predictor bias in genomic and phenomic selection. Theor. Appl. Genet. 2023, 136, 235.

77. Zhu, X.; Maurer, H.P.; Jenz, M.; Hahn, V.; Ruckelshausen, A.; Leiser, W.L.; Würschum, T. The performance of phenomic selection depends on the genetic architecture of the target trait. Theor. Appl. Genet. 2022, 135, 653–665.

78. Roscher-Ehrig, L.; Weber, S.E.; Abbadi, A.; Malenica, M.; Abel, S.; Hemker, R.; Snowdon, R.J.; Wittkop, B.; Stahl, A. Phenomic selection for hybrid rapeseed breeding. Plant Phenomics 2024, 6, 0215.

79. DeSalvio, A.J.; Adak, A.; Murray, S.C.; Jarquín, D.; Winans, N.D.; Crozier, D.; Rooney, W.L. Near-infrared reflectance spectroscopy phenomic prediction can perform similarly to genomic prediction of maize agronomic traits across environments. Plant Genome 2024, 17, e20454.

80. Robert, P.; Goudemand, E.; Auzanneau, J.; Oury, F.X.; Rolland, B.; Heumez, E.; Bouchet, S.; Caillebotte, A.; Mary-Huard, T.; Le Gouis, J.; et al. Phenomic selection in wheat breeding: Prediction of the genotype-by-environment interaction in multi-environment breeding trials. Theor. Appl. Genet. 2022, 135, 3337–3356.

81. Shoaib, M.; Shah, B.; Sayed, N.; Ali, F.; Ullah, R.; Hussain, I. Deep learning for plant bioinformatics: An explainable gradient-based approach for disease detection. Front. Plant Sci. 2023, 14, 1283235.

82. Tang, X.; Prodduturi, N.; Thompson, K.J.; Weinshilboum, R.; O’Sullivan, C.C.; Boughey, J.C.; Tizhoosh, H.R.; Klee, E.W.; Wang, L.; Goetz, M.P.; et al. OmicsFootPrint: A framework to integrate and interpret multi-omics data using circular images and deep neural networks. Nucleic Acids Res. 2024, 52, e99.

83. Benkirane, H.; Pradat, Y.; Michiels, S.; Cournède, P.H. CustOmics: A versatile deep-learning based strategy for multi-omics integration. PLoS Comput. Biol. 2023, 19, e1010921.

84. Liu, R.; Guo, X.; Zhu, H.; Wang, L. A text-speech multimodal Chinese named entity recognition model for crop diseases and pests. Sci. Rep. 2025, 15, 5429.

85. Feng, J.; Zhang, S.; Zhai, Z.; Yu, H.; Xu, H. DC2Net: An Asian soybean rust detection model based on hyperspectral imaging and deep learning. Plant Phenomics 2024, 6, 0163.

86. Li, H.; Li, X.; Zhang, P.; Feng, Y.; Mi, J.; Gao, S.; Sheng, L.; Ali, M.; Yang, Z.; Li, L.; et al. Smart Breeding Platform: A web-based tool for high-throughput population genetics, phenomics, and genomic selection. Mol. Plant 2024, 17, 677–681.

87. Wu, H.; Han, R.; Zhao, L.; Liu, M.; Chen, H.; Li, W.; Li, L. AutoGP: An intelligent breeding platform for enhancing maize genomic selection. Plant Commun. 2025, 6, 101240.

88. Zhu, W.; Han, R.; Shang, X.; Zhou, T.; Liang, C.; Qin, X.; Chen, H.; Feng, Z.; Zhang, H.; Fan, X.; et al. The CropGPT project: Call for a global, coordinated effort in precision design breeding driven by AI using biological big data. Mol. Plant 2024, 17, 215–218.

89. Danek, B.P.; Makarious, M.B.; Dadu, A.; Vitale, D.; Lee, P.S.; Singleton, A.B.; Nalls, M.A.; Sun, J.; Faghri, F. Federated learning for multi-omics: A performance evaluation in Parkinson’s disease. Patterns 2024, 5, 100945.

90. Wang, Q.; He, M.; Guo, L.; Chai, H. AFEI: Adaptive optimized vertical federated learning for heterogeneous multi-omics data integration. Brief. Bioinform. 2023, 24, bbad269.

91. Kabala, D.M.; Hafiane, A.; Bobelin, L.; Canals, R. Image-based crop disease detection with federated learning. Sci. Rep. 2023, 13, 19220.

92. Zhou, J.; Zhang, B.; Li, G.; Chen, X.; Li, H.; Xu, X.; Chen, S.; He, W.; Xu, C.; Liu, L.; et al. An AI agent for fully automated multi-omic analyses. Adv. Sci. 2024, 11, e2407094.

| Omics Type | Crop Species | Disease ¹ | Model ² | Type | Accuracy ³ | Reference |
|---|---|---|---|---|---|---|
| Genomics | Rice, wheat | RB, RBSDV, RSB, WB, WSR | RF, SVM, lightGBM, RFC_K, SVC_K, lightGBM_K, DNNGP, DenseNet | Classification | 0.71–0.98 | [17] |
| Genomics | Barley | FHB | GPTransformer, RFCNN, DT | Regression | 0.34–0.62 | [18] |
| Genomics | Wheat | SR | SVM, SVMR | Classification, regression | - | [20] |
| Genomics | Sugarcane | Smut, PRR | Attention network, RF, MLP, modified CNN | Regression | 0.28–0.49 | [21] |
| Genomics | Wheat | SN, PTR, SB | MPDN, UPDN, GPR | Regression | 0.33–0.66 | [22] |
| Genomics | Wheat, Maize | Septoria, GLS | BRNNO | Regression | 0.31–0.87 | [73] |
| Genomics | Wheat | FHB | PDNN, DNN, GPER | Regression | 0.35–0.81 | [74] |
| Phenomics | Wheat | FHB-related traits | One-dimensional CNN | Regression | 0.45–0.55 | [19] |
| Phenomics | Maize | Southern rust | RF, SVM (radial and linear kernel), EN, KNN | Regression | - | [28] |
| Multi-Omics | Rice | SS, BR | DEM | Classification | 0.62–0.70 | [75] |
| Multi-Omics | Rice | SS, BR | CustOmics | Classification | 0.50–0.60 | [75] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, J.; Cheng, Z.; Cao, Y. Artificial Intelligence-Assisted Breeding for Plant Disease Resistance. Int. J. Mol. Sci. 2025, 26, 5324. https://doi.org/10.3390/ijms26115324
