Abstract

Angiogenesis is the growth of new blood vessels from existing vasculature. Visualizing these vessels as a three-dimensional (3D) model is a challenging yet relevant task, as such a model would be of great help to researchers, pathologists, and medical doctors. A branching analysis of the 3D model would further support research and diagnosis. In this paper, we describe a pipeline of computer vision algorithms to visualize and analyze blood vessels in 3D from formalin-fixed paraffin-embedded (FFPE) granulation tissue sections prepared with two different staining methods. First, a U-net neural network is used to segment blood vessels in the tissue images. Second, image registration is used to align consecutive images. Coarse registration with an intensity-based optimization technique, followed by fine-tuning with a neural network based on Spatial Transformers, results in an excellent alignment of the images. Lastly, the corresponding segmented masks of the blood vessels are aligned and interpolated using the results of the image registration, yielding a 3D model that can be visualized. Additionally, a skeletonization algorithm is used to analyze the branching characteristics of the 3D vascular model. In summary, computer vision and deep learning are used to reconstruct, visualize and analyze a 3D vascular model from a set of parallel tissue sections. Our technique opens new perspectives for understanding vascular morphogenesis under different pathophysiological conditions and for its potential diagnostic role.

1. Introduction

Angiogenesis represents the formation of new blood vessels from existing vasculature, involving the migration, growth and differentiation of endothelial cells, which line the inner surface of blood vessels. The process plays an integral part in the proliferative stage of wound healing, in which new blood vessels sprout from pre-existing ones, invade the wound clot and organize into a microvascular network throughout the granulation tissue [1]. Angiogenesis is also a crucial prerequisite for invasive tumor growth and metastasis and constitutes an important point of control in cancer progression [2]. Consequently, it is one of the hallmarks of cancer [3]. The processes by which three-dimensional (3D) vascular networks form during morphogenesis are not yet fully understood, even with the help of powerful hybrid mathematical and computational models [4]. Advancing angiogenesis research therefore calls for innovative approaches, in particular for the 3D visualization and analysis of vascular networks.

Previous studies have demonstrated that objects can be reconstructed as a 3D model from a set of parallel consecutive images through image registration and segmentation [5,6]. In medical research, neurons were reconstructed in 3D to study the relationship between cell morphology and function [7], and a human Schlemm’s canal was visualized in 3D to provide diagnostic information about the eye [8]. Furthermore, the availability of 3D data points enables several other applications in the medical domain. For example, the vascular network was characterized quantitatively by skeletonizing 3D photoacoustic images [9]. Skeletonization of a 3D model also aids path finding in virtual endoscopy and the analysis of 3D pathological sample images [10].

The central idea of this paper is to combine image registration, which aligns the images, with image segmentation, which isolates the blood vessels, in order to reconstruct a 3D model. In the overall pipeline, a pathologist first selects the region of interest (ROI) to be visualized. An image segmentation algorithm then segments the blood vessels as the relevant objects in this ROI. Next, consecutive images are strictly aligned using image registration. The corresponding segmented masks are aligned using the results of the registration algorithm and interpolated to reconstruct and visualize a 3D model. For the analysis, a skeleton model is derived from the reconstructed 3D model, with which the branching of the blood vessels can be analyzed. Section 4 describes the state-of-the-art algorithms used and the reasons for choosing them.

The aim of the present report is to demonstrate a pipeline of computer vision and deep-learning-based algorithms to accurately visualize blood vessels in 3D from a set of consecutive tissue samples.

2. Results

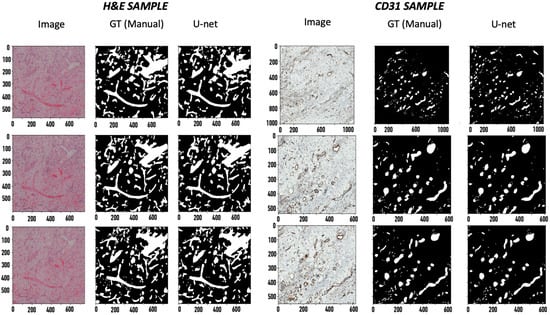

After the U-net segmentation network [11] was trained on 70% of the patched Ground-Truth (GT) data to a validation dice score above 90%, dice scores of 92.1% and 91.7% were observed on the H&E-stained and CD31-stained tissues, respectively (Figure 1). Table 1 compares the dice scores of the U-net segmentation with those of the traditional block-based Otsu method.

Figure 1.

Segmentation results. 1. Image, 2. GT, 3. U-net predicted mask. The x axis applies to the respective column. The values are specified in pixels (magnification: 20× for H&E and 40× for CD31).

Table 1.

Image segmentation results.

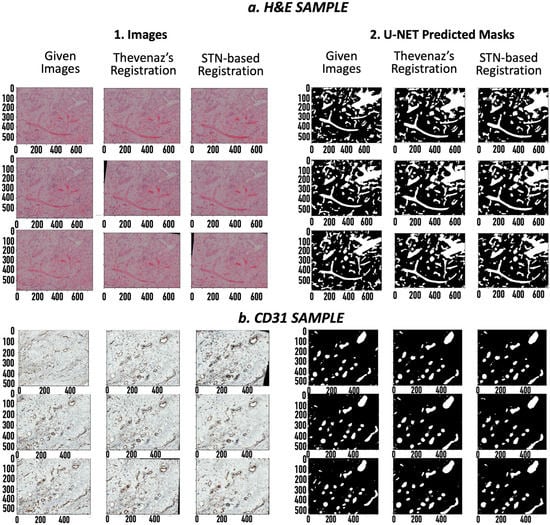

After coarse image registration with Thevenaz’s algorithm [13], the SSIM score improved from 29.2% to 46.3% for the H&E sample and from 9.1% to 14.9% for the CD31 images. The mutual dice scores of the corresponding binary GT masks increased markedly, from 3.8% to 31.8% for the H&E sample and from 0% to 14.3% for the CD31 sample (Table 2). Fine-tuning the registration with the STN-based registration network [14] further increased the SSIM score to 57.0% for H&E and 25.6% for CD31, and the mutual dice score of the corresponding GT masks to 32.4% for H&E and 18.2% for CD31. The dice metrics of the predicted masks mirrored these results for both the H&E (Table 3) and CD31 samples (Table 4). Figure 2 gives a visual overview of the images and their corresponding masks before and after image registration, illustrating the extent of the achieved alignment.

Table 2.

Coarse-to-fine image registration.

Table 3.

Pair-wise image registration for H&E sample.

Table 4.

Pair-wise image registration for CD31 sample.

Figure 2.

Coarse-to-fine registered images: (a1). H&E images (20× magnification), (a2). H&E masks, (b1). CD31 images (40× magnification), (b2). CD31 masks. For each set, left to right: i. Original image/mask, ii. Coarsely registered image/mask using Thevenaz’s algorithm, iii. Finely registered images/masks using spatial transformers. The x axis applies to the respective column. The values are specified in pixels.

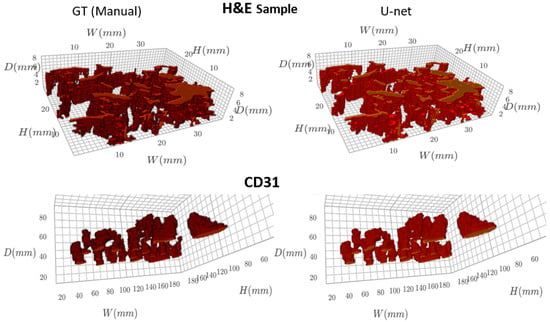

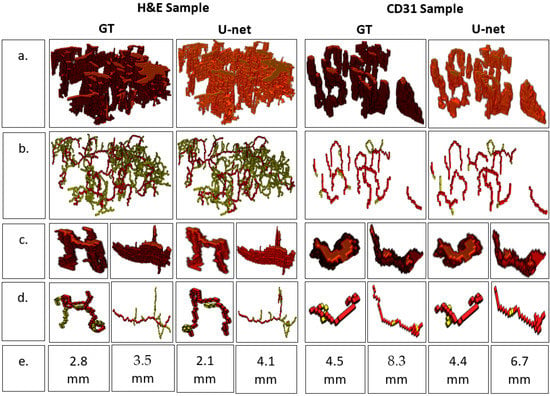

The finely registered binary masks, both GT and predicted, were interpolated to the tissue dimensions using bilinear interpolation [15], and a 3D model was reconstructed (Figure 3). A video of the reconstructed 3D models is available in the Supplementary Materials. The resulting 3D models were skeletonized using Lee’s skeletonization algorithm [16], which made it possible to identify and evaluate the branching statistics, including the length of the main branch of each vessel (Figure 4).

Figure 3.

Visualizing the 3D model of blood vessels (GT and U-net predicted masks).

Figure 4.

Analyzing branch statistics of the 3D models. (a) 3D model, (b) Skeletonized model highlighting the main branch for each individual blood vessel, (c) 3D models of individual blood vessels, (d) 3D skeletonized model of individual blood vessels, (e) Main branch length (mm).

3. Discussion

Image registration and segmentation are the two most important steps of this pipeline, followed by image interpolation and skeletonization. Several semi-supervised image segmentation approaches, including the well-known U-net [11], have been reported in the literature to produce excellent results [17]. In contrast, unsupervised image registration remains an extremely challenging task with much more scope for research. The two techniques used in our work are considered among the most reliable algorithms for coarse-to-fine image registration [18,19], and they resulted in excellent 3D models.

The reliability of image segmentation can easily be estimated with dice scores, which measure the overlap between the automatically predicted annotations and the GT annotations. The observed dice scores of 92.1% and 91.7% on the two samples show the excellent reliability of the deep-learning-based U-net segmentation network. Moreover, the simple block-based Otsu approach scores 25–35% lower in dice score (Table 1), which reinforces the advantage of deep learning algorithms for image segmentation. The reliability of an image registration algorithm is more difficult to estimate, because image registration is unsupervised and registering the images manually to obtain a reference is not feasible. However, the proposed analysis using the structural similarity (SSIM) and the mutual dice score provides a reasonable justification of the reliability of the registration algorithms on the given set of images. This is also evident when viewing the images of the two samples before and after registration, where inconsistencies in rotation and translation were corrected (Table 2 and Figure 2). The increase in SSIM after coarse and fine registration showed that the initially misaligned images were aligned (Table 2). Interestingly, the dice scores of consecutive images provided even stronger evidence of alignment: for consecutive image pairs, they increased from ~30% for the unregistered images to ~80% after registration (Table 3 and Table 4). Since consecutive sections should be as similar to each other as possible, these high dice scores strongly suggest excellent registration results.
Moreover, the mutual dice score, which indicates the overlap across the complete set of images, increased from ~0% for the misaligned images to substantial values (~35% for the H&E samples and ~18% for the CD31 samples). Together, these results provide strong evidence that the images are reliably aligned, which makes the corresponding masks suitable for 3D visualization and yields a meaningful 3D model of the blood vessels.

The main purpose of this work is to introduce a reliable pipeline, based on computer vision and deep learning algorithms, to reconstruct a 3D model of blood vessels from a set of parallel slides of granulation tissue. Reconstructing vessels in 3D manually is very tedious, time-consuming and labor-intensive. An automatic approach would help pathologists to study structural defects of the vascular network and of angiogenesis, improving both research and diagnostic capabilities.

The successful reconstruction of 3D models from two independent tissue samples with completely different staining techniques (H&E and CD31) suggests that the pipeline is not restricted to a particular type of staining. Similarly, the type of tissue should not matter as long as parallel slides of the tissue can be obtained. For example, this pipeline could be used to reconstruct blood vessels from endomyocardial tissue in several heart diseases [20,21]. In this context, reusing heart tissues from biobanks [22] for 3D reconstruction could be a future research approach. Furthermore, our pipeline could also be used for the 3D reconstruction of tumor vessels in cancer patients to better understand the morphogenesis of the vasculature in invasive tumor masses.

The 3D model of blood vessels could also be used in various applications analyzing normal and impaired wound healing. In addition, it could be used to verify mathematical models describing the geometry of blood vessels in angiogenesis [4].

A branching analysis of the 3D model was possible by post-processing it with the skeletonization algorithm devised by Lee [16]. The skeletal 3D model was analyzed using the Skan library [23], which identifies the individual branches of a skeletal model. As a result, the individual blood vessels could be visualized separately, and the lengths of their branches could be estimated (Figure 4).

This approach has limitations. First, obtaining the stained images involves tissue-destructive histological methods. Second, the tissues need to be stained carefully for a reliable 3D reconstruction; otherwise, the algorithm could converge to a local minimum and produce false results. This can be overcome by manual intervention, that is, by choosing selected regions on which to run the algorithms. Lastly, the mutual dice score relies on the assumption that the blood vessels run continuously through the stack. For a large number of consecutive sections, the vessels could gradually leave the considered frame. In such cases, computing the mutual dice score over overlapping batches of consecutive images could give a more reliable assessment of the registration.

For future research, Generative Adversarial Networks (GANs) [24] could be investigated for even better registration results [25]. However, stable convergence of GANs is difficult and time-consuming to achieve, even with high-performance computing hardware, and GANs carry the risk of introducing undesirable artifacts. The bilinear interpolation currently used gives reasonable accuracy for visualizing the model; nonetheless, deep-learning-based interpolation could further improve the visualization and result in an even better 3D model. Another area of focus is the use of vision-based approaches in radiomics. There has already been some progress in applying artificial intelligence to radiomics, a novel approach to precision medicine that uses non-invasive, low-cost and fast multimodal medical images [26]. The random forests algorithm was applied to predict prognostic factors of breast cancer by integrating tumor heterogeneity and angiogenesis properties on MRI [27]. Low-field nuclear magnetic resonance (NMR) relaxometry, aided by machine learning, could rapidly and accurately detect objects [28], and the speed of such algorithms has been improved further using low-field NMR relaxometry [29]. Although results obtained on non-invasive, low-cost images would not be as reliable as those obtained through invasive methods, it would be interesting in the future to explore the data available from radiomics and the applications of vision-based algorithms to them.

4. Materials and Methods

4.1. Tissue Preparation and Data Acquisition

Our experiments were performed on two samples of human granulation tissue. The first sample was a set of eight consecutive H&E-stained sections of granulation tissue, and the second a set of ten consecutive CD31-stained sections. The sections were 4 µm thick for the H&E sample and 3 µm thick for the CD31 sample. For both staining methods, the fresh tissue was first fixed in 5% formalin and then embedded in paraffin. The formalin-fixed paraffin-embedded (FFPE) tissue blocks were cut into consecutive sections and mounted onto glass slides. For H&E staining, the sections were deparaffinized, rehydrated and then stained with Mayer’s hematoxylin and eosin. Immunohistochemical staining for CD31 was performed on a BenchMark Ultra autostainer (Ventana Medical Systems, Oro Valley, AZ, USA) using a mouse anti-CD31 antibody (1:40; clone JC70A; Dako, Glostrup, Denmark). After staining, all slides were covered with glass coverslips and scanned as whole slide images (WSI) with the PreciPoint M8 slide scanner (PreciPoint GmbH, Freising, Germany) using a 20× objective (Olympus UPlan FLN 20×, Olympus, Tokyo, Japan). The resolution at maximum zoom was 0.28 µm per pixel.

4.2. Region of Interest (ROI) Selection

The acquired whole slide images were stored in OMERO [30], an image server for viewing images and storing annotations. For each sample, a particularly relevant ROI, in which the vessels were to be visualized as a 3D model, was chosen in each consecutive image. The chosen ROIs measured approximately 0.5 × 0.4 mm for the H&E sample and 1.3 × 1.2 mm for the CD31 sample. The blood vessels inside the ROI were manually annotated by a medical expert; these manual annotations are referred to as Ground-Truth (GT) data. The GT annotations were used to generate GT masks for training the semi-supervised segmentation algorithm, to compare the statistics of the 3D models reconstructed from GT data and from algorithm-predicted data, and to evaluate several metrics of algorithm performance. Consequently, two datasets were generated, which served as the inputs to the pipeline for 3D visualization and analysis.

4.3. Image Segmentation

Image segmentation is an approach used to partition an image into multiple image segments. The popular traditional approaches, such as Otsu’s method [12], k-Means clustering [31] and the watershed algorithm [32], involve the design of complex hand-crafted features. Although these approaches are unsupervised and computationally efficient, the design of specific hand-crafted features requires extensive pre-processing, and the resulting accuracy of the segmented models is relatively low [33].
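The block-based Otsu baseline compared in Table 1 can be sketched as follows: each tile of the image receives its own Otsu threshold, chosen to maximize the between-class variance of the tile’s intensity histogram. This is a minimal NumPy sketch, not the authors’ implementation; the block size, the `min_contrast` rule for skipping flat background tiles and the assumption that vessels are darker than the background (`dark_foreground`) are all hypothetical choices.

```python
import numpy as np


def otsu_threshold(values, nbins=256):
    """Otsu's threshold: the bin centre that maximizes the between-class variance."""
    hist, edges = np.histogram(values, bins=nbins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                  # pixel count at or below each candidate threshold
    w1 = w0[-1] - w0                      # pixel count above it
    s0 = np.cumsum(hist * centers)        # cumulative intensity sum of the lower class
    mu0 = np.divide(s0, w0, out=np.zeros_like(s0), where=w0 > 0)
    mu1 = np.divide(s0[-1] - s0, w1, out=np.zeros_like(s0), where=w1 > 0)
    between = w0 * w1 * (mu0 - mu1) ** 2  # proportional to the between-class variance
    return centers[np.argmax(between)]


def block_otsu(image, block=64, min_contrast=0.05, dark_foreground=True):
    """Threshold each block x block tile of a grayscale image with its own Otsu threshold."""
    mask = np.zeros(image.shape, dtype=bool)
    for r in range(0, image.shape[0], block):
        for c in range(0, image.shape[1], block):
            tile = image[r:r + block, c:c + block]
            if np.ptp(tile) < min_contrast:
                continue  # nearly flat tile: treated as background (assumption)
            t = otsu_threshold(tile.ravel())
            mask[r:r + block, c:c + block] = tile <= t if dark_foreground else tile > t
    return mask
```

Thresholding per block rather than globally lets the threshold follow slow staining and illumination gradients across the ROI, but it still relies only on intensity, which is one reason such methods fall behind a learned U-net.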

In contrast, recent advances in deep learning have provided frameworks to segment images with very high accuracy [34]. With improved deep learning architectures using residual layers [35] and inception layers [36], deep-learning-based segmentation models have progressed significantly [17]. A popular architecture used extensively in medical research is the U-net [11], which uses deconvolution layers and skip connections to improve segmentation accuracy [37].

Therefore, a U-net-based network was chosen to segment the blood vessels in our research. The GT data were used to train this semi-supervised algorithm. The dataset was converted into patches of 224 × 224 pixels, which were split into a 70% training set and a 30% validation set. The network was trained with an AdamW optimizer [38] and a OneCycleLR scheduler [39] by minimizing the cross-entropy loss. The trained model was then used to segment the blood vessels in the given dataset.
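The data preparation described above (224 × 224 patches, 70/30 split) can be sketched in a few lines of NumPy. The text does not say how patches at the image borders were handled or how the split was randomized; this sketch assumes non-overlapping patches, discards incomplete border patches and uses a seeded random permutation, all of which are illustrative assumptions.

```python
import numpy as np


def extract_patches(image, mask, size=224):
    """Cut an image and its GT mask into non-overlapping size x size patch pairs.

    Incomplete patches at the right and bottom borders are discarded (assumption).
    """
    pairs = []
    for r in range(0, image.shape[0] - size + 1, size):
        for c in range(0, image.shape[1] - size + 1, size):
            pairs.append((image[r:r + size, c:c + size], mask[r:r + size, c:c + size]))
    return pairs


def train_val_split(items, train_fraction=0.7, seed=0):
    """Randomly split a list of patch pairs into training and validation subsets."""
    order = np.random.default_rng(seed).permutation(len(items))
    n_train = int(round(train_fraction * len(items)))
    train = [items[i] for i in order[:n_train]]
    val = [items[i] for i in order[n_train:]]
    return train, val
```

In PyTorch, the training setup named in the text would typically combine `torch.optim.AdamW`, `torch.optim.lr_scheduler.OneCycleLR` and `torch.nn.CrossEntropyLoss`; the hyperparameters used are not stated here.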

4.4. Image Registration

Image registration is a process in which different images are transformed into the same coordinate system by matching their contents [40]. Transformation models broadly fall into linear transformations (rotation, scaling, translation and shear) and non-linear transformations (elastic, nonrigid or deformable) [41,42]. Image registration is used extensively in medical research for several imaging techniques, such as X-ray, CT and MRI [43]. Non-linear geometric distortions, noisy image data and computational complexity make image registration one of the most challenging tasks in computer vision [44].

A variety of classical algorithms can be used to perform image registration. They are broadly classified into feature-based methods and area-based methods [18]. Feature-based methods extract salient features, such as edges or corners, and align images under the assumption that these features stay at fixed positions throughout the experiment [44]. Area-based methods match image intensities using metrics such as cross-correlation, without performing any structural analysis [32]. An area-based method by Thevenaz et al., which maximizes mutual information using the Marquardt-Levenberg method, has become very popular in classical image registration for biomedical research, especially because of its computationally efficient hierarchical search strategy based on an image pyramid [13].
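Mutual information, the similarity criterion named above, can be estimated from the joint intensity histogram of two images: it is high when the intensity of one image predicts the intensity of the other. The sketch below only evaluates the criterion; it does not reproduce the pyramidal Marquardt-Levenberg optimizer of Thevenaz et al., and the bin count is an arbitrary assumption.

```python
import numpy as np


def mutual_information(a, b, bins=32):
    """Mutual information (in nats) of two equally sized images via their joint histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    pxy = joint / joint.sum()               # joint probability p(x, y)
    px = pxy.sum(axis=1, keepdims=True)     # marginal p(x), shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)     # marginal p(y), shape (1, bins)
    nz = pxy > 0                            # skip empty cells to avoid log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))
```

An area-based registration method would search for the transformation of the moving image that maximizes this value against the fixed image.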

Because of this popularity, Thevenaz’s algorithm [13] was used in this work to coarsely register the dataset and to remove inconsistencies caused by translation, scaling, rotation or shear. The mutual information between consecutive image pairs was maximized, resulting in a set of affine matrices, and the images were then transformed with these matrices using homogeneous coordinates. To ensure a reliable coarse alignment and avoid convergence to a local minimum, it may be necessary to select suitable rectangular regions of each image pair beforehand. After coarse registration, all images are aligned to a single coordinate system.
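Pairwise registration yields one affine matrix per consecutive image pair; to bring every slice into a single coordinate system, these matrices can be chained. The sketch below assumes that `pairwise[i]` maps slice i+1 into the frame of slice i, uses (x, y) point coordinates and builds 3 × 3 homogeneous matrices; the helper names are illustrative, not taken from the authors’ code.

```python
import numpy as np


def affine_matrix(angle_rad=0.0, scale=1.0, shear=0.0, tx=0.0, ty=0.0):
    """3x3 homogeneous matrix: scaling, then x-shear, then rotation, then translation."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    rotation = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    scaling = np.diag([scale, scale, 1.0])
    shearing = np.array([[1.0, shear, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
    translation = np.array([[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]])
    return translation @ rotation @ shearing @ scaling


def chain_to_reference(pairwise):
    """Given pairwise[i] mapping slice i+1 into slice i, return matrices mapping each slice into slice 0."""
    cumulative = [np.eye(3)]
    for m in pairwise:
        cumulative.append(cumulative[-1] @ m)
    return cumulative


def transform_points(matrix, xy):
    """Apply a homogeneous 3x3 matrix to an (N, 2) array of (x, y) points."""
    homogeneous = np.hstack([xy, np.ones((len(xy), 1))])
    return (homogeneous @ matrix.T)[:, :2]
```

When resampling whole images rather than points, note that `scipy.ndimage.affine_transform` expects the inverse mapping (output coordinates to input coordinates) in (row, column) order, so the matrices would have to be inverted and reordered accordingly.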

4.5. Image Registration Finetuning

In the last few years, deep learning has contributed significantly to more accurate image registration through unsupervised transformation estimation methods, especially for predicting deformable transformation models [19]. In particular, Kuang et al. [45] demonstrated excellent results on public datasets using a framework inspired by convolutional neural networks (CNNs) and Spatial Transformer Networks (STNs) [14] for deformable image registration, trained with a normalized Cross-Correlation (NCC) loss function. Yan et al. [46] showed that a network based on Generative Adversarial Networks (GANs) [24] could estimate rigid transformations. Although GANs are effective for image registration, their training is very unstable and they are prone to producing synthetic artifacts.

Consequently, to correct non-linear geometric distortions, an unsupervised deep-learning-based registration network with a U-net architecture [11] and STNs [14] was used to fine-tune the coarsely registered images. Pairs of consecutive images were passed through the network for deformable registration within the VoxelMorph framework [47]. The trained spatial transformers predict flow vectors, which describe the displacement of each pixel in the moving image. The network was trained with an AdamW optimizer and a OneCycleLR scheduler to speed up convergence. A bidirectional loss function based on the NCC loss [47], which encourages accurate forward and backward flow vectors, was minimized, together with a gradient loss that penalizes large spatial variations of the flow vectors. After training, the forward flow vectors were predicted and applied to the coarsely registered stack of images and the corresponding masks, resulting in a finely registered dataset.
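The loss terms described above can be written down in a few lines of NumPy. This is a sketch only: it uses a global NCC instead of the windowed local NCC that VoxelMorph implementations typically use, the weight `lam` is a hypothetical value, and in training the same terms would be computed on framework tensors so that gradients reach the network.

```python
import numpy as np


def ncc(a, b, eps=1e-8):
    """Global normalized cross-correlation in [-1, 1]."""
    a = a - a.mean()
    b = b - b.mean()
    return float((a * b).sum() / (np.sqrt((a * a).sum() * (b * b).sum()) + eps))


def gradient_loss(flow):
    """Mean squared finite difference of a (2, H, W) displacement field; penalizes non-smooth flows."""
    d_rows = np.diff(flow, axis=1)
    d_cols = np.diff(flow, axis=2)
    return float((d_rows ** 2).mean() + (d_cols ** 2).mean())


def bidirectional_loss(fixed, moving, moved, fixed_warped, flow_fwd, flow_bwd, lam=0.01):
    """Bidirectional registration loss with a hypothetical smoothness weight lam.

    moved: moving image warped towards fixed with flow_fwd.
    fixed_warped: fixed image warped towards moving with flow_bwd.
    """
    similarity = -ncc(fixed, moved) - ncc(moving, fixed_warped)
    smoothness = gradient_loss(flow_fwd) + gradient_loss(flow_bwd)
    return similarity + lam * smoothness
```

The similarity terms reward well-aligned image pairs in both directions, while the gradient term keeps the predicted flow fields smooth, which discourages physically implausible folding of the tissue.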

4.6. Interpolation and 3D Visualization

Image segmentation predicts the blood vessels as binary masks, and image registration yields the affine matrices and flow vectors that transform and align the given set of unaligned images. Applying the same transformations to the corresponding binary masks produces a sequence of aligned masks, that is, a set of 3D points belonging to the blood vessels.
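Applying a predicted flow field to an image or mask works the way a spatial transformer samples its input: each output pixel reads the moving image at its own position plus the predicted displacement. The sketch assumes a (2, H, W) flow holding row and column displacements and clamps samples at the border; using nearest-neighbour sampling for binary masks is our assumption (bilinear sampling followed by re-thresholding would be an alternative).

```python
import numpy as np


def warp(image, flow, order=1):
    """Warp a 2-D array with a dense displacement field of shape (2, H, W).

    output[r, c] samples image at (r + flow[0, r, c], c + flow[1, r, c]).
    order=1 uses bilinear sampling (images); order=0 uses nearest neighbour (binary masks).
    """
    h, w = image.shape
    rows, cols = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    r = np.clip(rows + flow[0], 0, h - 1)
    c = np.clip(cols + flow[1], 0, w - 1)
    if order == 0:
        return image[np.rint(r).astype(int), np.rint(c).astype(int)]
    r0 = np.floor(r).astype(int)
    c0 = np.floor(c).astype(int)
    r1 = np.minimum(r0 + 1, h - 1)
    c1 = np.minimum(c0 + 1, w - 1)
    fr = r - r0  # fractional offsets used as bilinear weights
    fc = c - c0
    top = image[r0, c0] * (1 - fc) + image[r0, c1] * fc
    bottom = image[r1, c0] * (1 - fc) + image[r1, c1] * fc
    return top * (1 - fr) + bottom * fr
```

Because the same flow field is applied to the image and to its mask, the mask stays in register with the image it was segmented from.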

Interpolation estimates new data points within the range of a discrete set of known data points [48]. Nearest-neighbor interpolation [49] introduces significant distortion into the model. Bilinear interpolation [15] gives a better approximation of the 3D model, but image contours become blurred and high-frequency components fade. Bicubic interpolation [50] requires considerably more computation [51].

As a compromise, bilinear interpolation was chosen for our pipeline. The set of 3D points is interpolated to the original dimensions of the tissue using bilinear interpolation [15], resulting in the final 3D model. A 3D model is obtained for both the manually annotated (GT) masks and the predicted masks. As a postprocessing step, small irrelevant objects in the 3D models are filtered out. The final models can be visualized and rendered using visualization libraries such as K3D [52] or 3D Slicer [53].
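Because the sections are several micrometres thick while the in-plane pixels are much smaller, the aligned masks have to be resampled along the stacking axis before they form a volume with roughly isotropic voxels. The sketch below shows one plausible reading of this step: linear interpolation between neighbouring sections followed by re-binarization. The defaults (4 µm section thickness, 0.28 µm pixel size, threshold 0.5) are taken from or inspired by Section 4.1 as assumptions; the ROI may have been processed at a different resolution, and the CD31 sections were 3 µm thick.

```python
import numpy as np


def interpolate_stack(masks, section_um=4.0, pixel_um=0.28, threshold=0.5):
    """Linearly interpolate a (n, H, W) stack of aligned binary masks along z.

    The output z spacing approximately matches the in-plane pixel size, and the
    interpolated volume is re-binarized at `threshold`.
    """
    masks = np.asarray(masks, dtype=float)
    n = masks.shape[0]
    if n < 2:
        raise ValueError("need at least two sections")
    z_old = np.arange(n) * section_um
    n_new = int(round(z_old[-1] / pixel_um)) + 1
    z_new = np.linspace(0.0, z_old[-1], n_new)
    lower = np.clip(np.searchsorted(z_old, z_new, side="right") - 1, 0, n - 2)
    t = ((z_new - z_old[lower]) / section_um)[:, None, None]  # weight of the upper section
    volume = masks[lower] * (1.0 - t) + masks[lower + 1] * t
    return volume >= threshold
```

Re-binarizing at 0.5 places the vessel boundary halfway between two sections where a vessel appears in one mask but not the next, which is the same blurring of contours that the text attributes to bilinear interpolation.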

4.7. Skeletonization and Analysis

Skeletonization reduces binary objects to a one-pixel-wide representation. This compact skeleton is useful for analyzing the topology and structure of the object. Several skeletonization approaches exist in the literature [54]. Blum and Nagel [55] laid the foundation of skeletonization with Blum’s grassfire transformation, which finds the medial axis of a shape. After several adaptations, Lee et al. [16] proposed a popular digital approach that simulates Blum’s algorithm through a constrained iterative erosion process.

To visualize the structure and course of the blood vessels, a 3D skeletal model of the 3D blood vessel model was obtained using Lee’s skeletonization algorithm [16] from the scikit-image library [56]. With the help of Skan [23], a Python library for skeleton analysis, the branch statistics of the skeletal model were computed. These included the length of the main branch and the number and lengths of the sub-branches of each blood vessel. A branched skeletal 3D model could also be reconstructed for visualization.
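The core idea behind such branch statistics is the number of skeleton neighbours of each voxel: endpoints have one neighbour and junctions three or more, and the summed distances between adjacent voxels give a length. The NumPy sketch below illustrates this on a boolean 3D skeleton; it is not the Skan algorithm. In practice, the skeleton would come from a call such as `skimage.morphology.skeletonize(volume, method="lee")`, and per-branch statistics from `skan.summarize(skan.Skeleton(skeleton))`. Two simplifications should be kept in mind: junctions in 26-connectivity usually appear as small clusters of voxels, and diagonal shortcuts inside such clusters slightly inflate the crude length estimate.

```python
import numpy as np
from itertools import product

# 26-connected neighbour offsets; HALF keeps one offset of each +/- pair.
OFFSETS = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]
HALF = [d for d in OFFSETS if d > (0, 0, 0)]


def neighbour_count(skeleton):
    """Number of 26-connected skeleton neighbours of each voxel (0 outside the skeleton)."""
    sk = np.pad(np.asarray(skeleton, dtype=np.int32), 1)  # zero border so np.roll never wraps voxels
    counts = np.zeros_like(sk)
    for d in OFFSETS:
        counts += np.roll(sk, d, axis=(0, 1, 2))
    return (counts * sk)[1:-1, 1:-1, 1:-1]


def skeleton_summary(skeleton, spacing=(1.0, 1.0, 1.0)):
    """Endpoint and junction voxel counts and a rough total length in units of `spacing`."""
    deg = neighbour_count(skeleton)
    sk = np.pad(np.asarray(skeleton, dtype=bool), 1)
    length = 0.0
    for d in HALF:
        pairs = np.logical_and(sk, np.roll(sk, d, axis=(0, 1, 2))).sum()
        step = float(np.sqrt(np.sum((np.array(d) * np.array(spacing)) ** 2)))
        length += pairs * step
    return {
        "endpoints": int(np.sum(deg == 1)),
        "junction_voxels": int(np.sum(deg >= 3)),
        "length": length,
    }
```

Passing the physical voxel size as `spacing` (for example, in millimetres) converts the length into the units used for the main branch length in Figure 4.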

4.8. Metrics

The results of the image registration were analyzed using the Structural Similarity Index Measure (SSIM) [57], which predicts the perceived quality of an image relative to a reference image. SSIM treats image degradation as a perceived change in structural information, together with luminance and contrast. An SSIM of 0 indicates no structural similarity, and a value of 1 is reached only for two identical images. The SSIM was determined for pairs of images as well as for the complete sample, before and after registration.
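For two images x and y with means μx, μy, variances σx², σy² and covariance σxy, SSIM combines the luminance, contrast and structure comparisons as ((2μxμy + C1)(2σxy + C2)) / ((μx² + μy² + C1)(σx² + σy² + C2)), with small constants C1 = (0.01 L)² and C2 = (0.03 L)² for a data range L. The sketch below evaluates this formula once over the whole image; standard implementations such as `skimage.metrics.structural_similarity` instead compute it in local windows and average the result, so the two values will generally differ.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM evaluated once on global image statistics (simplified, no sliding window)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    numerator = (2 * mx * my + c1) * (2 * cov + c2)
    denominator = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(numerator / denominator)
```

Because of the covariance term, SSIM can also become negative for anti-correlated images, so 0 is not a hard lower bound.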

The image segmentation was evaluated by calculating the Sørensen-Dice coefficient [58,59], or dice score, of the segmented vessels. The dice score measures the overlap between two binary masks, so the GT vessels can be compared with the predicted vessels. A dice score of 100% means that the predicted mask perfectly overlaps with the target mask. Mathematically, it is defined as:

$$\mathrm{Dice}(X, Y) = \frac{2\,|X \cap Y|}{|X| + |Y|}$$
where X and Y are the two masks to be compared.
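The dice score translates directly into NumPy on boolean masks. How two empty masks should be scored is not specified in the text; returning 1.0 in that case is an assumed convention.

```python
import numpy as np


def dice(x, y):
    """Sørensen-Dice coefficient of two binary masks, in [0, 1]."""
    x = np.asarray(x, dtype=bool)
    y = np.asarray(y, dtype=bool)
    total = int(x.sum() + y.sum())
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (assumed convention)
    return 2.0 * int(np.logical_and(x, y).sum()) / total
```

For example, two masks of four pixels each that share two pixels give 2 · 2 / (4 + 4) = 0.5, that is, a dice score of 50%.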

The dice score can also be used to evaluate image registration by computing it between two consecutive binary masks after registration. Similarly, the dice score can be evaluated across the complete sequence, using the following expression:

where X1, X2, …, Xn are the binary masks for each image in an image stack. A measure of overlap between the vessels was estimated between the parallel images in an image stack.
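The expression for the mutual dice score is not reproduced here. A natural generalization of the pairwise dice score, assumed in the sketch below and not confirmed as the authors’ exact formula, is n · |X1 ∩ X2 ∩ … ∩ Xn| / (|X1| + |X2| + … + |Xn|), which reduces to the ordinary dice score for n = 2. The sketch also computes the score over overlapping windows of consecutive masks, as suggested in the Discussion for long stacks in which vessels may leave the frame; the window size is a hypothetical parameter.

```python
import numpy as np


def mutual_dice(masks):
    """Assumed n-mask generalization of Dice: n * |X1 ∩ ... ∩ Xn| / (|X1| + ... + |Xn|)."""
    masks = np.asarray(masks, dtype=bool)
    n = masks.shape[0]
    total = int(masks.sum())
    if total == 0:
        return 1.0  # all masks empty (assumed convention)
    common = int(np.logical_and.reduce(masks, axis=0).sum())
    return n * common / total


def windowed_mutual_dice(masks, window=3):
    """Mutual dice over overlapping batches of `window` consecutive masks."""
    masks = np.asarray(masks, dtype=bool)
    return [mutual_dice(masks[i:i + window]) for i in range(len(masks) - window + 1)]
```

Because the intersection can only shrink as more masks are added, this score drops quickly with stack length even for well-aligned sections, which is consistent with the mutual scores being much lower than the pairwise ones.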

5. Conclusions

In conclusion, a pipeline of vision-based algorithms, mainly comprising image registration and segmentation, is proposed to visualize and analyze blood vessels as a 3D model. The branching of the vasculature could be analyzed using a skeletonization algorithm on the resulting 3D model. Computer vision and deep learning have great potential to accelerate medical research. At present, there is a large gap between current developments and the potential of vision-based algorithms. In future studies, we aim to narrow this gap and accelerate research in angiogenesis using 3D models of blood vessels.

Supplementary Materials

The following supporting information can be downloaded at: https://figshare.com/collections/Data_Angeogenesis_3D_Reconstruction/6339176 (accessed on 20 April 2023).

Author Contributions

Conceptualization: V.R., C.B., S.S. (Samuel Sossalla), C.B.W.; methodology: V.R., P.A., A.T., J.D.; software: V.R., A.A., H.B.; validation: T.N., C.B., S.S. (Samuel Sossalla), V.H.S.; formal analysis: V.R., R.S., A.A.; investigation: V.R.; resources: R.S., F.G.; data curation: R.S., L.W.; writing (original draft preparation): V.R., L.W., H.B.; writing (review & editing): T.N., A.A., H.B., F.G., L.W., S.S. (Stephan Schreml), J.D., V.H.S., A.M., P.A., A.T., M.R., C.B.; visualization: V.R., A.A., H.B., L.W.; supervision: C.B.; project administration: T.N., L.W., M.K., O.T., M.R.; funding acquisition: C.B., T.N., M.K., O.T., M.R. All authors have read and agreed to the published version of the manuscript.

Funding

This project was generously funded by the European Union, Ziel ETZ 2014–2020 (Ref.: Interreg 352).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of the University of Regensburg (22-2930-104 (12 May 2022) and 22-2931_1-101 (10 November 2022)).

Informed Consent Statement

Not Applicable.

Data Availability Statement

The data presented in this study are openly available in the Supplementary Materials.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

3D: three-dimensional, FFPE: formalin-fixed paraffin-embedded, H&E: hematoxylin & eosin, CNN: convolutional neural network, STN: Spatial Transformer Network, GAN: Generative Adversarial Network, GT: Ground-Truth, SSIM: Structural Similarity Index Measure, NCC: normalized Cross-Correlation, NMR: nuclear magnetic resonance, ROI: Region of Interest.

References

- Honnegowda, T.M.; Kumar, P.; Udupa, E.G.; Kumar, S.; Kumar, U.; Rao, P. Role of angiogenesis and angiogenic factors in acute and chronic wound healing. Plast. Aesthetic Res. 2015, 2, 239–242. [Google Scholar] [CrossRef]

- Folkman, J. Role of angiogenesis in tumor growth and metastasis. Semin. Oncol. 2002, 29, 15–18. [Google Scholar] [CrossRef]

- Hanahan, D. Hallmarks of Cancer: New Dimensions. Cancer Discov. 2022, 12, 31–46. [Google Scholar] [CrossRef]

- Guerra, A.; Belinha, J.; Jorge, R.N. Modelling skin wound healing angiogenesis: A review. J. Theor. Biol. 2018, 459, 1–17. [Google Scholar] [CrossRef]

- Pollefeys, M.; Koch, R.; Vergauwen, M.; Van Gool, L. Automated reconstruction of 3D scenes from sequences of images. ISPRS J. Photogramm. Remote Sens. 2001, 55, 251–267. [Google Scholar] [CrossRef]

- Gallo, A.; Muzzupappa, M.; Bruno, F. 3D reconstruction of small sized objects from a sequence of multi-focused images. J. Cult. Herit. 2014, 15, 173–182. [Google Scholar] [CrossRef]

- Carlbom, I.; Terzopoulos, D.; Harris, K.M. Computer-assisted registration, segmentation, and 3D reconstruction from images of neuronal tissue sections. IEEE Trans. Med. Imaging 1994, 13, 351–362. [Google Scholar] [CrossRef]

- Tom, M.; Ramakrishnan, V.; van Oterendorp, C.; Deserno, T. Automated Detection of Schlemm’s Canal in Spectral-Domain Optical Coherence Tomography. In Medical Imaging 2015: Computer-Aided Diagnosis; SPIE: Bellingham, WA, USA, 2015. [Google Scholar]

- Meiburger, K.M.; Nam, S.Y.; Chung, E.; Suggs, L.J.; Emelianov, S.Y.; Molinari, F. Skeletonization algorithm-based blood vessel quantification using in vivo 3D photoacoustic imaging. Phys. Med. Biol. 2016, 61, 7994–8009. [Google Scholar] [CrossRef] [PubMed]

- Toriwaki, J.-i.; Mori, K. Distance transformation and skeletonization of 3D pictures and their applications to medical images. In Digital and Image Geometry: Advanced Lectures; Springer: Berlin/Heidelberg, Germany, 2002; pp. 412–429. [Google Scholar]

- Swedlow, J. Open Microscopy Environment: OME Is a Consortium of Universities, Research Labs, Industry and Developers Producing Open-Source Software and Format Standards for Microscopy Data. 2020. Available online: https://discovery.dundee.ac.uk/en/publications/open-microscopy-environment-ome-is-a-consortium-of-universities-r (accessed on 20 April 2023).

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Li, Y.; Wu, H. A Clustering Method Based on K-Means Algorithm. Phys. Procedia 2012, 25, 1104–1109.

- Pratt, W.K. Introduction to Digital Image Processing; CRC Press: Boca Raton, FL, USA, 2013.

- Zaitoun, N.M.; Aqel, M.J. Survey on image segmentation techniques. Procedia Comput. Sci. 2015, 65, 797–806.

- O’Mahony, N.; Campbell, S.; Carvalho, A.; Harapanahalli, S.; Hernandez, G.V.; Krpalkova, L.; Riordan, D.; Walsh, J. Deep learning vs. traditional computer vision. In Advances in Computer Vision: Proceedings of the 2019 Computer Vision Conference (CVC), Las Vegas, NV, USA, 2–3 May 2019; Volume 11, pp. 128–144.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Lateef, F.; Ruichek, Y. Survey on semantic segmentation using deep learning techniques. Neurocomputing 2019, 338, 321–348.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Hesamian, M.H.; Jia, W.; He, X.; Kennedy, P. Deep learning techniques for medical image segmentation: Achievements and challenges. J. Digit. Imaging 2019, 32, 582–596.

- Loshchilov, I.; Hutter, F. Fixing Weight Decay Regularization in Adam. 2017. Available online: https://openreview.net/forum?id=rk6qdGgCZ (accessed on 20 April 2023).

- Smith, L.N.; Topin, N. Super-convergence: Very fast training of neural networks using large learning rates. In Proceedings of the Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications, Baltimore, MD, USA, 15–17 April 2019; pp. 369–386.

- Szeliski, R. Image alignment and stitching. In Handbook of Mathematical Models in Computer Vision; Springer: New York, NY, USA, 2006; pp. 273–292.

- Sotiras, A.; Davatzikos, C.; Paragios, N. Deformable medical image registration: A survey. IEEE Trans. Med. Imaging 2013, 32, 1153–1190.

- Song, G.; Han, J.; Zhao, Y.; Wang, Z.; Du, H. A review on medical image registration as an optimization problem. Curr. Med. Imaging 2017, 13, 274–283.

- Maintz, J.A.; Viergever, M.A. A survey of medical image registration. Med. Image Anal. 1998, 2, 1–36.

- Adel, E.; Elmogy, M.; Elbakry, H. Image stitching based on feature extraction techniques: A survey. Int. J. Comput. Appl. 2014, 99, 1–8.

- Zitova, B.; Flusser, J. Image registration methods: A survey. Image Vis. Comput. 2003, 21, 977–1000.

- Thevenaz, P.; Ruttimann, U.E.; Unser, M. A pyramid approach to subpixel registration based on intensity. IEEE Trans. Image Process. 1998, 7, 27–41.

- Chen, X.; Diaz-Pinto, A.; Ravikumar, N.; Frangi, A.F. Deep learning in medical image registration. Prog. Biomed. Eng. 2021, 3, 012003.

- Kuang, D.; Schmah, T. FAIM–a ConvNet method for unsupervised 3D medical image registration. In Proceedings of the Machine Learning in Medical Imaging: 10th International Workshop, MLMI 2019, Held in Conjunction with MICCAI 2019, Shenzhen, China, 13 October 2019; pp. 646–654.

- Jaderberg, M.; Simonyan, K.; Zisserman, A. Spatial transformer networks. Adv. Neural Inf. Process. Syst. 2015, 28.

- Yan, P.; Xu, S.; Rastinehad, A.R.; Wood, B.J. Adversarial image registration with application for MR and TRUS image fusion. In Proceedings of the Machine Learning in Medical Imaging: 9th International Workshop, MLMI 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 16 September 2018; pp. 197–204.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Dalca, A.; Rakic, M.; Guttag, J.; Sabuncu, M. Learning conditional deformable templates with convolutional networks. Adv. Neural Inf. Process. Syst. 2019, 32.

- Steffensen, J. Interpolation; Courier Corporation: North Chelmsford, MA, USA, 2006.

- Yonghong, J. Digital Image Processing, 2nd ed.; Prentice Hall Press: Hoboken, NJ, USA, 2010.

- Wang, S.; Yang, K. An image scaling algorithm based on bilinear interpolation with VC++. Tech. Autom. Appl. 2008, 27, 44–45.

- Feng, J.-F.; Han, H.-J. Image enlargement based on non-uniform B-spline interpolation algorithm. J. Comput. Appl. 2010, 30, 82.

- Han, D. Comparison of commonly used image interpolation methods. In Proceedings of the 2nd International Conference on Computer Science and Electronics Engineering (ICCSEE 2013), Hangzhou, China, 22–23 March 2013; pp. 1556–1559.

- Saltar, G.; Aiyer, A.; Meneveau, C. Developing Notebook-based Flow Visualization and Analysis Modules for Computational Fluid Dynamics. In Proceedings of the APS Division of Fluid Dynamics Meeting Abstracts, Seattle, WA, USA, 23–26 November 2019; p. NP05.021.

- Kikinis, R.; Pieper, S.D.; Vosburgh, K.G. 3D Slicer: A platform for subject-specific image analysis, visualization, and clinical support. In Intraoperative Imaging and Image-Guided Therapy; Springer: Berlin/Heidelberg, Germany, 2013; pp. 277–289.

- Saha, P.K.; Borgefors, G.; di Baja, G.S. A survey on skeletonization algorithms and their applications. Pattern Recognit. Lett. 2016, 76, 3–12.

- Blum, H.; Nagel, R.N. Shape description using weighted symmetric axis features. Pattern Recognit. 1978, 10, 167–180.

- Lee, T.-C.; Kashyap, R.L.; Chu, C.-N. Building skeleton models via 3-D medial surface axis thinning algorithms. CVGIP Graph. Model. Image Process. 1994, 56, 462–478.

- Van der Walt, S.; Schönberger, J.L.; Nunez-Iglesias, J.; Boulogne, F.; Warner, J.D.; Yager, N.; Gouillart, E.; Yu, T. scikit-image: Image processing in Python. PeerJ 2014, 2, e453.

- Nunez-Iglesias, J.; Blanch, A.J.; Looker, O.; Dixon, M.W.; Tilley, L. A new Python library to analyse skeleton images confirms malaria parasite remodelling of the red blood cell membrane skeleton. PeerJ 2018, 6, e4312.

- Nilsson, J.; Akenine-Möller, T. Understanding SSIM. arXiv 2020, arXiv:2006.13846.

- Dice, L.R. Measures of the amount of ecologic association between species. Ecology 1945, 26, 297–302.

- Sorensen, T.A. A method of establishing groups of equal amplitude in plant sociology based on similarity of species content and its application to analyses of the vegetation on Danish commons. Biol. Skar. 1948, 5, 1–34.

- Ahmed, T.; Goyal, A. Endomyocardial biopsy. In StatPearls [Internet]; StatPearls Publishing: Tampa, FL, USA, 2022.

- AlJaroudi, W.A.; Desai, M.Y.; Tang, W.W.; Phelan, D.; Cerqueira, M.D.; Jaber, W.A. Role of imaging in the diagnosis and management of patients with cardiac amyloidosis: State of the art review and focus on emerging nuclear techniques. J. Nucl. Cardiol. 2014, 21, 271–283.

- Lal, S.; Li, A.; Allen, D.; Allen, P.D.; Bannon, P.; Cartmill, T.; Cooke, R.; Farnsworth, A.; Keogh, A.; Dos Remedios, C. Best practice biobanking of human heart tissue. Biophys. Rev. 2015, 7, 399–406.

- Mahapatra, D.; Antony, B.; Sedai, S.; Garnavi, R. Deformable medical image registration using generative adversarial networks. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 1449–1453.

- Arimura, H.; Soufi, M.; Kamezawa, H.; Ninomiya, K.; Yamada, M. Radiomics with artificial intelligence for precision medicine in radiation therapy. J. Radiat. Res. 2019, 60, 150–157.

- Lee, J.Y.; Lee, K.-s.; Seo, B.K.; Cho, K.R.; Woo, O.H.; Song, S.E.; Kim, E.-K.; Lee, H.Y.; Kim, J.S.; Cha, J. Radiomic machine learning for predicting prognostic biomarkers and molecular subtypes of breast cancer using tumor heterogeneity and angiogenesis properties on MRI. Eur. Radiol. 2022, 32, 650–660.

- Peng, W.K. Clustering Nuclear Magnetic Resonance: Machine learning assistive rapid two-dimensional relaxometry mapping. Eng. Rep. 2021, 3, e12383.

- Kickingereder, P.; Götz, M.; Muschelli, J.; Wick, A.; Neuberger, U.; Shinohara, R.T.; Sill, M.; Nowosielski, M.; Schlemmer, H.P.; Radbruch, A.; et al. Large-scale Radiomic Profiling of Recurrent Glioblastoma Identifies an Imaging Predictor for Stratifying Anti-Angiogenic Treatment Response. Clin. Cancer Res. 2016, 22, 5765–5771.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).