AK-Score: Accurate Protein-Ligand Binding Affinity Prediction Using an Ensemble of 3D-Convolutional Neural Networks

Abstract

1. Introduction

2. Results and Discussion

2.1. Binding Affinity Prediction Accuracy

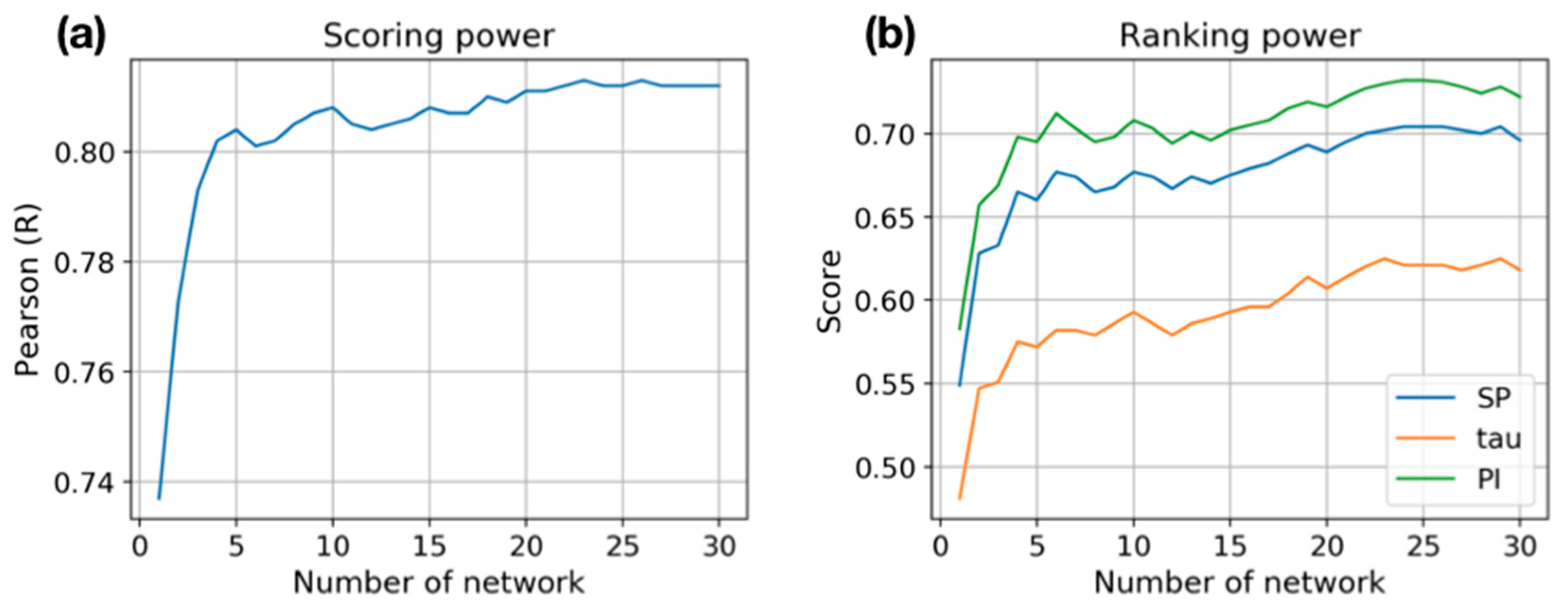

2.2. An Ensemble of Networks Improves the Quality of Prediction

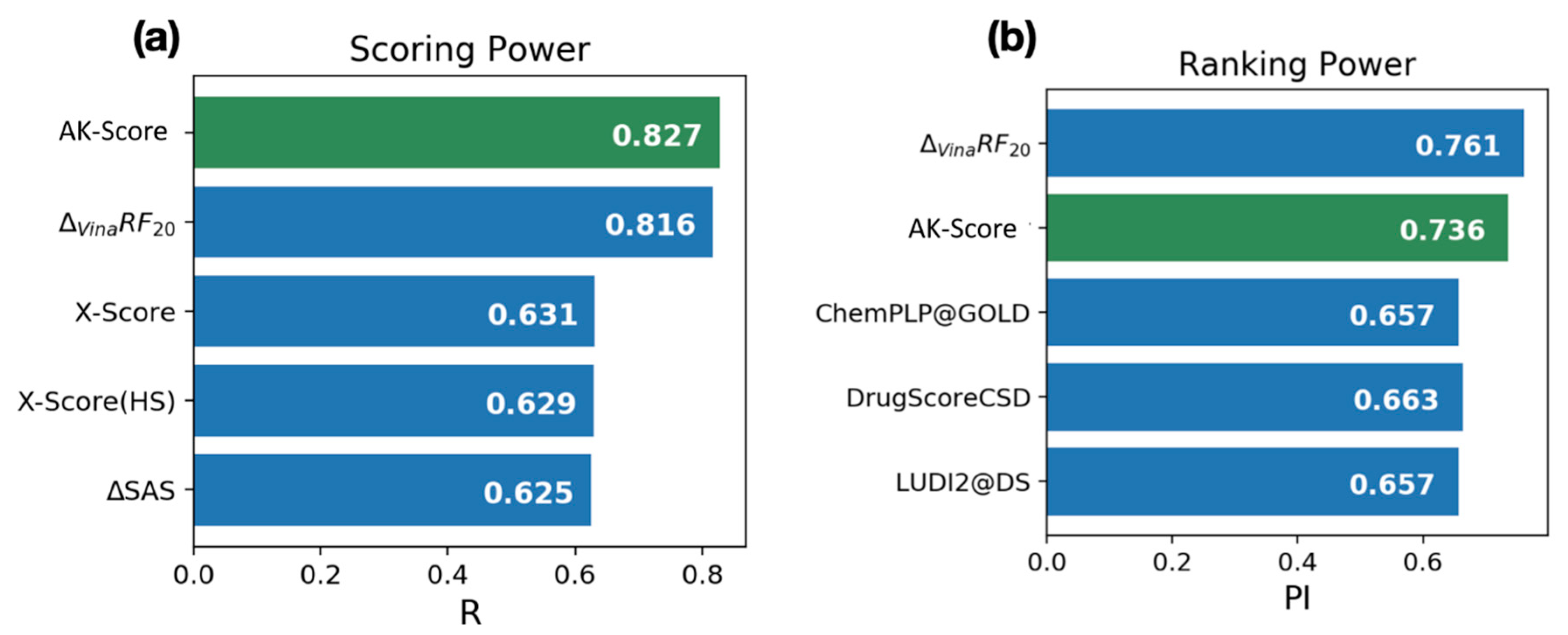

2.3. Comparison with Other Scoring Functions

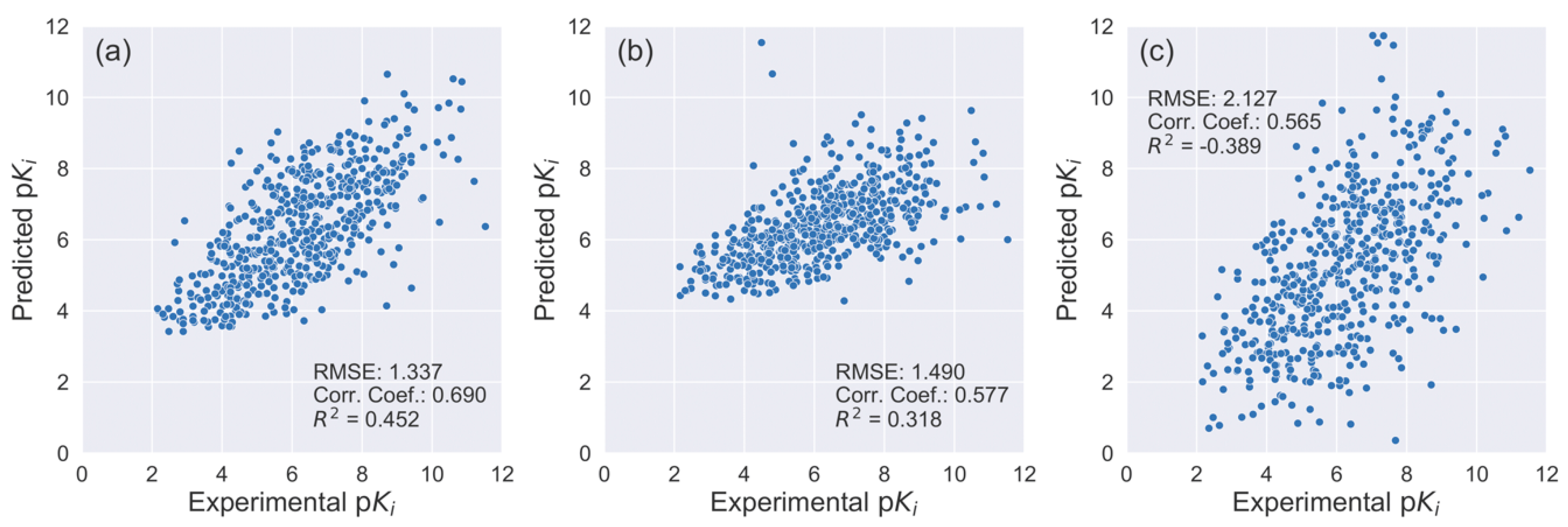

2.4. Assessment with an Additional Dataset

2.5. Identifying Hot Spots for Binding Affinity Determination Using Grad-CAM

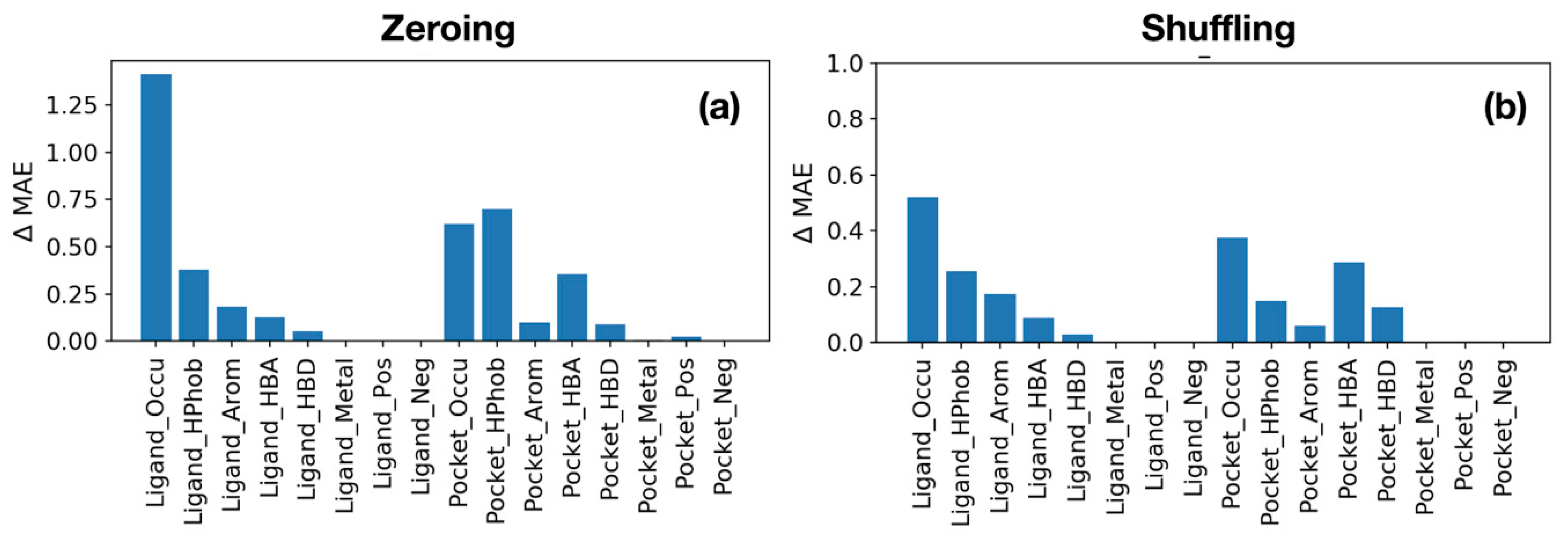

2.6. Assessment of Feature Importance via Ablation Test

3. Methods

3.1. Data Preparation

3.2. Convolutional Neural Network

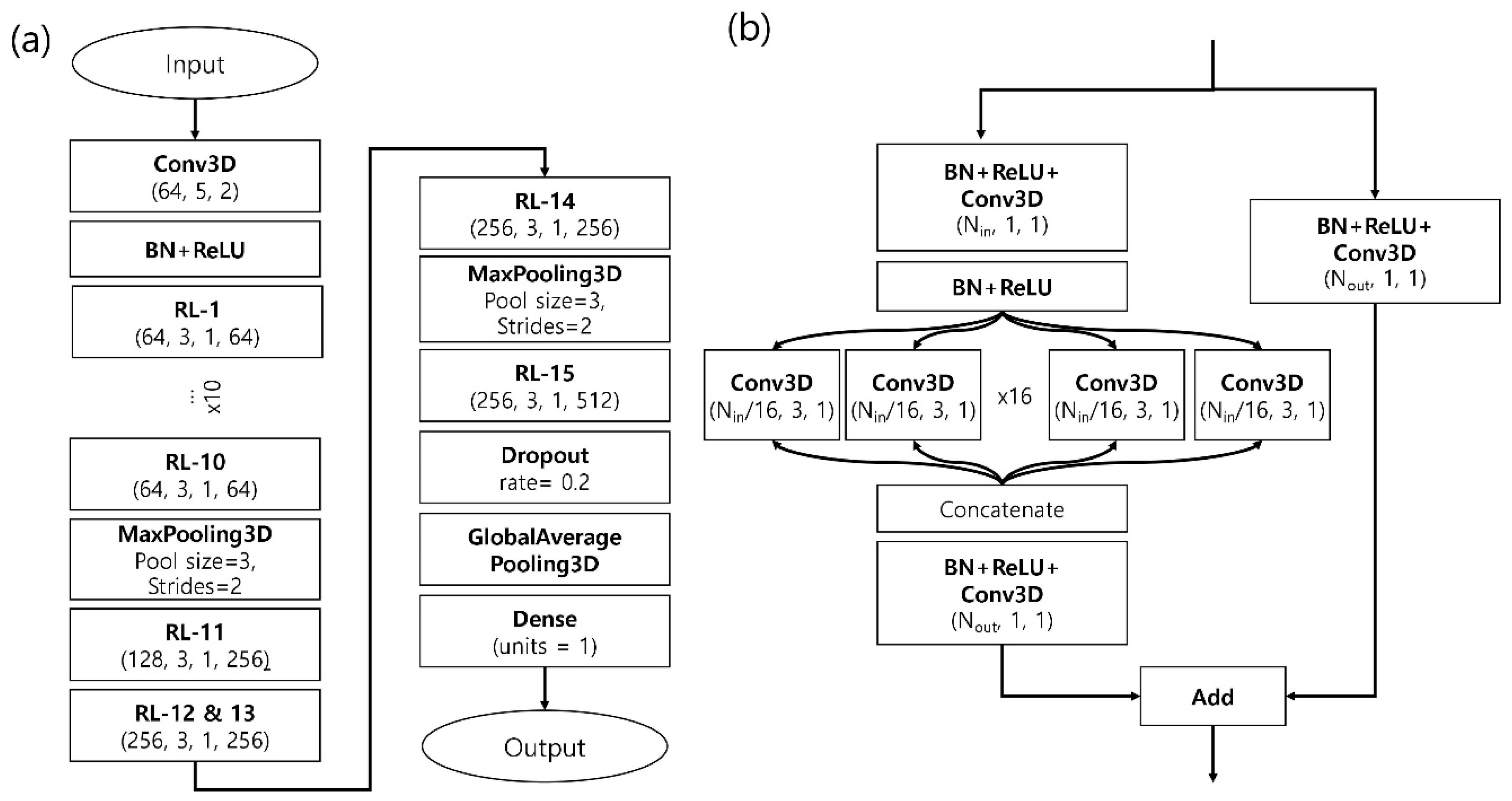

3.3. Network Architecture

3.4. Ensemble Prediction

3.5. Performance Assessment

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| FEP | Free energy perturbation |
| MD | Molecular dynamics |
| GPU | Graphics processing unit |
| CASF | Comparative assessment of scoring functions |
| 3-D-CNN | 3D convolutional neural network |
| Conv3D | 3D convolutional neural network layer |
| AK-score | Arontier-Kangwon docking scoring function |
| BN | Batch normalization |
| RL | Residual layer |
| ReLU | Rectified linear unit |
| MAE | Mean absolute error |
| PI | Predictive index |
| RMSE | Root mean squared error |

| Model | Learning Rate | MAE (kcal/mol) | RMSE (kcal/mol) |
|---|---|---|---|
| KDEEP | 0.0001 | 1.131 | 1.462 |
| KDEEP | 0.0005 | 1.200 | 1.519 |
| KDEEP | 0.0006 | 1.164 | 1.534 |
| KDEEP | 0.0010 | 1.219 | 1.536 |
| AK-score-single | 0.0001 | 1.159 | 1.511 |
| AK-score-single | 0.0005 | 1.101 | 1.415 |
| AK-score-single | 0.0007 | 1.130 | 1.425 |
| AK-score-single | 0.0010 | 1.110 | 1.406 |
| AK-score-ensemble | 0.0007 | 1.014 | 1.293 |
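The MAE and RMSE columns above can be illustrated with a minimal sketch. It uses the standard textbook definitions of the two errors; the function names `mae` and `rmse` and the four affinity values are hypothetical and are not taken from the paper's data or code.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average of |experimental - predicted|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the mean squared difference."""
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))


# Hypothetical binding affinities in kcal/mol, for illustration only.
experimental = [-9.1, -7.4, -6.0, -10.2]
predicted = [-8.5, -7.9, -6.8, -9.0]

print(f"MAE  = {mae(experimental, predicted):.3f} kcal/mol")
print(f"RMSE = {rmse(experimental, predicted):.3f} kcal/mol")
```

RMSE penalizes large individual errors more strongly than MAE, so it is never smaller than MAE on the same predictions, which matches the pattern in every row of the table.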

| Model | Learning rate | Scoring: Pearson (R) | Ranking: Spearman (SP) | Ranking: Kendall (tau) | Ranking: Predictive Index (PI) | Docking: Top 1 (%) | Docking: Top 2 (%) | Docking: Top 3 (%) |
|---|---|---|---|---|---|---|---|---|
| KDEEP | 0.0001 | 0.738 | 0.539 | 0.435 | 0.559 | 24.8 | 38.5 | 52.2 |
| KDEEP | 0.0005 | 0.709 | 0.486 | 0.389 | 0.535 | 29.1 | 39.9 | 49.6 |
| KDEEP | 0.0006 | 0.701 | 0.528 | 0.439 | 0.558 | 29.1 | 39.9 | 49.6 |
| KDEEP | 0.0010 | 0.715 | 0.479 | 0.400 | 0.492 | 24.8 | 36.3 | 44.6 |
| AK-score-single | 0.0001 | 0.719 | 0.572 | 0.456 | 0.600 | 34.9 | 48.6 | 56.1 |
| AK-score-single | 0.0005 | 0.755 | 0.596 | 0.512 | 0.616 | 29.9 | 43.2 | 54.0 |
| AK-score-single | 0.0007 | 0.759 | 0.616 | 0.526 | 0.640 | 31.3 | 47.1 | 57.9 |
| AK-score-single | 0.0010 | 0.760 | 0.598 | 0.505 | 0.627 | 26.3 | 43.9 | 54.0 |
| AK-score-ensemble | 0.0007 | 0.812 | 0.670 | 0.589 | 0.698 | 36.0 | 51.4 | 59.7 |

| Atom Type | Definition |
|---|---|
| Hydrophobic | Aliphatic or aromatic C |
| Aromatic | Aromatic C |
| Hydrogen bond donor | Hydrogen bonded to N, O, or S |
| Hydrogen bond acceptor | N, O, and S with lone electron pairs |
| Positive | Ionizable Gasteiger positive charge |
| Negative | Ionizable Gasteiger negative charge |
| Metallic | Mg, Zn, Mn, Ca, or Fe |
| Excluded Volume | All atom-types |

| Category | Aim of the Category | Metric |
|---|---|---|
| Scoring | How well does the scoring function correlate with the experimental binding affinities? | Pearson correlation coefficient |
| Ranking | How well is the relative order of binding affinities predicted? | Spearman correlation coefficient, Kendall tau, Predictive index |
| Docking | Can the scoring function find the native ligand binding pose? | Top 1 (%), Top 2 (%), Top 3 (%) |
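The scoring and ranking metrics in the table can be sketched in plain Python. This is an illustrative sketch, not the authors' evaluation code: all function names are hypothetical, Kendall's tau is computed in its tau-a form (which ignores tie corrections), and the predictive index follows the pairwise, affinity-weighted definition of Pearlman and Charifson, which is an assumption here because the table does not spell out the formula.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation coefficient (undefined if x or y is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def sign(v):
    return (v > 0) - (v < 0)


def kendall_tau_a(x, y):
    """Kendall tau-a: (concordant - discordant) / number of pairs."""
    pairs = list(combinations(range(len(x)), 2))
    return sum(sign(x[i] - x[j]) * sign(y[i] - y[j]) for i, j in pairs) / len(pairs)


def predictive_index(experimental, predicted):
    """Pairwise ranking agreement weighted by the experimental difference."""
    num = den = 0.0
    for i, j in combinations(range(len(experimental)), 2):
        weight = abs(experimental[j] - experimental[i])
        agreement = sign(experimental[j] - experimental[i]) * sign(predicted[j] - predicted[i])
        num += weight * agreement
        den += weight
    return num / den


# Hypothetical values: one adjacent pair is ranked in the wrong order.
experimental = [1.0, 2.0, 3.0, 4.0]
predicted = [1.0, 3.0, 2.0, 4.0]
print(pearson(experimental, predicted), spearman(experimental, predicted))
print(kendall_tau_a(experimental, predicted), predictive_index(experimental, predicted))
```

Because the predictive index weights each pair by the size of the experimental difference, a swap between two ligands with similar affinities costs less than a swap between ligands that are far apart.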

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kwon, Y.; Shin, W.-H.; Ko, J.; Lee, J. AK-Score: Accurate Protein-Ligand Binding Affinity Prediction Using an Ensemble of 3D-Convolutional Neural Networks. Int. J. Mol. Sci. 2020, 21, 8424. https://doi.org/10.3390/ijms21228424
