Recent Advances in Deep Learning for Protein-Protein Interaction Analysis: A Comprehensive Review

Abstract

1. Introduction

2. Literature Review Methods

2.1. Study Selection Process

2.2. An Analysis of Selected Papers

2.3. Journals of Publications

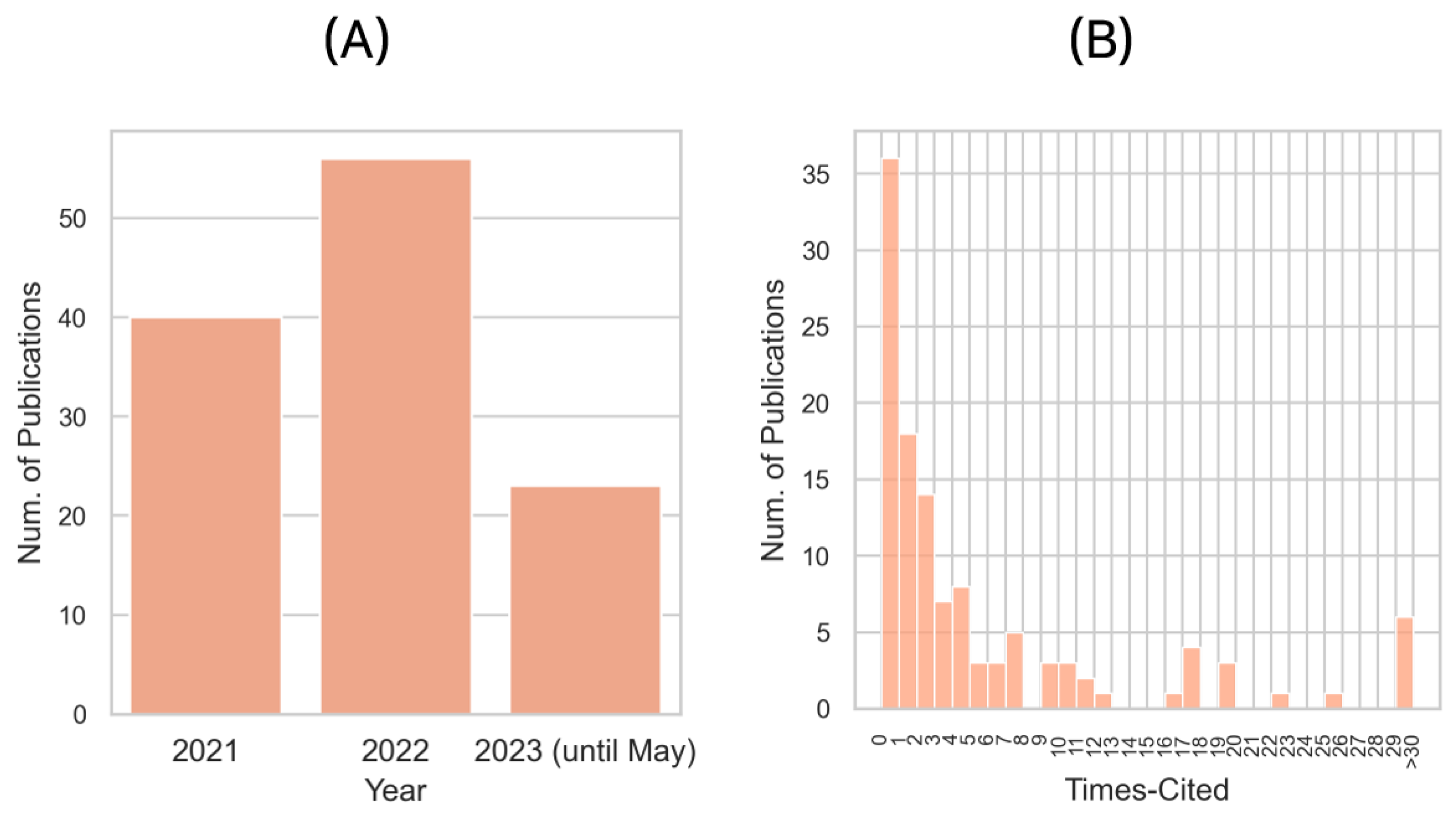

2.4. Year and Citations of Publications

3. Historical Deep Learning Methods for Protein–Protein Interaction Analysis

4. Graph Neural Networks for Protein–Protein Interactions

4.1. Pairwise PPI Prediction

4.2. PPI Network Prediction

4.3. PPI Site Prediction

4.4. Docking

4.5. Auxiliary PPI Prediction Tasks

5. Convolutional Neural Networks for Protein–Protein Interactions

5.1. Pairwise PPI Prediction

5.2. PPI Network Prediction

5.3. PPI Site Prediction

5.4. Docking

5.5. Auxiliary PPI Prediction Tasks

6. Representation Learning and Autoencoder for Protein–Protein Interactions

6.1. Pairwise PPI Prediction

6.2. PPI Network Prediction

6.3. PPI Site Prediction

6.4. Auxiliary PPI Prediction Tasks

7. Recurrent Neural Networks for Protein–Protein Interactions

7.1. Pairwise PPI Prediction

7.2. PPI Site Prediction

7.3. PPI Network Prediction

7.4. Auxiliary PPI Prediction Tasks

8. Attention Mechanism and Transformer for Protein–Protein Interactions

8.1. Pairwise PPI Prediction

8.2. PPI Site Prediction

8.3. PPI Network Prediction

8.4. Auxiliary PPI Prediction Tasks

8.5. Protein Docking

9. Multi-task or Multi-modal Deep Learning Models for Protein–Protein Interactions

9.1. Pairwise PPI Prediction

9.2. PPI Network Prediction

9.3. PPI Site Prediction

9.4. Auxiliary PPI Prediction Tasks

10. Transfer Learning for Protein–Protein Interactions

11. Other Emerging Topics for Protein–Protein Interactions

12. Challenges and Future Directions in Recent Studies

13. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Rajpurkar, P.; Chen, E.; Banerjee, O.; Topol, E.J. AI in health and medicine. Nat. Med. 2022, 28, 31–38. [Google Scholar] [CrossRef]

- Cetinic, E.; She, J. Understanding and creating art with AI: Review and outlook. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2022, 18, 1–22. [Google Scholar] [CrossRef]

- Chamola, V.; Hassija, V.; Gupta, V.; Guizani, M. A comprehensive review of the COVID-19 pandemic and the role of IoT, drones, AI, blockchain, and 5G in managing its impact. IEEE Access 2020, 8, 90225–90265. [Google Scholar] [CrossRef]

- Aggarwal, A.; Mittal, M.; Battineni, G. Generative adversarial network: An overview of theory and applications. Int. J. Inf. Manag. Data Insights 2021, 1, 100004. [Google Scholar] [CrossRef]

- Jabbar, A.; Li, X.; Omar, B. A survey on generative adversarial networks: Variants, applications, and training. ACM Comput. Surv. (CSUR) 2021, 54, 1–49. [Google Scholar] [CrossRef]

- Cai, Z.; Xiong, Z.; Xu, H.; Wang, P.; Li, W.; Pan, Y. Generative adversarial networks: A survey toward private and secure applications. ACM Comput. Surv. (CSUR) 2021, 54, 1–38. [Google Scholar] [CrossRef]

- Chen, Y.; Yang, X.H.; Wei, Z.; Heidari, A.A.; Zheng, N.; Li, Z.; Chen, H.; Hu, H.; Zhou, Q.; Guan, Q. Generative adversarial networks in medical image augmentation: A review. Comput. Biol. Med. 2022, 144, 105382. [Google Scholar] [CrossRef]

- Gui, J.; Sun, Z.; Wen, Y.; Tao, D.; Ye, J. A review on generative adversarial networks: Algorithms, theory, and applications. IEEE Trans. Knowl. Data Eng. 2021, 35, 3313–3332. [Google Scholar] [CrossRef]

- Barron, J.T.; Mildenhall, B.; Verbin, D.; Srinivasan, P.P.; Hedman, P. Mip-nerf 360: Unbounded anti-aliased neural radiance fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5470–5479. [Google Scholar]

- Martin-Brualla, R.; Radwan, N.; Sajjadi, M.S.; Barron, J.T.; Dosovitskiy, A.; Duckworth, D. Nerf in the wild: Neural radiance fields for unconstrained photo collections. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7210–7219. [Google Scholar]

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. Nerf: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106. [Google Scholar] [CrossRef]

- Zhang, K.; Riegler, G.; Snavely, N.; Koltun, V. Nerf++: Analyzing and improving neural radiance fields. arXiv 2020, arXiv:2010.07492. [Google Scholar]

- Yu, A.; Li, R.; Tancik, M.; Li, H.; Ng, R.; Kanazawa, A. Plenoctrees for real-time rendering of neural radiance fields. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 5752–5761. [Google Scholar]

- Pumarola, A.; Corona, E.; Pons-Moll, G.; Moreno-Noguer, F. D-nerf: Neural radiance fields for dynamic scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10318–10327. [Google Scholar]

- OpenAI. GPT-4 Technical Report. OpenAI Technical Report. 2023. Available online: https://cdn.openai.com/papers/gpt-4.pdf (accessed on 15 May 2023).

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models Are Unsupervised Multitask Learners. OpenAI Technical Report. 2019. Available online: https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf (accessed on 15 May 2023).

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. OpenAI Technical Report. 2018. Available online: https://s3-us-west-2.amazonaws.com/openai-assets/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 15 May 2023).

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901. [Google Scholar]

- Zhang, Y.; Shi, X.; Zhang, H.; Cao, Y.; Terzija, V. Review on deep learning applications in frequency analysis and control of modern power system. Int. J. Electr. Power Energy Syst. 2022, 136, 107744. [Google Scholar] [CrossRef]

- Yazici, I.; Beyca, O.F.; Delen, D. Deep-learning-based short-term electricity load forecasting: A real case application. Eng. Appl. Artif. Intell. 2022, 109, 104645. [Google Scholar] [CrossRef]

- Choudhary, K.; DeCost, B.; Chen, C.; Jain, A.; Tavazza, F.; Cohn, R.; Park, C.W.; Choudhary, A.; Agrawal, A.; Billinge, S.J.; et al. Recent advances and applications of deep learning methods in materials science. npj Comput. Mater. 2022, 8, 59. [Google Scholar] [CrossRef]

- Tang, B.; Pan, Z.; Yin, K.; Khateeb, A. Recent advances of deep learning in bioinformatics and computational biology. Front. Genet. 2019, 10, 214. [Google Scholar] [CrossRef] [PubMed]

- Yazdani, A.; Lu, L.; Raissi, M.; Karniadakis, G.E. Systems biology informed deep learning for inferring parameters and hidden dynamics. PLoS Comput. Biol. 2020, 16, e1007575. [Google Scholar] [CrossRef] [PubMed]

- Zampieri, G.; Vijayakumar, S.; Yaneske, E.; Angione, C. Machine and deep learning meet genome-scale metabolic modeling. PLoS Comput. Biol. 2019, 15, e1007084. [Google Scholar] [CrossRef] [PubMed]

- Lu, H.; Zhou, Q.; He, J.; Jiang, Z.; Peng, C.; Tong, R.; Shi, J. Recent advances in the development of protein–protein interactions modulators: Mechanisms and clinical trials. Signal Transduct. Target. Ther. 2020, 5, 213. [Google Scholar] [CrossRef]

- Bryant, P.; Pozzati, G.; Elofsson, A. Improved prediction of protein–protein interactions using AlphaFold2. Nat. Commun. 2022, 13, 1265. [Google Scholar] [CrossRef]

- Hu, L.; Wang, X.; Huang, Y.A.; Hu, P.; You, Z.H. A survey on computational models for predicting protein–protein interactions. Brief. Bioinform. 2021, 22, bbab036. [Google Scholar] [CrossRef]

- Richards, A.L.; Eckhardt, M.; Krogan, N.J. Mass spectrometry-based protein–protein interaction networks for the study of human diseases. Mol. Syst. Biol. 2021, 17, e8792. [Google Scholar] [CrossRef]

- Jumper, J.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Žídek, A.; Potapenko, A.; et al. Highly accurate protein structure prediction with AlphaFold. Nature 2021, 596, 583–589. [Google Scholar] [CrossRef]

- Albu, A.I.; Bocicor, M.I.; Czibula, G. MM-StackEns: A new deep multimodal stacked generalization approach for protein–protein interaction prediction. Comput. Biol. Med. 2023, 153, 106526. [Google Scholar] [CrossRef] [PubMed]

- Azadifar, S.; Ahmadi, A. A novel candidate disease gene prioritization method using deep graph convolutional networks and semi-supervised learning. BMC Bioinform. 2022, 23, 422. [Google Scholar] [CrossRef] [PubMed]

- Baranwal, M.; Magner, A.; Saldinger, J.; Turali-Emre, E.S.; Elvati, P.; Kozarekar, S.; VanEpps, J.S.; Kotov, N.A.; Violi, A.; Hero, A.O. Struct2Graph: A graph attention network for structure based predictions of protein–protein interactions. BMC Bioinform. 2022, 23, 370. [Google Scholar] [CrossRef] [PubMed]

- Dai, X.; Xu, F.; Wang, S.; Mundra, P.A.; Zheng, J. PIKE-R2P: Protein-protein interaction network-based knowledge embedding with graph neural network for single-cell RNA to protein prediction. BMC Bioinform. 2021, 22 (Suppl. 6), 139. [Google Scholar] [CrossRef]

- Gao, J.; Gao, J.; Ying, X.; Lu, M.; Wang, J. Higher-Order Interaction Goes Neural: A Substructure Assembling Graph Attention Network for Graph Classification. IEEE Trans. Knowl. Data Eng. 2023, 35, 1594–1608. [Google Scholar] [CrossRef]

- Hinnerichs, T.; Hoehndorf, R. DTI-Voodoo: Machine learning over interaction networks and ontology-based background knowledge predicts drug-target interactions. Bioinformatics 2021, 37, 4835–4843. [Google Scholar] [CrossRef]

- Jha, K.; Saha, S.; Singh, H. Prediction of protein–protein interaction using graph neural networks. Sci. Rep. 2022, 12, 8360. [Google Scholar] [CrossRef]

- Kim, S.; Bae, S.; Piao, Y.; Jo, K. Graph Convolutional Network for Drug Response Prediction Using Gene Expression Data. Mathematics 2021, 9, 772. [Google Scholar] [CrossRef]

- Kishan, K.C.; Li, R.; Cui, F.; Haake, A.R. Predicting Biomedical Interactions with Higher-Order Graph Convolutional Networks. IEEE/ACM Trans. Comput. Biol. Bioinform. 2022, 19, 676–687. [Google Scholar]

- Mahbub, S.; Bayzid, M.S. EGRET: Edge aggregated graph attention networks and transfer learning improve protein–protein interaction site prediction. Brief. Bioinform. 2022, 23, bbab578. [Google Scholar] [CrossRef]

- Quadrini, M.; Daberdaku, S.; Ferrari, C. Hierarchical representation for PPI sites prediction. BMC Bioinform. 2022, 23, 96. [Google Scholar] [CrossRef]

- Reau, M.; Renaud, N.; Xue, L.C.; Bonvin, A.M.J.J. DeepRank-GNN: A graph neural network framework to learn patterns in protein–protein interfaces. Bioinformatics 2023, 39, btac759. [Google Scholar] [CrossRef]

- Saxena, R.; Patil, S.P.; Verma, A.K.; Jadeja, M.; Vyas, P.; Bhateja, V.; Lin, J.C.W. An Efficient Bet-GCN Approach for Link Prediction. Int. J. Interact. Multimed. Artif. Intell. 2023, 8, 38–52. [Google Scholar] [CrossRef]

- Schapke, J.; Tavares, A.; Recamonde-Mendoza, M. EPGAT: Gene Essentiality Prediction with Graph Attention Networks. IEEE/ACM Trans. Comput. Biol. Bioinform. 2022, 19, 1615–1626. [Google Scholar] [CrossRef]

- Lemieux, G.S.P.; Paquet, E.; Viktor, H.L.; Michalowski, W. Geometric Deep Learning for Protein-Protein Interaction Predictions. IEEE Access 2022, 10, 90045–90055. [Google Scholar] [CrossRef]

- Strokach, A.; Lu, T.Y.; Kim, P.M. ELASPIC2 (EL2): Combining Contextualized Language Models and Graph Neural Networks to Predict Effects of Mutations. J. Mol. Biol. 2021, 433. [Google Scholar] [CrossRef]

- Wang, Y.; Wang, L.L.; Wong, L.; Li, Y.; Wang, L.; You, Z.H. SIPGCN: A Novel Deep Learning Model for Predicting Self-Interacting Proteins from Sequence Information Using Graph Convolutional Networks. Biomedicines 2022, 10, 1543. [Google Scholar] [CrossRef]

- Wang, R.H.; Luo, T.; Zhang, H.L.; Du, P.F. PLA-GNN: Computational inference of protein subcellular location alterations under drug treatments with deep graph neural networks. Comput. Biol. Med. 2023, 157, 106775. [Google Scholar] [CrossRef] [PubMed]

- Williams, N.P.; Rodrigues, C.H.M.; Truong, J.; Ascher, D.B.; Holien, J.K. DockNet: High-throughput protein–protein interface contact prediction. Bioinformatics 2023, 39, btac797. [Google Scholar] [CrossRef]

- Yuan, Q.; Chen, J.; Zhao, H.; Zhou, Y.; Yang, Y. Structure-aware protein–protein interaction site prediction using deep graph convolutional network. Bioinformatics 2022, 38, 125–132. [Google Scholar] [CrossRef]

- Zaki, N.; Singh, H.; Mohamed, E.A. Identifying Protein Complexes in Protein-Protein Interaction Data Using Graph Convolutional Network. IEEE Access 2021, 9, 123717–123726. [Google Scholar] [CrossRef]

- Zhou, H.; Wang, W.; Jin, J.; Zheng, Z.; Zhou, B. Graph Neural Network for Protein-Protein Interaction Prediction: A Comparative Study. Molecules 2022, 27, 6135. [Google Scholar] [CrossRef] [PubMed]

- Zhou, Y.; Jiang, Y.; Yang, Y. AGAT-PPIS: A novel protein–protein interaction site predictor based on augmented graph attention network with initial residual and identity mapping. Brief. Bioinform. 2023, 24, bbad122. [Google Scholar] [CrossRef] [PubMed]

- Chen, W.; Wang, S.; Song, T.; Li, X.; Han, P.; Gao, C. DCSE: Double-Channel-Siamese-Ensemble model for protein protein interaction prediction. BMC Genom. 2022, 23, 555. [Google Scholar] [CrossRef] [PubMed]

- Gao, H.; Chen, C.; Li, S.; Wang, C.; Zhou, W.; Yu, B. Prediction of protein–protein interactions based on ensemble residual convolutional neural network. Comput. Biol. Med. 2023, 152, 106471. [Google Scholar] [CrossRef]

- Guo, L.; He, J.; Lin, P.; Huang, S.Y.; Wang, J. TRScore: A 3D RepVGG-based scoring method for ranking protein docking models. Bioinformatics 2022, 38, 2444–2451. [Google Scholar] [CrossRef]

- Hu, X.; Feng, C.; Zhou, Y.; Harrison, A.; Chen, M. DeepTrio: A ternary prediction system for protein–protein interaction using mask multiple parallel convolutional neural networks. Bioinformatics 2022, 38, 694–702. [Google Scholar] [CrossRef]

- Hu, J.; Dong, M.; Tang, Y.X.; Zhang, G.J. Improving protein–protein interaction site prediction using deep residual neural network. Anal. Biochem. 2023, 670, 115132. [Google Scholar] [CrossRef]

- Kozlovskii, I.; Popov, P. Protein-Peptide Binding Site Detection Using 3D Convolutional Neural Networks. J. Chem. Inf. Model. 2021, 61, 3814–3823. [Google Scholar] [CrossRef] [PubMed]

- Mallet, V.; Ruano, L.C.; Franel, A.M.; Nilges, M.; Druart, K.; Bouvier, G.; Sperandio, O. InDeep: 3D fully convolutional neural networks to assist in silico drug design on protein–protein interactions. Bioinformatics 2022, 38, 1261–1268. [Google Scholar] [CrossRef] [PubMed]

- Song, T.; Markham, K.K.; Li, Z.; Muller, K.E.; Greenham, K.; Kuang, R. Detecting spatially co-expressed gene clusters with functional coherence by graph-regularized convolutional neural network. Bioinformatics 2022, 38, 1344–1352. [Google Scholar] [CrossRef]

- Tsukiyama, S.; Kurata, H. Cross-attention PHV: Prediction of human and virus protein–protein interactions using cross-attention-based neural networks. Comput. Struct. Biotechnol. J. 2022, 20, 5564–5573. [Google Scholar] [CrossRef]

- Wang, Y.; Li, Z.; Zhang, Y.; Ma, Y.; Huang, Q.; Chen, X.; Dai, Z.; Zou, X. Performance improvement for a 2D convolutional neural network by using SSC encoding on protein–protein interaction tasks. BMC Bioinform. 2021, 22, 184. [Google Scholar] [CrossRef] [PubMed]

- Xu, W.; Gao, Y.; Wang, Y.; Guan, J. Protein-protein interaction prediction based on ordinal regression and recurrent convolutional neural networks. BMC Bioinform. 2021, 22, 485. [Google Scholar] [CrossRef]

- Yang, H.; Wang, M.; Liu, X.; Zhao, X.M.; Li, A. PhosIDN: An integrated deep neural network for improving protein phosphorylation site prediction by combining sequence and protein–protein interaction information. Bioinformatics 2021, 37, 4668–4676. [Google Scholar] [CrossRef]

- Yuan, X.; Deng, H.; Hu, J. Constructing a PPI Network Based on Deep Transfer Learning for Protein Complex Detection. IEEJ Trans. Electr. Electron. Eng. 2022, 17, 436–444. [Google Scholar] [CrossRef]

- Asim, M.N.; Ibrahim, M.A.; Malik, M.I.; Dengel, A.; Ahmed, S. LGCA-VHPPI: A local-global residue context aware viral-host protein–protein interaction predictor. PLoS ONE 2022, 17, e0270275. [Google Scholar] [CrossRef]

- Czibula, G.; Albu, A.I.; Bocicor, M.I.; Chira, C. AutoPPI: An Ensemble of Deep Autoencoders for Protein-Protein Interaction Prediction. Entropy 2021, 23, 643. [Google Scholar] [CrossRef]

- Hasibi, R.; Michoel, T. A Graph Feature Auto-Encoder for the prediction of unobserved node features on biological networks. BMC Bioinform. 2021, 22, 525. [Google Scholar] [CrossRef]

- Ieremie, I.; Ewing, R.M.; Niranjan, M. TransformerGO: Predicting protein–protein interactions by modelling the attention between sets of gene ontology terms. Bioinformatics 2022, 38, 2269–2277. [Google Scholar] [CrossRef]

- Jha, K.; Saha, S.; Tanveer, M. Prediction of protein–protein interactions using stacked auto-encoder. Trans. Emerg. Telecommun. Technol. 2022, 33. [Google Scholar] [CrossRef]

- Jiang, Y.; Wang, Y.; Shen, L.; Adjeroh, D.A.; Liu, Z.; Lin, J. Identification of all-against-all protein–protein interactions based on deep hash learning. BMC Bioinform. 2022, 23, 266. [Google Scholar] [CrossRef]

- Liu, Y.; He, R.; Qu, Y.; Zhu, Y.; Li, D.; Ling, X.; Xia, S.; Li, Z.; Li, D. Integration of Human Protein Sequence and Protein-Protein Interaction Data by Graph Autoencoder to Identify Novel Protein-Abnormal Phenotype Associations. Cells 2022, 11, 2485. [Google Scholar] [CrossRef] [PubMed]

- Nourani, E.; Asgari, E.; McHardy, A.C.; Mofrad, M.R.K. TripletProt: Deep Representation Learning of Proteins Based On Siamese Networks. IEEE/ACM Trans. Comput. Biol. Bioinform. 2022, 19, 3744–3753. [Google Scholar] [CrossRef] [PubMed]

- Orasch, O.; Weber, N.; Mueller, M.; Amanzadi, A.; Gasbarri, C.; Trummer, C. Protein-Protein Interaction Prediction for Targeted Protein Degradation. Int. J. Mol. Sci. 2022, 23, 7033. [Google Scholar] [CrossRef]

- Ray, S.; Lall, S.; Bandyopadhyay, S. A Deep Integrated Framework for Predicting SARS-CoV2-Human Protein-Protein Interaction. IEEE Trans. Emerg. Top. Comput. Intell. 2022, 6, 1463–1472. [Google Scholar] [CrossRef]

- Sledzieski, S.; Singh, R.; Cowen, L.; Berger, B. D-SCRIPT translates genome to phenome with sequence-based, structure-aware, genome-scale predictions of protein–protein interactions. Cell Syst. 2021, 12, 969. [Google Scholar] [CrossRef]

- Soleymani, F.; Paquet, E.; Viktor, H.L.; Michalowski, W.; Spinello, D. ProtInteract: A deep learning framework for predicting protein–protein interactions. Comput. Struct. Biotechnol. J. 2023, 21, 1324–1348. [Google Scholar] [CrossRef]

- Wang, P.; Zhang, G.; Yu, Z.G.; Huang, G. A Deep Learning and XGBoost-Based Method for Predicting Protein-Protein Interaction Sites. Front. Genet. 2021, 12, 752732. [Google Scholar] [CrossRef]

- Yue, Y.; Ye, C.; Peng, P.Y.; Zhai, H.X.; Ahmad, I.; Xia, C.; Wu, Y.Z.; Zhang, Y.H. A deep learning framework for identifying essential proteins based on multiple biological information. BMC Bioinform. 2022, 23, 296–305. [Google Scholar] [CrossRef]

- Alakus, T.B.; Turkoglu, I. A Novel Protein Mapping Method for Predicting the Protein Interactions in COVID-19 Disease by Deep Learning. Interdiscip. Sci. Comput. Life Sci. 2021, 13, 44–60. [Google Scholar] [CrossRef] [PubMed]

- Aybey, E.; Gumus, O. SENSDeep: An Ensemble Deep Learning Method for Protein-Protein Interaction Sites Prediction. Interdiscip. Sci. Comput. Life Sci. 2023, 15, 55–87. [Google Scholar] [CrossRef] [PubMed]

- Fang, H.; Zhong, C.; Tang, C. Predicting protein–protein interactions between banana and Fusarium oxysporum f. sp. cubense race 4 integrating sequence and domain homologous alignment and neural network verification. Proteome Sci. 2022, 20, 4. [Google Scholar] [CrossRef]

- Li, Y.; Golding, G.B.; Ilie, L. DELPHI: Accurate deep ensemble model for protein interaction sites prediction. Bioinformatics 2021, 37, 896–904. [Google Scholar] [CrossRef]

- Mahdipour, E.; Ghasemzadeh, M. The protein–protein interaction network alignment using recurrent neural network. Med. Biol. Eng. Comput. 2021, 59, 2263–2286. [Google Scholar] [CrossRef]

- Ortiz-Vilchis, P.; De-la Cruz-Garcia, J.S.; Ramirez-Arellano, A. Identification of Relevant Protein Interactions with Partial Knowledge: A Complex Network and Deep Learning Approach. Biology 2023, 12, 140. [Google Scholar] [CrossRef]

- Szymborski, J.; Emad, A. RAPPPID: Towards generalizable protein interaction prediction with AWD-LSTM twin networks. Bioinformatics 2022, 38, 3958–3967. [Google Scholar] [CrossRef] [PubMed]

- Tsukiyama, S.; Hasan, M.M.; Fujii, S.; Kurata, H. LSTM-PHV: Prediction of human-virus protein–protein interactions by LSTM with word2vec. Brief. Bioinform. 2021, 22, bbab228. [Google Scholar] [CrossRef]

- Zeng, M.; Li, M.; Fei, Z.; Wu, F.X.; Li, Y.; Pan, Y.; Wang, J. A Deep Learning Framework for Identifying Essential Proteins by Integrating Multiple Types of Biological Information. IEEE/ACM Trans. Comput. Biol. Bioinform. 2021, 18, 296–305. [Google Scholar] [CrossRef]

- Zhang, J.; Zhu, M.; Qian, Y. protein2vec: Predicting Protein-Protein Interactions Based on LSTM. IEEE/ACM Trans. Comput. Biol. Bioinform. 2022, 19, 1257–1266. [Google Scholar] [CrossRef]

- Zhou, X.; Song, H.; Li, J. Residue-Frustration-Based Prediction of Protein-Protein Interactions Using Machine Learning. J. Phys. Chem. B 2022, 126, 1719–1727. [Google Scholar] [CrossRef]

- Asim, M.N.; Ibrahim, M.A.; Malik, M.I.; Dengel, A.; Ahmed, S. ADH-PPI: An attention-based deep hybrid model for protein–protein interaction prediction. iScience 2022, 25, 105169. [Google Scholar] [CrossRef]

- Baek, M.; DiMaio, F.; Anishchenko, I.; Dauparas, J.; Ovchinnikov, S.; Lee, G.R.; Wang, J.; Cong, Q.; Kinch, L.N.; Schaeffer, R.D.; et al. Accurate prediction of protein structures and interactions using a three-track neural network. Science 2021, 373, 871. [Google Scholar] [CrossRef]

- Li, Y.; Chen, Y.; Qin, Y.; Hu, Y.; Huang, R.; Zheng, Q. Protein-protein interaction relation extraction based on multigranularity semantic fusion. J. Biomed. Inform. 2021, 123, 103931. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Han, P.; Wang, G.; Chen, W.; Wang, S.; Song, T. SDNN-PPI: Self-attention with deep neural network effect on protein–protein interaction prediction. BMC Genom. 2022, 23, 474. [Google Scholar] [CrossRef]

- Nambiar, A.; Liu, S.; Heflin, M.; Forsyth, J.M.; Maslov, S.; Hopkins, M.; Ritz, A. Transformer Neural Networks for Protein Family and Interaction Prediction Tasks. J. Comput. Biol. 2023, 30, 95–111. [Google Scholar] [CrossRef] [PubMed]

- Tang, M.; Wu, L.; Yu, X.; Chu, Z.; Jin, S.; Liu, J. Prediction of Protein-Protein Interaction Sites Based on Stratified Attentional Mechanisms. Front. Genet. 2021, 12, 784863. [Google Scholar] [CrossRef] [PubMed]

- Warikoo, N.; Chang, Y.C.; Hsu, W.L. LBERT: Lexically aware Transformer-based Bidirectional Encoder Representation model for learning universal bio-entity relations. Bioinformatics 2021, 37, 404–412. [Google Scholar] [CrossRef] [PubMed]

- Wu, Z.; Guo, M.; Jin, X.; Chen, J.; Liu, B. CFAGO: Cross-fusion of network and attributes based on attention mechanism for protein function prediction. Bioinformatics 2023, 39, btad123. [Google Scholar] [CrossRef]

- Zhang, H.; Xu, M. Graph neural networks with multiple kernel ensemble attention. Knowl.-Based Syst. 2021, 229, 107299. [Google Scholar] [CrossRef]

- Zhu, F.; Li, F.; Deng, L.; Meng, F.; Liang, Z. Protein Interaction Network Reconstruction with a Structural Gated Attention Deep Model by Incorporating Network Structure Information. J. Chem. Inf. Model. 2022, 62, 258–273. [Google Scholar] [CrossRef]

- Capel, H.; Feenstra, K.A.; Abeln, S. Multi-task learning to leverage partially annotated data for PPI interface prediction. Sci. Rep. 2022, 12, 10487. [Google Scholar] [CrossRef]

- Li, Y.; Zeng, M.; Wu, Y.; Li, Y.; Li, M. Accurate Prediction of Human Essential Proteins Using Ensemble Deep Learning. IEEE/ACM Trans. Comput. Biol. Bioinform. 2022, 19, 3263–3271. [Google Scholar] [CrossRef]

- Linder, J.; La Fleur, A.; Chen, Z.; Ljubetic, A.; Baker, D.; Kannan, S.; Seelig, G. Interpreting neural networks for biological sequences by learning stochastic masks. Nat. Mach. Intell. 2022, 4, 41. [Google Scholar] [CrossRef] [PubMed]

- Pan, J.; You, Z.H.; Li, L.P.; Huang, W.Z.; Guo, J.X.; Yu, C.Q.; Wang, L.P.; Zhao, Z.Y. DWPPI: A Deep Learning Approach for Predicting Protein-Protein Interactions in Plants Based on Multi-Source Information with a Large-Scale Biological Network. Front. Bioeng. Biotechnol. 2022, 10, 807522. [Google Scholar] [CrossRef] [PubMed]

- Peng, W.; Tang, Q.; Dai, W.; Chen, T. Improving cancer driver gene identification using multi-task learning on graph convolutional network. Brief. Bioinform. 2022, 23, bbab432. [Google Scholar] [CrossRef] [PubMed]

- Schulte-Sasse, R.; Budach, S.; Hnisz, D.; Marsico, A. Integration of multiomics data with graph convolutional networks to identify new cancer genes and their associated molecular mechanisms. Nat. Mach. Intell. 2021, 3, 513. [Google Scholar] [CrossRef]

- Dong, T.N.; Brogden, G.; Gerold, G.; Khosla, M. A multitask transfer learning framework for the prediction of virus-human protein–protein interactions. BMC Bioinform. 2021, 22, 572. [Google Scholar] [CrossRef]

- Zheng, J.; Yang, X.; Huang, Y.; Yang, S.; Wuchty, S.; Zhang, Z. Deep learning-assisted prediction of protein–protein interactions in Arabidopsis thaliana. Plant J. 2023, 114, 984–994. [Google Scholar] [CrossRef] [PubMed]

- Chen, S.; Huang, C.; Wang, L.; Zhou, S. A disease-related essential protein prediction model based on the transfer neural network. Front. Genet. 2023, 13, 1087294. [Google Scholar] [CrossRef]

- Derry, A.; Altman, R.B.B. COLLAPSE: A representation learning framework for identification and characterization of protein structural sites. Protein Sci. 2023, 32, e4541. [Google Scholar] [CrossRef] [PubMed]

- Si, Y.; Yan, C. Improved inter-protein contact prediction using dimensional hybrid residual networks and protein language models. Brief. Bioinform. 2023, 24, bbad039. [Google Scholar] [CrossRef]

- Yang, X.; Yang, S.; Lian, X.; Wuchty, S.; Zhang, Z. Transfer learning via multi-scale convolutional neural layers for human-virus protein–protein interaction prediction. Bioinformatics 2021, 37, 4771–4778. [Google Scholar] [CrossRef]

- Zhang, W.; Meng, Q.; Wang, J.; Guo, F. HDIContact: A novel predictor of residue-residue contacts on hetero-dimer interfaces via sequential information and transfer learning strategy. Brief. Bioinform. 2022, 23, bbac169. [Google Scholar] [CrossRef]

- Abdollahi, S.; Lin, P.C.; Chiang, J.H. WinBinVec: Cancer-Associated Protein-Protein Interaction Extraction and Identification of 20 Various Cancer Types and Metastasis Using Different Deep Learning Models. IEEE J. Biomed. Health Inform. 2021, 25, 4052–4063. [Google Scholar] [CrossRef]

- Burke, D.F.; Bryant, P.; Barrio-Hernandez, I.; Memon, D.; Pozzati, G.; Shenoy, A.; Zhu, W.; Dunham, A.S.; Albanese, P.; Keller, A.; et al. Towards a structurally resolved human protein interaction network. Nat. Struct. Mol. Biol. 2023, 30, 216. [Google Scholar] [CrossRef] [PubMed]

- Dai, B.; Bailey-Kellogg, C. Protein interaction interface region prediction by geometric deep learning. Bioinformatics 2021, 37, 2580–2588. [Google Scholar] [CrossRef]

- Dholaniya, P.S.; Rizvi, S. Effect of Various Sequence Descriptors in Predicting Human Protein-protein Interactions Using ANN-based Prediction Models. Curr. Bioinform. 2021, 16, 1024–1033. [Google Scholar] [CrossRef]

- Dhusia, K.; Wu, Y. Classification of protein–protein association rates based on biophysical informatics. BMC Bioinform. 2021, 22, 408. [Google Scholar] [CrossRef] [PubMed]

- Han, Y.; Zhang, S.; He, F. A Point Cloud-Based Deep Learning Model for Protein Docking Decoys Evaluation. Mathematics 2023, 11, 1817. [Google Scholar] [CrossRef]

- Humphreys, I.R.; Pei, J.; Baek, M.; Krishnakumar, A.; Anishchenko, I.; Ovchinnikov, S.; Zhang, J.; Ness, T.J.; Banjade, S.; Bagde, S.R.; et al. Computed structures of core eukaryotic protein complexes. Science 2021, 374, 1340. [Google Scholar] [CrossRef]

- Jovine, L. Using machine learning to study protein–protein interactions: From the uromodulin polymer to egg zona pellucida filaments. Mol. Reprod. Dev. 2021, 88, 686–693. [Google Scholar] [CrossRef]

- Kang, Y.; Xu, Y.; Wang, X.; Pu, B.; Yang, X.; Rao, Y.; Chen, J. HN-PPISP: A hybrid network based on MLP-Mixer for protein–protein interaction site prediction. Brief. Bioinform. 2023, 24, bbac480. [Google Scholar] [CrossRef]

- Li, H.; Huang, S.Y. Protein-protein docking with interface residue restraints*. Chin. Phys. B 2021, 30, 018703. [Google Scholar] [CrossRef]

- Lin, P.; Yan, Y.; Huang, S.Y. DeepHomo2.0: Improved protein–protein contact prediction of homodimers by transformer-enhanced deep learning. Brief. Bioinform. 2023, 24, bbac499. [Google Scholar] [CrossRef] [PubMed]

- Ma, W.; Zhang, S.; Li, Z.; Jiang, M.; Wang, S.; Guo, N.; Li, Y.; Bi, X.; Jiang, H.; Wei, Z. Predicting Drug-Target Affinity by Learning Protein Knowledge From Biological Networks. IEEE J. Biomed. Health Inform. 2023, 27, 2128–2137. [Google Scholar] [CrossRef]

- Madani, M.; Behzadi, M.M.; Song, D.; Ilies, H.T.; Tarakanova, A. Improved inter-residue contact prediction via a hybrid generative model and dynamic loss function. Comput. Struct. Biotechnol. J. 2022, 20, 6138–6148. [Google Scholar] [CrossRef]

- Mahapatra, S.; Gupta, V.R.; Sahu, S.S.; Panda, G. Deep Neural Network and Extreme Gradient Boosting Based Hybrid Classifier for Improved Prediction of Protein-Protein Interaction. IEEE/ACM Trans. Comput. Biol. Bioinform. 2022, 19, 155–165. [Google Scholar] [CrossRef]

- Nikam, R.; Yugandhar, K.; Gromiha, M.M. DeepBSRPred: Deep learning-based binding site residue prediction for proteins. Amino Acids 2022, Online ahead of print. [Google Scholar] [CrossRef]

- Pan, J.; Li, L.P.; You, Z.H.; Yu, C.Q.; Ren, Z.H.; Guan, Y.J. Prediction of Protein-Protein Interactions in Arabidopsis, Maize, and Rice by Combining Deep Neural Network with Discrete Hilbert Transform. Front. Genet. 2021, 12, 745228. [Google Scholar] [CrossRef]

- Pei, J.; Zhang, J.; Cong, Q. Human mitochondrial protein complexes revealed by large-scale coevolution analysis and deep learning-based structure modeling. Bioinformatics 2022, 38, 4301–4311. [Google Scholar] [CrossRef]

- Pei, J.; Zhang, J.; Wang, X.D.; Kim, C.; Yu, Y.; Cong, Q. Impact of Asp/Glu-ADP-ribosylation on protein–protein interaction and protein function. Proteomics 2022, online ahead of print. [Google Scholar] [CrossRef]

- Singh, R.; Devkota, K.; Sledzieski, S.; Berger, B.; Cowen, L. Topsy-Turvy: Integrating a global view into sequence-based PPI prediction. Bioinformatics 2022, 38, 264–272. [Google Scholar] [CrossRef]

- Song, B.; Luo, X.; Luo, X.; Liu, Y.; Niu, Z.; Zeng, X. Learning spatial structures of proteins improves protein–protein interaction prediction. Brief. Bioinform. 2022, 23, bbab558. [Google Scholar] [CrossRef] [PubMed]

- Sreenivasan, A.P.; Harrison, P.J.; Schaal, W.; Matuszewski, D.J.; Kultima, K.; Spjuth, O. Predicting protein network topology clusters from chemical structure using deep learning. J. Cheminform. 2022, 14, 47. [Google Scholar] [CrossRef] [PubMed]

- Stringer, B.; de Ferrante, H.; Abeln, S.; Heringa, J.; Feenstra, K.A.; Haydarlou, R. PIPENN: Protein interface prediction from sequence with an ensemble of neural nets. Bioinformatics 2022, 38, 2111–2118. [Google Scholar] [CrossRef] [PubMed]

- Sun, J.; Frishman, D. Improved sequence-based prediction of interaction sites in alpha-helical transmembrane proteins by deep learning. Comput. Struct. Biotechnol. J. 2021, 19, 1512–1530. [Google Scholar] [CrossRef]

- Tran, H.N.; Xuan, Q.N.P.; Nguyen, T.T. DeepCF-PPI: Improved prediction of protein–protein interactions by combining learned and handcrafted features based on attention mechanisms. Appl. Intell. 2023. [Google Scholar] [CrossRef]

- Liu-Wei, W.; Kafkas, S.; Chen, J.; Dimonaco, N.J.; Tegner, J.; Hoehndorf, R. DeepViral: Prediction of novel virus–host interactions from protein sequences and infectious disease phenotypes. Bioinformatics 2021, 37, 2722–2729. [Google Scholar] [CrossRef]

- Wee, J.; Xia, K. Persistent spectral based ensemble learning (PerSpect-EL) for protein–protein binding affinity prediction. Brief. Bioinform. 2022, 23, bbac024. [Google Scholar] [CrossRef]

- Xie, Z.; Xu, J. Deep graph learning of inter-protein contacts. Bioinformatics 2022, 38, 947–953. [Google Scholar] [CrossRef]

- Xu, H.; Xu, D.; Zhang, N.; Zhang, Y.; Gao, R. Protein-Protein Interaction Prediction Based on Spectral Radius and General Regression Neural Network. J. Proteome Res. 2021, 20, 1657–1665. [Google Scholar] [CrossRef]

- Yan, Y.; Huang, S.Y. Accurate prediction of inter-protein residue-residue contacts for homo-oligomeric protein complexes. Brief. Bioinform. 2021, 22, bbab038. [Google Scholar] [CrossRef] [PubMed]

- Yang, Y.X.; Wang, P.; Zhu, B.T. Importance of interface and surface areas in protein–protein binding affinity prediction: A machine learning analysis based on linear regression and artificial neural network. Biophys. Chem. 2022, 283, 106762. [Google Scholar] [CrossRef] [PubMed]

- Yin, R.; Feng, B.Y.; Varshney, A.; Pierce, B.G. Benchmarking AlphaFold for protein complex modeling reveals accuracy determinants. Protein Sci. 2022, 31, e4379. [Google Scholar] [CrossRef] [PubMed]

- Zhang, C.; Zheng, W.; Cheng, M.; Omenn, G.S.; Freddolino, P.L.; Zhang, Y. Functions of Essential Genes and a Scale-Free Protein Interaction Network Revealed by Structure-Based Function and Interaction Prediction for a Minimal Genome. J. Proteome Res. 2021, 20, 1178–1189. [Google Scholar] [CrossRef]

- Zhong, W.; He, C.; Xiao, C.; Liu, Y.; Qin, X.; Yu, Z. Long-distance dependency combined multi-hop graph neural networks for protein–protein interactions prediction. BMC Bioinform. 2022, 23, 521. [Google Scholar] [CrossRef]

- Zhu, T.; Qin, Y.; Xiang, Y.; Hu, B.; Chen, Q.; Peng, W. Distantly supervised biomedical relation extraction using piecewise attentive convolutional neural network and reinforcement learning. J. Am. Med. Inform. Assoc. 2021, 28, 2571–2581. [Google Scholar] [CrossRef] [PubMed]

- Zhu, F.; Deng, L.; Dai, Y.; Zhang, G.; Meng, F.; Luo, C.; Hu, G.; Liang, Z. PPICT: An integrated deep neural network for predicting inter-protein PTM cross-talk. Brief. Bioinform. 2023, 24, bbad052. [Google Scholar] [CrossRef]

- Chen, M.; Ju, C.J.T.; Zhou, G.; Chen, X.; Zhang, T.; Chang, K.W.; Zaniolo, C.; Wang, W. Multifaceted protein–protein interaction prediction based on Siamese residual RCNN. Bioinformatics 2019, 35, i305–i314. [Google Scholar] [CrossRef]

- Wang, X.; Xu, J.; Shi, W.; Liu, J. OGRU: An Optimized Gated Recurrent Unit Neural Network. J. Phys. Conf. Ser. 2019, 1325, 012089. [Google Scholar] [CrossRef]

- Hashemifar, S.; Neyshabur, B.; Khan, A.A.; Xu, J. Predicting protein–protein interactions through sequence-based deep learning. Bioinformatics 2018, 34, i802–i810. [Google Scholar] [CrossRef] [PubMed]

- Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Philip, S.Y. A comprehensive survey on graph neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4–24. [Google Scholar] [CrossRef] [PubMed]

- Fan, W.; Ma, Y.; Li, Q.; He, Y.; Zhao, E.; Tang, J.; Yin, D. Graph neural networks for social recommendation. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 417–426. [Google Scholar]

- Liu, M.; Gao, H.; Ji, S. Towards deeper graph neural networks. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual, 6–10 July 2020; pp. 338–348. [Google Scholar]

- Zhou, J.; Cui, G.; Hu, S.; Zhang, Z.; Yang, C.; Liu, Z.; Wang, L.; Li, C.; Sun, M. Graph neural networks: A review of methods and applications. AI Open 2020, 1, 57–81. [Google Scholar] [CrossRef]

- Zhang, S.; Tong, H.; Xu, J.; Maciejewski, R. Graph convolutional networks: A comprehensive review. Comput. Soc. Netw. 2019, 6, 1–23. [Google Scholar] [CrossRef]

- Wu, F.; Souza, A.; Zhang, T.; Fifty, C.; Yu, T.; Weinberger, K. Simplifying graph convolutional networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6861–6871. [Google Scholar]

- Chen, M.; Wei, Z.; Huang, Z.; Ding, B.; Li, Y. Simple and deep graph convolutional networks. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 1725–1735. [Google Scholar]

- Wang, X.; He, X.; Cao, Y.; Liu, M.; Chua, T.S. Kgat: Knowledge graph attention network for recommendation. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 950–958. [Google Scholar]

- Song, W.; Xiao, Z.; Wang, Y.; Charlin, L.; Zhang, M.; Tang, J. Session-based social recommendation via dynamic graph attention networks. In Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining, Melbourne, VIC, Australia, 11–15 February 2019; pp. 555–563. [Google Scholar]

- Wang, X.; Ji, H.; Shi, C.; Wang, B.; Ye, Y.; Cui, P.; Yu, P.S. Heterogeneous graph attention network. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 15–17 May 2019; pp. 2022–2032. [Google Scholar]

- Kiranyaz, S.; Avci, O.; Abdeljaber, O.; Ince, T.; Gabbouj, M.; Inman, D.J. 1D convolutional neural networks and applications: A survey. Mech. Syst. Signal Process. 2021, 151, 107398. [Google Scholar] [CrossRef]

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6999–7019. [Google Scholar] [CrossRef] [PubMed]

- Guo, J.; Han, K.; Wu, H.; Tang, Y.; Chen, X.; Wang, Y.; Xu, C. Cmt: Convolutional neural networks meet vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12175–12185. [Google Scholar]

- Li, M.M.; Huang, K.; Zitnik, M. Graph representation learning in biomedicine and healthcare. Nat. Biomed. Eng. 2022, 6, 1353–1369. [Google Scholar] [CrossRef]

- Wang, T.; Isola, P. Understanding contrastive representation learning through alignment and uniformity on the hypersphere. In Proceedings of the International Conference on Machine Learning. PMLR, Virtual, 13–18 July 2020; pp. 9929–9939. [Google Scholar]

- Donahue, J.; Simonyan, K. Large scale adversarial representation learning. Adv. Neural Inf. Process. Syst. 2019, 32, 1–11. [Google Scholar]

- Jatnika, D.; Bijaksana, M.A.; Suryani, A.A. Word2vec model analysis for semantic similarities in english words. Procedia Comput. Sci. 2019, 157, 160–167. [Google Scholar] [CrossRef]

- Di Gennaro, G.; Buonanno, A.; Palmieri, F.A. Considerations about learning Word2Vec. J. Supercomput. 2021, 77, 12320–12335. [Google Scholar] [CrossRef]

- Grohe, M. word2vec, node2vec, graph2vec, x2vec: Towards a theory of vector embeddings of structured data. In Proceedings of the 39th ACM SIGMOD-SIGACT-SIGAI Symposium on Principles of Database Systems, Portland, OR, USA, 14–19 June 2020; pp. 1–16. [Google Scholar]

- Vahdat, A.; Kautz, J. NVAE: A deep hierarchical variational autoencoder. Adv. Neural Inf. Process. Syst. 2020, 33, 19667–19679. [Google Scholar]

- Zhai, J.; Zhang, S.; Chen, J.; He, Q. Autoencoder and its various variants. In Proceedings of the 2018 IEEE international conference on systems, man, and cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 415–419. [Google Scholar]

- Zhang, G.; Liu, Y.; Jin, X. A survey of autoencoder-based recommender systems. Front. Comput. Sci. 2020, 14, 430–450. [Google Scholar] [CrossRef]

- Pereira, R.C.; Santos, M.S.; Rodrigues, P.P.; Abreu, P.H. Reviewing autoencoders for missing data imputation: Technical trends, applications and outcomes. J. Artif. Intell. Res. 2020, 69, 1255–1285. [Google Scholar] [CrossRef]

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270. [Google Scholar] [CrossRef]

- Smagulova, K.; James, A.P. A survey on LSTM memristive neural network architectures and applications. Eur. Phys. J. Spec. Top. 2019, 228, 2313–2324. [Google Scholar] [CrossRef]

- Hewamalage, H.; Bergmeir, C.; Bandara, K. Recurrent neural networks for time series forecasting: Current status and future directions. Int. J. Forecast. 2021, 37, 388–427. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010. [Google Scholar]

- Vithayathil Varghese, N.; Mahmoud, Q.H. A survey of multi-task deep reinforcement learning. Electronics 2020, 9, 1363. [Google Scholar] [CrossRef]

- Feng, D.; Haase-Schütz, C.; Rosenbaum, L.; Hertlein, H.; Glaeser, C.; Timm, F.; Wiesbeck, W.; Dietmayer, K. Deep multi-modal object detection and semantic segmentation for autonomous driving: Datasets, methods, and challenges. IEEE Trans. Intell. Transp. Syst. 2020, 22, 1341–1360. [Google Scholar] [CrossRef]

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76. [Google Scholar] [CrossRef]

- Kim, H.E.; Cosa-Linan, A.; Santhanam, N.; Jannesari, M.; Maros, M.E.; Ganslandt, T. Transfer learning for medical image classification: A literature review. BMC Med. Imaging 2022, 22, 69. [Google Scholar] [CrossRef] [PubMed]

- Li, C.; Zhang, S.; Qin, Y.; Estupinan, E. A systematic review of deep transfer learning for machinery fault diagnosis. Neurocomputing 2020, 407, 121–135. [Google Scholar] [CrossRef]

- Yang, Z.; Zeng, X.; Zhao, Y.; Chen, R. AlphaFold2 and its applications in the fields of biology and medicine. Signal Transduct. Target. Ther. 2023, 8, 115. [Google Scholar] [CrossRef]

- Pozzati, G.; Zhu, W.; Bassot, C.; Lamb, J.; Kundrotas, P.; Elofsson, A. Limits and potential of combined folding and docking. Bioinformatics 2022, 38, 954–961. [Google Scholar] [CrossRef]

| Deep Learning Methods | Brief Description | Studies |

|---|---|---|

| Graph Neural Networks (GNNs) | Utilize graph data processing with deep learning | Albu et al. [30], Azadifar and Ahmadi [31], Baranwal et al. [32], Dai et al. [33], Gao et al. [34], Hinnerichs and Hoehndorf [35], Jha et al. [36], Kim et al. [37], Kishan et al. [38], Mahbub and Bayzid [39], Quadrini et al. [40], Reau et al. [41], Saxena et al. [42], Schapke et al. [43], St-Pierre Lemieux et al. [44], Strokach et al. [45], Wang et al. [46], Wang et al. [47], Williams et al. [48], Yuan et al. [49], Zaki et al. [50], Zhou et al. [51], Zhou et al. [52] |

| Convolutional Neural Networks (CNNs) | Utilize spatial data processing with deep learning | Chen et al. [53], Gao et al. [54], Guo et al. [55], Hu et al. [56], Hu et al. [57], Kozlovskii and Popov [58], Mallet et al. [59], Song et al. [60], Tsukiyama and Kurata [61], Wang et al. [62], Xu et al. [63], Yang et al. [64], Yuan et al. [65] |

| Representation Learning and Autoencoder | Utilize autoencoding for learning representations with deep learning | Asim et al. [66], Czibula et al. [67], Hasibi and Michoel [68], Ieremie et al. [69], Jha et al. [70], Jiang et al. [71], Liu et al. [72], Nourani et al. [73], Orasch et al. [74], Ray et al. [75], Sledzieski et al. [76], Soleymani et al. [77], Wang et al. [78], Yue et al. [79] |

| Recurrent Neural Networks (including LSTM) | Utilize sequential data processing with deep learning | Alakus and Turkoglu [80], Aybey and Gumus [81], Fang et al. [82], Li et al. [83], Mahdipour et al. [84], Ortiz-Vilchis et al. [85], Szymborski and Emad [86], Tsukiyama et al. [87], Zeng et al. [88], Zhang et al. [89], Zhou et al. [90] |

| Attention Methods and Transformers | Based on attention mechanism and position-specific encoding with deep learning | Asim et al. [91], Baek et al. [92], Li et al. [93], Li et al. [94], Nambiar et al. [95], Tang et al. [96], Warikoo et al. [97], Wu et al. [98], Zhang and Xu [99], Zhu et al. [100] |

| Multi-task and Multi-modal Learning | Perform multiple task or use multiple types of data simultaneously | Capel et al. [101], Li et al. [102], Linder et al. [103], Pan et al. [104], Peng et al. [105], Schulte-Sasse et al. [106], Thi Ngan Dong et al. [107], Zheng et al. [108] |

| Transfer Learning | Use pretrained deep learning models for feature extraction | Chen et al. [109], Derry and Altman [110], Si and Yan [111], Yang et al. [112], Zhang et al. [113] |

| Generic/Applications (including MLP) and Others | Includes models that do not fit specifically into other categories, or using PPIs as inputs of deep learning models | Abdollahi et al. [114], Burke et al. [115], Dai and Bailey-Kellogg [116], Dholaniya and Rizvi [117], Dhusia and Wu [118], Han et al. [119], Humphreys et al. [120], Jovine [121], Kang et al. [122], Li et al. [123], Lin et al. [124], Ma et al. [125], Madani et al. [126], Mahapatra et al. [127], Nikam et al. [128], Pan et al. [129], Pei et al. [130], Pei et al. [131], Singh et al. [132], Song et al. [133], Sreenivasan et al. [134], Stringer et al. [135], Sun and Frishman [136], Tran et al. [137], Wang et al. [138], Wee and Xia [139], Xie and Xu [140], Xu et al. [141], Yan and Huang [142], Yang et al. [143], Yin et al. [144], Zhang et al. [145], Zhong et al. [146], Zhu et al. [147], Zhu et al. [148] |

| Journal | Counts | Percentage (%) |

|---|---|---|

| Bioinformatics | 21 | 17.6 |

| Briefings in Bioinformatics | 12 | 10.1 |

| BMC Bioinformatics | 12 | 10.1 |

| IEEE-ACM Transactions on Computational Biology and Bioinformatics | 7 | 5.9 |

| Computational and Structural Biotechnology Journal | 4 | 3.4 |

| Frontiers in Genetics | 4 | 3.4 |

| Computers in Biology and Medicine | 3 | 2.5 |

| BMC Genomics | 2 | 1.7 |

| IEEE Access | 2 | 1.7 |

| IEEE Journal of Biomedical and Health Informatics | 2 | 1.7 |

| Scientific Reports | 2 | 1.7 |

| Science | 2 | 1.7 |

| Protein Science | 2 | 1.7 |

| Journal of Proteome Research | 2 | 1.7 |

| Interdisciplinary Sciences-Computational Life Sciences | 2 | 1.7 |

| Mathematics | 2 | 1.7 |

| Journal of Chemical Information and Modeling | 2 | 1.7 |

| Nature Machine Intelligence | 2 | 1.7 |

| Others ( Publication) | 34 | 28.6 |

| Author | Metrics and Results | Contributions |

|---|---|---|

| Albu et al. [30] | AUC: 0.92 AUPRC: 0.93 | Developed MM-StackEns, a deep multimodal stacked generalization approach for predicting PPIs. |

| Azadifar and Ahmadi [31] | AUC: 0.8847 | Introduced a semi-supervised learning method for prioritizing candidate disease genes. |

| Baranwal et al. [32] | ACC: 0.9889 MCC: 0.9779 AUC: 0.9955 | Presented Struct2Graph, a GAT designed for structure-based predictions of PPIs. |

| Dai et al. [33] | MSE: 0.2446 PCC: 0.8640 | Formulated a method for predicting protein abundance from scRNA-seq data. |

| Gao et al. [34] | ACC: 0.778 | Developed the Substructure Assembling Graph Attention Network (SA-GAT) for graph classification tasks. |

| Hinnerichs and Hoehndorf [35] | AUC: 0.94 | Devised DTI-Voodoo, a method combining molecular features and PPI networks to predict drug-target interactions. |

| Jha et al. [36] | ACC: 0.9813 MCC: 0.9520 AUC: 0.9828 AUPRC: 0.9886 | Proposed the use of GCN and GAT to predict PPIs. |

| Kim et al. [37] | Precision: 0.60 F1: 0.52 NMI: 0.404 | Proposed DrugGCN, a GCN for drug response prediction using gene expression data. |

| Kishan et al. [38] | AUC: 0.936 AUPRC: 0.941 | Developed a higher-order GCN for biomedical interaction prediction. |

| Mahbub and Bayzid [39] | ACC: 0.715 MCC: 0.27 AUC: 0.719 AUPRC: 0.405 | Introduced EGRET, an edge aggregated GAT for PPI site prediction. |

| Quadrini et al. [40] | ACC: 0.731 MCC: 0.054 AUC: 0.588 | Explored hierarchical representations of protein structure for PPI site prediction. |

| Reau et al. [41] | AUC: 0.85 | Developed DeepRank-GNN, a graph neural network framework for learning interaction patterns. |

| Saxena et al. [42] | ACC: 0.9113 F1: 0.90 | Proposed a network centrality based approach combined with GCNs for link prediction. |

| Schapke et al. [43] | AUC: 0.9043 AUPRC: 0.7668 | Developed EPGAT, an essentiality prediction model based on GATs. |

| St-Pierre Lemieux et al. [44] | ACC: 0.84 MCC: 0.94 | Presented several geometric deep-learning-based approaches for PPI predictions. |

| Strokach et al. [45] | Spearman’s R: 0.62 | Described ELASPIC2 (EL2), a machine learning model for predicting mutation effects on protein folding and PPI. |

| Wang et al. [46] | ACC 0.9365 MCC 0.4301 AUC 0.6068 | Developed SIPGCN, a deep learning model for predicting self-interacting proteins. |

| Wang et al. [47] | ACC: 0.413 | Introduced PLA-GNN, a method for identifying alterations of protein subcellular locations. |

| Williams et al. [48] | AUC: 0.85 | Developed DockNet, a protein–protein interface contact prediction model. |

| Yuan et al. [49] | ACC: 0.776 MCC: 0.333 AUC: 0.786 AUPRC: 0.429 | Proposed GraphPPIS, a deep graph-based framework for PPI site prediction. |

| Zaki et al. [50] | F1: 0.616 | Developed a method for detecting protein complexes in PPI data using GCNs. |

| Zhou et al. [51] | AUC: 0.5916 AP: 0.85 | Conducted a comparative study on various graph neural networks for PPI prediction. |

| Zhou et al. [52] | ACC: 0.856 F1: 0.569 AUC: 0.867 AUPRC: 0.574 | Presented AGAT-PPIS, an augmented graph attention network for PPI site prediction. |

| Author | Metrics and Results | Contributions |

|---|---|---|

| Chen et al. [53] | ACC: 0.9303 F1: 0.9268 MCC: 0.8609 | Developed DCSE, a sequence-based model using MCN and MBC for feature extraction and PPI prediction. |

| Gao et al. [54] | ACC: 0.9534 MCC: 0.9086 AUC: 0.9824 | Introduced EResCNN, an ensemble residual CNN integrating diverse feature representations for PPI prediction. |

| Guo et al. [55] | ACC: 0.884 PCC: 0.366 | Introduced TRScore, a 3D RepVGG-based scoring method for ranking protein docking models. |

| Hu et al. [56] | ACC: 0.9755 MCC: 0.9515 F1: 0.9752 | Developed DeepTrio, a PPI prediction tool using mask multiple parallel convolutional neural networks. |

| Hu et al. [57] | ACC: 0.859 MCC: 0.399 AUC: 0.824 AUPRC: 0479 | Developed D-PPIsite, a deep residual network integrating four sequence-driven features for PPI site prediction. |

| Kozlovskii and Popov [58] | AUC: 0.91 MCC: 0.49 | Developed BiteNet, a 3D convolutional neural network method for protein–peptide binding site detection. |

| Mallet et al. [59] | ACC≃ 0.70 | Developed InDeep, a 3D fully convolutional network tool for predicting functional binding sites within proteins. |

| Song et al. [60] | ACC: 0.776 MCC: 0.333 AUC: 0.786 AUPRC: 0.429 | Presented a method for clustering spatially resolved gene expression using a graph-regularized convolutional neural network, leveraging the PPI network graph. |

| Tsukiyama and Kurata [61] | ACC: 0.956 F1: 0.955 MCC: 0.912 AUC: 0.988 | Proposed Cross-attention PHV, a neural network utilizing cross-attention mechanisms and 1D-CNN for human-virus PPI prediction. |

| Wang et al. [62] | ACC: 0.784 MCC:0.5685 | Proposed an enhancement to a 2D CNN using Sequence-Statistics-Content (SSC) protein sequence encoding format for PPI tasks. |

| Xu et al. [63] | ACC: 0.9617 F1: 0.9257 | Introduced OR-RCNN, a PPI prediction framework based on ordinal regression and recurrent convolutional neural networks. |

| Yang et al. [64] | AUC: 0.885 MCC: 0.390 | Proposed PhosIDN, an integrated deep neural network combining sequence and PPI information for improved prediction of protein phosphorylation sites. |

| Yuan et al. [65] | ACC: 0.9680 | Presented a deep-learning-based approach combining a semi-supervised SVM classifier and a CNN for constructing complete PPI networks. |

| Author | Metrics and Results | Contributions |

|---|---|---|

| Asim et al. [66] | ACC: 0.82 MCC: 0.6399 F1: 0.6399 AUC: 0.88 | Developed LGCA-VHPPI, a deep forest model for effective viral-host PPI prediction using statistical protein sequence representations. |

| Czibula et al. [67] | ACC: 0.983 F1: 0.984 AUC: 0.985 | Introduced AutoPPI, an ensemble of autoencoders designed for PPI prediction, yielding strong performance on several datasets. |

| Hasibi and Michoel [68] | MSE: 0.133 | Demonstrated a Graph Feature Auto-Encoder that utilizes the structure of gene networks for effective prediction of node features. |

| Ieremie et al. [69] | AUC: 0.939 | Proposed TransformerGO, a model predicting PPIs by modeling the attention between sets of Gene Ontology (GO) terms. |

| Jha et al. [70] | ACC: 0.8355 F1: 0.8349 | Utilized a stacked auto-encoder for PPI prediction, showcasing effective feature extraction approach for addressing PPI problems. |

| Jiang et al. [71] | ACC: 0.990 MCC: 0.975 F1 0.990 | Introduced DHL-PPI, a deep hash learning model to predict all-against-all PPI relationships with reduced time complexity. |

| Liu et al. [72] | AUC: 0.658 | Designed GraphPheno, a graph autoencoder-based method to predict relationships between human proteins and abnormal phenotypes. |

| Nourani et al. [73] | AP: 0.7704 | Presented TripletProt, a deep representation learning approach for proteins, proving effective for protein functional annotation tasks. |

| Orasch et al. [74] | AUC 0.88 | Presented a new deep learning architecture for predicting interaction sites and interactions of proteins, showing state-of-the-art performance. |

| Ray et al. [75] | ND | Presented a deep learning methodology for predicting high-confidence interactions between SARS-CoV2 and human host proteins. |

| Sledzieski et al. [76] | AUPRC: 0.798 | Presented D-SCRIPT, a deep-learning model predicting PPIs using only protein sequences, maintaining high accuracy across species. |

| Soleymani et al. [77] | ACC: 0.9568 AUC: 0.9600 | Proposed ProtInteract, a deep learning framework for efficient prediction of protein–protein interactions. |

| Wang et al. [78] | ACC: 0.633 AUC: 0.681 AUPRC: 0.339 | Introduced DeepPPISP-XGB, a method integrating deep learning and XGBoost for effective prediction of PPI sites. |

| Yue et al. [79] | ACC: 0.9048 AUC: 0.93 | Proposed a deep learning framework to identify essential proteins integrating features from the PPI network, subcellular localization, and gene expression profiles. |

| Author | Metrics and Results | Contributions |

|---|---|---|

| Alakus and Turkoglu [80] | ACC: 0.9776 F1: 0.7942 AUC: 0.89 | Proposed a deep learning method for predicting protein interactions in SARS-CoV-2. |

| Aybey and Gumus [81] | AUC: 0.715 MCC: 0.227 F1: 0.330 | Developed SENSDeep, an ensemble deep learning method, for predicting protein interaction sites. |

| Fang et al. [82] | ACC: 0.9445 ROC: 0.94 | Employed an integrated LSTM-based approach for predicting protein–protein interactions in plant-pathogen studies. |

| Li et al. [83] | ACC: 0.848 AUC: 0.746 AUPRC: 0.326 | Proposed DELPHI, a deep learning suite for PPI-binding sites prediction. |

| Mahdipour et al. [84] | ACC: 1.0 F1: 1.0 | Introduced RENA, an innovative method for PPI network alignment using a deep learning model. |

| Ortiz-Vilchis et al. [85] | ACC: 0.949 | Utilized LSTM model to generate relevant protein sequences for protein interaction prediction. |

| Szymborski and Emad [86] | AUC: 0.978 AUPRC: 0.974 | Introduced RAPPPID, an AWD-LSTM twin network, to predict protein–protein interactions. |

| Tsukiyama et al. [87] | ACC: 0.985 AUC: 0.976 | Presented LSTM-PHV, a model for predicting human-virus protein–protein interactions. |

| Zeng et al. [88] | ACC: 0.9048 F1: 0.7585 | Introduced a deep learning framework for identifying essential proteins by integrating multiple types of biological information. |

| Zhang et al. [89] | ACC: 0.83 AUC: 0.93 | Presented protein2vec, an LSTM-based approach for predicting protein–protein interactions. |

| Zhou et al. [90] | ACC: 0.75 | Implemented LSTM-based model for predicting protein–protein interaction residues using frustration indices. |

| Author | Metrics and Results | Contributions |

|---|---|---|

| Asim et al. [91] | ACC: 0.926 F1: 0.9195 MCC: 0.855 | Proposed ADH-PPI, an attention-based hybrid model with superior accuracy for PPI prediction. |

| Baek et al. [92] | ACC: 0.868 MCC: 0.768 F1: 0.893 AUC: 0.982 | Utilized a three-track neural network integrating information at various dimensions for protein structure and interaction prediction. |

| Li et al. [93] | F1: 0.925 | Offered a PPI relationship extraction method through multigranularity semantic fusion, achieving high F1-scores. |

| Li et al. [94] | ACC: 0.9519 MCC: 0.9045 AUC: 0.9860 | Introduced SDNN-PPI, a self-attention-based PPI prediction method, achieving up to 100% accuracy on independent datasets. |

| Nambiar et al. [95] | ACC: 0.98 AUC: 0.991 | Developed a Transformer neural network that excelled in protein interaction prediction and family classification. |

| Tang et al. [96] | ACC: 0.631 F1: 0.393 | Proposed HANPPIS, an effective hierarchical attention network structure for predicting PPI sites. |

| Warikoo et al. [97] | F1: 0.86 | Introduced LBERT, a lexically aware transformer-based model that outperformed state-of-the-art models in PPI tasks. |

| Wu et al. [98] | AUPRC: 0.8989 | Presented CFAGO, an efficient protein function prediction model integrating PPI networks and protein biological attributes. |

| Zhang and Xu [99] | ACC: 0.856 | Introduced a kernel ensemble attention method for graph learning applied to PPIs, showing competitive performance. |

| Zhu et al. [100] | ACC: 0.934 F1: 0.932 AUC: 0.935 | Introduced the SGAD model, improving the performance of Protein Interaction Network Reconstruction. |

| Author | Metrics and Results | Contributions |

|---|---|---|

| Capel et al. [101] | AUC: 0.7632 AUPRC: 0.3844 | Proposed a multi-task deep learning approach for predicting residues in PPI interfaces. |

| Li et al. [102] | AUC: 0.895 AUPRC: 0.899 | Developed EP-EDL, an ensemble deep learning model for accurate prediction of human essential proteins. |

| Linder et al. [103] | AUC: 0.96 | Introduced scrambler networks to improve the interpretability of neural networks for biological sequences. |

| Pan et al. [104] | ACC: 0.8947 MCC: 0.7902 AUC: 0.9548 | Proposed DWPPI, a network embedding-based approach for PPI prediction in plants. |

| Peng et al. [105] | AUC: 0.9116 AUPRC: 0.8332 | Introduced MTGCN, a multi-task learning method for identifying cancer driver genes. |

| Schulte-Sasse et al. [106] | AUPRC: 0.76 | Developed EMOGI, integrating MULTIOMICS data with PPI networks for cancer gene prediction. |

| Thi Ngan Dong et al. [107] | AUC: 0.9804 F1: 0.9379 | Developed a multitask transfer learning approach for predicting virus-human and bacteria-human PPIs. |

| Zheng et al. [108] | AUPRC: 0.965 | Developed DeepAraPPI, a deep learning framework for predicting PPIs in Arabidopsis thaliana. |

| Author | Metrics and Results | Contributions |

|---|---|---|

| Chen et al. [109] | ACC: 0.9745 | Developed TNNM, a model for predicting essential proteins with superior performance on two public databases. |

| Derry and Altman [110] | AUC: 0.881 | Proposed COLLAPSE, a framework for identifying protein structural sites, demonstrating excellent performance in various tasks including PPIs. |

| Si and Yan [111] | AvgPR: 0.576 | Presented DRN-1D2D_Inter, a deep learning method for inter-protein contact prediction with enriched input features. |

| Yang et al. [112] | ACC: 0.9865 F1: 0.9236 AUPRC: 0.974 | Utilized a Siamese CNN and a multi-layer perceptron for human-virus PPI prediction, applying transfer learning for human-SARS-CoV-2 PPIs. |

| Zhang et al. [113] | AvgPR: 0.6596 | Introduced HDIContact, a deep learning framework for inter-protein residue contact prediction, showcasing promising results for understanding PPI mechanisms. |

| Author | Contributions |

|---|---|

| Abdollahi et al. [114] | Developed WinBinVec, a window-based deep learning model to identify cancer PPIs. |

| Burke et al. [115] | Demonstrated a potential of AlphaFold2 in predicting structures for protein interactions. |

| Dai and Bailey-Kellogg [116] | Presented PInet, a Geometric Deep Neural Network that predicts PPI from point clouds encoding the structures of two partner proteins. |

| Dholaniya and Rizvi [117] | Examined the efficacy of various sequence-based descriptors in predicting PPIs. |

| Dhusia and Wu [118] | Proposed a neural network model to estimate protein–protein association rates. |

| Han et al. [119] | Applied PointNet for protein docking decoys evaluation. |

| Humphreys et al. [120] | Used proteome-wide amino acid coevolution analysis and deep-learning-based structure modeling for core eukaryotic protein complexes. |

| Jovine [121] | Used AlphaFold2 and ColabFold to investigate the activation of uromodulin. |

| Kang et al. [122] | Introduced HN-PPISP, a hybrid neural network for PPI site prediction. |

| Li et al. [123] | Proposed HDOCKsite, an approach incorporating interface residue restraints into protein–protein docking. |

| Lin et al. [124] | Proposed DeepHomo2.0, a model that predicts PPIs of homodimeric complexes. |

| Ma et al. [125] | Proposed MSF-DTA, a deep-learning-based method using PPI information for predicting drug-target affinity. |

| Madani et al. [126] | Proposed CGAN-Cmap, a novel hybrid model for protein contact map prediction. |

| Mahapatra et al. [127] | Developed DNN-XGB, a hybrid classifier for PPI prediction combining DNN and XGBoost. |

| Nikam et al. [128] | Developed DeepBSRPred for predicting PPI binding sites using protein sequence. |

| Pan et al. [129] | Presented a framework combining discrete Hilbert transform (DHT) with DNN for plant PPI prediction. |

| Pei et al. [130] | Utilized deep learning methods for analyzing coevolution of human proteins in mitochondria and modeling protein complexes. |

| Pei et al. [131] | Employed AlphaFold to predict PPIs and interfaces for coevolution signals. |

| Singh et al. [132] | Introduced Topsy-Turvy, a sequence-based multi-scale model for PPI prediction. |

| Song et al. [133] | Proposed TAGPPI, an end-to-end framework to predict PPIs using protein sequences and graph learning method. |

| Sreenivasan et al. [134] | Developed MolPMoFiT for predicting protein clusters based on chemical structure. |

| Stringer et al. [135] | Developed PIPENN, an ensemble of neural networks for protein interface prediction from protein sequences. |

| Sun and Frishman [136] | Developed DeepTMInter, a novel approach for sequence-based prediction of interaction sites in alpha-helical transmembrane proteins. |

| Tran et al. [137] | Introduced DeepCF-PPI, combining handcrafted and learned features for PPI prediction. |

| Wang et al. [138] | Developed DeepViral, a deep learning method that predicts PPIs between humans and viruses using protein sequences and infectious disease phenotypes. |

| Wee and Xia [139] | Proposed PerSpect-EL, an ensemble learning model for protein–protein binding prediction. |

| Xie and Xu [140] | Developed GLINTER, a deep learning method for inter-protein contact prediction, using protein tertiary structures and a pretrained language model. |

| Xu et al. [141] | Developed GRNN-PPI, a PPI prediction algorithm for multiple datasets. |

| Yan and Huang [142] | Proposed DeepHomo, a deep learning model for predicting inter-protein residue-residue contacts across homo-oligomeric protein interfaces. |

| Yang et al. [143] | Examined interface and surface areas in protein–protein binding prediction. |

| Yin et al. [144] | Benchmarked the use of AlphaFold for protein complex modeling. |

| Zhang et al. [145] | Predicted functions and a PPI network for proteins in the minimal genome JCVI-syn3A. |

| Zhong et al. [146] | Presented a multi-hop neural network model for predicting multi-label PPIs. |

| Zhu et al. [147] | Proposed PACNN+RL, a hybrid deep learning and reinforcement learning method, for biomedical relation extraction. |

| Zhu et al. [148] | Introduced PPICT, a deep neural network designed to predict PTM inter-protein cross-talk. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, M. Recent Advances in Deep Learning for Protein-Protein Interaction Analysis: A Comprehensive Review. Molecules 2023, 28, 5169. https://doi.org/10.3390/molecules28135169

Lee M. Recent Advances in Deep Learning for Protein-Protein Interaction Analysis: A Comprehensive Review. Molecules. 2023; 28(13):5169. https://doi.org/10.3390/molecules28135169

Chicago/Turabian StyleLee, Minhyeok. 2023. "Recent Advances in Deep Learning for Protein-Protein Interaction Analysis: A Comprehensive Review" Molecules 28, no. 13: 5169. https://doi.org/10.3390/molecules28135169

APA StyleLee, M. (2023). Recent Advances in Deep Learning for Protein-Protein Interaction Analysis: A Comprehensive Review. Molecules, 28(13), 5169. https://doi.org/10.3390/molecules28135169