Abstract

A great variety of computational approaches support drug design processes, helping in the selection of new potentially active compounds and the optimization of their physicochemical and ADMET properties. Machine learning is a group of methods that can evaluate enormous amounts of data in a relatively short time. However, the quality of machine-learning-based predictions depends on the data supplied for model training. In this study, we used deep neural networks for the task of compound activity prediction and developed dropout-based approaches for estimating prediction uncertainty. Several types of analyses were performed: the relationships between the prediction error, similarity to the training set, prediction uncertainty, and the number and standard deviation of activity values were examined. We tested whether incorporating information about prediction uncertainty influences compound ranking based on predicted activity, and prediction uncertainty was used to search for potential errors in the ChEMBL database. The results indicate that incorporating information about the uncertainty of compound activity prediction can be of great help in virtual screening experiments.

1. Introduction

Computational methods have become an indispensable part of the drug design process, supporting its every stage, from proposing new drug candidates, via optimization of their activity, to tuning their physicochemical and pharmacokinetic properties and minimizing adverse effects (computer-aided drug design, CADD) [1,2,3,4,5,6,7,8]. The great demand for new medications for various diseases drives experimental work in the field, which causes exponential growth in the amount of pharmaceutical data that can then be used for modeling compound bioactivity and properties.

A number of databases store information about various aspects of biologically active compounds, from data on compound activity towards a particular target, such as the ChEMBL database [9] or PDSP [10], through information on the 3-dimensional structure of proteins (PDB) [11], to data on existing drugs (DrugBank) [12] or compound toxicity (TOXNET) [13]. The information stored in such databases can be very useful during the design of new compounds with desired biological activity; however, the sheer amount of information makes it impossible to analyze with simple statistical tools. Therefore, more sophisticated tools need to be used in order to derive relationships that can facilitate the process of finding new drug candidates. This is the reason why machine learning (ML) methods have recently gained such great popularity in the field of drug design. They are used to select potential drug candidates from large compound databases, to generate the structures of new chemical compounds de novo, and to optimize their physicochemical and pharmacokinetic properties [14,15,16,17,18,19,20,21,22,23,24,25,26,27].

Despite the wide range of possibilities offered by ML methods, there are also problems that lead to inaccurate predictions of compound activity or other evaluated properties. First of all, in order to apply ML methods to cheminformatic problems, the structures of chemical compounds need to be properly represented. One of the most popular approaches to this task is fingerprinting, that is, translating a compound into a bit string that encodes information about its structure [28,29,30,31,32]. There are two main types of fingerprints, hashed and key-based, and each of them loses some information about the compound structure. For example, in key-based fingerprints, subsequent positions provide information about the presence or absence of particular chemical moieties in the molecule; however, once a compound is represented in such a form, information about the connections between these moieties is lost.

Another problem with applying ML methods to the selection of new drug candidates is that the already known ligands of a given receptor usually cover a relatively narrow chemical space [33]. As a consequence, an ML model trained on them gives correct activity predictions for compounds that are structurally close to the previously examined ones, but has difficulty evaluating structurally novel compounds. Generalization issues are difficult to solve, and various approaches have already been tested to improve the prediction accuracy for molecules that are structurally dissimilar to known ligands [34,35].

Each computational model needs to be evaluated in terms of its prediction accuracy before it is applied in CADD tasks. Such retrospective studies are also challenging, as a proper testing approach needs to be selected. Several studies have already reported that cross-validation (CV) with random splitting leads to overoptimistic results, because it rewards a model that memorizes the training set rather than one that generalizes to new data. However, other splitting approaches (such as cluster-based or scaffold-based splitting) are not perfect either; they do not fully solve the problem of assessing the ability of a model to evaluate structurally novel compounds [33,34].

Another problem related to the application of computational tools in CADD is that most of them (not only ML-based ones, but also pharmacophore modeling or homology modeling when model evaluation is considered) first need to be trained, which is usually done on experimental data stored in various databases. However, as has already been indicated and as is also a subject of this study, experimental data are not always reproducible and the reported compound activity values are not always reliable [36].

There are different types of uncertainty that can be considered. The two most important categories are epistemic and aleatoric uncertainty. The former is sometimes also called systematic uncertainty, and its source is lack of knowledge of various types. It can be related to misunderstanding of the analyzed process or to missing data of a particular type. Epistemic uncertainty influences evaluations of events of the ‘accident’ type, such as the probability of failure of a particular machine or the probability of human error (when the analyst does not possess enough data to make a proper decision). Aleatoric uncertainty, on the other hand, is also known as statistical uncertainty and is related to randomness occurring during an experiment (causing differences in the outcome when the experiment is run several times with the same settings) [37].

Out of the wide range of ML models applied in CADD, deep learning (DL) approaches are now gaining great popularity. DL methods are known for their ability to model complicated dependencies in data much more efficiently than their shallow counterparts. In CADD, they are used both for the evaluation of compound activity and other properties (physicochemical, ADMET) and for the generation of molecules with properties falling in a specific range of values (e.g., with defined solubility, stability, etc.) [38,39,40,41,42,43,44,45].

There are different approaches to estimating prediction uncertainty. First, we would like to remark on the use of soft-max probabilities in uncertainty estimation. It should be pointed out that measures calculated solely from the soft-max output distribution do not actually model uncertainty. As shown by Gal et al. [46], the model can be certain (meaning a high probability of class assignment) for a data point that was never seen by the model during training. Given a perfect classifier and a sample from outside the training distribution that has some features resembling a specific subgroup of the training set (e.g., active compounds), we would like the ligand to be predicted as active, but with a measurable margin of uncertainty (as the model has not observed such an exact sample before). The soft-max distribution, however, is expected to report such an activity prediction as certain, as it does not provide any additional information about its decision.

In this study, we used the method for uncertainty estimation proposed by Gal et al.: dropout-based uncertainty. It uses a non-deterministic model both during training and evaluation. The stochasticity comes from the dropout mechanism [47], which was originally developed to combat overfitting of neural networks. In the original formulation, some of the network units (i.e., neurons) are dropped out by zeroing their weights, which in turn means that they do not contribute to the prediction. The set of neurons that are dropped out is different in each iteration (for each data batch, different neurons are dropped). In the typical dropout setting, no neurons are dropped during evaluation, as we typically want the prediction to be deterministic.

Nevertheless, for dropout-based uncertainty, dropout is kept on during inference. Moreover, each test sample is passed through the network multiple times, each time with a different dropout mask (i.e., a different set of neurons dropped), and prediction statistics are calculated from those outputs. The variance of the outputs across runs for a given data point yields the model uncertainty.
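The procedure can be illustrated with a minimal, dependency-free sketch. The one-hidden-layer network, the toy weights and the names `forward` and `mc_dropout_predict` are hypothetical and chosen only for illustration; the study itself used a larger network implemented in DeepChem.

```python
import random
import statistics


def relu(x):
    return max(0.0, x)


def forward(x, w_hidden, w_out, p_drop, rng):
    """One stochastic forward pass through a toy one-hidden-layer network."""
    hidden = []
    for weights in w_hidden:
        h = relu(sum(w * xi for w, xi in zip(weights, x)))
        # Dropout stays ON at inference: each unit is dropped with probability
        # p_drop; kept units are rescaled (inverted dropout, an assumption here).
        keep = rng.random() >= p_drop
        hidden.append(h / (1.0 - p_drop) if keep else 0.0)
    return sum(w * h for w, h in zip(w_out, hidden))


def mc_dropout_predict(x, w_hidden, w_out, p_drop=0.5, n_passes=100, seed=0):
    """Return (mean prediction, variance across passes) for one sample."""
    rng = random.Random(seed)
    outputs = [forward(x, w_hidden, w_out, p_drop, rng) for _ in range(n_passes)]
    return statistics.fmean(outputs), statistics.pvariance(outputs)


# Hypothetical toy weights: 3 inputs, 4 hidden units, 1 output.
W_HIDDEN = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5], [-0.4, 0.1, 0.9], [0.2, 0.2, 0.2]]
W_OUT = [1.0, -0.5, 0.7, 0.3]

mean_pred, uncertainty = mc_dropout_predict([1.0, 0.0, 1.0], W_HIDDEN, W_OUT)
print(f"prediction = {mean_pred:.3f}, uncertainty (variance) = {uncertainty:.3f}")
```

Setting `p_drop=0.0` recovers the deterministic prediction with zero variance, which is a convenient sanity check for such a routine.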

We would also like to mention two other approaches to estimating model uncertainty. Bayesian neural networks are a popular framework for models with built-in uncertainty over weights; they have already been used to estimate model uncertainty, with Probabilistic Backpropagation [48] as an example. Another approach related to Bayesian models belongs to the group of Variational Inference methods, which provide an approximation to Bayesian inference over the network’s weights [49]. The drawback of those methods is computational complexity, whereas the approach used in this study requires only a few additional forward passes through the model.

In the study, several types of experiments have been performed:

- the relationships between the prediction error, similarity to the training set and prediction uncertainty for the data from the test set were examined, together with the correlation between uncertainty and the number of activity values provided, and between uncertainty and the standard deviation of activity values

- we tested whether incorporating information about prediction uncertainty improves compound ranking based on predicted activity

- the uncertainty of predictions was used to search for potential errors in the ChEMBL database.

The study was carried out for two sets of targets: 10 targets from previous benchmark experiments [35] and an additional 15 targets from various G protein-coupled receptor (GPCR) families. The predictions (numerical regression of ligand bioactivity) were carried out in two settings, random CV and balanced agglomerative clustering (BAC), for two compound representations.

2. Results and Discussion

2.1. General Observations

Table 1 and Table 2 gather values of mean squared error (MSE) for CV and BAC splitting, together with the estimation of uncertainty.

Table 1.

Regression results obtained for random CV.

Table 2.

Regression results obtained for BAC.

The results gathered in Table 1 and Table 2 show that, in general, MSE values were much higher for BAC splitting than for random CV, which is related to the task being simpler when compounds are divided into folds randomly [35]. In BAC splitting, the compounds from the test set are supposed to be structurally dissimilar to those present in the training set; therefore, this approach evaluates the true ability of ML models to assess compounds covering a broad chemical space. Using such an approach for the evaluation of ML methods always yields worse apparent performance, because CV-based output provides overoptimistic results (the compounds from the test set resemble examples from the training set, so the evaluation task is relatively simple) that are not reflected in real applications of such models.

Interestingly, MSE values obtained for MACCSFP are higher than for the hashed Morgan FP representation in both CV and BAC splitting. The difference is larger for BAC splitting, but this is related to the higher MSE values in these experiments overall.

Another consistent observation is that the MSE is higher than the dropout MSE, which means that the non-deterministic prediction has a lower error than its deterministic equivalent. This can be explained by the fact that drawing multiple dropout masks is equivalent to a prediction made by a committee of networks, which is usually characterized by slightly better results.
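The committee argument can be made concrete with a standard identity for averaged predictions. This is a sketch in notation introduced here only for illustration ($T$ stochastic passes with outputs $\hat{y}_t$, their mean $\bar{y}$ and the true value $y$); it is not a derivation taken from the study:

$$\frac{1}{T}\sum_{t=1}^{T}\left(\hat{y}_t - y\right)^2 = \left(\bar{y} - y\right)^2 + \frac{1}{T}\sum_{t=1}^{T}\left(\hat{y}_t - \bar{y}\right)^2, \qquad \bar{y} = \frac{1}{T}\sum_{t=1}^{T}\hat{y}_t$$

The squared error of the averaged prediction is therefore never larger than the average squared error of the individual passes, and the gap equals the variance across passes. Note that this compares the committee with its own members; on its own it does not guarantee an improvement over a single deterministic pass with dropout switched off.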

Our last observation concerns the uncertainty evaluation. Uncertainty also reaches higher values for BAC experiments than for CV, and, when different compound representations are compared, uncertainty values are higher for MACCSFP than for Morgan FP.

When the results are examined from the target point of view, the highest error rate is observed for ACM1, mGluR5, ACM1 and ACM2 for both representations and splitting approaches and the lowest for D2 and MC3. In general, smaller datasets led to worse prediction efficiency.

2.2. Analysis of Uncertainties, Errors and Compound Similarities

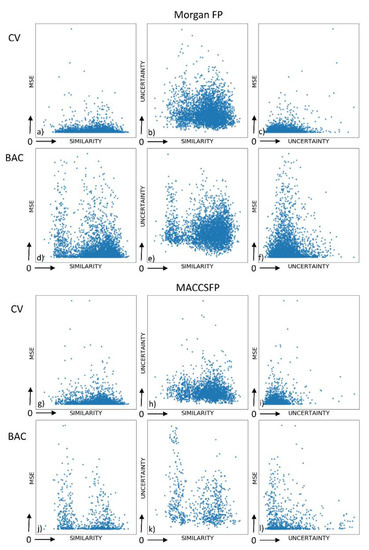

The visualization of dependencies between the regression error, similarity to the training set (calculated for Morgan FP and with the use of Tanimoto coefficient) and uncertainty was performed (Figure 1, Supporting Information File S1).
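For reference, the Tanimoto coefficient on binary fingerprints can be sketched as follows. Fingerprints are represented here as sets of "on" bit positions, and taking the maximum over the training set as the "similarity to the training set" is an assumption made for this illustration; the text does not state how the similarity was aggregated. The function names are hypothetical.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto coefficient of two fingerprints given as collections of on-bits."""
    a, b = set(fp_a), set(fp_b)
    union = len(a | b)
    if union == 0:
        return 0.0  # convention chosen here for two empty fingerprints
    return len(a & b) / union


def similarity_to_training_set(fp, training_fps):
    """Nearest-neighbour similarity (assumed aggregation, see lead-in)."""
    return max(tanimoto(fp, train_fp) for train_fp in training_fps)


query = {1, 2, 3}
training = [{2, 3, 4}, {7, 8}, {1, 2, 3, 9}]
print(similarity_to_training_set(query, training))
```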

Figure 1.

Visual analysis of dependencies between mean squared error (MSE), prediction uncertainty and compound similarity for adenosine A1 receptor. (a) MSE vs. similarity for Morgan FP in CV; (b) uncertainty vs. similarity for Morgan FP in CV; (c) MSE vs. uncertainty for Morgan FP in CV; (d) MSE vs. similarity for Morgan FP in BAC; (e) uncertainty vs. similarity for Morgan FP in BAC; (f) MSE vs. uncertainty for Morgan FP in BAC; (g) MSE vs. similarity for MACCSFP in CV; (h) uncertainty vs. similarity for MACCSFP in CV; (i) MSE vs. uncertainty for MACCSFP in CV; (j) MSE vs. similarity for MACCSFP in BAC; (k) uncertainty vs. similarity for MACCSFP in BAC; (l) MSE vs. uncertainty for MACCSFP in BAC.

The first observation coming from Figure 1 is that there is no significant difference between the results obtained for the two representations used. The only qualitative and strongly indicated difference is the uncertainty vs. similarity dependence for random CV, which is spread over a greater area for Morgan FP than for MACCSFP. For this dependency, it is also visible that the data points are placed differently for random CV and BAC splitting: for random CV, the data points are concentrated closer to higher values of the similarity coefficient, whereas for BAC they spread from the corner with lower similarity and uncertainty values. The highest concentration of data points at low values of both parameters considered (MSE and uncertainty) is observed for MACCSFP and random CV; for other combinations of splitting approach and representation, the data points cover a much broader area of the respective chart, although MSE is a more concentrated parameter than uncertainty, which adopts quite a broad range of values. The MSE vs. similarity charts also depend much more on the splitting approach than on the compound representation, and the highest concentration of points is shifted towards higher similarity values for random CV compared with BAC.

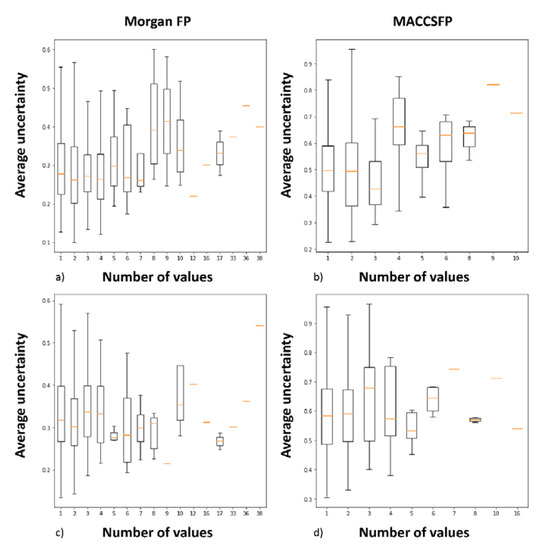

Another type of analysis examined the relationship between the number of different activity values reported and the average prediction uncertainty (Figure 2, Supporting Information File S2). Where no ‘box’ is presented on the chart, only one compound was reported for that particular number of activity values. The activity range is shown by lines, the box spans the first to the last quartile and the orange line marks the median activity value. The results show that there is no direct relationship between the number of activity values provided for a particular compound and the uncertainty of the predictions obtained for it, for both random CV and BAC. It cannot be concluded that an increasing number of reported activity values leads to higher uncertainty.

Figure 2.

Analysis of dependency between prediction uncertainty and number of activity values provided for particular compound in the ChEMBL database for dopamine D2 ligands. (a) for Morgan FP in CV experiments; (b) for MACCSFP in CV experiments; (c) for Morgan FP in BAC experiments; (d) for MACCSFP in BAC experiments.

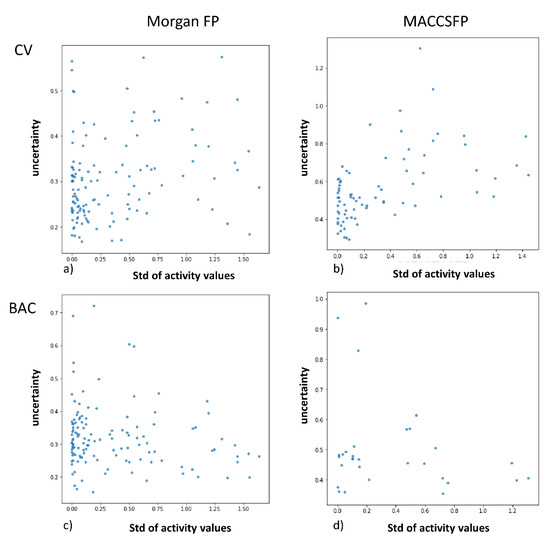

The last type of analysis examined the correlation between the standard deviation of the activity values reported for a given compound and the prediction uncertainty (Figure 3, Supporting Information File S3).

Figure 3.

Analysis of dependency between prediction uncertainty and standard deviation of activity values provided for particular compound in the ChEMBL database for adenosine A1 ligands. (a) for Morgan FP in CV experiments; (b) for MACCSFP in CV experiments; (c) for Morgan FP in BAC experiments; (d) for MACCSFP in BAC experiments.

The first observation coming from Figure 3 is that consistency of the data in the training set (standard deviation of activity values equal to zero) does not translate into clear and certain predictions for a given compound: a wide range of uncertainty is assigned to compounds with a standard deviation of activity values equal to zero. Further, there is no correlation between the standard deviation and the uncertainty of predictions, and even for compounds with a wide range of reported activity values, predictions with low uncertainty can be obtained.

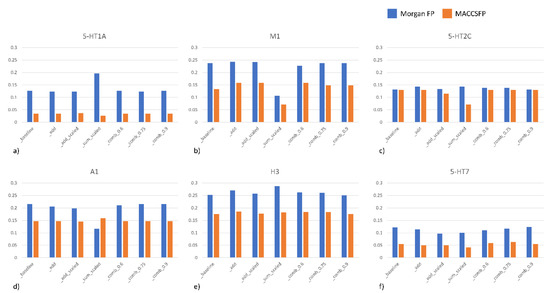

Figure 4.

Precision at top 10% for various ranking strategies for selected targets. (a) 5-HT1A; (b) M1; (c) 5-HT2C; (d) A1; (e) H3; (f) 5-HT7.

2.3. Compounds Ranking

In this experiment, we sorted compounds using a particular strategy and compared the results with the sorting based on true activities. The baseline model sorts only on the basis of the model prediction; the remaining strategies also use information about model uncertainty in various ways. The results indicate that using information about uncertainty in general improves sorting efficiency.

For the ranking strategies, each compound i is described by its predicted bioactivity (in terms of affinity values, Ki) and its prediction uncertainty ui. R denotes the output of the ranking function: the lower the R value, the higher the compound is placed in the ranking.

The following ranking strategies were used:

- Baseline: only the prediction of the model is taken into account

- Add: information about uncertainty is added directly to the model prediction; the less uncertain the model is about the sample, the better

- Scale: the uncertainty estimate is normalized to fit the range [0,1] and used to scale the prediction; the normalization of the uncertainty is based on the values measured for the whole test set

- Add scaled: the uncertainty estimate is normalized to fit into [0,1] and added directly to the prediction

- Sum scaled: both the model prediction and its uncertainty are normalized and then summed up; the normalization of the prediction is based on the predictions over the whole dataset

- Comb λ: linear combination of prediction and uncertainty with the λ coefficient (various λ values were tested).
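The strategies listed above can be sketched in code. The exact formulas are not recoverable from the text, so every expression below is an assumed reading of the verbal descriptions: min-max normalization, multiplication for "scale", and `lam * prediction + (1 - lam) * uncertainty` for the λ combination are all illustrative choices, and the function names are hypothetical.

```python
def min_max(values):
    """Rescale values to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def ranking_scores(pred, unc, strategy="baseline", lam=0.5):
    """Return R for each compound; a lower R means a higher rank.

    pred: predicted affinities (lower = more active); unc: uncertainties.
    All formulas are illustrative assumptions, not the study's equations.
    """
    u_norm, p_norm = min_max(unc), min_max(pred)
    if strategy == "baseline":
        return list(pred)
    if strategy == "add":
        return [p + u for p, u in zip(pred, unc)]
    if strategy == "scale":
        return [p * u for p, u in zip(pred, u_norm)]
    if strategy == "add_scaled":
        return [p + u for p, u in zip(pred, u_norm)]
    if strategy == "sum_scaled":
        return [p + u for p, u in zip(p_norm, u_norm)]
    if strategy == "comb":
        return [lam * p + (1.0 - lam) * u for p, u in zip(pred, unc)]
    raise ValueError(f"unknown strategy: {strategy}")


def rank(scores):
    """Indices of compounds ordered from best (lowest R) to worst."""
    return sorted(range(len(scores)), key=lambda i: scores[i])


pred = [1.0, 2.0, 3.0]   # toy predictions
unc = [2.0, 0.0, 0.1]    # toy uncertainties
for name in ("baseline", "add", "sum_scaled"):
    print(name, rank(ranking_scores(pred, unc, name)))
```

In this toy example the first compound has the best prediction but a large uncertainty, so the uncertainty-aware strategies move it below the second compound.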

The results are presented in the form of the so-called “precision at top 10%”. The compounds are sorted on the basis of their activity, and two lists are prepared: one based on true activity and one based on the predicted values of the activity parameters. Then, the top-scored 10% of compounds from the list based on true activity is cross-checked against the top-scored 10% of compounds from the list based on predicted activity. The percentage of overlapping compounds between these two lists for different strategies, for BAC splitting and example targets, is shown in Figure 4.
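A minimal sketch of this metric is given below. It assumes that lower values mean higher activity (as for Ki) and that the size of the top list is the integer part of 10% of the dataset, with at least one compound; both the rounding rule and the function name are assumptions made for illustration.

```python
def precision_at_top(true_values, scores, fraction=0.1):
    """Fraction of the true top compounds recovered in the predicted top list.

    Lower values are treated as better in both lists (e.g., Ki and R).
    """
    n = len(true_values)
    k = max(1, int(fraction * n))  # assumed rounding rule
    top_true = set(sorted(range(n), key=lambda i: true_values[i])[:k])
    top_pred = set(sorted(range(n), key=lambda i: scores[i])[:k])
    return len(top_true & top_pred) / k


true_ki = [float(i) for i in range(20)]   # compound 0 is the most active
scores = list(true_ki)
scores[1], scores[5] = scores[5], scores[1]  # compound 5 wrongly scored as top
print(precision_at_top(true_ki, scores))     # one of the two top compounds is recovered
```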

We can notice that the results and prediction effectiveness strongly depend both on the target and on the compound representation. For all targets presented in Figure 4, Morgan FP appeared to be the more effective representation. The differences in precision between compound representations also vary between targets, from slight differences for 5-HT2C, via a ~0.1 difference for M1, A1, H3 and 5-HT7, up to a 0.15 difference for 5-HT1A. On the other hand, the differences related to the compound representation were higher than the differences related to the ranking approach. When information on uncertainty is added, the precision values averaged over all targets considered show that the _add approach was the best for both compound representations. Nevertheless, in both cases the improvement over the baseline was relatively small: 0.004 and 0.007 for Morgan FP and MACCSFP, respectively, when values averaged over all targets are taken into account.

However, when particular cases are considered separately, there was an approach that improved the accuracy of ML predictions in comparison to the baseline, and in some examples this improvement was quite substantial. For example, for D2 and H3 ligands, precision at top 10% was higher by ~0.03 when the _sum_scaled approach is compared to the baseline (for Morgan FP). The _sum_scaled approach gave the highest improvement over the baseline for A2A with the MACCSFP representation (~0.04).

In order to examine the influence of incorporation of uncertainty into ML models, the heat maps were prepared comparing the precision at top 10% for baseline and other approaches (Figure 5).

Figure 5.

Heat maps presenting differences in precision at top 10% between approaches that take into account uncertainty and baseline for BAC splitting for 10 targets from benchmark studies.

Figure 5 clearly shows that in the majority of cases incorporating information about prediction uncertainty improved the efficiency of the predictions of ML models (indicated by pink and red cells on the heat maps). Nevertheless, the results strongly depend on the target and compound representation. For M1, A2A and 5-HT6, strong improvement is observed for MACCSFP; for Morgan FP, the highest improvement was obtained for D2, A2A and H3. This effect (for both representations) is observed mainly for the _add, _add_scaled and _sum_scaled approaches. On the other hand, the _sum_scaled approach was the only one for which worsening of the results was observed for some targets (M1, A1 and 5-HT6 for Morgan FP and M1 and 5-HT2C for MACCSFP).

2.4. Detection of Potential Errors in Bioactivity Databases

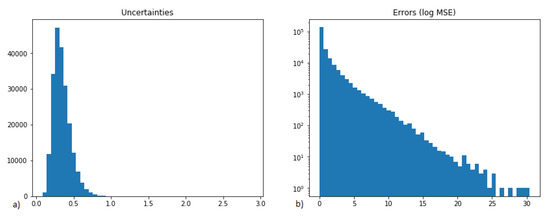

The developed methodology was used for detection of potential errors in the ChEMBL database. First of all, for all targets considered, the MSE of activity prediction together with uncertainties were calculated for the test set (Figure 6).

Figure 6.

Analysis of uncertainty and MSE of activity prediction (calculated for all targets considered). (a) uncertainty; (b) prediction error expressed as log(MSE).

On the basis of these data, the ‘suspected’ data points were indicated; they were defined as those for which the MSE was at or above the 95th percentile and the uncertainty was at or above the 5th percentile.
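The selection rule can be sketched as below, with both thresholds applied exactly as stated in the text. The linear-interpolation percentile and the function names are assumptions for illustration; the percentile convention used in the study is not specified.

```python
def percentile(values, q):
    """Percentile with linear interpolation between order statistics (q in [0, 100])."""
    ordered = sorted(values)
    if not ordered:
        raise ValueError("empty input")
    pos = (len(ordered) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def flag_suspected(errors, uncertainties, err_q=95, unc_q=5):
    """Indices with error >= err_q-th percentile and uncertainty >= unc_q-th percentile."""
    err_thr = percentile(errors, err_q)
    unc_thr = percentile(uncertainties, unc_q)
    return [
        i
        for i, (e, u) in enumerate(zip(errors, uncertainties))
        if e >= err_thr and u >= unc_thr
    ]


errors = [float(i) for i in range(100)]        # toy per-compound errors
uncertainties = [1.0] * 99 + [0.0]             # the last compound has very low uncertainty
print(flag_suspected(errors, uncertainties))   # → [95, 96, 97, 98]
```

Exposing the two percentiles as parameters makes it easy to test other cut-offs on the same data.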

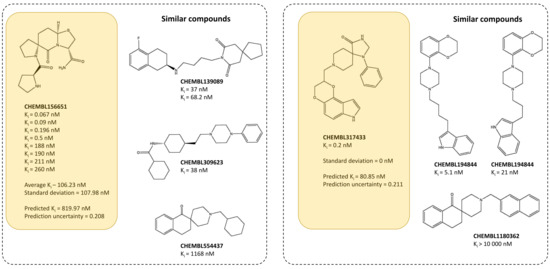

Examples of such compounds—together with the true activities reported and activities of 10 most similar compounds—are presented below (Figure 7).

Figure 7.

Analysis of selected dopamine D2 ligands in terms of affinity values provided in ChEMBL, predicted activity, prediction uncertainty and activity of structurally similar ligands (for Morgan FP).

The provided examples of dopamine D2 ligands indicate that the prediction uncertainty is not correlated with the standard deviation of the activity values provided in activity databases. For ligand CHEMBL156651, 8 Ki values towards the D2 receptor were reported, ranging from 0.067 nM to 260 nM (with an average Ki of 106.23 nM and a standard deviation of 108 nM). The predicted Ki for this compound, 11.11 nM, is however much lower than the actual affinity values, which is also reflected in a high uncertainty factor (0.708). The relatively low predicted Ki is a result of the activities of similar compounds present in the dataset. Figure 7 presents only selected examples, but even among them there are compounds with Ki much lower than the actual average Ki, such as CHEMBL139089, for which two Ki values are reported (37 and 68.2 nM). On the other hand, there is compound CHEMBL317433, for which only one Ki value is available (0.2 nM). Although the standard deviation of Ki values for this compound is equal to zero, the prediction uncertainty is similar to the uncertainty determined for CHEMBL156651 (0.211) and the predicted Ki for this compound is equal to 80.85 nM. In this case, the activity of similar compounds falls in a narrower range, as the most active CHEMBL194844 has an affinity of 5.1 nM, but there is also CHEMBL196171, whose Ki is equal to 21 nM.

3. Materials and Methods

The ChEMBL database [9] was used as a data source. Experiments were performed on 10 target proteins that were previously the subject of a detailed study of dataset preparation for ML experiments [35]: serotonin receptors 5-HT1A, 5-HT2A, 5-HT2C, 5-HT6, 5-HT7 [50,51,52,53], muscarinic receptor ACM1 [54], adenosine receptors A1 [55] and A2A [56,57], histamine receptor H3 [58] and dopamine receptor D2 [59,60]. The targets are mostly representatives of aminergic GPCRs and were selected because the ligands of these receptors and the datasets themselves are well known from previous studies performed on these targets [35]. In addition, 15 proteins covering other GPCR families were selected to minimize the bias related to target selection: bradykinin B1 receptor [61], melanocortin (MC) receptors subtype 3, 4 and 5 [62], kappa opioid receptor (KOR) [63,64], mu opioid receptor (MOR) [64], delta opioid receptor (DOR) [64], orexin receptors 1 and 2 (OX1R, OX2R) [65], cannabinoid CB1 receptor [66], cannabinoid CB2 receptor [66], melatonin receptors MT1A and MT1B [67], metabotropic glutamate receptor mGluR5 [68] and C-C chemokine receptor type 1 (CCR1) [69,70]. The respective datasets were extracted using the following protocol: all records referring to human-related data were gathered, and all records that did not describe binding data (activity parameter in the list: Ki, log(Ki), pKi, IC50, log(IC50), pIC50) were filtered out. Then, only records with the ‘equal to’ relation between the activity parameter and its value were kept, and the units of the activity values had to belong to the set {M, mM, µM, nM, pM, fM}. Additional results were produced for an extended set of relations between the activity parameter and its value (“=”, “<”, “>”, “≤”, “≥”, “~”); these results are presented in the Supporting Information and were obtained for the set of 10 targets from the benchmark studies. IC50 values were recalculated into Ki using the following formula: Ki = IC50/2 [35]. Finally, the Ki values were converted to logarithmic form, and these datasets were used in the study.
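The unit handling and the Ki = IC50/2 recalculation can be sketched as follows. Returning log10 of Ki expressed in nM is an assumption, since the text only says that a logarithmic form was used; the function name is hypothetical, pKi and pIC50 are taken as the negative log10 of the molar concentration, and records given as log(Ki) or log(IC50) are left out because their units are not stated.

```python
import math

# Conversion factors from the allowed units to nM.
TO_NANOMOLAR = {"M": 1e9, "mM": 1e6, "µM": 1e3, "nM": 1.0, "pM": 1e-3, "fM": 1e-6}


def log_ki(parameter, value, unit=None):
    """Return log10(Ki in nM) for a single activity record (assumed target scale)."""
    if parameter in ("pKi", "pIC50"):
        # p-values are -log10 of the molar concentration.
        conc_nm = 10.0 ** (-value) * 1e9
    elif parameter in ("Ki", "IC50"):
        conc_nm = value * TO_NANOMOLAR[unit]
    else:
        raise ValueError(f"unsupported activity parameter: {parameter}")
    if parameter in ("IC50", "pIC50"):
        conc_nm /= 2.0  # Ki = IC50 / 2, as stated in the text
    return math.log10(conc_nm)


print(log_ki("IC50", 200.0, "nM"))  # Ki = 100 nM
print(log_ki("Ki", 1.0, "µM"))      # Ki = 1000 nM
```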

The predictions were carried out in two settings: random CV and BAC [35]. The compound structures were represented by the Morgan fingerprint (radius equal to 2) [71] and MACCSFP [72], both calculated with RDKit [73]. The dataset sizes for these two splitting types are presented in Table 3.

Table 3.

Size of datasets used in the study.

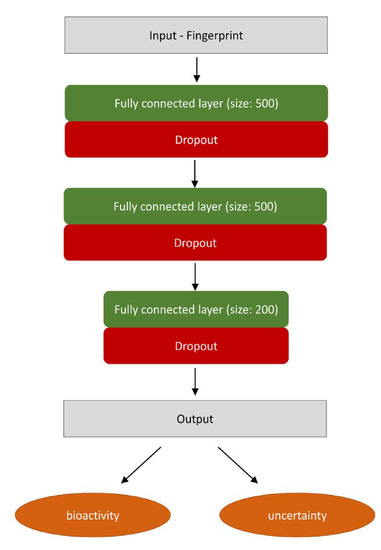

The problem considered in the study is numerical regression of the bioactivity of ligands toward a particular target. For all of the experiments we used the same model architecture: a multi-layer perceptron with 3 hidden layers of sizes 500, 500 and 200 and a single-neuron regression output layer. Each fully connected layer, except the final output layer, was followed by a ReLU activation function. Additionally, to enable uncertainty estimation, each hidden layer was followed by a dropout layer with the probability of dropping a neuron set to 0.5 (Scheme 1), according to Gal et al. [46]. No additional regularization penalty was used during training. For the learning process, data points were supplied in mini-batches of 100 examples, the Adam method [74] was used as the optimizer with the learning rate set to 0.001, and each model was trained for 200 epochs. For each protein-representation pair, 5-fold cross-validation was performed using the two splitting strategies mentioned earlier (random CV, BAC). The model, the training procedure and the uncertainty estimation algorithm were implemented using the DeepChem package [75]. If a specific hyperparameter value is not mentioned, the default value provided by DeepChem was used.

Scheme 1.

Neural network used in the study.

4. Conclusions

In this study, we presented a DL-based approach for examining the uncertainty of compound activity prediction. Several approaches were considered and their influence on activity prediction by ML methods was examined. An extended examination of the relationships between prediction uncertainty and compound similarity, the number of activity values provided and their standard deviation was carried out. We developed several dropout-based approaches for estimating prediction uncertainty and applied uncertainty analysis to the detection of potential errors in the ChEMBL database. The developed methodology can be of great help during virtual screening experiments, as information about prediction uncertainty for compounds indicated as potentially active might have a crucial impact on decisions about their purchase.

Supplementary Materials

The following are available online. File S1. Visual analysis of dependencies between MSE, prediction uncertainty and compound similarity for all targets considered in the study for only ‘=’ relation between activity parameter and its value; File S2. Analysis of dependency between prediction uncertainty and number of activity values provided for particular compound in the ChEMBL database for all targets considered in the study for only ‘=’ relation between activity parameter and its value; File S3. Analysis of dependency between prediction uncertainty and standard deviation of activity values provided for particular compound in the ChEMBL database for all targets considered in the study for only ‘=’ relation between activity parameter and its value. File S4. Visual analysis of dependencies between MSE, prediction uncertainty and compound similarity for benchmark targets for extended set of relations between activity parameter and its value. File S5. Analysis of dependency between prediction uncertainty and number of activity values provided for particular compound in the ChEMBL database for benchmark targets for extended set of relations between activity parameter and its value; File S6. Analysis of dependency between prediction uncertainty and standard deviation of activity values provided for particular compound in the ChEMBL database for benchmark targets for extended set of relations between activity parameter and its value.

Author Contributions

All authors designed experiments; I.S. and D.L. carried out the experiments. All authors analyzed results and prepared the manuscript.

Funding

This research was supported by the grant SONATINA 2018/28/C/NZ7/00145 funded by the National Science Centre, Poland. SP is supported by the Foundation of Polish Science within the START scholarship.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses or interpretation of data; in the writing of the manuscript or in the decision to publish the results.

References

- Sliwoski, G.; Kothiwale, S.; Meiler, J.; Lowe, E.W. Computational methods in drug discovery. Pharmacol. Rev. 2013, 66, 334–395.

- Reddy, A.S.; Pati, S.P.; Kumar, P.P.; Pradeep, H.; Sastry, G.N. Virtual screening in drug discovery—A computational perspective. Curr. Protein Pept. Sci. 2007, 8, 329–351.

- Nicholls, A. What do we know and when do we know it? J. Comput. Mol. Des. 2008, 22, 239–255.

- Rao, V.S.; Srinivas, K. Modern drug discovery process: An in silico approach. J. Bioinform. Seq. Anal. 2011, 2, 89–94.

- Egan, W.J.; Merz, K.M.; Baldwin, J.J. Prediction of drug absorption using multivariate statistics. J. Med. Chem. 2000, 43, 3867–3877.

- Jorgensen, W.L.; Duffy, E.M. Prediction of drug solubility from structure. Adv. Drug Deliv. Rev. 2002, 54, 355–366.

- Ou-Yang, S.-S.; Lu, J.; Kong, X.-Q.; Liang, Z.-J.; Luo, C.; Jiang, H.-L. Computational drug discovery. Acta Pharmacol. Sin. 2012, 33, 1131–1140.

- Chiba, S.; Ikeda, K.; Ishida, T.; Gromiha, M.M.; Taguchi, Y.-H.; Iwadate, M.; Umeyama, H.; Hsin, K.-Y.; Kitano, H.; Yamamoto, K.; et al. Identification of potential inhibitors based on compound proposal contest: Tyrosine-protein kinase Yes as a target. Sci. Rep. 2015, 5, 17209.

- Gaulton, A.; Bellis, L.; Bento, A.P.S.F.F.; Chambers, J.; Davies, M.; Hersey, A.; Light, Y.; McGlinchey, S.; Michalovich, D.; Al-Lazikani, B.; et al. ChEMBL: A large-scale bioactivity database for drug discovery. Nucleic Acids Res. 2011, 40, D1100–D1107.

- Besnard, J.; Ruda, G.F.; Setola, V.; Abecassis, K.; Rodriguiz, R.M.; Huang, X.-P.; Norval, S.; Sassano, M.F.; Shin, A.I.; Webster, L.A.; et al. Automated design of ligands to polypharmacological profiles. Nature 2012, 492, 215–220.

- Berman, H.M.; Bhat, T.N.; Bourne, P.; Feng, Z.; Gilliland, G.; Weissig, H.; Westbrook, J. The Protein Data Bank and the challenge of structural genomics. Nat. Genet. 2000, 7, 957–959.

- Wishart, D.S. DrugBank: A comprehensive resource for in silico drug discovery and exploration. Nucleic Acids Res. 2006, 34, 668–672.

- Wexler, P. TOXNET: An evolving web resource for toxicology and environmental health information. Toxicology 2001, 157, 3–10.

- Melville, J.L.; Burke, E.; Hirst, J. Machine learning in virtual screening. Comb. Chem. High. Throughput Screen. 2009, 12, 332–343.

- Tao, L.; Zhang, P.; Qin, C.; Chen, S.; Zhang, C.; Chen, Z.; Zhu, F.; Yang, S.; Wei, Y.; Chen, Y.Z. Recent progresses in the exploration of machine learning methods as in-silico ADME prediction tools. Adv. Drug Deliv. Rev. 2015, 86, 83–100.

- Fukunishi, Y. Structure-based drug screening and ligand-based drug screening with machine learning. Comb. Chem. High. Throughput Screen. 2009, 12, 397–408.

- Agarwal, S.; Dugar, D.; Sengupta, S. Ranking Chemical Structures for Drug Discovery: A New Machine Learning Approach. J. Chem. Inf. Model. 2010, 50, 716–731.

- Sakiyama, Y.; Yuki, H.; Moriya, T.; Hattori, K.; Suzuki, M.; Shimada, K.; Honma, T. Predicting human liver microsomal stability with machine learning techniques. J. Mol. Graph. Model. 2008, 26, 907–915.

- Ma, X.H.; Jia, J.; Zhu, F.; Xue, Y.; Li, Z.R.; Chen, Y.Z. Comparative analysis of machine learning methods in ligand-based virtual screening of large compound libraries. Comb. Chem. High. Throughput Screen. 2009, 12, 344–357.

- Schwaighofer, A.; Schroeter, T.; Mika, S.; Blanchard, G. How wrong can we get? A review of machine learning approaches and error bars. Comb. Chem. High. Throughput Screen. 2009, 12, 453–468.

- Douguet, M. Ligand-Based Approaches in Virtual Screening. Curr. Comput. Drug Des. 2008, 4, 180–190.

- Chen, B.; Harrison, R.; Papadatos, G.; Willett, P.; Wood, D.J.; Lewell, X.Q.; Greenidge, P.; Stiefl, N. Evaluation of machine-learning methods for ligand-based virtual screening. J. Comput. Mol. Des. 2007, 21, 53–62.

- Mitchell, J. Machine learning methods in chemoinformatics. Wiley Interdiscip. Rev. Comput. Mol. Sci. 2014, 4, 468–481.

- Zhang, W.; Ji, L.; Chen, Y.; Tang, K.; Wang, H.; Zhu, R.; Wei, J.; Cao, Z.; Liu, Q. When drug discovery meets web search: Learning to Rank for ligand-based virtual screening. J. Cheminformatics 2015, 7, 1–13.

- Alberga, D.; Trisciuzzi, D.; Montaruli, M.; Leonetti, F.; Mangiatordi, G.F.; Nicolotti, O. A New Approach for Drug Target and Bioactivity Prediction: The Multifingerprint Similarity Search Algorithm (MuSSeL). J. Chem. Inf. Model. 2018, 59, 586–596.

- Acharya, C.; Coop, A.; Polli, J.E.; MacKerell, A.D. Recent advances in ligand-based drug design: Relevance and utility of the conformationally sampled pharmacophore approach. Curr. Comput. Drug Des. 2011, 7, 10–22.

- Yasuo, N.; Sekijima, M. Improved Method of Structure-Based Virtual Screening via Interaction-Energy-Based Learning. J. Chem. Inf. Model. 2019, 59, 1050–1061.

- Duan, J.; Sastry, M.; Dixon, S.; Lowrie, J.; Sherman, W. Analysis and comparison of 2D fingerprints: Insights into database screening performance using eight fingerprint methods. J. Cheminformatics 2011, 3, P1.

- Nisius, B.; Bajorath, J. Molecular Fingerprint Recombination: Generating Hybrid Fingerprints for Similarity Searching from Different Fingerprint Types. ChemMedChem 2009, 4, 1859–1863.

- Gardiner, E.J.; Gillet, V.J.; Haranczyk, M.; Hert, J.; Holliday, J.D.; Malim, N.H.A.H.; Patel, Y.; Willett, P. Turbo similarity searching: Effect of fingerprint and dataset on virtual-screening performance. Stat. Anal. Data Mining: ASA Data Sci. J. 2009, 2, 103–114.

- Heikamp, K.; Bajorath, J. How Do 2D Fingerprints Detect Structurally Diverse Active Compounds? Revealing Compound Subset-Specific Fingerprint Features through Systematic Selection. J. Chem. Inf. Model. 2011, 51, 2254–2265.

- Sastry, M.; Lowrie, J.F.; Dixon, S.L.; Sherman, W. Large-Scale Systematic Analysis of 2D Fingerprint Methods and Parameters to Improve Virtual Screening Enrichments. J. Chem. Inf. Model. 2010, 50, 771–784.

- Leśniak, D.; Jastrzębski, S.; Podlewska, S.; Czarnecki, W.M.; Bojarski, A. Quo vadis G protein-coupled receptor ligands? A tool for analysis of the emergence of new groups of compounds over time. Bioorganic Med. Chem. Lett. 2017, 27, 626–631.

- Wallach, I.; Heifets, A. Most Ligand-Based Classification Benchmarks Reward Memorization Rather than Generalization. J. Chem. Inf. Model. 2018, 58, 916–932.

- Leśniak, D.; Podlewska, S.; Jastrzębski, S.; Sieradzki, I.; Bojarski, A.; Tabor, J. Development of New Methods Needs Proper Evaluation—Benchmarking Sets for Machine Learning Experiments for Class A GPCRs. J. Chem. Inf. Model. 2019, 59, 4974–4992.

- Smusz, S.; Czarnecki, W.M.; Warszycki, D.; Bojarski, A. Exploiting uncertainty measures in compounds activity prediction using support vector machines. Bioorganic Med. Chem. Lett. 2015, 25, 100–105.

- Der Kiureghian, A.; Ditlevsen, O. Aleatory or epistemic? Does it matter? Struct. Saf. 2009, 31, 105–112.

- Unterthiner, T.; Mayr, A.; Klambauer, G.; Steijaert, M.; Ceulemans, H.; Wegner, J.; Hochreiter, S. Deep Learning as an Opportunity in Virtual Screening. In Proceedings of the NIPS Workshop on Deep Learning and Representation Learning, Montreal, QC, Canada, 8–13 December 2014; pp. 1058–1066. Available online: http://www.bioinf.at/publications/2014/NIPS2014a.pdf (accessed on 5 December 2019).

- Lusci, A.; Pollastri, G.; Baldi, P. Deep Architectures and Deep Learning in Chemoinformatics: The Prediction of Aqueous Solubility for Drug-Like Molecules. J. Chem. Inf. Model. 2013, 53, 1563–1575.

- Ekins, S. The Next Era: Deep Learning in Pharmaceutical Research. Pharm. Res. 2016, 33, 2594–2603.

- Kim, I.-W.; Oh, J.M. Deep learning: From chemoinformatics to precision medicine. J. Pharm. Investig. 2017, 13, 317–323.

- Koutsoukas, A.; Monaghan, K.J.; Li, X.; Huan, J. Deep-learning: Investigating deep neural networks hyper-parameters and comparison of performance to shallow methods for modeling bioactivity data. J. Cheminformatics 2017, 9, 42.

- Xu, Y.; Dai, Z.; Chen, F.; Gao, S.; Pei, J.; Lai, L. Deep Learning for Drug-Induced Liver Injury. J. Chem. Inf. Model. 2015, 55, 2085–2093.

- Ma, J.; Sheridan, R.P.; Liaw, A.; Dahl, G.E.; Svetnik, V. Deep Neural Nets as a Method for Quantitative Structure–Activity Relationships. J. Chem. Inf. Model. 2015, 55, 263–274.

- Ragoza, M.; Hochuli, J.; Idrobo, E.; Sunseri, J.; Koes, D.R. Protein–Ligand Scoring with Convolutional Neural Networks. J. Chem. Inf. Model. 2017, 57, 942–957.

- Gal, Y.; Ghahramani, Z. Dropout as a Bayesian approximation: Representing model uncertainty in deep learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1050–1059.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Hernández-Lobato, J.M.; Adams, R. Probabilistic backpropagation for scalable learning of bayesian neural networks. In Proceedings of the International Conference on Machine Learning (ICML 2015), Lille, France, 6–11 July 2015.

- Graves, A. Practical variational inference for neural networks. Adv. Neural Inf. Process. Syst. 2011, 24, 2348–2356.

- Oh, S.; Ha, H.-J.; Chi, D.; Lee, H. Serotonin Receptor and Transporter Ligands - Current Status. Curr. Med. Chem. 2001, 8, 999–1034.

- Westkaemper, R.B.; Roth, B.L. Structure and Function Reveal Insights in the Pharmacology of 5-HT Receptor Subtypes. In The Serotonin Receptors; Humana Press: Totowa, NJ, USA, 2008; pp. 39–58.

- Glennon, R.A. Higher-End serotonin receptors: 5-HT5, 5-HT6, and 5-HT7. J. Med. Chem. 2003, 46, 2795–2812.

- Wang, C.; Jiang, Y.; Ma, J.; Wu, H.; Wacker, D.; Katritch, V.; Han, G.W.; Liu, W.; Huang, X.-P.; Vardy, E.; et al. Structural Basis for Molecular Recognition at Serotonin Receptors. Science 2013, 340, 610–614.

- Eglen, R.M.; Choppin, A.; Watson, N. Therapeutic opportunities from muscarinic receptor research. Trends Pharmacol. Sci. 2001, 22, 409–414.

- Hocher, B. Adenosine A1 receptor antagonists in clinical research and development. Kidney Int. 2010, 78, 438–445.

- Moreau, J.-L.; Huber, G. Central adenosine A2A receptors: An overview. Brain Res. Rev. 1999, 31, 65–82.

- Xu, F.; Wu, H.; Katritch, V.; Han, G.W.; Jacobson, K.A.; Gao, Z.-G.; Cherezov, V.; Stevens, R.C. Structure of an Agonist-Bound Human A2A Adenosine Receptor. Science 2011, 332, 322–327.

- Passani, M.B.; Lin, J.-S.; Hancock, A.; Crochet, S.; Blandina, P. The histamine H3 receptor as a novel therapeutic target for cognitive and sleep disorders. Trends Pharmacol. Sci. 2004, 25, 618–625.

- Missale, C.; Nash, S.R.; Robinson, S.W.; Jaber, M.; Caron, M.G. Dopamine receptors: From structure to function. Physiol. Rev. 1998, 78, 189–225.

- Wang, S.; Che, T.; Levit, A.; Shoichet, B.K.; Wacker, D.; Roth, B.L. Structure of the D2 dopamine receptor bound to the atypical antipsychotic drug risperidone. Nature 2018, 555, 269–273.

- Qadri, F.; Bader, M. Kinin B1 receptors as a therapeutic target for inflammation. Expert Opin. Ther. Targets 2017, 22, 31–44.

- Cai, M.; Hruby, V.J. The Melanocortin Receptor System: A Target for Multiple Degenerative Diseases. Curr. Protein Pept. Sci. 2016, 17, 488–496.

- Lalanne, L.; Ayranci, G.; Kieffer, B.L.; Lutz, P.-E. The Kappa Opioid Receptor: From Addiction to Depression, and Back. Front. Psychol. 2014, 5, 170.

- Valentino, R.J.; Volkow, N.D. Untangling the complexity of opioid receptor function. Neuropsychopharmacol. 2018, 43, 2514–2520.

- Scammell, T.E.; Winrow, C.J. Orexin receptors: Pharmacology and therapeutic opportunities. Annu. Rev. Pharmacol. Toxicol. 2011, 51, 243–266.

- Zou, S.; Kumar, U. Cannabinoid Receptors and the Endocannabinoid System: Signaling and Function in the Central Nervous System. Int. J. Mol. Sci. 2018, 19, 833.

- Liu, J.; Clough, S.J.; Hutchinson, A.J.; Adamah-Biassi, E.B.; Popovska-Gorevski, M.; Dubocovich, M.L. MT1 and MT2 Melatonin Receptors: A Therapeutic Perspective. Annu. Rev. Pharmacol. Toxicol. 2015, 56, 361–383.

- Crupi, R.; Impellizzeri, D.; Cuzzocrea, S. Role of Metabotropic Glutamate Receptors in Neurological Disorders. Front. Mol. Neurosci. 2019, 12, 20.

- Hughes, C.E.; Nibbs, R.J.B. A guide to chemokines and their receptors. FEBS J. 2018, 285, 2944–2971.

- Griffith, J.W.; Sokol, C.L.; Luster, A.D. Chemokines and Chemokine Receptors: Positioning Cells for Host Defense and Immunity. Annu. Rev. Immunol. 2014, 32, 659–702.

- Morgan, H.L. The Generation of a Unique Machine Description for Chemical Structures-A Technique Developed at Chemical Abstracts Service. J. Chem. Doc. 1965, 5, 107–113.

- Accelrys, MACCS Structural Keys. Available online: http://www.3dsbiovia.com (accessed on 5 December 2019).

- RDKit: Open-Source Cheminformatics. Available online: http://www.rdkit.org (accessed on 5 December 2019).

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980. Available online: https://arxiv.org/abs/1412.6980v9 (accessed on 5 December 2019).

- Ramsundar, B.; Eastman, P.; Walters, P.; Pande, V. Deep Learning for the Life Sciences: Applying Deep Learning to Genomics, Microscopy, Drug Discovery, and More; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2019.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).