1. Introduction

Resilience is a concept that has been defined in many scientific fields. For instance, resilience is studied in natural science: physics [1], biology [2], ecology [3], and climate [4]; in social sciences: psychology [5], sociology [6], and economics [7]; in formal sciences: mathematics [8] and computer science [9]; and in applied sciences: engineering [10], health [11], and supply chain [12]. Depending on the field, the interpretation of what is and what is not resilience is very different and sometimes contradictory. However, most of the literature understands resilience as the capacity for a system to maintain a desirable state or desirable functions while undergoing adversity or to return to a desirable state as quickly as possible after being impacted. In this paper, we focus on the resilience of sociotechnical systems (STS) with an emphasis on those that are safety-critical. Safety-critical STS are open systems where both humans and technology interact with each other within an environment and where safety is a critical aspect. Examples of such systems are airports, hospitals, nuclear plants, and offshore platforms [13].

In this paper, we propose a formalisable conceptual framework for adaptive resilience of safety-critical STS. The novelty of our approach is the integration of all components in a coherent way, where human capacities and capabilities are placed in the core.

1.1. Related Works: Frameworks for Resilience in the Literature

Numerous literature reviews on the subject of resilience have been conducted [14,15,16,17,18,19]. They all discuss fundamental aspects of resilience for the different application fields, although desiderata for resilient behavior are not systematically structured in a coherent framework. In [16], the authors formulate the rationale of resilience studies and structure resilience as a scientific object. The emphasis on adaptive capacity for resilience is provided in [14], where the authors reviewed many works to support this claim, in particular [20,21,22,23,24]. Additionally, the necessity for a large variety of systems to adapt in order to be resilient is analyzed and presented in [3,25,26]. In [17], the authors present a review of several resilience measures. This review shows the importance of relating resilience to the relationships between the engineered systems’ performances and the disruptive events. In [19], the authors present an extensive, up-to-date literature review on the specific field of resilience engineering. What scholars consider as required to have resilient systems is categorized in [18]. Anticipation, adaptation, responding, restoring, learning, monitoring, planning, preparedness, awareness, cognitive strategies, and need for resources, among others, are all present in this literature review.

There are several frameworks in the literature. The authors in [27] proposed that the resilience paradigm can be understood according to three capacities: the absorptive capacity, the adaptive capacity, and the recovery/restorative capacity. Following the definition of [28], the absorptive capacity is “the degree to which a system can absorb the impacts of system perturbations and minimize consequences with little effort”. The adaptive capacity is the “ability of a system to adjust to undesirable situations by undergoing some changes”. The recovery/restorative capacity is “the rapidity of return to normal or improved operations and system reliability”. The Francis and Bekera framework describes resilience as the way a system deals efficiently with adversity. However, in this paper, we show that the adaptive capacity fully belongs to the concept of resilience, whereas the absorptive capacity does not and the recovery/restorative capacity does so only partially. The absorptive capacity fully belongs to robustness, and more generally to resistance (see Section 5 for a detailed discussion of robustness and resistance), while the recovery/restorative capacity contributes to both resilient and resistant behaviors as they are defined herein.

A well-known approach to quantifying resilience is the so-called resilience triangle, which is a measure of how efficiently a system recovers after a disruptive event. It is thus a relationship between a system’s performance and the time to recover. The resilience triangle metric was originally introduced to study the seismic resilience of communities to earthquakes [29] and is used in other fields to measure the resilience of all kinds of systems (see for instance [30] for application to underground urban transportation). However, STS resilience is also about anticipating undesired situations, whereas the resilience triangle metric does not take this capability into account. Moreover, this conceptual approach does not give information about the system’s adaptive capacity but only regarding the recovery/restorative capacity and consequently is a partial representation of resilience.
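As a minimal sketch of the idea, the resilience triangle is commonly computed as the area between a nominal performance level and the observed performance curve over the recovery period. The function name, the percentage scale, and the sample values below are illustrative assumptions and are not taken from [29] or [30].

```python
from typing import Sequence


def resilience_loss(times: Sequence[float],
                    performance: Sequence[float],
                    nominal: float = 100.0) -> float:
    """Area between nominal and observed performance (trapezoidal rule).

    A larger value means a deeper and/or longer loss of performance.
    """
    if len(times) != len(performance) or len(times) < 2:
        raise ValueError("need two or more samples of equal length")
    loss = 0.0
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        if dt <= 0:
            raise ValueError("times must be strictly increasing")
        gap_prev = nominal - performance[i - 1]
        gap_curr = nominal - performance[i]
        loss += 0.5 * (gap_prev + gap_curr) * dt
    return loss


# Hypothetical disruption: performance drops to 40 % at t = 0 and
# recovers linearly to 100 % at t = 10 (arbitrary time units).
triangle_area = resilience_loss([0.0, 10.0], [40.0, 100.0])
```

Such a measure only captures the depth and duration of the performance loss; it contains no term for anticipation or adaptive capacity, which is the limitation noted above.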

According to [31], resilience is the “intrinsic ability of a system to adjust its functioning prior to, during, or following changes and disturbances, so that it can sustain required operations under both expected and unexpected conditions”. This definition integrates several important aspects such as the need to perform modifications, the time dimension, the concept of undesirable events and unexpected events, and the concept of a system’s performance. Moreover, Ref. [32] identifies four basic potentials for resilient behavior. These are the potential to (1) monitor: knowing what to look for or being able to recognize what is or could seriously affect the system’s performance in the near future and monitoring one’s own system performance as well as the environment; (2) anticipate: knowing what to expect and being able to act on developments further into the future; (3) respond: knowing what to do to regular and irregular changes, disturbances, and opportunities by activating prepared actions or by adjusting a current mode of functioning; and (4) learn: knowing what has happened and using this experience to be better prepared for disruption in the future. The method to empirically assess systems’ resilience performance, called the Resilience Analysis Grid (RAG), can be applied to many different domains such as water management [33] or healthcare [34]. The conceptual framework we present herein is inspired by the RAG’s structure, in the sense that there is a symmetry between the anticipation and responding processes since they are both adaptation processes. However, the monitoring and learning processes are what is necessary to adapt and therefore belong to the adaptive capacity.

The main concepts to be integrated in the core of the unified conceptual framework come from the aforementioned literature, in particular from [14,16,17,18,19]. They are up-to-date reviews of STS resilience. The definitions of these concepts are provided in Table A1, and the relationships between these concepts are represented in Figure A1 in Appendix A.

1.2. Requirements and Research Objective

It is recognized that the most challenging aspect of studies into STS resilience is the understanding of how systems are able to handle unexpected events, meaning how to anticipate, to monitor, and to respond to something which is unknown. Moreover, human cognition and social aspects both play an important role in the capacity to adapt and thus in the process for a system to be resilient. We can understand resilience as an emergent phenomenon caused by a combination of system modifications, to handle unplanned and unexpected adverse events before, during or after they occur, making possible the pursuit of the system’s goals. Taking into account the existing studies on sociotechnical resilience and the necessity to enhance resilient behavior, there is a need for a framework that coherently establishes relationships between multilevel capacities, mechanisms, or characteristics which enable the STS to be resilient. The framework should integrate humans in the core of its structure since they are the main intelligent entities capable of dealing with unexpected adverse events. Moreover, the framework should enable formalization, which facilitates automated analyses of the resilience of different types of STS and for different scenarios.

The objective of this paper is to present and substantiate such a framework. Furthermore, it presents the first steps toward the formal modeling of resilience mechanisms, including human cognition and behavior. Such a formal approach enables the modeling, implementation, and computational simulation of different scenarios to increase the understanding and resilience of real-world STS.

1.3. Design of a Conceptual Framework: Methodology

In [

35], the author presents a method composed of eight phases to build conceptual frameworks for phenomena that are linked to multidisciplinary fields. This method in particular is appropriate for STS resilience since STS resilience is a phenomenon emerging from multiple fields such as engineered systems, human–machine interactions, social and cognitive processes, and organizational modeling, among others. To build this framework, we followed the eight phases of the method. 1 Map the selected data sources. We gathered papers from domains and systems of our interest (sociotechnical systems and safety domains) and adjacent domains for resilience, namely from: transportation, supply chain, ecology and socioecology, human–machine systems, healthcare, urban infrastructure, networks, organizations, chemical plants, aviation, nuclear plant, railways, natural disasters, and critical infrastructures. 2 Perform extensive reading and categorizing of the selected data. We read peer-reviewed papers, books, and conference proceedings; in particular, we considered several literature review papers as cited in

Table A1 and how they explain resilience and its requirements. 3 Identify and name concepts We identified key concepts for resilience from the papers. In particular, this framework integrates: absorption, adaptation, adaptive capacity, anticipation, avoidance, coordination, decision making, expectation, learning, monitoring, perception, prediction making, projection, recovery, resilience, resistance, resources, responding, robustness, sensemaking, situation awareness, threat recognition, and unexpectation. 4 Deconstruct and categorize the concepts. We separated the three system’s capacities absorption, adaptation, and recovery into resilience (adaptation, recovery) and resistance (absorption, recovery); we separated STS’s potential (adaptive capacity) to STS’s dynamics (adaptation); and we separated early adaptation (anticipation) from late adaptation (responding). Definitions of the concepts and related references are presented in the

Table A1. 5 Integrate the concepts.

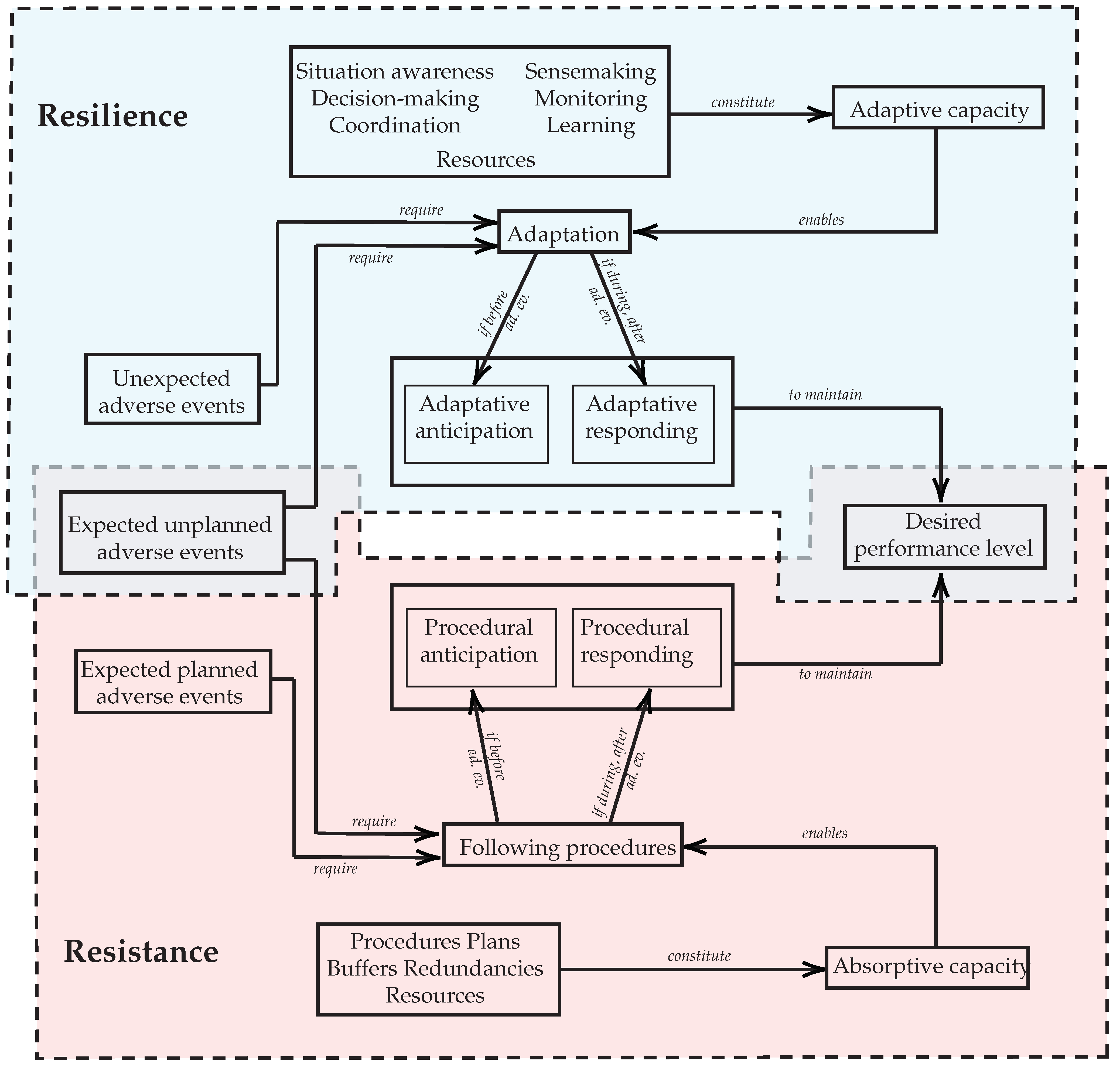

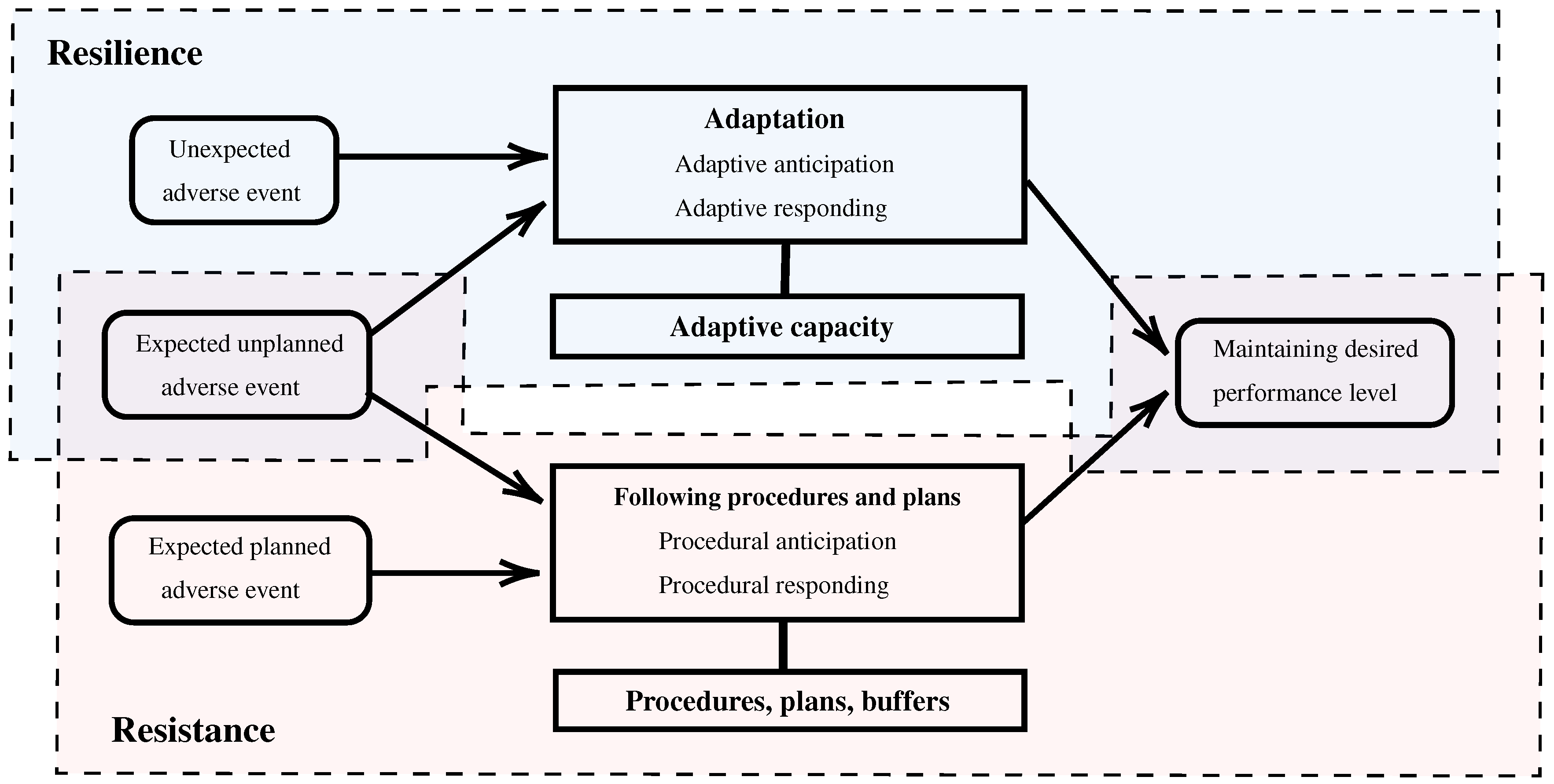

Figure 1 and

Figure 2 provide an overview of how the main concepts are related. However, since resilience is a multifaceted and multilevel phenomenon, the other considered subconcepts are integrated and explained throughout the paper. 6 Synthesis and make it all make sense.

Figure A1 placed in the

Appendix A provides a detailed overview of the nature of the relationships between the concepts. 7 Validate the conceptual framework, and 8 rethink the conceptual framework. These two final steps are future oriented: they will be refined after performing several case studies to illustrate the framework.

1.4. Structure of the Framework

The conceptual framework is structured as follows: Depending on the nature of adverse events, the considered system has or does not have the procedures and plans to be followed. If the system has procedures, for instance in dealing with planned adverse events or unplanned adverse events, then their execution is assumed to lead to maintaining a desired performance level. Therefore, the system is considered to exhibit a resistant behavior, meaning that the system stays in a desired performance envelope owing to designed disruption-management planning. If the system does not have procedures and plans, or has them only partially, then the system has to adapt its functioning, either by anticipation or by responding, to maintain a desired performance level. The possibility of performing adaptation is given by the system’s adaptive capacity, and consequently, the system is able to exhibit a resilient behavior, meaning that the system stays in a desired envelope of performance as a result of the adaptation.

Figure 1 presents the overview of the conceptual framework for resilience relative to the relation with resistance. It shows that resilience and resistance are intertwined, and both adaptation and procedures, plans, and buffers contribute to the maintaining of the desired level of performance. Adverse events are considered as input and the performance level as output. They are represented by rounded rectangles. The internal dynamic of the system and its capacities are represented by regular rectangles.
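The branching logic of this structure can be sketched in a few lines. This is only an illustration under stated assumptions: the names `ProcedureCoverage` and `exhibited_behavior` are hypothetical, and the label `performance_loss` is introduced here for the case in which neither procedures nor adaptation keep the system within its performance envelope.

```python
from enum import Enum


class ProcedureCoverage(Enum):
    """How far existing procedures and plans cover an adverse event."""
    FULL = "full"
    PARTIAL = "partial"
    NONE = "none"


def exhibited_behavior(coverage: ProcedureCoverage,
                       adaptation_succeeds: bool) -> str:
    """Return the behavior the framework would attribute to the system.

    'performance_loss' is an assumed label for the remaining case:
    no sufficient procedure and no successful adaptation.
    """
    if coverage is ProcedureCoverage.FULL:
        # Executing procedures is assumed to keep performance in the envelope.
        return "resistant"
    if adaptation_succeeds:
        # Anticipation or responding kept performance in the envelope.
        return "resilient"
    return "performance_loss"


# Hypothetical case: procedures cover the event only partially,
# and the system adapts successfully.
behavior = exhibited_behavior(ProcedureCoverage.PARTIAL, True)
```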

2. Sociotechnical Systems as Complex Adaptive Systems

Complex Systems (CS) can be defined as systems being composed of numerous heterogeneous components at multiple scales with nonlinear interactions between components and with the environment. Some CS may have adaptive abilities. Such systems, called Complex Adaptive Systems (CAS), have components that adapt or learn as they interact [36] and are able to modify their local or global behavior. Such CAS are, for instance, complex STS.

In [37], the authors identify certain characteristics of complex STS, with the most relevant being:

Large number of interacting elements

Dynamic interactions, that change with time, evolving agents, and evolving environment

Nonlinear interactions: small changes in the cause implying dramatic effects; with sensitivity to initial conditions

Large diversity of elements, such as in terms of: hierarchical levels, division of tasks, specializations, inputs and outputs, goals, workers with different backgrounds and disciplines, and distributed system control

Many different kinds of relations between the elements

Emergent phenomena.

An essential characteristic of a large STS is its multilevel structure. Three levels can be distinguished in the system: individual, social, and organizational. The individual level represents the level of a single human or technical system and considers cognitive abilities and behavior. The social level is the level where several of the system’s actors interact by means of direct or indirect communication and coordination. The organizational level addresses a system’s goals, rules, norms, procedures, and strategy. Actors can be present at each level and are able to interact with other actors at the same level but also with actors of other levels. The interdependencies between agents of all levels may be defined with respect to goals, tasks, roles, information, and resources. According to the definition of [38], a task is an action to be executed. A role is a class of behaviors or services offered by an agent in the system, e.g., a set of tasks. Resources are objects, conditions, characteristics, or energies that people value [23]. A norm is a behavioral constraint established by the organization. Moreover, interactions between actors at the same level or between levels can be nonlinear, for instance, because of human behavior, which may produce chaoticity [39]. To summarize, since STS are large and open, with numerous interacting heterogeneous components, with nonlinear dynamics, within unpredictable environments, they belong to the class of CAS. Moreover, open STS function in an environment in which diverse adverse events may take place.

The notion of a sociotechnical system is formalized using the many-sorted Temporal Trace Language (TTL) [40] as a set of sorts (types) representing social system components (humans), technical system components, and relations between them expressed using order-sorted constants, variables, functions, and predicates of TTL. Furthermore, both technical and social system components are characterized by their state properties representing their states at every point in time and dynamic properties representing their behavior as transitions between their states. The states can be characterized by random variables to express uncertainty in these states. In addition, the transitions between states can be probabilistic, representing uncertainty in the component’s behavior and interactions.
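To illustrate the notion of state properties with probabilistic transitions, the following sketch propagates a probability distribution over the states of a single component through a transition table. It is not TTL syntax and does not reproduce the formalization of [40]; the state names, the probabilities, and the functions `step` and `trace` are assumptions made for illustration only.

```python
from typing import Dict, List

Distribution = Dict[str, float]            # state -> probability
Transitions = Dict[str, Dict[str, float]]  # state -> {next state -> probability}


def step(dist: Distribution, transitions: Transitions) -> Distribution:
    """Advance the state distribution by one time point."""
    nxt: Distribution = {}
    for state, p in dist.items():
        for target, q in transitions[state].items():
            nxt[target] = nxt.get(target, 0.0) + p * q
    return nxt


def trace(initial: Distribution, transitions: Transitions,
          steps: int) -> List[Distribution]:
    """Return the distribution at every time point, i.e., a simple trace."""
    history = [initial]
    for _ in range(steps):
        history.append(step(history[-1], transitions))
    return history


# Hypothetical technical component with two states.
transitions = {
    "nominal": {"nominal": 0.9, "degraded": 0.1},
    "degraded": {"nominal": 0.5, "degraded": 0.5},
}
history = trace({"nominal": 1.0, "degraded": 0.0}, transitions, steps=2)
```

Each entry of `history` plays the role of a state at one time point, and the transition table plays the role of a dynamic property; a full TTL specification would additionally type the components and their relations.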

Examples of STS where safety plays an important role include airports, hospitals, nuclear plants, refineries, offshore drilling rigs, mines, power plants, space stations, submarines, chemical plants, manufacturing plants, and transportation systems [37,41].

3. Adversity

The concept of adverse events is a significant concept within the framework of STS resilience. Since resilience is a particular relation between an STS and adverse events, there is a need to define specifically what those events might be. Adverse events can be seen as anything that impacts—or may impact—the functioning of a system undesirably. In the literature, numerous terms are used, all describing the same concept of undesirability, such as disturbances, disruptions, interruptions, perturbations, hazards, threats, alterations, shocks, pitfalls, degradation, accidents, surprise events, challenging events, and undesired events.

Several kinds of events can be distinguished, and their nature may have a different origin, thus implying different consequences. First, a distinction is made between internal and external adverse events. An internal adverse event is an event which occurs in the system and originates from the system itself, for instance, a technological component failure. An external event is an event which disrupts a system but originates from the system’s environment, such as bad weather conditions in aviation causing flight arrival delay. Second, a distinction is made between sudden and long-term adverse events, where a sudden event is precise in time and can be inscribed as a point on a timeline, while a long-term event is that which happens over a period of time.

Such distinctions may allow the actors of a system to change the way they manage adversity. A long-term internal event may have its negative consequences mitigated by a monitoring capacity, and if the nature of a sudden external event is known, it may be handled by virtue of the capacity to anticipate. Moreover, depending on the nature of the adverse events, adaptation can be executed during and/or after occurrence. For instance, if an adverse event is the eruption of a volcano disturbing an airport’s traffic, the executed adaptations occur during and after the adverse event. On the other hand, if an adverse event is a collision between two aircraft on the ground surface of an airport, the executed adaptations occur after the adverse event.

Unplanned events and unexpected events can be distinguished as shown in Table 1. An adverse event is called planned if its nature and time of occurrence are known to the system: for instance, the saturation of an airport terminal during the first weekend of summer holidays. An adverse event is called unplanned if its nature is known to the system but not when it might occur: for instance, a volcano eruption impacting the surrounding air traffic.

Expected events are events for which the nature of adversity is known. This is the case when a situation has already been encountered previously by the system and thus the system has been instructed, through designed procedures, protocols, rules, etc., on what to do to avoid being more negatively impacted. The system may be unprepared if it did not learn from previous experience or if it is unable to predict the moment of occurrence of such a situation.

Unexpected events are events for which the nature of adversity is not known. These are situations never encountered previously by the system or not learned from previous similar situations. For such events, the moment of occurrence is not known by the system, and such unexpected events are also termed surprises.

Consequently, for STS, adverse events have at least four dimensions: time of occurrence (planned/unplanned), duration of occurrence (sudden/long-term), nature (expected/unexpected), and origin (internal/external). Internal adverse events may originate from any of the system’s components: humans (e.g., misinterpretation of their environment and habituation creating an insensitivity to potential risks in familiar situations), technology (e.g., breakdown of electronic devices), social (e.g., miscoordination between agents, such as working at cross purposes), and organizational (e.g., faults in task distribution or ill-designed procedures).
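The four dimensions listed above lend themselves to a simple data structure. The sketch below is one possible encoding, not part of the framework itself: the class and field names are hypothetical, and the consistency check encodes the statement that the moment of occurrence of an unexpected event is not known.

```python
from dataclasses import dataclass
from enum import Enum


class Timing(Enum):
    PLANNED = "planned"
    UNPLANNED = "unplanned"


class Duration(Enum):
    SUDDEN = "sudden"
    LONG_TERM = "long-term"


class Nature(Enum):
    EXPECTED = "expected"
    UNEXPECTED = "unexpected"


class Origin(Enum):
    INTERNAL = "internal"
    EXTERNAL = "external"


@dataclass(frozen=True)
class AdverseEvent:
    description: str
    timing: Timing
    duration: Duration
    nature: Nature
    origin: Origin

    def __post_init__(self) -> None:
        # An event whose nature is unknown cannot have a known time of occurrence.
        if self.nature is Nature.UNEXPECTED and self.timing is Timing.PLANNED:
            raise ValueError("an unexpected event cannot be planned")

    @property
    def is_surprise(self) -> bool:
        """Unexpected events are also termed surprises."""
        return self.nature is Nature.UNEXPECTED


# Volcano eruption example; the duration value is an assumption for illustration.
eruption = AdverseEvent("volcano eruption disturbing air traffic",
                        Timing.UNPLANNED, Duration.LONG_TERM,
                        Nature.EXPECTED, Origin.EXTERNAL)
```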

Adverse events can also arise from the system’s environment, which has a great impact on the functioning of the STS and is the main source of disruptions. It is argued that environmental uncertainty is a central variable that describes the organization–environment interface [42]. Such disruptions can be natural events such as earthquakes [43] or bad weather conditions. Moreover, adverse events may also be complex: for instance, they may produce nonlinear cascade effects, and consequently, several disconnected local disruptions may produce a systemic disruption.

Resilient systems are therefore referred to as those that are able to manage unexpected events efficiently. Since unexpected events mean that the nature of the adversity is not known to the system’s actors, they have to find new ways to manage the disruption. This is the reason that no deterministic system can be considered as truly resilient. For instance, an adaptive road traffic light controlling an intersection is able to sense the environment and to react accordingly, thereby modifying its functioning in case of saturation, and to act before, during, and after the disruption. However, crucially, the system is unable to manage unexpected events because it has not been programmed for them.

4. Capacity to Adapt and Adaptation

For a system to be resilient, some mechanisms or capacities are needed. Mechanisms are internal dynamical processes present in a system. In particular, in the considered literature reviews on resilience [14,16,17,18,19], mechanisms can be considered to be adjusting, anticipating, controlling, coordinating, decision-making, learning, mobilizing, modifying, monitoring, need for resources, planning, preparedness, recovering, reorganizing, regulating, responding, restoring, sensing, sensemaking, and updating. It is recognized that some processes may sometimes be seen as synonyms, and some are nested.

The presence of some of these mechanisms is not necessary for a system to be resilient, but their presence can be seen to enhance the ability of the system to manage adverse events more effectively. For instance, if a system does not have the anticipatory capacity, then it is unable to predict potential disruption and adapt accordingly. Nevertheless, it may still respond efficiently after disruption due to appropriate modification. Therefore, the anticipating capacity might not be necessary to obtain a resilient system, but if the system possesses this capacity, then it certainly enhances resilient behavior.

We interpret adaptive capacity and adaptation as two distinct concepts. The adaptive capacity of a system is seen as that which is necessary to perform an adaptation. The adaptive capacity is composed of mechanisms or properties such as situation awareness, sensemaking, monitoring, decision making, coordination, learning, and resources. An adaptation is a modification at any level (individual, social, and/or organizational) of plans, schedules, human behavior, skills, knowledge, goals, use of resources, tasks, roles, ways and means of coordination, relations, norms, etc., as a reaction to adversity. The capacity to adapt is called adaptive capacity, but adaptation refers to both the process to adapt and the outcome of the process. Consequently, an STS is considered as adaptive if it is able to perform any modification of its functioning as a reaction to unplanned adversity. Moreover, if after the performed adaptations the system stays in its envelope of desired level of functioning due to the modifications executed, it is considered to be resilient.

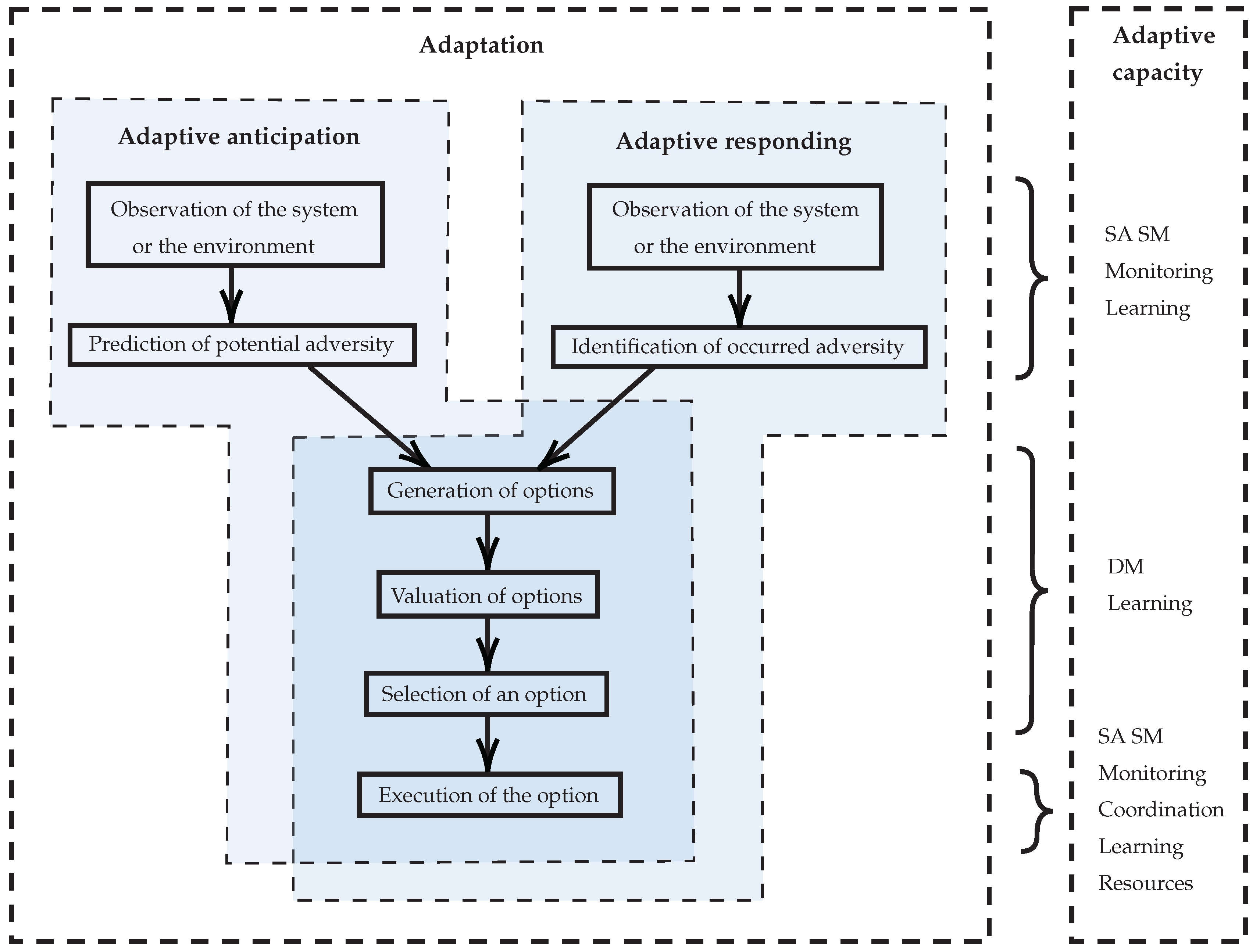

Figure 2 presents the relationship between adaptation and adaptive capacity. Adaptations executed before adversity are adaptive anticipation, whereas those executed during or after adversity are adaptive responding. Each step of the process requires mechanisms, abilities, or characteristics that belong to the adaptive capacity.

4.1. Adaptive Capacity

The adaptive capacity—or the capacity to adapt—is the capacity that allows systems to perform adaptations. It is understood to be composed of numerous mechanisms or processes that are present at all three system levels: individual, social, and organizational. As previously stated, the adaptive capacity of an STS comprises at least situation awareness, sensemaking, monitoring, decision making, coordination, learning, and resources.

In [44], the authors state that the concept of resilience is characterized by involving the mental processes of sensemaking [45], improvisation [46], innovation, and problem solving [47]. Moreover, Reference [48] argues that other cognitive processes are needed to manage disruptions, such as team situation awareness and other aspects of macrocognition (see [49,50]). More generally, all cognitive capacities, including intuition, prediction making, decision making, learning, and attention, contribute to the adaptive capacity because these are the intelligent components in the system which are able to recognize adversity and to act accordingly.

Most of these mechanisms are present in large STS to ensure normal functioning. For example, coordination between agents is needed for large STS. This does not mean that the system is resilient, but modifications of the way agents coordinate may correspond to an adaptation so that an adverse event is handled efficiently. In the same way, situation awareness is needed for an agent in executing its tasks, but it is particularly necessary in predicting abnormal situations.

To model STS resilience, there is a need to identify mechanisms that allow the STS to adapt, i.e., those forming the adaptive capacity. Each mechanism plays a role in the process to adapt. Among these mechanisms are situation awareness, sensemaking, monitoring, decision making, coordination, and learning. In the following subsections, we elaborate on the mechanisms of adaptive capacity and propose formal methods that have been identified.

4.1.1. Situation Awareness

Situation Awareness (SA) is considered either to be the level of awareness that an agent has of a situation or the process to gain awareness. It is a state or a process that exists at the individual (agent) and social (multiagent) levels. According to [51], SA is an internalized mental model of the current state of one’s environment consisting of a perception of the elements in the environment within a volume of time and space, the comprehension of their meaning, and the projection of their status in the near future. SA is thus made up of three levels. Level 1 is perception of the agent’s environment where the agent perceives the status, attributes, and dynamics of relevant elements in its environment. Level 1 encompasses sensing, the input stimuli coming from the agent’s environment. Level 2 comprises the interpretation of the information from level 1. The agent understands the significance of the situation based on a synthesis of the disjointed environment’s elements in light of its goals. In level 3, information from levels 1 and 2 is projected as a mental representation of the near future. The different levels are interconnected and executed at the same time. SA plays a major role in resilience since it is a key process for an agent understanding its environment. It is related to sensemaking, to monitoring, and to observation, with the latter being the first step toward achieving the behavioral trait of an adaptation (Figure 2). It enables the identification of likely or occurred failures, and thus, it is required in the capacity to anticipate or to respond.
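The three levels of SA can be illustrated with a deliberately simple sketch. All names and values below are hypothetical, comprehension is reduced to a comparison against a limit derived from the agent’s goals, and projection is reduced to a linear extrapolation. The levels are run one after the other here for readability, although they are described above as interconnected and executed at the same time.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Observation:
    value: float  # current status of an element in the environment
    rate: float   # current change of that status per time unit


def perceive(environment: Dict[str, Observation],
             relevant: List[str]) -> Dict[str, Observation]:
    """Level 1: perceive status and dynamics of the relevant elements."""
    return {name: environment[name] for name in relevant if name in environment}


def comprehend(perceived: Dict[str, Observation],
               limits: Dict[str, float]) -> Dict[str, bool]:
    """Level 2: interpret each element in light of goals (within limit or not)."""
    return {name: obs.value <= limits[name] for name, obs in perceived.items()}


def project(perceived: Dict[str, Observation], limits: Dict[str, float],
            horizon: float) -> Dict[str, bool]:
    """Level 3: project each element's status into the near future."""
    return {name: obs.value + obs.rate * horizon <= limits[name]
            for name, obs in perceived.items()}


# Hypothetical airport terminal: a queue of 80 passengers growing by 5 per minute.
environment = {"security_queue": Observation(value=80.0, rate=5.0)}
limits = {"security_queue": 100.0}
seen = perceive(environment, ["security_queue"])
ok_now = comprehend(seen, limits)
ok_soon = project(seen, limits, horizon=5.0)
```

In this example the queue is within its limit now but is projected to exceed it within the horizon, which is the kind of information the capacity to anticipate relies on.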

To formalize multiagent situation awareness, a mathematical framework using set theory and vector representation was developed by [52]. It defines SA relations for multiagent systems (humans and nonhumans), shared SA, and also asymmetric multiagent SA, i.e., for when an agent A maintains SA regarding an agent B, while agent B does not maintain the SA of agent A. As such, the framework can be used for both prospective and retrospective analyses.
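The asymmetry described above can be illustrated by treating “maintains SA of” as a set of ordered agent pairs. This sketch does not reproduce the formalism of [52]; the relation encoding, the function names, and the agent names are assumptions used only to show what asymmetric multiagent SA means.

```python
from typing import Set, Tuple

# (a, b) in the relation means: agent a maintains SA regarding agent b.
SARelation = Set[Tuple[str, str]]


def maintains_sa(relation: SARelation, a: str, b: str) -> bool:
    """True if agent a maintains SA regarding agent b."""
    return (a, b) in relation


def asymmetric_pairs(relation: SARelation) -> SARelation:
    """Pairs (a, b) where a maintains SA of b but b does not maintain SA of a."""
    return {(a, b) for (a, b) in relation if (b, a) not in relation}


def mutual_pairs(relation: SARelation) -> SARelation:
    """Pairs (a, b) where both agents maintain SA of each other."""
    return {(a, b) for (a, b) in relation if (b, a) in relation}


# Hypothetical agents: the controller tracks both pilots, only pilot_1 tracks back.
relation: SARelation = {
    ("controller", "pilot_1"),
    ("pilot_1", "controller"),
    ("controller", "pilot_2"),
}
one_sided = asymmetric_pairs(relation)
```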

4.1.2. Sensemaking

SA is closely related to the concept of sensemaking (SM), existing at the individual and social levels. SM is “a motivated, continuous effort to understand connections, which can be among people, places, and events, to anticipate their trajectories and act effectively” [53]. SM is a process for constructing a meaningful and functional representation of some aspects of the world [54]. The process is based on active seeking and the processing of information to achieve an understanding of a situation. SM is then a process of transforming information into a knowledge product. SM is required for resilient behavior, because it is necessary to make predictions and to identify the occurred adversity, as shown in step 2 of Figure 2. Moreover, it is necessary for the decision-making process to take place before executing any modification in the system.

A promising theory for formalizing SM is the Data/Frame model [55], where frames are proposed as meaningful mental representations. They define what is seen as data and how those data are structured for mental processing. In the Data/Frame model, new perceived data are analyzed through an existing frame. This can result in refining an existing frame due to inferences, questioning the frame (if inconsistencies are detected), or reframing [54]. In [56], the authors identify possible activities during the reframing process following a surprise for an agent. They are questioning, preserving, elaborating, comparing, switching, abandoning the search for a frame, and rapid frame-switching. These processes allow agents to give sense to the situation at the individual level. Reference [57] developed a formal model for team sensemaking using network theory. Each agent’s cognitive state (beliefs, opinions, attitudes, etc.) is modeled, and all agents are connected in a communication network, while the effects of the network structure on team sensemaking are computationally investigated.

4.1.3. Monitoring

According to [32], monitoring is termed as looking for what could affect the system’s performance as well as what happens in the environment for short and longer terms. Monitoring is thus the process for a system knowing what is happening within the system or in the system’s environment. It can be a human monitoring process, for instance, a human directly observing the system or indirectly by using cameras, radar, etc. It can be indirect as well through self-monitoring [58], for instance, looking at a system’s performance indicators. Monitoring can also be automated in nature with sensors, and data may be automatically analyzed by computers, such as with a nuclear plant’s control. Consequently, monitoring is observing something with intention and includes the assessment of the perception. Monitoring is related to the concept of attention. Moreover, it includes the mechanisms of situation awareness and sensemaking for human agents. Complementary to SA and SM, monitoring enables threat recognition. It is also connected to supervision, inspection, and maintenance more generally, which play a role in resistant behaviors.

A major component of monitoring is attention. Humans need to focus on specific components. Reference [

59] developed a formal model using mathematical functions and the LEADSTO language [

60] that describes the process of a human’s state of attention over time. According to the authors, the human

state of attention refers to all objects in the environment that are in the person’s working memory at a certain time point. The model includes the person’s gaze direction, as well as the location and the characteristics of the objects involved. Furthermore, for automated monitoring, diverse formal and computational methods and tools developed through, for example, machine learning and data mining can be used.

4.1.4. Decision Making

Once an adversity has been predicted or recognized, decisions must be taken, and actions executed. Inappropriate actions might compromise the desired performance and resilience. The available resources in a system have to be used in an appropriate way. The way to use them based on decisions made plays a role in the limitation of resilient behavior, so that the system extends gracefully under increasing demands and does not collapse [

61]. Decision making (DM) is the process of choosing an option among several options. DM is present at the individual, social (collaborative DM), and organizational levels, as stated earlier. A particular type of DM plays a major role in resilience: Naturalistic Decision Making (NDM). Here, NDM is seen as a mental process based on reasoning, describing how people make decisions under time pressure and with incomplete information [

62]. Klein encompasses the processes of SA and SM in the NDM process.

The process of DM can be modeled as following three main steps. First, different options to cope with a potential or effective adversity are generated. Second, each identified option is valuated based on its degree of desirability. Those first and second steps can be made in parallel as an iterative process. Finally, depending on the valuation, an option is selected to be executed. According to [

62], the NDM process includes a prior stage of perception and recognition of situations, as well as the generation of appropriate options, which are evaluated and one is chosen, as in the classical DM process. A formal mathematical model was designed by [

63] to represent NDM through fast-and-frugal heuristics. Fast-and-frugal heuristics are task-specific decision strategies that are part of a decision maker’s repertoire of strategies for judgment and decision making. Moreover, two formal models for experience-based decision making using mathematics and the LEADSTO language were developed by [

64,

65]. The first model is based on the expectancy theory. The second model is based on the simulation hypothesis developed by [

66], where it is argued that felt emotions play an important role in the DM process.
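The three generic DM steps described above (option generation, valuation, and selection) can be illustrated with a minimal sketch. It is not one of the cited formal models: the `Option` structure, the benefit-minus-cost valuation rule, and the example repertoire are all hypothetical choices made only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Optional


@dataclass(frozen=True)
class Option:
    """A candidate action for coping with an adversity (hypothetical structure)."""
    name: str
    expected_benefit: float  # assumed scale: higher is better
    expected_cost: float     # assumed scale: higher is worse


def generate_options(adversity: str, repertoire: Dict[str, List[Option]]) -> List[Option]:
    """Step 1: generate the options known for this kind of adversity."""
    return list(repertoire.get(adversity, []))


def valuate(option: Option) -> float:
    """Step 2: score an option by its degree of desirability (illustrative rule)."""
    return option.expected_benefit - option.expected_cost


def select(options: Iterable[Option],
           valuation: Callable[[Option], float] = valuate) -> Optional[Option]:
    """Step 3: select the option with the highest valuation, or None if there is none."""
    candidates = list(options)
    if not candidates:
        return None
    return max(candidates, key=valuation)


# Hypothetical repertoire for an airport-like STS.
repertoire = {
    "runway_closure": [
        Option("divert_flights", expected_benefit=8.0, expected_cost=5.0),
        Option("hold_on_ground", expected_benefit=6.0, expected_cost=2.0),
        Option("cancel_flights", expected_benefit=9.0, expected_cost=9.0),
    ]
}
chosen = select(generate_options("runway_closure", repertoire))
```

In this sketch the first two steps are kept as separate functions, so they could be interleaved iteratively, as the text notes, before a final selection is made.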

4.1.5. Coordination

Coordination is a social mechanism that exists in any STS where several agents are present and have to execute distributed tasks. It is defined as the management of interdependencies to achieve common goals [

67]. In other terms, it is the management of interdependencies between tasks, activities and resources, such as equipment and tools [

68]. An interdependency is a relationship between a local and a nonlocal task of an agent, where the execution of one changes some performance-related characteristics associated with the other. Moreover, interdependencies between agents of all levels may be defined with respect to goals, tasks, roles, information, and resources. Coordination is necessary to perform successful adaptations for distributed tasks and consequently for an STS to exhibit resilient behavior.

The authors in [

69] argue that since humans are social beings, social aspects should be addressed not in addition but at the core of any model that involves interaction between agents. According to a well-accepted classification framework [

70], coordination mechanisms can be characterized by two dimensions. The first dimension refers to the point in time when the coordination mechanism is applied: prior to or during the actual interaction among agents. Prior coordination is used in feed-forward control, whereas feedback control is often required for coordination during the execution of tasks, especially when unexpected situations are encountered. The second dimension distinguishes explicit and implicit coordination. Agents coordinate explicitly by using task-programming mechanisms (e.g., schedules, plans, and procedures) or by verbal or written communication [

67]. Several computational frameworks have been developed to model coordination in organizational systems, including multiagent planning and multiagent scheduling, such as the ANTE and the JaCaMo frameworks [

71]. Planning is “the process of generating (possibly partial) representations of future behavior prior to the use of such plans to constrain or control that behavior. The outcome is usually a set of actions, with temporal and other constraints on them, for execution by some agent or agents” [

72]. Implicit coordination is realized through group cognition, which is based on shared knowledge of the interacting agents about the task being executed, the environment, and each other. Using this knowledge, agents anticipate actions and needs of other agents and adjust their own behavior and plans accordingly [

67,

73]. Explicit and implicit coordination mechanisms may complement each other and be used dynamically in a coordination structure.

Another important aspect in planning for resilient systems is its implementation stage. In [

74], the authors emphasize the need to integrate flexibility in planning implementation. They developed a formal model to minimize the vulnerability of the considered system while improving several parts of it, through the selection of the most adequate planning alternative. Moreover, planning plays a major role when coordination is distributed among several actors in a system [

75,

76,

77,

78,

79]. In [

79], the authors propose and illustrate the use of Multiagent Systems (MAS) as an effective tool to investigate the effects of local coordination on the global system’s performance in the context of airport movement operations.

4.1.6. Learning Capacity

Learning expands the adaptive capacity [

80]. Learning occurs at all system levels: individual, social, and organizational. At the individual level, it is a cognitive process that aims to improve future behavior based on past experience. The acquisition of skills and knowledge or the success to achieve tasks and goals for a system, or for a system’s parts, occurs at different times in the process of adverse event management. First, the process of knowledge acquisition is needed during and after disruptive events. Learning allows a system to transform

unknown unknowns events into

known unknowns events and may transform

known unknowns events into

known events (

Table 1). Causal relations between actions chosen and consequences can be learned. Errors can be corrected for upcoming similar situations. Second, the knowledge acquired from previous adverse situations is needed to manage potential or actual adverse situations. Indeed, in the anticipation process, making predictions is possible specifically thanks to experience and thus to learning. Therefore, the results of learning at earlier times influence the efficiency of the anticipatory capacity at later times. Learning is also an important component of preparedness. It makes it possible to be better prepared and to react appropriately, in other words to manage the situation efficiently, if a similar situation arises again. Learning does not contribute directly to a recovery process but contributes to the system’s adaptation by increasing the skills and knowledge of its components. If a system does not have strong learning abilities, it may still be resilient, but learning enhances a system’s resilience. Learning abilities in an STS enhance anticipatory abilities because most predictions are based on existing knowledge. Consequently, without learning abilities, the system can only react and cannot act proactively. It should be noted that learning from the past at the organizational level trades resilient behaviors for resistant behaviors. When a system learns, new procedures or protocols to manage similar situations are established. Consequently, organizational learning increases the resistance of the system.

In STS, learning is mostly performed by humans because learning is closely related to intelligence. However, technology is also capable of such an ability by the implementation of machine learning techniques. Reference [

81] proposes a way to model different types of learning as follows: First, single-loop learning is a simple error-and-correction process. Then, double-loop learning is an error-and-correction process modifying an organization’s underlying norms, policies, or objectives. Finally, deuterolearning is learning about how to carry out single-loop and double-loop learning.
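The difference between single-loop and double-loop learning can be made concrete with a small sketch. It only illustrates the distinction and is not the model of the cited reference: the `LearningUnit` class, its `gain` and `patience` parameters, and the rule that replaces the norm after repeated large errors are assumptions. Deuterolearning (learning how to carry out both loops) is not covered.

```python
from dataclasses import dataclass


@dataclass
class LearningUnit:
    """Hypothetical agent or organizational unit pursuing a performance norm."""
    norm: float            # underlying objective (e.g., a target throughput)
    action: float          # current setting the unit can adjust
    gain: float = 0.5      # assumed correction strength for single-loop learning
    patience: int = 2      # assumed number of consecutive large errors tolerated
    large_errors: int = 0  # counter of consecutive large errors

    def single_loop(self, outcome: float) -> float:
        """Error-and-correction: adjust the action, keep the norm unchanged."""
        error = self.norm - outcome
        self.action += self.gain * error
        return error

    def double_loop(self, outcome: float, tolerance: float, revised_norm: float) -> float:
        """Error-and-correction that may also modify the underlying norm."""
        error = self.single_loop(outcome)
        if abs(error) > tolerance:
            self.large_errors += 1
        else:
            self.large_errors = 0
        if self.large_errors >= self.patience:
            # The norm itself is questioned and replaced (illustrative rule).
            self.norm = revised_norm
            self.large_errors = 0
        return error


unit = LearningUnit(norm=10.0, action=0.0)
first_error = unit.single_loop(outcome=6.0)  # the action is corrected, the norm is untouched
```

Calling `double_loop` repeatedly with outcomes that stay far from the norm eventually changes `unit.norm`, which is what distinguishes it from `single_loop` in this sketch.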

4.2. Adaptation

Adaptation is needed when the system is challenged by adversity and there are no or incomplete procedures and plans. If the system fails to adapt, its local and global performance may decrease and possibly lead to a systemic disruption. As a consequence, the system’s goals cannot be pursued anymore. Reference [

82] states that for human systems, adaptation includes adjusting behavior and changing priorities in the pursuit of goals. In this framework, we consider that an adaptation is any unplanned modification of the system as a reaction to potential or actual adversity. For instance, an adaptation can be a modification of plans, schedules, human behavior, skills, knowledge, tasks, roles, goals, resources or ways of using resources, ways and means of coordination, relations, norms, etc. It can be at any system level: individual, social, and organizational.

Certain adaptations can be classified. A modification of schedules can be labeled

re-allocation of resources. Modifications of goals, tasks, roles, plans, and coordination are

reorganization in nature. The modification of skills and knowledge, either at the individual or organizational level, is

learning. Large modifications of the formal organization are called

transformations [

83]: they are fundamental changes in the STS organization, where the whole organizational structure is modified as a response to a systemic disruption. All these adaptations can be divided into two categories depending on the moment of occurrence. An

adaptive anticipation is any adaptation performed before potential adversity. An

adaptive response is any adaptation performed during or after undergoing adversity (

Table 2).

Another aim of adaptations that is sometimes present in the literature (see for instance [

84] in the nuclear power plant context or [

74] in the infrastructure planning context) is to improve the functioning of a system, often in the mid- or long-term after the occurrence of an adverse event. Stress and disturbance can bring positive consequences on the systems’ performances. In particular, new behaviors, skills, procedures, and use of resources, at the individual, social, and organizational levels, can be adopted and maintained in a way that the systems’ performances postadversity are enhanced compared to preadversity.

According to [

85], a characteristic of adaptive systems is to seek out and decrease vulnerabilities. Following this idea, the authors of [

86] developed an analytical framework to assess progress in the development of vulnerability research over six dimensions: robustness-uncertainty, participatory-subjectivity, multiscale-complexity, dynamics, multiobjective strategic capacity, and cognitive-cause effect. The authors of [

87] developed a framework called the decision framework for risk assessment methodologies, in particular for decision scaling and robust, adaptive, and flexible decision making.

4.2.1. Adaptive Anticipation

The process of identifying a potential adversity for a system or any system’s parts to make predictions, and to act accordingly, is called

anticipation. It is a future-oriented action based on a prediction [

88]. To be able to perform predictions, the system must possess the monitoring capacity and consequently SA and SM abilities. A system cannot predict if it is not able to observe and to understand what is happening within itself and its environment. Anticipation is possible for events whose nature is known (expected planned and expected unplanned). There is no possible anticipation for unexpected events because their nature is not known, so they cannot be predicted.

We distinguish two kinds of anticipation: those following procedures (such as preventive maintenance) and those performing adaptations (when there are no or incomplete procedures). They are correspondingly labeled

procedural anticipation and

adaptive anticipation. Prevention and protection are procedural anticipations, for instance, preventive or predictive maintenance which relates to planned procedures for checking or signaling a potential problem. Anticipations that follow procedures and plans contribute to resistant behavior and not to resilient behavior (

Figure 1).

The process of adaptive anticipation is described as follows (

Figure 2): (1) the system itself or its environment is observed, (2) the accumulation of cues leads to a prediction, (3) decision options are generated, (4) options are valuated, (5) a choice is made, and (6) the chosen option is executed. These steps can be made at the individual level, i.e., as a human mental process, or at the social and organizational levels. Since the adaptive capacity is what is needed to perform an adaptation, the mechanisms of the adaptive capacity as described in

Section 4.1 are represented for each step of the process (

Figure 2).
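The six steps of adaptive anticipation listed above can be sketched as a simple pipeline. This is an illustrative sketch and not the formalization cited later in this section: the function names, the representation of cues as numbers, and the threshold rule used for the prediction step are assumptions.

```python
from typing import Callable, List, Optional, Sequence


def predict_adversity(cues: Sequence[float], threshold: float) -> bool:
    """Step 2: accumulated cues lead to a prediction once they reach a threshold (assumed rule)."""
    return sum(cues) >= threshold


def anticipate(observe: Callable[[], Sequence[float]],
               threshold: float,
               generate: Callable[[Sequence[float]], List[str]],
               valuate: Callable[[str], float],
               execute: Callable[[str], None]) -> Optional[str]:
    """Run one pass of the anticipation process; return the executed option, if any."""
    cues = observe()                               # (1) observation
    if not predict_adversity(cues, threshold):     # (2) prediction
        return None                                # nothing predicted, nothing to adapt
    options = generate(cues)                       # (3) option generation
    if not options:
        return None
    scored = [(valuate(o), o) for o in options]    # (4) valuation
    _, best = max(scored, key=lambda pair: pair[0])  # (5) choice
    execute(best)                                  # (6) execution
    return best


# Hypothetical usage: cue strengths and option values are made up for illustration.
executed: List[str] = []
values = {"add_staff": 2.0, "reroute_traffic": 3.5}
result = anticipate(
    observe=lambda: [0.4, 0.3, 0.5],
    threshold=1.0,
    generate=lambda cues: list(values),
    valuate=lambda option: values[option],
    execute=executed.append,
)
```

Passing the steps as callables reflects the point made in the text that each step may be carried out by an individual, a team, or an organization.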

The capacity to learn is essential to the capacity to adapt. The possibility to make predictions of a potential adverse event is based on knowledge acquired during previous similar situations. Moreover, in the DM process, the knowledge learned extends the number of options, and makes the valuation of these options more accurate, as well as the choice of the most appropriate one. As soon as an option is executed, the observation of the effects is also a way of learning for the system or for parts of the system. Moreover, humans or technical systems can learn while observing other humans or technical systems doing their tasks. Therefore, learning plays a role in most of the steps of the process of adaptation.

The goal of anticipation is to avoid adverse events, to delay the time of occurrence of adverse events, or to mitigate the negative effects of adverse events. Moreover, anticipation can be considered at different aggregation levels of an organization. For example, individual anticipation is essential for implicit coordination, whereas anticipation at the organizational level may concern large-scale hazards or issues with the organizational functioning.

There is an inherent uncertainty in the process of adaptive anticipation. First, predictions made regarding potential adverse events are always uncertain. Because of the complexity of STS, these predictions might or might not come true. Second, during the process of decision making, the generated options are associated with uncertainty regarding their effectiveness in solving the problem. It is difficult, or sometimes impossible, to know in advance whether an option or a strategy to solve the considered problem will effectively solve it. Large sociotechnical systems, because of their numerous components and interdependencies, are too complex for the real consequences of a system’s reorganization to be fully predicted. In particular, the chosen strategy to allocate some resources might not correspond to the real need once the adversity has occurred and is being managed. A new strategy can be decided at that moment, integrating the new information about the situation. In the case of very large uncertainty, called deep uncertainty [

89], the actors can decide to either gain time to delay the adverse event effects or to choose a preliminary option while being prepared for a change of options in case the prediction was inaccurate [

90]. Deep uncertainty is defined as a situation in which the relevant actors do not know or cannot agree upon: how likely various future states are, how the system would work, and how to value the various outcomes of interest [

89]. An effective approach to dealing with deep uncertainty is the Dynamic Adaptive Policy Pathways [

91]. In this approach, system actors use transient scenarios that integrate planning processes. Plans are made for anticipatory and corrective actions to handle vulnerabilities and opportunities. Moreover, a monitoring system allows actors to assess whether the actions’ consequences follow the preferred pathway or whether other adaptations are required.

The mechanism of adaptive anticipation has been formalized by [

92] using the Temporal Trace Language [

40], an expressive formal language able to represent temporal, spatial, qualitative, quantitative, and stochastic properties. The aforementioned steps of the anticipatory process are detailed and formalized in this language.

4.2.2. Adaptive Responding

The process of executing responses to adversity is called

responding. Similarly to anticipation, we distinguish two kinds of responses: those following procedures and plans (such as corrective or reactive maintenance) and those that are adaptations (when there are no or incomplete procedures). They are correspondingly labeled

procedural responding and

adaptive responding. An adaptive response is an adaptation performed during or after the system undergoes adversity. The goal is to minimize negative consequences following an adverse event and/or to recover from it (

Table 2). Adaptive responding follows the process as described in

Figure 2: (1) the system itself or its environment is observed, (2) the adversity that has occurred is identified, (3) decision options are generated, (4) options are valuated, (5) a choice is made, and (6) the chosen option is executed. These steps can be made at the individual level, i.e., as a human mental process, or at the social and organizational levels.

One of the possible responses to adversity a system can make is its reorganization. Reorganization is a system’s ability to change, for instance through the modification of plans, schedules, tasks, goals, resources, or roles. Reorganization can be investigated through the formal framework developed by [

93]. Moreover, the Adaptive Multiagent Systems (AMAS) field formalizes the dynamics of multiagent organizations (see for instance [

93,

94,

95,

96]).

5. Resistance Versus Resilience

There is a large debate among scholars about the nature of robustness/resistance and resilience. In accordance with several authors [

61,

97], we consider that robustness is a different concept than resilience. In this framework, we understand robustness following the Alderson and Doyle scheme: “Robustness is always of the form: system

X has property

Y that is robust in sense

Z to perturbation

W” [

98]. For STS, one can apply this definition as follows: a system (

X) is robust if it maintains a desirable level of performance (

Y) by use of buffers or following designed procedures or plans (sense

Z) for a given adverse event (

W). Robustness can be interpreted as having two components. The first component is passive, represented by buffers, such as redundancies or consumables. This is related to the absorptive capacity as defined by [

28]. The second component is active, for instance when procedures, protocols, or rules have to be followed in response to a particular adverse event. This is related to both the absorptive and recovery capacities. As a consequence of Alderson and Doyle’s definition, a system may be robust regarding one adverse event but vulnerable with regard to a different adverse event. Robustness is closely related to the concept of resistance.

In this framework, resistance is defined as follows: “a system is resistant if it is robust regarding a large set of adverse events”. Resistance is then the generalization of robustness. Following the definitions of resilience and resistance, resistance is not a sort of low-degree resilience but a property of a different nature than resilience. When procedures are incomplete, there is a need to adapt, which cannot be achieved through resistance. It should be noted that what is termed a “large set” of adverse events is subjective and depends on the interests of the different system’s stakeholders.
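The relation between robustness (with respect to one adverse event) and resistance (with respect to a large set of adverse events) can be sketched as follows. This is an illustration under stated assumptions and not part of the framework itself: performance is reduced to a single number per event, and the subjective notion of a “large set” is approximated by a stakeholder-chosen `coverage` fraction, which is hypothetical.

```python
from typing import Callable, Iterable


def is_robust(performance_under: Callable[[str], float],
              event: str,
              desired_level: float) -> bool:
    """System X keeps property Y (performance >= desired level) under perturbation W (event)."""
    return performance_under(event) >= desired_level


def is_resistant(performance_under: Callable[[str], float],
                 events: Iterable[str],
                 desired_level: float,
                 coverage: float = 0.9) -> bool:
    """Resistant if robust for at least `coverage` of the considered events (assumed criterion)."""
    considered = list(events)
    if not considered:
        return False
    robust_count = sum(is_robust(performance_under, e, desired_level) for e in considered)
    return robust_count / len(considered) >= coverage


# Hypothetical performance levels maintained through buffers and procedures alone.
performance = {"power_outage": 0.95, "storm": 0.90, "cyberattack": 0.40}
robust_to_storm = is_robust(performance.__getitem__, "storm", desired_level=0.8)
robust_to_attack = is_robust(performance.__getitem__, "cyberattack", desired_level=0.8)
```

Here the same system is robust regarding one event and vulnerable regarding another, and whether it counts as resistant depends on the coverage that stakeholders require.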

A consequence of the distinction between resistance and resilience is that the absorptive capacity is not considered to be a resilience capacity. Reference [

28] defines absorptive capacity as the degree to which a system can absorb the impacts of system perturbations and minimize subsequent consequences with little or no effort. We consider “little or no effort” to describe the case in which a system undergoes adverse events and only the availability of resources is challenged, because the resources are usable immediately.

Functional and physical redundancies are the extent to which elements, systems, or other units of analysis exist that are substitutable, i.e., capable of satisfying functional requirements in the event of disruption [

29]. Functional redundancy (i.e., having multiple ways to perform functions) and physical redundancy (several entities performing the same functions but spatially separated) are consequently buffers because they have been designed and integrated into the system. The presence of redundancies and protections reinforces the absorptive capacity of a system and therefore its resistance.

According to [

28], recovery/restorative capacity is “the rapidity of return to normal operations and system reliability”. It includes all necessary processes and characteristics that increase the rapidity of returning to a normal system functioning such as the repairability. Repairability refers to the ease of repair in a technological system or a part of it in terms of time, knowledge, and costs. The speed of recovery is an indicator of adversity management performance. However, since both procedural responding and adaptive responding contribute to the process of recovering a system’s functions, the recovery process and its speed are not an attribute of only resilience—except for when there are unexpected events.

Metaphorically, resistance is about stability, and resilience is about adaptivity, because resistance is related to the management of expected adversity, and resilience is related to the management of unexpected adversity. They are intrinsically intertwined because both play a role in maintaining a desirable level of performance of real-world STS [

99].

To summarize, the adaptive capacity contributes to resilient behavior. The absorptive capacity contributes to the resistance of a system. The process to recover plays a role in both resistance and resilience. To efficiently manage adversity, an STS should be resistant and resilient. However, surprises can be managed only by means of adaptation.

6. Discussion

In this section, we discuss three main aspects of STS resilience and give research directions for future work. First, we suggest that resilience is an emergent phenomenon. Second, we discuss system performance indicators as the measure of the effects of performed adaptations. Third, we discuss a promising way to formally model STS resilience at the systemic level.

6.1. The Nature of STS Resilience

The presented framework shows that many mechanisms and capabilities are required for a system to be resilient. Safety-critical STS resilience can then be understood as an emergent phenomenon produced by a set of nonlinear dynamical adaptive mechanisms (such as situation awareness, sensemaking, monitoring, decision making, coordination, and learning), and system modifications, that handle unplanned and unexpected adverse events before, during, or after they occur, making possible the pursuit of associated objectives. The emergence of STS resilience has several origins. Resilience is intrinsically related to intelligence. It is problem-solving oriented, where the appropriate decisions have to be made and the actions appropriately executed. Cognitive processes are necessary to perform adaptations, such as mentioned in the framework: perception, understanding, attention, learning, and reasoning, as these are involved in decision making and planning processes. As a consequence of STS resilience being an emergent phenomenon, it is difficult to measure the resilience itself. However, the effects of performed adaptations reflected in a set of performance indicators can be quantitatively measured through formal modeling and simulations.

6.2. Desired Level of Performance

It is possible to define and measure the performance indicators (PIs) of a system. Examples of PIs include risk, costs, throughput, achieved goals, and utility. Often, they depend on the considered STS. PIs can be defined at the level of mechanisms (SA, coordination, anticipation, etc.) and combinations of mechanisms, as well as at the systemic level. For unexpected events, the time to recover is a PI measure for resilience. The relationships between inputs (characteristics of adverse events) and PIs can be investigated in the context of specific case studies.

Performance indicators are often divided into two categories: leading indicators and lagging indicators. Traditionally, in particular in the area of safety, lagging indicators are often used to measure organizational performance over some past period. Although such indicators provide aggregated statistics and information concerning general past trends, they are reactive in nature and are not well suited for anticipation or even prevention of undesirable events. Leading indicators are, on the contrary, focused on future (safety) performance, and they are proactive in nature. Such indicators are essential for the processes of adaptive anticipation. Since these indicators are future oriented, they are more difficult to measure. Such indicators could, for example, be captured by machine learning models, in particular, to predict future trends. Furthermore, some of these indicators could be inferred from the analysis of formal models of adaptive resilience mechanisms. In this way, they would form precursors to some essential model outputs and could be measured and acted upon before these outputs have taken place.

In the framework, a system exhibits resilient behavior if it stays in its envelope of desired level of performance while undergoing unplanned or unexpected adversity. The concept of “desired level” is subjective; it depends on the values of the stakeholders. If the level of performance goes below the desired level because of an adverse event, there is a need to recover the system’s performances. However, if it happens, the system was not resilient or resistant in the first place. Furthermore, for safety-critical STS, it is not acceptable for the performance level (in particular, risks) to drop below its defined envelope of performance because unacceptable risks need to be avoided in any situation.
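The notions of an envelope of desired performance and of time to recover can be illustrated on a sampled performance trace. The sketch below is only an illustration: the representation of performance as a list of samples, the function names, and the sampling step `dt` are assumptions, and the upper bound is included because some PIs (such as risk) are constrained from above.

```python
from typing import Optional, Sequence


def stays_in_envelope(trace: Sequence[float],
                      lower: float,
                      upper: float = float("inf")) -> bool:
    """True if every sampled performance value lies within [lower, upper]."""
    return all(lower <= p <= upper for p in trace)


def time_to_recover(trace: Sequence[float],
                    desired_level: float,
                    dt: float = 1.0) -> Optional[float]:
    """Time from the first drop below the desired level until it is reached again.

    Returns None if performance never drops, and infinity if it never recovers
    within the trace.
    """
    drop_index = None
    for i, p in enumerate(trace):
        if drop_index is None:
            if p < desired_level:
                drop_index = i
        elif p >= desired_level:
            return (i - drop_index) * dt
    return None if drop_index is None else float("inf")


# Hypothetical normalized performance samples around an adverse event.
trace = [1.0, 0.9, 0.6, 0.5, 0.7, 0.85, 0.95]
within = stays_in_envelope(trace, lower=0.8)
recovery_time = time_to_recover(trace, desired_level=0.8)
```

In this example the trace leaves the envelope, so the behavior would not count as resilient in the sense above, and the recovery time is the quantity that remains to be assessed.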

6.3. Modeling STS Resilience

Safety-critical STS are complex systems. Because of their complexity and to quantify the effects of adaptation, there is a need for formal models at the systemic level to analyze resilience more extensively. Such formal models enable the understanding and the improvement of real-world STS resilience. Using such models, one can computationally simulate different scenarios and analyze the results. As a possible way to formalize resilience, a theory developed by [

82] integrates a base adaptive capacity to deal with expected adverse events and an additional adaptive capacity to deal with unexpected events. A well-known method in the field of resilience engineering is the FRAM (Functional Resonance Analysis Method). This method consists of producing a representation of daily activities of a system in terms of the functions needed to execute them and the ways they are interdependent [

100]. Although there are some attempts to formalize this method to quantitatively investigate STS resilience (see [

101] for the complex network approach and [

102] for the fuzzy logic approach), no model exists that formally integrates the functioning of the individual/social cognition and behavior mechanisms.

Models for resilience for interdependent critical infrastructural systems have been reviewed by [

103]. Six groups of models are identified: empirical approaches, agent-based approaches, system-dynamics-based approaches, economic-theory-based approaches, network-based approaches, and others. However, those models do not address all the essential aspects of safety-critical STS. On the one hand, to model the organizational layer of safety-critical STS, the top-down control structure needs to be formally represented, for example, by hierarchical components. On the other hand, to model the adaptive capacity of STS, the bottom-up self-adaptations need to be formally represented. General Systems Theory (GST) provides a suitable formal language for modeling hierarchical, multilevel systems [

104]. Specifically, organizational structures can be represented by a hierarchical arrangement of interconnected decision-making components. The main focus in such hierarchies is on top-down control and coordination, and interlevel interdependencies among components. GST is a language based on set theory. Complementary to GST, languages of Complex Adaptive Systems theory and its prominent tool, Agent-Based Modeling (ABM), can capture the effects of bottom-up self-adaptations. Agents are autonomous decision-making entities, able to interact with the environment and other agents by observation, communication, and actions. Both humans and technical systems can be represented by agents. A computational agent cognitive architecture developed by [

105] is used to investigate the behavior of safety-critical STS such as airports. Several of the adaptive capacity mechanisms are present or can be added: situation awareness, sensemaking, monitoring, and decision making. The modularity of the ABM permits the inclusion of other adaptive mechanisms such as learning or coordination. The three layers (operational, tactical, and strategic) correspond to three agent behaviors, depending on the agent’s current state and its interactions with the environment. The ABM paradigm can be applied to the domains of aviation, health care, and nuclear energy production (for aviation, see for instance [

106,

107]). A suitable formal language for the specification of ABM of complex STS is the Temporal Trace Language [

40,

108]. Based on logic, the Temporal Trace Language (TTL) can formally represent the dynamic properties of systems and can be used to formalize this framework.

6.4. Limitations of the Study and Future Work

The presented framework has some limitations. The methodological steps described in

Section 1.3 “7 Validate the conceptual framework” and “8 Rethink the conceptual framework” are not included in the study. The validation will be performed after conducting several case studies. Each case study should investigate a mechanism that contributes to the adaptive capacity of the considered system. The eighth and last step, “Rethink the framework”, corresponds to the revision of the framework to integrate future research on STS resilience, coming from both scholars and practitioners. Since different scientific fields contribute to the understanding of STS resilience (such as human cognitive and social modeling, disruption and crisis management, resilience engineering, safety and risk assessment, and organizational research), new insights could be integrated in the framework in the future. In particular, additional mechanisms, properties, and capacities could be added if considered to be necessary to STS resilience.

Future research will extend the presented framework. Two adaptive mechanisms have already been formalized and analyzed in the context of adaptive STS resilience (the anticipation mechanism [

92] and the coordination mechanism for decentralized control [

79]); however, further work is required to investigate the effects of each of the other mechanisms, and of combinations of these mechanisms, on the system’s adaptive capacity, as well as the effects of the system’s adaptations on the global PIs. This need stems from the inherently emergent nature of resilience, in which local adaptations give rise to certain global behaviors. Relations between the inputs to mechanisms (such as adverse events and their characteristics) and the output indicators that need to be maintained (such as PIs) will be investigated through case studies. PIs could also be indicators reflecting the workings of mechanisms and characteristics of both resilience and resistance. Moreover, output indicators can come from different stakeholders, and utility functions could be defined through them. Finally, the unification of GST and TTL-based ABM in a hybrid formal modeling framework will be possible because both languages are based on set theory and use its algebraic structure.

7. Conclusions

This paper presents a conceptual framework for the resilience of safety-critical STS that integrates the different capacities and mechanisms required for an STS to be resilient. To model and understand STS resilience, it emphasizes the need to connect local modifications executed in a system, in reaction to potential or actual adversity, to the system’s global performance. Four main conclusions can be drawn about this framework.

First, comprehensive meanings are given to the relationships adaptation-resilience and resistance-resilience. For the adaptation-resilience relationship, the distinction is made through the actual efficiency of the adaptations performed: in a resilient system, the adaptations should lead to the maintenance of the desired performance. For the resistance-resilience relationship, resistance is the capacity of a system to be challenged by adversity without being impacted, because the system has buffers (such as physical redundancies or unused resources) or formal procedures to manage adverse events.

Second, many identified mechanisms and capacities presented in other frameworks for resilience are integrated in a coherent way. This framework includes: absorption, adaptation, adaptive capacity, anticipation, avoidance, coordination, decision making, expectation, learning, monitoring, perception, prediction making, projection, recovery, resilience, resistance, resources, responding, robustness, sensemaking, situation awareness, threat recognition, and unexpectation. Relevant relations between these concepts are also explained.

Third, the framework places humans in the center. Human intelligence plays a key role in successful adaptations, mainly because resilience is problem-solving oriented. Therefore, to formally study STS resilience, human capabilities must be represented at the core of the models.

Fourth, conceptual insights are provided into the understanding of resilience as an emergent phenomenon. This argument is supported by the presence of numerous multilevel, multiscale, heterogeneously nonlinear mechanisms and capabilities that all interact to maintain the desirable global level of performance.

In conclusion, the proposed framework provides more structure and meaning to the complex and multifaceted concept of sociotechnical adaptive resilience. As first steps toward the formal modeling of resilience, we also suggested relevant formal frameworks for the identified mechanisms for adaptive capacity and adaptation. The proposed framework and many of its components can be used to pursue the formalization of resilience, its modeling, and its operationalization in real-world STS.