An Efficient Approach to Automatic Construction of 3D Watertight Geometry of Buildings Using Point Clouds

Abstract

1. Introduction

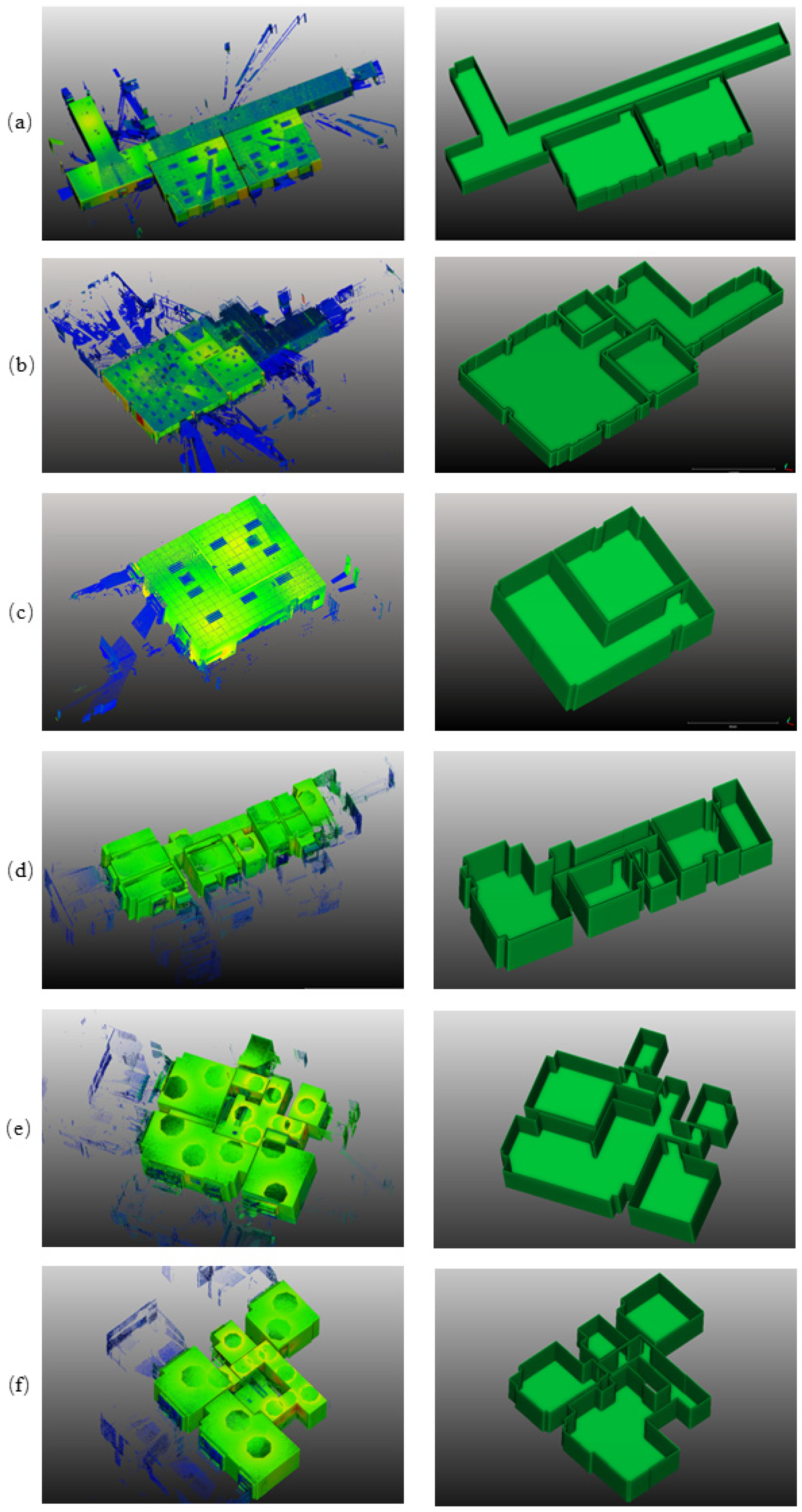

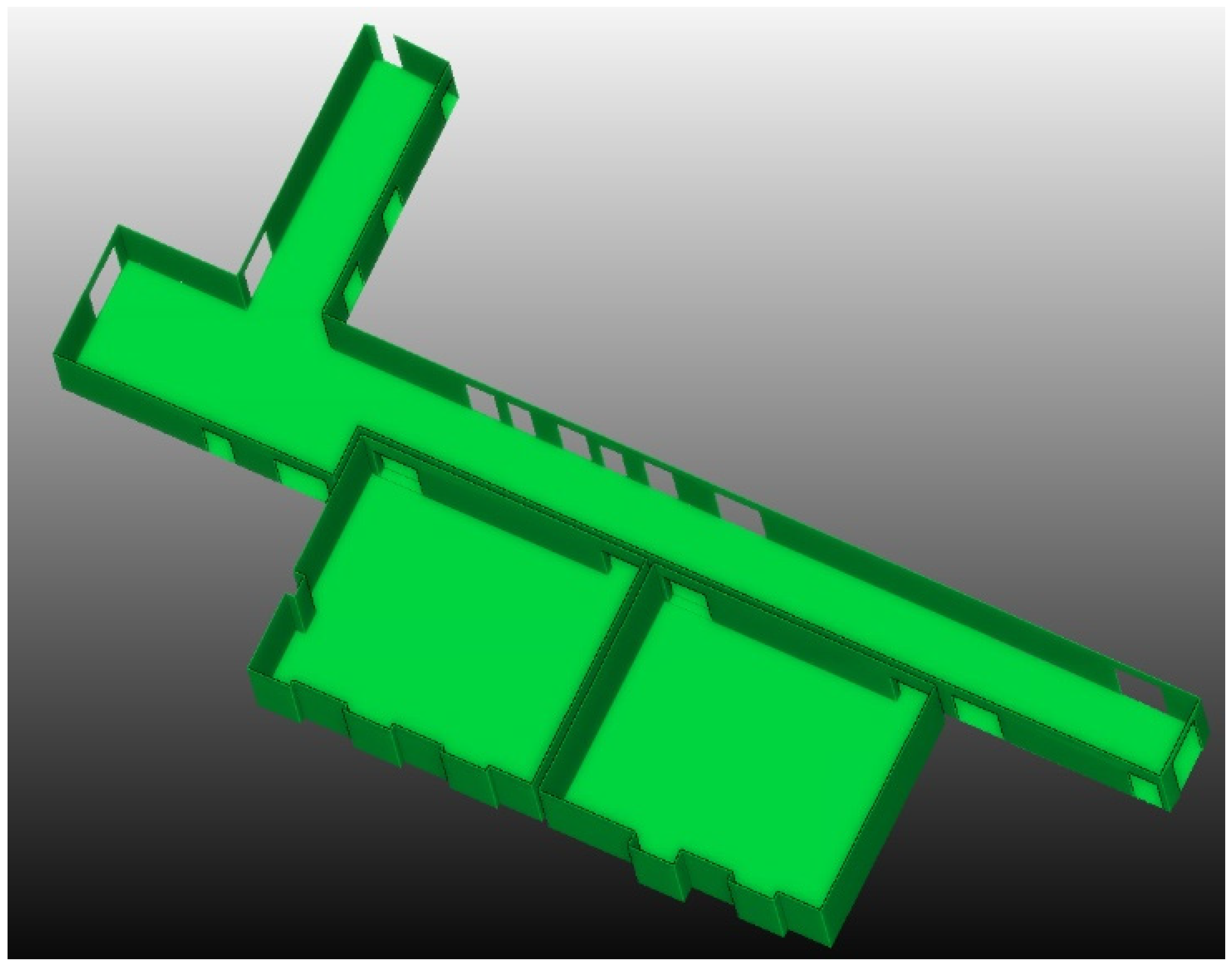

2. Materials and Methods

2.1. Study Sites and Data

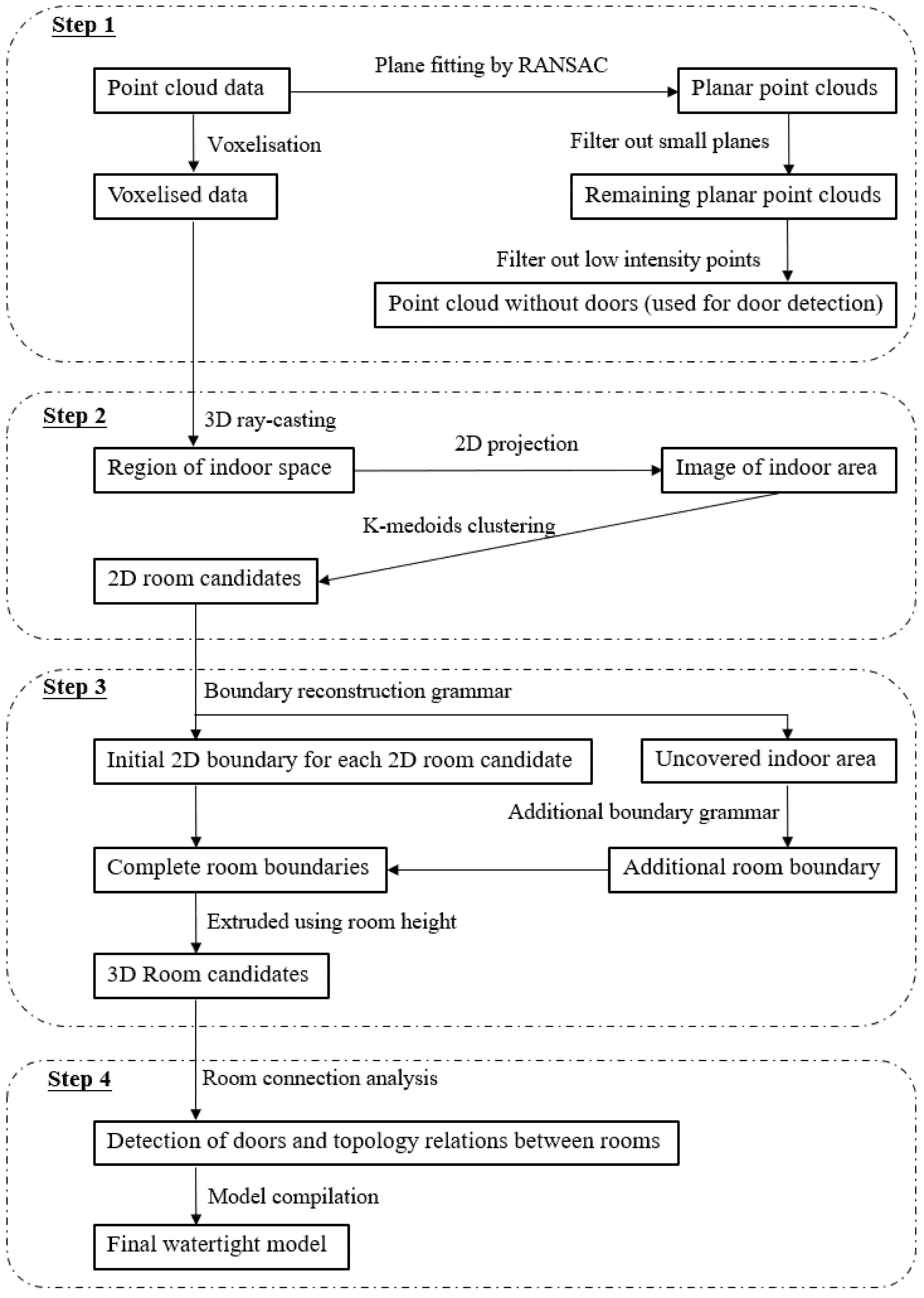

2.2. Methodology

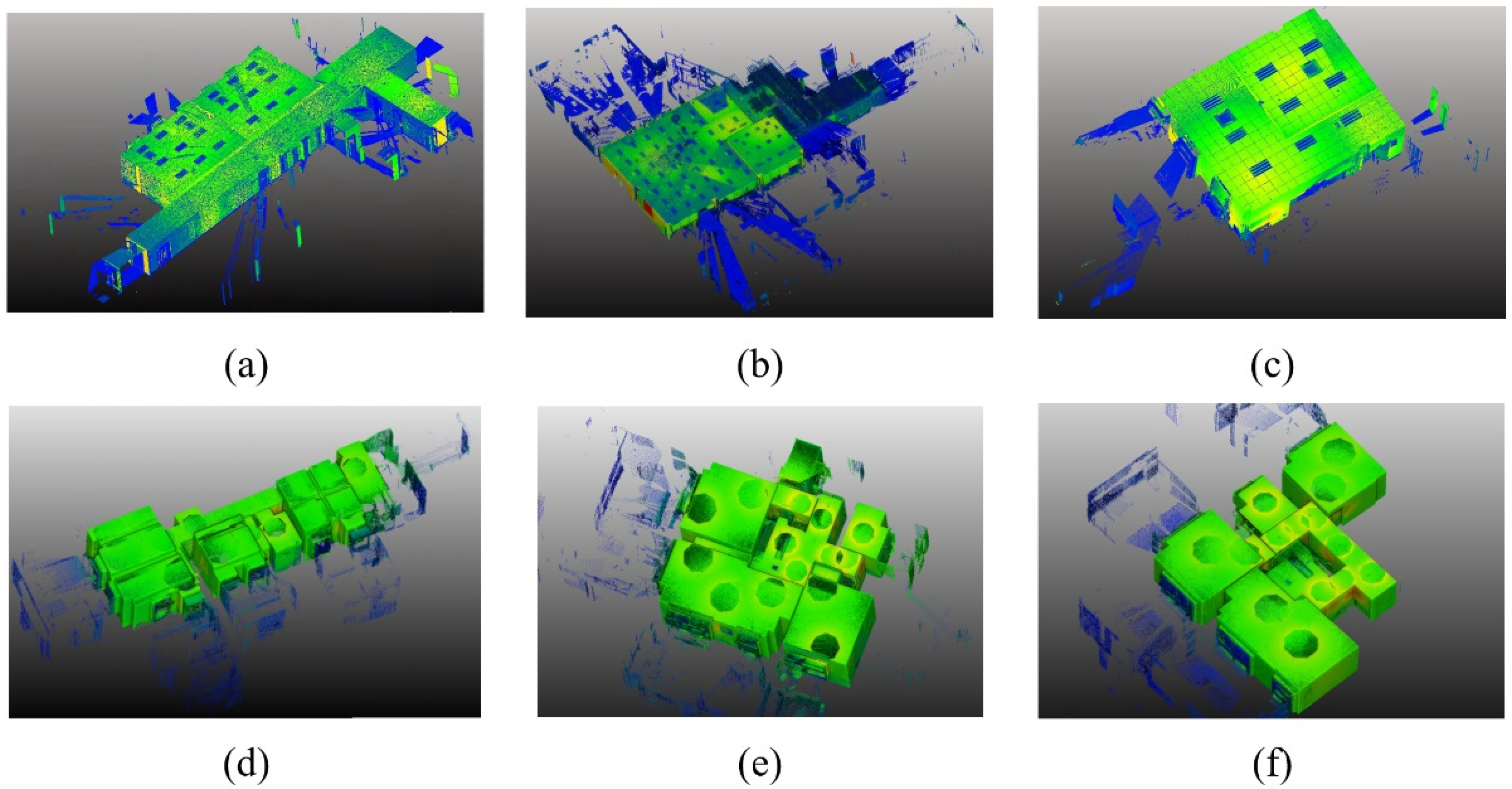

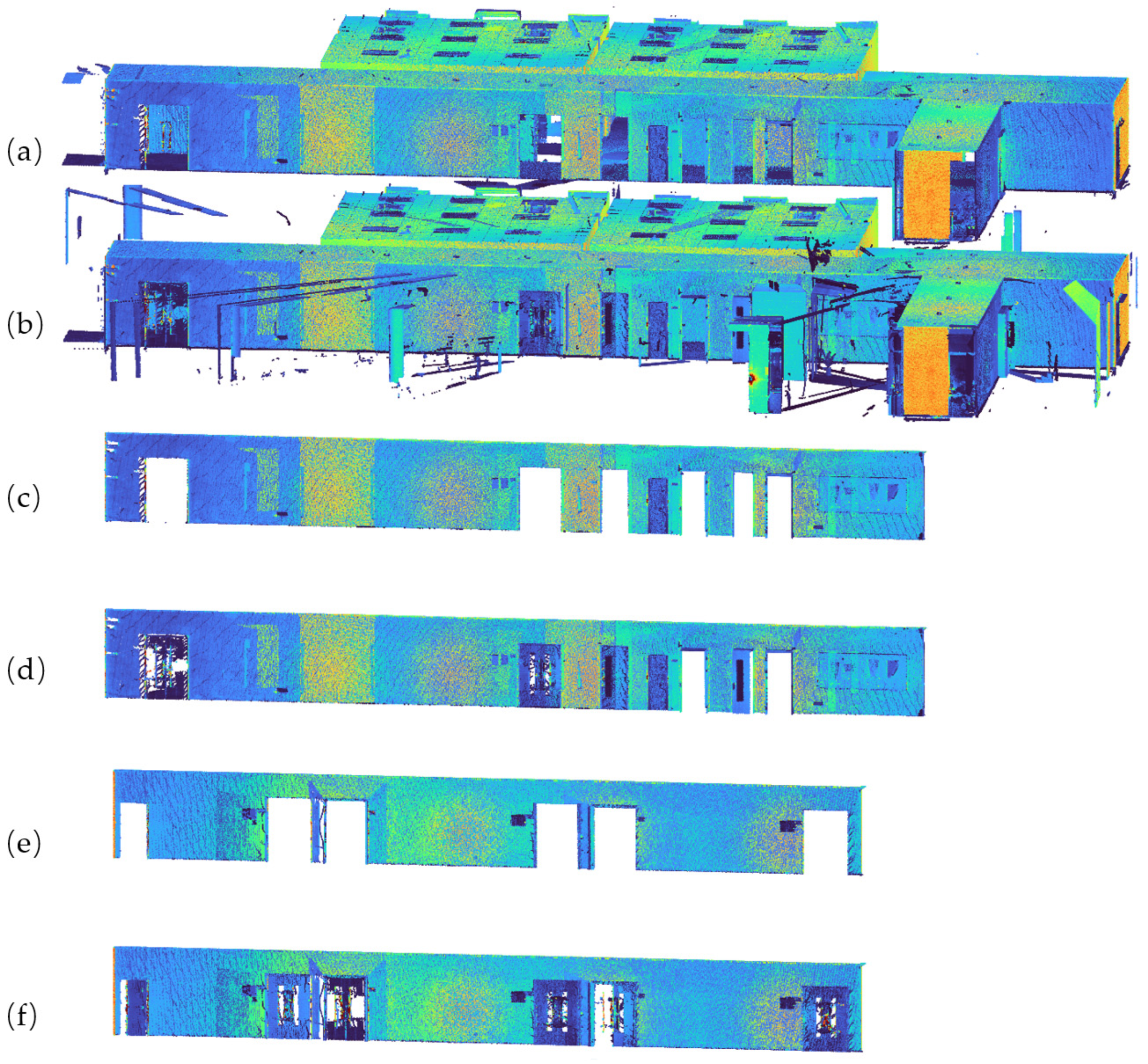

2.2.1. Pre-Processing (Step 1)

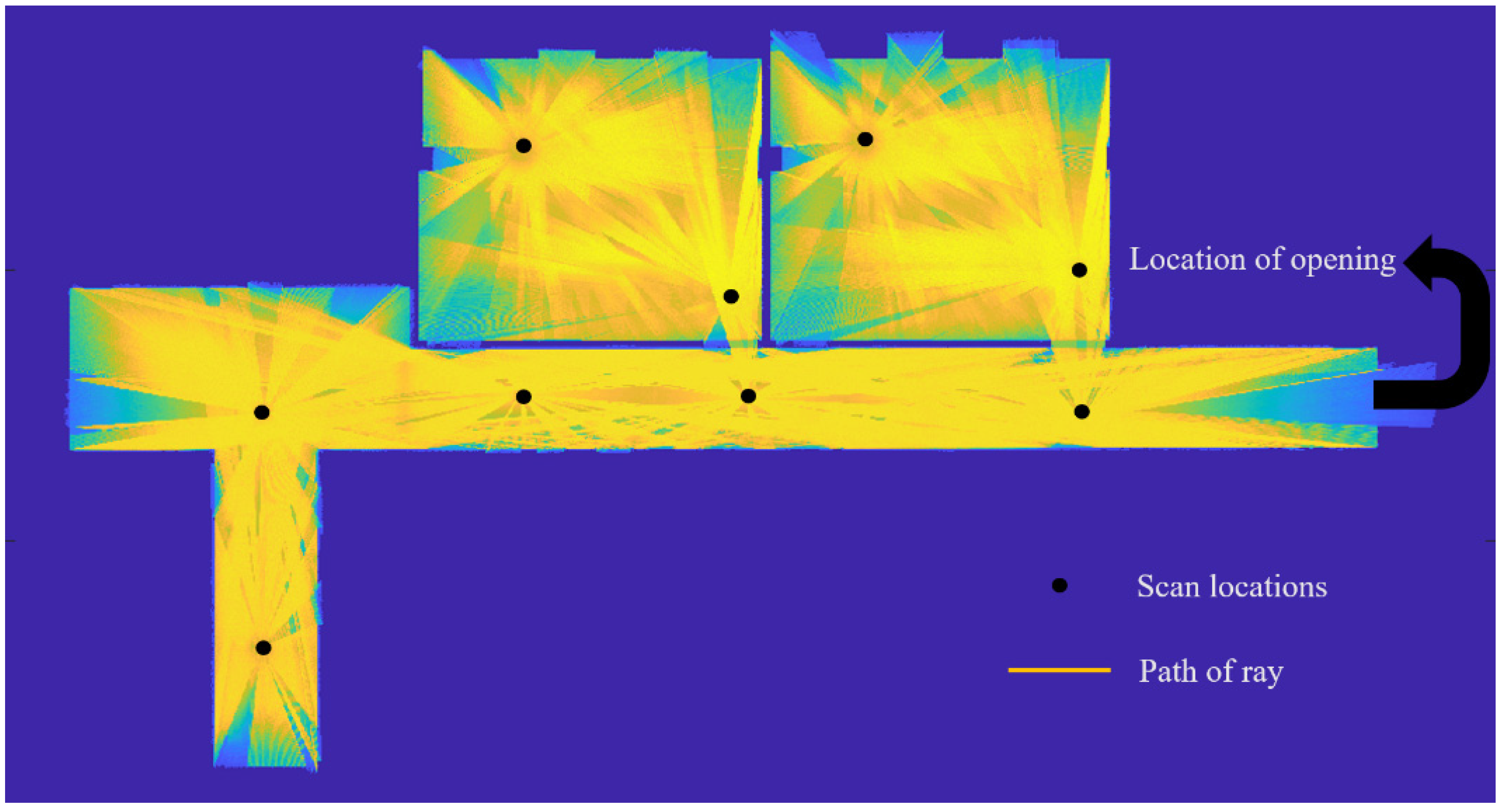

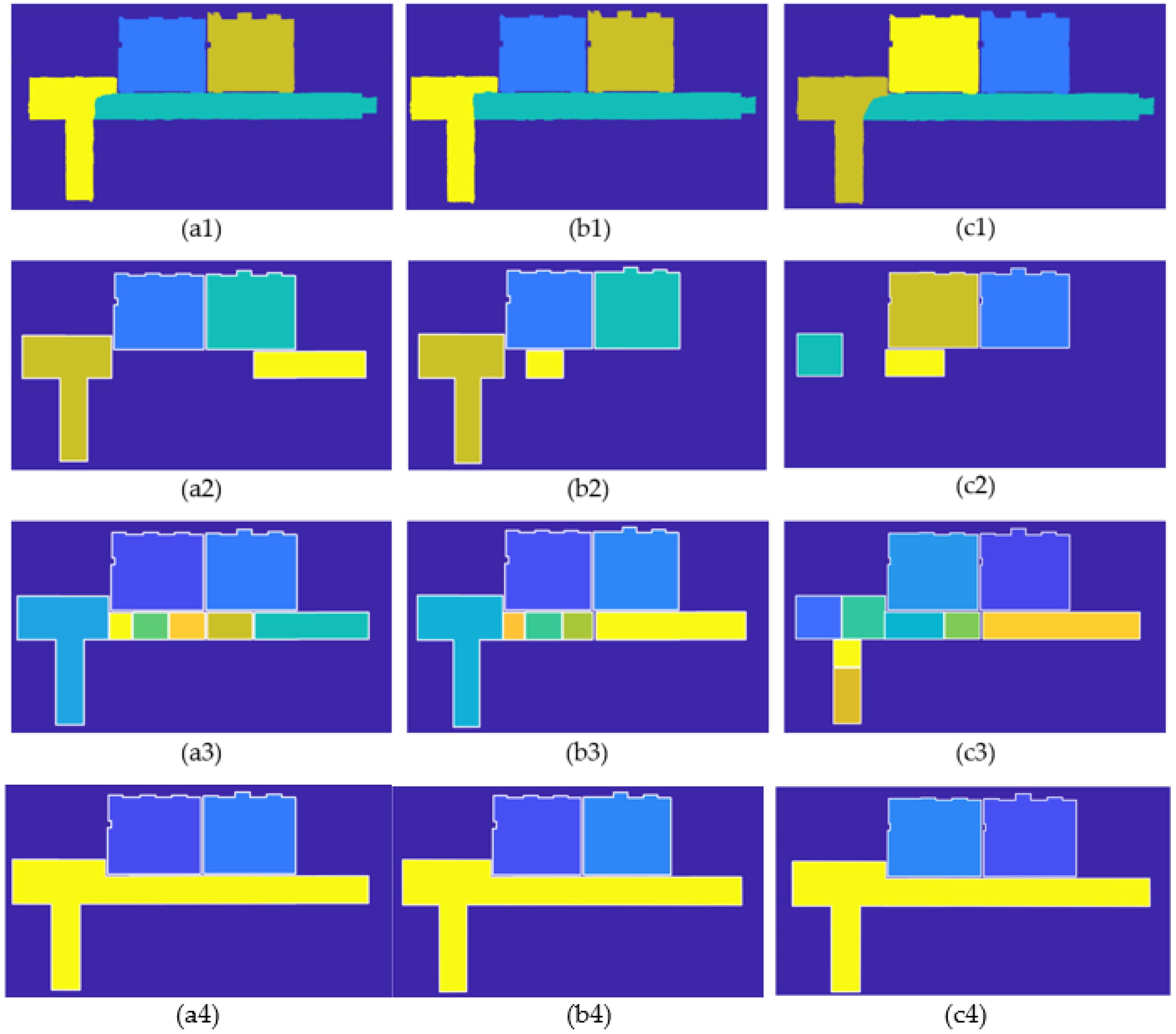

2.2.2. Segmentation of 2D Room Candidates (Step 2)

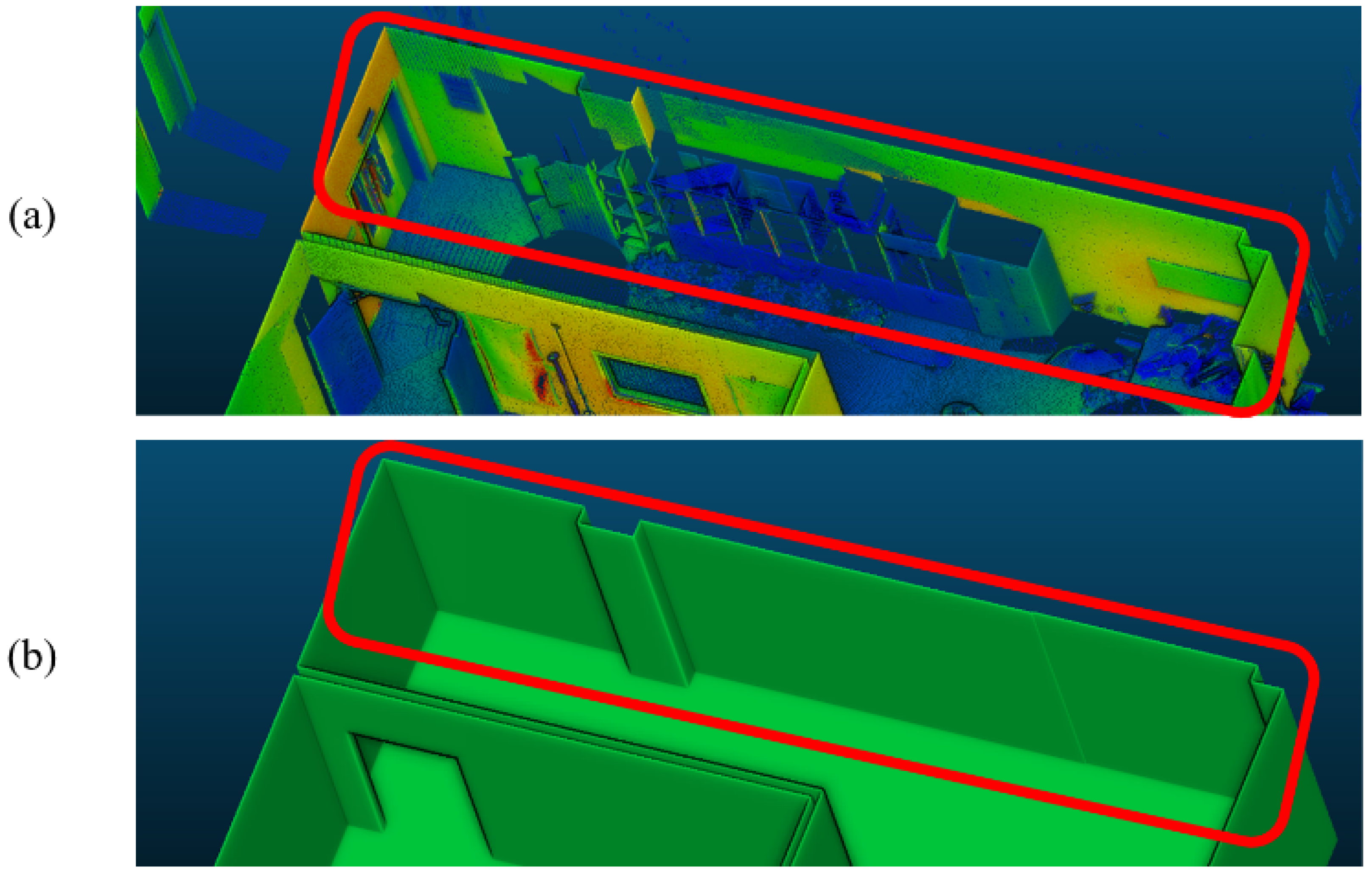

2.2.3. Three-Dimensional Reconstruction of Room Candidates (Step 3)

- For every rectangle, at least one edge is in contact with a boundary pixel of the segmented indoor area (Rule 1).

- The first rectangle is fitted through the minimization process shown in Equation (1), which maximises the size of the rectangle while keeping its aspect ratio close to 1. This rule was found to significantly reduce the number of subsequently fitted rectangles needed to cover the entire indoor area, and thus the overall processing time. Equation (1) is defined in terms of the width and the height of the rectangle.

- The newly fitted rectangles must touch but not intersect with the existing rectangles.

- When no more rectangles can be fitted, the boundary of each 2D room candidate is determined using the Dijkstra algorithm (i.e., searching for the shortest path along the boundary of the fitted rectangles) [61].

- The size of the uncovered indoor area is larger than 5% (determined by tests) of the average size of the reconstructed 2D boundaries. This rule is a quick filter for small independent areas.

- The size of the largest rectangle within the uncovered indoor area is larger than 5% (determined by tests) of the average size of the reconstructed 2D boundaries. This rule is a more detailed filter for small independent areas.

- The distance between the largest rectangle within the uncovered indoor area and the closest reconstructed boundary should be within 0.5 m.

- The average point density within the uncovered indoor area is larger than 50% of the average point density in the reconstructed 2D boundaries.
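The four conditions on uncovered indoor areas can be read as a single acceptance test. The sketch below is a minimal illustration, assuming the conditions are applied jointly (the text does not state how they are combined). The class `UncoveredArea`, its field names, and the function `passes_rules` are hypothetical names introduced here; only the 5%, 0.5 m, and 50% thresholds come from the rules above.

```python
from dataclasses import dataclass


@dataclass
class UncoveredArea:
    """Measurements of one uncovered indoor region (hypothetical container)."""
    area_m2: float                     # size of the uncovered region
    largest_rect_area_m2: float        # size of the largest rectangle inside it
    dist_to_nearest_boundary_m: float  # gap from that rectangle to the closest reconstructed boundary
    point_density: float               # average point density inside the region


def passes_rules(area, mean_boundary_area_m2, mean_boundary_density,
                 size_ratio=0.05, max_gap_m=0.5, density_ratio=0.5):
    """Return True if the region satisfies all four rules (assumed to be combined with AND)."""
    min_size = size_ratio * mean_boundary_area_m2
    return (
        area.area_m2 > min_size                            # quick size filter
        and area.largest_rect_area_m2 > min_size           # detailed size filter
        and area.dist_to_nearest_boundary_m <= max_gap_m   # within 0.5 m of a boundary
        and area.point_density > density_ratio * mean_boundary_density
    )


# Illustrative values only, not data from the study sites.
candidate = UncoveredArea(area_m2=2.0, largest_rect_area_m2=1.5,
                          dist_to_nearest_boundary_m=0.3, point_density=800.0)
print(passes_rules(candidate, mean_boundary_area_m2=20.0,
                   mean_boundary_density=1000.0))
```

Ordering the checks from cheapest to most expensive mirrors the description of the first size rule as a quick filter, since `and` stops at the first condition that fails.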

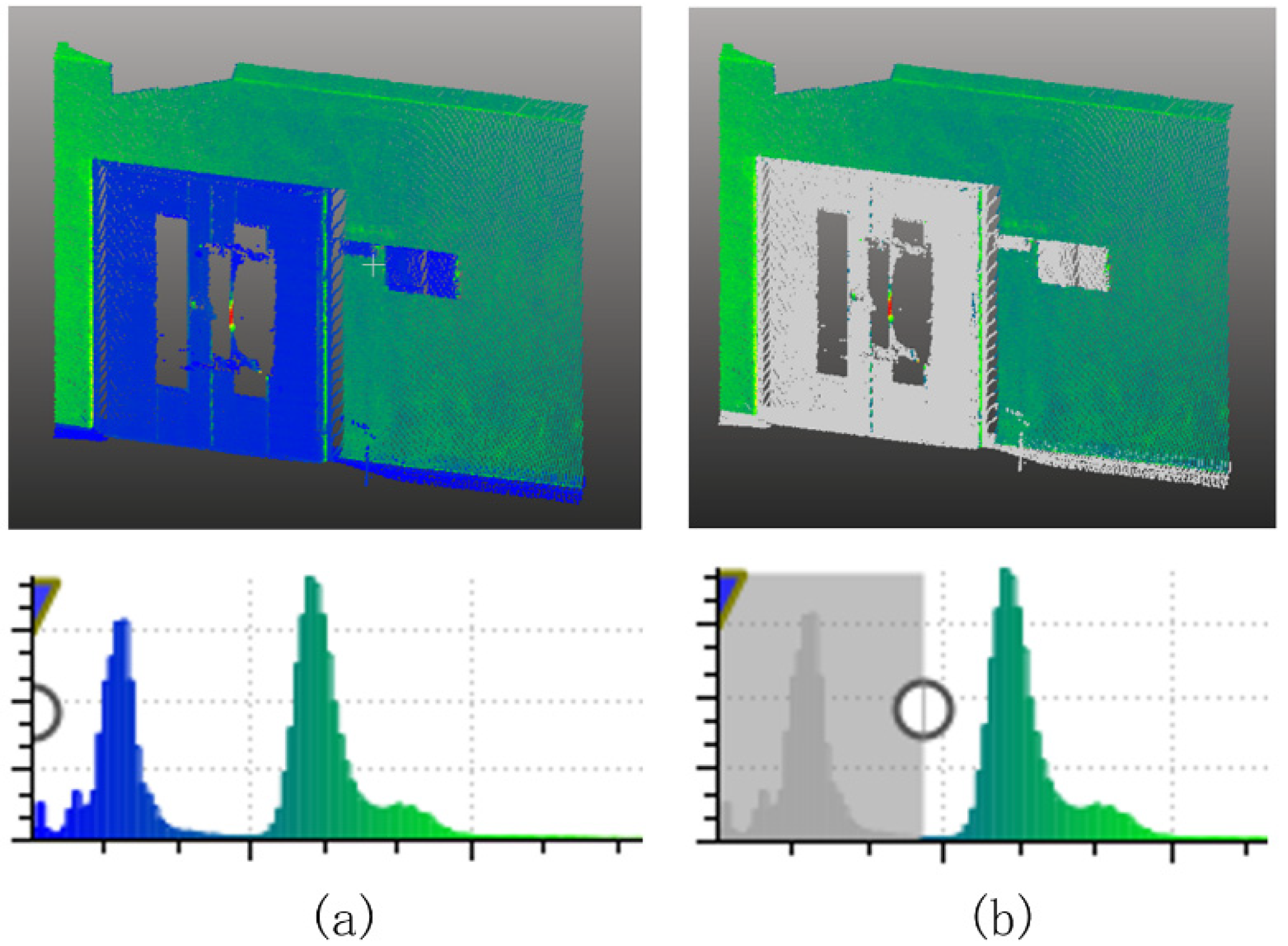

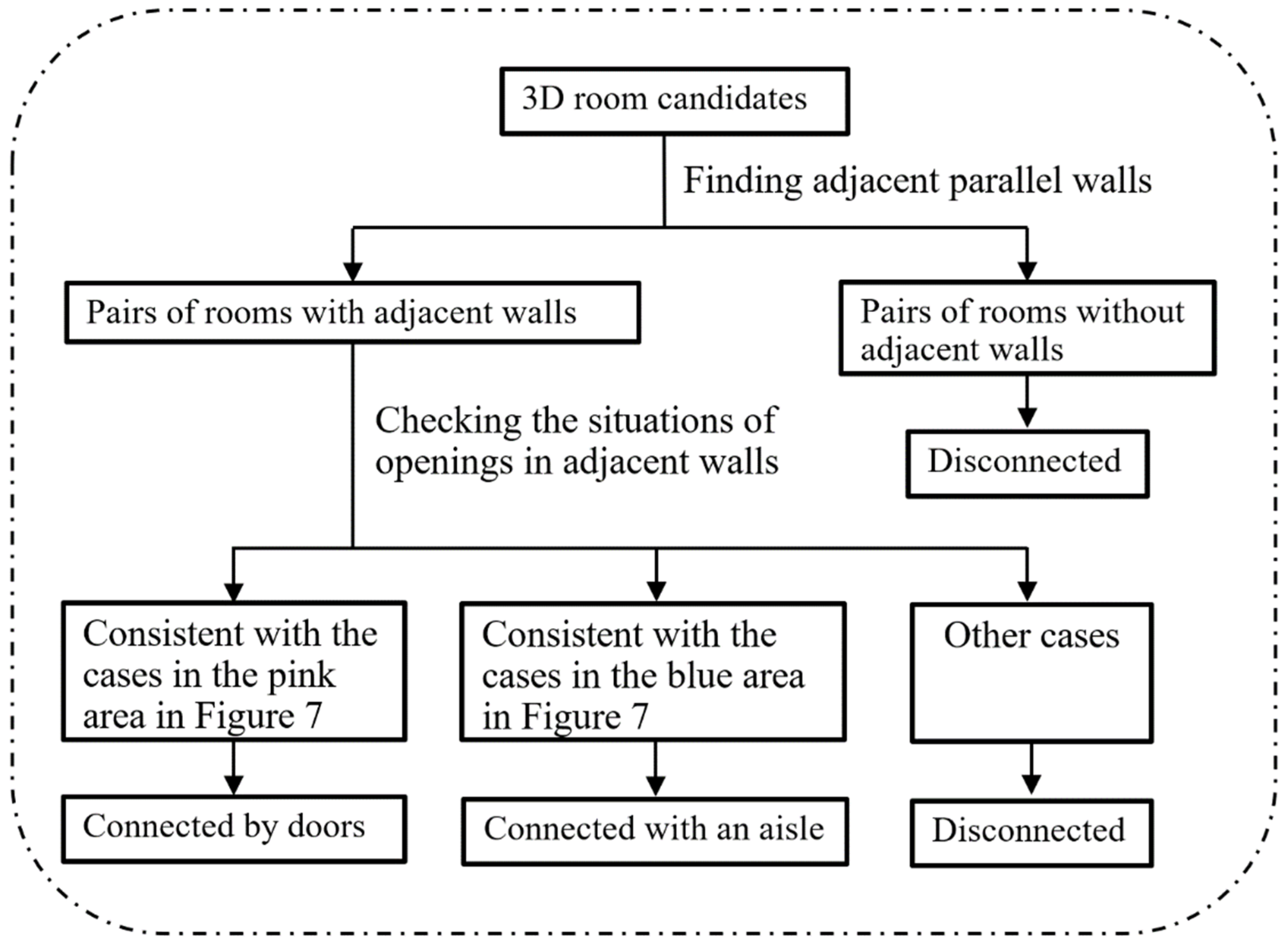
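The boundary-tracing rule in this section relies on the Dijkstra algorithm [61] to find the shortest path along the edges of the fitted rectangles. The sketch below shows the algorithm on a small graph whose nodes are rectangle corners and whose edge weights are Euclidean lengths. How the authors build their graph is not described in the text, so the graph construction (`build_graph`), the function names, and the example outline are assumptions made for illustration.

```python
import heapq
import math


def build_graph(edges):
    """Undirected graph {node: {neighbour: length}} from corner-to-corner edges."""
    graph = {}
    for a, b in edges:
        length = math.dist(a, b)
        graph.setdefault(a, {})[b] = length
        graph.setdefault(b, {})[a] = length
    return graph


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm; returns (path, length) or (None, inf) if unreachable."""
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry
        visited.add(node)
        if node == goal:
            break
        for nxt, weight in graph.get(node, {}).items():
            nd = d + weight
            if nd < dist.get(nxt, math.inf):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    if goal not in dist:
        return None, math.inf
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    path.reverse()
    return path, dist[goal]


# Hypothetical L-shaped outline formed by two touching rectangles (units: m).
corners = [(0, 0), (4, 0), (4, 2), (6, 2), (6, 5), (0, 5)]
edges = list(zip(corners, corners[1:] + corners[:1]))
graph = build_graph(edges)
path, length = shortest_path(graph, (0, 0), (6, 2))
print(path, length)  # [(0, 0), (4, 0), (4, 2), (6, 2)] 8.0
```

In this example the two possible routes around the outline have lengths 8 m and 14 m, so the shorter one is returned.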

2.2.4. Connection Analysis for 3D Room Candidates (Step 4)

- If two adjacent walls have no opening, or their openings do not match one of the situations shown in Figure 7, the corresponding 3D room candidates are labelled as disconnected.

- The adjacent 3D room candidates are merged if they are connected by an aisle.

- Otherwise, doors are constructed at the openings between the 3D room candidates.
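The three rules above amount to a small decision procedure followed by a merge step. The sketch below is one possible reading, not the authors' implementation. The names `Link`, `classify_link`, and `merge_rooms` are hypothetical, and the test against the situations in Figure 7 is reduced to a boolean input because the figure is not reproduced here. Merging is done with a simple union-find structure, which is a choice made for this sketch.

```python
from enum import Enum


class Link(Enum):
    DISCONNECTED = "disconnected"
    MERGE = "merge"   # connected by an aisle
    DOOR = "door"     # connected by an opening that becomes a door


def classify_link(has_valid_opening, is_aisle):
    """Classify the relation between two adjacent 3D room candidates.

    has_valid_opening: an opening exists and matches a Figure 7 situation
                       (assumed to be decided elsewhere).
    is_aisle:          the connection is an aisle rather than a doorway.
    """
    if not has_valid_opening:
        return Link.DISCONNECTED
    return Link.MERGE if is_aisle else Link.DOOR


def merge_rooms(room_ids, links):
    """Union-find: candidates joined by MERGE links end up with the same label."""
    parent = {r: r for r in room_ids}

    def find(r):
        while parent[r] != r:
            parent[r] = parent[parent[r]]  # path halving
            r = parent[r]
        return r

    for (a, b), link in links.items():
        if link is Link.MERGE:
            parent[find(a)] = find(b)
    return {r: find(r) for r in room_ids}


# Illustrative layout: candidates 1 and 2 share an aisle, 2 and 3 share a doorway.
links = {
    (1, 2): classify_link(has_valid_opening=True, is_aisle=True),
    (2, 3): classify_link(has_valid_opening=True, is_aisle=False),
}
labels = merge_rooms([1, 2, 3], links)
print(labels)
```

Candidates 1 and 2 receive the same label, while candidate 3 keeps its own label and is linked to the merged room by a door.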

3. Results

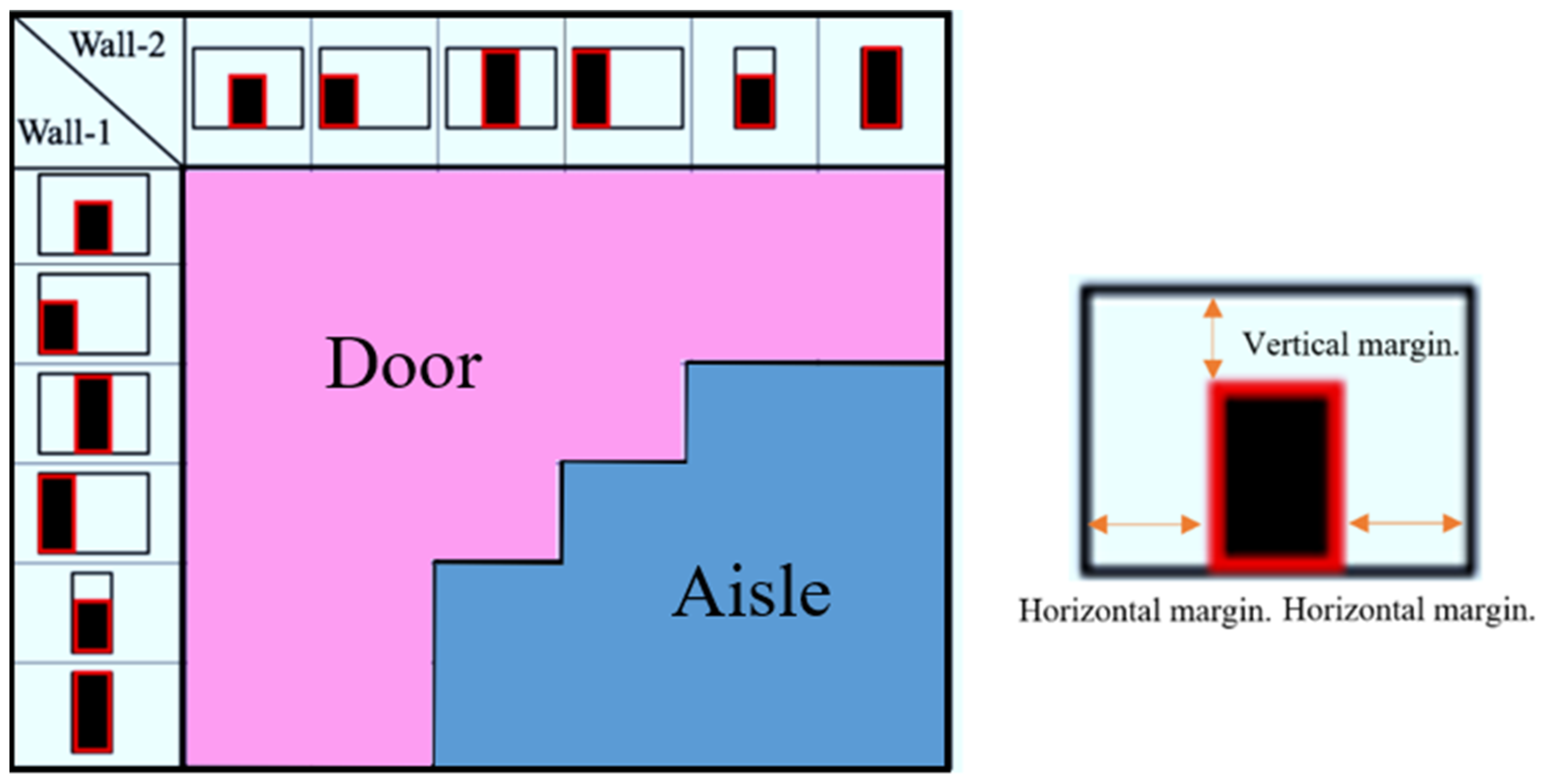

- The size thresholds for a wall opening are 0.6 m (width) by 1.8 m (height), since most doors are larger than this.

- At least one margin between the opening and its nearest wall boundary is larger than the corresponding margin threshold mentioned in Section 2.2.3.
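The two opening criteria can be expressed as a short predicate. This is a minimal sketch under stated assumptions: the function name `is_door_opening` and its parameters are hypothetical, the size comparison is assumed to be inclusive, and the margin threshold is passed in as an argument because its value is not given in this part of the text.

```python
def is_door_opening(width_m, height_m, margins_m, margin_threshold_m,
                    min_width_m=0.6, min_height_m=1.8):
    """Return True if a wall opening meets both criteria listed above.

    margins_m:          distances from the opening to its nearest wall boundaries
    margin_threshold_m: margin threshold from Section 2.2.3 (value supplied by the caller)
    """
    big_enough = width_m >= min_width_m and height_m >= min_height_m
    has_margin = any(m > margin_threshold_m for m in margins_m)
    return big_enough and has_margin


# Illustrative values only; the 0.5 m margin threshold here is a placeholder.
print(is_door_opening(0.9, 2.1, margins_m=[0.1, 0.8], margin_threshold_m=0.5))
print(is_door_opening(0.5, 2.1, margins_m=[0.8], margin_threshold_m=0.5))
```

The first call accepts the opening because it exceeds the size thresholds and one margin is large enough; the second rejects it because the opening is narrower than 0.6 m.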

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- EUR-Lex-31993L0076. Council Directive 93/76/EEC of 13 September 1993 to Limit Carbon Dioxide Emissions by Improving Energy Efficiency (SAVE). Available online: https://eur-lex.europa.eu/legal-content/EN/ALL/?uri=CELEX%3A31993L0076 (accessed on 1 April 2021).

- EUR-Lex-02012L0027-20200101. Directive 2012/27/EU of the European Parliament and of the Council of 25 October 2012 on Energy Efficiency. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:02012L0027-20200101 (accessed on 1 April 2021).

- IDAE. Plan Nacional Integrado de Energía y Clima (PNIEC) 2021–2030. Available online: https://www.idae.es/informacion-y-publicaciones/plan-nacional-integrado-de-energia-y-clima-pniec-2021-2030 (accessed on 1 April 2021).

- Becerik-Gerber, B.; Jazizadeh, F.; Li, N.; Calis, G. Application areas and data requirements for BIM-enabled facilities management. J. Constr. Eng. Manag. 2012, 138, 431–442.

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. “Structure-from-Motion” photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

- Drury, B.; Curtis, O.; Linda, K.; Frederick, C. EnergyPlus: Energy simulation program. ASHRAE J. 2000, 42, 49–56.

- Diakité, A.A.; Zlatanova, S. Spatial subdivision of complex indoor environments for 3D indoor navigation. Int. J. Geogr. Inf. Sci. 2018, 32, 213–235.

- Montilla, Y.M.; León-Sánchez, C. 3D modelling of a building oriented to indoor navigation system for users with different mobility conditions. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, VI-4/W2-2020, 103–109.

- Zhang, C.; Ong, L. Sensitivity analysis of building envelop elements impact on energy consumptions using BIM. Open J. Civ. Eng. 2017, 7, 488–508.

- Nizam, R.S.; Zhang, C.; Tian, L. A BIM based tool for assessing embodied energy for buildings. Energy Build. 2018, 170, 1–14.

- Nikoohemat, S.; Diakité, A.A.; Zlatanova, S.; Vosselman, G. Indoor 3D reconstruction from point clouds for optimal routing in complex buildings to support disaster management. Autom. Constr. 2020, 113, 103109.

- Akcamete, A.; Akinci, B.; Garrett, J.H., Jr. Potential utilization of building information models for planning maintenance activities. In Proceedings of the 17th International Workshop on Intelligent Computing in Civil and Building Engineering, Tampere, Finland, 5–7 July 2018.

- Valinejadshoubi, M.; Bagchi, A.; Moselhi, O. Development of a BIM-based data management system for structural health monitoring with application to modular buildings: Case study. J. Comput. Civ. Eng. 2019, 33, 5019003.

- O’Shea, M.; Murphy, J. Design of a BIM integrated structural health monitoring system for a historic offshore lighthouse. Buildings 2020, 10, 131.

- Zou, Y.; Kiviniemi, A.; Jones, S.W. A review of risk management through BIM and BIM-related technologies. Saf. Sci. 2017, 97, 88–98.

- Enshassi, M.S.A.; Walbridge, S.; West, J.S.; Haas, C.T. Integrated risk management framework for tolerance-based mitigation strategy decision support in modular construction projects. J. Manag. Eng. 2019, 35, 5019004.

- Chen, K.; Lu, W. Bridging BIM and building (BBB) for information management in construction: The underlying mechanism and implementation. Eng. Constr. Archit. Manag. 2019, 26, 1518–1532.

- Darko, A.; Chan, A.P.C.; Yang, Y.; Tetteh, M.O. Building information modeling (BIM)-based modular integrated construction risk management—Critical survey and future needs. Comput. Ind. 2020, 123, 103327.

- Adan, A.; Huber, D. 3D reconstruction of interior wall surfaces under occlusion and clutter. In Proceedings of the International Conference on 3D Imaging, Modeling, Processing, Visualization and Transmission, Zurich, Switzerland, 13–15 October 2012.

- Sanchez, V.; Zakhor, A. Planar 3D modeling of building interiors from point cloud data. In Proceedings of the International Conference on Image Processing, ICIP, Orlando, FL, USA, 30 September–3 October 2012.

- Wang, R.; Xie, L.; Chen, D. Modeling indoor spaces using decomposition and reconstruction of structural elements. Photogramm. Eng. Remote Sensing 2017, 83, 827–841.

- Tran, H.; Khoshelham, K.; Kealy, A.; Díaz-Vilariño, L. Shape grammar approach to 3D modeling of indoor environments using point clouds. J. Comput. Civ. Eng. 2019, 33, 4018055.

- Ochmann, S.; Vock, R.; Klein, R. Automatic reconstruction of fully volumetric 3D building models from oriented point clouds. ISPRS J. Photogramm. Remote Sens. 2019, 151, 251–262.

- Sanchez, J.; Denis, F.; Dupont, F.; Trassoudaine, L.; Checchin, P. Data-driven modeling of building interiors from lidar point clouds. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, V-2-2020, 395–402.

- Nurunnabi, A.; Belton, D.; West, G. Diagnostic-robust statistical analysis for local surface fitting in 3D point cloud data. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 1, 269–274.

- Schnabel, R.; Wahl, R.; Klein, R. Efficient RANSAC for point-cloud shape detection. Comput. Graph. Forum 2007, 26, 214–226.

- Budroni, A.; Boehm, J. Automated 3D reconstruction of interiors from point clouds. Int. J. Archit. Comput. 2010, 8, 55–73.

- Barnea, S.; Filin, S. Segmentation of terrestrial laser scanning data using geometry and image information. ISPRS J. Photogramm. Remote Sens. 2013, 76, 33–48.

- Torr, P.H.S.; Zisserman, A. MLESAC: A new robust estimator with application to estimating image geometry. Comput. Vis. Image Underst. 2000, 78, 138–156.

- Rusu, R.B.; Marton, Z.C.; Blodow, N.; Holzbach, A.; Beetz, M. Model-based and learned semantic object labeling in 3D point cloud maps of kitchen environments. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009.

- Pu, S.; Vosselman, G. Automatic extraction of building features from terrestrial laser scanning. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 25–27.

- Previtali, M.; Scaioni, M.; Barazzetti, L.; Brumana, R. A flexible methodology for outdoor/indoor building reconstruction from occluded point clouds. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, II-3, 119–126.

- Thomson, C.; Boehm, J. Automatic geometry generation from point clouds for BIM. Remote Sens. 2015, 7, 11753–11775.

- Tran, H.; Khoshelham, K. A stochastic approach to automated reconstruction of 3D models of interior spaces from point clouds. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, IV-2/W5, 299–306.

- Díaz-Vilariño, L.; Khoshelham, K.; Martínez-Sánchez, J.; Arias, P. 3D modeling of building indoor spaces and closed doors from imagery and point clouds. Sensors 2015, 15, 3491–3512.

- Hong, S.; Jung, J.; Kim, S.; Cho, H.; Lee, J.; Heo, J. Semi-automated approach to indoor mapping for 3D as-built building information modeling. Comput. Environ. Urban Syst. 2015, 51, 34–46.

- Xiong, X.; Adan, A.; Akinci, B.; Huber, D. Automatic creation of semantically rich 3D building models from laser scanner data. Autom. Constr. 2013, 31, 325–337.

- Gröger, G.; Plümer, L. Derivation of 3D indoor models by grammars for route planning. Photogramm. Fernerkundung Geoinf. 2010, 2010, 191–206.

- Khoshelham, K.; Díaz-Vilariño, L. 3D modelling of interior spaces: Learning the language of indoor architecture. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, XL-5, 321–326.

- Becker, S. Generation and application of rules for quality dependent façade reconstruction. ISPRS J. Photogramm. Remote Sens. 2009, 64, 640–653.

- Müller, P.; Wonka, P.; Haegler, S.; Ulmer, A.; van Gool, L. Procedural modeling of buildings. In Proceedings of the ACM SIGGRAPH 2006 Papers, SIGGRAPH ’06, Boston, MA, USA, 30 July–3 August 2006.

- Wonka, P.; Wimmer, M.; Sillion, F.; Ribarsky, W. Instant architecture. In Proceedings of the ACM SIGGRAPH 2003 Papers, SIGGRAPH ’03, San Diego, CA, USA, 27–31 July 2003.

- Becker, S.; Peter, M.; Fritsch, D. Grammar-supported 3D indoor reconstruction from point clouds for “as-Built” BIM. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, II-3/W4, 301–302.

- Marvie, J.E.; Perret, J.; Bouatouch, K. The FL-system: A functional L-system for procedural geometric modeling. Vis. Comput. 2005, 21, 329–339.

- Sanabria, S.L.; Mitchell, W.J. The logic of architecture: Design, computation, and cognition. Technol. Cult. 1992, 33, 625.

- Bassier, M.; Yousefzadeh, M.; Vergauwen, M. Comparison of 2D and 3D wall reconstruction algorithms from point cloud data for as-built BIM. J. Inf. Technol. Constr. 2020, 25, 173–192.

- Macher, H.; Landes, T.; Grussenmeyer, P. From point clouds to building information models: 3D semi-automatic reconstruction of indoors of existing buildings. Appl. Sci. 2017, 7, 1030.

- Turner, E.; Zakhor, A. Watertight planar surface meshing of indoor point-clouds with voxel carving. In Proceedings of the International Conference on 3D Vision, 3DV 2013, Seattle, WA, USA, 29 June–1 July 2013.

- Parish, Y.I.H.; Müller, P. Procedural modeling of cities. In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques, SIGGRAPH 2001, Los Angeles, CA, USA, 12–17 August 2001.

- Kelly, G.; McCabe, H. A survey of procedural techniques for city generation. ITB J. 2006, 7, 342–351.

- Stiny, G.; Gips, J. Shape grammars and the generative specification of painting and sculpture. In Proceedings of the Information Processing, IFIP Congress 1971, Volume 2, Ljubljana, Slovenia, 23–28 August 1971.

- Boulch, A.; Houllier, S.; Marlet, R.; Tournaire, O. Semantizing complex 3D scenes using constrained attribute grammars. Eurograph. Symp. Geom. Process. 2013, 32, 33–34.

- Dick, A.R.; Torr, P.H.S.; Cipolla, R. Modelling and interpretation of architecture from several images. Int. J. Comput. Vis. 2004, 60, 111–134.

- Bassier, M.; Vergauwen, M. Topology reconstruction of BIM wall objects from point cloud data. Remote Sens. 2020, 12, 1800.

- Oesau, S.; Lafarge, F.; Alliez, P. Indoor scene reconstruction using feature sensitive primitive extraction and graph-cut. ISPRS J. Photogramm. Remote Sens. 2014, 90, 68–82.

- Turner, E.; Zakhor, A. Multistory floor plan generation and room labeling of building interiors from laser range data. In Communications in Computer and Information Science; Springer: Berlin/Heidelberg, Germany, 2015; Volume 550.

- CBRE Build. Available online: https://www.cbrebuild.com/ (accessed on 5 January 2021).

- Ochmann, S.; Vock, R.; Wessel, R.; Klein, R. Automatic reconstruction of parametric building models from indoor point clouds. Comput. Graph. 2016, 54, 94–103.

- Ambrus, R.; Claici, S.; Wendt, A. Automatic room segmentation from unstructured 3-D data of indoor environments. IEEE Robot. Autom. Lett. 2017, 2, 749–756.

- Turner, E.; Zakhor, A. Floor plan generation and room labeling of indoor environments from laser range data. In Proceedings of the 9th International Conference on Computer Graphics Theory and Applications, Lisbon, Portugal, 5–8 January 2014.

- Dijkstra, E.W. A note on two problems in connexion with graphs. Numer. Math. 1959, 1, 269–271.

- Park, H.S.; Jun, C.H. A simple and fast algorithm for K-medoids clustering. Expert Syst. Appl. 2009, 36, 3336–3341.

| Performance metric | Site 1 | Site 2 | Site 3 | Site 4 | Site 5 | Site 6 |
|---|---|---|---|---|---|---|
| Number of input points | 476,417,203 | 469,140,411 | 40,143,412 | 4,933,172 | 4,534,136 | 4,227,235 |
| Run time (s) | 237 | 284 | 131 | 107 | 122 | 149 |
| Mean point-to-model error (mm) | 13 | 18 | 13 | 21 | 19 | 16 |
| Max point-to-model error (mm) | 21 | 29 | 20 | 27 | 27 | 25 |
| Door detection rate (%) | 100 | 100 | 100 | 100 | 100 | 100 |
| Room detection rate (%) | 100 | 100 | 100 | 100 | 100 | 100 |
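The run times in the table are easier to compare once they are normalised by input size. The short calculation below derives a throughput in points per second for each site and the average of the mean point-to-model errors. The figures are copied from the table; the variable names and the choice of these two summary quantities are illustrative and are not metrics reported by the authors.

```python
# Values copied from the performance table above.
points = {
    "Site 1": 476_417_203, "Site 2": 469_140_411, "Site 3": 40_143_412,
    "Site 4": 4_933_172, "Site 5": 4_534_136, "Site 6": 4_227_235,
}
run_time_s = {
    "Site 1": 237, "Site 2": 284, "Site 3": 131,
    "Site 4": 107, "Site 5": 122, "Site 6": 149,
}
mean_error_mm = {
    "Site 1": 13, "Site 2": 18, "Site 3": 13,
    "Site 4": 21, "Site 5": 19, "Site 6": 16,
}

# Throughput: input points processed per second of run time.
throughput = {site: points[site] / run_time_s[site] for site in points}

# Average of the per-site mean errors (unweighted).
avg_mean_error_mm = sum(mean_error_mm.values()) / len(mean_error_mm)

for site, value in throughput.items():
    print(f"{site}: {value:,.0f} points/s")
print(f"Average mean error: {avg_mean_error_mm:.1f} mm")
```

Site 1 works out to roughly 2.0 million points per second and Site 6 to about 28 thousand, so the run time grows far more slowly than the number of input points across these six sites.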


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cai, Y.; Fan, L. An Efficient Approach to Automatic Construction of 3D Watertight Geometry of Buildings Using Point Clouds. Remote Sens. 2021, 13, 1947. https://doi.org/10.3390/rs13101947
