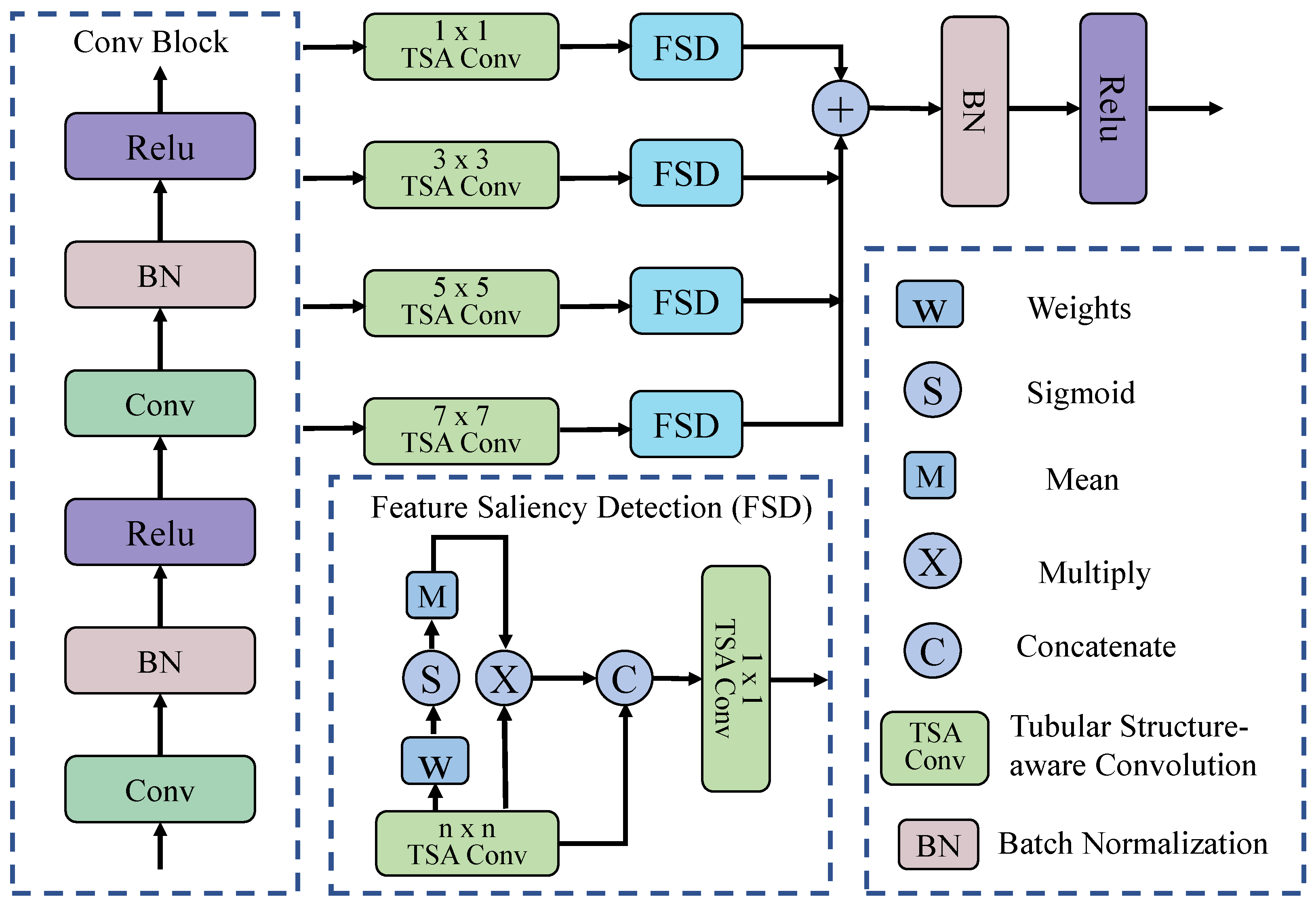

3.2. MSTS-Conv Block

Tubular Structure-Aware Convolution: As shown in Figure 2, given an input feature map

, the convolution proceeds through the following steps. We first establish a normalized coordinate grid

for convolutional kernels of size

, where each position defines centered spatial offsets relative to the kernel’s central pixel:

To parameterize orientation selectivity, each channel

learns an angular projection

via a differentiable transformation, which can be expressed as:

where

is a learnable parameter. Next, directional sensitivity is encoded through harmonic modulation to generate anisotropic filtering kernels, thereby enhancing alignment with vascular structures. This can be computed as follows:

These orientation-selective components are combined with learnable Gaussian bases

, initialized as:

where

controls the initial spatial bandwidth. The kernel synthesis process combines these elements through normalized Hadamard products, which can be formulated as:

where ⊙ denotes the element-wise (Hadamard) product between corresponding entries of the two matrices, and

to ensure numerical stability. A depthwise convolution is then applied using these orientation-specialized kernels. The computation is given as follows:

where

maintains spatial resolution, and for all

,

, and

. The complete operation is expressed as

, implementing parameter-efficient, channel-specific filtering while preserving structural homogeneity and adapting to local vascular geometry through differentiable orientation learning. Here,

is formulated as in Equation (7):

where ∗ denotes 2D convolution and ⨁ represents channel-wise concatenation. Finally, we define this complete operation as a Tubular Structure-Aware Convolution (TSA-Conv), denoted by the following operator:

This design maintains architectural simplicity while dynamically adapting to tubular anatomical structures such as retinal vessels and elongated lesions through differentiable harmonic filtering.
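
The kernel synthesis and depthwise filtering steps described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the cosine form of the harmonic modulation, the isotropic Gaussian base, the L2 normalization, and the names `tsa_kernels`, `depthwise_conv_same`, `thetas`, `freq`, and `sigma` are all assumptions made for the example, and the per-channel angles are passed in directly rather than learned.

```python
import numpy as np

def tsa_kernels(thetas, k=5, sigma=0.5, freq=1.0, eps=1e-6):
    """Build one k x k orientation-selective kernel per channel (assumed forms)."""
    # Normalized coordinate grid: offsets from the kernel center, in [-1, 1].
    ax = np.linspace(-1.0, 1.0, k)
    yy, xx = np.meshgrid(ax, ax, indexing="ij")
    kernels = []
    for theta in thetas:
        # Project every grid position onto the channel's preferred direction.
        proj = xx * np.cos(theta) + yy * np.sin(theta)
        # Harmonic modulation along that direction (assumed cosine form).
        harmonic = np.cos(np.pi * freq * proj)
        # Isotropic Gaussian base with spatial bandwidth sigma (assumed form).
        gauss = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
        # Hadamard product, normalized by its L2 norm plus eps for stability.
        raw = harmonic * gauss
        kernels.append(raw / (np.linalg.norm(raw) + eps))
    return np.stack(kernels)  # shape (C, k, k)

def depthwise_conv_same(x, kernels):
    """Filter channel c of x (C, H, W) with kernels[c], zero-padded so H and W are kept.

    Assumes an odd kernel size. Computes cross-correlation, as deep learning
    frameworks do for "convolution" layers.
    """
    _, h, w = x.shape
    k = kernels.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros_like(x, dtype=float)
    for i in range(k):
        for j in range(k):
            out += kernels[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out
```

Because each channel has its own kernel and no cross-channel mixing occurs, the number of filter parameters grows with the number of channels rather than with its square, which is the parameter efficiency the text refers to.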

Multi-Scale Fusion: To enhance tubular vessel features, we introduce a dual-stage multi-scale feature fusion module. The first stage uses two standard convolutional blocks to strengthen the feature representation, and the second uses a dedicated multi-scale fusion mechanism to adaptively refine tubular structures. Let

denote the input feature map. In the first phase, hierarchical features

are extracted through two sequential convolutions:

where

are convolutional kernel weights, BN denotes batch normalization, and dropout regularization is applied to mitigate overfitting.

In the second stage, multi-scale tubular structure-aware modules are incorporated to model vascular continuity and capture morphological features across scales, effectively suppressing false segmentations in non-tubular regions and enhancing the understanding of vascular topology. We perform the TSA-Conv operation on the input feature

using convolution kernels of size

,

,

, and

, respectively. Let this operation be denoted as

, where k represents the convolution kernel size. Multi-scale feature extraction is then performed as follows:

Each feature

generated by TSA-Conv is then fed into the Feature Saliency Detection (FSD) module for saliency-oriented feature modeling. Let the FSD module be the function

, which can be expressed as follows:

Finally, the outputs of the FSD modules across all scales are fused into a single feature map. Specifically, the saliency-enhanced features

are summed, followed by Batch Normalization (BN) and ReLU activation to produce the final output:

The FSD module integrates both channel-wise and spatial saliency information in a lightweight yet effective manner. Given an input feature map

, the module applies a learnable

convolution to generate a raw per-pixel importance map

, as shown in Equation (13):

This operation enables the model to learn a task-specific weighting for each spatial location in every channel. Next, the sigmoid function is applied element-wise to convert the raw scores

into probabilistic attention weights. It follows that:

Here,

represents the probability of importance for each spatial location in every channel. Then, a global attention coefficient for each channel is computed by averaging over the spatial dimensions:

Finally, channel-wise recalibration is performed by reweighting the input feature map using the derived attention coefficients:

where ⊗ denotes element-wise multiplication with broadcasting across spatial dimensions.
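
The channel recalibration path of the FSD module (score map, sigmoid, spatial averaging, reweighting) can be sketched as below. The kernel size of the learnable convolution is not recoverable from the text here, so the sketch assumes a pointwise (1×1) convolution; `fsd_channel_recalibration`, `weight`, and `bias` are hypothetical names, and the parameters are passed in rather than trained.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fsd_channel_recalibration(x, weight, bias):
    """x: (C, H, W) features; weight: (C, C) and bias: (C,) of an assumed 1x1 conv."""
    # Pointwise convolution: mix channels independently at each pixel
    # to get a raw per-pixel importance score for every channel.
    scores = np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]
    # Element-wise sigmoid maps raw scores to attention weights in (0, 1).
    attn = sigmoid(scores)
    # Averaging over the spatial dimensions gives one coefficient per channel.
    coeff = attn.mean(axis=(1, 2))
    # Reweight the input, broadcasting each coefficient over H and W.
    return x * coeff[:, None, None], coeff
```

With all-zero weights and bias, every score is 0, every attention weight is 0.5, and the output is simply half the input, which is a convenient sanity check for the broadcasting.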

To further refine spatial regions of interest, the channel-attended feature

is concatenated with the original feature map

, and TSA-Conv is applied to reduce the channel dimension to match that of the base feature map

. Consequently, we derive the following:

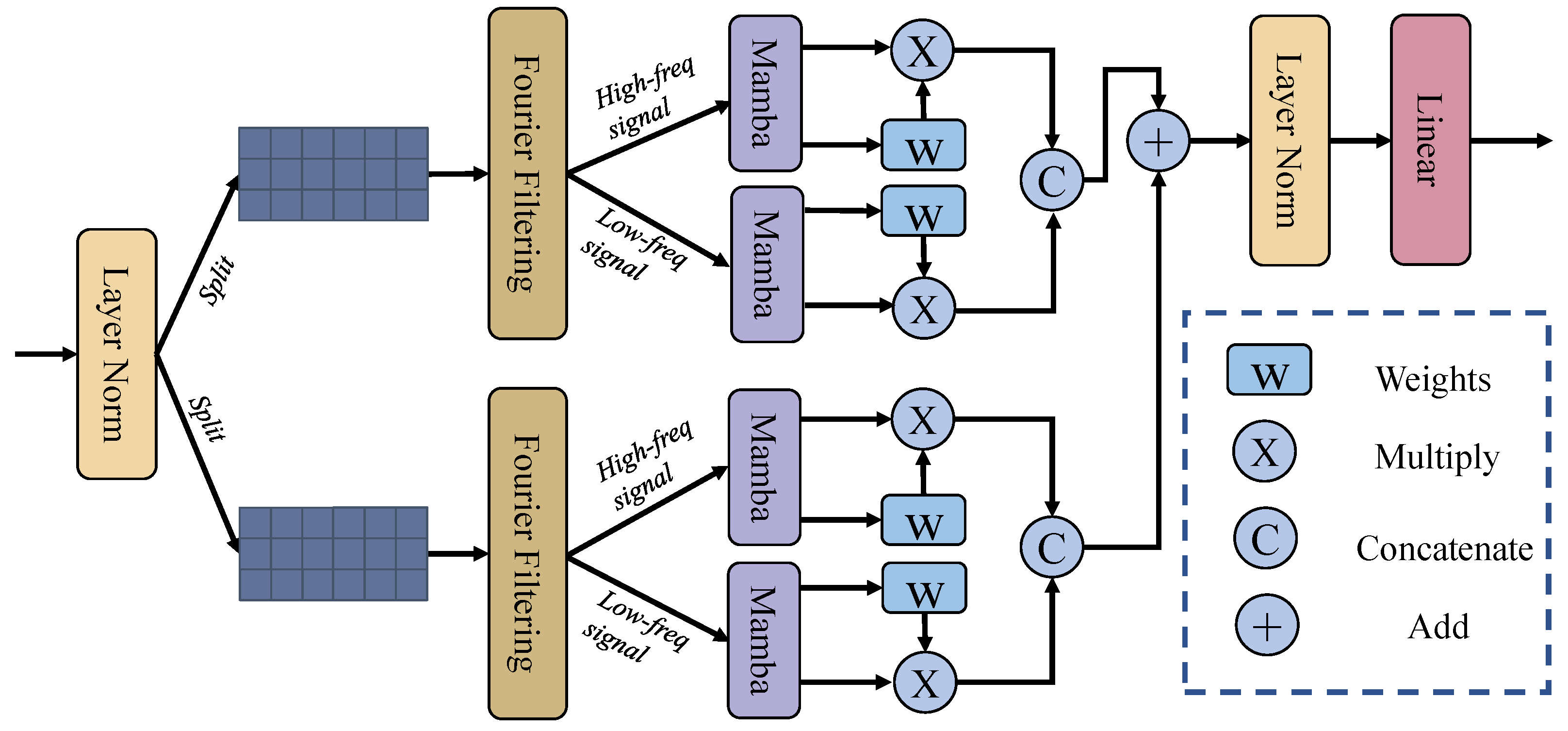

3.3. Multi-Branch Fourier-Mamba Block

As depicted in Figure 3, the Multi-Branch Fourier-Mamba Block primarily comprises two components: the Fourier transform and the Mamba layer. The core idea is to apply Fourier transforms to extract complementary high- and low-frequency components and then employ Mamba modules to learn frequency-aware global contextual representations. Take an input feature

from stage s, where

. First, the input is normalized, and the features are decoupled:

where LN denotes LayerNorm.

Then, frequency-domain filtering is performed independently on the two branches: each input undergoes a Fourier transform, frequency component decomposition, and an inverse transform. Specifically, the two input feature maps

and

are first transformed into the frequency domain using the Fourier transform:

Low-frequency and high-frequency components are then extracted using the operator

, with thresholds

and

, respectively. The corresponding components are subsequently transformed back to the spatial domain via the inverse Fourier transform:

where

denotes the high-frequency and low-frequency information extraction operations, with

and

as the corresponding thresholds for high-frequency and low-frequency filtering, respectively, and

and

representing the Fourier transform and its inverse. As a result, each input produces two spatial-domain outputs corresponding to its low- and high-frequency components.
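
The transform, filter, and inverse-transform sequence can be illustrated with NumPy's FFT routines. This is a sketch under stated assumptions: the text does not specify the shape of the frequency masks here, so the example uses radial masks on the normalized frequency magnitude, and `frequency_split`, `tau_low`, and `tau_high` are hypothetical names.

```python
import numpy as np

def frequency_split(x, tau_low, tau_high):
    """Split a real (H, W) map into low- and high-frequency spatial components.

    Thresholds are radii in cycles per pixel (an assumed convention).
    """
    h, w = x.shape
    # Forward 2D Fourier transform.
    spec = np.fft.fft2(x)
    # Frequency magnitude of every FFT bin.
    fy = np.fft.fftfreq(h)[:, None]
    fx = np.fft.fftfreq(w)[None, :]
    radius = np.sqrt(fx ** 2 + fy ** 2)
    # Keep bins at or below tau_low for the low band, above tau_high for the high band.
    low_mask = radius <= tau_low
    high_mask = radius > tau_high
    # Inverse transform back to the spatial domain; the masks are symmetric,
    # so the imaginary part is only rounding error and can be dropped.
    low = np.fft.ifft2(spec * low_mask).real
    high = np.fft.ifft2(spec * high_mask).real
    return low, high
```

When the two thresholds are equal, the masks are complementary and the two outputs sum back to the input; with different thresholds, the band between them is discarded.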

Next, for the frequency-decomposed feature maps

,

,

,

obtained in the previous step, representation features are first extracted through the Mamba operation:

Here, Mamba is used to capture the long-range dependencies in each feature map. The process can be simply expressed as:

where

,

and the parameters

and

are dynamically generated via a selective scanning mechanism. At the same time, for the input feature

, the weight tensor is first constructed through a learnable linear projection and then normalized to the unit interval using a sigmoid activation function to obtain the normalized weight

. This mechanism realizes adaptive feature importance calibration and interference signal suppression in the channel dimension by dynamically evaluating the significance distribution of multi-frequency features. Next, the previously obtained

is multiplied with its corresponding

to achieve feature-level attention weighting, strengthen important feature responses, and suppress noise. This process can be expressed by the following formula:

Here, ⊙ represents the Hadamard product. Next, the concatenation operation along the channel dimension retains the complementary information of multi-resolution features and forms a hierarchical feature representation as shown below:

Finally, the features from the two branches are fused, followed by layer normalization to reduce internal covariate shift and stabilize the training process:
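
The gating, concatenation, and fusion steps can be sketched as follows, with the Mamba outputs taken as given inputs rather than computed. Several details are assumptions made for illustration only: the branches are fused by addition, layer normalization is applied over the channel axis without learnable scale or shift, and the names `gate_and_fuse`, `w_gate1`, and `w_gate2` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def layer_norm(x, eps=1e-5):
    """Normalize each token over the channel (last) axis, without affine terms."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def gate_and_fuse(x1, x2, feats1, feats2, w_gate1, w_gate2):
    """x1, x2: (N, C) branch inputs over N tokens.

    feats1, feats2: (low, high) pairs of Mamba outputs, each (N, C).
    w_gate1, w_gate2: (C, C) weights of the linear gate projections.
    """
    # Linear projection followed by a sigmoid gives weights in (0, 1).
    g1 = sigmoid(x1 @ w_gate1)
    g2 = sigmoid(x2 @ w_gate2)
    # Hadamard product applies the gate to each frequency component,
    # then low and high components are concatenated along channels.
    b1 = np.concatenate([g1 * f for f in feats1], axis=-1)  # (N, 2C)
    b2 = np.concatenate([g2 * f for f in feats2], axis=-1)  # (N, 2C)
    # Fuse the two branches (assumed: element-wise sum) and normalize.
    return layer_norm(b1 + b2)
```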

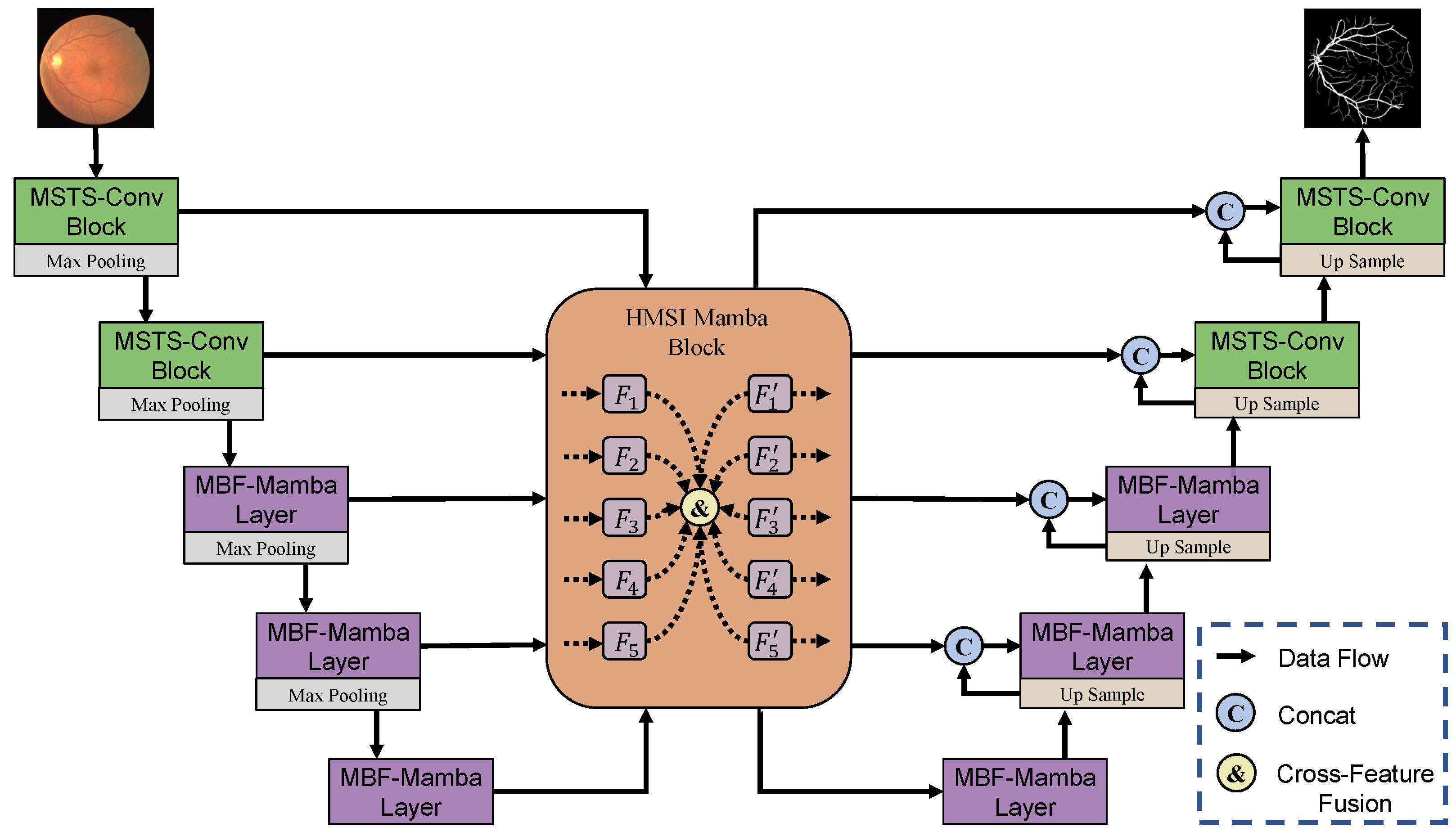

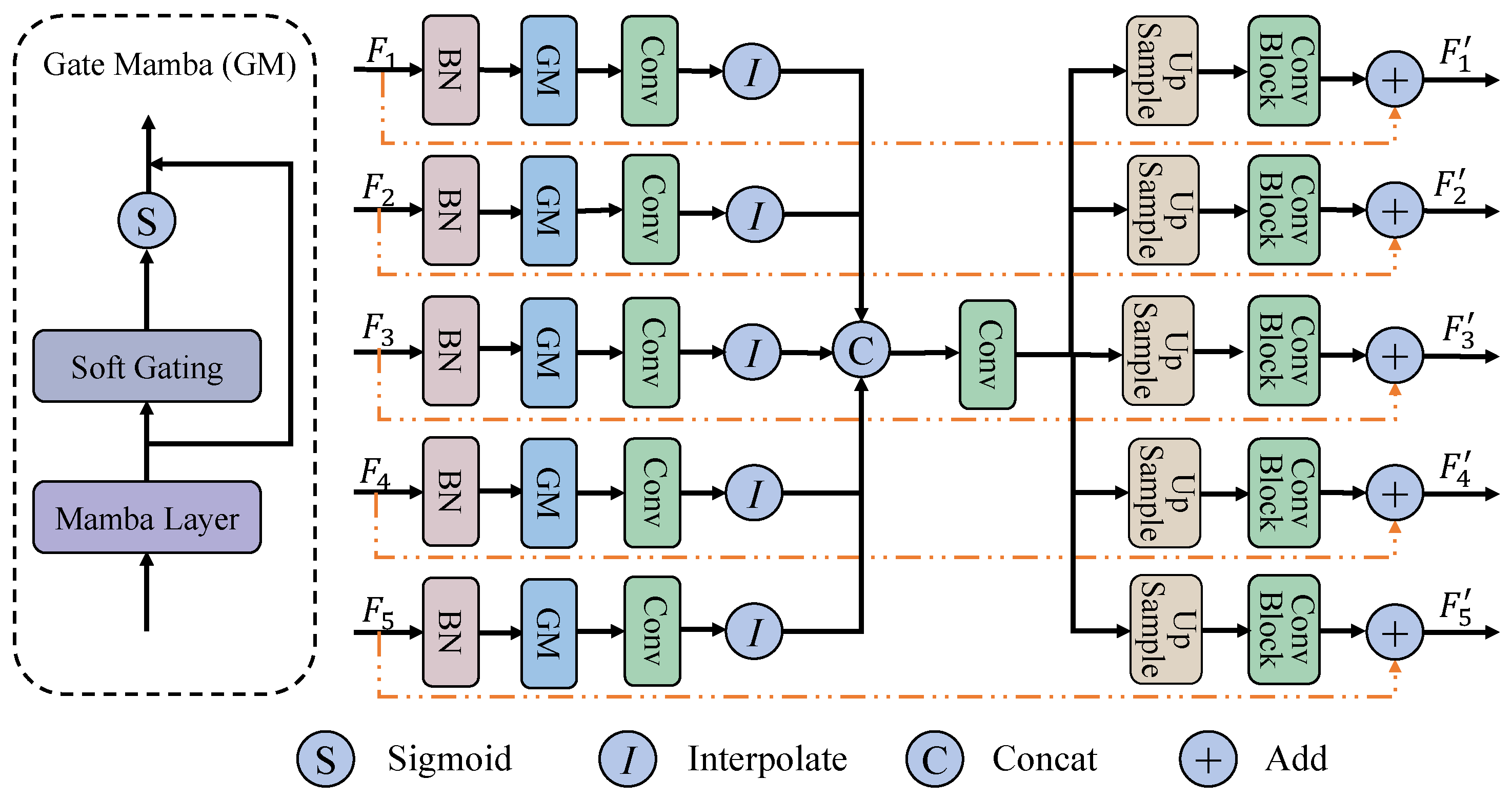

3.4. Hierarchical Multi-Scale Interactive Mamba Block

As shown in Figure 4, unlike the skip connections in traditional U-shaped networks, which directly concatenate the encoder and decoder features, we introduce the Hierarchical Multi-Scale Interactive Mamba Block (HMSI-Mamba Block) within the skip connections to more effectively exploit multi-scale information. Suppose the input consists of five-stage encoder features

, where

denotes the predefined number of channels. Initially, features from different encoder stages are subjected to batch normalization to mitigate inter-stage distribution discrepancies. Subsequently, a Gated Mamba (GM) module is employed to capture global contextual representations through the Mamba layer. A learnable channel-wise attention mechanism is then applied to adaptively modulate the response strength of each feature channel, thereby enhancing the overall discriminative capacity of the representations. The overall process can be formulated as

and can be further decomposed into the following steps:

where

denotes the input feature map,

represents the Sigmoid activation function, and

is a learnable parameter associated with the channel dimension.

Subsequently, the previously obtained features

are first processed through a

convolution to align their channel dimensions, producing

. These features are then upsampled to a unified spatial resolution of

, yielding

. Finally, all spatially aligned features

are concatenated along the channel dimension to construct the final fused representation, denoted as

. This process can be expressed as:

where I denotes the interpolation operation. Similarly, a

convolution is applied to the fused feature map to project it onto a predefined channel dimension, yielding the refined representation

. At this stage, the features extracted from different encoder levels are effectively integrated, resulting in a unified representation enriched with multi-scale semantic information. Subsequently,

is individually resampled to match the spatial resolution of each input feature map

. To further improve the representational capacity of the features, two standard convolutional blocks are employed to enhance the model’s ability to capture and integrate semantic information across multiple scales. This mapping is formally defined as:

where

denotes an upsampling or downsampling operation, and

denotes a standard convolution operation consisting of two Conv-BN-ReLU layers. Finally, to enhance the original features with enriched semantic cues, a residual connection is introduced between each

and its corresponding input feature

, formulated as follows:
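
The align, resample, concatenate, project, and redistribute pipeline can be sketched as below. This is a simplified illustration rather than the described block: the Gated Mamba step is omitted, nearest-neighbor resampling stands in for the interpolation operator, a single pointwise convolution per stage (`out_ws`) stands in for the two Conv-BN-ReLU layers, and all function and parameter names are hypothetical.

```python
import numpy as np

def conv1x1(x, weight):
    """Pointwise convolution: x is (C_in, H, W), weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)

def resize_nearest(x, size):
    """Nearest-neighbor resampling of x (C, H, W) to (C, size[0], size[1])."""
    _, h, w = x.shape
    rows = np.arange(size[0]) * h // size[0]
    cols = np.arange(size[1]) * w // size[1]
    return x[:, rows[:, None], cols[None, :]]

def hmsi_fuse(feats, align_ws, proj_w, out_ws, target_size):
    """feats: list of (C_i, H_i, W_i) maps; returns enhanced maps of the same shapes."""
    # Align channel counts, then bring every stage to one spatial resolution.
    aligned = [resize_nearest(conv1x1(f, w), target_size)
               for f, w in zip(feats, align_ws)]
    # Concatenate along channels and project to the predefined width.
    fused = conv1x1(np.concatenate(aligned, axis=0), proj_w)
    outputs = []
    for f, w_out in zip(feats, out_ws):
        # Resample the fused map to this stage's resolution, map it back to
        # the stage's channel count, and add it to the input as a residual.
        local = conv1x1(resize_nearest(fused, f.shape[1:]), w_out)
        outputs.append(f + local)
    return outputs
```

The residual form means each stage keeps its original features and only receives an additive multi-scale correction, so zero-valued output weights leave every input unchanged.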

3.5. Loss Function

To effectively train the proposed HM-Mamba model, we adopt the hybrid BceDice loss introduced in VM-UNet [37]. This composite loss combines binary cross-entropy (BCE) and Dice loss to optimize both pixel-level classification accuracy and region-level overlap. Concretely, given the predicted segmentation probability map

and the ground-truth mask Y, the loss is defined as:

where

is the binary cross-entropy loss:

and

is the soft Dice loss, formulated as follows:

From an information-theoretic perspective, the BCE component seeks to minimize the conditional entropy

, thereby reducing predictive uncertainty, while the Dice term implicitly maximizes the mutual information between

and Y, promoting strong region-level consistency and overlap. This joint formulation is especially beneficial in medical and natural image segmentation tasks where foreground–background imbalance and structural coherence are critical. Accordingly, we set

and

in our experiments, unless otherwise specified.
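
A weighted BCE plus soft Dice loss of the kind described above can be sketched in NumPy as follows. The formulas are the standard ones for these two terms; the smoothing constant, the clipping epsilon, and the default weights of 1.0 are placeholders chosen for the example and are not the values used in the experiments, and the function names are hypothetical.

```python
import numpy as np

def bce_loss(p, y, eps=1e-7):
    """Mean binary cross-entropy between probabilities p and binary mask y."""
    p = np.clip(p, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))

def dice_loss(p, y, smooth=1.0):
    """Soft Dice loss: one minus the smoothed Dice overlap."""
    inter = np.sum(p * y)
    return float(1.0 - (2.0 * inter + smooth) / (np.sum(p) + np.sum(y) + smooth))

def bce_dice_loss(p, y, lam_bce=1.0, lam_dice=1.0):
    """Weighted sum of the two terms; the weights here are placeholder defaults."""
    return lam_bce * bce_loss(p, y) + lam_dice * dice_loss(p, y)
```

The BCE term averages over pixels, so it is dominated by the majority class under heavy imbalance, whereas the Dice term depends only on the overlap with the foreground; combining them is what lets the loss respond to both.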