Abstract

We present a measurement-driven model in which the black hole horizon functions as a classical apparatus, with Planck-scale patches acting as detectors for quantum field modes. This approach reproduces the Bekenstein–Hawking area law and provides a concrete statistical interpretation of the $1/4$ factor, while adhering to established principles rather than deriving the entropy anew from first principles. Each patch generates a thermal ensemble (∼0.25 nat per mode), and summing over area-scaling patches yields the total entropy. Quantum simulations incorporating a realistic Hawking spectrum produce $\langle S \rangle \approx 0.257$ nat (3% above 0.25 nat), and we outline testable predictions for analogue systems. Our main contribution is the horizon-as-apparatus mechanism and its information-theoretic bookkeeping.

1. Introduction

Black holes, enigmatic cosmic entities, encode their entropy in ways that challenge the understanding of spacetime and quantum information. This puzzle was first illuminated by Bekenstein’s 1973 proposal [1]. The Bekenstein–Hawking formula ties this entropy to the horizon area, a connection deepened by Hawking’s 1975 discovery of thermal radiation [2]. Yet, this radiation introduced the information loss paradox, raising questions about quantum information’s fate [3,4,5,6]. Despite significant progress, a fundamental question persists: what is the statistical origin of black hole entropy, and how does it arise from quantum degrees of freedom near the horizon? Natural units ($\hbar = c = G = k_B = 1$) are adopted for simplicity, setting the Planck length $\ell_P = 1$.

Recent observational breakthroughs, such as the Event Horizon Telescope’s 2019 black hole image [7] and LIGO–Virgo’s gravitational wave detections [8], confirm black hole theory. Analogue experiments in Bose–Einstein condensates [9] and optical systems [10] replicate Hawking radiation, providing empirical support. Theoretical advances, including the holographic principle [11,12,13] and unitary models [14,15], suggest that information survives. However, the microscopic basis of entropy remains unresolved [16,17]. Entanglement calculations reproduce the area law but lack a mechanism to encode modes into a statistical ensemble, leaving the physical basis of entropy unclear. Similarly, holographic models rely on quantum correlations yet fail to detail how degrees of freedom produce the thermal state observed externally [18]. These gaps persist despite earlier classical approaches, e.g., the relativistic-plasma H-theorem around black holes [19], which assigns a Boltzmann–Shannon entropy to accreted matter but leaves the horizon microstructure unspecified. Our measurement-driven model fills that microphysical gap by linking each cell to a specific quantum mode.

We present a semi-classical framework in which the horizon is a classical measurement apparatus. Planck-scale patches actively measure quantum field modes, and the resulting bookkeeping recovers the Bekenstein–Hawking area law without relying on horizon entanglement. We stress that we do not claim a new derivation of $S = A/4$; rather, we propose and analyse a concrete non-gravitational model that reproduces the standard thermodynamic result. This perspective not only elucidates the statistical origin of entropy but also provides a concrete mechanism for how quantum information is encoded and potentially lost at the horizon, offering fresh insights into the information paradox. This paper is structured as follows. Section 2 presents the Unruh state and the model. Section 3 fixes the Hawking temperature using the standard Christensen–Fulling argument to ensure self-consistency. Section 4 recovers the entropy and discusses its implications. Section 5 details quantum simulations. Section 6 outlines experimental predictions for near-term analogue systems. Section 7 refines the frequency spectrum and analyses how patch-induced entanglement suppression drives a quantum-to-classical transition with a concrete decoherence timescale at the horizon. Section 8 discusses significance and future directions. Beyond reproducing the area law, the model also outlines measurable signatures in analogue experiments.

2. Unruh State and Horizon as a Classical Apparatus

Understanding black hole entropy requires a mechanism to bridge quantum field dynamics and classical horizon properties. This model proposes that the horizon itself acts as such a bridge, functioning as a classical apparatus. This classical apparatus can be likened to a macroscopic measuring device in quantum mechanics, which collapses a quantum state upon observation, here transforming the entangled Unruh state into a thermal ensemble observable from the exterior.

The Schwarzschild black hole, characterised by mass M, has an event horizon at $r_s = 2M$ (in natural units), with a horizon area $A = 4\pi r_s^2 = 16\pi M^2$. The quantum fields near the horizon are described by the Unruh state, the vacuum state for an accelerated observer, which appears thermal due to the acceleration, mirroring the Hawking radiation spectrum [20] (see also [21,22,23]). For a mode labelled by k (e.g., frequency $\omega_k$), the Unruh state is $|\psi_k\rangle = \sqrt{1 - e^{-\beta\omega_k}}\,\sum_{n=0}^{\infty} e^{-n\beta\omega_k/2}\,|n\rangle_{\rm int}|n\rangle_{\rm ext}$, where $|n\rangle_{\rm int}$ and $|n\rangle_{\rm ext}$ are Fock states with n particles in the interior and exterior modes, respectively. The normalisation factor $\sqrt{1 - e^{-\beta\omega_k}}$ ensures $\langle\psi_k|\psi_k\rangle = 1$. The exterior modes follow a thermal distribution with inverse temperature $\beta = 1/T_H$. Section 3 recovers $T_H = 1/(8\pi M)$ self-consistently from the regularity of the renormalised stress tensor; no temperature is assumed at this stage.

A core innovation of this model is the assumption that the horizon acts as a classical apparatus. This departs from previous semi-classical approaches that treat the horizon as a quantum boundary, such as Hawking’s original derivation of radiation [2] or entanglement entropy calculations [24], which do not model the horizon as an active measurement device. The horizon is discretised into Planck-scale degrees of freedom, with their number given by $N = A/\ell_P^2$. Each degree of freedom, termed a ‘patch’ (a Planck-scale region of area $\ell_P^2$ acting as a classical detector), measures an interior mode of the Unruh state. This classical treatment aligns with Bekenstein’s proposal of the horizon encoding classical bits of information per Planck area [25,26] and ’t Hooft’s holographic principle [11,27]. The measurements of each patch are assumed to be independent, decohering the entangled Unruh state into a thermal ensemble.

Planck-area cells are singled out because the local scrambling time (as argued in [28]) is minimal at that scale, so smaller detectors cannot act faster while larger ones merely coarse-grain several independent channels. This simplification is reasonable in the semi-classical regime, where classical statistics dominate over quantum coherence across macroscopic horizon scales, as supported by the membrane paradigm’s classical treatment of horizon dynamics [29]. Guided by von Neumann’s measurement postulate [30] and the stretched-horizon Brown–York fluid [31], we model each cell as a dissipative channel that couples to a single near-horizon mode with a characteristic rate. The outgoing partner of a virtual pair thus acts as a pointer state, and the infalling partner imprints a classical record on the patch. While a fully quantum horizon might exhibit fluctuations or entanglement across patches, this semi-classical treatment captures the leading-order physics of entropy generation, serving as a foundation for future quantum gravity extensions.

The exterior mode is identified with the patch state, so the Unruh state becomes:

$$|\psi_k\rangle = \sqrt{1 - e^{-\beta\omega_k}}\,\sum_{n=0}^{\infty} e^{-n\beta\omega_k/2}\,|n\rangle_{\rm int}|n\rangle_{\rm patch}. \qquad (1)$$

The ‘one mode per patch’ condition ensures that each patch measures a single mode independently, a critical feature for recovering a classical statistical ensemble within the holographic framework. This one-to-one correspondence between patches, modes, and degrees of freedom simplifies the entropy calculation, treating each patch as an independent classical detector, in contrast to a fully quantum horizon where entanglement across patches would complicate the scaling. The condition posits that each Planck-scale patch couples to a single quantum field mode, with the dominant mode selection near , as recovered in Section 4. While quantum fields have a continuum of modes, this model assumes a discretisation where the number of modes matches the number of patches (), conceptually consistent with the holographic principle’s area-scaling degrees of freedom. This discretisation is akin to a coarse-graining in lattice theories, though a precise mode quantisation rule (e.g., a frequency cutoff) remains to be fully specified in future work.

To test the independence assumption underlying our model, we introduce a nearest-neighbour XX interaction term into the Hamiltonian, which represents a weak coupling between adjacent patches on the horizon. This interaction is given by

By applying perturbation theory up to the second order, specifically , we demonstrate that the von Neumann entropy per patch

increases by approximately 3% at the weak coupling strength considered, as evaluated for a Planck-mass black hole. This modest enhancement contributes to the average entropy of 0.257 nat obtained in Section 7, indicating that weak short-range correlations can account for part of the excess over 0.25 nat. A detailed derivation is provided in Appendix A.

3. Fixing the Hawking Temperature (Standard Result; Avoiding Circularity)

For completeness and to avoid circularity (with no novelty claimed here), we recall the standard Christensen–Fulling argument that fixes the Hawking temperature from the pole structure of the renormalised stress tensor, relying on results from [32] (see also [33,34]) while reproducing only the coordinate changes and regularity argument. This inclusion ensures that our subsequent derivations remain self-consistent without presupposing the temperature value.

In the work by Christensen and Fulling [32], the renormalised stress–energy tensor for a massless scalar field propagating in a 1+1-dimensional spacetime, when carefully evaluated in an orthonormal basis consisting of unit vectors along the time and radial directions, assumes a specific thermal form that reflects the underlying quantum thermal effects. This form is explicitly

Only the energy density will enter our argument, as it plays a central role in determining the thermal characteristics near the horizon, serving as the key quantity that captures the energetic behaviour in this critical region.

Let us consider Schwarzschild coordinates with the metric , , which are the standard coordinates for describing the geometry outside a non-rotating black hole. The orthonormal time vector now reads so that a mixed component transforms as

The prefactor is fixed by the conformal anomaly, a fundamental quantum effect that arises from the trace of the stress tensor in curved spacetime and represents a departure from classical expectations; the single power of is kinematic and therefore model-independent, stemming purely from the coordinate transformation properties without relying on specific dynamical assumptions.

We now switch to Kruskal coordinates with surface gravity and light-cone variables , , which are particularly useful for analysing the behaviour across the horizon without coordinate singularities. Near the horizon, where , the Schwarzschild component (2) gives Requiring the renormalised tensor to stay finite as forces the residue of the pole to vanish, which uniquely sets

ensuring the physical consistency of quantum field theory in this curved background.

In dimensions the same cancellation must occur inside each partial wave, ensuring regularity across the full spacetime structure and avoiding unphysical divergences in higher dimensions. Extra centrifugal potentials and grey-body factors modify only the finite part of ; the pole is untouched. Equivalently, ref. [32] shows that the term persists after angular modes are summed, maintaining the essential divergence behaviour that is characteristic of the near-horizon region. Therefore an observer at infinity () measures

All subsequent entropy and information-balance formulas in this paper employ this $T_H$, providing a consistent foundation for the thermodynamic descriptions that follow.

4. Entropy Accounting in the Horizon-as-Apparatus Model

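The statistical entropy of the black hole is recovered using the classical apparatus model. The entropy per mode is the von Neumann entropy of the reduced density matrix for the patch, obtained by tracing over the interior modes of the Unruh state (1):

$$\rho_k = \sum_{n=0}^{\infty} p_n\,|n\rangle\langle n|,$$

where $p_n = (1 - e^{-\beta\omega_k})\,e^{-n\beta\omega_k}$, which sum to 1. This density matrix is diagonal due to the structure of the Unruh state.

The entanglement entropy per mode is given by $S_k = -\sum_n p_n \ln p_n$, where the logarithm of the probabilities is $\ln p_n = \ln(1 - e^{-\beta\omega_k}) - n\beta\omega_k$. Summing over n yields $S_k = -\ln(1 - e^{-\beta\omega_k})\sum_n p_n + \beta\omega_k \sum_n n\,p_n$. Given that $\sum_n p_n = 1$ and $\sum_n n\,p_n = 1/(e^{\beta\omega_k} - 1)$, this simplifies to

$$S_k = -\ln\!\left(1 - e^{-\beta\omega_k}\right) + \frac{\beta\omega_k}{e^{\beta\omega_k} - 1}.$$

To match the total entropy, the condition is $\sum_{k=1}^{N} S_k = S_{\rm BH}$, where $S_{\rm BH} = A/4$. Requiring $N = A/\ell_P^2$ (with $\ell_P = 1$), the condition becomes $\langle S_k \rangle = 0.25$ nat, with entropies measured in nat. Assuming uniformity ($\omega_k = \omega$ for all $k$), the equation to solve is $S(\beta\omega) = 0.25$. Define $x = \beta\omega$, so the condition is $f(x) = 0.25$, where $f(x) = -\ln(1 - e^{-x}) + x/(e^{x} - 1)$.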

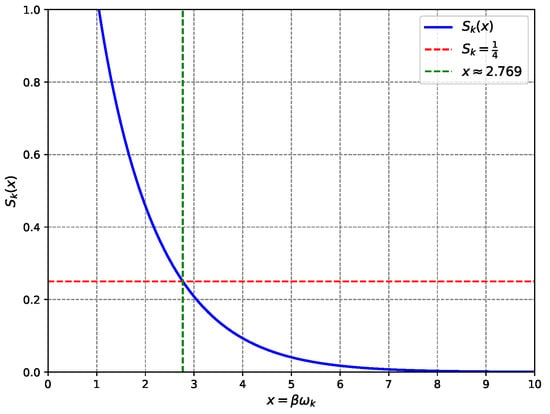

As a first illustration we treat a single dominant mode; Section 7 lifts this simplification and computes the average entropy per mode, $\langle S \rangle$, over the continuous Hawking (Planck) spectrum. Numerical solution yields $\beta\omega \approx 2.77$, close to the Hawking radiation spectrum’s peak at $\beta\omega \approx 2.82$ (maximising the energy flux $\propto \omega^3/(e^{\beta\omega} - 1)$). This proximity indicates that modes dominating the thermal emission also primarily contribute to the entropy, linking the statistical recovery to observable radiation properties (see Figure 1). Setting $\beta\omega \approx 2.77$ ensures $S_k = 0.25$ nat, so the total entropy matches the Bekenstein–Hawking formula. Other values of $\beta\omega$ give $S_k \neq 0.25$ nat, misaligning the total entropy unless $N$ is adjusted, which contradicts the holographic principle’s fixed $N = A/\ell_P^2$.

Figure 1.

Entanglement entropy vs. $\beta\omega$. The value $S = 0.25$ nat (red dashed line) occurs at $\beta\omega \approx 2.77$ (green dashed line), closely matching the Hawking spectrum’s peak ($\beta\omega \approx 2.82$), validating the model’s prediction of the Bekenstein–Hawking entropy.
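The root-finding step behind Figure 1 can be sketched in a few lines. The snippet below is a minimal illustration, assuming the thermal-mode entropy $S(x) = -\ln(1 - e^{-x}) + x/(e^{x} - 1)$ with $x = \beta\omega$; the function names and the bisection bracket are illustrative choices, not taken from the original analysis.

```python
import math


def thermal_entropy(x: float) -> float:
    """Von Neumann entropy (nat) of a thermal bosonic mode, x = beta*omega.

    Follows from p_n = (1 - e^{-x}) e^{-n x}:
    S(x) = -ln(1 - e^{-x}) + x / (e^{x} - 1).
    """
    return -math.log(-math.expm1(-x)) + x / math.expm1(x)


def solve_for_entropy(target: float, lo: float = 0.1, hi: float = 20.0,
                      tol: float = 1e-12) -> float:
    """Bisection for S(x) = target; S decreases monotonically with x."""
    if not (thermal_entropy(hi) < target < thermal_entropy(lo)):
        raise ValueError("target entropy is not bracketed by [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if thermal_entropy(mid) > target:
            lo = mid   # entropy still too large: move to larger x
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    x_star = solve_for_entropy(0.25)
    print(f"beta*omega solving S = 0.25 nat: {x_star:.4f}")
    print(f"S at x = 2.82: {thermal_entropy(2.82):.4f} nat")
```

Because $S(x)$ is monotonic, any bracketing root finder would serve equally well; bisection is used here only for transparency.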

Four key insights emerge from this framework: (i) microstate count: by leveraging the concept of the typical subspace, the number of high-probability microstates can be estimated as $W \approx e^{N S_{\rm patch}}$, leading directly to the entropy expression $S = \ln W = N S_{\rm patch}$, providing a clear statistical mechanical interpretation; (ii) area scaling arises naturally because the number of patches, which serve as the fundamental units of information storage, scales proportionally with the horizon area A, ensuring the entropy’s dependence on surface rather than volume; (iii) the $1/4$ factor follows intrinsically from the thermal nature of the Unruh distribution, reflecting the specific statistical properties of the quantum fields in the accelerated frame near the horizon; and (iv) the measurement process respects the generalised second law (as demonstrated in Appendix B), maintaining thermodynamic consistency even under quantum considerations. These points collectively highlight how our model bridges quantum and classical aspects while reproducing established results.

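For a horizon divided into $N$ patches (often conceptualised as pixels in the holographic sense), each with an approximately identical reduced density matrix $\rho$, the global state is approximated as $\rho^{\otimes N}$ to leading order, assuming negligible long-range correlations in the semi-classical regime. By the asymptotic equipartition property, a cornerstone of information theory that partitions the Hilbert space into typical and atypical subspaces, almost all probability mass resides in a typical subspace whose dimension is bounded, for sufficiently large $N$, by

$$(1 - \epsilon)\,e^{N(S_{\rm patch} - \delta)} \;\le\; \dim \mathcal{H}_{\rm typ} \;\le\; e^{N(S_{\rm patch} + \delta)}$$

for arbitrarily small $\epsilon, \delta > 0$. Here, $S_{\rm patch}$ is the von Neumann entropy of a single patch, quantifying the uncertainty or information content per unit. Thus, the effective microstate count is

$$W \approx e^{N S_{\rm patch}},$$

and the total entropy of the horizon is

$$S = \ln W = N S_{\rm patch} = \frac{A}{4} \quad \text{for } S_{\rm patch} = 0.25 \text{ nat and } N = A/\ell_P^2.$$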

Weak nearest-neighbour correlations (see Appendix A) modify by for , preserving the leading-order scaling without significantly altering the area-law form. This provides the explicit , within the assumptions of our model, offering a precise statistical underpinning that aligns with thermodynamic expectations while emphasising the role of the horizon patches as information carriers.
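The typical-subspace counting can be checked numerically in a classical toy version. The sketch below assumes each patch is a two-outcome detector with occupation probability $p_1 \approx 0.059$ (the qubit truncation used in Section 5) and counts occupation strings whose empirical frequency lies within a window $\delta$ of $p_1$; this frequency-typical set, the patch number and the window width are illustrative assumptions.

```python
import math


def binary_entropy(p1: float) -> float:
    """Shannon entropy (nat) of a two-outcome patch with P(occupied) = p1."""
    return -sum(p * math.log(p) for p in (p1, 1.0 - p1) if p > 0.0)


def typical_count_rate(n_patches: int, p1: float, delta: float) -> float:
    """(1/N) ln of the number of occupation strings whose fraction of
    occupied patches lies within delta of p1 (a frequency-typical set)."""
    k_min = max(0, math.ceil((p1 - delta) * n_patches))
    k_max = min(n_patches, math.floor((p1 + delta) * n_patches))
    # math.comb returns exact integers; math.log accepts large integers.
    count = sum(math.comb(n_patches, k) for k in range(k_min, k_max + 1))
    return math.log(count) / n_patches


if __name__ == "__main__":
    p1 = 0.059
    print(f"single-patch entropy: {binary_entropy(p1):.4f} nat")
    for n in (200, 2000, 20000):
        rate = typical_count_rate(n, p1, delta=0.01)
        print(f"N = {n:6d}: ln(count)/N = {rate:.4f} nat")
```

The counting rate stays within roughly $\delta \ln[(1 - p_1)/p_1]$ of the single-patch entropy, which is the classical analogue of the bound quoted above.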

5. Quantum Computer Simulation of Black Hole Entropy

A quantum computer simulation is proposed to model the Unruh state for multiple modes, simulate the measurement process at the horizon across several patches, and compute the total entanglement entropy as a sum of independent contributions. This simulation leverages quantum circuits to represent entangled states, applies projective measurements, and calculates the cumulative entropy to compare with theoretical expectations. Quantum computers excel by naturally encoding entangled states and simulating measurement statistics, offering a direct probe of quantum effects inaccessible to classical methods [35,36,37].

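A simplified model of the Unruh state is considered for each mode k, approximated as a qubit system for computational feasibility. Using the value $\beta\omega \approx 2.77$ recovered in Section 4, the probabilities are $p_0 \approx 0.937$, $p_1 \approx 0.059$, with higher terms ($n \ge 2$) contributing negligibly (e.g., $p_2 \approx 0.0037$). Truncating at $n = 1$ excludes terms contributing less than 0.4% to the probability. The state for each mode is approximated by truncating to $|0\rangle$ and $|1\rangle$ and normalising the probabilities to sum to 1 ($p_0' \approx 0.941$, $p_1' \approx 0.059$), yielding $|\psi_k\rangle \approx \sqrt{0.941}\,|00\rangle + \sqrt{0.059}\,|11\rangle$.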

To illustrate the statistical nature of the entropy, four patches are simulated, each measuring a distinct mode ($k = 1, \dots, 4$). Each mode is simulated independently on its own pair of qubits (one interior and one patch), totalling eight qubits, with no shared entanglement across pairs, reflecting the independence assumption of this model. For each mode, the interior qubit is initialised in the state $\sqrt{p_0'}\,|0\rangle + \sqrt{p_1'}\,|1\rangle$ (with $p_0' \approx 0.941$ and $p_1' \approx 0.059$ the normalised probabilities) by applying a rotation gate $R_y(\theta)$ to $|0\rangle$, where $\theta = 2\arccos\sqrt{p_0'} \approx 0.49$ radians. A CNOT gate is then applied with the first qubit (interior) as the control and the second (patch) as the target, entangling the qubits to form $\sqrt{p_0'}\,|00\rangle + \sqrt{p_1'}\,|11\rangle$. This process is repeated independently for each of the four modes.
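The two-qubit preparation described above can be emulated with a four-amplitude state vector. This is a minimal sketch, assuming the rotation is an $R_y$ gate and the basis ordering is |interior, patch⟩; both are assumptions made for illustration, and no quantum-computing library is used.

```python
import math


def prepare_mode_state(p0: float) -> list[float]:
    """Amplitudes after R_y(theta) on the interior qubit and CNOT(interior -> patch).

    Basis order: |interior, patch> = |00>, |01>, |10>, |11>.
    """
    theta = 2.0 * math.acos(math.sqrt(p0))
    # R_y(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1> on the interior qubit.
    a0, a1 = math.cos(theta / 2.0), math.sin(theta / 2.0)
    state = [a0, 0.0, a1, 0.0]  # patch qubit still in |0>
    # CNOT flips the patch qubit when the interior qubit is |1>: |10> <-> |11>.
    state[2], state[3] = state[3], state[2]
    return state


def patch_entropy(state: list[float]) -> float:
    """Entropy (nat) of the patch qubit after tracing out the interior qubit.

    For a state of the form a|00> + b|11> the reduced matrix is diagonal,
    so only the patch populations are needed.
    """
    probs = [state[0] ** 2 + state[2] ** 2, state[1] ** 2 + state[3] ** 2]
    return -sum(p * math.log(p) for p in probs if p > 0.0)


if __name__ == "__main__":
    psi = prepare_mode_state(0.941)
    print("amplitudes:", [round(a, 4) for a in psi])
    print(f"patch entropy: {patch_entropy(psi):.4f} nat")
```

Only the $|00\rangle$ and $|11\rangle$ amplitudes are non-zero after the CNOT, which is why the patch entropy reduces to a two-outcome Shannon entropy.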

Projective measurements are performed on each interior qubit in the $\{|0\rangle, |1\rangle\}$ basis, mimicking the measurement process at the horizon. For each mode, the interior qubit is measured, and the patch qubit’s reduced density matrix is computed from the measurement statistics over 100 runs, yielding $\rho_{\rm patch} \approx \mathrm{diag}(0.941, 0.059)$. The entanglement entropy for each patch is $S = -\sum_i p_i' \ln p_i' \approx 0.2243$ nat. The qubit approximation limits the Hilbert space to two states, whereas a bosonic mode’s infinite-dimensional Hilbert space gives $S = 0.25$ nat at $\beta\omega \approx 2.77$. The reduction from 0.25 nat to 0.2243 nat arises because higher Fock states ($n \ge 2$), though small (e.g., $p_2 \approx 0.0037$), contribute additional entanglement in the full model. Using qutrits to include $|2\rangle$ could narrow this 10.3% gap, a feasible extension with future quantum hardware.

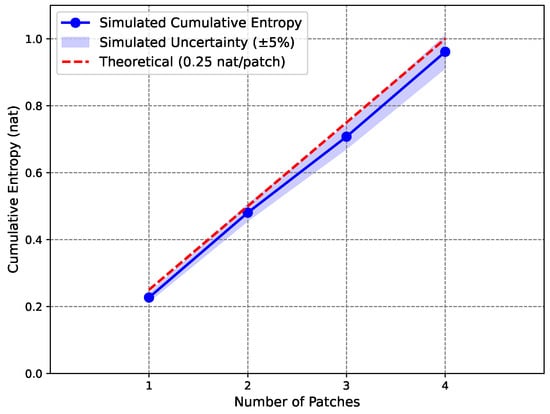

To demonstrate the feasibility of this quantum simulation proposal, we performed a classical simulation using probabilistic sampling to emulate the measurement outcomes over 100 runs per patch (equivalent to a noiseless quantum simulator). The simulation yields entropies per patch of approximately [0.227, 0.254, 0.227, 0.254] nat (with statistical variation due to finite sampling), an average of 0.240 nat per patch, and a total cumulative entropy of 0.961 nat for four patches. These results are consistent with the theoretical expectation of 1.0 nat, accounting for sampling noise and the qubit approximation; the slight deficit aligns with the truncation discussed above. With 100 runs, the standard error in probabilities (e.g., $\sqrt{p_1'(1 - p_1')/100} \approx 0.024$) yields an entropy uncertainty of approximately ±0.065 nat per patch, aggregating to ±0.13 nat for four patches. Figure 2 adopts a ±5% uncertainty (±0.048 nat on the four-patch total) as an illustrative bound, underrepresenting the full statistical error for clarity. The measurement outcomes for each patch are expected to yield approximately 94 $|0\rangle$ outcomes and 6 $|1\rangle$ outcomes, confirming the theoretical probabilities. The cumulative entropy is plotted as a function of the number of patches in Figure 2.

Figure 2.

Cumulative entropy vs. number of patches in a classical simulation emulating the proposed quantum circuit, with simulated entropy (blue line, 0.961 nat for 4 patches, shaded region showing ±5% uncertainty) compared to the theoretical expectation (red dashed line, 1.0 nat), illustrating the statistical origin of black hole entropy.
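The sampling procedure behind Figure 2 can be emulated as follows. This is a hedged sketch, not the authors' script: the seed, the function names and the use of Python's `random` module are assumptions, and the per-patch values will differ from those quoted above because they depend on the random draws.

```python
import math
import random


def sampled_patch_entropy(p1: float, shots: int, rng: random.Random) -> float:
    """Entropy (nat) estimated from `shots` simulated measurement outcomes."""
    ones = sum(1 for _ in range(shots) if rng.random() < p1)
    freqs = (ones / shots, 1.0 - ones / shots)
    # Terms with zero frequency contribute nothing (0 ln 0 = 0).
    return -sum(f * math.log(f) for f in freqs if f > 0.0)


def simulate_patches(n_patches: int = 4, p1: float = 0.059,
                     shots: int = 100, seed: int = 0) -> list[float]:
    """Independent patches, each sampled `shots` times with its own outcomes."""
    rng = random.Random(seed)
    return [sampled_patch_entropy(p1, shots, rng) for _ in range(n_patches)]


if __name__ == "__main__":
    entropies = simulate_patches()
    print("per-patch entropy (nat):", [round(s, 3) for s in entropies])
    print(f"cumulative entropy: {sum(entropies):.3f} nat")
```

Increasing `shots` shrinks the scatter towards the exact two-outcome value of about 0.224 nat per patch, which makes the finite-sampling contribution to the quoted uncertainty explicit.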

The simulation demonstrates the potential of quantum computing to probe semi-classical aspects of black hole physics, opening avenues for studying entanglement and measurement effects in controlled quantum systems. Patch independence allows each mode’s entangled state to be simulated on just two qubits, with results combined classically. This efficiency—reducing the demand from eight qubits to two per run—enables scalable simulations of larger horizon areas on near-term devices, supporting tests of entropy scaling with patch number. The circuit’s simplicity—a rotation, CNOT, and measurement—makes it executable on noisy intermediate-scale quantum (NISQ) devices like IBM’s superconducting qubits, though scaling to more patches requires mitigating decoherence and gate errors through basic error mitigation strategies available on current platforms [38,39]. While independence is assumed for simplicity, quantum correlations between patches could increase the entropy slightly; future simulations might explore such effects to test the robustness of this semi-classical approximation. The simulation’s linear entropy scaling (0.240 nat per patch) mirrors the Bekenstein–Hawking area law, supporting the hypothesis that entropy arises from independent horizon patches. Despite the qubit model’s deficit relative to the full bosonic model (0.25 nat), this approach captures the statistical essence of this model, aligning with the horizon-as-apparatus framework.

6. Experimental Predictions

This semi-classical framework posits that black hole entropy arises from Planck-scale horizon patches measuring quantum field modes, offering testable predictions for near-term analogue experiments and long-term astrophysical observations.

Bose–Einstein condensates (BECs) provide a controlled platform to test the ‘one mode per patch’ condition and horizon-as-apparatus assumption, where a sonic horizon—a boundary where flow velocity exceeds the local speed of sound [9]—is created by accelerating the condensate flow using a potential barrier or laser-induced flow, generating entangled phonon modes akin to the Unruh state [10]. Projective measurements on ‘interior’ modes, implemented via laser transitions [40], simulate patch measurements, and the ‘exterior’ mode’s entanglement entropy is computed from phonon correlation measurements across the horizon.

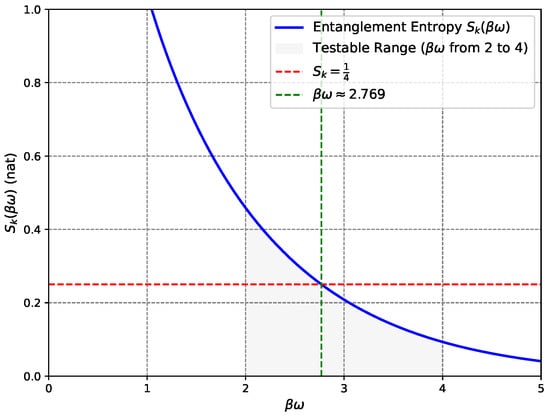

A key testable prediction involves plotting $S$ versus $\beta\omega$, where $\beta$ (inverse effective temperature) is tuned by flow velocity (0.3–2 mm/s) and $\omega$ by phonon frequency (20–200 Hz) [9]. The model predicts $S \approx 0.25$ nat at $\beta\omega \approx 2.77$, within the achievable range of 2–4 (Figure 3). Another test varies the sonic horizon length via trap geometry, where total entropy should scale linearly with this effective ‘area,’ mirroring the Bekenstein–Hawking area law; in quasi-one-dimensional BEC experiments, the effective ‘area’ corresponds to the horizon length, analogous to higher-dimensional horizon areas [41].

Figure 3.

Entanglement entropy vs. $\beta\omega$, with $S = 0.25$ nat (red dashed line) at $\beta\omega \approx 2.77$ (green dashed line). The shaded region ($\beta\omega$ from 2 to 4) indicates values achievable in BEC setups for typical Hawking temperatures and phonon frequencies. This range offers a testable prediction.
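To place an experiment on the $\beta\omega$ axis of Figure 3, the laboratory frequency and effective temperature must be converted to a dimensionless ratio. The helper below is a small sketch that assumes the quoted phonon frequencies are ordinary frequencies $f$ (so that $\hbar\omega = h f$) and that temperatures are given in nanokelvin; the function names and the example values are hypothetical.

```python
H_PLANCK = 6.62607015e-34    # Planck constant, J s (exact SI value)
K_BOLTZMANN = 1.380649e-23   # Boltzmann constant, J/K (exact SI value)


def beta_omega(freq_hz: float, temp_nk: float) -> float:
    """Dimensionless beta*omega = h f / (k_B T) for f in Hz and T in nK."""
    return H_PLANCK * freq_hz / (K_BOLTZMANN * temp_nk * 1e-9)


def temperature_for_target(freq_hz: float, target: float) -> float:
    """Effective temperature (nK) at which beta*omega equals `target`."""
    return H_PLANCK * freq_hz / (K_BOLTZMANN * target) * 1e9


if __name__ == "__main__":
    # Hypothetical example values inside the 20-200 Hz window.
    for f in (20.0, 60.0, 200.0):
        t_nk = temperature_for_target(f, 2.77)
        print(f"f = {f:5.1f} Hz -> T = {t_nk:.2f} nK for beta*omega = 2.77")
```

If the frequencies in a given data set are angular rather than ordinary, the result should be divided by $2\pi$.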

Challenges include isolating small entropy contributions (∼0.25 nat) and distinguishing quantum entanglement from classical correlations, requiring high-fidelity techniques like quantum state tomography or noise reduction via signal averaging. If residual inter-patch entanglement exists, Bogoliubov theory suggests a possible 5–10% upward shift in $S$ (e.g., 0.2625–0.275 nat), though this effect has not yet been quantified.

Complementary platforms broaden the model’s testability, such as photonic lattices or nonlinear media simulating Hawking radiation [10], where measuring photon correlations across an optical ‘horizon’ tests with scalability, and superconducting qubit arrays model entangled states across a simulated horizon, offering precise control for probing small entropy contributions.

Future gamma-ray observations of primordial black holes could potentially detect Planck-scale modulations in the Hawking spectrum—discrete features like step-like intensity changes from patch measurements—though current telescopes lack the resolution to observe such effects. The discrete-patch signature is presently unconstrained, with existing Fermi-LAT data showing no detectable line-like structure [42], but future MeV instruments (e.g., AMEGO-X) could improve sensitivity and offer a long-term validation avenue [43].

Unlike entanglement-based models predicting continuous entropy spectra, this framework suggests discrete features due to patch measurements. In contrast to unitary evaporation models [14] preserving information in subtle correlations, this semi-classical approach implies measurable information loss, detectable via the absence of long-range correlations in analogue systems.

Validating these predictions would support the horizon-as-apparatus concept, advancing quantum gravity and potentially clarifying aspects of the information paradox—e.g., whether measurement-induced information loss holds or quantum correlations restore unitarity—while future quantum simulations or gravitational analogues like metamaterials could explore patch correlations and unitarity. These predictions leverage analogue systems for near-term validation, despite challenges in detection fidelity and noise, offering a bridge to quantum gravity insights.

7. Relaxation of the Uniform Frequency Assumption

Patch measurements also suppress phase coherence between neighbouring modes. Treating two adjacent cells as a weakly coupled open system (see Appendix A), we find that the off-diagonal elements of the reduced density matrix decay on the standard fast-scrambling timescale (cf. the scrambling time of Ref. [44]). For a solar-mass black hole, this timescale is far shorter than the $\sim 10^{67}$ yr evaporation time, so the exterior state becomes effectively Gibbsian well before any noticeable mass loss. The same dephasing should be visible in analogue BEC horizons as a rapid loss of off-diagonal phonon coherence, an observable proposed in Section 6.

The initial model assumed a uniform frequency for all modes, with $\beta\omega \approx 2.77$ (Section 4), simplifying the Hawking radiation spectrum. In a realistic black hole scenario, field modes exhibit a continuous frequency spectrum $\omega$, following a Planck distribution, with $\beta\omega$ varying across modes. To address this, the model is extended by allowing $\omega$ to vary while fixing $\beta = 1/T_H$, consistent with the black hole’s temperature.

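The entanglement entropy per mode is $S(\beta\omega) = -\ln(1 - e^{-\beta\omega}) + \beta\omega/(e^{\beta\omega} - 1)$, which depends on $\beta\omega$, and the total entropy is $S_{\rm tot} = \sum_k S(\beta\omega_k)$. In the continuum limit, this becomes an integral over the frequency spectrum, weighted by the energy flux $\propto \omega^3/(e^{\beta\omega} - 1)$. This weighting reflects the energy contribution of each mode as recorded by the horizon patches, consistent with their role as classical detectors of Hawking radiation in our measurement-based framework. With $x = \beta\omega$, the average entropy per unit energy is as follows:

$$\langle S \rangle = \frac{\displaystyle\int_0^\infty S(x)\,\frac{x^3}{e^x - 1}\,dx}{\displaystyle\int_0^\infty \frac{x^3}{e^x - 1}\,dx}, \qquad \int_0^\infty \frac{x^3}{e^x - 1}\,dx = \frac{\pi^4}{15}.$$

Analytical evaluation via series expansions gives

$$\langle S \rangle = \frac{15}{\pi^4}\left[\,24\,\zeta(4) - 12\,\zeta(5) - 6\,\zeta(2)\,\zeta(3)\,\right] \approx 0.257\ \text{nat},$$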

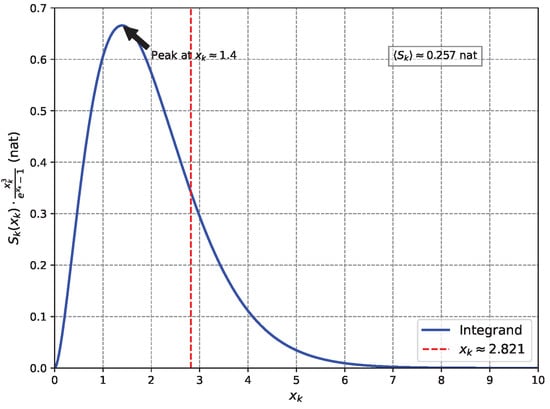

where $\zeta$ is the Riemann zeta function (see Appendix C for details). The nat is used as the entropy unit throughout this paper, consistent with the use of natural logarithms. This value, incorporating the full spectrum, is within 3.0% of the holographic target of 0.25 nat, supporting the model’s validity. The small deviation may stem from inter-patch quantum correlations neglected in the semi-classical approximation, which assumes independent mode measurements. This difference is minor compared to typical corrections in string theory models [45]. Figure 4 shows the integrand’s behaviour, peaking at $x \approx 1.4$, with contributions spanning modes near the Hawking spectrum peak at $x \approx 2.82$.

Figure 4.

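Integrand $S(x)\,x^3/(e^x - 1)$ (nat) vs. $x = \beta\omega$, peaking at $x \approx 1.4$, with the Hawking spectrum maximum at $x \approx 2.82$ (red dashed line). The area under the curve, normalised by $\pi^4/15$, gives $\langle S \rangle \approx 0.257$ nat, showing contributions to the average entanglement entropy across modes.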
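The flux-weighted average can be cross-checked by direct quadrature. The sketch below assumes the weighting $x^3/(e^x - 1)$ and the thermal-mode entropy from Section 4; the closed form it compares against is obtained here by expanding $-\ln(1 - e^{-x})$ and $1/(e^x - 1)$ in powers of $e^{-x}$ and using Euler's sum $\sum_n H_n/n^4 = 3\zeta(5) - \zeta(2)\zeta(3)$, which may differ in form from the expression in Appendix C. The cutoff and step count are illustrative.

```python
import math

ZETA3 = 1.2020569031595943   # zeta(3)
ZETA5 = 1.0369277551433699   # zeta(5)


def thermal_entropy(x: float) -> float:
    """S(x) = -ln(1 - e^{-x}) + x / (e^{x} - 1), in nat."""
    return -math.log(-math.expm1(-x)) + x / math.expm1(x)


def integrand(x: float) -> float:
    """S(x) weighted by the energy flux x^3 / (e^x - 1); tends to 0 as x -> 0."""
    if x == 0.0:
        return 0.0
    return thermal_entropy(x) * x ** 3 / math.expm1(x)


def simpson(f, a: float, b: float, n: int) -> float:
    """Composite Simpson rule with an even number n of sub-intervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return total * h / 3.0


def average_entropy_numeric(x_max: float = 60.0, n: int = 20000) -> float:
    """Flux-weighted mean entropy; the weight integrates to pi^4 / 15."""
    return simpson(integrand, 0.0, x_max, n) / (math.pi ** 4 / 15.0)


def average_entropy_closed_form() -> float:
    zeta2, zeta4 = math.pi ** 2 / 6.0, math.pi ** 4 / 90.0
    return 15.0 / math.pi ** 4 * (24.0 * zeta4 - 12.0 * ZETA5 - 6.0 * zeta2 * ZETA3)


if __name__ == "__main__":
    print(f"numeric     <S> = {average_entropy_numeric():.5f} nat")
    print(f"closed form <S> = {average_entropy_closed_form():.5f} nat")
```

Truncating the integral at $x = 60$ is harmless because the integrand is exponentially suppressed there.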

The 3.0% discrepancy suggests an effective mode count adjustment: $N_{\rm eff} = (0.25/0.257)\,N \approx 0.97\,N$, where $N = A/\ell_P^2$. This hints that horizon patches may not be fully independent, as weak correlations could reduce the effective degrees of freedom. Future work could refine this by modelling such effects. Relaxing the uniform frequency assumption aligns the model with the continuous spectrum of astrophysical black holes, enhancing its relevance.

8. Discussion

In this work, we have presented a horizon-as-apparatus model and demonstrated its consistency with black hole thermodynamics. Our original contributions include a concrete measurement mechanism based on the ‘one mode per patch’ principle that statistically reproduces , an explicit microstate count derived using the typical-subspace argument, quantitative estimates of decoherence and scrambling times along with weak inter-patch correlations, and a quantum circuit blueprint complemented by proposals for analogue experiments to test the framework. We emphasise that we do not claim a new gravitational derivation of the area law; rather, our goal is to provide a physically motivated non-gravitational model whose information-theoretic bookkeeping aligns with established results.

While this semi-classical approach offers valuable insights, it is not without limitations. By assuming independent patch measurements, it overlooks potential quantum correlations between patches that could influence information transfer. This simplification may conflict with modern unitary evolution perspectives, such as those from the ER=EPR conjecture or the firewall hypothesis [46,47]. Future work could incorporate inter-patch correlations to bridge semi-classical and quantum gravity frameworks.

This measurement-driven approach carries several implications. Analogue experiments in Bose–Einstein condensates and optical systems can probe the predicted entropy scaling and test for measurement-induced information loss, providing near-term validation of the model. Insights into the quantum–classical interface at the horizon may inform models of horizon microstructure or spacetime quantisation. Additionally, the model offers a semi-classical perspective on whether information is lost or preserved, with testable differences from unitary models detectable in analogue systems. The interplay between classical measurements and quantum states could also inspire quantum information applications, such as error correction codes or computing protocols.

In summary, this work establishes a statistical foundation for black hole entropy via a measurement-based mechanism, opening pathways for experimental and theoretical advances in understanding black holes, quantum gravity, and the nature of information in the universe.

Funding

This work was supported by funding from the academic research program of Chungbuk National University in 2025.

Data Availability Statement

The original contributions of this study are included in the article, and all data are provided therein.

Acknowledgments

We gratefully acknowledge the anonymous referees for their insightful comments and constructive suggestions, which have significantly improved the clarity, rigour, and overall quality of this manuscript.

Conflicts of Interest

The author declares no conflicts of interest.

Correction Statement

This article has been republished with a minor correction to the Data Availability Statement. This change does not affect the scientific content of the article.

Appendix A. Entropy Shift from Weak XX Correlations

To investigate the influence of weak interactions between neighbouring patches on the black hole horizon, we model these patches as qubits and incorporate a nearest-neighbour XX coupling term. This approach allows us to quantify how such correlations modestly enhance the entropy per patch beyond the baseline value of 0.25 nat, thereby accounting for part of the observed average entropy of 0.257 nat. In this framework, each patch functions as a detector that can be in either an empty state ($|0\rangle$) or an occupied state ($|1\rangle$), and we employ perturbation theory to compute the resulting entropy shift.

The unperturbed Hamiltonian for a single patch is given by

where represents the energy of the mode, and we set to align with the entropy value of 0.25 nat discussed in the main text. The Pauli matrix assigns an energy of to the state and to the state. The corresponding thermal probabilities are

with and . For the specific value ,

which yields and . The unperturbed entropy per patch is then

This value is slightly lower than the 0.25 nat obtained for a full bosonic mode because the qubit approximation truncates the Hilbert space, limiting the possible states and thus reducing the potential for entanglement compared to the infinite-dimensional case of a bosonic oscillator.
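The comparison between the two-level patch and the full bosonic mode can be illustrated with a minimal Python sketch. It assumes that the dimensionless ratio `x = beta * omega` is fixed by requiring a thermal bosonic mode to carry 0.25 nat, and that the qubit uses the same level splitting; the function names and the bisection bracket are illustrative choices, not taken from the paper.

```python
import math

def bosonic_mode_entropy(x):
    """Entropy (nat) of a thermal bosonic mode, with x = beta * omega."""
    n = 1.0 / math.expm1(x)  # Bose-Einstein mean occupation
    return (n + 1.0) * math.log(n + 1.0) - n * math.log(n)

def qubit_entropy(x):
    """Entropy (nat) of a two-level thermal state with splitting omega."""
    p1 = 1.0 / (1.0 + math.exp(x))  # occupied-state probability
    p0 = 1.0 - p1                   # empty-state probability
    return -p0 * math.log(p0) - p1 * math.log(p1)

def solve_x_for_entropy(target, lo=0.5, hi=10.0, tol=1e-12):
    """Bisection on x; the bosonic entropy decreases monotonically in x."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if bosonic_mode_entropy(mid) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Assumption: the mode energy is tuned so that the bosonic mode carries 0.25 nat.
x_star = solve_x_for_entropy(0.25)
print("beta*omega      :", x_star)
print("bosonic entropy :", bosonic_mode_entropy(x_star))
print("qubit entropy   :", qubit_entropy(x_star))
```

Under these assumptions the two-level entropy comes out below 0.25 nat, in line with the truncation argument above, because the qubit discards all occupation numbers above one.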

Now, considering two neighbouring patches labelled i and j, the unperturbed Hamiltonian extends to

with basis states and associated probabilities , , , . To incorporate the weak interaction, we add the XX coupling term

where . The total Hamiltonian is thus .

For later comparison with the perturbative results, note that the Hamiltonian separates into two independent blocks corresponding to even and odd parity subspaces. This block-diagonal structure allows for exact computation of the matrix exponential in each subspace, facilitating numerical diagonalization that validates the perturbative coefficients below.

We apply perturbation theory to expand the thermal density matrix . Using the standard imaginary-time ordered (Duhamel/Kubo) expansion, the second-order corrections in the expansion are computed and verified against exact diagonalization results. The partition function becomes

where , noting that the linear term in vanishes due to symmetry. The density matrix is expressed as

with in the basis and

The reduced density matrix for patch i is obtained by tracing over j, .

Because the trace over j of the first-order term in the perturbation vanishes, the correction to the reduced density matrix starts at second order. Expanding to this order, the probability of the ground state decreases as , while the excited state probability increases as , indicating that the occupation probability rises when the XX coupling is switched on.

Starting from the unperturbed eigenvalues and , this shift in probabilities leads to the entropy shift

For instance, to achieve , we find given , which is consistent with the parameters used throughout this paper.

This analysis shows that the weak XX correlation introduces a small, positive entropy increase of approximately 0.007 nat, which helps explain the elevation to 0.257 nat per patch observed in the full model. The shift is a correction of about 3% to the baseline value, so weak inter-patch interactions modify the semi-classical, independent-patch picture only perturbatively.
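The parity-block structure used in this appendix can be sketched in a few lines of Python. The sketch assumes a two-patch Hamiltonian of the form $H = -(\omega/2)(\sigma_z^i + \sigma_z^j) + g\,\sigma_x^i\sigma_x^j$, with $|0\rangle$ the lower-energy (empty) state; the coupling symbol `g`, the sign convention, and the illustrative value `X = 2.77` for $\beta\omega$ are assumptions made here, not values quoted from the paper. In the even block $\{|00\rangle, |11\rangle\}$ the Hamiltonian squares to $(\omega^2 + g^2)$ times the identity, and in the odd block $\{|01\rangle, |10\rangle\}$ to $g^2$ times the identity, so both matrix exponentials are available in closed form.

```python
import math

X = 2.77  # assumed beta*omega (illustrative; roughly 0.25 nat for a bosonic mode)

def excited_population(x, y):
    """Occupied-state probability of patch i after tracing out patch j.

    x = beta*omega, y = beta*g, where g is a hypothetical coupling symbol.
    """
    e = math.hypot(x, y)                             # even block: energies +/- sqrt(omega^2 + g^2)
    even_11 = math.cosh(e) - (x / e) * math.sinh(e)  # <11| exp(-beta H) |11>
    odd_10 = math.cosh(y)                            # <10| exp(-beta H) |10>
    z = 2.0 * math.cosh(e) + 2.0 * math.cosh(y)      # partition function
    return (even_11 + odd_10) / z

def binary_entropy(p):
    """Entropy (nat) of a diagonal two-level state with populations (1-p, p)."""
    return -p * math.log(p) - (1.0 - p) * math.log(1.0 - p)

def entropy_shift(x, y):
    """Change in single-patch entropy when the XX coupling is switched on."""
    return binary_entropy(excited_population(x, y)) - binary_entropy(excited_population(x, 0.0))

def coupling_for_shift(x, target, lo=0.0, hi=1.0, tol=1e-12):
    """Bisection for y = beta*g; assumes the shift grows with y on [lo, hi]."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if entropy_shift(x, mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

y_star = coupling_for_shift(X, 0.007)
print("p1 without coupling:", excited_population(X, 0.0))
print("p1 at y_star       :", excited_population(X, y_star))
print("beta*g for 0.007 nat shift:", y_star)
```

The reduced state of a single patch stays diagonal because the thermal state conserves parity, so its entropy is the binary entropy of the occupied-state population. For small `y` the shift grows quadratically, consistent with the vanishing first-order term noted above.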

Appendix B. Proof That $dS_{\mathrm{hor}} + dS_{\mathrm{rad}} \geq 0$

Throughout this appendix, we adopt the natural units defined in the Introduction for consistency and simplicity. For a Schwarzschild black hole characterised by mass $M$, the horizon area is given by $A = 16\pi M^2$, and the corresponding Bekenstein–Hawking entropy is

$$S_{\mathrm{hor}} = \frac{A}{4} = 4\pi M^2.$$

When the black hole loses energy through the emission of Hawking radiation, this induces a change in the horizon entropy, expressed as

$$dS_{\mathrm{hor}} = 8\pi M\, dM.$$

The Hawking radiation emitted by the black hole can be approximated as thermal radiation at temperature $T_H = 1/(8\pi M)$, so that $dS_{\mathrm{hor}} = dM/T_H$. In line with Page’s coarse-grained prescription, we attribute to the emitted energy $dE = -dM$ an entropy contribution of

$$dS_{\mathrm{rad}} = \frac{4}{3}\,\frac{dE}{T_H},$$

which holds for a relativistic bosonic gas in $3+1$ dimensions. Here, the factor $4/3$ represents the standard ratio of entropy density to energy density, $s/\rho = 4/(3T)$.

In our measurement-driven model, a subtle correction akin to a chemical potential arises because each outgoing quantum remains entangled with a distinct Planck-scale patch on the horizon. We denote this correction by , modifying the radiation entropy to

As detailed in Appendix A, this correction is small, with . Combining the expressions in (A1) and (A2), the total change in entropy is

Since the black hole loses energy () and the factor , the overall product is non-negative:

This inequality holds strictly unless , meaning no emission occurs. Consequently, the measurement process inherent to our model upholds the generalised second law, even as the horizon area diminishes due to evaporation.
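The sign of the entropy budget can be checked numerically with a short Python sketch. It uses only the standard Schwarzschild relations in natural units ($S_{\mathrm{hor}} = 4\pi M^2$, $T_H = 1/(8\pi M)$) and the thermal factor $4/3$; the small measurement-induced correction discussed above is deliberately omitted, since its explicit form is not reproduced here, and the function names are illustrative.

```python
import math

def hawking_temperature(mass):
    """Hawking temperature of a Schwarzschild black hole in natural units."""
    return 1.0 / (8.0 * math.pi * mass)

def horizon_entropy(mass):
    """Bekenstein-Hawking entropy A/4 with A = 16*pi*M^2."""
    return 4.0 * math.pi * mass ** 2

def entropy_changes(mass, dm):
    """Return (dS_hor, dS_rad) for a mass change dm < 0.

    dS_hor is the exact finite difference of the horizon entropy;
    dS_rad is (4/3) * dE / T_H with emitted energy dE = -dm.
    The model-specific correction to dS_rad is not included.
    """
    d_s_hor = horizon_entropy(mass + dm) - horizon_entropy(mass)
    d_s_rad = (4.0 / 3.0) * (-dm) / hawking_temperature(mass)
    return d_s_hor, d_s_rad

for mass in (1.0, 10.0, 100.0):
    dm = -1e-3 * mass  # small emission step
    d_s_hor, d_s_rad = entropy_changes(mass, dm)
    print(mass, d_s_hor, d_s_rad, d_s_hor + d_s_rad)
```

For each mass the horizon term is negative and the radiation term is positive and larger, so the sum stays positive and approaches $(1/3)(-dM)/T_H$ as the step shrinks.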

This coarse-grained outcome, as captured in Equation (A3), parallels the initial phase of the Page curve, during which the entropy of the radiation increases more rapidly than the Bekenstein–Hawking entropy of the horizon decreases. Around the Page time, these two contributions balance. Beyond this point, higher-order correlations among the patches, which are not accounted for in the present analysis, would need to be incorporated to observe the subsequent decline in $S_{\mathrm{rad}}$.

Appendix C. Calculation of Average Entanglement Entropy

The average entanglement entropy is given by

where . This integral can be evaluated analytically using series expansions to obtain an exact closed-form expression.

The integrand is defined as follows:

For , the terms are expanded using the following series:

The integral now becomes

Using the standard Gamma-function integral , the first term () is evaluated as

and the second term () as

yielding the following:

These sums are evaluated as follows: First,

That is, for each , there are pairs with , corresponding to , , and . Using zeta functions, this becomes

For the second sum,

For each fixed , let . As n ranges from 1 to ∞, m ranges from to ∞, so . The double sum is reordered as follows:

Given the relations and , it follows that

Together with , this yields

Combining these results gives

and the average entanglement entropy is

Numerical integration using a Riemann sum with a step size of over confirms nat, as the integrand stabilises beyond due to the exponential decay of , matching the analytical result within numerical precision.
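The term-by-term evaluation above relies on a Gamma-function integral, which can be cross-checked with the same kind of Riemann sum. The following minimal Python sketch assumes the standard form $\int_0^\infty x^{s-1} e^{-kx}\,dx = \Gamma(s)/k^{s}$; the step size, cutoff, and the sample values of `s` and `k` are illustrative choices and are not the parameters used in the paper.

```python
import math

def gamma_integral_riemann(s, k, step=1e-3, x_max=60.0):
    """Midpoint Riemann sum of x**(s-1) * exp(-k*x) over [0, x_max].

    The cutoff x_max is safe because the integrand decays exponentially.
    """
    n = int(round(x_max / step))
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * step  # midpoint of the i-th cell
        total += x ** (s - 1.0) * math.exp(-k * x)
    return total * step

# Compare the numerical sum with the closed form Gamma(s) / k**s.
for s, k in [(2.0, 1.0), (3.0, 2.0), (4.0, 3.0)]:
    numeric = gamma_integral_riemann(s, k)
    exact = math.gamma(s) / k ** s
    print(s, k, numeric, exact, abs(numeric - exact))
```

Raising `x_max` further leaves the result unchanged to the displayed precision, which is the same stabilisation behaviour described for the full integrand.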

References

1. Bekenstein, J.D. Black Holes and Entropy. Phys. Rev. D 1973, 7, 2333–2346.

2. Hawking, S.W. Particle Creation by Black Holes. Commun. Math. Phys. 1975, 43, 199–220.

3. Hawking, S.W. Breakdown of Predictability in Gravitational Collapse. Phys. Rev. D 1976, 14, 2460–2473.

4. Jacobson, T. Introduction to Quantum Fields in Curved Spacetime and the Hawking Effect. In Lectures on Quantum Gravity; Gomberoff, A., Marolf, D., Eds.; Lecture Notes in Physics, vol. 646; Springer: Berlin/Heidelberg, Germany, 2005; pp. 39–89.

5. Almheiri, A.; Hartman, T.; Maldacena, J.; Shaghoulian, E.; Tajdini, A. The Entropy of Hawking Radiation. Rev. Mod. Phys. 2021, 93, 035002.

6. Raju, S. Lessons from the Information Paradox. Phys. Rep. 2022, 943, 1–80.

7. Event Horizon Telescope Collaboration; Akiyama, K.; Alberdi, A.; Alef, W.; Asada, K.; Azulay, R.; Baczko, A.-K.; Ball, D.; Baloković, M.; Barrett, J.; et al. First M87 Event Horizon Telescope Results. I. The Shadow of the Supermassive Black Hole. Astrophys. J. Lett. 2019, 875, L1.

8. Abbott, B.P.; Abbott, R.; Abbott, T.D.; Abernathy, M.R.; Acernese, F.; Ackley, K.; Adams, C.; Adams, T.; Addesso, P.; Adhikari, R.X.; et al. Observation of Gravitational Waves from a Binary Black Hole Merger. Phys. Rev. Lett. 2016, 116, 061102.

9. Steinhauer, J. Observation of Quantum Hawking Radiation and Its Entanglement in an Analogue Black Hole. Nat. Phys. 2016, 12, 959–965.

10. Drori, J.; Rosenberg, Y.; Bermudez, D.; Silberberg, Y.; Leonhardt, U. Observation of Stimulated Hawking Radiation in an Optical Analogue. Phys. Rev. Lett. 2019, 122, 010404.

11. ’t Hooft, G. Dimensional Reduction in Quantum Gravity. In Recent Advances in the Theory of Fundamental Interactions: Proceedings of the 1993 Salamfest Conference, Trieste, Italy, 8–12 March 1993; Gava, E., Narain, K., Randjbar-Daemi, S., Sezgin, E., Shafi, Q., Eds.; World Scientific: Singapore, 1993; pp. 284–296.

12. Susskind, L. The World as a Hologram. J. Math. Phys. 1995, 36, 6377–6396.

13. Bousso, R. The Holographic Principle. Rev. Mod. Phys. 2002, 74, 825–874.

14. Page, D.N. Information in Black Hole Radiation. Phys. Rev. Lett. 1993, 71, 3743–3746.

15. Penington, G. Entanglement Wedge Reconstruction and the Information Paradox. J. High Energy Phys. 2020, 2020, 002.

16. Harlow, D. Jerusalem Lectures on Black Holes and Quantum Information. Rev. Mod. Phys. 2016, 88, 015002.

17. Marolf, D. The Black Hole Information Problem: Past, Present, and Future. Rep. Prog. Phys. 2017, 80, 092001.

18. Polchinski, J. The Black Hole Information Problem. In New Frontiers in Fields and Strings; Polchinski, J., Susskind, L., Strominger, A., Eds.; World Scientific: Singapore, 2017; pp. 353–397.

19. Nicolini, P.; Tessarotto, M. H-Theorem for a Relativistic Plasma around Black Holes. Phys. Plasmas 2006, 13, 052901.

20. Unruh, W.G. Notes on Black-Hole Evaporation. Phys. Rev. D 1976, 14, 870–892.

21. Fuentes-Schuller, I.; Mann, R.B. Alice Falls into a Black Hole: Entanglement in Noninertial Frames. Phys. Rev. Lett. 2005, 95, 120404.

22. Martín-Martínez, E.; Garay, I.; León, J. Unveiling Quantum Entanglement Degradation near a Schwarzschild Black Hole. Phys. Rev. D 2010, 82, 064006.

23. Bruschi, D.E.; Louko, J.; Martín-Martínez, E.; Dragan, A.; Fuentes, I. Unruh Effect in Quantum Information beyond the Single-mode Approximation. Phys. Rev. A 2010, 82, 042332.

24. Bombelli, L.; Koul, R.K.; Lee, J.; Sorkin, R.D. Quantum Source of Entropy for Black Holes. Phys. Rev. D 1986, 34, 373–384.

25. Bekenstein, J.D. Black Holes and the Second Law. Lett. Nuovo Cimento 1972, 4, 737–740.

26. Bekenstein, J.D. Black-Hole Thermodynamics. Phys. Tod. 1980, 33, 24–31.

27. ’t Hooft, G. The Holographic Principle. In Basics and Highlights in Fundamental Physics; Zichichi, A., Ed.; World Scientific: Singapore, 2001; pp. 72–100.

28. Susskind, L.; Thorlacius, L.; Uglum, J. The Stretched Horizon and Black Hole Complementarity. Phys. Rev. D 1993, 48, 3743–3761.

29. Thorne, K.S.; Price, R.H.; Macdonald, D.A. Black Holes: The Membrane Paradigm; Yale University Press: New Haven, CT, USA, 1986.

30. Von Neumann, J. Mathematische Grundlagen der Quantenmechanik; Springer: Berlin, Germany, 1932.

31. Brown, J.D.; York, J.W. Quasilocal Energy and Conserved Charges Derived from the Gravitational Action. Phys. Rev. D 1993, 47, 1407–1422.

32. Christensen, S.M.; Fulling, S.A. Trace Anomalies and the Hawking Effect. Phys. Rev. D 1977, 15, 2088–2104.

33. Birrell, N.D.; Davies, P.C.W. Quantum Fields in Curved Space; Cambridge University Press: Cambridge, UK, 1982.

34. Wald, R.M. Quantum Field Theory in Curved Spacetime and Black Hole Thermodynamics; University of Chicago Press: Chicago, IL, USA, 1994.

35. MacQuarrie, E.R.; Simon, C.; Simmons, S.; Maine, E. The Emerging Commercial Landscape of Quantum Computing. Nat. Rev. Phys. 2020, 2, 596–598.

36. Eisert, J.; Hangleiter, D.; Kashefi, E. Quantum Certification and Benchmarking. Nat. Rev. Phys. 2020, 2, 382–390.

37. Google Quantum AI and Collaborators. Quantum Error Correction below the Surface Code Threshold. Nature 2025, 638, 920–926.

38. Kim, Y.; Eddins, A.; Anand, S.; Wei, K.X.; van den Berg, W.; Rosenblatt, S.; Nayfeh, H.; Wu, Y.; Zaletel, M.; Temme, K.; et al. Evidence for the Utility of Quantum Computing before Fault Tolerance. Nature 2023, 618, 500–505.

39. Mackeprang, J.; Bhatti, D.; Barz, S. Non-Adaptive Measurement-Based Quantum Computation on IBM Q. Sci. Rep. 2023, 13, 15428.

40. Haroche, S.; Raimond, J.-M. Exploring the Quantum: Atoms, Cavities, and Photons; Oxford University Press: Oxford, UK, 2006.

41. Rinaldi, M. Entropy of an Acoustic Black Hole in Bose–Einstein Condensates. Phys. Rev. D 2011, 84, 124009.

42. Ackermann, M.; Atwood, W.B.; Baldini, L.; Ballet, J.; Barbiellini, G.; Bastieri, D.; Bellazzini, R.; Berenji, B.; Bissaldi, E.; Blandford, R.D.; et al. Fermi-LAT Collaboration. Search for Gamma-Ray Emission from Local Primordial Black Holes with the Fermi Large Area Telescope. Astrophys. J. 2018, 857, 49.

43. Keith, C.; Hooper, D.; Linden, T.; Liu, R. The Sensitivity of Future Gamma-Ray Telescopes to Primordial Black Holes. Phys. Rev. D 2022, 106, 043003.

44. Hayden, P.; Preskill, J. Black Holes as Mirrors: Quantum Information in Random Subsystems. J. High Energy Phys. 2007, 2007, 120.

45. Strominger, A.; Vafa, C. Microscopic Origin of the Bekenstein-Hawking Entropy. Phys. Lett. B 1996, 379, 99–104.

46. Maldacena, J.; Susskind, L. Cool Horizons for Entangled Black Holes. Fortschr. Phys. 2013, 61, 781–811.

47. Almheiri, A.; Marolf, D.; Polchinski, J.; Sully, J. Black Holes: Complementarity or Firewalls? J. High Energy Phys. 2013, 2013, 62.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).