1. Introduction

In the era of highly computerized communication, information security has emerged as a critical area of research and public concern. While technological advancements have significantly enhanced convenience, they have also heightened the risks of personal information leakage. To mitigate the unauthorized access and exploitation of digital images, which often contain sensitive and private data, image encryption has garnered substantial attention as a robust protective measure [1]. Furthermore, the substantial storage requirements of image data during transmission necessitate efficient compression techniques to optimize space utilization and reduce transmission latency. Among the various compression algorithms available [2,3], the JPEG standard stands out for its exceptional performance. Consequently, to simultaneously address the dual challenges of image security and transmission efficiency, this study proposes an integrated approach that combines JPEG compression with advanced encryption techniques, thereby enhancing the overall security of digital images.

As an encryption method based on nonlinear dynamical systems, chaotic encryption technology has garnered significant attention in the field of information security in recent years. Chaotic systems exhibit properties such as sensitivity to initial conditions, pseudo-randomness, and ergodicity, which align closely with the requirements of encryption algorithms for randomness and unpredictability. These characteristics make chaotic systems an ideal tool for designing efficient encryption schemes. The chaos-based image encryption algorithm was first proposed by Fridrich in 1997 [4]. Since then, numerous related schemes have been proposed [5,6,7,8,9,10,11,12,13,14]. Most of them encrypt the raw pixels of the image without considering compression, which leads to a larger data volume during transmission.

The JPEG international standard [15], established in 1993, has become a cornerstone technology widely adopted across various industries. In recent years, significant research efforts have been directed toward integrating JPEG compression with encryption algorithms [16,17,18,19,20]. However, encryption and compression are inherently contradictory. Compression algorithms leverage the high correlation within the image, while encryption algorithms aim to eliminate such correlations within the image data to achieve high security. Therefore, such algorithms often struggle to balance both security and compression efficiency. Based on the sequence of applying encryption and compression techniques, the combined processes can be systematically categorized into three distinct approaches: encryption before compression, encryption during compression, and encryption after compression. This classification provides a comprehensive framework for understanding and optimizing the interplay between data security and compression efficiency in digital image processing.

(1) Encryption before compression. Cryptographic schemes based on chaotic systems, deoxyribonucleic acid (DNA) encoding, or permutation mechanisms can be classified solely within this category [21,22,23,24,25,26,27,28]. Nevertheless, such approaches exhibit limited applicability in lossy compression frameworks, primarily stemming from two technical constraints. First, the initial encryption stage substantially diminishes inter-pixel spatial correlations within digital images, thereby reducing the inherent redundancy required for efficient compression. Second, the non-reversible nature of lossy compression introduces irreversible quantization errors during decoding, preventing precise reconstruction of original pixel values from cipher images.

(2) Joint encryption with compression. Encryption within compression frameworks represents an integrated approach combining cryptographic operations with compression processes [18,29,30,31,32,33,34,35,36,37]. This methodology enables encryption implementation across multiple stages of JPEG compression, including Discrete Cosine Transform (DCT), quantization, and entropy coding phases. Among existing solutions, this architecture has attracted substantial research attention due to its demonstrated performance advantages. Previous studies have proposed various implementation strategies. Li et al. [31] developed an 8 × 8 block transformation mechanism that integrates compression and encryption through alternating orthogonal transformations. While achieving 12.5% higher coding efficiency compared to conventional methods, this approach exhibits vulnerability to statistical analysis attacks due to its fixed block size constraint. He et al. [34] systematically evaluated three JPEG encryption schemes employing identical cryptographic algorithms but differing in integration points within the compression pipeline. Their comparative analysis revealed a 15–20% reduction in compression efficiency despite maintaining computational efficiency. More recently, Liang et al. [37] proposed a novel encryption framework featuring JPEG compatibility through entropy coding segment modification using cryptographic keys. However, their implementation demonstrates an inherent trade-off between security enhancement and file size expansion, with experimental results indicating a 25% average file size increase for optimal security configurations. Additionally, He et al. [38] combined a reversible data hiding scheme with an encryption algorithm to secure JPEG images, such that data extraction and information recovery can be performed independently.

(3) Post-compression encryption represents a sequential processing architecture where compression precedes cryptographic operations [39,40,41,42]. This methodology specifically targets the encryption of entropy-encoded bitstreams, maintaining compression compatibility through isomorphic codeword mapping that preserves original code lengths. While this approach demonstrates superior compression efficiency, it introduces potential format compliance issues. Specifically, the decoding process may generate blocks containing more than 64 elements, violating standard format specifications and potentially compromising system interoperability.

All of the aforementioned methods share a common issue: the trade-off between compression ratio and security. Specifically, it is difficult to simultaneously achieve high compression ratios and robust security. To address this challenge, we conducted an in-depth investigation into the various stages and different syntactic elements of the JPEG compression standard. Our study also examined the impact of encrypting these syntactic elements on both the final compression ratio and the security of the system.

This paper presents a novel integrated framework for simultaneous data compression and encryption, employing three encryption steps across three critical stages of image compression: Discrete Cosine Transform (DCT) transformation, quantization, and entropy encoding. The proposed scheme can be summarized as follows:

1. A 16 × 16 DCT transformation is initially executed during the DCT transformation phase, followed by applying a block segmentation algorithm to generate 8 × 8 sub-blocks.

2. The diffusion mechanisms are implemented through a dual approach in the quantization stage, including DC coefficient permutation and non-zero AC coefficient transformation algorithms.

3. An innovative RSV (Run/Size/Value) pair recombination algorithm is designed in the entropy encoding phase to significantly reduce spatial correlation among adjacent pixels.

4. Comparative analysis with existing methodologies demonstrates that the proposed framework achieves superior optimization between cryptographic robustness and compression efficiency, offering enhanced performance in both security and data compression domains.

The remainder of this paper is organized as follows. Section 2 provides a comprehensive overview of the JPEG compression standard and the foundational principles of chaotic systems. Section 3 elaborates on the proposed joint compression and encryption scheme, detailing its algorithmic framework and implementation specifics. Section 4 presents a systematic evaluation of the simulation experiments, including performance metrics and comparative analyses. Finally, Section 5 concludes the paper by summarizing the key findings, discussing their implications, and outlining potential directions for future research.

2. Preliminaries

2.1. JPEG Compression Standard

The JPEG (Joint Photographic Experts Group) standard, established by the International Organization for Standardization (ISO) and the International Telegraph and Telephone Consultative Committee (CCITT) [43], represents the first international digital image compression standard for static images. As a widely adopted image compression standard, JPEG supports lossy compression, achieving significantly higher compression ratios compared to traditional compression algorithms. In practical implementations, the JPEG image encoding process employs a combination of Discrete Cosine Transform (DCT), Huffman encoding, and run-length encoding. For color images, the general architecture of the JPEG compression standard, as illustrated in Figure 1, consists of four primary stages: color space conversion, DCT transformation, quantization, and entropy coding. These stages collectively enable efficient compression while maintaining acceptable image quality.

In the execution process of the JPEG compression algorithm, different stages generate distinct syntactic elements. Encrypting these syntactic elements has varying impacts on data security and compression efficiency. The main four compression steps are as follows:

Color Space Conversion: While the JPEG standard is capable of compressing RGB components, it demonstrates superior performance in the luminance/chrominance (YUV) color space. For color images, an initial step involves the transformation of the RGB color space into the YUV color space. It is important to note that this color space conversion process is lossless, as it solely entails a mathematical mapping of pixel values from one color representation to another without any degradation in image quality.

Discrete Cosine Transform (DCT): DCT is a widely utilized coding technique in rate-distortion optimization for image compression. It serves as a mathematical transformation that converts spatial domain image representations into their frequency domain counterparts. This process can be conceptualized as a mapping operation that transforms an array of pixel values into a new array of frequency coefficients, effectively converting spatial light intensity data into frequency-based information. The transformation is applied to each image block independently, resulting in the concentration of energy into a limited number of coefficients, predominantly located in the upper-left quadrant of the transformed matrix. This energy compaction property is a fundamental characteristic of DCT, enabling efficient compression by prioritizing significant frequency components.
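The energy compaction property described above can be illustrated with a minimal sketch of the orthonormal two-dimensional DCT-II. The function name `dct2` and the pure-Python list-of-lists representation are assumptions made for illustration; practical codecs use fast, fixed-point implementations.

```python
import math

def dct2(block):
    """Orthonormal 2-D DCT-II of a square block given as a list of rows."""
    n = len(block)

    def alpha(k):
        # Normalization factor that makes the transform orthonormal.
        return math.sqrt((1.0 if k == 0 else 2.0) / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            total = 0.0
            for x in range(n):
                for y in range(n):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            out[u][v] = alpha(u) * alpha(v) * total
    return out

# A perfectly flat 8 x 8 block: all energy should land in the DC term.
flat = [[10] * 8 for _ in range(8)]
coeffs = dct2(flat)
```

For the flat block, only the upper-left (DC) coefficient is non-zero, which is the extreme case of the energy concentration that the later quantization and entropy coding stages exploit.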

Quantization: The quantization process involves dividing each DCT coefficient by its corresponding value in a predefined quantization table, yielding the “quantized DCT coefficients.” During this stage, frequency coefficients are transformed from floating-point representations into integers, which simplifies subsequent encoding operations. It is evident that quantization introduces a loss of precision, as the data are reduced to integer approximations, thereby discarding certain information. In the JPEG algorithm, distinct quantization tables are applied to luminance (brightness) and chrominance components, reflecting their differing accuracy requirements. The design and selection of the quantization table play a critical role in determining the overall compression ratio, making it a pivotal factor in balancing image quality and compression efficiency.

Entropy coding: For the DC coefficient, Differential Pulse Code Modulation (DPCM) is employed. Specifically, the difference between each DC value in the same image component and the preceding DC value is computed and encoded. For the AC coefficients, Run Length Coding (RLC) is utilized to further reduce data transmission by encoding sequences of zero-valued coefficients. Subsequently, Huffman coding is applied to assign shorter binary codes to symbols with higher probabilities of occurrence and longer binary codes to symbols with lower probabilities, thereby minimizing the average code length. Different Huffman coding tables are utilized for the DC and AC coefficients. Additionally, distinct Huffman coding tables are employed for luminance and chrominance components. Consequently, four Huffman coding tables are required to complete the entropy coding process.
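As a rough illustration of the DPCM and run-length steps before Huffman coding, the sketch below converts DC values into differences and the 63 zigzag-ordered AC coefficients of one block into (Run, Size, Value) triples. The function names are hypothetical. The (15, 0) zero-run-length symbol and the (0, 0) end identifier follow the usual baseline JPEG convention; Huffman coding itself is omitted.

```python
def dpcm(dc_values):
    """Difference of each DC value from the preceding one (first value kept)."""
    return [dc_values[0]] + [cur - prev for prev, cur in zip(dc_values, dc_values[1:])]

def size_category(value):
    """Number of bits needed to represent the magnitude of a coefficient."""
    return abs(value).bit_length()

def ac_to_rsv(ac):
    """Run-length code zigzag-ordered AC coefficients into (run, size, value) triples."""
    pairs = []
    last_nonzero = max((i for i, v in enumerate(ac) if v != 0), default=-1)
    run = 0
    for v in ac[:last_nonzero + 1]:
        if v == 0:
            run += 1
            if run == 16:               # 16 consecutive zeros: emit ZRL (15, 0)
                pairs.append((15, 0, 0))
                run = 0
        else:
            pairs.append((run, size_category(v), v))
            run = 0
    if last_nonzero < len(ac) - 1:      # trailing zeros: emit end identifier (0, 0)
        pairs.append((0, 0, 0))
    return pairs

example_ac = [5, 0, 0, -3] + [0] * 59
example_pairs = ac_to_rsv(example_ac)   # [(0, 3, 5), (2, 2, -3), (0, 0, 0)]
```

A block whose last AC coefficient is non-zero receives no end identifier, which is why the number of AC coefficients represented by a block's pairs matters for format compliance in the later encryption stages.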

Decompression is the inverse process of JPEG compression encoding. The primary steps involved in JPEG decompression are illustrated in Figure 1.

2.2. Chaotic System

A chaotic system is defined as a deterministic system that exhibits seemingly random and irregular motion, characterized by behaviors that are uncertain, non-repeatable, and unpredictable. This phenomenon, known as chaos, has garnered significant attention and is widely applied in the field of image encryption due to its inherent complexity and sensitivity to initial conditions. Among the foundational discoveries in chaos theory, the Lorenz system stands as the first identified chaotic attractor, marking a pivotal milestone in the study of chaotic dynamics [44]. This system represents the earliest dissipative system demonstrating chaotic motion, as revealed through numerical experiments. It is a three-dimensional system governed by three parameters, which collectively contribute to its rich and intricate dynamical behavior.

Low-dimensional chaotic systems are characterized by the presence of only one positive Lyapunov exponent, which results in a relatively limited key space. This limitation makes them vulnerable to contemporary brute-force attacks. To address this issue, this paper employs the four-dimensional hyperchaotic Lorenz system proposed in Ref. [45]. This hyperchaotic system features two positive Lyapunov exponents, thereby enhancing the complexity and robustness of the chaotic behavior. The system’s definition is as follows:

where

are system parameters. When

,

,

, and

, the Lyapunov exponents of Equation (1) are

,

,

, and

; two of these Lyapunov exponents are positive. Under these conditions, Equation (1) exhibits hyperchaotic motion.
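To make the iteration of a four-dimensional hyperchaotic system concrete, the following sketch integrates one commonly used hyperchaotic Lorenz form with a fourth-order Runge-Kutta step. The equations and the parameter values (`A`, `B`, `C`, `R`) are assumptions drawn from a widely used variant; they are not taken from this text and may differ from Equation (1). The step size `h` and the function names are likewise illustrative.

```python
# ASSUMPTION: a commonly used 4-D hyperchaotic Lorenz form and parameters.
# These are illustrative and may not match Equation (1) of this paper.
A, B, C, R = 10.0, 8.0 / 3.0, 28.0, -1.0

def derivative(state):
    """Right-hand side of the assumed system for state (x, y, z, w)."""
    x, y, z, w = state
    return (A * (y - x) + w,
            C * x - y - x * z,
            x * y - B * z,
            -y * z + R * w)

def rk4_step(state, h):
    """Advance the state by one fourth-order Runge-Kutta step of size h."""
    def shifted(base, slope, scale):
        return tuple(b + scale * s for b, s in zip(base, slope))
    k1 = derivative(state)
    k2 = derivative(shifted(state, k1, h / 2))
    k3 = derivative(shifted(state, k2, h / 2))
    k4 = derivative(shifted(state, k3, h))
    return tuple(s + h / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))

def iterate(initial, steps, h=0.001):
    """Return the list of states visited over the given number of steps."""
    state = tuple(initial)
    trajectory = []
    for _ in range(steps):
        state = rk4_step(state, h)
        trajectory.append(state)
    return trajectory

trajectory = iterate((1.0, 1.0, 1.0, 1.0), 2000)
```

Because the system is deterministic, the same initial values always reproduce the same trajectory, which is what allows the receiver to regenerate the key streams; a tiny change in any initial value yields a different trajectory.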

2.3. Analysis of Syntactic Elements for Compression and Encryption

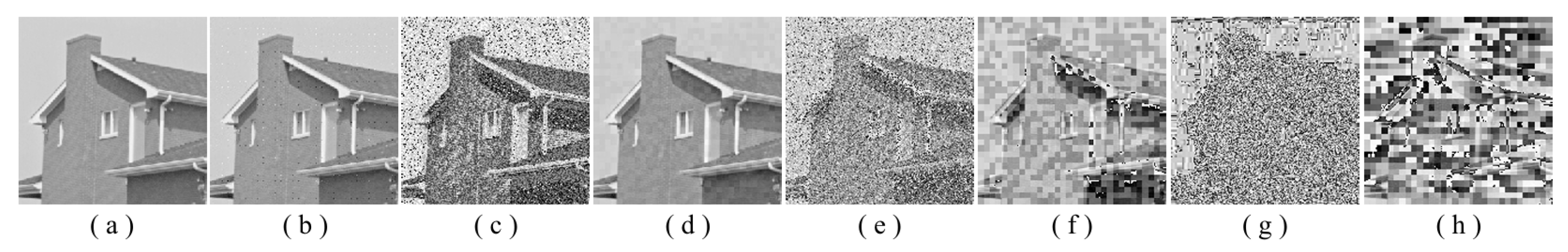

Two syntax elements have been universally accepted in the JPEG compression process: DC coefficients and AC coefficients. The DC and non-zero AC coefficients are encrypted in distinct phases, each significantly influencing communication efficiency, bit expansion, and perceptual security to varying degrees. By combining the BLAKE-256 hash function with the Lorenz hyperchaotic system, test keys are generated, exhibiting high stochasticity within the range [0, 31]. Extensive empirical studies, conducted using a simple XOR (Exclusive OR) operation, confirm the fairness of the process. The visual results are shown in Figure 2, with a detailed analysis provided in Table 1.

As shown in Figure 2, the detailed information of the original image is concealed within a ciphertext image, where only part of the plain pixel values are encrypted. All information is encoded in the encrypted image, resulting in block effects, with the DC coefficients being encrypted after the DCT transformation. In contrast, the AC coefficients are encrypted following the DCT transformation, which enhances data security while still allowing for recognition of object contours. It is noteworthy that certain syntax elements, such as DC and AC coefficients after quantization and DC coefficients after DPCM coding, are selectively chosen for encryption, further strengthening information privacy.

3. Methodology

In the process of simultaneously performing image compression and encryption, the compression operation and the encryption operation can interfere with each other. The reason lies in the fact that the goal of encryption is to eliminate the correlation between image pixels, while compression exploits these correlations to reduce the image size. A poorly designed encryption algorithm can significantly degrade the compression ratio. Through a comprehensive analysis of the effects of different syntactic elements in the image compression process on both encryption and compression performance, we propose a joint compression and encryption algorithm based on chaos theory. This algorithm consists of the following four steps, as shown in Figure 3.

3.1. Key Scheming

Secret keys play a crucial role in cryptographic systems, serving as the cornerstone of their security. According to the widely accepted Kerckhoffs’s principle, the robustness of a cryptosystem should remain intact even if all aspects of the system, excluding the secret key, are publicly disclosed. This principle underscores the importance of key secrecy in ensuring the overall security of encryption mechanisms. In the proposed scheme, the four initial values of the Lorenz hyperchaotic system are designated as the cryptographic key. Prior to iterating the chaotic system to generate pseudo-random sequences, the BLAKE-256 hash function is utilized to compute a 256-bit hash value, denoted as H. This hash value is subsequently employed to modify the four initial values of the Lorenz hyperchaotic system, resulting in four updated initial values. These modified values are then substituted into the Lorenz hyperchaotic system for iterative computation, producing four pseudo-random key sequences X, Y, Z, and W. This approach enhances the security and randomness of the key generation process. The specific process is as follows:

Step 1: The 256-bit hash value H is partitioned into 32 segments, each comprising 8 bits, expressed as H = h1, h2, …, h32, where each hi falls within the range [0, 255]. Subsequently, the initial values of the Lorenz hyperchaotic system, denoted as x0, y0, z0, and w0, are modified using Equation (2). This step ensures the initialization of the chaotic system with updated values derived from the hash segments.

Step 2: The Lorenz hyperchaotic system is iterated 10,000 times using the updated initial values x0′, y0′, z0′, and w0′. Each iteration yields four values xi, yi, zi, and wi, i = 1, 2, …, 10,000. Let M and N represent the height and width of the image, respectively. These generated values are subsequently preprocessed using Equation (3) to ensure their suitability for further cryptographic operations.

Step 3: All values within the pseudo-random sequences X, Y, Z, and W are converted into binary format to govern the subsequent encryption process. Consequently, the encryption key comprises two distinct components: (1) a 256-bit hash value H, and (2) the four initial values of the Lorenz hyperchaotic system, denoted as x0, y0, z0, and w0. This dual-component structure ensures a robust and secure foundation for the encryption mechanism.
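The three steps can be sketched as follows, under clearly stated assumptions. Python's standard library does not provide BLAKE-256, so `hashlib.blake2s` with a 32-byte digest is used purely as a stand-in. The perturbation rule in `perturb_initial_values` is a hypothetical placeholder, since Equation (2) is not reproduced here, and all function names are illustrative.

```python
import hashlib

def hash_segments(data):
    """Split a 256-bit digest into 32 segments h1..h32, each in [0, 255].

    ASSUMPTION: blake2s is a stand-in for BLAKE-256, which hashlib lacks.
    """
    digest = hashlib.blake2s(data, digest_size=32).digest()
    return list(digest)

def perturb_initial_values(initial, h):
    """Hypothetical placeholder for Equation (2).

    XORs eight hash segments per initial value and adds the result,
    scaled into [0, 1), to that initial value.
    """
    updated = []
    for k, value in enumerate(initial):
        acc = 0
        for byte in h[8 * k: 8 * k + 8]:
            acc ^= byte
        updated.append(value + acc / 256.0)
    return tuple(updated)

def to_bits(values, width=8):
    """Convert non-negative integers into one flat, most-significant-first bit list."""
    bits = []
    for v in values:
        bits.extend((v >> shift) & 1 for shift in range(width - 1, -1, -1))
    return bits

h = hash_segments(b"example image bytes")
x0p, y0p, z0p, w0p = perturb_initial_values((1.0, 2.0, 3.0, 4.0), h)
```

Binding the initial values to a hash in this way means that a different hash input produces different key streams even when the four base initial values are reused.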

3.2. DCT Transformation Stage Encryption

The first-stage encryption process is implemented following the Discrete Cosine Transform (DCT), which begins by partitioning the image into 16 × 16 blocks and subsequently performing the DCT transformation on these blocks. However, since the standard quantization table specified by the JPEG compression standard is designed for 8 × 8 blocks, each 16 × 16 block (denoted as B16) is further divided into four 8 × 8 blocks (denoted as B8) after the DCT transformation. As a result, the 256 coefficients of each 16 × 16 block are distributed across four 8 × 8 blocks. This division ensures compatibility with the standard JPEG encoding process, which can be seamlessly applied in subsequent steps. Below is the fundamental procedure of the block-splitting algorithm:

Step 1: The 16 × 16 block (B16) is transformed into a one-dimensional sequence using zigzag scanning. This sequence is then evenly divided into two parts, denoted as B16_1 and B16_2, each containing 128 coefficients.

Step 2: The coefficients in B16_1 are allocated to four 8 × 8 blocks. For each allocation, two bits from the pseudo-random key stream X are used to determine the target 8 × 8 block, while three additional bits from X specify the number of coefficients to be assigned to the selected block. After each allocation, the corresponding five bits in X are removed. This process is repeated until all coefficients in B16_1 are distributed, ensuring that each 8 × 8 block contains 32 coefficients.

Step 3: The coefficients in B16_2 are allocated to the four 8 × 8 blocks using the same procedure as described in Step 2. Upon completion, each 8 × 8 block contains a total of 64 coefficients.

Step 4: Each one-dimensional 8 × 8 block (B8) is transformed back into a two-dimensional 8 × 8 block through the inverse zigzag scanning process.

During the block-splitting process, the index of the 8 × 8 block to which the DC coefficient (the first coefficient of the original B16) is assigned is recorded. This information is utilized in the encryption algorithm during the quantization stage. The above block-splitting algorithm is applied to each 16 × 16 block, thereby completing the encryption algorithm in the DCT transformation stage.
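The block-splitting steps can be sketched as below. Several details are not fixed by the description and are assumptions here: the three count bits are interpreted as a count from 1 to 8, a full target block is skipped in favor of the next block with free space, and a count is truncated to the remaining capacity. The function names and the bit-list representation of X are also illustrative.

```python
import random

def zigzag_indices(n):
    """(row, col) positions of an n x n block in JPEG-style zigzag order."""
    order = []
    for s in range(2 * n - 1):
        diagonal = [(i, s - i) for i in range(n) if 0 <= s - i < n]
        order.extend(diagonal if s % 2 else diagonal[::-1])
    return order

def split_block(b16, key_bits):
    """Distribute the 256 coefficients of a 16 x 16 block over four 8 x 8 blocks."""
    seq = [b16[r][c] for r, c in zigzag_indices(16)]
    flat_blocks = [[] for _ in range(4)]
    dc_block = None
    pos = 0
    # First half fills every block up to 32 coefficients, second half up to 64.
    for half, limit in ((seq[:128], 32), (seq[128:], 64)):
        i = 0
        while i < len(half):
            target = key_bits[pos] * 2 + key_bits[pos + 1]
            count = key_bits[pos + 2] * 4 + key_bits[pos + 3] * 2 + key_bits[pos + 4] + 1
            pos += 5                                   # consume five key bits
            while len(flat_blocks[target]) >= limit:   # ASSUMPTION: skip full blocks
                target = (target + 1) % 4
            count = min(count, limit - len(flat_blocks[target]), len(half) - i)
            if dc_block is None:
                dc_block = target                      # block that receives the DC term
            flat_blocks[target].extend(half[i:i + count])
            i += count
    blocks = []
    for flat in flat_blocks:                           # inverse zigzag into 8 x 8
        b8 = [[0] * 8 for _ in range(8)]
        for value, (r, c) in zip(flat, zigzag_indices(8)):
            b8[r][c] = value
        blocks.append(b8)
    return blocks, dc_block

rng = random.Random(0)
demo_bits = [rng.randint(0, 1) for _ in range(1280)]   # worst case: 256 allocations
demo_b16 = [[16 * r + c for c in range(16)] for r in range(16)]
demo_blocks, demo_dc_block = split_block(demo_b16, demo_bits)
```

Under these assumptions the original DC coefficient always lands at position (0, 0) of the recorded block, because it is the first coefficient written into an empty block.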

3.3. Quantization Stage Encryption

In the second encryption stage, block permutation is performed following the quantization process to achieve encryption scrambling and diffusion effects. Specifically, the DC coefficients undergo confusion, while the signs of the non-zero AC coefficients are transformed. These operations collectively enhance the security and robustness of the encryption scheme by introducing additional layers of complexity and unpredictability.

3.3.1. Blocks Permutation

To further enhance the chaotic nature of the encrypted image, a block permutation operation is introduced after the quantization process. This operation disrupts the original order of the 8 × 8 blocks based on the pseudo-random key sequence Y before advancing to the entropy encoding stage. The permutation algorithm employed is a random permutation method. In the context of our encryption scheme, B represents the original sequence of all 8 × 8 blocks, n denotes the total number of 8 × 8 blocks, and the random integers required for permutation are derived from the pseudo-random key sequence Y. The algorithm is described in Algorithm 1.

| Algorithm 1 Blocks permutation algorithm |

| Input: All 8 × 8 blocks after quantization; Y. |

| Output: The result of block permutation. |

| 1: | ; |

| 2: | while do |

| 3: | j ← pick r bits from Y, and convert to decimal; |

| 4: | exchange and ; |

| 5: | remove the first r bits from Y. |

| 6: | end while |
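A key-driven random permutation of this kind can be sketched as a Fisher-Yates shuffle whose random integers come from the key bits. The loop bounds, the choice of r as the bit length of the current index, and the reduction of j modulo i + 1 are assumptions, since those details are not recoverable from the listing above; the function names are illustrative.

```python
def take_bits(bits, pos, r):
    """Read r bits starting at pos and return (decimal value, new position)."""
    value = 0
    for bit in bits[pos:pos + r]:
        value = (value << 1) | bit
    return value, pos + r

def permute_blocks(blocks, key_bits):
    """Shuffle blocks with swaps driven by the key bits; also return the swap list."""
    out = list(blocks)
    swaps = []
    pos = 0
    for i in range(len(out) - 1, 0, -1):
        r = i.bit_length()              # ASSUMPTION: enough bits to index 0..i
        j, pos = take_bits(key_bits, pos, r)
        j %= i + 1                      # ASSUMPTION: fold j into the valid range
        out[i], out[j] = out[j], out[i]
        swaps.append((i, j))
    return out, swaps

def unpermute_blocks(blocks, swaps):
    """Undo permute_blocks by replaying the swaps in reverse order."""
    out = list(blocks)
    for i, j in reversed(swaps):
        out[i], out[j] = out[j], out[i]
    return out

demo_key = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 0, 1, 0, 1, 1, 0, 1, 0] * 4
shuffled, swap_log = permute_blocks(list("ABCDEFGH"), demo_key)
```

The receiver regenerates the same swap list from Y and replays it in reverse, so no permutation table needs to be transmitted. Note that the modulo reduction introduces a slight bias toward small indices; a rejection step would avoid it at the cost of consuming a variable number of key bits.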

3.3.2. DC Coefficients Confusion

After the permutation of blocks, the index sequence of the DC coefficients within the original 16 × 16 blocks undergoes a transformation. Consequently, the index sequence of the 8 × 8 blocks containing the DC coefficients from the original 16 × 16 blocks must be updated in accordance with the permutation vector

. To further enhance the diffusion and obfuscation characteristics of the encryption scheme, an XOR calculation is applied to the DC coefficients, following the order they appear after the block permutation. The calculation is formulated as follows:

where

represents the DC coefficient of

-th 8 × 8 block,

. In the decryption process, the original value of the DC coefficient can be restored by Equation (5).

where

.

3.3.3. Non-Zero AC Coefficients Sign Transformation

After quantization, the non-zero AC coefficients are identified and extracted. The encryption effect is achieved by altering the order of these non-zero AC coefficients using the pseudo-random key stream

, as well as modifying their values through sign transformation. This step preserves the distribution of zero-valued AC coefficients, ensuring that the length of the encrypted bitstream remains largely unaffected. The transformation is computed as follows, as defined in Equation (6):

where

represents the value of the

-th non-zero AC coefficient. Once the sign transformation of the non-zero AC coefficients is completed, these coefficients are restored to their original positions. This ensures that the structural integrity of the data is maintained while achieving the desired encryption effect.
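A minimal sketch of a key-controlled sign transformation is given below. It assumes that one key bit is consumed per non-zero AC coefficient and that a bit of 1 flips the sign; this is an illustrative reading, since Equation (6) is not reproduced here, and the reordering of the non-zero coefficients mentioned above is omitted. The function name is hypothetical.

```python
def transform_ac_signs(block_zigzag, key_bits):
    """Flip signs of non-zero AC coefficients according to the key bits.

    block_zigzag holds 64 quantized coefficients in zigzag order; index 0
    is the DC coefficient and is left untouched. Zero-valued coefficients
    keep their positions, so run lengths are unchanged.
    """
    out = list(block_zigzag)
    k = 0
    for i in range(1, len(out)):
        if out[i] != 0:
            if key_bits[k]:             # ASSUMPTION: bit 1 means "negate"
                out[i] = -out[i]
            k += 1                      # one key bit per non-zero coefficient
    return out

demo_block = [52, 5, 0, -3, 0, 0, 2] + [0] * 57
demo_cipher = transform_ac_signs(demo_block, [1, 0, 1])
```

Because negation is its own inverse, applying the same function with the same key bits restores the original coefficients. Since only signs change, the magnitudes and the positions of the zeros are identical before and after the transformation.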

3.4. Entropy Coding Stage Encryption

The primary objective of the encryption operation in the entropy encoding stage is to strengthen the correlation removal capability of the encryption scheme while preserving format consistency. Although the encryption algorithms in the first two stages contribute to security, they do not effectively eliminate the correlation among 8 × 8 blocks. To address this limitation, an RSV (Run/Size/Value) recombination strategy is implemented during the entropy coding stage. This strategy enhances the decorrelation of the data, further improving the security and robustness of the encryption scheme.

Prior to entering the entropy encoding stage, zigzag scanning is applied to each 8 × 8 block to separate the DC and AC coefficients. For the DC coefficients, Differential Pulse Code Modulation (DPCM) is employed. For the AC coefficients, Run Length Coding (RLC) is utilized, converting them into data pairs known as RSV (Run/Size/Value) pairs. These RSV pairs are then subjected to Huffman encoding to generate variable-length codes. To enhance security, we propose encrypting these RSV pairs through an RSV pair recombination algorithm, and the specific realization is given in Algorithm 2. This recombination process is governed by the pseudo-random key sequence Z. The steps of the algorithm are outlined as follows:

Step 1: Blocks whose AC coefficients are all zero are referred to as zero-value blocks, meaning their RSV pairs consist solely of the end identifier (0, 0). Blocks containing non-zero AC coefficients are termed non-zero blocks, indicating the presence of non-zero RSV pairs. For non-zero blocks, all end identifiers are removed.

Step 2: All blocks are evenly divided into two parts, denoted as B1 and B2, with each block in B1 corresponding sequentially to a block in B2.

Step 3: A block from B1 and its corresponding block from B2 are sequentially processed, and the following conditions are evaluated:

1. If both blocks are zero-valued blocks, their values remain unchanged.

2. If one block is zero-valued and the other is non-zero-valued, the two blocks are swapped. Subsequently, all RSV pairs of the new non-zero block undergo cyclic shifting to disrupt their original distribution pattern. The shift amount is determined by the corresponding key sequence Z.

3. If both blocks are non-zero blocks, the number of RSV pairs in the first block is denoted as RSVnum. All RSV pairs from both blocks are concatenated and cyclically shifted based on the key sequence Z. The difference between the number of RSV pairs newly assigned to the first block and RSVnum is recorded as T. Initially, the division is performed according to the original number of RSV pairs in each block, with T = 0. Since the number of AC coefficients in each block cannot exceed 63 (to maintain format compatibility and avoid entropy decoding issues), the number of AC coefficients in the newly assigned blocks must be verified. If the number of AC coefficients in either block exceeds this limit, the division point is adjusted as follows:

(1) If neither block exceeds the limit, the division is performed at the original cutting point, and T = 0.

(2) If the first block exceeds the limit, the division point is shifted left until both blocks are within the acceptable range. The shift amount is recorded as a negative value.

(3) If the second block exceeds the limit, the division point is shifted right until both blocks are within the acceptable range. The shift amount is recorded as a positive value.

The value of T is converted into an RSV pair, and the corresponding relationship between the T value and the RSV pair is established using a variable-length integer coding table, as shown in Figure 4. The RSV pair is appended to the end of the first block. The range check requires that the value not exceed 62.

To better illustrate the RSV pair recombination process, a simple example is provided in Figure 5. In this example, B1 and B2 are two non-zero AC blocks with the original end identifier removed. Loc1 and Loc2 represent the results of the RSV pair recombination.

Step 4: Repeat the steps outlined in Step 3 until all blocks in B1 and B2 are complete.

Step 5: Add end identifiers to all non-zero blocks after recombination. If the number of AC coefficients in the block reaches the upper limit, no additional end identifiers will be added.

Step 6: Rearrange the positions of all blocks in a circular manner, with the displacement controlled by the key sequence Z. This rearrangement only moves the RSV pairs within the block, while the position of the encoded DC coefficient remains unchanged. The RSV recombination strategy is shown in Algorithm 2.

| Algorithm 2 RSV recombination strategy |

| Input: All RSV pairs; Z. |

| Output: The result of RSV recombination. |

| 1: | Remove the end identifier of each block, except for blocks that consist solely of (0, 0); |

| 2: | The adjacent blocks are denoted B1 and B2; |

| 3: | if both B1 and B2 are zero-value blocks then |

| 4: | Remain unchanged; |

| 5: | else if either B1 or B2 is a zero-value block then |

| 6: | Swap B1 and B2 and concatenate them in Lac; |

| 7: | All RSV pairs of Lac are shifted cyclically according to Z; |

| 8: | else |

| 9: | Calculate the number of RSV pairs in B1, denoted as RSVnum; |

| 10: | Concatenate B1 and B2 in Lac; |

| 11: | All RSV pairs of Lac are shifted cyclically according to Z; |

| 12: | Fn ← The number of RSV pairs in the first block; |

| 13: | Sn ← The number of RSV pairs in the second block; |

| 14: | times ← Number of moves; |

| 15: | if Fn ≤ 63 and Sn ≤ 63 then |

| 16: | Split Lac using original point; |

| 17: | T ← The difference between the number of RSV pairs in the first block and RSVnum; |

| 18: | T ← 0; |

| 19: | The RSV pair corresponding to T is appended to the end of the first block; |

| 20: | else if Fn > 63 then |

| 21: | Split point is relocated left until Fn ≤ 63; |

| 22: | T ← times |

| 23: | The RSV pair corresponding to T is appended to the end of the first block; |

| 24: | else if Sn > 63 then |

| 25: | Split point is relocated right until Sn ≤ 63; |

| 26: | T ← times |

| 27: | The RSV pair corresponding to T is appended to the end of the first block; |

| 28: | Insert the end identifier in each block; |

| 29: | All RSV pairs in each block are shifted cyclically according to Z. |
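The case of two non-zero blocks can be sketched as follows, with RSV pairs stored as (run, size, value) triples. This is a simplified illustration under stated assumptions: the number of AC coefficients covered by a block is taken as the sum of run + 1 over its pairs, the cyclic shift is a left rotation by a key-derived amount `shift`, the default limit of 62 is one reading of the range check, and T is returned separately rather than encoded as an RSV pair via the table in Figure 4. All names are hypothetical.

```python
def ac_count(pairs):
    """Number of AC coefficients covered by a list of (run, size, value) triples."""
    return sum(run + 1 for run, _size, _value in pairs)

def recombine(b1, b2, shift, limit=62):
    """Concatenate, rotate, and re-split two non-zero blocks; return (new1, new2, T)."""
    rsv_num = len(b1)
    lac = b1 + b2
    k = shift % len(lac)
    lac = lac[k:] + lac[:k]                     # left cyclic shift by k pairs
    cut = rsv_num                               # start from the original split point
    while cut > 0 and ac_count(lac[:cut]) > limit:
        cut -= 1                                # first block too long: move left
    while cut < len(lac) and ac_count(lac[cut:]) > limit:
        cut += 1                                # second block too long: move right
    if ac_count(lac[:cut]) > limit or ac_count(lac[cut:]) > limit:
        raise ValueError("no split point keeps both blocks within the limit")
    return lac[:cut], lac[cut:], cut - rsv_num  # T < 0 means left, T > 0 means right

def restore(new1, new2, shift, t):
    """Invert recombine using the same key-derived shift and the recorded T."""
    rsv_num = len(new1) - t
    lac = new1 + new2
    k = shift % len(lac)
    lac = lac[len(lac) - k:] + lac[:len(lac) - k]   # right cyclic shift by k pairs
    return lac[:rsv_num], lac[rsv_num:]

demo_b1 = [(0, 3, 5), (2, 2, -3)]
demo_b2 = [(1, 1, 1), (0, 2, 2), (5, 1, -1)]
loc1, loc2, t_value = recombine(demo_b1, demo_b2, shift=2, limit=9)
```

In this example the second block would cover ten coefficients at the original split point, which exceeds the deliberately small limit of 9, so the split point moves right by one pair and T = 1. Recording T is what lets the decoder recover the original pair count of the first block and undo the rotation.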