1. Introduction

Understanding equilibration in quantum systems, that is, how a system evolves from an initial pure state to an apparent equilibrium, is a central problem in the foundations of quantum mechanics. Traditionally, this process is linked to the system reaching a state of maximal disorder or entropy. But what happens to the complexity of the quantum state during this process? This work explores whether a measure of complexity can serve as a meaningful quantifier of equilibration, focusing on systems defined by a Hamiltonian H, an observable O, and a simple, zero-entropy initial state. The question that drives our investigation is the following: can a complexity measure quantify how much a system has equilibrated with respect to a given observable?

The foundational question of how macroscopic irreversibility emerges from time-symmetric quantum dynamics dates back to Boltzmann and has shaped the development of statistical mechanics [1,2,3,4,5]. In modern quantum theory, the puzzle reemerges in the form of understanding how closed quantum systems equilibrate [6,7]. Recent studies have shown that, despite unitary evolution, expectation values of observables can relax to long-time averages that exhibit an observable-dependent thermal equilibrium [8,9,10]. This has led to the concept of Observable Equilibration, which emphasizes the classical statistical behavior of measurement outcomes rather than the full quantum state [11,12,13]. Recent results further support this perspective by showing that the emergence of a second law in isolated quantum systems can be captured through the statistical properties of observables [14]. Furthermore, observable equilibrium states are, on average, diagonal in the Hamiltonian eigenbasis, lacking coherence [15,16]. This observation invites a resource-theoretic interpretation: on average, coherence, like free energy, becomes a resource consumed in the equilibration process [17,18]. This link aligns with the association of thermodynamic irreversibility with coherence depletion [19]. In this work, we revisit this concept through the lens of statistical complexity. The classical statistical complexity measure introduced by López-Ruiz et al. quantifies structure in probability distributions by combining entropy and deviation from microcanonical state [20]. This idea has been extended to quantum systems through the Quantum Statistical Complexity Measure (QSCM), which signals transitions between ordered and disordered quantum states [21].

We propose a complexity-based approach to study equilibration that incorporates the statistical structure of observable outcomes. We aim to understand whether a measure of complexity can indicate that a system has equilibrated, and whether it can capture the subtle transition from quantum coherence to classical equilibrium. The formalism of observable equilibration builds on this by examining the long-time behavior of expectation values and probability distributions associated with physical observables. These distributions can exhibit relaxation, transient oscillations, and effective stabilization features, suggesting a rich structure in the system’s evolution.

These features are studied from a probabilistic and operational perspective. While the global quantum state remains pure throughout unitary evolution, the observable statistics, particularly those tied to physical measurements such as the total magnetization per spin, reveal information-theoretic signatures of equilibration. Based on the results obtained in Ref. [14], we propose a classical statistical complexity measure, named the Observable Equilibration Complexity Measure (OECM), built from the observable entropy and the distance to the equilibrium distribution. This measure quantifies both the structural and the temporal informational content of the state with respect to a chosen observable.

Numerical analysis confirms that the proposed Observable Equilibration Complexity Measure effectively captures the system’s equilibration behavior. We simulate a non-integrable Ising chain of spin-1/2 particles initialized in three distinct pure states, each exhibiting a different dynamical regime depending on its effective dimension: the fully polarized up state (Up), the fully polarized down state (Dw), and the alternating paramagnetic configuration (Pm). These configurations allow us to explore a range of equilibration scenarios across different effective dimensions.

For initial configurations with higher effective dimensions, such as the Dw and Pm states, we observe a gradual and sustained decay of the Observable Equilibration Complexity Measure towards zero, in agreement with theoretical predictions. This behavior reflects the system’s enhanced capacity to explore a larger portion of the Hilbert space, which facilitates equilibration. Conversely, the Up state, characterized by a significantly lower effective dimension, exhibits quasi-periodic dynamics with limited delocalization across the energy eigenbasis. As a result, the Observable Equilibration Complexity Measure displays a comparatively faster decay, indicative of a less complex trajectory. These numerical results not only corroborate the analytical bounds derived in this work but also underscore the utility of statistical complexity measures as a diagnostic tool for distinguishing between complex equilibration dynamics and simpler, coherence-preserving evolutions in the transition from initially coherent quantum states to classical-like equilibrium behavior.

The paper is structured as follows: In Section 2, we present the mathematical setup and define equilibrium in terms of dephased states. Section 3 introduces the statistical complexity measures, defines the Observable Equilibration Complexity Measure, and provides bounds on their evolution during equilibration. Section 4 illustrates these concepts numerically using a non-integrable Ising-like spin-chain model, and Section 5 offers final remarks and open questions. Finally, Appendix A complements the discussion by providing a numerical evaluation of the theoretical upper bounds for the observable complexity measures, offering further insight into their behavior and limitations in practical scenarios.

2. Framework

We consider a finite-dimensional quantum system of dimension $d$, governed by a Hamiltonian $H$ with spectral decomposition

$$H = \sum_{k=1}^{n} E_k P_k, \tag{1}$$

where $n$ is the number of distinct eigenvalues (with $n \le d$), and $d_k = \mathrm{tr}(P_k)$ corresponds to the degeneracy of the energy level $E_k$. The total system dimension satisfies $\sum_{k=1}^{n} d_k = d$. The system evolves according to the unitary dynamics generated by $H$, described by

$$U(t) = e^{-iHt/\hbar}. \tag{2}$$

Given an initial state $\rho_0$, the evolved state at time $t$ follows

$$\rho(t) = U(t)\,\rho_0\,U^{\dagger}(t), \tag{3}$$

which solves the Schrödinger equation $i\hbar\,\partial_t \rho(t) = [H, \rho(t)]$. The expectation values of observables $O$, decomposed as $O = \sum_{l=1}^{r} o_l \Pi_l$, with $r$ the rank of $O$, evolve as

$$\langle O \rangle_t = \mathrm{tr}\big(O\,\rho(t)\big) = \mathrm{tr}\big(U^{\dagger}(t)\,O\,U(t)\,\rho_0\big). \tag{4}$$

The latter form of Equation (4) reveals the evolution in the Heisenberg picture. An important quantity in the study of quantum equilibration is the effective dimension of the initial state with respect to the energy statistics of the Hamiltonian. This quantity is defined as

$$d_{\mathrm{eff}} = \frac{1}{\sum_{k=1}^{n} \big[\mathrm{tr}(P_k\,\rho_0)\big]^2}. \tag{5}$$

The effective dimension, $d_{\mathrm{eff}}$, is a measure of how the initial state $\rho_0$ is spread across the eigenstates of the Hamiltonian. Specifically, it quantifies the degree to which the state is delocalized in the energy eigenbasis of the system. A high value of $d_{\mathrm{eff}}$ implies that the initial state occupies many energy levels, whereas a low value indicates that the initial state is concentrated in a smaller number of energy eigenstates. This quantity is particularly relevant when analyzing the approach to equilibrium, as systems with a large effective dimension tend to exhibit faster relaxation to equilibrium due to the greater number of accessible states. Conversely, systems with a small effective dimension may exhibit slower equilibration, as fewer energy levels are involved in the evolution [8].

For a function $f(t)$ defined over a finite time interval $[0, T]$, we define the time average of $f$ as

$$\langle f \rangle_T = \frac{1}{T}\int_0^T f(t)\,dt, \tag{6}$$

which represents the average value of the function over the time interval $[0, T]$. This quantity is useful for quantifying the behavior of a system over a finite period, providing an estimate of the long-term behavior for systems that exhibit periodic or transient dynamics. The infinite-time average is defined as the limit of the time average as $T \to \infty$, given by

$$\langle f \rangle_\infty = \lim_{T\to\infty} \frac{1}{T}\int_0^T f(t)\,dt. \tag{7}$$

This quantity describes the steady-state behavior of the system, where $f(t)$ approaches a constant value as time progresses. The infinite-time average is particularly important when studying equilibrium states, as it represents the asymptotic value that observables reach after a sufficiently long time, assuming the system has equilibrated.
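As a minimal numerical sketch of the two quantities introduced above, the following Python snippet evaluates an effective dimension from a list of energy-level populations and estimates a finite-time average with a midpoint rule. The function names, the example populations, and the test signal cos(t) are illustrative assumptions and are not taken from the original text.

```python
import math

def effective_dimension(populations):
    """Inverse participation ratio 1 / sum_k p_k^2 of the energy populations."""
    total = sum(populations)
    if not math.isclose(total, 1.0, abs_tol=1e-9):
        raise ValueError("populations must sum to 1")
    return 1.0 / sum(p * p for p in populations)

def time_average(f, T, steps=10_000):
    """Midpoint-rule estimate of (1/T) * integral_0^T f(t) dt."""
    dt = T / steps
    return sum(f((i + 0.5) * dt) for i in range(steps)) * dt / T

# Hypothetical populations: one occupied level versus four equally occupied levels.
d_localized = effective_dimension([1.0, 0.0, 0.0, 0.0])
d_spread = effective_dimension([0.25, 0.25, 0.25, 0.25])

# A purely oscillating signal: its time average shrinks as the window T grows.
avg_short = time_average(math.cos, T=1.0)
avg_long = time_average(math.cos, T=200.0)
```

In this toy setting the localized state has an effective dimension of one while the evenly spread state reaches the number of occupied levels, and the average of the oscillating signal decays roughly as 1/T.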

2.1. Equilibration of Observables

In isolated quantum systems, equilibration refers to the process in which the expectation values of observables stabilize around their long-time averages. This behavior arises under the unitary evolution of the system, and the dynamics is determined by the triple $(H, \rho_0, O)$, where $H$ is a non-integrable Hamiltonian, $\rho_0$ is the initial state, and $O$ is the observable.

The equilibrium state, denoted by $\omega$, represents the long-time average state of the system. It is obtained by taking the time integral of the system’s state $\rho(t)$ over the interval $[0, T]$ and then letting $T \to \infty$. Mathematically, the equilibrium state is expressed as

$$\omega = \lim_{T\to\infty} \frac{1}{T}\int_0^T \rho(t)\,dt. \tag{8}$$

It can be shown [9] that the equilibrium state $\omega$ is the dephased version of the initial state $\rho_0$ in the Hamiltonian eigenbasis. This means that $\omega$ is a diagonal matrix in the eigenbasis of the Hamiltonian, and it can be written as

$$\omega = \sum_{k=1}^{n} P_k\,\rho_0\,P_k, \tag{9}$$

where $P_k$ are the projectors onto the eigenstates of the Hamiltonian. This dephasing process is a key feature of equilibration, as it effectively removes any off-diagonal coherence in the energy eigenbasis, thus leading to a state where all observable quantities are stationary.
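The dephasing operation can be illustrated with a small sketch in Python. It assumes the density matrix is already written in an energy eigenbasis and stored as a nested list; the three-level example, with one non-degenerate and one doubly degenerate level, is hypothetical and chosen only so that the numbers are easy to check by hand.

```python
def dephase(rho, energies, tol=1e-12):
    """Zero every matrix element of rho that couples two different energies.

    rho is given in an energy eigenbasis as a nested list; energies[i] is the
    eigenvalue of basis vector i. Degenerate levels keep their internal block.
    """
    n = len(energies)
    return [
        [rho[i][j] if abs(energies[i] - energies[j]) <= tol else 0.0
         for j in range(n)]
        for i in range(n)
    ]

def purity(rho):
    """tr(rho^2) of a Hermitian matrix, computed as the sum of |rho_ij|^2."""
    return sum(abs(x) ** 2 for row in rho for x in row)

# Hypothetical example: equal superposition of three basis states,
# where the last two basis states share the same energy.
rho0 = [[1.0 / 3.0] * 3 for _ in range(3)]
energies = [0.0, 1.0, 1.0]

omega = dephase(rho0, energies)

# Two ways of obtaining the effective dimension of this pure initial state.
d_eff_from_omega = 1.0 / purity(omega)
populations = [omega[0][0], omega[1][1] + omega[2][2]]
d_eff_from_populations = 1.0 / sum(p * p for p in populations)
```

For this pure initial state both routes give the same effective dimension, 9/5, while the coherence inside the degenerate level survives the dephasing.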

The concept of effective dimension is intimately related to the equilibrium state. The effective dimension quantifies how widely the initial state is distributed over the energy eigenstates of the Hamiltonian. It can be equivalently expressed as

$$d_{\mathrm{eff}} = \frac{1}{\mathrm{tr}(\omega^2)}. \tag{10}$$

According to Reimann and Kastner [

22], under suitable conditions, the time-averaged deviation of an observable from its equilibrium expectation is bounded by

where

denotes the usual operator norm, and

captures properties of the energy spectrum

with

being the maximal number of distinct energy gaps within an interval

[

22].

To characterize observable equilibration, one may define a time-dependent probability vector

associated with a complete set of measurement operators

. Each component

represents the probability of obtaining outcome

l at time

t and is given by

where

are measurement operators, typically projectors corresponding to a specific observable. The infinite-time average of this probability distribution is defined as

This quantity defines the long-term distribution of measurement outcomes and captures the steady-state behavior of the system as it reaches equilibrium.

Following [

14], it can be shown that for the on-time-average in the interval

, the distance between

and

in the 1-norm satisfies

with

(

i.e., the dimension of

), and

2.2. Classical Statistical Complexity Measures

Let

be the probability vector of a random variable that takes

r possible values. The Classical Statistical Complexity Measure of

is defined as [

20]

where

is the observable Shannon entropy and

, which quantifies the deviation from the uniform distribution

.

Order and disorder represent two fundamental regimes in the study of physical and informational systems. The system’s configuration is entirely predictable in perfectly ordered states, such as a crystal lattice, leading to minimal entropy. On the other hand, maximal disorder, exemplified by an ideal gas in thermal equilibrium, is characterized by uniform probability distributions over all accessible microstates, maximizing entropy. These extreme cases are straightforward to describe, as there is either complete structure or complete randomness.

The classical complexity measure is designed to capture the richness of configurations that exist between these two extremes of order and disorder. When the system is perfectly ordered, the entropy term vanishes, so the complexity is zero. Similarly, when the system is maximally disordered on this scale, the disequilibrium term vanishes, again leading to zero complexity. Nontrivial complexity emerges only in intermediate configurations.
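The vanishing of the complexity at both extremes can be checked with a short Python sketch. It assumes the product form of entropy and disequilibrium, with the disequilibrium taken as the sum of squared deviations from the uniform distribution, as in the original proposal of López-Ruiz et al.; the normalization and distance used in this paper may differ, and the example distributions are illustrative.

```python
import math

def shannon_entropy(p):
    """Shannon entropy (natural logarithm) of a probability vector."""
    return sum(-x * math.log(x) for x in p if x > 0.0)

def disequilibrium(p):
    """Sum of squared deviations from the uniform distribution (assumed form)."""
    u = 1.0 / len(p)
    return sum((x - u) ** 2 for x in p)

def statistical_complexity(p):
    """Product of entropy and disequilibrium."""
    return shannon_entropy(p) * disequilibrium(p)

ordered = statistical_complexity([1.0, 0.0, 0.0, 0.0])       # entropy vanishes
disordered = statistical_complexity([0.25, 0.25, 0.25, 0.25])  # disequilibrium vanishes
intermediate = statistical_complexity([0.7, 0.1, 0.1, 0.1])    # both factors nonzero
```

Only the intermediate distribution yields a strictly positive value, which is the behavior described in the paragraph above.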

The Classical Statistical Complexity Measure (CSCM) is inherently dependent on both the descriptive framework adopted for a system and the scale of observation [20]. Defined as a functional of a probability distribution, this measure is closely associated with the analysis of time series generated by classical dynamical systems. Its formulation is based on two essential components. The first component is an entropy function that quantifies the informational content of the system. While the observable Shannon entropy is conventionally employed for this purpose, other generalized entropy measures may also be utilized, such as Tsallis entropy [23], Escort–Tsallis [24], or Rényi entropy [25]. The second fundamental element is a distance measure defined on the space of probability distributions, designed to quantify the disequilibrium relative to a reference distribution, typically the microcanonical distribution. Various measures can serve this role, including the Euclidean distance (or, more generally, any p-norm [26]), the Bhattacharyya distance [27], and Wootters’ distance [28]. Additionally, statistical divergences such as the classical relative entropy (also known as the Kullback-Leibler divergence [29]), the Hellinger distance [30], and the Jensen–Shannon divergence [31,32] may be employed. It is worth noting that several generalized versions of complexity measures have been proposed in recent years, and these advancements have proven to be highly valuable in various areas of classical information theory [33,34,35,36,37,38,39,40,41,42,43,44,45,46,47].

The Quantum Statistical Complexity Measure (QSCM) is defined for a quantum state $\rho$ over an $N$-dimensional Hilbert space as the following functional of $\rho$ (see Ref. [21])

$$\mathcal{C}(\rho) = S(\rho)\, D(\rho, \mathcal{I}), \tag{15}$$

where $S(\rho)$ is the von Neumann entropy, and $D(\rho, \mathcal{I})$ is a distinguishability quantity (usually the trace distance) between the state $\rho$ and the normalized maximally mixed state $\mathcal{I} = \mathbb{1}/N$. Since the system evolves under closed unitary dynamics, any initial pure state remains pure at all times, and consequently, its von Neumann entropy vanishes. It then follows directly from Equation (15) that the quantum statistical complexity measure becomes identically zero, rendering it uninformative in this closed-system context [21]. Therefore, we restrict our analysis to the classical measure defined in Equation (14).

3. Observable Equilibration Complexity Measure

Our primary interest lies in quantifying the degree of order and disorder relative to the equilibrium state $\omega$. Whereas typical complexity measures use the maximally mixed state as a reference, we redefine the classical statistical complexity with the equilibrium state as the reference instead. This approach allows us to capture deviations not only from uniformity but also from the stabilized state that the system approaches over time.

The Observable Equilibration Complexity Measure (OECM), as stated in Definition 1, is designed to quantify the interplay between disorder and deviation from equilibrium (order) in the probability distribution associated with a quantum observable during its dynamical evolution. In this framework, a regime of minimal disorder corresponds to a highly localized probability vector in the eigenbasis of the observable, where the system exhibits minimal uncertainty. Consequently, the observable entropy vanishes [14], and the observable equilibration complexity measure evaluates to zero, reflecting the absence of structural richness or dynamical tension.

In contrast, in the regime of minimal order, characterized by high uncertainty in the outcomes of the observable, the time-averaged effective probability vector converges towards the equilibrium distribution. Although the entropy may be significant in this limit, the deviation from equilibrium, quantified by a norm of the difference between the two distributions, is small. Thus, the observable equilibration complexity measure is also small, as the system lacks any persistent dynamical structure differentiating it from thermal equilibrium.

A peak in complexity emerges in intermediate dynamical regimes, where the system is neither fully ordered nor completely equilibrated. In these states, the distribution is sufficiently delocalized to produce nonzero entropy, while still retaining a noticeable departure from equilibrium. In such cases, the OECM captures the transient coexistence of informational richness and nonequilibrium structure, thereby identifying regions of meaningful dynamical organization in the system’s evolution.

Definition 1 (Observable Equilibration Complexity Measure (OECM))

. The Observable Equilibration Complexity quantifies the extent to which the probability distribution associated with an observable deviates from its equilibrium distribution , and it is defined aswhere is the observable entropy, given by , is the probability vector associated with the observable, that is, , and is the infinite-time average distribution as defined in Equation (12). Within this formulation, the concept of order is thus operationally tied to the localization of

, while disorder is associated with delocalization and convergence towards equilibrium. The measure

captures the dynamically relevant structures that arise in the intermediate regime between these two extremes, quantifying the degree to which the observable’s distribution both exhibits uncertainty and retains memory of its initial conditions. However, it is essential to note that even when the observable exhibits substantial oscillations around the equilibrium distribution, as occurs in regular or quasi-periodic dynamics, it leads to a reduced effective complexity. In such cases, despite the absence of full equilibration, the system’s dynamics are less complex, as they remain confined to a limited subset of the phase space and follow predictable, structured trajectories. Conversely, for the system to effectively equilibrate, it must sufficiently explore its accessible phase space [

14], allowing

to progressively sample a broader set of configurations and thereby approach

. The Observable Equilibration Complexity Measure thus serves as a quantitative diagnostic for tracking this equilibration process through the joint analysis of entropy production and disequilibrium decay, while also distinguishing between complex, irregular dynamics and simpler, quasi-periodic behaviors.
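To make the qualitative behavior of the OECM concrete, the sketch below evaluates a complexity of the assumed form "observable entropy times distance to the equilibrium distribution". The product form, the use of the 1-norm as the distance, the natural logarithm, and the four-outcome distributions are all assumptions made for illustration; the precise definition is the one given in Definition 1.

```python
import math

def shannon_entropy(p):
    """Shannon entropy (natural logarithm) of a probability vector."""
    return sum(-x * math.log(x) for x in p if x > 0.0)

def l1_distance(p, q):
    """1-norm distance between two probability vectors of equal length."""
    return sum(abs(a - b) for a, b in zip(p, q))

def oecm(p_t, p_eq):
    """Assumed OECM form: entropy of p_t times its 1-norm distance to p_eq."""
    return shannon_entropy(p_t) * l1_distance(p_t, p_eq)

# Hypothetical equilibrium distribution over four measurement outcomes.
p_eq = [0.1, 0.4, 0.4, 0.1]

c_localized = oecm([1.0, 0.0, 0.0, 0.0], p_eq)   # zero entropy
c_equilibrated = oecm(p_eq, p_eq)                # zero distance
c_transient = oecm([0.4, 0.3, 0.2, 0.1], p_eq)   # both factors nonzero
```

The measure vanishes for the fully localized distribution and at equilibrium, and is positive only for the transient distribution that is both uncertain and still distinguishable from equilibrium.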

As discussed in the introduction, our goal is to define a

bona fide measure to characterize and quantify how much a given observable

O equilibrates under the dynamics induced by a Hamiltonian

H. Assuming a past hypothesis where

, the observable equilibration complexity is expected to approach zero as the system converges towards equilibrium. Consequently, over long timescales, the time average of the complexity should tend to zero, since, on average,

, as indicated by the bound in Equation (

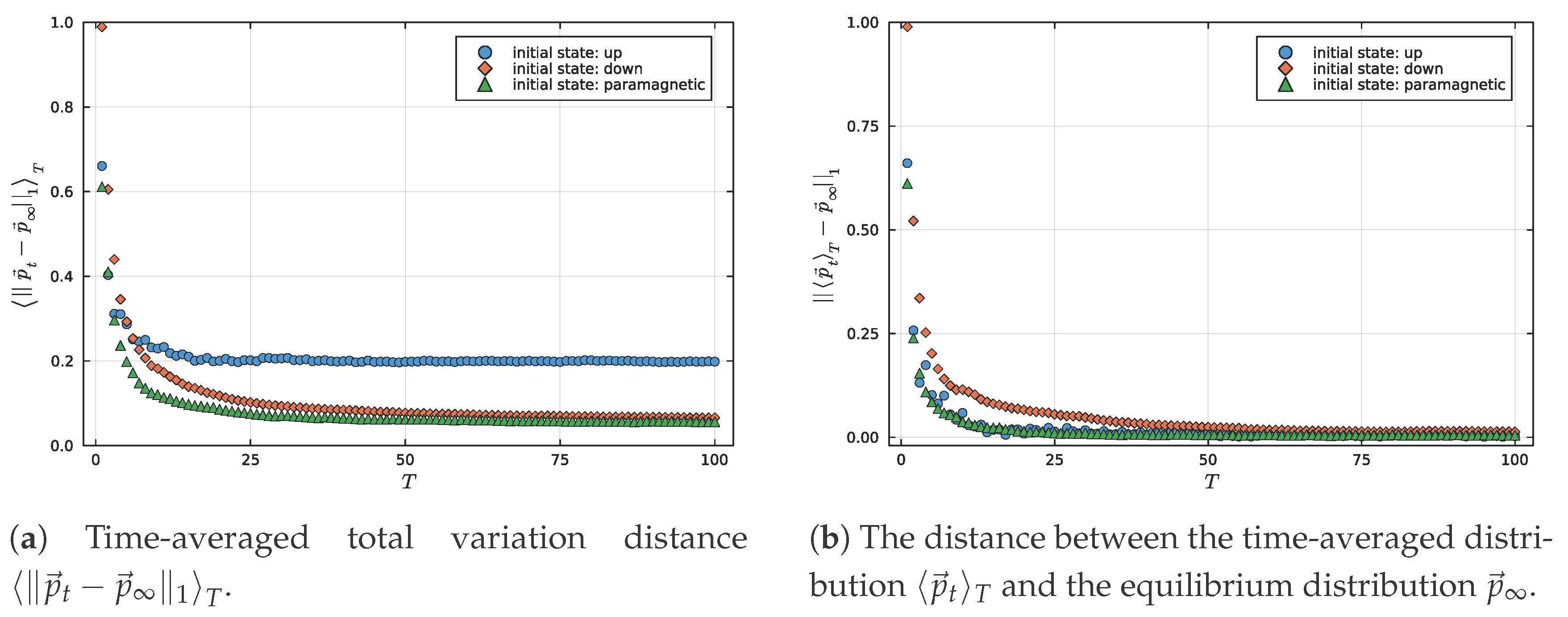

13). However, as illustrated in

Figure 1a, this is not always the case, since

. On the other hand, in

Figure 1b, we can observe that

. This bound is a fundamental result in mathematical analysis, known as Minkowski’s inequality, which extends the triangular inequality to integrals [

48]. As one can notice, this inequality imposes a limitation on how small

can be, and, as shown in Lemma 1, this quantity is bounded from below by

.

Lemma 1 (Variance bound on time-averaged deviation)

. Let be a time-dependent quantum state on a finite-dimensional Hilbert space , and let ω be its fixed reference state (e.g., the time-averaged state of ). Let O be a fixed Hermitian observable. Define the time-averaged expectation value of O over the interval as defined in Equation (6), the following inequality holds Proof. Equation (

17) is a direct consequence of Minkowski’s inequality. The corresponding temporal variance of a given Schur-convex function

over the interval

is

. □
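The gap between pointwise and on-average equilibration can be seen in a toy example. The Python sketch below uses a hypothetical two-outcome distribution that oscillates forever around the uniform distribution, and compares the distance of the time-averaged distribution to equilibrium with the time average of the instantaneous distance. The oscillating model, its amplitude, and the averaging window are illustrative assumptions, not the system studied in this paper.

```python
import math

A = 0.3            # oscillation amplitude (illustrative)
P_EQ = (0.5, 0.5)  # equilibrium distribution of the toy model

def p(t):
    """Two-outcome distribution oscillating around P_EQ without damping."""
    return (0.5 + A * math.cos(t), 0.5 - A * math.cos(t))

def l1(p1, p2):
    """1-norm distance between two probability vectors."""
    return sum(abs(a - b) for a, b in zip(p1, p2))

def averages(T, steps=20_000):
    """Return (distance of the averaged distribution, average of the distance)."""
    dt = T / steps
    avg_p = [0.0, 0.0]
    avg_dist = 0.0
    for i in range(steps):
        pt = p((i + 0.5) * dt)
        avg_p[0] += pt[0] / steps
        avg_p[1] += pt[1] / steps
        avg_dist += l1(pt, P_EQ) / steps
    return l1(avg_p, P_EQ), avg_dist

dist_of_average, average_of_dist = averages(T=200.0)
```

Here the averaged distribution is already very close to equilibrium, whereas the average instantaneous distance stays near 4A/π, so the first quantity is bounded by the second but can be much smaller.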

To better understand how OECM behaves over time, we now derive an upper bound on its time average. This bound captures the interplay between the entropic content of measurement outcomes and their deviation from equilibrium. In particular, we show that the time-averaged complexity is controlled by the effective dimension of the initial state, the rank r of the observable, and a function that encodes the system’s dynamical timescales [49]. Although this result does not yield a tight bound (since the observable entropy is not compared with its maximum over the constrained support), it still provides a meaningful characterization of the extent to which equilibration suppresses both uncertainty and distinguishability from the equilibrium distribution. The bound is stated precisely in the following theorem, and Appendix A evaluates it numerically.

Theorem 1 (OECM Upper Bound)

. Let be the probability distribution over the eigenbasis of a quantum observable O at time t, and let be its equilibrium distribution. Define the Observable Equilibration Complexity Measure, that is, (OECM), aswhere is the observable entropy. Then, the time-averaged observable equilibration complexity satisfies the upper boundwhere r is the rank of the observable O, is the effective dimension of the initial state, and is defined in (11). Proof. By applying the Cauchy-Schwarz inequality to the time-averaged observable equilibration complexity

Since the observable entropy

, we have

From the equilibration bound derived in Appendix 1 of [

14], one obtains

Substituting both results into the previous inequality, we find

This completes the proof. □

This approach results in a bound that is not particularly restrictive, since the rank of the observable

r does not impose a strong limitation on the equilibration complexity. Therefore, while the bound is useful for estimating the system’s behavior, it does not provide a precise and tight description of the equilibration process, as the entropy associated with the observable is not the maximum possible for all states. The bound described in Equation (

23) holds for large times

T, specifically for

, where the function

, that captures properties of the energy spectrum

, with

being the maximal number of distinct energy gaps within an interval

[

22]. As discussed in Ref. [

49], in this asymptotic regime, the bound simplifies to

revealing a direct inverse square-root dependence on the effective dimension. This behavior underscores the role of quantum state delocalization in suppressing observable complexity at equilibrium. A detailed numerical analysis of this behavior is presented in

Appendix A.

In the study of quantum equilibration, it is often assumed that the time-averaged deviation

vanishes as time increases, indicating convergence to equilibrium. However, this assumption generally holds only in the thermodynamic limit

. In finite systems, especially those exhibiting slow or incomplete equilibration, the instantaneous probability distribution

may not converge to the equilibrium distribution

, as shown in

Figure 1a. Nevertheless, the quantity

tends to zero and offers a robust alternative for characterizing equilibration. Building on this, we introduce the Time-Averaged Observable Equilibration Complexity Measure,

which quantifies how far the system remains from equilibrium by combining the observable entropy

with the trace distance

, as seen in

Figure 1b. This measure vanishes when the system is either in a pure measurement outcome or has equilibrated on average, and it captures the interplay between coherence, uncertainty, and relaxation dynamics in a compact, physically meaningful way.

In Definition 2, we present the Time-Average Observable Equilibration Complexity Measure, which quantifies this evolution by combining the observable Shannon entropy of the time-averaged probability vector and the distance to the equilibrium distribution. This measure captures the complexity of the equilibration process, being zero when the system is in a pure state or has fully equilibrated.

Definition 2 (Time-Average Observable Equilibration Complexity Measure)

. Considering, in a time interval , the time average probability vector with elements , such that . We can define the Time-Average Observable Equilibration Complexity Measure

as which is zero for that are pure probability vectors or when they approach equilibrium . In

Appendix A, we discuss the numerical bound for this measure; however, Definition 2 can be viewed as a particular instance of Definition 1. Within this framework, it satisfies the upper bound presented in Equation (

23) of Theorem 1.

The Time-Average Observable Equilibration Complexity Measure converges as converges to zero. Now, we compute a saturation bound for the probability of approaching in the limit of . Theorem 2 provides a probabilistic bound on the deviation between the time-averaged measurement statistics and their equilibrium values. It shows that large deviations are unlikely when the effective dimension is high.

Theorem 2 (Equilibrium Deviation Bound)

. Consider a random initial state drawn from an ensemble with an effective dimension , evolving under a Hamiltonian with non-degenerate energy gaps. For anywhere r is the number of measurement outcomes. Proof. For each component

, Jensen’s inequality yields

Taking the expectation over pure states

, where

is sampled according to the Haar measure on the Hilbert space, and applying Fubini’s theorem, we obtain

On the other hand, applying Riemann’s bound, for

with

, we have

Therefore,

as

Summing the elements, we obtain the

distance

Applying Markov’s inequality,

and this completes the proof. □

4. Numerical Applications

The Hamiltonian governing the time evolution of the system is a spin-1/2 Ising-like model incorporating both longitudinal and transverse magnetic fields, expressed as

where

with

denote the Pauli spin operators acting on site

i of the chain. The parameters

g and

h correspond to the strengths of the transverse and longitudinal magnetic fields, respectively, while

J defines the strength of the spin-spin interaction coupling [

50].

In the simulations, the values of the model parameters were selected to emphasize the non-integrable regime, specifically

, see Ref. [

51]. These parameter choices are consistent with those employed in previous studies on equilibration and thermalization in isolated quantum systems, thereby ensuring a rich and nontrivial dynamical behavior [10,14]. For the numerical analysis, we considered the following initial states for a chain of $N$ spin-1/2 particles: the fully polarized up state (Up), the fully polarized down state (Dw), and the paramagnetic configuration (Pm).

Given an initial state composed of $N$ spin-1/2 particles and the Hamiltonian $H$ defined above, we perform the unitary time evolution according to the Schrödinger equation using the QuantumOptics.jl library in Julia. The time-dependent state of the system is thus obtained as $\rho(t) = e^{-iHt/\hbar}\,\rho_0\,e^{iHt/\hbar}$. Subsequently, we compute the equilibrium state $\omega$ via exact diagonalization, corresponding to the infinite-time average of the evolved state. For a specific observable, namely the magnetization per particle, we monitor its time evolution to analyze relaxation and equilibration phenomena.

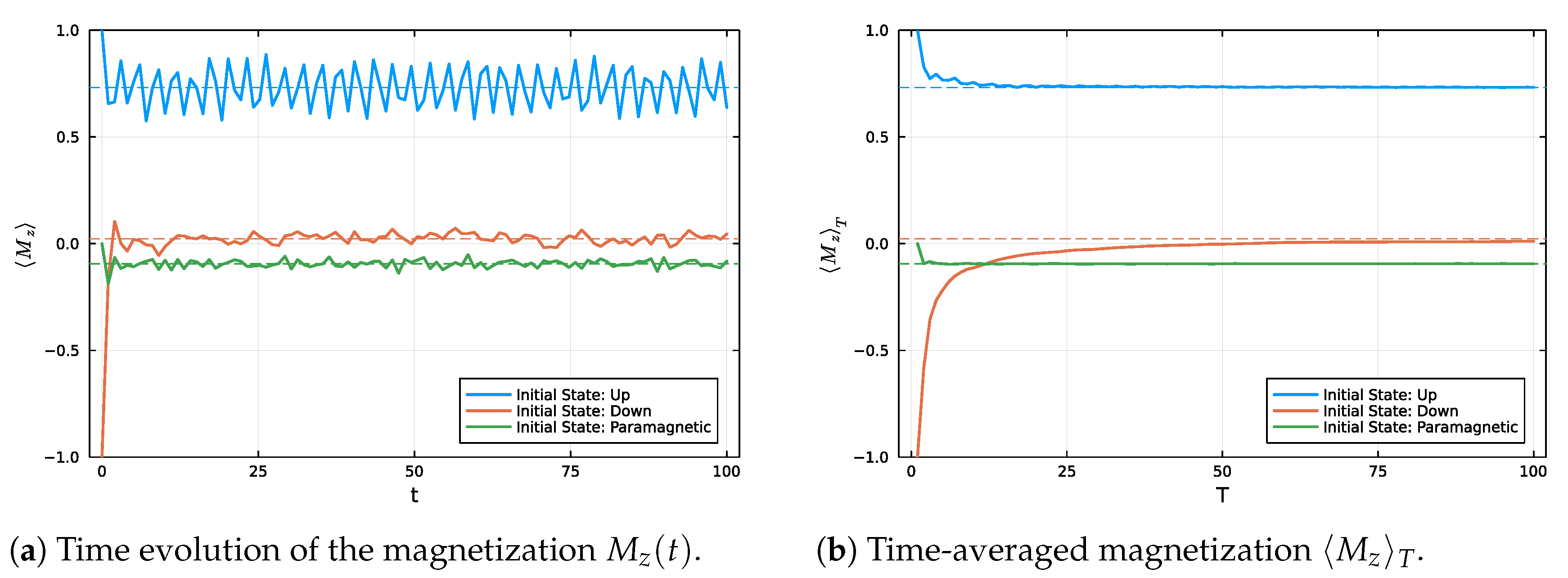

Figure 2 presents the temporal evolution of the magnetization per particle for different initial states: the fully polarized up state (Up), the fully polarized down state (Dw), and an alternating paramagnetic configuration (Pm). In Figure 2a, we observe that each initial condition evolves distinctly, exhibiting characteristic oscillations before tending towards stabilization around a mean value. Figure 2b shows the convergence of the time-averaged magnetization towards its corresponding equilibrium value, indicated by dashed lines. These results confirm the occurrence of an equilibration process, whereby the system, despite being closed and evolving unitarily, displays relaxation of observables towards stable values.

The

Up state exhibits a more regular and quasi-periodic behavior, as depicted in

Figure 2. This distinctive dynamical pattern can be attributed to its relatively low effective dimension (

), which severely restricts the extent to which the state can explore the available Hilbert space. In comparison, the Down and Paramagnetic states possess significantly higher effective dimensions, with

and

, respectively. These larger effective dimensions facilitate more extensive mixing among energy eigenstates, thereby promoting richer and more complex dynamics, as will be further elucidated in

Figure 3. Consequently, the Down and Paramagnetic configurations exhibit dynamical behaviors that are characteristic of equilibration, with the system’s observables progressively relaxing towards their equilibrium values.

Through the procedure described above, we can monitor the time evolution of the magnetization and track the full probability distribution of measurement outcomes at each instant. This allows for the computation of the observable Shannon entropy associated with the observable, often referred to as the observable entropy, which quantifies the degree of uncertainty or disorder in the system at a given time.
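The step from a quantum state to the outcome distribution and its entropy can be sketched as follows. This Python example is not the QuantumOptics.jl workflow used for the simulations; it assumes a state vector stored as a list of amplitudes over the computational basis, with the convention (an assumption made here) that a set bit in the basis index denotes a spin up, and it uses a three-spin chain purely for illustration.

```python
import math

def magnetization_distribution(amplitudes, n_spins):
    """Map each magnetization per spin m to its probability.

    amplitudes[i] is the amplitude of basis state i; a set bit in i is read
    as a spin up (assumed convention), so m = (n_up - n_down) / n_spins.
    """
    probs = {}
    for index, amp in enumerate(amplitudes):
        n_up = bin(index).count("1")
        m = (2 * n_up - n_spins) / n_spins
        probs[m] = probs.get(m, 0.0) + abs(amp) ** 2
    return probs

def shannon_entropy(probabilities):
    """Shannon entropy (natural logarithm) of a collection of probabilities."""
    return sum(-x * math.log(x) for x in probabilities if x > 0.0)

N = 3
dim = 2 ** N

# Fully polarized up state: a single basis state, all bits set.
up_state = [0.0] * dim
up_state[dim - 1] = 1.0

# Equal-weight superposition of all basis states, as a delocalized example.
uniform_state = [1.0 / math.sqrt(dim)] * dim

p_up = magnetization_distribution(up_state, N)
p_uniform = magnetization_distribution(uniform_state, N)

h_up = shannon_entropy(p_up.values())
h_uniform = shannon_entropy(p_uniform.values())
```

The polarized state gives a single certain outcome and zero observable entropy, while the delocalized state spreads over all four magnetization values with binomial weights and a strictly positive entropy.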

In Figure 3, we analyze the time evolution of the observable entropy, which quantifies the uncertainty in the probability distributions associated with the magnetization measurement. In panel (a), all initial states begin with zero entropy, reflecting complete predictability in the measurement basis. As time progresses, the entropy increases due to the spreading of the probability distribution, signaling a loss of information about the measurement outcomes and a tendency toward equilibrium.

Panel (b), however, reveals that the time-averaged observable entropy for the Up state does not converge to its equilibrium value. This indicates that the probability distribution remains partially localized even after long-time evolution. The origin of this persistent deviation lies in the low effective dimension of the Up state, which restricts its dynamics to a narrow subset of the Hamiltonian’s eigenbasis. As a result, the evolution remains highly structured and quasi-periodic, preserving a significant amount of informational order over time.

It is noteworthy that although the Up and Pm states may exhibit similar instantaneous entropy in the observable basis, their dynamical behaviors differ fundamentally. The effective dimension of the Pm state is substantially higher, allowing for broader spreading across the energy spectrum and enabling stronger equilibration of observable statistics. This highlights that observable entropy alone may be insufficient to characterize equilibration; the effective dimension and the spectral structure of the initial state also play a critical role. These quantities also exhibit features consistent with the framework of emergent equilibration laws based on constrained subspace dynamics, as discussed in Ref. [14], where the long-time behavior of population observables reflects a form of the second law of thermodynamics in closed quantum systems.

Figure 4a illustrates the average observable complexity, which quantifies the typical instantaneous deviation of the observable distribution from equilibrium. This measure captures how dynamically active and structured the observable remains throughout the evolution. The Up state exhibits the highest average observable equilibration complexity, remaining persistently, i.e., pointwise, far from equilibrium with significant fluctuations in the observable distribution. This reflects a regime of strong, coherent revivals and poor dephasing, characteristic of a low effective dimension.

In contrast, the Pm and Dw states exhibit a rapid suppression of this average complexity, suggesting a faster approach to equilibrium-like behavior in the instantaneous statistics. However, while the Pm state exhibits both low entropy and relatively small deviations from equilibrium, the Dw state remains more complex due to its persistently high entropy. As shown in Figure 3, the Dw state’s entropy increases rapidly but stabilizes at a value significantly higher than that of the Paramagnetic configuration, indicating a broader and more uncertain observable distribution. At the same time, Figure 1 reveals that neither the Dw nor the Pm state fully equilibrates pointwise, as the instantaneous distribution retains a finite distance from the equilibrium distribution even at long times. Consequently, the average complexity remains nonzero in the asymptotic regime for both states.

This behavior highlights that the observable equilibration complexity measure captures two complementary sources of deviation from equilibrium: informational disorder, quantified by entropy, and temporal fluctuations, quantified by the lack of pointwise convergence. In the case of the Dw state, it is the combination of high entropy and residual dynamical structure that sustains a larger value of the complexity. For the Pm state, despite its lower entropy, the persistence of small but non-vanishing fluctuations prevents the complexity from vanishing entirely, reflecting incomplete equilibration in the instantaneous statistics.
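The interplay of the two factors can be sketched numerically. The snippet below takes the measure as the product of the observable entropy and the trace distance to equilibrium, as stated in the Conclusions; the natural logarithm, the absence of any normalization, the classical form of the trace distance (half the L1 distance between outcome distributions), and all function names and example distributions are assumptions made for illustration.

```python
import math


def observable_entropy(p):
    """Shannon entropy of the outcome distribution (natural log, assumed)."""
    return sum(-x * math.log(x) for x in p if x > 0.0)


def trace_distance(p, q):
    """Classical trace distance: half the L1 distance between distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def oecm(p, p_eq):
    """Product of observable entropy and distance to equilibrium."""
    return observable_entropy(p) * trace_distance(p, p_eq)


# Hypothetical four-outcome example (not data from the simulations).
p_eq = [0.25, 0.25, 0.25, 0.25]          # assumed equilibrium distribution
p_sharp = [1.0, 0.0, 0.0, 0.0]           # zero entropy, far from equilibrium
p_partial = [0.7, 0.1, 0.1, 0.1]         # some entropy, some distance

c_sharp = oecm(p_sharp, p_eq)
c_partial = oecm(p_partial, p_eq)
c_equilibrium = oecm(p_eq, p_eq)
print(c_sharp, c_partial, c_equilibrium)
```

Under these assumptions the product vanishes both for a perfectly sharp distribution (zero entropy) and at equilibrium (zero distance), and is positive only in between, which is why a nonzero value signals entropy and residual distance from equilibrium simultaneously.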

Figure 4b reveals another important distinction in the observable equilibration behavior of the initial states. In particular, we examine the Observable Equilibration Complexity Measure (OECM) of the time-averaged distribution. This measure provides a complementary perspective on the equilibration process, allowing us to quantify persistent informational structures encoded in the effective probability distribution. Although the Dw state possesses the highest effective dimension among all initial configurations, it also exhibits the largest observable complexity at finite times. This apparent paradox is reconciled by noting that a high effective dimension guarantees equilibration in the long-time limit, but not necessarily a rapid decay of observable structure. The Dw state explores a broad energy subspace, leading to slow dephasing and a time-averaged observable distribution that retains nontrivial structure. As a result, the OECM of the time-averaged distribution, which combines the entropy and the distinguishability of this distribution, remains elevated over the observed time window.

In contrast, the Up state, despite its low effective dimension and failure to equilibrate, exhibits a low complexity of the time-averaged distribution. This is because its evolution remains confined to a small invariant subspace, generating a time-averaged distribution that is highly structured but dynamically simple. Importantly, this low complexity does not reflect proximity to equilibrium; rather, it results from persistent quasi-periodic behavior and strong memory of the initial state. These coherent revivals lead to a time-averaged distribution that lacks both entropy and mixing, hence producing a small value of this complexity despite the absence of equilibration.

The findings underscore that a low value of observable complexity in the time-averaged sense does not necessarily indicate relaxation, and that a high effective dimension, while necessary for equilibration, can delay the suppression of structure in the observable distribution. The observable equilibration complexity measure thus serves as a sensitive witness of equilibration structure and temporal memory. Taken together with Figure 4a, these results reveal a fundamental distinction between dynamical persistence and structural convergence in quantum equilibration. The time-averaged complexity captures the degree to which the system fluctuates away from equilibrium at each instant in time, whereas the complexity of the time-averaged distribution reflects the cumulative structure retained by the observable statistics over time.

For example, while the Dw state exhibits high values of both measures (due to its slow dephasing and broad exploration of the Hilbert space), the Up state displays an intriguing asymmetry: it is dynamically active, as shown by its large time-averaged complexity, yet structurally simple in the time-averaged sense, with a small and quasi-periodic complexity of the time-averaged distribution. This contrast arises because its evolution is confined to a small invariant subspace, leading to persistent quasi-periodic behavior and low entropy accumulation.

These observations emphasize the complementary roles of the time-averaged complexity and the complexity of the time-averaged distribution: the former probes the persistence of temporal non-equilibrium, while the latter detects structural memory in the observable distribution. Their combined analysis provides a refined diagnosis of equilibration behavior that goes beyond traditional measures based solely on effective dimension or asymptotic values.
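That the two quantities can differ sharply is easy to see in a toy calculation. The sketch below uses a hypothetical two-outcome distribution oscillating as cos² and sin² over one full period, with an assumed uniform equilibrium distribution; it is not the spin-chain model, and the mechanism that makes the second quantity small here (the time average coinciding with equilibrium) is only one possible route and need not be the one at work for the Up state. The entropy-times-distance form, the natural logarithm, and all names are likewise assumptions.

```python
import math


def entropy(p):
    """Shannon entropy with natural log (assumed convention)."""
    return sum(-x * math.log(x) for x in p if x > 0.0)


def distance(p, q):
    """Classical trace distance between two outcome distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def complexity(p, p_eq):
    """Entropy times distance to equilibrium."""
    return entropy(p) * distance(p, p_eq)


def toy_distribution(t):
    """Hypothetical quasi-periodic two-outcome distribution."""
    c = math.cos(t) ** 2
    return [c, 1.0 - c]


p_eq = [0.5, 0.5]  # assumed equilibrium distribution of the toy model
steps = 2000
times = [2.0 * math.pi * k / steps for k in range(steps)]  # one full period
trajectory = [toy_distribution(t) for t in times]

# Time average of the instantaneous complexity.
avg_of_complexity = sum(complexity(p, p_eq) for p in trajectory) / steps

# Complexity of the time-averaged distribution.
mean_p = [sum(p[i] for p in trajectory) / steps for i in range(2)]
complexity_of_avg = complexity(mean_p, p_eq)

print(avg_of_complexity, complexity_of_avg)
```

In this toy case the instantaneous distribution is almost never at equilibrium, so the averaged complexity is clearly positive, while the averaged distribution is essentially uniform and its complexity is numerically zero. The order of averaging and evaluating the measure therefore matters.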

5. Conclusions

In this work, we investigated the role of statistical complexity as a diagnostic tool for analyzing observable equilibration in isolated quantum systems evolving under unitary dynamics. To this end, we introduced the Observable Equilibration Complexity Measure, defined as the product of the observable entropy and the trace distance to equilibrium. This construction provides a formal framework for quantifying the transient informational structures that arise during the system’s approach to equilibrium.

Our theoretical developments established upper bounds on the time-averaged complexity in terms of the effective dimension and spectral properties of the system. These predictions were corroborated by numerical simulations of a non-integrable Ising-like spin chain Hamiltonian. For initial states with high effective dimension, such as the Dw and Pm configurations, the system exhibited clear signatures of equilibration, with the complexity vanishing as the time-averaged probability vector converged to the equilibrium distribution. In contrast, for initial states with low effective dimension, such as the Up state, we observed a nonzero time-averaged complexity due to the non-pointwise convergence of the probability vector.

Furthermore, we evaluated the complexity of the effective (time-averaged) distribution, which converges after a certain time threshold. We observed the persistence of a quasi-periodic regime for the Up state. This is consistent with the interpretation that a state with lower effective dimension more readily retains information about the initial state and, as a result, explores only a limited portion of the Hamiltonian subspaces, leading to revivals in the complexity of the effective distribution.

Moreover, the analysis shows that the observable complexity in its instantaneous average form and the complexity of the time-averaged distribution capture distinct and complementary aspects of quantum equilibration. While a high effective dimension is generally associated with equilibration, it does not guarantee rapid suppression of observable structure. The Dw state exemplifies this by exhibiting large complexity due to the coexistence of high entropy and persistent dynamical features. Conversely, the Up state, despite failing to equilibrate, shows low complexity of the time-averaged distribution as a result of its confinement to a small invariant subspace with coherent quasi-periodic behavior. These findings emphasize that the proposed complexity measures offer refined probes of both informational disorder and temporal memory in isolated quantum systems.

In summary, our results demonstrate that the observable entropy serves as a reliable indicator of the transition from ordered to disordered regimes in the measurement statistics. At the same time, the complexity measure captures the interplay between this increasing disorder and the relaxation towards equilibrium. Overall, this study advances the understanding of how classical-like equilibrium behavior emerges from unitary quantum dynamics, positioning our measure as a quantitative witness of equilibration and memory retention in closed quantum systems. Our findings suggest potential applications in characterizing equilibration phenomena in a broad class of quantum systems, including those relevant for quantum thermodynamics and quantum information processing. Future work may explore extensions of the proposed framework to open quantum systems and the incorporation of alternative complexity measures beyond those considered in this study.