Abstract

This study investigates the impact of data distribution and bootstrap resampling on the anomaly detection performance of the Isolation Forest (iForest) algorithm in statistical process control. Although iForest has received attention for its multivariate and ensemble-based nature, its performance under non-normal data distributions and varying bootstrap settings remains underexplored. To address this gap, a comprehensive simulation was performed across 18 scenarios involving log-normal, gamma, and t-distributions with different mean shift levels and bootstrap configurations. The results show that iForest substantially outperforms the conventional Hotelling’s T2 control chart, especially in non-Gaussian settings and under small-to-medium process shifts. Enabling bootstrap resampling led to marginal improvements in the classification metrics (accuracy, precision, recall, and F1-score) and in the out-of-control average run length (ARL1). However, a key limitation of iForest was its reduced sensitivity to subtle process changes, such as a 1σ mean shift, highlighting an area for future enhancement.

1. Introduction

Anomaly detection has been widely studied and applied in quality engineering, where process variability and stability are monitored using statistical process control (SPC) charts. Modern SPC combines traditional control charts based on statistical theory with novelty detection algorithms. A key objective in modern SPC is to identify abnormalities in out-of-control processes as early as possible to prevent the production of defective products. Traditional SPC charts are either univariate, monitoring quality characteristics independently, or multivariate, monitoring interrelated quality characteristics simultaneously.

The Shewhart $\bar{X}$-chart [1] is an effective univariate control chart for detecting large shifts in process parameters. However, because it considers only the current sample, it does not use past information and is inefficient at detecting small process shifts, such as those within 1.5 sigma. To address this, the cumulative sum (CUSUM) [2] and exponentially weighted moving average (EWMA) [3] control charts were introduced. EWMA effectively detects small changes and long-term trends by assigning the largest weight ($\lambda$) to the most recent observation, with earlier values weighted by a geometrically decreasing sequence: $\lambda(1-\lambda)$, $\lambda(1-\lambda)^2$, $\lambda(1-\lambda)^3$, and so on ($0 < \lambda \le 1$). When $\lambda = 1$, EWMA is equivalent to the $\bar{X}$-chart. Similarly, CUSUM is effective for detecting minor process changes by accumulating quality metrics over time. It quickly identifies shifts by synthesizing continuous sample data, making it well-suited for identifying small deviations in controlled processes.
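The EWMA weighting described above can be sketched in a few lines. This is a minimal illustration, assuming the standard recursion $z_t = \lambda x_t + (1-\lambda) z_{t-1}$ with a starting value $z_0$; the function names `ewma` and `ewma_weights` and the sample values are hypothetical.

```python
def ewma(values, lam, z0=0.0):
    """Return the EWMA sequence z_t = lam * x_t + (1 - lam) * z_{t-1}."""
    if not 0.0 < lam <= 1.0:
        # lam = 0 is excluded here because the statistic would never update.
        raise ValueError("lam must lie in (0, 1]")
    z, out = z0, []
    for x in values:
        z = lam * x + (1.0 - lam) * z
        out.append(z)
    return out


def ewma_weights(lam, k):
    """Weights on the k most recent observations: lam * (1 - lam) ** j."""
    return [lam * (1.0 - lam) ** j for j in range(k)]


data = [0.2, -0.1, 0.4, 1.5, 1.7]      # hypothetical measurements
smoothed = ewma(data, lam=0.2)         # small lam: long memory
same_as_raw = ewma(data, lam=1.0)      # lam = 1: only the current value counts
```

With `lam=1.0` the output reproduces the raw series, which mirrors the statement that EWMA then reduces to the Shewhart chart.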

Unlike univariate control charts, multivariate charts, such as Hotelling’s T2 [4], multivariate CUSUM [5], and multivariate EWMA [6] control charts, can monitor multiple quality characteristics simultaneously. The robustness of the multivariate EWMA chart to non-normality has been examined [7]. Shewhart-type distribution-free control charts based on Wilcoxon signed-rank statistics and run-type rules were proposed in [8]. A multivariate non-parametric control chart based on a bivariate sign test was introduced in [9]. Recent studies have also developed non-parametric principal component analysis (PCA) control charts that do not require distributional assumptions [10]. A non-parametric control chart capable of adaptively monitoring time-varying and multimodal processes was presented in [11].

Existing novelty detection methods can be broadly categorized into three types: density-based, distance/reconstruction-based, and model-based. The expectation-maximization (EM) algorithm [12] is a density-based method consisting of an E-step, which computes conditional probabilities based on current parameter estimates, and an M-step, which updates parameters to maximize expected likelihood. In [13], the in-control performance of the phase I T2 chart was assessed by estimating missing values using four imputation techniques, including the EM algorithm. The local outlier factor (LOF) algorithm [14] is another density-based method that computes novelty scores based on local data density. The k-nearest neighbors (KNN) algorithm [15,16] determines novelty scores by measuring distances to the k-nearest neighbors without assuming prior distributions for the normal class. Among distance-based approaches, the k-means clustering method [17] calculates novelty scores using distance to the nearest centroid, also without requiring prior distributional assumptions. Autoencoder-based methods [18] can also be applied to novelty detection by nonlinear transformation and latent space representations. One-class support vector machine (1-SVM) [19] and support vector data description (SVDD) [20] are widely used model-based novelty detection techniques. The 1-SVM method maps data into a feature space defined by the kernel and separates them from the origin with a maximum margin. SVDD identifies a hypersphere that encloses all normal instances within the feature space. The Isolation Forest (iForest) algorithm, developed in [21], is another model-based novelty detection technique. It differs fundamentally by explicitly isolating anomalies rather than profiling normal points. 
Additionally, graph-based methods such as spectral clustering with graph structure learning [22] can be applied to novelty detection by leveraging network science concepts to capture topological dependencies among features.

Among the novelty detection methods mentioned above, the iForest algorithm has undergone several improvements and adaptations since its introduction in [21]. The SA-iForest and Extended-iForest variants were proposed in [23,24], and iForest was compared with neighbor-based isolation methods in [25]. These developments have progressively enhanced iForest’s performance. However, a significant gap remains in the analysis of non-normal data distributions, which are an essential aspect of data analytics [26] and a common characteristic of industrial processes. Moreover, the influence of the bootstrap setting, which determines whether data sampling during tree construction occurs with or without replacement, has not been extensively studied. This setting can affect the diversity of isolation trees and, in turn, the model’s robustness and generalization performance. Despite its potential importance, the impact of bootstrap configuration on iForest remains largely unexplored.

This study systematically examines the effects of data distribution and bootstrap setting on the performance of iForest in process quality control. The remainder of this paper is structured as follows. Section 2 reviews the two algorithms (iForest and T2) evaluated in this study. Section 3 details the methodology used to assess their performance. Section 4 discusses the performance of iForest under varying data distributions and bootstrap settings in comparison with T2. Finally, Section 5 summarizes key findings, discusses practical implications, and outlines potential applications in process quality control.

2. Algorithm Review

2.1. iForest Algorithm

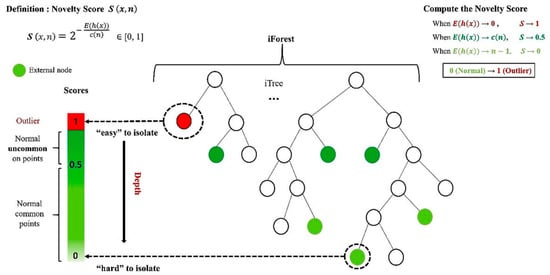

In the iForest algorithm, random decision trees (called iTrees) are constructed until each instance in the dataset is isolated in a separate leaf. This process is repeated multiple times, and the average path length is used to compute a novelty score ranging from 0 to 1 (0 for normal instances and 1 for anomalies), as illustrated in Figure 1.

Figure 1.

Schematic of isolation forest.

The core objective of iForest is to isolate individual observations by randomly selecting features and corresponding split values within their respective data ranges. “Isolation” refers to the separation of a given instance from the rest of the dataset, resulting in the creation of iTrees. Each node in an iTree represents a collection of vectors forming a subset of the original dataset, which consists of k-dimensional points. In iTrees, instances are recursively split until each one is isolated.

iTrees are built through a three-step random partitioning process. First, a subsample of size n, denoted as X′, is generated using bootstrapping from the original dataset X, which contains N instances with k-dimensional features. Second, X′ is recursively divided by randomly selecting a feature q and a split value p to construct the iTree. This continues until specific stopping conditions are met, such as reaching a maximum tree height or having only one instance in a node. Third, the procedure is repeated m times to generate m iTrees. Assuming all instances are distinct, each instance is isolated at an external node when the iTree is fully developed. In this case, the number of external nodes is n, and the number of internal nodes is n − 1. Therefore, each iTree contains a total of 2n − 1 nodes [27].
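The three steps above can be sketched as follows. This is a simplified illustration rather than the authors' implementation: the names `build_itree`, `build_forest`, and `count_nodes`, the dictionary-based node layout, and the toy data are all hypothetical, and features that no longer vary inside a node are skipped when choosing q.

```python
import random


def build_itree(points, rng, height=0, max_height=None):
    """Recursively split `points` (a list of equal-length tuples) into an iTree."""
    if max_height is not None and height >= max_height:
        return {"size": len(points)}
    dim = len(points[0]) if points else 0
    # Only features that still vary inside this node can separate its points.
    splittable = [j for j in range(dim)
                  if min(pt[j] for pt in points) < max(pt[j] for pt in points)]
    if not splittable:                         # one instance, or only duplicates
        return {"size": len(points)}           # external node
    q = rng.choice(splittable)                 # random feature q
    lo = min(pt[q] for pt in points)
    hi = max(pt[q] for pt in points)
    split = rng.uniform(lo, hi)                # random split value p
    while split <= lo:                         # rare draw that would leave the left side empty
        split = rng.uniform(lo, hi)
    left = [pt for pt in points if pt[q] < split]
    right = [pt for pt in points if pt[q] >= split]
    return {"feature": q, "split": split,
            "left": build_itree(left, rng, height + 1, max_height),
            "right": build_itree(right, rng, height + 1, max_height)}


def build_forest(X, n, m, bootstrap, seed=0):
    """Build m iTrees, each on a subsample of size n drawn from X."""
    rng = random.Random(seed)
    forest = []
    for _ in range(m):
        # bootstrap=True samples with replacement, False without replacement.
        sub = rng.choices(X, k=n) if bootstrap else rng.sample(X, n)
        forest.append(build_itree(sub, rng))
    return forest


def count_nodes(tree):
    """Return (internal, external) node counts of an iTree."""
    if "feature" not in tree:
        return 0, 1
    left_int, left_ext = count_nodes(tree["left"])
    right_int, right_ext = count_nodes(tree["right"])
    return 1 + left_int + right_int, left_ext + right_ext


data_rng = random.Random(1)
X = [tuple(data_rng.gauss(0.0, 1.0) for _ in range(3)) for _ in range(200)]
forest = build_forest(X, n=32, m=5, bootstrap=False)
internal, external = count_nodes(forest[0])
```

For distinct instances and no height limit, a fully grown tree here has n external and n − 1 internal nodes, matching the 2n − 1 total stated above. With `bootstrap=True`, duplicated instances end up in the same external node, so the counts can be smaller.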

Instances with short average path lengths are likely to be outliers. The path length h(x) of a given instance x is defined as the number of edges traversed in an iTree from the root to the external node where the search ends. However, the average path length is influenced by the sample size n. To account for this, h(x) is normalized using the theoretical average path length. The estimated average path length c(n) is computed using Equation (1):

$$c(n) = 2H(n-1) - \frac{2(n-1)}{n} \tag{1}$$

where $H(i)$ denotes a harmonic number, which can be estimated by $\ln(i) + 0.5772156649$ (Euler's constant), and $n$ denotes the number of instances.

The novelty score, denoted as the normalized score S, is calculated using Equation (2). Instances with S values close to 1 are clearly anomalies, while those with S values near 0, particularly below 0.5, are classified as normal [27].

$$S(x, n) = 2^{-\frac{E(h(x))}{c(n)}} \tag{2}$$

where E(h(x)) is the average path length of instance x over the iTrees, and c(n) is the estimated average path length.
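The normalization and scoring can be illustrated numerically. The sketch below assumes the standard iForest formulation, c(n) = 2H(n − 1) − 2(n − 1)/n with H(i) ≈ ln(i) + 0.5772156649 and S = 2^(−E(h(x))/c(n)); the special cases for n ≤ 2 and the subsample size of 256 are assumptions made for illustration, and the function names are hypothetical.

```python
import math

EULER_GAMMA = 0.5772156649


def c(n):
    """Estimated average path length for a subsample of n instances."""
    if n <= 1:
        return 0.0          # assumed convention: nothing left to isolate
    if n == 2:
        return 1.0          # assumed convention: one split separates two instances
    harmonic = math.log(n - 1) + EULER_GAMMA   # H(n - 1) approximation
    return 2.0 * harmonic - 2.0 * (n - 1) / n


def anomaly_score(mean_path_length, n):
    """Normalized score S in (0, 1]; requires n > 1."""
    return 2.0 ** (-mean_path_length / c(n))


n = 256                                   # hypothetical subsample size
short_path = anomaly_score(4.0, n)        # isolated quickly: score above 0.5
typical_path = anomaly_score(c(n), n)     # average path length: score of 0.5
long_path = anomaly_score(14.0, n)        # isolated late: score below 0.5
```

An instance whose average path length equals c(n) scores exactly 0.5, which is why 0.5 acts as the dividing value between shorter-than-average and longer-than-average paths.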

2.2. Hotelling’s T2

Hotelling’s T2 statistic is a multivariate extension of the Student’s t-test used to assess whether the mean vector of a multivariate sample significantly differs from a specified or known mean. It is widely employed in multivariate process monitoring for quality control applications, particularly when relationships among multiple correlated variables must be considered simultaneously. The T2 statistic incorporates the covariance structure among variables, making it more robust than univariate control charts for detecting multivariate shifts. Its formulation ensures that outlier detection reflects the overall Mahalanobis distance in multivariate space rather than being skewed by marginal distributions.

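Given a multivariate sample $X_1, \ldots, X_n \sim N_p(\mu, \Sigma)$, where $\mu$ is the population mean vector and $\Sigma$ is the population covariance matrix, the T2 statistic for testing the mean vector against a hypothesized value $\mu_0$ is defined as

$$T^2 = n(\bar{X} - \mu_0)^{\top} S^{-1} (\bar{X} - \mu_0)$$

where $\bar{X}$ is the sample mean vector, $S$ is the sample covariance matrix, n is the sample size, and p is the number of variables.

Under the null hypothesis $H_0: \mu = \mu_0$ and assuming multivariate normality, the scaled T2 statistic follows an exact F-distribution:

$$\frac{n-p}{p(n-1)}\, T^2 \sim F_{p,\, n-p}$$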

The T2 statistic offers several advantages in multivariate analysis. Unlike univariate tests, it accounts for the correlation structure among variables, enhancing sensitivity to joint anomalies. It is based on Mahalanobis distance, which measures the deviation from the multivariate mean while incorporating both variance and covariance. Its validity depends on the assumption of multivariate normality, under which reliable inference can be drawn. Due to these properties, T2 is widely used in multivariate SPC (MSPC), particularly in Phase I for establishing baseline control limits and in Phase II for identifying deviations that indicate abnormal process behavior.
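As a small worked example, the one-sample statistic can be computed with plain Python. The sketch assumes the textbook form T2 = n (x̄ − μ0)ᵀ S⁻¹ (x̄ − μ0) with F scaling (n − p)/(p(n − 1)); the helper names, the tiny dataset, and the hypothesized mean are hypothetical, and the linear system is solved by Gaussian elimination to avoid external libraries.

```python
def mean_vector(X):
    n = len(X)
    return [sum(row[j] for row in X) / n for j in range(len(X[0]))]


def covariance(X):
    """Sample covariance matrix with the n - 1 divisor."""
    n, p = len(X), len(X[0])
    m = mean_vector(X)
    return [[sum((r[i] - m[i]) * (r[j] - m[j]) for r in X) / (n - 1)
             for j in range(p)] for i in range(p)]


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def hotelling_t2(X, mu0):
    """Return (T2, scaled F statistic) for testing the mean vector against mu0."""
    n, p = len(X), len(X[0])
    d = [m - u for m, u in zip(mean_vector(X), mu0)]
    w = solve(covariance(X), d)               # S^{-1} (xbar - mu0)
    t2 = n * sum(di * wi for di, wi in zip(d, w))
    return t2, (n - p) / (p * (n - 1)) * t2


X = [(1.0, 2.0), (2.0, 1.0), (3.0, 4.0), (4.0, 3.0)]   # toy sample, n = 4, p = 2
t2, f_stat = hotelling_t2(X, mu0=(1.5, 1.5))
```

The scaled value would then be compared with an F quantile with p and n − p degrees of freedom, which is only valid under the normality assumption discussed above.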

3. Materials and Methods

3.1. Evaluation Scenarios

Eighteen scenarios were designed by crossing three non-normal distributions (log-normal, gamma, and t-distribution), three mean shift levels, and two levels of a bootstrap-related hyperparameter (3 × 3 × 2 = 18). These three distribution types, frequently used in prior studies [28,29,30], were selected to reflect diverse data patterns commonly observed in manufacturing processes. The bootstrap setting included two levels: false and true. When set to true, individual iTrees were trained on random subsets of the data sampled with replacement. When set to false, sampling was performed without replacement.

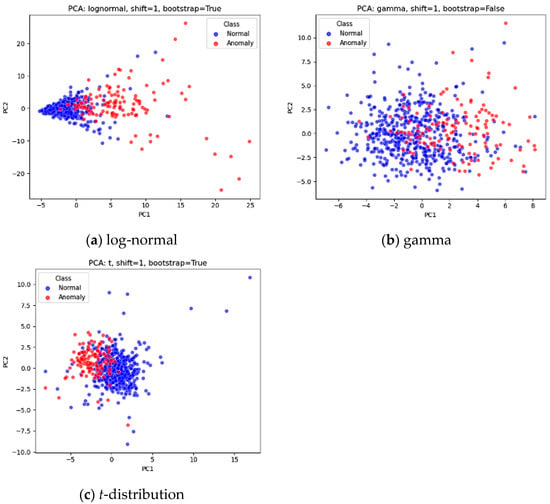

Datasets were generated using log-normal, gamma, and t-distributions, as illustrated in Figure 2. For Phase I analysis, 500 in-control observations with 10 attributes were generated following prior research [21,31,32]. For Phase II analysis, 100 out-of-control observations were created to simulate three levels of mean shift in manufacturing processes (1σ, 2σ, and 3σ, corresponding to small, medium, and large shifts, respectively). For the t-distribution, the degrees of freedom were set to three for both Phase I (in-control) and Phase II (out-of-control), which is in line with previous studies [33,34]. The log-normal distribution represented skewed process data, the gamma distribution captured skewness and high kurtosis, and the t-distribution reflected symmetry with high kurtosis.
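A data-generation step of this kind could look like the sketch below. The sample sizes (500 and 100), the 10 attributes, and the three degrees of freedom come from the text; everything else is an assumption made for illustration, including the log-normal and gamma parameters, the independence of attributes, and the choice to add the shift to every attribute as a multiple of its Phase I sample standard deviation.

```python
import random
import statistics


def draw(dist, rng):
    """Draw one value from a (hypothetically parameterized) distribution."""
    if dist == "lognormal":
        return rng.lognormvariate(0.0, 0.5)      # assumed parameters
    if dist == "gamma":
        return rng.gammavariate(2.0, 1.0)        # assumed shape and scale
    if dist == "t":
        df = 3                                   # degrees of freedom from the text
        z = rng.gauss(0.0, 1.0)
        chi2 = rng.gammavariate(df / 2.0, 2.0)   # chi-square with df degrees of freedom
        return z / (chi2 / df) ** 0.5
    raise ValueError(f"unknown distribution: {dist}")


def make_phases(dist, shift, n_in=500, n_out=100, dim=10, seed=0):
    """Return (phase1, phase2): in-control data and mean-shifted data."""
    rng = random.Random(seed)
    phase1 = [[draw(dist, rng) for _ in range(dim)] for _ in range(n_in)]
    # Assumption: a shift of k sigma adds k times each attribute's Phase I
    # sample standard deviation to the out-of-control observations.
    sd = [statistics.stdev(col) for col in zip(*phase1)]
    phase2 = [[draw(dist, rng) + shift * sd[j] for j in range(dim)]
              for _ in range(n_out)]
    return phase1, phase2


phase1, phase2 = make_phases("lognormal", shift=3)
```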

Figure 2.

Examples of normal and anomaly (1σ mean shift) data for three distributions (note: two principal components extracted from the 10 attributes were used for visualization purposes).

3.2. Control Limits

Control limits were set based on the average run length (ARL), defined as the average number of observations required to detect a process change [35]. To determine the control limits for iForest, anomaly scores were computed using an ensemble of 100 iTrees for each scenario. To ensure reliable and stable results, given the stochastic nature of iForest subsampling, this process was repeated 10 times. Since control charts are expected to provide early warnings when a process change occurs, a smaller out-of-control ARL (ARL1), given a fixed in-control ARL (ARL0 = 370, equivalent to the 3 sigma level), allows for more accurate and timely detection of abnormal conditions [36]. ARL0 refers to the expected number of samples until a signal is triggered while the process remains in control. Conversely, ARL1 is the expected number of samples until a signal is triggered when the process is actually out of control. Finally, the control limits for T2 were calculated using the same procedure as that used for iForest.
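One common way to connect a target ARL0 to a control limit is sketched below. The study does not spell out its exact procedure, so this is an assumption: observations are treated as independent, the run length is then geometric with ARL = 1/(signal probability), and the limit is taken as the empirical (1 − 1/ARL0) quantile of in-control scores. The function names and toy scores are hypothetical.

```python
def control_limit(in_control_scores, arl0=370.0):
    """Empirical (1 - 1/arl0) quantile of the in-control scores."""
    ordered = sorted(in_control_scores)
    alpha = 1.0 / arl0                       # target false-alarm probability
    k = min(len(ordered) - 1, int((1.0 - alpha) * len(ordered)))
    return ordered[k]


def average_run_length(scores, limit):
    """ARL = 1 / P(score > limit), assuming independent observations."""
    signal_rate = sum(s > limit for s in scores) / len(scores)
    return float("inf") if signal_rate == 0 else 1.0 / signal_rate


in_control = [i / 1000 for i in range(1000)]        # toy in-control scores
limit = control_limit(in_control, arl0=370.0)
arl1 = average_run_length([0.5, 0.999, 1.2, 1.5], limit)   # toy shifted scores
```

With only a few hundred in-control observations, a false-alarm probability of 1/370 places the limit near the largest observed score, so the achieved in-control ARL is only approximately 370.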

3.3. Performance Measures

Appropriate use of evaluation metrics is critical for assessing model performance and guiding model selection in binary classification tasks. We used six evaluation metrics: accuracy, precision, recall (true positive rate, TPR), F1-score (F1), the area under the receiver operating characteristic curve (ROC AUC), and the area under the precision–recall curve (PR AUC). In the formulas below, TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively. Accuracy measures overall classification correctness, representing the proportion of correctly predicted instances (both positive and negative) among all observations. It is calculated as (TP + TN)/(TP + TN + FP + FN). Although intuitive, accuracy can be misleading for imbalanced datasets, as it may be dominated by the majority class. Precision is the proportion of true positives among all positive predictions made by the model. It reflects the model’s ability to minimize false positives and is calculated as TP/(TP + FP). Precision is especially important when false positives carry a high cost. Recall indicates the model’s ability to correctly identify all actual positives. It is calculated as TP/(TP + FN). Recall is crucial in applications where missing positive cases (false negatives) has serious consequences, such as in medical diagnostics or fault detection. False positive rate (FPR) represents the proportion of actual negatives incorrectly classified as positives. It is calculated as FP/(FP + TN). F1-score balances precision and recall and is particularly useful when an even trade-off is needed. It is calculated as

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$
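The threshold-based metrics can be checked with a short worked example. The sketch below computes them from binary labels, where 1 marks an out-of-control (positive) observation; the function name, the zero-denominator convention, and the toy labels are hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Compute confusion-matrix metrics for binary labels (1 = anomaly)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)

    def ratio(num, den):
        return num / den if den else 0.0     # assumed convention for empty denominators

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "fpr": ratio(fp, fp + tn),
        "f1": ratio(2 * precision * recall, precision + recall),
    }


y_true = [1, 1, 1, 0, 0, 0, 0, 1]     # toy ground truth
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]     # toy chart signals
metrics = classification_metrics(y_true, y_pred)
```

Here TP = 3, TN = 3, FP = 1, and FN = 1, so accuracy, precision, recall, and F1 all equal 0.75, and the FPR is 0.25.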

The ROC AUC quantifies a classifier’s overall ability to distinguish between positive and negative classes, regardless of the decision threshold. The ROC curve plots the TPR against the FPR. The AUC represents the probability that a randomly selected positive instance is ranked higher than a randomly selected negative one by the classifier. AUC values range from 0.5 (no discrimination) to 1.0 (perfect discrimination). The PR AUC represents the area under the precision–recall curve, which plots precision against recall across various threshold settings. It provides a comprehensive summary of model performance, which is particularly useful in imbalanced datasets. A higher PR AUC indicates a better trade-off between precision and recall. Unlike ROC AUC, PR AUC focuses solely on performance with respect to the positive class, making it more informative in skewed settings.
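The ranking interpretation of ROC AUC can be made concrete with a direct pairwise count. This is a minimal sketch of that interpretation, not necessarily how the study computed the metric; the function name and the toy scores are hypothetical, and ties are counted as half a win.

```python
def roc_auc(y_true, scores):
    """Probability that a random positive is scored above a random negative."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


labels = [0, 0, 1, 1]                     # toy labels (1 = anomaly)
anomaly_scores = [0.1, 0.4, 0.35, 0.8]    # toy anomaly scores
auc = roc_auc(labels, anomaly_scores)
```

In this example three of the four positive-negative pairs are ordered correctly, giving an AUC of 0.75. The pairwise count costs O(n²) comparisons, so a rank-based formula would be preferable for large samples.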

3.4. Statistical Analysis

A three-factor analysis of variance (ANOVA) was conducted to examine the effects of distribution type, mean shift level, and bootstrap setting, using a significance level of 0.05. For factors and interactions found to be significant, post hoc analyses were performed using Tukey’s test and simple effect tests at the same significance level.

4. Results

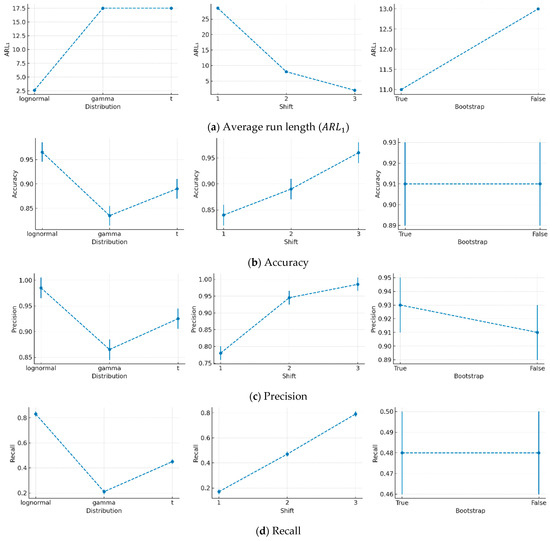

4.1. Effect of Distributions, Mean Shifts, and Bootstrap Settings in iForest

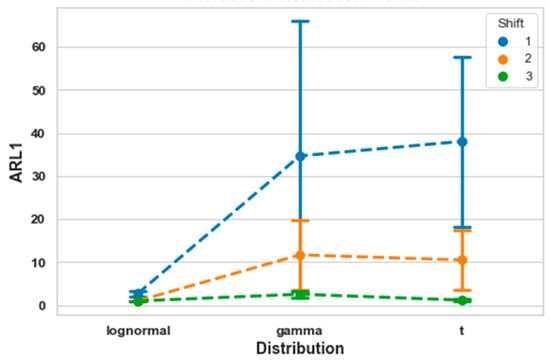

Two of the three factors had a significant effect on ARL1, as shown in Figure 3a: distribution type (F(2, 156) = 37.2, p < 0.001) and mean shift (F(2, 156) = 73.3, p < 0.001) were significant, whereas bootstrap setting was not (F(1, 156) = 0.1, p = 0.74). Among the distributions, the log-normal distribution yielded the lowest ARL1 (mean ± SD; 1.6 ± 0.9), indicating superior detection performance. In contrast, the gamma (18.2 ± 24.4) and t-distributions (16.4 ± 27.3) produced similar, considerably higher values. The mean shift, which reflects the degree of separation between in-control and out-of-control data, significantly reduced ARL1 as its level increased. However, the reduction in ARL1 diminished with each successive shift level: from 23.6 to 7.4 between shift levels 1 and 2 and from 7.4 to 1.7 between levels 2 and 3. Although the effect of the bootstrap setting was not significant, ARL1 was slightly lower with bootstrap enabled (True: 8.3 ± 13.3) than with it disabled (False: 11.7 ± 23.4).

Figure 3.

Effect of data distribution, mean shift, and bootstrap setting in iForest.

All classification performance metrics followed consistent trends across the three factors, as shown in Figure 3b–g. The log-normal distribution produced the highest values for all metrics compared to the other distributions. While the gamma and t-distributions showed similar performance, the t-distribution slightly outperformed the gamma distribution. Increasing the mean shift significantly improved all metrics, and this trend remained consistent across all evaluation measures. Lastly, the true bootstrap setting yielded slightly better performance than false across all metrics.

Significant interactions were observed between distribution type and mean shift for all metrics (accuracy: F(4, 156) = 134.9, p < 0.001; precision: F(4, 156) = 69.7, p < 0.001; recall: F(4, 156) = 134.9, p < 0.001; F1-score: F(4, 156) = 82.4, p < 0.001; ROC AUC: F(4, 156) = 509.1, p < 0.001; PR AUC: F(4, 156) = 422.6, p < 0.001). For instance, as shown in Figure 4 for ARL1, the log-normal distribution remained stable across different mean shifts. In contrast, for the gamma and t-distributions, ARL1 increased substantially as the mean shift decreased. This indicates that small process shifts are more difficult to detect when the data follow these distributions.

Figure 4.

Interaction between distribution and mean shift for ARL1.

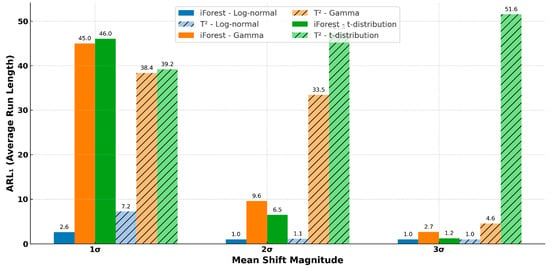

4.2. Comparison of iForest with T2

iForest consistently outperformed T2 in terms of ARL1 across all distributions and mean shifts (t(85) = −2.5, p = 0.015), as shown in Figure 5. Its strongest performance was observed in environments involving gamma and t-distributions and under small-to-medium mean shifts. This suggests that iForest’s advantage over T2 is particularly notable in the presence of skewed (log-normal) or heavy-tailed (t-distribution) data.

Figure 5.

Comparison between iForest and Hotelling’s T2 on ARL1.

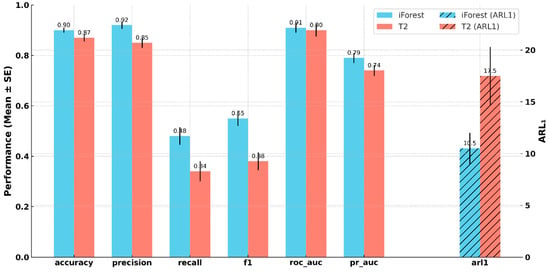

iForest also outperformed T2 in all classification performance metrics, as illustrated in Figure 6. Among these metrics, accuracy, precision, recall, and F1-score were significantly higher for iForest (accuracy: t(129) = 2.46, p = 0.015; precision: t(105) = 2.47, p = 0.015; recall: t(129) = 2.46, p = 0.015; F1-score: t(125) = 2.76, p = 0.007), indicating a more consistent and reliable classification of normal versus abnormal cases. ROC AUC and PR AUC were also higher for iForest, though the differences were not statistically significant (ROC AUC: t(128) = 0.7, p = 0.49; PR AUC: t(134) = 1.32, p = 0.19). Finally, iForest exhibited a significantly lower ARL1 than T2 (p < 0.05), reflecting faster average detection times.

Figure 6.

Performance comparison between iForest and Hotelling’s T2 across classification metrics. Error bars represent standard error. ARL1 is plotted on a secondary axis.

5. Discussion and Conclusions

The results across all scenarios consistently demonstrate that the iForest-based control chart performs better in environments involving non-normal distributions (ARL1 for log-normal distribution: iForest = 1.5, T2 = 3.1) and small-to-medium mean shifts (ARL1 at 1 sigma mean shift: iForest = 2.6, T2 = 7.2). Its advantage is particularly pronounced in the presence of skewness (log-normal) or heavy tails (t-distribution). The robustness of iForest, coupled with its non-parametric and ensemble-based nature, allows it to adapt to a wider range of process behaviors than the covariance-based T2 chart. Moreover, while bootstrap resampling introduces minor performance variations, it does not substantially affect the comparative outcome, reinforcing iForest’s reliability as a distribution-free tool for modern process monitoring.

Evaluation results further showed that iForest outperformed T2 across all performance metrics, as summarized in Table 1, suggesting a more reliable and consistent classification. Precision, in particular, exhibited a strong margin, reflecting iForest’s lower FPR. iForest also achieved significantly higher recall, underscoring its strength in detecting true anomalies. As a result, the F1-score, defined as the harmonic mean of precision and recall, was also significantly higher. Lastly, iForest exhibited a substantially lower ARL1 than T2, indicating faster detection times, which is especially valuable in real-time or high-risk monitoring contexts.

Table 1.

Summary of evaluation results for iForest and T2.

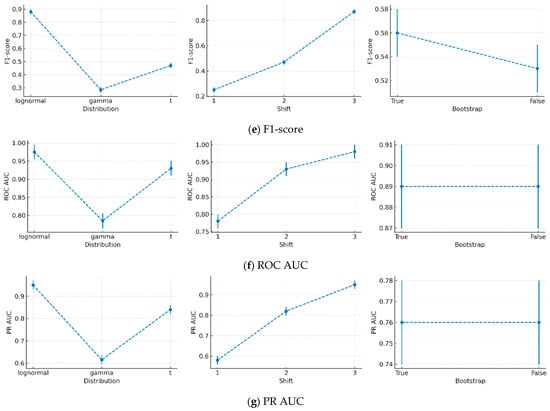

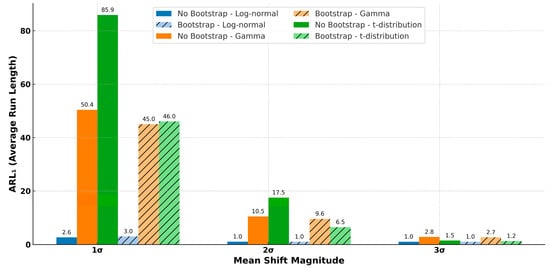

This study found that enabling the bootstrap setting can lead to slightly improved performance across all classification metrics, as summarized in Figure 7. This implies that practitioners implementing iForest-based control charts should consider enabling bootstrap resampling when (1) the data likely follow heavy-tailed distributions or (2) detecting small process shifts is critical. Conversely, bootstrap resampling may be omitted in low-noise environments or when computational resources are limited without significantly compromising performance under larger process shifts.

Figure 7.

Effect of enabling bootstrap in the iForest control chart on ARL1.

The superiority of iForest was most evident in non-Gaussian contexts, such as log-normal and t-distributions, where the T2 chart exhibited significant detection delays in terms of ARL1. Notably, under a 1σ mean shift in a log-normal distribution, iForest achieved an ARL1 as low as 2.612, whereas the T2 chart yielded 7.232, a detection delay nearly three times longer. This result is expected, as the T2 chart is theoretically grounded in the assumption of multivariate normality [37]. These findings strongly suggest that iForest is a more suitable choice for processes involving non-Gaussian data distributions.

The results of this study showed that iForest outperformed the T2 chart in many scenarios. However, iForest has a limitation in detecting subtle process shifts, such as a 1σ mean shift. The reason for this limitation is that iForest calculates anomaly scores based on tree depth alone, which may not sufficiently capture how close an observation lies to the other observations within its partitioned cluster. To address this limitation, a distance- or likelihood-based [38] scoring approach that combines tree depth with the Euclidean distance or likelihood to the centroid of each partitioned cluster could enhance the novelty detection performance. Integrating spatial closeness or likelihood within a cluster into the scoring mechanism may improve sensitivity to small process variations. However, future studies are required to empirically investigate the effects of incorporating spatial proximity or likelihood.

Although we aimed to compare iForest with Hotelling’s T2, our scope remains limited. In multivariate monitoring, various techniques, such as exponentially weighted moving average (EWMA), cumulative sum control chart (CUSUM), local outlier factor (LOF), one-class support vector machine (one-class SVM), and principal component analysis (PCA)-based control charts, are widely used. As this study focused on examining the effects of varying data distributions and bootstrap setting in iForest, Hotelling’s T2 was selected as a benchmark method for comparison. For a more comprehensive evaluation, future studies can include these additional methods.

The bootstrap setting (true vs. false) may indirectly influence training through interaction with parameters, such as sample size and tree depth. Although enabling bootstrap does not alter the nominal sample size per tree, it introduces duplicate observations that can influence the tree structure. For example, bootstrapping may result in shallower trees due to early isolation of redundant samples. However, as iForest normalizes the anomaly score based on the expected path length, this potential side effect can be effectively compensated for. To draw a more definitive conclusion, a more comprehensive investigation should be conducted in future studies.
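The amount of duplication introduced by sampling with replacement can be quantified directly. When n instances are drawn with replacement from N, the expected number of distinct instances is N(1 − (1 − 1/N)^n). The sketch below evaluates this for N = 500 (the Phase I sample size) and n = 256, where the subsample size is an assumption chosen for illustration, and checks the formula against a small simulation; the function names are hypothetical.

```python
import random


def expected_distinct(N, n):
    """Expected number of distinct instances in n draws with replacement from N."""
    return N * (1.0 - (1.0 - 1.0 / N) ** n)


def simulated_distinct(N, n, trials=2000, seed=0):
    """Monte Carlo estimate of the same quantity."""
    rng = random.Random(seed)
    total = sum(len({rng.randrange(N) for _ in range(n)}) for _ in range(trials))
    return total / trials


N, n = 500, 256                      # n = 256 is an assumed subsample size
expected = expected_distinct(N, n)   # roughly 200 distinct instances
simulated = simulated_distinct(N, n)
duplicate_share = 1.0 - expected / n
```

Under these assumptions roughly a fifth of each bootstrap subsample consists of repeated instances, which gives a sense of how much the effective number of distinct points per tree shrinks when bootstrap is enabled.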

We selected critical simulation parameters (sample size, dimensionality, and degrees of freedom for the t-distribution) based on values widely adopted in previous anomaly detection and process monitoring studies. However, these parameters can substantially influence the behavior of iForest and the generalizability of our findings. For example, changes in dimensionality or the tail-heaviness of the distribution may affect both the separability of anomalies and the stability of anomaly scores. Although our parameter settings were grounded in ranges frequently reported in the literature [33,34,39,40,41], a more systematic investigation on the effects of these parameters is needed for better generalization of our findings.

Our experimental design focused on synthetic datasets generated from well-defined distributions. Although this approach inevitably limits the generalizability of our findings to real-world manufacturing data, it allowed us to rigorously examine the intrinsic behavior of iForest under controlled conditions. This controlled investigation serves as a foundational benchmark for better understanding and improving the iForest algorithm. Nonetheless, future studies should empirically validate our findings using real manufacturing data, which may further reveal strengths and limitations of the iForest algorithm in practical applications.

Author Contributions

Conceptualization, H.C.; methodology, H.C.; software, H.C. and K.J.; formal analysis, H.C. and K.J.; writing—original draft preparation, H.C.; writing—review and editing, H.C. and K.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ARL | Average run length |
| EWMA | Exponentially weighted moving average |
| EIF | Extended isolation forest |
| FPR | False positive rate |
| KNN | K-nearest neighbors |
| LOF | Local outlier factor |
| PCA | Principal component analysis |
| SPC | Statistical process control |
| SVDD | Support vector data description |

References

- Shewhart, W.A. Control of Quality of Manufactured Product; McGraw-Hill: New York, NY, USA, 1929. [Google Scholar]

- Page, E.S. Cumulative sum charts. Technometrics 1961, 3, 1–9. [Google Scholar] [CrossRef]

- Roberts, S.W. Control chart tests based on geometric moving averages. Technometrics 1959, 1, 239–250. [Google Scholar] [CrossRef]

- Hotelling, H. Multivariate quality control. In Techniques of Statistical Analysis; Eisenhart, C., Hastay, M.W., Wills, W.A., Eds.; McGraw-Hill: New York, NY, USA, 1947; pp. 111–184. [Google Scholar]

- Crosier, R.B. Multivariate generalizations of cumulative sum quality-control schemes. Technometrics 1988, 30, 291–303. [Google Scholar] [CrossRef]

- Lowry, C.A.; Woodall, W.H.; Champ, C.W.; Rigdon, S.E. A multivariate exponentially weighted moving average control chart. Technometrics 1992, 34, 46–53. [Google Scholar] [CrossRef]

- Stoumbos, Z.G.; Sullivan, J.H. Robustness to non-normality of the multivariate EWMA control chart. J. Qual. Technol. 2002, 34, 260–276. [Google Scholar] [CrossRef]

- Chakraborti, S.; Eryilmaz, S. A nonparametric Shewhart-type signed-rank control chart based on runs. Commun. Stat. Simul. Comput. 2007, 36, 335–356. [Google Scholar] [CrossRef]

- Das, N. A new multivariate non-parametric control chart based on sign test. Qual. Technol. Quant. Manag. 2009, 6, 155–169. [Google Scholar] [CrossRef]

- Phaladiganon, P.; Kim, S.B.; Chen, V.C.P.; Jiang, W. Principal component analysis-based control charts for multivariate nonnormal distributions. Expert Syst. Appl. 2013, 40, 3044–3054. [Google Scholar] [CrossRef]

- Kang, J.H.; Yu, J.; Kim, S.B. Adaptive nonparametric control chart for time-varying and multimodal processes. J. Process Control 2016, 37, 34–45.

- Moon, T.K. The expectation-maximization algorithm. IEEE Signal Process. Mag. 1996, 13, 47–60.

- Mahmoud, M.A.; Saleh, N.A.; Madbuly, D.F. Phase I analysis of individual observations with missing data. Qual. Reliab. Eng. Int. 2014, 30, 559–569.

- Breunig, M.M.; Kriegel, H.P.; Ng, R.T.; Sander, J. LOF: Identifying density-based local outliers. In Proceedings of the ACM SIGMOD International Conference on Management of Data, Dallas, TX, USA, 15–18 May 2000; pp. 93–104.

- Kang, P.; Cho, S. A hybrid novelty score and its use in keystroke dynamics-based user authentication. Pattern Recognit. 2009, 42, 3115–3127.

- Harmeling, S.; Dornhege, G.; Tax, D.; Meinecke, F.; Müller, K.R. From outliers to prototypes: Ordering data. Neurocomputing 2006, 69, 1608–1618.

- Jain, M.; Kaur, G.; Saxena, V.A. A K-means clustering and SVM based hybrid concept drift detection technique for network anomaly detection. Expert Syst. Appl. 2022, 193, 116510.

- Charte, D.; Charte, F.; García, S.; del Jesus, M.J.; Herrera, F. A Practical Tutorial on Autoencoders for Anomaly Detection in High-Dimensional Data. Neurocomputing 2021, 455, 137–155.

- Schölkopf, B.; Platt, J.C.; Shawe-Taylor, J.; Smola, A.J.; Williamson, R.C. Estimating the support of a high-dimensional distribution. Neural Comput. 2001, 13, 1443–1471.

- Tax, D.M.J.; Duin, R.P.W. Support vector data description. Mach. Learn. 2004, 54, 45–66.

- Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation forest. In Proceedings of the IEEE International Conference Data Mining, Pisa, Italy, 15–19 December 2008; IEEE: New York, NY, USA, 2008; pp. 413–422.

- Li, W.; Jiang, M.; Zhang, X. A Comprehensive Survey on Spectral Clustering with Graph Structure Learning. arXiv 2022, arXiv:2205.03057.

- Xu, D.; Wang, Y.; Meng, Y.; Zhang, Z. An improved data anomaly detection method based on isolation forest. In Proceedings of the IEEE International Symposium on Computational Intelligence and Design, Honolulu, HI, USA, 27 November–1 December 2017; IEEE: New York, NY, USA, 2017; Volume 2, pp. 287–291.

- Hariri, S.; Kind, M.C.; Brunner, R.J. Extended isolation forest. IEEE Trans. Knowl. Data Eng. 2021, 33, 1479–1489.

- Cheng, X.; Liu, Z.; Tang, M.; Du, Y.; Xu, C. Anomaly detection based on improved isolated forest. In Proceedings of the IEEE International Conference on Information Technology, Big Data and Artificial Intelligence, Chongqing, China, 26–28 May 2023.

- Chen, Q.; Kruger, U.; Meronk, M.; Leung, A.Y.T. Synthesis of T2 and Q statistics for process monitoring. Control Eng. Pract. 2004, 12, 745–755.

- Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation-based anomaly detection. ACM Trans. Knowl. Discov. Data 2012, 6, 1–39.

- Pyzdek, T. Non-normal Distributions in the Real World. 2006. Available online: https://www.researchgate.net/publication/230770738 (accessed on 2 May 2025).

- Genta, G.; Galetto, M. Study of measurement process capability with non-normal data distributions. Procedia CIRP 2018, 75, 385–390.

- Alatefi, M.; Ahmad, S.; Alkahtani, M. Performance evaluation using multivariate non-normal process capability. Processes 2019, 7, 833.

- Qiu, P. Some perspectives on nonparametric statistical process control. J. Qual. Technol. 2018, 50, 49–65.

- Roscoe, J.T. Fundamental Research Statistics for the Behavioral Sciences; Holt, Rinehart & Winston: New York, NY, USA, 1975.

- Mukherjee, A.; Cheng, Y.; Gong, M. A new nonparametric scheme for simultaneous monitoring of bivariate processes and its application in monitoring service quality. Qual. Technol. Quant. Manag. 2018, 15, 143–156.

- Tuerhong, G.; Kim, S.B. Comparison of novelty score-based multivariate control charts. Commun. Stat. Simul. Comput. 2015, 44, 1126–1143.

- Montgomery, D.C. Introduction to Statistical Quality Control, 5th ed.; John Wiley & Sons: New York, NY, USA, 2005.

- Jensen, W.A.; Jones-Farmer, L.A.; Champ, C.W.; Woodall, W.H. Effects of parameter estimation on control chart properties: A literature review. J. Qual. Technol. 2006, 38, 349–364.

- Johnson, R.A.; Wichern, D.W. Applied Multivariate Statistical Analysis, 6th ed.; Pearson: Upper Saddle River, NJ, USA, 2007.

- Tan, C.W.; Yu, P. Contagion Source Detection in Epidemic and Infodemic Outbreaks: Mathematical Analysis and Network. Found. Trends Netw. 2023, 13, 107–251.

- Gulanbaier, T.; Kim, S.B.; Kang, P.; Cho, S. Multivariate Control Charts Based on Hybrid Novelty Scores. Commun. Stat. Simul. Comput. 2014, 43, 115–131.

- Haanchumpol, T.; Sudasna-na-Ayudthya, P.; Singhtaun, C. Modified Multivariate Control Chart Using Spatial Signs and Ranks for Monitoring Process Mean: A Case of t-Distribution. In Proceedings of the 9th International Conference on Industrial Engineering and Operations Management, Bangkok, Thailand, 5–7 March 2019; IEOM Society International; pp. 1415–1427.

- Lee, S. Machine Learning-Based Process Monitoring and Maintenance for Smart Manufacturing. Ph.D. Dissertation, Korea University, Seoul, Republic of Korea, 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).