Abstract

A maximum entropy (ME) methodology was used to infer the Hubble constant from the temperature anisotropies in the cosmic microwave background (CMB) measured by the Planck satellite. A simple cosmological model provided physical insight and afforded robust statistical sampling of the parameter space. The parameter space included the spectral tilt and amplitude of adiabatic density fluctuations of the early universe and the present-day ratios of dark energy, matter, and baryonic matter density. A statistical temperature, which uniquely specifies the posterior probability distribution, was estimated by applying the equipartition theorem. The ME analysis inferred the mean value of the Hubble constant to be about 67 km/sec/Mpc with a conservative standard deviation of approximately 4.4 km/sec/Mpc. Unlike standard Bayesian analyses that incorporate specific noise models, the ME approach treats the model error generically, thereby producing broader, but less assumption-dependent, uncertainty bounds. The inferred ME value lies within 1σ of both early-universe estimates (Planck, Dark Energy Spectroscopic Instrument (DESI)) and late-universe measurements (e.g., the Chicago Carnegie Hubble Program (CCHP)) using redshift data collected from the James Webb Space Telescope (JWST). Thus, the ME analysis does not appear to support the existence of the Hubble tension.

1. Introduction

Bayesian methods have played an important role in solving statistical inverse problems in the physical sciences. Research areas where orthodox Bayesian methods have been useful include geophysics [1,2,3] and condensed matter [4,5]. In the Bayesian approach, it is necessary to construct a likelihood where prior assumptions are required in, for example, the statistics of an error function that incorporate model and data errors. Therefore, from Bayes’ rule, the resulting posterior probability distribution (PPD) has the imprint of any biased prior assumptions made in the construction of the likelihood. In contrast, the maximum entropy (ME) concept introduced by Jaynes [6,7] offers an alternative to a strictly Bayesian-derived PPD in that the resulting ME PPD provides the least-informative or conservative model parameter probability densities. Jaynes argued that the ME is best used for constructing prior probability distributions with minimal assumptions, thereby making it a valuable tool in Bayesian analyses [8]. While setting prior distributions can be useful for certain applications, we agree with the sentiment expressed in [9] that the ME is significantly more general and useful when formulated with a relative entropy function [10], which results in full consistency with Bayes’ rule. In addition, an ME likelihood is derived using the equipartition theorem, which is consistent with a PPD that provides the most conservative degree of information on the state of knowledge of a system described by a physical model, given testable data.

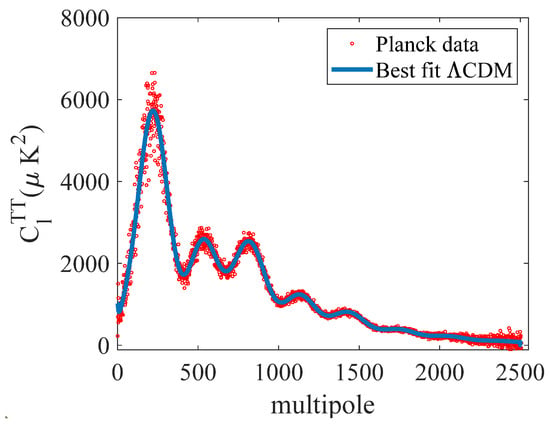

As an application, the ME methodology can be utilized to analyze the multipole power spectra of temperature anisotropies measured by the European Space Agency Planck satellite [11,12] for the purpose of conservatively inferring information on model cosmological parameter values, such as the present-day percentages of matter and dark energy and the adiabatic density fluctuations in the primordial universe. The measurements by the Planck satellite significantly extend the multipole scale and resolution of the power spectra relative to its critically important predecessor, the Wilkinson Microwave Anisotropy Probe (WMAP) [13]. Figure 1 shows the temperature anisotropies in the cosmic microwave background power spectrum measured by the Planck satellite. The top horizontal axis is a multipole scale that is inversely proportional to the angular scale on the bottom horizontal axis. The red dots are measurements made with Planck and the green curve represents the best fit of the ΛCDM model [14,15,16,17], also referred to as the standard model of cosmology. In the ΛCDM model, Λ and CDM refer to dark energy [18,19], or the cosmological constant, and cold dark matter [20,21], respectively. A damped acoustic wave, driven by quantum adiabatic density fluctuations that start during inflation, occurs in a viscous fluid that includes photons and baryonic plasma [22]. The acoustic signatures were frozen on the last scattering surface and are evident in the peak and null structure of the multipole power spectra. The large-angle region from 6 degrees to 90 degrees, known as the Sachs–Wolfe region [23], is where there are uncertainties in both the data and the theory.

Figure 1.

Power spectrum for temperature fluctuations. Graph made available online: https://www.esa.int/ESA_Multimedia/Images/2013/03/Planck_Power_Spectrum (accessed on 6 January 2025), courtesy of the ESA and the Planck Collaboration.

The ME method provides the proper treatment of the model error and its effects on parameter ambiguities and uncertainty. The cosmological model used in the current analysis is an approximate approach referred to as the hydrodynamical model (HM), previously discussed by Hu and Sugiyama [24] and Weinberg [25]. In the current analyses, we only considered the region of . The remainder of this paper is organized as follows. Section 2 presents the ME method and Section 3 presents the HM and parameter space and the assumed parameter constraints used to simulate the power spectra of the CMB temperature anisotropies. Section 4 presents the results and Section 5 and Section 6 provide a brief discussion and the conclusions, respectively.

2. Maximum Entropy

Jaynes [6,7] argued that the Shannon entropy [26,27] developed in information theory and the entropy that appears in the field of statistical mechanics possess the same mathematical logic. Namely, entropy is not just a term that appears in the second law of thermodynamics for a closed system in thermal equilibrium, but rather is a more general mathematical concept. In this paper, this logic was utilized to infer probability distributions for the model parameters Θ that appear in a cosmological model for the expansion of the universe from temperature anisotropy measurements of the cosmic microwave background radiation.

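The development of the ME formalism began by introducing the Kullback relative entropy [10] functional $S$:

$$S = -\int d\Theta\, P(\Theta|D)\, \ln\left[\frac{P(\Theta|D)}{P(\Theta)}\right], \tag{1}$$

where $P(\Theta|D)$ is a conditional posterior probability distribution (PPD), $P(\Theta)$ is the model prior distribution, and $D$ is the measured data. Note that (1) is the same as for statistical mechanics except that the Boltzmann constant is set to unity. The reason one wants to introduce $P(\Theta)$ is to restrict statistical sampling to physical portions of the parameter space. The objective is to find a conditional probability density $P(\Theta|D)$ subject to the following constraints:

$$\int d\Theta\, P(\Theta|D) = 1, \tag{2}$$

and the average error $\langle E \rangle$ of the error function $E(\Theta, D)$ satisfying

$$\langle E \rangle = \int d\Theta\, P(\Theta|D)\, E(\Theta, D). \tag{3}$$

Lagrange multipliers, $\alpha$ and $\beta$, are introduced to find $P(\Theta|D)$, which extremizes $S$ subject to the conditions in (2) and (3). The condition of extremization is

$$\delta\left[ S - \alpha \int d\Theta\, P(\Theta|D) - \beta \int d\Theta\, P(\Theta|D)\, E(\Theta, D) \right] = 0, \tag{4}$$

or

$$\int d\Theta\, \delta P(\Theta|D) \left[ \ln\frac{P(\Theta|D)}{P(\Theta)} + 1 + \alpha + \beta E(\Theta, D) \right] = 0. \tag{5}$$

Since $\delta P(\Theta|D)$ is arbitrary, one finds

$$\ln\frac{P(\Theta|D)}{P(\Theta)} + 1 + \alpha + \beta E(\Theta, D) = 0, \tag{6}$$

or, equivalently,

$$P(\Theta|D) = P(\Theta)\, e^{-(1+\alpha)}\, e^{-\beta E(\Theta, D)}. \tag{7}$$

It is customary to define the partition function as

$$Z = \int d\Theta\, P(\Theta)\, e^{-\beta E(\Theta, D)}. \tag{8}$$

Using (2) and (3), the PPD becomes

$$P(\Theta|D) = \frac{P(\Theta)\, e^{-\beta E(\Theta, D)}}{Z}, \tag{9}$$

where the average error is

$$\langle E \rangle = \frac{1}{Z} \int d\Theta\, E(\Theta, D)\, P(\Theta)\, e^{-\beta E(\Theta, D)}. \tag{10}$$

If $Z$ and $e^{-\beta E(\Theta, D)}$ are identified as $P(D)$ and the likelihood $P(D|\Theta)$, respectively, then one can observe that (9) is Bayes’ rule. We will refer to (9) and (10) as the model space representation of the ME.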
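As a minimal numerical sketch of the model space representation, the partition function in (8), the PPD in (9), and the average error in (10) can be evaluated on a grid for a one-parameter toy problem. The quadratic error function `toy_error`, the uniform prior, the grid, and the function name `me_posterior` are illustrative assumptions and are not taken from the analysis in this paper.

```python
import math

def me_posterior(thetas, errors, prior, beta):
    """Return (posterior, Z, mean_error) on a uniform grid.

    posterior[i] is proportional to prior[i] * exp(-beta * errors[i]) and is
    normalized so that sum(posterior) * dtheta = 1.
    """
    dtheta = thetas[1] - thetas[0]
    e_min = min(errors)
    # Shift by e_min for numerical stability; the shift cancels in the PPD.
    weights = [p * math.exp(-beta * (e - e_min)) for p, e in zip(prior, errors)]
    z_shifted = sum(weights) * dtheta
    posterior = [w / z_shifted for w in weights]
    mean_error = sum(p * e for p, e in zip(posterior, errors)) * dtheta
    z = z_shifted * math.exp(-beta * e_min)
    return posterior, z, mean_error

def toy_error(theta):
    # Hypothetical error function with global minimum E = 0.5 at theta = 1.
    return 0.5 + (theta - 1.0) ** 2

n = 2001
thetas = [-4.0 + 8.0 * i / (n - 1) for i in range(n)]
errors = [toy_error(t) for t in thetas]
prior = [1.0 / 8.0] * n  # uniform prior on [-4, 4]

results = {beta: me_posterior(thetas, errors, prior, beta)
           for beta in (0.01, 1.0, 100.0)}
for beta, (_, z, mean_e) in results.items():
    print(f"beta = {beta:7.2f}   Z = {z:.4e}   <E> = {mean_e:.4f}")
```

In this toy problem, the average error moves toward the global minimum as β grows and toward the prior-averaged error as β shrinks, which is the trade-off that makes the choice of β important.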

Jaynes did not explicitly discuss how to determine β in practice. A simple examination of (10) shows that, if one sets $\langle E \rangle$ to the global minimum $E_{\min}$, such that $E(\Theta, D) \ge E_{\min}$ for all Θ, then β tends to ∞, which gives marginal distributions that are δ-like functions. On the other hand, if $\langle E \rangle$ is set to the average of the error function over the volume of the N-dimensional parameter space, β tends to zero, which gives flat marginal distributions. To overcome this problem, the authors in [28] formulated the data ensemble approach to the ME, which originated with a methodology to train neural networks on multiple data sets [29,30,31]. The data ensemble formulation, as opposed to the model space formulation of the ME, afforded an estimate for $\langle E \rangle$, from which (10) could be solved for β. Instead of using (10) to evaluate $\langle E \rangle$ for a single data set $D$, it is assumed that each measurement is a random draw from a data ensemble possessing a continuum of samples , which permits an approximate evaluation of for the th data sample:

If one has a finite number of random measurements , then

which permits an estimate for () using (10). This concept was recently applied to multiple merchant ships in a shipping lane on the New England continental shelf [32]. However, for the case where is small or there is only a single data sample, as was the case for the current study, a different approach is required. For the CMB, even though what is observed today arose from a random process, one observes only a single realization in the data, and thus, (12) is not suitable.

Instead of trying to solve (10) for β, we used an analogy with classical statistical mechanics that relates β to a statistical temperature . We remark here that has nothing to do with the temperature T of a physical system, including the universe. One first identifies the error function as an energy, and as the Boltzmann factor. Further, one can view the N-dimensional space as a system that is in thermodynamic equilibrium with a heat bath at . In such a case, we used the Helmholtz free energy equation for the free energy , the entropy , , and the internal energy :

and

Continuing with Jaynes’ philosophy, one can identify and as the free Helmholtz error and the internal error, respectively. If we identify , then may be defined as , yielding the following:

Thus, if one identifies the exponential term as a likelihood and knowing that , (15) is once again Bayes’ rule.

Equation (15) seems to suggest that one has simply replaced the problem of estimating β with the problem of estimating . To address this apparent dilemma, it is instructive to derive the equipartition theorem from first principles, which is done in Appendix A. The result is

Equation (16) allows us to identify <E> as the computed global error minimum, and then use , which uniquely specifies . The idea of using (16) was first reported by Nuttall et al. [33] in the analysis of merchant ship noise for the information contained on parameter values that characterize a multilayer seabed. The applicability of the equipartition theorem in this context is heuristic, drawing from analogies with classical statistical mechanics. Although the CMB parameter space is not a literal thermodynamic system, the ME framework uses the theorem as a proxy to derive a statistical temperature that defines the shape of the posterior. This approach has precedent in inverse problems and provides a principled way to avoid underestimating the uncertainty given a single data realization.

With (16), one can then perform a statistical sampling of in the N-dimensional parameter space. To be self-consistent with the Boltzmann form of the likelihood function, was sampled with the Metropolis criterion [34] at a constant temperature . Specifically, we used the simulated annealing algorithm [35,36] of Goffe et al. [37] for sampling at a constant . Once is computed, the marginal probability densities can then be obtained by integration:

In practice, one continues to sample the parameter space until the integrals in (17) converge.
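The constant-temperature sampling described above can be sketched with a plain random-walk Metropolis sampler. This is not the simulated annealing code of Goffe et al. [37]. The two-parameter error surface, the bounds, and the step size are hypothetical, and the statistical temperature is set by assuming that the equipartition relation (16) takes the standard form ⟨E⟩ = N T / 2 with ⟨E⟩ identified as the global error minimum.

```python
import math
import random

def error_fn(theta):
    # Hypothetical error surface with global minimum E_min = 0.02 at (1, -2).
    return 0.02 + (theta[0] - 1.0) ** 2 + 4.0 * (theta[1] + 2.0) ** 2

def metropolis(error, start, lower, upper, temperature, steps, step_size, rng):
    """Sample exp(-E/T) with a uniform prior inside [lower, upper]."""
    current = list(start)
    e_current = error(current)
    samples = []
    for _ in range(steps):
        proposal = [x + rng.gauss(0.0, step_size) for x in current]
        inside = all(lo <= x <= hi for x, lo, hi in zip(proposal, lower, upper))
        if inside:
            e_prop = error(proposal)
            # Metropolis criterion: always accept downhill moves; accept
            # uphill moves with probability exp(-(E_new - E_old) / T).
            if (e_prop <= e_current
                    or rng.random() < math.exp(-(e_prop - e_current) / temperature)):
                current, e_current = proposal, e_prop
        samples.append(list(current))
    return samples

def marginal_histogram(samples, index, lo, hi, bins):
    """Normalized histogram approximating one marginal density."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for s in samples:
        k = min(int((s[index] - lo) / width), bins - 1)
        counts[k] += 1
    total = len(samples) * width
    return [c / total for c in counts]

rng = random.Random(0)
n_dim = 2
e_min = 0.02                       # global minimum of the toy error function
temperature = 2.0 * e_min / n_dim  # assumed equipartition form <E> = N T / 2
lower, upper = [0.0, -3.0], [2.0, -1.0]
samples = metropolis(error_fn, [1.0, -2.0], lower, upper,
                     temperature, 50000, 0.1, rng)
burned = samples[5000:]            # discard an initial transient
marginal_0 = marginal_histogram(burned, 0, lower[0], upper[0], 40)
mean_0 = sum(s[0] for s in burned) / len(burned)
print(f"T = {temperature:.3f}, mean of parameter 0 = {mean_0:.3f}")
```

The histogram plays the role of the integrals in (17): each marginal density is accumulated from the sampled points rather than computed by explicit quadrature.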

Finally, it is of interest to compare the mathematical structure of the ME approach to a standard Bayesian method. The error function chosen for this analysis was

where and are the data and modeled vectors with multipole components and is the transpose. Thus, one can write the ME likelihood as

In contrast, the Bayesian likelihood is

where is the data noise covariance matrix, which generally is unknown. If the off-diagonal elements of are neglected, then

where 𝕀 is the identity matrix and is the data variance. Thus, the Bayesian likelihood is equivalent to the ME expression (up to a constant) in the case where it is assumed that the covariance matrix is diagonal and that = .
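The stated equivalence can be checked numerically. The sketch below assumes that the error function is the plain sum of squared residuals, E = (d − m)ᵀ(d − m), because the normalization used in (18) is not recoverable from this text. Under that assumption, the Gaussian log-likelihood with covariance σ²𝕀 differs from the ME log-likelihood −E/T only by a model-independent constant when T = 2σ². The data and model vectors are made up for illustration.

```python
import math

def squared_error(data, model):
    # Assumed error function: E = (d - m)^T (d - m).
    return sum((d - m) ** 2 for d, m in zip(data, model))

def me_log_likelihood(data, model, temperature):
    # Log of the Boltzmann-form ME likelihood exp(-E / T).
    return -squared_error(data, model) / temperature

def gaussian_log_likelihood(data, model, sigma):
    # Diagonal covariance C = sigma^2 * I, including the normalization term.
    n = len(data)
    norm = -0.5 * n * math.log(2.0 * math.pi * sigma ** 2)
    return norm - 0.5 * squared_error(data, model) / sigma ** 2

data = [1.0, 2.1, 2.9, 4.2]      # hypothetical data vector
model_a = [1.0, 2.0, 3.0, 4.0]   # two hypothetical model predictions
model_b = [1.2, 1.8, 3.3, 3.9]
sigma = 0.3
temperature = 2.0 * sigma ** 2   # matching condition under the assumption above

diff_a = (gaussian_log_likelihood(data, model_a, sigma)
          - me_log_likelihood(data, model_a, temperature))
diff_b = (gaussian_log_likelihood(data, model_b, sigma)
          - me_log_likelihood(data, model_b, temperature))
print(diff_a, diff_b)  # the two differences agree: only a constant offset remains
```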

3. Model, Parameter Space, and Data Selection for Temperature Anisotropy Analysis

3.1. Temperature Anisotropy in the CMB

The quantities of interest in this paper are the correlations, or temperature anisotropies, observed in the CMB, which are usually expressed as an average of temperature differences from the mean value :

in two look directions, and :

Thus, the multipole expansion coefficients are

where is the Legendre polynomials and .

The theoretical description of the temperature anisotropies contained in the is centered on correlating them with gauge-invariant adiabatic quantum fluctuations during inflation [38,39,40,41,42]. Required is a physical model of the evolution of these small perturbations with an expanding multi-constituent universe. The two important aspects of this theory are (1) the Einstein field equations for the expansion of the universe and (2) the time evolution and wavenumber-dependent quantum fluctuations of the energy densities of the constituents comprising the universe.

3.1.1. Expansion of the Universe

The key relation derived from general relativity that describes the expansion of an approximately homogeneous and isotropic universe is the Friedmann equation, and for a flat universe (zero spatial curvature), it is

$$H(z) = H_0 \sqrt{\Omega_M (1+z)^3 + \Omega_R (1+z)^4 + \Omega_\Lambda \exp\left[3 \int_0^z \frac{1 + w(z')}{1 + z'}\, dz'\right]}, \tag{25}$$

where $z$ is the redshift and $H(z)$ and $H_0$ are the expansion rate and Hubble constant, respectively. We used the common notation $H_0 = 100\,h$ km/sec/Mpc. The expansion parameter is $a(t)$, which is evaluated at the present time $t_0$ and at an earlier time $t$, with $1 + z = a(t_0)/a(t)$. The present-day ratios of density relative to the critical density for matter (hadrons, cold dark matter, leptons (not including neutrinos), and Higgs scalar fields), radiation (photons and neutrinos), and dark energy are $\Omega_M$, $\Omega_R$, and $\Omega_\Lambda$, respectively, with $\Omega_M + \Omega_R + \Omega_\Lambda = 1$. It was further assumed that the dark energy density $\rho_\Lambda$ obeys an equation of state:

$$p = w(z)\, \rho_\Lambda, \tag{26}$$

where $p$ is the pressure. When $w = -1$ for all $z$, the last term in the square root in (25) reduces to the constant $\Omega_\Lambda$, the cosmological constant term, which is thought to be the vacuum energy of an unknown substance. Komatsu et al. [13] reported . This value for $w$, currently assumed in the ΛCDM model, gives which is why cosmologists believe that the present-day, dark-energy-dominated universe (~70%) is accelerating in its expansion. However, evidence has recently been reported by the Dark Energy Spectroscopic Instrument (DESI) that $w$ may not be constant [43]. Nevertheless, the current analysis assumed $w = -1$ for all $z$, as does the ΛCDM model.
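For a constant equation-of-state parameter, the flat-universe Friedmann equation reduces to a closed-form expression for the expansion rate that is easy to evaluate. The sketch below also evaluates the present-day deceleration parameter, q0 = Ω_M/2 + Ω_R + (1 + 3w)Ω_Λ/2, whose negative sign for w = −1 corresponds to accelerated expansion. The function names and the numerical density values are illustrative assumptions, not values inferred in this paper.

```python
import math

def hubble_rate(z, h0, omega_m, omega_r, w=-1.0):
    """Expansion rate H(z) in km/sec/Mpc for a flat universe with constant w."""
    omega_de = 1.0 - omega_m - omega_r  # flatness constraint
    e_squared = (omega_m * (1.0 + z) ** 3
                 + omega_r * (1.0 + z) ** 4
                 + omega_de * (1.0 + z) ** (3.0 * (1.0 + w)))
    return h0 * math.sqrt(e_squared)

def deceleration_today(omega_m, omega_r, w=-1.0):
    """Present-day deceleration parameter; q0 < 0 means accelerated expansion."""
    omega_de = 1.0 - omega_m - omega_r
    return 0.5 * omega_m + omega_r + 0.5 * (1.0 + 3.0 * w) * omega_de

h0, omega_m, omega_r = 67.4, 0.315, 9.2e-5  # illustrative values only
for z in (0.0, 1.0, 1090.0):
    print(f"z = {z:7.1f}   H(z) = {hubble_rate(z, h0, omega_m, omega_r):.4g} km/sec/Mpc")
print(f"q0 = {deceleration_today(omega_m, omega_r):.3f}")
```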

3.1.2. Evolution of Quantum Perturbations

It is believed that the quantum perturbations responsible for the observed structure of the CMB and baryon acoustic oscillations (BAO) started during the inflation era [42]. At the conclusion of inflation, the universe was cold and dark; it started to reheat as the energy of the vacuum was transferred into radiation and matter. However, there is no established physical model, such as a non-abelian gauge theory, of how this occurred. Following the reheating era, the early universe consisted primarily of four main constituents: (1) a very dense baryonic plasma consisting of electrons, protons, ions, and neutral atoms; (2) photons; (3) neutrinos; and (4) cold dark matter. The equations governing the mixture of photons and baryonic plasma are generally coupled Boltzmann equations driven by the primordial perturbations.

We require a theory that solves such equations from very early times during inflation through reheating and the radiation-, recombination-, and matter-dominated eras to the present-day dark-energy-dominated era. To this end, paraphrasing Weinberg [38], for a homogeneous universe for which the quantum perturbations of the Einstein field equations always have a solution, in the limit of a large wavelength, the time-dependent scalar fluctuations of the curvature are non-zero and constant in all stages of the evolution of the universe. Further, this condition remains valid whatever the constituents of the universe are; there is always a solution to the field equations for which is conserved outside the horizon, i.e., , and these solutions are referred to as adiabatic.

is conserved outside the horizon in inflation driven by a single scalar field. During inflation, the time-dependent scalar fluctuations of the curvature satisfy the Mukhanov–Sasaki equations [39,40,41], which are integrated from an early time during inflation to a time where , allowing one to write the main scalar adiabatic mode in the Newtonian gauge as

where are scalar perturbations. In inflation scenarios, is parameterized outside the horizon as

where , , and are known as the amplitude, the spectral tilt, and a reference wavenumber, respectively. The value refers to a scale-invariant spectrum. The conventional value of and due to the details of the normalization, its choice is arbitrary.

3.1.3. Hydrodynamic Model

The ΛCDM model, the standard cosmological model, solves the time evolution of wavenumber-dependent perturbations. It has had unprecedented success in describing many aspects of observational cosmology. For example, both the WMAP group and the Planck collaboration reported no clear evidence that the ΛCDM model requires any upgrades. However, for this study, we did not use the ΛCDM model; rather, we used an approximate model to predict the extrema multipoles for the scale power spectrum data. The reason for this was that the high-accuracy model, which involves solving coupled Boltzmann equations, provides little insight into the parameter correlations that, in part, drive uncertainty. Instead, we followed Hu and Sugiyama [24] and Weinberg [25], who developed an approximate semi-analytic hydrodynamic model (HM) that permitted a transparent analytic solution for .

The equations for the perturbations were first developed in the hydrodynamic limit. Then, solutions were found for the long wavelength limit ( ) and also for the short wavelength limit (, ). Transfer functions interpolate between these two limits to provide full solutions. The main result for the hydrodynamic model is

where the transfer functions , S, and represent the q dependence for times well before matter–photon equilibrium to times well after. Expressions for , S, and in closed form are provided in [25] and are reproduced in Appendix A and in [17]. The main physics takeaway is that the solutions to the perturbations for the cold dark matter, photons, and baryons are valid from wavelengths that are far outside the horizon to very short wavelengths inside the horizon, and as such, the transfer functions provide the evolution of the perturbation from the primordial universe during inflation to the present day.

Additional approximations concerning the time evolution result in a simple expression for the scalar component of the power spectrum:

where , and .

The factor is an effective damping term that accounts for the effect of the scattering of photons by free electrons, especially following reionization. As a result of the approximations used to derive , the prefactor in (30) has the form , and thus, there exists an unresolvable ambiguity for and . In the current computation, we fixed = 0.80209 or [44] and then allowed to be one of the free parameters. This value for was consistent with the value of ≈ 0.09 obtained using low-l polarization data from the WMAP collaboration [45] in an analysis of the reionization history of intergalactic matter.

If we define , then the angular distance from Earth to the surface of the last scatter is

where is the redshift at last scatter. The acoustic horizon distance at the last scatter is

where is ¾ of the ratio of the baryon-to-photon energy density at the last scatter. The parameter is the distance from a receiver to the surface of the last scatter when is about 13.8 Gyr into the past. It was at this time that the temperature of the universe had cooled sufficiently to allow light to escape the cosmic soup. The variables are thus and . Also, the redshift at the last scatter is taken to be 1090, and is evaluated at the time when there were equal amounts of radiation and matter. Equation (30) includes relations that allow for wavelengths to enter the horizon during the period when the universe was composed of roughly equal parts radiation and matter. Finally, is a damping length. One can see that the CMB multipole spectrum depends on , , , , and .
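To give a sense of scale for the distance to the surface of the last scatter, the sketch below integrates the flat-universe expansion rate with w = −1 out to a redshift of 1090. It uses the standard angular diameter distance, d_A = c / [H0 (1 + z)] ∫ dz′ / E(z′), which is assumed here to match the definition in (31). The function names, the density values, and the choice of Simpson's rule in ln(1 + z) are illustrative assumptions.

```python
import math

C_KM_S = 299792.458  # speed of light in km/s

def inverse_e(z, omega_m, omega_r):
    """1 / E(z) for a flat universe with a cosmological constant (w = -1)."""
    omega_l = 1.0 - omega_m - omega_r
    return 1.0 / math.sqrt(omega_m * (1.0 + z) ** 3
                           + omega_r * (1.0 + z) ** 4
                           + omega_l)

def comoving_distance(z_max, h0, omega_m, omega_r, n=2000):
    """Comoving distance in Mpc via Simpson's rule in u = ln(1 + z); n must be even."""
    u_max = math.log(1.0 + z_max)
    du = u_max / n
    total = 0.0
    for i in range(n + 1):
        z = math.exp(i * du) - 1.0
        f = (1.0 + z) * inverse_e(z, omega_m, omega_r)  # dz = (1 + z) du
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * f
    return (C_KM_S / h0) * total * du / 3.0

def angular_diameter_distance(z, h0, omega_m, omega_r):
    return comoving_distance(z, h0, omega_m, omega_r) / (1.0 + z)

h0, omega_m, omega_r = 67.4, 0.315, 9.2e-5  # illustrative values only
z_last_scatter = 1090.0
d_a = angular_diameter_distance(z_last_scatter, h0, omega_m, omega_r)
print(f"d_A = {d_a:.2f} Mpc")
```

Integrating in ln(1 + z) keeps the integrand smooth over three decades in redshift, so a modest number of Simpson intervals is sufficient.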

The advantage of the HM is that, despite the loss of accuracy as compared to the standard ΛCDM model, it contains the essential elements that afford the statistical inference of cosmological parameters from the extrema data. The ΛCDM model has one parameter that is a proxy for a physical parameter, and it is not totally clear why the model works as well as it does. The HM, on the other hand, while not as accurate as the ΛCDM model, is in closed analytic form and affords a clear understanding of the parameter sensitivities describing the multipole spectra of the CMB.

3.2. Parameter Space

A basic step in any statistical inference problem is to define the parameter space by identifying the parameters that can be varied between lower and upper bounds, the parameters that are considered fixed, and the parameter constraints. The parameter constraints allow one to implicitly infer additional parameter values and uncertainties outside the N-dimensional parameter space. The analysis in this paper considered the five-dimensional parameter space . For each point in the 5D space, values of and were implicitly computed from the constraints and . In contrast, the ΛCDM model has a 6D parameter space that, instead of , includes , the percentage of cold dark matter in today’s universe. Neglecting the mass of neutrinos, . Furthermore, in addition to , the ΛCDM model makes an explicit search for , whereas in the HM, we fixed from previous analyses of WMAP polarization data, as discussed in Section 3.1.3. The reason that ΛCDM solves for both and is that it solves a complex set of coupled Boltzmann equations that arise when describing the time evolution of the quantum fluctuations, which consist of mostly cold dark matter, driving the acoustic oscillations of the baryonic plasma. Finally, the ΛCDM model has another parameter, , that is not in the HM and may be viewed as a proxy parameter for , where r∗ is the sound horizon at the time of recombination and is the comoving angular distance.

The lower bounds (LB) and upper bounds (UB) of the parameter space are shown in Table 1. It was assumed that the prior was uniform between the LB and the UB. The uniform priors were consistent with Jaynes’ ME philosophy. In the analysis, the UB and LB were made wide enough so that each resulting marginal probability density P(x) would satisfy P(x) = 0 for x ≥ UB and for x ≤ LB.

Table 1.

Parameter space for hydrodynamic model.

3.3. Data Selection

The data made available are shown in Figure 2. Also made available was the best-fit curve of the data points shown in Figure 1 using the ΛCDM model. The goal in any data-selection process is to balance the benefits of a large bandwidth (in the present case, the multipole bandwidth) against the need to minimize model errors. A subset of the data was chosen where there was a small variance between the selected data points and the best-fit ΛCDM model. Further, the selected data included the reported multipole and amplitude values at the extrema, as reported in [11]. These points were derived by finding the best fit for an assumed Gaussian. Further, to avoid both data and model noise, the points selected for the inversion were in the range of . The upper value of 1446 was determined from pre-modeling, which showed that the HM did a reasonably good job overall but consistently underpredicted the amplitudes for l > 1500.

Figure 2.

The Planck power spectrum data and the best-fit ΛCDM model have been made available by the European Space Agency: https://irsa.ipac.caltech.edu/data/Planck/release_2/ancillary-data/ (accessed on 6 January 2025).

Once the parameter space and constraints were defined, a statistical inference began by defining , the model priors. The parameter estimation proceeded as follows: First, the global minimum of the error function was found in the 5D parameter space. Then, this minimum value of was used to compute the statistical temperature via the equipartition relation (16). Using this , we applied the Metropolis criteria within a simulated annealing framework at a constant to sample the parameter space. From the resulting ensemble, we evaluated the posterior probability density and computed the marginal distributions via integration (17). This process was repeated until the convergence of all the marginal densities was achieved.
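The final step, repeating the sampling until the marginal densities converge, needs a stopping rule, which the text does not specify. The sketch below uses one hypothetical criterion: samples are added in batches, and sampling stops when the largest change in any histogram bin between successive batches falls below a tolerance. Draws from a unit Gaussian stand in for the output of the sampler, and all names and thresholds are assumptions.

```python
import random

def histogram_density(values, lo, hi, bins):
    """Normalized histogram of `values` on [lo, hi)."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        if lo <= v < hi:
            counts[min(int((v - lo) / width), bins - 1)] += 1
    return [c / (len(values) * width) for c in counts]

def sample_until_converged(draw, lo, hi, bins=30, batch=20000,
                           tol=0.01, max_batches=50):
    """Add batches of samples until the marginal density stops changing."""
    values = [draw() for _ in range(batch)]
    previous = histogram_density(values, lo, hi, bins)
    change = float("inf")
    n_batches = 1
    while n_batches < max_batches:
        values.extend(draw() for _ in range(batch))
        n_batches += 1
        current = histogram_density(values, lo, hi, bins)
        # Largest absolute change in any bin since the previous batch.
        change = max(abs(a - b) for a, b in zip(current, previous))
        previous = current
        if change < tol:
            break
    return previous, n_batches, change

rng = random.Random(1)
density, n_batches, change = sample_until_converged(
    lambda: rng.gauss(0.0, 1.0), -4.0, 4.0)
print(f"stopped after {n_batches} batches, last change = {change:.4f}")
```

In a multi-parameter problem, the same check would be applied to every marginal, and sampling would continue until all of them pass.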

4. Results

4.1. Probability Densities and Parameter Estimates

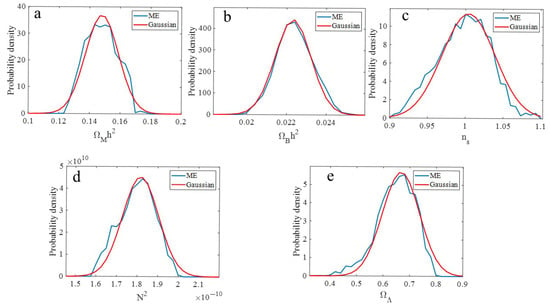

Figure 3 shows the probability densities derived from the ME PPD. The distribution exhibited Gaussian-like characteristics, as did and . In contrast, had a main peak, but was negatively skewed. The negative skew means that the mean of the inferred was less than the value where the peak occurred. To obtain estimates of the parameter mean values and standard deviations, a best-fit Gaussian was plotted with each . The selection of a Gaussian to extract information from the ME distribution was consistent with the goal of the ME to infer the most conservative estimate of the uncertainty, since the Gaussian is the maximum-entropy, and hence least informative, distribution for a specified mean and variance.

Figure 3.

ME and Gaussian best-fit probability densities for (a) , (b) , (c) , (d) , and (e) .
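The effect of a negative skew on a Gaussian summary can be illustrated with a toy density. The sketch below uses moment matching, that is, the Gaussian with the same mean and variance as the density, as a simple stand-in for the best-fit Gaussian used in the analysis. The skewed density, built from a main peak plus a broad low-side shoulder, is hypothetical.

```python
import math

def gaussian(x, mean, sigma):
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def density_moments(xs, pdf):
    """Mean and standard deviation of a density tabulated on a uniform grid."""
    dx = xs[1] - xs[0]
    norm = sum(pdf) * dx
    mean = sum(x * p for x, p in zip(xs, pdf)) * dx / norm
    var = sum((x - mean) ** 2 * p for x, p in zip(xs, pdf)) * dx / norm
    return mean, math.sqrt(var)

# Hypothetical negatively skewed density on [0.5, 1.0].
xs = [0.5 + 0.5 * i / 1000 for i in range(1001)]
pdf = [gaussian(x, 0.85, 0.03) + 0.4 * gaussian(x, 0.75, 0.06) for x in xs]

mean, sigma = density_moments(xs, pdf)
mode = xs[max(range(len(pdf)), key=pdf.__getitem__)]
print(f"mode = {mode:.3f}, mean = {mean:.3f}, sigma = {sigma:.3f}")
```

The mean falls below the location of the peak, and the matched Gaussian is wider than the main peak alone, which is the conservative behavior described above.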

Table 2 shows the ME Gaussian-inferred parameter values. Included in Table 2 are the optimal parameter values. While h and are not explicit parameters, one can use the constraints and with Monte Carlo sampling to find their implicit mean values and uncertainties. These ME-inferred parameter values are compared to the most recent values reported by the Planck collaboration that fused together multiple data sets and other astrophysical constraints [12]. While the Planck values for suggest a small deviation from scale invariance (= 1), the ME values do not support deviations from scale invariance in the primordial density fluctuations. The mean values derived from the ME-inferred parameter , which had near Gaussian-like characteristics, agreed well with the Planck values. In contrast, the mean values derived from the ME-inferred parameter , which deviated slightly from Gaussian-like characteristics, agreed less well with the Planck values. Furthermore, the mean value derived from the ME-inferred parameter , which was negatively skewed, was less than the Planck value. Because of the constraints, the mean values for h and were in reasonable agreement with the Planck values; for example, the ME-derived value for H0 was about 67.0 km/sec/Mpc as compared to the Planck value of 67.4 km/sec/Mpc.
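The Monte Carlo propagation through constraints can be sketched as follows. The constraint equations themselves are not recoverable from this text, so the sketch assumes, purely for illustration, that the sampled quantities are Ω_Λ and the physical matter density Ω_M h², that flatness gives Ω_M = 1 − Ω_Λ with radiation neglected, and that h then follows from h = sqrt(Ω_M h² / Ω_M). The Gaussian means and widths are hypothetical and are not the values in Table 2.

```python
import math
import random

def implied_h(omega_lambda, omega_m_h2):
    """h implied by two assumed constraints: flatness and Omega_M h^2."""
    omega_m = 1.0 - omega_lambda          # flatness, radiation neglected
    return math.sqrt(omega_m_h2 / omega_m)

rng = random.Random(2)
draws = []
for _ in range(20000):
    omega_lambda = rng.gauss(0.685, 0.03)   # hypothetical marginal
    omega_m_h2 = rng.gauss(0.143, 0.005)    # hypothetical marginal
    if omega_lambda < 1.0 and omega_m_h2 > 0.0:  # keep physical draws only
        draws.append(implied_h(omega_lambda, omega_m_h2))

mean_h = sum(draws) / len(draws)
std_h = math.sqrt(sum((h - mean_h) ** 2 for h in draws) / (len(draws) - 1))
print(f"H0 = {100 * mean_h:.1f} +/- {100 * std_h:.1f} km/sec/Mpc")
```

Because h depends on the ratio of two sampled quantities, its spread reflects both input uncertainties, and correlated inputs, which this sketch ignores, could narrow or widen it.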

Table 2.

Comparison of Gaussian fit to ME-inferred parameter probability densities to the Planck 2018 values [12]. The Planck results used a slightly different parameterization for the scalar curvature and it is not straightforward to compare values for . The Planck values shown here are from the TT, TE, EE+lowE+lensing combination. For a more direct comparison to the scalar-only analysis, the TT+lowE results from Table 2 of Planck 2018 [12] would be more appropriate. The Planck mean value for was 0.0544, as compared to the HM value fixed at 0.1100.

The full ME inference process, including the sampling of the 5D parameter space and posterior marginalization, was executed on a single-core CPU system. The total runtime to achieve the convergence of the marginal distributions was about 100 h. Parallelization or GPU-based approaches could significantly reduce this cost.

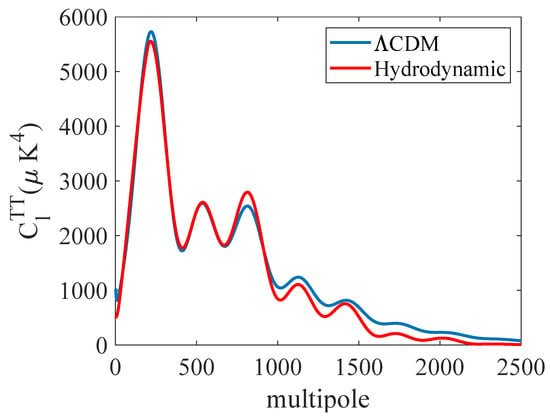

4.2. Modeled and Measured Power Spectra

Figure 4 compares the for the ΛCDM and the HM for the average ME Gaussian parameter values in Table 2. The sampling of the error function found parameter values that lowered the error by optimizing the fit to the first and second high-amplitude peaks at the expense of underfitting the power spectra for higher values of l. The hydrodynamical model predicted the first two peaks and troughs at about the correct amplitudes. However, the amplitude of the third peak predicted by the HM exceeded that of the ΛCDM prediction and was shifted to slightly higher multipole values.

Figure 4.

Comparison of the HM with average parameter values to the best fit made by the ΛCDM model. The best-fit ΛCDM power spectrum was obtained online: https://irsa.ipac.caltech.edu/data/Planck/release_2/ancillary-data/ (accessed on 6 January 2025).

The main difference between the ΛCDM model and the HM was in the amplitude, especially for the higher multipoles, where the HM tended to underpredict the values. However, if one examines the multipole values in Table 3 where the extrema occurred, the differences between the ΛCDM model and the HM were smaller. On average, the ΛCDM model slightly underpredicted the measured extrema multipole values, while the HM slightly overpredicted them, by about twice as much as the ΛCDM model. This suggests that, if we were to have used the ΛCDM model, the residual error, and thus, the temperature, would be reduced by about a factor of four. Therefore, the probability distributions would be narrower, but not necessarily as narrow as those reported by the Planck collaboration.
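The factor of four can be made explicit under two assumptions that are not stated in this form in the text: that the error function is quadratic in the residuals, and that the statistical temperature is proportional to the minimum error, as the equipartition relation (16) suggests. Writing $\delta$ for a typical residual,

$$
E_{\min} \propto \delta^{2}, \qquad T \propto E_{\min}
\quad\Longrightarrow\quad
\delta \to \tfrac{1}{2}\,\delta \;\;\text{gives}\;\; E_{\min} \to \tfrac{1}{4}\,E_{\min}, \quad T \to \tfrac{1}{4}\,T .
$$

For a locally quadratic error surface, the width of a marginal density scales as $\sqrt{T}$, so a fourfold reduction in $T$ would narrow the distributions by roughly a factor of two.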

Table 3.

Comparison of modeled and measured multipole extrema.

5. Discussion

Why do the HM and Planck inferences yield a reasonable level of agreement on H0? The short answer is that neither computation infers H0 explicitly; rather, both infer H0 implicitly via the constraints and , where the latter constraint is a result of the fact that, from the time of decoupling to the present, the universe has had approximately zero curvature. This is an example of how ambiguities in the explicit parameters can cancel in the inference of a parameter that is implicitly determined. This observation reaffirms the Planck 2018 case that the value for H0 is about 67.4 km/sec/Mpc.

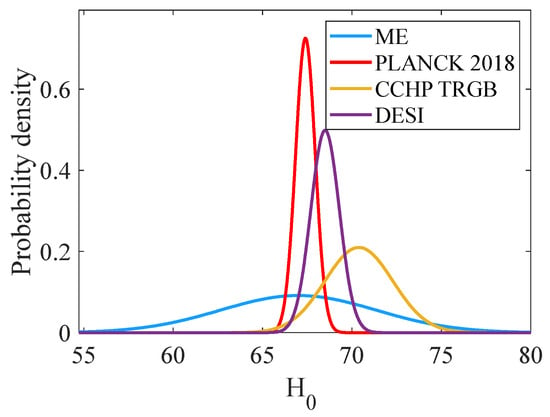

Figure 5 compares the ME Gaussian distributions for H0 to those obtained with the Kitt Peak DESI and from the CCHP using data collected from the James Webb Space Telescope (JWST). The mean ME value was consistent with the Planck average parameters and within 1σ of the mean values of the DESI and CCHP studies. Due to the conservative nature of the ME approach and the model error, the current analysis did not rule out the possible existence of the so-called Hubble tension, but did not exacerbate it. Since 2024, the CCHP study has decreased its estimate of H0 from about 72.5 to 70.5 km/sec/Mpc, bringing it closer to the Planck values [46,47]. Thus, one should exercise caution in declaring that there is a Hubble tension, since the analysis of the JWST data is ongoing.

Figure 5.

Comparison of the ME Gaussian distributions for to probability distributions from the Planck Collaboration [11,12], the Dark Energy Spectroscopic Instrument (DESI) Collaboration [43], and the Carnegie–Chicago Hubble Program (CCHP) using the tip of the red giant branch (TRGB) method measurements [46].
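The "within 1σ" statement can be checked with the numbers quoted in this paper. The sketch below measures each offset in units of the ME standard deviation only and deliberately ignores the uncertainties of the other experiments; the function name `sigma_offset` is illustrative.

```python
def sigma_offset(value, mean, sigma):
    """Distance of `value` from `mean` in units of `sigma`."""
    return abs(value - mean) / sigma

me_mean, me_sigma = 67.0, 4.4  # ME mean and standard deviation from the Abstract
planck_mean = 67.4             # Planck mean quoted in Section 4.1
cchp_mean = 70.4               # CCHP mean quoted in the Conclusions

planck_offset = sigma_offset(planck_mean, me_mean, me_sigma)
cchp_offset = sigma_offset(cchp_mean, me_mean, me_sigma)
print(f"Planck: {planck_offset:.2f} sigma, CCHP: {cchp_offset:.2f} sigma")
```

Both offsets are below one ME standard deviation, mainly because the ME uncertainty is broad rather than because the central values coincide.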

Although the present study did not directly evaluate the , the ME method offers a robust way to constrain cosmological parameters, including the and matter–energy densities, which together define the expansion history of the universe. By propagating uncertainties in a conservative manner, the ME provides a framework that could be extended to yield credible bounds on the cosmic age in future work.

6. Conclusions

This paper introduces a fully self-consistent statistical inference approach based on a variant of the Jaynes maximum entropy approach. The method was applied to the scalar component of the cosmic microwave background measurements made by the Planck satellite. A relative entropy function and the equipartition theorem, which provided a statistical temperature, were utilized to derive a posterior probability distribution. The statistical temperature was then used in Metropolis criterion sampling of the PPD. From the PPD, probability densities for the parameter values were then computed. Consistent with the goal of the ME was the use of a Gaussian fit to the probability densities to extract the means and standard deviations for the parameter space. In this sense, the ME analysis presented in this study represents a self-consistent approach. A comparison of the Bayesian and ME methods was presented, with the main difference being how the Bayesian approach estimates the noise covariance matrix, which includes both data and model errors, during the formulation of the likelihood function.

The ME approach adopts the position that the most conservative approach is to make no assumptions about the covariance matrix other than that one can ascertain an average error value. In the current study, the equipartition theorem was employed to relate the average error to a statistical temperature that uniquely specified the likelihood function, and thus, the PPD. This inherently conservative uncertainty reflects the ME approach’s avoidance of assumptions regarding the data covariance matrix, which distinguishes it from conventional Bayesian analyses with narrower error estimates. Once the marginal distributions are inferred, one still has the task of inferring the information content of the probability density functions, and this analysis used a best-fit Gaussian distribution to extract mean values and uncertainties.
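The link between an average error and a statistical temperature can be sketched numerically. The example below assumes the equipartition relation takes the standard form ⟨E⟩ = N T / 2 for N quadratic degrees of freedom, with the Boltzmann constant absorbed into T. The function name `statistical_temperature` and the synthetic error values are hypothetical and serve only to check the relation.

```python
import random


def statistical_temperature(errors, n_params):
    """Equipartition estimate (assumed form): <E> = N*T/2, so T = 2<E>/N."""
    mean_error = sum(errors) / len(errors)
    return 2.0 * mean_error / n_params


# Synthetic check: draw N Gaussian deviations with variance T/2 each, so that
# the quadratic error E = sum(x_i^2) has mean N*T/2 by construction.
rng = random.Random(1)
T_TRUE = 0.5   # hypothetical temperature used to generate the errors
N_PARAMS = 5   # hypothetical number of parameters
sigma = (T_TRUE / 2.0) ** 0.5

errors = [
    sum(rng.gauss(0.0, sigma) ** 2 for _ in range(N_PARAMS))
    for _ in range(20000)
]
T_est = statistical_temperature(errors, N_PARAMS)
print(f"estimated temperature: {T_est:.3f}")
```

The only input is the average error, which is consistent with the position that nothing beyond an average error value is assumed about the covariance.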

This paper contributes to the ongoing Hubble tension controversy by providing an alternative method for estimating cosmological parameters through ME inference rather than the more commonly used Bayesian methods. The ME-inferred mean value of H0 was about 67 km/sec/Mpc, which is consistent with the Planck value; however, the ME uncertainty was about 4.3 km/sec/Mpc, compared to the Planck uncertainty value of about 0.05 km/sec/Mpc. These mean values contrast with the mean value of the CCHP study of about 70.4 km/sec/Mpc from redshift data. This discrepancy is at the heart of what has been called the Hubble tension, a major unresolved issue in modern cosmology. Although the ME approach does not definitively resolve the Hubble tension, it provides a valuable perspective. The inferred ME value lies within 1σ of both early-universe estimates (Planck, DESI) and late-universe measurements (e.g., CCHP). Thus, the ME analysis does not appear to support the existence of a tension. It is important to note that the analysis of the JWST data is an ongoing process, where the mean values and uncertainties for H0 are still being evaluated. In addition to the Hubble tension, the new DESI measurements place the assumption that in (26) and independent of time into question [43].
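The "within 1σ" statement can be checked with simple arithmetic, using the approximate values quoted in this paper: an ME mean of about 67 km/sec/Mpc with a standard deviation of about 4.3 km/sec/Mpc, and a CCHP mean of about 70.4 km/sec/Mpc. The helper name `offset_in_sigma` is hypothetical, and the sketch measures the offset in units of the ME standard deviation only, ignoring the CCHP's own uncertainty.

```python
def offset_in_sigma(mean_a, sigma_a, value_b):
    """Distance of value_b from mean_a, in units of sigma_a."""
    return abs(value_b - mean_a) / sigma_a


# Approximate values quoted in the text (km/sec/Mpc).
ME_MEAN, ME_SIGMA = 67.0, 4.3
CCHP_MEAN = 70.4

offset = offset_in_sigma(ME_MEAN, ME_SIGMA, CCHP_MEAN)
print(f"CCHP mean lies {offset:.2f} ME standard deviations from the ME mean")
```

With these inputs the offset is 3.4/4.3, which is below one ME standard deviation.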

The above discussion suggests that a potential future use of the ME approach lies in testing extensions of the cosmological model, for example, alternative theories of dark energy and inflation scenarios, against uncertain data such as those found in the Sachs–Wolfe region. One would expect model errors in such tests, and the ME would conservatively estimate the resulting uncertainty. Given its conservative nature, the ME could serve as a neutral arbiter in comparing models under uncertain data. The ME approach would mitigate accidental bias in the construction of a likelihood for such extensions of the cosmological model.

Author Contributions

Conceptualization, D.P.K.; Writing – original draft, D.P.K.; Writing – review & editing, M.F.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available at https://irsa.ipac.caltech.edu/data/Planck/release_2/ancillary-data/ (accessed on 6 January 2025).

Acknowledgments

No financial or material support was received from Meta for the research presented in this paper. All the work was conducted independently, and any resources used were personally acquired or obtained through external means. The first author acknowledges a highly beneficial course in cosmology taught by E. Komatsu at the University of Texas at Austin in 2012.

Conflicts of Interest

Author Mark F. Westling was employed by the company Meta. The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Appendix A

There are various ways to derive the equipartition theorem. The following was taken from Reif [48]. Consider the energy E of a system as a function of N canonical coordinates and momenta :

A guiding principle in both classical and quantum physics is that separates into

and that has the functional form

which immediately allows one to identify Σ as a potential. A natural question is as follows: how does one construct in a thermodynamic equilibrium at a temperature if (A1), (A2) are satisfied?

Since the N components of the system are distributed by a canonical distribution, (A3) gives a modicum of mathematical steps:

and from (A2), this becomes

or

The integral terms in the numerator and denominator are common factors:

or

and one easily finds that

Thus, for N dimensions, we finally arrive at a relationship between the average energy E in an N-dimensional space as

For further reading on the equipartition theorem that places it on a firm mathematical foundation, the reader is referred to Tolman [49].
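For orientation, the standard textbook route to this result can be sketched as follows. The notation is the conventional one and is an assumption here: $\epsilon_i$ for the quadratic term, $E'$ for the remainder of the energy, $b$ for a constant, and $\beta = 1/kT$. These symbols may differ from those used in (A1) to (A3).

```latex
\begin{align}
  % Energy separates into one quadratic term plus a remainder independent of p_i
  E &= \epsilon_i(p_i) + E'(q_1,\ldots,p_N), \qquad \epsilon_i(p_i) = b\,p_i^{2}, \\
  % Canonical mean of the quadratic term; the E' integrals cancel
  \bar{\epsilon}_i
    &= \frac{\int e^{-\beta E}\,\epsilon_i \, dq_1 \cdots dp_N}
            {\int e^{-\beta E}\, dq_1 \cdots dp_N}
     = \frac{\int e^{-\beta \epsilon_i}\,\epsilon_i \, dp_i}
            {\int e^{-\beta \epsilon_i}\, dp_i}
     = -\frac{\partial}{\partial \beta}
        \ln \int_{-\infty}^{\infty} e^{-\beta b p_i^{2}}\, dp_i, \\
  % Substitution y = \sqrt{\beta}\, p_i isolates the beta dependence
  \int_{-\infty}^{\infty} e^{-\beta b p_i^{2}}\, dp_i
    &= \beta^{-1/2} \int_{-\infty}^{\infty} e^{-b y^{2}}\, dy, \\
  % Only the -\tfrac{1}{2}\ln\beta term survives the derivative
  \bar{\epsilon}_i &= \frac{1}{2\beta} = \tfrac{1}{2} kT, \\
  % Summing over N independent quadratic terms
  \bar{E} &= \frac{N}{2}\, kT .
\end{align}
```

Each quadratic degree of freedom contributes $kT/2$ to the mean energy, so a measured average energy in $N$ dimensions fixes the temperature.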

Appendix B

The transfer functions were constructed by interpolating their values between the limits where and . The arguments of the transfer functions are wavenumber . Weinberg [25] noted that D. Dicus created tables for these transfer functions along with fitting formulas:

In [25], the fitting formula for is missing the square root, as also noted in [17]. In the actual computations, Table 6.1 in [25] was used with a simple interpolation for . For , the fitting formulas were used.

References

1. Sen, M.K.; Stoffa, P.L. Bayesian inference, Gibbs’ sampler and uncertainty estimation in geophysical inversion. Geophys. Prospect. 1996, 44, 313–350.

2. Green, P.J. Trans-dimensional Markov chain Monte Carlo. In Oxford Statistical Science Series; Oxford University Press: Oxford, UK, 2003; Chapter 6; Volume 4, pp. 179–198.

3. Sambridge, M.; Gallagher, K.; Jackson, A.; Rickwood, P. Trans-dimensional inverse problems, model comparison and the evidence. Geophys. J. Int. 2006, 167, 528–542.

4. Parmer, V.; Thapa, V.B.; Kumar, A.; Bandyopadhyay, D.; Sinha, M. Bayesian inference of the dense-matter equation of state of neutron stars with antikaon condensation. Phys. Rev. C 2024, 110, 045804.

5. Beznogov, M.V.; Raduta, A.R. Bayesian survey of the dense matter equation of state built upon Skyrme effective interactions. Astrophys. J. 2024, 966, 216.

6. Jaynes, E.T. Information theory and statistical mechanics. Phys. Rev. 1957, 106, 620–630.

7. Jaynes, E.T. Information theory and statistical mechanics II. Phys. Rev. 1957, 108, 171–190.

8. Jaynes, E.T. Prior probabilities. IEEE Trans. Syst. Sci. Cybern. 1968, 4, 227–241.

9. Giffin, A.; Caticha, A. Updating probabilities with data and moments. In Proceedings of the 27th International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering, Saratoga Springs, NY, USA, 8–13 July 2007; pp. 1–5.

10. Kullback, S.; Leibler, R.A. On information and sufficiency. Ann. Math. Stat. 1951, 22, 79–86.

11. Aghanim, N.; Akrami, Y.; Ashdown, M.; Aumont, J.; Baccigalupi, C.; Ballardini, M.; Banday, A.J.; Barreiro, R.B.; Bartolo, N.; Basak, S.; et al. Planck 2018 results: Overview and the cosmological legacy of Planck. Astron. Astrophys. 2020, 641, 1–56.

12. Aghanim, N.; Akrami, Y.; Ashdown, M.; Aumont, J.; Baccigalupi, C.; Ballardini, M.; Banday, A.J.; Barreiro, R.B.; Bartolo, N.; Basak, S.; et al. Planck 2018 results: VI. cosmological parameters. Astron. Astrophys. 2020, 641, 1–67.

13. Komatsu, E.; Smith, K.M.; Dunkley, J.; Bennett, C.L.; Gold, B.; Hinshaw, G.; Jarosik, N.; Larson, D.; Nolta, M.R.; Page, L.; et al. Seven-year Wilkinson microwave anisotropy probe (WMAP) observations: Cosmological observations. Astrophys. J. Suppl. Ser. 2011, 192, 18.

14. Peebles, P.J.E.; Yu, J.T. Primeval adiabatic perturbation in an expanding universe. Astrophys. J. 1970, 162, 815–836.

15. Sunyaev, R.A.; Zel’dovich, Y.B. Small-scale fluctuations of relic radiation. Astrophys. Space Sci. 1970, 7, 3–19.

16. Lewis, A.; Challinor, A.; Lasenby, A. Efficient Computation of CMB anisotropies in closed FRW models. Astrophys. J. 2000, 538, 473–476.

17. Helm, J. A new version of the Lambda-CDM cosmological model, with extensions and new calculations. J. Mod. Phys. 2024, 15, 193–238.

18. Riess, A.G.; Filippenko, A.V.; Challis, P.; Clocchiatti, A.; Diercks, A.; Garnavich, P.M.; Gilliland, R.L.; Hogan, C.J.; Jha, S.; Kirshner, R.P.; et al. Observational evidence from supernovae for an accelerating universe and a cosmological constant. Astron. J. 1998, 116, 1009–1038.

19. Peebles, P.J.E.; Ratra, B. The cosmological constant and dark energy. Rev. Mod. Phys. 2003, 75, 559–606.

20. Frenk, C.S.; White, S.D.M. Dark matter and cosmic structure. Ann. Phys. 2012, 524, 507–534.

21. Diemand, J.; Moore, B. The structure and evolution of cold dark matter halos. Adv. Sci. Lett. 2011, 4, 297–310.

22. Eisenstein, D.J.; Hu, W. Baryonic features in the matter transfer function. Astrophys. J. 1998, 496, 605–614.

23. Sachs, R.K.; Wolfe, A.M. Perturbations of a cosmological model and angular variations of the microwave background. Astrophys. J. 1967, 147, 73.

24. Hu, W.; Sugiyama, N. Anisotropies in the cosmic microwave background: An Analytic Approach. Astrophys. J. 1995, 444, 489–506.

25. Weinberg, S. Cosmology; Oxford University Press: Oxford, UK, 2008; Chapters 5–7; p. 10.

26. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

27. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 623–656.

28. Knobles, D.P.; Sagers, J.D.; Koch, R.A. Maximum entropy approach to statistical inference for an ocean acoustic waveguide. J. Acoust. Soc. Am. 2012, 131, 1087–1101.

29. Bilbro, G.; Van den Bout, D.E. Maximum entropy and learning theory. Neural Comput. 1992, 4, 839–853.

30. Tishby, N.; Levin, E.; Solla, S.A. Consistent inference of probabilities in layered networks: Predictions and generalization. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Washington, DC, USA, 18–22 June 1989; Volume 2, pp. 403–410.

31. Levin, E.; Tishby, N.; Solla, S.A. A statistical approach to learning and generalization in layered neural networks. Proc. IEEE 1990, 78, 1568–1574.

32. Knobles, D.P.; Neilsen, T.; Hodgkiss, W. Inference of source signatures of merchant ships in shallow ocean environments. J. Acoust. Soc. Am. 2024, 155, 3144–3155.

33. Nuttall, J.R.; Neilsen, T.B.; Transtrum, M.K. Maximum entropy temperature selection via the equipartition theorem. Proc. Meet. Acoust. 2023, 52, 070004.

34. Metropolis, N.; Rosenbluth, A.W.; Rosenbluth, M.N.; Teller, A.H.; Teller, E. Equation of state calculations by fast computing machines. J. Chem. Phys. 1953, 21, 1087.

35. Kirkpatrick, S.; Gelatt, C.D., Jr.; Vecchi, M.P. Optimization by simulated annealing. Science 1983, 220, 671–680.

36. Sen, M.K.; Stoffa, P.L. Global Optimization Methods in Geophysical Inversion; Cambridge University Press: Cambridge, UK, 2013; Chapter 4.

37. Goffe, W.L.; Ferrier, G.L.; Rogers, J. Global optimization of statistical functions with simulated annealing. J. Econom. 1994, 60, 65–100.

38. Weinberg, S. Adiabatic Modes in Cosmology. Phys. Rev. D 2003, 67, 123504.

39. Mukhanov, V.S. Quantum theory of gauge-invariant cosmological perturbations. Zh. Eksp. Teor. Fiz. 1988, 94, 1–11.

40. Sasaki, S. Large scale quantum fluctuations in the inflationary universe. Prog. Theor. Phys. 1986, 76, 1036–1046.

41. Bardeen, J.M. Gauge invariant cosmological perturbations. Phys. Rev. D 1980, 22, 1882–1905.

42. Linde, A.D. A new inflationary universe scenario: A possible solution of the horizon, flatness, homogeneity, isotropy and primordial monopole problems. Phys. Lett. B 1982, 108, 389–392.

43. DESI Collaboration; Abdul-Karim, M.; Aguilar, J.; Ahlen, S.; Alam, S.; Allen, L.; Allende Prieto, C.; Alves, O.; Anand, A.; Andrade, U.; et al. DESI DR2 results II: Measurements of baryon acoustic oscillations and cosmological constraints. arXiv 2025, arXiv:2503.14738v2.

44. Wilkinson Microwave Anisotropy Probe (WMAP) Year 1 Data Release. Available online: https://lambda.gsfc.nasa.gov/data/map/powspec/wmap_lcdm_pl_model_yr1_v1.txt (accessed on 1 January 2025).

45. Page, L.; Hinshaw, G.; Komatsu, E.; Nolta, M.R.; Spergel, D.N.; Bennett, C.L.; Barnes, C.; Bean, R.; Doré, O.; Dunkley, J.; et al. Three year Wilkinson microwave anisotropy probe (WMAP) observations: Polarization analysis. Astrophys. J. Suppl. Ser. 2007, 170, 335–376.

46. Freedman, W.L.; Madore, B.F.; Hoyt, T.J.; Jang, I.S.; Lee, A.J.; Owens, K.A. Status report on the Chicago-Carnegie Hubble program (CCHP): Measurement of the Hubble constant using the Hubble and James Webb space telescopes. Astrophys. J. 2025, 985, 203.

47. Freedman, W.L.; Madore, B.F.; Jang, I.S.; Hoyt, T.J.; Lee, A.J.; Owens, K.A. Status report on the Chicago-Carnegie Hubble program (CCHP): Three independent astrophysical determinations of the Hubble constant using the James Webb space telescope. Astrophys. J. 2024, 985, 31.

48. Reif, F. Fundamentals of Statistical and Thermal Physics; McGraw-Hill: New York, NY, USA, 1965; Chapter 7.

49. Tolman, R.C. A general theory of energy partition with applications to quantum theory. Phys. Rev. 1918, 11, 261–275.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).