Entropy-Based Correlation Analysis for Privacy Risk Assessment in IoT Identity Ecosystem

Abstract

1. Introduction

- Cycle Decomposition for Bayesian Networks: We present a systematic cycle decomposition method that transforms cyclic Bayesian networks into acyclic subgraphs, enabling accurate and efficient privacy risk inference for identity theft scenarios in IoT ecosystems.

- Entropy-Based Quantification of Uncertainty: We apply Shannon entropy to quantify the informational uncertainty of privacy risk scores, offering a rigorous, interpretable metric to evaluate the reliability of privacy risk assessments across data types.

- Comparative Analysis of Privacy Scores: We conduct an empirical analysis comparing the PPA score and PrivacyCheck score, using correlation, regression, and feature importance methods to identify their respective strengths and limitations for different data types.

- Alignment with Standard Privacy Frameworks: We map our automated risk assessment approach to established frameworks such as CNIL and LINDDUN, demonstrating how our tools operationalize key risk analysis steps at scale.

- Validation on Real-World Data: We validate our methods on an open-source IoT app dataset, illustrating practical applications for risk detection and policy improvement in real-world settings.

2. Related Work

2.1. Identity Theft Simulation

2.2. Cyclic Bayesian Network

2.3. Privacy Risk Assessment Frameworks

2.4. Summary

3. UTCID PPA: Entropy-Enhanced Cycle Decomposition for Privacy Risk Assessment in Bayesian Networks

3.1. Threat Model

3.1.1. Adversary Profile

- External attacker: Eavesdropping on unencrypted communications, exploiting insecure APIs, or leveraging data breaches to obtain sensitive attributes (e.g., SSN, email, location).

- Malicious insider: An app developer or employee with legitimate access who misuses privileged information for unauthorized purposes.

- Third-party service: Entities with whom data is shared, either intentionally (analytics, advertising) or inadvertently (poor access controls).

3.1.2. Capabilities

- Access data transmitted or stored by the app, subject to permissions and technical controls;

- Analyze app privacy policies and user permissions to infer potential exposure;

- Aggregate data from multiple sources to enhance re-identification or profiling.

3.1.3. Limitations

3.1.4. Relation to Risk Scores

3.2. Entropy as a Measure of Informational Uncertainty in Privacy Risk Assessment

3.3. UTCID ITAP

- Understanding Identity Theft Processes: Analyze methods used by identity thieves and fraudsters to identify patterns and vulnerabilities;

- Risk Assessment: Quantify the exposure risks of different types of identity attributes;

- Loss Estimation: Determine the monetary losses associated with identity attribute exposure, enabling the identification of high-risk attributes.

3.3.1. Defining Identity Attributes

- Reviewing identity theft literature and reports;

- Identifying identity attributes most frequently mentioned in news articles and case studies.

3.3.2. Risk of Exposure for Identity Attributes

3.3.3. Monetary Loss Assignment

- Loss attributed to a specific identity attribute i;

- Total loss mentioned in the article;

- Frequency of the identity attribute i in the article;

- Sum of the frequencies of all n distinct identity attributes mentioned in the article.
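
The four quantities above suggest that each article's reported loss is split in proportion to how often each attribute is mentioned. The sketch below assumes exactly that rule: an attribute's share equals its mention count divided by the total mention count of all n distinct attributes. The function and parameter names are hypothetical, and the paper's own equation may differ in detail.

```python
from typing import Dict


def allocate_loss(total_loss: float, frequencies: Dict[str, int]) -> Dict[str, float]:
    """Split one article's total reported loss across the identity attributes
    it mentions, proportionally to each attribute's mention frequency.

    Assumption: loss_i = total_loss * f_i / sum_k f_k (k over the n distinct
    attributes in the article). Names are illustrative, not the paper's notation.
    """
    denominator = sum(frequencies.values())  # sum of frequencies of all attributes
    if denominator == 0:
        raise ValueError("the article mentions no identity attributes")
    return {attr: total_loss * f / denominator for attr, f in frequencies.items()}


# Toy article: $10,000 total loss; SSN mentioned three times, email once.
print(allocate_loss(10_000.0, {"SSN": 3, "Email": 1}))
# -> {'SSN': 7500.0, 'Email': 2500.0}
```

Because the shares are normalized by the total frequency, the allocated losses always add back up to the article's total loss.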

3.3.4. Dependency Analysis

- The estimated conditional probability that attribute j is breached, given that i is breached.
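
Co-occurrence counting is one simple way to estimate such conditional probabilities from a corpus of identity theft stories. The sketch below is an assumption and not necessarily the ITAP estimator. It treats each story as the set of attributes it mentions and estimates P(j | i) as the share of stories mentioning i that also mention j. `estimate_dependencies` is a hypothetical helper, and the stories are toy data.

```python
from itertools import permutations
from typing import Dict, List, Set, Tuple


def estimate_dependencies(stories: List[Set[str]]) -> Dict[Tuple[str, str], float]:
    """Estimate P(j breached | i breached) for every ordered attribute pair.

    Hypothetical estimator: among stories that mention i, the fraction that
    also mention j. Pairs that never co-occur get no edge.
    """
    attributes = sorted(set().union(*stories)) if stories else []
    mentions = {a: sum(a in s for s in stories) for a in attributes}
    dependencies: Dict[Tuple[str, str], float] = {}
    for i, j in permutations(attributes, 2):
        both = sum(i in s and j in s for s in stories)
        if both:  # keep only edges i -> j observed at least once
            dependencies[(i, j)] = both / mentions[i]
    return dependencies


stories = [{"SSN", "Email"}, {"SSN"}, {"Email", "Phone"}, {"SSN", "Email", "Phone"}]
deps = estimate_dependencies(stories)
print(deps[("Phone", "Email")])  # both Phone stories also mention Email -> 1.0
```

In this toy corpus, SSN → Email and Email → SSN both receive nonzero weight. That kind of mutual dependency is what makes the resulting graph cyclic.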

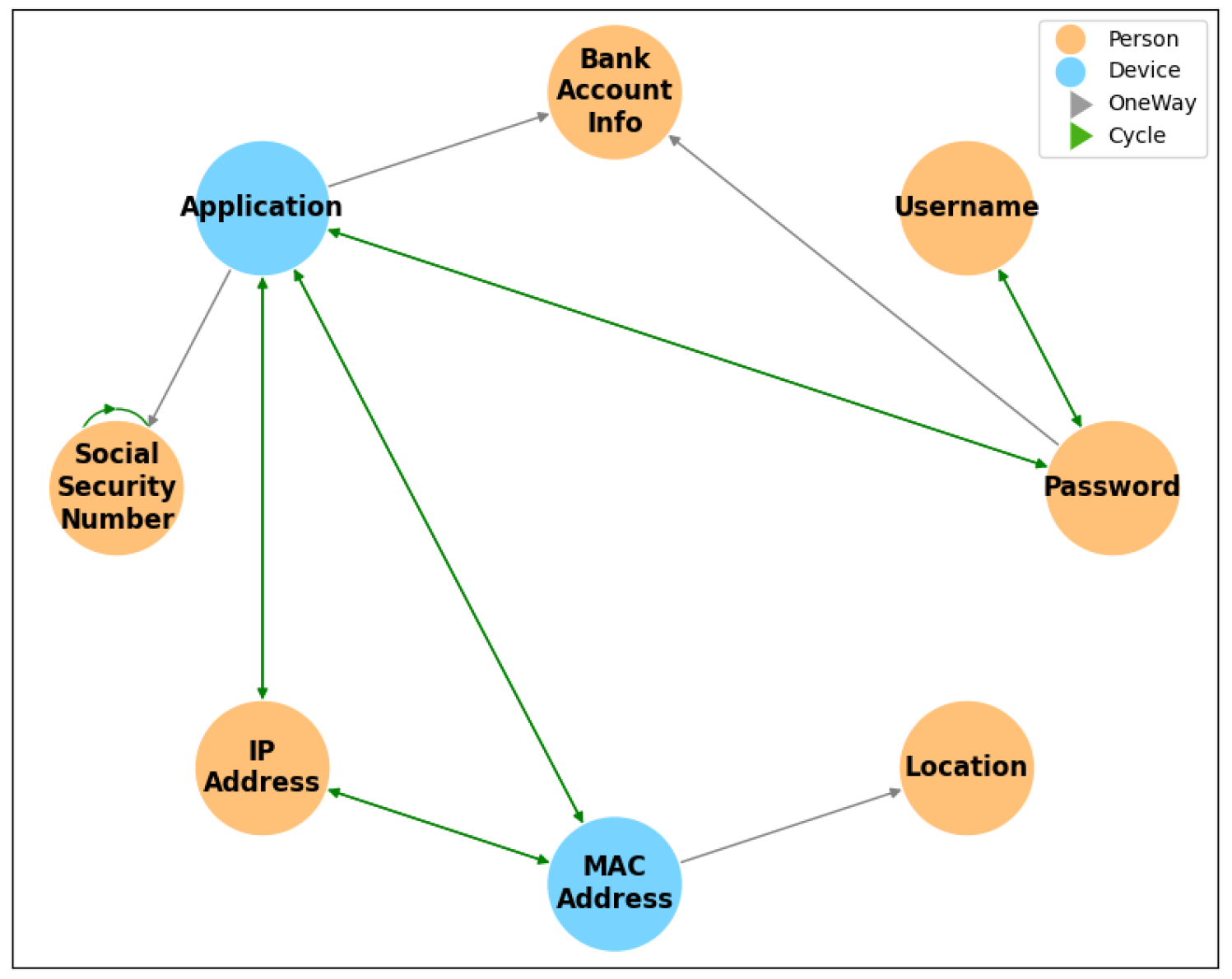

3.4. UTCID IoT Identity Ecosystem

- Causal Dependencies: They effectively capture the direct and indirect dependencies between identity attributes;

- Probabilistic Reasoning: They allow for inference under uncertainty, which is critical in predicting the risk of exposure and estimating potential liabilities;

- Scalability: The framework can handle a large number of interconnected variables, making it ideal for modeling the intricate nature of identity ecosystems.

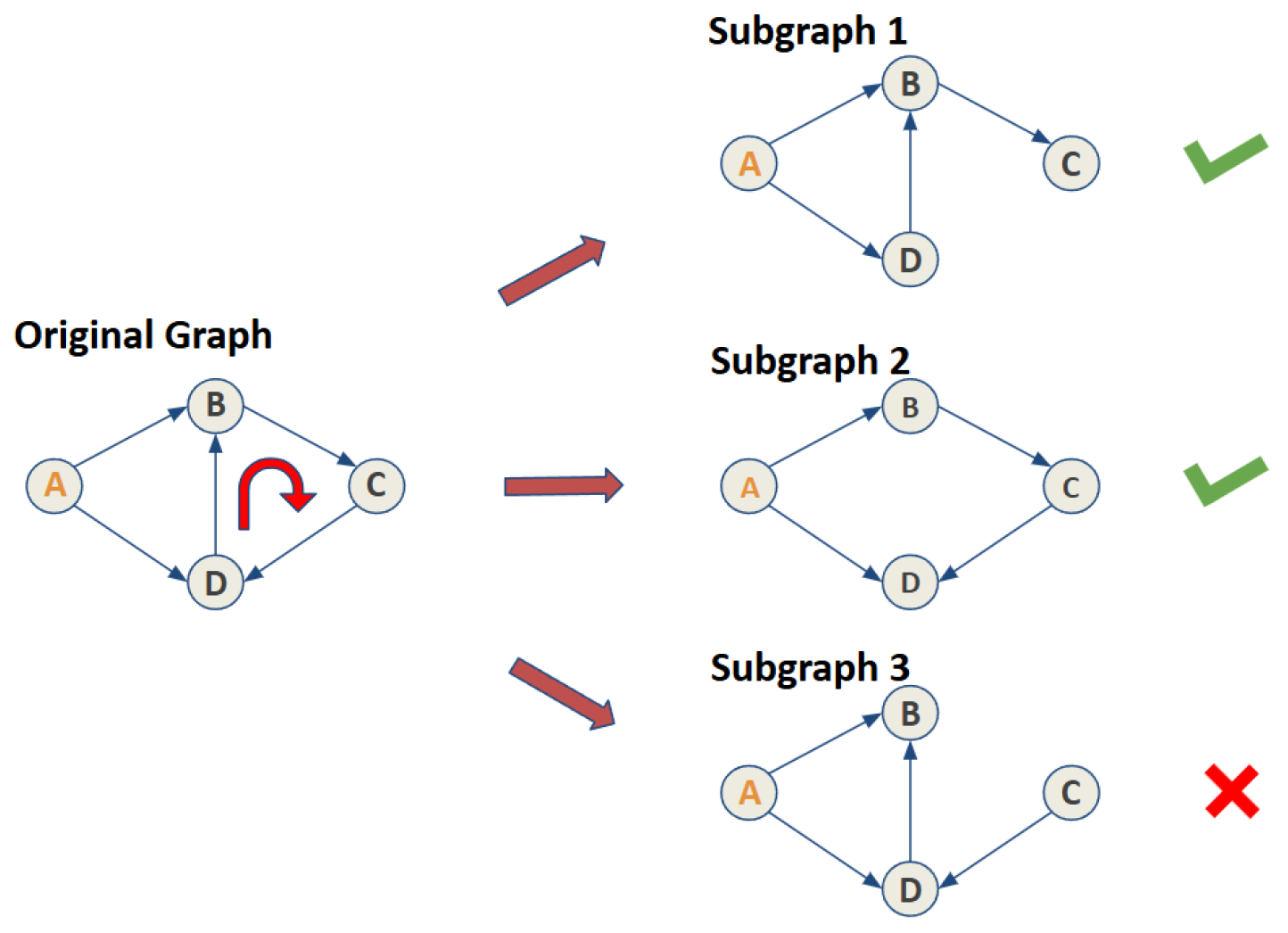

1. Given a set of evidence (identity attributes to be exposed), detect all cycles along the paths;

2. Attempt to remove edges contained within those cycles and confirm the validity of the paths after removal;

3. Record all valid subgraphs and conduct a simulation for each subgraph;

4. Finally, integrate the results of all simulations.
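
The first three steps can be sketched on a toy graph. This is an illustrative Python version under stated assumptions, not the authors' implementation:

- Cycles are enumerated by exhaustive depth-first search, which is adequate only for small graphs.
- One edge is removed per cycle, in every possible combination.
- A subgraph counts as "valid" if the evidence nodes still reach every node they reached in the original graph.

That validity rule, the function names and the example edges are hypothetical. The per-subgraph Bayesian simulation and the final integration step are omitted.

```python
from itertools import product
from typing import Dict, FrozenSet, List, Set, Tuple

Edge = Tuple[str, str]


def _adjacency(edges: Set[Edge]) -> Dict[str, List[str]]:
    adj: Dict[str, List[str]] = {}
    for u, v in edges:
        adj.setdefault(u, []).append(v)
    return adj


def simple_cycles(edges: Set[Edge]) -> List[List[Edge]]:
    """Step 1: enumerate elementary cycles, each rooted at its smallest node.
    Exhaustive DFS, so only suitable for small graphs."""
    adj = _adjacency(edges)
    cycles: List[List[Edge]] = []
    for start in sorted(adj):
        stack = [(start, [start])]
        while stack:
            node, path = stack.pop()
            for nxt in adj.get(node, []):
                if nxt == start:  # closed a cycle back to the root
                    loop = path + [start]
                    cycles.append(list(zip(loop, loop[1:])))
                elif nxt > start and nxt not in path:  # no re-rooting, no revisits
                    stack.append((nxt, path + [nxt]))
    return cycles


def reachable(edges: Set[Edge], sources: Set[str]) -> Set[str]:
    adj = _adjacency(edges)
    seen, frontier = set(sources), list(sources)
    while frontier:
        for nxt in adj.get(frontier.pop(), []):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return seen


def decompose(edges: Set[Edge], evidence: Set[str]) -> List[FrozenSet[Edge]]:
    """Steps 2-3: drop one edge per cycle and keep the acyclic subgraphs in
    which the evidence still reaches every node it reached originally."""
    cycles = simple_cycles(edges)
    target = reachable(edges, evidence)
    valid: Set[FrozenSet[Edge]] = set()
    for removed in product(*cycles):  # every cycle loses an edge -> acyclic
        kept = frozenset(edges - set(removed))
        if reachable(set(kept), evidence) == target:  # assumed validity rule
            valid.add(kept)
    return sorted(valid, key=sorted)


graph = {("SSN", "Bank"), ("Bank", "Email"), ("Email", "SSN"), ("Email", "Phone")}
for sub in decompose(graph, {"SSN"}):
    print(sorted(sub))
# -> [('Bank', 'Email'), ('Email', 'Phone'), ('SSN', 'Bank')]
```

Cutting any single edge of the SSN → Bank → Email → SSN cycle breaks it. However, only removing Email → SSN still lets the SSN evidence reach Bank, Email and Phone, so that is the one valid subgraph here.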

3.5. Cycle Decomposition Algorithm Description

3.5.1. Cycle Detection

3.5.2. Edge Removal Attempt

3.5.3. Path Validity Check

3.5.4. Subgraph Construction

3.5.5. Simulation with Bayesian Inference on Subgraphs

Accessibility

Post Effect

Privacy Risk

- Accessibility: The increase in exposure probability due to the compromise of i’s ancestors;

- Post Effect: The cumulative increase in expected loss among i’s descendants if i is breached.

- Prior probability that i is exposed;

- Liability value (property damage) for i;

- Accessibility factor for i;

- Post Effect of i.
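
Once ancestors and descendants are known, Accessibility and Post Effect can be computed from pairwise conditional probabilities. The sketch below assumes simple additive forms that follow the verbal definitions above:

- Accessibility is the sum of P(i | a) - P(i) over the ancestors a.
- Post Effect is the sum of (P(d | i) - P(d)) multiplied by liability over the descendants d.

These forms are not guaranteed to match the paper's Equations (5) and (6). All numbers are invented for illustration, and the combination of the four terms into the privacy risk score is not reproduced here.

```python
from typing import Dict, Set, Tuple

Prob = Dict[str, float]
CondProb = Dict[Tuple[str, str], float]  # (given, target) -> P(target | given exposed)


def accessibility(i: str, prior: Prob, cond: CondProb, ancestors: Set[str]) -> float:
    """Increase in i's exposure probability due to compromised ancestors.
    Assumed form: sum over ancestors a of P(i | a) - P(i)."""
    return sum(cond[(a, i)] - prior[i] for a in ancestors)


def post_effect(i: str, prior: Prob, cond: CondProb,
                liability: Dict[str, float], descendants: Set[str]) -> float:
    """Increase in expected loss among i's descendants if i is breached.
    Assumed form: sum over descendants d of (P(d | i) - P(d)) * liability(d)."""
    return sum((cond[(i, d)] - prior[d]) * liability[d] for d in descendants)


prior = {"SSN": 0.10, "Bank": 0.05, "Email": 0.30}
cond = {("SSN", "Bank"): 0.40, ("SSN", "Email"): 0.45, ("Bank", "Email"): 0.50}
liability = {"Bank": 5000.0, "Email": 200.0}
print(accessibility("Bank", prior, cond, {"SSN"}))                    # about 0.35
print(post_effect("SSN", prior, cond, liability, {"Bank", "Email"}))  # about 1780
```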

3.5.6. Integration of Results

3.6. UTCID PrivacyCheck™: Privacy Policy Evaluation and Entropy-Based Risk Assessment

3.7. Summary of Notation

4. Evaluation

4.1. Comparison of Existing Approaches for Dealing with Cycles in Bayesian Networks

- Suitable for systems that evolve over time, making it ideal for temporal processes;

- Handles feedback and recursions by unrolling the network over multiple time steps.

- Computationally expensive as the number of time slices increases, posing challenges for real-time decision making;

- May fail to capture necessary feedback loops concisely in less temporally structured environments like identity theft;

- Requires careful temporal modeling, adding complexity.

- Faster than exact methods like the Junction Tree Algorithm and can work well for very large networks;

- Handles cyclic dependencies without converting the network to an acyclic form.

- Results are approximate, and the algorithm may fail to converge or may converge to incorrect beliefs;

- Struggles with maintaining causal interpretability, which is critical in identity theft scenarios.

- Provides exact inference, ensuring high accuracy;

- Effectively manages large, complex systems by breaking them into manageable cliques.

- Triangulation can produce large cliques, and inference cost grows exponentially with clique size;

- Obscures causal relationships and feedback loops, reducing interpretability for cyclic processes like identity theft.

- Preserves feedback loops and causal structures critical for applications like identity theft simulation;

- Useful for applications where cycles reflect natural processes (e.g., feedback loops in systems or processes). In the IoT Identity Ecosystem example, simulating cyclic criminal behavior makes this method more realistic;

- Realistically simulates scenarios where cycles reflect natural processes;

- Flexible and customizable for domain-specific needs.

- Decomposing cycles requires domain expertise and can be computationally complex in larger networks;

- Relationships and dependencies across the entire network may be harder to infer compared to structured methods like the junction tree;

- Lacks standardized, widely accepted formal algorithms.

Why Some Methods Are Not Ideal for Identity Theft Scenarios

- Preserves causal relationships: Retains the network’s original structure, crucial for tracking cause-and-effect dynamics;

- Enhances interpretability: Maintains visibility into decision paths and feedback processes, helping analysts understand interactions between identity attributes and risks;

- Improves efficiency: Focuses on cyclic parts of the network, offering a more computationally feasible solution than triangulation.

4.2. Exploratory Comparison with Privacy Risk Score Algorithm and ImmuniWeb®

4.3. Validation of PrivacyCheck

4.4. Comparison with the CNIL Privacy Risk Methodology

5. Experiments and Results

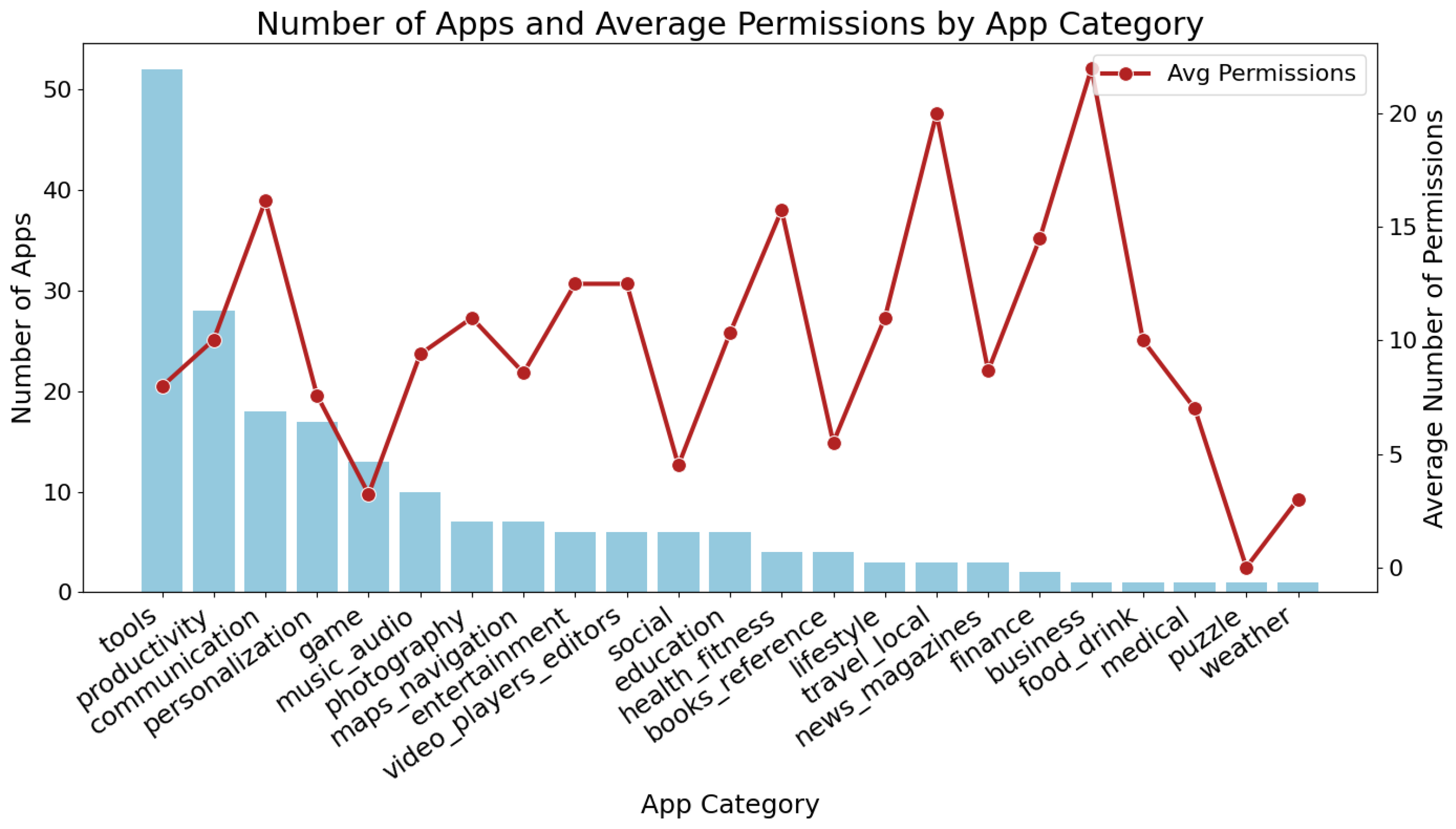

5.1. Experimental Setup

- Application Dataset: We used a dataset of 200 open-source Android apps, each annotated with metadata including app category, a link to the app, and permission usage. Apps were chosen by stratified sampling to capture a representative cross-section of market-leading and emerging applications. Including both well-established and lesser-known apps lets us evaluate the generalizability of our risk assessment models.

- Identity Attribute Inference: For each app, we analyzed the AndroidManifest.xml file to determine the permissions it requests and mapped each permission to one or more identity attributes using a predefined ontology. We also applied Natural Language Processing (NLP) to each app’s privacy policy to identify explicitly declared identity attributes. The union of the permission-derived and declared attributes was treated as the complete set of identity attributes collected by the app.

- PPA Risk Score Calculation: Using the inferred identity attributes, we computed a PPA risk score for each app, with cycle decomposition applied. This score captures both the exposure likelihood and the consequences of a breach for the set of collected attributes, based on the UTCID IoT Identity Ecosystem model.

- Privacy Policy Evaluation via PrivacyCheck: We applied the UTCID PrivacyCheck™ tool to each app’s privacy policy. PrivacyCheck uses machine learning to answer 20 predefined questions grounded in FIPPs and GDPR principles. This produces both an overall User Control score and GDPR compliance score, as well as scores for individual privacy provisions.
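
The attribute-inference step can be illustrated with Python's standard `xml.etree.ElementTree`. The sketch assumes a plain-text AndroidManifest.xml, as found in source repositories, rather than the binary form inside an APK. A tiny, hypothetical permission-to-attribute mapping stands in for the predefined ontology. The NLP policy parser is represented only by a list of already-declared attributes.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Iterable, Set

ANDROID_NS = "{http://schemas.android.com/apk/res/android}"

# Hypothetical excerpt of a permission-to-attribute ontology (illustrative only).
PERMISSION_ONTOLOGY: Dict[str, Set[str]] = {
    "android.permission.ACCESS_FINE_LOCATION": {"Location"},
    "android.permission.READ_CONTACTS": {"Contacts", "Phone Number"},
    "android.permission.GET_ACCOUNTS": {"Email"},
}


def requested_permissions(manifest_xml: str) -> Set[str]:
    """Collect android:name values of <uses-permission> elements (plain-text manifest)."""
    root = ET.fromstring(manifest_xml)
    names = (el.get(ANDROID_NS + "name") for el in root.iter("uses-permission"))
    return {n for n in names if n}


def collected_attributes(permissions: Iterable[str], declared: Iterable[str]) -> Set[str]:
    """Union of ontology-mapped permission attributes and policy-declared attributes."""
    inferred: Set[str] = set()
    for perm in permissions:
        inferred |= PERMISSION_ONTOLOGY.get(perm, set())  # unmapped permissions add nothing
    return inferred | set(declared)


manifest = """<manifest xmlns:android="http://schemas.android.com/apk/res/android">
  <uses-permission android:name="android.permission.READ_CONTACTS"/>
  <uses-permission android:name="android.permission.INTERNET"/>
</manifest>"""
perms = requested_permissions(manifest)
print(sorted(collected_attributes(perms, declared=["Email"])))
# -> ['Contacts', 'Email', 'Phone Number']
```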

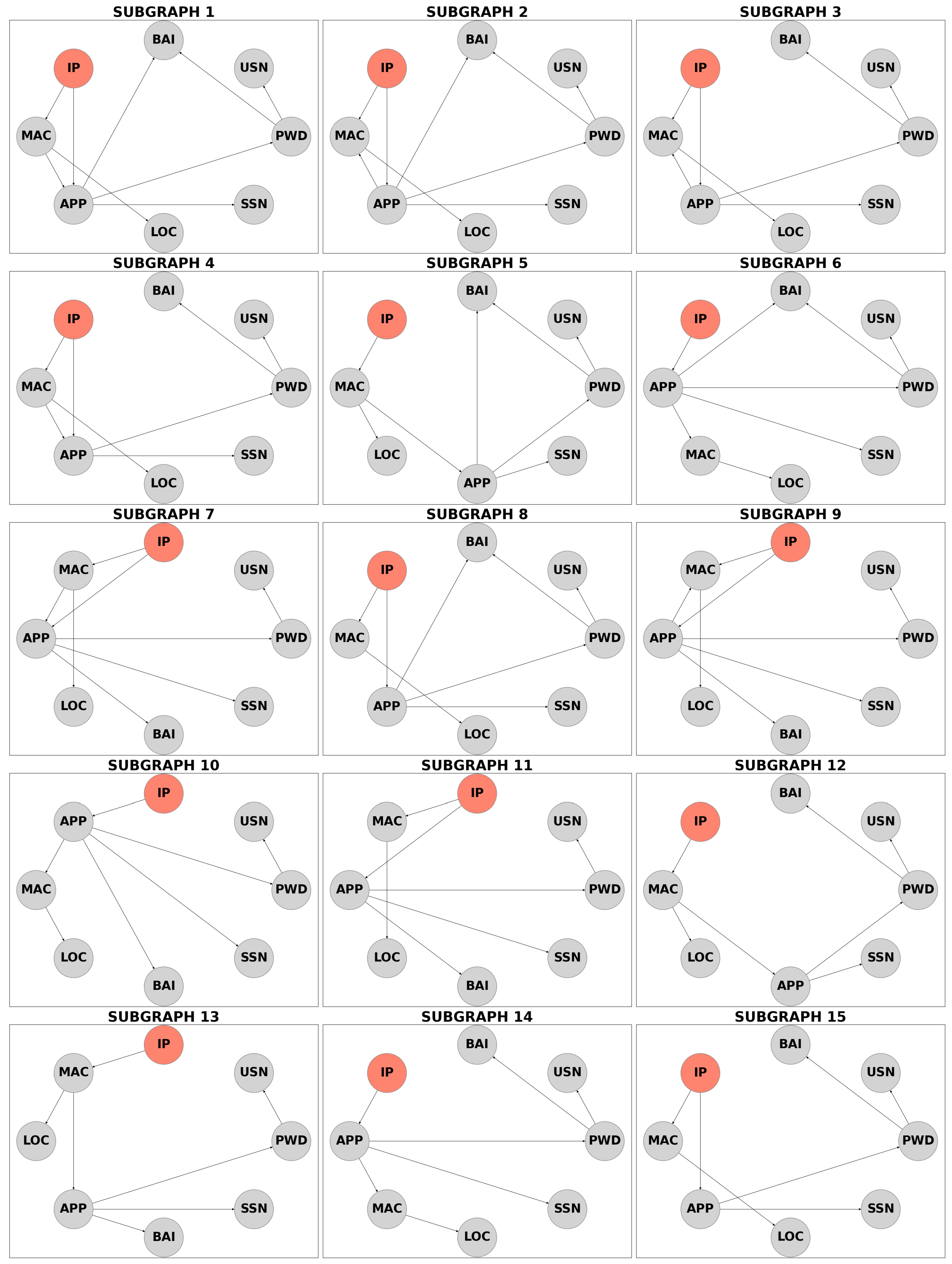

5.2. Cycle Decomposition Result Analysis

- Prior Change: Subgraph 8 shows the highest impact on priors following an IP Address breach, while subgraph 14 has the least;

- Edge Weight: Subgraph 2 is the most probable path for fraudsters, while subgraph 13 is the least likely;

- Accessibility: Subgraph 12 indicates the highest likelihood of access by other nodes, whereas subgraph 1 has the lowest;

- Post Effect: Subgraph 15 reflects the highest monetary loss post breach, while subgraph 11 shows the lowest;

- Privacy Risk: Subgraph 10 poses the greatest cumulative risk, while subgraph 1 represents the least.

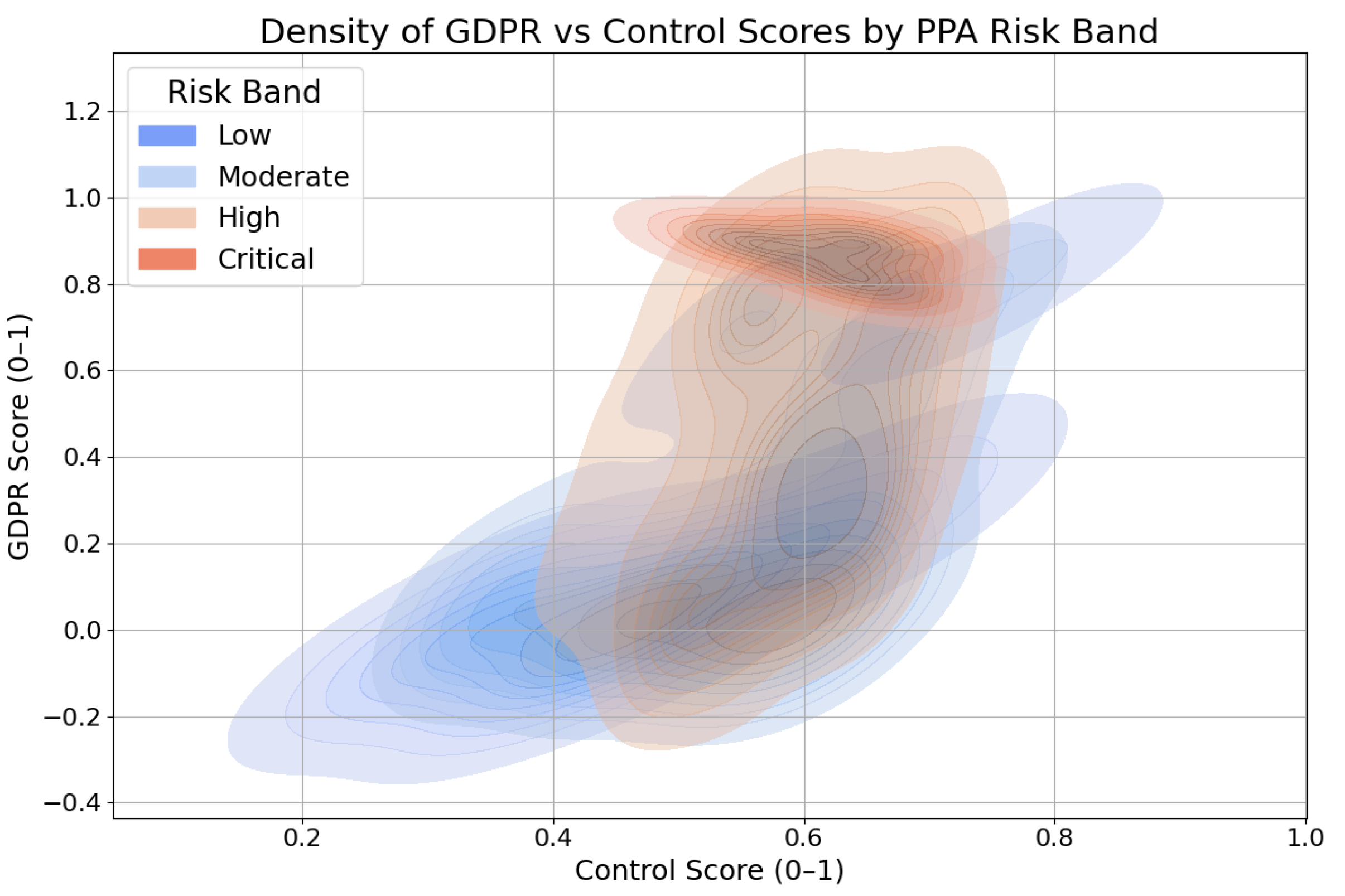

5.3. Correlation and Regression Analysis

- A user is alerted by the PPA that connecting to a particular IoT device or application carries a high privacy risk due to the types of identity attributes it collects.

- The user then reviews the associated PrivacyCheck scores for the app or device. If the GDPR compliance score is high, the user may infer that the company has a strong privacy policy and adheres to regulatory standards.

- This perception can enhance the user’s trust in the company’s data handling practices, regardless of the underlying technical exposure. As a result, the user may proceed to share personal information despite the PPA’s high-risk assessment.

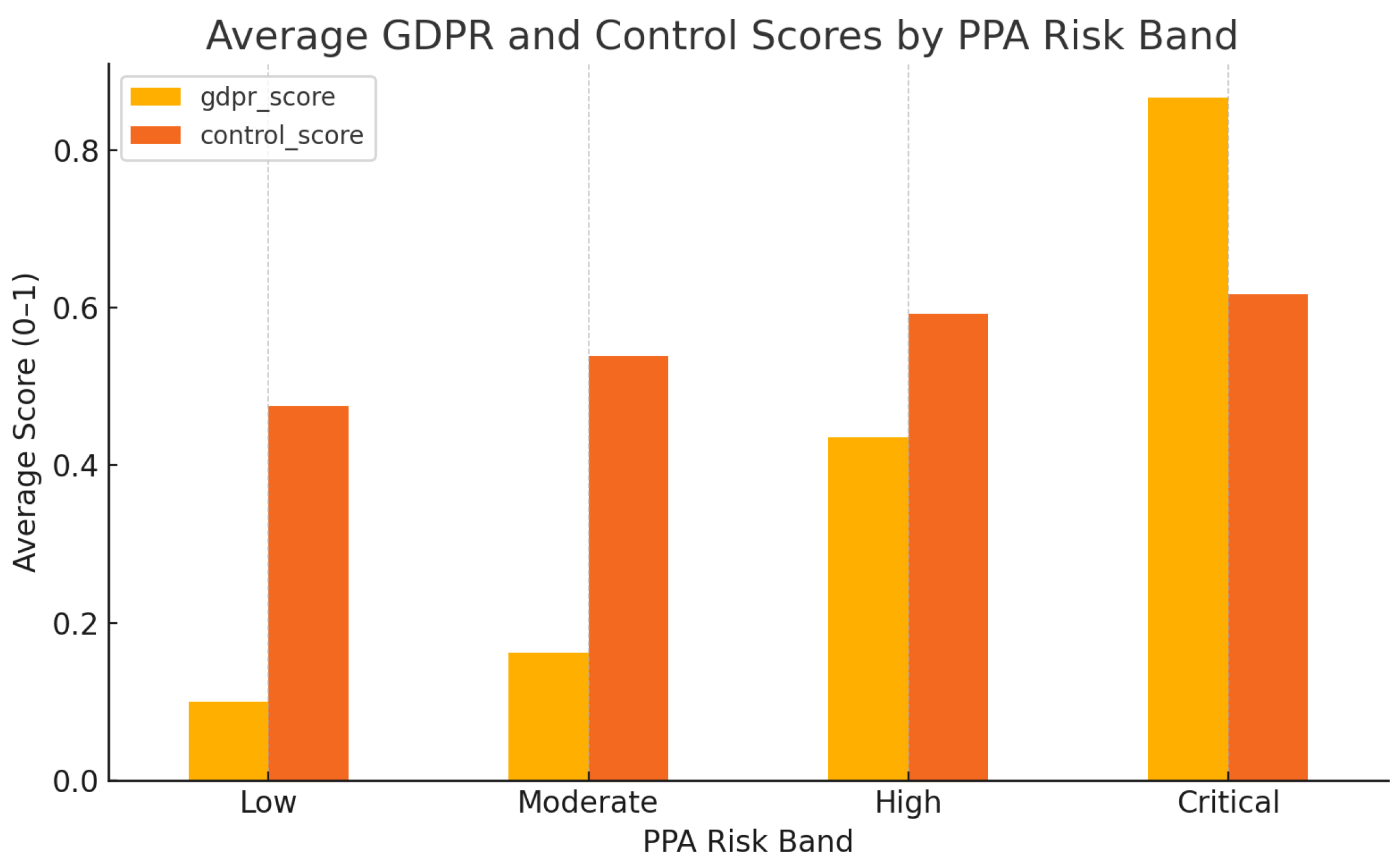

5.4. Risk Band Distribution and PrivacyCheck Scores

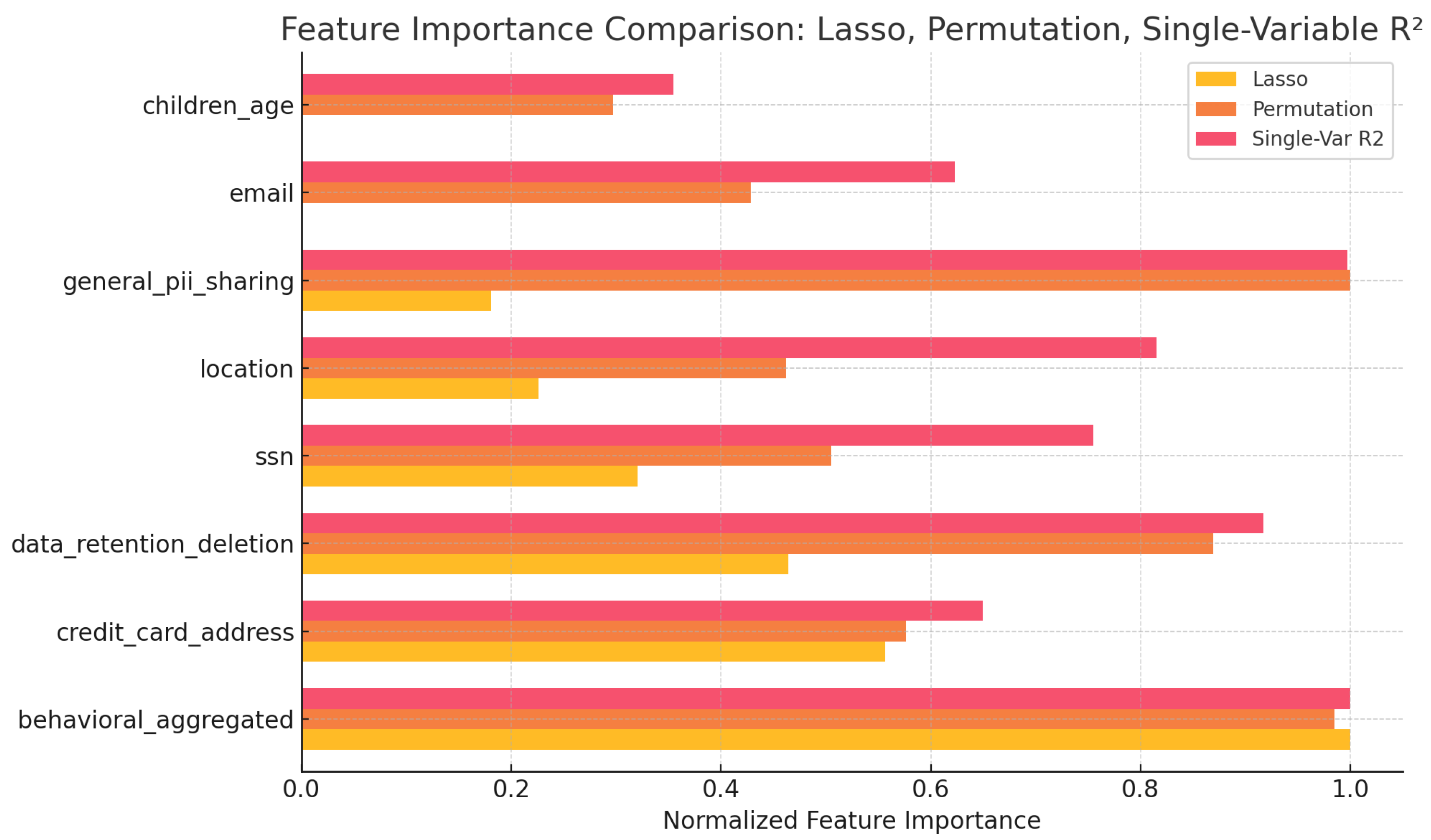

5.5. Key Data Types Contributing to Privacy Risk

5.6. App Category Insights

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UTCID | University of Texas at Austin, Center for Identity |
| ITAP | Identity Threat Assessment and Prediction |
| PII | Personally Identifiable Information |
| PPA | Personal Privacy Assistant |
| IoT | Internet of Things |
| NLP | Natural Language Processing |

References

- Mohammad, N.; Khatoon, R.; Nilima, S.I.; Akter, J.; Kamruzzaman, M.; Sozib, H.M. Ensuring Security and Privacy in the Internet of Things: Challenges and Solutions. J. Comput. Commun. 2024, 12, 257–277.

- Rana, R.; Zaeem, R.N.; Barber, K.S. An Assessment of Blockchain Identity Solutions: Minimizing Risk and Liability of Authentication. In Proceedings of the 2019 IEEE/WIC/ACM International Conference on Web Intelligence (WI), Thessaloniki, Greece, 14–17 October 2019; pp. 26–33.

- Stach, C.; Gritti, C.; Bräcker, J.; Behringer, M.; Mitschang, B. Protecting Sensitive Data in the Information Age: State of the Art and Future Prospects. Future Internet 2022, 14, 302.

- Chen, S.H.; Pollino, C.A. Good practice in Bayesian network modelling. Environ. Model. Softw. 2012, 37, 134–145.

- Zaeem, R.N.; Budalakoti, S.; Barber, K.S.; Rasheed, M.; Bajaj, C. Predicting and explaining identity risk, exposure and cost using the ecosystem of identity attributes. In Proceedings of the 2016 IEEE International Carnahan Conference on Security Technology (ICCST), Orlando, FL, USA, 24–27 October 2016; pp. 1–8.

- Uusitalo, L. Advantages and challenges of Bayesian networks in environmental modelling. Ecol. Model. 2007, 203, 312–318.

- Husari, G.; Niu, X.; Chu, B.; Al-Shaer, E. Using Entropy and Mutual Information to Extract Threat Actions from Cyber Threat Intelligence. In Proceedings of the 2018 IEEE International Conference on Intelligence and Security Informatics (ISI), Miami, FL, USA, 9–11 November 2018; pp. 1–6.

- Upal, M.A. A Framework for Agent-Based Social Simulations of Social Identity Dynamics. In Conflict and Complexity: Countering Terrorism, Insurgency, Ethnic and Regional Violence; Fellman, P.V., Bar-Yam, Y., Minai, A.A., Eds.; Springer: New York, NY, USA, 2015; pp. 89–109.

- Wang, C.; Zhu, H.; Yang, B. Composite Behavioral Modeling for Identity Theft Detection in Online Social Networks. IEEE Trans. Comput. Soc. Syst. 2022, 9, 428–439.

- Hu, X.; Zhang, X.; Lovrich, N.P. Forecasting Identity Theft Victims: Analyzing Characteristics and Preventive Actions through Machine Learning Approaches. In The New Technology of Financial Crime; Routledge: Abingdon, UK, 2022; pp. 183–212.

- Zaeem, R.N.; Manoharan, M.; Yang, Y.; Barber, K.S. Modeling and Analysis of Identity Threat Behaviors through Text Mining of Identity Theft Stories. Comput. Secur. 2017, 65, 50–63.

- Lin, P.; Dou, C.; Gu, N.; Shi, Z.; Ma, L. Performing Bayesian Network Inference Using Amortized Region Approximation with Graph Factorization. Int. J. Intell. Syst. 2023, 2023, 2131915.

- Murphy, K.P. Dynamic Bayesian Networks: Representation, Inference and Learning; University of California: Berkeley, CA, USA, 2002.

- Wainwright, M.J.; Jordan, M.I. Graphical Models, Exponential Families, and Variational Inference. Found. Trends® Mach. Learn. 2008, 1, 1–305.

- Zhu, Q.X.; Ding, W.J.; He, Y.L. Novel Multimodule Bayesian Network with Cyclic Structures for Root Cause Analysis: Application to Complex Chemical Processes. Ind. Eng. Chem. Res. 2020, 59, 12812–12821.

- Kumari, P.; Bhadriraju, B.; Wang, Q.; Kwon, J.S.I. A modified Bayesian network to handle cyclic loops in root cause diagnosis of process faults in the chemical process industry. J. Process Control 2022, 110, 84–98.

- Wuyts, D.; Joosen, W. LINDDUN: A Privacy Threat Analysis Framework. In Proceedings of the 2015 Engineering Secure Software and Systems (ESSS), Milan, Italy, 4–6 March 2015.

- CNIL. Methodology for Privacy Risk Management; French Data Protection Authority: Paris, France, 2018.

- ISO/IEC 27557:2022; Information Security, Cybersecurity and Privacy Protection—Guidelines on Privacy Risk Management. ISO: Geneva, Switzerland, 2022.

- National Institute of Standards and Technology. NIST Privacy Framework: A Tool for Improving Privacy Through Enterprise Risk Management, Version 1.0; Technical Report NIST CSWP 1.0; NIST: Gaithersburg, MD, USA, 2020.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley & Sons: Hoboken, NJ, USA, 2006.

- ITRC. Identity Theft Resource Center Q3 2024 Data Breach Analysis; ITRC: Washington, DC, USA, 2024.

- Alfalayleh, M.; Brankovic, L. Quantifying Privacy: A Novel Entropy-Based Measure of Disclosure Risk. In Proceedings of the Combinatorial Algorithms; Jan, K., Miller, M., Froncek, D., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 24–36.

- Airoldi, E.M.; Bai, X.; Malin, B.A. An entropy approach to disclosure risk assessment: Lessons from real applications and simulated domains. Decis. Support Syst. 2011, 51, 10–20.

- Zaiss, J.; Anderson, R.; Zaeem, R.N.; Barber, K.S. ITAP Report 2019. 2019. Available online: https://identity.utexas.edu/sites/default/files/2020-09/CID_ITAP_Report_2019.pdf (accessed on 1 February 2024).

- Chang, K.C.; Zaeem, R.N.; Barber, K.S. Enhancing and Evaluating Identity Privacy and Authentication Strength by Utilizing the Identity Ecosystem. In Proceedings of the 2018 Workshop on Privacy in the Electronic Society (WPES’18); Association for Computing Machinery: New York, NY, USA, 2018; pp. 114–120.

- Ma, X.; Deng, W.; Qiao, W.; Luo, H. A novel methodology concentrating on risk propagation to conduct a risk analysis based on a directed complex network. Risk Anal. 2022, 42, 2800–2822.

- Angermeier, D.; Wester, H.; Beilke, K.; Hansch, G.; Eichler, J. Security Risk Assessments: Modeling and Risk Level Propagation. ACM Trans. Cyber-Phys. Syst. 2023, 7, 1–25.

- Chang, K.C.; Zaeem, R.N.; Barber, K.S. A Framework for Estimating Privacy Risk Scores of Mobile Apps. In Proceedings of the Information Security; Susilo, W., Deng, R.H., Guo, F., Li, Y., Intan, R., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 217–233.

- Zaeem, R.N.; Barber, K.S. The Effect of the GDPR on Privacy Policies: Recent Progress and Future Promise. ACM Trans. Manag. Inf. Syst. 2020, 12, 1–20.

- Recommendation of the Council concerning Guidelines governing the Protection of Privacy and Transborder Flows of Personal Data. Organisation for Economic Co-Operation and Development, 2025. OECD/LEGAL/0188. Available online: https://legalinstruments.oecd.org/public/doc/114/114.en.pdf (accessed on 30 June 2025).

- Nokhbeh Zaeem, R.; Anya, S.; Issa, A.; Nimergood, J.; Rogers, I.; Shah, V.; Srivastava, A.; Barber, K.S. PrivacyCheck v2: A tool that recaps privacy policies for you. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Online, 19–23 October 2020; pp. 3441–3444.

- Privacy Online: Fair Information Practices in the Electronic Marketplace; Federal Trade Commission. 2000. Available online: https://www.ftc.gov/reports/privacy-online-fair-information-practices-electronic-marketplace-federal-trade-commission-report (accessed on 30 June 2025).

- Zaeem, R.N.; Ahbab, A.; Bestor, J.; Djadi, H.H.; Kharel, S.; Lai, V.; Wang, N.; Barber, K.S. PrivacyCheck v3: Empowering Users with Higher-Level Understanding of Privacy Policies. In Proceedings of the 20th Workshop on Privacy in the Electronic Society (WPES 21), Seoul, Republic of Korea, 15 November 2021.

- Zaeem, R.N.; Barber, K.S. Comparing Privacy Policies of Government Agencies and Companies: A Study using Machine-learning-based Privacy Policy Analysis Tools. In Proceedings of the ICAART (2), Online, 4–6 February 2021; pp. 29–40.

- Zaeem, R.N.; Anya, S.; Issa, A.; Nimergood, J.; Rogers, I.; Shah, V.; Srivastava, A.; Barber, K.S. PrivacyCheck’s Machine Learning to Digest Privacy Policies: Competitor Analysis and Usage Patterns. In Proceedings of the 2020 IEEE/WIC/ACM International Joint Conference on Web Intelligence and Intelligent Agent Technology (WI-IAT), Melbourne, Australia, 14–17 December 2020; pp. 291–298.

- Kjærulff, U. Inference in Bayesian networks using nested junction trees. In Learning in Graphical Models; Springer: Berlin/Heidelberg, Germany, 1998; pp. 51–74.

- Immuniweb® Mobile App Scanner. Available online: https://www.immuniweb.com/free/ (accessed on 30 June 2025).

| Aspect | LINDDUN | CNIL | ISO/IEC 27557 | Our Work |
|---|---|---|---|---|
| Threat Identification | Manual (data flow diagrams, checklists) | Manual (workshops, expert input) | Manual (policy/process review) | Automated (data mining, NLP) |
| Risk Quantification | Qualitative scoring | Semi-quantitative (risk matrix) | Qualitative | Quantitative (numeric scores) |
| Automation/Scalability | Low | Low | Low | High |
| Attribute-level Analysis | Optional | Possible | No | Yes |

| Category | Advantages | Gaps / Limitations |
|---|---|---|
| Identity Theft Simulation | Captures complex attacker/defender dynamics; explores prevention and mitigation strategies; uses diverse analytical tools (agent-based, ML, statistical) | Context-specific models; limited scalability to large or heterogeneous systems |
| Cyclic Bayesian Network | Handles cycles in inference; improves efficiency and tractability; offers approximate and modular solutions | May reduce interpretability; requires specialized knowledge; some accuracy trade-offs |
| Privacy Risk Assessment Frameworks | Systematic threat/risk identification; widely recognized standards; supports organizational compliance | Manual, qualitative analysis; limited automation or scalability; attribute-level or fine-grained analysis not emphasized |
| Our Work | Automated and scalable assessment; attribute-level, quantitative scoring; empirical validation with external tools | Does not replace organizational or contextual risk analysis; may not capture all regulatory or sector-specific nuances |

| # | User Control Question | 100% (Green) | 50% (Yellow) | 0% (Red) |
|---|---|---|---|---|
| 1 | How well does this website protect your email address? | Not asked for | Used for the intended service | Shared w/third parties |
| 2 | How well does this website protect your credit card information and address? | Not asked for | Used for the intended service | Shared w/third parties |
| 3 | How well does this website handle your Social Security number? | Not asked for | Used for the intended service | Shared w/third parties |
| 4 | Does this website use or share your PII for marketing purposes? | PII not used for marketing | PII used for marketing | PII shared for marketing |
| 5 | Does this website track or share your location? | Not tracked | Used for the intended service | Shared w/third parties |
| 6 | Does this website collect PII from children under 13? | Not collected | Not mentioned | Collected |
| 7 | Does this website share your information with law enforcement? | PII not recorded | Legal docs required | Legal docs not required |
| 8 | Does this website notify or allow you to opt out after changing their privacy policy? | Posted w/opt-out option | Posted w/o opt-out option | Not posted |
| 9 | Does this website allow you to edit or delete your information from its record? | Edit/delete | Edit only | No edit/delete |
| 10 | Does this website collect or share aggregated data related to your identity or behavior? | Not aggregated | Aggregated w/o PII | Aggregated w/PII |

| # | GDPR Question | 100% (Green) | 0% (Red) |
|---|---|---|---|
| 1 | Does this website share the user’s information with other websites only upon user consent? | Yes | No/Unanswered |
| 2 | Does this website disclose where the company is based/user’s PII will be processed and transferred? | Yes | No/Unanswered |
| 3 | Does this website support the right to be forgotten? | Yes | No/Unanswered |
| 4 | If they retain PII for legal purposes after the user’s request to be forgotten, will they inform the user? | Yes | No/Unanswered |
| 5 | Does this website allow the user the ability to reject their usage of user’s PII? | Yes | No/Unanswered |
| 6 | Does this website restrict the use of PII of children under the age of 16? | Yes | No/Unanswered |
| 7 | Does this website advise the user that their data are encrypted even while at rest? | Yes | No/Unanswered |
| 8 | Does this website ask for the user’s informed consent to perform data processing? | Yes | No/Unanswered |
| 9 | Does this website implement all of the principles of data protection by design and by default? | Yes | No/Unanswered |
| 10 | Does this website notify the user of security breaches without undue delay? | Yes | No/Unanswered |
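
The colour coding can be made concrete with a short sketch. It maps Green/Yellow/Red to 100/50/0 for User Control questions and Green/Red to 100/0 for GDPR questions, as in the tables above. Averaging the ten question scores into one category score is an assumption about how the overall User Control and GDPR scores are aggregated. `policy_score` is a hypothetical helper.

```python
from statistics import fmean
from typing import Dict, Sequence

# Colour-to-points mapping taken from the question tables; averaging is an assumption.
USER_CONTROL_POINTS: Dict[str, float] = {"green": 100.0, "yellow": 50.0, "red": 0.0}
GDPR_POINTS: Dict[str, float] = {"green": 100.0, "red": 0.0}  # red = No/Unanswered


def policy_score(answers: Sequence[str], points: Dict[str, float]) -> float:
    """Average the per-question scores of one policy (10 questions per category)."""
    if len(answers) != 10:
        raise ValueError("expected one answer per question (10)")
    return fmean(points[a] for a in answers)


print(policy_score(["green"] * 4 + ["yellow"] * 2 + ["red"] * 4, USER_CONTROL_POINTS))
# -> 50.0
```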

| Symbol | Description |
|---|---|
| i | Identity attribute |
| | Prior probability of exposure of attribute i |
| | Liability value (property loss) associated with i |
| | Set of ancestor attributes of i in the BN |
| | Set of descendant attributes of i in the BN |
| | Probability of i given that an ancestor is exposed |
| | Accessibility of i (Equation (5)) |
| | Post Effect of i (Equation (6)) |
| | Expected loss for i (Equation (7)) |
| | Normalized risk score for i |
| | Probability distribution vector for X |
| | Shannon entropy of random variable X |
| | Shannon entropy of probability distribution |
| | Random variable for PrivacyCheck question q |
| | Sum of entropies across all PrivacyCheck questions |
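
The entropy quantities in the notation table can be sketched with H(X) = -Σ p(x) log2 p(x). Two choices here are assumptions consistent with the notation above: the random variable for each question is its answer category across the app sample, and the total is the sum of per-question entropies. The answers are made-up data. A question whose answers are evenly split contributes more uncertainty than one answered identically for every app.

```python
import math
from collections import Counter
from typing import Dict, Sequence


def shannon_entropy(probs: Sequence[float]) -> float:
    """H(p) = -sum_k p_k log2 p_k in bits, treating 0 * log 0 as 0."""
    h = -sum(p * math.log2(p) for p in probs if p > 0)
    return h if h > 0 else 0.0  # avoid returning -0.0


def question_entropy(answers: Sequence[str]) -> float:
    """Entropy of one question's empirical answer distribution across apps."""
    counts = Counter(answers)
    return shannon_entropy([c / len(answers) for c in counts.values()])


def total_entropy(answers_by_question: Dict[str, Sequence[str]]) -> float:
    """Sum of per-question entropies across all PrivacyCheck questions."""
    return sum(question_entropy(a) for a in answers_by_question.values())


answers = {
    "Control Q1": ["green", "red", "green", "red"],   # evenly split -> 1 bit
    "GDPR Q3": ["green", "green", "green", "green"],  # unanimous -> 0 bits
}
print(total_entropy(answers))  # -> 1.0
```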

| App | PPA Score (%) | ImmuniWeb |
|---|---|---|
| Wiki | 43.63 | Low |
| Firefox Focus | 47.99 | Low |
| Kodi | 48.79 | Low |
| Qsm | 54.51 | Low |
| Duckduckgo | 67.39 | Medium |
| OpenVPN | 68.92 | Medium |
| Signal Private Messenger | 69.32 | Medium |
| Ted | 71.82 | Low |
| Blockchain Wallet | 73.67 | Medium |
| Telegram | 73.99 | Medium |

| Privacy Factor | Accuracy (%) |
|---|---|
| Contact Information Sharing | 73 |
| PII Usage | 72 |
| Third-Party Sharing | 63 |
| Data Retention | 60 |
| Location Data | 60 |
| Data Breach Notification | 57 |
| PII Collection Purpose | 57 |
| Children’s Privacy | 52 |
| Policy Change Notification | 44 |
| Data Aggregation/Profiling | 40 |

| Tool | Coverage (Out of 50) | Average Accuracy (%) |
|---|---|---|
| PrivacyCheck | 50 | 60 |
| Privee | 50 | 74 |
| ToS;DR | 14 | 57 |
| Usable Privacy | 14 | 59 |
| P3P | 1 | 100 |

| CNIL Step | PPA Score Approach | PrivacyCheck Score Approach |
|---|---|---|
| Identify Threats | Models the exposure of identity attributes as distinct privacy threats for each app or device. | Assesses the presence and coverage of privacy policy controls related to potential threats, such as unauthorized sharing or processing. |
| Assess Severity of Impact | Assigns a loss value to each identity attribute, reflecting its sensitivity and potential consequences of exposure. | Addresses severity indirectly, as some policy questions focus on sensitive data types (e.g., SSN, location). |
| Estimate Likelihood of Occurrence | Calculates the probability of exposure for each attribute, based on observed or modeled data flows in the ecosystem. | Higher PrivacyCheck scores indicate stronger policy controls, which are associated with a lower likelihood of privacy risks occurring. |
| Combine to Assess Risk | Aggregates probability and loss values into an overall risk score for each app (e.g., risk = probability × loss). | Provides a composite policy score as a proxy for the app’s overall risk mitigation practices and compliance. |
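
The "risk = probability × loss" combination in the CNIL mapping can be illustrated per attribute. In this sketch, summing the per-attribute products into one app-level value and ranking the contributions are assumptions. The probabilities and losses are invented.

```python
from typing import Dict, Iterable, List, Tuple


def risk_breakdown(attributes: Iterable[str], prob: Dict[str, float],
                   loss: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Per-attribute risk = probability x loss; app risk = sum over attributes (assumed)."""
    contributions = {a: prob[a] * loss[a] for a in attributes}
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return sum(contributions.values()), ranked


total, ranked = risk_breakdown(["SSN", "Email"], {"SSN": 0.1, "Email": 0.5},
                               {"SSN": 1000.0, "Email": 20.0})
print(total, ranked[0])  # SSN dominates: 100.0 of the 110.0 total
```

The ranked list shows which collected attributes drive an app's score, which is the kind of attribute-level view the PPA column describes.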

| Subgraph | Prior Change (PC) | Edge Weight (EW) | Accessibility (AC) | Post Effect (PE) | Privacy Risk (PR) |
|---|---|---|---|---|---|
| S1 | 0.031 | 0.915 | 0.052 | 0.216 | 0.896 |
| S2 | 0.169 | 1.00 | 0.487 | 0.019 | 0.901 |
| S3 | 0.806 | 0.999 | 0.333 | 0.434 | 0.934 |
| S4 | 0.019 | 0.914 | 0.445 | 0.107 | 0.913 |
| S5 | 0.007 | 0.700 | 0.405 | 0.146 | 0.917 |
| S6 | 0.524 | 0.897 | 0.336 | 0.035 | 0.899 |
| S7 | 0.058 | 0.800 | 0.515 | 0.379 | 0.903 |
| S8 | 1.00 | 0.901 | 0.228 | 0.172 | 0.914 |
| S9 | 0.089 | 0.885 | 0.269 | 0.320 | 0.900 |
| S10 | 0.552 | 0.781 | 0.336 | 0.714 | 1.00 |
| S11 | 0.086 | 0.786 | 0.430 | 0.001 | 0.898 |
| S12 | 0.069 | 0.699 | 1.00 | 0.148 | 0.922 |
| S13 | 0.012 | 0.584 | 0.268 | 0.513 | 0.973 |
| S14 | 0.001 | 0.896 | 0.353 | 0.055 | 0.902 |
| S15 | 0.126 | 0.900 | 0.568 | 1.00 | 0.968 |
| AVG | 0.237 | 0.844 | 0.402 | 0.284 | 0.923 |
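
The extremes and averages discussed in Section 5.2 can be rechecked directly from the table. The snippet only re-reads the published values for the PC, EW, AC, PE and PR columns. For each metric it reports the highest and lowest subgraph and the mean rounded to three decimals. These agree with the bullets in Section 5.2 and with the AVG row.

```python
from typing import Dict, Tuple

TABLE: Dict[str, Tuple[float, ...]] = {
    "S1": (0.031, 0.915, 0.052, 0.216, 0.896),
    "S2": (0.169, 1.00, 0.487, 0.019, 0.901),
    "S3": (0.806, 0.999, 0.333, 0.434, 0.934),
    "S4": (0.019, 0.914, 0.445, 0.107, 0.913),
    "S5": (0.007, 0.700, 0.405, 0.146, 0.917),
    "S6": (0.524, 0.897, 0.336, 0.035, 0.899),
    "S7": (0.058, 0.800, 0.515, 0.379, 0.903),
    "S8": (1.00, 0.901, 0.228, 0.172, 0.914),
    "S9": (0.089, 0.885, 0.269, 0.320, 0.900),
    "S10": (0.552, 0.781, 0.336, 0.714, 1.00),
    "S11": (0.086, 0.786, 0.430, 0.001, 0.898),
    "S12": (0.069, 0.699, 1.00, 0.148, 0.922),
    "S13": (0.012, 0.584, 0.268, 0.513, 0.973),
    "S14": (0.001, 0.896, 0.353, 0.055, 0.902),
    "S15": (0.126, 0.900, 0.568, 1.00, 0.968),
}
COLUMNS = ("PC", "EW", "AC", "PE", "PR")


def column_summary(table: Dict[str, Tuple[float, ...]]) -> Dict[str, Tuple[str, str, float]]:
    """Return (highest subgraph, lowest subgraph, mean rounded to 3 d.p.) per metric."""
    summary = {}
    for k, name in enumerate(COLUMNS):
        values = {sub: row[k] for sub, row in table.items()}
        summary[name] = (
            max(values, key=values.get),
            min(values, key=values.get),
            round(sum(values.values()) / len(values), 3),
        )
    return summary


for metric, (top, bottom, avg) in column_summary(TABLE).items():
    print(f"{metric}: highest {top}, lowest {bottom}, mean {avg}")
```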

| Feature | Coefficient | p-Value |
|---|---|---|
| Control Score | +0.123 | 0.267 |
| GDPR Score | +0.273 | <0.001 |
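
Coefficients like those above can be obtained by regressing a response on two predictors. Treating the PPA score as the response and the Control and GDPR scores as predictors is an assumption here. The sketch below fits a two-predictor ordinary least squares model by solving the 2×2 centred normal equations directly. It returns coefficients only. P-values also require standard errors and a t-distribution, which a statistics package such as statsmodels would normally supply. The data are synthetic, not the study's.

```python
from statistics import fmean
from typing import Sequence, Tuple


def ols_two_predictors(x1: Sequence[float], x2: Sequence[float],
                       y: Sequence[float]) -> Tuple[float, float, float]:
    """Fit y = b0 + b1*x1 + b2*x2 by ordinary least squares (centred normal equations)."""
    m1, m2, my = fmean(x1), fmean(x2), fmean(y)
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (c - my) for a, c in zip(x1, y))
    s2y = sum((b - m2) * (c - my) for b, c in zip(x2, y))
    det = s11 * s22 - s12 ** 2
    if abs(det) < 1e-12:
        raise ValueError("predictors are (nearly) collinear")
    b1 = (s1y * s22 - s2y * s12) / det  # Cramer's rule on the 2x2 system
    b2 = (s2y * s11 - s1y * s12) / det
    return my - b1 * m1 - b2 * m2, b1, b2


# Synthetic check (not study data): y = 1 + 2*x1 + 3*x2 exactly.
x1 = [0, 1, 2, 3, 4]
x2 = [1, 0, 2, 1, 3]
y = [4, 3, 11, 10, 18]
print(ols_two_predictors(x1, x2, y))  # approximately (1.0, 2.0, 3.0)
```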

| Data Type | Relevant Questions |
|---|---|
| Email | Control Q1, GDPR Q1 |
| Credit Card/Address | Control Q2, GDPR Q7 |
| SSN | Control Q3, GDPR Q5 |
| Location | Control Q5, GDPR Q1, GDPR Q7 |
| Children’s Data/Age | Control Q6, GDPR Q6 |
| General PII Sharing | Control Q4, Control Q7, GDPR Q2, GDPR Q8 |
| Data Retention / Deletion | Control Q9, GDPR Q3, GDPR Q4 |
| Behavioral/Aggregated | Control Q10, GDPR Q9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chang, K.-C.; Barber, S. Entropy-Based Correlation Analysis for Privacy Risk Assessment in IoT Identity Ecosystem. Entropy 2025, 27, 723. https://doi.org/10.3390/e27070723
