Abstract

Hyperparameter optimization (HPO), which is also called hyperparameter tuning, is a vital component of developing machine learning models. These parameters, which regulate the behavior of the machine learning algorithm and cannot be directly learned from the given training data, can significantly affect the performance of the model. In the context of relevance vector machine hyperparameter optimization, we have used zero-mean Gaussian weight priors to derive iterative equations through evidence function maximization. For a general Gaussian weight prior and Bayesian linear regression, we similarly derive iterative reestimation equations for hyperparameters through evidence function maximization. Subsequently, after using relative entropy and Bayesian optimization, the aforementioned non-closed-form reestimation equations can be partitioned into E and M steps, providing a clear mathematical and statistical explanation for the iterative reestimation equations of hyperparameters. The experimental result shows the effectiveness of the EM algorithm of hyperparameter optimization, and the algorithm also has the merit of fast convergence, except that the covariance of the posterior distribution is a singular matrix, which affects the increase in the likelihood.

1. Introduction

In machine learning, parameters are classified into two categories: model parameters, which are internal and configurable, and hyperparameters, which are external and cannot be estimated from data. Model parameters include the weights in a deep neural network, for example. Hyperparameters include batch size, the learning rate, and the number of hidden layers in a neural network [1]. Hyperparameter optimization (HPO) represents a pivotal aspect of machine learning model training, including the process of fine-tuning the hyperparameters of a model to improve its performance. Hyperparameters are parameters that are set before the learning process begins. The process of tuning these hyperparameters can significantly affect the model’s accuracy and generalization ability [2]. There are some main hyperparameter optimization methods as follows. Grid search: Grid search is the brute-force way of searching hyperparameters [3], with defined lower and higher bounds along with specific steps [4]. Grid search works based on the Cartesian product of the different sets of values, evaluates every configuration, and returns the combination with the best performance [5]. Grid search is a simple implementation that, however, can be highly inefficient for large search spaces due to its exhaustive nature. This problem is further compounded as data dimensionality increases. Random search: Random search involves randomly sampling hyperparameter combinations from a predefined search space. While less computationally intensive than grid search, it can often identify superior hyperparameter configurations because of its ability to explore the search space more efficiently [6,7]. Genetic algorithms: Genetic algorithms are inspired by the process of natural selection, whereby a population of candidate solutions (hyperparameter configurations) evolves over multiple generations [8,9]. They are useful for exploring large search spaces and can handle discrete and nonlinear search spaces effectively. Gradient-based optimization: Gradient-based optimization treats hyperparameters as continuous variables [10,11,12]. This approach is often used in neural network architectures and can be efficient for optimizing large-scale models. Bayesian optimization: Bayesian optimization employs a probabilistic model of the objective function (model performance) to identify the most promising hyperparameter configurations for evaluation. This approach is more efficient than grid and random search, particularly in the context of high-dimensional and noisy optimization problems [13,14,15,16]. Recently, automated machine learning (AutoML) platforms also have been applied to practical problems, which automate the entire machine learning pipeline, including data preprocessing, model selection, feature engineering, and hyperparameter optimization. They leverage various optimization techniques to find the best model configuration automatically and make machine learning more accessible to users with limited expertise in data science and machine learning by automating repetitive tasks and reducing the need for manual intervention. This allows experts to focus more on understanding the problem domain and interpreting the results rather than spending time on technical details [17,18,19,20]. Particle swarm optimization (PSO) is a popular algorithm for hyperparameter optimization recently. It is simple to implement and explores the global search space efficiently [21,22,23]. However, methods based on gradients, grid search, random search, genetic algorithms, and particle swarm optimization all lack rigorous mathematical explanation; they are more computational logic. Additionally, grid search, random search, and particle swarm optimization are all brute-force methods that are time-consuming and labor-intensive. Our proposed hyperparameter optimization EM algorithm, based on Bayesian optimization theory and relative entropy, has a strict mathematical derivation and explanation. The simulation results show that the algorithm has the advantage of fast convergence.

The relevance vector machine (RVM) represents a Bayesian sparse kernel technique that has been developed for the purpose of regression tasks. The RVM model for regression is linear, whose weight prior is Gaussian, defined by the following form [24]

where is a hyperparameter and .

In this paper, we will propose a Bayesian hyperparameter optimization in terms of a more general form of weight prior, defined as follows:

where is also a hyperparameter and .

We designate weight prior (2) as the general Gaussian weight prior (GGWP). Initially, leveraging evidence function maximization and GGWP, we derive non-closed-form iteratively reestimation equations for hyperparameters. Subsequently, we partition the aforementioned non-closed-form reestimation equations into E step and M step, elucidating the hyperparameter reestimation equations mathematically and statistically.

2. Related Mathematical Knowledge

Lemma 1.

.

Lemma 2.

.

The proofs of both Lemmas 1 and 2 refer to [24,25,26,27]

Definition 1.

Let be gradient and is the operator such that for ,

where .

The symbolic powers are referred to as operators that act upon a multivariate function with variables.

Lemma 3

(multivariate Taylor’s expansion). Let be a multivariate function, where and . For

, then there exists such that

Both Definition 1 and proof of Lemma 3 in detail refer to [28].

Corollary 1.

A quadratic function is defined as follows:

where is a scalar constant, and are both n-dimensional column vectors as well as is a n-dimensional inverse and symmetric matrix. Then, at the stationary point , we obtain the Taylor expansion of the function as follows:

where elements of the Hessian matrix are defined by

The proof of Corollary 1 refers to [29] in detail.

3. HPO via Maximization of the Evidence Function

3.1. Bayesian Linear Regression and Linear Basis Function Models

The linear model for regression is given with linear combinations of fixed nonlinear functions of the input variables as follows:

where is called bias parameter and is a basis function. For the sake of convenience, setting .

We rewrite (3) in matrix form [24]:

where and .

The target variable for regression is defined as the sum of a random noise and a deterministic function as follows:

where the noise is Gaussian, whose mean is zero and precision is (inverse variance). So, we obtain

Now, , called the input dataset, is now considered in conjunction with its target value vector . These data points are all drawn independently from the Gaussian distribution (6) based on our assumption. Thus, the likelihood function is obtained as follows:

According to (7), we define corresponding conjugate prior as follows:

From (7) and (8), we can shortly obtain the following posterior distribution:

where

The proofs of both (10) and (11) in detail refer to Appendix A in [29].

3.2. Evidence Approximation and Bayesian Model Comparison

Let us suppose we are presented with a series of models , . We compare these models and then choose the optimal one according to a Bayesian perspective, aiming to mitigate the risk of overfitting commonly associated with maximum likelihood approaches. We express uncertainty by a prior probability . In the context of a training set, it is reasonable to assume that all models have the same prior probability. This assumption is consistent with the notion that, in practice, there should be no inherent preference for any specific models . The objective is thus to assess the following posterior distribution given the dataset ,

The posterior distribution represents the model evidence, also known as the marginal likelihood. This term is interpreted as a likelihood function [24].

In this section, we present a fully Bayesian treatment based on the introduction of priors over the hyperparameters , and . The derivation of predictions can be accomplished by using the marginalization over these hyperparameters and the weight parameter. Nevertheless, it is difficult to completely marginalize all the variables in the analytical process. Consequently, we propose an approximation approach to identify these hyperparameters , and by the maximization of the marginal likelihood function after integrating over these parameters. In statistics, the method is commonly referred to as empirical Bayes [30,31], type 2 maximum likelihood [32], or generalized likelihood [33]. In the field of machine learning, it is frequently referred to as evidence approximation. [24,34]. Furthermore, a hyperprior over the parameters , and is introduced first. Subsequently, the following predictive distribution is obtained:

where is given according to (6) and is determined from (9).

If the posterior exhibits a sharp peak around , and , the predictive distribution can be derived by marginalizing over , where we set the hyperparameters to the value , and . So, we obtain

The posterior for the hyperparameters is expressed as

The values of , , and can be derived by the maximization of the marginal likelihood function based on the evidence approximation, particularly when the prior is relatively flat. By continuously evaluating the marginal likelihood, it is possible to identify its maxima, which enables the values of these hyperparameters to be determined solely from the training dataset.

3.3. The Evidence Function Evaluation

From (7), we derive the following result:

From (2), the following result is obtained:

It can be demonstrated that the posterior with respect to weight parameter is Gaussian as follows:

where

where .

The proofs of both (20) and (21) are detailed in Appendix A.

The evidence approximation is utilized to evaluate the hyperparameters. We obtain the marginal likelihood function (evidence function) by integrating out the weight parameters as follows:

Then, we obtain

where .

The proof of (23) is detailed in Appendix B.

In terms of (23), we take the logarithm of the marginal likelihood function, also called the log marginal likelihood function, and obtain the following result:

For convenience, we abbreviate the marginal likelihood function as the likelihood and the log marginal likelihood function as the log likelihood. Subsequently, the log likelihood will be maximized.

3.4. The Evidence Function Maximization

Now, is maximized with respect to . From (24) and Lemma 1, we derive the following result:

By utilization of (21), (25), and Lemma 1, we get the following result:

where we have used

By making use of (26), the following result was obtained

where represents the ith component of the principal diagonal of the covariance matrix .

The following result is obtained:

By making use of (27) and (28), the stationary point is obtained regarding such that

in which is the ith element of the matrix .

From (29), we obtain the following result:

The derivative of is obtained regarding by applying Lemma 2 and (21) as follows:

The application of Lemma 2 yields the following result.

We also get

From (32) and (33), the stationary point is obtained regarding by satisfying the following conditions:

From (34), we obtain

Let , we obtain

From the above derivation, we know that (30), (35) and (36) are non-closed-form reestimation equations for , and . In order to solve the problem, an iterative procedure is presented. Initially, after the mean and covariance are both computed using (20) and (21) by giving initial value , and , respectively, we reestimate hyperparameters alternately by employing (30), (35) and (36). We again alternately employ (20) and (21) to recompute the mean and covariance, repeating this process until a suitable standard for convergence is met. Nevertheless, it remains unclear whether this approach is accurate, as outlined in the reestimate iterative procedure. To gain a clearer understanding of the iterative reestimation equations, we will turn to the EM algorithm to analyze them from both mathematical and statistical perspectives.

4. HPO EM Algorithm via Bayesian Optimization and Relative Entropy

The expectation maximization (EM) algorithm is a highly effective and sophisticated approach for identifying maximum likelihood solutions within probabilistic models that incorporate latent variables [35,36,37].

The EM algorithm was derived by first treating the weights as latent variables, a process that is inherently straightforward. The log marginal likelihood function is then obtained by the weight parameters marginalization over the joint distribution

where is equal to the product of the prior and the likelihood given by the following result:

From Jensen’s inequality, we obtain a lower bound on as follows:

where is the variational probability distribution over the weights and denotes all other parameters. The EM algorithm is designed to maximize the log marginal likelihood function by using iterative maximization of the lower bound. For the E step, we maximize regarding the probability distribution for fixed hyperparameters , and . For the M step, we maximize regarding the hyperparameters , and for the fixed probability distribution . We rewrite the lower bound to enhance the understanding of the E step:

where is the relative entropy, also called Kullback–Leibler (KL) divergence. The KL divergence is always greater than or equal to zero, which is zero only and only if the two distributions are equal. The E step corresponds thus to equating the posterior on with the distribution , which means and [38]. Given that the posterior probability distribution is also Gaussian, the E step is reduced to the computation of its mean matrix and covariance matrix defined by (20) and (21), respectively.

To enforce the M step, is rewritten as a distinct form:

in which clearly is irrelevant to , , and and is the entropy of .

In order to perform the M step, from (40), we obtain

Finally, we obtain the following result:

The proof of (42) is derived in detail in Appendix C.

The stationary point of (42) is obtained with ease regarding , , and such that

So, the update laws are obtained as follows

The goal of the update equations is to achieve the maximization of the log marginal likelihood by the proposed EM algorithm. This result is also the same as maximizing the evidence function.

Finally, let us provide an intuitive explanation of our proposed EM algorithm for hyperparameter optimization. Firstly, we generate initial random hyperparameters values , , , which allows us to “guess” the initial hyperparameter. Then, we compute(guess) , , again by (46), (47), and (48). Subsequently, the log marginal likelihood is evaluated. By repeating this process, the algorithm alternates between refining the guesses and the log marginal likelihood gradually increases until it no longer changes—that is, once it has converged, the algorithm stops making further guesses for hyperparameters , , . This also implies that the hyperparameters have converged. Checking the convergence of the log likelihood function is easier to implement programmatically than checking the convergence of the hyperparameters. Different initial values for hyperparameters may lead to different local optima while the likelihood converges.

5. Experimental Set-Up

5.1. Synthetic Data

The parameter either is integrated out or is considered latent variables, so after sampling from a Gaussian distribution, its goal is to construct .

We firstly generate hyperparameters , , and randomly and choose a suitable linear basis function to get and . Subsequently, the EM algorithm is applied in order to maximize the likelihood or the log likelihood. The procedure is presented in detail as Algorithm 1:

| Algorithm 1: HPO EM algorithm for synthetic data | |

| 1. Generate randomly hyperparameters value , , and the dataset | |

| 2. Choose a suitable linear basis function to get an matrix by using (12). | |

| 3. Generate parameters value randomly sampled by (2). | |

| 4. Generate N-dimensional vector sampled randomly by the Gaussian distribution and then generate by (4) and (5), respectively. | |

| 5. E step. Compute the mean and covariance using the current hyperparameter values. | |

| , | (49) |

| . | (50) |

| 6. M step. Estimate again the hyperparameters by employing the mean and covariance obtained by step 5 and the following update equations | |

| , | (51) |

| , | (52) |

| . | (53) |

| 7. Compute the likelihood function or the log likelihood function given by the following result: | |

| or | |

| and then determine the convergence of the hyperparameters or the likelihood. If convergence is not satisfied, back to step 5. If the likelihood or the log likelihood converges, then the algorithm’s computational complexity is . | |

We apply Algorithm 1 to optimize hyperparameters value , , and , where the linear basis function is a Gaussian basis function. We have difficulty judging the convergence of hyperparameter values , , and , so we turn to judge the convergence of the likelihood or the log likelihood. It is reasonable because the convergence of hyperparameter values , , and means the likelihood or the log likelihood keeps constant; in other words, the convergence of the likelihood or the log likelihood. This is much easier to implement programmatically.

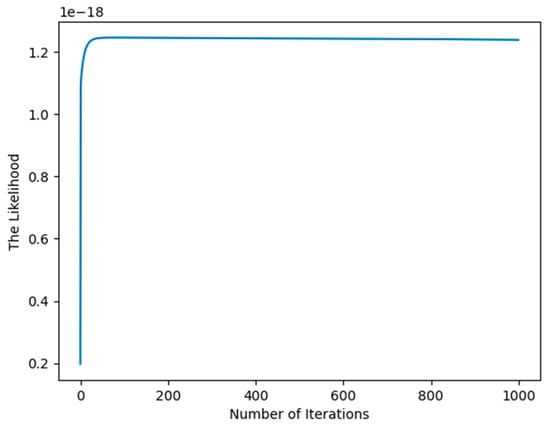

From Figure 1, we see that after approximately 10 iterations, the likelihood converges, so we can draw the conclusion that the EM algorithm has the advantage of fast convergence, which comes from strict interpretation mathematically and statistically.

Figure 1.

Illustration of the convergence of the EM algorithm for synthetic data.

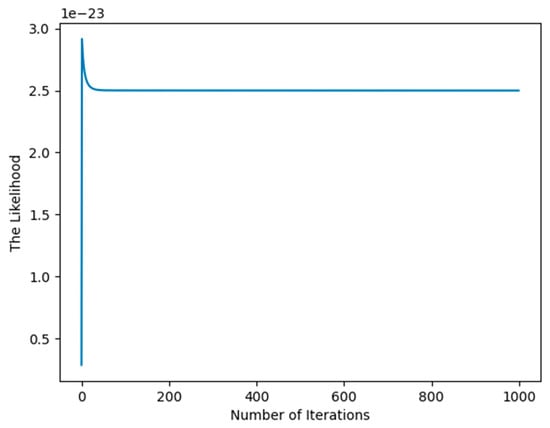

In the process of experimental set-up, the covariance of the posterior distribution defined by (50) may easily be a singular matrix, so we have to use a pseudo-inverse matrix to evaluate , which may produce the imprecise result that affects the increase in the likelihood function as shown in Figure 2.

Figure 2.

Illustration of singular covariance affecting the increase in the likelihood.

The singularity for arises from the initial value of the hyperparameter values , , and , the simplest and intuitive method is to randomly generate initial values of , , and again and again when the singularity occurs until the convergence criterion is met like Figure 2.

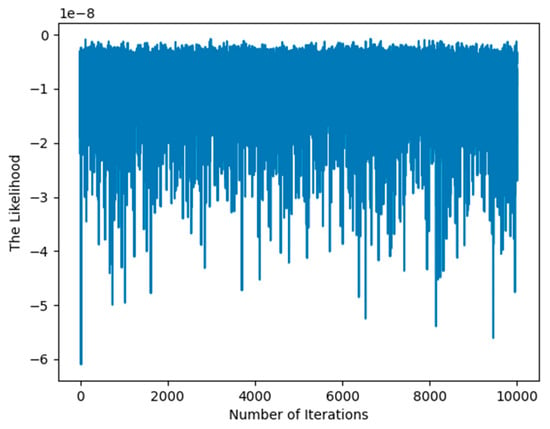

Particle swarm optimization (PSO) is an intuitive and computationally efficient metaheuristic that is highly effective for hyperparameter optimization across a wide range of machine learning models. For the same likelihood function, we use PSO to optimize the hyperparameter values , , and , as shown in Figure 3. After 10,000 iterations, the likelihood function still has not converged. This highlights the advantage of our proposed method’s fast convergence, which is attributed to its rigorous mathematical foundation.

Figure 3.

Illustration of convergence of the likelihood base on PSO.

5.2. The Diabetes Dataset

The diabetes dataset contains data from 442 diabetes patients, with each patient having measurements for 10 baseline variables, including age, sex, body mass index, average blood pressure, and six blood serum measurements. In addition, each patient has a response variable that represents a quantitative measure of disease progression one year after the baseline. This dataset is commonly used for predicting the progression of the disease and is one of the most frequently used datasets in machine learning. It is particularly well suited for research on regression problems.

Firstly, from the diabetes dataset, we obtain the input dataset , a matrix and the target value . We then generate hyperparameters , , randomly and choose a suitable linear basis function to get . Subsequently, the EM algorithm is applied in order to maximize the likelihood or the log likelihood. The procedure is presented in detail as Algorithm 2:

| Algorithm 2: HPO EM algorithm for the diabetes dataset | |

| 1. Generate randomly hyperparameters value , , . | |

| 2. Choose a suitable linear basis function to get an matrix by using (12). | |

| 3. E step. Compute the mean and covariance using the current hyperparameter values. | |

| (54) | |

| (55) | |

| 4. M step. Estimate again the hyperparameters by employing the mean and covariance obtained by step 3 and the following update equations | |

| (56) | |

| (57) | |

| (58) | |

| 5. Compute the likelihood function or log likelihood function given by the following result: | |

| or | |

| and then determine the convergence of the hyperparameters or the likelihood. If convergence criterion is not satisfied, go back to step 3. | |

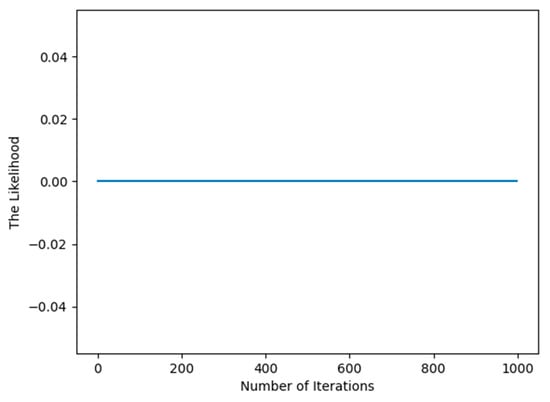

From Figure 4, we can also draw the same conclusion that the EM algorithm has the advantage of fast convergence for the famous dataset of machine learning after the first iteration.

Figure 4.

Illustration of the convergence of the EM algorithm for the diabetes dataset.

6. Conclusions

In this paper, we present the general Gaussian weight prior rather than zero-mean Gaussian weight priors for the hyperparameter optimization in the field of machine learning. We firstly derive non-closed-form iterative reestimation equations for hyperparameters by evidence function maximization. Though we know how to optimize the hyperparameters, we can’t understand the iterative reestimation equations clearly. To better understand non-closed-form reestimation equations more clearly, we have to resort to the EM algorithm. After using Bayesian theory and optimization, the EM algorithm partitions the iterative reestimation equations into E and M steps, which provides a clear interpretation for the iterative reestimation equations both mathematically and statistically. The experimental result shows the effectiveness of the EM algorithm of hyperparameter optimization, and the EM algorithm also has the advantage of fast convergence, except that the variance of the posterior distribution defined by (50) is a singular matrix, which affects the increase in the likelihood.

Author Contributions

Conceptualization, D.Z. and C.M.; methodology, D.Z.; software, D.Z. and P.W.; validation, D.Z., C.M. and P.W.; formal analysis, D.Z.; investigation, D.Z.; resources, Y.G.; data curation, Y.G.; writing—original draft preparation, D.Z. and C.M.; writing—review and editing, D.Z. and P.W.; visualization, D.Z.; supervision, C.M.; project administration, C.M.; funding acquisition, Y.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Fundamental Research Funds for the Universities in Heilongjiang Province (Project Numbers: YWF10236220242, YWF10236240126).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The datasets used and/or analyzed during the current study available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

From (17) and (18), the following result is obtained

and

From (10), (11), and using and , we obtain

Appendix B

By substituting (17) and (18) into (22), the following result is obtained

together with

Firstly, we compute the gradient of and let it be zero. Then, we obtain the stationary point of as follows:

Let

Then, we obtain the following result:

We obtain the following result by using Corollary 1:

along with

Thus, we obtain

where we have employed the following result:

Appendix C

We obtain the following the result from the first term of (41)

where is used.

We get the following result:

where we have used , where is the expectation over the given probability distribution . is the trace of a matrix.

Finally, we get

The second term of (41) is now computed, and then the following result is obtained:

The following result is obtained

where is used.

The result is obtained as follows:

Finally, we get

References

- Alibrahim, H.; Ludwig, S.A. Hyperparameter Optimization: Comparing Genetic Algorithm against Grid Search and Bayesian Optimization. In Proceedings of the 2021 IEEE Congress on Evolutionary Computation (CEC), Krakow, Poland, 28 June–1 July 2021. [Google Scholar]

- Algorain, F.T.; Alnaeem, A.S. Deep Learning Optimisation of Static Malware Detection with Grid Search and Covering Arrays. Telecom 2023, 4, 249–264. [Google Scholar] [CrossRef]

- Claesen, M.; Simm, J.; Popovic, D.; Moreau, Y.; Moor, B.D. Easy Hyperparameter Search Using Optunity. arXiv 2014. [Google Scholar] [CrossRef]

- Syarif, I.; Prugel-Bennett, A.; Wills, G. SVM parameter optimization using grid search and genetic algorithm to improve classification performance. TELKOMNIKA (Telecommun. Comput. Electron. Control.) 2016, 14, 1502–1509. [Google Scholar] [CrossRef]

- Liu, B. A Very Brief and Critical Discussion on AutoML. arXiv 2018. [Google Scholar] [CrossRef]

- Bergstra, J.; Bengio, Y. Random Search for Hyper-Parameter Optimization. J. Mach. Learn. Res. 2012, 13, 281–305. [Google Scholar]

- Snoek, J.; Larochelle, H.; Adams, R. Practical Bayesian Optimization of Machine Learning Algorithms. Adv. Neural Inf. Process. Syst. 2012, 4, 1–9. [Google Scholar]

- Di Francescomarino, C.; Dumas, M.; Federici, M.; Ghidini, C.; Maggi, F.M.; Rizzi, W.; Simonetto, L. Genetic Algorithms for Hyperparameter Optimization in Predictive Business Process Monitoring. Inf. Syst. 2018, 74, 67–83. [Google Scholar] [CrossRef]

- Elsken, T.; Metzen, J.H.; Hutter, F. Neural architecture search: A survey. J. Mach. Learn. Res. 2019, 20, 1997–2017. [Google Scholar]

- Thiede, L.A.; Parlitz, U. Gradient based hyperparameter optimization in Echo State Networks. Neural Netw. 2019, 115, 23–29. [Google Scholar] [CrossRef] [PubMed]

- Bengio, Y. Practical Recommendations for Gradient-Based Training of Deep Architectures. In Neural Networks: Tricks of the Trade, 2nd ed.; Montavon, G., Orr, G.B., Müller, K.-R., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 437–478. [Google Scholar]

- Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2016. [Google Scholar] [CrossRef]

- Bergstra, J.; Bardenet, R.; Kégl, B.; Bengio, Y. Algorithms for Hyper-Parameter Optimization. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Granada, Spain, 12–15 December 2011. [Google Scholar]

- Snoek, J.; Rippel, O.; Swersky, K.; Kiros, R.; Satish, N.; Sundaram, N.; Patwary, M.; Prabhat, M.; Adams, R. Scalable Bayesian Optimization Using Deep Neural Networks. arXiv 2015. [Google Scholar] [CrossRef]

- Shahriari, B.; Swersky, K.; Wang, Z.; Adams, R.P.; Freitas, N.D. Taking the Human Out of the Loop: A Review of Bayesian Optimization. Proc. IEEE 2016, 104, 148–175. [Google Scholar] [CrossRef]

- Yao, C.; Cai, D.; Bu, J.; Chen, G. Pre-training the deep generative models with adaptive hyperparameter optimization. Neurocomputing 2017, 247, 144–155. [Google Scholar] [CrossRef]

- Feurer, M.; Klein, A.; Eggensperger, K.; Springenberg, J.; Blum, M.; Hutter, F. Efficient and robust automated machine learning. Adv. Neural Inf. Process. Syst. 2015, 28, 2944–2952. [Google Scholar]

- Kaul, A.; Maheshwary, S.; Pudi, V. AutoLearn—Automated Feature Generation and Selection. In Proceedings of the 2017 IEEE International Conference on Data Mining (ICDM), Orleans, LA, USA, 18–21 November 2017. [Google Scholar]

- Jin, H.; Song, Q.; Hu, X. Auto-Keras: An Efficient Neural Architecture Search System. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019. [Google Scholar] [CrossRef]

- Salehin, I.; Islam, M.S.; Saha, P.; Noman, S.M.; Tuni, A.; Hasan, M.M.; Baten, M.A. AutoML: A systematic review on automated machine learning with neural architecture search. J. Inf. Intell. 2024, 2, 52–81. [Google Scholar] [CrossRef]

- Chartcharnchai, P.; Jewajinda, Y.; Praditwong, K. A Categorical Particle Swarm Optimization for Hyperparameter Optimization in Low-Resource Transformer-Based Machine Translation. In Proceedings of the 28th International Computer Science and Engineering Conference (ICSEC), Khon Kaen, Thailand, 6–8 November 2024. [Google Scholar]

- Indrawati, A.; Wahyuni, I.N. Enhancing Machine Learning Models through Hyperparameter Optimization with Particle Swarm Optimization. In Proceedings of the International Conference on Computer, Control, Informatics and Its Applications (IC3INA), Bandung, Indonesia, 4–5 October 2023. [Google Scholar]

- Marchisio, A.; Ghillino, E.; Curri, V.; Carena, A.; Bardella, P. Particle swarm optimization hyperparameters tuning for physical-model fitting of VCSEL measurements. In Proceedings of the SPIE OPTO, San Francisco, CA, USA, 27 January–1 February 2024. [Google Scholar]

- Bishop, M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006. [Google Scholar]

- Golub, G.H.; Van Loan, C.F. Matrix Computations; JHU Press: Baltimore, MD, USA, 2013. [Google Scholar]

- Lütkepohl, H. Handbook of Matrices; John Wiley & Sons: Hoboken, NJ, USA, 1997. [Google Scholar]

- Aggarwal, C. Linear Algebra and Optimization for Machine Learning; Springer: Berlin/Heidelberg, Germany, 2020. [Google Scholar]

- Simovici, D. Mathematical Analysis for Machine Learning and Data Mining; World Scientific: Singapore, 2018. [Google Scholar]

- Zou, D.; Tong, L.; Wang, J.; Fan, S.; Ji, J. A Logical Framework of the Evidence Function Approximation Associated with Relevance Vector Machine. Math. Probl. Eng. 2020, 2020, 2548310. [Google Scholar] [CrossRef]

- Bernardo, M.; Smith, A.F. Bayesian Theory; John Wiley & Sons: Hoboken, NJ, USA, 2009. [Google Scholar]

- Gelman, A.; Carlin, J.B.; Stern, H.S.; Rubin, D.B. Bayesian Data Analysis; Chapman and Hall/CRC: Boca Raton, FL, USA, 1995. [Google Scholar]

- Berger, J.O. Statistical Decision Theory and Bayesian Analysis; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013. [Google Scholar]

- Wahba, G. A comparison of GCV and GML for choosing the smoothing parameter in the generalized spline smoothing problem. Ann. Stat. 1985, 13, 1378–1402. [Google Scholar] [CrossRef]

- MacKay, D.J.C. Bayesian Interpolation. Neural Comput. 1992, 4, 415–447. [Google Scholar] [CrossRef]

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd ed.; Springer Series in Statistics; Springer: Berlin/Heidelberg, Germany, 2009. [Google Scholar]

- McLachlan, G.J.; Krishnan, T. The EM Algorithm and Extensions, 2nd ed.; Wiley-Interscience: Hoboken, NJ, USA, 2008. [Google Scholar]

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. 1977, 39, 1–22. [Google Scholar] [CrossRef]

- Quinonero-Candela, J. Sparse probabilistic linear models and the RVM. In Learning with Uncertainty: Gaussian Processes and Relevance Vector Machines; Technical University of Denmark: Lyngby, Denmark, 2004. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).