Abstract

This study introduces an innovative two-stage framework for monitoring and diagnosing high-dimensional data streams with sparse changes. The first stage utilizes an exponentially weighted moving average (EWMA) statistic for online monitoring, identifying change points through extreme value theory and multiple hypothesis testing. The second stage involves a fault diagnosis mechanism that accurately pinpoints abnormal components upon detecting anomalies. Through extensive numerical simulations and electron probe X-ray microanalysis applications, the method demonstrates exceptional performance. It rapidly detects anomalies, often within one or two sampling intervals post-change, achieves near 100% detection power, and maintains type-I error rates around the nominal 5%. The fault diagnosis mechanism shows a 99.1% accuracy in identifying components in 200-dimensional anomaly streams, surpassing principal component analysis (PCA)-based methods by 28.0% in precision and controlling the false discovery rate within 3%. Case analyses confirm the method’s effectiveness in monitoring and identifying abnormal data, aligning with previous studies. These findings represent significant progress in managing high-dimensional sparse-change data streams over existing methods.

1. Introduction

In recent years, the rapid advancement of modern sensing and data acquisition technologies has enabled the real-time collection of high-dimensional data streams across diverse industrial applications. Such data streams often exhibit shifts in statistical properties, transitioning from an in-control (IC) state to an out-of-control (OC) state due to anomalies. For instance, in electron probe X-ray microanalysis, real-time monitoring of compositional changes in materials requires the detection of subtle shifts in high-dimensional spectral data, which may occur either abruptly or gradually. Addressing such scenarios requires robust online monitoring and fault diagnosis methods tailored for high-dimensional and sparsely changing data streams.

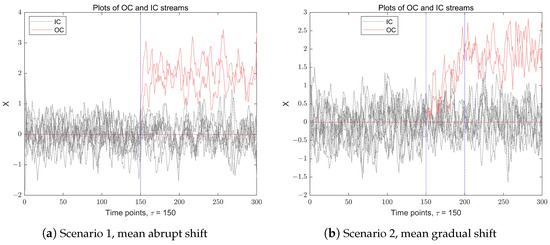

Before proceeding, for an intuitive explanation, we present a simple example to illustrate IC and OC observations in a multidimensional data stream. Figure 1 illustrates a p-dimensional () time series data stream recorded over time points. The IC observations, denoted as , are independently and identically distributed (i.i.d.) multivariate normal random variables with the mean vector , and a covariance matrix . After a change point , the components of the observations experience an anomaly, resulting in a shift in the data stream. The figure presents two distinct scenarios of mean-shift anomalies in a multidimensional data stream: the left subfigure depicts a mean abrupt shift scenario, where the change occurs suddenly after a time point , while the right subfigure illustrates a mean gradual shift scenario, where the shift changes in a duration of about a 50-time-point interval, ultimately stabilizing when .

Figure 1.

The data stream with IC data (gray) and OC data (red), when , , , , . The left subfigure is the mean abrupt shift case, and the right subfigure is the mean gradual shift case.

In the examples mentioned, the change points in the data stream are easily identifiable due to the low dimensionality, allowing easy visual detection. However, in real-world applications, monitoring complex and high-dimensional data streams is more challenging. Anomalies may only appear in a small subset of variables at any given time, and the intricate interactions and high dimensionality of these variables complicate online monitoring and fault detection. This is especially true when pinpointing the exact time and specific elements that show abnormal variations. For instance, in semiconductor manufacturing, subtle sensor anomalies may indicate critical equipment issues, while in energy infrastructure, gradual pressure drifts could signal potential failures. Timely detection and diagnosis of such anomalies are crucial for operational safety and efficiency. Yet, the high dimensionality, sparsity of changes, and complex variable interdependencies present significant challenges to existing monitoring and diagnostic frameworks.

To overcome these challenges, a substantial body of work has addressed the monitoring and diagnosis of high-dimensional data streams. Here, we roughly divide it into the following three categories.

(1) Statistical and Control Chart-Based Methods. Statistical and control chart-based methods are widely employed for monitoring high-dimensional data streams, with prominent techniques including Hotelling’s $T^2$, Multivariate Exponentially Weighted Moving Average (MEWMA), Multivariate Cumulative Sum (MCUSUM), and principal component analysis (PCA)-based monitoring. Zou et al. (2015) [1] introduce a robust control chart utilizing local CUSUM statistics, demonstrating effective detection capabilities across both sparse and dense scenarios, albeit with certain limitations in parameter assumptions and implementation complexity. Ebrahimi et al. (2021) [2] develop an adaptive PCA-based monitoring method incorporating compressed sensing principles and adaptive lasso for change source identification, though its computational demands may be substantial for large-scale datasets. Li (2019) [3] proposes a flexible two-stage monitoring procedure with user-defined IC average run length (ARL) and type-I error rates, which requires meticulous calibration of control limits for both stages. For comprehensive reviews of online monitoring and diagnostic methods based on statistical process control (SPC), refer to studies such as [4,5,6,7,8,9,10,11,12].

(2) Information-Theoretic and Entropy-Based Methods. Entropy-based measures, including Shannon entropy, Renyi entropy, and approximate entropy, are widely used to assess the complexity and randomness of data streams in monitoring and diagnostics. Recent research has made significant progress in both theoretical and practical aspects of this field. Mutambik (2024) [13] develops E-Stream, an entropy-based clustering algorithm for real-time high-dimensional Internet of Things (IoT) data streams. By incorporating an entropy-based feature ranking method within a sliding window framework, the algorithm reduces dimensionality, improving clustering accuracy and computational efficiency. However, its dependence on manual parameter tuning restricts its adaptability to diverse datasets. Wan et al. (2024) [14] propose an entropy-based method for monitoring pressure pipelines using acoustic signals. Their approach combines Denoising Autoencoder (DAE) and Generative Adversarial Network (GAN) with entropy-based loss functions for noise reduction. While effective, the adversarial training process requires substantial computational resources, limiting its feasibility for real-time applications in resource-constrained environments. For a deeper understanding of recent advancements in entropy-based theories, methods, and applications for data stream monitoring and diagnostics, see [15,16,17,18,19]. These studies highlight the latest developments in this area.

(3) Machine Learning and Data-Driven Methods. In recent years, machine learning and data-driven methods have become increasingly important for monitoring and diagnostics in various fields. Online learning techniques, such as Online Support Vector Machines (OSVMs), Online Random Forests, and Stochastic Gradient Descent (SGD), have been successfully applied to real-time monitoring tasks. Additionally, deep learning models like Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and autoencoders have shown strong performance in capturing temporal patterns and detecting anomalies in high-dimensional data streams. Recent developments in this area include OLFA (Online Learning Framework for sensor Fault diagnosis Analysis) by Yan et al. (2023) [20], which provides a solution for real-time fault diagnosis in autonomous vehicles by addressing the non-stationary nature of sensor faults. Chen et al. (2024) [21] present a real-time fault diagnosis method for multisource heterogeneous information fusion based on two-level transfer learning (TTDNN), with applications to gearbox and bearing datasets, among others. Another important contribution is from Li et al. (2025) [22], who propose a novel framework combining Deep Reinforcement Learning (DRL) with SPC for online monitoring of high-dimensional data streams under limited resources. Although their approach shows good scalability through deep neural networks, the computational demands of the Double Dueling Q-network training process may be a limitation for real-time applications with strict computational constraints. For comprehensive reviews of machine learning-based online monitoring and diagnostic methods, see studies such as [21,23,24,25,26,27].

Despite these advancements, three key limitations remain. First, many SPC methods face computational challenges in ultra-high dimensions due to reliance on covariance matrix inversions or extensive hypothesis testing. Second, entropy-based techniques, though effective for noise reduction, show limited sensitivity to sparse changes where only a few variables deviate, while machine learning approaches often demand substantial labeled data that may be unavailable. Third, current diagnostic procedures typically emphasize either speed or accuracy, struggling to achieve both real-time alerts and precise root-cause identification. This paper bridges these gaps by proposing a two-stage monitoring and diagnostic framework designed for high-dimensional data streams with sparse mean shifts. The framework addresses two issues. The first is online monitoring, which aims to check whether the data stream has changed over time and to accurately estimate the change point after the change occurs. The second is fault diagnosis, which aims to identify the components that have undergone abnormal changes and thereby helps eliminate the root causes of the change.

As the EWMA statistic is sensitive to small changes and includes the information of previous samples, we use it for online monitoring [28]. At the first stage, we construct a monitoring statistic based on the EWMA statistic. Under certain conditions, we prove the asymptotic distribution of the monitoring statistic and then obtain the threshold of online monitoring. At any time point, if the monitoring statistic value is greater than the threshold, a real-time alert is issued, indicating that the data stream is out of control. Then, we move into the second stage, fault diagnosis, to identify the components that have undergone abnormal changes. Specifically, when the monitoring procedure proposed in the first stage signals that the data stream is abnormal, we allow the monitoring procedure to continue running for a while to obtain more sample data. We then conduct multiple hypothesis tests on the data before and after the alarm to determine which components of the data stream have experienced anomalies. The two-stage monitoring and diagnosis scheme in this study can not only correctly estimate the change point in time but also accurately identify the OC components of the data stream.

The contributions of this paper are as follows: (1) Methodological Innovation: This study introduces an EWMA-based monitoring statistic that leverages max-norm aggregation to enhance sensitivity to sparse changes. After its asymptotic distribution is derived, a dynamic thresholding mechanism is established for real-time anomaly detection. (2) Integrated Diagnosis: Upon detecting an OC state, the framework employs a delayed multiple hypothesis testing procedure to isolate anomalous components. This approach minimizes false discoveries while accommodating temporal dependencies in post-alarm data. (3) Industrial Applicability: Our method is validated through electron probe X-ray microanalysis (EPXMA), a critical technique in materials science for real-time composition monitoring. The method’s efficiency in handling high-dimensional sparse shifts makes it equally viable for IoT-enabled predictive maintenance and industrial process control.

This paper is structured as follows: Section 2 formulates the monitoring and diagnosis problem, detailing the EWMA statistic, fault isolation strategy, and performance metrics. Section 3 evaluates the method via simulations, benchmarking against state-of-the-art techniques. Section 4 demonstrates its practicality through an EPXMA case study, highlighting its superiority in detecting micron-scale material defects. Conclusions and future directions are presented in Section 5.

2. Methodology

2.1. Problem Formulation

Consider a high-dimensional data stream denoted by , where each observation denotes a p-dimensional vector at time point t. In the IC state, the observations are generated from , with and , . However, following an unknown change point , the process experiences a mean shift from to , transitioning to an OC state. In practical applications, process changes are typically driven by only a small subset of components within the data stream. Consequently, the shift vector exhibits sparsity, with most elements being zero and only a few non-zero components. It is important to note that the transition from to can occur either abruptly or gradually, representing two distinct scenarios of mean-shift anomalies: abrupt mean shifts and gradual mean shifts.

This study aims to address two critical challenges in high-dimensional data stream monitoring: The first one is to propose an effective online monitoring method that can accurately estimate the location of the change point. Once the data stream changes abnormally, it can issue an alarm in time. The second task is to accurately identify the components of abnormal changes in high-dimensional data streams while controlling the error rate.

2.2. The EWMA Statistic

As is well known, the EWMA statistic is sensitive to small changes, and it includes information from previous samples [29]. Thus, an EWMA statistic is chosen in this study to monitor a high-dimensional data stream. The EWMA sequence is defined by

where is a weight parameter. As described in Feng et al. (2020) [30], a smaller value of leads to a quicker detection of smaller shifts, and the detailed comparison results are provided in the numerical simulation section.
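As an illustration of the recursion, the following minimal sketch assumes the standard componentwise EWMA form, W_t = λ·X_t + (1 − λ)·W_{t−1}. The function names `ewma_update` and `ewma_path`, the argument `lam` (the weight parameter), and the starting vector `w0` are hypothetical and chosen for this example only. The exact notation and initialization used in this paper may differ.

```python
from typing import List, Sequence


def ewma_update(prev: Sequence[float], x: Sequence[float], lam: float) -> List[float]:
    """One componentwise EWMA step: lam * x_t + (1 - lam) * W_{t-1}."""
    if not 0.0 < lam <= 1.0:
        raise ValueError("lam must lie in (0, 1]")
    return [lam * xj + (1.0 - lam) * wj for wj, xj in zip(prev, x)]


def ewma_path(stream: Sequence[Sequence[float]], w0: Sequence[float], lam: float) -> List[List[float]]:
    """Run the recursion over a whole stream, starting from the vector w0."""
    path = []
    w = list(w0)
    for x in stream:
        w = ewma_update(w, x, lam)
        path.append(w)
    return path


if __name__ == "__main__":
    # Two 2-dimensional observations, started at zero, with lam = 0.5.
    print(ewma_path([[1.0, 2.0], [1.0, 2.0]], [0.0, 0.0], 0.5))
```

With a small `lam`, each new observation moves the statistic only slightly, so the sequence averages over a longer history. This is the usual explanation for its sensitivity to small sustained shifts.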

Let , the j-th component of is denoted as

where is prearranged, i.e., for . It is obvious that when the data stream is IC, we have

As , we have , then the asymptotic distribution of is as follows,

Correspondingly, the j-th component of obeys the following normal distribution, i.e.,

where is the j-th diagonal element of . However, once the mean value has undergone abnormal changes, does not follow the multivariate normal distribution mentioned above.
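The limiting covariance used above can be recovered by unrolling the EWMA recursion. The following is a brief sketch in assumed notation, which may differ from the original: $W_t$ is the EWMA vector, $X_t$ the observation, $\lambda$ the weight parameter, $\Sigma$ the IC covariance matrix, and $W_0$ a fixed starting value. It also assumes i.i.d. IC observations.

$$
W_t = (1-\lambda)^t W_0 + \lambda \sum_{i=0}^{t-1} (1-\lambda)^i X_{t-i},
$$

$$
\mathrm{Cov}(W_t) = \lambda^2 \sum_{i=0}^{t-1} (1-\lambda)^{2i}\, \Sigma
= \frac{\lambda}{2-\lambda}\left[1-(1-\lambda)^{2t}\right]\Sigma
\;\longrightarrow\; \frac{\lambda}{2-\lambda}\,\Sigma \quad (t \to \infty).
$$

The second equality uses the geometric sum together with $1-(1-\lambda)^2 = \lambda(2-\lambda)$.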

2.3. Online Monitoring Procedure

Without loss of generality, we assume that the mean vectors of , , , …, , …mentioned above are , , …, ,…, respectively. Thus, the online monitoring of high-dimensional data streams can be cast as a change point detection problem in hypothesis-testing form, where the null and alternative hypotheses are

It is obvious that the monitored data stream is IC up to time point t if is accepted, but a rejection of indicates that some components of the stream have changed abnormally after the change point .

Therefore, we construct the test statistic as the following max-norm information:

Intuitively, the larger the value of , the more likely it is that the observation is abnormal at time point t. If exceeds a threshold, it implies that an anomaly has occurred.

It should be noted that the mean and covariance values of are unknown, so reasonable estimators of and are needed. Let be the initial IC data stream; the sample mean and covariance of these m observations are defined as and , respectively.

Consequently, the estimated max-norm monitoring statistic is defined as

where is the j-th main diagonal element of .
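To make the max-norm aggregation concrete, the sketch below assumes the statistic is the largest squared standardized EWMA component, with each component scaled by its asymptotic variance λ/(2 − λ) times the estimated IC variance. This form is an assumption based on the surrounding description, not a transcription of the paper's equation. The names `max_norm_statistic`, `mu_hat`, and `var_hat` are hypothetical.

```python
from typing import Sequence


def max_norm_statistic(
    w: Sequence[float],
    mu_hat: Sequence[float],
    var_hat: Sequence[float],
    lam: float,
) -> float:
    """Largest squared standardized EWMA component (assumed form).

    w       : current EWMA vector
    mu_hat  : estimated IC mean of each component
    var_hat : estimated IC variance of each component (diagonal of S)
    lam     : EWMA weight parameter
    """
    scale = lam / (2.0 - lam)  # asymptotic variance factor of the EWMA
    return max(
        (wj - mj) ** 2 / (scale * vj)
        for wj, mj, vj in zip(w, mu_hat, var_hat)
    )


if __name__ == "__main__":
    # Only the second component deviates from its IC mean.
    print(max_norm_statistic([0.0, 1.0], [0.0, 0.0], [1.0, 1.0], lam=0.2))
```

Taking a maximum rather than a sum means a single strongly shifted component is enough to raise the statistic. This suits sparse changes.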

For simplicity, let , , it is obvious that, , . The monitoring statistic can be rewritten as

Let , then is a high-dimensional normal random vector with the mean vector of zero and the covariance matrix of for , where the diagonal for . In order to obtain the threshold of online monitoring, we need to prove the asymptotic distribution of the statistic when the null hypothesis holds.

To this end, this requires the following two assumptions:

A.1.

for some constant ;

A.2.

for some constant .

Assumptions (A.1) and (A.2) are mild and common assumptions that are widely used in the high-dimensional means test under dependence. Under the above two assumptions, Proposition 1 studies the asymptotic distribution of when the null hypothesis holds.

Proposition 1.

Under the assumptions of (A.1) and (A.2), when the null hypothesis holds, for any , follows the asymptotic distribution

Proposition 1 shows that follows the type I extreme value distribution with the cumulative distribution function . The proof of this proposition is similar to that of Lemma 6 in Cai et al. (2014) [31], so it is omitted here.

Thus, the data stream shows abnormal change at time point t, if

where is the threshold at a given significance level , and

It should be noted that not all the observations of are considered as change points, since these points may also be outliers. Therefore, when occurs, we continue to monitor for a while to obtain some more observations, and we confirm the alarm only if all the subsequent values of are greater than . The change point can then be estimated naturally by

In this case, we claim that the data stream changes abnormally after time point , and the process enters into an OC state.
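The thresholding and confirmation logic can be sketched as follows. The threshold function assumes the limiting law from Cai et al. (2014) for a maximum of squared standardized components: the statistic minus 2 log p plus log log p converges to the type I extreme value distribution with CDF exp(−π^(−1/2) exp(−x/2)). The confirmation rule assumes the change point is estimated as the start of the first run of `confirm` consecutive exceedances. Both assumptions, and the names `gumbel_threshold` and `first_confirmed_alarm`, are illustrative and may not match the paper's exact equations.

```python
import math
from typing import Optional, Sequence


def gumbel_threshold(p: int, alpha: float) -> float:
    """Approximate level-alpha threshold for a max of p squared standardized terms."""
    if p < 2 or not 0.0 < alpha < 1.0:
        raise ValueError("need p >= 2 and 0 < alpha < 1")
    # (1 - alpha) quantile of the CDF exp(-exp(-x / 2) / sqrt(pi)).
    q_alpha = -math.log(math.pi) - 2.0 * math.log(math.log(1.0 / (1.0 - alpha)))
    return 2.0 * math.log(p) - math.log(math.log(p)) + q_alpha


def first_confirmed_alarm(
    stats: Sequence[float], threshold: float, confirm: int
) -> Optional[int]:
    """Return the 1-based start of the first run of `confirm` exceedances.

    Isolated exceedances are treated as outliers and ignored.
    Returns None if no such run exists.
    """
    run = 0
    for t, value in enumerate(stats, start=1):
        run = run + 1 if value > threshold else 0
        if run == confirm:
            return t - confirm + 1
    return None


if __name__ == "__main__":
    thr = gumbel_threshold(p=200, alpha=0.05)
    print(round(thr, 3))
    # The exceedance at t = 2 is isolated; the confirmed run starts at t = 4.
    print(first_confirmed_alarm([1, 20, 1, 20, 21, 22], thr, confirm=3))
```

A longer confirmation window lowers the chance of reacting to an isolated outlier but delays the alarm by the same number of sampling intervals.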

2.4. Fault Diagnosis Procedure

In the first stage, we keep monitoring a p-dimensional data stream by using the monitoring statistics . After the OC alarm is issued, we enter the second stage, statistical fault diagnosis, to identify the components that have undergone abnormal changes. To this end, we continue to monitor the data stream for a while and collect some more OC observations. The diagnostic problem can be regarded as the following multiple hypothesis testing problem. The null hypothesis and the alternative hypothesis are

It is obvious that if is accepted, the j-th component of the stream is IC; otherwise, a rejection indicates that the j-th component of the stream is OC when .

To address this problem, a diagnostic statistic is proposed in the following form,

where is the mean value of the observed samples, and the sample variance is defined as that in Equation (6). A large value of indicates that abnormal changes may have occurred in the data stream. Therefore, the null hypothesis in Equation (11) is rejected if exceeds a threshold.

In many applied multiple testing problems, there are many test strategies, such as the traditional critical value test methods and some false discovery rate (FDR) controlling procedures [32,33,34]. It is obvious that , , and as under the null hypothesis . Thus, the diagnostic statistic follows the chi-squared distribution

In the diagnosis process, we should ensure that the global per-comparison error rate (PCER) can be controlled within a prespecified level . Without loss of generality, we assume the first components of the high-dimensional observations are IC, and the remaining components are OC when . The PCER can be defined as the expected number of type-I errors divided by the number of hypotheses, that is,

Therefore, we need to determine such that Equation (14) is satisfied. This choice can be determined through numerical simulation. More specifically, we set the global per-comparison error rate equal to , that is,

When Equation (15) is satisfied, the PCER is controlled within , so Equation (14) holds.

For a given initial threshold value of , (such as ), the proportion of is denoted as when . We repeat this B times (), and the average value of over the B replications is used to approximate . Requiring Equation (15) to hold, we then use numerical methods to obtain an approximate threshold, denoted as . Therefore, if is not larger than , we can control the global per-comparison error rate at a level ; otherwise, if is larger than , the j-th component of the data stream is declared abnormal.
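The component-wise decision rule can be illustrated with a simplified sketch. Instead of the simulation-based calibration described above, it substitutes a closed-form cutoff: it assumes each diagnostic statistic has the form n·(post-alarm mean − IC mean)² / IC variance and is approximately chi-squared with one degree of freedom under the null, so the cutoff is the (1 − α) chi-squared quantile. The function names and the one-degree-of-freedom assumption are hypothetical and may not match the paper's Equations (12) to (15).

```python
from statistics import NormalDist, fmean
from typing import List, Sequence


def chi2_1_quantile(level: float) -> float:
    """Quantile of the chi-squared(1) law, via the squared normal quantile."""
    return NormalDist().inv_cdf(0.5 + level / 2.0) ** 2


def diagnose(
    post_alarm: Sequence[Sequence[float]],
    mu_hat: Sequence[float],
    var_hat: Sequence[float],
    alpha: float = 0.05,
) -> List[int]:
    """Return 0-based indices of components flagged as out of control.

    post_alarm : n observations collected after the alarm (n rows, p columns)
    mu_hat     : estimated IC mean of each component
    var_hat    : estimated IC variance of each component
    alpha      : per-comparison error rate to target
    """
    n = len(post_alarm)
    cutoff = chi2_1_quantile(1.0 - alpha)
    flagged = []
    for j in range(len(mu_hat)):
        xbar = fmean(row[j] for row in post_alarm)
        d_j = n * (xbar - mu_hat[j]) ** 2 / var_hat[j]
        if d_j > cutoff:
            flagged.append(j)
    return flagged


if __name__ == "__main__":
    obs = [[0.1, 2.0], [-0.1, 2.2], [0.0, 1.8], [0.0, 2.0]]
    print(diagnose(obs, mu_hat=[0.0, 0.0], var_hat=[1.0, 1.0]))
```

Because every component is tested at the same level α, the expected share of IC components that are wrongly flagged is about α. This is the per-comparison error rate notion used in the text.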

2.5. Algorithm Steps

The main algorithm is summarized as the following steps.

| Algorithm 1: The main algorithm steps of monitoring and diagnosis |

| 1 Given in-control data streams ; |

| 2 Calculate the sample mean of the IC observations; |

| 3 Observe the p-dimensional data stream and calculate the EWMA statistic as |

| ; |

| 4 Construct global monitoring statistic ; |

| 5 If , then raise the OC alarm, the estimated change point is |

| . |

| 1 Collect n observations after the OC alarm signal occurs; |

| 2 Construct diagnostic statistic , ; |

| 3 Record the observations set with for as H, |

| let ; |

| 4 Randomly sample B times from data set H, the average of over B replications |

| is used to approximate ; |

| 5 Obtain , the diagnosed variables are the ones |

| corresponding to the indicators of . |

2.6. Performance Evaluation Measures

In the online monitoring phase, in order to measure the performance of the proposed monitoring method, we choose the following three metrics: The first is the accuracy of the change point estimation, that is, when the estimated value is close to the real change point , the change point estimation is accurate. The second is the type-I error rate of online monitoring, which is the proportion of normal observations mistakenly identified as outliers. The last one is the power value of online monitoring, that is, the proportion of abnormal observations that are accurately identified. A good monitoring method keeps the type-I error rate controlled at the nominal level while achieving a power value as close to 100% as possible.

In the fault diagnosis stage, we define two relevant metrics to verify the effectiveness of the method: One is the false positive rate (FPR), the proportion of non-faulty variables that are incorrectly identified as faulty. The other is the true positive rate (TPR), defined as the number of variables that are correctly detected as faulty divided by the number of all faulty variables, expressed as a percentage.

Without loss of generality, after a change point , the IC components of the stream are denoted as , and the OC components set is . At any time point t, the estimated OC components are defined as . Thus, the TPR and FPR values at time point t are defined as

where represents the number of elements in set A. Correspondingly, up to time point t, the average TPR and FPR values can be defined as follows:

A higher average TPR value and a lower average FPR value indicate better diagnostic performance.
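The two diagnostic metrics reduce to simple set operations. The sketch below is illustrative only: the function name `tpr_fpr` and the 0-based component indexing are assumptions made for this example.

```python
from typing import Iterable, Tuple


def tpr_fpr(estimated: Iterable[int], true_oc: Iterable[int], p: int) -> Tuple[float, float]:
    """Compute (TPR, FPR) for one diagnosis result.

    estimated : indices flagged as out of control
    true_oc   : indices that are truly out of control
    p         : total number of components, indexed 0 .. p - 1
    """
    est, oc = set(estimated), set(true_oc)
    ic = set(range(p)) - oc
    if not oc or not ic:
        raise ValueError("both the OC and IC sets must be non-empty")
    tpr = len(est & oc) / len(oc)  # correctly flagged / all truly faulty
    fpr = len(est & ic) / len(ic)  # wrongly flagged / all truly non-faulty
    return tpr, fpr


if __name__ == "__main__":
    # 10 components, 4 truly faulty; 2 of them found, plus 1 false flag.
    print(tpr_fpr(estimated={0, 1, 5}, true_oc={0, 1, 2, 3}, p=10))
```

Averaging these per-time-point values over the monitored period gives the average TPR and FPR.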

3. Simulation Studies

In this section, we conduct a comprehensive simulation study to evaluate the performance of our proposed monitoring and diagnosis framework, comparing it with several existing methodologies. For the experimental setup, we generate IC data streams from a multivariate normal distribution . To assess the effectiveness of our approach, we consider two distinct OC scenarios characterized by mean shifts:

(i) Mean abrupt shift: the OC stream follows , where is a parameter used to measure the degree of drift, and is a p-dimensional mean vector.

(ii) Mean gradual shift: the OC stream follows , where the mean vector evolves progressively over time according to the relationship, , with representing a small incremental change vector over time.

The two mean shift scenarios described above may impact only a subset of dimensions within the data. In the case of an abrupt mean shift, it is assumed that the initial mean vector is equal to , while the post-shift mean vector is a sparse p-dimensional vector. Specifically, most of its components remain zero, with only a few components assuming a value of 1. In the context of a gradual mean shift, the change in the mean vector is similarly sparse. That is, the incremental change affects only a few components, while the majority of its elements remain zero. The duration of the mean shift in each affected dimension is denoted by d, and the magnitude of the change at each time point is given by , reflecting a linear trend of increase or decrease. Over time, after a period of continuous gradual drift, the mean vector stabilizes, and the data stream enters a post-drift phase.

In both scenarios, the abnormal change occurs at a specific time point . Prior to (i.e., when ), the process remains IC. However, for , the mean values of components undergo a change, while the remaining components remain unchanged. This sparsity in the mean shift is a critical characteristic of high-dimensional data streams, where changes are often localized to a small subset of dimensions.
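The two shift scenarios can be mimicked with a small generator. This is a sketch under stated assumptions rather than the simulation code used in the study: the IC mean is taken as zero, the components are independent with unit variance (the independent structure only), the process is IC for t ≤ `tau`, and the gradual shift ramps linearly in equal steps over `duration` time points before stabilizing. All function and parameter names are hypothetical.

```python
import random
from typing import Collection, List


def shift_mean(
    t: int, tau: int, p: int, shifted: Collection[int], delta: float, duration: int = 0
) -> List[float]:
    """Mean vector at time t for a sparse shift on the indices in `shifted`.

    duration <= 0 gives an abrupt shift of size delta after tau.
    duration > 0 gives a linear ramp that reaches delta after `duration` steps.
    """
    if t <= tau:
        level = 0.0
    elif duration <= 0:
        level = delta
    else:
        level = delta * min(t - tau, duration) / duration
    return [level if j in shifted else 0.0 for j in range(p)]


def simulate_stream(
    n: int, tau: int, p: int, shifted: Collection[int], delta: float,
    duration: int = 0, seed: int = 0,
) -> List[List[float]]:
    """Generate n observations with independent unit-variance Gaussian noise."""
    rng = random.Random(seed)
    return [
        [m + rng.gauss(0.0, 1.0) for m in shift_mean(t, tau, p, shifted, delta, duration)]
        for t in range(1, n + 1)
    ]


if __name__ == "__main__":
    # Halfway through a 50-step ramp, the shifted component has mean delta / 2.
    print(shift_mean(t=225, tau=200, p=5, shifted={1}, delta=2.0, duration=50))
    stream = simulate_stream(n=400, tau=200, p=5, shifted={1}, delta=2.0)
    print(len(stream), len(stream[0]))
```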

The covariance matrix , which characterizes the correlation among p-dimensional random variables, plays a crucial role in the simulation study. In the experimental design, we maintain the assumption that remains invariant before and after the change point. Specifically, we investigate three distinct covariance matrix structures:

(a) Independent structure case: , where represents the identity matrix, indicating no correlation between variables;

(b) Long-range dependence structure case: for , with the correlation coefficient fixed at 0.5. This structure exhibits slowly decaying correlations between variables;

(c) Short-range correlation case: is a block-diagonal matrix, within each block matrix, , , where b is the block size and , .

The method presented in this study is fundamentally grounded in extreme value theory (EVT), henceforth referred to as the EVT method. To comprehensively evaluate its efficacy, we conduct comparative simulations with three alternative approaches, assessing their respective monitoring and/or diagnostic capabilities. The first comparative method employs an adaptive principal component (APC) selection mechanism utilizing hard-thresholding techniques, as proposed by Ebrahimi et al. [2]. The second method, developed by Ahmadi and Mohsen [5], incorporates a two-stage detection system combining a single Hotelling’s $T^2$ control chart with a Shewhart control chart, subsequently denoted as the A-M method. The third approach, proposed by Aman et al. [27], presents a fault detection framework utilizing Slow Feature Analysis (SFA), specifically designed for time series models and SPC. In this study, we perform a thorough performance comparison with the Dynamic Slow Feature Analysis (DSFA) method, establishing a robust evaluation framework for our proposed methodology.

In the simulation framework, these methods are systematically applied to monitor continuous data streams and detect anomalies. The comparative analysis is performed through extensive computational experiments, with all performance metrics calculated from 1000 independent simulation runs to ensure statistical reliability and robustness.

Table 1 presents the simulated type-I error rates () and power values () obtained from the proposed online monitoring procedure under various model configurations. The simulation was conducted with parameters set at , , , , , and the duration of the mean shift in Scenario (ii), while examining three levels of abnormality degree , respectively. The results demonstrate that when and the proportion of abnormal data stream components () ranges from 10% to 25%, the empirical type-I error rates are effectively maintained around the nominal level of 5%, in most scenarios. Furthermore, the power values exhibit a positive correlation with the abnormality degree (), indicating that higher degrees of abnormality facilitate easier detection of anomalous data. Notably, in Scenario (i), the power values consistently exceed 99% across all models and proportions when , demonstrating the method’s exceptional detection capability for abrupt changes. In contrast, Scenario (ii) shows relatively lower power values ranging from 86.6% to 94.0%, reflecting the increased challenge in detecting gradual changes compared with abrupt mutations. The standard deviations, presented in parentheses, indicate the stability of the monitoring results across different experimental conditions. These findings collectively validate the effectiveness of the proposed method in maintaining controlled type-I error rates while achieving high power across various abnormal data scenarios.

Table 1.

Average percentage of the empirical type-I error values (%) and the power values (%) by the proposed monitoring procedure under different model settings when , , , , , and , respectively.

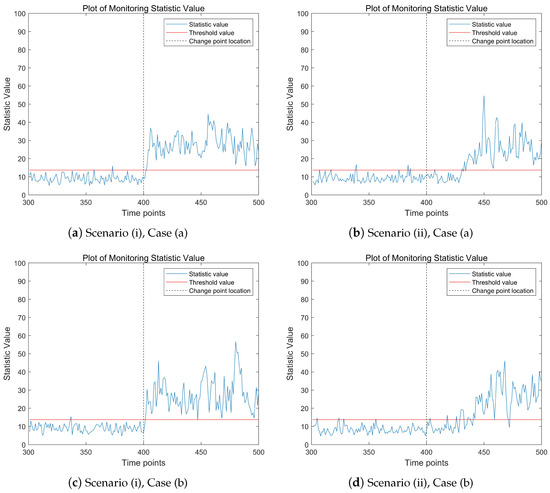

Figure 2 shows the change trend of the proposed monitoring statistics over time points in different model scenarios. The proportion of abnormal data stream is . The three subfigures on the left depict the mean abrupt shift model, while the three subplots on the right represent the mean gradual shift model. In the high-dimensional data stream being monitored, the real change point time . From the results presented in Figure 2, it is evident that in all three model scenarios, the values of the monitoring statistics exhibit a significant upward trend after the change point occurs. Notably, the monitoring statistic values in the left subplots show a more pronounced upward trend post-change point compared with the right subplots. This is attributed to the abrupt mean shift in the left subplots, which leads to a more substantial increase in the monitoring statistic values. It is important to note that before the change point occurs, the values of the monitoring statistics may occasionally exceed the threshold at certain time points. This phenomenon is likely caused by outliers in the data. Overall, Figure 2 demonstrates that the proposed monitoring statistic effectively captures the changing trends in high-dimensional data streams, providing a reliable method for detecting shifts in the data.

Figure 2.

The change trend of the monitoring statistic value when , , , , . The red dashed line represents the threshold line; the vertical gray dotted line is the change point location.

Table 2 presents a comprehensive comparison of three change point detection methods (EVT, A-M, and APC) under different scenarios and parameter settings. The evaluation is based on three key metrics: Bias (the absolute deviation between estimated and true change points), Sd (the standard deviation of estimators), and (the probability that the absolute difference between true and estimated change points is within a specified threshold j). The results demonstrate a clear trend across all methods: as the signal strength parameter increases from 1.0 to 2.0, the estimation accuracy improves significantly. This improvement is evidenced by the decreasing values of Bias and Sd, along with the increasing values of . Specifically, when reaches 2.0, all methods achieve their best performance, with values approaching or exceeding 90% in most cases.
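For readers who wish to reproduce these three summaries from their own simulation output, a minimal sketch follows. It assumes that Bias is the mean absolute deviation of the estimates from the true change point, that Sd is the sample standard deviation of the estimates, and that the third metric is the fraction of runs whose estimate lies within j time points of the truth. The exact definitions in this paper may differ, and the function name `change_point_metrics` is hypothetical.

```python
from statistics import fmean, stdev
from typing import Dict, Sequence


def change_point_metrics(estimates: Sequence[float], tau: float, j: float) -> Dict[str, float]:
    """Summarize change point estimates from repeated simulation runs.

    estimates : one estimated change point per run (at least two runs)
    tau       : true change point
    j         : tolerance for the coverage-type metric
    """
    abs_dev = [abs(e - tau) for e in estimates]
    return {
        "bias": fmean(abs_dev),                                # mean |estimate - tau|
        "sd": stdev(estimates),                                # spread of the estimates
        "p_within": sum(d <= j for d in abs_dev) / len(abs_dev),
    }


if __name__ == "__main__":
    print(change_point_metrics([200, 201, 203, 210], tau=200, j=3))
```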

Table 2.

Comparison of the three methods via change point estimation with , , , , the real change point and 400, respectively. Bias: the absolute deviation of estimators; Sd: the standard deviations of estimators; %, in Scenario (i), and in Scenario (ii).

In Scenario (i) with , the EVT method shows superior performance, particularly at higher values. When , EVT achieves a remarkably low Bias of 1.1 and Sd of 1.1, with reaching 99.5%. The APC method also demonstrates competitive performance, showing consistent improvement across different values. The A-M method, while showing improvement with increasing , generally underperforms compared with the other two methods in this scenario. For Scenario (i) with , similar patterns emerge, though the absolute values of the metrics are slightly different. The EVT method maintains its leading position, achieving a Bias of 1.2 and Sd of 0.8 at , with remaining at 99.5%. The APC method shows particularly strong improvement in this scenario, with increasing from 57.1% at to 92.7% at . In Scenario (ii), which represents a more challenging detection environment, all methods show higher Bias and Sd values compared with Scenario (i). However, the relative performance ranking remains consistent, with EVT maintaining its advantage, followed by APC and then A-M. Notably, even in this more difficult scenario, the EVT method achieves values above 90% when reaches 2.0.

These results collectively demonstrate that while all methods benefit from stronger signals, the EVT method consistently outperforms the others across the scenarios and parameter settings considered. The findings suggest that the choice of detection method should take into account both the expected signal strength and the required precision of change point estimation.
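The three metrics used in Table 2 can be computed from replicated change point estimates along the following lines. This is only a minimal sketch: the function name `change_point_metrics` and the example numbers are hypothetical, and we assume that Bias is the absolute value of the mean deviation and that Sd is the sample standard deviation, which may differ from the exact definitions used to produce the table.

```python
from statistics import mean, stdev


def change_point_metrics(estimates, true_cp, j):
    """Summarise replicated change point estimates against the true change point.

    Assumed definitions (illustrative, not taken from the paper):
      bias   = |mean(estimates) - true_cp|
      sd     = sample standard deviation of the estimates
      within = share of replicates with |estimate - true_cp| <= j
    """
    bias = abs(mean(estimates) - true_cp)
    sd = stdev(estimates)
    within = sum(abs(e - true_cp) <= j for e in estimates) / len(estimates)
    return bias, sd, within


# Hypothetical estimates from five simulation replicates, true change point 200.
bias, sd, within = change_point_metrics([200, 202, 199, 205, 201], true_cp=200, j=2)
print(bias, sd, within)
```

Reporting the within-threshold share alongside Bias and Sd is useful because a single badly delayed estimate inflates Sd while leaving the share of accurate replicates almost unchanged.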

The smoothing parameter plays a crucial role in determining the performance of the EWMA-based monitoring procedure, particularly affecting the type-I error rate, detection power, and change point estimation accuracy. Table 3 presents a simulation study evaluating these performance metrics under different smoothing parameter values (0.2, 0.4, and 0.6) across various abnormality indicators (1.0, 1.5, 2.0) and sparsity levels (0.05, 0.10, 0.15) of the mean abrupt shift model. The simulation results reveal several important patterns. First, the type-I error rate remains stable around the nominal level of 5% across all smoothing parameter values, demonstrating the robustness of the proposed method in maintaining false alarm control. However, the power depends strongly on the smoothing parameter, with larger values leading to substantially reduced detection rates. For instance, when the abnormality indicator is 1.0 and the sparsity level is 0.05, the detection power decreases from 80.4% at a smoothing parameter of 0.2 to only 17.8% at 0.6.

Table 3.

Average percentage of type-I error values (%), power values (%), and change point estimators () by the proposed online monitoring procedure under different choices of when , , , and .

The change point estimation accuracy, measured by the change point estimator, also exhibits sensitivity to the choice of smoothing parameter. Smaller smoothing parameters consistently provide more precise change point detection, particularly for smaller mean shifts (abnormality indicator of 1.0). For example, in a setting with an abnormality indicator of 1.0, the estimation error increases from 9.5 (209.5–200) at a smoothing parameter of 0.2 to 90.1 at 0.6. This pattern is particularly pronounced for smaller values, confirming the EWMA statistic's sensitivity to small mean drifts. Interestingly, as the abnormality indicator increases, the influence of the smoothing parameter on both detection power and change point estimation diminishes. At an abnormality indicator of 2.0, even with a smoothing parameter of 0.6, the method achieves detection power above 85% across all sparsity levels, and the change point estimation error remains within 5.5 time units. This suggests that while the choice of smoothing parameter is critical for detecting small changes, its impact becomes less significant for larger mean shifts. The results also indicate that higher sparsity levels generally improve detection performance, particularly for smaller abnormality indicators. This pattern is most evident when the abnormality indicator is 1.0, where increasing the sparsity level from 0.05 to 0.15 improves detection power from 80.4% to 95.9% at a smoothing parameter of 0.2.

These findings collectively suggest that while smaller smoothing parameters (around 0.2) are preferable for detecting small changes and achieving accurate change point estimation, larger values may be more suitable when robustness to noise is prioritized over sensitivity to small shifts. The choice of smoothing parameter should therefore be guided by the specific monitoring objectives and the expected magnitude of process changes.
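One way to see why smaller smoothing parameters favor small sustained shifts is the textbook univariate EWMA recursion, sketched below. This is not the monitoring statistic of the proposed procedure; the symbols `lam` (smoothing parameter) and `delta` (mean shift), and the assumption of independent unit-variance in-control noise, are ours. Under those assumptions the steady-state variance of the EWMA is `lam / (2 - lam)`, so a sustained shift of size `delta` has a standardized size of `delta * sqrt((2 - lam) / lam)`.

```python
import math


def ewma(xs, lam, z0=0.0):
    """Textbook EWMA recursion: z_t = lam * x_t + (1 - lam) * z_{t-1}."""
    z, out = z0, []
    for x in xs:
        z = lam * x + (1.0 - lam) * z
        out.append(z)
    return out


def steady_state_snr(delta, lam):
    """Standardized size of a sustained mean shift `delta` once the EWMA has
    settled, assuming independent unit-variance in-control noise."""
    return delta * math.sqrt((2.0 - lam) / lam)


# Smaller lam averages over more observations and so amplifies a small shift.
for lam in (0.2, 0.4, 0.6):
    print(lam, round(steady_state_snr(1.0, lam), 3))
```

For a unit shift the standardized size falls from 3.0 at `lam = 0.2` to about 1.53 at `lam = 0.6`, which is qualitatively in line with the loss of power reported in Table 3. The sketch ignores the transient phase, during which a smaller `lam` also needs more observations to approach its steady state.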

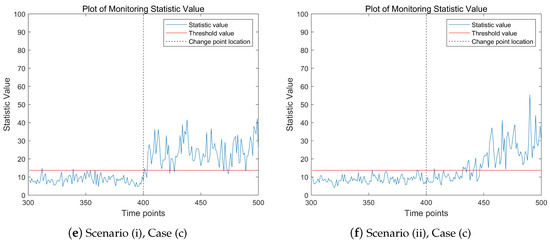

Figure 3 compares the power values and type-I error rates of the three monitoring methods as the varied parameter increases, under three distinct covariance matrix structures in the mean abrupt shift scenario. The left panel shows the power values obtained by each method. When the parameter equals 0.4 or 0.8, the A-M method exhibits a slight advantage over our proposed EVT method. However, as the parameter increases to 1.2 or beyond, the EVT method significantly outperforms both the A-M and APC methods in terms of power. The right panel of Figure 3 shows the type-I error rates. The EVT method keeps the type-I error rate around the nominal level of 5%. In contrast, the A-M method fails to control the type-I error rate, and the APC method also controls it poorly. Overall, Figure 3 highlights the robustness and superiority of the EVT method, especially for larger parameter values, in terms of both power and type-I error control. The results underscore the limitations of the A-M and APC methods in maintaining statistical control under varying conditions.

Figure 3.

The change trend of power and size values (%) by various procedures when , , , , with the increase in the parameter .

Table 4 lists the fault diagnosis results for high-dimensional data streams obtained with the different diagnostic methods. Two main indicators are reported: the correct recognition rate and the incorrect recognition rate. We also report the change point estimate obtained during the online monitoring phase. The results show that as k increases, the change point estimate moves closer to the true value. Regardless of whether the proportion of abnormal components is 5%, 10%, or 15%, the EVT method is significantly better than the other two methods. Furthermore, the TPR values of the EVT method are close to 100%. The A-M and APC methods also achieve good diagnostic results. However, as pointed out by Vilenchik et al. (2019) [35], PCA-based approaches such as APC are difficult to interpret, especially for high-dimensional data. Considering this, together with the change point estimation results, our method is, overall, superior to the other two methods.

Table 4.

Diagnosis accuracy of the proposed procedure, under different choices of and , when , , , and .
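The diagnostic indicators discussed above can be illustrated with a short sketch. We assume here that the correct recognition rate corresponds to the true positive rate (TPR) and the incorrect recognition rate to the false positive rate (FPR); the function name `diagnosis_rates` and the example index sets are hypothetical. The false discovery proportion is included because the diagnosis stage is described in terms of FDR control.

```python
def diagnosis_rates(flagged, truly_abnormal, p):
    """Compare flagged component indices with the truly abnormal ones.

    p is the total number of components in the stream.
    Returns (TPR, FPR, false discovery proportion).
    """
    flagged, truly_abnormal = set(flagged), set(truly_abnormal)
    tp = len(flagged & truly_abnormal)   # abnormal components correctly flagged
    fp = len(flagged - truly_abnormal)   # normal components wrongly flagged
    n_normal = p - len(truly_abnormal)
    tpr = tp / len(truly_abnormal) if truly_abnormal else 0.0
    fpr = fp / n_normal if n_normal else 0.0
    fdp = fp / len(flagged) if flagged else 0.0
    return tpr, fpr, fdp


# Hypothetical case: 10 abnormal components out of 200; 9 are found, plus 1 false flag.
tpr, fpr, fdp = diagnosis_rates(list(range(9)) + [50], range(10), p=200)
print(tpr, fpr, fdp)
```

With sparse changes the FPR is divided by a large number of normal components and can look negligible even when a noticeable share of the flagged components is wrong, which is one reason to report the false discovery proportion as well.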

Recently, Aman et al. [27] proposed a novel methodology for fault detection based on Slow Feature Analysis (SFA), specifically tailored for time series models and SPC. Their comprehensive analysis demonstrates that Kernel SFA (KSFA) and Dynamic SFA (DSFA) significantly outperform traditional methods by offering enhanced sensitivity and fault detection capabilities.

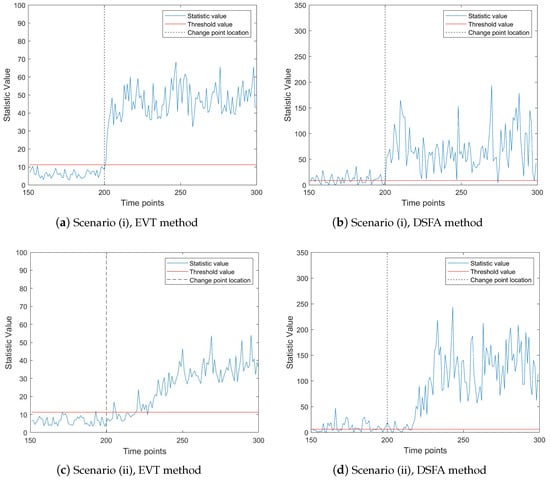

To further evaluate the effectiveness of fault detection methods, we compare the proposed EVT online monitoring method with the DSFA method. The results of this comparison are presented in Figure 4 and Figure 5. It is important to note that, as observed in prior analyses, the DSFA method struggles to effectively monitor high-dimensional and ultra-high-dimensional sparsely changing time series data streams, primarily due to the challenges posed by the curse of dimensionality. To facilitate a more meaningful comparison, we select a data stream with a slightly lower dimensionality. Specifically, we set the parameters as follows: , , , , with 30 components exhibiting abnormal changes. We examine two scenarios of mean change: (i) mean abrupt shift and (ii) mean gradual shift. In the gradual shift scenario, the duration of the change is set to time units.

Figure 4.

The change trend of the monitoring statistic value when , , , , , . The red dashed line represents the threshold line; the vertical gray dotted line is the change point location.

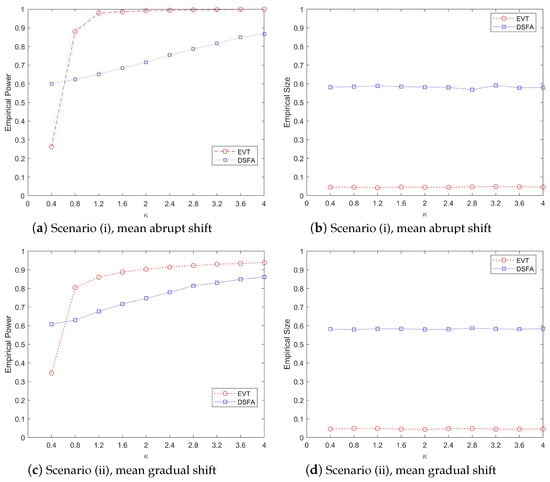

Figure 5.

The change trend of power and size values (%) by various procedures when , , , , with the increase in the parameter .

Figure 4 illustrates the performance of both the EVT and DSFA methods under these two scenarios. The left subfigure depicts the mean abrupt shift case, while the right subfigure represents the mean gradual shift case. In both subfigures, the gray lines denote IC data, and the red lines indicate OC data. From Figure 4, it is evident that both methods exhibit significant changes in monitoring statistics after the change point, indicating their ability to detect faults. In the first scenario (mean abrupt shift), the EVT method promptly reflects the abnormal changes in the data stream, with the monitoring statistics clearly exceeding the threshold at the change point. Moreover, in the absence of changes, most of the monitoring statistics remain below the threshold, demonstrating the method’s robustness. In the second scenario (mean gradual shift), both methods exhibit a delay in detection due to the gradual nature of the change. However, the DSFA method incorrectly identifies a substantial amount of normal data as abnormal before the change point occurs, highlighting its limitations in handling gradual shifts. In contrast, the EVT method demonstrates superior performance by more accurately distinguishing between normal and abnormal data, even in the presence of gradual changes.

Overall, the results in Figure 4 underscore the advantages of the EVT method over the DSFA method, particularly in terms of timely and accurate fault detection in both abrupt and gradual change scenarios. This comparison reinforces the effectiveness of the EVT approach for online monitoring of time series data streams.

Figure 5 illustrates the trends in monitoring power and type-I error rates for the EVT and DSFA methods as the degree of abnormality increases from 0.4 to 4.0. The figure examines two types of mean changes: abrupt and gradual shifts. The top two subplots, (a) and (b), depict the monitoring power and type-I error of the two methods under abrupt mean changes, while the bottom two subplots, (c) and (d), show the corresponding trends under gradual mean changes.

From Figure 5, it is evident that the monitoring power of both methods increases with the degree of abnormality, as shown in the left subplots. However, the EVT method consistently outperforms the DSFA method: its power values are significantly higher regardless of whether the mean change is abrupt or gradual. This indicates that the EVT method is more effective in detecting anomalies as the degree of abnormality increases. The right subplots show the type-I error rates. The EVT method keeps the type-I error rate around the nominal 5% level. In contrast, the DSFA method struggles to control the type-I error, with many monitoring statistics exceeding the threshold before the change point occurs. This agrees with the results observed in Figure 4 and further highlights the robustness of the EVT method in controlling error rates. In summary, Figure 5 demonstrates that while the monitoring power of both methods improves as the degree of abnormality increases, the EVT method is significantly more effective and reliable, particularly in controlling type-I errors, making it a preferable choice for detecting mean changes in data streams.

4. Real Data Analysis

To validate the effectiveness of the proposed methodology, we apply our monitoring and diagnostic framework to a real-world dataset from the analysis of archaeological glass vessels. This dataset serves as a stand-in for a typical high-dimensional process monitoring scenario in material manufacturing industries. The data originate from Electron Probe X-ray Microanalysis (EPXMA) measurements of sixteenth- to seventeenth-century glass artifacts [36]. Previous studies have extensively analyzed this dataset for anomaly detection, including Hubert et al. (2005) [37], who applied ROBPCA and identified row-wise outliers, and Hubert et al. (2019) [38], who employed MacroPCA to flag anomalous samples.

In industrial practice, EPXMA serves as a critical tool for quality control in glass production, semiconductor manufacturing, and metallurgy, where real-time monitoring of material composition ensures product consistency and enables early anomaly detection. For instance, modern glass manufacturing requires precise detection of elemental composition deviations caused by raw material impurities or furnace temperature fluctuations, which could otherwise lead to defective batches. Our case study treats the glass artifact data as a high-dimensional production process stream, containing 180 sequential time points (analogous to production cycles) and 750 spectral intensity variables. These EPXMA intensities reflect elemental composition characteristics comparable to real-time spectroscopic monitoring in industrial production lines. Each time point corresponds to a manufactured batch, with variables representing critical quality indicators such as SiO2, CaO, and trace element concentrations.

During preliminary analysis, we excluded 13 variables with missing values or measurement artifacts (e.g., sensor dropout events), retaining 737 process variables. This preprocessing aligns with industrial protocols for dynamically excluding faulty sensors from monitoring systems. Shapiro–Wilk normality tests reveal non-normal distributions for most components (p-values < 0.01). Following previous studies, we resampled the first 30 IC observations 100 times to generate a 3000-sample IC reference set. All IC observations served as historical data, with 180 subsequent observations used for testing. To approximate the normality assumptions, we applied an inverse normal transformation to each retained component $j$: $\tilde{x}_{ij} = \Phi^{-1}\{\hat{F}_j(x_{ij})\}$, where $x_{ij}$ is the $j$-th component of the $i$-th observation, $\Phi^{-1}$ is the standard normal quantile function, and $\hat{F}_j$ denotes the empirical cumulative distribution function for the j-th IC component. Notably, this marginal transformation does not enforce joint normality.
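The marginal inverse normal transformation described above can be sketched for a single component as follows. This is an illustrative sketch rather than the code used in the study: the helper name `make_inverse_normal_transform` is hypothetical, and the adjustment that keeps the empirical CDF strictly inside (0, 1), so that the normal quantile stays finite, is our assumption.

```python
from bisect import bisect_right
from statistics import NormalDist


def make_inverse_normal_transform(ic_values):
    """Build the marginal transform for one component from its IC sample."""
    ordered = sorted(ic_values)
    n = len(ordered)
    std_normal = NormalDist()  # standard normal, mean 0 and sd 1

    def transform(x):
        # Number of IC values <= x, i.e. n times the empirical CDF at x.
        count = bisect_right(ordered, x)
        # Assumed adjustment: divide by n + 1 and floor the count at 0.5
        # so that the probability lies strictly between 0 and 1.
        prob = max(count, 0.5) / (n + 1.0)
        return std_normal.inv_cdf(prob)

    return transform


# Hypothetical IC sample for one spectral variable.
to_normal_score = make_inverse_normal_transform([1, 2, 3, 4, 5, 6, 7, 8, 9])
print(to_normal_score(5), to_normal_score(0), to_normal_score(100))
```

Because the transform is built from the IC reference sample only, new observations beyond the IC range are mapped to the most extreme attainable score rather than to an infinite value. Applying one such transform per component normalizes the margins but, as noted above, does not make the joint distribution normal.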

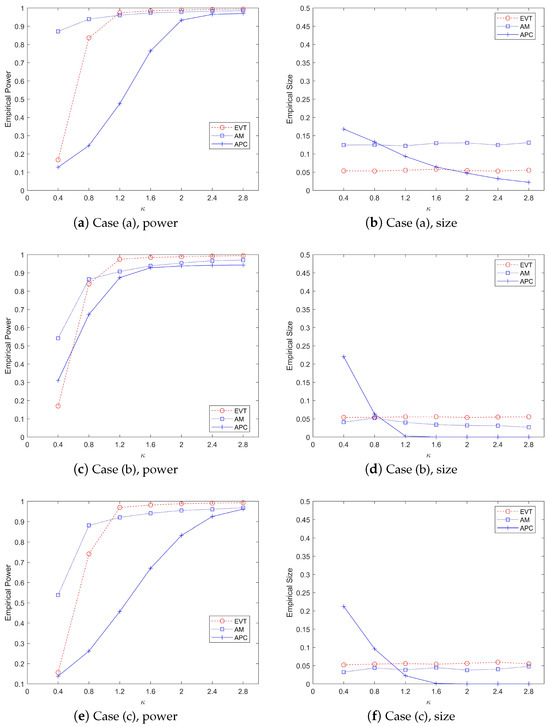

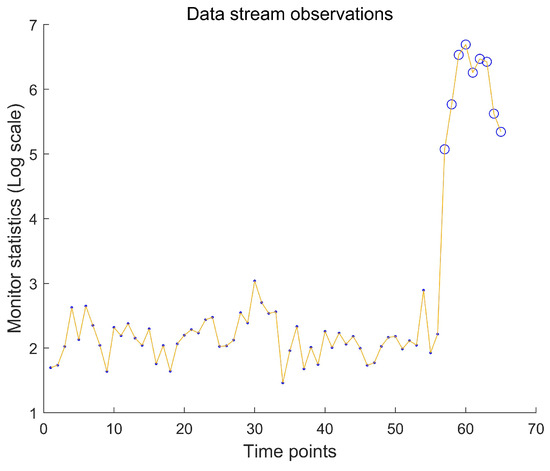

We implement our online monitoring framework with a control limit and weight parameter . As shown in Figure 6, the system detects an OC state at , indicating a systemic process deviation. This finding corroborates Hubert et al. (2019) [38], who identified similar anomalies using offline Robust PCA methods. In industrial practice, such detection would trigger immediate production checks for potential causes like raw material contamination or equipment malfunctions.

Figure 6.

Online monitoring results for the glass production process stream. Solid points: IC batches; Hollow circles: OC batches triggering alarms. The OC state from onward emulates a production line deviation requiring intervention.

In the second stage, to identify the root causes of the data stream anomalies, we analyze the observations within a diagnostic window of width 5, covering five consecutive time points (58–62) after the change point. The fault diagnosis Algorithm 1 proposed in this study identifies significant intensity shifts in 36 variables, suggesting potential anomalies. While the specific chemical elements corresponding to these spectral variables remain unidentified, we postulate that the shifts may indicate variations in certain chemical components, particularly those related to potassium (K) and lead (Pb) oxides. In an industrial setting, this diagnostic capability facilitates targeted quality adjustments for specific deviations, such as changes in K/Pb ratios due to contaminated raw materials. This marks a substantial improvement over conventional approaches that simply identify ‘abnormal batches’ without offering actionable insights for operational adjustments.
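To illustrate how component-wise diagnosis with false discovery rate control can work in principle, the sketch below implements the standard Benjamini–Hochberg step-up procedure on a list of per-component p-values. It is a stand-in for illustration only and is not Algorithm 1 of this study, which is not reproduced here; the function name, the target level `q`, and the example p-values are assumptions.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Standard Benjamini-Hochberg step-up procedure.

    Returns the sorted indices of the components flagged as abnormal
    at target false discovery rate q.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p-value
    k = 0
    for rank, idx in enumerate(order, start=1):
        # Step-up rule: remember the largest rank whose p-value clears its threshold.
        if p_values[idx] <= rank * q / m:
            k = rank
    return sorted(order[:k])


# Hypothetical p-values for ten components; only the first two are flagged.
p_vals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
print(benjamini_hochberg(p_vals, q=0.05))
```

The step-up rule rejects every hypothesis up to the largest qualifying rank, even if some smaller p-values miss their own thresholds. The classical guarantee for this procedure assumes independent or positively dependent test statistics, so strongly correlated spectral variables would call for a dependence-robust variant.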

5. Conclusions and Discussion

This study develops a statistically rigorous framework for online monitoring and fault diagnosis in high-dimensional data streams with sparse anomalies. The proposed two-stage methodology integrates an EWMA-based monitoring scheme with the diagnostic Algorithm 1, demonstrating quantifiable improvements over conventional approaches. Numerical simulations show that the monitoring stage achieves rapid anomaly detection (typically within one or two sampling intervals post-change) while maintaining type-I error rates at approximately the nominal 5% level. The diagnostic stage exhibits exceptional precision, attaining 99.1% identification accuracy for 200-dimensional anomaly streams, a 28.0% improvement in precision over PCA-based methods, while keeping the false discovery rate within 3%. These advancements address critical limitations in existing methods, particularly in balancing sensitivity to sparse changes with stringent error control. The method is effective for monitoring high-dimensional data streams characterized by abnormal mean changes.

However, its capability to detect anomalies is limited in scenarios involving covariance changes, indicating certain constraints in capturing diverse types of abnormal patterns. Consequently, the proposed approach imposes specific requirements on data quality, particularly regarding data distribution, to satisfy its theoretical assumptions. Moreover, it should be noted that several algorithmic parameters require careful calibration based on actual data characteristics in practical applications. Specifically, the transformation parameter and the diagnostic window width need appropriate adjustment according to the data quality. Future research should focus on extending the framework to address these constraints. Adaptive covariance estimation techniques and robust statistical formulations could enhance robustness to non-Gaussian noise and covariance shifts. Computational optimizations during initialization may improve scalability for ultra-high-dimensional systems. Furthermore, investigating adaptive parameter selection strategies and relaxing independence assumptions could broaden applicability to correlated anomaly patterns. Despite these limitations, the proposed method establishes a principled foundation for high-dimensional stream analysis, particularly in applications demanding sparse fault detection with controlled FDR.

Author Contributions

Conceptualization, T.W. and Z.L.; methodology, T.W., F.Z., and Z.L.; software, T.W.; validation, T.W. and Y.G.; formal analysis, T.W.; investigation, Y.G.; resources, Y.G.; writing—original draft preparation, T.W. and Z.L.; visualization, Y.G.; supervision, F.Z. and Z.L.; funding acquisition, T.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the National Science and Technology Major Project (2022ZD0114801), the National Natural Science Foundation of China (11926347), the Qinglan Project of Jiangsu Province of China (2022), the Huai’an City Science and Technology Project of China (HAB202357), the Natural Science Research of Jiangsu Higher Education Institutions of China (21KJD110003, 23KJB630003), and the Education Department of Jilin Province of China (JJKH20220534KJ). The APC was funded by the Qinglan Project of Jiangsu Province of China (2022) and the Huai’an City Science and Technology Project of China (HAB202357).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this paper will be made available by the authors upon request.

Acknowledgments

The authors are grateful to the editor and two anonymous referees for their valuable comments that helped to improve the manuscript.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| SPC | statistical process control |
| EWMA | exponentially weighted moving average |
| CUSUM | cumulative sum |
| IC | in-control |
| OC | out-of-control |
| ARL | average run length |
| FDR | false discovery rate |
| PCER | per-comparison error rate |
| FPR | false positive rate |
| TPR | true positive rate |
| EVT | extreme value theory |
| DSFA | dynamic slow feature analysis |
| PCA | principal component analysis |
| APC | adaptive principal component |

References

- Zou, C.; Wang, Z.; Zi, X.; Jiang, W. An efficient online monitoring method for high-dimensional data streams. Technometrics 2015, 57, 374–387.

- Ebrahimi, S.; Ranjan, C.; Paynabar, K. Monitoring and root-cause diagnostics of high-dimensional data streams. J. Qual. Technol. 2020, 54, 20–43.

- Li, J. A two-stage online monitoring procedure for high-dimensional data streams. J. Qual. Technol. 2019, 51, 392–406.

- Li, W.; Xiang, D.; Tsung, F.; Pu, X. A diagnostic procedure for high-dimensional data streams via missed discovery rate control. Technometrics 2020, 62, 84–100.

- Ahmadi-Javid, A.; Ebadi, M. A two-step method for monitoring normally distributed multi-stream processes in high dimensions. Qual. Eng. 2020, 33, 143–155.

- Liu, K.; Mei, Y.; Shi, J. An adaptive sampling strategy for online high-dimensional process monitoring. Technometrics 2015, 57, 305–319.

- Huang, T.; Kandasamy, N.; Sethu, H.; Stamm, M. An efficient strategy for online performance monitoring of datacenters via adaptive sampling. IEEE Trans. Cloud Comput. 2019, 7, 155–169.

- Xiang, D.; Li, W.; Tsung, F.; Pu, X.; Kang, Y. Fault classification for high-dimensional data streams: A directional diagnostic framework based on multiple hypothesis testing. Nav. Res. Logist. (NRL) 2021, 68, 973–987.

- Li, J. Efficient global monitoring statistics for high-dimensional data. Qual. Reliab. Eng. Int. 2020, 36, 18–32.

- Xian, X.; Zhang, C.; Bonk, S.; Liu, K. Online monitoring of big data streams: A rank-based sampling algorithm by data augmentation. J. Qual. Technol. 2021, 53, 135–153.

- Colosimo, B.; Jones-Farmer, L.; Megahed, F.; Paynabar, K.; Ranjan, C.; Woodall, W. Statistical process monitoring from industry 2.0 to industry 4.0: Insights into research and practice. Technometrics 2024, 66, 507–530.

- Xiang, D.; Qiu, P.; Wang, D.; Li, W. Reliable post-signal fault diagnosis for correlated high-dimensional data streams. Technometrics 2022, 64, 323–334.

- Mutambik, I. An entropy-based clustering algorithm for real-time high-dimensional IoT data streams. Sensors 2024, 24, 7412.

- Wan, Y.; Lin, S.; Jin, C.; Gao, Y.; Yang, Y. Improved entropy-based condition monitoring for pressure pipeline through acoustic denoising. Entropy 2024, 27, 10.

- Hotait, H.; Chiementin, X.; Rasolofondraibe, L. Intelligent online monitoring of rolling bearing: Diagnosis and prognosis. Entropy 2021, 23, 791.

- Wu, H.; Yuan, R.; Lv, Y.; Stein, D.; Zhu, W. Multi-weighted symbolic sequence entropy: A novel approach to fault diagnosis and degradation monitoring of rotary machinery. Meas. Sci. Technol. 2024, 35, 106119.

- Wang, Z.; Sun, Y. Role of entropy in fault diagnosis of mechanical equipment: A Review. Eng. Res. Express 2023, 5, 032004.

- Hu, X.; Zhao, Y.; Yeganeh, A.; Shongwe, S. Two memory-based monitoring schemes for the ratio of two normal variables in short production runs. Comput. Ind. Eng. 2024, 198, 110690.

- Liu, Y.; Ren, H.; Li, Z. A unified diagnostic framework via Symmetrized Data Aggregation. IISE Trans. 2024, 56, 573–584.

- Yan, X.; Sarkar, M.; Lartey, B.; Gebru, B.; Homaifar, A.; Karimoddini, A.; Tunstel, E. An Online Learning Framework for Sensor Fault Diagnosis Analysis in Autonomous Cars. IEEE Trans. Intell. Transp. Syst. 2023, 24, 14467–14479.

- Chen, D.; Zhang, Z.; Zhou, F.; Wang, C. A real-time fault diagnosis method for multi-source heterogeneous information fusion based on two-Level transfer learning. Entropy 2024, 26, 1007.

- Li, H.; Zheng, Z.; Liu, K. Online Monitoring of High-dimensional Data Streams with Deep Q-network. IEEE Trans. Autom. Sci. Eng. 2025, 1.

- Li, D.; Bai, M.; Xian, X. Data-Driven Pathwise Sampling Approaches for Online Anomaly Detection. Technometrics 2024, 66, 600–613.

- Cheruku, S.; Balaji, S.; Adolfo, D.; Krishnamurthy, V. Data-Driven Digital Twins for Real-Time Machine Monitoring: A Case Study on a Rotating Machine. J. Comput. Inf. Sci. Eng. 2025, 25, 1–15.

- MacGregor, J.; Cinar, A. Monitoring, fault diagnosis, fault-tolerant control and optimization: Data driven methods. Comput. Chem. Eng. 2012, 47, 111–120.

- Zhao, P.; Qinghe, Z.; Ding, Z.; Zhang, Y.; Wang, H.; Yang, Y. A High-Dimensional and Small-Sample Submersible Fault Detection Method Based on Feature Selection and Data Augmentation. Sensors 2021, 22, 204.

- Aman, A.; Chen, Y.; Yiqi, L. Assessment of Slow Feature Analysis and Its Variants for Fault Diagnosis in Process Industries. Technologies 2024, 12, 237.

- Yoav, B.; Daniel, Y. A multivariate exponentially weighted moving average control chart. Ann. Stat. 2001, 29, 1165–1188.

- Lowry, C.A.; Woodall, W.H.; Champ, C.W.; Rigdon, S.E. A multivariate exponentially weighted moving average control chart. Technometrics 1992, 34, 46–53.

- Feng, L.; Ren, H.; Zou, C. A setwise EWMA scheme for monitoring high-dimensional datastreams. Random Matrices Theory Appl. 2020, 9, 2050004.

- Cai, T.T.; Liu, W.; Xia, Y. Two-sample test of high dimensional means under dependence. J. R. Stat. Soc. 2014, 76, 349–372.

- Benjamini, Y.; Hochberg, Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B (Methodol.) 1995, 57, 289–300.

- Benjamini, Y.; Yekutieli, D. The control of the false discovery rate in multiple testing under dependency. Ann. Stat. 2001, 29, 1165–1188.

- Du, L.; Guo, X.; Sun, W.; Zou, C. False discovery rate control under general dependence by symmetrized data aggregation. J. Am. Stat. Assoc. 2021, 118, 1–34.

- Vilenchik, D.; Yichye, B.; Abutbul, M. To Interpret or Not to Interpret PCA? This Is Our Question. Proc. Int. AAAI Conf. Web Soc. Media 2019, 13, 655–658.

- Lemberge, P.; De Raedt, I.; Janssens, K.H.; Wei, F.; Van Espen, P.J. Quantitative analysis of 16–17th century archaeological glass vessels using PLS regression of EPXMA and μ-XRF data. J. Chemom. 2000, 14, 751–763.

- Hubert, M.; Rousseeuw, P.; Branden, K. ROBPCA: A new approach to robust principal component analysis. Technometrics 2005, 47, 64–79.

- Hubert, M.; Rousseeuw, P.; Van den Bossche, W. MacroPCA: An all-in-One PCA method allowing for missing values as well as cellwise and rowwise outliers. Technometrics 2019, 61, 459–473.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).