1. Introduction

Modern statistical inference increasingly emphasizes the quantification of statistical evidence through functions that measure the support observed data provide for competing models or for specific structural features of the underlying generating process. Classical information-theoretic criteria, such as the Kullback–Leibler divergence and model selection scores (AIC, BIC), are powerful for assessing overall fit. However, many applications in reliability, finance, and insurance require evidence about distributional variability, stochastic comparisons, and ordered structural properties, not only about global fit.

Stochastic orders provide a rigorous language for such comparisons. They rank distributions by characteristics such as location, variability, and risk, and are widely used in reliability, actuarial science, economics, and finance. Among these orders, the dilation order is central because it ranks random variables by dispersion, thereby offering a principled evidential framework for assessing relative variability. This is particularly valuable when analyzing heavy-tailed or complex data, where traditional dispersion measures such as variance can be insensitive or misleading.

The dilation order therefore plays a crucial role in applications where understanding differences in spread, rather than central tendency, is essential. For example, in survival analysis, finance, and insurance, practitioners often seek evidence regarding whether one population exhibits greater dispersion than another, rather than whether their means differ. Developing non-parametric tools tailored to this question is both theoretically important and practically necessary, especially when model assumptions cannot be guaranteed.

Recall that a random variable $Y$ is more dispersed in the dilation order than a random variable $X$ (denoted by $X \le_{\mathrm{dil}} Y$) if
$$E\big[\phi\big(X - E[X]\big)\big] \le E\big[\phi\big(Y - E[Y]\big)\big] \tag{1}$$
for every convex function $\phi$ for which the expectations exist [1]. This formalizes the idea that $Y$ displays greater dispersion than $X$. It is immediate that $X \le_{\mathrm{dil}} Y$ implies $\mathrm{Var}(X) \le \mathrm{Var}(Y)$ (take $\phi(x) = x^2$ in (1)). However, variance induces a total order and is therefore less informative than the partial order provided by dilation. For background and related variability orders, see [2,3,4].

In parallel, measuring uncertainty via entropy functions has become an active area in statistics and information theory. Shannon’s [5] differential entropy for a nonnegative random variable $X$ with density (pdf) $f$ and distribution function (cdf) $F$ is defined by:
$$H(X) = -\int_0^\infty f(x)\log f(x)\,dx, \tag{2}$$
where “$\log$” means natural logarithm. The quantity $H(X)$ is location-free because $X$ and $X + b$ share the same differential entropy for any $b > 0$. Negative values of $b$ are also allowed whenever $X + b$ remains nonnegative.

However, expression (2) has well-known limitations as a continuous analog of discrete entropy. These limitations have motivated the development of alternative measures, including weighted entropy and residual/past entropy variants [6,7]. The cumulative residual entropy (CRE) is defined by:
$$\mathcal{E}(X) = -\int_0^\infty \bar F(x)\log \bar F(x)\,dx = \int_0^\infty \bar F(x)\,\Lambda(x)\,dx, \tag{3}$$
where $\bar F(x) = 1 - F(x)$ denotes the survival function, and
$$\Lambda(x) = -\log \bar F(x) \tag{4}$$
denotes the cumulative hazard function [8]. The CRE effectively characterizes information dispersion and has numerous applications in reliability and aging analysis [9,10,11,12,13]. From an evidential perspective, CRE functions not only as a measure of uncertainty but also as a tool for drawing statistical evidence about variability and aging behavior.

The cumulative entropy (CE) is an alternative probability-based measure associated with inactivity time. It is obtained by replacing the pdf with the cdf in Shannon’s definition:
$$\mathcal{CE}(X) = -\int_0^\infty F(x)\log F(x)\,dx = \int_0^\infty F(x)\,T(x)\,dx, \tag{5}$$
where
$$T(x) = -\log F(x) \tag{6}$$
denotes the cumulative reversed hazard function [14]. Because the logarithmic argument is a probability, both $\mathcal{E}(X)$ and $\mathcal{CE}(X)$ are nonnegative; by contrast, $H(X)$ may be negative for continuous variables. Moreover, $\mathcal{CE}(X) = 0$ if and only if $X$ is degenerate, which underscores its role as a measure of uncertainty. Properties of CE and of its dynamic version for past lifetimes establish useful connections to characterizations and stochastic orderings, reinforcing its value as a functional tool for comparing distributions. Several recent developments on cumulative entropies and their applications are presented in [15,16,17,18,19,20].
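Definitions (3) and (5) can be checked numerically. The sketch below integrates $-\bar F\log\bar F$ and $-F\log F$ for an exponential distribution with mean 2. For this distribution, $\mathcal{E}(X)$ equals the mean and $\mathcal{CE}(X)$ equals the mean times $(\pi^2/6 - 1)$. The Simpson integrator, the truncation point of 60, and all function names are illustrative choices, not part of the paper.

```python
import math


def simpson(func, a, b, n=20000):
    """Composite Simpson rule on [a, b] with n (even) panels."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = func(a) + func(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(a + k * h)
    return total * h / 3


def neg_plogp(p):
    """Return -p*log(p), using the convention 0*log(0) = 0."""
    return 0.0 if p <= 0.0 else -p * math.log(p)


def cre(cdf, upper):
    """CRE (3): integral over [0, upper] of -S(x) log S(x), with S = 1 - F."""
    return simpson(lambda x: neg_plogp(1.0 - cdf(x)), 0.0, upper)


def ce(cdf, upper):
    """CE (5): integral over [0, upper] of -F(x) log F(x)."""
    return simpson(lambda x: neg_plogp(cdf(x)), 0.0, upper)


mean = 2.0


def exp_cdf(x):
    """F(x) = 1 - exp(-x/mean), computed with expm1 for accuracy near 0."""
    return -math.expm1(-x / mean)


print(cre(exp_cdf, 60.0))  # approximately 2.0 (= mean)
print(ce(exp_cdf, 60.0))   # approximately 2.0 * (pi**2 / 6 - 1)
```

The truncation at 60 is harmless here because the exponential tail beyond it contributes less than $10^{-10}$.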

Within the evidential framework, an evidence function is designed to estimate a clearly defined target that represents the scientific contrast of interest. The quality of an evidence function is evaluated through desiderata such as consistency, interpretability, and explicit treatment of uncertainty. Recent work has clarified the distinction between statistical evidence and Neyman–Pearson (NP) testing, and has formalized the link between an evidence function and its associated target in applied analysis [21,22,23,24,25].

Evidential statistics treats statistical evidence as a continuous and independent measure of support for one hypothesis over another, clearly separating evidence from belief and decision. Even so, most applications to date have relied on parametric models (e.g., Cahusac [26], Dennis et al. [25], and Powell et al. [27]). Our approach instead provides the first fully non-parametric construction of an evidence function, thereby extending the evidential framework beyond parametric assumptions. Traditional tests often fail to quantify the relative support for competing scientific claims. For example, a non-significant p-value does not imply equality of variances; it merely indicates insufficient evidence against the null hypothesis. Evidential functions, such as the entropy-based estimators proposed in this work, are instead continuous, portable, and accumulable, and they provide estimates of well-defined inferential targets. Importantly, evidential methods preserve interpretable error properties even under model misspecification, whereas classical tests can become increasingly misleading as the sample size grows (see Taper et al. [21] for further discussion).

This paper develops entropy-based evidence functions for the dilation order. We define two quantities, $\widehat{\Delta\mathcal{E}}$ (CRE-based) and $\widehat{\Delta\mathcal{CE}}$ (CE-based), as evidence estimators of the targets $\Delta\mathcal{E}$ and $\Delta\mathcal{CE}$ (defined in Section 3), which quantify the difference in stochastic variability between two distributions. Our primary framing is evidential: $\widehat{\Delta\mathcal{E}}$ and $\widehat{\Delta\mathcal{CE}}$ are constructed and analyzed as evidence functions, and NP-style tests are presented only as optional, secondary adaptations for decision-making. This reframing yields two concrete extensions. The first is model-to-process feature evidence, which compares models to generating processes with respect to dispersion/variability. The second is process-to-process evidence, which compares distinct generating processes by their distributional features using CRE and CE.

By framing $\widehat{\Delta\mathcal{E}}$ and $\widehat{\Delta\mathcal{CE}}$ as evidence functions for the dilation order, this study aligns with the modern evidential paradigm. The objective is not to reject or accept a null hypothesis of equal dispersion, but to quantify the degree to which the observed data support one distribution as more dispersed than another. This approach is robust to heavy-tailed behavior, invariant to location shifts, and firmly grounded in information-theoretic principles. Moreover, the evidential perspective naturally accommodates comparisons between models and empirical reality, or between two observed processes, without requiring either model to be strictly true. This property is a critical advantage in fields such as reliability, finance, and insurance, where all models are inherently approximations.

Methodologically, we derive large-sample distributions for the proposed functionals, provide practical nonparametric estimators, and evaluate performance via Monte Carlo experiments spanning light- and heavy-tailed families. A real-data application (survival times) illustrates interpretability and robustness. Compared with existing dilation-order procedures [4,28,29], the proposed approach is straightforward to implement, computationally efficient, and often competitive in power, particularly under heavy tails.

The remainder of this paper is organized as follows. Section 2 presents the theoretical foundations of CRE and CE under the dilation order. Section 3 introduces the proposed evidential functionals and investigates their asymptotic properties. It also evaluates the accuracy of the proposed measures using a Monte Carlo simulation and demonstrates the methodology through a real-data application. Finally, Section 4 summarizes the main findings and outlines potential directions for future research.

Throughout this paper, we consider the random variables to be absolutely continuous, and we assume that all integrals and expectations exist whenever they are mentioned in the text.

2. Preserving the Dilation Order via Cumulative Entropies

This section investigates how CRE and CE can serve as evidence functions for assessing the dilation order. A key advantage of CRE lies in its connection to the mean residual life (MRL) function,
$$m(t) = E[X - t \mid X > t] = \frac{1}{\bar F(t)}\int_t^\infty \bar F(x)\,dx.$$
It has been shown that $\mathcal{E}(X) = E[m(X)]$. This relationship underscores the relevance of CRE in reliability theory, where the MRL function is widely used to describe system aging [10]. Similarly, CE is closely related to the mean inactivity time (MIT) function,
$$\tilde m(t) = E[t - X \mid X \le t] = \frac{1}{F(t)}\int_0^t F(x)\,dx;$$
in particular, $\mathcal{CE}(X) = E[\tilde m(X)]$ [14]. These connections highlight the role of CRE and CE not only as measures of uncertainty but also as practical tools for quantifying variability. We now turn to the relationship between the dilation order of two random variables and the ordering of their CRE and CE. Recall that for a random variable $X$ with cdf $F$, the quantile function is defined by $F^{-1}(u) = \inf\{x : F(x) \ge u\}$ for $u \in (0,1)$.
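The identities $\mathcal{E}(X) = E[m(X)]$ and $\mathcal{CE}(X) = E[\tilde m(X)]$ can be confirmed numerically. The sketch below assumes, for illustration only, a Weibull distribution with shape 2 and unit scale, so that $\bar F(x) = e^{-x^2}$. For this distribution, $m$ and $\tilde m$ have closed forms in terms of `math.erfc` and `math.erf`, and $\mathcal{E}(X) = \sqrt{\pi}/4$. The helper names and the truncation at $x = 8$ are illustrative.

```python
import math


def simpson(func, a, b, n=20000):
    """Composite Simpson rule on [a, b] with n (even) panels."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = func(a) + func(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(a + k * h)
    return total * h / 3


def neg_plogp(p):
    """Return -p*log(p), using the convention 0*log(0) = 0."""
    return 0.0 if p <= 0.0 else -p * math.log(p)


# Weibull(shape 2, scale 1): S(x) = exp(-x^2), F(x) = 1 - exp(-x^2), f(x) = 2x exp(-x^2).
def surv(x):
    return math.exp(-x * x)


def cdf(x):
    return -math.expm1(-x * x)


def pdf(x):
    return 2.0 * x * math.exp(-x * x)


def mrl(t):
    """m(t) = int_t^inf S(x) dx / S(t), in closed form for this Weibull."""
    return 0.5 * math.sqrt(math.pi) * math.erfc(t) * math.exp(t * t)


def mit(t):
    """int_0^t F(x) dx / F(t), in closed form; the limit at t = 0 is 0."""
    if t == 0.0:
        return 0.0
    return (t - 0.5 * math.sqrt(math.pi) * math.erf(t)) / cdf(t)


upper = 8.0  # tails beyond x = 8 are negligible for this distribution
cre = simpson(lambda x: neg_plogp(surv(x)), 0.0, upper)
ce = simpson(lambda x: neg_plogp(cdf(x)), 0.0, upper)
e_mrl = simpson(lambda t: pdf(t) * mrl(t), 0.0, upper)
e_mit = simpson(lambda t: pdf(t) * mit(t), 0.0, upper)
print(cre, e_mrl)  # both approximately sqrt(pi)/4
print(ce, e_mit)   # the two values agree
```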

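Theorem 1. Let $X$ and $Y$ be two absolutely continuous nonnegative random variables with respective finite means $\mu_X$ and $\mu_Y$, pdfs $f$ and $g$, and cdfs $F$ and $G$. Then,

- (i) if $X \le_{\mathrm{dil}} Y$, then $\mathcal{E}(X) \le \mathcal{E}(Y)$;

- (ii) if $X \le_{\mathrm{dil}} Y$, then $\mathcal{CE}(X) \le \mathcal{CE}(Y)$.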

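Proof. (i) We use two facts: $\int_0^1 \big(F^{-1}(u) - \mu_X\big)\,du = 0$ and $\int_0^1 \big(1 + \log(1-u)\big)\,du = 0$. Combining them with the relation $\frac{d}{du}\big[(1-u)\log(1-u)\big] = -1 - \log(1-u)$ and Equation (4), we can rewrite $\mathcal{E}(X)$ as:
$$\mathcal{E}(X) = \int_0^\infty \bar F(x)\Lambda(x)\,dx = -\int_0^1 (1-u)\log(1-u)\,dF^{-1}(u) = -\int_0^1 F^{-1}(u)\big(1 + \log(1-u)\big)\,du = \int_0^1 \big(F^{-1}(u) - \mu_X\big)\big(-\log(1-u)\big)\,du. \tag{7}$$
The second equality follows from the substitution $u = F(x)$, the third from integration by parts, and the last from the two zero-integral identities above. An analogous identity holds for $\mathcal{E}(Y)$. Recall from Theorem 3.A.8 of [2] that $X \le_{\mathrm{dil}} Y$ implies
$$\int_p^1 \big(F^{-1}(u) - \mu_X\big)\,du \le \int_p^1 \big(G^{-1}(u) - \mu_Y\big)\,du, \quad \text{for all } p \in [0,1].$$
Note that $\frac{1}{1-p} > 0$ for $p \in [0,1)$ and $\int_0^u \frac{dp}{1-p} = -\log(1-u)$. Therefore, multiplying both sides of the inequality by $\frac{1}{1-p}$, integrating over $[0,1]$, and applying Fubini’s theorem yields
$$\int_0^1 \big(F^{-1}(u) - \mu_X\big)\big(-\log(1-u)\big)\,du \le \int_0^1 \big(G^{-1}(u) - \mu_Y\big)\big(-\log(1-u)\big)\,du.$$
By relation (7), this is equivalent to $\mathcal{E}(X) \le \mathcal{E}(Y)$.

(ii) Using $T(x) = -\log F(x)$ from Equation (6), we can rewrite $\mathcal{CE}(X)$, as in Part (i), as follows:
$$\mathcal{CE}(X) = \int_0^\infty F(x)T(x)\,dx = -\int_0^1 u\log u\,dF^{-1}(u) = \int_0^1 F^{-1}(u)\big(1 + \log u\big)\,du = -\int_0^1 \big(F^{-1}(u) - \mu_X\big)\big(-\log u\big)\,du. \tag{8}$$
The second equality follows from the substitution $u = F(x)$ and the third from integration by parts. The last equality follows from $\int_0^1 (1 + \log u)\,du = 0$ and $\int_0^1 \big(F^{-1}(u) - \mu_X\big)\,du = 0$. The same identity holds for $\mathcal{CE}(Y)$. Recall from [2] that $X \le_{\mathrm{dil}} Y$ yields
$$\int_0^p \big(F^{-1}(u) - \mu_X\big)\,du \ge \int_0^p \big(G^{-1}(u) - \mu_Y\big)\,du, \quad \text{for all } p \in [0,1].$$
We multiply both sides of the preceding relation by $\frac{1}{p} > 0$ and integrate over $[0,1]$. Applying Fubini’s theorem with $\int_u^1 \frac{dp}{p} = -\log u$ then yields
$$\int_0^1 \big(F^{-1}(u) - \mu_X\big)\big(-\log u\big)\,du \ge \int_0^1 \big(G^{-1}(u) - \mu_Y\big)\big(-\log u\big)\,du.$$
By (8), this implies $\mathcal{CE}(X) \le \mathcal{CE}(Y)$. This completes the proof. □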
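Representations (7) and (8) are convenient numerically because they require only the quantile function. The sketch below evaluates both with a midpoint rule, which never touches the endpoints where the logarithms diverge. It uses $X \sim$ Exp(mean 1) and $Y \sim$ Exp(mean 3); the exponential with the larger mean is the more dispersed one, so Theorem 1 predicts both orderings. The grid size and function names are illustrative assumptions.

```python
import math


def midpoint(func, n=100000):
    """Midpoint rule on (0, 1); the endpoints, where log terms diverge, are never evaluated."""
    h = 1.0 / n
    return h * sum(func((k + 0.5) * h) for k in range(n))


def cre_quantile(q, mu):
    """Representation (7): int_0^1 (Q(u) - mu) * (-log(1 - u)) du."""
    return midpoint(lambda u: (q(u) - mu) * -math.log1p(-u))


def ce_quantile(q, mu):
    """Representation (8): -int_0^1 (Q(u) - mu) * (-log u) du."""
    return -midpoint(lambda u: (q(u) - mu) * -math.log(u))


def exp_quantile(mean):
    """Quantile function of the exponential distribution with the given mean."""
    return lambda u: -mean * math.log1p(-u)


qx, qy = exp_quantile(1.0), exp_quantile(3.0)
cre_x, cre_y = cre_quantile(qx, 1.0), cre_quantile(qy, 3.0)
ce_x, ce_y = ce_quantile(qx, 1.0), ce_quantile(qy, 3.0)
print(cre_x, cre_y)                     # approximately 1 and 3
print(ce_x, ce_y)                       # approximately 0.645 and 1.935
print(cre_x <= cre_y and ce_x <= ce_y)  # ordering predicted by Theorem 1
```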

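Before presenting the next theorem, we define the absolute lower Lorenz curve, which is used in economics to compare income distributions, as
$$L_X(p) = \int_0^p \big(F^{-1}(u) - \mu_X\big)\,du, \quad \text{for all } p \in [0,1];$$
see, e.g., [4]. When $X$ is a degenerate random variable, $L_X$ coincides with the horizontal axis. $L_X$ decreases for $p \le F(\mu_X)$ and increases for $p \ge F(\mu_X)$, with $L_X(0) = L_X(1) = 0$. Furthermore, $L_X$ is a convex function of $p$, implying $L_X(p) \le 0$ for all $p \in [0,1]$. Note that $L_X(p)$ can also be written as
$$L_X(p) = \int_0^p F_{X - \mu_X}^{-1}(u)\,du,$$
so its convexity in $p$ reflects the convexity of functionals of the demeaned variable $X - \mu_X$, consistent with the definition of the dilation order given in (1). Moreover, we define the complementary function of the absolute upper Lorenz curve as follows:
$$U_X(p) = \int_p^1 \big(F^{-1}(u) - \mu_X\big)\,du, \quad p \in [0,1].$$
Since $\int_0^1 \big(F^{-1}(u) - \mu_X\big)\,du = 0$, it follows that $U_X(p) = -L_X(p)$. From Theorem 3.A.8 of [2], we have $X \le_{\mathrm{dil}} Y$ if and only if $L_X(p) \ge L_Y(p)$, or equivalently $U_X(p) \le U_Y(p)$, for all $p \in [0,1]$. Let us check this with an example.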

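Example 1. Let $X$ and $Y$ be random variables following exponential distributions with cdfs $F(x) = 1 - e^{-\lambda_1 x}$ and $G(x) = 1 - e^{-\lambda_2 x}$, $x \ge 0$, where $\lambda_1 \ge \lambda_2 > 0$. It is easy to see that
$$L_X(p) - L_Y(p) = \left(\frac{1}{\lambda_1} - \frac{1}{\lambda_2}\right)(1-p)\log(1-p)$$
for $p \in [0,1]$. Since $(1-p)\log(1-p) \le 0$ and $\frac{1}{\lambda_1} - \frac{1}{\lambda_2}$ is nonpositive for $\lambda_1 \ge \lambda_2$, we conclude that $L_X(p) - L_Y(p) \ge 0$. This means that $L_X(p) \ge L_Y(p)$ for all $p \in [0,1]$, and hence $X \le_{\mathrm{dil}} Y$.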

Remark 1. The results in Example 1 are consistent with the fact that, for exponential random variables, $X \le_{\mathrm{disp}} Y$ whenever $\lambda_1 \ge \lambda_2$ (see [2] for the definition of the dispersive order $\le_{\mathrm{disp}}$). Hence, by Theorem 3.B.16 in [2], $X \le_{\mathrm{dil}} Y$.
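The closed form in Example 1 can be cross-checked against a direct numerical integration of $L_X(p)$. The sketch below does this and also confirms $L_X(p) \ge L_Y(p)$ and $L_X(p) \le 0$ on a grid. The rates $\lambda_1 = 2$ and $\lambda_2 = 0.5$, the grid, and the helper names are illustrative.

```python
import math


def lorenz_numeric(q, mu, p, n=20000):
    """Absolute lower Lorenz curve L(p) = int_0^p (Q(u) - mu) du, by the midpoint rule."""
    h = p / n
    return h * sum(q((k + 0.5) * h) - mu for k in range(n))


def lorenz_exp(p, lam):
    """Closed form for Exp(rate lam): L(p) = (1 - p) * log(1 - p) / lam, with L(1) = 0."""
    return 0.0 if p >= 1.0 else (1.0 - p) * math.log1p(-p) / lam


lam1, lam2 = 2.0, 0.5  # lam1 >= lam2, so X ~ Exp(lam1) is the less dispersed variable


def qx(u):
    """Quantile function of X ~ Exp(rate lam1)."""
    return -math.log1p(-u) / lam1


grid = [k / 100 for k in range(101)]
print(all(lorenz_exp(p, lam1) >= lorenz_exp(p, lam2) for p in grid))  # True
print(lorenz_numeric(qx, 1.0 / lam1, 0.5), lorenz_exp(0.5, lam1))     # nearly equal
```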

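The CRE and CE measures can be expressed in terms of the functions $U_X$ and $L_X$, respectively. Recall (7) and apply integration by parts with $a(u) = -\log(1-u)$ and $db(u) = \big(F^{-1}(u) - \mu_X\big)\,du$, so that $da(u) = \frac{du}{1-u}$ and $b(u) = -U_X(u)$. The boundary terms vanish under the standing assumption that all integrals exist, and we obtain an alternative expression for the CRE in terms of $U_X$:
$$\mathcal{E}(X) = \int_0^1 \frac{U_X(u)}{1-u}\,du. \tag{9}$$
Similarly, recall (8) and apply integration by parts with $a(u) = -\log u$ and $db(u) = \big(F^{-1}(u) - \mu_X\big)\,du$, so that $da(u) = -\frac{du}{u}$ and $b(u) = L_X(u)$. This gives an alternative expression for the CE in terms of $L_X$:
$$\mathcal{CE}(X) = -\int_0^1 \frac{L_X(u)}{u}\,du. \tag{10}$$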
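Expressions (9) and (10) can also be evaluated from the quantile function alone. The procedure is to accumulate $L_X$ on a grid, set $U_X = -L_X$, and integrate. The sketch below does this on a midpoint grid for an exponential distribution with mean 1, for which $\mathcal{E}(X) = 1$ and $\mathcal{CE}(X) = \pi^2/6 - 1$. The grid size and the function name are illustrative assumptions.

```python
import math


def cre_ce_from_lorenz(q, mu, n=200000):
    """Evaluate (9) and (10) on a midpoint grid over (0, 1).

    L(u) is accumulated from Q(u) - mu and U(u) = -L(u); then
    CRE = int U(u) / (1 - u) du and CE = -int L(u) / u du.
    """
    h = 1.0 / n
    lorenz = 0.0  # value of L at the left edge of the current cell
    cre = ce = 0.0
    for k in range(n):
        u = (k + 0.5) * h
        slope = q(u) - mu                  # L'(u) = Q(u) - mu
        l_mid = lorenz + 0.5 * h * slope   # L at the cell midpoint
        cre += h * (-l_mid) / (1.0 - u)    # U(u) / (1 - u) with U = -L
        ce -= h * l_mid / u                # -L(u) / u
        lorenz += h * slope                # advance L to the right edge of the cell
    return cre, ce


def q_exp(u):
    """Quantile function of the exponential distribution with mean 1."""
    return -math.log1p(-u)


cre, ce = cre_ce_from_lorenz(q_exp, 1.0)
print(cre, ce)  # approximately 1 and pi**2/6 - 1 (about 0.645)
```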

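The following counterexample demonstrates that the converse of Theorem 1 is not necessarily true; that is, $\mathcal{E}(X) \le \mathcal{E}(Y)$ (respectively, $\mathcal{CE}(X) \le \mathcal{CE}(Y)$) does not imply $X \le_{\mathrm{dil}} Y$.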

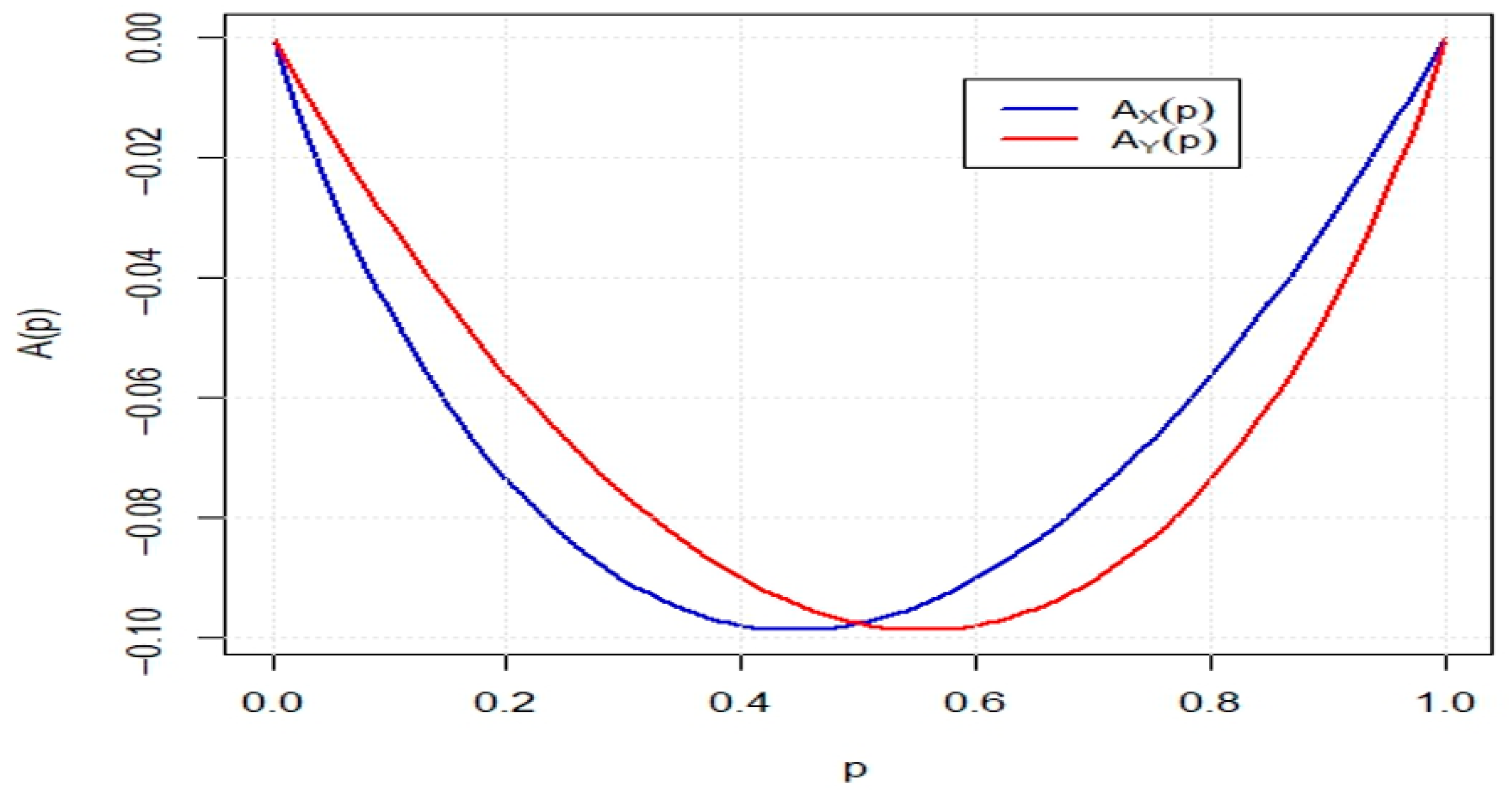

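Example 2. Let $X$ and $Y$ be random variables with cdfs $F$ and $G$, respectively. It is straightforward to compute $\mathcal{E}(X)$ and $\mathcal{E}(Y)$ and to verify that $\mathcal{E}(X) \le \mathcal{E}(Y)$. Moreover, one can obtain $L_X(p)$ and $L_Y(p)$ explicitly for $p \in [0,1]$. Figure 1 displays the plots of $L_X$ and $L_Y$ over the interval $[0,1]$. The figure reveals that $L_X(p) \ge L_Y(p)$ on part of $[0,1]$ and $L_X(p) < L_Y(p)$ on another part, leading to the conclusion that $X \not\le_{\mathrm{dil}} Y$.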

The expressions presented in Equations (9) and (10) are now utilized to derive several important results. The following theorem emphasizes a key implication: if two random variables are ordered by dilation and possess the same CE, then they are identical in distribution or differ only by a location shift.

Theorem 2. Under the conditions of Theorem 1, if $X \le_{\mathrm{dil}} Y$ and $\mathcal{CE}(X) = \mathcal{CE}(Y)$, then $X$ and $Y$ have the same distribution up to a location parameter.

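Proof. By (10), the assumption $\mathcal{CE}(X) = \mathcal{CE}(Y)$ is equivalent to
$$\int_0^1 \frac{L_X(p) - L_Y(p)}{p}\,dp = 0. \tag{11}$$
Furthermore, by Theorem 2.1 of [24], $X \le_{\mathrm{dil}} Y$ implies
$$L_X(p) \ge L_Y(p), \quad \text{for all } p \in [0,1]. \tag{12}$$
From (11) and (12), $L_X(p) = L_Y(p)$ almost everywhere on $[0,1]$. We claim that $L_X(p) = L_Y(p)$ for all $p \in [0,1]$. Otherwise, by the continuity of $L_X$ and $L_Y$, there exists an interval $(a,b) \subset [0,1]$ such that $L_X(p) > L_Y(p)$ for all $p \in (a,b)$. Then,
$$\int_0^1 \frac{L_X(p) - L_Y(p)}{p}\,dp \ge \int_a^b \frac{L_X(p) - L_Y(p)}{p}\,dp > 0,$$
which contradicts (11). Therefore,
$$L_X(p) = L_Y(p), \quad \text{for all } p \in [0,1]. \tag{13}$$
Differentiating (13) with respect to $p$ yields $F^{-1}(p) - \mu_X = G^{-1}(p) - \mu_Y$ for all $p \in (0,1)$. Hence $X$ and $Y$ have the same distribution up to a location parameter. □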

The next theorem shows that, for random variables ordered by dilation, equal CRE values likewise imply that the distributions differ at most by a location shift.

Theorem 3. Under the conditions of Theorem 1, if $X \le_{\mathrm{dil}} Y$ and $\mathcal{E}(X) = \mathcal{E}(Y)$, then $X$ and $Y$ have the same distribution up to a location parameter.

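Proof. By (9), the assumption $\mathcal{E}(X) = \mathcal{E}(Y)$ is equivalent to
$$\int_0^1 \frac{U_X(p) - U_Y(p)}{1-p}\,dp = 0.$$
Since $U_X(p) = -L_X(p)$ for all $p \in [0,1]$, Theorem 2.1 of [24] implies that $X \le_{\mathrm{dil}} Y$ leads to
$$U_X(p) \le U_Y(p), \quad \text{for all } p \in [0,1].$$
The remainder of the proof is analogous to that of Theorem 2. □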

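Theorems 2 and 3 together imply the following: if $X \le_{\mathrm{dil}} Y$ and $\mathcal{E}(X) = \mathcal{E}(Y)$ (or $\mathcal{CE}(X) = \mathcal{CE}(Y)$), then $X - \mu_X$ and $Y - \mu_Y$ have the same distribution. That is, $X$ and $Y$ are equal in distribution up to a location parameter.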

3. Statistical Evidence for the Dilation Order via Cumulative Entropies

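Economic and social processes often influence the variability of distributions. Examples include household spending before and after tax reforms, or stock returns before and after financial policy changes. A natural question in these contexts is whether such changes significantly alter variability. To address this, we develop tests for the null hypothesis $H_0: X =_{\mathrm{dil}} Y$ (variability remains unchanged) against the alternative $H_1: X \le_{\mathrm{dil}} Y$ and $X \ne_{\mathrm{dil}} Y$ (variability increases). Note that $X \le_{\mathrm{dil}} Y$ and $Y \le_{\mathrm{dil}} X$ (i.e., $X =_{\mathrm{dil}} Y$) hold if and only if $G^{-1}(p) = F^{-1}(p) + c$ for some real constant $c$ and for all $p \in (0,1)$. According to Theorems 1–3, this comparison can be expressed in terms of entropy-based evidence functions. In particular, the functionals
$$\Delta\mathcal{E} = \mathcal{E}(Y) - \mathcal{E}(X) \quad\text{and}\quad \Delta\mathcal{CE} = \mathcal{CE}(Y) - \mathcal{CE}(X)$$
serve as natural measures of departure from $H_0$ in favor of $H_1$. Thus, the null hypothesis should be rejected if the absolute value of $\Delta\mathcal{E}$ or $\Delta\mathcal{CE}$ exceeds its corresponding critical threshold. Since the true values of $\mathcal{E}(X)$, $\mathcal{E}(Y)$, $\mathcal{CE}(X)$, and $\mathcal{CE}(Y)$ are generally unknown, we estimate them from independent random samples $X_1, \ldots, X_n$ and $Y_1, \ldots, Y_m$.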

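Replacing the population entropies with their empirical counterparts, we obtain the test statistics
$$\widehat{\Delta\mathcal{E}} = \widehat{\mathcal{E}}(Y) - \widehat{\mathcal{E}}(X) \quad\text{and}\quad \widehat{\Delta\mathcal{CE}} = \widehat{\mathcal{CE}}(Y) - \widehat{\mathcal{CE}}(X),$$
where $\widehat{\mathcal{E}}(\cdot)$ and $\widehat{\mathcal{CE}}(\cdot)$ denote the empirical estimators of CRE and CE computed from the random samples of $X$ and $Y$, respectively. Consider the null hypothesis $H_0$, under which the distributions of $X$ and $Y$ differ at most by a location shift. Because CRE and CE are location-free, both population contrasts $\Delta\mathcal{E}$ and $\Delta\mathcal{CE}$ are then equal to zero. Conversely, Theorems 2 and 3 show that, when $X \le_{\mathrm{dil}} Y$, a zero contrast forces $H_0$. Large absolute values of $\widehat{\Delta\mathcal{E}}$ and $\widehat{\Delta\mathcal{CE}}$ thus provide evidence against the null hypothesis. Importantly, $X \le_{\mathrm{dil}} Y$ implies $\Delta\mathcal{E} \ge 0$ and $\Delta\mathcal{CE} \ge 0$ (see Theorem 1), but the converse does not hold (Example 2). Therefore, the sign of the estimated contrast should not be interpreted as definitive evidence for a specific direction of the dilation order. Instead, the statistics function as evidence functions, and the formal two-sided test provides protection against false claims of difference when $H_0$ holds. Thus, the null hypothesis should be rejected if $|\widehat{\Delta\mathcal{E}}| > c_{\mathcal{E}}$ (respectively, $|\widehat{\Delta\mathcal{CE}}| > c_{\mathcal{CE}}$). Here, the rejection thresholds $c_{\mathcal{E}}$ and $c_{\mathcal{CE}}$ are determined by the null distributions of $\widehat{\Delta\mathcal{E}}$ and $\widehat{\Delta\mathcal{CE}}$, which are studied in the next subsection. We reject $H_0$ when the estimates of $\Delta\mathcal{E}$ or $\Delta\mathcal{CE}$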

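are sufficiently large. Let $X_1, \ldots, X_n$ be a sequence of independent and identically distributed (i.i.d.) continuous nonnegative random variables, with order statistics $X_{(1)} \le X_{(2)} \le \cdots \le X_{(n)}$. The empirical distribution function corresponding to $F$ is defined as
$$F_n(x) = \begin{cases} 0, & x < X_{(1)},\\ i/n, & X_{(i)} \le x < X_{(i+1)},\quad i = 1, \ldots, n-1,\\ 1, & x \ge X_{(n)}, \end{cases}$$
which can equivalently be expressed as $F_n(x) = \frac{1}{n}\sum_{i=1}^n \mathbb{I}(X_i \le x)$, where $\mathbb{I}(A)$ denotes the indicator function of event $A$. An estimator of the CRE, based on a nonparametric approach and derived from the $L$-functional representation (7), is then given by
$$\widehat{\mathcal{E}}(X) = \sum_{i=1}^n c_i X_{(i)},$$
where $c_i = -\frac{1}{n}\left[1 + \log\left(1 - \frac{i}{n+1}\right)\right]$, for $i = 1, \ldots, n$. Similar arguments can be applied to obtain the estimator $\widehat{\mathcal{E}}(Y)$. The following theorem shows that the mean, variance, and RMSE of $\widehat{\mathcal{E}}(X)$ are approximately invariant to shifts of the random variable $X$, but not to scale transformations.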

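Theorem 4. Assume that $X_1, \ldots, X_n$ is a random sample of size $n$ taken from a population with pdf $f$ and cdf $F$, and let $Z_i = aX_i + b$ with $a > 0$ and $b \in \mathbb{R}$. Then, when $n$ is sufficiently large, the following properties apply:

- (i) $E[\widehat{\mathcal{E}}(Z)] \approx a\,E[\widehat{\mathcal{E}}(X)]$,

- (ii) $\mathrm{Var}[\widehat{\mathcal{E}}(Z)] = a^2\,\mathrm{Var}[\widehat{\mathcal{E}}(X)]$,

- (iii) $\mathrm{RMSE}[\widehat{\mathcal{E}}(Z)] \approx a\,\mathrm{RMSE}[\widehat{\mathcal{E}}(X)]$.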

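Proof. It is not hard to see that
$$\widehat{\mathcal{E}}(Z) = \sum_{i=1}^n c_i\big(aX_{(i)} + b\big) = a\,\widehat{\mathcal{E}}(X) + b\sum_{i=1}^n c_i \approx a\,\widehat{\mathcal{E}}(X).$$
The last step follows from $\sum_{i=1}^n c_i \approx 0$. This holds because $-\frac{1}{n}\sum_{i=1}^n \log\left(1 - \frac{i}{n+1}\right)$ is a Riemann sum for $\int_0^1 -\log(1-u)\,du = 1$ when $n$ is sufficiently large. The proof is then completed by applying the properties of the mean, variance, and RMSE under linear transformations of $\widehat{\mathcal{E}}(X)$. □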
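Below is a minimal sketch of $\widehat{\mathcal{E}}(X)$ and of the affine behavior described in Theorem 4. The weights $c_i = -\frac{1}{n}[1 + \log(1 - \frac{i}{n+1})]$ are an assumption: a Riemann-sum discretization of representation (7), consistent with the Riemann-sum argument in the proof of Theorem 4. The seed, the sample sizes, and the helper names are illustrative. Scaling by $a > 0$ multiplies the estimate exactly by $a$. A shift by $b$ adds $b\sum_i c_i$, which vanishes only as $n$ grows.

```python
import math
import random


def cre_weights(n):
    """Assumed L-estimator weights c_i = -(1/n) * [1 + log(1 - i/(n+1))], i = 1..n."""
    return [-(1.0 + math.log1p(-i / (n + 1))) / n for i in range(1, n + 1)]


def cre_hat(sample):
    """L-estimator of the CRE: sum of c_i times the i-th order statistic."""
    xs = sorted(sample)
    return sum(c * x for c, x in zip(cre_weights(len(xs)), xs))


def cre_plugin(sample):
    """Plug-in CRE, -int Sbar_n log Sbar_n dx: spacings times (1 - k/n) * (-log(1 - k/n))."""
    xs = sorted(sample)
    n = len(xs)
    return sum((xs[k] - xs[k - 1]) * (1 - k / n) * -math.log1p(-k / n) for k in range(1, n))


rng = random.Random(2024)
data = [rng.expovariate(0.5) for _ in range(20000)]  # Exp with mean 2, so CRE = 2
a, b = 3.0, 10.0
shifted_scaled = [a * x + b for x in data]           # Z = aX + b with a > 0

print(cre_hat(data), cre_plugin(data))               # both close to 2
print(cre_hat(shifted_scaled) - a * cre_hat(data))   # equals b * sum(c_i), close to 0
for n in (20, 200, 2000):
    print(n, sum(cre_weights(n)))                    # the weight sum shrinks toward 0
```

One design note on the weights: replacing each $c_i$ by the exact cell integral $\int_{(i-1)/n}^{i/n}[-1-\log(1-u)]\,du$ makes the weights sum to exactly zero. By summation by parts, those exact weights also reproduce `cre_plugin`, so the shift term then disappears at every $n$.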

Similar arguments apply to the estimator $\widehat{\mathcal{E}}(Y)$. Since $\widehat{\Delta\mathcal{E}}$ is a linear combination of the dispersion measures $\widehat{\mathcal{E}}(X)$ and $\widehat{\mathcal{E}}(Y)$, properties analogous to those in Theorem 4 hold for $\widehat{\Delta\mathcal{E}}$; they follow directly from the corresponding properties of these estimators. The following theorem establishes the asymptotic normality of the test statistic $\widehat{\Delta\mathcal{E}}$, providing the theoretical foundation for its use as an evidence function in testing the dilation order.

Theorem 5. Assume that and with , and . Let and suppose that for some , Then, as , , wherewithand defined analogously. Proof. Since the function

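is bounded and continuous, Theorems 2 and 3 of [30] imply that $\sqrt{n}\,\big(\widehat{\mathcal{E}}(X) - \mathcal{E}(X)\big)$ converges in distribution to a normal law with mean zero and finite variance $\sigma_X^2$ as $n \to \infty$. A similar convergence holds for $\sqrt{m}\,\big(\widehat{\mathcal{E}}(Y) - \mathcal{E}(Y)\big)$. Because the two samples are independent and convergence in distribution is preserved under convolution, $\widehat{\Delta\mathcal{E}}$ is asymptotically normal as well.

The asymptotic variance depends on the unknown distribution functions, so we employ a consistent estimator of it. Following the representation of [31], we define an estimator $\hat\sigma_X^2$ of $\sigma_X^2$, with $\hat\sigma_Y^2$ defined analogously. The decision rule for rejecting $H_0$ in favor of $H_1$ at significance level $\alpha$ is:
$$\text{reject } H_0 \text{ if } \frac{|\widehat{\Delta\mathcal{E}}|}{\sqrt{\hat\sigma_X^2/n + \hat\sigma_Y^2/m}} > z_{1-\alpha/2}, \tag{19}$$
where $z_{1-\alpha/2}$ represents the $(1-\alpha/2)$-quantile of the standard normal distribution. □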
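The decision rule (19) requires a variance estimate. The formula from [31] is not reproduced here. As a stand-in, the sketch below plugs the empirical cdf into the classical asymptotic variance of an $L$-statistic, $\sigma^2 = \iint J(F(s))J(F(t))\,[F(\min(s,t)) - F(s)F(t)]\,ds\,dt$, with score $J(u) = -1-\log(1-u)$ taken from (7). The estimator weights, the variance plug-in, the seed, and all names are assumptions for illustration, not the authors' implementation.

```python
import math
import random
from statistics import NormalDist


def j_cre(u):
    """Score of representation (7): J(u) = -1 - log(1 - u)."""
    return -1.0 - math.log1p(-u)


def l_estimate(sample, score):
    """L-estimator (1/n) * sum_i J(i/(n+1)) * X_(i) (assumed Riemann-sum weights)."""
    xs = sorted(sample)
    n = len(xs)
    return sum(score(i / (n + 1)) * x for i, x in enumerate(xs, start=1)) / n


def l_variance(sample, score):
    """Plug-in asymptotic variance of an L-statistic.

    With spacings d_k = X_(k+1) - X_(k), on which F_n = k/n, this returns
    the sum over k, r = 1..n-1 of J(k/n) J(r/n) [min(k, r)/n - k*r/n^2] d_k d_r.
    """
    xs = sorted(sample)
    n = len(xs)
    w = [score(k / n) * (xs[k] - xs[k - 1]) for k in range(1, n)]
    total = 0.0
    for k in range(1, n):
        for r in range(1, n):
            total += w[k - 1] * w[r - 1] * (min(k, r) / n - k * r / n**2)
    return total


def dilation_z_test(xs, ys, score, alpha=0.05):
    """Two-sided normal test based on Delta_hat = estimate(Y) - estimate(X)."""
    delta = l_estimate(ys, score) - l_estimate(xs, score)
    se = math.sqrt(l_variance(xs, score) / len(xs) + l_variance(ys, score) / len(ys))
    z = delta / se
    return z, abs(z) > NormalDist().inv_cdf(1 - alpha / 2)


rng = random.Random(11)
xs = [rng.expovariate(1.0) for _ in range(300)]        # X ~ Exp(mean 1)
ys = [rng.expovariate(1.0 / 3.0) for _ in range(300)]  # Y ~ Exp(mean 3), more dispersed
z, reject = dilation_z_test(xs, ys, j_cre)
print(z, reject)
```

Passing the score $J(u) = 1 + \log u$ from (8) instead gives the CE-based counterpart. The double loop in `l_variance` is quadratic in $n$, which is adequate for sample sizes in the hundreds.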

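We now present the analogous result for the cumulative entropy. To this end, we propose a nonparametric estimator of CE, derived from the $L$-functional representation (8) and defined as:
$$\widehat{\mathcal{CE}}(X) = \sum_{i=1}^n d_i X_{(i)},$$
where $d_i = \frac{1}{n}\left[1 + \log\left(\frac{i}{n+1}\right)\right]$, for $i = 1, \ldots, n$. A similar estimator can be constructed for $\mathcal{CE}(Y)$. A result similar to Theorem 4 can also be obtained for the estimator $\widehat{\mathcal{CE}}(X)$.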
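Below is a minimal sketch of $\widehat{\mathcal{CE}}$ and of the contrast $\widehat{\Delta\mathcal{CE}}$. It assumes weights $d_i = \frac{1}{n}[1 + \log(\frac{i}{n+1})]$, a Riemann-sum discretization of representation (8). Uniform samples are used because the CE of a uniform distribution on an interval of length $w$ equals $w/4$, whatever its location. The seed and the names are illustrative.

```python
import math
import random


def ce_hat(sample):
    """L-estimator of CE with assumed weights d_i = (1/n) * [1 + log(i/(n+1))]."""
    xs = sorted(sample)
    n = len(xs)
    return sum((1.0 + math.log(i / (n + 1))) * x for i, x in enumerate(xs, start=1)) / n


def delta_ce_hat(xs, ys):
    """Estimated contrast CE(Y) - CE(X) from two independent samples."""
    return ce_hat(ys) - ce_hat(xs)


rng = random.Random(99)
u = [rng.random() for _ in range(20000)]              # Uniform(0, 1): CE = 1/4
v = [5.0 + 2.0 * rng.random() for _ in range(20000)]  # Uniform(5, 7): CE = 2/4 = 1/2
print(ce_hat(u), ce_hat(v), delta_ce_hat(u, v))       # roughly 0.25, 0.5 and 0.25
```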

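Theorem 6. Assume that $X_1, \ldots, X_n$ is a random sample of size $n$ taken from a population with pdf $f$ and cdf $F$, and let $Z_i = aX_i + b$ with $a > 0$ and $b \in \mathbb{R}$. Then, when $n$ is sufficiently large, the following properties apply:

- (i) $E[\widehat{\mathcal{CE}}(Z)] \approx a\,E[\widehat{\mathcal{CE}}(X)]$,

- (ii) $\mathrm{Var}[\widehat{\mathcal{CE}}(Z)] = a^2\,\mathrm{Var}[\widehat{\mathcal{CE}}(X)]$,

- (iii) $\mathrm{RMSE}[\widehat{\mathcal{CE}}(Z)] \approx a\,\mathrm{RMSE}[\widehat{\mathcal{CE}}(X)]$.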

Similar arguments apply to the estimator $\widehat{\mathcal{CE}}(Y)$. Since $\widehat{\Delta\mathcal{CE}}$ is a linear combination of the dispersion measures $\widehat{\mathcal{CE}}(X)$ and $\widehat{\mathcal{CE}}(Y)$, properties analogous to those in Theorem 6 hold for $\widehat{\Delta\mathcal{CE}}$; they follow directly from the corresponding properties of these estimators. The asymptotic normality of the CE-based test statistic $\widehat{\Delta\mathcal{CE}}$ is established in the following theorem. Its proof closely parallels that of Theorem 5 and is omitted here for brevity.

Theorem 7. Assume that and such that , and . Let and suppose that for some , we haveThen, as , is normal with mean zero and the finite variancewhereand defined analogously. The estimator for is defined as: Similarly, we estimate as . Consequently, the decision rule for rejecting in favor of at significance level q is:where is defined previously.

Remark 2. An important feature of the dilation order is that it provides a natural framework for characterizing the harmonic new better than used in expectation (HNBUE) and harmonic new worse than used in expectation (HNWUE) aging classes [32,33]. Specifically, a random variable $X$ belongs to the HNBUE (respectively, HNWUE) class if and only if $X \le_{\mathrm{dil}} Y$ (respectively, $Y \le_{\mathrm{dil}} X$) for some exponential random variable $Y$ with the same mean as $X$, i.e., $E(Y) = E(X) = \mu_X$. Building on this concept, we introduce test statistics for the null hypothesis $H_0$: $X$ follows an exponential distribution, versus $H_1$: $X$ belongs to the HNBUE or HNWUE class but is not exponentially distributed. If $Y$ is an exponential random variable with mean $\mu_X$, we can derive
$$\mathcal{E}(Y) - \mathcal{E}(X) = \mu_X - \mathcal{E}(X) \quad\text{and}\quad \mathcal{CE}(Y) - \mathcal{CE}(X) = \mu_X\left(\frac{\pi^2}{6} - 1\right) - \mathcal{CE}(X).$$
These measures quantify the deviation from $X$ to $Y$. They are empirically estimated, respectively, as
$$\bar X - \widehat{\mathcal{E}}(X) \quad\text{and}\quad \bar X\left(\frac{\pi^2}{6} - 1\right) - \widehat{\mathcal{CE}}(X),$$
where $\widehat{\mathcal{E}}(X)$ and $\widehat{\mathcal{CE}}(X)$ are the $L$-estimators defined above with weights $c_i$ and $d_i$, $i = 1, \ldots, n$.
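The two deviation measures of Remark 2 can be sketched in their scale-free form, with each measure divided by the sample mean. The $L$-estimator weights are the assumed Riemann-sum weights discussed earlier. The Exp(1) and Gamma(3, 1) samples are illustrative. The gamma law with shape 3 has an increasing failure rate and is therefore HNBUE, so both measures should be clearly positive for it and near zero for exponential data.

```python
import math
import random


def l_estimate(sample, score):
    """L-estimator (1/n) * sum_i J(i/(n+1)) * X_(i) (assumed Riemann-sum weights)."""
    xs = sorted(sample)
    n = len(xs)
    return sum(score(i / (n + 1)) * x for i, x in enumerate(xs, start=1)) / n


def cre_score(u):
    return -1.0 - math.log1p(-u)  # score from representation (7)


def ce_score(u):
    return 1.0 + math.log(u)      # score from representation (8)


def exponentiality_measures(sample):
    """Scale-free deviations (xbar - CRE_hat)/xbar and (xbar*(pi^2/6 - 1) - CE_hat)/xbar."""
    xbar = sum(sample) / len(sample)
    d_cre = (xbar - l_estimate(sample, cre_score)) / xbar
    d_ce = (xbar * (math.pi ** 2 / 6 - 1) - l_estimate(sample, ce_score)) / xbar
    return d_cre, d_ce


rng = random.Random(5)
expo = [rng.expovariate(1.0) for _ in range(20000)]
gamma3 = [rng.gammavariate(3.0, 1.0) for _ in range(20000)]
print(exponentiality_measures(expo))    # both near 0
print(exponentiality_measures(gamma3))  # both clearly positive
```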

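Results similar to Theorem 4 can also be obtained for the estimators $\bar X - \widehat{\mathcal{E}}(X)$ and $\bar X(\pi^2/6 - 1) - \widehat{\mathcal{CE}}(X)$. To obtain scale-invariant tests, we can use the statistics
$$\frac{\bar X - \widehat{\mathcal{E}}(X)}{\bar X} \quad\text{and}\quad \frac{\bar X(\pi^2/6 - 1) - \widehat{\mathcal{CE}}(X)}{\bar X},$$
where $\bar X$ represents the sample mean. By arguments similar to those in the proofs of Theorems 5 and 7, together with Slutsky’s theorem, both statistics are asymptotically normal under $H_0$. The null hypothesis should be rejected when either statistic, standardized by a consistent estimator of its asymptotic standard deviation, exceeds the corresponding standard normal critical value in absolute value.

3.1. Simulation Study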

To assess the finite-sample performance of the proposed tests in (19) and (21), we carried out a simulation study comparing their power functions across a range of representative probability models. The chosen distributions are widely applied in economics, finance, insurance, and reliability, and together they span scenarios from light-tailed to heavy-tailed behavior. As a natural benchmark, we first considered the exponential distribution, a standard reference model in reliability theory whose tail behavior provides a baseline for detecting departures toward heavier-tailed alternatives. To capture such heavy-tailed phenomena, we included the Pareto distribution, commonly employed in economics, finance, and insurance to model extreme events. Its scale and shape parameters strongly affect dispersion, making it particularly relevant for testing under the dilation order. We also examined the gamma distribution, a versatile model frequently used in econometrics, Bayesian analysis, and life-testing. Its shape–scale parameterization offers flexibility in modeling waiting times, and in the special case of integer shape parameters it reduces to the Erlang distribution. Finally, we incorporated the Weibull distribution, another classical lifetime model with broad applications in reliability and survival analysis, well known for its ability to describe diverse aging behaviors. Together, these four families, exponential, Pareto, gamma, and Weibull, provide a balanced experimental design that reflects both exponential-tail and long-tail settings. For comparability and to ensure meaningful stochastic orderings, all simulated distributions were standardized to share a common mean, although this constraint is not required for the theoretical validity of the proposed tests. We evaluated our proposed tests by comparing their empirical power against four recent tests for the dilation order. 
Specifically, we compared our statistics $\widehat{\Delta\mathcal{E}}$ and $\widehat{\Delta\mathcal{CE}}$ with the following test statistics:

Aly’s

statistic [

28]:

where

and

is defined similarly.

The test statistic

proposed by Belzunce et al. [

32]:

where

with

.

The test statistic

introduced by [

33], which is based on Gini’s mean difference:

Zardasht’s

statistic [

29], defined as:

where

for all

and

.

The statistic

from [

32] depends on a parameter

; since its performance remains largely consistent across different

values, we adopted

for our analysis. Similarly, for

from [

29], we chose

. We also simulated the following scenarios which are tabulated in

Table 1 and compared the empirical powers of the test statistics.

- (i)

Exponential Distribution: For this scenario, and where is varied from 1 to 2. The null hypothesis is then represented by the case where .

- (ii)

Pareto Distribution: For this scenario, the random variable and where varied from 1 to 2. The null hypothesis is then represented by the case where .

- (iii)

Gamma Distribution: For this scenario, and where varied from 2 to 3. The null hypothesis is then represented by the case where .

- (iv)

Weibull Distribution: For this scenario, and where varied from 1 to 2. The null hypothesis is then represented by the case where .

- (v)

Mixture Weibull Distribution: For this scenario, we have

where

and

where

varied from 1 to 2. The null hypothesis is then represented by the case where

.

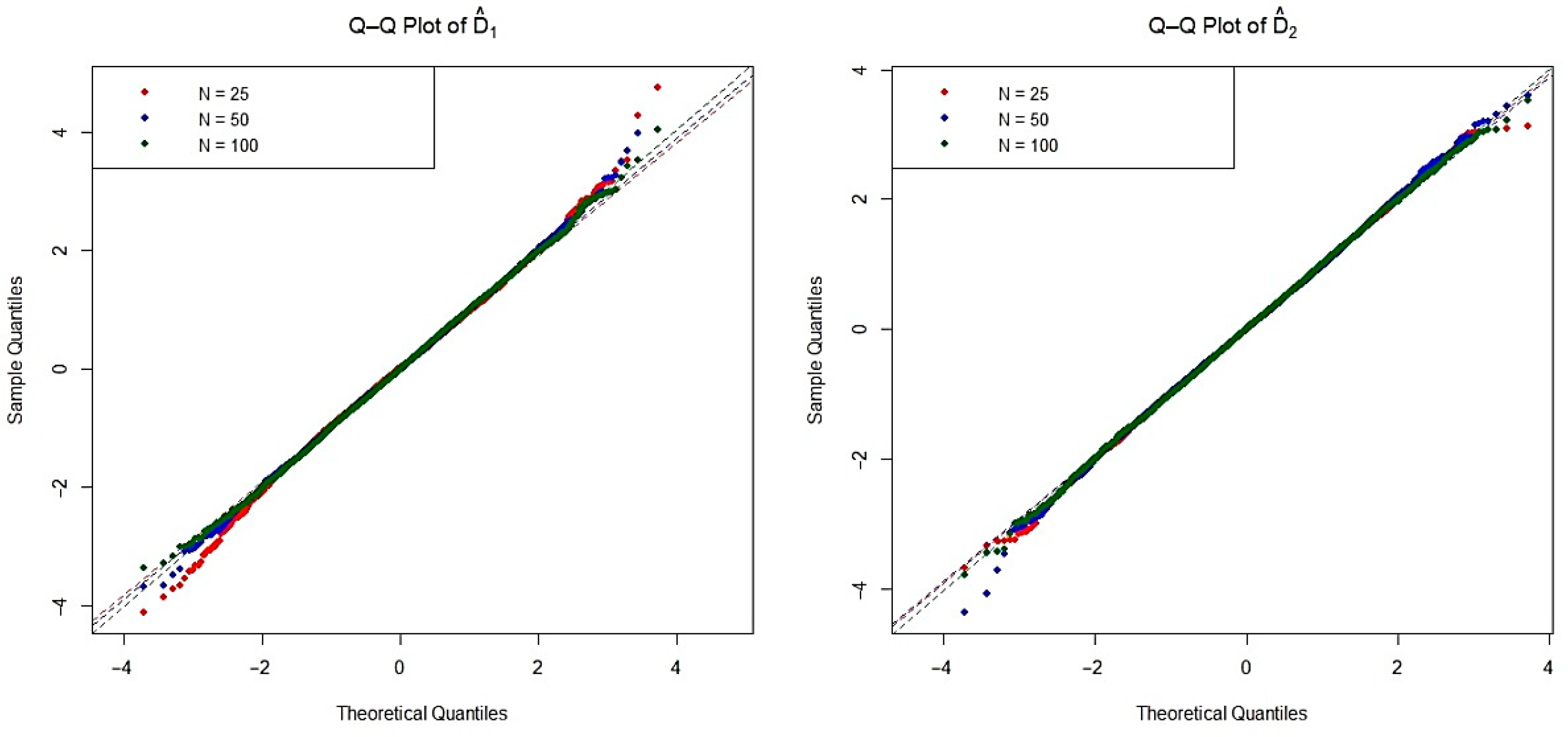

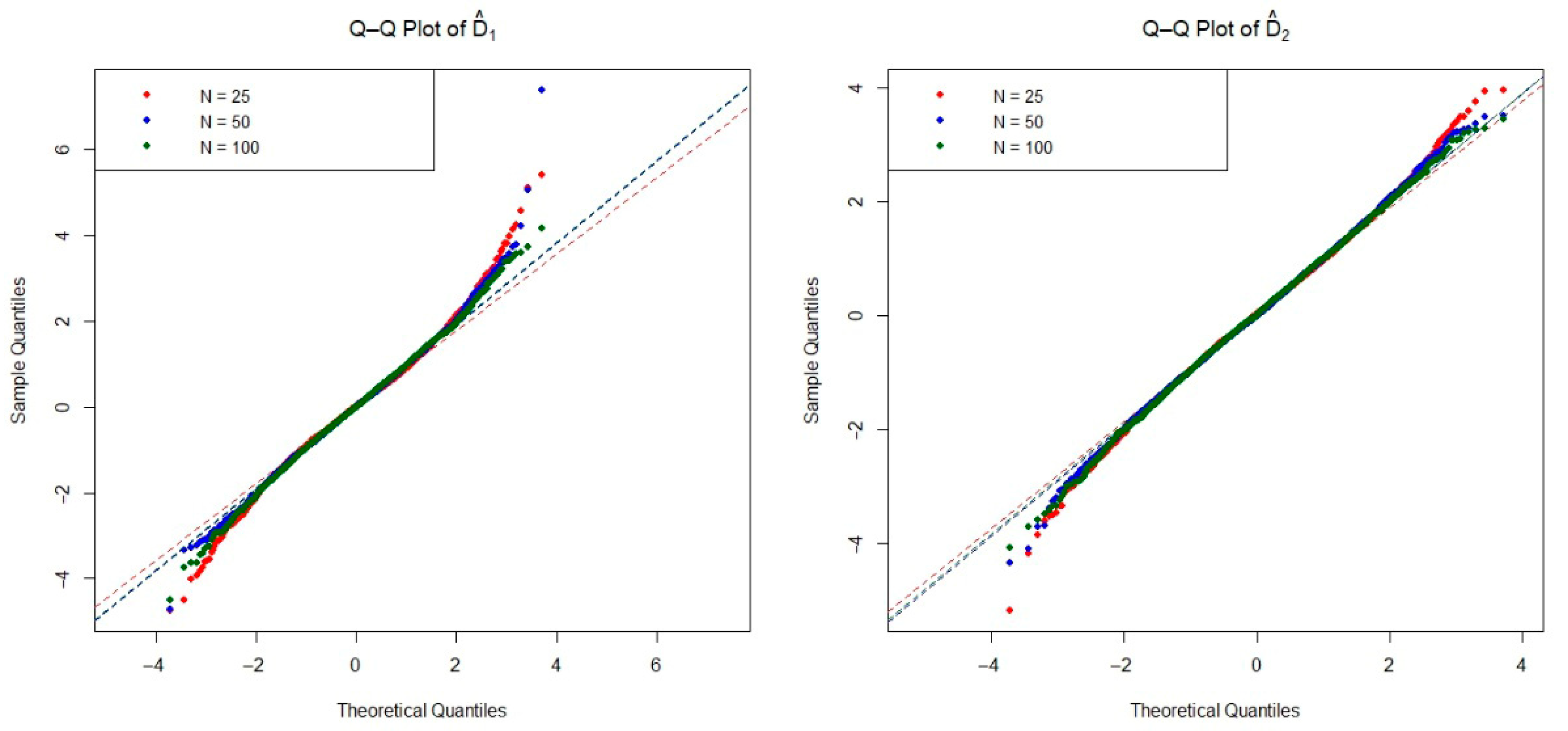

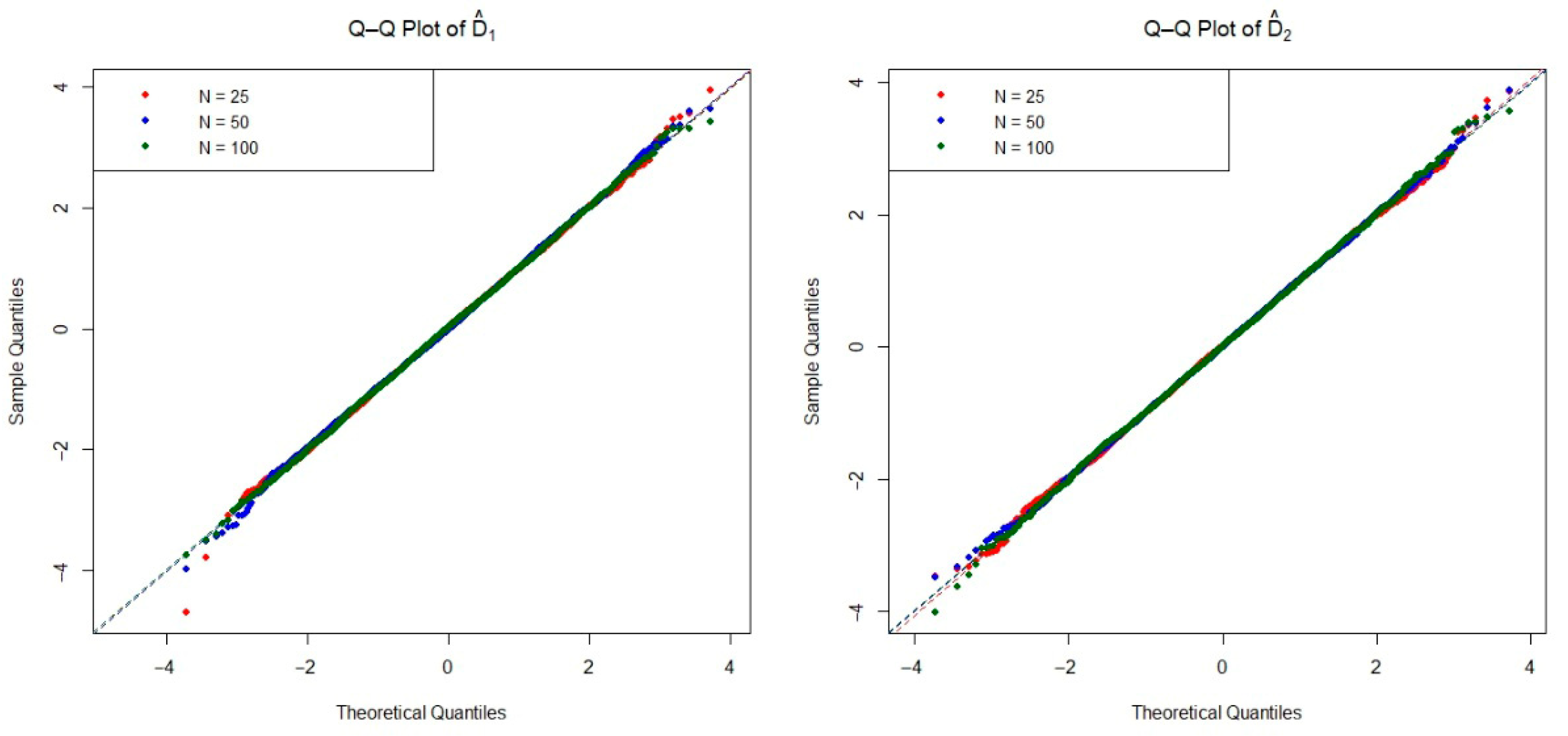

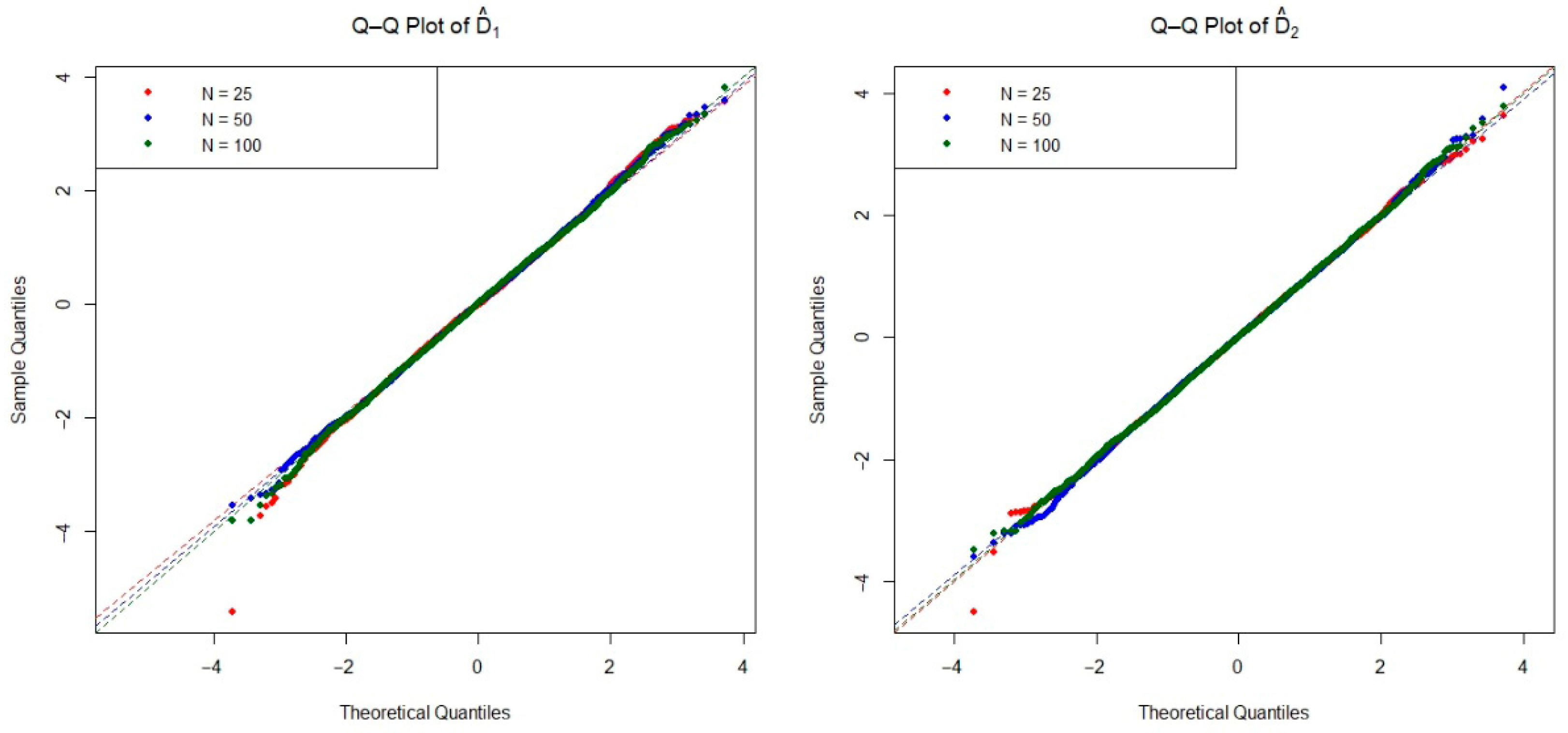

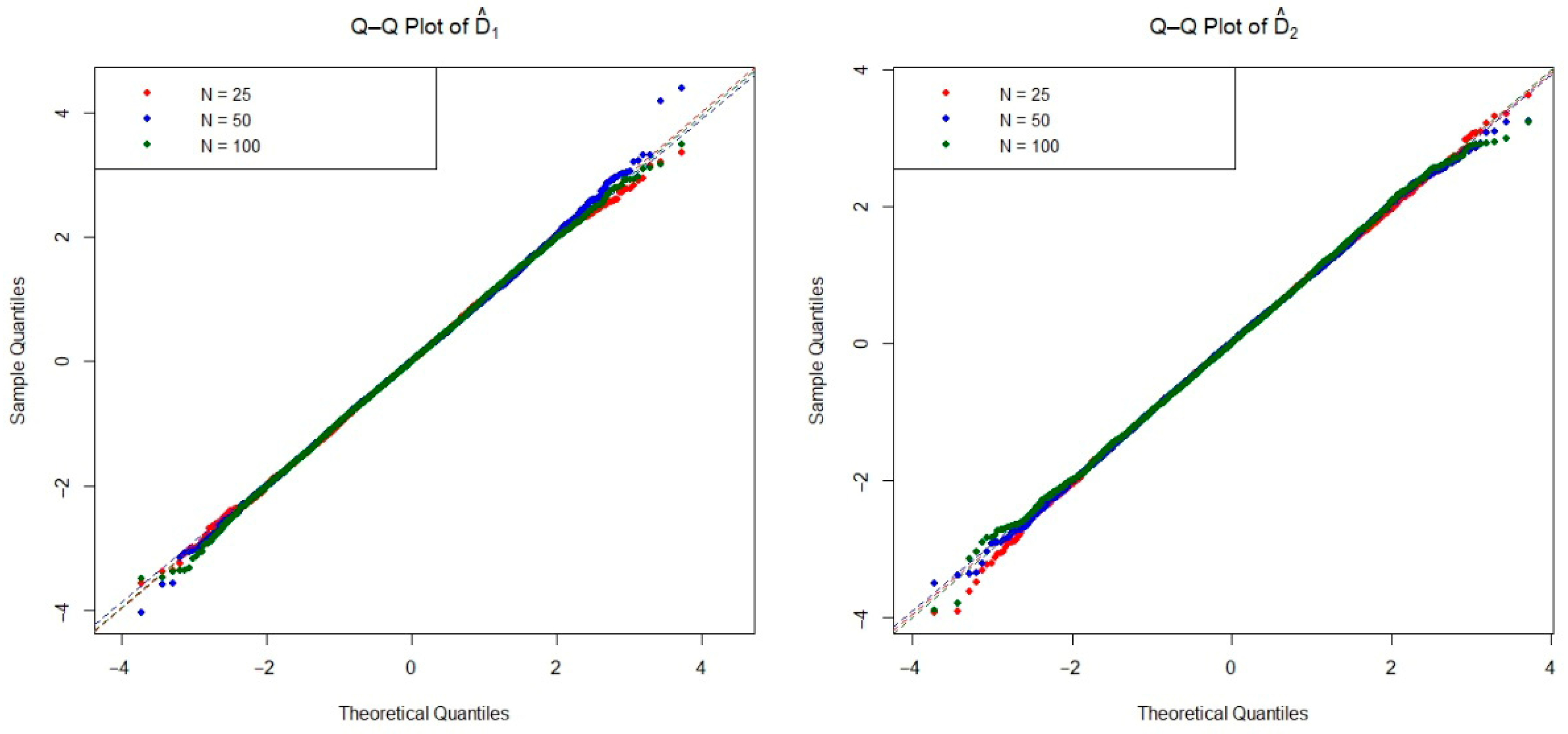

The asymptotic distribution of the test statistic is crucial for determining the critical values used to decide on the null hypothesis. To examine the empirical densities of the estimators

and

under the null hypothesis, we conducted an extensive Monte Carlo simulation study. Specifically, we generated 20,000 independent pairs of random samples from each of the four distributions listed in

Table 1, as well as the mixture Weibull distribution. For each distribution, we considered three sample size configurations

, and 100 under the null hypothesis. The Q-Q plots of the two estimators are presented in

Figure 2,

Figure 3,

Figure 4,

Figure 5 and

Figure 6. These plots provide valuable insight into the shape, center, and spread of the estimators’ distributions under

and illustrate the convergence toward normality as the sample size increases, consistent with asymptotic theory.

To obtain the critical values of the estimators $\widehat{\Delta\mathcal{E}}$ and $\widehat{\Delta\mathcal{CE}}$, we generate 5000 pairs of samples of size $n$ from the null hypothesis distributions. From the 5000 simulated values of each estimator, the empirical $(\alpha/2)$th and $(1-\alpha/2)$th quantiles give the critical values for sample size $n$ at significance level $\alpha$. Denote these critical values for the two-sided test by $c_{\alpha/2}$ and $c_{1-\alpha/2}$. The null hypothesis is then rejected with size $\alpha$ whenever the statistic is smaller than $c_{\alpha/2}$ or larger than $c_{1-\alpha/2}$. The critical values are thus based on 5000 samples for each sample size, generated from the null distribution at significance level $\alpha$. The empirical power of the proposed test statistics was evaluated under five distribution families (exponential, Pareto, gamma, Weibull, and mixture Weibull) using 5000 independent pairs of random samples for each configuration, with equal sample sizes

, and 100. For each replication, the null hypothesis of dilation equivalence was tested, and empirical power was calculated as the proportion of rejections among the 5000 simulations. The results, summarized in

Table 2,

Table 3,

Table 4,

Table 5 and

Table 6, confirm the expected consistency: power generally increases with sample size for all methods.
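The Monte Carlo calibration described above can be sketched for $\widehat{\Delta\mathcal{CE}}$ in an exponential scenario. The procedure simulates the statistic under $H_0$, takes its empirical $\alpha/2$ and $1-\alpha/2$ quantiles as critical values, and reports the rejection rate on fresh samples. The CE weights, the numbers of replications, the sample size $n = m = 50$, and the alternative Exp(mean 4) are illustrative assumptions chosen to keep the run short. They are not the settings behind the reported tables.

```python
import math
import random


def ce_hat(sample):
    """L-estimator of CE with assumed weights d_i = (1/n) * [1 + log(i/(n+1))]."""
    xs = sorted(sample)
    n = len(xs)
    return sum((1.0 + math.log(i / (n + 1))) * x for i, x in enumerate(xs, start=1)) / n


def delta_stat(rng, n, y_mean):
    """One draw of Delta-CE_hat with X ~ Exp(mean 1) and Y ~ Exp(mean y_mean)."""
    xs = [rng.expovariate(1.0) for _ in range(n)]
    ys = [rng.expovariate(1.0 / y_mean) for _ in range(n)]
    return ce_hat(ys) - ce_hat(xs)


def critical_values(rng, n, reps, alpha):
    """Empirical alpha/2 and 1 - alpha/2 quantiles of the statistic under H0."""
    null = sorted(delta_stat(rng, n, 1.0) for _ in range(reps))
    lo = null[int(math.floor(alpha / 2 * reps))]
    hi = null[min(reps - 1, int(math.ceil((1 - alpha / 2) * reps)) - 1)]
    return lo, hi


def rejection_rate(rng, n, reps, y_mean, lo, hi):
    """Proportion of replications in which the two-sided rule rejects H0."""
    hits = 0
    for _ in range(reps):
        stat = delta_stat(rng, n, y_mean)
        if stat < lo or stat > hi:
            hits += 1
    return hits / reps


rng = random.Random(123)
lo, hi = critical_values(rng, n=50, reps=2000, alpha=0.05)
size = rejection_rate(rng, 50, 1000, 1.0, lo, hi)   # y_mean = 1 reproduces H0
power = rejection_rate(rng, 50, 1000, 4.0, lo, hi)  # Y is four times more spread out
print(lo, hi, size, power)
```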

Notably, the CE-based statistic $\widehat{\Delta\mathcal{CE}}$ exhibits superior power in most scenarios, particularly for the exponential, Pareto, and gamma distributions. This underscores its effectiveness in detecting departures from the null toward heavier-tailed or more dispersed alternatives within these families. However, $\widehat{\Delta\mathcal{CE}}$ performs less well under the Weibull and mixture Weibull alternatives, indicating limited sensitivity to the specific dispersion characteristics of these models. In contrast, the CRE-based statistic $\widehat{\Delta\mathcal{E}}$ has modest power in small samples but improves markedly as the sample size grows. This improvement is most pronounced in the Weibull and mixture Weibull settings, where it often outperforms the competing methods at the larger sample sizes. This suggests that $\widehat{\Delta\mathcal{E}}$ gains reliability and robustness with larger datasets for these distributions.

Overall, $\widehat{\Delta\mathcal{CE}}$ emerges as a versatile and powerful tool across a broad range of distributions, although its suitability depends on the underlying data-generating process. Meanwhile, $\widehat{\Delta\mathcal{E}}$ offers complementary strengths, particularly in Weibull-type or heavy-tailed mixture contexts. Thus, the two statistics are best viewed as complementary evidence functions, with the choice between them guided by the nature of the distribution and the available sample size.

3.2. Real-Data Analysis

To illustrate the practical utility of the proposed methodology, we analyze a real dataset on survival times of male RFM strain mice, originally reported by [34]. The study considered two groups. The first group ($X$) consisted of mice raised under conventional laboratory conditions, while the second group ($Y$) was raised in a germ-free environment. In both groups, death was due to thymic lymphoma, allowing a direct comparison of survival variability under different environmental conditions. This dataset is particularly valuable in survival and reliability analysis because it allows us to investigate how external factors, in this case environmental exposure, affect the dispersion of lifetimes. Specifically, our interest lies in testing whether the survival distribution of mice raised in germ-free conditions ($Y$) is more dispersed than that of mice raised conventionally ($X$). This corresponds to verifying the dilation order relationship $X \le_{\mathrm{dil}} Y$.

As a preliminary step, we followed the graphical approach recommended by [5], which suggested evidence consistent with the dilation ordering $X \le_{\mathrm{dil}} Y$. Building on this, we applied six test statistics, including the proposed entropy-based measures $\widehat{\Delta\mathcal{E}}$ and $\widehat{\Delta\mathcal{CE}}$, to formally assess the hypothesis. The results, summarized in Table 7, provide strong statistical support for the dilation order. In particular, all six test statistics yielded small p-values, leading to rejection of the null hypothesis of equal dispersion and supporting the alternative $X \le_{\mathrm{dil}} Y$ at significance level $\alpha$

. The entropy-based test

was especially effective, delivering the strongest evidence among the six statistics.

This real-data application highlights three key insights. First, it demonstrates how cumulative entropies can function as practical evidence measures, capable of validating stochastic orderings in empirical settings. Second, it shows that entropy-based tests can reveal differences in variability between populations that may not be apparent from mean comparisons alone. Third, it illustrates the robustness and versatility of the proposed methodology in survival data analysis, with implications extending to biomedical research, reliability engineering, and actuarial science. This validation on real data underscores that entropy-based evidence functions are not only theoretically sound but also practically reliable, even in complex biological survival settings.

4. Conclusions

This paper introduced novel classes of entropy-based test statistics for assessing the dilation order, rooted in an evidential interpretation of stochastic variability. By leveraging CRE and CE, we constructed evidence functions that quantify the degree to which one distribution exhibits greater variability than another, without requiring parametric assumptions. These statistics offer a principled measure of divergence aligned with dilation ordering and also serve as interpretable evidence metrics in hypothesis testing. Moreover, we established the theoretical foundation of the proposed methods by deriving their asymptotic distributions and analyzing their large-sample behavior, ensuring validity under standard regularity conditions. Extensive simulation studies demonstrated that the CE-based statistic achieves high power and strong consistency across diverse alternatives, even in moderate samples. The CRE-based counterpart exhibited notable robustness and improved performance as the sample size increases. This complementary behavior underscores their joint utility across both small- and large-sample regimes. The practical relevance of the framework is illustrated through an analysis of survival times of RFM strain mice, where the proposed tests provide statistically significant evidence of dilation ordering between the two groups. This application highlights the methodology’s potential in survival analysis, reliability engineering, and biomedical research, where assessing variability differences is often more informative than comparing means.

In addition, the approach was extended to the classical problem of testing exponentiality against HNBUE and HNWUE alternatives, a critical task in reliability and actuarial science. The resulting tests inherit the evidential structure of the main framework, thereby bridging stochastic orders, aging properties, and information-theoretic measures in a unified setting. Despite these advances, several promising directions remain open for future work:

Refined inference procedures, such as bootstrap or permutation-based methods, to improve small-sample accuracy, both in quantifying the uncertainty of the strength of evidence and in controlling the Type I error of the tests.

Extension to multivariate settings, where notions of dilation order and entropy must be generalized to account for dependence and dimensionality.

Adaptation to time-dependent and censored data, including survival models with covariates, recurrent events, or temporal dependence structures (e.g., Markov or stationary processes).

Robustness analysis under model misspecification and integration into Bayesian evidence frameworks, potentially via entropy-based Bayes factors.

Generalization to alternative entropy measures, such as Rényi or Tsallis entropies, which may yield more flexible evidence functions adaptable to heavy-tailed or asymmetric distributions.

Together, these future avenues promise to broaden the scope, rigor, and applicability of entropy-based evidential testing in both theoretical and applied statistics.