2. “Just Physics” versus Implemented Computation

We consider a finite system $S$ that interacts with a finite environment $E$ and assume that the joint system $U = SE$ is effectively isolated. The FEP characterizes the conditions under which $S$ and $E$ remain distinguishable from each other as the joint system $U$ evolves through time. It states, speaking informally, that $S$ and $E$ remain distinct only if they are only sparsely or weakly coupled [9]. This condition can be formulated in various ways. One can require that almost all paths through the joint space that begin in $S$ ($E$) remain in $S$ ($E$) [11], that the number of states on the Markov blanket (MB) between $S$ and $E$ be much smaller than the number of states in either $S$ or $E$, or that the interaction Hamiltonian (or total interaction energy operator) $H_{SE}$ be much smaller than either of the self-interactions $H_S$ and $H_E$ [13]. What all of these conditions ensure is that both $S$ and $E$ have “internal states” that are not directly involved in the interaction and that therefore remain mutually conditionally statistically independent. These internal states can then implement distinct, independent computations that enable $S$ and $E$ to exhibit distinct, agentive behaviors.

The FEP is, therefore, fundamentally a principle about physical interaction, and hence about the exchange of energy between physical systems. It becomes a principle about inference when energy flow is interpreted as information flow. This interpretation rests on Clausius’ [15] definition of entropy, $\Delta\mathcal{E} = T\,\Delta\mathcal{S}$, where $\mathcal{E}$ is energy, $T$ is ambient temperature, and $\mathcal{S}$ is entropy, and on Boltzmann’s [16] identification of entropy with uncertainty about the state of a system, $\mathcal{S} = k_B \ln \Omega$, where $k_B$ is Boltzmann’s constant and $\Omega$ is the number of observationally indistinguishable states of the system of interest. Combining these two yields Landauer’s principle,

$$\Delta\mathcal{E} \geq k_B T \ln 2,$$

for the minimal energy $\Delta\mathcal{E}$ required to resolve the value of one bit, i.e., to resolve the state of a two-state system, for which $\Omega = 2$ and hence $\Delta\mathcal{S} = k_B \ln 2$ [17,18]. Any energy flow, therefore, can be associated with a maximal number of bits, and hence with a maximal information bandwidth. With this information-flow interpretation of energetic coupling, the FEP becomes the claim that the input/output (I/O) bandwidths of persistent systems are small compared to the internal information flows (computations) that generate outputs given inputs. Persistent systems, in other words, remain persistent by implementing computations that effectively model the observable behavior of their environments and acting accordingly, i.e., by being AIAs.
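As a numerical illustration of the Landauer bound and of the bits-per-energy bookkeeping described above, the following Python sketch computes $k_B T \ln 2$ and the corresponding upper bounds on the number of bits that a given energy can resolve, and on the bit rate that a given steady power flow can support. The temperature of 310 K and the function names are illustrative assumptions.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)

def landauer_energy_per_bit(temperature_k: float) -> float:
    """Minimal energy, in joules, to resolve one bit at temperature T: k_B T ln 2."""
    return K_B * temperature_k * math.log(2)

def max_bits(energy_j: float, temperature_k: float) -> float:
    """Upper bound on the number of bits that an energy flow can resolve at temperature T."""
    return energy_j / landauer_energy_per_bit(temperature_k)

def max_bandwidth_bits_per_s(power_w: float, temperature_k: float) -> float:
    """Upper bound on the bit rate supported by a steady power flow (J/s) at temperature T."""
    return power_w / landauer_energy_per_bit(temperature_k)

T = 310.0  # illustrative temperature, roughly mammalian body temperature (assumption)
print(f"k_B T ln 2 at {T} K: {landauer_energy_per_bit(T):.3e} J per bit")
print(f"Landauer-limited bandwidth of 1 pW: {max_bandwidth_bits_per_s(1e-12, T):.3e} bit/s")
```

Real systems operate above this limit, so these figures are upper bounds on bandwidth, not estimates of it.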

The idea that arbitrary physical systems can be interpreted as information-processing systems—computers—is not unique to the literature of the FEP; indeed, it is ubiquitous in physics [

19] and forms the basis for explanation by appeal to function in the life sciences [

20] and computer science [

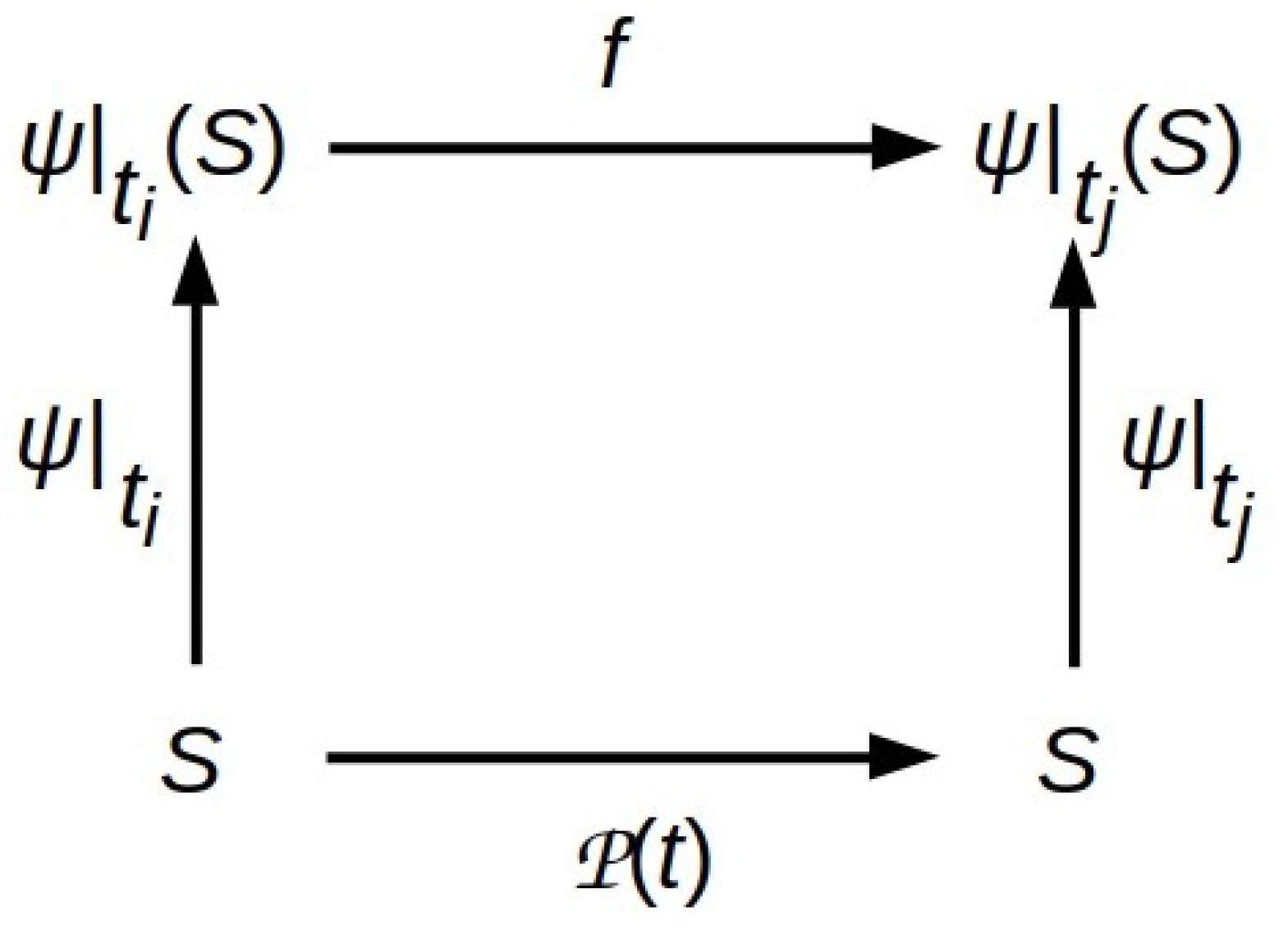

21]. The structure of any such interpretation is shown in

Figure 1. The vertical map

is

semantic in Tarski’s model-theoretic sense [

22]: it treats function

f as

implemented by physical process

between time points

and

. As Horsman et al. point out, such semantic maps can also be thought of as representing measurements [

19]; in this case,

Figure 1 depicts the relationship between any observation-based model

f and the physical process

that it models.

Representing physical systems as AIAs employs the mapping process shown in Figure 1: the physical system behaves “as if” it is executing inferential processes, encoded by some function $f$, that construct a model of its environment’s behavior and then employ that model to choose approximately Bayes-optimal actions. This inferential process must satisfy two constraints: (1) its only inputs from the environment are the data encoded on its MB; and (2) it must be tractable. As emphasized in [8,9] and elsewhere, these constraints are met optimally by a function $f$ that minimizes an upper bound on the surprise $-\ln p(b)$, where $b$ is an input “sensory” MB state and $p(b)$ is a model prediction. This upper bound is the VFE ([9] Equation (2.3)),

$$F(\mu, b) = \mathbb{E}_{q_\mu(\eta)}\left[\ln q_\mu(\eta) - \ln p(\eta, b)\right] \geq -\ln p(b), \qquad (1)$$

where $q_\mu(\eta)$ is a variational density over predicted external states $\eta$ parameterized by internal states $\mu$ and $\mathbb{E}_q$ is an expectation value operator parameterized by variational density $q$.
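A minimal numerical sketch of the bound in the VFE follows, assuming a hypothetical discrete generative model with three external states $\eta$ and two sensory states $b$. For any variational density $q$, the computed $F$ is at least the surprise $-\ln p(b)$, with equality when $q$ is the exact posterior $p(\eta \mid b)$. The prior, likelihood, and the function name `vfe` are illustrative assumptions, not part of the FEP formalism itself.

```python
import numpy as np

def vfe(q: np.ndarray, joint_p_b: np.ndarray) -> float:
    """Variational free energy F = E_q[ln q(eta) - ln p(eta, b)] for discrete eta.

    q         -- variational density over external states eta (sums to 1)
    joint_p_b -- p(eta, b) evaluated at the observed sensory state b, indexed by eta
    """
    support = q > 0  # states with q(eta) = 0 contribute nothing to the expectation
    return float(np.sum(q[support] * (np.log(q[support]) - np.log(joint_p_b[support]))))

# Hypothetical generative model: 3 external states eta, 2 sensory states b.
prior = np.array([0.5, 0.3, 0.2])            # p(eta)
likelihood = np.array([[0.9, 0.1],           # p(b | eta); rows index eta
                       [0.2, 0.8],
                       [0.5, 0.5]])
b = 0                                        # observed sensory (MB) state
joint = prior * likelihood[:, b]             # p(eta, b)
surprise = -np.log(joint.sum())              # -ln p(b)

q_uniform = np.full(3, 1.0 / 3.0)            # an arbitrary variational density
q_exact = joint / joint.sum()                # exact posterior p(eta | b)

print(vfe(q_uniform, joint), ">=", surprise)  # F upper-bounds the surprise
print(vfe(q_exact, joint), "~=", surprise)    # the bound is tight at the posterior
```

The gap between the two printed values is the Kullback-Leibler divergence between $q$ and the posterior, which is why minimizing $F$ over $q$ both tightens the bound and approximates Bayesian inference.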

We can therefore choose to regard an AIA simply as a dissipative physical system that is maintaining its state in the vicinity of, or maintaining an orbit around, a nonequilibrium steady state (NESS), or we can choose to regard it as a computer implementing a procedure that minimizes an abstract information measure, the VFE defined by Equation (1). Provided that states $b$ are sampled from the complete state space of the MB separating the system from its environment, and hence capture the total energy/information exchange through the MB, descriptions of the dynamics as “just physics” or “just computation” are related by semantic map $\mathcal{I}$ as in Figure 1. At optimal thermodynamic efficiency, the energy and information flows they entail are quantitatively related: the energy flow equals the total I/O bandwidth of the MB in bits times $k_B T \ln 2$.

In practice, however, we do not always want to view systems as either “just physics” or “just computation”. We often want to view part of a system as computing some specific function, and the rest as providing the infrastructure services required by the system’s physical embodiment, including architectural integrity, adequate power, and heat dissipation. We are in this situation whenever specific computations are attributed to particular components of system $S$, or when only a particular subset of $S$’s MB states is regarded as encoding “interesting” inputs and outputs. Note that this choice of what is “of interest” is effectively a choice of semantic map that applies to only some components of $S$. This kind of interest-driven decomposition is ubiquitous in biology, e.g., when distinguishing signal transduction from metabolic pathways in cells, when modeling neural computation in terms of synaptic inputs and outputs, or when treating the I/O of an animal’s brain as separate and distinct from that of its digestive system. It is also ubiquitous in practical computing, e.g., when specifying the application programming interface (API) of a software module while leaving power management to the hardware and memory management to the operating system.

Interpreting particular subsystems of system $S$ as computing particular functions abstracts away the fundamental constraints imposed on $S$ by its physicality, including the fact that acting on the environment by producing output requires TFE in accord with Landauer’s principle. Given the assumption that $U$ is isolated, that energy must be obtained from the environment as an input. A complete description of an AIA whose specific inputs and outputs (or sensations and actions) are of interest therefore also requires describing the thermodynamic (or metabolic) inputs and outputs that the “of interest” designation assumes as infrastructure. It therefore requires devoting some of the states on the MB to flows of fuel and waste heat. Making these requirements of physical embodiment explicit, thus re-integrating thinking about software with thinking about hard- or bio-ware, is one of the goals of both the embodied cognition and mortal computing frameworks [6].

3. Coupling Information and Energy Flows

If computational and infrastructure functions are regarded as performed by distinct components of a system, how do we represent their coupling? In the notation of Figure 1, if we factor the interpretation of $P$, what is the relationship between the factors? How is TFE delivered to the computational processes that need it in order to compute VFE?

This question is challenging to formulate precisely, because any decomposition of system S into components generates an MB between them and renders each component a part of the environment of each of the others. Decomposition requires, therefore, a bottom level of undecomposed “atomic” components to avoid infinite regress. At this atomic level, the question of how computing and infrastructure relate must be answered without recourse to further decomposition.

This question of how “physical” TFE flows couple to “computational” VFE flows arises in both classical and quantum formulations of the FEP. It is, however, most easily addressed using quantum formalism, which provides a simple, intuitive description of inter-system interactions that applies to all systems, regardless of their structure. Using this formalism, we can view TFE and VFE flows as distinguished by a symmetry breaking that has no natural classical formulation [23]. We first review the quantum formulation of generic physical interactions, then show how it provides both a natural definition of “atomic” systems and a precise characterization of the interaction between components in a composite system. We use the latter to understand how a thermodynamic component, effectively a power supply, can provide regulated TFE flows to computational components of a composite system.

In quantum formalism, the joint state space of a composite system $U = SE$ is a finite-dimensional Hilbert space $\mathcal{H}_U = \mathcal{H}_S \otimes \mathcal{H}_E$ [13,24]. For any system $X$, the Hilbert space $\mathcal{H}_X$ is a vector space that can be constructed by assigning a basis vector to every independent yes/no question that can be asked about system $X$. Each of these basis vectors can be represented by a quantum bit, a qubit, with measurable states (in the Dirac notation) $|0\rangle$ and $|1\rangle$. Hilbert spaces $\mathcal{H}_U$, $\mathcal{H}_S$, and $\mathcal{H}_E$ can, therefore, all be considered qubit spaces; see [25] for a textbook introduction to such spaces. We let $\mathscr{B}$ denote the boundary between $S$ and $E$ implicitly given by factorization $\mathcal{H}_U = \mathcal{H}_S \otimes \mathcal{H}_E$. Systems $S$ and $E$ can be considered distinct only if they have distinct, mutually conditionally independent states $|S\rangle$ and $|E\rangle$. This is the case only if their joint state is separable, i.e., only if it factors as $|U\rangle = |S\rangle \otimes |E\rangle$. In this case, the entanglement entropy across $\mathscr{B}$ is zero. The FEP, in this formulation, states the truism that distinguishable systems must remain unentangled.

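The interaction between $S$ and $E$ is represented in quantum formalism by a Hamiltonian or total energy operator $H_{SE}$. This operator is linear, and so it can be written as $H_{SE} = H_U - H_S - H_E$, where $H_U$, $H_S$, and $H_E$ are the internal or “self” interactions of $U$, $S$, and $E$, respectively. Interaction $H_{SE}$ is defined at boundary $\mathscr{B}$. We can characterize both $\mathscr{B}$ and $H_{SE}$ by employing the Holographic Principle [26,27], which states that the information that can be obtained about any system $X$ by an observer outside $X$ is limited to the information that crosses boundary $\mathscr{B}_X$ of $X$. If $X$ is finite, this quantity of information is finite, and can be written as classical entropy $\mathcal{S}(\mathscr{B}_X)$. We can therefore think of boundary $\mathscr{B}$ between $S$ and $E$ as encoding $N$ qubits, where $N$ is $\mathcal{S}(\mathscr{B})$ measured in bits, and hence as characterized by an ancillary Hilbert space $\mathcal{H}_\mathscr{B}$ with dimension $2^N$. Hilbert space $\mathcal{H}_\mathscr{B}$ is ancillary because it is not part of $\mathcal{H}_U$, i.e., $\mathcal{H}_U = \mathcal{H}_S \otimes \mathcal{H}_E$ has no tensor factor $\mathcal{H}_\mathscr{B}$. This reflects the fact that $\mathscr{B}$ is merely a theoretical construct induced by factorization $\mathcal{H}_U = \mathcal{H}_S \otimes \mathcal{H}_E$.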

Given this characterization of $\mathscr{B}$, we are now in a position to describe internal dynamics $H_S$ of $S$. Formally, $H_S$ is a linear operator on state space $\mathcal{H}_S$, i.e., we can write $H_S: \mathcal{H}_S \to \mathcal{H}_S$. Because $\mathcal{H}_S$ is a space of qubits, we can think of $H_S$ as an operator acting on qubits to change their states, i.e., as a quantum computation (again see [25] for an introduction). The only information flowing into $S$ from the outside, i.e., from $E$, is the information encoded by the $N$ qubits composing $\mathscr{B}$; similarly, the only information flowing out of $S$ and into $E$ must be encoded by these same qubits. Boundary $\mathscr{B}$ is therefore the input/output (I/O) interface to $S$ and hence to the quantum computation implemented by $H_S$.

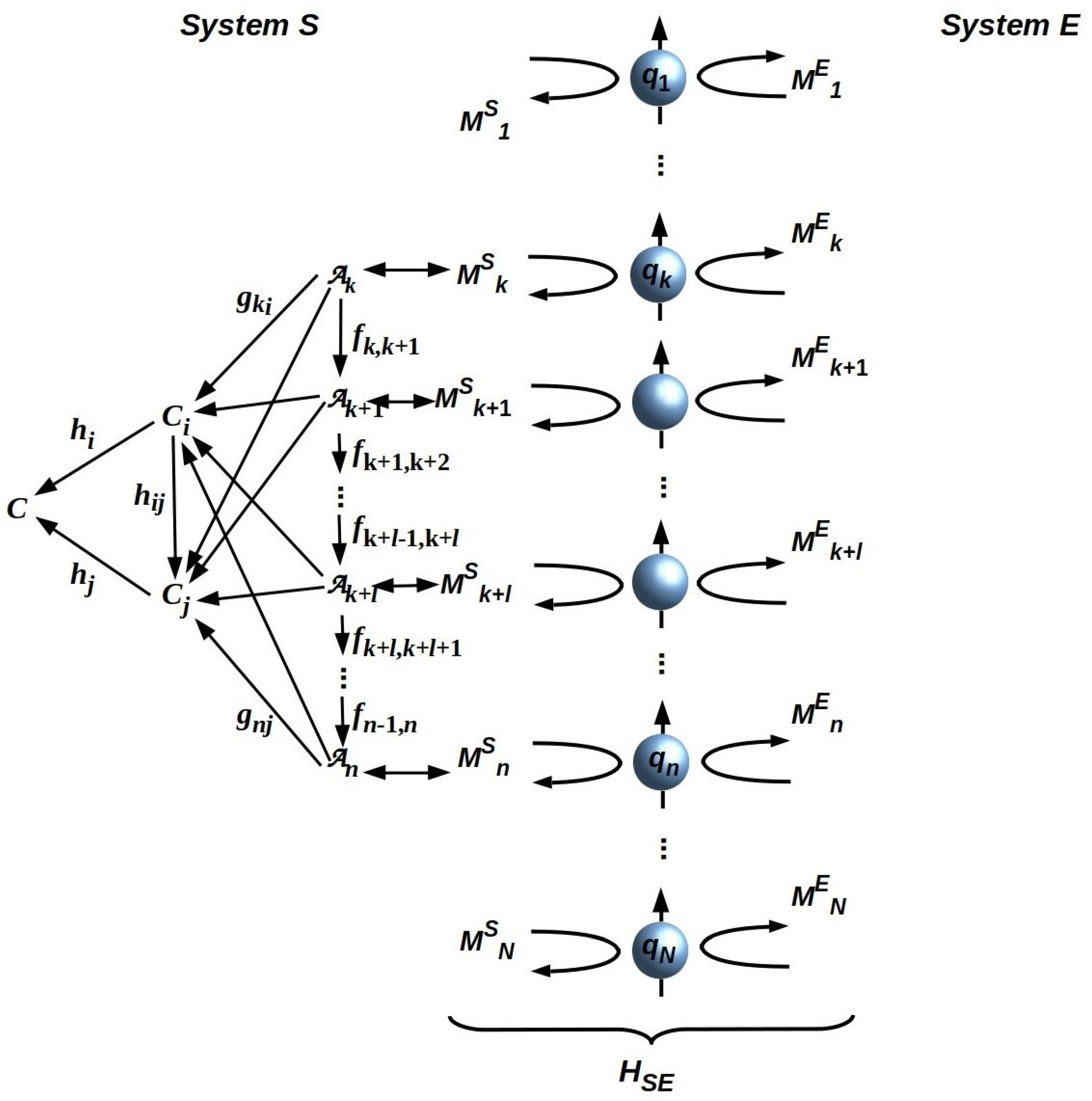

We can further characterize $H_S$ by thinking of $\mathscr{B}$ as a finite collection of non-overlapping subsets of qubits, which we call “sectors” $\mathscr{B}_i$, and considering the components of $H_S$ that act on each of these $\mathscr{B}_i$. We can represent each of these components as a quantum reference frame (QRF) $Q_i$ that measures and dually prepares the states of the $N_i$ qubits that compose sector $\mathscr{B}_i$. A QRF is a physical system that enables measuring or preparing states of other systems in a reproducible way [28,29]; meter sticks, clocks, and the Earth’s gravitational field are canonical examples of laboratory QRFs. Using a QRF such as a meter stick requires, however, implementing a similar QRF internally; an agent that had no internal ability to represent or process information about distances would have no use for a meter stick. Any observer can therefore be considered to implement a collection of QRFs, one for every physical degree of freedom, i.e., every physical observable, that the observer can detect, assign operational meaning to, and process information about [13,24]. Here, we follow previous convention [12,13,24,30] in extending the usual notion of a QRF to include all of the measurement and preparation processes that employ it. As each QRF $Q_i$ can also be regarded as a quantum computation, it can also be represented by a hierarchical, commutative diagram, a Cone-CoCone diagram (CCCD), that depicts information flow between a set of $N_i$ single-qubit operators $A_k$ and a single operator $C_i$ that encodes an observational outcome for the physical observable represented by $Q_i$ [12,13,24,30]. We can depict $\mathscr{B}$ and an associated QRF $Q$ as in Figure 2.

As mathematical objects, CCCDs are objects in category $\mathbf{CCCD}$; the morphisms of this category are embeddings of small CCCDs into larger ones and projections of small CCCDs out of larger ones [30]; see [31] for a textbook introduction to categories and their uses. Because CCCDs are by definition commutative diagrams, two CCCDs that do not mutually commute cannot be composed to form a larger CCCD. Pairs of non-commuting CCCDs correspond to pairs of non-commuting QRFs, i.e., to pairs of operators $Q_i$ and $Q_j$ for which commutator $[Q_i, Q_j] \neq 0$. A single quantum process cannot simultaneously implement two non-commuting QRFs. If system $S$ implements non-commuting QRFs $Q_i$ and $Q_j$, it must be partitioned into two subsystems $S_i$ and $S_j$ that are separated by a boundary via which they interact. Such a system must therefore have distinguishable components, and its components must have different environments. If $E$ is the environment of $S$, the environment of $S_i$ is $S_jE$ and vice versa. Hence, we can define

Definition 1. An atomic system is a system that can be represented as implementing a single QRF.

Systems that are not atomic are called “composite” systems. The QRFs implemented by an atomic system must, by Definition 1, all mutually commute; composite systems may implement QRFs that do not commute. Note that Definition 1 makes reference to how the system in question is represented. This reflects the fact that an external observer cannot determine what QRF(s) a system implements [32]. How the system is represented is therefore a theoretical choice; indeed, it is the very choice of semantic map $\mathcal{I}$ that motivates defining atomic systems in the first place.
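The commutation criterion can be illustrated with Pauli operators standing in for QRFs. This is a deliberate simplification, since QRFs are represented here by CCCDs rather than by single operators. The sketch, which assumes NumPy, checks all pairwise commutators: operators acting on disjoint qubits commute, whereas $Z$ and $X$ acting on the same qubit do not, so in this toy representation only the first set could be attributed to a single atomic system.

```python
import numpy as np
from itertools import combinations

I2 = np.eye(2)
X = np.array([[0, 1], [1, 0]])
Z = np.array([[1, 0], [0, -1]])

def commutator(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """[a, b] = ab - ba."""
    return a @ b - b @ a

def mutually_commute(ops, tol: float = 1e-12) -> bool:
    """True if every pair of operators in `ops` has a vanishing commutator."""
    return all(np.allclose(commutator(a, b), 0, atol=tol) for a, b in combinations(ops, 2))

# Toy operators on a two-qubit boundary, built with Kronecker products.
z_on_q0 = np.kron(Z, I2)  # Z acting on qubit 0
z_on_q1 = np.kron(I2, Z)  # Z acting on qubit 1
x_on_q0 = np.kron(X, I2)  # X acting on qubit 0

print(mutually_commute([z_on_q0, z_on_q1]))  # True: disjoint qubits
print(mutually_commute([z_on_q0, x_on_q0]))  # False: [Z, X] = 2iY on the same qubit
```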

We let S be an atomic system, E be its environment, and Q be its single (effective) QRF. We can now state the following:

Theorem 1. The thermodynamic free energy required by an atomic system S is acquired from E via its single (effective) QRF Q.

Proof. We let $H_S$ be the internal dynamics of $S$; by definition, $H_S$ implements $Q$. As $\mathrm{dom}(Q) = \mathscr{B}$, we can think of $Q$ as automorphism $Q: \mathscr{B} \to \mathscr{B}$ (see [30] for details). All TFE required by $S$ must traverse $\mathscr{B}$; hence, all TFE required by $S$ can only be acquired from $E$ via $Q$. □

If we assume that $H_S$ is a pure quantum process, and hence that it is perfectly reversible, then it requires TFE only for the thermodynamically irreversible final step of acting on its environment $E$, which we can represent, as in Figure 2, as preparing specific final states of the qubits encoded by its boundary [33,34]. Any additional thermodynamically irreversible steps require additional TFE, up to the limit of fully irreversible classical computation, for which every step requires TFE proportional to the number of bits modified or written. Hence, we can write the TFE consumption of $Q$ as

$$E_Q = \beta\, f(N)\, k_B T \ln 2, \qquad (2)$$

where $N$ is the number of qubits in sector $\mathrm{dom}(Q)$ on $\mathscr{B}$, $f$ is a non-decreasing function with $f(N) \geq N$ everywhere, $\beta \geq 1$ is an inverse measure of the thermodynamic efficiency of $Q$, and $T$ is the effective ambient temperature. For an atomic system, $\mathrm{dom}(Q) = \mathscr{B}$. The minimum value $f(N) = N$ corresponds to fully reversible computation, i.e., to writing output values on $\mathrm{dom}(Q)$ as the only thermodynamically irreversible step. For a classical binary tree, $f(N) > N$. The value of $\beta$ is implementation-dependent, with contemporary semiconductors and ATP/GTP-independent macromolecular switches such as rhodopsins approaching the theoretical optimum, i.e., the Landauer limit of $k_B T \ln 2$ per bit, and ATP/GTP-dependent macromolecular switches typically about 10x less efficient [35].
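Equation (2), taken in the form $E_Q = \beta f(N) k_B T \ln 2$ with $\beta \geq 1$ and $f(N) \geq N$, can be evaluated directly. The sketch below defaults to the reversible minimum $f(N) = N$; any other $f$ must be supplied by the caller. The sector size, the temperature, and the use of $\beta = 10$ to stand for the roughly tenfold penalty of ATP/GTP-dependent switches are illustrative assumptions.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K

def tfe_consumption(n_qubits, beta, temperature_k, f=None):
    """TFE consumption E_Q = beta * f(N) * k_B * T * ln 2, in joules.

    f defaults to the reversible minimum f(N) = N; beta = 1 is the Landauer limit.
    """
    fn = n_qubits if f is None else f(n_qubits)
    if beta < 1 or fn < n_qubits:
        raise ValueError("Equation (2) requires beta >= 1 and f(N) >= N")
    return beta * fn * K_B * temperature_k * math.log(2)

# Illustrative comparison at T = 310 K for a 1000-qubit sector dom(Q):
near_landauer = tfe_consumption(1000, beta=1.0, temperature_k=310.0)
atp_dependent = tfe_consumption(1000, beta=10.0, temperature_k=310.0)  # ~10x less efficient
print(near_landauer, atp_dependent, atp_dependent / near_landauer)
```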

We now consider system $S$ that is atomic and hence has a single QRF $Q$ that can be treated as a map $Q: \mathscr{B} \to \mathscr{B}$. If efficiency $\beta$ is fixed, energy $E_Q/N$ must be obtained from each of the $N$ qubits in $\mathrm{dom}(Q)$. This follows from, and indeed illustrates, a fundamental symmetry of the Hamiltonian $H_{SE}$: permuting the qubits on $\mathscr{B}$, which, since $\mathcal{H}_\mathscr{B}$ is ancillary to $\mathcal{H}_U$, just means permuting the labels on $\mathscr{B}$, has no effect on physical interaction $H_{SE}$ [23]. This symmetry is evident from Figure 2, which depicts an atomic system if only the qubits $q_1, \ldots, q_N$ composing $\mathrm{dom}(Q)$ are considered. It extends to $Q$ itself: since the CCCD representing $Q$ is a commutative diagram, permuting the “base-level” operators $A_k$ is equivalent to just permuting their labels.

This symmetry of $H_{SE}$ has a significant consequence for computational models of $S$. As $\beta$ increases due to internal irreversibility, i.e., inefficiency, the amount of energy extracted from $E$ by the measurement process and dissipated into $E$ by the preparation process proportionately increases. Higher-energy interactions disturb $E$ more per measurement and inject more noise into $E$ per preparation. The symmetry of $H_{SE}$ spreads this increased disturbance and noise uniformly across $\mathscr{B}$.

Therefore, from Equation (2), we can see that any system $S$, whether atomic or composite, faces an energetic tradeoff for every deployed QRF $Q$. Systems operating far from the optimal, fully reversible limit of $E_Q = N k_B T \ln 2$ can decrease the interaction energy for measurement and preparation locally by breaking the permutation symmetry of $H_{SE}$ [12]. This requires factoring $Q$ into components $Q_I$ and $Q_T$ that act on distinct subsets of qubits and hence distinct sectors of $\mathscr{B}$, i.e., $Q = Q_I \otimes Q_T$ with $\mathrm{dom}(Q_I) = \mathscr{B}_I$ and $\mathrm{dom}(Q_T) = \mathscr{B}_T$, where $\mathscr{B}_I$ is devoted to information exchange and $\mathscr{B}_T$ to TFE exchange. This factorization is advantageous if $\beta_I < \beta$, i.e., if $Q_I$ operates closer to the Landauer limit than the unfactored $Q$, with $Q_T$ ideally providing all of $E_Q$ above the Landauer minimum, allowing the action of $Q_I$ to minimally disturb $E$. We can represent this situation in schematic form as in Figure 3. It is reflected in the designs of technologies, like transistors, that use separate power inputs and waste-heat outputs to enable high-sensitivity, low-noise computational I/O. It is also evident in the separation, observed in biology, between signal transduction and metabolic pathways and between sensory systems and photosynthetic or digestive systems.

Dividing $\mathscr{B}$ into sectors characterized by different thermal efficiencies, by functionally distinguishing the sectors $\mathscr{B}_I$ and $\mathscr{B}_T$, creates a “difference that makes a difference” [36] in how information flowing through $\mathscr{B}$ is processed. Differences between sectors can therefore be thought of as semantic differences, i.e., differences in what actions are taken in response to inputs, as well as thermodynamic differences. A choice of a QRF to act on $\mathscr{B}$ corresponds, moreover, to a choice of basis vectors for describing both $\mathcal{H}_\mathscr{B}$ and $H_{SE}$ [13]; hence, we can view factorization $Q = Q_I \otimes Q_T$ as a choice of distinct representations for the basis vectors characterizing $\mathscr{B}_I$ versus $\mathscr{B}_T$. We could, from a mathematical perspective, also choose to maintain constant $\beta$ and build the energetic difference into a difference between temperatures $T_I$ and $T_T$ associated with $\mathscr{B}_I$ and $\mathscr{B}_T$, respectively [37]. Any system that uses a part of its environment with above-average energy density, e.g., external electrical power, solar radiation, or sugar, as a thermal resource effectively takes this approach to the energy/information tradeoff. Organisms typically employ both variable $\beta$ and variable $T$ strategies, e.g., by absorbing relatively high-temperature TFE resources from the environment through specialized anatomical structures with non-uniform bioenergetic properties.

4. Measuring and Controlling Energy Usage

Unlike technologies designed for an environment with effectively unlimited energy resources, living systems are often faced with energy scarcity. Restrictions on the availability of TFE are effectively restrictions on computational throughput, rendering the allocation of energy an important “control knob” on computation. It is for this reason that energy usage and its control are significant practical issues for modeling AIAs.

Energy-supply restrictions can prevent a system that has multiple available QRFs from deploying them simultaneously to measure and act on its environment. Deploying multiple QRFs sequentially requires a control system that allocates TFE resources to one QRF at a time. In the context of the FEP, attentional control, i.e., how much either a top-down or a bottom-up signal is amplified or attenuated, is standardly modeled as precision adjustment [38,39]. Low-resolution signals can be amplified, and hence have high precision [40], for example, when reflexive attention is driven by the magnocellular visual pathway, which sacrifices object-identification accuracy for speed [41]. Recognizing specific objects as having high significance, e.g., specific individual humans that must be correctly identified, requires both high precision and high resolution, and therefore more bits and more TFE. Hence, attention as precision control can, when high object-identification accuracy is required, automatically control TFE allocation as well; the utility of the blood oxygen level-dependent (BOLD) signal for indicating areas of enhanced neural activity via functional MRI provides striking evidence for this [42]. Targeting energy resources to one QRF at the expense of others requires walling it off, with an interaction-minimizing boundary, from any others that might compete with it. Serialization of QRFs, in other words, induces compartmentalization even of QRFs that would otherwise commute. Hence, systems that are driven by TFE restrictions to deploy QRFs in sequence must be composites of multiple atomic systems, one for each serially deployable QRF. The converse is also true:

Theorem 2. Only composite systems can control thermodynamic free energy flows.

Proof. Since it is clear that composite systems can control TFE flows, it suffices to show that atomic systems cannot. This, however, is obvious: for atomic system $S$ to be well defined, its QRF $Q$ must be well defined as a computation, and hence have well-defined values for all the terms in Equation (2); its TFE consumption $E_Q$ is therefore fixed and cannot be varied by $S$. □

On a deeper level, Theorem 2 follows from the inability of any system $S$ to measure its own boundary; for proof, see ([32], Thm. 1, Clause 1).

We suppose now that $S$ is a compartmentalized system interacting with an energetically restricted environment $E$. Provided that TFE availability varies slowly compared to the timescale for other inputs from $E$, natural selection processes favor architectures for $S$ that include a metaprocessor component $M$ that allocates energy resources to $m$ other components $S_1, \ldots, S_m$ of $S$, each of which can be regarded as atomic [43]. The boundary of $M$ must include $m$ disjoint sectors $\mathscr{B}_{M,i}$ that each interface with the thermodynamic sector $\mathscr{B}_{T,i}$ of one of the $S_i$; these sectors must be disjoint for the boundaries, and hence the state spaces, of the $S_i$ to be well defined. The boundary of $M$ must also include a sector that manages its own thermodynamic I/O, i.e., that obtains TFE specifically from, and dissipates waste heat specifically into, $E$. Each of the $\mathscr{B}_{M,i}$ has an associated QRF, which, to save notation, we can also call $\mathscr{B}_{M,i}$. We assume these QRFs $\mathscr{B}_{M,i}$ all mutually commute, so that $M$ can measure the thermodynamic states of, and supply energy to, several of the $S_i$ simultaneously. Taking $M$ to be atomic in this way loses no generality, as any finite hierarchy of metaprocessors must have some top level with this characteristic. Theorem 2 therefore applies to $M$: while $M$ can control TFE flows to the $S_i$, it cannot address its own energy supply versus computation tradeoff.
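No particular allocation rule for $M$ is specified above. Purely as a hypothetical illustration of the architecture, the sketch below lets $M$ power the components $S_i$ in order of a given priority until its TFE budget for the current step is exhausted. Because $M$’s QRFs are assumed to commute, several $S_i$ can be powered in the same step, and those that do not fit are deferred, which is the serialization discussed earlier. The `Component` class, the priorities, the arbitrary energy units, and the greedy rule are all assumptions.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class Component:
    """Hypothetical atomic component S_i, as seen by the metaprocessor M."""
    name: str
    cost: float      # TFE needed per activation (arbitrary units), e.g., from Equation (2)
    priority: float  # M's current estimate of the component's value (assumed given)

def allocate(budget: float, components: list[Component]) -> list[str]:
    """Greedy sketch: power components in priority order while M's budget allows.

    M's QRFs are assumed to commute, so several S_i may be powered in one step;
    components that do not fit are deferred to a later step (serialization).
    """
    powered = []
    for comp in sorted(components, key=lambda c: c.priority, reverse=True):
        if comp.cost <= budget:
            powered.append(comp.name)
            budget -= comp.cost
    return powered

components = [Component("S1", cost=4.0, priority=0.9),
              Component("S2", cost=2.0, priority=0.8),
              Component("S3", cost=1.0, priority=0.5)]
print(allocate(5.0, components))  # ['S1', 'S3']: S2 does not fit once S1 is powered
```

In this sketch $M$ is handed the priorities; in the argument that follows, obtaining exactly that information is the hard part of $M$’s task.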

We can now ask: how effectively can $M$ control the overall computational behavior of $S$ by differentially allocating TFE resources to the $S_i$? The answer clearly depends on $M$’s ability to determine both the need for a particular $S_i$ in the current behavioral context and the resource needs, relative to the rest of $S$, of that $S_i$. This information must be obtained from $M$’s environment $E_M$, which comprises $E$ together with all of the $S_i$. Indeed, $M$ is just an AIA operating in $E_M$.

To recognize that $M$ is an AIA operating in $E_M$ is, however, to recognize the difference, and prima facie mismatch, between $M$’s task in the context of $S$ and $M$’s task in its own environment, i.e., in $E_M$. The former task is effectively to increase $S$’s predictive power, while the latter is to increase $M$’s predictive power (i.e., the task stipulated by $M$’s compliance with the FEP). Compatibility between these tasks requires, at minimum, preventing competition between $M$ and the $S_i$. The only architecture for $S$ that does this is one in which $M$ is the sole energetic interface between the $S_i$ and $E$, and the $S_i$ are collectively the sole informational interface between $M$ and $E$. To see this, note that if the $S_i$ can obtain TFE independently of $M$, $M$ is less able to control their operation to prevent competition or deadlock, and hence less able to optimize $S$’s behavior, while if $M$ can obtain information from $E$ independently of the $S_i$, the FEP drives $M$ to optimize its own access to the affordances of $E$ instead of optimizing $S$’s access.

This architecture explicitly restricts $M$’s information about $S$’s current behavioral context to that provided by its interaction with the $S_i$. The only learnable predictive model for $M$ is, therefore, a model of how energy distribution to the $S_i$ correlates with expected future energy availability to $M$. The role of $M$ in increasing $S$’s predictive power is therefore limited to increasing $S$’s ability to predict future energy availability. This, as mentioned earlier, solves the dark room problem for $S$. It also places an energetic constraint on epistemic foraging that does not positively correlate with energetic foraging. From an organismal perspective, this constraint makes sense; novel information may be very valuable, but its value can only be realized if the energy required to exploit it can also be found. Attention, in other words, is automatically prioritized toward maintaining TFE resources, i.e., toward maintaining allostasis. Semelparous species violate this rule, prioritizing sex over TFE, but pay the price when allostasis collapses.