Abstract

Despite their remarkable performance, deep learning models still lack robustness guarantees, particularly in the presence of adversarial examples. This significant vulnerability raises concerns about their trustworthiness and hinders their deployment in critical domains that require certified levels of robustness. In this paper, we introduce an information geometric framework to establish precise robustness criteria for white-box attacks in a multi-class classification setting. We endow the output space with the Fisher information metric and derive criteria on the input–output Jacobian to ensure robustness. We show that model robustness can be achieved by constraining the model to be partially isometric around the training points. We evaluate our approach using MNIST and CIFAR-10 datasets against adversarial attacks, revealing its substantial improvements over defensive distillation and Jacobian regularization for medium-sized perturbations and its superior robustness performance to adversarial training for large perturbations, all while maintaining the desired accuracy.

1. Introduction

One of the primary motivations for investigating machine learning robustness stems from the susceptibility of neural networks to adversarial attacks, wherein small perturbations in the input data can deceive the network into making the wrong decision. These adversarial attacks have been shown to be both ubiquitous and transferable [1,2,3]. Beyond posing a security threat, adversarial attacks underscore the glaring lack of robustness in machine learning models [4,5]. This deficiency in robustness is a critical challenge as it undermines trustworthiness in machine learning systems [6].

In this paper, we bring an information geometric perspective to adversarial robustness in machine learning models. We show that robustness can be achieved by encouraging the model to be isometric on the orthogonal complement of the kernel of the pullback Fisher information metric (FIM). We subsequently formulate a regularization defense method for adversarial robustness. While our focus is on white-box attacks within multi-class classification tasks, the method’s applicability extends to more general settings, including unrestricted attacks and black-box attacks across various supervised learning tasks. The regularized model is evaluated on MNIST and CIFAR-10 datasets against projected gradient descent (PGD) attacks and AutoAttack [7] with $\ell_2$ and $\ell_\infty$ norms. Comparisons with the unregularized model, defensive distillation [8], Jacobian regularization [9], and Fisher information regularization [10] show significant improvement in robustness. Moreover, the regularized model is able to ensure robustness against larger perturbations compared to adversarial training.

The remainder of this paper is organized as follows. Section 2 introduces notations, notions of adversarial machine learning, and definitions related to geometry, and then derives a sufficient condition for adversarial robustness at a given sample point. Section 3 presents our method for approximating the robustness condition, which involves promoting model isometry on the orthogonal complement of the kernel of the pullback of the FIM. In Section 4, several experiments are presented to evaluate the proposed method. Section 5 discusses the results in the context of related work on adversarial defense. Finally, Section 6 concludes the paper and outlines potential extensions of this research. Appendix A provides the proofs of the results stated in the main text.

2. Notations and Definitions

2.1. Notations

Let $d, c \in \mathbb{N}^*$ such that $d \geq c > 1$. Let $m = c - 1$. In the learning framework, $d$ will be the dimension of the input space, while $c$ will be the number of classes. The range of a matrix $M$ is denoted as $\operatorname{rg}(M)$. The rank of $M$ is denoted as $\operatorname{rk}(M)$. The Euclidean norm (i.e., $\ell_2$ norm) is denoted as $\|\cdot\|$. We use the notation $\delta_{ij} = 1$ if $i = j$ and 0 otherwise. We denote the components of a vector $v$ by $v^i$ with a superscript. Smooth means $C^\infty$.

2.2. Adversarial Machine Learning

An adversarial attack is any strategy aiming at deliberately altering the expected behavior of a model or extracting information from a model. In this work, we focus on attacks performed at inference time (i.e., after training), sometimes referred to as evasion attacks. The most well-known evasion attacks are gradient-based. Such gradient-based attacks all follow the same idea, which we explain below.

To reach good accuracy and generalization, a machine learning model $f$ (with input $x$ and parameter $w$) is typically trained by minimizing a loss function $\mathcal{L}(x, w)$ with respect to the parameters $w$ of the model. In its simplest form, the loss function quantifies the error between the prediction $f(x, w)$ of the model and the ground truth $y$. Given a clean input $x_0$, an adversarial example $\hat{x}$ can be crafted by maximizing the loss function $\mathcal{L}$, starting from $x_0$ and using gradient ascent along $\nabla_x \mathcal{L}(x, w)$, where the gradient is computed with respect to the input $x$ (and not the parameter $w$ as during training). In order for $\hat{x}$ to be an adversarial example, $\hat{x}$ and $x_0$ must be close to each other according to some dissimilarity measure, typically an $\ell_p$ norm. An adversarial example is successful if the model $f$ classifies $\hat{x}$ differently from $x_0$. Some well-known gradient-based attacks include the fast gradient sign method [2] and projected gradient descent [3].
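The gradient-ascent idea described above can be sketched on a toy model. The snippet below is only an illustration under stated assumptions: it uses a hypothetical linear-softmax classifier (weights `W`, bias `b`) rather than the networks of this paper, a cross-entropy loss, and an $\ell_2$ projected gradient ascent with normalized steps. The function names and the parameters `eps`, `step`, and `n_iter` are not from the source.

```python
import math

def softmax(s):
    """Map a list of logits to a point of the probability simplex."""
    top = max(s)
    e = [math.exp(v - top) for v in s]
    z = sum(e)
    return [v / z for v in e]

def loss_and_grad(W, b, x, y):
    """Cross-entropy loss of a linear-softmax model and its gradient w.r.t. the input x."""
    s = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    theta = softmax(s)
    loss = -math.log(theta[y])
    # For logits s = W x + b, the input gradient is W^T (theta - onehot(y)).
    resid = [t - (1.0 if k == y else 0.0) for k, t in enumerate(theta)]
    grad = [sum(W[k][j] * resid[k] for k in range(len(W))) for j in range(len(x))]
    return loss, grad

def l2_norm(v):
    return math.sqrt(sum(a * a for a in v))

def pgd_l2(W, b, x0, y, eps, step, n_iter):
    """Projected gradient ascent on the loss, constrained to the l2 ball of radius eps around x0."""
    x = list(x0)
    for _ in range(n_iter):
        _, g = loss_and_grad(W, b, x, y)
        g_norm = l2_norm(g) or 1.0  # avoid dividing by zero on a flat loss
        x = [xi + step * gi / g_norm for xi, gi in zip(x, g)]
        delta = [xi - ci for xi, ci in zip(x, x0)]
        d_norm = l2_norm(delta)
        if d_norm > eps:  # project back onto the ball
            x = [ci + eps * di / d_norm for ci, di in zip(x0, delta)]
    return x
```

Calling `pgd_l2(W, b, x0, y, eps=0.5, step=0.1, n_iter=20)` returns a perturbed input that stays within distance `eps` of `x0`; whether it is a successful adversarial example depends on whether the predicted class changes.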

Adversarial attacks can be classified according to their threat model. White-box attacks assume that the adversary has perfect knowledge of the targeted model, including access to the training data, model architecture, and model parameters. Such an adversary can directly compute the gradient of the targeted model and craft adversarial examples. More realistic threat models are classified as gray-box or black-box attacks, where some or all of the information is unknown to the adversary. In this work, we use both white-box attacks as well as simple gray-box attacks where the adversary can access the training data and model architecture, but not the model parameters. To craft such gray-box adversarial examples, another model is trained with the same data and architecture. Then, white-box attacks are performed on this model. Finally, the adversarial examples can be transferred to the targeted model.

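Adversarial robustness aims to build models that classify both a clean input $x_0$ and its adversarial example $\hat{x}$ with the same class while preserving sufficient accuracy for the clean examples $x_0$. Various defenses have been proposed to improve adversarial robustness. The most effective defense is called adversarial training, which was first described in [2] and further developed in [3]. Writing $\mathcal{L}(x, w)$ for the training loss of a model with input $x$ and parameters $w$, the idea behind adversarial training is to obtain the parameters of the trained model as:

$$w^* = \arg\min_{w} \max_{\hat{x} \in \mathcal{B}(x)} \mathcal{L}(\hat{x}, w)$$

in place of the original parameters $\arg\min_{w} \mathcal{L}(x, w)$. The set $\mathcal{B}(x)$ is a set of allowed adversarial attacks for $x$, e.g., an $\ell_p$ ball with a given radius (or budget). In practice, adversarial training is performed by adding adversarial examples to the training set, thus providing a lower bound for $\max_{\hat{x} \in \mathcal{B}(x)} \mathcal{L}(\hat{x}, w)$.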

2.3. Geometrical Definitions

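Consider a multi-class classification task. Let $\mathcal{X} \subseteq \mathbb{R}^d$ be the input domain, and let $\mathcal{Y} = \{1, \ldots, c\}$ be the set of labels for the classification task. For example, in MNIST, we have $\mathcal{X} = [0, 1]^d$ (with $d = 784$) and $c = 10$. We assume that $\mathcal{X}$ is a d-dimensional embedded smooth connected submanifold of $\mathbb{R}^d$. Let $m = c - 1$.

Definition 1

(Probability simplex). Define the probability simplex of dimension $m$ by

$$\Delta^m = \left\{ \theta \in \mathbb{R}^c : \theta^k > 0 \text{ for all } k \in \{1, \ldots, c\} \text{ and } \sum_{k=1}^{c} \theta^k = 1 \right\}.$$

$\Delta^m$ is a smooth submanifold of $\mathbb{R}^c$ of dimension $m$. We can see $(\theta^1, \ldots, \theta^m)$ as a coordinate system from $\Delta^m$ to $\mathbb{R}^m$. Then, let us define $\theta^c = 1 - \sum_{i=1}^{m} \theta^i$.

A machine learning model (e.g., a neural network) is often seen as assigning a label $y \in \mathcal{Y}$ to a given input $x \in \mathcal{X}$. Instead, in this work, we see a model as assigning the parameters of a random variable $Y$ to a given input $x \in \mathcal{X}$. The random variable $Y$ has a probability density function $p_\theta$ belonging to the family of c-dimensional categorical distributions $\mathcal{S} = \{p_\theta : \theta \in \Delta^m\}$.

$\mathcal{S}$ can be endowed with a differentiable structure by using $(\theta^1, \ldots, \theta^m)$ as a global coordinate system. Hence, $\mathcal{S}$ becomes a smooth manifold of dimension $m$ (more details on this construction can be found in [11], Chapter 2). We can identify $\mathcal{S}$ with $\Delta^m$.

We see any machine learning model as a smooth map $f : \mathcal{X} \to \Delta^m$ that assigns to an input $x \in \mathcal{X}$ the parameters $\theta = f(x)$ of a c-dimensional categorical distribution $p_\theta \in \mathcal{S}$. In practice, a neural network produces a vector of logits $s(x) \in \mathbb{R}^c$. Then, these logits are transformed into the parameters $\theta$ with the softmax function: $\theta^k = \exp(s^k(x)) / \sum_{j=1}^{c} \exp(s^j(x))$.

In order to study the sensitivity of the predicted $f(x) \in \Delta^m$ with respect to the input $x \in \mathcal{X}$, we need to be able to measure distances both in $\mathcal{X}$ and in $\Delta^m$. In order to measure distances on smooth manifolds, we need to equip each manifold with a Riemannian metric.

First, we consider $\Delta^m$. As described above, we see $\Delta^m$ as the family of categorical distributions. A natural Riemannian metric for $\Delta^m$ (i.e., a metric that reflects the statistical properties of $\Delta^m$) is the Fisher information metric (FIM).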

Definition 2

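(Fisher information metric). For each $\theta \in \Delta^m$, the Fisher information metric (FIM) $g$ defines a symmetric positive-definite bilinear form $g_\theta$ over the tangent space $T_\theta \Delta^m$. In the coordinates $(\theta^1, \ldots, \theta^m)$ of $\Delta^m$, for all $\theta \in \Delta^m$ and all tangent vectors $v, w \in T_\theta \Delta^m$, we have

$$g_\theta(v, w) = v^T G_\theta w,$$

where $G_\theta$ is the Fisher information matrix for parameter $\theta$, defined by

$$G_{\theta, ij} = \frac{\delta_{ij}}{\theta^i} + \frac{1}{\theta^c}, \quad i, j \in \{1, \ldots, m\},$$

with $\theta^c = 1 - \sum_{i=1}^{m} \theta^i$ and $\delta_{ij}$ the Kronecker delta.

For any $\theta \in \Delta^m$, the matrix $G_\theta$ is symmetric positive-definite and non-singular (see Proposition 1.6.2 in [12]). The FIM induces a distance on $\Delta^m$, called the Fisher–Rao distance, denoted as $d(\theta_1, \theta_2)$ for any $\theta_1, \theta_2 \in \Delta^m$.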
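To make the Fisher information matrix of a categorical distribution concrete, here is a minimal sketch. It assumes the standard closed form in the coordinates given by the first $m = c - 1$ probabilities, namely entries $\delta_{ij}/\theta^i + 1/\theta^c$ with $\theta^c$ the last probability, and checks non-singularity through the known inverse $\operatorname{diag}(\theta) - \theta\theta^T$. The indexing convention and the function names are assumptions made for this illustration.

```python
def fisher_matrix(theta):
    """Fisher information matrix (m x m) of a categorical distribution.

    theta is a list of c positive probabilities summing to 1; the coordinates
    are assumed to be the first m = c - 1 entries.
    """
    m = len(theta) - 1
    last = theta[-1]
    return [[(1.0 / theta[i] if i == j else 0.0) + 1.0 / last
             for j in range(m)] for i in range(m)]

def fisher_inverse(theta):
    """Closed-form inverse: diag(theta) - theta theta^T on the first m entries."""
    m = len(theta) - 1
    return [[(theta[i] if i == j else 0.0) - theta[i] * theta[j]
             for j in range(m)] for i in range(m)]

def matmul(A, B):
    """Plain-Python matrix product."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]
```

Multiplying `fisher_matrix(theta)` by `fisher_inverse(theta)` should give the identity up to rounding, which is one quick way to sanity-check an implementation.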

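The FIM has two remarkable properties. First, it is the “infinitesimal distance” of the relative entropy, which is the loss function used to train a multi-class classification model. More precisely, if $D$ is the relative entropy (also known as the Kullback–Leibler divergence) and if $d$ is the Fisher–Rao distance, then given two distributions $\theta_1$ and $\theta_2$ in $\Delta^m$, we have (see Theorem 4.4.5 in [12]):

$$D(\theta_1 \| \theta_2) = \frac{1}{2} d(\theta_1, \theta_2)^2 + o\left(d(\theta_1, \theta_2)^2\right).$$

The same result can be restated infinitesimally using the FIM $g$, as follows:

$$D(\theta \| \theta + d\theta) = \frac{1}{2} g_\theta(d\theta, d\theta) + o\left(\|d\theta\|^2\right),$$

where $d\theta$ is seen as a tangent vector of $T_\theta \Delta^m$.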
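The second-order relation between the relative entropy and the Fisher–Rao distance can be checked numerically. The sketch below assumes the standard closed form of the Fisher–Rao distance between categorical distributions, $2\arccos\left(\sum_k \sqrt{p^k q^k}\right)$; the function names are chosen for this illustration only.

```python
import math

def kl_divergence(p, q):
    """Relative entropy D(p || q) between two categorical distributions."""
    return sum(pk * math.log(pk / qk) for pk, qk in zip(p, q))

def fisher_rao_distance(p, q):
    """Fisher-Rao distance between categorical distributions (closed form, assumed)."""
    bc = sum(math.sqrt(pk * qk) for pk, qk in zip(p, q))
    # Clamp to guard against rounding slightly above 1.
    return 2.0 * math.acos(min(1.0, bc))
```

For two nearby distributions, `kl_divergence(p, q)` and `0.5 * fisher_rao_distance(p, q) ** 2` should agree up to a small relative error that shrinks as `q` approaches `p`.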

The other remarkable property of the FIM is Chentsov’s theorem [13], which states that the FIM is the unique Riemannian metric on $\Delta^m$ that is invariant under sufficient statistics (up to a multiplicative constant). Informally, the FIM is the only Riemannian metric that is statistically meaningful. In [14], Amari and Nagaoka state a more general result. Along with the FIM, they introduce a family of affine connections parameterized by a real parameter $\alpha$, called the $\alpha$-connections. Theorem 2.6 in [14] states that an affine connection is invariant under sufficient statistics if and only if it is an $\alpha$-connection for some $\alpha \in \mathbb{R}$. In other words, the $\alpha$-connections are the only affine connections that have a statistical meaning. While Equation (4) gives the second-order approximation of the relative entropy, an $\alpha$-connection can be seen as the third-order term in the Taylor approximation of some divergence [14]. More precisely, a given $\alpha$-connection can be canonically associated with a unique divergence (while the second-order term is always given by the FIM). If $\alpha = \pm 1$, the canonical divergences are the relative entropy and its dual (obtained by switching the arguments in $D(\cdot \| \cdot)$). More generally, for $\alpha \neq 0$, the canonical divergence is not symmetric. The only canonical divergence that is symmetric is obtained for $\alpha = 0$, and is precisely the square of the Fisher–Rao distance. Thus, the Fisher–Rao distance is the only statistically meaningful distance. This provides a motivation for using the Fisher–Rao distance to measure lengths in $\Delta^m$.

Now, we consider $\mathcal{X}$. Since we are studying adversarial robustness, we need a metric that formalizes the idea that two close data points must be “indistinguishable” from a human perspective (or any other relevant perspective). A natural choice is the Euclidean metric induced from $\mathbb{R}^d$ on $\mathcal{X}$.

Definition 3

(Euclidean metric). We consider the Euclidean space $\mathbb{R}^d$ endowed with the Euclidean metric $\overline{g}$. It is defined in the standard coordinates of $\mathbb{R}^d$ for all $x \in \mathbb{R}^d$ and for all tangent vectors $v, w \in T_x \mathbb{R}^d$ by

$$\overline{g}_x(v, w) = v^T w;$$

thus, its matrix is the identity matrix of dimension $d$, denoted as $I_d$. The Euclidean metric induces a distance on $\mathbb{R}^d$ that we will denote with the $\ell_2$-norm: $\|x_1 - x_2\|$ for any $x_1, x_2 \in \mathbb{R}^d$.

From now on, we fix:

- A smooth map . We denote by the i-th component of f in the standard coordinates of .

- A point .

- A positive real number .

Define the Euclidean open ball centered at x with radius by

Definition 4.

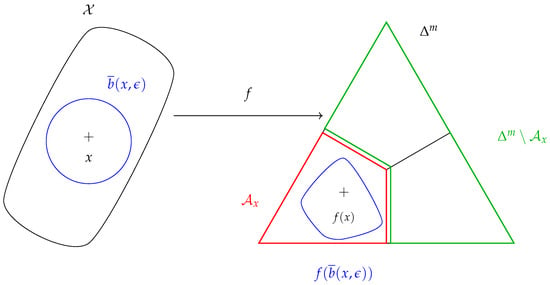

Define the set (Figure 1):

For simplicity, assume that is not on the “boundary” of , such that is well-defined.

Figure 1.

-robustness at x is enforced if and only if .

The set is the subset of distributions of that have the same class as .

Definition 5

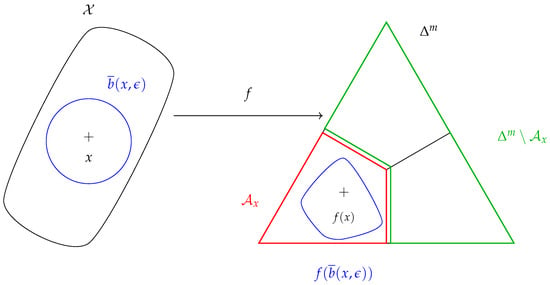

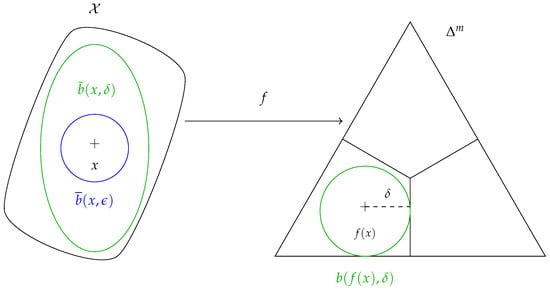

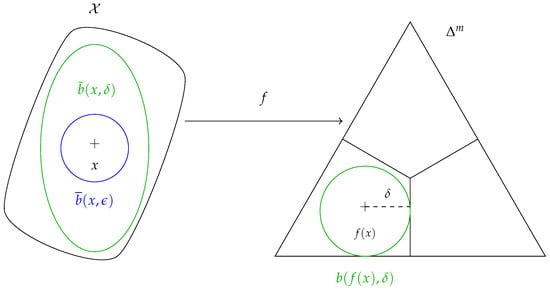

(Geodesic ball of the FIM). Let be the Fisher–Rao distance between and (Figure 2), i.e., the Fisher–Rao distance between and the closest distribution of with a different class.

Figure 2.

-robustness at x is enforced if .

Define the geodesic ball centered at with radius δ by

In Section 3.3, we propose an efficient approximation of δ.

Definition 6

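(Pullback metric). On $\mathcal{X}$, define the pullback metric $\tilde{g}$ of $g$ by $f$. In the standard coordinates of $\mathbb{R}^d$, $\tilde{g}$ is defined for all tangent vectors $v, w \in T_x \mathcal{X}$ by

$$\tilde{g}_x(v, w) = g_{f(x)}(J_x v, J_x w) = v^T J_x^T G_{f(x)} J_x w,$$

where $J_x$ is the Jacobian matrix of $f$ at $x$ (in the coordinates of $\mathbb{R}^d$ and $\Delta^m$) and $G_{f(x)}$ is the Fisher information matrix at $f(x)$. Define the matrix of $\tilde{g}_x$ in the standard coordinates of $\mathbb{R}^d$ by

$$\tilde{G}_x = J_x^T G_{f(x)} J_x.$$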
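As an illustration of the pullback construction, the sketch below computes the matrix $J^T G J$ for a hypothetical linear-softmax model (weights `W`, bias `b`), where $J$ is the Jacobian of the first $m = c - 1$ output probabilities with respect to the input and $G$ is the categorical Fisher information matrix in those coordinates. The model, the coordinate convention, and the helper names are assumptions for this example and are not the networks used in the paper. For such a model, the result should coincide with $W^T(\operatorname{diag}(\theta) - \theta\theta^T)W$, which gives an independent check.

```python
import math

def softmax(s):
    top = max(s)
    e = [math.exp(v - top) for v in s]
    z = sum(e)
    return [v / z for v in e]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def transpose(A):
    return [list(col) for col in zip(*A)]

def pullback_matrix(W, b, x):
    """Matrix J^T G J (d x d) of the pullback of the FIM for a linear-softmax model."""
    c = len(W)
    m = c - 1
    s = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    theta = softmax(s)
    # Jacobian of the first m softmax outputs w.r.t. the logits (m x c).
    J_soft = [[theta[i] * ((1.0 if i == k else 0.0) - theta[k]) for k in range(c)]
              for i in range(m)]
    J = matmul(J_soft, W)  # chain rule: Jacobian w.r.t. the input (m x d)
    # Fisher information matrix in the coordinates (theta^1, ..., theta^m).
    G = [[(1.0 / theta[i] if i == j else 0.0) + 1.0 / theta[m] for j in range(m)]
         for i in range(m)]
    return matmul(transpose(J), matmul(G, J))
```

Because $J$ has only $m$ rows, the returned $d \times d$ matrix has rank at most $m$, so it is positive semidefinite rather than positive definite whenever $d > m$.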

Definition 7

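(Geodesic ball of the pullback metric). Let $\tilde{d}$ be the distance induced by the pullback metric $\tilde{g}$ on $\mathcal{X}$. We can define the geodesic ball centered at $x$ with radius $\delta$ by

$$\tilde{b}(x, \delta) = \{ z \in \mathcal{X} : \tilde{d}(x, z) < \delta \}.$$

Note that the radius $\delta$ is the Fisher–Rao distance between $f(x)$ and $\Delta^m \setminus A_x$ as defined in Definition 5.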

2.4. Robustness Condition

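Definition 8

(Robustness). We say that $f$ is $\epsilon$-robust at $x$ if $f(\overline{b}(x, \epsilon)) \subseteq A_x$, i.e., if every point of the Euclidean open ball $\overline{b}(x, \epsilon)$ is assigned the same class as $x$.

Proposition 1

(Sufficient condition for robustness). If $\overline{b}(x, \epsilon) \subseteq \tilde{b}(x, \delta)$, then $f$ is $\epsilon$-robust at $x$ (Figure 2).

Our goal is to start from Proposition 1 and make several assumptions in order to derive a condition that can be efficiently implemented.

Working with geodesic balls $\tilde{b}(x, \delta)$ and $b(f(x), \delta)$ is intractable, so our first assumption consists of using an “infinitesimal” condition by restating Proposition 1 in the tangent space $T_x \mathcal{X}$ instead of working directly on $\mathcal{X}$. In $T_x \mathcal{X}$, define the Euclidean ball of radius $\epsilon$ by

$$\overline{B}_x(0, \epsilon) = \{ v \in T_x \mathcal{X} : v^T v < \epsilon^2 \}.$$

Similarly, in $T_x \mathcal{X}$, define the $\tilde{g}_x$-ball of radius $\delta$ by

$$\tilde{B}_x(0, \delta) = \{ v \in T_x \mathcal{X} : v^T \tilde{G}_x v < \delta^2 \},$$

where $\tilde{G}_x = J_x^T G_{f(x)} J_x$ is the matrix of the pullback metric (Definition 6).

Assumption 1.

We replace Proposition 1 by

$$\overline{B}_x(0, \epsilon) \subseteq \tilde{B}_x(0, \delta). \tag{16}$$

Proposition 2.

Equation (16) is equivalent to

$$\forall v \in T_x \mathcal{X}, \quad v^T \tilde{G}_x v \leq \frac{\delta^2}{\epsilon^2} v^T v.$$

Since $f : \mathcal{X} \to \Delta^m$ and $\Delta^m$ has dimension $m$, the Jacobian matrix $J_x$ has a rank smaller than or equal to $m$. Thus, since $G_{f(x)}$ has full rank, $\tilde{G}_x$ has a rank of at most $m$ (with equality when $J_x$ has a rank of $m$).

Assumption 2.

The Jacobian matrix $J_x$ has a full rank equal to $m$.

Using Assumptions 1 and 2, the constant rank theorem ensures that for small enough $\epsilon$, $f$ is $\epsilon$-robust at $x$. However, contrary to Proposition 1, Assumption 1 does not offer any guarantee on the $\epsilon$-robustness at $x$ for arbitrary $\epsilon$.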
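As a worked restatement, the tangent-space condition can be read as a bound on a single eigenvalue. The notation here is assumed for the illustration: $\tilde{G}_x$ denotes the matrix of the pullback metric at $x$, $\delta$ the Fisher–Rao radius, and $\epsilon$ the Euclidean budget. If the condition is written as a quadratic-form inequality, the Rayleigh quotient characterization of the largest eigenvalue of a symmetric matrix gives:

$$
\left( \forall v \neq 0 : \ \frac{v^T \tilde{G}_x v}{v^T v} \leq \frac{\delta^2}{\epsilon^2} \right)
\iff
\lambda_{\max}(\tilde{G}_x) = \max_{v \neq 0} \frac{v^T \tilde{G}_x v}{v^T v} \leq \frac{\delta^2}{\epsilon^2}.
$$

Under Assumption 2, $\tilde{G}_x$ has exactly $m$ nonzero eigenvalues, so this bound only constrains the $m$ directions along which the model output actually varies.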

3. Derivation of the Regularization Method

In this section, we derive a condition for robustness (Proposition 4), which can be implemented as a regularization method. Then, we provide two useful results for the practical implementation of this method: an explicit formula for the decomposition of the FIM as $G = P^T P$ (Section 3.2), and an easy-to-compute upper-bound of $\delta$, i.e., the Fisher–Rao distance between $f(x)$ and $\Delta^m \setminus A_x$ (Section 3.3).

3.1. The Partial Isometry Condition

In order to simplify the notations, we replace

- $J_x$ with $J$, which is a full-rank $m \times d$ real matrix.

- $G_{f(x)}$ with $G$, which is an $m \times m$ symmetric positive definite real matrix.

- $\tilde{G}_x$ with $\tilde{G} = J^T G J$, which is a $d \times d$ symmetric positive-semidefinite real matrix.

We define $D = \ker(\tilde{G})^\perp$. We will use the two following facts.

Fact 1.

Fact 2.

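$\tilde{G}$ is symmetric positive semidefinite. Thus, by the spectral theorem, the eigenvectors associated with its nonzero eigenvalues are all in $D = \ker(\tilde{G})^\perp$.

In particular, since $\operatorname{rk}(\tilde{G}) = m$, there exists an orthonormal basis of $\mathbb{R}^d$, denoted as $(e_1, \ldots, e_d)$, such that each $e_i$ is an eigenvector of $\tilde{G}$, and such that $(e_1, \ldots, e_m)$ is a basis of $D$ and $(e_{m+1}, \ldots, e_d)$ is a basis of $\ker(\tilde{G})$.

The set $D$ is an m-dimensional subspace of $\mathbb{R}^d$. $\tilde{g}$ does not define an inner product on $\mathbb{R}^d$ because $\tilde{G}$ has a nontrivial kernel of dimension $d - m$. In particular, the set $\tilde{B}_x(0, \delta) = \{ v : v^T \tilde{G} v < \delta^2 \}$ is not bounded, i.e., it is a cylinder rather than a ball. However, when restricted to $D$, $\tilde{g}$ defines an inner product. We define the restriction of $\tilde{B}_x(0, \delta)$ to $D$:

$$\tilde{B}_D(0, \delta) = \{ v \in D : v^T \tilde{G} v < \delta^2 \},$$

and similarly, we define the restriction of the Euclidean ball $\overline{B}_x(0, \epsilon)$ to $D$:

$$\overline{B}_D(0, \epsilon) = \{ v \in D : v^T v < \epsilon^2 \}.$$

Assume that $f$ is such that Equation (16) holds (i.e., $\overline{B}_x(0, \epsilon) \subseteq \tilde{B}_x(0, \delta)$). Moreover, assume that we are in the limit case defined as follows: for any perturbation size, we can find a smaller perturbation of $f$ such that Equation (16) does not hold anymore. This limit case is equivalent to having $\tilde{B}_D(0, \delta) = \overline{B}_D(0, \epsilon)$. In this case, $\tilde{B}_x(0, \delta)$ is the smallest possible $\tilde{g}$-ball (for the inclusion) such that Equation (16) holds. We noticed experimentally that enforcing this stronger criterion yields a larger robustifying effect. Thus, we make the following assumption: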

Assumption 3.

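We replace Equation (16) with

$$\tilde{B}_D(0, \delta) = \overline{B}_D(0, \epsilon), \tag{21}$$

where $\tilde{B}_D(0, \delta)$ and $\overline{B}_D(0, \epsilon)$ are the restrictions to $D$ of the $\tilde{g}$-ball of radius $\delta$ and of the Euclidean ball of radius $\epsilon$, respectively.

Proposition 3.

Equation (21) is equivalent to

$$\forall v \in D, \quad v^T \tilde{G} v = \frac{\delta^2}{\epsilon^2} v^T v. \tag{22}$$

We can rewrite Equation (22) in matrix form:

$$J^T G J = \frac{\delta^2}{\epsilon^2} \Pi_D, \tag{23}$$

where $\Pi_D$ denotes the orthogonal projection onto $D$.

In Section 3.2, we show how to exploit the properties of the FIM to derive a closed-form expression for a matrix $P$, such that $G = P^T P$. For now, we assume that we can easily access such a $P$ and we are looking for a condition on $P$ and $J$, which is equivalent to Equation (23).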

Proposition 4.

The following statements are equivalent:

(i) $J^T G J = \frac{\delta^2}{\epsilon^2} \Pi_D$,

(ii) $P J J^T P^T = \frac{\delta^2}{\epsilon^2} I_m$,

where $\Pi_D$ is the orthogonal projection onto $D$ and $I_m$ is the identity matrix of dimension $m \times m$.

Proposition 4 constrains the matrix $PJ$ to be a semi-orthogonal matrix (multiplied by a homothety matrix). A smooth map $f$ between Riemannian manifolds $(\mathcal{M}, g_{\mathcal{M}})$ and $(\mathcal{N}, g_{\mathcal{N}})$ is said to be (locally) isometric if the pullback metric (denoted $f^* g_{\mathcal{N}}$) coincides with $g_{\mathcal{M}}$, i.e., $f^* g_{\mathcal{N}} = g_{\mathcal{M}}$. Such a map $f$ locally preserves distances. In our case, $\tilde{g}$ is not a metric (since its kernel is non-trivial); thus, $f$ cannot be an isometry. However, Equation (22) ensures that $f$ locally preserves distances, up to the scaling factor $\delta / \epsilon$, along the directions in $D$. Hence, $f$ becomes a partial isometry, at least in the neighborhood of the training points.

Under Assumptions 1–3, Equation (ii) in Proposition 4 implies robustness as defined in Definition 8. In other words, Equation (ii) is a sufficient condition for robustness. However, there is no reason for a neural network to satisfy Equation (ii). This is why we define the following regularization term:

$$\alpha(x, \epsilon, f) = \left|\left|\left| P J J^T P^T - \frac{\delta^2}{\epsilon^2} I_m \right|\right|\right|, \tag{24}$$

where |||·||| is any matrix norm, such as the Frobenius norm or the spectral norm. We use the Frobenius norm in the experiments of Section 4. To compute $\alpha(x, \epsilon, f)$, we only need to compute the Jacobian matrix $J$, which can be efficiently achieved with backpropagation. Finally, the loss function is:

$$\mathcal{L}(y, x, \epsilon, f) = l(y, f(x)) + \eta \, \alpha(x, \epsilon, f), \tag{25}$$

where $l$ is the cross-entropy loss, and $\eta > 0$ is a hyperparameter controlling the strength of the regularization with respect to the cross-entropy loss. The regularization term is minimized during training, such that the model is pushed to satisfy the sufficient condition of robustness.
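A minimal sketch of such a regularizer is given below. It assumes the penalty is the Frobenius norm of $P J J^T P^T - (\delta^2/\epsilon^2) I_m$, which is the form suggested by the semi-orthogonality reading of Proposition 4, and it takes $P$, $J$, $\delta$, and $\epsilon$ as already computed inputs. The function names and the weight `eta` are placeholders for this illustration; in practice the Jacobian would come from automatic differentiation rather than from hand-written lists.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def transpose(A):
    return [list(col) for col in zip(*A)]

def isometry_penalty(P, J, delta, eps):
    """Frobenius norm of P J J^T P^T - (delta / eps)^2 * I_m (assumed form of the penalty)."""
    A = matmul(P, J)                  # m x d
    gram = matmul(A, transpose(A))    # m x m
    target = (delta / eps) ** 2
    m = len(gram)
    return math.sqrt(sum((gram[i][j] - (target if i == j else 0.0)) ** 2
                         for i in range(m) for j in range(m)))

def total_loss(cross_entropy, penalty, eta):
    """Cross-entropy plus the weighted penalty; eta is a hypothetical regularization weight."""
    return cross_entropy + eta * penalty
```

The penalty vanishes exactly when the rows of $PJ$ are orthogonal with common squared norm $\delta^2/\epsilon^2$, which is the scaled semi-orthogonality described in the text.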

3.2. Coordinate Change

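In this subsection, we show how to compute the matrix $P$ that was introduced in Proposition 4. To this end, we isometrically embed $\Delta^m$ into the Euclidean space $\mathbb{R}^c$ using the following inclusion map:

$$\mu : \Delta^m \to \mathbb{R}^c, \quad \theta \mapsto 2 \left( \sqrt{\theta^1}, \ldots, \sqrt{\theta^c} \right).$$

We can easily see that $\mu$ is an embedding. If $S^m(2)$ is the sphere of radius 2 centered at the origin in $\mathbb{R}^c$, then $\mu(\Delta^m)$ is the subset of $S^m(2)$ where all coordinates are strictly positive (using the standard coordinates of $\mathbb{R}^c$).

Proposition 5.

Let $g$ be the Fisher information metric on $\Delta^m$ (Definition 2), and $\overline{g}$ be the Euclidean metric on $\mathbb{R}^c$. Then $\mu$ is an isometric embedding of $(\Delta^m, g)$ into $(\mathbb{R}^c, \overline{g})$.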
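The isometry claim can be checked numerically at a given point. The sketch below assumes the square-root embedding $\theta \mapsto 2(\sqrt{\theta^1}, \ldots, \sqrt{\theta^c})$ onto the sphere of radius 2, with coordinates given by the first $m = c - 1$ probabilities, and verifies that the Gram matrix of its Jacobian equals the categorical Fisher information matrix. The embedding formula, the coordinate convention, and the helper names are assumptions made for this illustration.

```python
import math

def mu(theta):
    """Square-root embedding of the simplex into the sphere of radius 2 (assumed form)."""
    return [2.0 * math.sqrt(t) for t in theta]

def mu_jacobian(theta):
    """Jacobian (c x m) of mu w.r.t. (theta^1, ..., theta^m), with theta^c = 1 - sum of the others."""
    m = len(theta) - 1
    rows = [[(1.0 / math.sqrt(theta[i]) if i == j else 0.0) for j in range(m)]
            for i in range(m)]
    # The last component depends on every coordinate through theta^c.
    rows.append([-1.0 / math.sqrt(theta[m])] * m)
    return rows

def gram(A):
    """Return A^T A, the pullback of the Euclidean metric through a Jacobian A."""
    n = len(A[0])
    return [[sum(row[i] * row[j] for row in A) for j in range(n)] for i in range(n)]

def fisher_matrix(theta):
    m = len(theta) - 1
    return [[(1.0 / theta[i] if i == j else 0.0) + 1.0 / theta[m] for j in range(m)]
            for i in range(m)]
```

Comparing `gram(mu_jacobian(theta))` with `fisher_matrix(theta)` entry by entry gives a quick numerical confirmation at any interior point of the simplex.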

Now, we use the stereographic projection to embed into :

with .

Proposition 6.

In the coordinates τ, the FIM is:

Let be the Jacobian matrix of at . Then, we have:

Thus, we can choose:

Write and . For simplicity, write for . More explicitly, we have:

Proposition 7.

For :

3.3. The Fisher–Rao Distance

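In this subsection, we derive a simple upper-bound for $\delta$ (i.e., the Fisher–Rao distance between $f(x)$ and $\Delta^m \setminus A_x$). In Proposition 5, we show that the probability simplex $\Delta^m$ endowed with the FIM can be isometrically embedded into the m-sphere of radius 2. Thus, the angle $\beta$ between two distributions of coordinates $\theta_1$ and $\theta_2$ in $\Delta^m$, with $\theta_1^c = 1 - \sum_{i=1}^{m} \theta_1^i$ and $\theta_2^c = 1 - \sum_{i=1}^{m} \theta_2^i$, is:

$$\cos \beta = \sum_{k=1}^{c} \sqrt{\theta_1^k \theta_2^k}.$$

The Riemannian distance between these two points is the arc length on the sphere:

$$d(\theta_1, \theta_2) = 2 \beta = 2 \arccos \left( \sum_{k=1}^{c} \sqrt{\theta_1^k \theta_2^k} \right).$$

In the regularization term defined in Equation (24), we replace $\delta$ with the following upper bound:

$$\delta \leq d(f(x), O),$$

where $O = \frac{1}{c}(1, \ldots, 1)$ is the center of the simplex $\Delta^m$. Thus,

$$\delta \leq 2 \arccos \left( \frac{1}{\sqrt{c}} \sum_{k=1}^{c} \sqrt{f^k(x)} \right).$$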
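The bound above can be sketched in a few lines. This assumes that the Fisher–Rao distance between categorical distributions is $2\arccos\left(\sum_k \sqrt{p^k q^k}\right)$ and that the upper bound is the distance from the predicted distribution to the uniform distribution at the center of the simplex, which lies on the boundary of every class region. The function names are chosen for this example only.

```python
import math

def fisher_rao_distance(p, q):
    """Arc length on the sphere of radius 2 between the embedded distributions."""
    bc = sum(math.sqrt(pk * qk) for pk, qk in zip(p, q))
    return 2.0 * math.acos(min(1.0, bc))  # clamp against rounding above 1

def delta_upper_bound(theta):
    """Distance from theta to the center of the simplex, used as an upper bound on delta."""
    c = len(theta)
    center = [1.0 / c] * c
    return fisher_rao_distance(theta, center)
```

A confident prediction (mass concentrated on one class) yields a large bound, while a prediction near the uniform distribution yields a bound close to zero.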

4. Experiments

The regularization method introduced in Section 3 is evaluated on MNIST and CIFAR-10 datasets. Our method uses the loss function introduced in Equation (25).

4.1. Experiments on MNIST Dataset

4.1.1. Experimental Setup

For the MNIST dataset, we implement a LeNet model with two convolutional layers of 32 and 64 channels, respectively, followed by one hidden layer with 128 neurons. The code is available here: https://github.com/lshigarrier/geometric_robustness.git (accessed on 1 December 2022). We train three models: one regularized model, one baseline unregularized model, and one model trained with adversarial training. All three models are trained with the Adam optimizer ( and ) for 30 epochs, with a batch size of 64, and a learning rate of . For the regularization term, we use a budget of , which is chosen to contain the ball of radius 0.2. The adversarial training is conducted with 10 iterations of PGD with a budget using norm. We found that yields the best performance in terms of robustness–accuracy trade-off; this value is small because we did not attempt to normalize the regularization term.

The models are trained on the 60,000 images of MNIST’s training set and then tested on 10,000 images of the test set. The baseline model achieves an accuracy of 98.9% (9893/10,000), the regularized model achieves an accuracy of 94.0% (9403/10,000), and the adversarially trained model achieves an accuracy of 98.8% (9883/10,000). Although the current implementation of the regularized model is almost six times slower to train than the baseline model, it may be possible to accelerate the training using, for example, the technique proposed by Shafahi et al. [15], or using another method to approximate the spectral norm of . Even without relying on these acceleration techniques, the regularized model is still faster to train than the adversarially trained model.

4.1.2. Robustness to Adversarial Attacks

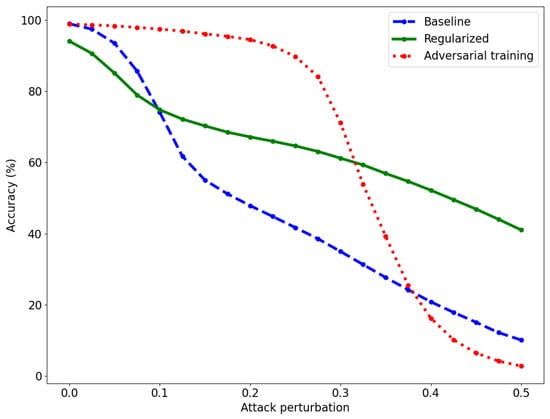

To measure the adversarial robustness of the models, we use the PGD attack with the norm, 40 iterations, and a step size of 0.01. The norm yields the hardest possible attack for our method, and corresponds more to the human notion of “indistinguishable images” than the norm. The attacks are performed on the test set, and only on images that are correctly classified by each model. The results are reported in Figure 3. The regularized model has a slightly lower accuracy than the baseline model for small perturbations, but the baseline model suffers a drop in accuracy above the attack level . Adversarial training achieves high accuracy for small- to medium-sized perturbations but the accuracy decreases sharply above . The regularized model remains robust even for large perturbations. The baseline model reaches 50% accuracy at and the adversarially trained model at , while the regularized model reaches 50% accuracy at .

Figure 3.

Accuracy of the baseline (dashed, blue), regularized (solid, green), and adversarially trained (dotted, red) models for various attack perturbations on the MNIST dataset. The perturbations are obtained with PGD using the $\ell_\infty$ norm.

Table 1 provides more results against AutoAttack (AA) [7], which was designed to offer a more reliable evaluation of adversarial robustness. For a fair comparison, and in addition to a baseline model (BASE), we compare the partial isometry defense (ISO) with several other computationally efficient defenses: distillation (DIST) [8], Jacobian regularization (JAC) [9], which also relies on the Jacobian matrix of the network, and Fisher information regularization (FIR) [10], which also leverages information geometry. We also consider an adversarially trained (AT) model using PGD. ISO is the best defense that does not rely on adversarial training. In future work, ISO may be combined with AT to further boost performance. Note that ISO and JAC are more robust against $\ell_2$ attacks since they were designed to defend the model against such attacks. On the other hand, AT is more robust against $\ell_\infty$ attacks, because the adversarial training was conducted with the $\ell_\infty$ norm.

Table 1.

Clean and robust accuracy on MNIST against AA, averaged over 10 runs. The number in parentheses is the attack strength.

4.2. Experiments on CIFAR-10 Dataset

We consider a DenseNet121 model fine-tuned on CIFAR-10 using pre-trained weights for ImageNet. The code is available here: https://github.com/lshigarrier/iso_defense.git (accessed on 26 January 2023). As for the MNIST experiments, we compare the partial isometry defense with distillation (DIST), Jacobian regularization (JAC), and Fisher information regularization (FIR). Here, adversarial training (AT) relies on the fast gradient sign method (FGSM) attack [16]. All defenses are compared against PGD for various attack strengths. The results are presented in Table 2. The defenses are evaluated in a “gray-box” setting where the adversary can access the architecture and the data but not the weights. More precisely, the adversarial examples are crafted from the test set of CIFAR-10 using another unregularized DenseNet121 model. AT is the most robust method, but ISO achieves a robust accuracy 30% higher than the next best analogous method (FIR).

Table 2.

Clean and robust accuracy on CIFAR-10 against PGD. The number in parentheses is the attack strength.

One of our goals is to provide alternatives to adversarial training (AT). Apart from high computational costs, AT suffers from several limitations: it only robustifies against the chosen attack at the chosen budget and it does not offer a robustness guarantee. For example, under Gaussian noise, AT accuracy decreases faster than baseline accuracy (i.e., no defense). Achieving high robustness accuracy against specific attacks on a specific benchmark is insufficient and misleading to measure the true robustness of the evaluated model. Our method offers a new point of view that can be extended to certified defense methods in future works.

5. Discussion and Related Work

In 2019, Zhao et al. [17] proposed to use the Fisher information metric in the setting of adversarial attacks. They used the eigenvector associated with the largest eigenvalue of the pullback of the FIM as an attack direction. Following their work, Shen et al. [10] suggested a defense mechanism by suppressing the largest eigenvalue of the FIM. They upper-bounded the largest eigenvalue by the trace of the FIM. As in our work, they added a regularization term to encourage the model to have smaller eigenvalues. Moreover, they showed that their approach is equivalent to label smoothing [18]. In our framework, their method consists of expanding the geodesic ball as much as possible. However, their approach does not guarantee that the constraint imposed on the model will not harm the accuracy more than necessary. In our framework, matrix (compared with ) informs the model on the precise restriction that must be imposed to achieve adversarial robustness in the ball of radius .

Cisse et al. [19] introduced another adversarial defense called Parseval networks. To achieve adversarial robustness, the authors aim to control the Lipschitz constant of each layer of the model to be close to unity. This is achieved by constraining the weight matrix of each layer to be a Parseval tight frame, which is another name for semi-orthogonal matrix. Since the Jacobian matrix of the entire model with respect to the input is almost the product of the weight matrices, the Parseval network defense is similar to our proposed defense, albeit with completely different rationales. This suggests that geometric reasoning could successfully supplement the line of work on Lipschitz constants of neural networks, such as in [20].

Following another line of work, Hoffman et al. [9] advanced a Jacobian regularization to improve adversarial robustness. Their regularization consists of using the Frobenius norm of the input–output Jacobian matrix. To avoid computing the true Frobenius norm, they relied on random projections, which are shown to be both efficient and accurate. This method is similar to the method of Shen et al. [10] in the sense that it will also increase the radius of the geodesic ball. However, the Jacobian regularization does not take into account the geometry of the output space (i.e., the Fisher information metric) and assumes that the probability simplex is Euclidean.

Although this study focuses on $\ell_2$ norm robustness, it must be pointed out that there are other “distinguishability” measures that can be used to study adversarial robustness, including all other $\ell_p$ norms. In particular, the $\ell_\infty$ norm is often considered to be the most natural choice when working with images. However, the $\ell_\infty$ norm is not induced by any inner product and, hence, there is no Riemannian metric that induces the $\ell_\infty$ norm. Nevertheless, given an $\ell_\infty$ budget $\epsilon_\infty$, we can choose an $\ell_2$ budget $\epsilon_2 = \sqrt{d} \, \epsilon_\infty$, such that any attack in the $\ell_\infty$ budget will also respect the $\ell_2$ budget. When working on images, other dissimilarity measures are rotations, deformations, and color changes of the original image. Contrary to the $\ell_2$ or $\ell_\infty$ norms, these measures do not rely on a pixel-based coordinate system. However, it is possible to define unrestricted attacks based on these spatial dissimilarities, for example, in [21].
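The relation between the two budgets follows from the inequality $\|v\|_2 \leq \sqrt{d} \, \|v\|_\infty$ in dimension $d$. The short sketch below computes the smallest $\ell_2$ radius containing a given $\ell_\infty$ ball; the function names are placeholders. As a hedged reading of Section 4.1.1, for MNIST images ($d = 28 \times 28 = 784$) an $\ell_\infty$ radius of 0.2 would correspond to an $\ell_2$ budget of approximately 5.6.

```python
import math

def l2_budget_from_linf(dim, eps_inf):
    """Smallest l2 radius whose ball contains the l-infinity ball of radius eps_inf."""
    return math.sqrt(dim) * eps_inf

def linf_norm(v):
    return max(abs(a) for a in v)

def l2_norm(v):
    return math.sqrt(sum(a * a for a in v))
```

The converse does not hold: an $\ell_2$ ball of this radius also contains perturbations whose largest coordinate exceeds the $\ell_\infty$ budget.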

In this work, we derive the partial isometry regularization for a classification task. The method can be extended to regression tasks by considering the family of multivariate normal distributions as the output space. On the probability simplex $\Delta^m$, the FIM is a metric with constant positive curvature, while it has constant negative curvature on the manifold of multivariate normal distributions [22].

Finally, the precise quantification of the robustness condition presented in Equation (12) and Proposition 4 paves the way for the development of a certified defense [23] in this framework. By strongly enforcing Proposition 4 on a chosen proportion of the training set, it may be possible to maximize the accuracy under the constraint of a chosen robustness level, which offers another solution to the robustness–accuracy trade-off [24,25]. Certifiable defenses are a necessary step for the deployment of deep learning models in critical domains and missions, such as civil aviation, security, defense, and healthcare, where a certification may be required to ensure a sufficient level of trustworthiness.

6. Conclusions and Future Work

In this paper, we introduce an information geometric approach to the problem of adversarial robustness in machine learning models. The proposed defense consists of enforcing a partial isometry between the input space endowed with the Euclidean metric and the probability simplex endowed with the Fisher information metric. We subsequently derive a regularization term to achieve robustness during training. The proposed strategy is tested on the MNIST and CIFAR-10 datasets, and shows a considerable increase in robustness without harming the accuracy. Future work will evaluate the method on other benchmarks and real-world datasets. Several attack methods will also be considered in addition to PGD and AutoAttack. Although this work focuses on $\ell_2$ norm robustness, future work will consider other “distinguishability” measures.

Our work extends a recent, promising but understudied framework for adversarial robustness based on information geometric tools. The FIM has already been harnessed to develop attacks [17] and defenses [10,26] but a precise robustness analysis is yet to be proposed. Our work is a step toward the development of such an analysis, which might yield certified guarantees relying on these geometric tools. The study of adversarial robustness, which is non-local by definition and contrary to accuracy, should benefit greatly from a geometrical vision. However, the current literature on adversarial robustness is mainly concerned with the FIM and its spectrum (which are very local objects) without unfolding the full arsenal developed in information geometry. In our work, we demonstrate the usefulness of such an approach by developing a preliminary robustification method. Model robustification is a hard, unsolved yet vital problem to ensure the trustworthiness of deep learning tools in safety-critical applications. Our framework could be extended and applied to existing certification strategies, such as Lipschitz-based [27] or randomized smoothing [23], where statistical models naturally appear.

Author Contributions

Conceptualization, L.S.-G., N.C.B. and D.D.; methodology, L.S.-G. and N.C.B.; software, L.S.-G.; validation, L.S.-G.; formal analysis, L.S.-G., N.C.B. and D.D.; investigation, L.S.-G.; writing—original draft preparation, L.S.-G.; writing—review and editing, L.S.-G., N.C.B. and D.D.; supervision, N.C.B. and D.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. These data can be found here: http://yann.lecun.com/exdb/mnist/ (accessed on 1 December 2022) and https://www.cs.toronto.edu/~kriz/cifar.html (accessed on 26 January 2023).

Acknowledgments

We thank Roman Shterenberg for useful discussions.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Proofs

Proof of Proposition 2

Proof of Fact 1.

We prove the third equality (the second equality is a well-known fact of linear algebra).

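Let $v \in \ker J$. Then, $Jv = 0$; thus, $J^{\top} G J v = 0$. Hence, $\ker J \subseteq \ker(J^{\top} G J)$.

Let $v \in \ker(J^{\top} G J)$. Since $G$ is symmetric positive-definite, the function $N : y \mapsto \sqrt{y^{\top} G y}$ is a norm. We have $N(Jv)^2 = v^{\top} J^{\top} G J v = 0$. The positive-definiteness of the norm $N$ implies $Jv = 0$. Thus, $v \in \ker J$. Hence, $\ker(J^{\top} G J) \subseteq \ker J$. □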
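The kernel argument above can be checked numerically. The following is a minimal sketch, not part of the original proof: the matrix `J` (standing in for a rank-deficient Jacobian), the symmetric positive-definite matrix `G`, the dimensions, and the helper `null_space_basis` are all assumptions chosen for illustration.

```python
import numpy as np

def null_space_basis(M, tol=1e-10):
    """Orthonormal basis of ker(M), read off from the SVD of M."""
    _, s, vt = np.linalg.svd(M)  # full SVD: vt has one row per column of M
    rank = int(np.sum(s > tol * max(s.max(), 1.0)))
    return vt[rank:].T  # columns span the kernel

rng = np.random.default_rng(0)
m, n = 4, 6

# Hypothetical rank-2 "Jacobian": product of two thin random factors.
J = rng.standard_normal((m, 2)) @ rng.standard_normal((2, n))

# Hypothetical symmetric positive-definite matrix G.
B = rng.standard_normal((m, m))
G = B @ B.T + m * np.eye(m)

pullback = J.T @ G @ J

K_J = null_space_basis(J)         # basis of ker(J)
K_P = null_space_basis(pullback)  # basis of ker(J^T G J)

# Two subspaces coincide iff their orthogonal projectors coincide.
same_dim = K_J.shape[1] == K_P.shape[1]
proj_gap = np.linalg.norm(K_J @ K_J.T - K_P @ K_P.T)
print("same dimension:", same_dim, "| projector gap:", proj_gap)
```

Comparing orthogonal projectors rather than basis vectors avoids any dependence on the particular basis returned by the SVD.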

Proof of Proposition 3.

Proof of Proposition 4.

Let us first introduce the polar decomposition.

Let A be a matrix.

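Define the absolute value of $A$ by $|A| = \sqrt{A^{\top} A}$. Note that the square root of $A^{\top} A$ is well-defined because it is a positive-semidefinite matrix.

Define the linear map $u : \operatorname{rg}(|A|) \to \operatorname{rg}(A)$ by $u(|A| x) = Ax$ for any vector $x$ in the domain of $A$.

Using the fact that $|A|$ is symmetric, we have that $\| |A| x \|^2 = x^{\top} |A|^2 x = x^{\top} A^{\top} A x = \| Ax \|^2$; thus, $u$ is an isometry (we can arbitrarily extend $u$ on the entire domain of $A$, e.g., by setting $u = 0$ on $\operatorname{rg}(|A|)^{\perp}$).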

Let U be the matrix associated to u in the canonical basis.
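The construction above can be illustrated numerically. The sketch below builds the polar factors from a singular value decomposition, which is one standard way to compute them and not necessarily the route taken in the proof. The function name `polar_decomposition`, the test matrix, and its dimensions are assumptions for illustration.

```python
import numpy as np

def polar_decomposition(A, tol=1e-10):
    """Return (U, absA) with A = U @ absA, where absA = sqrt(A^T A)
    and U is an isometry on rg(absA), extended by zero on its complement."""
    W, s, Vt = np.linalg.svd(A, full_matrices=False)
    r = int(np.sum(s > tol * max(s.max(), 1.0)))  # numerical rank
    W, s, Vt = W[:, :r], s[:r], Vt[:r]
    absA = Vt.T @ np.diag(s) @ Vt  # symmetric PSD square root of A^T A
    U = W @ Vt                     # maps |A| x to A x
    return U, absA

rng = np.random.default_rng(1)
A = rng.standard_normal((3, 5))    # hypothetical full-row-rank matrix
U, absA = polar_decomposition(A)

P = U.T @ U                        # orthogonal projector onto rg(|A|)
x = rng.standard_normal(5)

print("A = U|A|:", np.allclose(U @ absA, A))
print("|A|^2 = A^T A:", np.allclose(absA @ absA, A.T @ A))
print("norms agree:", np.isclose(np.linalg.norm(absA @ x), np.linalg.norm(A @ x)))
print("U^T U is a projector:", np.allclose(P @ P, P) and np.allclose(P, P.T))
```

In this example $A$ has full row rank, so `U @ U.T` is the identity on the output space while `U.T @ U` is only a projector on the input space. This is the asymmetry that the partial isometry argument relies on.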

We now prove the main result.

Let . Using the polar decomposition, we have

where U is an isometry from to (using our assumption that ). Transposing this relation, we obtain

Hence, by multiplying both relations, we have

Assume that holds, i.e., . Then,

Since U is an isometry from D to , then is the projection onto D, denoted as . Thus, we have , which is .

Now, assume that holds, i.e., , where is the projection onto D. We have

Since , then . Since U is an isometry from D to , then . Thus, which is . □

Proof of Proposition 5.

We need to show that . Using the coordinates on (Definition 1) and the standard coordinates on , and writing we have

For and we have

and for :

with . Thus,

which is the FIM, as defined in Definition 2. □
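As a sanity check of this kind of computation, the sketch below verifies numerically that pulling back the Euclidean metric through the square-root embedding $\theta \mapsto 2\sqrt{\theta}$ gives the Fisher information matrix of a categorical distribution. The conventions are assumptions and may differ from Definitions 1 and 2: the simplex is parameterized by $\theta_1, \dots, \theta_{c-1}$ with $\theta_c = 1 - \sum_i \theta_i$, and the FIM in these coordinates is $g_{ij} = \delta_{ij}/\theta_i + 1/\theta_c$. All function names are hypothetical.

```python
import numpy as np

def fisher_categorical(theta):
    """Closed-form FIM of a categorical law in coordinates theta_1..theta_{c-1}."""
    theta_c = 1.0 - theta.sum()
    return np.diag(1.0 / theta) + 1.0 / theta_c  # scalar is added to every entry

def fisher_from_scores(theta):
    """FIM computed directly as E[score score^T] over the c classes."""
    probs = np.append(theta, 1.0 - theta.sum())
    # Row k holds the gradient of log p_k with respect to theta.
    scores = np.vstack([np.diag(1.0 / theta),
                        -np.ones((1, theta.size)) / probs[-1]])
    return scores.T @ (probs[:, None] * scores)

def sqrt_embedding_jacobian(theta):
    """Jacobian of theta -> 2*sqrt(p(theta)), a map into R^c."""
    theta_c = 1.0 - theta.sum()
    top = np.diag(1.0 / np.sqrt(theta))
    bottom = -np.ones((1, theta.size)) / np.sqrt(theta_c)
    return np.vstack([top, bottom])

theta = np.array([0.2, 0.3, 0.1])  # hypothetical point, c = 4 classes
D = sqrt_embedding_jacobian(theta)
pullback = D.T @ D                 # Euclidean metric pulled back to the simplex
fim = fisher_categorical(theta)

print("pullback equals FIM:", np.allclose(pullback, fim))
print("score form equals FIM:", np.allclose(fisher_from_scores(theta), fim))
```

The image of the embedding lies on the sphere of radius 2, since the squared coordinates sum to $4 \sum_k p_k = 4$. This is why the Fisher metric on the simplex can be read as a round-sphere metric.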

Proof of Proposition 6.

For , the inverse transformation of is

and

Moreover, according to Proposition 5, the FIM in the coordinates is the metric induced on by the identity matrix (i.e., the Euclidean metric) of . Hence, we have

For and , we have

and for :

Thus,

□

References

1. Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.J.; Fergus, R. Intriguing properties of neural networks. In Proceedings of the International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014.

2. Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

3. Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards Deep Learning Models Resistant to Adversarial Attacks. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

4. Carlini, N.; Wagner, D. Towards Evaluating the Robustness of Neural Networks. In Proceedings of the IEEE Symposium on Security and Privacy, San Jose, CA, USA, 22–24 May 2017; pp. 39–57.

5. Gilmer, J.; Metz, L.; Faghri, F.; Schoenholz, S.S.; Raghu, M.; Wattenberg, M.; Goodfellow, I.J. Adversarial Spheres. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

6. Li, B.; Qi, P.; Liu, B.; Di, S.; Liu, J.; Pei, J.; Yi, J.; Zhou, B. Trustworthy AI: From Principles to Practices. ACM Comput. Surv. 2022, 55, 1–46.

7. Croce, F.; Hein, M. Reliable Evaluation of Adversarial Robustness with an Ensemble of Diverse Parameter-Free Attacks. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020.

8. Papernot, N.; McDaniel, P.D.; Wu, X.; Jha, S.; Swami, A. Distillation as a Defense to Adversarial Perturbations against Deep Neural Networks. In Proceedings of the IEEE Symposium on Security and Privacy, San Jose, CA, USA, 22–26 May 2016.

9. Hoffman, J.; Roberts, D.A.; Yaida, S. Robust Learning with Jacobian Regularization. arXiv 2019, arXiv:1908.02729.

10. Shen, C.; Peng, Y.; Zhang, G.; Fan, J. Defending Against Adversarial Attacks by Suppressing the Largest Eigenvalue of Fisher Information Matrix. arXiv 2019, arXiv:1909.06137.

11. Amari, S.i. Differential-Geometrical Methods in Statistics; Lecture Notes in Statistics; Springer: New York, NY, USA, 1985; Volume 28.

12. Calin, O.; Udrişte, C. Geometric Modeling in Probability and Statistics; Springer International Publishing: Berlin/Heidelberg, Germany, 2014.

13. Čencov, N. Algebraic foundation of mathematical statistics. Ser. Stat. 1978, 9, 267–276.

14. Amari, S.I.; Nagaoka, H. Methods of Information Geometry; American Mathematical Society: Providence, RI, USA, 2000.

15. Shafahi, A.; Najibi, M.; Ghiasi, M.A.; Xu, Z.; Dickerson, J.; Studer, C.; Davis, L.S.; Taylor, G.; Goldstein, T. Adversarial training for free! In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

16. Wong, E.; Rice, L.; Kolter, J.Z. Fast is better than free: Revisiting adversarial training. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

17. Zhao, C.; Fletcher, P.T.; Yu, M.; Peng, Y.; Zhang, G.; Shen, C. The Adversarial Attack and Detection under the Fisher Information Metric. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019.

18. Müller, R.; Kornblith, S.; Hinton, G.E. When does label smoothing help? In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

19. Cissé, M.; Bojanowski, P.; Grave, E.; Dauphin, Y.N.; Usunier, N. Parseval Networks: Improving Robustness to Adversarial Examples. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 854–863.

20. Béthune, L.; Boissin, T.; Serrurier, M.; Mamalet, F.; Friedrich, C.; González-Sanz, A. Pay Attention to Your Loss: Understanding Misconceptions about 1-Lipschitz Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022.

21. Xiao, C.; Zhu, J.Y.; Li, B.; He, W.; Liu, M.; Song, D. Spatially Transformed Adversarial Examples. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

22. Skovgaard, L.T. A Riemannian Geometry of the Multivariate Normal Model. Scand. J. Stat. 1984, 11, 211–223.

23. Cohen, J.; Rosenfeld, E.; Kolter, Z. Certified Adversarial Robustness via Randomized Smoothing. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 1310–1320.

24. Zhang, H.; Yu, Y.; Jiao, J.; Xing, E.; Ghaoui, L.E.; Jordan, M. Theoretically Principled Trade-off between Robustness and Accuracy. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 7472–7482.

25. Tsipras, D.; Santurkar, S.; Engstrom, L.; Turner, A.; Madry, A. Robustness May Be at Odds with Accuracy. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

26. Picot, M.; Messina, F.; Boudiaf, M.; Labeau, F.; Ben Ayed, I.; Piantanida, P. Adversarial Robustness via Fisher-Rao Regularization. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 2698–2710.

27. Leino, K.; Wang, Z.; Fredrikson, M. Globally-Robust Neural Networks. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).