1. Introduction

Explainable Artificial Intelligence (XAI) is generally described as a collection of methods allowing humans to understand how an algorithm is able to learn from a database, reproduce and generalize. It is currently an active, multidisciplinary area of research [

1,

2] that relies on several theoretical or heuristic tools to identify salient features and indicators explaining the surprising performance of machine learning algorithms, especially deep neural networks. From a statistical point of view, a neural network is nothing but a parameterized regression or classification model that can be described as a random variable whose probability distribution is known conditionally on external inputs and internal parameters [

3]. Unfortunately, even if this approach seems the most natural one, it is not well suited to XAI, as it gives no insight into the learning and inference process. Furthermore, there seems to be a contradiction between standard statistical practice, which favors models with the smallest possible number of free parameters, and the performance of deep learning, which relies on thousands to millions of weights. On the other hand, attempts have been made to design numerical [

4] or visual [

5] indicators aiming at producing a summary of salient features.

XAI is also related to acceptable AI, that is, proving, or at least ensuring with high probability, that the model will produce the intended result and is robust to perturbations, whether inherent to the data acquisition process or intentional. In both cases, it is mandatory to be able to perform a sensitivity analysis on a trained network. In [

6], an approach based on geometry was taken and the need for a metric on the set of admissible perturbations was emphasized. The problem of so-called adversarial attacks is treated in several papers [

7,

8,

9] where mitigating procedures are proposed. Adversarial attacks are a major concern for acceptable AI, especially in critical applications like autonomous vehicles or air traffic control. Until now, most of the research effort has been dedicated to the design of such attacks, with the idea of incorporating the fooling inputs into the learning database in order to increase robustness. The reader can refer, for example, to Fast Gradient Sign methods [

10], robust optimization methods [

11] or DeepFool [

12,

13]. Unfortunately, while these approaches are relevant to acceptable AI, they do not provide XAI with usable tools. Furthermore, they rely on inputs in ℝⁿ, or more generally in a finite-dimensional Euclidean space, which is not always a valid hypothesis.

A further question is why learning from a high-dimensional data space is possible at all; one possible answer is that the data effectively lie on a low-dimensional manifold [

14,

15]. As a consequence, most of the directions in the input space will have a very small impact on the output, while only a few of them, namely those that are tangent to the data manifold, will have a strong influence [

16]. The manifold hypothesis also justifies the introduction of the encoder–decoder architecture [

17] that is of wide use in the field of natural language processing [

18] or time-series prediction [

19]. The true underlying data manifold, if it exists, is most of the time not accessible, although some of its characteristics may be known and incorporated in the model. In particular, it may be subject to some action by a Lie group or possess extra geometric properties, like the existence of a symplectic structure. Specific networks have been designed to cope with such situations [

20,

21].

In a general setting, little is known about the data manifold and its geometric features, like metric, Levi-Civita connection and curvature. However, Riemannian properties are the most important ones as they dictate the behavior of the network under moves in the input space. Recalling the statistical approach invoked before, it makes sense to model the output of the network as a probability density parameterized by inputs and weights. Within this frame, there exists a well-defined Riemannian metric on the output space known as the Fisher Information Metric (FIM), originating from a second-order expansion of the Kullback–Leibler divergence. The importance of this metric has already been pointed out in several past works [

22,

23]. The FIM can be pulled back to the input space, yielding, in most cases, a degenerate metric that can nevertheless be exploited to better understand the effect of perturbations [

16], or to parameter space to improve gradient-based learning algorithms [

24]. In this last case, however, things tend to be less natural than for the input space.
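As an illustration of the pullback of the FIM to the input space, the following minimal sketch considers a hypothetical two-layer softmax classifier (the names `logits`, `jacobian` and `pullback_fim`, and the weight matrices `A`, `b`, `C`, are assumptions introduced here, not objects from the references). It uses the standard fact that the Fisher information of a categorical distribution in logit coordinates is diag(p) − ppᵀ, pulls it back through the Jacobian of the logits, and compares the resulting quadratic form with the Kullback–Leibler divergence for a small perturbation.

```python
import numpy as np

def softmax(z):
    z = z - z.max()                      # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def logits(x, A, b, C):
    # Hypothetical two-layer network R^n -> R^m with a smooth activation
    return C @ np.tanh(A @ x + b)

def jacobian(x, A, b, C):
    # Derivative of the logits with respect to the input: C diag(1 - tanh^2) A
    s = np.tanh(A @ x + b)
    return C @ ((1.0 - s**2)[:, None] * A)

def pullback_fim(x, A, b, C):
    p = softmax(logits(x, A, b, C))
    F = np.diag(p) - np.outer(p, p)      # FIM of a categorical law in logit coordinates
    J = jacobian(x, A, b, C)
    return J.T @ F @ J                   # n x n pulled-back (degenerate) metric

def kl(p, q):
    return float(np.sum(p * np.log(p / q)))

rng = np.random.default_rng(0)
n, k, m = 6, 8, 3
A = rng.normal(size=(k, n))
b = rng.normal(size=k)
C = rng.normal(size=(m, k))
x = rng.normal(size=n)

G = pullback_fim(x, A, b, C)
v = 1e-4 * rng.normal(size=n)            # small input perturbation

p0 = softmax(logits(x, A, b, C))
p1 = softmax(logits(x + v, A, b, C))
kl_exact = kl(p0, p1)
kl_quad = 0.5 * v @ G @ v                # second-order approximation of the KL divergence
rank_G = np.linalg.matrix_rank(G, tol=1e-10)

print("KL divergence       :", kl_exact)
print("quadratic FIM form  :", kl_quad)
print("rank of pulled-back metric:", rank_G, "for input dimension", n)
```

In this sketch the rank of the pulled-back metric cannot exceed m − 1, which is smaller than the input dimension, so the metric is degenerate on the input space, in line with the discussion above.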

In this work, a unifying framework for studying the geometry of deep networks is introduced, allowing a description of encoder–decoder blocks from the FIM perspective. The pullback bundle is a key ingredient in our approach.

In the sequel, features and outputs are random variables, thus characterized by their distribution functions, or their densities in the absolutely continuous case. Within this frame, a neural network is a random variable:

where

is an underlying probability space and

are, respectively, the input and weight measure spaces. Finally,

Y is assumed to take its values in the output measure space

Most of the time, the network has a layered structure so that the expression of

can be factored out as:

In many practical implementations, the weights

W are deterministic, which is equivalent to saying that their probability distribution is a Dirac distribution. In this case, a neural network can be described as a parameterized family of random variables

. A special case occurs when a single decoder is considered [

25], that is, a measurable function:

where

f is a smooth mapping, assumed in [

25] to be an immersion; that is, for any

x,

has maximal rank

Conversely, one may consider an encoder

and assume

f to be a submersion. In this paper, the geometry of the complete encoder–decoder network

will be considered, as well as the case

The article is structured as follows: In

Section 2, the Fisher information metric is introduced and some formulas, valid when the parameter space is a smooth manifold, are given. In

Section 3, the pullback bundle is defined and applied to the encoder–decoder case. Finally, a conclusion is drawn in

Section 5. The convention of summation on repeated indices applies in this manuscript.

3. Pullback Bundles

In this section, a neural network with weights W is a mapping , where (resp. ) is the input (resp. output) manifold of dimension n (resp. m). Both manifolds and the mapping are assumed to be smooth. This last assumption is valid when the activation functions are smooth, which is the case for sigmoid functions but not for the commonly used ReLU function. However, smooth approximations to the ReLU are easy to construct to an arbitrary degree of accuracy, so the framework introduced below can still be applied.

As mentioned in the introduction, the output manifold is further assumed to be a statistical model with Fisher metric g. This setting is that of a neural network whose output is a random variable with conditional density in a parameterized family.

When the weights are kept fixed, the only free parameters are the inputs and the network is fully described by the mapping:

For the ease of notation, the mapping

will be abbreviated by

. When the activation functions in the network are smooth,

is a smooth mapping and its derivative will be denoted by

. With this convention, the pullback metric of

g by

, denoted

, is defined by:

Unless the network

is a decoder,

is generally degenerate and does not provide

with a Riemannian structure, so an ambient metric

h on

is assumed to exist. The triple

is called the data manifold of the network. The kernel of

, denoted

, is the distribution in

consisting of vectors

X such that

is the zero mapping. At a point

, the vectors in

belonging to

give directions in which the output of the network will not change up to order

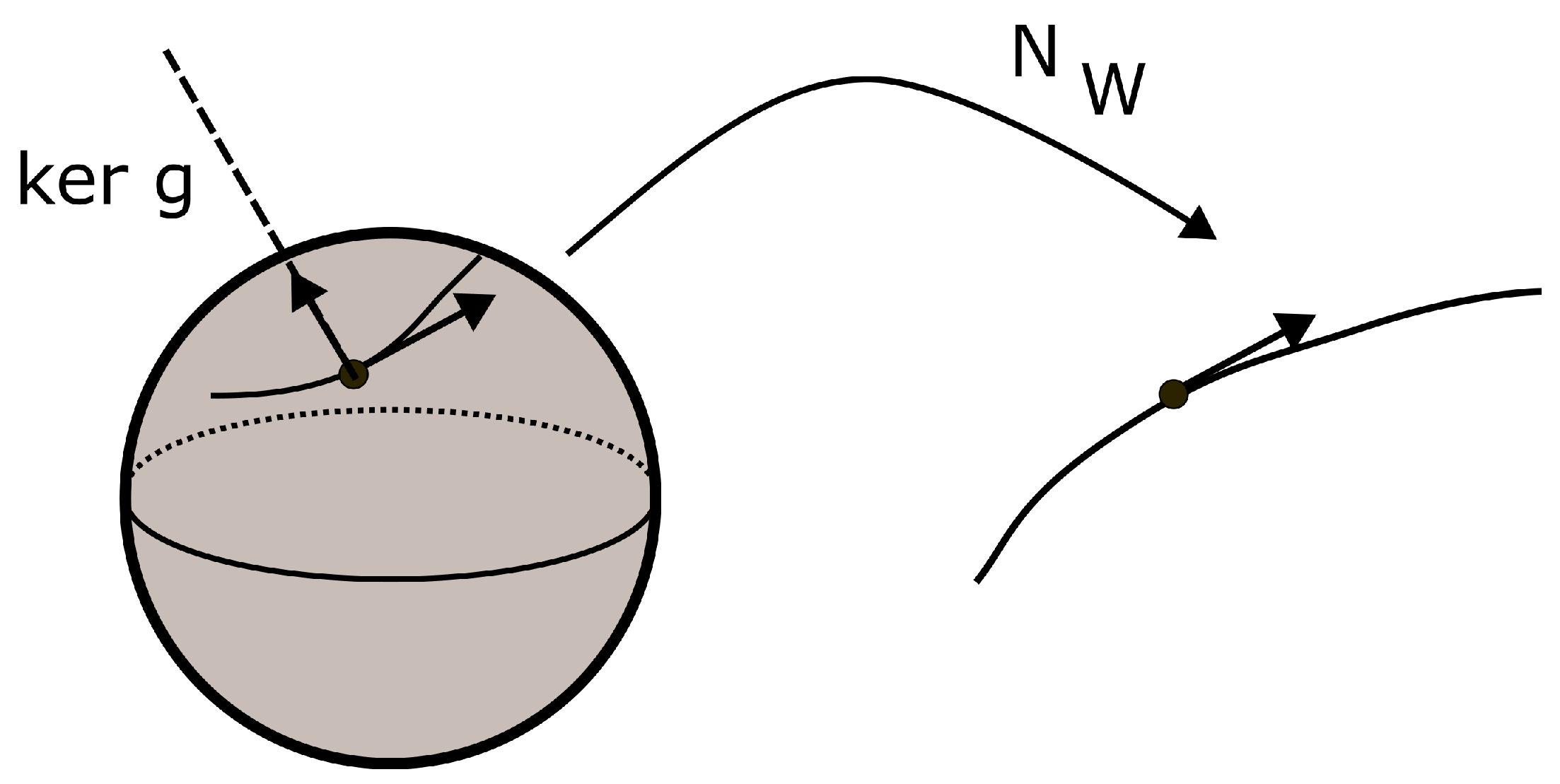

Figure 1 represents the case of a one-dimensional output space and a 2-sphere input space. Since the dimension of the output is less than that of the input, some moves in the data manifold will not induce any change at the output.
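The situation of a 2-sphere input and a one-dimensional output can be sketched numerically. The scalar map `f` below is a hypothetical stand-in for a network output, chosen only for illustration. Its differential at a point p of the sphere annihilates the tangent direction p × w, so a move along that direction changes the output only at second order in the step size, whereas a move along the tangential part of w changes it at first order.

```python
import numpy as np

def f(x, w):
    # Hypothetical scalar network output, evaluated on points of the unit sphere
    return np.tanh(w @ x)

def sphere_exp(p, v):
    # Riemannian exponential of the round unit sphere at p, for v tangent at p
    t = np.linalg.norm(v)
    if t == 0.0:
        return p
    return np.cos(t) * p + np.sin(t) * v / t

def kernel_direction(p, w):
    # The gradient of f is parallel to w, so the kernel of df_p inside T_p S^2
    # is spanned by the vector orthogonal to both p and w.
    k = np.cross(p, w)
    return k / np.linalg.norm(k)

p = np.array([0.6, 0.0, 0.8])            # point on the unit sphere
w = np.array([1.0, -2.0, 0.5])           # illustrative weight vector

k = kernel_direction(p, w)               # direction with no first-order effect
g = w - (w @ p) * p                      # tangential part of w (transverse direction)
g /= np.linalg.norm(g)

for t in (1e-1, 1e-2, 1e-3):
    d_ker = abs(f(sphere_exp(p, t * k), w) - f(p, w))
    d_trans = abs(f(sphere_exp(p, t * g), w) - f(p, w))
    print(f"t={t:.0e}  kernel move: {d_ker:.2e}  transverse move: {d_trans:.2e}")
```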

Unless the dimension of

is constant, this distribution does not define a foliation. However, this is true locally in the neighborhood of points in

such that

has maximal rank. Finally, if

is an

r-vector bundle on

, then its pullback by

will be denoted in short by

We recall that if

E has local charts:

and

has local charts

, then

has local charts:

The pullback bundle enjoys a universal property that is in fact the main reason for introducing it in our context.

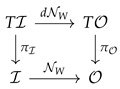

Proposition 6. Let (resp. ) be a vector bundle on (resp. ). For any bundle morphism , there exists a unique bundle morphism such that the following diagram commutes:

where and

This proposition is a classical one and its proof can be found in many textbooks. The one we give below is very simple, using only local charts.

The above construction is explicit and thus gives a practical means of computation. For a network with fixed weights, e.g., a trained one, the derivative

can be efficiently computed by back propagation, so the bundle morphism:

has a practical meaning.

Introducing the pullback bundle gives the diagram:

The bundle mapping

to

is then the association:

The pullback bundle is thus a means of representing the action of the network on tangent vectors to the data manifold. As an example, the construction of adversarial attacks given in [

32,

33] can be revisited in this context, extending it to the general setting of networks with manifold inputs.

The general problem of building an adversarial attack is, informally, to find, for an input point in the data manifold, a direction in which a perturbation will have the most important effect on the output, hopefully fooling the network. Following [

33], we define:

Definition 3. Let h be a Riemannian metric on the input space. An optimal adversarial attack at with budget is a solution to:

Using (

38), this optimization program can be viewed as a local approximation to the one based on the Kullback–Leibler divergence:

Definition 4. A Kullback–Leibler optimal adversarial attack at with budget is a solution to:

The metric

g on

can be pulled back to

by letting:

Due to the special form of the criterion, the optimal point is on the boundary, so that finally, the optimal adversarial attack problem may be formulated as:

Definition 5. An optimal adversarial attack at with budget is a solution to:

where

stands for the unit sphere bundle with respect to the metric

Please note that due to bilinearity, the problem can be solved for

, after which the optimal vector is scaled by the original

From standard linear algebra, if

is the matrix of the bilinear form

at

x and

the one of

h, then one can find unitary matrices

and diagonal matrices

such that:

Any vector

v in

can be written as:

So that, finally, the original problem can be rewritten as:

which is solved readily by taking

w to be the unit eigenvector of

M associated with the largest eigenvalue. This is the solution found in [

33] when

In many cases, as the above example indicates, it is more convenient to work solely in the input space, thus justifying the introduction of the pullback bundle. From now on, we adopt this point of view.
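The reduction of the optimal attack to an eigenvector computation can be sketched numerically. The code below is an illustration under stated assumptions: `G` stands for the matrix of the pulled-back metric at the input point, `H` for the matrix of the ambient metric h, and `eps` for the budget; the function name `optimal_attack` is hypothetical. Instead of the unitary and diagonal factorization used in the text, the sketch whitens h with a Cholesky factor, which yields an equivalent symmetric eigenproblem.

```python
import numpy as np

def optimal_attack(G, H, eps):
    """Maximize v^T G v subject to v^T H v = eps^2.

    G: symmetric positive semi-definite matrix (pulled-back metric, assumed given).
    H: symmetric positive definite matrix (ambient metric h, assumed given).
    """
    L = np.linalg.cholesky(H)            # H = L L^T
    L_inv = np.linalg.inv(L)             # fine for small matrices; use triangular solves at scale
    M = L_inv @ G @ L_inv.T              # symmetric matrix of the whitened problem
    evals, evecs = np.linalg.eigh(M)     # eigenvalues in ascending order
    w = evecs[:, -1]                     # unit eigenvector for the largest eigenvalue
    v = eps * (L_inv.T @ w)              # back to the original coordinates
    return v, eps**2 * evals[-1]

rng = np.random.default_rng(1)
n, m = 5, 3
J = rng.normal(size=(m, n))
G = J.T @ J                              # stand-in for a degenerate pulled-back metric
B = rng.normal(size=(n, n))
H = B.T @ B + np.eye(n)                  # stand-in for a positive definite ambient metric
eps = 0.1

v, value = optimal_attack(G, H, eps)
print("budget check  v^T H v =", v @ H @ v, " (eps^2 =", eps**2, ")")
print("attained value v^T G v =", v @ G @ v)
```

When `H` is the identity, the whitening step is trivial and the attack direction is simply the leading eigenvector of `G`.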

Remark 5. Please note that a section in is generally not related to a section of the form (63) in either or due to the fact that may not be a monomorphism or an epimorphism. The next proposition gives a condition for the existence of global sections in associated with global sections in

Proposition 7. In the case of a decoding network, when is an embedding, there is a natural embedding of bundles such that the image of is . The pullback bundle then splits as:

where F has rank . Note that in this case, a section of the pullback bundle will not define a global section in

since some points of the output space may have no preimage by

However, by the extension lemma [

34] (Lemma 5.34, p. 115), local (global if

is closed) smooth vector fields on

exist, extending it.

Proof. If is an embedding, is a submanifold of and in an adapted chart, a vector field in can be written as , where the are the first n coordinate vector fields. It thus pulls back to a section of the same form in . Now, since is injective, is the image of a unique section in , hence the claim. □

Proposition 8. If has constant rank r, then there exists a splitting , and a bundle isomorphism that coincides with on the fibers.

Proof. By Theorem 10.34, [

34] (p. 266),

is a subbundle of

and

a subbundle of

. In local charts, the morphism

gives rise to the decomposition:

with

an isomorphism when restricted to

Passing to local sections yields the result. □

An important case is that of submersions, corresponding to encoders in machine learning. In this case, and establishes a bundle isomorphism between F and . The pullback of the Fisher–Rao metric g on gives rise to a metric on , but only to a degenerate metric on that can nevertheless be quite well understood, as indicated below.

Definition 6. On the input bundle , the symmetric tensor is defined using the splitting , by:

Proposition 9. There exists a symmetric -tensor on , denoted by Θ, such that, for any tangent vectors :

Proof. From standard linear algebra, there exists an adjoint

to

, defined by:

with, in local coordinates:

where

N (resp.

) is the matrix associated with

(resp.

) and, as usual,

The

-tensor

is then the product

□

Remark 6. Θ is defined even if is not full rank.

Remark 7. All the relevant information concerning is encoded in As a consequence, the geometry of an encoder is described by this tensor, hence also the one of an encoder–decoder block.

Remark 8. The tensor Θ has expression in a local orthonormal frame, hence is symmetric.

Definition 7. Let ∇ be a connection on . Its dual connection is defined by the next equation:

where Z is any tangent vector in and are vector fields.

Definition 8. A -tensor Θ is said to satisfy the gauge equation [35] if, for all tangent vectors Z:

Proposition 10. If Θ satisfies the gauge Equation (78), then the -tensor defined by:

is ∇-parallel.

Proof. For any vector fields

, and any tangent vector

Z:

hence the claim. □

, being symmetric, admits a diagonal expression in a local orthonormal frame

. When there exists a connection ∇ such that

for any vector fields

, parallel transport of the

shows that the eigenvalues are constant and the eigenspaces preserved. The existence of a solution to the gauge equation thus greatly simplifies the study of an encoder, as a local splitting of the input manifold exists. The reader is referred to [

35] for more details. In fact, the tensor

is defined even for general networks, and the splitting may exist in this setting. This is the case when the rank of

is locally constant, hence when it is maximal. A practical computation of

can be obtained through the singular value decomposition, as Proposition (

74) indicates. A numerical integration of the distribution given by the first singular vectors gives rise to a local system of coordinates, defining in turn a connection satisfying the gauge equation (the existence of a global solution has a cohomological obstruction that is outside the scope of this paper).
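A possible numerical route through the singular value decomposition is sketched below. It rests on an assumption that should be checked against the definitions above: Θ is taken to be the endomorphism H⁻¹ Jᵀ G J, where `J` is the matrix of the derivative of the network, `G_out` the matrix of the Fisher metric at the output point and `H_in` the matrix of the ambient metric h at the input point (all three names are hypothetical). Expressing J in orthonormal frames of both metrics and taking its SVD then gives the eigenvalues of Θ as squared singular values, together with an h-orthonormal family of singular directions.

```python
import numpy as np

def theta_svd(J, G_out, H_in):
    """Assumed form Theta = H^{-1} J^T G J, computed through an SVD.

    Returns Theta, its nonzero-candidate eigenvalues (squared singular values),
    and the corresponding h-orthonormal singular directions as columns.
    """
    Lg = np.linalg.cholesky(G_out)               # G = Lg Lg^T
    Lh = np.linalg.cholesky(H_in)                # H = Lh Lh^T
    Lh_inv_T = np.linalg.inv(Lh).T               # columns form an h-orthonormal frame
    J_tilde = Lg.T @ J @ Lh_inv_T                # derivative in orthonormal frames
    U, s, Vt = np.linalg.svd(J_tilde, full_matrices=False)
    frame = Lh_inv_T @ Vt.T                      # singular directions in input coordinates
    Theta = frame @ np.diag(s**2) @ Vt @ Lh.T    # back to the original coordinates
    return Theta, s**2, frame

rng = np.random.default_rng(2)
n, m = 6, 3                                      # encoder-like case: n > m
J = rng.normal(size=(m, n))
A = rng.normal(size=(m, m))
G_out = A @ A.T + np.eye(m)                      # stand-in for a positive definite Fisher metric
B = rng.normal(size=(n, n))
H_in = B @ B.T + np.eye(n)                       # stand-in for the ambient metric h

Theta, eigenvalues, frame = theta_svd(J, G_out, H_in)
u, v = rng.normal(size=n), rng.normal(size=n)
print("h(Theta u, v)  =", (Theta @ u) @ H_in @ v)
print("g(J u, J v)    =", (J @ u) @ G_out @ (J @ v))
print("eigenvalues of Theta (squared singular values):", eigenvalues)
```

Under this assumption, the leading columns of `frame` are the directions whose numerical integration is mentioned above.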

Finally, we introduce below a construction that takes into account the weight influence. As mentioned in

Section 2, the derivative of the network with respect to its weights is adequately described as a 1-form, thus a section of

In fact, when the inner layers of the network are manifolds, the parameters are no longer real values and a suitable extension has to be introduced. One possible approach is to take a connection ∇ on the layer manifold

Considering a point

, the exponential

defines a local chart centered at

Given a point

q in the injectivity domain of

, one can obtain its coordinates as

and the activation of a neuron with input

q as

, with

a 1-form in

In this general setting, a manifold neuron will be defined by its input in an exponential chart, a 1-form corresponding to the weights in the Euclidean setting and an activation function. Its free parameters are thus a couple

This particular vector bundle is known as the generalized tangent bundle.
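A manifold neuron of this kind can be sketched on the unit 2-sphere. The choices below are assumptions made for illustration only: the connection is the Levi-Civita connection of the round metric, so that the exponential chart has a closed form; the 1-form is represented by a vector `alpha` acting through the Euclidean dot product on tangent vectors; and the activation is tanh. The function names are hypothetical.

```python
import numpy as np

def sphere_exp(p, v):
    # Exponential map of the round unit sphere at p, for v tangent at p
    t = np.linalg.norm(v)
    if t == 0.0:
        return p
    return np.cos(t) * p + np.sin(t) * v / t

def sphere_log(p, q):
    # Inverse of the exponential map: coordinates of q in the chart centered at p.
    # Only valid in the injectivity domain, i.e. for q different from -p.
    c = np.clip(p @ q, -1.0, 1.0)
    theta = np.arccos(c)
    if theta < 1e-12:
        return np.zeros_like(p)
    u = q - c * p                                # component of q orthogonal to p
    return theta * u / np.linalg.norm(u)

def manifold_neuron(q, p, alpha, activation=np.tanh):
    # Free parameters: the base point p and the 1-form alpha at p
    return activation(alpha @ sphere_log(p, q))

p = np.array([0.0, 0.0, 1.0])                    # base point of the chart
q = np.array([1.0, 2.0, 2.0]) / 3.0              # input point on the sphere
alpha = np.array([0.5, -1.0, 0.0])               # 1-form at p, given by its components

coords = sphere_log(p, q)
print("chart coordinates of q:", coords)
print("neuron activation     :", manifold_neuron(q, p, alpha))
print("round trip exp(log(q)):", sphere_exp(p, coords))
```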

Recalling (

43), it is worthwhile to study the pullback of the generalized bundle

. The generalized pullback bundle is then

, whose local sections are generated by the pullback local sections of the form:

Please note that the pullback can be performed on any layer, internal or input. Most of the previous derivations can be carried out on the generalized bundle, which must thus be considered a general, yet tractable, framework for XAI.