Abstract

Obtaining solutions to optimal transportation (OT) problems is typically intractable when marginal spaces are continuous. Recent research has focused on approximating continuous solutions with discretization methods based on i.i.d. sampling, and this has shown convergence as the sample size increases. However, obtaining OT solutions with large sample sizes requires intensive computational effort, which can be prohibitive in practice. In this paper, we propose an algorithm for calculating discretizations with a given number of weighted points for marginal distributions by minimizing the (entropy-regularized) Wasserstein distance, and we provide bounds on the performance. The results suggest that our plans are comparable to those obtained with much larger numbers of i.i.d. samples and are more efficient than existing alternatives. Moreover, we propose a local, parallelizable version of such discretizations for applications, which we demonstrate by approximating adorable images.

1. Introduction

Optimal transport is the problem of finding a coupling of probability distributions that minimizes cost [1], and it is a technique applied across various fields and literatures [2,3]. Although many methods exist for obtaining optimal transference plans for distributions on discrete spaces, computing the plans is not generally possible for continuous spaces [4]. Given the prevalence of continuous spaces in machine learning, this is a significant limitation for theoretical and practical applications.

One strategy for approximating continuous OT plans is based on discrete approximation via sample points. Recent research has provided guarantees on the fidelity of discrete, sample-location-based approximations for continuous OT as the sample size grows [5]. Specifically, by sampling large numbers of points from each marginal, one may compute a discrete optimal transference plan on the product of the two sample sets, with the cost matrix being derived from the pointwise evaluation of the cost function on the sampled pairs.

Even in the discrete case, obtaining minimal cost plans is computationally challenging. For example, Sinkhorn scaling, which computes an entropy-regularized approximation for OT plans, has a complexity that scales with the product of the sample sizes [6]. Although many comparable methods exist [7], all of them have a complexity that scales with the product of the sample sizes, and they require the construction of a cost matrix that also scales with this product.

We have developed methods for optimizing both sampling locations and weights for small-N approximations of OT plans (see Figure 1). In Section 2, we formulate the problem of fixed-size approximation and reduce it to discretization problems on marginals with theoretical guarantees. In Section 3, the gradient of the entropy-regularized Wasserstein distance between a continuous distribution and its discretization is derived. In Section 4, we present a stochastic gradient descent algorithm that optimizes the locations and weights of the points, together with empirical demonstrations. Section 5 introduces a parallelizable algorithm via decompositions of the marginal spaces, which reduces the computational complexity by exploiting intrinsic geometry. In Section 6, we analyze time and space complexity. In Section 7, we illustrate the advantage of including weights for sample points by providing a comparison with an existing discretization that is based only on locations.

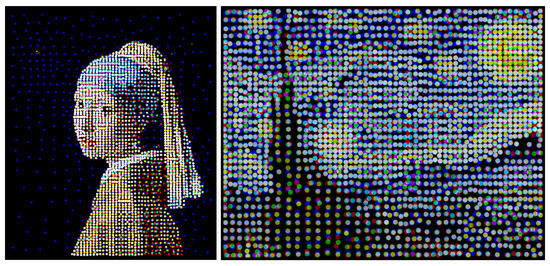

Figure 1.

Discretization of “Girl with a Pearl Earring” and “Starry Night” using EDOT with 2000 discretization points for each RGB channel.

2. Efficient Discretizations

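Optimal transport (OT): Let $(X, d_X)$, $(Y, d_Y)$ be compact Polish spaces (complete separable metric spaces), $\mu \in \mathcal{P}(X)$, $\nu \in \mathcal{P}(Y)$ be probability distributions on their Borel σ-algebras, and $c: X \times Y \to \mathbb{R}$ be a cost function. Denote the set of all joint probability measures (couplings) on $X \times Y$ with marginals $\mu$ and $\nu$ by $\Pi(\mu, \nu)$. For the cost function c, the optimal transference plan between $\mu$ and $\nu$ is defined as in [1]: $\gamma(\mu, \nu) := \operatorname{argmin}_{\pi \in \Pi(\mu, \nu)} I_c(\pi)$, where $I_c(\pi) := \int_{X \times Y} c(x, y)\, d\pi(x, y)$.

When $X = Y$, the cost $c(x, y) = d_X^k(x, y)$ defines the k-Wasserstein distance $W_k(\mu, \nu) = I_c(\gamma(\mu, \nu))^{1/k}$ between $\mu$ and $\nu$ for $k \geq 1$. Here, $d_X^k$ is the k-th power of the metric $d_X$ on X.

Entropy regularized optimal transport (EOT) [5,8] was introduced to estimate OT couplings with reduced computational complexity: $\gamma_\lambda(\mu, \nu) := \operatorname{argmin}_{\pi \in \Pi(\mu, \nu)} I_c(\pi) + \lambda\, \mathrm{KL}(\pi \,\|\, \mu \otimes \nu)$, where $\lambda > 0$ is a regularization parameter, and the regularization term $\mathrm{KL}(\pi \,\|\, \mu \otimes \nu) := \int \log\left(\frac{d\pi}{d\mu \otimes d\nu}\right) d\pi$ is the Kullback–Leibler divergence. The EOT objective is smooth and convex, and its unique solution for given discrete $(\mu, \nu, c)$ can be obtained using a Sinkhorn iteration (SK) [9].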
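As a concrete illustration of the Sinkhorn iteration for discrete marginals, the following is a minimal NumPy sketch. The function name `sinkhorn`, the grid points, the regularizer value, and the fixed iteration count are assumptions made for this illustration; they are not taken from the paper's implementation.

```python
import numpy as np

def sinkhorn(a, b, C, reg, n_iter=1000):
    """Entropy-regularized OT plan between discrete measures a (m,) and b (n,).

    C is the (m, n) cost matrix and reg is the regularization parameter.
    """
    K = np.exp(-C / reg)            # Gibbs kernel
    u = np.ones_like(a)
    v = np.ones_like(b)
    for _ in range(n_iter):
        u = a / (K @ v)             # rescale rows toward marginal a
        v = b / (K.T @ u)           # rescale columns toward marginal b
    return u[:, None] * K * v[None, :]

# Two small uniform marginals on [0, 1] with squared Euclidean cost (k = 2).
x = np.linspace(0.0, 1.0, 5)
y = np.linspace(0.0, 1.0, 4)
a = np.full(5, 1.0 / 5)
b = np.full(4, 1.0 / 4)
C = (x[:, None] - y[None, :]) ** 2
plan = sinkhorn(a, b, C, reg=0.1)
```

Each iteration touches every entry of the kernel once, which is why both the cost matrix and the per-iteration work grow with the product of the two support sizes.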

However, for large-scale discrete spaces, the computational cost of SK can still be infeasible [6]. Worse, to apply the Sinkhorn iteration at all, one must know the entire cost matrix over the large-scale spaces, which itself can be a non-trivial computational burden to obtain; in some cases, for example, where the cost is derived from a probability model [10], it may require intractable computations [11,12].

The Framework: We propose the optimization of the location and weights of a fixed size discretization to estimate the continuous OT. The discretization on is completely determined by those on X and Y to respect the marginal structure in the OT. Let , , be a discrete approximation of and , respectively, with , , , , and . Then, the EOT plan for the OT problem can be approximated by the EOT plan for the OT problem . There are three distributions that have their discrete counterparts; thus, with a fixed size , a naive idea about the objective to be optimized can be

where represents the k-th power of k-Wasserstein distance between measures and . The hyperparameter balances between the estimation accuracy over marginals and that of the transference plan, while the weights on marginals are equal.

To properly compute , a metric on is needed. We expect on X-slices or Y-slices to be compatible with or , respectively; furthermore, we may assume that there exists a constant such that:

For instance, (2) holds when is the p-product metric for .

The objective is estimated by its entropy regularized approximation for efficient computation, where is the regularization parameter, as follows:

Here, is estimated by . is computed by optimizing

One major difficulty in optimizing is to evaluate . In fact, obtaining is intractable, which is the original motivation for the discretization. To overcome this drawback, by utilizing the dual formulation of EOT, the following are shown (see proof in Appendix A):

Proposition 1.

When X and Y are two compact spaces, and the cost function c is , there exists a constant such that

Notice that Proposition 1 indicates that is bounded above by multiples of , i.e., when the continuous marginals and are properly approximated, so is the optimal transference plan between them. Therefore, to optimize , we focus on developing algorithms to obtain that minimize and .

Remark 1.

The regularizing parameters (λ and ζ above) introduce smoothness, together with an error term, into the OT problem. To make an accurate approximation, we need λ and ζ to be as small as possible. However, when the parameters become too small, the matrices to be normalized in the Sinkhorn algorithm cause overflow or underflow in standard numerical data types (32-bit or 64-bit floating-point numbers). Thus, the threshold for the regularizing constant is proportional to the k-th power of the diameter of the supported region. In this work, we keep the value (mainly of ζ) as small as this constraint allows; it ranges from $10^{-4}$ to 0.01 when the diameter is 1 in different examples.
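The underflow described in Remark 1 can be seen directly from a single entry of the Sinkhorn kernel, exp(-d^k/ζ). The short sketch below evaluates that entry in 64-bit floating point for a few distances and regularizer values; the helper name `kernel_entry` and the chosen values are illustrative assumptions.

```python
import numpy as np

def kernel_entry(distance, zeta, k=2):
    """One entry exp(-d^k / zeta) of the Sinkhorn kernel, in float64."""
    return np.exp(-(distance ** k) / zeta)

# Points a full diameter (1.0) apart versus points 0.1 apart.
for zeta in (1e-2, 1e-4):
    far = kernel_entry(1.0, zeta)
    near = kernel_entry(0.1, zeta)
    print(f"zeta={zeta:g}: far pair -> {far:.3e}, near pair -> {near:.3e}")
```

With ζ = 0.01 the far-pair entry is tiny but still representable, whereas with ζ = 10^-4 it is rounded to exactly zero and only nearby pairs keep a nonzero entry. The normalization step breaks down once an entire row or column of the kernel vanishes in this way.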

3. Gradient of the Objective Function

Let be a discrete probability measure in the position of “” in the last section. For a fixed (continuous) , the objective now is to obtain a discrete target .

To apply stochastic gradient descent (SGD) to both the positions and their weights to achieve , we now derive the gradient of about by following the discrete discussions of [13,14]. The SGD on X is either derived through an exponential map, or by treating X as (part of) a Euclidean space.

Let , and denote the joint distribution minimizing as with the differential form at being , which is used to define in Section 2.

By introducing the Lagrange multipliers i, we have , where with (see [5]). Let be the argmax; then, we have

with . Since and produce the same for any , the representative with that is equivalent to (as well as ) is denoted by (similarly ) below in order to obtain uniqueness and make the differentiation possible.

From a direct differentiation of , we have

With the transference plan and the derivatives of , , calculated, the gradient of can be assembled.

Assume that g is a Lipschitz function that is differentiable almost everywhere (for and a Euclidean distance in , differentiability fails to hold only when and ) and that is calculated. The derivatives of and can then be calculated using the Implicit Function Theorem for Banach spaces (see [15]).

The maximality of at and induces , and the Fréchet derivative vanishes. By differentiating (in the sense of Fréchet) again on and , respectively, we get

as a bilinear functional on (note that, in Equation (6), the index i of cannot be m). The bilinear functional is invertible, and we denote its inverse by as a bilinear form on . The last ingredient for the Implicit Function Theorem is :

Then, . Therefore, we have gradient calculated.

Moreover, we can differentiate Equations (4)–(8) to get a Hessian matrix of on ’s and ’s to provide a better differentiability of (which may enable Newton’s method, or a mixture of Newton’s method and minibatch SGD to accelerate the convergence). More details about the claims, calculations, and proofs are provided in Appendix B.

4. The Discretization Algorithm

Here, we provide a description of an algorithm for the efficient discretizations of optimal transport (EDOT) from a distribution μ to a discrete measure whose support has a given integer cardinality m. In general, μ need not be explicitly accessible, and, even if it is accessible, computing the exact transference plan is not feasible. Therefore, in this construction, we assume that μ is given in terms of a random sampler, and we apply a minibatch stochastic gradient descent (SGD) through a set of N samples that are independently drawn from μ on each step to approximate μ.

To calculate the gradient , we need: (1). , the EOT transference plan between and , (2). the cost on X, and (3). its gradient on the second variable . From N samples , we can construct and calculate the gradients with replaced by as an estimation, whose effectiveness (convergence as ) is proved in [5].

We call this discretization algorithm the Simple EDOT algorithm. The pseudocode is given in Appendix C.
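To make the minibatch loop concrete, here is a deliberately simplified one-dimensional sketch with k = 2. It is not the paper's algorithm: the weights are held fixed at 1/m, and the implicit-function-theorem gradient is replaced by a simpler step that treats the current Sinkhorn plan as fixed and moves each atom toward the plan-weighted barycenter of the minibatch. All names (`sinkhorn`, `simple_edot_positions`, `uniform_sampler`) and all parameter values are assumptions of this sketch.

```python
import numpy as np

def sinkhorn(a, b, C, reg, n_iter=200):
    """Entropy-regularized OT plan via Sinkhorn scaling."""
    K = np.exp(-C / reg)
    u = np.ones_like(a)
    v = np.ones_like(b)
    for _ in range(n_iter):
        u = a / (K @ v)
        v = b / (K.T @ u)
    return u[:, None] * K * v[None, :]

def simple_edot_positions(sampler, m, reg=0.01, batch=100, steps=100, lr=0.2, seed=0):
    """Position-only minibatch descent on a 1D measure given by a sampler."""
    rng = np.random.default_rng(seed)
    y = np.sort(sampler(rng, m))            # initial atoms: m draws from mu
    w = np.full(m, 1.0 / m)                 # weights held fixed in this sketch
    b = np.full(batch, 1.0 / batch)
    for _ in range(steps):
        x = sampler(rng, batch)             # fresh minibatch approximating mu
        C = (y[:, None] - x[None, :]) ** 2  # squared-distance cost, k = 2
        plan = sinkhorn(w, b, C, reg)
        # With the plan held fixed, d/dy_i of sum_j plan_ij (y_i - x_j)^2 is
        # 2 * rowmass_i * (y_i - bary_i). Stepping toward bary_i is a
        # preconditioned version of that gradient step.
        bary = (plan @ x) / plan.sum(axis=1)
        y = y + lr * (bary - y)
    return y, w

def uniform_sampler(rng, n):
    """Sampler for the uniform distribution on [0, 1]."""
    return rng.uniform(0.0, 1.0, size=n)

atoms, weights = simple_edot_positions(uniform_sampler, m=5)
```

Because each update is a convex combination of the current atom and minibatch samples, the atoms stay inside the sampled region. Optimizing the weights as well requires the derivatives of the dual variables discussed in Section 3.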

Proposition 2.

(Convergence of the Simple EDOT). The Simple EDOT generates a sequence in the compact set . If the set of limit points of does not intersect with , then converges to a stationary point in where represents the interior.

In simulations, we fixed to reduce the computational complexity and fixed the regularizer for X of diameter 1 and scales proportional with (see next section). Such a choice for is not only small enough to reduce the error between the EOT estimation and the true , but also ensures that and its byproduct in the SK are distinguishable from 0 in a double format.

Examples of discretization: We demonstrated our algorithm on the following:

Example (1): μ is the uniform distribution on .

Example (2): μ is the mixture of two truncated normal distributions on , and the PDF is , where is the density of the truncated normal distribution on with the expectation and standard deviation .

Example (3): μ is the mixture of two truncated normal distributions on , where the two distributions are of weight and of weight .

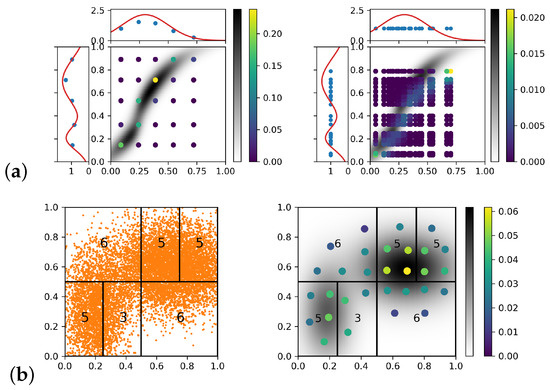

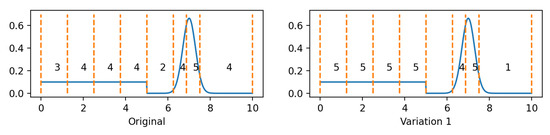

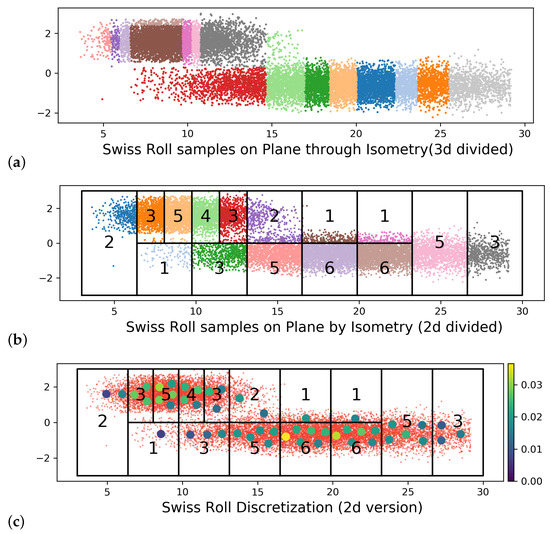

Let for all plots in this section. Figure 2a–c plots the discretizations () for Examples (1)–(3) with and 7, respectively.

Figure 2.

(a–c) Plots of EDOT discretizations of the Examples (1)–(3). In (c), the x-axis and y-axis are the 2D coordinates, and the probability density of and weights of are encoded by color. (d,e) show comparison between EDOT and i.i.d. sampling for Examples (1) and (2). EDOT are calculated with to 7 (3 to 8). The 4 boundary curves of the shaded region are -, -, -, and -percentile curves; the orange line represents the level of ; (f) plots the entropy regularized Wasserstein distance versus the SGD steps for Example (2) with optimized by 5-point EDOT. in all cases.

Figure 2f illustrates the convergence rate of versus the SGD steps for Example (2) with obtained by a 5-point EDOT. Figure 2d,e plot the entropy-regularized Wasserstein versus m, thereby comparing EDOT and naive sampling for Examples (1) and (2). Here, the s are: (a) from the EDOT with in Example 1 and in Example 2, which are shown by ×s in the figures. (b) from naive sampling, which is simulated using a Monte Carlo of volume 20,000 on each size from 3 to 200. Figure 2d,e demonstrate the effectiveness of the EDOT: as indicated by the orange horizontal dashed line, even 5-point EDOT discretization in these two examples outperformed 95% of the naive samplings of size 40, as well as 75% of the naive samplings of size over 100 (the orange dash and dot lines).

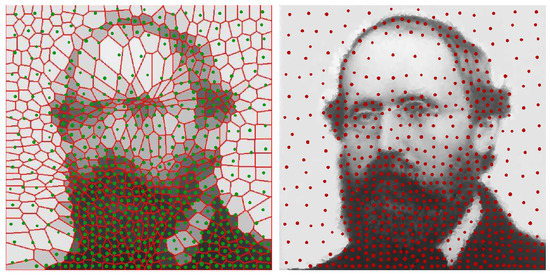

An example of a transference plan: In Figure 3a, we illustrate the efficiency of the EDOT on an OT problem: , where the marginal and are truncated normal (mixtures), and has two components (shown in red curve on the left), while has only one component (shown in red curve on the top). The cost function is the squared Euclidean distance, and .

Figure 3.

(a): Approximation of a transference plan. Left: EDOT approximation. Right: naive approximation. In both figures, the magnitude of each point is color coded, and the background grayscale density represents the true EOT plan. (b): An example of adaptive refinement on a unit square. Left: division of a 10,000-point sample set S approximating a mixture of two truncated Gaussian distributions and the refinement for 30 discretization points. The number of discretization points assigned to each region is marked by black numbers; e.g., the upper left region needs 6 points. Right: the discretization optimized locally and combined as a probability measure with .

The left of Figure 3a shows an EDOT approximation with , , and . The high-density area of the EOT plan is correctly covered by EDOT estimating points with high weights. The right shows a naive approximation with , , and . The points of the naive estimate with the highest weights missed the region where the true EOT plan has the highest density.

5. Methods of Improvement

I. Adaptive EDOT: The computational cost of a simple EDOT increases with the dimensionality and diameter of the underlying space. Discretization with a large m is needed to capture higher dimensional distributions, which results in an increase in parameters for calculating the gradient of : for the positions and for the weights. Such an increase will not only raise the complexity of each step, but also require more steps for the SGD to converge. Furthermore, the calculation will have a higher complexity ( for each normalization in Sinkhorn).

We propose to reduce the computational complexity using a “divide and conquer” approach. The Wasserstein distance takes the k-th power of the distance function as a cost function. The locality of distance makes the solution to the OT/EOT problem local, meaning that the probability mass is more likely to be transported to a close destination than to a remote one. Thus, we can “divide and conquer” by cutting the space X into small cells and solving the discretization problem independently in each cell.

To develop a “divide and conquer” algorithm, we need: (1) an adaptive dividing procedure that is able to partition , which balances the accuracy and computational intensity among the cells; (2) to determine the discretization size and choose a proper regularizer for each cell . The pseudocode for all variations is given in Appendix C, Algorithms A2 and A3.

Choosing size m: An appropriate choice of will balance contributions to the Wasserstein among the subproblems as follows: Let be a manifold of dimension d, let be its diameter, and let be the probability of . The entropy-regularized Wasserstein distance can be estimated as [16,17]. The contribution to per point in support of is . Therefore, to balance each point’s contribution to the Wasserstein among the divided subproblems, we set .
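The balancing rule above can be sketched numerically. The sketch assumes that the Wasserstein estimate for a cell takes the form p_i · diam_i^k · m_i^(-k/d), the usual quantization rate; under that assumption, equalizing the per-point contribution p_i · diam_i^k · m_i^(-(k+d)/d) across cells gives m_i proportional to (p_i · diam_i^k)^(d/(k+d)). The function name `allocate_points` and the largest-remainder rounding are choices made for this illustration.

```python
import numpy as np

def allocate_points(prob, diam, m_total, k=2, d=2):
    """Split m_total atoms among cells so that each atom contributes equally.

    prob: probability mass of each cell; diam: diameter of each cell.
    Assumes the per-cell estimate p * diam^k * m^(-k/d).
    """
    prob = np.asarray(prob, dtype=float)
    diam = np.asarray(diam, dtype=float)
    score = (prob * diam ** k) ** (d / (k + d))
    ideal = m_total * score / score.sum()       # real-valued allocation
    m = np.floor(ideal).astype(int)
    # Give the remaining atoms to the cells with the largest fractional parts.
    leftover = int(m_total - m.sum())
    order = np.argsort(-(ideal - m))
    m[order[:leftover]] += 1
    return m

equal_cells = allocate_points([0.25] * 4, [1.0] * 4, m_total=8)
uneven_cells = allocate_points([0.7, 0.2, 0.1], [1.0, 0.5, 0.5], m_total=30)
```

A cell with very little mass or a very small diameter can receive zero atoms under this rounding; a practical implementation would need to decide how to treat such cells.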

Occupied volume (Variation 1): A cell could be too vast (e.g., large in size with few points in a corner), thus resulting in a larger than needed. To fix this, we may replace the above with , where is the occupied volume calculated by counting the number of nonempty cells at a certain resolution (levels in the previous binary division). The algorithm (Variation 1) first builds a binary tree to resolve and obtain the occupied volume for each cell, and then performs a tree traversal to assign .

Adjusting the regularizer : In the , the SK on is calculated. Therefore, should scale with to ensure that the transference plan is not affected by the scaling of . Precisely, we may choose for some constant .
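The effect of scaling the regularizer with the k-th power of the cell diameter can be checked on the Sinkhorn kernel itself: if all distances in a cell are multiplied by s and the regularizer by s^k, the kernel exp(-d^k/ζ) is unchanged, and so is the resulting plan. The sketch below verifies this in one dimension; `gibbs_kernel`, `zeta0`, and the chosen scale are illustrative assumptions.

```python
import numpy as np

def gibbs_kernel(points, zeta, k=2):
    """Kernel exp(-|x_i - x_j|^k / zeta) on a set of 1D points."""
    dist = np.abs(points[:, None] - points[None, :])
    return np.exp(-(dist ** k) / zeta)

k = 2
zeta0 = 0.01                       # hypothetical regularizer for a cell of diameter 1
cell = np.linspace(0.0, 1.0, 6)    # reference cell of diameter 1
scale = 0.25
small = scale * cell               # the same cell shrunk to diameter 0.25

K_ref = gibbs_kernel(cell, zeta0, k)
K_scaled = gibbs_kernel(small, zeta0 * scale ** k, k)   # regularizer scaled with diam^k
K_unscaled = gibbs_kernel(small, zeta0, k)              # regularizer left unchanged
```

`K_scaled` reproduces `K_ref`, while `K_unscaled` is much flatter, which corresponds to a more heavily smoothed plan on the small cell.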

The division: Theoretically, any refinement procedure that proceeds iteratively and eventually makes the diameter of each cell approach 0 can be applied for division. In our simulation, we used an adaptive kd-tree-style cell refinement in a Euclidean space . Let X be embedded into within an axis-aligned rectangular region. We chose an axis in and evenly split the region along a hyperplane orthogonal to (e.g., cut square along the line ); thus, we constructed and . With the sample set S given, we split it into two sample sets and according to which subregion each sample was located in. Then, the corresponding and could be calculated as discussed above. Thus, two cells and their corresponding subproblems were constructed. If some of the was still too large, the cell was cut along another axis to construct two other cells. The full list of cells and subproblems could be constructed recursively. In addition, another cutting method (Variation 2) that chooses the sparsest point as a cutting point through a sliding window is sometimes useful in practice.
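The recursive midpoint splitting described above can be sketched as follows. For simplicity, the stopping rule here is a cap on the number of samples per cell, used as a stand-in for the criterion on the per-cell discretization size; this cap, the depth limit, and the name `divide` are assumptions of the sketch.

```python
import numpy as np

def divide(samples, lo, hi, max_samples, axis=0, depth=0, max_depth=20):
    """Recursively halve an axis-aligned box until each cell holds few samples.

    Returns a list of leaves (lo, hi, samples_in_cell).
    """
    if len(samples) <= max_samples or depth >= max_depth:
        return [(lo, hi, samples)]
    mid = 0.5 * (lo[axis] + hi[axis])           # even split along the current axis
    left_mask = samples[:, axis] < mid
    hi_left = hi.copy()
    hi_left[axis] = mid
    lo_right = lo.copy()
    lo_right[axis] = mid
    next_axis = (axis + 1) % samples.shape[1]   # cycle through the axes
    left = divide(samples[left_mask], lo, hi_left, max_samples,
                  next_axis, depth + 1, max_depth)
    right = divide(samples[~left_mask], lo_right, hi, max_samples,
                   next_axis, depth + 1, max_depth)
    return left + right

rng = np.random.default_rng(0)
points = rng.uniform(size=(1000, 2))            # sample set S on the unit square
cells = divide(points, np.zeros(2), np.ones(2), max_samples=100)
```

Each split compares every sample in the cell with the cut position once, which is the source of the Quicksort-like behavior discussed in Section 6.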

After having the set of subproblems, we could apply the EDOT for the solutions in each cell, then combine the solutions into the final result .

Figure 3b shows the optimal discretization for the example in Figure 2c with , which was obtained by applying the EDOT with adaptive cell refinement, or .

II. On embedded CW complexes: Although the samples on space X are usually represented as a vector in , inducing an embedding , the space X usually has its own structure as a CW complex (or simply a manifold) with a more intrinsic metric. Thus, if the CW complex structure is known, even piecewise, we may apply the refinement on X with respect to its own metric, whereas direct discretization as a subset in may result in a low expressing efficiency.

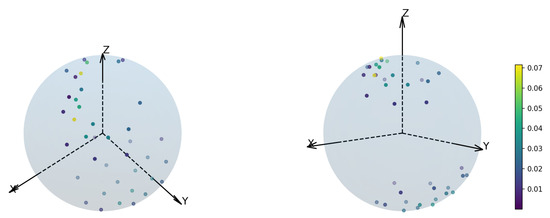

We now illustrate the adaptive EDOT with an example of a normal mixture distribution on a sphere mapped through stereographic projection. More examples, including a truncated normal mixture over a Swiss roll and the discretization of a 2D optimal transference plan, are detailed in Appendix D.5.

On the sphere: The underlying space is the unit sphere in . is the pushforward of a normal mixture distribution on by stereographic projection. The sample set over is shown in Figure 4a (left). Consider a (3D) Euclidean metric on the induced by the embedding. Figure 4a (right) plots the EDOT solution with refinement for with . The resulting cell structure is shown as colored boxes.

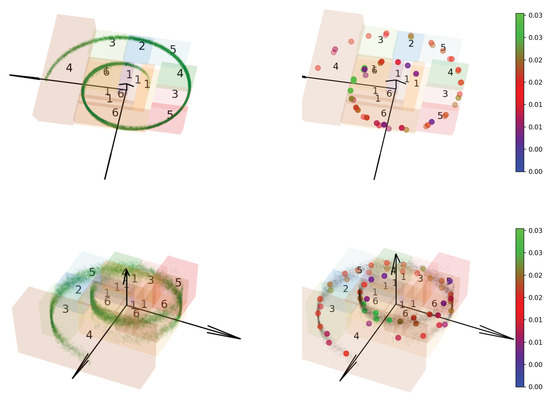

Figure 4.

(a) Left: 30,000 samples from and the 3D cells under divide-and-conquer algorithm Right: 40-point EDOTs in 3D. (b) The 40-point CW-EDOTs in 2D. Red dots: samples, other dots: discrete atoms with weights represented in colors. Left: upper hemisphere. Right: lower hemisphere, stereographic projections about poles. .

To consider the intrinsic metric, a CW complex was constructed with a point on the equator as a 0-cell, the rest of the equator as a 1-cell, and the upper and lower hemispheres as two (open) 2-cells. We took the upper and lower hemispheres and mapped them onto a unit disk through stereographic projection with respect to the south and north pole, respectively. Then, we took the metric from spherical geometry and rewrote the distance function and its gradient using the natural coordinate on the unit disk. Figure 4b shows the refinement of the EDOT on the samples (in red) and the corresponding discretizations in colored points. More figures can be found in the Appendices.
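The rewritten distance function can be illustrated for the upper hemisphere. The sketch below assumes the standard stereographic chart from the south pole, in which a disk point (u, v) corresponds to the sphere point (2u, 2v, 1 - r²)/(1 + r²) with r² = u² + v², and evaluates the great-circle distance between two disk points. The function names are illustrative, and the gradient is not included.

```python
import numpy as np

def disk_to_sphere(p):
    """Inverse stereographic projection (from the south pole) of a disk point."""
    u, v = p
    r2 = u * u + v * v
    return np.array([2.0 * u, 2.0 * v, 1.0 - r2]) / (1.0 + r2)

def spherical_distance(p, q):
    """Great-circle distance between two points given in disk coordinates."""
    cos_angle = np.clip(disk_to_sphere(p) @ disk_to_sphere(q), -1.0, 1.0)
    return np.arccos(cos_angle)

# The disk center is the north pole; the disk boundary is the equator.
pole_to_equator = spherical_distance((0.0, 0.0), (1.0, 0.0))
across_equator = spherical_distance((1.0, 0.0), (-1.0, 0.0))
```

In this chart, the center of the disk is a quarter of a great circle away from every boundary point, and antipodal boundary points are half a great circle apart, which no Euclidean metric on the disk can reproduce.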

6. Analysis of the Algorithms

In this section, we derive the complexity of the simple EDOT and the adaptive EDOT. In particular, we show the following:

Proposition 3.

Let μ be a (continuous) probability measure on a space X. A simple EDOT of size m has time complexity and space complexity , where N is the minibatch size (to construct in each step to approximate μ), d is the dimension of X, L is the maximal number of iterations for SGD, and ϵ is the error bound in the Sinkhorn calculation for the entropy-regularized optimal transference plan between and .

Proposition 3 quantitatively shows that, when the adaptive EDOT is applied, the total complexities (in time and space) are reduced, because the magnitudes of both N and m are much smaller in each cell.

The procedure of dividing sample set S into subsets through the adaptive EDOT is similar to Quicksort; thus, the space and time complexities are similar. The similarity comes from the binary divide-and-conquer structure, as well as from the fact that each split is based on comparing each sample with a target.

Proposition 4.

For the preprocessing (job list creation) for the adaptive EDOT, the time complexity is in the best and average case and in the worst case, where is the total number of sample points, and the space complexity is , or simply as .

Remark 2.

The complexity is the same as that of Quicksort. The set of sample points in the algorithm is treated as the “true” distribution in the adaptive EDOT, since, in the later EDOT steps for each cell, no further samples are taken, as it is hard for a sampler to produce a sample in a given cell. Postprocessing of the adaptive EDOT has complexity in both time and space.

Remark 3.

For the two algorithm variations in Section 5, the occupied volume estimation works in the same way as the original preprocessing step and has the same time complexity as before (by itself, since dividing must happen after knowing the occupied volume of all cells); however, with the tree built, the original preprocessing becomes a tree traversal and has (additional) time complexity and (additional) space complexity for storing the occupied volume.

For details on choosing cut points with a sliding window, see the discussion in Appendix C.5.

Comparison with naive sampling: After having a size m discretization on X and a size n discretization on Y, the EOT solution (Sinkhorn algorithm) has time complexity . In the EDOT, two discretization problems must be solved before applying the Sinkhorn, while the naive sampling requires nothing but sampling.

According to Proposition 3, solving a single continuous EOT problem using a size m simple EDOT method may result in higher time complexity than naive sampling with an even larger sample size N (than m). However, unlike the EDOT, which only requires access to distance functions $d_X$ and $d_Y$ on X and Y, respectively, a known cost function is necessary for naive sampling. In real applications, the cost function may come from real-world experiments (or from extra computations) done for each pair in the discretization; thus, the size of the discretized distribution is critical for cost control. $d_X$ and $d_Y$ usually come along with the spaces X and Y, respectively, and are easy to compute. A further use case for the EDOT arises when the marginal distributions μ and ν are fixed for different cost functions; the discretizations can then be reused. Thus, the cost of discretization is paid once, and the improvement it brings accumulates with each reuse.

7. Related Work and Discussion

Our original problem was the optimal transport problem between general distributions as samplers (instead of integration oracles). We translated that into a discretization problem and an OT problem between discretizations.

I. Comparison with other discretization methods: There are several other methods that generate discrete distributions from arbitrary distributions in the literature, which are obtained via semi-continuous optimal transport where the calculation of a weighted Voronoi diagram is needed. Calculating the weighted Voronoi diagrams usually requires 1. that the cost function be a squared Euclidean distance and 2. the application of Delaunay triangulation, which is expensive in more than two dimensions. Furthermore, semi-continuous discretization may only optimize one aspect between the position and weights of the atoms, and this process is mainly based on [18] (the optimized position) and [19] (the optimized weights).

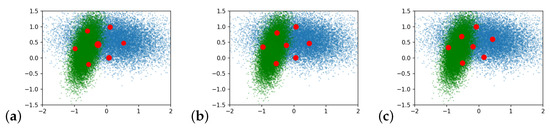

We mainly compared with the prior work of [18], which focuses on the barycenter of a set of distributions under the Wasserstein metric. This work resulted in a discrete distribution called the Lagrangian discretization, which is of the form [2]. Other works, such as [20,21], find barycenters but do not create a discretization. Refs. [19,22] studied the discrete estimation of a 2-Wasserstein distance, locating discrete points through a clustering algorithm k-means++ and a weighted Voronoi diagram refinement, respectively. Then, they assigned weights and made them non-Lagrangian discretizations. Ref. [19] (comparison in Figure 5) roughly followed a “divide-and-conquer” approach in selecting positions, but the discrete positions were not tuned according to Wasserstein distance directly. Ref. [22] converged as the number of discrete points increased. However, it lacked a criterion (such as the Wasserstein distance in the EDOT) to show that the choice is not just one among all possible converging algorithms, but, rather, it is a special one.

Figure 5.

EDOT of an example from [19]. Portrait of Riemann, discretization of size 625. Left: green dots show position and weights of EDOT discretization (same as right); cells in background are discretization of the same size in the original [19]. Right: A size 10,000 discretization from [19]; we directly applied EDOT to this picture, treating it as the continuous distribution.

By projecting the gradient in the SGD to the tangent space of the submanifold , or, equivalently, by fixing the learning rate on the weights to zero, the EDOT can estimate a Lagrangian discretization (denoted by EDOT-Equal). A comparison among the methods is carried out on the map of the Canary Islands, which is shown in Figure 6. This example shows that, when restricted to Lagrangian discretization, our method obtains results similar to those of the methods in the literature, while, in general, the EDOT can work better.

Figure 6.

A comparison of EDOT (left), EDOT-Equal (mid), and [18] (right) on the Canary Islands, treated as a binary distribution with a constant density on islands and 0 in the sea. Discretizations for each method are shown by black bullets. Wasserstein distances: EDOT: , EDOT-Equal: , Claici: . Map size is .

Moreover, the EDOT can be used to solve barycenter problems.

Note that, to apply adaptive EDOT for barycenter problems, compatible divisions of the target distributions are needed (i.e., a cell A from one target distribution transports onto a discrete subset D thoroughly, and D transports onto a cell B from another target distribution, etc.).

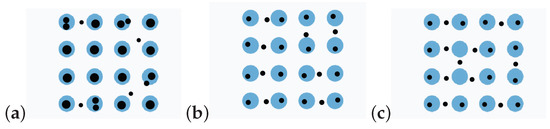

We also tested these algorithms on discretizing grayscale and color pictures. A comparison of discretizations of a kitty image by EDOT, EDOT-Equal, and [18], with the number of points varying from 10 to 4000, together with estimates of their Wasserstein distances to the original image, is shown in Figures 7 and 8.

Figure 7.

Discretization of a kitty. Discretization by each method is shown in red bullets on top of the Kitty image. (a) EDOT, 10 points, , radius represents weight; (b) EDOT-Equal, 10 points, ; (c) [18], 10 points, ; (d) [18], 200 points. Figure size , .

Figure 8.

2000-Point Discretizations, (a). EDOT (weight plotted in color), (b). EDOT-Equal, (c). Relations between and (all with divide and conquer); it can be seen that the advantage of over grows with the size of discretization.

Furthermore, the EDOT may be applied to the RGB channels of an image independently; the plots of the resulting discretizations are then combined in the corresponding colors. The results are shown in Figure 1 at the beginning of this paper.

Lagrangian discretization may have a disadvantage in representing repetitive patterns with incompatible discretization points.

In Figure 9, we can see that discretizing 16 objects with 24 points caused weight incompatibility locally for the Lagrangian discretization, thus making points locate between objects and increasing the Wasserstein distance. With the EDOT, the weights of points that lie outside of the blue object were much smaller. The patterned structure was better represented by the EDOT. In practice, patterns often occur as part of the data (e.g., pictures of nature), and it is easy to get an incompatible number in Lagrangian discretization, since the equal weight-requirement is rigid; consequently, patterns cannot be properly captured.

Figure 9.

Discretization of 16 blue disks in a unit square with 24 points (black). (a) EDOT, ; (b) EDOT-Equal, ; (c) [18], . . Figure size is .

II. General k and general distance : Our algorithms (Simple EDOT, adaptive EDOT, and EDOT-Equal) work for a general choice of parameter and distance on X. For example, in Figure 4 part (b), the distance used on each disk was spherical (arc length along the great circle passing through two points), which could not be isometrically reparametrized into a plane with Euclidean metrics because of the difference in curvatures.

III. Other possible impacts: As the OT problem widely exists in many other areas, our algorithm can be applied accordingly, e.g., the location and size of supermarkets or electrical substations in an area, or even air conditioners in the rooms of supercomputers. Our divide-and-conquer methods are suitable for solving these real-world applications.

IV. OT for discrete distributions: Many algorithms have been developed to solve OT problems between two discrete distributions [3]. Linear programming algorithms were first developed, but their applications have been restricted by high computational complexity. Other methods such as [23], with a cost of form for some h, which applies the “back-and-forth” method by hopping between two forms of a Kantorovich dual problem (on the two marginals, respectively) to get a gradient of the total cost over the dual functions, usually solve problems with certain conditions. In our work, we chose to apply an EOT developed by [8] for an estimated OT solution of the discrete problem.

8. Conclusions

We developed methods for efficiently approximating OT couplings with fixed size approximations. We provided bounds on the relationship between a discrete approximation and the original continuous problem. We implemented two algorithms and demonstrated their efficacy as compared to naive sampling and analyzed computational complexity. Our work provides a new approach to efficiently computing OT plans.

Author Contributions

Conceptualization, J.W. and P.W.; methodology, J.W.; software, J.W.; validation, P.W., P.S. and J.W.; formal analysis, J.W. and P.W.; investigation, P.W.; writing—original draft preparation, J.W., P.W. and P.S.; writing—review and editing, J.W., P.W. and P.S.; supervision, P.S.; project administration, P.S.; funding acquisition, P.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by DARPA grant numbers HR00112020039, W912CG22C0001, and W911NF2020001 and NSF MRI 1828528.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| OT | Optimal Transport |
| EOT | Entropy-Regularized Optimal Transport |
| EDOT | Efficient Discretization of Optimal Transport |
| SGD | Stochastic Gradient Descent |

Appendix A. Proof of Proposition 1

Proof.

We will adopt the notations and . Furthermore, recall the condition:

For inequality (i), without loss of generality, assume that

Denote the optimal that achieves by and similarly for . Then, we have:

Here, and eq (a) hold since , and ineq (b) holds since is the optimal choice.

For inequality (ii), we use the following to simplify the notations: and

Justifications for the derivations:

(a) Based on the dual formulation, it is shown in [5] Proposition 1 that, for , there exist such that:

;

(b) Inequality (ii) of Equation (A1);

(c) According to Ref. [24] Theorem 2, when X and Y are compact and c is smooth, and are uniformly bounded; moreover, both and are uniformly bounded by the diameter of X and Y, respectively; hence, the constant B exists;

(d) Inequality (ii) of Equation (A1);

(e) and ;

(f) Similarly as in (a), for , there exist , and such that and . Moreover, , and . □

Appendix B. Gradient of

Appendix B.1. The Gradient

Following the definitions and notations in Section 2 and Section 3 of the paper, we calculate the gradient of about parameters of in detail.

, where

Let and . Denote , and let

Since on the second component X is discrete and supported on , we may denote by ; thus,

Then, the Fenchel duality of Problem (A2) is

Let and be the argmax of the Fenchel dual (A5). The primal is solved by To make the solution unique, we restrict the freedom of the solution (where we see that for any ). We use the condition to narrow the choices down to only one, and denote the dual variable having the property and .

We first calculate with and as functions of . (from the paper).

Next, we calculate the derivatives of and by finding their defining equation and then using the Implicit Function Theorem.

The optimal solution to the dual variables and is obtained by solving the stationary state equation . The derivatives are taken in the sense of the Fréchet derivative. The Fenchel dual function on and , has its domain and codomain . The derivatives are

where is defined as in the paper, (as a linear functional), and . Next, we need to show that is differentiable in the sense of the Fréchet derivative, i.e.,

By the definition of (we write for ),

The last equality is from a Taylor expansion of the exponential function. Consider that the essential supremum of for given measure .

Denote ,

Therefore,

which shows that the expression of in Equation (A8) gives the correct Fréchet derivative. Note here that is critical in Equation (A12).

Let values in . Then, defines and , which makes it possible to differentiate them about using the Implicit Function Theorem for Banach spaces. From now on, take values at , , i.e., the marginal conditions on hold.

Thus, we need and calculated, and prove that is invertible (and give the inverse).

It is necessary to make sure which form is in according to the Fréchet derivative. Start from the map , where is isomorphic to its dual Banach space . Then, , where represents the set of bounded linear operators. Moreover, recall that is the left adjoint functor of ; then, for -vector spaces, . Thus, we can write in terms of a bilinear form on vector space .

Consider the boundary conditions and . The as the Hessian form of can be written as

with , or further as

over the basis .

By the inverse of , we mean the element in which composes with (on the left and on the right) as identities. By the natural identity between double dual and the tensor hom adjunction,

we can write the inverse of as a bilinear form again.

Denote in the block form . According to the block-inverse formula

where , whose invertibility determines the invertibility of .
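The block-inverse step can be illustrated numerically. The sketch below inverts a 2 × 2 block matrix through the Schur complement of its top-left block, which is taken to be diagonal. The block names `a_diag`, `B`, `C`, `D`, and `S` are generic placeholders chosen for this example and are not claimed to match the symbols of the formula above.

```python
import numpy as np

def block_inverse(a_diag, B, C, D):
    """Invert [[diag(a_diag), B], [C, D]] via the Schur complement of the diagonal block."""
    A_inv = 1.0 / a_diag                          # a diagonal block is inverted elementwise
    AinvB = A_inv[:, None] * B                    # A^{-1} B
    CAinv = C * A_inv[None, :]                    # C A^{-1}
    S = D - C @ AinvB                             # Schur complement S = D - C A^{-1} B
    S_inv = np.linalg.inv(S)                      # the only dense inversion needed
    top_left = np.diag(A_inv) + AinvB @ S_inv @ CAinv
    top_right = -AinvB @ S_inv
    bottom_left = -S_inv @ CAinv
    return np.block([[top_left, top_right], [bottom_left, S_inv]])

# Small synthetic example (values are arbitrary).
rng = np.random.default_rng(0)
a_diag = rng.uniform(1.0, 2.0, size=4)
B = 0.1 * rng.normal(size=(4, 2))
C = B.T.copy()
D = 3.0 * np.eye(2)
M = np.block([[np.diag(a_diag), B], [C, D]])
M_inv = block_inverse(a_diag, B, C, D)
```

Only the Schur complement needs a dense inversion, so the whole matrix is invertible exactly when that smaller block is.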

Consider that ; explicitly, . Therefore, from Equation (A17),

The matrix F is symmetric, of rank , and strictly diagonally dominant; therefore, it is invertible. To see the strictly diagonal dominance, consider by applying the marginal conditions. The matrix F is of size (there is no or for ). Then, the matrix F is strictly diagonally dominant.
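The invertibility argument relies on the standard fact that a strictly diagonally dominant matrix is nonsingular. A small numerical check of that property can be sketched as follows; the helper name and the example matrix are hypothetical and are not the matrix F of the derivation.

```python
import numpy as np

def is_strictly_diagonally_dominant(M):
    """True if |M_ii| > sum_{j != i} |M_ij| holds for every row i."""
    diag = np.abs(np.diag(M))
    off_diag = np.abs(M).sum(axis=1) - diag
    return bool(np.all(diag > off_diag))

# Hypothetical symmetric example; each diagonal entry exceeds its off-diagonal row sum.
F_example = np.array([[0.5, 0.1, 0.2],
                      [0.1, 0.4, 0.1],
                      [0.2, 0.1, 0.6]])
dominant = is_strictly_diagonally_dominant(F_example)
determinant = float(np.linalg.det(F_example))
```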

With all ingredients known in formula (A19), we can calculate the inverse of .

Following the implicit function theorem, we need ; each partial derivative is an element in .

Note that if we apply the constraint to the s, we may set and recalculate the above derivatives as when and .

Appendix B.2. Second Derivatives

In this part, we calculate the second derivatives of with respect to the ingredients of , i.e., s and s, for the potential of applying Newton’s method to the EDOT (which we have not implemented yet).

Using the previous results, we can further calculate the second derivatives of about s and s. Differentiating (A6) and (A7) results in

Once we have the second derivatives of on s, we need the second derivatives of and to build the above second derivatives. From the formula , we can differentiate

Here, from the formula that (this is the product rule for ), we have

and

The last piece we need is :

where in the last one, k, represents the k-th component in ’s second part (about ).

Appendix C. Algorithms

Appendix C.1. Algorithm: Simple EDOT

Algorithm A1 states the Simple EDOT procedure.

| Algorithm A1 Simple EDOT using minibatch SGD |

|
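For orientation only, the following is a rough sketch of a minibatch SGD loop in the spirit of Simple EDOT. It is not Algorithm A1: it swaps in envelope-type gradients of the regularized problem instead of the implicit-function gradient derived in Appendix B, uses a multiplicative (mirror) step to keep the weights on the simplex, and fixes k = 2 with a squared Euclidean cost. All function names, step sizes, and the uniform test distribution are assumptions made for this example.

```python
import numpy as np

def sinkhorn(a, b, cost, zeta, n_iter=200):
    """Return the regularized plan and the dual potential attached to b."""
    K = np.exp(-cost / zeta)
    u, v = np.ones_like(a), np.ones_like(b)
    for _ in range(n_iter):
        u = a / (K @ v)
        v = b / (K.T @ u)
    plan = u[:, None] * K * v[None, :]
    return plan, zeta * np.log(v)

def simple_edot_sketch(sample_mu, m, zeta=0.05, batch=64, steps=300,
                       lr_pos=0.5, lr_w=0.1, seed=0):
    rng = np.random.default_rng(seed)
    y = sample_mu(rng, m)                         # (m, d) initial atoms drawn from mu
    w = np.full(m, 1.0 / m)                       # initial equal weights
    for _ in range(steps):
        x = sample_mu(rng, batch)                 # minibatch of shape (batch, d)
        a = np.full(batch, 1.0 / batch)
        diff = x[:, None, :] - y[None, :, :]      # (batch, m, d)
        cost = (diff ** 2).sum(axis=2)            # squared Euclidean cost (assumed k = 2)
        plan, beta = sinkhorn(a, w, cost, zeta)
        # Assumed envelope-type gradient in the positions:
        # d/dy_j sum_i plan_ij |x_i - y_j|^2 = -2 sum_i plan_ij (x_i - y_j).
        grad_y = -2.0 * (plan[:, :, None] * diff).sum(axis=0)
        y = y - lr_pos * grad_y
        # Assumed mirror step in the weights, driven by the centered dual potential.
        w = w * np.exp(-lr_w * (beta - beta.mean()))
        w = w / w.sum()
    return y, w

def sample_uniform(rng, n):
    """Hypothetical target distribution: uniform on [0, 1]."""
    return rng.uniform(0.0, 1.0, size=(n, 1))

atoms, weights = simple_edot_sketch(sample_uniform, m=5)
```

With `lr_pos=0.5`, each position update moves an atom part of the way toward the plan-weighted mean of the minibatch points assigned to it, so the atoms stay inside the convex hull of the samples.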

Appendix C.2. Proof of Proposition 2

Remark A1.

The convergence to a stationary point in expectation means that the liminf of the expected norm of the gradient over all the sequences considered approaches 0.

Proof.

Discrete distributions of size m can be parameterized by in terms of with and .

To make the SGD work, we assume that X is a path-connected subset of of dimension d.

For , let . First, we prove the claim by assuming that the set of limit points of is contained in .

According to Theorem 4.10 and Corollary 4.12 of [25], to show that Algorithm A1 converges to a stationary point in expectation, i.e., , one needs to check: (1). is second-differentiable; (2). is Lipschitz continuous; and (3). is bounded.

(1). The second differentiability of is shown in Appendix B.2, where the second derivative is calculated.

(2). As a consequence of (a), we have that is continuous. Moreover, by checking each factor of shown in Appendix B.2, we can see that is bounded. (Actually, we need finite to make the SIM bounded, which is true in .) Therefore, is Lipschitz continuous.

(3). Noise has bounded variance: Equivalently, we just need to check that

is finite, where is the empirical distribution with N samples taken (which is stochastic), and is the fixed discretization in (this need not be the “optimal” one). is continuous with respect to both and ; hence, it is continuous over the compact space ; hence, it is bounded by a constant C.

Thus, the proposition holds with assumption .

Further suppose that assumption does not hold. Then, for any sequence , there always exist infinite limit points of that lie outside for any . Therefore, we can construct a subsequence of converging to a point . Thus, p is also a limit point. This contradicts the assumption that the set of limit points of does not intersect with . The proof is then complete. □

Appendix C.3. Proof of Proposition 3

Proof.

First, for each iteration in the minibatch SGD, let N be the sample (minibatch) size of for approximating . Let m be the size of target discretization (the output). Furthermore, let d be the dimension of X and be the error bound in the Sinkhorn calculation for the entropy-regularized optimal transference plan between and . The Sinkhorn algorithm for the positive matrix (of size ) converges linearly, which takes steps to fall into a region of radius , thus contributing in time complexity. The inverse matrix of (Equation (6)) is taken block-wise

where . Block E is constructed in and inverted in ; block takes , as A is diagonal; and the block takes to construct. When , the time complexity in constructing is . From to the gradient of dual variables, the tensor contractions have complexity . Finally, to get the gradient, the complexity is dominated by the second term of (see Equation (5)), which is a contraction between a matrix (i.e., ) with tensors of sizes and (two gradients on the dual variables and ) along N and m, respectively. Thus, the final step contributes .

The time complexity of increment steps in the SGD turns out to be . Therefore, for L steps of the minibatch SGD, the time complexity is .

For space complexity of the simple EDOT, the Sinkhorn algorithm (which can be done in position ) is the only iterative computation in a single SGD step, and between two SGD steps, only the resulting distribution is passed to the next step. Therefore, the space complexity is coming from the ; others are, at most, of size . □

Appendix C.4. Adaptive Refinement via DFS: Midpoints

The pseudocode for the division algorithm of the adaptive EDOT using KD-tree refinement cutting at the midpoints is shown in Algorithm A2. The means the rounding method with rounded up, and is that with rounded down; thus, the discretization point is correctly partitioned.
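One way to read the complementary rounding described above: if one child cell's share is rounded with halves going up and the other's with halves going down, the two counts always add up to the parent's count. The sketch below illustrates this reading with exact fractions. The function names and the `share_left` argument are assumptions for illustration and are not taken from Algorithm A2.

```python
import math
from fractions import Fraction

HALF = Fraction(1, 2)

def round_half_up(t):
    """Round to the nearest integer, with exact halves rounded up."""
    return math.floor(t + HALF)

def round_half_down(t):
    """Round to the nearest integer, with exact halves rounded down."""
    return math.ceil(t - HALF)

def split_atoms(m, share_left):
    """Split m discretization points between two child cells.

    share_left is the (hypothetical) fraction of the budget given to the left cell.
    Complementary rounding keeps left + right == m.
    """
    left = round_half_up(m * share_left)
    right = round_half_down(m * (1 - share_left))
    return left, right

example = split_atoms(5, Fraction(1, 2))   # a tie: 2.5 goes up on the left, down on the right
```

Using the same rounding rule on both sides would give 3 + 3 or 2 + 2 in the tie above, which is the mismatch the two complementary rules avoid.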

| Algorithm A2 Adaptive Refinement via Depth First Search |

|

| Algorithm A3 Adaptive EDOT Variation 1 |

|

Appendix C.5. Adaptive Refinement: Variation 1

The original division algorithm of the adaptive EDOT (Algorithm A2) cuts a cell into two by balancing the averaged contribution of per discretization point between the divided cells. However, when the mass of is not distributed evenly in the cell to be cut, especially if it concentrates in a few small regions, the estimate of obtained from the diameter of the cell becomes far greater than the actual value, so that far too many discretization points are assigned to the cell (Figure A1). We therefore developed the division algorithm of Variation 1 to improve the performance in this situation by estimating the Wasserstein distance from the occupied volume of a set of sample points (usually the same sample points used in Algorithm A2). Given a resolution R (the upper bound of a cell’s volume can be taken as , with as the size of sample points), we keep cutting the region X until each cell either has a volume smaller than R or contains no sample points; we then call the total volume of the nonempty cells the occupied volume . A similar definition applies to each cell .
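The occupied-volume computation can be sketched as a recursive midpoint cut. The code below assumes an axis-aligned box, cycles through the axes in order, and takes the resolution as an explicit argument. The function name and these choices are assumptions for illustration rather than the paper's implementation.

```python
import numpy as np

def occupied_volume(points, lower, upper, resolution, axis=0):
    """Total volume of the nonempty leaf cells of a midpoint cut of the box [lower, upper].

    A cell becomes a leaf when it holds no sample points (contributing 0)
    or when its volume drops below `resolution` (contributing its volume).
    """
    if len(points) == 0:
        return 0.0
    volume = float(np.prod(upper - lower))
    if volume < resolution:
        return volume
    mid = 0.5 * (lower[axis] + upper[axis])
    in_left = points[:, axis] < mid
    left_upper = upper.copy()
    left_upper[axis] = mid
    right_lower = lower.copy()
    right_lower[axis] = mid
    next_axis = (axis + 1) % len(lower)           # cycle through the coordinates
    return (occupied_volume(points[in_left], lower, left_upper, resolution, next_axis)
            + occupied_volume(points[~in_left], right_lower, upper, resolution, next_axis))

# Samples concentrated on [0, 0.25) inside the unit interval.
concentrated = np.linspace(0.01, 0.24, 50).reshape(-1, 1)
box_lower, box_upper = np.array([0.0]), np.array([1.0])
vol_concentrated = occupied_volume(concentrated, box_lower, box_upper, resolution=0.1)

# Samples spread over the whole interval.
spread = np.linspace(0.01, 0.99, 200).reshape(-1, 1)
vol_spread = occupied_volume(spread, box_lower, box_upper, resolution=0.1)
```

In the concentrated case only the four leaf cells covering [0, 0.25) are nonempty, so the occupied volume is a quarter of the cell's volume.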

Figure A1.

Divide-and-conquer strategies: original and Variation 1. The original tends to assign more atoms to vast regions with small weights, while Variation 1 does better. Example: distribution on , with the pdf plotted as a blue curve and the discretization size of each cell shown in black.

Once the occupied volume of each cell is available, we can assign a number of discretization points to each cell. The only change made by division algorithm Variation 1 in this part is in the Wasserstein estimation step (lines 12 and 13 in Algorithm A2), where the estimated Wasserstein distance of cell i is changed from to .

The algorithm that assigns the discretization sizes depends on the estimation of the Wasserstein distance; however, this estimation in Variation 1 requires the occupied volume, which is calculated from leaf to root, meaning that the binary tree for the occupied volume has to be built before starting Algorithm A2 with a modified estimation of . Fortunately, since the cutting points in this step have to coincide with those of the occupied-volume calculation, and both steps need the sample points belonging to each cell, we may save the partition of the sample points obtained while building the binary tree for the occupied volume and reuse it when assigning discretization sizes. Therefore, the step that assigns discretization sizes works as a tree traversal (on a subtree with the same root, which is defined by the stopping conditions in depth along each path) of the binary tree built for the occupied volume calculation.

Therefore, the time complexity for the occupied volume calculation is again , as the algorithm works in the same way as Quicksort again, and the time complexity for the rest (assigning discretization sizes) is as traversal on a tree of, at most, m leaves (m discretization points in total).

For space complexity, it is still , since after the calculation of occupied volume, the rest is only adding constant size decorations onto the subtree with, at most, m leaves mentioned above.

Appendix C.6. Adaptive Refinement: Variation 2

The “cutting in the middle” method is easy to implement and guarantees that the volume decreases with depth in the tree (so the depth needed to get under the resolution is bounded). However, it is also too rigid to fit the natural gaps of the original distribution, which may critically affect the optimal location of discretization points.

Variation 2 redefines the cutting points, moving them from the midpoints along the corresponding axis to the sparsest points. The sparsity is calculated by the moving window method along an axis/component of the d coordinates; to apply the moving window method, we may have to sort the data points every time (since the sorting axis/component may differ at each node). Since we still want to control the depth of the tree, a correction must be added to keep the cutting point from lying too close to the boundaries (usually, the function with a and b the boundaries and as a constant). One consequence is that each cell’s volume (not the occupied volume) now has to be calculated from the rectangular boundaries instead of being inferred from its depth alone as before.
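A moving-window search for the sparsest cutting point along one axis can be sketched as follows. The window width, the candidate grid, and in particular the `margin` parameter that keeps the cut away from the boundaries a and b are hypothetical choices for this example; the correction function mentioned above is not reproduced here.

```python
import bisect

def sparsest_cut(values, a, b, window=0.1, margin=0.2, n_candidates=101):
    """Pick a cutting point in [a, b] where a sliding window contains the fewest points.

    values: coordinates of the sample points along the chosen axis.
    window: window width as a fraction of (b - a)            (assumed).
    margin: fraction of (b - a) excluded next to each boundary (assumed).
    """
    xs = sorted(values)                          # sorting is needed at every node
    lo = a + margin * (b - a)
    hi = b - margin * (b - a)
    half = 0.5 * window * (b - a)
    best_cut, best_count = None, None
    for k in range(n_candidates):
        c = lo + (hi - lo) * k / (n_candidates - 1)
        # Number of points inside [c - half, c + half], via binary search.
        count = bisect.bisect_right(xs, c + half) - bisect.bisect_left(xs, c - half)
        if best_count is None or count < best_count:
            best_cut, best_count = c, count
    return best_cut

# Two clusters separated by a gap: the cut should fall inside the gap.
two_clusters = [0.01 * k for k in range(31)] + [0.7 + 0.01 * k for k in range(31)]
cut_gap = sparsest_cut(two_clusters, 0.0, 1.0)

# All mass on the right: the margin keeps the cut away from the left boundary.
right_heavy = [0.5 + 0.005 * k for k in range(100)]
cut_margin = sparsest_cut(right_heavy, 0.0, 1.0)
```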

Thus, the influence on the time complexity is the following: 1. Changing the tree-building step to in the average case, in the worst case (if Quicksort is applied in each node’s moving-window method), and 2. Introducing a for calculating the volume on each node in the binary tree. Furthermore, it introduces, at most, additional space complexity, since each cell’s volume has to be stored instead of being calculated directly from the depth.

Variation 2 can be applied together with Variation 1, since they are aiming at different parts of the algorithm. An example is shown in Figure A6.

Appendix D. Empirical Parts

Appendix D.1. Estimate : Richardson Extrapolation and Others

In the analysis, we may need to compare how discretization methods behave. However, when the is not discrete, we are generally not able to obtain the analytical solution to the Wasserstein distance.

In certain cases, including all examples in this paper, the Wasserstein distance can be estimated from a large finite sample. According to [26], for in our setup (a probability measure on a compact Polish space with Borel algebra) and with being a continuous function, the Online Sinkhorn method can be used to estimate . The Online Sinkhorn needs a large number of samples for (in batch) to be accurate.

In our paper, as X are compact subsets in , and has a continuous probability density function, we may use the Richardson Extrapolation method to estimate the Wasserstein distance between and , which may require fewer samples and fewer computations (the Sinkhorn twice with different sizes).

Our examples are on intervals or rectangles, in which two grids of N points and of points (N and are both integers) can be constructed naturally for each. With determined by a smooth probability density function , let be the normalization of (this may not be a probability distribution, so we use its normalization). From a continuity of and the boundedness of the dual variables and , we can conclude that

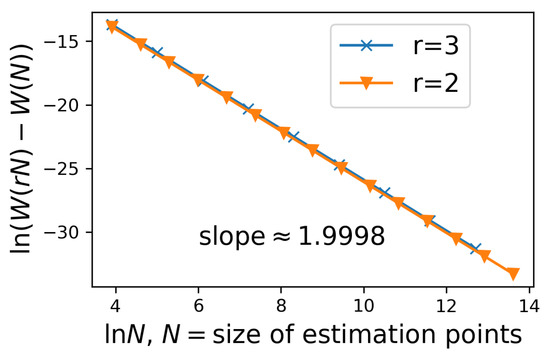

Let be a function of ; to apply Richardson extrapolation, we need the exponent of the lowest term of in the expansion , where .

Consider that

Since , we may conclude that , where d is the dimension of X. Figure A2 shows an empirical example in a and situation.
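The extrapolation step itself is a two-point formula. The sketch below assumes an expansion of the form f(N) = f_inf + C N^(-p) + higher-order terms and combines the values at N and at a refined size ratio·N. The exponent `p` is left as a parameter because the exponent derived above is not reproduced here, and the test function is synthetic.

```python
def richardson(f_coarse, f_fine, ratio, p):
    """Two-point Richardson extrapolation.

    f_coarse: value computed with N grid points.
    f_fine:   value computed with ratio * N grid points.
    p:        exponent of the leading error term N**(-p) (assumed known).
    """
    scale = ratio ** p
    return (scale * f_fine - f_coarse) / (scale - 1.0)

def synthetic_estimate(n):
    """Hypothetical quantity with limit 2.0 and leading error 3 / n**2."""
    return 2.0 + 3.0 / n ** 2

extrapolated = richardson(synthetic_estimate(10), synthetic_estimate(20), ratio=2, p=2)
```

When the error consists only of the leading term, as in this synthetic case, the extrapolated value recovers the limit up to rounding; higher-order terms leave a smaller residual error.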

Figure A2.

Richardson Extrapolation: the power of the expansion about . We take the EDOT of example 2 (1-dim truncated normal mixture) as the target and use evenly positioned for different Ns to estimate. The y-axis is , where and are calculated. With as x-axis, linearity can be observed. The slopes are both about , which represent the exponent of the leading non-constant term of on N, while the theoretical result is . The differences are from higher order terms on N.

Appendix D.2. Example: The Sphere

The CW complex structure of the unit sphere is constructed as follows: let , the point on the equator, be the only dimension-0 structure, and let the equator be the dimension-1 structure (line segment attached to the dimension-0 structure by identifying both end points to the only point ). The dimension-2 structure is the union of two unit discs, which is identified to the south/north hemisphere of by stereographic projection:

with respect to the north/south pole.
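For concreteness, the standard stereographic projection between a hemisphere of the unit sphere and the unit disc can be written as below. It is assumed, not confirmed by the text, that this is the parametrization intended above. The `pole_sign` argument selects the projection pole (+1 for the north pole, which maps the southern hemisphere to the unit disc, and -1 for the south pole).

```python
import math

def sphere_to_disc(p, pole_sign=1.0):
    """Stereographic projection from the pole (0, 0, pole_sign) onto the equatorial plane."""
    x, y, z = p
    scale = 1.0 - pole_sign * z
    return (x / scale, y / scale)

def disc_to_sphere(q, pole_sign=1.0):
    """Inverse of sphere_to_disc for the same pole."""
    u, v = q
    r2 = u * u + v * v
    return (2.0 * u / (1.0 + r2),
            2.0 * v / (1.0 + r2),
            pole_sign * (r2 - 1.0) / (1.0 + r2))

south_point = (0.6, 0.0, -0.8)                       # on the southern hemisphere
south_coord = sphere_to_disc(south_point, pole_sign=1.0)
south_back = disc_to_sphere(south_coord, pole_sign=1.0)

north_point = (0.0, 0.6, 0.8)                        # on the northern hemisphere
north_coord = sphere_to_disc(north_point, pole_sign=-1.0)
north_back = disc_to_sphere(north_coord, pole_sign=-1.0)
```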

Spherical Geometry

The spherical geometry is the Riemannian manifold structure induced by embedding onto the unit sphere in .

The geodesic between two points is the shorter arc along the great circle determined by the two points. In their coordinates, . Composed with stereographic projections, the distance in terms of CW complex coordinates can be calculated (and be differentiated).

The gradient with respect to (or its CW coordinate) can be calculated via the above formulas. In practice, the only difficulty arises where the function arccos is singular, at . By the symmetry of the sphere under rotation about the axis , the derivatives of the distance along all directions are the same there. Therefore, we may choose the radial direction on the CW coordinate (unit disc). The differentiations are then elementary to calculate.
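A small sketch of the geodesic distance on the unit sphere and its tangential gradient, working directly with unit vectors in three dimensions. The clamping of the inner product and the choice to return `None` at the singular configuration (leaving the direction to the caller, for instance the radial direction described above) are assumptions of this example.

```python
import math

def geodesic_distance(p, q):
    """Arc length of the shorter great-circle arc between unit vectors p and q."""
    dot = sum(a * b for a, b in zip(p, q))
    dot = max(-1.0, min(1.0, dot))        # guard against rounding outside [-1, 1]
    return math.acos(dot)

def geodesic_gradient(p, q, eps=1e-12):
    """Tangential gradient of geodesic_distance(p, q) with respect to p.

    Returns None where arccos is singular (p equal or antipodal to q);
    the caller then has to pick a direction by convention.
    """
    dot = max(-1.0, min(1.0, sum(a * b for a, b in zip(p, q))))
    norm = math.sqrt(max(0.0, 1.0 - dot * dot))
    if norm < eps:
        return None
    return tuple(-(b - dot * a) / norm for a, b in zip(p, q))

p0 = (1.0, 0.0, 0.0)
q0 = (0.0, 1.0, 0.0)
dist = geodesic_distance(p0, q0)
grad = geodesic_gradient(p0, q0)
```

Away from the singular points the gradient is a unit tangent vector pointing away from q, which matches the fact that a distance function changes at unit rate along geodesics.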

Appendix D.3. A Note on the Implementation of SGD with Momentum

There is a slight difference between our implementation of the SGD and the algorithm provided in the paper. In the implementation, we give two different learning rates to the positions (s) and the weights (s), as moving along positions is usually observed to be much slower than moving along weights. Empirically, we set the learning rate on the positions to exactly three times the learning rate on the weights at each SGD iteration. With this change, the convergence is faster, but we do not have a theory or empirical evidence to show that a fixed ratio of three is the best choice.

Implementing and testing Newton’s method (in a hybrid with SGD) and other improved SGD methods are promising directions for future work.
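The two-learning-rate update can be sketched as a classical momentum step applied to two parameter groups. Only the ratio of three between the learning rates is taken from the text; the momentum coefficient, the base learning rate, and the quadratic toy objective are assumptions, and any constraint keeping the weights on the simplex is omitted here.

```python
import numpy as np

def momentum_step(pos, wts, grad_pos, grad_wts, vel_pos, vel_wts,
                  lr_wts=0.01, ratio=3.0, momentum=0.9):
    """One momentum update with a larger learning rate on the positions."""
    vel_pos = momentum * vel_pos - ratio * lr_wts * grad_pos   # positions: ratio * lr_wts
    vel_wts = momentum * vel_wts - lr_wts * grad_wts           # weights: lr_wts
    return pos + vel_pos, wts + vel_wts, vel_pos, vel_wts

# Toy objective |pos - 1|^2 + |wts - 2|^2, used only to exercise the update.
pos, wts = np.zeros(2), np.zeros(3)
vel_pos, vel_wts = np.zeros(2), np.zeros(3)
for _ in range(500):
    grad_pos = 2.0 * (pos - 1.0)
    grad_wts = 2.0 * (wts - 2.0)
    pos, wts, vel_pos, vel_wts = momentum_step(pos, wts, grad_pos, grad_wts,
                                               vel_pos, vel_wts)
```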

Figure A3.

The sphere example with 3D discretization (same as the paper) on two view directions. Colors of dots represent the weights of each atom in the distribution.

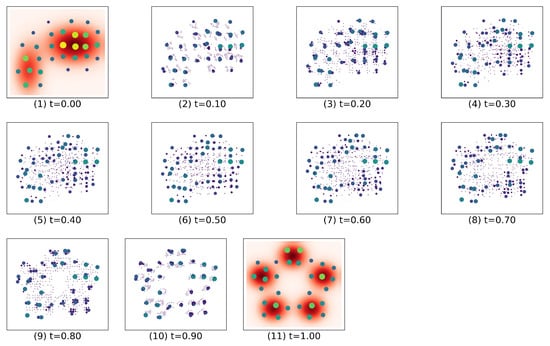

Appendix D.4. An Example on Transference Plan with Adaptive EDOT

We now illustrate the performance of the adaptive EDOT on a 2D optimal transport task. Let , be the Euclidean distance, , and the marginal , be truncated normal (mixtures), where has only two components and has five components. Figure A4 plots the McCann Interpolation of the OT plan between and (shown in red dots) and its discrete approximations (weights are color coded) with . With , the adaptive EDOT results were as follows: , , and . With , the adaptive EDOT results were as follows: , , and . The naive sampling results were as follows: , , and . The adaptive EDOT approximated the quality of 900 naive samples with only 100 points on a four-dimensional transference plan.
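For a discrete plan, the McCann interpolation at time t moves each unit of transported mass along the straight segment from its source atom to its target atom. A minimal sketch follows, with hypothetical names and a toy plan that is not one of the plans reported above.

```python
import numpy as np

def mccann_interpolation(x, y, plan, t):
    """Atoms and weights of the displacement interpolation at time t in [0, 1].

    x: (n, d) source atoms, y: (m, d) target atoms, plan: (n, m) coupling.
    Each positive entry plan[i, j] becomes an atom at (1 - t) * x[i] + t * y[j].
    """
    i, j = np.nonzero(plan > 0)
    points = (1.0 - t) * x[i] + t * y[j]
    weights = plan[i, j]
    return points, weights

# Toy plan: two atoms, each moved straight up by one unit.
x_atoms = np.array([[0.0, 0.0], [1.0, 0.0]])
y_atoms = np.array([[0.0, 1.0], [1.0, 1.0]])
toy_plan = np.array([[0.5, 0.0], [0.0, 0.5]])
mid_points, mid_weights = mccann_interpolation(x_atoms, y_atoms, toy_plan, t=0.5)
start_points, _ = mccann_interpolation(x_atoms, y_atoms, toy_plan, t=0.0)
```

Evaluating the function on a grid of values of t yields a sequence of frames of the kind shown in an interpolation figure.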

Figure A4.

The McCann interpolation figures in finer time resolution for visualizing the transference plan from (1)–(11). It is a refined version of the figure in the paper. We can see that the larger bubbles (representing a large probability mass) moved a short distance, while the smaller pieces moved farther.

Appendix D.5. Example: Swiss Roll

In this case, the underlying space is the Swiss Roll, which is a 2D rectangular strip embedded in : in cylindrical coordinates. is a truncated normal mixture on a -plane. Samples over are shown in Figure A5 (left) embedded in 3D and in Figure A6a as isometric into .

By following the Euclidean metric in , Figure A5 (right) plots the EDOT solution through adaptive cell refinement (Algorithm A2) with . The resulting cell structure is shown as colored boxes. The corresponding partition of is shown on Figure A6a, with samples contained in a cell marked by the same color. According to Figure A5 (right), the points in were mainly located on the strip, with only one point off in the most sparse cell (yellow cell located in the bottom in the figure).

Figure A5.

Discretization of a distribution supported on a Swiss Roll. Left: A total of 15,000 samples from the truncated normal mixture distribution over . Right: A 50-point 3D discretization using Variation 2 of Algorithm A2; the refinement cells are shown in colored boxes.

On the other hand, consider the metric on induced by the isometry from the Swiss Roll as a manifold to a strip on . A more intrinsic discretization of can be obtained by applying the EDOT through a refinement on the coordinate space—the (2D) strip. The partition of is shown on Figure A6b, and the resulting discretization is shown in Figure A6c. Notice that all 50 points were located on the (locally) high density region of the Swiss Roll. We observe from Figure A6a,b that the 3D partition pulled disconnected and intrinsically remote regions together, while the 2D partition maintained the intrinsic structure.

Figure A6.

Swiss Roll under isometry. (a) Refinement cells under 3D Euclidean metric (one color per samples from a refinement cell). (b) Refinement cells under 2D metric induced by the isometry. (c) EDOT of 50 points with respect to the 2D refinement. for all.

Appendix D.6. Example: Figure Densities

We used OpenCV to process the figures. The cat figure is a gray-scale figure of size . Variation 1 was used in cutting the figure into pieces, since the figure contains some sparse regions on which the original division algorithm does not work well.

For the colored figures (Starry Night and Girl with a Pearl Earring) in Figure 1, we processed the three channels independently, plotted the colored dots, and finally combined them as the corresponding channels of a color file. In the reconstruction of Starry Night, we drew all colored dots at the same size and modified the color value according to the weights. For Girl with a Pearl Earring, we used pure colors ((255,0,0) as red, etc.) and varied the size of the dots (with an area proportional to the weights).
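To treat a single image channel as a density, one can normalize the pixel intensities into a probability distribution and sample points from it. The sketch below uses NumPy only (no OpenCV calls) and assumes that darker pixels carry more mass. That convention, the function name, and the uniform jitter inside each pixel are assumptions of this example.

```python
import numpy as np

def image_to_samples(gray, n_samples, rng, dark_is_mass=True):
    """Sample (x, y) points from a gray-scale array interpreted as a density.

    gray: 2D array with values in [0, 255].
    dark_is_mass: if True, darker pixels receive more probability (assumed convention).
    """
    gray = np.asarray(gray, dtype=float)
    mass = 255.0 - gray if dark_is_mass else gray
    prob = mass.ravel() / mass.sum()
    idx = rng.choice(prob.size, size=n_samples, p=prob)
    rows, cols = np.unravel_index(idx, gray.shape)
    jitter = rng.uniform(0.0, 1.0, size=(n_samples, 2))   # spread samples inside each pixel
    return np.column_stack([cols, rows]) + jitter

# Toy 4 x 4 image: white everywhere except one black pixel at row 1, column 2.
toy_image = np.full((4, 4), 255.0)
toy_image[1, 2] = 0.0
toy_samples = image_to_samples(toy_image, 100, np.random.default_rng(0))
```

For a color image the same function could be applied to each of the three channels separately.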

Appendix D.7. Example: Simple Barycenter Problems

The EDOT in simple form (no divide and conquer) can solve barycenter problems. The idea is simple: the gradient of a sum of functions is the sum of the gradients of the individual functions. Thus, to find the discrete barycenter of size m for several distributions , we take the objective to be the sum of Wasserstein distances (raised to the power k for rationality), whose gradient is the sum of the gradients between the discretization and each target distribution. This method only works for the simple EDOT, since there is no locality in barycenter problems. After a division, the weights of each target distribution in each cell of the partition may be different, so there is inter-cell transport in the optimal transport plan, which the current algorithm cannot deal with.

We can see in Figure A7 that the simple EDOT-Equal (no divide and conquer) achieved results similar to the non-regularized discretization in [18], whereas the EDOT produced a better approximation of the barycenter by taking advantage of changing weights freely.
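The sum-of-gradients idea can be sketched in one dimension with equal, fixed weights. As a simplification, the code uses envelope-type position gradients of the regularized problem rather than the gradient of Appendix B, and the two uniform target distributions, the step size, and all names are assumptions for this example.

```python
import numpy as np

def sinkhorn_plan(a, b, cost, zeta, n_iter=200):
    K = np.exp(-cost / zeta)
    u, v = np.ones_like(a), np.ones_like(b)
    for _ in range(n_iter):
        u = a / (K @ v)
        v = b / (K.T @ u)
    return u[:, None] * K * v[None, :]

def position_gradient(x, y, w, zeta):
    """Assumed envelope-type gradient of the regularized cost between samples x and atoms y."""
    a = np.full(len(x), 1.0 / len(x))
    diff = x[:, None, :] - y[None, :, :]
    plan = sinkhorn_plan(a, w, (diff ** 2).sum(axis=2), zeta)
    return -2.0 * (plan[:, :, None] * diff).sum(axis=0)

def barycenter_sketch(samplers, m=4, zeta=0.5, batch=64, steps=200, lr=0.5, seed=0):
    rng = np.random.default_rng(seed)
    w = np.full(m, 1.0 / m)                    # equal weights, kept fixed
    y = samplers[0](rng, m)                    # start from samples of the first target
    for _ in range(steps):
        # The gradient of the summed objective is the sum of the per-target gradients.
        grad = sum(position_gradient(s(rng, batch), y, w, zeta) for s in samplers)
        y = y - lr * grad
    return y, w

def target_left(rng, n):
    return rng.uniform(0.0, 1.0, size=(n, 1))   # hypothetical target 1

def target_right(rng, n):
    return rng.uniform(2.0, 3.0, size=(n, 1))   # hypothetical target 2

bary_atoms, bary_weights = barycenter_sketch([target_left, target_right])
bary_mean = float((bary_weights * bary_atoms[:, 0]).sum())
```

With these two targets the weighted mean of the atoms settles near 1.5, halfway between the two target means.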

Figure A7.

A 7-point barycenter of two Gaussian distributions: (a): EDOT, area of dots represent the weights, ; (b): EDOT-Equal, ; (c): [18], ; W are regularized with .

References

1. Kantorovich, L.V. On the translocation of masses. J. Math. Sci. 2006, 133, 1381–1382.

2. Peyré, G.; Cuturi, M. Computational optimal transport. Found. Trends Mach. Learn. 2019, 11, 355–607.

3. Villani, C. Optimal Transport: Old and New; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2008; Volume 338.

4. Janati, H.; Muzellec, B.; Peyré, G.; Cuturi, M. Entropic Optimal Transport between Unbalanced Gaussian Measures has a Closed Form. Adv. Neural Inf. Process. Syst. 2020, 33, 10468–10479.

5. Genevay, A.; Cuturi, M.; Peyré, G.; Bach, F. Stochastic optimization for large-scale optimal transport. arXiv 2016, arXiv:1605.08527.

6. Allen-Zhu, Z.; Li, Y.; Oliveira, R.; Wigderson, A. Much faster algorithms for matrix scaling. In Proceedings of the 2017 IEEE 58th Annual Symposium on Foundations of Computer Science (FOCS), Berkeley, CA, USA, 15–17 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 890–901.

7. Lin, T.; Ho, N.; Jordan, M.I. On the efficiency of the Sinkhorn and Greenkhorn algorithms and their acceleration for optimal transport. arXiv 2019, arXiv:1906.01437.

8. Cuturi, M. Sinkhorn distances: Lightspeed computation of optimal transport. In Proceedings of the Advances in Neural Information Processing Systems, Harrahs and Harveys, Lake Tahoe, NV, USA, 5–10 December 2013; pp. 2292–2300.

9. Sinkhorn, R.; Knopp, P. Concerning nonnegative matrices and doubly stochastic matrices. Pac. J. Math. 1967, 21, 343–348.

10. Wang, J.; Wang, P.; Shafto, P. Sequential Cooperative Bayesian Inference. In Proceedings of the International Conference on Machine Learning, PMLR, Online/Vienna, Austria, 12–18 July 2020; pp. 10039–10049.

11. Tran, M.N.; Nott, D.J.; Kohn, R. Variational Bayes with intractable likelihood. J. Comput. Graph. Stat. 2017, 26, 873–882.

12. Overstall, A.; McGree, J. Bayesian design of experiments for intractable likelihood models using coupled auxiliary models and multivariate emulation. Bayesian Anal. 2020, 15, 103–131.

13. Wang, P.; Wang, J.; Paranamana, P.; Shafto, P. A mathematical theory of cooperative communication. Adv. Neural Inf. Process. Syst. 2020, 33, 17582–17593.

14. Luise, G.; Rudi, A.; Pontil, M.; Ciliberto, C. Differential properties of sinkhorn approximation for learning with wasserstein distance. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 3–8 December 2018; pp. 5859–5870.

15. Accinelli, E. A Generalization of the Implicit Function Theorems. 2009. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1512763 (accessed on 3 February 2021).

16. Weed, J.; Bach, F. Sharp asymptotic and finite-sample rates of convergence of empirical measures in Wasserstein distance. Bernoulli 2019, 25, 2620–2648.

17. Dudley, R.M. The speed of mean Glivenko-Cantelli convergence. Ann. Math. Stat. 1969, 40, 40–50.

18. Claici, S.; Chien, E.; Solomon, J. Stochastic Wasserstein Barycenters. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Volume 80, pp. 999–1008.

19. Mérigot, Q. A multiscale approach to optimal transport. In Proceedings of the Computer Graphics Forum; Blackwell Publishing Ltd.: Oxford, UK, 2011; Volume 30, pp. 1583–1592.

20. Solomon, J.; de Goes, F.; Peyré, G.; Cuturi, M.; Butscher, A.; Nguyen, A.; Du, T.; Guibas, L. Convolutional Wasserstein Distances: Efficient Optimal Transportation on Geometric Domains. ACM Trans. Graph. 2015, 34, 1–11.

21. Staib, M.; Claici, S.; Solomon, J.M.; Jegelka, S. Parallel Streaming Wasserstein Barycenters. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Long Beach, CA, USA, 2017; Volume 30.

22. Beugnot, G.; Genevay, A.; Greenewald, K.; Solomon, J. Improving Approximate Optimal Transport Distances using Quantization. arXiv 2021, arXiv:2102.12731.

23. Jacobs, M.; Léger, F. A fast approach to optimal transport: The back-and-forth method. Numer. Math. 2020, 146, 513–544.

24. Genevay, A.; Chizat, L.; Bach, F.; Cuturi, M.; Peyré, G. Sample complexity of sinkhorn divergences. In Proceedings of the 22nd International Conference on Artificial Intelligence and Statistics, Naha, Okinawa, Japan, 16–18 April 2019; pp. 1574–1583.

25. Bottou, L.; Curtis, F.E.; Nocedal, J. Optimization methods for large-scale machine learning. Siam Rev. 2018, 60, 223–311.

26. Mensch, A.; Peyré, G. Online sinkhorn: Optimal transport distances from sample streams. Adv. Neural Inf. Process. Syst. 2020, 33, 1657–1667.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).