1. Introduction

Diffusion-based generative models [1,2,3,4,5,6,7] have recently gained popularity due to their ability to synthesize high-quality audio [8,9], image [10,11] and other data modalities [12], outperforming known methods based on Generative Adversarial Networks (GANs) [13], normalizing flows (NFs) [14] or Variational Autoencoders (VAEs) and Bayesian autoencoders (BAEs) [15,16].

Diffusion models learn to generate samples from an unknown density $p_{\text{data}}$ by reversing a diffusion process which transforms the distribution of interest into noise. The forward dynamics injects noise into the data following a diffusion process that can be described by a Stochastic Differential Equation (SDE) of the form

$$\mathrm{d}\mathbf{x}_t = \mathbf{f}(\mathbf{x}_t, t)\,\mathrm{d}t + g(t)\,\mathrm{d}\mathbf{w}_t, \qquad \mathbf{x}_0 \sim p_{\text{data}}, \tag{1}$$

where $\mathbf{x}_t$ is a random variable at time $t$, $\mathbf{f}(\cdot, t)$ is the drift term, $g(\cdot)$ is the diffusion term and $\mathbf{w}_t$ is a Wiener process (or Brownian motion). We also consider a special class of linear SDEs, for which the drift term is decomposed as $\mathbf{f}(\mathbf{x}_t, t) = \alpha(t)\mathbf{x}_t$, where the function $\alpha(t) \le 0$ for all $t$, and the diffusion term is independent of $\mathbf{x}_t$. This class of parameterizations of SDEs is known as affine and it admits analytic solutions. We denote the time-varying probability density by $p(\mathbf{x}, t)$, where, by definition, $p(\mathbf{x}, 0) = p_{\text{data}}(\mathbf{x})$, and the conditional on the initial condition $\mathbf{x}_0$ by $p(\mathbf{x}, t \mid \mathbf{x}_0)$. The forward SDE is usually considered for a “sufficiently long” diffusion time $T$, leading to the density $p(\mathbf{x}, T)$. In principle, when $T \to \infty$, $p(\mathbf{x}, T)$ converges to Gaussian noise, regardless of initial conditions.
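To make the affine case concrete, the following minimal sketch simulates the forward SDE of Equation (1) with Euler-Maruyama and compares the result with the analytic conditional distribution. It assumes a one-dimensional variance-preserving SDE with a constant rate `beta` (drift $-\tfrac{1}{2}\beta x$, diffusion $\sqrt{\beta}$); this specific SDE, the function names and all numerical values are illustrative assumptions, not the configuration used in this work.

```python
import numpy as np

def perturbation_kernel(x0, t, beta=1.0):
    """Analytic mean and std of x_t | x_0 for dx = -0.5*beta*x dt + sqrt(beta) dw."""
    mean = x0 * np.exp(-0.5 * beta * t)
    std = np.sqrt(1.0 - np.exp(-beta * t))
    return mean, std

def euler_maruyama(x0, T, n_steps, beta=1.0, rng=None):
    """Simulate the forward SDE from time 0 to T with a fixed step size."""
    rng = np.random.default_rng() if rng is None else rng
    dt = T / n_steps
    x = np.array(x0, dtype=float)
    for _ in range(n_steps):
        noise = rng.standard_normal(x.shape)
        x = x + (-0.5 * beta * x) * dt + np.sqrt(beta * dt) * noise
    return x

rng = np.random.default_rng(0)
T = 1.0
x0 = np.full(20000, 3.0)                 # all paths start from the same point
xT = euler_maruyama(x0, T, n_steps=500, rng=rng)
mean, std = perturbation_kernel(3.0, T)  # closed-form moments to compare against
print(xT.mean(), mean, xT.std(), std)
```

For this hypothetical SDE the empirical moments of the simulated paths should agree with the closed-form ones up to discretization and Monte Carlo error, and as `T` grows the conditional mean decays to zero while the standard deviation approaches one, regardless of `x0`.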

For generative modeling purposes, we are interested in the inverse dynamics of such a process, i.e., transforming samples of the noisy distribution $p(\mathbf{x}, T)$ into $p_{\text{data}}(\mathbf{x})$. Such dynamics can be obtained by considering the solutions of the inverse diffusion process [17],

$$\mathrm{d}\mathbf{x}_t = \left[-\mathbf{f}(\mathbf{x}_t, t') + g^2(t')\,\nabla \log p(\mathbf{x}_t, t')\right]\mathrm{d}t + g(t')\,\mathrm{d}\mathbf{w}_t, \qquad \mathbf{x}_0 \sim p(\mathbf{x}, T), \tag{2}$$

where $t' = T - t$, with the inverse dynamics involving a new Wiener process. Given $p(\mathbf{x}, T)$ as the initial condition, the solution of Equation (2) after a reverse diffusion time $T$ will be distributed as $p_{\text{data}}(\mathbf{x})$. We refer to the density associated with the backward process as $q(\mathbf{x}, t)$. The simulation of the backward process is referred to as sampling and, unlike the forward process, this process is not affine and a closed-form solution is out of reach.
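The role of the initial condition in the reverse process can be illustrated with a small simulation. The sketch below assumes one-dimensional Gaussian data, for which the score of the forward marginal is known in closed form, and a variance-preserving SDE with constant rate; the constants `BETA`, `M`, `S` and the function names are hypothetical. It integrates the reverse SDE of Equation (2) with Euler-Maruyama twice: once starting from the exact forward marginal at time $T$, and once starting from a standard Gaussian.

```python
import numpy as np

BETA, M, S = 1.0, 2.0, 0.5  # hypothetical SDE rate and Gaussian data N(M, S^2)

def marginal_moments(t):
    """Mean and variance of the forward marginal p(x, t) for Gaussian data."""
    decay = np.exp(-BETA * t)
    return M * np.sqrt(decay), S**2 * decay + 1.0 - decay

def true_score(x, t):
    """Exact score grad log p(x, t), available here only because data are Gaussian."""
    mean, var = marginal_moments(t)
    return -(x - mean) / var

def reverse_sde(x_init, T, n_steps, rng):
    """Euler-Maruyama for dx = [-f(x, t') + g^2 score(x, t')] dt + g dw, t' = T - t."""
    dt = T / n_steps
    x = np.array(x_init, dtype=float)
    for k in range(n_steps):
        t_prime = T - k * dt
        drift = 0.5 * BETA * x + BETA * true_score(x, t_prime)
        x = x + drift * dt + np.sqrt(BETA * dt) * rng.standard_normal(x.shape)
    return x

rng = np.random.default_rng(0)
T, n = 0.5, 20000
mean_T, var_T = marginal_moments(T)
exact_start = mean_T + np.sqrt(var_T) * rng.standard_normal(n)  # x_0 ~ p(x, T)
noise_start = rng.standard_normal(n)                            # x_0 ~ N(0, 1)
from_exact = reverse_sde(exact_start, T, 1000, rng)
from_noise = reverse_sde(noise_start, T, 1000, rng)
print(from_exact.mean(), from_exact.std(), from_noise.mean())
```

With the exact initial condition the samples recover the data mean and standard deviation up to numerical error, whereas for this short diffusion time the standard Gaussian start leaves a visible bias in the generated mean.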

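Practical considerations on diffusion times. In practice, diffusion models are challenging to work with [3]. Indeed, direct access to the true score function $\nabla \log p(\mathbf{x}_t, t)$ required in the dynamics of the reverse diffusion is unavailable. This can be solved by approximating it with a parametric function $\mathbf{s}_\theta(\mathbf{x}_t, t)$, e.g., a neural network, which is trained using the following loss function:

$$\mathcal{L}(\theta) = \frac{1}{2}\int_0^T \mathbb{E}_{\sim(1)}\left[\lambda(t)\left\|\mathbf{s}_\theta(\mathbf{x}_t, t) - \nabla \log p(\mathbf{x}_t, t \mid \mathbf{x}_0)\right\|^2\right]\mathrm{d}t, \tag{3}$$

where $\lambda(t)$ is a positive weighting factor and the notation $\mathbb{E}_{\sim(1)}$ means that the expectation is taken with respect to the random process $\mathbf{x}_t$ in Equation (1): for a generic function $h$, $\mathbb{E}_{\sim(1)}[h(\mathbf{x}_t, \mathbf{x}_0, t)] = \int h(\mathbf{x}, \mathbf{z}, t)\,p(\mathbf{x}, t \mid \mathbf{z})\,p_{\text{data}}(\mathbf{z})\,\mathrm{d}\mathbf{x}\,\mathrm{d}\mathbf{z}$. The loss in Equation (3), usually referred to as the score matching loss, is the cost function considered in [18] (Equation (4)). The condition $\lambda(t) = g(t)^2$, which we use in this work, is referred to as likelihood reweighting. Due to the affine property of the drift, the term $p(\mathbf{x}_t, t \mid \mathbf{x}_0)$ is analytically known and normally distributed for all $t$ (expression available in Table 1, and in Särkkä and Solin [19]). Intuitively, the estimation of the score is akin to a denoising objective, which operates in a challenging regime. Later, we will quantify the difficulty of learning the score as a function of $T$.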
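The score matching loss of Equation (3) can be estimated by Monte Carlo because the conditional distribution of the forward process is Gaussian for affine SDEs. The sketch below is a minimal illustration under stated assumptions: a one-dimensional variance-preserving SDE with constant rate `BETA`, Gaussian data $\mathcal{N}(M, S^2)$ so that the exact marginal score is available for comparison, likelihood reweighting (weight equal to the squared diffusion term), and a small lower cutoff `t_min` on the time integral to avoid the singularity of the conditional score at $t \to 0$. All names and values are hypothetical.

```python
import numpy as np

BETA, M, S = 1.0, 2.0, 0.5  # hypothetical SDE rate and Gaussian data N(M, S^2)

def kernel(x0, t):
    """Mean and std of the Gaussian conditional p(x_t, t | x_0)."""
    return x0 * np.exp(-0.5 * BETA * t), np.sqrt(1.0 - np.exp(-BETA * t))

def marginal_score(x, t):
    """Exact score of p(x, t) when the data are N(M, S^2)."""
    decay = np.exp(-BETA * t)
    return -(x - M * np.sqrt(decay)) / (S**2 * decay + 1.0 - decay)

def score_matching_loss(score_fn, T, n=200_000, t_min=1e-2, seed=0):
    """Monte Carlo estimate of the weighted denoising score matching loss."""
    rng = np.random.default_rng(seed)
    x0 = rng.normal(M, S, n)                 # x_0 ~ p_data
    t = rng.uniform(t_min, T, n)             # uniform times for the integral
    mean, std = kernel(x0, t)
    eps = rng.standard_normal(n)
    xt = mean + std * eps                    # x_t ~ p(x_t, t | x_0)
    target = -eps / std                      # grad log p(x_t, t | x_0)
    weight = BETA                            # lambda(t) = g(t)^2
    sq_err = (score_fn(xt, t) - target) ** 2
    return 0.5 * (T - t_min) * np.mean(weight * sq_err)

loss_exact = score_matching_loss(marginal_score, T=1.0)
loss_zero = score_matching_loss(lambda x, t: np.zeros_like(x), T=1.0)
print(loss_exact, loss_zero)
```

Even the exact marginal score attains a strictly positive value of this objective, since the regression target is the conditional score; a poor model such as the zero function gives a larger value. Both calls reuse the same seed so that the comparison is not dominated by Monte Carlo noise.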

While the forward and reverse diffusion processes are valid for all $T$, the noise distribution $p(\mathbf{x}, T)$ is analytically known only when the diffusion time is $T \to \infty$. Then, the common solution is to replace $p(\mathbf{x}, T)$ with a simple (i.e., easy to sample) distribution $p_{\text{noise}}(\mathbf{x})$, which, for the classes of SDEs that we consider in this work, is a Gaussian distribution.

In the literature, the discrepancy between $p(\mathbf{x}, T)$ and $p_{\text{noise}}(\mathbf{x})$ has been neglected, under the informal assumption of a sufficiently large diffusion time. Unfortunately, while this approximation seems a valid approach to simulate and generate samples, the reverse diffusion process starts from an initial condition $q(\mathbf{x}, 0)$ which is different from $p(\mathbf{x}, T)$ and, as a consequence, it will converge to a solution $q(\mathbf{x}, T)$ that is different from the true $p_{\text{data}}(\mathbf{x})$. Later, we will expand on the error introduced by this approximation, but for illustration purposes, Figure 1 shows this behavior quantitatively for a simple 1D toy example where we set the data distribution equal to a mixture of normal ($\mathcal{N}$) distributions. When $T$ is small, the distribution $p_{\text{noise}}(\mathbf{x})$ is very different from $p(\mathbf{x}, T)$ and samples from $p_{\text{noise}}(\mathbf{x})$ exhibit very low likelihood of being generated from $p(\mathbf{x}, T)$.
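The mismatch between the forward marginal at time $T$ and a standard Gaussian can be computed numerically for a one-dimensional mixture. The sketch below assumes a variance-preserving SDE with constant rate `beta`, under which each mixture component stays Gaussian with a shrunken mean and a variance that relaxes toward one. The mixture weights, means and standard deviations are hypothetical values chosen for illustration and are not the ones used for Figure 1.

```python
import numpy as np

def normal_pdf(x, mean, std):
    return np.exp(-0.5 * ((x - mean) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))

def forward_marginal(x, T, weights, means, stds, beta=1.0):
    """Density of x_T when x_0 follows a Gaussian mixture and the SDE is affine."""
    decay = np.exp(-0.5 * beta * T)
    pdf = np.zeros_like(x)
    for w, m, s in zip(weights, means, stds):
        std_T = np.sqrt(s**2 * decay**2 + 1.0 - decay**2)
        pdf += w * normal_pdf(x, m * decay, std_T)
    return pdf

def kl_to_standard_normal(T, weights, means, stds, beta=1.0):
    """KL[p(x, T) || N(0, 1)] by a Riemann sum on a fine grid."""
    x = np.linspace(-12.0, 12.0, 48001)
    dx = x[1] - x[0]
    p = forward_marginal(x, T, weights, means, stds, beta)
    log_p = np.log(np.maximum(p, 1e-300))       # guard against underflow in the tails
    log_q = -0.5 * x**2 - 0.5 * np.log(2.0 * np.pi)
    integrand = np.where(p > 0.0, p * (log_p - log_q), 0.0)
    return float(np.sum(integrand) * dx)

# Hypothetical two-component mixture.
weights, means, stds = (0.4, 0.6), (-1.5, 2.0), (0.2, 0.5)
times = (0.1, 0.5, 2.0, 8.0)
kls = [kl_to_standard_normal(T, weights, means, stds) for T in times]
for T, kl in zip(times, kls):
    print(f"T={T}: KL={kl:.4f}")
```

The divergence decreases monotonically with the diffusion time and becomes negligible only for large `T`, which is the discrepancy that is usually neglected.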

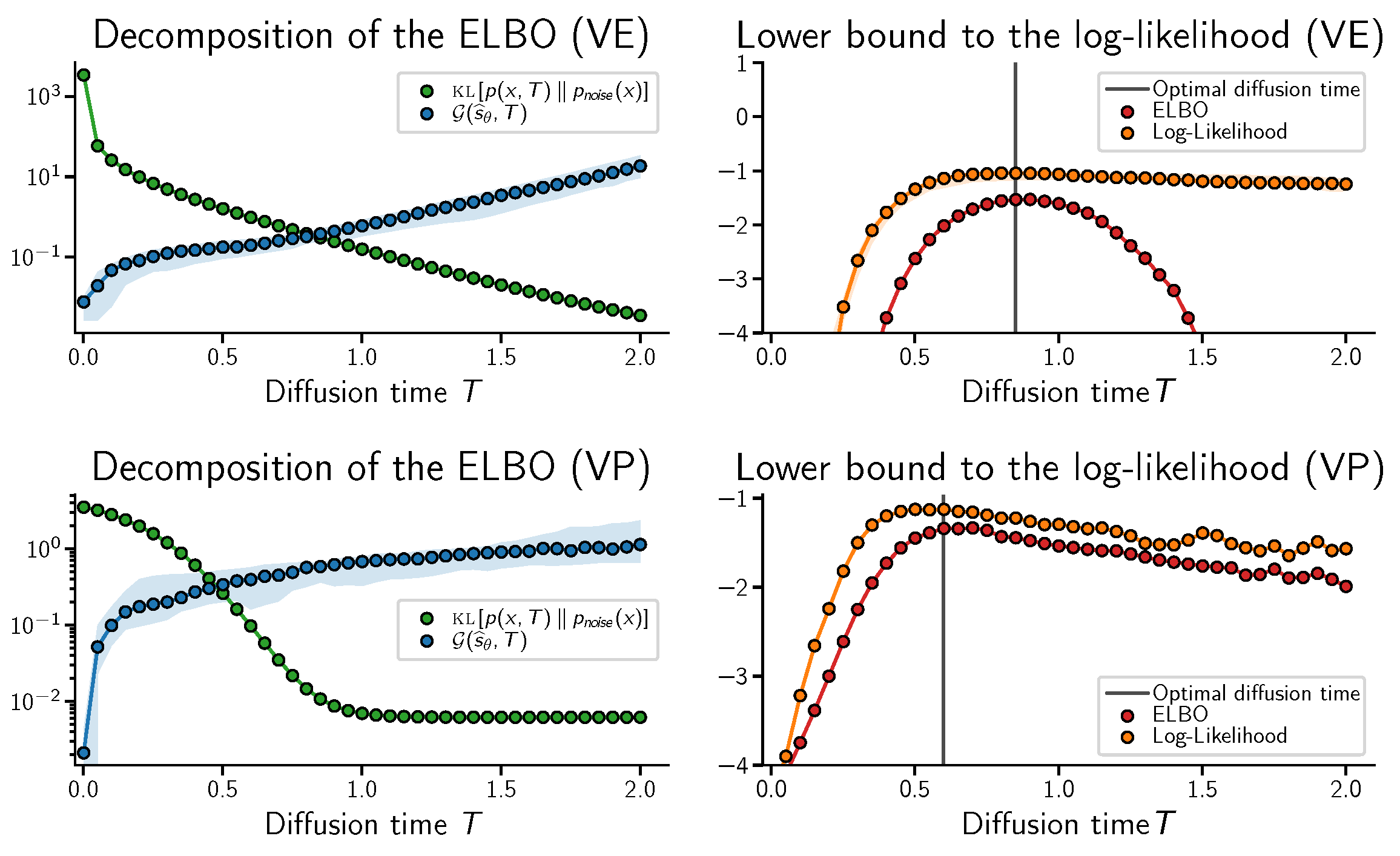

Crucially, Figure 1 (zoomed region) illustrates an unknown behavior of diffusion models, which we unveil in our analysis. The right balance between efficient score estimation and sampling quality can be achieved by diffusion times that are smaller than common best practices. Moreover, even excessively large diffusion times can be detrimental. This is a key observation that we explore in our work.

Contributions. An appropriate choice of the diffusion time $T$ is a key factor that impacts training convergence, sampling time and quality. On the one hand, the approximation error introduced by considering initial conditions for the reverse diffusion process drawn from a simple distribution $p_{\text{noise}}(\mathbf{x})$ increases when $T$ is small. This is why the current best practice is to choose a sufficiently long diffusion time. On the other hand, training convergence of the score model $\mathbf{s}_\theta(\mathbf{x}_t, t)$ becomes more challenging to achieve with a large $T$, which also imposes extremely high computational costs both for training and for sampling. This would suggest choosing a smaller diffusion time. Given the importance of this problem, in this work, we set out to study the existence of suitable operating regimes to strike the right balance between computational efficiency and model quality. The main contributions of this work are the following:

Contribution 1: We use an evidence lower bound (ELBO) decomposition which allows us to study the impact of the diffusion time $T$. This ELBO decomposition emphasizes the roles of (i) the discrepancy between the “ending” distribution of the diffusion and the “starting” distribution of the reverse diffusion processes, and (ii) the score matching objective. Crucially, our analysis does not rely on assumptions on the quality of the score models. We explicitly study the existence of a trade-off and explore experimentally, for the first time, current approaches for selecting the diffusion time $T$.

Contribution 2: In Section 3, we propose a novel method to improve both the training and sampling efficiency of diffusion-based models, while maintaining high sample quality. Our method introduces an auxiliary distribution, allowing us to transform the simple “starting” distribution of the reverse process used in the literature so as to minimize the discrepancy to the “ending” distribution of the forward process. Then, a standard reverse diffusion can be used to closely match the data distribution. Intuitively, our method allows us to build “bridges” across multiple distributions, and to set $T$ toward the advantageous regime of small diffusion times.

In addition to our methodological contributions, in Section 4, we provide experimental evidence of the benefits of our method, in terms of sample quality and log likelihood. Finally, we conclude this work in Section 5.

Related Work. A concurrent work by Zheng et al. [20] presents an empirical study of a truncated diffusion process but lacks a rigorous analysis and a clear justification for the proposed approach. Recent attempts by Lee et al. [9] to optimize $p_{\text{noise}}$, or the proposal to do so [21], have been studied in different contexts. Related works focus primarily on improving sampling efficiency (but not training efficiency), using a wide array of techniques. Sample generation times can be drastically reduced by considering adaptive step-size integrators [22]. Such methods are complementary to our approach, and can be used in combination with the techniques we propose in this work. Other popular choices are based on merging multiple steps of a pretrained model through distillation techniques [23] or on taking larger sampling steps with GANs [24]. Approaches closer to ours modify the SDE, or the discrete-time processes, to obtain inference efficiency gains. In particular, Song et al. [7] consider implicit non-Markovian diffusion processes, while Watson et al. [25] change the diffusion processes by optimal scheduling selection, and Dockhorn et al. [26] consider overdamped SDEs. Finally, hybrid techniques combining VAEs and diffusion models [4] or simple autoencoders and diffusion models [27] have positive effects on training and sampling times.

Moreover, we remark that a simple modification of the noise schedule to steer the diffusion process toward a small diffusion time [5,28] is not a viable solution. As we discuss in Section 2.4, the optimal value of the ELBO, in the case of affine SDEs, is invariant to the choice of the noise schedule. Naively selecting a faster noise schedule does not provide any practical benefit in terms of computational complexity, as it requires smaller step sizes to keep the same accuracy as the simulation with the original noise schedule. However, the optimization of the noise schedule can have important practical effects on the stability of training and the variance of estimations [5]. Finally, a few other works in the literature attempt to study the convergence properties of diffusion models. For instance, De Bortoli et al. [29] obtain a total variation bound between the generated and data distributions under maximum error assumptions between the true and approximated score. De Bortoli [30] relaxes this requirement, obtaining a bound in terms of Wasserstein distance. Lee et al. [31] show how the total variation bound can be expressed as a function of the maximum score error and find that the bound is optimized for a diffusion time that depends on this error. Our work, on the other hand, does not make any assumption and aims at selecting the smallest possible diffusion time to maximize training and sampling efficiency.

3. A New, Practical Method for Decreasing Diffusion Times

The ELBO decomposition in Equation (

9) and the bounds in Lemma 1 and

Section 2.2 highlight a dilemma. We thus propose a simple method that allows us to achieve

both a small gap

and a small discrepancy

. Before that, let us use

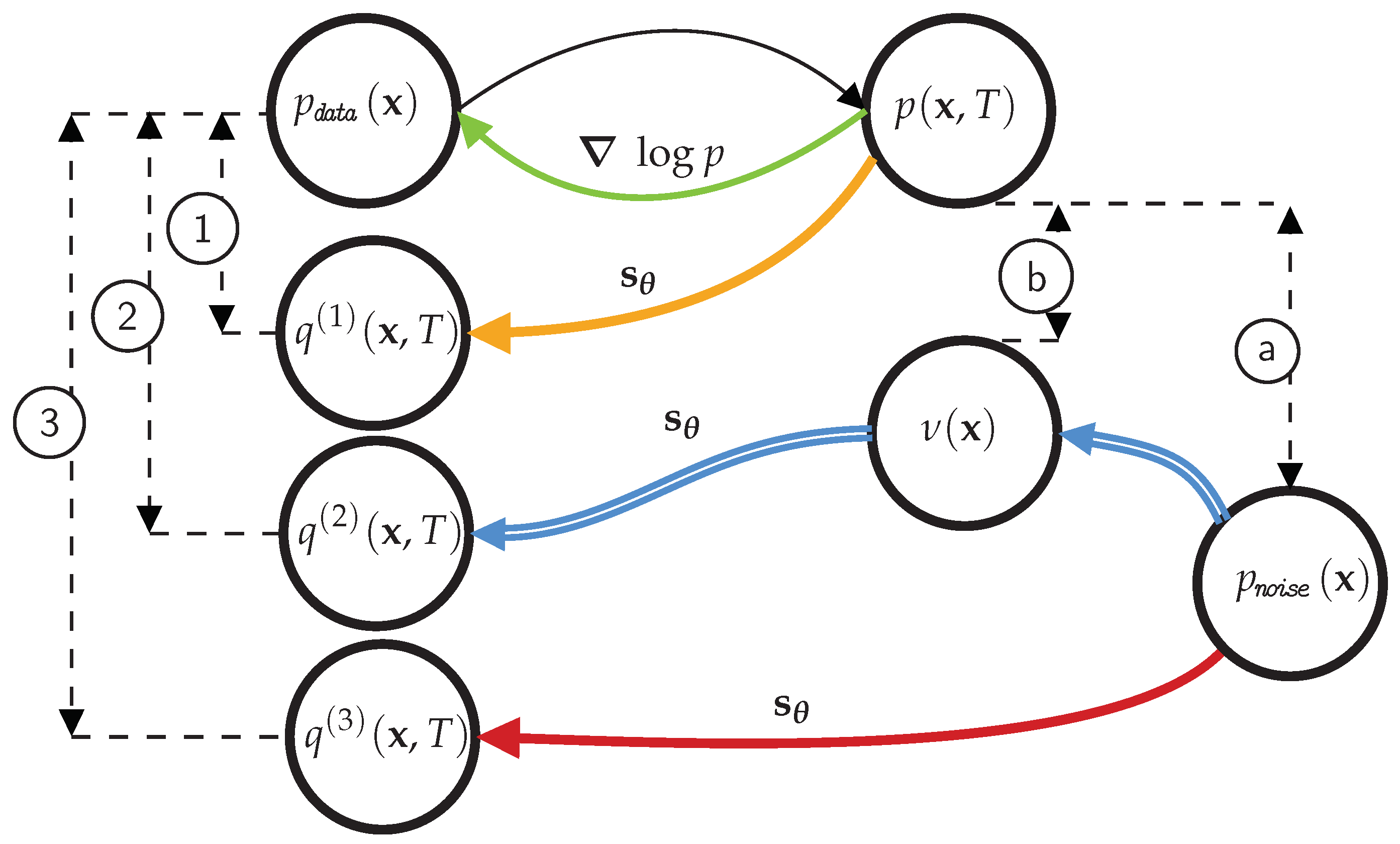

Figure 3 to summarize all densities involved and the effects of the various approximations, which will be useful to visualize our proposal.

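The data distribution $p_{\text{data}}(\mathbf{x})$ is transformed into the noise distribution $p(\mathbf{x}, T)$ through the forward diffusion process. Ideally, starting from $p(\mathbf{x}, T)$, we can recover the data distribution by simulating using the exact score $\nabla \log p$. Using the approximated score $\mathbf{s}_\theta$ and the same initial conditions, the backward process ends up in $q^{(1)}(\mathbf{x}, T)$, whose discrepancy ① to $p_{\text{data}}(\mathbf{x})$ is $\mathcal{G}(\mathbf{s}_\theta, T)$. However, the distribution $p(\mathbf{x}, T)$ is unknown and replaced with an easy distribution $p_{\text{noise}}(\mathbf{x})$, accounting for an error ⓐ measured as $\mathrm{KL}[p(\mathbf{x}, T)\,\|\,p_{\text{noise}}(\mathbf{x})]$. With the score and initial distribution approximated, the backward process ends up in $q^{(3)}(\mathbf{x}, T)$, where the discrepancy ③ from $p_{\text{data}}(\mathbf{x})$ is the sum of the terms $\mathcal{G}(\mathbf{s}_\theta, T) + \mathrm{KL}[p(\mathbf{x}, T)\,\|\,p_{\text{noise}}(\mathbf{x})]$.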

Multiple bridges across densities.

In a nutshell, our method allows us to reduce the gap term by selecting smaller diffusion times and by using a learned auxiliary model to transform the initial density $p_{\text{noise}}(\mathbf{x})$ into a density $\nu_\phi(\mathbf{x})$, which is as close as possible to $p(\mathbf{x}, T)$, thus avoiding the penalty of a large KL term. To implement this, we first transform the simple distribution $p_{\text{noise}}(\mathbf{x})$ into the distribution $\nu_\phi(\mathbf{x})$, whose discrepancy ⓑ is smaller than ⓐ. Then, starting from samples from the auxiliary model $\nu_\phi(\mathbf{x})$, we use the approximate score $\mathbf{s}_\theta$ to simulate the backward process reaching $q^{(2)}(\mathbf{x}, T)$. This solution has a discrepancy ② from the data distribution of $\mathcal{G}(\mathbf{s}_\theta, T) + \mathrm{KL}[p(\mathbf{x}, T)\,\|\,\nu_\phi(\mathbf{x})]$, which we will quantify later in the section. Intuitively, we introduce two bridges. The first bridge connects the noise distribution to an auxiliary distribution that is as close as possible to that obtained by the forward diffusion process. The second bridge, a standard reverse diffusion process, connects the smooth distribution to the data distribution. Notably, our approach has important guarantees, which we discuss next.

3.1. Auxiliary Model Fitting and Guarantees

We begin by stating the requirements we consider for the density $\nu_\phi(\mathbf{x})$. First, as is the case for $p_{\text{noise}}(\mathbf{x})$, it should be easy to generate samples from $\nu_\phi(\mathbf{x})$ in order to initialize the reverse diffusion process. Second, the auxiliary model should allow us to compute the likelihood of the samples generated through the overall generative process, which begins in $p_{\text{noise}}(\mathbf{x})$, passes through $\nu_\phi(\mathbf{x})$, and arrives in $q(\mathbf{x}, T)$.

The fitting procedure of the auxiliary model is straightforward. First, we recognize that minimizing $\mathrm{KL}[p(\mathbf{x}, T)\,\|\,\nu_\phi(\mathbf{x})]$ with respect to $\phi$ also minimizes $\mathbb{E}_{p(\mathbf{x}, T)}[-\log \nu_\phi(\mathbf{x})]$, which we can use as loss function. To obtain the set of optimal parameters $\phi^*$, we require samples from $p(\mathbf{x}, T)$, which can be easily obtained even if the density $p(\mathbf{x}, T)$ is not available. Indeed, by sampling $\mathbf{x}_0 \sim p_{\text{data}}$ and $\mathbf{x}_T \sim p(\mathbf{x}, T \mid \mathbf{x}_0)$, we obtain an unbiased Monte Carlo estimate of $\mathbb{E}_{p(\mathbf{x}, T)}[-\log \nu_\phi(\mathbf{x})]$, and optimization of the loss can be performed. Note that, due to the affine nature of the drift, the conditional distribution $p(\mathbf{x}, T \mid \mathbf{x}_0)$ is easy to sample from, as shown in Table 1. From a practical point of view, it is important to notice that the fitting of $\nu_\phi$ is independent of the training of the score-matching objective, i.e., the result of the score-matching optimization does not depend on the shape of the auxiliary distribution $\nu_\phi$. This implies that the two training procedures can be run in parallel, thus enabling considerable time savings.
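The fitting procedure can be sketched on a toy problem. The example below deliberately swaps in a single Gaussian as the auxiliary family, fitted by maximum likelihood in closed form, instead of the more flexible auxiliary models used in the experiments; the mixture data, the variance-preserving SDE with constant rate `beta`, and all function names are assumptions made for illustration. Samples from the forward marginal at time $T$ are obtained exactly as described above, by drawing a data point and then sampling the Gaussian conditional.

```python
import numpy as np

def sample_data(n, rng):
    """Hypothetical 1D data: a two-component Gaussian mixture."""
    first = rng.random(n) < 0.4
    return np.where(first, rng.normal(-1.5, 0.2, n), rng.normal(2.0, 0.5, n))

def sample_forward(x0, T, rng, beta=1.0):
    """Draw x_T ~ p(x, T | x_0) for dx = -0.5*beta*x dt + sqrt(beta) dw."""
    mean = x0 * np.exp(-0.5 * beta * T)
    std = np.sqrt(1.0 - np.exp(-beta * T))
    return mean + std * rng.standard_normal(x0.shape)

def gaussian_nll(x, mu, sigma):
    """Average negative log-likelihood of x under N(mu, sigma^2)."""
    return float(np.mean(0.5 * np.log(2.0 * np.pi * sigma**2)
                         + 0.5 * ((x - mu) / sigma) ** 2))

rng = np.random.default_rng(0)
xT = sample_forward(sample_data(50_000, rng), T=0.5, rng=rng)

# Maximum-likelihood fit of the Gaussian auxiliary model (closed form).
mu_hat, sigma_hat = xT.mean(), xT.std()

nll_aux = gaussian_nll(xT, mu_hat, sigma_hat)   # loss with the fitted auxiliary model
nll_noise = gaussian_nll(xT, 0.0, 1.0)          # loss with the standard Gaussian
print(nll_aux, nll_noise)
```

Because the standard Gaussian belongs to the Gaussian family, the fitted model can never have a larger loss on these samples, which mirrors the reasoning behind the guarantee stated next. A single Gaussian is of course far too rigid for small diffusion times on real data; it is used here only to keep the sketch short.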

Next, we show that the first bridge in our model reduces the KL term, even for small diffusion times.

Proposition 1.

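Let us assume that $p_{\text{noise}}(\mathbf{x})$ is in the family spanned by $\nu_\phi$, i.e., there exists $\tilde{\phi}$ such that $\nu_{\tilde{\phi}} = p_{\text{noise}}$. Then, for the optimal parameters $\phi^*$, we have that

$$\mathrm{KL}[p(\mathbf{x}, T)\,\|\,\nu_{\phi^*}(\mathbf{x})] \le \mathrm{KL}[p(\mathbf{x}, T)\,\|\,\nu_{\tilde{\phi}}(\mathbf{x})] = \mathrm{KL}[p(\mathbf{x}, T)\,\|\,p_{\text{noise}}(\mathbf{x})].$$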

Since we introduce the auxiliary distribution

, we shall define a new ELBO for our method:

Recalling that is the optimal score for a generic time T, Proposition 1 allows us to claim that .

Then, we can state the following important result:

Proposition 2.

Given the existence of , defined as the diffusion time such that the ELBO is maximized (Section 2.3), there exists at least one diffusion time , such that .

Proposition 2, which we prove in Appendix I, has two interpretations. On the one hand, given two score models optimally trained for their respective diffusion times, our approach guarantees an ELBO that is at least as good as that of a standard diffusion model configured with its optimal diffusion time. Our method achieves this with a smaller diffusion time, which offers sampling efficiency and generation quality. On the other hand, if we settle for an equivalent ELBO for the standard diffusion model and our approach, with our method we can afford a sub-optimal score model, which requires a smaller computational budget to be trained, while guaranteeing shorter sampling times. We elaborate on this interpretation in Section 4, where our approach obtains substantial savings in terms of training iterations.

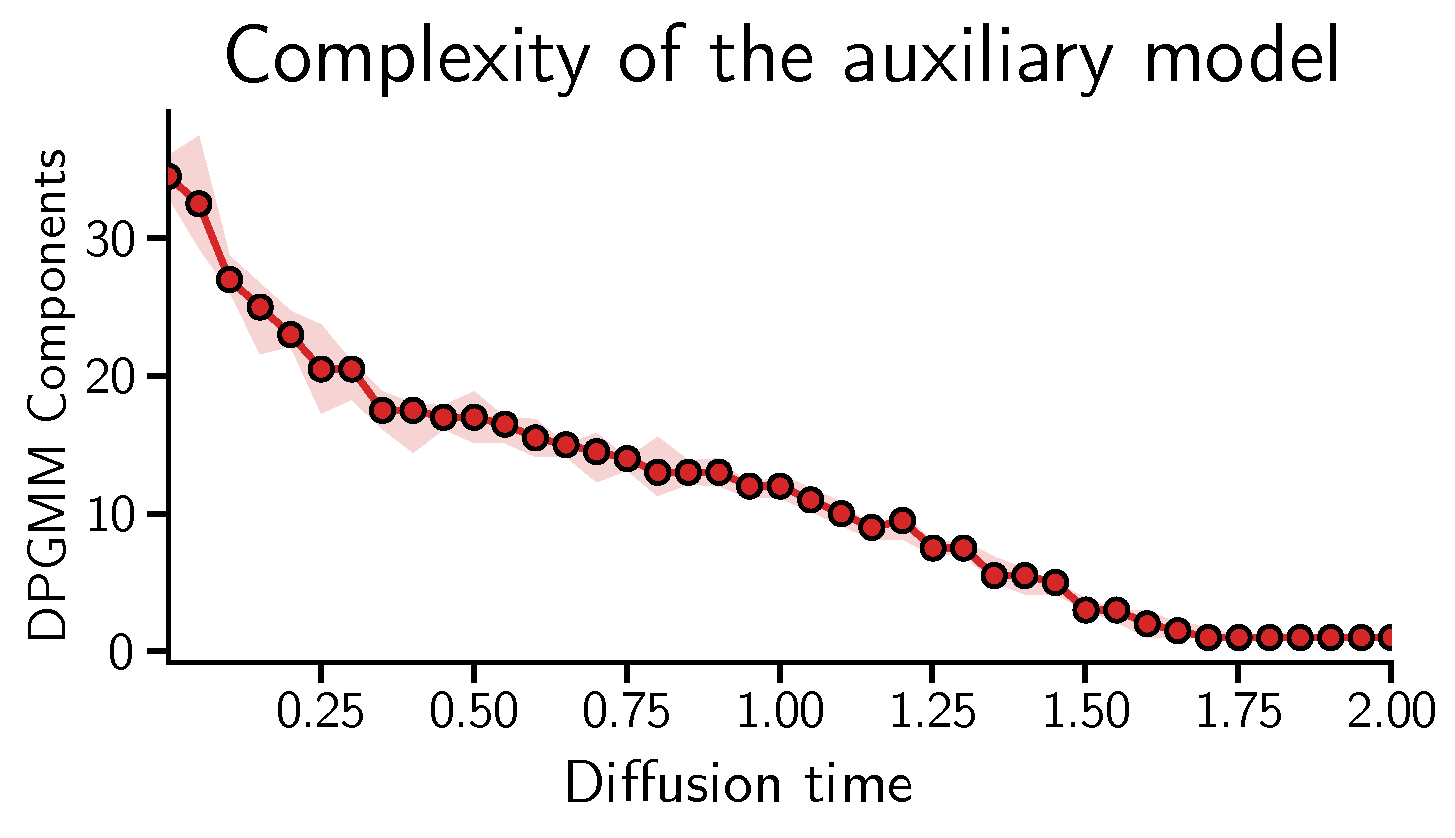

A final note is in order. The choice of the auxiliary model depends on the selected diffusion time. The larger the $T$, the “simpler” the auxiliary model can be. Indeed, the noise distribution $p(\mathbf{x}, T)$ approaches $p_{\text{noise}}(\mathbf{x})$, so that a simple auxiliary model is sufficient to transform $p_{\text{noise}}(\mathbf{x})$ into a distribution $\nu_\phi(\mathbf{x})$. Instead, for a small $T$, the distribution $p(\mathbf{x}, T)$ is closer to the data distribution. Then, the auxiliary model requires high flexibility and capacity. In Section 4, we substantiate this discussion empirically on synthetic and real data.

3.2. Comparison with Schrödinger Bridges

In this section, we briefly compare our method with the Schrödinger bridges approach [29,35,36], which allows one to move from an arbitrary

to

in any finite amount of time

T. This is achieved by simulating the SDE

where

solve the Partial Differential Equation (PDE) system

with boundary conditions

. In the above equation,

, being

N the dimension of the vectors

and the notation

indicating their

component. This approach presents drawbacks compared to classical diffusion models. First, the functions

are not known, and their parametric approximation is costly and complex. Second, it is much harder to obtain quantitative bounds between true and generated data as a function of the quality of such approximations.

The

estimation procedure simplifies considerably in the particular case where

, for arbitrary

T. The solution of Equation (

13) is indeed

. The first PDE of the system is satisfied when

is a constant. The second PDE is the Fokker–Planck equation, satisfied by

. Boundary conditions are also satisfied. In this scenario, a sensible objective is the score-matching, as getting

equal to the true score

allows perfect generation.

Unfortunately, it is difficult to generate samples from $p(\mathbf{x}, T)$, the starting conditions of Equation (12). A trivial solution is to select $T \to \infty$ in order to have $p_{\text{noise}}(\mathbf{x})$ as the simple and analytically known steady-state distribution of Equation (1). This corresponds to the classical diffusion models approach, which we discussed in the previous sections. An alternative solution is to keep $T$ finite and cover the first part of the bridge from $p_{\text{noise}}(\mathbf{x})$ to $p(\mathbf{x}, T)$ with an auxiliary model. This provides a different interpretation of our method, which allows for smaller diffusion times while keeping good generative quality.

3.3. An Extension for Density Estimation

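Diffusion models can also be used for density estimation by transforming the diffusion SDE into an equivalent Ordinary Differential Equation (ODE) whose marginal distribution $p(\mathbf{x}, t)$ at each time instant coincides with that of the corresponding SDE [3]. The exact equivalent ODE requires the score $\nabla \log p(\mathbf{x}_t, t)$, which in practice is replaced by the score model $\mathbf{s}_\theta(\mathbf{x}_t, t)$, leading to the following ODE

$$\mathrm{d}\mathbf{x}_t = \left(\mathbf{f}(\mathbf{x}_t, t) - \frac{1}{2}g(t)^2\,\mathbf{s}_\theta(\mathbf{x}_t, t)\right)\mathrm{d}t, \qquad \mathbf{x}_0 \sim p_{\text{data}}, \tag{14}$$

whose time-varying probability density is indicated with $\tilde{p}(\mathbf{x}, t)$. Note that the density $\tilde{p}(\mathbf{x}, t)$ is in general not equal to the density $p(\mathbf{x}, t)$ associated with Equation (1), with the exception of perfect score matching [18]. The reverse time process is modeled as a Continuous Normalizing Flow (CNF) [37,38] initialized with distribution $p_{\text{noise}}(\mathbf{x})$; then, the log-likelihood of a given value $\mathbf{x}_0$, with $\mathbf{x}_t$ following Equation (14), is

$$\log p_{\text{noise}}(\mathbf{x}_T) + \int_0^T \nabla \cdot \left(\mathbf{f}(\mathbf{x}_t, t) - \frac{1}{2}g(t)^2\,\mathbf{s}_\theta(\mathbf{x}_t, t)\right)\mathrm{d}t.$$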

To use our proposed model for density estimation, we also need to take into account the ODE dynamics. We focus again on the term $\log p_{\text{noise}}(\mathbf{x}_T)$ to improve the expected log likelihood. For consistency, our auxiliary density $\nu_\phi(\mathbf{x})$ should now maximize $\mathbb{E}_{\sim(14)}[\log \nu_\phi(\mathbf{x}_T)]$ instead of $\mathbb{E}_{\sim(1)}[\log \nu_\phi(\mathbf{x}_T)]$. However, the simulation of Equation (14) requires access to $\mathbf{s}_\theta$ which, in the endeavor of density estimation, is available only once the score model has been trained. Consequently, optimization with respect to $\phi$ can only be performed sequentially, whereas, for generative purposes, it could be done concurrently. While the sequential version is expected to perform better, experimental evidence indicates that improvements are marginal, justifying the adoption of the more efficient concurrent version.
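The likelihood computation through the equivalent ODE can be checked on a case with a known answer. The sketch below assumes one-dimensional Gaussian data and a variance-preserving SDE with constant rate, so that the exact score and the divergence of the ODE drift are available in closed form; a trained score model would replace `score` in practice. The constants, function names and the RK4 integrator are illustrative choices. The state is augmented with the running integral of the divergence, and the terminal log-density is passed as a function so that different initial distributions can be compared.

```python
import numpy as np

BETA, M, S = 1.0, 2.0, 0.5  # hypothetical SDE rate and Gaussian data N(M, S^2)

def moments(t):
    """Mean and variance of the forward marginal p(x, t)."""
    decay = np.exp(-BETA * t)
    return M * np.sqrt(decay), S**2 * decay + 1.0 - decay

def score(x, t):
    mean, var = moments(t)
    return -(x - mean) / var

def ode_rhs(state, t):
    """Time derivative of (x_t, accumulated divergence) for the equivalent ODE."""
    x, _ = state
    _, var = moments(t)
    dx = -0.5 * BETA * x - 0.5 * BETA * score(x, t)
    div = -0.5 * BETA + 0.5 * BETA / var     # d(dx)/dx in one dimension
    return np.array([dx, div])

def log_normal(x, mean, var):
    return -0.5 * np.log(2.0 * np.pi * var) - 0.5 * (x - mean) ** 2 / var

def log_likelihood(x0, T, log_terminal, n_steps=1000):
    """log-terminal density at x_T plus the integrated divergence (RK4)."""
    state, dt = np.array([x0, 0.0]), T / n_steps
    for k in range(n_steps):
        t = k * dt
        k1 = ode_rhs(state, t)
        k2 = ode_rhs(state + 0.5 * dt * k1, t + 0.5 * dt)
        k3 = ode_rhs(state + 0.5 * dt * k2, t + 0.5 * dt)
        k4 = ode_rhs(state + dt * k3, t + dt)
        state = state + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0
    x_T, int_div = state
    return log_terminal(x_T) + int_div

x0, T = 2.3, 0.5
truth = log_normal(x0, M, S**2)
ll_exact = log_likelihood(x0, T, lambda x: log_normal(x, *moments(T)))
ll_noise = log_likelihood(x0, T, lambda x: log_normal(x, 0.0, 1.0))
print(truth, ll_exact, ll_noise)
```

When the terminal density matches the forward marginal at time $T$, the data log-density is recovered up to integration error; with a standard Gaussian terminal density and a short diffusion time the estimate is noticeably off, which is the term the auxiliary density is meant to improve.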

4. Experiments

We now present numerical results on the MNIST and CIFAR10 datasets, to support our claims in Section 2 and Section 3. We follow a standard experimental setup [5,7,18,32]: we use a standard U-Net architecture with time embeddings [6] and we report the log-likelihood in terms of bits per dimension (BPD) and the Fréchet Inception Distance (FID) scores (for CIFAR10 only). Although the FID score is a standard metric for ranking generative models, caution should be used against over-interpreting FID improvements [39]. Similarly, while the theoretical properties of the models we consider are obtained through the lens of ELBO maximization, the log-likelihood measured in terms of BPD should be considered with care [40]. Finally, we also report the number of neural function evaluations (NFE) for computing the relevant metrics. We compare our method to the standard score-based model [3]. The full description of the experimental setup is presented in Appendix K.
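For reference, the conversion from a log-likelihood to bits per dimension is a simple rescaling. The helper below is a minimal sketch that assumes the log-likelihood is given in nats for a single example and already accounts for any data preprocessing; the function name and the example value are hypothetical.

```python
import math

def nats_to_bpd(log_likelihood_nats, num_dims):
    """Negative log-likelihood per dimension, converted from nats to bits."""
    return -log_likelihood_nats / (num_dims * math.log(2.0))

# A 32x32 RGB image has 3 * 32 * 32 = 3072 dimensions.
num_dims = 3 * 32 * 32
example_bpd = nats_to_bpd(-6000.0, num_dims)  # hypothetical log-likelihood value
print(example_bpd)
```

Lower BPD corresponds to higher likelihood.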

On the existence of

.

We look for further empirical evidence of the existence of a

, as stated in

Section 2.3. For the moment, we shall focus on the baseline model [

3], where no auxiliary models are introduced. Results are reported in

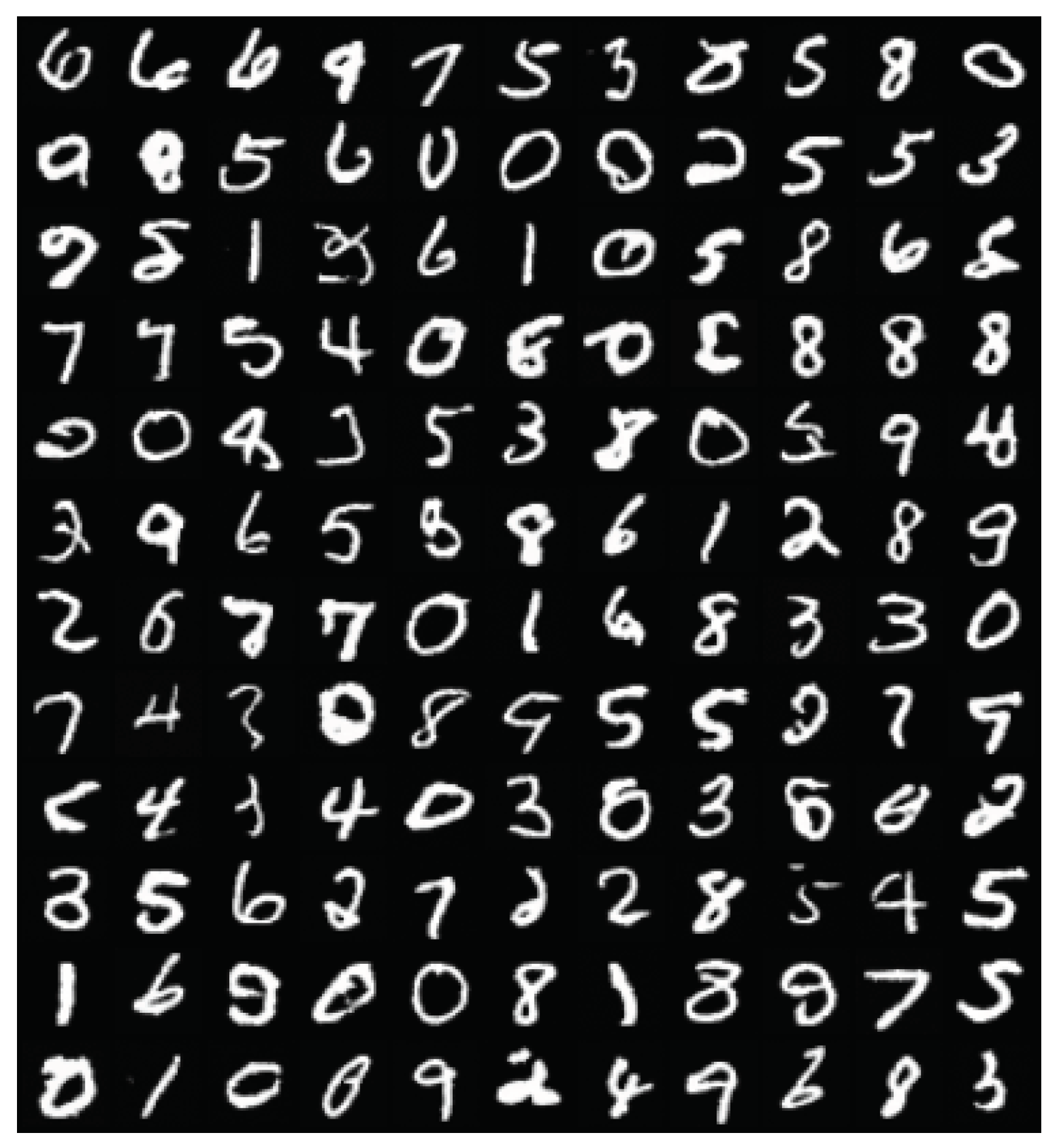

Table 2. For MNIST, we observe how times

and

have comparable performance in terms of BPD, implying that any

is at best unnecessary and generally detrimental. Similarly, for CIFAR10, it is possible to notice that the best value of BPD is achieved for

, outperforming all other values.

Our auxiliary models.

In Section 3, we introduced an auxiliary model to minimize the mismatch between initial distributions of the backward process. We now specify the family of parametric distributions we have considered. Clearly, the choice of an auxiliary model also depends on the data distribution, in addition to the choice of diffusion time $T$.

For our experiments, we consider two auxiliary models: (i) a Dirichlet process Gaussian mixture model (DPGMM) [41,42] for MNIST and (ii) Glow [43], a flexible normalizing flow, for CIFAR10. Both of them satisfy our requirements: they allow exact likelihood computation and they are equipped with a simple sampling procedure. As discussed in Section 3, auxiliary model complexity should be adjusted as a function of $T$. This is confirmed experimentally in Figure 4, where we use the number of mixture components of the DPGMM as a proxy to measure the complexity of the auxiliary model.

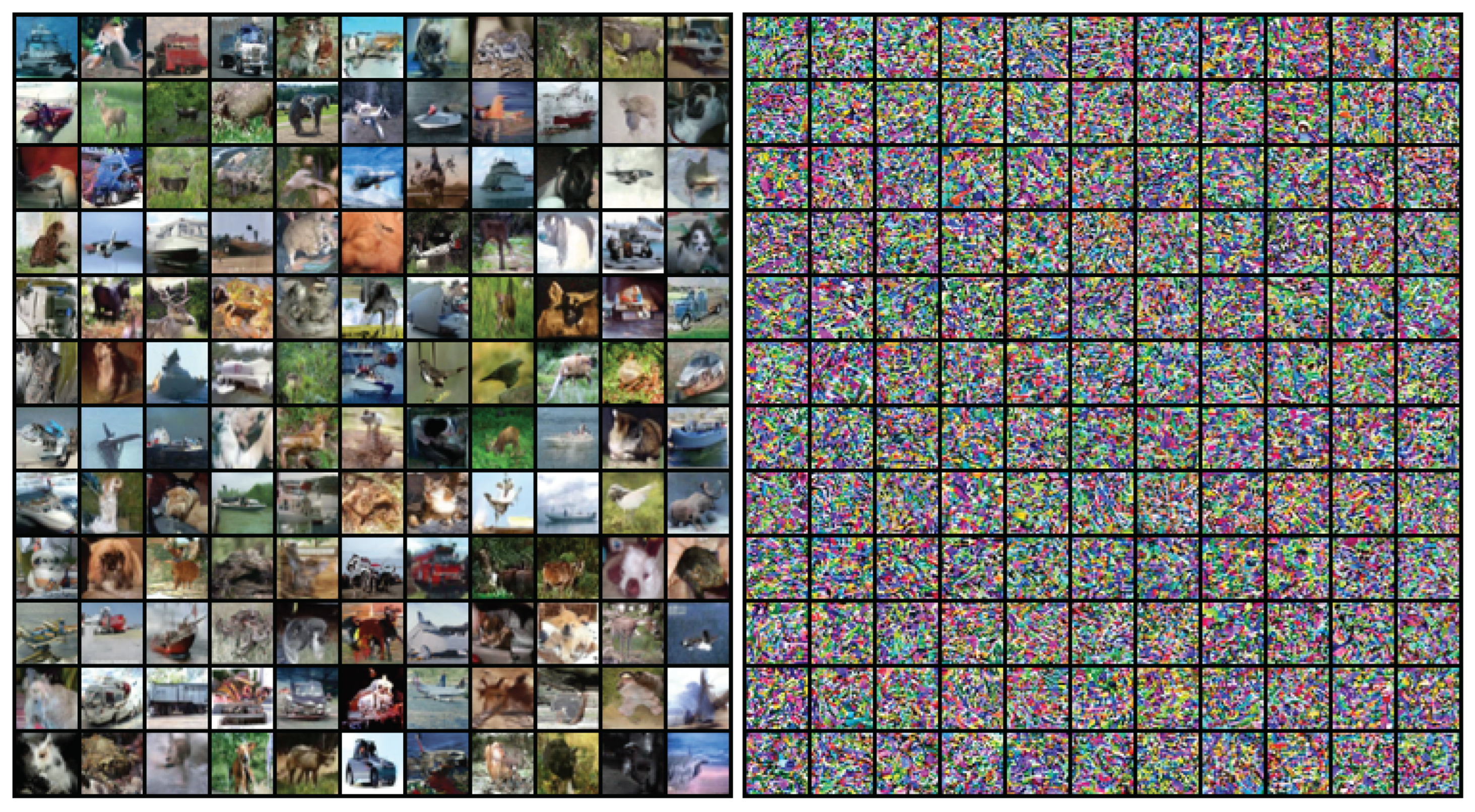

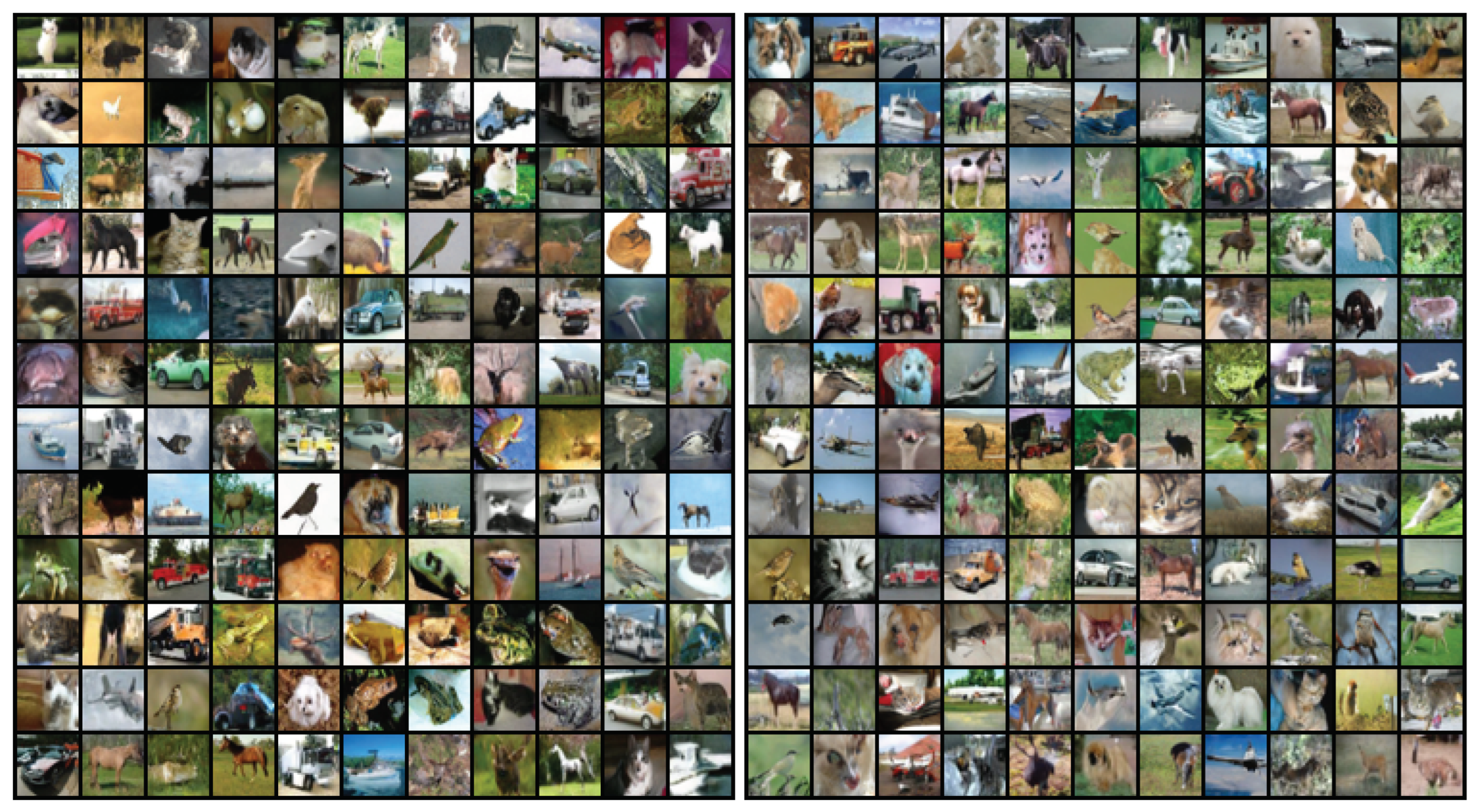

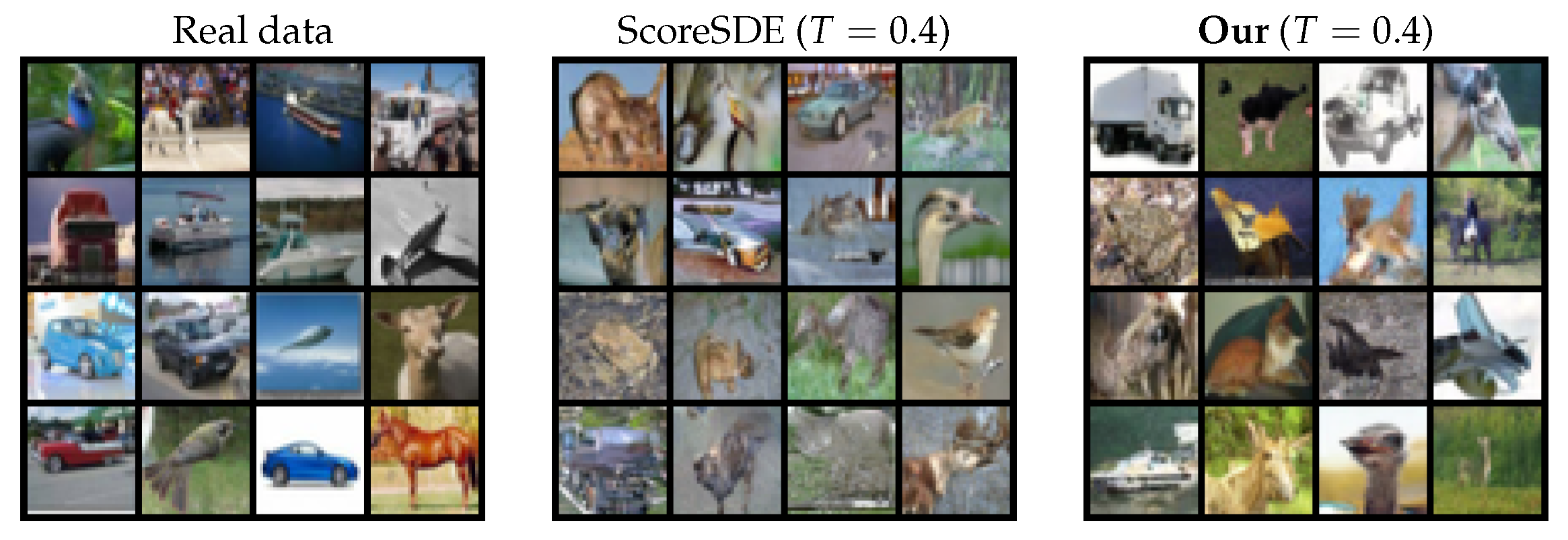

Reducing $T$ with auxiliary models. We now show how it is possible to obtain performance comparable to (or better than) that of the baseline model for a wide range of diffusion times $T$. For MNIST, setting

produces good performance both in terms of BPD (Table 3) and visual sample quality (Figure 5). We also consider the sequential extension (S) to compute the likelihood, but observe only marginal improvements compared to a concurrent implementation. Similarly, for the CIFAR10 dataset, in Table 4 we observe how our method achieves better BPD than the baseline diffusion for

. Moreover, our approach outperforms the baselines for the corresponding diffusion time in terms of FID score (Figure 6 and additional non-curated samples in Appendix K). In Figure A3 we provide a non-curated subset of qualitative results, showing that our method for a diffusion time equal to 0.4 still produces appealing images, while the vanilla approach fails. We finally notice how the proposed method has comparable performance with regard to several other competitors, while stressing that many solutions orthogonal to ours (like diffusion in latent space [4], or the selection of higher-order schemes [22]) can actually be combined with our methodology.

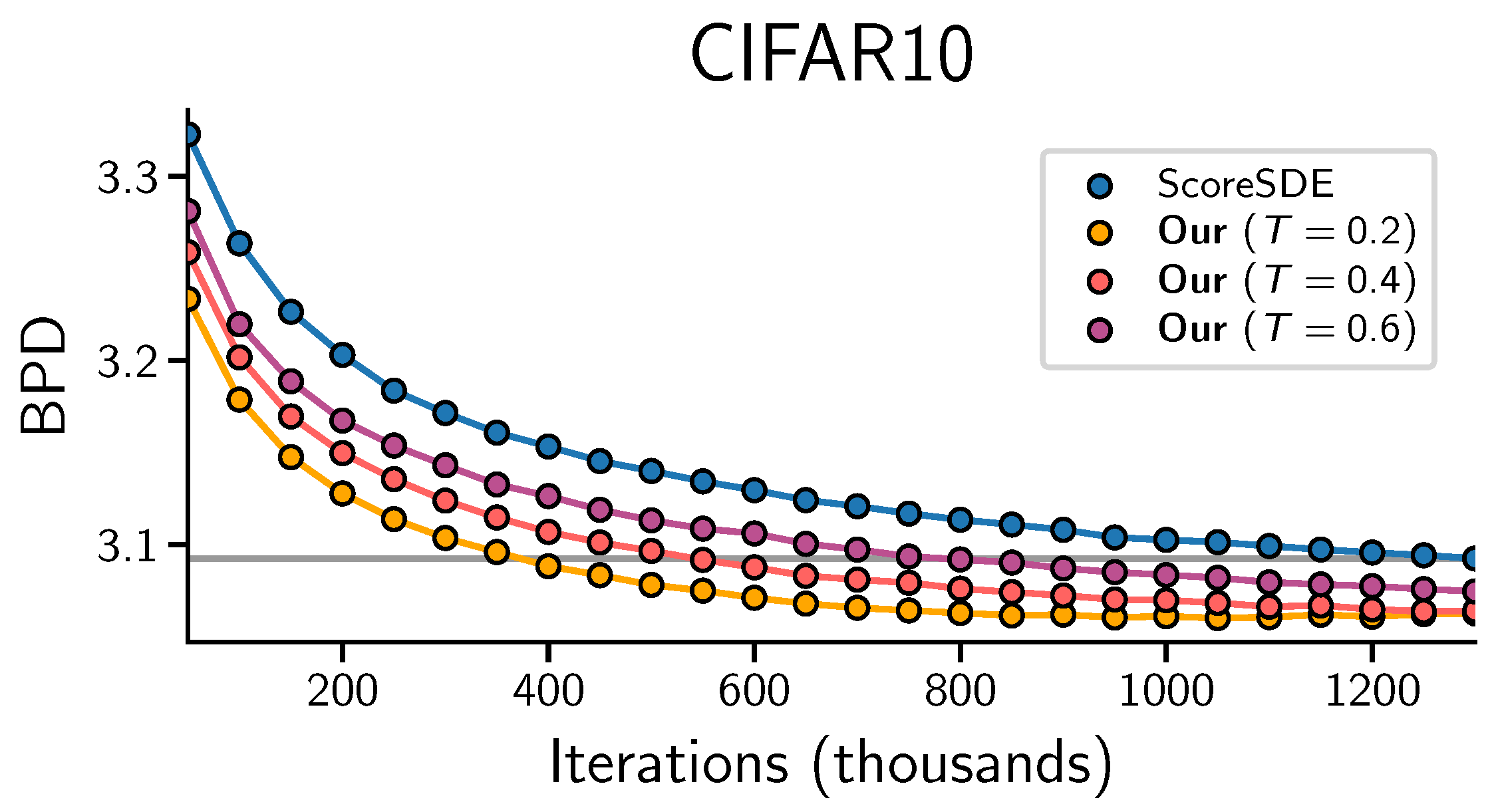

Training and sampling efficiency.

In

Figure 7, the horizontal line corresponds to the best performance of a fully trained baseline model for

[

3]. To achieve the same performance as the baseline, variants of our method require fewer iterations, which translates into training efficiency. For the sake of fairness, the total training cost of our method should account for the auxiliary model training, which, however, can be done concurrently with the diffusion model training. As an illustration for CIFAR10, using four GPUs, the baseline model requires ∼6.4 days of training. With our method we trained the auxiliary and diffusion models for ∼2.3 and 2 days, respectively, leading to a total training time of

days. Similar training curves can be obtained for the MNIST dataset, where the training time for DPGMMs is negligible.

Sampling speed benefits are evident from Table 3 and Table 4. When considering the SDE version of the methods, the number of sampling steps can decrease linearly with $T$, in accordance with theory [45], while retaining good BPD and FID scores. Similarly, although not in a linear fashion, the number of steps of the ODE samplers can be reduced by using a smaller diffusion time $T$.

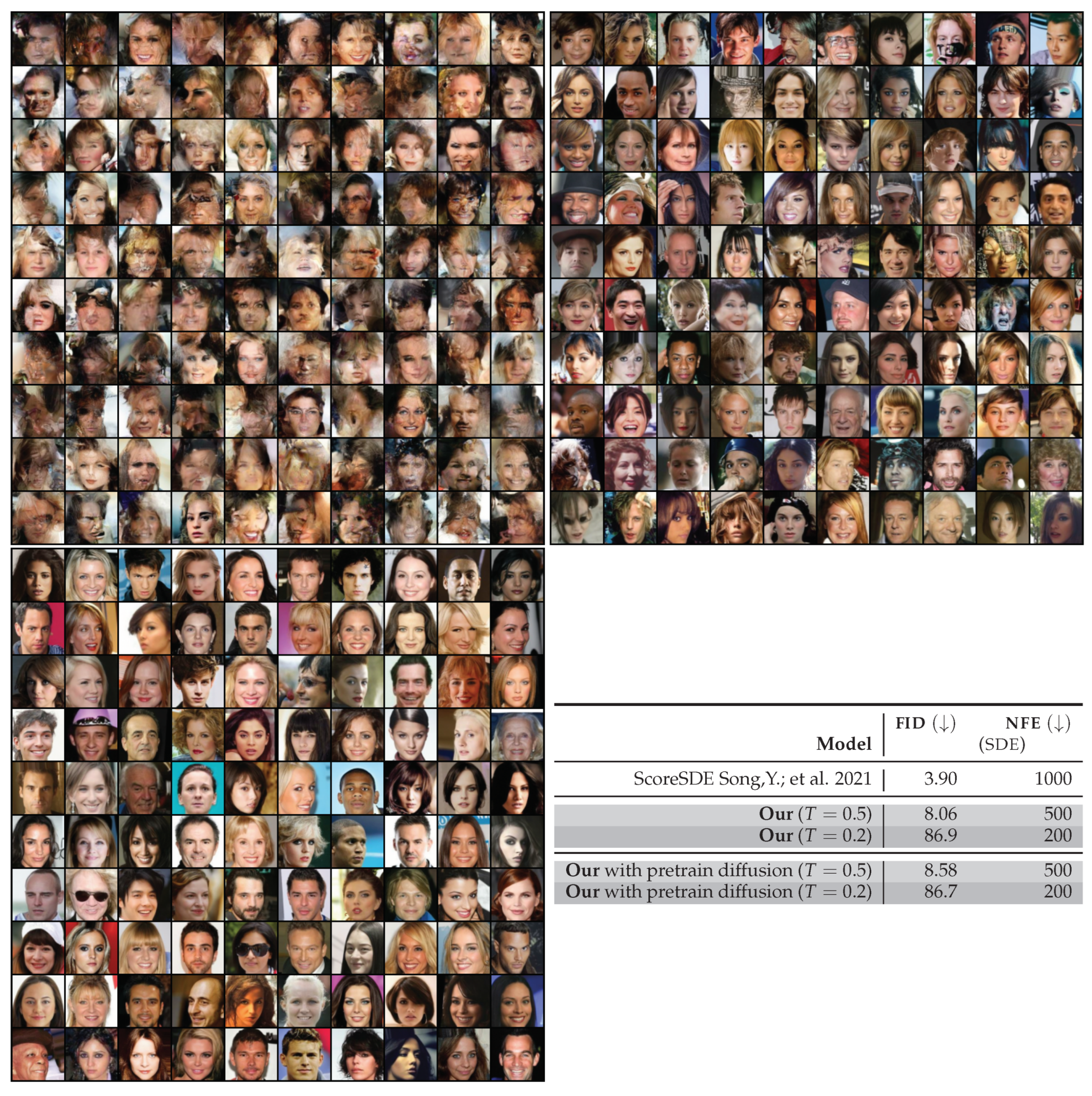

Finally, we test the proposed methodology on the more challenging CELEBA 64x64 dataset. In this case, we use a variance exploding diffusion and we consider again Glow as the auxiliary model. The results, presented in Table 5, report the log-likelihood performance of different methods (qualitative results are reported in Appendix K). On the two extremes of the complexity we have the original diffusion (VE,

) with the best BPD and the highest complexity, and Glow which provides a much simpler scheme with worse performance. In the table we report the BPD and the NFE metrics for smaller diffusion times, in three different configurations: naively neglecting the mismatch (ScoreSDE) or using the auxiliary model (Our). Interestingly, we found that the best results are obtained by using a combination of diffusion models pretrained for

. The summary of the content of this table is the following: by accepting a small degradation in terms of BPD, we can reduce the computational cost by almost one order of magnitude. We think it would be interesting to study better-performing auxiliary models to improve the performance of our method on challenging datasets.