1. Introduction

As an essential application of machine learning as a service (MLaaS) [

1], neural network inference is widely used in image recognition [

2,

3], medical diagnosis [

4], and so on. In the traditional MLaaS paradigm, the model owner provides a trained neural network, and a user, who holds some queries, calls an API of MLaaS to enjoy the inference service. However, with the increase in people’s privacy awareness and the perfection of laws and regulations [

5], the traditional MLaaS paradigm is being challenged. On the one hand, the user is unwilling to reveal queries and inference results to the model owner. On the other hand, the trained model is intellectual property belonging to the model owner and cannot be revealed to the user.

Secure inference utilizes cryptographic techniques to ensure that sensitive information is not revealed to each other.

In general, different cryptographic tools have different concerns. Fully homomorphic encryption (FHE) is communication-efficient but computation-expensive, which makes it unpractical [

6]. As an important component of secure multiparty computation (MPC), secret sharing (SS) is computation-efficient but more communication rounds are required [

7,

8]. Existing works from secret sharing are usually under this assumption of the fast network, which has a high-bandwidth and low-latency network, for example, in the local area network (LAN) setting. However, all these works are inefficient in low-bandwidth and high-latency networks, even under the semi-honest model. A fast network is difficult to achieve in the real world, especially in the wide area network (WAN) setting. A proven method is to reduce communication rounds of the protocol as much as possible or construct protocols with constant rounds. In addition, these methods are also important for computationally intensive neural network inference.

Recently, QNN has gained much attention. The quantization technique reduces the overall model computational overhead by limiting the representation bit-width of data and parameters in the model at the expense of a certain level of model accuracy. More precisely, quantization converts the float-point arithmetic (FP32, 32-bit floating point, single precision) of neural networks into the fixed-point arithmetic (e.g., INT8, 8-bit fixed-point integer) since the latter is easy to deploy in resource-limited devices, such as laptops and mobile devices. We wonder the following: could we achieve constant-round communication protocols based on secret sharing for QNN inference? As we will show, the answer is yes with our proposed protocols.

1.1. Related Work on Secure Inference

Researchers in recent years have proposed several solutions for secure inference.

Gilad-Bachrach et al. proposed CryptoNets [

6] mainly based on leveled homomorphic encryption, which allows a limited number of multiplication operations, and thus it is more efficient than the scheme based on FHE. However, the computation cost of CryptoNets is still high and unacceptable. Instead of relying on homomorphic encryption, some works introduce garbled circuit (GC) as the underlying cryptographic tool for secure inference. For example, DeepSecure [

9] was the first work mainly based on GCs with free-XOR optimization, but it still has bad communication efficiency, even in low-latency networks. Some other works based on three-party replicated secret sharing (RSS) focus on obtaining high throughput of secure inference, such as ABY3 [

7] and

Falcon [

8]. Most works use multiple protocols to achieve better performance. For example, Mohassel and Zhang presented SecureML [

10], which utilizes additive secret sharing (ASS) for linear operations and GCs for piecewise-approximated activation.

There are some works related to QNN. According to the bitwidth of weight, a QNN can be binary neural network, ternary neural network, and other varieties. Riazi et al. presented XONN [

11] for the binary neural network, where the values of weights and activations are restricted to the binary set

. The multiplication is replaced by XNOR operations, which can be computed by GCs. Ibarrondo et al. proposed

Banners[

12] for binary neural network inference based on three-party RSS. Zhu et al. proposed SecureBiNN [

13] for binary neural network inference based on three-party RSS and three-party oblivious transfer (OT). Agrawal et al. proposed QUOTIENT [

14] for ternary neural networks, where the weight is restricted to the ternary set

. Ternary multiplication can be done by using 1-out-of-2 OT. Dalskov et al. presented SecureQ8 [

15] based on three-party RSS in the different threat models for INT8 quantization. Shen et al. proposed a practical secure two-party framework ABNN2 for arbitrary-bitwidth QNN inference [

16]. A few works focus on the secure training of QNN, such as [

17]. However, reduction in communication rounds is not considered in all these works.

1.2. Our Contributions

This work considers QNN inference with INT8 quantization in the honest majority setting. In detail, our contributions are described as follows:

We provide a series of constant-round communication complexity secure protocols for QNN inference, including secure truncation, conversion, and clamping protocol. We achieve this by constructing protocols based on MSS.

We give detailed proof of security in the semi-honest model. Concretely, our protocols are secure against one single corruption.

The experiment shows that our protocols are practical and suitable for the high-latency network. Compared to the previous work for quantized inference, our protocols are 1.5 times faster in the WAN setting.

The remainder of this work is organized as follows. In

Section 2, we define notations and primitives related to cryptographic tools, security model, neural networks, and quantization. In

Section 3, we show the architecture for QNN secure inference. In

Section 4, we give several building blocks of QNN inference and provide security analysis of our protocols. In

Section 5, we provide our QNN structure. In

Section 6, we implement our protocols and then report the experimental results. Finally, we conclude this work in

Section 7.

2. Preliminaries

2.1. Basic Notations

At first, we define the notations used in this work in

Table 1.

2.2. Threat Model and Security

In this work, we consider three non-colluding servers as the computing parties of MPC to execute secure inference tasks, where static, semi-honest adversary corrupts only a single party during the protocol execution. The semi-honest adversary corrupts one of three parties and obtains its view (including its input, random tape, and received messages during the protocol execution), but follows the protocol specification exactly.

Our protocols rely on secure pseudo-random function (PRF), and thus, we can only provide security against a computationally bounded adversary; hence, all our protocols are computationally secure. Formally, we can define semi-honest security as follows:

Definition 1.

(Semi-honest Security [

18]).

Let Π be a three-party protocol in the real world, be the ideal funcationality in the ideal world. We say Π securely computes in presence of a single semi-honest adversary if for every corrupted party () and every input , there exists an efficient simulator such thatwhere , is the view of , is the output of all parties, and is the i-th output of . In other words, a protocol is computationally secure in the semi-honest model, if and only if the view of the ideal world simulator and the view of the real world adversary is computationally indistinguishable.

2.3. Secret Sharing Semantics

Let

x be the secret. Similar to [

19], we use the following sharing in this work.

-sharing: ASS among and . The dealer samples random elements as the shares of x, such that holds. The dealer distributes the shares to each party such that for holds . For simplicity, we denote as the additive shares of , and .

-sharing: MSS among all parties. The dealer samples random element , computes , and then shares among and by -sharing. The dealer distributes the shares to each party, such that holds , holds , and holds . For simplicity, we denote as the masked shares of , and .

Table 2 summarizes the individual shares of the parties for the aforementioned secret sharing. It is easy to see that each party only misses one share to reconstruct the secret

x.

The above steps can also be extended to by replacing addition/subtraction with XOR and multiplication with AND. We use both and as the computation fields and refer to the shares as arithmetic sharing and boolean sharing, respectively. We denote the Boolean sharing with in the superscript, which means the Boolean sharing of bit b is and depending on the type of sharing semantics.

Note that both

-sharing and

-sharing satisfy the linearity property, which allows the parties to compute the linear combination of two shared values

non-interactively. We only introduce the basic operations of MSS in this section. To reduce communication costs,

is used (cf.

Appendix A).

Suppose that for holds the shares , and public constants .

For linear combination , the parties locally compute its shares to be .

For multiplication

, we denote as functionality

, then

can be achieved as follows [

19]:

and locally sample random and by using ;

and locally sample random by using ;

locally computes and sends to ;

for locally computes ;

for sends to , who locally computes .

It is easy to see that the multiplication requires communication of at most

bits and 2 rounds. Note that steps 1–3 are independent of the secret

x and

y, which can be improved by using the offline–online paradigm (see

Section 3). In this way, the multiplication only requires

bits and 2 rounds in the online phase.

The aforementioned scalar operation can be extended to tensor or vector by sharing the elements of or element-wise. We omit the detail here.

2.4. Neural Network

A neural network usually includes many linear and non-linear layers, all stacked on top of each other such that the output of the previous layer is the input of the next layer. We summarize the linear layers and non-linear layers as follows.

The linear layers usually include fully connected layer and convolution layer. Both can be computed by matrix multiplications and additions:

The fully connected layer can be formulated as , where is the output of the fully connected layer, is the input vector, is the weight matrix and is the bias vector.

The convolution layer can be converted into computing the dot product of the matrix and vector, and then one addition as shown in [

20]; thus, it can be formulated as

.

The non-linear layers introduce nonlinearity into neural networks and allow bound inputs to a fixed range, for example, evaluating the activation function. In this work, we only consider the rectified linear unit (ReLU) activation, which is defined as .

2.5. Quantization

Although there are many different quantization methods [

21], we only consider the linear quantization method proposed by Jacob et al. [

22] in this work. This is because the linear quantization method only involves linear operations, which benefits constructing an SS-based MPC protocol.

For 8-bit quantization, 32-bit float-point

is quantized as an 8-bit integer

. The relationship between

and

a is a dequantized function

:

where

is called

scale, and

is called

zero-point. As pointed out by Jacob et al. [

22], both

S and

Z are determined at the training phase of the neural network; thus,

is a constant parameter in the inference phase. We use a single set of quantization parameters for each activation array and weights array in the same neural network layer.

In order to convert FP32 to INT8, we define quantized function

to be the inverse of

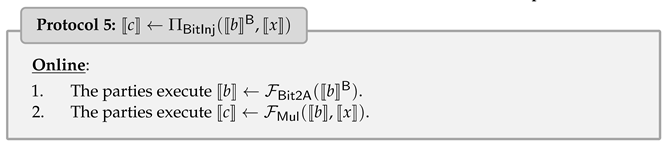

, then we have the following:

where

is a rounding operation. Note that multiple numbers may map to the same integer due to the rounding operation; see

Figure 1 (cf. [

15]).

As an important part of QNN, when we compute the convolution of two quantized tensors, we have to compute the clamping function

to bind the quantized result to

, i.e.,

should be computed. We refer the reader to [

15,

22] for more details.

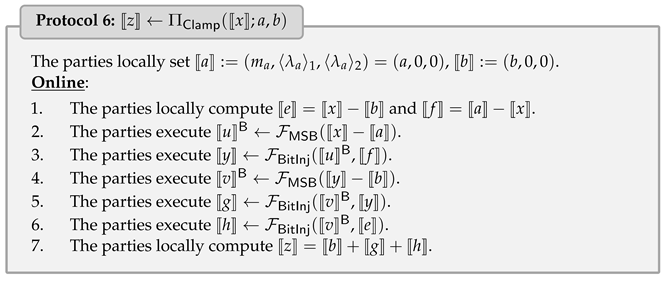

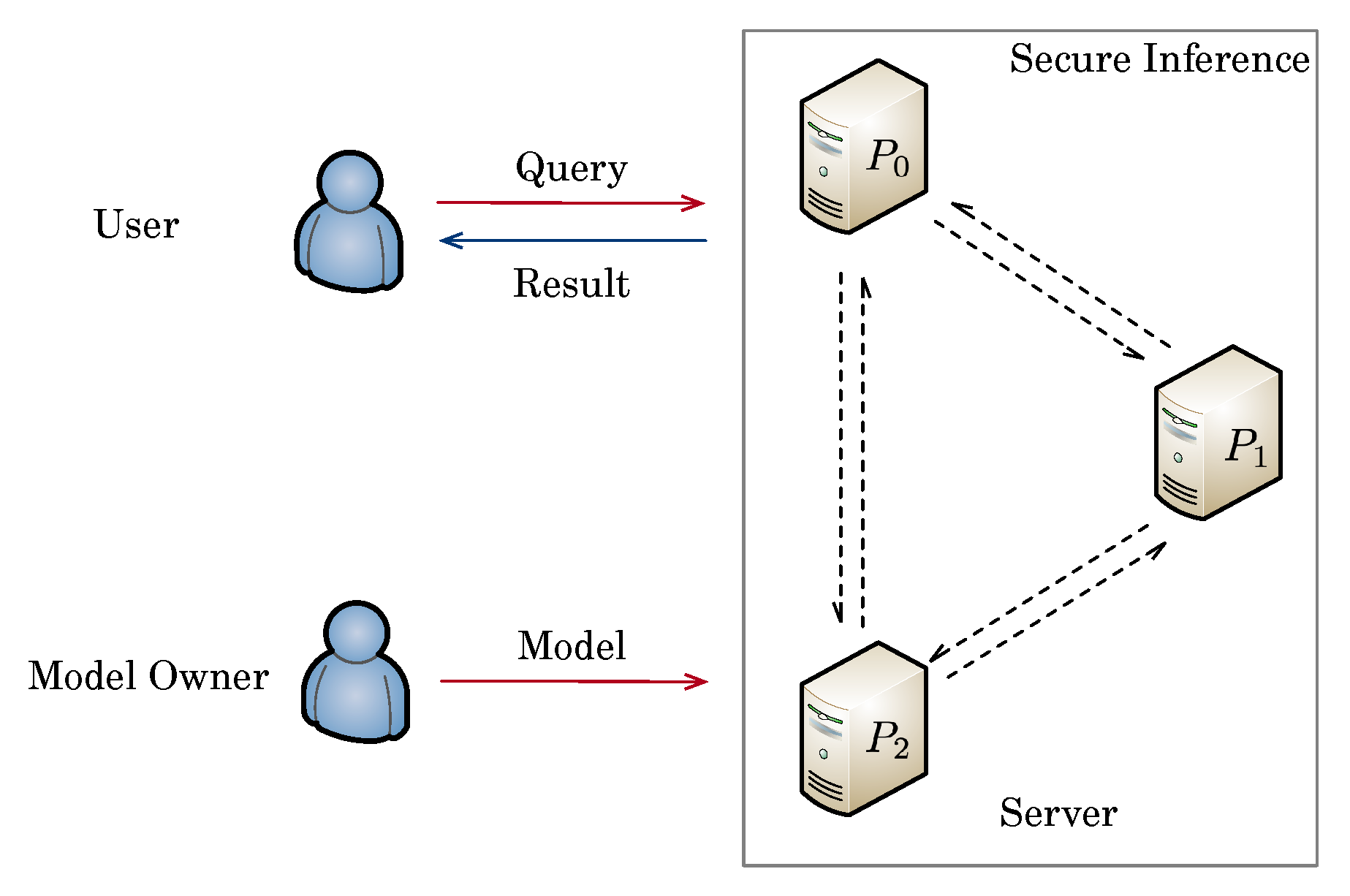

3. The Architecture for Secure Inference

Our secure inference system is built on outsourced computation architecture and is given in

Figure 2. The system has three different roles, which we describe as follows:

Server: There are three non-colluding servers in our system, denoted as

. Three servers can be from different companies in the real world, such as Amazon, Alibaba, and Google; any collusion will damage their reputations. Similar to prior works, we assume that all servers know the layer types, the sizes of each layer, and the number of layers. All servers perform a series of secure protocols proposed in

Section 4 to execute inference tasks for users’ shared queries in a secure way.

User: The user holds some queries as input and wants to enjoy a secure inference service without revealing both queries and inference results to others. To do so, the user uses Equation (

3) to convert the query to the 8-bit integer firstly, then uses

-sharing to split quantized queries to its masked shares before uploading to three servers, and receive the shares of inference results from three servers in the end. Note that only the user can reconstruct the final results; the privacy of both queries and inference results are protected during the secure inference.

Model Owner: The model owner holds a trained QNN model, which includes all quantized weights of different layers along with the quantization parameters. As an important intellectual property belonging to the model owner, the privacy of the QNN model should be protected. To do so, the model owner uses -sharing to split quantized weights to its masked shares before deploying to three servers. Once the deployment is done, the model owner can go offline until the model owner wants to update the model.

Similar to prior works of secure inference [

8,

20], we do not consider black-box attacks toward neural networks, such as model extraction attacks, model inversion attacks, and membership inference attacks, since these attacks are independent of the cryptographic techniques used to make the inference process secure [

23].

As pointed out by Dalskov et al. [

15], we might not enjoy the benefits of the size reduction when considering secure inference. Although data and network weights can be stored by 8-bit integer, the arithmetic operation must be computed modulo

. This work only focuses on reducing communication rounds and computation costs among three servers.

We use the offline–online paradigm to construct our secure protocols. This paradigm makes it possible to split the protocol into the offline phase and online phase, where the offline phase is independent of the input of the parties and the online phase depends on the specific input. We argue that the user occasionally raises inference requests; the servers will have enough time to process the offline phase to speed up the execution of upcoming inference requests [

23].

4. Protocols Construction

According to

Section 3, the model owner provides the weights of the layer and the quantization parameters to three servers, which allows us not to consider the impact of quantization. To construct an efficient, secure inference scheme in the WAN setting, we need to create a series of building blocks with constant rounds communication for the three servers, which is the goal of this section. Our main contribution here is to present a secure truncation, conversion, and clamping protocol for secure inference of three servers. The other protocols follow the previous work [

19], but we still give details for integrity.

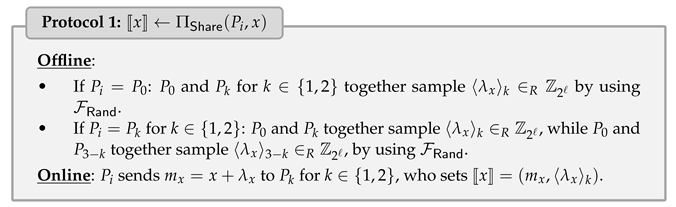

4.1. Secure Input Sharing Protocol

Let

be the secret owner holding

x. We define functionality

, which allows the parties to generate

. To achieve

, we follow [

19] and show it in Protocol 1, which requires the communication of at most

bits and 1 round in the online phase.

Observe that if both

and

hold the secret

x, then

can be generated without any communication by setting some shares to 0 instead of using

, which is inspired by [

24]. For simplicity, we still use the same notation to denote this case, i.e.,

. To achieve

,

can be done as follows:

for : The parties locally set .

: The parties locally set .

4.2. Secure Truncation Protocol

Recall that when the parties execute secure multiplication protocol in the fixed-point value, we have to deal with the double-precision result. More precisely, when all shared values are represented as

ℓ-bit fixed-point values with

d-bit precision, then multiplying two fixed-point numbers, the result will be

-bit precision and must be truncated by

d bits to keep right fixed-point representation. ABY3 [

7] proposed the faithful truncation, which only works on RSS. Although [

19] has the same semantics as us, they do not provide a secure truncation protocol in their work. In this work, we extend the faithful truncation to MSS as one of our contributions.

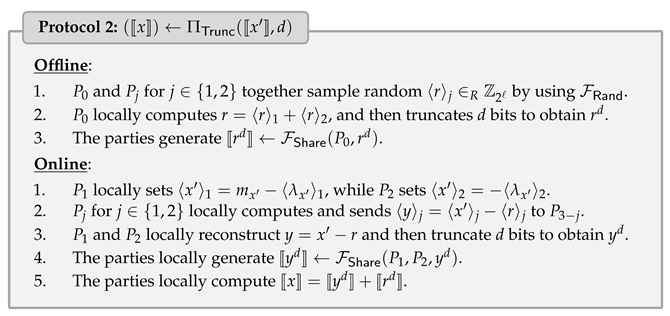

We define secure truncation functionality , where has -bit precision, and . Suppose that the parties hold and random shared truncated pair , where r is a random value, and denotes the value of the r truncated d-bit, i.e., . The online phase of truncation can be performed by the parties to mask, reveal, truncate in the clear, use to unmask, and obtain the truncated result x, i.e., .

The challenge here is to generate random shared truncated pair among the parties. To do so, we utilize the fact that if denotes the last d bits of r, then we have . Instead of sampling r by directly, and for together sample random by using such that can be locally computed by . In this way, can compute directly, and then share to and by invoking . During the online phase, and reconstruct and truncate to obtain , which follows by using to unmask the result. The protocol is described in Protocol 2, which requires the communication of at most bits and 1 round in the online phase.

4.3. Secure Conversion Protocol

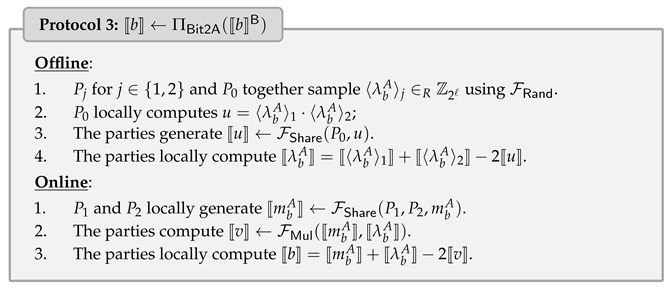

We define to convert the Boolean shares of a single bit to its arithmetic shares . To do so, we utilize the fact that if a and b are two bits, then .

Let be the value of bit b over , then according to the fact and masked sharing semantics, we have , where . In other words, can be computed by invoking secure input sharing protocol and secure multiplication protocol of masked secret sharing. Note that holds both and , and thus can be locally computed by without using Beaver triples.

To achieve , we describe the construction in Protocol 3, which requires the communication of at most bits and 1 round in the online phase.

4.4. Secure Comparison Protocol

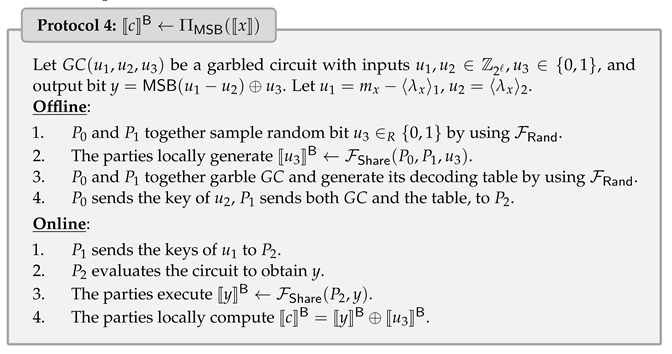

Comparison is an important building block of the neural network for evaluating ReLU activation, argmax function, and pooling layer. Fortunately, we can easily compare the quantized values if quantized a and b have the same quantization parameter . This is because if and , then holds if and only if holds. Therefore, the key step is to compute the most significant bit (MSB) of , i.e., if and only if . Letting , we define secure comparison functionality by giving shared value and extract the Boolean shared bit such that .

The secure comparison protocol of ABY3 [

7] needs

rounds in the online phase. To construct a constant-round comparison protocol, we implement

with the three-party GC proposed by [

19].

Let be a GC with inputs , and output a masked bit . We treat and as the common garbler and as the evaluator. The circuits are generated by and with correlated randomness by using . Namely, both garblers hold the knowledge of GCs, including the keys and the decoding table in clear. In our situation, the parties hold ; thus, we can define as the input of , as the input of , and as a random bit sampled by and using .

Note that also knows and the corresponding key; hence, sends the key of to directly without using OT. evaluates the circuit to obtain y, then shares it with , which only requires communication of at most 2 bits. Finally, the parties remove masked bit to obtain masked share .

As pointed out by [

25], the underlying circuit can be instantiated using the depth-optimized parallel prefix adder (PPA) of ABY3 [

7]. GC can be further optimized by state-of-the-art techniques, such as free-XOR [

26] and half gates [

27]. We describe the details in the following Protocol 4, which requires the communication of at most

bits and 2 rounds in the online phase.

4.5. Secure Clamping Protocol

As pointed out by

Section 2.5, when we compute the convolution of two quantized tensors, since rounding error exists, we may obtain the result

, and hence a clamping operation

should be computed [

15].

Let

, then according to

, one has

which is equivalent to the following Equation (

5):

Similarly, let

, then according to

, one has the following Equation (

6):

From Equations (

5) and (

6), one has

and thus the key point of the secure clamping protocol here is how to securely implement Equation (

7).

Let

and

. Note that when we implement Equation (

7) with masked secret sharing, both the shares of

u and

v are Boolean shares over

, while both the shares of

e and

f are arithmetic shares over

. In other words, we cannot invoke the secure multiplication protocol directly. This can be done by converting Boolean shares to arithmetic shares using secure conversion protocol and invoking secure multiplication protocol.

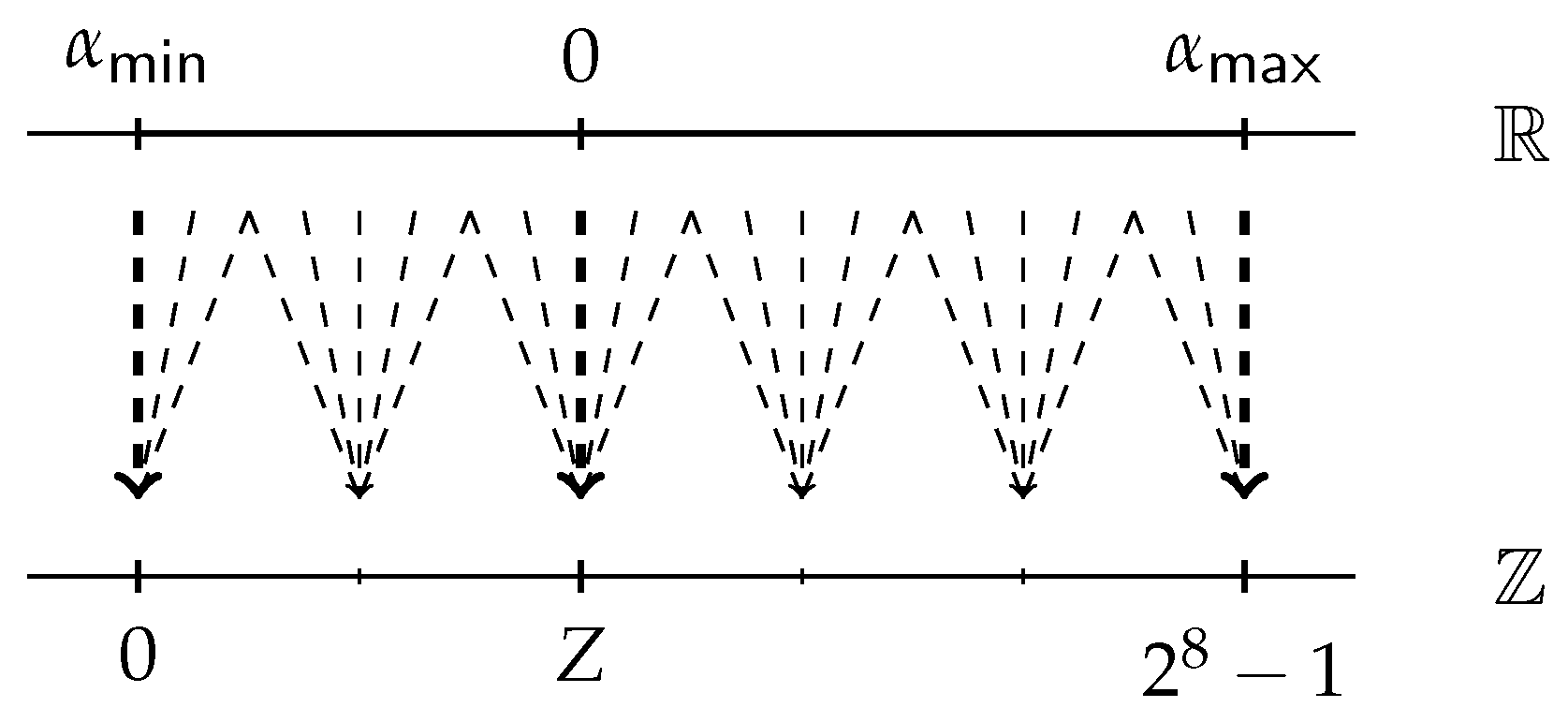

For simplicity, we formalize the above steps to be the bit injection functionality : given the Boolean shares of a bit b and the arithmetic shares of x, secure bit injection functionality allows the parties to compute . We provide in Protocol 5, which requires the communication of at most bits and 2 rounds.

Now, we can give our secure clamping protocol in the following Protocol 6. Steps 5–6 can be computed in parallel within 2 rounds. Therefore, Protocol 6 requires the communication of at most bits and 8 rounds in the online phase.

4.6. Theoretical Complexity

The total communication and round complexity of our protocols are provided in

Table 3. It is easy to see that all our protocols have constant-round communication in the online phase.

4.7. Security Analyses

This section gives proof sketches of our protocols in the real–ideal paradigm. We present the steps of the simulator

for

in the stand-alone model with security under sequential composition [

28]. The proof works in the

-hybrid model.

Theorem 1.

securely realizes the functionality in the -hybrid model and against a semi-honest adversary , who only corrupts one single party.

Proof. Given the ideal , the output of PRFs is a pseudo-random value, which can be simulated by uniformly samples random value. Note that sends to , is masked by random , which is unknown to ; hence, corrupted cannot learn any information of x. In short, the view of in real execution is computationally indistinguishable from the view of in ideal execution. □

Theorem 2.

securely realizes the functionality in the -hybrid model and against a semi-honest adversary , who only corrupts one single party.

Proof. Given ideal , and , the correlated randomness can be simulated by invoking . Then, invokes to simulate step 4 in the offline phase. Finally, invokes to simulate step 2 in the online phase. Note that all functionality of the output is the random shares over , and hence the view of in the real execution is computationally indistinguishable from the view of in the ideal execution. □

Theorem 3.

securely realizes the functionality in the -hybrid model and against a semi-honest adversary , who only corrupts one single party.

Proof. The security of

can be reduced to the security of

and

, which was proven to be secure in Theorem 1 and [

19], respectively. Since we make only black-box access to

and

, according to the sequential composition, the Bit2A protocol is secure in the semi-honest model. □

Theorem 4.

securely realizes the functionality in the -hybrid model and against a semi-honest adversary , who only corrupts one single party.

Proof. Given the ideal functionality , the security of is trivial for and . This is because both and are unknown to and at the same time. Because the parties are non-colluding, we have that y is oblivious to the corrupted party, even if both garblers have the circuit in the clear. Observe that evaluates the circuit to obtain y, masked by common random bit of and . In other words, y is uniformly random to . Therefore, the view of in real execution is computationally indistinguishable from the view of in the ideal execution. □

Theorem 5.

securely realizes the functionality in the -hybrid model and against a semi-honest adversary , who only corrupts one single party.

Proof. The security of

can be reduced to the security of

and

, which was proven to be secure in Theorem 4 and [

19], respectively. Since we make only black-box access to

and

, according to sequential composition, the bit injection protocol we proposed is secure in the semi-honest model. □

Theorem 6.

securely realizes the functionality in the -hybrid model and against a semi-honest adversary , who only corrupts one single party.

Proof. The security of can be reduced to the security of and , which was proven to be secure in Theorems 4 and 5, respectively. Since we make only black-box access to and , according to the sequential composition, our secure clamping protocol is secure in the semi-honest model. □

5. Quantized Neural Network Structure

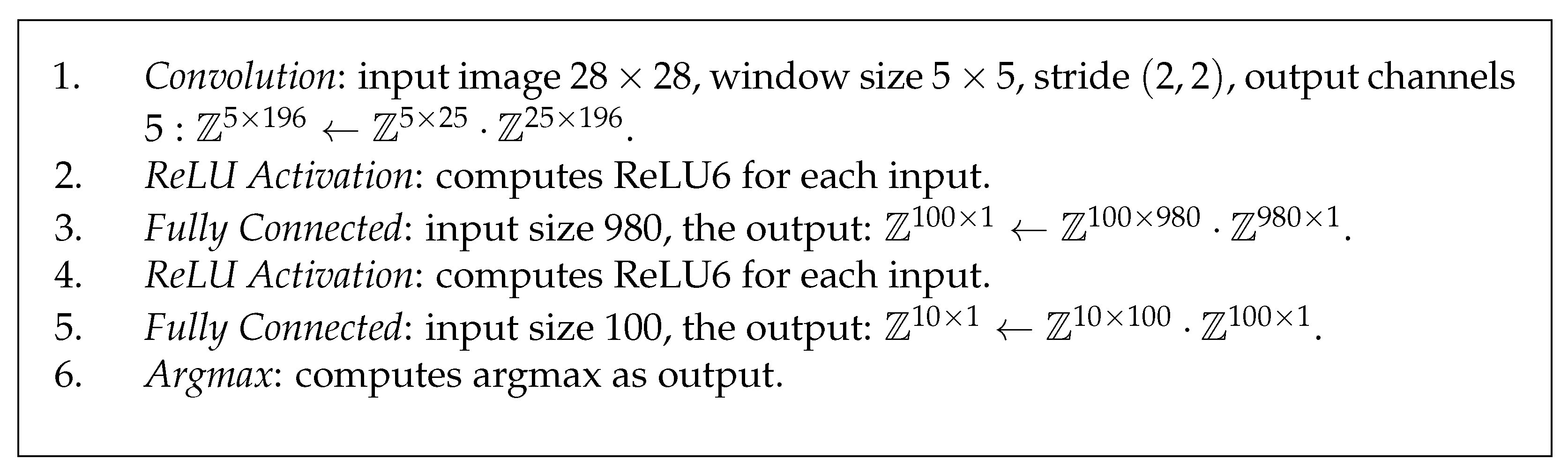

We consider the convolutional neural network presented in Chameleon [

2], which includes a single convolution layer and two fully connected layers. The activation function is ReLU activation. We consider its quantized variant as our QNN structure and describe it in

Figure 3. As we pointed out above, we set all data types of QNN from FP32 to INT8.

Instead of evaluating the original ReLU activation, we evaluate ReLU6 activation, as fixed ranges are easier to quantize with high precision in different channels and a quantized model with ReLU6 has less accuracy degradation [

22]. Herein, ReLU6 activation is defined as

, which is essentially a clamping operation. It seems that we have to invoke a secure comparison protocol to evaluate ReLU6 activation.

In fact, as pointed out by [

22], we can take advantage of quantification such that ReLU6 can be entirely fused into the computation of the inner product that precedes it. To do so, we can directly set the quantized parameters to be

and

, then

always holds for any

. By doing this, we can clamp the inner product to

, meanwhile evaluating ReLU6 activation. Namely, we can evaluate ReLU6 activation without any communication overhead.

In addition, the evaluation of the argmax function can be computed by invoking the secure comparison protocol.

6. Experimental Evaluation

6.1. Experimental Setup

We implemented our protocols with Python. All our experiments were executed on a server over Ubuntu 20.04 LTS, which is equipped with Intel(R) Xeon(R) Gold 5222 CPU processor (@3.80GHz) and 32GB RAM memory with AES-NI support. Three parties were simulated by three different terminal ports. We used the Linux traffic tools command tc to simulate LAN and WAN. Specifically, we considered the LAN setting with 625 Mbps bandwidth and 0.2 ms ping time, and the WAN setting with 80 Mbps bandwidth and 20 ms ping time. Note that these parameters are close to the ones we use daily, proving that our solution is practical.

All experiments were executed 10 times on our server to eliminate accidental errors and reported results with the average. We set the bit-length of the shares , the fixed-point precision , and the security parameter .

To simplify the experiment, we also made the following assumptions:

6.2. Experimental Results for Secure Inference

In our experiment, we compare our solution to two-party framework Chameleon [

2] and various three-party frameworks, including

Banners [

12], SecureBiNN [

13] and SecureQ8 [

15]. Note that both Chameleon and

Banners are not publicly available; hence, we use their reported results directly for reference. Both

Banners and SecureBiNN are designed for binary neural network inference. We also compare our solution to SecureQ8, which was also based on INT8 quantized and implemented by MP-SPDZ [

30] in the same setting. The experimental results of both LAN and WAN are reported in

Table 4. All communication is reported in MB, and runtimes are in seconds.

The author of Chameleon [

2] claims that the original network gives us accuracy of 99%. However, our experiment shows that the accuracy is less than 80% when we convert it into a quantized variant as shown in

Figure 3. Therefore, instead of reporting the Top-1 accuracy of the model, we reported its Top-5 accuracy, where the truth label is among the first five outputs of the model. In this way, our proposed solution gives us Top-5 accuracy of 98.4%. Note that the reported accuracy of different frameworks is only for reference since it may depend on the model parameters. This is beyond the scope of our work.

As shown in

Table 4, almost all quantized frameworks are faster than the nonquantized scheme Chameleon in the same setting. The communication cost of the quantized frameworks is also less than that of the nonquantized scheme. In addition, INT8 quantized schemes are better than binarized schemes in terms of Top-5 accuracy, but the latter have lower communication costs and runtimes.

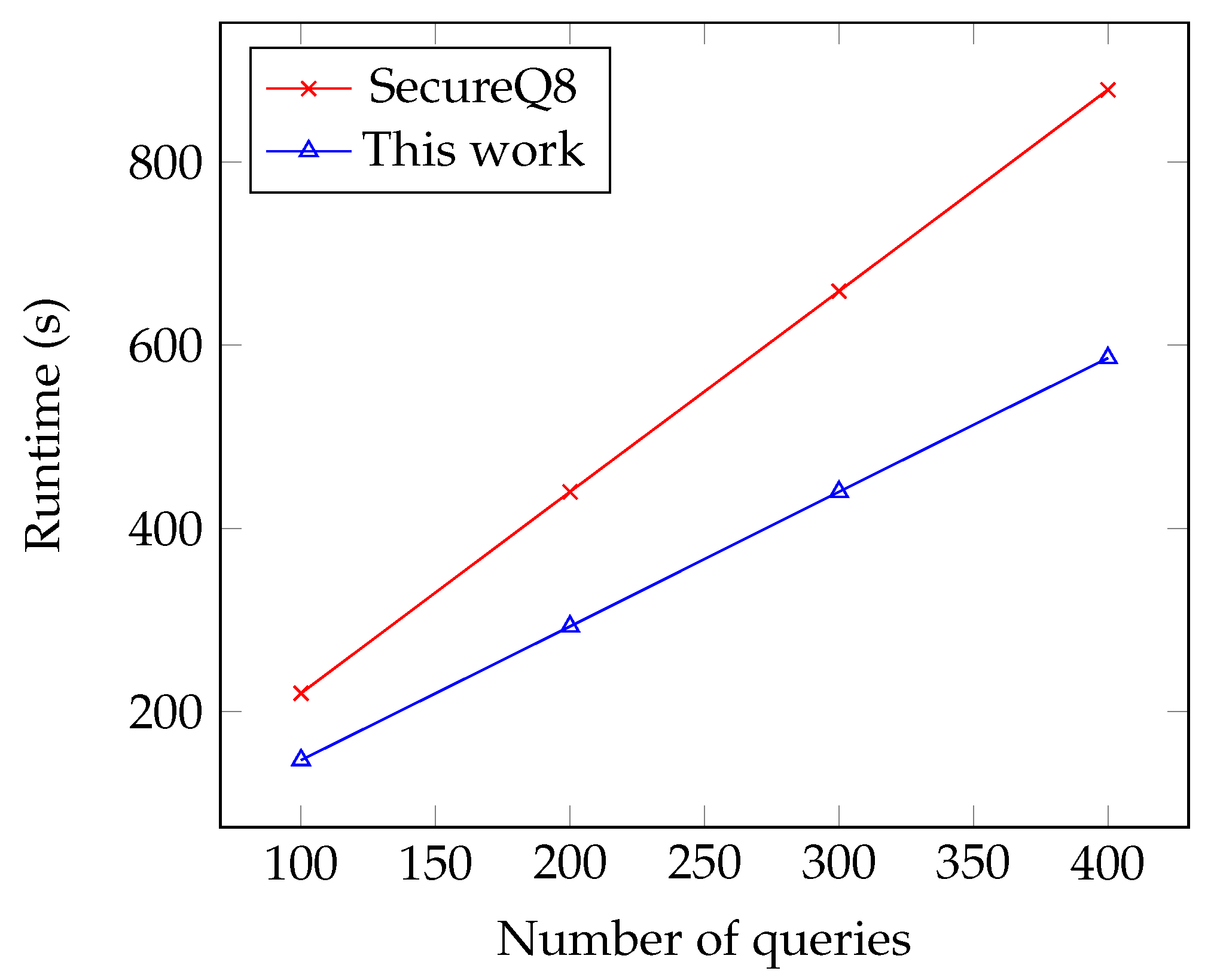

Compared to Chameleon, due to the quantization technique, our protocols were 1.41 times and 1.94 times faster in the LAN and WAN settings, respectively. In addition, our protocols were 1.32 times lower in online communication. Compared to SecureQ8, our scheme was 1.11 times slower in the LAN setting, but 1.5 times faster in the WAN setting. Because our protocols have constant-round complexity, it is suitable for a low-bandwidth and high-latency network. Note that the online communication costs of our scheme were slightly larger than SecureQ8, as our comparison protocol is based on three-party GC, where the decoding key is related to security parameter .

Our protocols also enjoy the benefit of the offline–online paradigm. Specifically, most of the communication cost of the online phase is transferred to the offline phase, which makes our scheme more efficient than SecureQ8 in the online phase, especially in the WAN setting. To see this more clearly, we also plot a performance comparison of batch inferences in

Figure 4.

7. Conclusions

We proposed a series of three-party protocols based on MSS in this study. Our key contribution is more communication-efficient building blocks for QNN inference. Our experiment shows that our protocols are suitable for low-bandwidth and high-latency environments, especially in the WAN network. All these blocks can also be used in other applications as long as the underlying sharing semantics are the same as ours.

Our constant-round comparison protocol is built on GC, and although free of OT, the online communication is related to the security parameter . How to construct a constant-round secure comparison protocol such that the online communication cost is independent of security parameters is still an open problem.

Moreover, we only consider a semi-honest adversary with Q3 structures (i.e., the adversary corrupts no more than 1/3 parties). Achieving security against other adversary structures with malicious adversaries will be the future work.