Abstract

A two-terminal distributed binary hypothesis testing problem over a noisy channel is studied. The two terminals, called the observer and the decision maker, each have access to n independent and identically distributed samples, denoted by and , respectively. The observer communicates to the decision maker over a discrete memoryless channel, and the decision maker performs a binary hypothesis test on the joint probability distribution of based on and the noisy information received from the observer. The trade-off between the exponents of the type I and type II error probabilities is investigated. Two inner bounds are obtained, one using a separation-based scheme that involves type-based compression and unequal error-protection channel coding, and the other using a joint scheme that incorporates type-based hybrid coding. The separation-based scheme is shown to recover the inner bound obtained by Han and Kobayashi for the special case of a rate-limited noiseless channel, and also the one previously obtained by the authors for a corner point of the trade-off. Finally, we show via an example that the joint scheme achieves a strictly tighter bound than the separation-based scheme for some points of the error-exponents trade-off.

1. Introduction

Hypothesis testing (HT), which refers to the problem of choosing between two or more alternatives based on available data, plays a central role in statistics and information theory. Distributed HT (DHT) problems arise in situations where the test data are scattered across multiple terminals and need to be communicated to a central terminal, called the decision maker, which performs the hypothesis test. The need to jointly optimize the communication scheme and the hypothesis test makes DHT problems much more challenging than their centralized counterparts. Indeed, while an efficient characterization of the optimal hypothesis test and its asymptotic performance is well known in the centralized setting, thanks to [1,2,3,4,5], the same problem in even the simplest distributed setting remains open, except for some special cases (see [6,7,8,9,10,11]).

In this work, we consider a DHT problem with two parties, an observer and a decision maker, such that the former communicates to the latter over a noisy channel. The observer and the decision maker each have access to n independent and identically distributed samples, denoted by and , respectively. Based on the information received from the observer and its own observations , the decision maker performs a binary hypothesis test on the joint distribution of . Our goal is to characterize the trade-off between the best achievable rates of decay (or exponents) of the type I and type II error probabilities with respect to the sample size. We will refer to this problem as DHT over a noisy channel, and to its special instance with the noisy channel replaced by a rate-limited noiseless channel as DHT over a noiseless channel.

1.1. Background

Distributed statistical inference problems were first conceived in [12] and the information-theoretic study of DHT over a noiseless channel was first investigated in [6], where the objective is to characterize Stein’s exponent , i.e., the optimal type II error-exponent subject to the type I error probability constrained to be at most . The authors therein established a multi-letter characterization of this quantity including a strong converse, which shows that is independent of . Furthermore, a single-letter characterization of was obtained for a special case of HT known as testing against independence (TAI), in which the joint distribution factors as a product of the marginal distributions under the alternative hypothesis. Improved lower bounds on were subsequently obtained in [7,8], and the strong converse was extended to zero-rate settings [13]. While all the aforementioned works focus on , the trade-off between the exponents of both the type I and type II error probabilities in the same setting was first explored in [14].

In recent years, there has been renewed interest in distributed statistical inference problems, motivated by emerging machine learning applications to be served at the wireless edge, particularly in the context of semantic communications in 5G/6G communication systems [15,16]. Several extensions of the DHT over a noiseless channel problem have been studied, such as generalizations to multi-terminal settings [9,17,18,19,20,21], DHT under security or privacy constraints [22,23,24,25], DHT with lossy compression [26], interactive settings [27,28], successive refinement models [29], and more. Improved bounds have been obtained on the type I and type II error-exponents region [30,31], and on for testing correlation between bivariate standard normal distributions [32]. In the simpler zero-rate communication setting, there has been some progress in terms of second-order optimal schemes [33], a geometric interpretation of the type I and type II error-exponent region [34], and a characterization of for sequential HT [35]. DHT over noisy communication channels with the goal of characterizing has been considered in [10,11,36,37].

1.2. Contributions

In this work, our objective is to explore the trade-off between the type I and type II error-exponents for DHT over a noisy channel. This problem is a generalization of [14] from noiseless rate-limited channels to noisy channels, and also of [10,11] from a type I error probability constraint to a positive type I error-exponent constraint.

Our main contributions can be summarized as follows:

- (i) We obtain an inner bound (Theorem 1) on the error-exponents trade-off by using a separate HT and channel coding scheme (SHTCC) that is a combination of a type-based (type here refers to the empirical probability distribution of a sequence, see [38]) quantize-bin strategy and the unequal error-protection scheme of [39]. This result is shown to recover the bounds established in [10,14]. Furthermore, we evaluate Theorem 1 for two important instances of DHT, namely TAI and its opposite, i.e., testing against dependence (TAD), in which the joint distribution under the null hypothesis factors as a product of marginal distributions.

- (ii) We also obtain a second inner bound (Theorem 2) on the error-exponents trade-off by using a joint HT and channel coding scheme (JHTCC) based on hybrid coding [40]. Subsequently, we show via an example that the JHTCC scheme strictly outperforms the SHTCC scheme for some points on the error-exponents trade-off.

While the above schemes are inspired by those in [10], which were proposed with the goal of maximizing the type II error-exponent, novel modifications in their design and analysis are required when considering both error-exponents. More specifically, the schemes presented here perform separate quantization-binning or hybrid coding on each individual source sequence type at the observer/encoder (as opposed to a typical ball in [10]), with the corresponding reverse operation implemented at the decision-maker/decoder. This necessitates a different analysis to compute the probabilities of the various error events contributing to the overall error-exponents. We finally mention that the DHT problem considered here was recently investigated in [41], where an inner bound on the error-exponents trade-off (Theorem 2 in [41]) is obtained using a combination of a type-based quantization scheme and the unequal error protection scheme of [42] with two special messages. A qualitative comparison between Theorem 2 and Theorem 2 in [41] seems to suggest that the JHTCC scheme here uses a stronger decoding rule depending jointly on the source-channel statistics. In comparison, the metric used at the decoder for the scheme in [41] decomposes into the sum of two metrics, one which depends only on the source statistics, and the other which depends only on the channel statistics. Importantly, this hints that the inner bound achieved by the JHTCC scheme is not subsumed by that in [41]. That said, a direct computational comparison appears difficult, as evaluating the latter requires optimization over several parameters, as mentioned in the last paragraph of [41].

2. Preliminaries

2.1. Notation

We use the following notation. All logarithms are with respect to the natural base e. , , , and denote the sets of natural, real, non-negative real and extended real numbers, respectively. For , and . Calligraphic letters, e.g., , denote sets, while and stand for its complement and cardinality, respectively. For , denotes the n-fold Cartesian product of , and denotes an element of . Bold-face letters denote vectors or sequences, e.g., for ; its length n will be clear from the context. For such that , , the subscript is omitted when . denotes the indicator of set . For a real sequence , stands for , while denotes . Similar notations apply for other inequalities. , and denote standard asymptotic notations.

Random variables and their realizations are denoted by uppercase and lowercase letters, respectively, e.g., X and x. Similar conventions apply for random vectors and their realizations. The set of all probability mass functions (PMFs) on a finite set is denoted by . The joint PMF of two discrete random variables X and Y is denoted by ; the corresponding marginals are and . The conditional PMF of X given Y is represented by . Expressions such as are to be understood as pointwise equality, i.e., , for all . When the joint distribution of a triple factors as , these variables form a Markov chain . When X and Y are statistically independent, we write . If the entries of are drawn in an independent and identically distributed manner, i.e., if , , then the PMF is denoted by . Similarly, if for all , then we write for . The conditional product PMF given a fixed is designated by . The probability measure induced by a PMF P is denoted by . The corresponding expectation is designated by .

The type or empirical PMF of a sequence is designated by , i.e., . The set of n-length sequences of type is . Whenever the underlying alphabet is clear from the context, is simplified to . The set of all possible types of n-length sequences is . Similar notations are used for larger combinations, e.g., , and . For a given and a conditional PMF , stands for the -conditional type class of .
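As a small computational illustration of these definitions, the following Python sketch computes the type of a sequence, the joint type of a pair of sequences, the size of a type class, and the number of types. Sequences are assumed to be Python strings or lists over a finite alphabet, and all function names are our own illustrative choices rather than notation from the paper.

```python
from collections import Counter
from fractions import Fraction
from math import comb


def empirical_type(seq):
    """Type (empirical PMF) of a sequence: relative frequency of each symbol."""
    n = len(seq)
    return {a: Fraction(c, n) for a, c in Counter(seq).items()}


def joint_type(seq1, seq2):
    """Joint type of two equal-length sequences, i.e., the type of the pair sequence."""
    if len(seq1) != len(seq2):
        raise ValueError("sequences must have the same length")
    return empirical_type(list(zip(seq1, seq2)))


def type_class_size(counts):
    """Size of the type class with symbol counts `counts` (a multinomial coefficient)."""
    size, remaining = 1, sum(counts)
    for c in counts:
        size *= comb(remaining, c)
        remaining -= c
    return size


def num_types(n, alphabet_size):
    """Number of distinct types of n-length sequences over an alphabet of given size."""
    return comb(n + alphabet_size - 1, alphabet_size - 1)


# Illustrative usage (the alphabet {a, b} is an assumption of this sketch).
print(empirical_type("aab"))    # {'a': Fraction(2, 3), 'b': Fraction(1, 3)}
print(type_class_size([2, 1]))  # 3, namely aab, aba, baa
print(num_types(3, 2))          # 4
```

The count returned by `num_types` is exact; the familiar polynomial upper bound (n+1) raised to the alphabet size is what allows type-based arguments to treat the number of types as sub-exponential in n.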

For PMFs , the Kullback–Leibler (KL) divergence between P and Q is . The conditional KL divergence between and given is . The mutual information and entropy terms are denoted by and , respectively, where P denotes the PMF of the relevant random variables. When the PMF is clear from the context, the subscript is omitted. For , the empirical conditional entropy of given is , where . For a given function and a random variable , the log-moment generating function of Z with respect to f is whenever the expectation exists. Finally, let

denote the rate function (see, e.g., Definition 15.5 in [43]).
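The following Python sketch evaluates the KL divergence, the empirical conditional entropy, the log-moment generating function and the rate function numerically. It assumes PMFs are given as dictionaries from symbols to probabilities and that the rate function in (1) takes the usual Legendre-transform form, i.e., the supremum over real λ of λθ minus the log-moment generating function at λ. The supremum is approximated by a ternary search over a bounded interval of λ, which is our own simplification: the bound `lam_max` is an assumption and can truncate the supremum when the maximizer is very large or the supremum is infinite.

```python
import math
from collections import Counter


def kl_divergence(p, q):
    """D(P||Q) in nats for PMFs given as dicts; equals +inf unless P << Q."""
    total = 0.0
    for a, pa in p.items():
        if pa == 0:
            continue
        qa = q.get(a, 0.0)
        if qa == 0:
            return math.inf
        total += pa * math.log(pa / qa)
    return total


def _entropy_of_counts(counts, n):
    """Entropy (nats) of the empirical PMF with the given symbol counts out of n."""
    return -sum((c / n) * math.log(c / n) for c in counts.values() if c > 0)


def empirical_conditional_entropy(u, v):
    """H_e(u|v): entropy of the joint type of (u, v) minus the entropy of the type of v."""
    n = len(u)
    return _entropy_of_counts(Counter(zip(u, v)), n) - _entropy_of_counts(Counter(v), n)


def log_mgf(pmf, f, lam):
    """psi(lam) = log E[exp(lam * f(Z))], evaluated with log-sum-exp for stability."""
    terms = [math.log(pz) + lam * f(z) for z, pz in pmf.items() if pz > 0]
    m = max(terms)
    return m + math.log(sum(math.exp(t - m) for t in terms))


def rate_function(pmf, f, theta, lam_max=50.0, iters=200):
    """Approximate sup over lam of [lam * theta - psi(lam)].

    Assumption: lam is restricted to [-lam_max, lam_max]; the objective is concave
    in lam, so ternary search converges to the maximizer within that interval.
    """
    def objective(lam):
        return lam * theta - log_mgf(pmf, f, lam)

    lo, hi = -lam_max, lam_max
    for _ in range(iters):
        m1, m2 = lo + (hi - lo) / 3, hi - (hi - lo) / 3
        if objective(m1) < objective(m2):
            lo = m1  # maximizer lies in [m1, hi]
        else:
            hi = m2  # maximizer lies in [lo, m2]
    return objective((lo + hi) / 2)


# Sanity check on a Bernoulli(0.3) variable with f the identity map.
bern = {0: 0.7, 1: 0.3}
rate = rate_function(bern, lambda z: z, 0.5)
kl = kl_divergence({0: 0.5, 1: 0.5}, bern)
```

For a Bernoulli(0.3) random variable with f the identity map, the rate function at θ in (0, 1) equals the divergence between Bernoulli(θ) and Bernoulli(0.3), so `rate` and `kl` above should agree up to numerical error.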

2.2. Problem Formulation

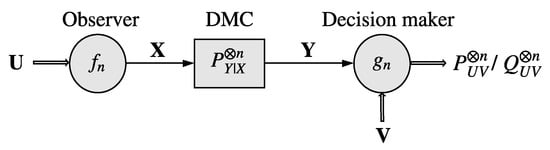

Let , , and be finite sets, and . The DHT over a noisy channel setting is depicted in Figure 1. Herein, the observer and the decision maker observe n independent and identically distributed samples, denoted by and , respectively. Based on its observations , the observer outputs a sequence as the channel input sequence (note that the ratio of the number of channel uses to the number of data samples, termed the bandwidth ratio, is taken to be 1 for simplicity; however, our results easily generalize to arbitrary bandwidth ratios). The discrete memoryless channel (DMC) with transition kernel produces a sequence according to the probability law as its output. We will assume that , , where indicates the absolute continuity of P with respect to Q. Based on its observations, and , the decision maker performs binary HT on the joint probability distribution of with the null () and alternative () hypotheses given by

Figure 1.

DHT over a noisy channel. The observer observes an n-length independent and identically distributed sequence , and transmits over the DMC . Based on the channel output and the n-length independent and identically distributed sequence , the decision maker performs a binary HT to determine whether or .

The decision maker outputs as the decision of the hypothesis test, where 0 and 1 denote and , respectively.

A length-n DHT code is a pair of functions , where

- (i) denotes the encoding function;

- (ii) denotes a deterministic decision function specified by an acceptance region (for null hypothesis ) as .

We emphasize at this point that there is no loss of generality in restricting our attention to a deterministic decision function for the objective of characterizing the error-exponents trade-off in HT (see, e.g., Lemma 3 in [24]).

A code induces the joint PMFs and under the null and alternative hypotheses, respectively, where

and

respectively. For a given code , the type I and type II error probabilities are and respectively. The following definition formally states the error-exponents trade-off we aim to characterize.

Definition 1

(Error-exponent region). An error-exponent pair is said to be achievable if there exists a sequence of codes such that

The error-exponent region is the closure of the set of all achievable error-exponent pairs . Set , where and .

We are interested in a computable characterization of , which pertains to the region of positive error-exponents (i.e., excluding the boundary points corresponding to Stein’s exponent). To this end, we present two inner bounds on in the next section.

3. Main Results

In this section, we obtain two inner bounds on , first using a separation-based scheme which performs independent HT and channel coding, termed the SHTCC scheme, and the second via a joint HT and channel coding scheme that uses hybrid coding for communication between the observer and the decision maker.

3.1. Inner Bound on via SHTCC Scheme

Let and be a PMF under which forms a Markov chain. For , let and define

where the rate function is defined in (1). For a fixed and , let

denote the expurgated exponent [38,44]. Let be a finite set and denote the set of all continuous mappings from to , where is the set of all conditional distributions . Set , , . Denote an arbitrary element of by , and set

We have the following lower bound for , which translates to an inner bound for .

Theorem 1

(Inner bound via SHTCC scheme). where

The proof of Theorem 1 is presented in Section 4.1. The SHTCC scheme, which achieves the error-exponent pair , is a coding scheme analogous to separate source and channel coding for the lossy transmission of a source over a communication channel with correlated side-information at the receiver [45], but with the objective of reliable HT. In this scheme, the source samples are first compressed to an index, which acts as the message to be transmitted over the channel. However, in contrast to standard communication problems, certain messages need to be protected more reliably than others; hence, an unequal error-protection scheme [39,42] is used. Briefly, the SHTCC scheme involves the quantization and binning of sequences whose type is within a -neighborhood (in terms of KL divergence) of , using as side information at the decision maker for decoding, together with the unequal error-protection channel coding scheme of [39] for protecting a special message which informs the decision maker that lies outside the -neighborhood of . The output of the channel decoder is processed by an empirical conditional entropy decoder, which recovers the quantization codeword with the least empirical conditional entropy given . Since this decoder depends only on the empirical distributions of the observations, it is universal and hence useful in the hypothesis testing context, where multiple distributions are involved (as was first noted in [8]). The various factors to in (7) have natural interpretations in terms of events that could result in a hypothesis testing error. Specifically, and correspond to the error events arising due to quantization and binning, respectively, while and correspond to the error events of wrongly decoding an ordinary channel codeword and the special message codeword, respectively.
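The decoding step described above, which selects among the quantization codewords sharing the received bin index the one with the least empirical conditional entropy given the decision maker's sequence, can be sketched in Python as follows. The function names, the uniform random binning helper and the tie-breaking rule (first minimizer in index order) are hypothetical choices made for illustration; the channel decoding and the final test are not modeled.

```python
import math
import random
from collections import Counter


def empirical_conditional_entropy(w, v):
    """H_e(w|v) in nats: entropy of the joint type of (w, v) minus that of the type of v."""
    n = len(w)

    def h(counts):
        return -sum((c / n) * math.log(c / n) for c in counts.values() if c > 0)

    return h(Counter(zip(w, v))) - h(Counter(v))


def uniform_binning(num_codewords, num_bins, rng):
    """Hypothetical helper: assign each codeword index to a bin uniformly at random."""
    return [rng.randrange(num_bins) for _ in range(num_codewords)]


def min_entropy_decode(codebook, bins, bin_index, v):
    """Index of the codeword in bin `bin_index` with the least empirical conditional
    entropy given v; ties are broken in favor of the smallest index."""
    candidates = [j for j, b in enumerate(bins) if b == bin_index]
    if not candidates:
        raise ValueError("empty bin")
    return min(candidates, key=lambda j: empirical_conditional_entropy(codebook[j], v))


# Usage: codewords 0 and 1 share bin 0; the side information singles out index 0.
codebook = ["0101", "0011", "1100"]
bins = [0, 0, 1]
print(min_entropy_decode(codebook, bins, 0, "0101"))  # 0
```

Because the rule only looks at joint types, it needs no knowledge of which hypothesis generated the data, which is the universality property mentioned in the text.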

Remark 1

(Generalization of Han–Kobayashi inner bound). In Theorem 1 in [14], Han and Kobayashi obtained an inner bound on for DHT over a noiseless channel. At a high level, their coding scheme involves type-based quantization of sequences, whose type lies within a -neighborhood of , where is the desired type I error-exponent. As a corollary, Theorem 1 recovers the lower bound for obtained in [14] by setting , and to ∞, which hold when the channel is noiseless, and maximizing over the set in (7). Then, note that the terms , and all equal ∞, and thus the inner bound in Theorem 1 reduces to that given in Theorem 1 in [14].

Remark 2

(Improvement via time-sharing). Since the lower bound on in Theorem 1 is not necessarily concave, a tighter bound can be obtained using the technique of time-sharing similar to Theorem 3 in [14]. We omit its description, as it is cumbersome, although straightforward.

Theorem 1 also recovers the lower bound for the optimal type II error-exponent for a fixed type I error probability constraint established in Theorem 2 in [10] by letting . The details are provided in Appendix A. Further, specializing the lower bound in Theorem 1 to the case of TAI, i.e., when , we obtain the following corollary which characterizes the optimal type II error-exponent for TAI established in Proposition 7 in [10] as a special case.

Corollary 1

The proof of Corollary 1 is given in Section 4.2. Its achievability follows from a special case of the SHTCC scheme without binning at the encoder.

Next, we consider testing against dependence (TAD) for which is an arbitrary joint distribution and . Theorem 1 specialized to TAD gives the following corollary.

Corollary 2

The proof of Corollary 2 is given in Section 4.3. Note that the expression for given in (11) is simpler to compute than that in Theorem 1. This will be handy in showing that the JHTCC scheme strictly outperforms the SHTCC scheme, as illustrated via an example in Section 3.3 below.

3.2. Inner Bound via JHTCC Scheme

It is well known that joint source-channel coding schemes offer advantages over separation-based coding schemes in several information theoretic problems, such as the transmission of correlated sources over a multiple-access channel [40,46] and the error-exponent in the lossless or lossy transmission of a source over a noisy channel [42,47]. Recently, it was shown via an example in [10] that joint schemes also achieve a strictly larger type II error-exponent in DHT problems compared to a separation-based scheme in some scenarios. Motivated by this, we present an inner bound on using a generalization of the JHTCC scheme in [10].

Let and be arbitrary finite sets, and denote the set of all continuous mappings from to , where is the set of all conditional distributions . Let denote an arbitrary element of , and define

Then, we have the following result.

Theorem 2

(Inner bound via JHTCC scheme).

where

The proof of Theorem 2 is given in Section 4.4, and utilizes a generalization of the hybrid coding scheme [40] to achieve the stated inner bound. Specifically, the error-exponent pair is achieved using type-based hybrid coding, while is realized by uncoded transmission, in which the channel input is generated as the output of a DMC with input (along with time sharing). In standard hybrid coding, the source sequence is first quantized via joint typicality and the channel input is then chosen as a function of both the original source sequence and its quantization. At the decoder, the quantized codeword is first recovered using the channel output and side information via joint typicality decoding, and an estimate of the source sequence is output as a function of the channel output and the recovered codeword. The quantization forms the digital part of the scheme, while the use of the source sequence for encoding and of the channel output for decoding comprises the analog part. The scheme derives its name from these joint hybrid digital-analog operations. In the HT context considered here, the aforementioned source quantization is replaced by a type-based quantization at the encoder, and the joint typicality decoder is replaced by a universal empirical conditional entropy decoder. We note that Theorem 2 recovers the lower bound on the optimal type II error-exponent proved in Theorem 5 in [10]. The details are provided in Appendix B.
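To illustrate the uncoded transmission component only, the Python sketch below draws each channel input symbol independently from a conditional PMF given the corresponding source symbol, and then passes the result through the DMC. Kernels are represented as dictionaries of dictionaries, which is an assumption of this sketch; the type-based hybrid coding part and the time sharing mentioned above are not modeled, and the helper names are hypothetical.

```python
import random


def apply_memoryless_kernel(seq, kernel, rng):
    """Draw out[i] ~ kernel[seq[i]] independently for each i (a memoryless map)."""
    out = []
    for s in seq:
        symbols = list(kernel[s].keys())
        weights = list(kernel[s].values())
        out.append(rng.choices(symbols, weights=weights)[0])
    return out


def uncoded_transmission(u, input_kernel, channel, rng):
    """Channel input x is the output of a DMC with input u; y is the channel output."""
    x = apply_memoryless_kernel(u, input_kernel, rng)
    y = apply_memoryless_kernel(x, channel, rng)
    return x, y


def bsc(p):
    """Binary symmetric channel with crossover probability p, as a nested dict."""
    return {0: {0: 1 - p, 1: p}, 1: {0: p, 1: 1 - p}}


# Usage: identity input map (bsc(0.0)) followed by a BSC(0.1) channel.
rng = random.Random(0)
u = [rng.randrange(2) for _ in range(20000)]
x, y = uncoded_transmission(u, bsc(0.0), bsc(0.1), rng)
flip_rate = sum(xi != yi for xi, yi in zip(x, y)) / len(x)
```

With the identity input map the channel input equals the source sequence, and the observed flip rate concentrates around the crossover probability 0.1.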

Next, we provide a comparison between the SHTCC and JHTCC bounds via an example as mentioned earlier.

3.3. Comparison of Inner Bounds

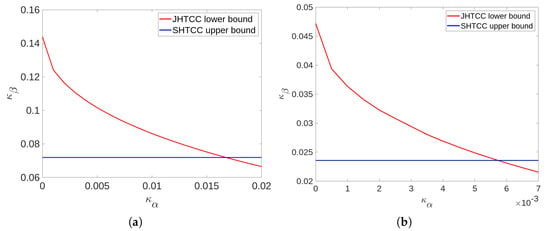

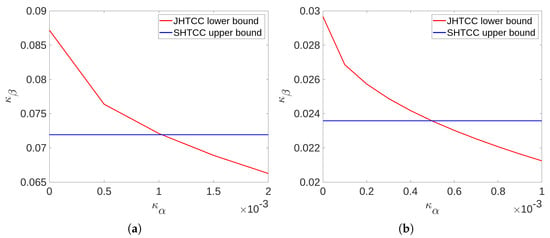

We compare the inner bounds established in Theorem 1 and Theorem 2 for a simple setting of TAD over a BSC. For this purpose, we will use the inner bound stated in Corollary 2 and that is achieved by uncoded transmission. Our objective is to illustrate that the JHTCC scheme achieves a strictly tighter bound on compared to the SHTCC scheme, at least for some points of the trade-off.

Example 1.

Let , ,

A comparison of the inner bounds achieved by the SHTCC and JHTCC schemes for the above example is shown in Figure 2 and Figure 3, where we plot the error-exponents trade-off achieved by uncoded transmission (a lower bound for the JHTCC scheme), and the expurgated exponent at zero rate:

which is an upper bound on for any . To compute , we used the closed-form expression for given in Problem 10.26(c) in [38]. It can be seen that the JHTCC scheme outperforms the SHTCC scheme for below a threshold, which depends on the source and channel distributions. In particular, the threshold below which improvement is seen is reduced when the channel or the source becomes more uniform. The former behavior can be seen by comparing subplots (a) and (b) within Figure 2 (or within Figure 3), while the latter can be noted by comparing Figure 2a with Figure 3a, or Figure 2b with Figure 3b.
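The zero-rate expurgated exponent used as a reference in this comparison can be computed numerically for a binary-input DMC. The Python sketch below assumes the standard Csiszár–Körner form of the expurgated exponent, in which the exponent at rate R is a minimum over couplings of two copies of the input PMF with mutual information at most R; at R = 0 this forces the two copies to be independent, so the exponent reduces to the expected Bhattacharyya distance between independent inputs. The maximization over input PMFs uses a grid, which is our own simplification. For the BSC, a short calculation (uniform input, by symmetry) gives the closed form (1/4) log(1/(4p(1−p))), used below as a check.

```python
import math


def bhattacharyya_distance(W, x, x2):
    """d_B(x, x') = -log sum_y sqrt(W(y|x) W(y|x')); infinite if the rows are disjoint."""
    coeff = sum(math.sqrt(a * b) for a, b in zip(W[x], W[x2]))
    return math.inf if coeff == 0 else -math.log(coeff)


def zero_rate_expurgated(W, P):
    """E_x(0, P) = sum_{x != x'} P(x) P(x') d_B(x, x'), with X, X' independent.

    Diagonal terms vanish since d_B(x, x) = 0; zero-probability inputs are skipped.
    """
    total = 0.0
    for x, px in enumerate(P):
        for x2, px2 in enumerate(P):
            if x != x2 and px > 0 and px2 > 0:
                total += px * px2 * bhattacharyya_distance(W, x, x2)
    return total


def max_zero_rate_expurgated_binary(W, grid=10001):
    """Maximize E_x(0, P) over binary input PMFs P = (q, 1 - q) on a uniform grid of q."""
    return max(zero_rate_expurgated(W, (k / (grid - 1), 1 - k / (grid - 1)))
               for k in range(grid))


def bsc(p):
    """Transition matrix W[x][y] of a BSC with crossover probability p."""
    return [[1 - p, p], [p, 1 - p]]


p = 0.1
numeric = max_zero_rate_expurgated_binary(bsc(p))
closed_form = 0.25 * math.log(1 / (4 * p * (1 - p)))
```

The grid contains q = 1/2 exactly, so for the BSC the numerical maximum coincides with the closed form up to rounding.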

Figure 2.

Comparison of the error-exponents trade-off achieved by the SHTCC and JHTCC schemes for TAD over a BSC in Example 1 with parameters for (a) and for (b). The red curve shows pairs achieved by uncoded transmission while the blue line plots . The joint scheme clearly achieves a better error-exponent trade-off for values of below a threshold which depends on the transition kernel of the channel. In particular, a more uniform channel results in a smaller threshold.

Figure 3.

Comparison of the error-exponents trade-off achieved by the SHTCC and JHTCC schemes for Example 1 with parameters for (a) and for (b). The JHTCC scheme improves over the separation-based scheme for small values of ; however, the region of improvement is reduced compared to Figure 2 as the source is more uniformly distributed.

4. Proofs

4.1. Proof of Theorem 1

We will show the achievability of the error-exponent pair by constructing a suitable ensemble of HT codes, and showing that the expected type I and type II error probabilities (over this ensemble) satisfy (5) for the pair . Then, an expurgation argument [44] will be used to show the existence of a HT code that satisfies (5) for the same error-exponent pair, thus showing that as desired.

Let , , , , , and be a small number. Additionally, suppose that satisfies

where and are defined in (6b) and (6c), respectively. The SHTCC scheme is as follows:

- Encoding: The observer’s encoder is composed of two stages, a source encoder followed by a channel encoder.

- Source encoder: The source encoding comprises a quantization scheme followed by binning to reduce the rate if necessary.

- Quantization codebook: Let

Let

and denote a random quantization codebook such that the codeword , if for some . Denote a realization of by .

Quantization scheme: For a given codebook and such that for some , let

If , let denote an index selected uniformly at random from the set ; otherwise, set . Denoting the support of by , we have for sufficiently large n that

where the last inequality uses (16b) and .

Binning: If , then the source encoder performs binning as described below. Let , , and Note that

Let denote the random binning function such that for each , for , and with probability one. Denote a realization of by , where . Given a codebook and binning function , the source encoder outputs for . If , then is taken to be the identity map (no binning), and in this case, .

Channel codebook: Let denote a random channel codebook generated as follows. Without loss of generality, denote the elements of the set as . The codeword length n is divided into blocks, where the length of the first block is , the second block is , and so on, and the length of the last block is chosen such that the total length is n. For , let and , where the empty sum is defined to be zero. Let be such that , i.e., the elements of equal i in the i-th block for . Let with probability one, and the remaining codewords be constant composition codewords [38] selected such that , where is such that is non-empty and . Denote a realization of by . Note that for and large n, the codeword pair has joint type approximately equal to .
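The block structure of the special codeword can be sketched in Python as follows, with the channel input alphabet relabeled as {0, …, k−1}. The sketch assumes, purely as a hypothetical choice for illustration, that every block except the last has length ⌊n·P(i)⌋ for some input PMF P, with the last block filling the remaining positions; the function name is likewise our own.

```python
import math


def special_codeword(n, pmf):
    """Hypothetical construction: symbol i fills the i-th block.

    Assumption: blocks 0..k-2 have length floor(n * pmf[i]); the last block takes
    the remaining positions, so the codeword always has length exactly n.
    """
    k = len(pmf)
    lengths = [math.floor(n * pmf[i]) for i in range(k - 1)]
    lengths.append(n - sum(lengths))  # non-negative since pmf[:k-1] sums to at most 1
    codeword = []
    for symbol, length in enumerate(lengths):
        codeword.extend([symbol] * length)
    return codeword


print(special_codeword(10, [0.25, 0.25, 0.5]))  # [0, 0, 1, 1, 2, 2, 2, 2, 2, 2]
```

Under this assumption the type of the special codeword approaches the chosen PMF as n grows, which mirrors the approximate joint type statement above.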

Channel encoder: For a given , the channel encoder outputs for output m from the source encoder. Denote this map by .

Encoder: Denote by the encoder induced by all the above operations, i.e., .

Decision function: The decision function consists of three parts, a channel decoder, a source decoder and a tester.

Channel decoder: The channel decoder first performs a Neyman–Pearson test on the channel output according to , where

If , then . Else, for a given , maximum likelihood (ML) decoding is done on the remaining set of codewords , and is set equal to the ML estimate. Denote the channel decoder induced by the above operations by , where .
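Once the special message has been ruled out, the decoder applies ML decoding to the remaining codewords; for a DMC this amounts to maximizing the sum of per-letter log-likelihoods. The Python sketch below covers only this ML step (the Neyman–Pearson test in (20) is not reproduced). It assumes the channel is given as a transition matrix `W[x][y]` and codewords as integer lists; the `exclude` argument is a hypothetical name standing in for removing a codeword from the candidate set.

```python
import math


def log_likelihood(y, x, W):
    """log W^n(y|x) = sum_i log W(y_i|x_i) for a DMC with transition matrix W[x][y]."""
    total = 0.0
    for xi, yi in zip(x, y):
        prob = W[xi][yi]
        if prob == 0:
            return -math.inf
        total += math.log(prob)
    return total


def ml_decode(y, codebook, W, exclude=()):
    """Index of the codeword, among those not in `exclude`, maximizing W^n(y|x).

    Ties in the computed score are broken in favor of the smallest index.
    """
    candidates = [m for m in range(len(codebook)) if m not in exclude]
    return max(candidates, key=lambda m: log_likelihood(y, codebook[m], W))


# Illustrative BSC(0.1) example.
W = [[0.9, 0.1], [0.1, 0.9]]
codebook = [[0, 0, 0, 0], [1, 1, 1, 1], [1, 1, 0, 1]]
print(ml_decode([1, 1, 1, 0], codebook, W))               # 1
print(ml_decode([1, 1, 1, 0], codebook, W, exclude={1}))  # 2
```

On a BSC with crossover below 1/2, this rule reduces to minimum Hamming distance decoding, which is why codeword 1 (one mismatch) is chosen first and codeword 2 (two mismatches) once codeword 1 is excluded.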

For a given codebook , the channel encoder–decoder pair described above induces a distribution

Note that , for and for . Then, it follows by an application of Proposition A1 proved in Appendix C that for any and n sufficiently large, the Neyman–Pearson test in (20) yields

Moreover, given , a random coding argument over the ensemble of (see Exercises 10.18 and 10.24 in [38,44]) shows that there exists a deterministic codebook such that (21a) and (21b) hold, and the ML decoding described above asymptotically achieves

This deterministic codebook is used for channel coding.

Source decoder: For a given codebook and inputs and , the source decoder first decodes for the quantization codeword (if required) using the empirical conditional entropy decoder, and then declares the output of the hypothesis test based on and . More specifically, if binning is not performed, i.e., if , . Otherwise, , where if and otherwise. Denote the source decoder induced by the above operations by .

Testing and Acceptance region: If , is declared. Otherwise, or is declared depending on whether or , respectively, where denotes the acceptance region for as specified next. For a given codebook , let denote the set of such that the source encoder outputs , . For each and , let

where ,

For , set , and define the acceptance region for at the decision maker as or equivalently as . Note that is the same as the acceptance region for in Theorem 1 in [14]. Denote the decision function induced by , and by .

Induced probability distribution: The PMFs induced by a code with respect to codebook under and are

respectively. For simplicity, we will denote the above distributions by and . Let , , and denote the random codebook, its support, and the probability measure induced by its random construction, respectively. Additionally, define and .

Analysis of the type I and type II error probabilities: We analyze the type I and type II error probabilities averaged over the random ensemble of quantization and binning codebooks . Then, an expurgation technique [44] guarantees the existence of a sequence of deterministic codebooks and a code that achieves the lower bound given in Theorem 1.

Type I error probability: In the following, random sets where the randomness is induced due to will be written using blackboard bold letters, e.g., for the random acceptance region for . Note that a type I error can occur only under the following events:

- (i)

- (ii)

- (iii)

- (iv)

- (v)

- .

Here, corresponds to the event that there does not exist a quantization codeword corresponding to at least one sequence of type ; corresponds to the event in which there is no error at the channel decoder or at the empirical conditional entropy decoder; and corresponds to the case in which there is an error at the channel decoder (hence also at the empirical conditional entropy decoder); and corresponds to the case that there is an error (due to binning) only at the empirical conditional entropy decoder. For the event , it follows from a slight generalization of the type-covering lemma (Lemma 9.1 in [38]) that

Since for , the event may be safely ignored in the analysis of the error-exponents. Given that holds for some , it follows from Equation (4.22) in [14] that

for sufficiently large n since the acceptance region is the same as that in Theorem 1 in [14].

Next, consider the event . We have for sufficiently large n that

where

- (a) holds since the event is equivalent to ;

- (b)

Additionally, the probability of can be upper bounded as

where (27) is due to (24), the definition of in (15), and Lemmas 2.2 and 2.6 in [38].

Finally, consider the event . Note that this event occurs only when . Additionally, iff , and hence and implies that . Let

We have

The second term in (28) can be upper bounded as

where the inequality in (29) follows from Equation (4.22) in [14] for sufficiently large n since the acceptance region is the same as that in [14]. To bound the first term in (28), define , and observe that since implies , we have

where follows since by the symmetry of the source encoder, binning function and random codebook construction, the term in (30) is independent of ; holds since implies that and form a Markov chain. Defining , and the event , we obtain

where

- (a) follows since is the uniform binning function independent of ;

- (b) holds due to the fact that if , then implies that with probability one for some ;

- (c) holds since , which follows similarly to Equation (101) in [10].

Continuing, we can write for sufficiently large n,

where and . In the above,

- (a) uses Lemma 2.3 in [38] and the fact that the codewords are chosen uniformly at random from ;

- (b) follows since the total number of sequences such that and is upper bounded by , and ;

- (c) holds due to Lemma 2.2 in [38];

- (d)

By the choice of , it follows from (24), (25), (26), (27) and (34) that the type I error probability is upper bounded by for large n.

Type II error probability: We analyze the type II error probability averaged over . A type II error can occur only under the following events:

- (i)

- (ii)

- (iii)

- (iv)

Similar to (24), it follows that . Hence, we may assume that holds for the type II error-exponent analysis. It then follows from the analysis in Equations (4.23)–(4.27) in [14] that for sufficiently large n,

The analysis of the error events , and follows similarly to that in the proof of Theorem 2 in [10], and results in

Since the exponent of the type II error probability is lower bounded by the minimum of the exponents of the type II error-causing events, we have shown that for a fixed and sufficiently large n,

where

Expurgation: To complete the proof, we extract a deterministic codebook that satisfies

For this purpose, remove a set of codebooks with the highest type I error probabilities such that the remaining set has a probability of , i.e., . Then, it follows from (35a) and (35b) that for all ,

where is a PMF. Perform one more similar expurgation step to obtain such that for all sufficiently large n

Maximizing over and noting that is arbitrary completes the proof.
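The expurgation steps above rest on Markov's inequality: if a nonnegative error probability averaged over the random codebook ensemble is at most ε, and the codebooks with the largest errors are discarded until a probability mass of at least κ has been removed, then every surviving codebook has error at most ε/κ. The Python sketch below implements this greedy removal for a finite ensemble, with a hypothetical helper `expurgate` and an illustrative uniform ensemble; the specific removal fractions used in the proof are not reproduced here.

```python
import random


def expurgate(errors, probs, removed_mass):
    """Greedy expurgation over a finite codebook ensemble.

    Discards codebooks in order of decreasing error until the discarded
    probability mass is at least `removed_mass`, and returns the indices of
    the surviving codebooks. By Markov's inequality, every survivor has error
    at most (average error) / removed_mass.
    """
    order = sorted(range(len(errors)), key=lambda i: errors[i], reverse=True)
    discarded, k = 0.0, 0
    while k < len(order) and discarded < removed_mass:
        discarded += probs[order[k]]
        k += 1
    return order[k:]


if __name__ == "__main__":
    rng = random.Random(0)
    n_books = 1000
    probs = [1.0 / n_books] * n_books                     # uniform ensemble (illustrative)
    errors = [rng.random() ** 4 for _ in range(n_books)]  # hypothetical error probabilities
    average = sum(p * e for p, e in zip(probs, errors))
    survivors = expurgate(errors, probs, removed_mass=0.5)
    worst = max(errors[i] for i in survivors)
    print(f"average={average:.4f} worst survivor={worst:.4f} Markov bound={average / 0.5:.4f}")
```

A second expurgation step with respect to a different error criterion is the same operation applied to the survivors; each step inflates the error bound only by the constant factor 1/κ, which leaves the exponents unchanged.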

4.2. Proof of Corollary 1

Consider and . Then, . Additionally, for any , we have

where is due to the non-negativity of KL divergence and since ; is because of the monotonicity of KL divergence (Theorem 2.14 in [43]); follows since for , for some . Minimizing over all yields that

where the inequality above follows from (36). Next, since , we have that . Additionally, by the non-negativity of KL divergence

where the final equality holds since for . The claim in (8) now follows from Theorem 1.
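The chain of inequalities above uses two elementary properties of KL divergence: non-negativity and monotonicity under marginalization, D(P_XY||Q_XY) ≥ D(P_X||Q_X). As a numerical sanity check only (not part of the proof), the Python sketch below evaluates both on randomly drawn joint PMFs. The helper names and the row-major storage of joint PMFs are our own choices, and divergences are in bits.

```python
import math
import random


def kl_divergence(p, q):
    """D(p || q) in bits for PMFs given as flat lists (returns inf if p is not << q)."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0:
            if qi == 0:
                return math.inf
            total += pi * math.log2(pi / qi)
    return total


def random_pmf(size, rng):
    """A random strictly positive PMF of the given size."""
    weights = [rng.random() + 1e-3 for _ in range(size)]
    s = sum(weights)
    return [w / s for w in weights]


def marginal_x(joint, nx, ny):
    """X-marginal of a joint PMF stored row-major as joint[x * ny + y]."""
    return [sum(joint[x * ny + y] for y in range(ny)) for x in range(nx)]


if __name__ == "__main__":
    rng = random.Random(1)
    nx, ny = 3, 4
    p_xy, q_xy = random_pmf(nx * ny, rng), random_pmf(nx * ny, rng)
    d_joint = kl_divergence(p_xy, q_xy)
    d_marg = kl_divergence(marginal_x(p_xy, nx, ny), marginal_x(q_xy, nx, ny))
    print(d_joint >= d_marg >= 0)
```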

Next, we prove (10). Note that and since and . Hence, we have

Additionally, , and . By choosing where is the capacity-achieving input distribution, we have . Then, it follows from (8) and the continuity of , and in that . On the other hand, follows from the converse proof in Proposition 7 in [10]. The proof of the cardinality bound follows from a standard application of the Eggleston–Fenchel–Carathéodory theorem (Theorem 18 in [48]), thus completing the proof.
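The step above uses the capacity-achieving input distribution of the channel. When the channel is given as a transition matrix, such a distribution can be computed numerically with the Blahut–Arimoto algorithm. The sketch below is a standard textbook version (our addition; the function name `blahut_arimoto` is hypothetical). It returns the lower bound log Σ_x p(x) exp(D(W(·|x)||q)), which never exceeds the capacity, and stops once that bound is within `tol` of the upper bound max_x D(W(·|x)||q).

```python
import math


def blahut_arimoto(W, tol=1e-12, max_iter=10_000):
    """Capacity (bits) and an input PMF for a DMC with W[x][y] = P(y | x)."""
    nx, ny = len(W), len(W[0])
    p = [1.0 / nx] * nx  # start from the uniform input distribution
    lower = 0.0
    for _ in range(max_iter):
        # Output distribution induced by the current input PMF.
        q = [sum(p[x] * W[x][y] for x in range(nx)) for y in range(ny)]
        # d[x] = D(W(.|x) || q) in nats.
        d = [
            sum(W[x][y] * math.log(W[x][y] / q[y]) for y in range(ny) if W[x][y] > 0)
            for x in range(nx)
        ]
        # Multiplicative update p(x) <- p(x) exp(d[x]) / Z.
        w = [p[x] * math.exp(d[x]) for x in range(nx)]
        z = sum(w)
        lower, upper = math.log(z), max(d)  # lower <= capacity <= upper (nats)
        p = [wx / z for wx in w]
        if upper - lower < tol:
            break
    return lower / math.log(2), p


if __name__ == "__main__":
    flip = 0.11
    cap, p_opt = blahut_arimoto([[1 - flip, flip], [flip, 1 - flip]])
    print(f"BSC({flip}) capacity ~ {cap:.6f} bits, input PMF ~ {p_opt}")
```

For the binary symmetric channel, the uniform input is optimal and the result matches 1 − h_2(0.11).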

4.3. Proof of Corollary 2

Specializing Theorem 1 to TAD, note that since and . Additionally, for , . Hence,

Then, we have

where follows from the data-processing inequality for KL divergence (Theorem 2.15 in [43]); holds since implies that and for some . Next, note that since , . Additionally,

where

- (a)

- is obtained by taking and in the definition of . This implies that , and hence that the first term on the right-hand side (RHS) of (38a) is zero;

- (b)

- is due to for .

Since is a non-increasing function of R and , selecting maximizes . Then, (11) follows from (37), (38b) and (38c).
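The derivation above invokes the data-processing inequality for KL divergence (Theorem 2.15 in [43]): passing two distributions through the same channel W cannot increase their divergence. The Python sketch below is a numerical illustration only, with helper names of our own choosing. It draws random input PMFs and a random stochastic kernel and checks that D(PW||QW) ≤ D(P||Q), with equality for the identity channel and zero divergence for a channel whose output ignores its input.

```python
import math
import random


def kl_divergence(p, q):
    """D(p || q) in bits; assumes q > 0 wherever p > 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def through_channel(p, W):
    """Output PMF obtained by passing input PMF p through W[x][y] = P(y | x)."""
    return [sum(p[x] * W[x][y] for x in range(len(p))) for y in range(len(W[0]))]


def random_pmf(size, rng):
    """A random strictly positive PMF of the given size."""
    weights = [rng.random() + 1e-3 for _ in range(size)]
    s = sum(weights)
    return [w / s for w in weights]


def dpi_gap(nx=4, ny=3, seed=0):
    """D(P||Q) - D(PW||QW) for random P, Q and a random channel W (should be >= 0)."""
    rng = random.Random(seed)
    p, q = random_pmf(nx, rng), random_pmf(nx, rng)
    W = [random_pmf(ny, rng) for _ in range(nx)]
    return kl_divergence(p, q) - kl_divergence(through_channel(p, W), through_channel(q, W))


if __name__ == "__main__":
    print(min(dpi_gap(seed=s) for s in range(100)))
```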

Next, we prove (12). Note that , where , , and since and ,

Additionally, . By choosing (defined above (6a)) that maximizes , we have

where (39c) is due to . The latter in turn follows similarly to (A10) and (A11) from the definition of . From (11), (39a,39b,39c), and the continuity of , in , (12) follows. The cardinality bound in the RHS of (39a) follows via a standard application of the Eggleston–Fenchel–Carathéodory Theorem (Theorem 18 in [48]). To see this, note that it suffices to preserve , and , all of which can be written as linear combinations of functionals of with weights . Thus, points suffice to preserve , and one more point each suffices for and . This completes the proof.

4.4. Proof of Theorem 2

We will show that the error-exponent pairs and are achieved by a hybrid coding scheme and an uncoded transmission scheme, respectively. First, we describe the hybrid coding scheme.

Let , , , and . Further, let be a small number, and choose a sequence , where satisfies . Set .

Encoding: The encoder performs type-based quantization followed by hybrid coding [40]. The details are as follows:

Quantization codebook: Let be as defined in (15). Consider some ordering on the types in and denote the elements as , . For each joint type such that and , choose a joint type variable , , such that and , where . Define , for and . Let denote a random quantization codebook such that for , each codeword , , is independently selected from according to the uniform distribution, i.e., . Let denote a realization of .

Type-based hybrid coding: For such that for some , let

If , let denote an index selected uniformly at random from the set ; otherwise, set . Given and , the quantizer outputs , where the support of is . Note that for sufficiently large n, it follows similarly to (18) that . For a given and , the encoder transmits if , and if .
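The quantization codebook above draws each codeword independently and uniformly from a type class. One simple way to do this, sketched below assuming the type is specified by integer symbol counts, is to shuffle a fixed sequence of that type: a uniformly random permutation produces every distinct arrangement equally often, because each arrangement arises from the same number of permutations (the product of the factorials of the counts). The helpers `sample_from_type_class` and `random_type_codebook` are hypothetical.

```python
import random
from collections import Counter


def sample_from_type_class(counts, rng):
    """Draw one sequence uniformly from the type class with the given symbol counts."""
    base = [symbol for symbol, c in enumerate(counts) for _ in range(c)]
    rng.shuffle(base)  # uniform permutation -> uniform over distinct arrangements
    return tuple(base)


def random_type_codebook(counts, size, rng):
    """Codebook of `size` codewords, each drawn independently and uniformly from the type class."""
    return [sample_from_type_class(counts, rng) for _ in range(size)]


if __name__ == "__main__":
    rng = random.Random(2)
    book = random_type_codebook((2, 1, 1), size=5, rng=rng)  # n = 4, ternary alphabet
    for codeword in book:
        print(codeword, Counter(codeword))
```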

Acceptance region: For a given codebook and , let denote the set of such that . For each and , set

where recall that , and

For , define The acceptance region for is given by or equivalently as

Decoding: Given codebook , , and , if , then , where . Otherwise, . Denote the decoder induced by the above operations by .

Testing: If , is declared. Otherwise, or is declared depending on whether or , respectively. Denote the decision function induced by and by .

Induced probability distribution: The PMFs induced by a code with respect to codebook under and are

and

respectively. For brevity, we will denote by , by , and the above probability distributions by and . Let and stand for the support and probability measure of , respectively, and set ,

Analysis of the type I and type II error probabilities: We analyze the expected type I and type II error probabilities, where the expectation is with respect to the randomness of , and then apply the expurgation technique to extract a sequence of deterministic codebooks and a code that achieves the lower bound in Theorem 2.

Type I error probability: Denoting by the random acceptance region for , note that a type I error can occur only under the following events:

- (i)

- , where

- (ii)

- and ,

- (iii)

- , and ,

- (iv)

- , and .

By definition of , we have, similar to (24), the following:

Next, the probability of the event can be upper bounded as

For , note that, similarly to Equation (4.17) in [14], we have

From this and (15), we obtain, similarly to Equation (4.22) in [14], that

Substituting (43) in (42) yields

Next, we bound the probability of the event as follows:

where follows similarly to (29) using (41) and (43); holds since implies that ; and follows similarly to (33). Further,

where the penultimate equality holds since given , occurs only for such that , and the final inequality follows from (41), the definition of and Lemma 1.6 in [38]. From (41), (44), (47) and (48), the union bound shows that the expected type I error probability satisfies for sufficiently large n.

Type II error probability: Next, we analyze the expected type II error probability over . Let

A type II error can occur only under the following events:

- (a)

- ,

- (b)

- ,

- (c)

Considering the event , we have

where

For the inequality in above, we used and

which in turn follows from the fact that given and , is uniformly distributed in the set and that for sufficiently large n.

Next, we analyze the probability of the event . Let

Then,

where

Finally, considering the event , we have

The first term in the RHS decays double exponentially as , while the second term can be handled as follows:

where

Since the exponent of the type II error probability is lower bounded by the minimum of the exponents of the type II error-causing events, it follows from (49), (50) and (51) that for a fixed

where . Performing expurgation as in the proof of Theorem 1 to obtain a deterministic codebook satisfying (52a, 52b), maximizing over and noting that is arbitrary yields .

Finally, we show that , which will complete the proof. Fix and let and . Consider an uncoded transmission scheme in which the channel input . Let the decision rule be specified by the acceptance region for some small . Then, it follows from Lemma 2.6 in [42] that for sufficiently large n,

The proof is complete by noting that is arbitrary.
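To give some intuition for the uncoded scheme, here is a toy Monte Carlo sketch in Python. Everything specific in it is our assumption rather than the setting of the proof: binary alphabets, a binary symmetric channel, a decision-maker sample that is a BSC-corrupted copy of the observer's sample under the null and independent of it under the alternative, and an acceptance region given by an L1 ball of radius δ around the null joint PMF of (channel output, decision-maker sample). It only illustrates that uncoded transmission lets the decision maker compare empirical joint types directly; it does not reproduce the exponents derived above.

```python
import random


def bsc(bit, flip_prob, rng):
    """Pass one bit through a binary symmetric channel with crossover probability flip_prob."""
    return bit ^ int(rng.random() < flip_prob)


def simulate(n, hypothesis, rng, source_noise=0.1, channel_noise=0.05):
    """Channel outputs and decision-maker samples under the toy model (hypothesis 0 or 1)."""
    outputs, ys = [], []
    for _ in range(n):
        x = rng.randrange(2)
        # Toy model: under H0, Y is X through a BSC; under H1, Y is independent of X.
        y = bsc(x, source_noise, rng) if hypothesis == 0 else rng.randrange(2)
        outputs.append(bsc(x, channel_noise, rng))  # uncoded: the channel input is x itself
        ys.append(y)
    return outputs, ys


def joint_type(a, b):
    """Empirical joint PMF of two binary sequences, as a 2x2 nested list."""
    t = [[0.0, 0.0], [0.0, 0.0]]
    for u, v in zip(a, b):
        t[u][v] += 1.0 / len(a)
    return t


def h0_joint(source_noise=0.1, channel_noise=0.05):
    """Joint PMF of (channel output, Y) under H0 in the toy model."""
    e = source_noise * (1 - channel_noise) + channel_noise * (1 - source_noise)
    return [[(1 - e) / 2, e / 2], [e / 2, (1 - e) / 2]]


def accept_h0(outputs, ys, delta=0.1):
    """Typicality-style test: accept H0 iff the joint type is within delta (L1) of h0_joint()."""
    t, target = joint_type(outputs, ys), h0_joint()
    dist = sum(abs(t[u][v] - target[u][v]) for u in range(2) for v in range(2))
    return dist <= delta


if __name__ == "__main__":
    rng = random.Random(5)
    for hyp in (0, 1):
        accepted = sum(accept_h0(*simulate(2000, hyp, rng)) for _ in range(20))
        print(f"H{hyp}: accepted H0 in {accepted}/20 trials")
```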

5. Conclusions

This work explored the trade-off between the type I and type II error-exponents for distributed hypothesis testing over a noisy channel. We proposed a separate hypothesis testing and channel coding scheme as well as a joint scheme utilizing hybrid coding, and analyzed their performance resulting in two inner bounds on the error-exponents trade-off. The separate scheme recovers some of the existing bounds in the literature as special cases. We also showed via an example of testing against dependence that the joint scheme strictly outperforms the separate scheme at some points of the error-exponents trade-off. An interesting avenue for future research is the exploration of novel outer bounds that could shed light on the scenarios where the separate or joint schemes are tight.

Author Contributions

Conceptualization, S.S. and D.G.; writing—original draft preparation, S.S. and D.G.; supervision, D.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the European Research Council Starting Grant project BEACON (grant agreement number 677854).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| HT | Hypothesis testing |
| DHT | Distributed hypothesis testing |
| TAD | Testing against dependence |
| TAI | Testing against independence |
| SHTCC | Separate hypothesis testing and channel coding |
| JHTCC | Joint hypothesis testing and channel coding |

Appendix A. Proof that Theorem 1 Recovers Theorem 2 in [10]

We prove that , where is the lower bound on the type II error-exponent for a fixed type I error probability constraint and unit bandwidth ratio established in Theorem 2 in [10]. Note that , , and . The result then follows from Theorem 1 by noting that , and are continuous in , and that and are all non-negative.

Appendix B. Proof that Theorem 2 Recovers Theorem 5 in [10]

We show that , where is as defined in Theorem 5 in [10]. Note that , , ,

and . The result then follows from Theorem 2 via the continuity of , , , and in .

Appendix C. An Auxiliary Result

Here, we prove a result that was used in the proof of Theorem 1, namely Proposition A1 below. For this purpose, we require a few properties of the log-moment generating function, which we briefly review next.

Lemma A1.

(Theorems 15.3 and 15.6 in [43])

- (i)

- and , where denotes the derivative of with respect to λ.

- (ii)

- is a strictly convex function in λ.

- (iii)

- is strictly convex and strictly positive in θ except .
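Assuming, as is standard in [43], that Lemma A1 concerns the log-moment generating function ψ(λ) = log E[exp(λL)] of a random variable L and its Legendre transform ψ*(θ) = sup_λ [λθ − ψ(λ)], the Python sketch below checks the three properties numerically for an illustrative discrete L (the values and probabilities are our own choice): ψ(0) = 0 and ψ'(0) = E[L], convexity of ψ via second differences, and ψ*(θ) being zero at θ = E[L] and positive elsewhere. The supremum is approximated on a finite grid of λ values.

```python
import math


def log_mgf(lam, values, probs):
    """psi(lambda) = log E[exp(lambda * L)] for a discrete random variable L."""
    return math.log(sum(p * math.exp(lam * v) for v, p in zip(values, probs)))


def legendre(theta, values, probs, lam_grid):
    """Grid approximation of psi*(theta) = sup_lambda [lambda * theta - psi(lambda)]."""
    return max(lam * theta - log_mgf(lam, values, probs) for lam in lam_grid)


# Illustrative discrete random variable (an assumption for this sketch).
VALUES, PROBS = [-1.0, 0.5, 2.0], [0.3, 0.5, 0.2]
MEAN = sum(v * p for v, p in zip(VALUES, PROBS))
GRID = [i / 100 for i in range(-1000, 1001)]  # lambda in [-10, 10]; includes 0


if __name__ == "__main__":
    h = 1e-5
    slope = (log_mgf(h, VALUES, PROBS) - log_mgf(-h, VALUES, PROBS)) / (2 * h)
    print("psi(0) =", log_mgf(0.0, VALUES, PROBS), " psi'(0) ~", slope, " mean =", MEAN)
    for theta in (MEAN - 0.5, MEAN, MEAN + 0.5):
        print(f"psi*({theta:.2f}) ~ {legendre(theta, VALUES, PROBS, GRID):.4f}")
```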

Proposition A1 characterizes the error-exponent region of a hypothesis testing problem, which we introduce next. Let be an arbitrary joint PMF, and consider a sequence of pairs of n-length sequences such that

Consider the following HT:

With the achievability of an error-exponent pair defined similarly to Definition 1, consider the error-exponent region of interest

where and . The notation is justified because, as will become evident, the error-exponent region for the above hypothesis test depends on only through its limiting joint type . Given this, the following proposition provides a single-letter characterization of .

Proposition A1.

where for ,

Proof.

Let be sequences that satisfy (A1). For simplicity, we will denote and by and , respectively.

Achievability: We will show that for ,

Consider the Neyman–Pearson test given by , where . Observe that the type I error probability can be upper bounded for and sufficiently large n as follows:

where follows from the Chernoff bound, and holds because for and sufficiently large n, the supremum in (A3) always occurs at . To see this, note that the term is a concave function of by Lemma A1 (i). Additionally, denoting its derivative with respect to by , we have

where (A4) follows from Lemma A1 (iii), and (A5) is due to the absolute continuity assumption, , on the channel, and (A1). Thus, by the concavity of , its supremum has to occur at . Simplifying the term within the exponent in (A3), we obtain

where (A7) follows from (A1) and the absolute continuity assumption on . Substituting (A7) in (A3) and using (1), we obtain, for arbitrarily small (but fixed) and sufficiently large n, that

Similarly, it can be shown that for ,

Moreover, for , we have

It follows that

Hence,

From this, (A8) and (A9), we obtain for that

Then, the proof of achievability is completed by noting that is arbitrary and is a continuous function of for a fixed .
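The achievability argument above bounds the type I error probability of the Neyman–Pearson test via the Chernoff bound P(S_n ≥ nθ) ≤ exp(−n sup_{λ≥0} [λθ − ψ(λ)]). For intuition only, the Python sketch below specializes to a sum S_n of i.i.d. Bernoulli(p) summands (our assumption, rather than the log-likelihood-ratio statistic of the test above), for which the supremum equals the binary KL divergence D(θ||p) when θ ≥ p and the tail can be computed exactly. It checks that the exact tail stays below the bound and that −(1/n) log P(S_n ≥ nθ) approaches D(θ||p). The threshold is passed as an integer k = nθ to avoid rounding issues.

```python
import math


def binary_kl_nats(a, b):
    """D(Bern(a) || Bern(b)) in nats, for 0 <= a <= 1 and 0 < b < 1."""
    total = 0.0
    if a > 0:
        total += a * math.log(a / b)
    if a < 1:
        total += (1 - a) * math.log((1 - a) / (1 - b))
    return total


def exact_upper_tail(n, p, k):
    """P(S_n >= k) for S_n ~ Binomial(n, p), summed exactly term by term."""
    return sum(math.comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))


def chernoff_bound(n, p, k):
    """Chernoff bound exp(-n * D(k/n || p)) on P(S_n >= k); valid when k / n >= p."""
    return math.exp(-n * binary_kl_nats(k / n, p))


if __name__ == "__main__":
    p = 0.2
    for n in (10, 50, 200, 500):
        k = 2 * n // 5  # threshold n * theta with theta = 0.4 > p
        tail, bound = exact_upper_tail(n, p, k), chernoff_bound(n, p, k)
        print(f"n={n:3d} exact={tail:.3e} chernoff={bound:.3e} "
              f"rate={-math.log(tail) / n:.4f} D={binary_kl_nats(k / n, p):.4f}")
```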

Converse: Let . For any and decision function , we have from Theorem 14.9 in [43] that

Simplifying the RHS above, we obtain

where

- (a)

- follows since

- (b)

- is due to the independence of the events for different .

Define . Then, for arbitrary , and sufficiently large n, we can write

where follows from Theorem 15.9 in [43]; follows since from Theorems 15.6 and 15.11 in [43]; and is due to (A1). The equation above implies that

Hence, if for all sufficiently large n, then

Since (and ) is arbitrary, this implies via the continuity of in that

To complete the proof, we need to show that can be restricted to lie in . Toward this, it suffices to show the following:

- (i)

- ,

- (ii)

- , and

- (iii)

- and are convex functions of .

We have

where (A10) follows since each term inside the square brackets in the penultimate equation is zero, which in turn follows from Lemma A1 (iii). Additionally,

where (A11) follows from the non-negativity of for every stated in Lemma A1 (iii). Combining (A10) and (A11) proves . We also have

where the final equality follows similarly to the proof of . This proves . Finally, (iii) follows from Lemma A1 (iii) and the fact that a weighted sum of convex functions is convex provided the weights are non-negative, thus completing the proof. □

References

- Neyman, J.; Pearson, E. On the Problem of the Most Efficient Tests of Statistical Hypotheses. Philos. Trans. R. Soc. Lond. 1933, 231, 289–337.

- Chernoff, H. A measure of asymptotic efficiency for tests of a hypothesis based on a sum of observations. Ann. Math. Statist. 1952, 23, 493–507.

- Hoeffding, W. Asymptotically optimal tests for multinomial distributions. Ann. Math. Statist. 1965, 36, 369–400.

- Blahut, R.E. Hypothesis Testing and Information Theory. IEEE Trans. Inf. Theory 1974, 20, 405–417.

- Tuncel, E. On error-exponents in hypothesis testing. IEEE Trans. Inf. Theory 2005, 51, 2945–2950.

- Ahlswede, R.; Csiszár, I. Hypothesis Testing with Communication Constraints. IEEE Trans. Inf. Theory 1986, 32, 533–542.

- Han, T.S. Hypothesis Testing with Multiterminal Data Compression. IEEE Trans. Inf. Theory 1987, 33, 759–772.

- Shimokawa, H.; Han, T.S.; Amari, S. Error Bound of Hypothesis Testing with Data Compression. In Proceedings of the IEEE International Symposium on Information Theory, Trondheim, Norway, 27 June–1 July 1994; p. 114.

- Rahman, M.S.; Wagner, A.B. On the Optimality of Binning for Distributed Hypothesis Testing. IEEE Trans. Inf. Theory 2012, 58, 6282–6303.

- Sreekumar, S.; Gündüz, D. Distributed Hypothesis Testing Over Discrete Memoryless Channels. IEEE Trans. Inf. Theory 2020, 66, 2044–2066.

- Salehkalaibar, S.; Wigger, M. Distributed Hypothesis Testing Based on Unequal-Error Protection Codes. IEEE Trans. Inf. Theory 2020, 66, 4150–4182.

- Berger, T. Decentralized estimation and decision theory. In Proceedings of the IEEE 7th Spring Workshop on Information Theory, Mt. Kisco, NY, USA, September 1979.

- Shalaby, H.M.H.; Papamarcou, A. Multiterminal Detection with Zero-Rate Data Compression. IEEE Trans. Inf. Theory 1992, 38, 254–267.

- Han, T.S.; Kobayashi, K. Exponential-Type Error Probabilities for Multiterminal Hypothesis Testing. IEEE Trans. Inf. Theory 1989, 35, 2–14.

- Gündüz, D.; Kurka, D.B.; Jankowski, M.; Amiri, M.M.; Ozfatura, E.; Sreekumar, S. Communicate to Learn at the Edge. IEEE Commun. Mag. 2020, 58, 14–19.

- Gündüz, D.; Qin, Z.; Aguerri, I.E.; Dhillon, H.S.; Yang, Z.; Yener, A.; Wong, K.K.; Chae, C.B. Beyond Transmitting Bits: Context, Semantics, and Task-Oriented Communications. IEEE J. Sel. Areas Commun. 2023, 41, 5–41.

- Zhao, W.; Lai, L. Distributed Testing Against Independence with Multiple Terminals. In Proceedings of the 52nd Annual Allerton Conference on Communication, Control, and Computing, Monticello, IL, USA, 30 September–3 October 2014; pp. 1246–1251.

- Wigger, M.; Timo, R. Testing Against Independence with Multiple Decision Centers. In Proceedings of the International Conference on Signal Processing and Communications, Bangalore, India, 12–15 June 2016; pp. 1–5.

- Salehkalaibar, S.; Wigger, M.; Wang, L. Hypothesis Testing In Multi-Hop Networks. IEEE Trans. Inf. Theory 2019, 65, 4411–4433.

- Zaidi, A.; Aguerri, I.E. Optimal Rate-Exponent Region for a Class of Hypothesis Testing Against Conditional Independence Problems. In Proceedings of the 2019 IEEE Information Theory Workshop (ITW), Visby, Sweden, 25–28 August 2019; pp. 1–5.

- Zaidi, A. Rate-Exponent Region for a Class of Distributed Hypothesis Testing Against Conditional Independence Problems. IEEE Trans. Inf. Theory 2023, 69, 703–718.

- Mhanna, M.; Piantanida, P. On Secure Distributed Hypothesis Testing. In Proceedings of the IEEE International Symposium on Information Theory, Hong Kong, China, 14–19 June 2015; pp. 1605–1609.

- Sreekumar, S.; Gündüz, D. Testing Against Conditional Independence Under Security Constraints. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Vail, CO, USA, 17–22 June 2018; pp. 181–185.

- Sreekumar, S.; Cohen, A.; Gündüz, D. Privacy-Aware Distributed Hypothesis Testing. Entropy 2020, 22, 665.

- Gilani, A.; Amor, S.B.; Salehkalaibar, S.; Tan, V. Distributed Hypothesis Testing with Privacy Constraints. Entropy 2019, 21, 478.

- Katz, G.; Piantanida, P.; Debbah, M. Distributed Binary Detection with Lossy Data Compression. IEEE Trans. Inf. Theory 2017, 63, 5207–5227.

- Xiang, Y.; Kim, Y.H. Interactive hypothesis testing with communication constraints. In Proceedings of the 50th Annual Allerton Conference on Communication, Control, and Computing, Monticello, IL, USA, 1–5 October 2012; pp. 1065–1072.

- Xiang, Y.; Kim, Y.H. Interactive hypothesis testing against independence. In Proceedings of the IEEE International Symposium on Information Theory, Istanbul, Turkey, 7–12 July 2013; pp. 2840–2844.

- Tian, C.; Chen, J. Successive Refinement for Hypothesis Testing and Lossless One-Helper Problem. IEEE Trans. Inf. Theory 2008, 54, 4666–4681.

- Haim, E.; Kochman, Y. On Binary Distributed Hypothesis Testing. arXiv 2017, arXiv:1801.00310.

- Weinberger, N.; Kochman, Y. On the Reliability Function of Distributed Hypothesis Testing Under Optimal Detection. IEEE Trans. Inf. Theory 2019, 65, 4940–4965.

- Hadar, U.; Liu, J.; Polyanskiy, Y.; Shayevitz, O. Error Exponents in Distributed Hypothesis Testing of Correlations. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Paris, France, 7–12 July 2019; pp. 2674–2678.

- Watanabe, S. Neyman-Pearson Test for Zero-Rate Multiterminal Hypothesis Testing. IEEE Trans. Inf. Theory 2018, 64, 4923–4939.

- Xu, X.; Huang, S.L. On Distributed Learning With Constant Communication Bits. IEEE J. Sel. Areas Inf. Theory 2022, 3, 125–134.

- Salehkalaibar, S.; Tan, V.Y.F. Distributed Sequential Hypothesis Testing With Zero-Rate Compression. In Proceedings of the 2021 IEEE Information Theory Workshop (ITW), Kanazawa, Japan, 17–21 October 2021; pp. 1–5.

- Sreekumar, S.; Gündüz, D. Strong Converse for Testing Against Independence over a Noisy Channel. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Los Angeles, CA, USA, 21–26 June 2020; pp. 1283–1288.

- Salehkalaibar, S.; Wigger, M. Distributed Hypothesis Testing with Variable-Length Coding. IEEE J. Sel. Areas Inf. Theory 2020, 1, 681–694.

- Csiszár, I.; Körner, J. Information Theory: Coding Theorems for Discrete Memoryless Systems; Cambridge University Press: Cambridge, UK, 2011.

- Borade, S.; Nakiboğlu, B.; Zheng, L. Unequal Error Protection: An Information-Theoretic Perspective. IEEE Trans. Inf. Theory 2009, 55, 5511–5539.

- Minero, P.; Lim, S.H.; Kim, Y.H. A Unified Approach to Hybrid Coding. IEEE Trans. Inf. Theory 2015, 61, 1509–1523.

- Weinberger, N.; Kochman, Y.; Wigger, M. Exponent Trade-off for Hypothesis Testing Over Noisy Channels. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Paris, France, 7–12 July 2019; pp. 1852–1856.

- Csiszár, I. On the error-exponent of source-channel transmission with a distortion threshold. IEEE Trans. Inf. Theory 1982, 28, 823–828.

- Polyanskiy, Y.; Wu, Y. Information Theory: From Coding to Learning; Cambridge University Press: Cambridge, UK, 2012; Available online: https://people.lids.mit.edu/yp/homepage/data/itbook-export.pdf (accessed on 10 December 2022).

- Gallager, R. A simple derivation of the coding theorem and some applications. IEEE Trans. Inf. Theory 1965, 11, 3–18.

- Merhav, N.; Shamai, S. On joint source-channel coding for the Wyner-Ziv source and the Gelfand-Pinsker channel. IEEE Trans. Inf. Theory 2003, 49, 2844–2855.

- Cover, T.; El Gamal, A.; Salehi, M. Multiple Access Channels with Arbitrarily Correlated Sources. IEEE Trans. Inf. Theory 1980, 26, 648–657.

- Csiszár, I. Joint Source-Channel Error Exponent. Prob. Control Inf. Theory 1980, 9, 315–328.

- Eggleston, H.G. Convexity, 6th ed.; Cambridge University Press: Cambridge, UK, 1958.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).