Abstract

Because spatial correlation and spatial heterogeneity often coexist in data, we propose a spatial single-index varying-coefficient model. For this model, we develop a robust variable selection method based on spline estimation and the exponential squared loss that simultaneously estimates the parameters and identifies significant variables. We establish its theoretical properties under some regularity conditions. A block coordinate descent (BCD) algorithm combined with the concave–convex procedure (CCCP) is designed to solve the resulting optimization problem. Simulations show that our method performs well even when the observations are noisy or the estimated spatial weight matrix is inaccurate.

1. Introduction

Spatial econometrics is one of the essential branches of econometrics. It is concerned with modeling the spatial effects of variables in regional science models. The most widely used spatial econometric model is the spatial autoregressive (SAR) model, first proposed by [1], which has been extensively studied and applied in economics, finance, and environmental science.

The SAR model is mainly a parametric model. In practical applications, however, a purely parametric model cannot fully explain complex economic problems and phenomena. Therefore, to improve the flexibility and applicability of spatial econometric models, non-parametric spatial econometric models have received increasing attention. Ref. [2] studied the SAR model in a non-parametric framework, obtained the parameter estimators by the generalized method of moments, and proved the consistency and asymptotic properties of the estimators. Ref. [3] used the instrumental variable method to study semi-parametric varying-coefficient spatial panel data models with endogenous explanatory variables.

In practice, however, data may exhibit spatial correlation and spatial heterogeneity simultaneously, and such heterogeneity cannot be fully captured by either the parametric SAR model or the non-parametric SAR model.

The single-index varying-coefficient model generalizes both the single-index model and the varying-coefficient model, and it effectively avoids the "curse of dimensionality" in multidimensional non-parametric regression. This model has been studied extensively. Refs. [4,5] studied estimation for the single-index varying-coefficient model. Ref. [6] constructed empirical likelihood confidence regions for the single-index varying-coefficient model; Ref. [7] proposed a new estimated empirical likelihood ratio statistic, obtained maximum likelihood estimators of the model parameters, and proposed a new profile empirical likelihood ratio, which was shown to be asymptotically standard chi-square distributed.

In addition, selecting significant explanatory variables is one of the most important problems in statistical learning. Several robust regression methods have been proposed, such as quantile regression, composite quantile regression, and modal regression. Ref. [8] presented a new class of robust regression estimators for linear models based on the exponential squared loss. Specifically, for the linear regression model $y_i = x_i^\top\beta + \varepsilon_i$, the regression parameters $\beta$ are estimated by minimizing the objective function $\sum_{i=1}^{n}\phi_h(y_i - x_i^\top\beta)$, where $\phi_h(t) = 1 - \exp(-t^2/h)$ and $h > 0$ controls the robustness of the estimation. For large h, $\phi_h(t) \approx t^2/h$, so in this extreme case the estimator behaves like the least squares estimator. For small h, an observation with a large residual $|t|$ contributes at most about 1 to the objective, so its impact on the estimate is small. Hence, a small value of h limits the influence of outliers on the estimation and thereby improves the robustness of the estimator. Ref. [8] also pointed out that their method is more robust than other common robust estimators. Ref. [9] developed a robust estimation method based on the exponential squared loss for partially linear regression models and proposed a data-driven procedure to select the tuning parameter; the method produced positive results in simulations. Ref. [10] proposed robust variable selection for the high-dimensional single-index varying-coefficient model based on the exponential squared loss, established the theoretical properties of the estimators, and demonstrated the robustness of the method through numerical simulations. Ref. [11] applied the exponential squared loss to robust structure identification and variable selection for partially linear varying-coefficient models and obtained good results.
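To make the role of h concrete, the following Python sketch fits a linear model under the exponential squared loss of [8]. It is our own illustration, not code from [8]: it uses an iteratively reweighted least-squares (majorize-minimize) scheme, which relies on the fact that $1 - \exp(-s/h)$ is concave in $s = t^2$, and, for simplicity, it starts from ordinary least squares rather than from a robust initial estimate. The function names are hypothetical.

```python
import numpy as np


def exp_squared_loss(t, h):
    """Exponential squared loss phi_h(t) = 1 - exp(-t^2 / h)."""
    return 1.0 - np.exp(-np.square(t) / h)


def fit_exp_squared(X, y, h, beta0=None, max_iter=500, tol=1e-10):
    """Minimize sum_i phi_h(y_i - x_i^T beta) by iteratively reweighted least squares.

    phi_h is concave in s = t^2, so its tangent line in s majorizes it; minimizing
    that majorizer is a weighted least-squares problem with weights exp(-r_i^2 / h),
    hence each step never increases the objective (a majorize-minimize step).
    """
    if beta0 is None:
        beta0 = np.linalg.lstsq(X, y, rcond=None)[0]  # simple (non-robust) start
    beta = np.asarray(beta0, dtype=float).copy()
    for _ in range(max_iter):
        r = y - X @ beta
        w = np.exp(-np.square(r) / h)  # gross outliers receive weights near zero
        sw = np.sqrt(w)
        beta_new = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)[0]
        if np.max(np.abs(beta_new - beta)) < tol:
            return beta_new
        beta = beta_new
    return beta


# Usage: a straight line with 10% gross outliers in the response.
rng = np.random.default_rng(0)
n = 200
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])
y = 1.0 + 2.0 * x + rng.normal(scale=0.5, size=n)
y[:20] += 10.0
beta_ols = np.linalg.lstsq(X, y, rcond=None)[0]
beta_exp = fit_exp_squared(X, y, h=2.0)
print("OLS:", beta_ols, "exponential squared loss:", beta_exp)
```

The least-squares intercept is pulled upward by the outliers, whereas the exponential squared loss fit essentially ignores them because their weights $\exp(-r_i^2/h)$ are negligible.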

Inspired by the above work, we introduce the spatial positions of the observed objects into the single-index varying-coefficient model and propose a spatial single-index varying-coefficient model. We also present a variable selection method for this model based on spline estimation and the exponential squared loss function. The method selects significant predictors while estimating the regression coefficients. The main contributions of this work are as follows.

- We propose a novel model: the spatial single-index varying-coefficient model, which can deal with the spatial correlation and spatial heterogeneity of data at the same time.

- We construct a robust variable selection method for the spatial single-index varying-coefficient model, which uses the exponential squared loss function to resist the influence of strong noise and an inaccurate spatial weight matrix. Furthermore, we present a block coordinate descent (BCD) algorithm to solve the resulting optimization problem.

- Under reasonable assumptions, we establish the theoretical properties of this method. In addition, we verify the robustness and effectiveness of the variable selection method through numerical simulation studies, which show that the method is more robust than competing methods in variable selection and parameter estimation when outliers or noise are present in the observations.

The rest of this paper is organized as follows. In Section 2, we develop the methodology for variable selection with the exponential squared loss, and in Section 3, we give the theoretical properties of the proposed method. In Section 4, we present the related algorithms. Simulation results are reported in Section 5, and we conclude the paper in Section 6. The proofs of the main theorems are collected in Appendix A.

2. Methodology

2.1. Model Setup

Consider the following spatial single-index varying-coefficient model:

where is the response variable, is the q-dimensional vector of observed covariates, and is the m-dimensional spatial location variable. The spatial weight matrix W of dimension is . and are the parameters to be estimated. We assume that the errors are independent with mean zero and variance . is an unknown function. For identifiability of the model, we assume that and that the first nonzero element of is positive.

Model (1) shows that the spatial single-index varying-coefficient model is a semi-parametric varying-coefficient model in which the unknown function g varies with geographical location. When , the model reduces to the partially linear single-index varying-coefficient model. When and all other , the model reduces to the SAR model.

2.2. Basis Function Expansion

Since is unknown, we replace with its basis function approximations. The specific estimation steps are as follows:

Step 1. Obtain an initial value . We use the method proposed by [12] and roughly estimate by the linear regression model:

Denote the resulting estimate of by , normalized so that and its first nonzero element is positive.
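Step 1 can be sketched in Python as follows. The exact regression used in [12] is not reproduced above, so we assume a least-squares regression of the response on the index covariates (with an intercept), followed by the normalization just described (unit Euclidean norm, positive first nonzero element). The names `initial_index` and `normalize_index` are hypothetical.

```python
import numpy as np


def normalize_index(beta):
    """Rescale beta to unit Euclidean norm with a positive first nonzero element."""
    beta = np.asarray(beta, dtype=float)
    norm = np.linalg.norm(beta)
    if norm == 0:
        raise ValueError("index direction must be nonzero")
    beta = beta / norm
    first_nonzero = beta[np.flatnonzero(beta)[0]]
    return beta if first_nonzero > 0 else -beta


def initial_index(U, y):
    """Rough initial index direction: least-squares slopes of y on U, normalized."""
    design = np.column_stack([np.ones(len(y)), U])  # intercept + index covariates
    coef = np.linalg.lstsq(design, y, rcond=None)[0]
    return normalize_index(coef[1:])                 # drop the intercept


# Usage: a noiseless linear response along the direction (0.6, 0.8).
rng = np.random.default_rng(0)
U = rng.normal(size=(50, 2))
y = 1.0 + U @ np.array([1.2, 1.6])
beta_init = initial_index(U, y)
```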

Step 2. Let be l knots on the interval . Using the initial value , let ; then the radial basis function of degree p is

Denote the coefficient vector of the radial basis functions by

Then the sth unknown function is approximated by , where . Substituting the radial basis functions into model (1), we obtain the following:

Let , where and ; then the matrix form of model (2) is

As model (3) shows, under the radial basis function approximation, the spatial single-index varying-coefficient model (1) is transformed into the classical SAR model. Since the theory of the SAR model is relatively well developed, we estimate the unknown parameters with an exponential squared loss-based variable selection method for the SAR model.
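The basis expansion turns each varying coefficient into a linear combination of basis functions, so the covariate part of the model becomes linear in the stacked basis coefficients. The Python sketch below illustrates this with a truncated power basis of degree p, which we use only as a stand-in because the exact radial basis of Step 2 is not reproduced here; the Kronecker-product layout of the design matrix and the function names are our assumptions. Together with the spatial lag term, a design of this kind plays the role of the regressors in the SAR form (3).

```python
import numpy as np


def truncated_power_basis(z, knots, p):
    """Columns 1, z, ..., z^p, (z - k_1)_+^p, ..., (z - k_l)_+^p (stand-in basis)."""
    z = np.asarray(z, dtype=float)
    poly = np.vander(z, p + 1, increasing=True)                      # 1, z, ..., z^p
    trunc = np.maximum(z[:, None] - np.asarray(knots)[None, :], 0.0) ** p
    return np.hstack([poly, trunc])


def varying_coef_design(X, z, knots, p):
    """Row i is the Kronecker product x_i (x) B(z_i), so that
    sum_s x_is g_s(z_i) = row_i @ gamma whenever g_s(z) = B(z)^T gamma_s."""
    B = truncated_power_basis(z, knots, p)                            # n x K
    n, q = X.shape
    return (X[:, :, None] * B[:, None, :]).reshape(n, q * B.shape[1])


# Usage: two coefficient functions that the basis represents exactly.
rng = np.random.default_rng(0)
n = 300
z = rng.uniform(0.0, 1.0, size=n)            # plays the role of the index value
X = rng.normal(size=(n, 2))
y = X[:, 0] * (1.0 + 2.0 * z) + X[:, 1] * z ** 2   # g_1(z) = 1 + 2z, g_2(z) = z^2
D = varying_coef_design(X, z, knots=[0.25, 0.5, 0.75], p=2)
gamma = np.linalg.lstsq(D, y, rcond=None)[0]
```

Because both coefficient functions are polynomials of degree at most p, least squares on this design reproduces the response exactly; in general the basis only approximates the unknown functions.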

2.3. The Penalized Robust Regression Estimator

We now consider variable selection for model (3). To ensure model identifiability and to improve fitting accuracy and interpretability, we assume, as is common, that the true regression coefficient vector is sparse, with only a small proportion of nonzero entries [13,14]. It is then natural to employ a penalized method that simultaneously selects important variables and estimates the parameters. The resulting problem is formulated as follows:

where , , is a penalty term, is the exponential squared loss function: , in which is the tuning parameter controlling the degree of robustness.

We now turn to the choice of the penalty term. When no additional structural information is available, the lasso or adaptive lasso penalty can be used. Assume that is a root-n-consistent estimator of , for instance, the naive least squares estimator . Define the weight vector with , , and set in this paper, as suggested by [15]. The adaptive lasso penalty is then defined as

The objective function of penalized robust regression that consists of exponential squared loss and an adaptive lasso penalty is formulated as

The selection of tuning parameter and regularization parameter is discussed in Section 4.
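For concreteness, the following Python sketch evaluates a penalized objective of this type. Its exact form is our assumption, since the displayed objective is not reproduced here: the SAR residual is $y_i - \rho\,(Wy)_i - D_i^\top\theta$ for a generic design matrix D (for example, the basis-expanded covariates of model (3)), the loss is summed over observations, the penalty is scaled by n, and the adaptive weights are $|\tilde\theta_j|^{-\text{power}}$ with a hypothetical exponent `power`. All names are hypothetical.

```python
import numpy as np


def adaptive_lasso_weights(theta_init, power=1.0, eps=1e-12):
    """Adaptive-lasso weights |theta_init_j|^(-power); eps guards against division by zero."""
    return 1.0 / np.maximum(np.abs(np.asarray(theta_init, dtype=float)), eps) ** power


def penalized_objective(rho, theta, y, W, D, h, lam, weights):
    """Exponential squared loss of the SAR residuals y - rho*W y - D theta
    plus the penalty n * lam * sum_j weights_j * |theta_j|."""
    r = y - rho * (W @ y) - D @ theta
    loss = np.sum(1.0 - np.exp(-r ** 2 / h))
    return loss + len(y) * lam * np.sum(weights * np.abs(theta))


# Usage: noiseless SAR data, so the loss part vanishes at the true parameters.
rng = np.random.default_rng(0)
n = 6
W = (np.ones((n, n)) - np.eye(n)) / (n - 1)      # row-normalized, zero diagonal
D = rng.normal(size=(n, 3))
theta = np.array([1.0, 0.0, -2.0])
rho = 0.3
y = np.linalg.solve(np.eye(n) - rho * W, D @ theta)
value = penalized_objective(rho, theta, y, W, D, h=1.0, lam=0.1, weights=np.ones(3))
```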

2.4. Estimation of the Variance of the Noise

Set , then the variance of the noise is estimated as

where and can be estimated by the solution of (6). Since H is a nonsingular matrix, . Letting , we have , and defined by (7) can then be computed by

3. Theoretical Properties

To discuss the theoretical properties, let the parameters , with , and , be the true values of , and . It is generally assumed that , , and , , are all nonzero parts of . Moreover, we assume that , , and , are all nonzero parts of . Set ; the true parameters of satisfy . Hence, is differentiable in a neighborhood of , and the Jacobian matrix is

Assumption:

- (C1)

- The density function of is uniformly bounded on and bounded away from 0. Furthermore, is assumed to satisfy the Lipschitz condition of order 1 on T.

- (C2)

- The function , has bounded and continuous derivatives up to order on T, where is the jth component of .

- (C3)

- and .

- (C4)

- is a strictly stationary and strongly mixing sequence with coefficient , where .

- (C5)

- Let be the interior knots of , where , . Moreover, we set , , , . Then there exists a positive constant such that

- (C6)

- Let , and then as . Further, let , where .

- (C7)

- is a nonsingular matrix, invertible for any , is a compact parameter space, and the absolute row and column sums of , are uniformly bounded on .

- (C8)

- Let , where . Suppose that is negative definite.

- (C9)

- is positive definite.

With these preparations, we now give the sampling properties of the proposed estimators. The following theorem establishes the consistency of the penalized exponential squared loss estimators.

Theorem 1.

Assume that conditions hold and that the number of knots satisfies . Further, suppose that for some and that is negative definite. Then,

- (i)

- ;

- (ii)

- , for

where , r is defined in condition (C2), and represents the first order derivative of .

We further show that, under suitable conditions, the consistent estimator is sparse, as stated below.

Theorem 2.

Suppose that conditions hold and that the number of knots satisfies . We assume that and . Let

Then, with probability approaching 1, and satisfy

- (i)

- , ;

- (ii)

- , .

We then show that the estimators of nonzero coefficients for the parameter components have the same asymptotic distribution as the estimators based on the correct submodel. Set

and let and be true values of and , respectively. Corresponding covariates are denoted by and , . Furthermore, let , ,

The following result presents the asymptotic properties of .

Theorem 3.

If the assumptions of Theorem 2 hold, we have

where ‘$\xrightarrow{d}$’ denotes convergence in distribution.

Theorems 1 and 2 show that the proposed variable selection procedure is consistent, and Theorems 1 and 3 show that the penalized estimators have the oracle property. That is, the penalized estimators perform as well as if the subset of true zero coefficients were known in advance.

4. Algorithm

In this section, we describe a feasible algorithm for solving (6). We consider a data-driven procedure for and a simple selection method for , and we design efficient optimization algorithms for the resulting non-convex and non-differentiable objective function.

4.1. Choice of the Tuning Parameter

The tuning parameter controls the level of robustness and the efficiency of the proposed robust regression estimators. Ref. [16] proposed a data-driven procedure to choose for ordinary regression. We follow its steps and adapt it to the spatial single-index varying-coefficient model. First, a set of candidate tuning parameters is determined to ensure that the proposed penalized robust estimators have an asymptotic breakdown point at . Then, the tuning parameter with maximum efficiency is selected from this set.

The complete procedure is as follows:

Step 1. Initialize and . Set , a robust estimator. The model can be recast as , where .

Step 2. Find the pseudo outlier set of the sample:

Let . Calculate and . Then, take the pseudo outlier set , set , and .
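Step 2 can be sketched in Python as follows. The constants are not shown above, so we assume the normalized median absolute deviation (constant 1.4826) as the residual scale and the cutoff 2.5, which we understand to be the choices in the ordinary-regression version of this procedure; the function name is hypothetical.

```python
import numpy as np


def pseudo_outlier_set(residuals, cutoff=2.5):
    """Split indices into pseudo outliers (|r_i| >= cutoff * S_n) and the rest,
    where S_n = 1.4826 * median|r_i - median(r)| is the normalized MAD."""
    r = np.asarray(residuals, dtype=float)
    s_n = 1.4826 * np.median(np.abs(r - np.median(r)))
    outliers = np.flatnonzero(np.abs(r) >= cutoff * s_n)
    inliers = np.setdiff1d(np.arange(r.size), outliers)
    return outliers, inliers, s_n


# Usage: residuals from an initial robust (for example LAD) fit.
out, inl, s_n = pseudo_outlier_set([0.1, -0.2, 0.05, 0.3, -0.1, 5.0])
m = out.size  # number of pseudo outliers
```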

Step 3. Select the tuning parameter : construct , in which

Let be the minimizer of in the set , where is defined as in [8] and denotes the determinant.

Step 4. Update and as the optimal solution of , where . Repeat Steps 2 to 4 until convergence.

Note that an initial robust estimator is required in the first step above. In practice, we use the LAD estimator as the initial estimator. Consequently, the selection of essentially does not depend on . Alternatively, the two parameters and could be selected jointly by cross-validation, as discussed in [8], but this approach is computationally expensive. Moreover, the candidate interval of is . In practice, we find the threshold of subject to . The selected value of usually lies in the interval .
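The efficiency criterion of Step 3 can be sketched as a grid search. We assume the linear-regression form of the criterion as we understand it from [8]: $\hat V(h) = \hat I_1^{-1}\hat\Sigma_2\hat I_1^{-1}$ with $\hat I_1 = \frac{2}{h}\{\frac1n\sum_i e^{-r_i^2/h}(2r_i^2/h - 1)\}\frac1n Z^\top Z$ and $\hat\Sigma_2$ the sample covariance of $e^{-r_i^2/h}(2r_i/h)z_i$, where Z is a generic design matrix. This sketch omits the restriction of the candidate set that guarantees the breakdown point, and the function names are hypothetical.

```python
import numpy as np


def sandwich_det(Z, r, h):
    """det of I1^{-1} Sigma2 I1^{-1} for the exponential squared loss at residuals r."""
    n, k = Z.shape
    e = np.exp(-r ** 2 / h)
    c = (2.0 / h) * np.mean(e * (2.0 * r ** 2 / h - 1.0))      # scalar factor of I1
    I1 = c * (Z.T @ Z) / n
    score = (e * 2.0 * r / h)[:, None] * Z                      # psi_h(r_i) z_i
    Sigma2 = np.atleast_2d(np.cov(score, rowvar=False)).reshape(k, k)
    I1_inv = np.linalg.inv(I1)
    return float(np.linalg.det(I1_inv @ Sigma2 @ I1_inv))


def select_h(Z, r, grid):
    """Grid value of h with the smallest estimated asymptotic variance determinant."""
    dets = [sandwich_det(Z, r, h) for h in grid]
    return grid[int(np.argmin(dets))], dets


# Usage: clean Gaussian residuals versus residuals with 10% gross outliers.
rng = np.random.default_rng(0)
n = 2000
Z = np.column_stack([np.ones(n), rng.normal(size=n)])
r_clean = rng.normal(size=n)
r_dirty = r_clean.copy()
r_dirty[: n // 10] = 20.0
grid = [0.5, 1.0, 2.0, 5.0, 100.0, 1e4]
h_dirty, dets_dirty = select_h(Z, r_dirty, grid)
```

With clean data the criterion favors large h (close to least squares), whereas gross outliers make very large h inefficient, so a moderate h is selected.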

4.2. Choice of the Regularization Parameter and

Regarding the choice of the regularization parameters and in (6), since the parameter can be combined with , we set . Many methods can be used to select , such as AIC, BIC, and cross-validation. To ensure consistent variable selection while reducing computation, we choose the regularization parameter by minimizing a BIC-type objective function as in [16]:

where . This yields . Here, can be easily estimated by the unpenalized exponential squared loss estimator , where has been selected as described in Section 4.1. Note that this simple choice satisfies the conditions for and for , where d is the number of nonzeros in the true value of . Thus, the final estimator achieves consistent variable selection.
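As an illustration of why such a choice yields consistent selection, suppose, as in the linear-model version of this criterion (an assumption here, since the closed form above is not reproduced), that it gives $\lambda_j = \log(n)/(n|\tilde\theta_j|)$, where $\tilde\theta_j$ (our notation) is the jth component of the unpenalized estimator with true value $\theta_{0j}$. The usual adaptive-lasso rate requirements, $\sqrt{n}\lambda_j \to 0$ for nonzero coefficients and $\sqrt{n}\lambda_j \to \infty$ for zero coefficients, can then be checked directly:

```latex
\sqrt{n}\,\lambda_j = \frac{\log n}{\sqrt{n}\,|\tilde\theta_j|}
\;\begin{cases}
  \xrightarrow{\;p\;} 0,      & \theta_{0j} \neq 0 \quad (\text{since } |\tilde\theta_j| \xrightarrow{p} |\theta_{0j}| > 0),\\[4pt]
  \xrightarrow{\;p\;} \infty, & \theta_{0j} = 0    \quad (\text{since } \sqrt{n}\,|\tilde\theta_j| = O_p(1)).
\end{cases}
```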

4.3. Block Coordinate Descent (BCD) Algorithm

We aim to design an efficient algorithm for solving the objective function (6). This is difficult because the optimization problem is non-convex and non-differentiable. We adopt the BCD algorithm proposed by [17] to overcome these challenges. The BCD algorithm framework is shown in Algorithm 1.

Algorithm 1. The block coordinate descent (BCD) algorithm.
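As a rough illustration of the block structure described in this section, the Python sketch below alternates between two blocks: a one-dimensional bounded search for ρ, using SciPy's `minimize_scalar` with `method="bounded"` (Brent's method, which combines golden section search with parabolic interpolation), and an update of the coefficient block. For brevity, the coefficient update here is an unpenalized majorize-minimize weighted least-squares step rather than the CCCP/FISTA step of Section 4.4; the design matrix D, the bounds on ρ, and all function names are our assumptions.

```python
import numpy as np
from scipy.optimize import minimize_scalar


def exp_loss(r, h):
    """Sum of exponential squared losses 1 - exp(-r_i^2 / h)."""
    return float(np.sum(1.0 - np.exp(-r ** 2 / h)))


def bcd_sar_exp(y, W, D, h, rho_bounds=(-0.99, 0.99), n_outer=50, tol=1e-10):
    """Block coordinate descent for sum_i phi_h(y_i - rho (W y)_i - D_i theta).

    Block 1 updates rho by a bounded 1-D search; block 2 updates theta by
    majorize-minimize weighted least squares (no penalty in this sketch).
    """
    Wy = W @ y
    rho = 0.0
    theta = np.linalg.lstsq(D, y, rcond=None)[0]       # crude start with rho = 0
    history = [exp_loss(y - rho * Wy - D @ theta, h)]
    for _ in range(n_outer):
        # Block 1: rho with theta fixed (bounded Brent search).
        res = minimize_scalar(lambda a: exp_loss(y - a * Wy - D @ theta, h),
                              bounds=rho_bounds, method="bounded")
        if res.fun <= history[-1]:
            rho = float(res.x)                          # keep the objective non-increasing
        # Block 2: theta with rho fixed; each weighted LS step cannot increase the loss.
        y_tilde = y - rho * Wy
        for _ in range(5):
            sw = np.sqrt(np.exp(-(y_tilde - D @ theta) ** 2 / h))
            theta = np.linalg.lstsq(D * sw[:, None], y_tilde * sw, rcond=None)[0]
        history.append(exp_loss(y_tilde - D @ theta, h))
        if history[-2] - history[-1] < tol:
            break
    return rho, theta, history


# Usage: SAR data with a block-diagonal weight matrix (20 groups of 5).
rng = np.random.default_rng(0)
R, m = 20, 5
W = np.kron(np.eye(R), (np.ones((m, m)) - np.eye(m)) / (m - 1))
n = R * m
D = np.column_stack([np.ones(n), rng.normal(size=n)])
eps = rng.normal(scale=0.3, size=n)
y = np.linalg.solve(np.eye(n) - 0.4 * W, D @ np.array([1.0, 2.0]) + eps)
rho_hat, theta_hat, hist = bcd_sar_exp(y, W, D, h=1.0)
```

Accepting a new ρ only when it does not increase the objective, together with the monotone coefficient step, makes the recorded objective values non-increasing across outer iterations.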

4.4. DC Decomposition and CCCP Algorithm

A key observation about problem (12) is that the exponential squared loss function is a DC function, while the lasso and adaptive lasso penalty functions are convex. As a result, problem (12) is a DC program and can be solved as follows.

We first show that the exponential squared loss function can be written as the difference of two convex functions:

where , , .
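To see that such a decomposition exists, one valid choice is shown below; it is our own, and the terms in (13) may be arranged differently. Here $\phi_h(t) = 1 - e^{-t^2/h}$ denotes the exponential squared loss with tuning parameter h:

```latex
\phi_h(t) = 1 - e^{-t^2/h}
          = \underbrace{\frac{t^2}{h}}_{u(t)}
          - \underbrace{\Big(\frac{t^2}{h} - 1 + e^{-t^2/h}\Big)}_{v(t)},
\qquad
u''(t) = \frac{2}{h} \ge 0,
\qquad
v''(t) = \frac{2}{h}\Big[1 - e^{-t^2/h}\Big(1 - \frac{2t^2}{h}\Big)\Big] \ge 0,
```

where the last inequality holds because $e^{-s}(1 - 2s) \le 1$ for every $s = t^2/h \ge 0$. A CCCP step then replaces $v$ by its linearization at the current residual $t_0$, using $v'(t_0) = \frac{2t_0}{h}\big(1 - e^{-t_0^2/h}\big)$, which leaves a convex quadratic in $t$.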

Set

in which , is defined in (13), is the ith row of the weight matrix W, and is a convex penalty in . Then, and are convex and concave functions, respectively. Subproblem (12) is recast as follows:

It can then be solved by the concave–convex procedure (CCCP) proposed by [18], as shown in Algorithm 2.

Algorithm 2. The concave–convex procedure (CCCP).

We focus on the lasso and adaptive lasso penalties. Since is linear in by definition (15), the objective function of (16) can be expressed as

where is a convex and continuously differentiable function and is the lasso penalty, , or the more general adaptive lasso penalty, , . Therefore, we can use the efficient algorithms ISTA and FISTA proposed by [19] to solve problems of the form (17) with the lasso penalty. The ISTA iteration is simply , where L is the Lipschitz constant of the gradient, which is generally unknown. FISTA is an accelerated version of ISTA that was proven by [19] to have a better convergence rate, both in theory and in practice. Ref. [17] extended it to the adaptive lasso penalty while retaining numerical efficiency.
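The following Python sketch combines CCCP with FISTA for a linear working model with response y (for instance, $(I - \rho W)y$ with ρ fixed) and design X. It rests on several assumptions of ours: the DC split $\phi_h(t) = t^2/h - v(t)$ with $v(t) = t^2/h - 1 + e^{-t^2/h}$ convex (which may differ from (13)), a loss averaged over observations, a constant step 1/L computed from X instead of a backtracking search, and an ordinary least-squares start. The per-coordinate weights `lam_w` cover both the lasso (equal weights) and the adaptive lasso; all names are hypothetical.

```python
import numpy as np


def soft_threshold(z, tau):
    """Proximal operator of tau * |.|, applied elementwise."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)


def fista_weighted_lasso(grad, L, lam_w, x0, n_iter=500):
    """FISTA for min_x f(x) + sum_j lam_w[j] |x_j| with f convex and grad f L-Lipschitz."""
    x_prev = x0.copy()
    z = x0.copy()
    t = 1.0
    for _ in range(n_iter):
        x = soft_threshold(z - grad(z) / L, lam_w / L)   # ISTA step taken from z
        t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        z = x + ((t - 1.0) / t_next) * (x - x_prev)       # Nesterov momentum
        x_prev, t = x, t_next
    return x_prev


def cccp_exp_lasso(X, y, h, lam_w, beta0, n_cccp=30, n_fista=500):
    """CCCP for (1/n) sum_i phi_h(y_i - x_i^T b) + sum_j lam_w[j] |b_j|.

    Each step linearizes the convex part v at the current residuals r0 and solves
    the convex surrogate (1/n) sum_i [r_i(b)^2 / h + c_i x_i^T b] + penalty by FISTA,
    where c_i = v'(r0_i) = (2 r0_i / h)(1 - exp(-r0_i^2 / h)).
    """
    n = len(y)
    L = 2.0 * np.linalg.eigvalsh(X.T @ X).max() / (n * h)   # Lipschitz constant of the gradient
    beta = np.asarray(beta0, dtype=float).copy()
    for _ in range(n_cccp):
        r0 = y - X @ beta
        c = (2.0 * r0 / h) * (1.0 - np.exp(-r0 ** 2 / h))
        grad = lambda b, c=c: X.T @ (c - 2.0 * (y - X @ b) / h) / n
        beta = fista_weighted_lasso(grad, L, lam_w, beta, n_fista)
    return beta


def objective(X, y, h, lam_w, b):
    r = y - X @ b
    return np.mean(1.0 - np.exp(-r ** 2 / h)) + np.sum(lam_w * np.abs(b))


# Usage: sparse coefficients with 10% outliers in the response.
rng = np.random.default_rng(1)
n, p = 200, 8
X = rng.normal(size=(n, p))
beta_true = np.array([3.0, 1.5, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0])
y = X @ beta_true + rng.normal(scale=0.5, size=n)
y[:20] += 10.0
beta0 = np.linalg.lstsq(X, y, rcond=None)[0]   # simple (non-robust) start
lam_w = np.full(p, 0.12)                       # equal weights: plain lasso
beta_hat = cccp_exp_lasso(X, y, h=2.0, lam_w=lam_w, beta0=beta0)
```

Soft thresholding sets the truly zero coefficients exactly to zero, while the outliers receive almost no weight in the linearized surrogate.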

Now consider solving subproblem (11) to update . Since problem (11) minimizes a function of a single variable, we employ the classical golden section search combined with parabolic interpolation (see [20] for details).

According to Beck and Teboulle [19], the objective values generated by FISTA for the CCCP subproblem (16) converge to the optimal value at the rate $O(1/k^2)$, where k is the iteration counter. The usual termination criterion of ISTA and FISTA is , where is a small positive tolerance. Algorithm 1 terminates under either criterion, or . Therefore, to obtain an optimal solution of , FISTA requires iterations, and the gradient of (17) is computed in each iteration. Suppose that the BCD algorithm converges within a specified number of iterations and that the CCCP algorithm terminates after at most m iterations within each BCD iteration. Since computations are needed to calculate the gradient , the total computational complexity is .

5. Simulation Studies

In this section, we conduct numerical studies to illustrate the performance of the proposed method, including the cases of normal data and noisy data.

5.1. Simulation Sampling

The data are generated from model (1). We set , where is drawn from a 2-dimensional normal distribution with mean vector and covariance matrix , where is the identity matrix and is the q-dimensional zero vector. We set the sample size , and the spatial coefficient is drawn from the uniform distribution on the interval , where . For comparison, we also consider , which means that there is no spatial dependence, in which case model (1) reduces to the ordinary single-index varying-coefficient model.

The variable follows , in which is generated from a uniform distribution on the interval . We also consider the case in which the response contains outliers. The error term follows a mixture normal distribution , where . is independently drawn from the normal distribution , and the spatial weight matrix is , where , ⊗ denotes the Kronecker product, and is the m-dimensional column vector of ones. We take different values of and R, where R = 20, 100.

Moreover, we construct the spatial location information using two-dimensional plane coordinates. A square represents the geographical area; the lower-left corner of the square is taken as the origin, and a rectangular coordinate system is established along the horizontal and vertical directions. Each side is divided into equal parts, and the corresponding division points are connected along the horizontal and vertical directions to form crossing points (including the division points on each side of the square). Each crossing point is a geographical location. Set the sample size ; then, the geographical location coordinates are expressed as:

where mod and floor denote the MATLAB built-in functions, represents the remainder of divided by h, and represents the integer part of the quotient of divided by h. We set

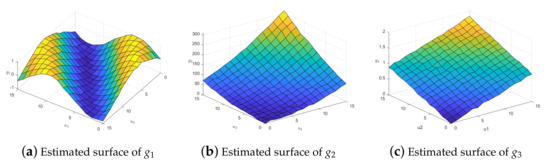

The true surface of the three coefficient functions is shown in Figure 1.

Figure 1.

True surfaces of the coefficient functions.

Another important issue for the spatial single-index varying-coefficient model is the estimation of the weight matrix W. Since W encodes the dependence between every pair of observations, an accurate estimate of W is usually difficult to obtain in practical applications. To assess the effect of an inaccurately estimated W, we randomly remove 30%, 50%, and 80% of the nonzero weights from each row of the true weight matrix W.

For each case of the simulation experiment, all results shown below are averaged over 100 replications to reduce the effect of random variation. We adopt the knot selection method proposed by [12], with and the number of radial basis functions .
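The grid construction of the spatial locations described above can be sketched in Python as follows. We assume 0-based indices, h division points per side (so the sample size is $h^2$), and coordinates scaled to the unit square; the scaling and the function name are our assumptions.

```python
import numpy as np


def grid_locations(h):
    """Coordinates of the h*h crossing points of an evenly divided unit square.

    Uses 0-based indices i = 0, ..., h*h - 1:
    u1 = mod(i, h) / (h - 1), u2 = floor(i / h) / (h - 1).
    """
    i = np.arange(h * h)
    return np.column_stack([np.mod(i, h), np.floor_divide(i, h)]) / (h - 1)


locations = grid_locations(3)   # 9 locations on a 3 x 3 grid
```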
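The weight matrix and its perturbed versions can be sketched in Python as follows. We assume the common block design $W = I_R \otimes B_m$ with $B_m = (1_m 1_m^\top - I_m)/(m-1)$, which is consistent with the Kronecker product and the vector of ones mentioned above, and we assume the rows are not re-normalized after weights are removed. Both assumptions and the function names are ours.

```python
import numpy as np


def case_weight_matrix(R, m):
    """W = I_R (x) B_m with B_m = (1_m 1_m^T - I_m) / (m - 1): R groups of m units."""
    B = (np.ones((m, m)) - np.eye(m)) / (m - 1)
    return np.kron(np.eye(R), B)


def drop_weights(W, frac, rng):
    """Randomly zero out a fraction `frac` of the nonzero weights in each row
    (no re-normalization of the rows: an assumption of this sketch)."""
    W_noisy = W.copy()
    for i in range(W.shape[0]):
        nz = np.flatnonzero(W_noisy[i])
        k = int(round(frac * nz.size))
        W_noisy[i, rng.choice(nz, size=k, replace=False)] = 0.0
    return W_noisy


W = case_weight_matrix(20, 5)
W50 = drop_weights(W, 0.5, np.random.default_rng(0))   # remove 50% of nonzero weights
```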

5.2. Simulation Results

The simulation results are evaluated as follows. We use the median of squared error (MedSE) proposed by [21], defined in this paper as , where , , is the estimator of . The square root of mean deviation (MAISE) is used as the evaluation index for the unknown functions. Specifically, , where is the total number of simulation replications, and t indexes the tth unknown function of the model, . Smaller values of each index indicate more accurate parameter estimation and a better fit of the unknown functions.

Table 1 reports the estimated coefficients of the spatial single-index varying-coefficient model with , no penalty term, and Gaussian noise in y, where “E”, “S”, and “L” denote the exponential squared loss, the square loss, and the LAD loss, respectively. All three loss functions yield nonzero estimates of and that are close to the true values (the means of the true values of and are 0.6 and 0.8, respectively). Comparatively, the square loss produces the most accurate estimates. As the sample size increases, all three loss functions yield accurate estimates of and .
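The two evaluation indices can be computed as in the Python sketch below. Since the exact formulas are not reproduced above, we assume that MedSE is the median over replications of the squared Euclidean estimation error, and that MAISE is the average over replications of the root mean squared error of a fitted function evaluated at the n spatial locations. Both definitions and the function names are our assumptions.

```python
import numpy as np


def medse(beta_hats, beta_true):
    """Median over replications of ||beta_hat - beta_true||^2; beta_hats has shape (N, p)."""
    err = np.asarray(beta_hats, dtype=float) - np.asarray(beta_true, dtype=float)[None, :]
    return float(np.median(np.sum(err ** 2, axis=1)))


def maise(g_hats, g_true):
    """Average over replications of the RMSE of a fitted function at the n locations.
    g_hats has shape (N, n); g_true has shape (n,)."""
    err = np.asarray(g_hats, dtype=float) - np.asarray(g_true, dtype=float)[None, :]
    return float(np.mean(np.sqrt(np.mean(err ** 2, axis=1))))


# Usage with toy numbers (three replications of a 2-dimensional coefficient).
print(medse([[1.0, 0.0], [0.0, 0.0], [3.0, 4.0]], [0.0, 0.0]))
print(maise([[1.0, 1.0], [3.0, 3.0]], [0.0, 0.0]))
```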

Table 1.

Estimation with no regularizer on normal data (q = 5).

Table 2 presents the estimated coefficients of the spatial single-index varying-coefficient model when the dimension is relatively close to the sample size. Results similar to those in Table 1 are observed, except for . This is expected, because the sample size is small relative to the dimension.

Table 2.

Estimation with no regularizer on normal data when the dimension is close to the sample size.

Table 3 reports the results when the observations of y contain outliers. Compared with the square loss and LAD loss models, the model with the exponential squared loss has an advantage in parameter estimation in terms of MedSE, especially when the sample size is large.

Table 3.

Estimation with no regularizer when the observations of y have outliers.

Table 4 lists the estimated coefficients obtained with an inaccurate weight matrix W. Compared with the results on normal data (Table 1), the MedSE values increase, and the estimates of and deteriorate overall for each loss function. In particular, when a certain proportion (30%, 50%, or 80%) of the nonzero weights of W is removed, MedSE increases as more nonzero weights are removed and decreases as the sample size n increases, for each of the three loss functions. The exponential squared loss has the lowest MedSE among the three loss functions.

Table 4.

Estimation with no regularizer with noisy weighting matrix W.

Correspondingly, Tables 5, 6, 7, and 8 compare the variable selection results with those obtained with other loss functions. “Correct” denotes the average number of zero coefficients that are correctly identified as zero, and “Incorrect” denotes the average number of nonzero coefficients incorrectly identified as zero. “”, “”, and “null” denote the adaptive lasso penalty, the lasso penalty, and no penalty term, respectively.
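The “Correct” and “Incorrect” counts defined above can be computed from the estimates of all replications as in the Python sketch below. The tolerance for treating an estimate as zero (exact zero by default) and the function name are our assumptions.

```python
import numpy as np


def selection_counts(beta_hats, beta_true, tol=0.0):
    """Average 'Correct' (true zeros estimated as zero) and 'Incorrect'
    (true nonzeros estimated as zero) counts over replications."""
    est_zero = np.abs(np.asarray(beta_hats, dtype=float)) <= tol
    true_zero = np.asarray(beta_true) == 0
    correct = np.sum(est_zero & true_zero[None, :], axis=1)
    incorrect = np.sum(est_zero & ~true_zero[None, :], axis=1)
    return float(correct.mean()), float(incorrect.mean())


# Usage: two replications for a 4-dimensional coefficient vector.
beta_true = [1.0, 0.0, 0.0, 2.0]
beta_hats = [[1.1, 0.0, 0.0, 1.9],
             [0.0, 0.0, 0.1, 2.0]]
correct_avg, incorrect_avg = selection_counts(beta_hats, beta_true)
```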

Table 5.

Variable selection with regularizer on normal data (), E: the exponential loss; S: the square loss; L: the LAD loss; : the lasso penalty; and : the adaptive lasso penalty.

Table 6.

Variable selection with regularizer on normal data when the dimension is close to the sample size, E: the exponential loss; S: the square loss; L: the LAD loss; : the lasso penalty; and : the adaptive lasso penalty.

Table 7.

Variable selection with regularizer when the observations y have outliers, E: the exponential loss; S: the square loss; L: the LAD loss; : the lasso penalty; and : the adaptive lasso penalty.

Table 8.

Variable selection with regularizer and noisy weighting matrix W, E: the exponential loss; S: the square loss; L: the LAD loss; : the lasso penalty; and : the adaptive lasso penalty.

Table 5 shows the variable selection results of the lasso and adaptive lasso regularizers on normal data with . In almost all tested cases, the model with the exponential squared loss and the lasso or adaptive lasso penalty (i.e., , ) identifies more true zero coefficients (“Correct”) and achieves much lower MedSE.

Similar results are observed when the dimension is close to the sample size, as presented in Table 6. In the tested cases of , the model with correctly identifies almost all the zero coefficients. This performance of the proposed exponential squared loss with the lasso or adaptive lasso penalty exceeds our expectations.

Tables 7 and 8 list the variable selection results with noise in the observations and with an inaccurate weight matrix, respectively. The model with the exponential squared loss and the lasso or adaptive lasso penalty (i.e., , ) identifies many more true zero coefficients (“Correct”) and has much lower MedSE. Compared with the results in the normal case (Table 5), the superiority of and is even more evident.

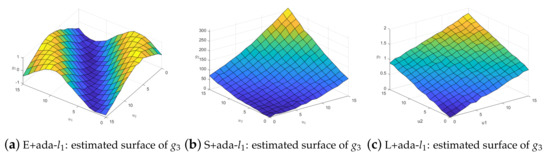

For the fitted surfaces of the coefficient functions, we take the replication at the median position among the 100 repeated experiments as representative. Here, we present the case on the normal data. The fitted surfaces of , , and are shown in Figure 2.

Figure 2.

Estimated surfaces of coefficient functions with exponential squared loss.

Figure 2 shows that the model fits the unknown coefficient functions well, indicating that, even with limited samples, the spatial single-index varying-coefficient model based on radial basis functions and exponential squared loss performs well. The fits of the coefficient functions are similarly good in the other cases.

We also report the fitting index MAISE for on normal data in Table 9. The MAISE of the unknown functions decreases as the total number of spatial objects increases; that is, the fit improves steadily. Likewise, the MAISE of y decreases, indicating that the fitted values of the model as a whole move closer to the true data.

Table 9.

Results of MAISE for the total number of different spatial objects.

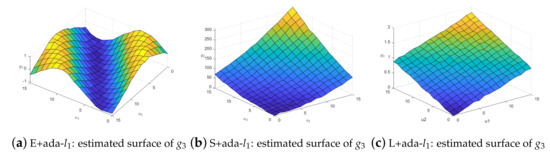

We next compare the fitted coefficient surfaces when the observations of y contain outliers. We again select the replication at the median of the 100 repetitions and take the fitted surface of as an example. When , , , the fits obtained by the loss functions with the adaptive lasso penalty are shown in Figure 3, which shows that our method performs better. The same conclusion can be drawn in the case of a noisy weighting matrix W; Figure 4 shows the results when 50% of the nonzero weights are removed.

Figure 3.

Comparison of when y has outliers.

Figure 4.

Comparison of in the case of noisy weighting matrix W.

6. Summary

In this paper, we propose a novel model, the spatial single-index varying-coefficient model, and introduce a robust variable selection method for it based on spline estimation and the exponential squared loss. The theoretical properties of the proposed estimators are established under reasonable assumptions. We design a BCD algorithm equipped with a CCCP procedure to efficiently solve the non-convex and non-differentiable optimization problem arising in variable selection. Numerical studies show that the proposed method is particularly robust when the observations and the weight matrix are noisy.

Author Contributions

Conceptualization, Y.S. and Y.W.; methodology, Y.W.; software, Y.W.; validation, Z.W. and Y.S.; formal analysis, Y.W.; investigation, Z.W.; resources, Y.S.; writing—original draft preparation, Y.W.; writing—review and editing, Y.W., Z.W. and Y.S.; visualization, Y.W.; supervision, Y.S.; project administration, Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Key Research and Development Program of China (2021YFA1000102).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The main abbreviations used in this work are as follows:

| Abbreviation | Meaning |
| --- | --- |
| SAR model | Spatial autoregressive model |
| BCD algorithm | Block coordinate descent algorithm |
| DC function | Difference of two convex functions |
| CCCP | Concave–convex procedure |
| ISTA | Iterative shrinkage-thresholding algorithm |
| FISTA | Fast iterative shrinkage-thresholding algorithm |
| MedSE | Median of squared error |
| MAISE | Square root of mean deviation |

Appendix A. Proofs

Appendix A.1. The Related Lemmas

Lemma A1

(Convexity Lemma). Let be a sequence of random convex functions defined on a convex, open subset of . Assume is a real-valued function on for which in probability, for each . Then, for each compact subset of

The function is necessarily convex on .

Proof of Lemma A1.

Many proofs of this well-known convexity lemma exist; one can be found in [22]. □

Lemma A2.

If , satisfy condition (C2), then there exists a constant depending only on M such that

Proof of Lemma A2.

The proof of Lemma A2 is similar to the proof of Corollary 6.21 in [23]. □

Appendix A.2. Proof of Main Theorems

Proof of Theorem 1.

Let

(i) Let

We will show that, for any given , there exists a large constant C such that

where the true value of . and are and . Let

and

where Let . Then, through the Taylor expansion and a simple calculation, we obtain

Notice that and . Hence, by choosing a sufficiently large C, dominates uniformly in . Moreover, invoking , and by the standard Taylor expansion argument, we obtain

Then, it is clear that is dominated by uniformly in . Therefore, (A.1) holds for a sufficiently large C. Hence, there exist local minimizers and such that

A direct calculation then gives , which completes the proof of (i).

(ii) Note that

Then, invoking , a simple calculation shows

In addition, it is easy to show that

Invoking (A.2) and (A.3), we finish the proof of (ii). □

Proof of Theorem 2

(i) From , it is easy to show that for large n. Then, by Theorem 1, it is sufficient to show that, for any which satisfies

and some given small and , when , with probability tending to one, we have for , and for . Let

a simple calculation shows that

where with th component 1. Under conditions (C1), (C2), (C3), and Theorem 1, it is easy to present that

The sign of the derivative is completely determined by that of . Then, for , and for hold. This finishes the proof of (i).

(ii) Applying arguments similar to those in the proof of (i), we obtain, with probability tending to one, . Then, invoking

the result of this theorem is obtained from . □

Proof of Theorem 3.

By Theorems 1 and 2, as , with probability tending to one, , attains a local maximum at and . Let

Then, and satisfy

where

Applying the Taylor expansion to , we have

Furthermore, condition (C6) implies that , and note that as . From Theorems 1 and 2, we have

Therefore, a simple calculation shows that

Let

Then, from conditions (C8) and (C9), Theorem 1, and , we obtain

Thus, we have

where is defined in (A.4). By the definition of , we have . Since , the result follows from Slutsky's lemma and the central limit theorem. This completes the proof of Theorem 3. □

Thus, the proof is completed.
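The last step of Theorem 3 combines a central limit theorem for the score with Slutsky's lemma, which yields a sandwich-type limiting variance. The paper's covariance matrices are not recoverable here. The mechanism can instead be seen in a toy location model, which is an assumption and not the paper's spatial model. With $Y_i \sim N(0,1)$ and the exponential squared loss, the estimator solves $\sum_i \psi(Y_i - \theta) = 0$ with $\psi(r) = r\exp(-r^2/\gamma)$. The same argument gives $\sqrt{n}\,\hat\theta \to N(0, E[\psi^2]/(E[\psi'])^2)$, and for normal errors this equals $(1 + 4/\gamma)^{-3/2}(1 + 2/\gamma)^{3}$. The helper names below are hypothetical.

```python
import numpy as np


def exp_loss_location(y, gamma, n_iter=60):
    """Row-wise root of sum_i psi(y_i - theta) = 0, psi(r) = r * exp(-r^2/gamma).

    The root is a weighted mean with weights exp(-r^2/gamma), so an iteratively
    reweighted mean (fixed-point iteration) is used, started at the median.
    """
    theta = np.median(y, axis=1)
    for _ in range(n_iter):
        w = np.exp(-((y - theta[:, None]) ** 2) / gamma)
        theta = np.sum(w * y, axis=1) / np.sum(w, axis=1)
    return theta


def sandwich_variance(gamma):
    """E[psi^2] / (E[psi'])^2 for N(0, 1) errors, in closed form."""
    e_psi_sq = (1.0 + 4.0 / gamma) ** -1.5     # E[r^2 exp(-2 r^2 / gamma)]
    e_psi_prime = (1.0 + 2.0 / gamma) ** -1.5  # E[exp(-r^2/gamma)(1 - 2 r^2/gamma)]
    return e_psi_sq / e_psi_prime ** 2


rng = np.random.default_rng(1)
gamma, n, reps = 2.0, 400, 2000
y = rng.standard_normal((reps, n))  # true location theta_0 = 0
z = np.sqrt(n) * exp_loss_location(y, gamma)
print(f"empirical Var = {z.var():.3f}, sandwich = {sandwich_variance(gamma):.3f}")
```

The empirical variance of $\sqrt{n}\,\hat\theta$ over replications should sit close to the closed-form sandwich value, which is what the CLT-plus-Slutsky argument predicts.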

References

1. Cliff, A.D. Spatial Autocorrelation; Technical Report; Pion: London, UK, 1973.

2. Su, L. Semiparametric GMM estimation of spatial autoregressive models. J. Econom. 2012, 167, 543–560.

3. Zhang, Y.; Shen, D. Estimation of semi-parametric varying-coefficient spatial panel data models with random-effects. J. Stat. Plan. Inference 2015, 159, 64–80.

4. Fan, J.; Yao, Q.; Cai, Z. Adaptive varying-coefficient linear models. J. R. Stat. Soc. Ser. B 2003, 65, 57–80.

5. Lu, Z.; Tjøstheim, D.; Yao, Q. Adaptive varying-coefficient linear models for stochastic processes: Asymptotic theory. Stat. Sin. 2007, 17, 177-S35.

6. Xue, L.; Wang, Q. Empirical likelihood for single-index varying-coefficient models. Bernoulli 2012, 18, 836–856.

7. Huang, Z.; Zhang, R. Profile empirical-likelihood inferences for the single-index-coefficient regression model. Stat. Comput. 2013, 23, 455–465.

8. Wang, X.; Jiang, Y.; Huang, M.; Zhang, H. Robust variable selection with exponential squared loss. J. Am. Stat. Assoc. 2013, 108, 632–643.

9. Jiang, Y. Robust estimation in partially linear regression models. J. Appl. Stat. 2015, 42, 2497–2508.

10. Song, Y.; Jian, L.; Lin, L. Robust exponential squared loss-based variable selection for high-dimensional single-index varying-coefficient model. J. Comput. Appl. Math. 2016, 308, 330–345.

11. Wang, K.; Lin, L. Robust structure identification and variable selection in partial linear varying coefficient models. J. Stat. Plan. Inference 2016, 174, 153–168.

12. Yu, Y.; Ruppert, D. Penalized spline estimation for partially linear single-index models. J. Am. Stat. Assoc. 2002, 97, 1042–1054.

13. Fan, J.; Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

14. Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B 1996, 58, 267–288.

15. Zou, H. The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 2006, 101, 1418–1429.

16. Wang, H.; Li, G.; Jiang, G. Robust regression shrinkage and consistent variable selection through the LAD-lasso. J. Bus. Econ. Stat. 2007, 25, 347–355.

17. Song, Y.; Liang, X.; Zhu, Y.; Lin, L. Robust variable selection with exponential squared loss for the spatial autoregressive model. Comput. Stat. Data Anal. 2021, 155, 107094.

18. Yuille, A.L.; Rangarajan, A. The concave–convex procedure (CCCP). In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 3–8 December 2001; Volume 14.

19. Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

20. Forsythe, G.E. Computer Methods for Mathematical Computations; Prentice-Hall: Hoboken, NJ, USA, 1977; Volume 259.

21. Liang, H.; Li, R. Variable selection for partially linear models with measurement errors. J. Am. Stat. Assoc. 2009, 104, 234–248.

22. Pollard, D. Asymptotics for least absolute deviation regression estimators. Econom. Theory 1991, 7, 186–199.

23. Schumaker, L. Spline Functions: Basic Theory; Cambridge Mathematical Library: Cambridge, UK, 1981.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).