Abstract

We consider the “partial information decomposition” (PID) problem, which aims to decompose the information that a set of source random variables provide about a target random variable into separate redundant, synergistic, union, and unique components. In the first part of this paper, we propose a general framework for constructing a multivariate PID. Our framework is defined in terms of a formal analogy with intersection and union from set theory, along with an ordering relation which specifies when one information source is more informative than another. Our definitions are algebraically and axiomatically motivated, and can be generalized to domains beyond Shannon information theory (such as algorithmic information theory and quantum information theory). In the second part of this paper, we use our general framework to define a PID in terms of the well-known Blackwell order, which has a fundamental operational interpretation. We demonstrate our approach on numerous examples and show that it overcomes many drawbacks associated with previous proposals.

1. Introduction

Understanding how information is distributed in multivariate systems is an important problem in many scientific fields. In the context of neuroscience, for example, one may wish to understand how information about an external stimulus is encoded in the activity of different brain regions. In computer science, one might wish to understand how the output of a logic gate reflects the information present in different inputs to that gate. Numerous other examples abound in biology, physics, machine learning, cryptography, and other fields [1,2,3,4,5,6,7,8,9,10].

Formally, suppose that we are provided with a random variable Y which we call the “target”, as well as a set of n random variables $X_1,\dots,X_n$ which we call the “sources”. The partial information decomposition (PID), first proposed by Williams and Beer in 2010 [11], aims to quantify how information about the target is distributed among the different sources. In particular, the PID seeks to decompose the mutual information provided jointly by all sources into a set of nonnegative terms, such as redundancy (information present in each individual source), synergy (information only provided by the sources jointly, not individually), union information (information provided by at least one individual source), and unique information (information provided by only one individual source).

As discussed in detail below, the PID is inspired by an analogy between information theory and set theory. In this analogy, the information that each source provides about the target is imagined as a set, while PID terms such as redundancy, union information, and synergy are imagined as the sizes of intersections, unions, and complements. While the analogy between information-theoretic and set-theoretic quantities is suggestive, it does not specify how to actually define the PID. Moreover, it has also been shown that existing measures from information theory (such as mutual information and conditional mutual information) cannot be used directly to construct the PID, since these measures conflate contributions from different terms like synergy and redundancy [11,12]. In response, many proposals for how to define PID terms have been advanced [5,13,14,15,16,17,18,19,20,21]. However, existing proposals suffer from various drawbacks, such as behaving counterintuitively on simple examples, being limited to only two sources, or lacking a clear operational interpretation. Today there is no generally agreed-upon way of defining the PID.

In this paper, we propose a new and principled approach to the PID which addresses these drawbacks. Our approach can handle any number of sources and can be justified in algebraic, axiomatic, and operational terms. We present our approach in two parts.

In part I (Section 4), we propose a general framework for defining the PID. Our framework does not prescribe specific definitions, but instead shows how an information-theoretic decomposition can be grounded in a formal analogy with set theory. Specifically, we consider the definitions of “set intersection” and “set union” in set theory: the intersection of sets $T_1,\dots,T_n$ is the largest set that is contained in all of the $T_i$, while the union of sets $T_1,\dots,T_n$ is the smallest set that contains all of the $T_i$. As we show, these set-theoretic definitions can be mapped into information-theoretic terms by treating “sets” as random variables, “set size” as mutual information between a random variable and the target Y, and “set inclusion” as some externally specified ordering relation ⊏, which specifies when one random variable is more informative than another. Using this mapping, we define information-theoretic redundancy and union information in the same way that the sizes of intersections and unions are defined in set theory (other PID terms, such as synergy and unique information, can be computed in a straightforward way from redundancy and union information). Moreover, while our approach is motivated by set-theoretic intuitions, as we show in Section 4.2, it can also be derived from an alternative axiomatic foundation. We finish part I by reviewing relevant prior work in information theory and the PID literature. We also discuss how our framework can be generalized beyond the standard setting of the PID and even beyond Shannon information theory, to domains like algorithmic information theory and quantum information theory.

One unusual aspect of our framework is that it provides independent definitions of union information and redundancy. Most prior work on the PID has focused exclusively on the definition of redundancy, because it assumed that union information can be determined from redundancy using the so-called “inclusion-exclusion principle”. In Section 4.3, we argue that the inclusion-exclusion principle should not be expected to hold in the context of the PID.

Part I provides a general framework. Concrete definitions of the PID can be derived from this general framework by choosing a specific “more informative” ordering relation ⊏. In fact, the study of ordering relations between information sources has a long history in statistics and information theory [22,23,24,25,26,27]. One particularly important relation is the so-called “Blackwell order” [13,28], which has a fundamental operational interpretation in terms of utility maximization in decision theory.

In part II of this paper (Section 5), we combine the general framework developed in part I with the Blackwell order. This gives rise to concrete definitions of redundancy and union information. We show that our measures behave intuitively and have simple operational interpretations in terms of decision theory. Interestingly, while our measure of redundancy is novel, our measure of union information has previously appeared in the literature under a different guise [13,17].

In Section 6, we compare our redundancy measure to previous proposals, and illustrate it with various bivariate and multivariate examples. We finish the paper with a discussion and proposals for future work in Section 7.

We introduce some necessary notation and preliminaries in the next section. In addition, we provide background regarding the PID in Section 3. All proofs, as well as some additional results, are found in the appendix.

2. Notation and Preliminaries

We use uppercase letters ($X$, $Y$, $Z$, ...) to indicate random variables over some underlying probability space. We use lowercase letters ($x$, $y$, $z$, ...) to indicate specific outcomes of random variables, and calligraphic letters ($\mathcal{X}$, $\mathcal{Y}$, $\mathcal{Z}$, ...) to indicate sets of outcomes. We often index random variables with a subscript, e.g., the random variable $X_i$ with outcomes $x_i$ (so $x_i$ does not refer to the $i$-th outcome of random variable X, but rather to some generic outcome of random variable $X_i$). We use notation like $A \perp C \mid B$ to indicate that A is conditionally independent of C given B. Except where otherwise noted, we assume that all random variables have a finite number of outcomes.

We use notation like $p_X$ to indicate the probability distribution associated with random variable X, $p_{XY}$ to indicate the joint probability distribution associated with random variables X and Y, and $p_{X|Y}$ to indicate the conditional probability distribution of X given Y. Given two random variables X and Y with outcome sets $\mathcal{X}$ and $\mathcal{Y}$, we use notation like $\kappa_{X|Y}$ to indicate some stochastic channel of outputs $x\in\mathcal{X}$ given inputs $y\in\mathcal{Y}$. In general, a channel $\kappa_{X|Y}$ specifies some arbitrary conditional distribution of X given Y, which can be different from $p_{X|Y}$, the actual conditional distribution of X given Y (as determined by the underlying probability space).

As described above, we consider the information that a set of “source” random variables $X_1,\dots,X_n$ provide about a “target” random variable Y. Without loss of generality, we assume that the marginal distributions $p_Y$ and $p_{X_i}$ for all i have full support (if they do not, one can restrict $\mathcal{Y}$ and/or $\mathcal{X}_i$ to outcomes that have strictly positive probability).

Finally, note that despite our use of the terms “source” and “target”, we do not assume any causal directionality between the sources and target (see also discussion in [29]). For example, in neuroscience, Y might be an external stimulus which causes the activity of brain regions $X_1,\dots,X_n$, while in computer science Y might represent the output of a logic gate caused by inputs $X_1,\dots,X_n$ (so the causal direction is reversed). In yet other contexts, there could be other causal relationships among $X_1,\dots,X_n$ and Y, or they might not be causally related at all.

3. Background on the Partial Information Decomposition (PID)

Given a set of sources $X_1,\dots,X_n$ and a target Y, the PID aims to decompose $I(X_1,\dots,X_n;Y)$, the total mutual information provided by all sources about the target, into a set of nonnegative terms such as [11,12]:

- Redundancy $I_\cap(X_1;\dots;X_n\to Y)$, the information present in each individual source. Redundancy can be considered as the intersection of the information provided by different sources and is sometimes called “intersection information” in the literature [16,18].

- Union information $I_\cup(X_1;\dots;X_n\to Y)$, the information provided by at least one individual source [12,17].

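- Synergy $S(X_1;\dots;X_n\to Y)$, the information found in the joint outcome of all sources, but not in any of their individual outcomes. Synergy is defined as [17]

  $$S(X_1;\dots;X_n\to Y) := I(X_1,\dots,X_n;Y) - I_\cup(X_1;\dots;X_n\to Y) \tag{1}$$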

- Unique information in source $X_i$, $U(X_i\to Y)$, the non-redundant information in each particular source. Unique information is defined as

  $$U(X_i\to Y) := I(X_i;Y) - I_\cap(X_1;\dots;X_n\to Y) \tag{2}$$

In addition to the above terms, one can also define excluded information,

$$E(X_i\to Y) := I_\cup(X_1;\dots;X_n\to Y) - I(X_i;Y) \tag{3}$$

as the information in the union of the sources which is not in a particular source $X_i$. To our knowledge, excluded information has not been previously considered in the PID literature, although it is the natural “dual” of unique information as defined in Equation (2).

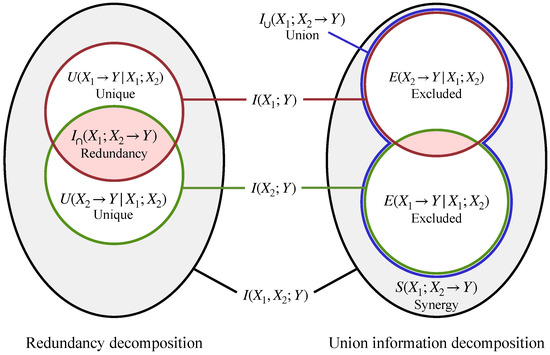

Given the definitions above, once a measure of redundancy is chosen, unique information is determined by Equation (2). Similarly, once a measure of union information is chosen, synergy and excluded information are determined by Equations (1) and (3). In Figure 1, we illustrate the relationships between these different PID terms for the simple case of two sources, $X_1$ and $X_2$. We show two different decompositions of the information provided by the sources jointly, $I(X_1,X_2;Y)$, and individually, $I(X_1;Y)$ and $I(X_2;Y)$. The diagram on the left shows the decomposition defined in terms of redundancy $I_\cap(X_1;X_2\to Y)$, while the diagram on the right shows the decomposition defined in terms of union information $I_\cup(X_1;X_2\to Y)$.

Figure 1.

Partial information decomposition of the information provided by two sources about a target. On the left, we show the decomposition induced by redundancy $I_\cap$, which leads to measures of unique information U. On the right, we show the decomposition induced by union information $I_\cup$, which leads to measures of synergy S and excluded information E.

When more than two sources are present, the PID can be used to define additional terms, beyond the ones shown in Figure 1. For example, for three sources, one can define redundancy terms like $I_\cap(X_1;X_2;X_3\to Y)$ (representing the information found in all individual sources) as well as redundancy terms like $I_\cap((X_1,X_2);(X_1,X_3);(X_2,X_3)\to Y)$ (representing the information found in all pairs of sources), and similarly for union information.

The idea that redundancy and union information lead to two different information decompositions is rarely discussed in the literature. In fact, the very concept of union information is seldom treated explicitly (although it often appears in an implicit form via measures of synergy, since synergy is related to union information through Equation (1)). As we discuss in Section 4.3, the reason for this omission is that most existing work assumes (whether implicitly or explicitly) that redundancy and union information are not independent measures, but are instead related via the so-called “inclusion-exclusion principle”. If the inclusion-exclusion principle is assumed to hold, then the distinction between the two decompositions disappears. In that section, we also argue that the inclusion-exclusion principle should not be expected to hold in the context of the PID.

We have not yet described how the redundancy and union information measures $I_\cap$ and $I_\cup$ are defined. In fact, this remains an open research question in the field (and one which this paper will address). When they first introduced the idea of the PID, Williams and Beer proposed a set of intuitive axioms that any measure of redundancy should satisfy [11,12], which we summarize in Appendix A. In later work, Griffith and Koch [17] proposed a similar set of axioms that union information should satisfy, which are also summarized in Appendix A. However, these axioms do not uniquely identify a particular measure of redundancy or union information.

Williams and Beer also proposed a particular redundancy measure which satisfies their axioms, which we refer to as $I_\cap^{\text{WB}}$ [11,12]. Unfortunately, $I_\cap^{\text{WB}}$ has been shown to behave counterintuitively in some simple cases [19,20]. For example, consider the so-called “COPY gate”, where there are two sources $X_1$ and $X_2$ and the target is a copy of their joint outcomes, $Y=(X_1,X_2)$. If $X_1$ and $X_2$ are statistically independent, $X_1\perp X_2$, then intuition suggests that the two sources provide independent information about Y and therefore that redundancy should be 0. In general, however, $I_\cap^{\text{WB}}$ does not vanish in this case. To avoid this issue, Ince [20] proposed that any valid redundancy measure should obey the following property:

$$X_1\perp X_2 \;\Rightarrow\; I_\cap(X_1;X_2\to (X_1,X_2)) = 0 \tag{4}$$

which is called the Independent identity property.
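To make the COPY-gate example concrete, the sketch below evaluates Williams and Beer's measure (commonly written $I_{\min}$ in the PID literature) on two independent uniform bits with $Y=(X_1,X_2)$. It assumes the standard definition $I_{\min}=\sum_y p(y)\min_i I(Y{=}y;X_i)$, with specific information $I(Y{=}y;X_i)=\sum_{x_i}p(x_i\mid y)\log_2\frac{p(y\mid x_i)}{p(y)}$. The helper names and the dictionary encoding of the joint distribution are illustrative choices, not part of the original proposal.

```python
import math
from collections import defaultdict

def marginal(p, idx):
    """Marginalize a joint pmf {outcome_tuple: prob} onto the coordinates in idx."""
    out = defaultdict(float)
    for outcome, prob in p.items():
        out[tuple(outcome[i] for i in idx)] += prob
    return out

def specific_info(p, y, src, tgt):
    """Specific information I(Y=y; X_src) in bits, as used by Williams and Beer."""
    p_y = marginal(p, tgt)[y]
    p_x = marginal(p, src)
    p_xy = marginal(p, src + tgt)
    total = 0.0
    for x, px in p_x.items():
        pxy = p_xy.get(x + y, 0.0)
        if pxy > 0:
            # p(x|y) * log2(p(y|x) / p(y))
            total += (pxy / p_y) * math.log2((pxy / px) / p_y)
    return total

def i_min(p, sources, tgt):
    """I_min = sum_y p(y) * min_i I(Y=y; X_i)."""
    return sum(py * min(specific_info(p, y, s, tgt) for s in sources)
               for y, py in marginal(p, tgt).items())

# COPY gate: independent uniform bits X1, X2 and Y = (X1, X2), encoded as 2*x1 + x2.
copy_gate = {(a, b, 2 * a + b): 0.25 for a in (0, 1) for b in (0, 1)}
print(i_min(copy_gate, sources=[(0,), (1,)], tgt=(2,)))  # 1.0
```

The result is 1 bit rather than 0, which is the violation of the Independent identity property described above.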

In recent years, many other redundancy measures have been proposed [13,15,16,18,19,20,21]. However, while some of these proposals satisfy the Independent identity property, they suffer from various other drawbacks, such as exhibiting other types of counterintuitive behavior, being limited to two sources, and/or lacking a clear operational motivation. We discuss some of these previously proposed measures in Section 4.4, Section 5.4 and Section 6.

Unlike redundancy, to our knowledge only two measures of union information have been advanced. The first one appeared in the original work on the PID [12], and was derived from the Williams-Beer redundancy measure using the inclusion-exclusion principle. The second one appeared more recently [13,17] and is discussed in Section 5.4 below.

4. Part I: Redundancy and Union Information from an Ordering Relation

4.1. Introduction

As mentioned above, PID is motivated by an informal analogy with set theory [12]. In particular, redundancy is interpreted analogously to the size of the intersection of the sources $X_1,\dots,X_n$, while union information is interpreted analogously to the size of their union.

We propose to define the PID by making this analogy formal, and in particular by going back to the algebraic definitions of intersection and union in set theory. In pursuing this direction, we build on a line of previous work in information theory and PID, which we discuss in Section 4.4.

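Recall that in set theory, the intersection of sets $T_1,\dots,T_n\subseteq U$ (where U is some universal set) is the largest set that is contained in all $T_i$ (Section 7.2, [30]). This means that the size of the intersection can be written as

$$\Big|\bigcap_{i=1}^n T_i\Big| = \max_{T\subseteq U}\,|T| \quad\text{such that}\quad T\subseteq T_i\;\;\forall i \tag{5}$$

Similarly, the union of sets $T_1,\dots,T_n$ is the smallest set that contains all $T_i$ (Section 7.2, [30]), so the size of the union can be written as

$$\Big|\bigcup_{i=1}^n T_i\Big| = \min_{T\subseteq U}\,|T| \quad\text{such that}\quad T_i\subseteq T\;\;\forall i \tag{6}$$

Equations (5) and (6) are useful because they express the size of the intersection and union via an optimization over simpler terms (the size of individual sets, $|T|$, and the subset inclusion relation, ⊆).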
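Equations (5) and (6) can be checked by brute force on a small universe. The sketch below (function names are illustrative) enumerates every $T\subseteq U$, keeps those satisfying the inclusion constraints, and optimizes $|T|$; it agrees with the directly computed intersection and union. Enumeration is exponential in $|U|$, so this is only a toy check of the optimization form.

```python
from itertools import chain, combinations

def powerset(universe):
    """All subsets of a finite universe, as frozensets."""
    items = list(universe)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, k) for k in range(len(items) + 1))]

def intersection_size(sets, universe):
    # Eq. (5): largest |T| over T ⊆ U with T ⊆ T_i for every i.
    return max(len(T) for T in powerset(universe) if all(T <= Ti for Ti in sets))

def union_size(sets, universe):
    # Eq. (6): smallest |T| over T ⊆ U with T_i ⊆ T for every i.
    return min(len(T) for T in powerset(universe) if all(Ti <= T for Ti in sets))

U = frozenset(range(6))
Ts = [frozenset({0, 1, 2}), frozenset({1, 2, 3}), frozenset({2, 4})]
print(intersection_size(Ts, U), len(frozenset.intersection(*Ts)))  # 1 1
print(union_size(Ts, U), len(frozenset.union(*Ts)))                # 5 5
```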

We translate these definitions to the information-theoretic setting of the PID. We take the analogue of a “set” to be some random variable A that provides information about the target Y, and the analogue of “set size” to be the mutual information $I(A;Y)$. In addition, we assume that there is some ordering relation ⊏ between random variables analogous to set inclusion ⊆. Given such a relation, the expression $A\sqsubset B$ means that random variable B is “more informative” than A, in the sense that the information that A provides about Y is contained within the information that B provides about Y.

At this point, we leave the ordering relation ⊏ unspecified. In general, we believe that the choice of ⊏ will not be determined from purely information-theoretic considerations, but may instead depend on the operational setting and scientific domain in which the PID is applied. At the same time, there has been a great deal of research on ordering relations in statistics and information theory. In part II of this paper, Section 5, we will combine our general framework with a particular ordering relation, the so-called “Blackwell order”, which has a fundamental interpretation in terms of decision theory.

We now provide formal definitions of redundancy and union information, relative to the choice of ordering relation ⊏. In analogy to Equation (5), we define redundancy as

$$I_\cap(X_1;\dots;X_n\to Y) := \sup_Q\, I(Q;Y) \quad\text{such that}\quad Q\sqsubset X_i\;\;\forall i\in\{1,\dots,n\} \tag{7}$$

where the maximization is over all random variables Q with a finite number of outcomes. Thus, redundancy is the maximum information about Y in any random variable that is less informative than all of the sources. In analogy with Equation (6), we define union information as

$$I_\cup(X_1;\dots;X_n\to Y) := \inf_Q\, I(Q;Y) \quad\text{such that}\quad X_i\sqsubset Q\;\;\forall i\in\{1,\dots,n\} \tag{8}$$

Thus, union information is the minimum information about Y in any random variable that is more informative than all of the sources. Given these definitions, other elements of the PID (such as unique information, synergy, and excluded information) can be defined using the expressions found in Section 3. Note that $I_\cap$ and $I_\cup$ depend on the choice of ordering relation ⊏, although for convenience we leave this dependence implicit in our notation.
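As an illustration of Equations (7) and (8) with a concrete order, the sketch below plugs in, purely for illustration, the deterministic-function order ⊲ discussed in Section 4.4 ($A\lhd B$ iff A is a function of B). It assumes two sources and a finite joint distribution, and searches only over Q that are deterministic functions of the joint outcome $(x_1,x_2,y)$ on the support, i.e., over all set partitions of the support. For this particular order the restriction loses nothing, since any Q feasible in Equation (7) is a function of the sources and Equation (8) is minimized by $Q=(X_1,X_2)$; for other orders the search space would generally need to be larger. The search grows with the Bell numbers, so it is only a toy, and all function names are hypothetical.

```python
import math
from collections import defaultdict

def labelings(n):
    """Yield restricted growth strings; each encodes one set partition of n items."""
    def rec(prefix, m):
        if len(prefix) == n:
            yield tuple(prefix)
            return
        for lab in range(m + 1):
            yield from rec(prefix + [lab], max(m, lab + 1))
    yield from rec([], 0)

def is_function_of(a, b):
    """True if a[k] is determined by b[k] across the support, i.e. A ⊲ B."""
    seen = {}
    for ak, bk in zip(a, b):
        if seen.setdefault(bk, ak) != ak:
            return False
    return True

def mutual_info(probs, a, b):
    """I(A;B) in bits, with A and B given as per-support-point labels."""
    pa, pb, pab = defaultdict(float), defaultdict(float), defaultdict(float)
    for pk, ak, bk in zip(probs, a, b):
        pa[ak] += pk
        pb[bk] += pk
        pab[(ak, bk)] += pk
    return sum(v * math.log2(v / (pa[x] * pb[y])) for (x, y), v in pab.items() if v > 0)

def redundancy_and_union(pmf):
    """Brute-force Eqs. (7) and (8) under the order ⊲ for a pmf over (x1, x2, y)."""
    support = [k for k, v in pmf.items() if v > 0]
    probs = [pmf[k] for k in support]
    x1, x2, y = ([pt[i] for pt in support] for i in range(3))
    red, uni = -math.inf, math.inf
    for q in labelings(len(support)):
        info = mutual_info(probs, q, y)
        if is_function_of(q, x1) and is_function_of(q, x2):  # Q ⊲ X1 and Q ⊲ X2
            red = max(red, info)
        if is_function_of(x1, q) and is_function_of(x2, q):  # X1 ⊲ Q and X2 ⊲ Q
            uni = min(uni, info)
    return red, uni

and_gate = {(a, b, a & b): 0.25 for a in (0, 1) for b in (0, 1)}
print(redundancy_and_union(and_gate))  # approximately (0.0, 0.811)
```

For the AND gate, only the constant Q is a function of both inputs, so redundancy is 0, while union information equals $I(X_1,X_2;Y)=H(Y)$.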

One of the attractive aspects of our definitions is that they do not simply quantify the amount of redundancy and union information, but also specify the “content” of that redundant and union information. In particular, the random variable Q that achieves the optimum in Equation (7) specifies the content of the redundant information via the joint distribution $p_{QY}$. Similarly, the random variable Q which achieves the optimum in Equation (8) specifies the content of the union information via the joint distribution $p_{QY}$. Note that these optimizing Q may not be unique, reflecting the fact that there may be different ways to represent the redundancy or union information. (Note also that the supremum or infimum may not be achieved in Equations (7) and (8), in which case one can consider Q that achieve the optimal values to any desired precision $\epsilon>0$.)

So far we have not made any assumptions about the ordering relation ⊏. However, we can derive some useful bounds by introducing three weak assumptions:

- (I) Monotonicity of mutual information: $A\sqsubset B \Rightarrow I(A;Y)\le I(B;Y)$ (less informative sources have less mutual information).

- (II) Reflexivity: $A\sqsubset A$ for all A (each source is at least as informative as itself).

- (III) For all sources $X_i$, $O\sqsubset X_i\sqsubset (X_1,\dots,X_n)$, where O indicates a constant random variable with a single outcome and $(X_1,\dots,X_n)$ indicates all sources considered jointly (each source is more informative than a trivial source and less informative than all sources jointly).

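Assumptions I and II imply that the redundancy and union information of a single source are equal to the mutual information in that source:

$$I_\cap(X_i\to Y) = I_\cup(X_i\to Y) = I(X_i;Y)$$

Assumptions I and III imply the following bounds on redundancy and union information:

$$0 \le I_\cap(X_1;\dots;X_n\to Y) \le \min_i I(X_i;Y) \tag{9}$$

$$\max_i I(X_i;Y) \le I_\cup(X_1;\dots;X_n\to Y) \le I(X_1,\dots,X_n;Y) \tag{10}$$

Equation (9) in turn implies that the unique information in each source $X_i$, as defined in Equation (2), is bounded between 0 and $I(X_i;Y)$. Similarly, Equation (10) implies that the synergy, as defined in Equation (1), obeys

$$0 \le S(X_1;\dots;X_n\to Y) \le I(X_1,\dots,X_n;Y) - \max_i I(X_i;Y) = \min_i I(X_1,\dots,X_n;Y\mid X_i)$$

where we have used the chain rule $I(X_1,\dots,X_n;Y) = I(X_i;Y) + I(X_1,\dots,X_n;Y\mid X_i)$. Equation (10) also implies that excluded information in each source $X_i$, as defined in Equation (3), is bounded between 0 and $I(X_1,\dots,X_n;Y\mid X_i)$.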
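The bounds above need only mutual information terms, so they hold for any order obeying Assumptions I to III. A minimal sketch, assuming a joint pmf stored as a dictionary over outcome tuples $(x_1,\dots,x_n,y)$ (an illustrative encoding, with hypothetical helper names), computes them for the AND gate with independent uniform inputs.

```python
import math
from collections import defaultdict

def entropy(p, idx):
    """Shannon entropy (bits) of the marginal of joint pmf p on coordinates idx."""
    m = defaultdict(float)
    for outcome, prob in p.items():
        m[tuple(outcome[i] for i in idx)] += prob
    return -sum(v * math.log2(v) for v in m.values() if v > 0)

def mi(p, a, b):
    """I(A;B) = H(A) + H(B) - H(A,B) for coordinate tuples a and b."""
    return entropy(p, a) + entropy(p, b) - entropy(p, a + b)

def pid_bounds(p, n):
    """Intervals implied by Assumptions I-III; sources are coords 0..n-1, target is coord n."""
    y = (n,)
    joint = mi(p, tuple(range(n)), y)            # I(X_1,...,X_n; Y)
    single = [mi(p, (i,), y) for i in range(n)]  # I(X_i; Y)
    return {
        "redundancy": (0.0, min(single)),                # Eq. (9)
        "union": (max(single), joint),                   # Eq. (10)
        "synergy": (0.0, joint - max(single)),           # Eqs. (1) and (10)
        "unique": [(0.0, s) for s in single],            # Eqs. (2) and (9)
        "excluded": [(0.0, joint - s) for s in single],  # Eqs. (3) and (10)
    }

and_gate = {(a, b, a & b): 0.25 for a in (0, 1) for b in (0, 1)}
for term, interval in pid_bounds(and_gate, n=2).items():
    print(term, interval)
```

For the AND gate, $I(X_i;Y)\approx 0.311$ bits and $I(X_1,X_2;Y)\approx 0.811$ bits, so synergy is confined to $[0, 0.5]$ bits up to floating-point rounding.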

Note that, in general, stronger orders give smaller values of redundancy and larger values of union information. Consider two orders ⊏ and $\sqsubset'$, where the first one is stronger than the second: $A\sqsubset B\Rightarrow A\sqsubset' B$ for all A and B. Then, any Q in the feasible set of Equation (7) under ⊏ will also be in the feasible set under $\sqsubset'$, and similarly for Equation (8). Therefore, $I_\cap$ defined relative to ⊏ will be no larger than $I_\cap$ defined relative to $\sqsubset'$, while $I_\cup$ defined relative to ⊏ will be no smaller than $I_\cup$ defined relative to $\sqsubset'$.

In the rest of this section, we discuss alternative axiomatic justifications for our general framework, the role of the inclusion-exclusion principle, relation to prior work, and further generalizations. Readers who are more interested in the use of our framework to define concrete measures of redundancy and union information may skip to Section 5.

4.2. Axiomatic Derivation

In Section 4.1, we defined the PID in terms of an algebraic analogy with intersection and union in set theory. This definition can be considered as the primary one in our framework. At the same time, the same definitions can also be derived in an alternative manner from a set of axioms, as commonly sought after in the PID literature. In particular, in Appendix B, we prove the following result regarding redundancy.

Theorem 1.

Any redundancy measure $I_\cap$ that satisfies the following five axioms is equal to the measure defined in Equation (7).

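- Symmetry: $I_\cap(X_1;\dots;X_n\to Y)$ is invariant to the permutation of $X_1,\dots,X_n$.

- Self-redundancy: $I_\cap(X_1\to Y) = I(X_1;Y)$.

- Monotonicity: $I_\cap(X_1;\dots;X_n;X_{n+1}\to Y) \le I_\cap(X_1;\dots;X_n\to Y)$.

- Order equality: $I_\cap(X_1;\dots;X_n;X_{n+1}\to Y) = I_\cap(X_1;\dots;X_n\to Y)$ if $X_i\sqsubset X_{n+1}$ for some $i\in\{1,\dots,n\}$.

- Existence: There is some Q such that $I_\cap(X_1;\dots;X_n\to Y) = I(Q;Y)$ and $Q\sqsubset X_i$ for all i.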

While the Symmetry, Self-redundancy, and Monotonicity axioms are standard in the PID literature (see Appendix A), the last two axioms require some explanation. Order equality is a generalization of the previously proposed Deterministic equality axiom, described in Appendix A, where the condition $X_i = f(X_{n+1})$ for some deterministic function f (a deterministic relationship) is generalized to the “more informative” relation $X_i\sqsubset X_{n+1}$. This axiom reflects the idea that if a new source $X_{n+1}$ is more informative than an existing source $X_i$, then redundancy should not decrease when $X_{n+1}$ is added.

Existence is the most novel of our proposed axioms. It says that for any set of sources $X_1,\dots,X_n$, there exists some random variable which captures the redundant information. It is similar to the statement in axiomatic set theory that the intersection of a collection of sets is itself a set (note that in Zermelo-Fraenkel set theory, this statement is derived from the Axiom of Separation).

We can derive a similar result for union information (proof in Appendix B).

Theorem 2.

Any union information measure $I_\cup$ that satisfies the following five axioms is equal to the measure defined in Equation (8).

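- Symmetry: $I_\cup(X_1;\dots;X_n\to Y)$ is invariant to the permutation of $X_1,\dots,X_n$.

- Self-union: $I_\cup(X_1\to Y) = I(X_1;Y)$.

- Monotonicity: $I_\cup(X_1;\dots;X_n;X_{n+1}\to Y) \ge I_\cup(X_1;\dots;X_n\to Y)$.

- Order equality: $I_\cup(X_1;\dots;X_n;X_{n+1}\to Y) = I_\cup(X_1;\dots;X_n\to Y)$ if $X_{n+1}\sqsubset X_i$ for some $i\in\{1,\dots,n\}$.

- Existence: There is some Q such that $I_\cup(X_1;\dots;X_n\to Y) = I(Q;Y)$ and $X_i\sqsubset Q$ for all i.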

These axioms are dual to the redundancy axioms outlined above. Compared to previously proposed axioms for union information, as described in Appendix A, the most unusual of our axioms is Existence. It says that given a set of sources $X_1,\dots,X_n$, there exists some random variable which captures the union information. It is similar in spirit to the “Axiom of Union” in axiomatic set theory [31].

Finally, note that for some choices of ⊏, there may not exist measures of redundancy and/or union information that satisfy the axioms in Theorems 1 and 2, in which case these theorems still hold but are trivial. However, even in such “pathological” cases, $I_\cap$ and $I_\cup$ can still be defined via Equations (7) and (8), as long as ⊏ has a “least informative” and a “most informative” element (e.g., as provided by Assumption III above), so that the feasible sets are not empty. In this sense, the definitions in Equations (7) and (8) are more general than the axiomatic derivations provided by Theorems 1 and 2.

4.3. Inclusion-Exclusion Principle

One unusual aspect of our approach is that, unlike most previous work, we propose separate measures of redundancy and union information.

Recall that in set theory, the size of the intersection and the union are not independent of each other, but are instead related by the inclusion-exclusion principle (IEP). For example, given any two sets S and T, the IEP states that the size of the union of S and T is given by the sum of their individual sizes minus the size of their intersection,

$$|S\cup T| = |S| + |T| - |S\cap T| \tag{11}$$

More generally, the IEP relates the sizes of intersections and unions for any number of sets, via the following inclusion-exclusion formulas:

$$\Big|\bigcup_{i=1}^n T_i\Big| = \sum_{\emptyset\ne J\subseteq\{1,\dots,n\}} (-1)^{|J|-1}\Big|\bigcap_{j\in J} T_j\Big| \tag{12}$$

$$\Big|\bigcap_{i=1}^n T_i\Big| = \sum_{\emptyset\ne J\subseteq\{1,\dots,n\}} (-1)^{|J|-1}\Big|\bigcup_{j\in J} T_j\Big| \tag{13}$$

Historically, the IEP has played an important role in analogies between set theory and information theory, which began to be explored in the 1950s and 1960s [32,33,34,35,36]. Recall that the entropy $H(X)$ quantifies the amount of information gained by learning the outcome of random variable X. It has been observed that, for a set of random variables $X_1,\dots,X_n$, the joint entropy $H(X_1,\dots,X_n)$ behaves somewhat like the size of the union of the information in the individual variables. For instance, like the size of the union, joint entropy is subadditive ($H(X_1,X_2)\le H(X_1)+H(X_2)$) and increases with additional random variables ($H(X_1,X_2)\ge H(X_1)$). Moreover, for two random variables $X_1$ and $X_2$, the mutual information $I(X_1;X_2) = H(X_1)+H(X_2)-H(X_1,X_2)$ acts like the size of the intersection of the information provided by $X_1$ and $X_2$, once intersection is defined analogously to the IEP expression in Equation (11) [35,36]. Given the general IEP formula in Equation (13), this can be used to define the size of the intersection between any number of random variables. For instance, the size of a three-way intersection is

$$I(X_1;X_2;X_3) = H(X_1)+H(X_2)+H(X_3)-H(X_1,X_2)-H(X_1,X_3)-H(X_2,X_3)+H(X_1,X_2,X_3)$$

a quantity called co-information or interaction information in the literature [32,33,35,36,37].

Unfortunately, interaction information, as well as other higher-order interaction terms defined via the IEP, can take negative values [32,35,37]. This conflicts with the intuition that information measures should always be non-negative, in the same way that set size is always non-negative.
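The negativity is easy to reproduce. The sketch below (helper names are illustrative) evaluates the alternating sum of joint entropies from the three-way formula above for two examples: three copies of one uniform bit, and the XOR construction $X_3=X_1\oplus X_2$ with $X_1$ and $X_2$ independent uniform bits.

```python
import math
from collections import defaultdict
from itertools import combinations

def entropy(p, idx):
    """Shannon entropy (bits) of the marginal of joint pmf p on coordinates idx."""
    m = defaultdict(float)
    for outcome, prob in p.items():
        m[tuple(outcome[i] for i in idx)] += prob
    return -sum(v * math.log2(v) for v in m.values() if v > 0)

def co_information(p, n):
    """IEP-style alternating sum: sum over non-empty J of (-1)^(|J|-1) * H(X_J)."""
    return sum((-1) ** (k - 1) * entropy(p, J)
               for k in range(1, n + 1)
               for J in combinations(range(n), k))

copies = {(b, b, b): 0.5 for b in (0, 1)}                    # X1 = X2 = X3
xor = {(a, b, a ^ b): 0.25 for a in (0, 1) for b in (0, 1)}  # X3 = X1 XOR X2
print(co_information(copies, 3))  # 1.0
print(co_information(xor, 3))     # -1.0
```

With n = 2 the same function reduces to ordinary mutual information, which is never negative; the sign problem only appears from three variables onward.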

One of the primary motivations for the PID, as originally proposed by Williams and Beer [11,12], was to solve the problem of negativity encountered by interaction information. To develop a non-negative information decomposition, Williams and Beer took two steps. First, they considered the information that a set of sources $X_1,\dots,X_n$ provide about some target random variable Y. Second, they developed a non-negative measure of redundancy which leads to a non-negative union information once an IEP formula like Equation (12) is applied (Theorem 4.7, [12]). For example, in the original proposal, union information and redundancy are related via

$$I_\cup(X_1;X_2\to Y) \overset{?}{=} I(X_1;Y) + I(X_2;Y) - I_\cap(X_1;X_2\to Y) \tag{14}$$

which is the analogue of Equation (11). This can be plugged into expressions like Equation (1), so as to express synergy in terms of redundancy as

$$S(X_1;X_2\to Y) \overset{?}{=} I(X_1,X_2;Y) - I(X_1;Y) - I(X_2;Y) + I_\cap(X_1;X_2\to Y) \tag{15}$$
The meaning of IEP-based identities such as Equations (14) and (15) can be illustrated using the Venn diagrams in Figure 1. In particular, they imply that the pink region in the right diagram is equal in size to the pink region in the left diagram, and that the grey region in the left diagram is equal in size to the grey region in the right diagram. More generally, IEP implies an equivalence between the information decomposition based on redundancy and the one based on union information.

As mentioned in Section 3, due to shortcomings in the original redundancy measure of Williams and Beer, numerous other proposals for the PID have been advanced. Most of these proposals introduce new measures of redundancy, while keeping the general structure of the PID as introduced by Williams and Beer. In particular, most of these proposals assume that the IEP holds, so that union information can be derived from a measure of redundancy. While the assumption of the IEP is sometimes stated explicitly, more frequently it is implicit in the definitions used. For example, many proposals assume that synergy is related to redundancy via an expression like Equation (15), although (as shown above) this implicitly assumes that the IEP holds. In general, the IEP has been a largely unchallenged and unexamined assumption in the PID field. It is easy to see the appeal of the IEP: it builds on deep-seated intuitions about intersection and union from set theory and Venn diagrams, it has a long history in the information-theoretic literature, and it simplifies the problem of defining the PID, since it only requires a measure of redundancy to be defined rather than both a measure of redundancy and a measure of union information. (Note that one can also start from union information and then derive redundancy via the IEP formula in Equation (13), as in Appendix B of Ref. [17], although this is much less common in the literature.)

However, there is a different way to define a non-negative PID, which is still grounded in a formal analogy with set theory but does not assume the IEP. Here, one defines measures of redundancy and union information based on the underlying algebra of intersection and union: the intersection of $X_1,\dots,X_n$ is the largest element that is less than each $X_i$, while the union is the smallest element that is greater than each $X_i$. Given these definitions, intersections and unions are not necessarily related to each other numerically, as in the IEP, but are instead related by an algebraic duality.

This latter approach is the one we pursue in our definitions (it has also appeared in some prior work, which we review in the next subsection). In general, the IEP will not hold for redundancy and union information as defined in Equations (7) and (8). (To emphasize this point, we put a question mark in Equations (14) and (15), and made the sizes of the pink and grey regions visibly different in Figure 1). However, given the algebraic and axiomatic justifications for and , we do not see the violation of the IEP as a fatal issue. In fact, there are many domains where generalizations of intersections and unions do not obey the IEP. For example, it is well-known that the IEP is violated in the domain of vector spaces, once the size of a vector space is measured in terms of its dimension [38]. The PID is simply another domain where the IEP should not be expected to hold.
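The vector-space case can be checked numerically. In the sketch below, a subspace of $\mathbb{R}^d$ is represented by a matrix whose columns span it (an illustrative encoding, with hypothetical helper names and NumPy assumed available), “intersection” is the intersection of subspaces, “union” is their span, and size is dimension. For three distinct lines through the origin in $\mathbb{R}^2$, the IEP formula in Equation (12) predicts a union of dimension 3, while the actual span has dimension 2. For two subspaces the IEP does hold, since $\dim(V+W)=\dim V+\dim W-\dim(V\cap W)$.

```python
import numpy as np
from itertools import combinations

def dim(M):
    """Dimension of the column space of M (0 for a matrix with no columns)."""
    return 0 if M.shape[1] == 0 else int(np.linalg.matrix_rank(M))

def intersect(A, B, tol=1e-10):
    """Spanning set for span(A) ∩ span(B): vectors A x such that A x = B y."""
    if A.shape[1] == 0 or B.shape[1] == 0:
        return np.zeros((A.shape[0], 0))
    _, s, vh = np.linalg.svd(np.hstack([A, -B]))  # full_matrices=True by default
    r = int((s > tol).sum())
    null = vh[r:].T                               # basis of {(x, y): A x - B y = 0}
    return A @ null[: A.shape[1]]

def intersect_all(mats):
    out = mats[0]
    for M in mats[1:]:
        out = intersect(out, M)
    return out

def iep_union_dim(subspaces):
    """Right-hand side of Eq. (12) with |.| = dimension and subspace intersection."""
    return sum((-1) ** (k - 1) * dim(intersect_all(list(J)))
               for k in range(1, len(subspaces) + 1)
               for J in combinations(subspaces, k))

lines = [np.array([[1.0], [0.0]]), np.array([[0.0], [1.0]]), np.array([[1.0], [1.0]])]
print(iep_union_dim(lines))   # 3
print(dim(np.hstack(lines)))  # 2
```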

We believe that many problems encountered in previous work on the PID—such as the failure of certain redundancy measures to generalize to more than two sources, or the appearance of uninterpretable negative synergy values—are artifacts of the IEP assumption. In fact, the following result shows that any measures of redundancy and union information which satisfy several reasonable assumptions must violate the IEP as soon as 3 or more sources are present (the proof, in Appendix I, is based on a construction from [39,40]).

Lemma 1.

Let $I_\cap$ be any nonnegative redundancy measure which obeys Symmetry, Self-redundancy, Monotonicity, and Independent identity. Let $I_\cup$ be any union information measure which obeys $I_\cup(X_1;\dots;X_n\to Y)\le I(X_1,\dots,X_n;Y)$. Then, $I_\cap$ and $I_\cup$ cannot be related by the inclusion-exclusion principle for 3 or more sources.

The idea that different information decompositions may arise from redundancy versus synergy (and therefore union information) has recently appeared in the PID literature [15,40,41,42,43]. In particular, Chicharro and Panzeri proposed a PID that involves two decompositions: an “information gain” decomposition based on redundancy and an “information loss” decomposition based on synergy [41]. These decompositions correspond to the two Venn diagrams shown in Figure 1.

4.4. Relation to Prior Work

Here we discuss prior work which is relevant to our algebraic approach to the PID.

First, note that our definitions of redundancy and union information in Equations (7) and (8) are closely related to the notions of “meet” and “join” in a field of algebra called order theory, which generalize intersections and unions to domains beyond set theory [44]. Given a set of objects S and an order ⊏, the meet of $a,b\in S$ is the unique largest $c\in S$ that is smaller than both a and b: $c\sqsubset a$, $c\sqsubset b$, and $d\sqsubset c$ for any d that obeys $d\sqsubset a$ and $d\sqsubset b$. Similarly, the join of $a,b\in S$ is the unique smallest $c\in S$ that is larger than both a and b: $a\sqsubset c$, $b\sqsubset c$, and $c\sqsubset d$ for any d that obeys $a\sqsubset d$ and $b\sqsubset d$. Note that meets and joins are only guaranteed to exist for every pair of elements when ⊏ is a special type of partial order called a lattice. This is a strict requirement, and many important ordering relations in information theory are not lattices (this includes the “Blackwell order”, which we will consider in part II of this paper [45]).
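The definitions of meet and join can be made concrete on a finite poset. The sketch below (function names are illustrative) computes the greatest lower bound by brute force and returns None when no unique one exists. Divisibility on {1, ..., 12} gives meet(8, 12) = 4 = gcd(8, 12), but join(8, 12) does not exist because 24 lies outside the set; a four-element poset with two incomparable common lower bounds has no meet at all.

```python
import math

def meet(a, b, elements, leq):
    """Greatest lower bound of a and b under the partial order leq, or None."""
    lower = [c for c in elements if leq(c, a) and leq(c, b)]
    greatest = [c for c in lower if all(leq(d, c) for d in lower)]
    return greatest[0] if greatest else None

def join(a, b, elements, leq):
    """Least upper bound: the meet under the reversed order."""
    return meet(a, b, elements, lambda x, y: leq(y, x))

def divides(c, a):
    # c is below a in this order iff c divides a
    return a % c == 0

nums = list(range(1, 13))
print(meet(8, 12, nums, divides), math.gcd(8, 12))  # 4 4
print(join(4, 6, nums, divides))                    # 12
print(join(8, 12, nums, divides))                   # None (24 is not in the set)

# Two incomparable common lower bounds c and d: the pair (a, b) has no meet.
below = {("c", "a"), ("c", "b"), ("d", "a"), ("d", "b")}

def leq2(x, y):
    return x == y or (x, y) in below

print(meet("a", "b", ["a", "b", "c", "d"], leq2))   # None
```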

In our approach, we do not require the ordering relation ⊏ to be a lattice, or even a partial order. We do not require these properties because we do not aim to find the unique union random variable or the unique redundancy random variable. Instead, we aim to quantify the size of the intersection and the size of the union, which we do by optimizing mutual information subject to constraints, as in Equations (7) and (8). These quantities remain well-defined even when ⊏ is not a lattice, which allows us to consider a much broader set of ordering relations.

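We mention three important precursors of our approach that have been proposed in the PID literature. First, Griffith et al. [16] considered the following order between random variables:

$$Q \lhd X \quad\Longleftrightarrow\quad Q = f(X) \text{ for some deterministic function } f. \qquad (16)$$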

This ordering relation ⊲ was first considered in a 1953 paper by Shannon [22], who showed that it defines a lattice over random variables. That paper was the first to introduce the algebraic idea of meets and joins into information theory, leading to an important line of subsequent research [46,47,48,49,50]. Using this order, Ref. [16] defined redundancy as the maximum mutual information in any random variable that is a deterministic function of all of the sources,

$$I_\cap^\wedge(X_1;\dots;X_n \to Y) := \sup_Q I(Q;Y) \quad\text{s.t.}\quad Q \lhd X_i \;\; \forall i, \qquad (17)$$

which is clearly a special case of Equation (7). Unfortunately, in practice, $I_\cap^\wedge$ is not a useful redundancy measure, as it tends to give very small values and is highly discontinuous. For example, $I_\cap^\wedge(X_1;\dots;X_n \to Y) = 0$ whenever the joint distribution of the sources, $p_{X_1 \dots X_n}$, has full support, meaning that it vanishes on almost all joint distributions [16,18,47]. The reason for this counterintuitive behavior is that the order ⊲ formalizes an extremely strict notion of “more informative”, which is not robust to noise.

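Given the deficiencies of $I_\cap^\wedge$, Griffith and Ho [18] proposed another measure of redundancy (also discussed, under a different name, in Ref. [49]),

$$I_\cap^{GH}(X_1;\dots;X_n \to Y) := \sup_Q I(Q;Y) \quad\text{s.t.}\quad Q \perp Y \mid X_i \;\; \forall i. \qquad (18)$$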

This measure is also a special case of Equation (7), where the “more informative” relation is formalized via the conditional independence condition $Q \perp Y \mid X_i$, i.e., $I(Q;Y|X_i) = 0$. This measure is similar to the redundancy measure we propose in part II of this paper, and we discuss it in more detail in Section 5.4. (Note that there are some incorrect claims about $I_\cap^{GH}$ in the literature: Lemmas 6 and 7 of Ref. [49] incorrectly state that $I_\cap^{GH}(X_1;X_2 \to Y) = 0$ whenever $X_1$ and $X_2$ are independent (see the AND gate counterexample in Section 6), while Ref. [18] incorrectly states that $I_\cap^{GH}$ obeys a property called Target Monotonicity.)

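Finally, we mention the so-called “minimum mutual information” redundancy [51]. This is perhaps the simplest redundancy measure, being equal to the minimal mutual information in any source: $I_\cap^{MMI}(X_1;\dots;X_n \to Y) := \min_i I(X_i;Y)$. It can be written in the form of Equation (7) as

$$I_\cap^{MMI}(X_1;\dots;X_n \to Y) = \sup_Q I(Q;Y) \quad\text{s.t.}\quad I(Q;Y) \le I(X_i;Y) \;\; \forall i. \qquad (19)$$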

This redundancy measure has been criticized for depending only on the amount of information provided by the different sources, being completely insensitive to the content of that information. Nonetheless, $I_\cap^{MMI}$ can be useful in some settings, and it plays an important role in the context of Gaussian random variables [51].
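Because it depends only on the pairwise marginals $p_{X_iY}$, the minimum mutual information redundancy is straightforward to compute. The following is a minimal sketch in Python with NumPy (the function names are our own and are not taken from [51] or from the code at [64]), evaluated on the AND gate with independent, uniformly distributed binary sources.

```python
import numpy as np

def mutual_information(p_xy):
    """I(X;Y) in bits from a joint probability table p_xy[x, y]."""
    p_xy = np.asarray(p_xy, dtype=float)
    p_x = p_xy.sum(axis=1, keepdims=True)   # column vector p(x)
    p_y = p_xy.sum(axis=0, keepdims=True)   # row vector p(y)
    mask = p_xy > 0                         # skip zero-probability cells
    ratio = p_xy[mask] / (p_x * p_y)[mask]
    return float(np.sum(p_xy[mask] * np.log2(ratio)))

def mmi_redundancy(pairwise_joints):
    """Minimum mutual information redundancy: min_i I(X_i;Y)."""
    return min(mutual_information(p) for p in pairwise_joints)

# AND gate: X1, X2 independent uniform bits, Y = X1 AND X2.
# Rows index x_i in {0, 1}, columns index y in {0, 1}.
p_x1y = np.array([[0.50, 0.00],
                  [0.25, 0.25]])
p_x2y = p_x1y.copy()   # the gate is symmetric in its inputs

print(round(mmi_redundancy([p_x1y, p_x2y]), 4))   # 0.3113
```

Here each source carries $h(1/4) - 1/2 \approx 0.311$ bits about Y (with h the binary entropy), so the minimum over sources is that same value.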

Interestingly, unlike the measures above, the original redundancy measure proposed by Williams and Beer [11], $I_\cap^{WB}$, does not appear to be a special case of Equation (7) (at least not under a natural definition of the ordering relation ⊏). We demonstrate this using a counterexample in Appendix H.

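As mentioned in Section 4.1, stronger ordering relations give smaller values of redundancy. For the orders considered above, it is easy to show that

$$Q \lhd X_i \;\Longrightarrow\; Q \perp Y \mid X_i \;\Longrightarrow\; I(Q;Y) \le I(X_i;Y). \qquad (20)$$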

This implies that $I_\cap^\wedge \le I_\cap^{GH} \le I_\cap^{MMI}$. In fact, $I_\cap^{MMI}$ is the largest measure that is compatible with the monotonicity of mutual information (Assumption I in Section 4.1).

4.5. Further Generalizations

We finish part I of this paper by noting that one can further generalize our approach, by considering other analogues of “set”, “set size”, and “set inclusion” beyond the ones considered in Section 4.1. Such generalizations allow one to analyze notions of information intersection and union in a wide variety of domains, including setups different from the standard one considered in the PID, and domains not based on Shannon information theory.

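At a general level, consider a set of objects $\mathcal{A}$ that represents possible “sources”, which may be random variables, as in Section 4.1, or otherwise. Assume there is some function $\phi : \mathcal{A} \to \mathbb{R}$ that quantifies the “amount of information” in a given source $A \in \mathcal{A}$, and some relation ⊏ on $\mathcal{A}$ that indicates which sources are more informative than others. Then, in analogy to Equations (5) and (6), for any set of sources $A_1, \dots, A_n \in \mathcal{A}$, one can define redundancy and union information as

$$I_\cap(A_1;\dots;A_n) := \sup_{B \in \mathcal{A}} \phi(B) \quad\text{s.t.}\quad B \sqsubset A_i \;\; \forall i, \qquad (21)$$

$$I_\cup(A_1;\dots;A_n) := \inf_{B \in \mathcal{A}} \phi(B) \quad\text{s.t.}\quad A_i \sqsubset B \;\; \forall i. \qquad (22)$$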

Synergy, unique, and excluded information can then be defined via Equations (1) to (3).
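To make the set-theoretic analogy concrete, the sketch below implements these generic redundancy and union definitions for a finite pool of candidate objects, in which case the supremum and infimum reduce to a maximum and a minimum. It is a minimal illustration under that finiteness assumption; all names (`redundancy`, `union_information`, `is_subset`, `powerset`) are hypothetical. Taking the sources to be sets, the amount of information to be cardinality, and ⊏ to be set inclusion recovers the sizes of the intersection and the union.

```python
from itertools import combinations

def powerset(universe):
    """All subsets of a finite universe, as frozensets."""
    items = sorted(universe)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]

def redundancy(sources, candidates, amount, below):
    """Largest amount(B) over candidates B with below(B, A) for every source A."""
    return max(amount(b) for b in candidates
               if all(below(b, a) for a in sources))

def union_information(sources, candidates, amount, below):
    """Smallest amount(B) over candidates B with below(A, B) for every source A."""
    return min(amount(b) for b in candidates
               if all(below(a, b) for a in sources))

def is_subset(a, b):
    """Set inclusion plays the role of the 'less informative' relation."""
    return a <= b

universe = {1, 2, 3, 4, 5}
sources = [frozenset({1, 2, 3}), frozenset({2, 3, 4})]
candidates = powerset(universe)   # exhaustive pool, so the optimum is exact
print(redundancy(sources, candidates, len, is_subset))          # 2, i.e. |{2, 3}|
print(union_information(sources, candidates, len, is_subset))   # 4, i.e. |{1, 2, 3, 4}|
```

The candidate pool must contain at least one feasible object (here the empty set and the whole universe), otherwise `max` or `min` receives an empty sequence. For random variables the pool is infinite, which is why Equations (7) and (8) require genuine optimization.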

There are many possible examples of such generalizations, of which we mention a few as illustrations.

- Shannon information theory (beyond mutual information). In Section 4.1, $\phi$ was the mutual information between each random variable and some target Y. This can be generalized by choosing a different “amount of information” function $\phi$, so that redundancy and union information are quantified in terms of other measures of statistical dependence. Among many other options, possible choices of $\phi$ include Pearson’s correlation (for continuous random variables) and measures of statistical dependency based on f-divergences [52], Bregman divergences [53], and Fisher information [54].

- Shannon information theory (without a fixed target). The PID can also be defined for a different setup than the typical one considered in the literature. For example, consider a situation where the sources are channels $p_{X_1|Y}, \dots, p_{X_n|Y}$, while the marginal distribution over the target Y is left unspecified. Here one may take $\mathcal{A}$ as the set of channels, $\phi$ as the channel capacity, $\phi(p_{X|Y}) = \max_{p_Y} I(X;Y)$, and ⊏ as some ordering relation on channels [24].

- Algorithmic information theory. The PID can be defined for other notions of information, such as those used in Algorithmic Information Theory (AIT) [55]. In AIT, “information” is defined not in terms of statistical uncertainty, but in terms of the length of the programs needed to generate strings. For example, one may take $\mathcal{A}$ as the set of finite strings, ⊏ as algorithmic conditional independence (expressed in terms of $K(\cdot\,|\,\cdot)$, the conditional Kolmogorov complexity), and $\phi$ as the “algorithmic mutual information” with some target string y. (This setup is closely related to the notion of algorithmic “common information” [47].)

- Quantum information theory. As a final example, the PID can be defined in the context of quantum information theory. For example, one may take $\mathcal{A}$ as the set of quantum channels, ⊏ as the quantum Blackwell order [56,57,58], and $\phi(\Phi)$ as the Ohya mutual information for some target density matrix $\rho$ under channel $\Phi$ [59].

5. Part II: Blackwell Redundancy and Union Information

In the first part of this paper, we proposed a general framework for defining PID terms. In this section, which forms part II of this paper, we develop a concrete definition of redundancy and union information by combining our general framework with a particular ordering relation ⊏. This ordering relation is called the “Blackwell order”, and it plays a fundamental role in statistics and decision theory [28,45,60]. We first introduce the Blackwell order, then use it to define measures of redundancy and union information, and finally discuss various properties of our measures.

5.1. The Blackwell Order

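We begin by introducing the ordering relation that we use to define our PID. Given three random variables B, C, and Y, the ordering relation $B \preceq_Y C$ is defined as follows:

$$B \preceq_Y C \quad\Longleftrightarrow\quad \exists\, \kappa_{B|C} \;\text{ such that }\; p_{B|Y}(b|y) = \sum_c \kappa_{B|C}(b|c)\, p_{C|Y}(c|y) \;\; \forall b, y. \qquad (23)$$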

We refer to the relation $\preceq_Y$ as the Blackwell order relative to random variable Y. (Note that the Blackwell order and Blackwell’s Theorem are usually formulated in terms of channels, that is, conditional distributions like $p_{B|Y}$ and $p_{C|Y}$, rather than in terms of random variables as done here. However, these two formulations are equivalent, as shown in [45].)

In words, Equation (23) means that the conditional distribution $p_{B|Y}$ can be generated by first sampling from the conditional distribution $p_{C|Y}$ and then applying some channel $\kappa_{B|C}$ to the outcome. The relation $B \preceq_Y C$ implies that B is noisier than C and, by the “data processing inequality” [61], B cannot have more mutual information about Y than C:

$$B \preceq_Y C \;\Longrightarrow\; I(B;Y) \le I(C;Y). \qquad (24)$$
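Since Equation (23) only asks whether some channel $\kappa_{B|C}$ exists, checking $B \preceq_Y C$ for finite alphabets is a linear feasibility problem in the entries of $\kappa_{B|C}$. The following is a minimal sketch, assuming SciPy's `linprog` with the HiGHS backend, channels stored as column-stochastic matrices indexed by y, and every y having positive probability; the helper names `blackwell_leq` and `bsc` are ours, not from [64].

```python
import numpy as np
from scipy.optimize import linprog

def blackwell_leq(P_B, P_C):
    """Check B ⪯_Y C, i.e. P_B = K @ P_C for some column-stochastic K.

    P_B[b, y] = p(b|y) and P_C[c, y] = p(c|y), so columns are indexed by y.
    The unknown channel K[b, c] = kappa(b|c) is flattened row-major.
    """
    P_B = np.asarray(P_B, dtype=float)
    P_C = np.asarray(P_C, dtype=float)
    n_b, n_y = P_B.shape
    n_c = P_C.shape[0]
    rows, rhs = [], []
    # Garbling constraints: sum_c K[b, c] * P_C[c, y] = P_B[b, y].
    for b in range(n_b):
        for y in range(n_y):
            row = np.zeros(n_b * n_c)
            row[b * n_c:(b + 1) * n_c] = P_C[:, y]
            rows.append(row)
            rhs.append(P_B[b, y])
    # Normalization: sum_b K[b, c] = 1 for every input c.
    for c in range(n_c):
        row = np.zeros(n_b * n_c)
        row[c::n_c] = 1.0
        rows.append(row)
        rhs.append(1.0)
    # Zero objective: we only ask whether the feasible set is non-empty.
    res = linprog(np.zeros(n_b * n_c), A_eq=np.array(rows), b_eq=np.array(rhs),
                  bounds=(0, None), method="highs")
    return res.status == 0   # status 2 means the constraints are infeasible

def bsc(eps):
    """Binary symmetric channel with crossover probability eps."""
    return np.array([[1 - eps, eps], [eps, 1 - eps]])

P_C = bsc(0.1)            # C: Y observed through a BSC(0.1)
P_B = bsc(0.2) @ P_C      # B: C passed through a further BSC(0.2)
print(blackwell_leq(P_B, P_C))   # True: B is a garbling of C
print(blackwell_leq(P_C, P_B))   # False: C is not a garbling of B
```

In the second call the only matrix solving $P_C = K P_B$ has negative entries, so no valid channel exists.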

Intuition suggests that when $B \preceq_Y C$, the information that B provides about Y is contained in the information that C provides about Y. This intuition is formalized within a decision-theoretic framework by the so-called Blackwell’s Theorem [28,45,60]. To introduce this theorem, imagine a scenario in which Y represents the state of the environment. Imagine also that there is an agent who acquires information about the environment via the conditional distribution $p_{B|Y}$, and then uses the outcome b to select actions according to some “decision rule” given by the channel $d_{A|B}$. Finally, the agent gains utility according to some utility function $u(a,y)$, which depends on the agent’s action a and the environment’s state y. The maximum expected utility achievable by any decision rule is given by

$$\mathcal{U}(B;u) := \max_{d_{A|B}} \sum_{y,b,a} p_Y(y)\, p_{B|Y}(b|y)\, d_{A|B}(a|b)\, u(a,y). \qquad (25)$$

From an operational perspective, it is natural to say that B is less informative than C about Y if there is no utility function such that an agent with access to B can achieve higher expected utility than an agent with access to C. Blackwell’s Theorem states that this is precisely the case if and only if $B \preceq_Y C$ [28,45]:

$$B \preceq_Y C \quad\Longleftrightarrow\quad \mathcal{U}(B;u) \le \mathcal{U}(C;u) \;\; \text{for all utility functions } u. \qquad (26)$$

In some sense, this operational description of the relation $\preceq_Y$ is deeper than the data processing inequality, Equation (24), which says that $B \preceq_Y C$ is sufficient (but not necessary) for $I(B;Y) \le I(C;Y)$. In fact, it can happen that $I(B;Y) < I(C;Y)$ even though $B \not\preceq_Y C$ [26,60,62].
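The gap between the mutual information ordering and the Blackwell order can be checked numerically. In the sketch below (Python with NumPy; the example and the function names are our own and are not taken from [26,60,62]), the target is $Y = (Y_1, Y_2)$ with two independent uniform bits, $B = Y_1$, and C reveals $Y_2$ exactly together with a copy of $Y_1$ flipped with probability 0.1. Then $I(B;Y) = 1$ bit is smaller than $I(C;Y) = 2 - h(0.1) \approx 1.53$ bits (h being the binary entropy), yet for the utility that rewards guessing $Y_1$, B attains expected utility 1 while C attains only 0.9, so by Blackwell’s Theorem $B \not\preceq_Y C$. The maximization over decision rules is computed by choosing the best action separately for each outcome b, which is optimal because the expected utility is linear in $d_{A|B}$.

```python
import numpy as np

def max_expected_utility(p_y, P_B, u):
    """max over decision rules d(a|b) of E[u(A, Y)] for an agent observing B.

    p_y[y] is the target marginal, P_B[b, y] = p(b|y), u[a, y] is the utility.
    The objective is linear in d(a|b), so choosing the best action
    separately for each outcome b is optimal.
    """
    joint = P_B * p_y[np.newaxis, :]   # joint[b, y] = p(y) p(b|y)
    scores = joint @ u.T               # scores[b, a] = sum_y joint[b, y] u[a, y]
    return float(scores.max(axis=1).sum())

def mutual_information(p_y, P_B):
    """I(B;Y) in bits from the target marginal and the channel p(b|y)."""
    joint = P_B * p_y[np.newaxis, :]
    p_b = joint.sum(axis=1, keepdims=True)
    mask = joint > 0
    ratio = joint[mask] / (p_b * p_y[np.newaxis, :])[mask]
    return float(np.sum(joint[mask] * np.log2(ratio)))

# Target Y = (Y1, Y2): two independent uniform bits, indexed y = 2*y1 + y2.
p_y = np.full(4, 0.25)
y1 = np.array([0, 0, 1, 1])
y2 = np.array([0, 1, 0, 1])
eps = 0.1

# B reveals Y1 exactly.
P_B = np.zeros((2, 4))
P_B[y1, np.arange(4)] = 1.0

# C reveals Y2 exactly plus Z, a copy of Y1 flipped with probability eps;
# its outcomes are indexed c = 2*y2 + z.
P_C = np.zeros((4, 4))
for y in range(4):
    for z in (0, 1):
        P_C[2 * y2[y] + z, y] = 1 - eps if z == y1[y] else eps

# Utility u[a, y] = 1 if the action a correctly guesses Y1, else 0.
u = np.array([[1.0 if a == y1[y] else 0.0 for y in range(4)] for a in (0, 1)])

mi_b, mi_c = mutual_information(p_y, P_B), mutual_information(p_y, P_C)
eu_b, eu_c = max_expected_utility(p_y, P_B, u), max_expected_utility(p_y, P_C, u)
print(mi_b < mi_c, eu_b > eu_c)   # True True
```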

A connection between PID and Blackwell’s theorem was first proposed in [13], which argued that the PID should be defined in an operational manner (see Section 5.3 for further discussion of [13]).

5.2. Blackwell Redundancy

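We now define a measure of redundancy based on the Blackwell order. Specifically, we use our general definition of redundancy, Equation (7), while using the Blackwell order relative to Y as the “more informative” relation ⊏:

$$I_\cap^\prec(X_1;\dots;X_n \to Y) := \sup_Q I(Q;Y) \quad\text{s.t.}\quad Q \preceq_Y X_i \;\; \forall i \in \{1,\dots,n\}. \qquad (27)$$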

We refer to this measure as Blackwell redundancy.

Given Blackwell’s Theorem, $I_\cap^\prec$ has a simple operational interpretation. Imagine two agents, Alice and Bob, who can acquire information about Y via different random variables, and then use this information to maximize their expected utility. Suppose that Alice has access to one of the sources $X_1, \dots, X_n$. Then, the Blackwell redundancy is the maximum information that Bob can have about Y without being able to do better than Alice on any utility function, regardless of which source Alice has access to.

Blackwell redundancy can also be used to define a measure of Blackwell unique information via Equation (2). As we show in Appendix I, this measure satisfies the following property, which we term the Multivariate Blackwell property.

Theorem 3.

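The Blackwell unique information in source $X_i$ is strictly positive if and only if $X_i \not\preceq_Y X_j$ for all $j \ne i$.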

Operationally, Theorem 3 means that source $X_i$ has non-zero unique information if and only if, for every other source $X_j$ ($j \ne i$), there exists a utility function such that an agent with access to $X_i$ can achieve higher expected utility than an agent with access to $X_j$.

Computing $I_\cap^\prec$ involves maximizing a convex function (mutual information is convex in the channel $p_{Q|Y}$ for a fixed $p_Y$) subject to a set of linear constraints. These constraints define a feasible set which is a convex polytope, and the maximum must lie on one of the vertices of this polytope [63]. In Appendix C, we show how to solve this optimization problem. In particular, we use a computational geometry package to enumerate the vertices of the feasible set, and then choose the best vertex (code is available at [64]). In that appendix, we also prove that an optimal solution to Equation (27) can always be achieved by a Q of bounded cardinality. Note that the supremum in Equation (27) is always achieved. Note also that $I_\cap^\prec$ satisfies the redundancy axioms in Section 4.2.

As discussed above, solving the optimization problem in Equation (27) gives a (possibly non-unique) optimal random variable Q which specifies the content of the redundant information. As shown in Appendix C, solving Equation (27) also provides a set of channels $\kappa_{Q|X_i}$, one for each source $X_i$, which identify the redundant information in each source.

Note that the Blackwell order satisfies assumptions I-III in Section 4.1, thus Blackwell redundancy satisfies the bounds derived in that section. Finally, note that like many other redundancy measures, Blackwell redundancy becomes equivalent to the measure $I_\cap^{MMI}$ (as defined in Equation (19)) when applied to Gaussian random variables (for details, see Appendix E).

5.3. Blackwell Union Information

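We now define a measure of union information using our general definition in Equation (8), while using the Blackwell order relative to Y as the “more informative” relation:

$$I_\cup^\prec(X_1;\dots;X_n \to Y) := \inf_Q I(Q;Y) \quad\text{s.t.}\quad X_i \preceq_Y Q \;\; \forall i \in \{1,\dots,n\}. \qquad (28)$$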

We refer to this measure as Blackwell union information.

As for Blackwell redundancy, Blackwell union information can be understood in operational terms. Consider two agents, Alice and Bob, who use information about Y to maximize their expected utility. Suppose that Alice has access to one of the sources $X_1, \dots, X_n$. Then, the Blackwell union information is the minimum information that Bob must have about Y in order to do at least as well as Alice on every utility function, regardless of which source Alice has access to.

Blackwell union information can be used to define measures of synergy and excluded information via Equations (1) and (3). The resulting measure of excluded information satisfies the following property, which is the “dual” of the Multivariate Blackwell property considered in Theorem 3. (See Appendix I for the proof).

Theorem 4.

The Blackwell excluded information for source $X_i$ vanishes if and only if $X_j \preceq_Y X_i$ for all $j \ne i$.

Operationally, Theorem 4 means that there is excluded information for source $X_i$ if and only if there exists a utility function such that an agent with access to one of the other sources can achieve higher expected utility than an agent with access to $X_i$.

We discuss the problem of numerically solving the optimization problem in Equation (28) in the next subsection.

5.4. Relation to Prior Work

Our measure of Blackwell redundancy is new to the PID literature. The most similar existing redundancy measure is $I_\cap^{GH}$ [18], which is discussed above in Section 4.4. $I_\cap^{GH}$ is a special case of Equation (7), once the “more informative” relation is defined in terms of the conditional independence $Q \perp Y \mid X_i$. Note that conditional independence is stronger than the Blackwell order: given the definition of $\preceq_Y$ in Equation (23), it is clear that $Q \perp Y \mid X_i$ implies $Q \preceq_Y X_i$ (the channel can be taken to be $\kappa_{Q|X_i} = p_{Q|X_i}$), but not vice versa. As discussed in Section 4.1, stronger ordering relations give smaller values of redundancy, so in general $I_\cap^{GH} \le I_\cap^\prec$. Note also that $\preceq_Y$ depends only on the pairwise marginals $p_{QY}$ and $p_{X_iY}$, while conditional independence depends on the joint distribution $p_{QX_iY}$. As we discuss in Appendix F, the conditional independence order can be interpreted in decision-theoretic terms, which suggests an operational interpretation for $I_\cap^{GH}$.
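The implication from conditional independence to the Blackwell order can be sanity-checked numerically. The sketch below (Python with NumPy; random distributions drawn with an arbitrary seed, all names ours) builds a Q with $Q \perp Y \mid X$ by sampling a channel $p_{Q|X}$, and then verifies that $p_{Q|Y} = \kappa\, p_{X|Y}$ with $\kappa = p_{Q|X}$, which is exactly the garbling condition of Equation (23).

```python
import numpy as np

rng = np.random.default_rng(0)   # arbitrary seed

def random_channel(n_out, n_in, rng):
    """Random column-stochastic matrix K[out, in] = k(out | in)."""
    K = rng.random((n_out, n_in))
    return K / K.sum(axis=0, keepdims=True)

# Random joint distribution p(x, y) of a source X (3 outcomes) and target Y (2).
p_xy = rng.random((3, 2))
p_xy /= p_xy.sum()

# Build Q with Q independent of Y given X: p(q, x, y) = p(q | x) p(x, y).
K_qx = random_channel(4, 3, rng)                # K_qx[q, x] = p(q | x)
p_qxy = K_qx[:, :, None] * p_xy[None, :, :]     # p_qxy[q, x, y]

# Channels from the target: p(q | y) and p(x | y).
p_y = p_xy.sum(axis=0)
P_Q = p_qxy.sum(axis=1) / p_y                   # P_Q[q, y]
P_X = p_xy / p_y                                # P_X[x, y]

# The garbling condition holds with kappa = p(q | x), so Q ⪯_Y X.
print(np.allclose(P_Q, K_qx @ P_X))   # True
```

The converse fails in general: the Blackwell condition constrains only the pairwise marginals $p_{QY}$ and $p_{XY}$, whereas conditional independence also constrains how Q is coupled to X and Y jointly.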

Interestingly, Blackwell union information is equivalent to two measures that have been previously proposed in the PID literature, although they were formulated in a different way. Bertschinger et al. [13] considered the following measure of bivariate redundancy:

$$I_\cap^{BROJA}(X_1;X_2 \to Y) := I(X_1;Y) + I(X_2;Y) - I_\cup^{BROJA}(X_1;X_2 \to Y), \qquad (29)$$

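where $I_\cup^{BROJA}$ is defined via the optimization problem

$$I_\cup^{BROJA}(X_1;X_2 \to Y) := \min_{q} I_q(Y;X_1,X_2) \quad\text{s.t.}\quad q_{X_1Y} = p_{X_1Y},\; q_{X_2Y} = p_{X_2Y}, \qquad (30)$$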

and reflects the minimal mutual information that two random variables can have about Y, given that their pairwise marginals with Y are fixed to be $p_{X_1Y}$ and $p_{X_2Y}$. Note that Ref. [13] did not refer to this quantity as a measure of union information (we use our notation in writing it as $I_\cup^{BROJA}$). Instead, these measures were derived from an operational motivation, with the goal of deriving a unique information measure that obeys the so-called Blackwell property: the unique information in $X_1$ vanishes if and only if $X_1 \preceq_Y X_2$ (see Theorems 3 and 4 above).

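Starting from a different motivation, Griffith and Koch [17] proposed a multivariate version of $I_\cup^{BROJA}$,

$$I_\cup^{GK}(X_1;\dots;X_n \to Y) := \min_{q} I_q(Y;X_1,\dots,X_n) \quad\text{s.t.}\quad q_{X_iY} = p_{X_iY} \;\; \forall i. \qquad (31)$$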

The goal of Ref. [17] was to derive a measure of multivariate synergy from a measure of union information, as in Equation (1). In that paper, $I_\cup^{GK}$ was explicitly defined as a measure of union information. To our knowledge, Ref. [17] was the first (and perhaps only) paper to propose a measure of union information that was not derived from redundancy via the inclusion-exclusion principle.

While $I_\cup^\prec$ and $I_\cup^{GK}$ are stated as different optimization problems, we prove in Appendix G that these optimization problems are equivalent, in that they always achieve the same optimum value. Interestingly, since $I_\cup^\prec$ and $I_\cup^{GK}$ are equivalent, our measure of Blackwell redundancy appears as the natural dual to $I_\cup^{GK}$. Another implication of this equivalence is that Blackwell union information can be quantified by solving the optimization problem in Equation (31), rather than Equation (28). This is advantageous, because Equation (31) involves the minimization of a convex function over a convex polytope, which can be solved using standard convex optimization techniques [65].

In Ref. [13], the redundancy measure $I_\cap^{BROJA}$ in Equation (29) was only defined for the bivariate case. Since then, it has been unclear how to extend this redundancy measure to more than two sources. However, by comparing Equations (14) and (29), we see the root of the problem: $I_\cap^{BROJA}$ is derived by applying the inclusion-exclusion principle to a measure of union information, $I_\cup^{BROJA}$. It cannot be extended to more than two sources because the inclusion-exclusion principle generally leads to counterintuitive results for more than two sources, as shown in Lemma 1. Note also that what Ref. [13] called the unique information in $X_1$ would, in our framework, be considered a measure of the excluded information for $X_2$.

At the same time, the union information measure $I_\cup^{GK}$, and the corresponding synergy from Equation (1), do not use the inclusion-exclusion principle. Therefore, they can easily be extended to any number of sources [17].

5.5. Continuity of Blackwell Redundancy and Union Information

It is often desired that information-theoretic measures are continuous, meaning that small changes in underlying probability distributions lead to small changes in the resulting measures. In this section, we consider the continuity of our proposed measures, $I_\cap^\prec$ and $I_\cup^\prec$.

We first consider Blackwell redundancy $I_\cap^\prec$. It turns out that this measure is not always continuous in the joint probability distribution (a discontinuous example is provided in Section 5.6). However, the discontinuity of $I_\cap^\prec$ is not necessarily pathological, and we can derive an interpretable geometric condition that guarantees that $I_\cap^\prec$ is continuous.

Consider the conditional distribution of the target Y given some source $X_i$, $p_{Y|X_i}$. Let $\operatorname{rank} p_{Y|X_i}$ indicate its rank, meaning the dimension of the space spanned by the vectors $\{p_{Y|X_i=x_i} - p_Y\}_{x_i}$. The rank of $p_{Y|X_i}$ quantifies the number of independent directions in which the target distribution can be moved by manipulating the source distribution $p_{X_i}$, and it cannot be larger than $|Y| - 1$. The next theorem shows that $I_\cap^\prec$ is locally continuous, as long as $n-1$ or more of the source conditional distributions have this maximal rank.

Theorem 5.

As a function of the joint distribution $p_{X_1 \dots X_n Y}$, $I_\cap^\prec$ is locally continuous whenever $n-1$ or more of the conditional distributions $p_{Y|X_i}$ have $\operatorname{rank} p_{Y|X_i} = |Y| - 1$.

In proving this result, we also show that $I_\cap^\prec$ is continuous almost everywhere (see proof in Appendix D). Finally, in that appendix we also use Theorem 5 to show that $I_\cap^\prec$ is continuous everywhere if Y is a binary random variable.
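The rank condition in Theorem 5 is easy to evaluate for finite alphabets. The following is a minimal sketch, assuming that the rank is the dimension of the span of the vectors $p_{Y|X_i=x_i} - p_Y$ over the outcomes $x_i$ with $p(x_i) > 0$ (our reading of the definition, consistent with the bound $|Y| - 1$), and using NumPy's `matrix_rank`; `conditional_rank` is a hypothetical helper name.

```python
import numpy as np

def conditional_rank(p_xy, tol=1e-10):
    """Rank of p_{Y|X}: dimension of span{p(.|x) - p_Y : p(x) > 0}.

    p_xy[x, y] is the joint distribution of a source X and the target Y.
    """
    p_xy = np.asarray(p_xy, dtype=float)
    p_x = p_xy.sum(axis=1)
    p_y = p_xy.sum(axis=0)
    support = p_x > 0
    cond = p_xy[support] / p_x[support][:, None]   # rows are p(y | x)
    return int(np.linalg.matrix_rank(cond - p_y, tol=tol))

# |Y| = 3, so the maximal rank is |Y| - 1 = 2.
p_binary = np.array([[0.3, 0.1, 0.1],       # binary source: rank <= |X| - 1 = 1
                     [0.1, 0.1, 0.3]])
p_ternary = np.array([[0.20, 0.05, 0.05],   # ternary source in general position
                      [0.05, 0.20, 0.05],
                      [0.05, 0.05, 0.30]])
print(conditional_rank(p_binary), conditional_rank(p_ternary))   # 1 2
```

Under this reading, a binary source can never reach the maximal rank when $|Y| = 3$, which is the rank-deficient situation illustrated in Figure 2.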

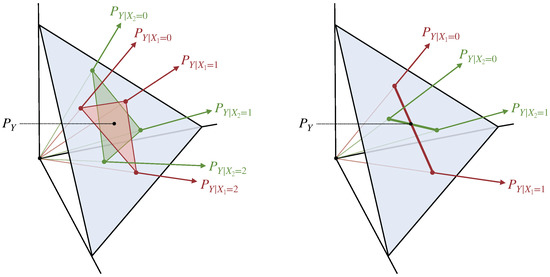

We illustrate the meaning of Theorem 5 visually in Figure 2. We show two situations, both of which involve two sources $X_1$ and $X_2$ and a target Y with cardinality $|Y| = 3$. In one situation, both pairwise conditional distributions have rank equal to $|Y| - 1 = 2$, so $I_\cap^\prec$ is locally continuous. In the other situation, both pairwise conditional distributions are rank deficient (e.g., this might happen because $X_1$ and $X_2$ have cardinality 2), so $I_\cap^\prec$ is not guaranteed to be continuous. From the figure it is easy to see how the discontinuity may arise. Given the definition of the Blackwell order and $I_\cap^\prec$, for any random variable Q in the feasible set of Equation (27), each conditional distribution $p_{Y|Q=q}$ is a convex combination of the $p_{Y|X_i=x_i}$, so it must fall within the intersection of the convex hulls of $\{p_{Y|X_1=x_1}\}_{x_1}$ and $\{p_{Y|X_2=x_2}\}_{x_2}$ (the intersection of the red and green shaded regions in Figure 2). On the right, the size of this intersection can discontinuously jump from a line (when $p_{Y|X_1}$ and $p_{Y|X_2}$ are perfectly aligned) to a point (when they are not perfectly aligned). Thus, the discontinuity of $I_\cap^\prec$ arises from a geometric phenomenon, which is related to the discontinuity of the intersection of low-dimensional vector subspaces.

Figure 2.

Illustration of Theorem 5, which provides a sufficient condition for the local continuity of $I_\cap^\prec$. Consider two scenarios, both of which involve two sources $X_1$ and $X_2$ and a target Y with cardinality $|Y| = 3$. The blue areas indicate the simplex of probability distributions over Y, with the marginal $p_Y$ and the pairwise conditionals $p_{Y|X_i=x_i}$ marked. On the left, both sources have $\operatorname{rank} p_{Y|X_i} = 2$, so $I_\cap^\prec$ is locally continuous. On the right, both sources have $\operatorname{rank} p_{Y|X_i} = 1$, so $I_\cap^\prec$ is not necessarily locally continuous. Note that $I_\cap^\prec$ is also continuous if only source $X_1$ has $\operatorname{rank} p_{Y|X_1} = 2$.

We briefly comment on the continuity of $I_\cup^\prec$. As described above, this measure turns out to be equivalent to $I_\cup^{GK}$. The continuity of $I_\cup^{BROJA}$ in the bivariate case was proven in Theorem 35 of Ref. [66]. We believe that the continuity of $I_\cup^{GK}$ for an arbitrary number of sources can be shown using similar methods, although we leave this for future work.

5.6. Behavior on the COPY Gate

As mentioned in Section 3, the “COPY gate” example is often used to test the behavior of different redundancy measures. The COPY gate has two sources, $X_1$ and $X_2$, and a target $Y = (X_1, X_2)$ which is a copy of the joint outcome. It is expected that redundancy should vanish if $X_1$ and $X_2$ are statistically independent, as formalized by the Independent identity property in Equation (4).

Blackwell redundancy satisfies the Independent identity. In fact, we prove a more general result, which shows that on the COPY gate $I_\cap^\prec$ is equal to an information-theoretic measure called the Gács-Körner common information, $C_{GK}$ [16,47,67]. $C_{GK}(X;Y)$ quantifies the amount of information that can be deterministically extracted from both random variables X and Y, and it is closely related to the “deterministic function” order ⊲ defined in Equation (16). Formally, it can be written as

$$C_{GK}(X;Y) := \max_Q H(Q) \quad\text{s.t.}\quad Q \lhd X,\; Q \lhd Y, \qquad (32)$$

where H is Shannon entropy. In Appendix I, we prove the following result.

Theorem 6.

$I_\cap^\prec(X_1;X_2 \to (X_1,X_2)) = C_{GK}(X_1;X_2)$.

Note that $C_{GK}(X_1;X_2) \le I(X_1;X_2)$ [47], so $I_\cap^\prec$ satisfies the Independent identity property. At the same time, $C_{GK}(X_1;X_2)$ can be strictly less than $I(X_1;X_2)$. For example, if $p_{X_1X_2}$ has full support, then $I(X_1;X_2)$ can be arbitrarily large while $C_{GK}(X_1;X_2) = 0$ (see proof of Theorem 6). This means that $I_\cap^\prec$ violates a previously proposed property, sometimes called the Identity property, which suggests that redundancy should satisfy $I_\cap(X_1;X_2 \to (X_1,X_2)) = I(X_1;X_2)$. However, the validity of the Identity property is not clear, and several papers have argued against it [15,39].
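For finite alphabets, $C_{GK}$ has a simple combinatorial form: the maximal common function of two variables labels the connected components of the bipartite graph that links x and y whenever $p(x,y) > 0$, and $C_{GK}$ is the entropy of that component label. The sketch below is our own illustration of this standard characterization (Python with NumPy, using union-find for the components; it is not the code at [64]). It also contrasts $C_{GK}$ with $I(X_1;X_2)$ on a full-support joint distribution and on a block-structured one.

```python
import numpy as np

def gacs_korner(p_xy):
    """Gács-Körner common information C_GK(X;Y) in bits.

    The maximal common function of X and Y labels the connected components
    of the bipartite graph that links x and y whenever p(x, y) > 0.
    """
    p_xy = np.asarray(p_xy, dtype=float)
    n_x, n_y = p_xy.shape
    parent = list(range(n_x + n_y))   # union-find: x-nodes first, then y-nodes

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]   # path halving
            i = parent[i]
        return i

    for x in range(n_x):
        for y in range(n_y):
            if p_xy[x, y] > 0:
                parent[find(x)] = find(n_x + y)

    # The probability of each component is the distribution of the common part Q.
    mass = {}
    for x in range(n_x):
        root = find(x)
        mass[root] = mass.get(root, 0.0) + p_xy[x].sum()
    q = np.array([m for m in mass.values() if m > 0])
    return float(np.sum(q * np.log2(1.0 / q)))   # H(Q)

def mutual_information(p_xy):
    """I(X;Y) in bits, for comparison with C_GK."""
    p_x = p_xy.sum(axis=1, keepdims=True)
    p_y = p_xy.sum(axis=0, keepdims=True)
    mask = p_xy > 0
    return float(np.sum(p_xy[mask] * np.log2(p_xy[mask] / (p_x * p_y)[mask])))

# Full support: X1 and X2 agree 90% of the time, yet nothing is common.
p_noisy = np.array([[0.45, 0.05],
                    [0.05, 0.45]])
# Two blocks: the block index is a common function carrying 1 bit.
p_blocks = np.kron(np.eye(2) * 0.5, p_noisy)
print(round(gacs_korner(p_noisy), 6), round(gacs_korner(p_blocks), 6))   # 0.0 1.0
```

In both examples $I(X_1;X_2)$ exceeds $C_{GK}(X_1;X_2)$ by $1 - h(0.1) \approx 0.53$ bits, which is the kind of gap that makes the Identity property fail for $I_\cap^\prec$.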

The value of $C_{GK}$ depends on the precise pattern of zeros in the joint distribution and is therefore not continuous. For instance, for the bivariate COPY gate, redundancy can change discontinuously as one goes from the situation where $X_1 = X_2$ (so that all information is redundant, $I_\cap^\prec = I(X_1;X_2) = H(X_1)$) to one where $X_1$ and $X_2$ are almost, but not entirely, identical. This discontinuity can be understood in terms of Theorem 5 and Figure 2: in the COPY gate, the cardinality of the target variable is larger than the cardinality of the individual sources, so the rank condition of Theorem 5 cannot be satisfied. In other words, when the sources $X_1$ and $X_2$ are not perfectly correlated, they provide information about different “subspaces” of the target $Y = (X_1, X_2)$, and so it is possible that very little (or none) of their information is redundant.

At the same time, the Blackwell property, Theorem 3, implies that

$$I_\cap^\prec(X_1;X_2 \to X_1) = I_\cap^\prec(X_1;X_2 \to X_2) = I(X_1;X_2). \qquad (33)$$

In other words, the redundancy in $X_1$ and $X_2$, where either one of the individual sources is taken as the target, is given by the mutual information $I(X_1;X_2)$. This holds even though the redundancy in the COPY gate can be much lower than $I(X_1;X_2)$.

It is also interesting to consider how Blackwell union information, $I_\cup^\prec$, behaves on the COPY gate. Using techniques from [13], it can be shown that the union information is simply the joint entropy,

$$I_\cup^\prec(X_1;X_2 \to (X_1,X_2)) = H(X_1,X_2). \qquad (34)$$

Since $I(Y;X_1,X_2) = H(X_1,X_2)$ for $Y = (X_1,X_2)$, Equations (1) and (34) together imply that the COPY gate has no synergy.

Note that we can use Theorem 6 and Equation (34) to illustrate that $I_\cap^\prec$ and $I_\cup^\prec$ violate the inclusion-exclusion principle, Equation (14). Using Equation (34) and a bit of rearranging, Equation (14) becomes equivalent to $I_\cap(X_1;X_2 \to (X_1,X_2)) = I(X_1;X_2)$, which is the Identity property mentioned above. $I_\cap^\prec$ violates this property, since redundancy for the COPY gate can be smaller than $I(X_1;X_2)$.
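The rearrangement can be written out explicitly. For the COPY gate, each $X_i$ is a function of $Y = (X_1, X_2)$, so $I(X_i;Y) = H(X_i)$. Assuming the bivariate inclusion-exclusion principle of Equation (14) takes its standard form, $I_\cup = I(X_1;Y) + I(X_2;Y) - I_\cap$, substituting Equation (34) gives:

```latex
\begin{aligned}
I_\cup(X_1;X_2 \to Y) &= I(X_1;Y) + I(X_2;Y) - I_\cap(X_1;X_2 \to Y)
  && \text{(inclusion-exclusion)} \\
H(X_1,X_2) &= H(X_1) + H(X_2) - I_\cap(X_1;X_2 \to Y)
  && \text{(Eq.~(34) and } I(X_i;Y) = H(X_i)\text{)} \\
I_\cap(X_1;X_2 \to Y) &= H(X_1) + H(X_2) - H(X_1,X_2) = I(X_1;X_2).
\end{aligned}
```

By Theorem 6, the left-hand side of the last line equals $C_{GK}(X_1;X_2)$ for Blackwell redundancy, which can be strictly smaller than $I(X_1;X_2)$, so the equality fails.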

6. Examples and Comparisons to Previous Measures

In this section, we compare our proposed measure of Blackwell redundancy to existing redundancy measures. We focus on redundancy, rather than union information, because redundancy has seen much more development in the literature, and because Blackwell union information is equivalent to an existing measure (see Section 5.4).

6.1. Qualitative Comparison

In Table 1, we compare $I_\cap^\prec$ to six existing measures of multivariate redundancy:

Table 1.

Comparison of different redundancy measures. A question mark (?) indicates properties that we could not easily establish.

- $I_\cap^{WB}$, the redundancy measure first proposed by Williams and Beer [11].

- The measure proposed by Ince [20].

- The measure proposed by Finn and Lizier [21].

We also compare to three existing measures of bivariate redundancy (i.e., for 2 sources):

- $I_\cap^{BROJA}$, proposed by Bertschinger et al. [13], defined in Equation (29).

- The measure proposed by Harder et al. [19].

- The measure proposed by James et al. [15].

For $I_\cap^\prec$ as well as the 9 existing measures, we consider the following properties, which are chosen to highlight differences between our approach and previous proposals:

- Has it been defined for more than 2 sources

- Does it obey the Monotonicity axiom from Section 4.2

- Is it compatible with the inclusion-exclusion principle (IEP) for the bivariate case, such that union information as defined in Equation (14) obeys $I_\cup(X_1;X_2 \to Y) \le I(Y;X_1,X_2)$

- Does it obey the Independent identity property, Equation (4)

- Does it obey the Blackwell property (possibly in its multivariate form, Theorem 3)

We also consider two additional properties, which require a bit of introduction.

The first property was suggested by Ref. [13], which argued that redundancy should depend only on the pairwise marginal distributions of each source with the target,

$$I_\cap(X_1;\dots;X_n \to Y) \text{ depends only on } p_{X_1Y}, \dots, p_{X_nY}. \qquad (35)$$

In Table 1, we term this property Pairwise marginals. We believe that the validity of Equation (35) is not universal, but may depend on the particular setting in which the PID is being used. However, redundancy measures that satisfy this property have one important advantage: they are well-defined not only when the sources are random variables $X_1, \dots, X_n$, but also in the more general case when the sources are channels $p_{X_1|Y}, \dots, p_{X_n|Y}$.

The second property has not been previously considered in the literature, although it appears to be highly intuitive. Observe that the target random variable Y contains all possible information about itself. Thus, it may be expected that adding the target to the set of sources should not decrease the redundancy:

$$I_\cap(X_1;\dots;X_n;Y \to Y) = I_\cap(X_1;\dots;X_n \to Y). \qquad (36)$$

In Table 1, we term this property Target Equality. Note that for redundancy measures which can be put in the form of Equation (7), Target Equality is satisfied if the order ⊏ obeys $X_i \sqsubset Y$ for all sources $X_i$. (Note also that Target Equality is unrelated to the previously proposed Strong Symmetry property; for instance, it is easy to show that some of the redundancy measures considered here satisfy Target Equality even though they violate Strong Symmetry [68].)

6.2. Quantitative Comparison

We now illustrate our proposed measure of redundancy on some simple examples, and compare its behavior to existing redundancy measures.

The values of $I_\cap^\prec$ were computed with our code, provided at [64]. The values of all other redundancy measures except $I_\cap^{GH}$ were computed using the dit Python package [69]. To our knowledge, there have been no previous proposals for how to compute $I_\cap^{GH}$. In fact, this measure involves maximizing a convex function subject to linear constraints, and can be computed using methods similar to those used for $I_\cap^\prec$. We provide code for computing $I_\cap^{GH}$ at [64].

We begin by considering some simple bivariate examples. In all cases, the sources $X_1$ and $X_2$ are binary and uniformly distributed. The results are shown in Table 2.

Table 2.

Behavior of $I_\cap^\prec$ and other redundancy measures on bivariate examples.

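- The AND gate, $Y = X_1 \text{ AND } X_2$, with $X_1$ and $X_2$ independent. (It is incorrectly stated in Refs. [18,49] that $I_\cap^{GH}$ vanishes here; in fact, $I_\cap^{GH}$ is strictly positive for this gate, with its value given by the maximum achieved in Equation (18) by an appropriate Q.)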

- The SUM gate: $Y = X_1 + X_2$, with $X_1$ and $X_2$ independent.

- The UNQ gate: $Y = X_1$. Here, the measure marked with ∗ gave values that increased with the amount of correlation between $X_1$ and $X_2$ but were typically larger than .

- The COPY gate: $Y = (X_1, X_2)$. Here, our redundancy measure is equal to the Gács-Körner common information between $X_1$ and $X_2$, as discussed in Section 5.6. The same holds for two other redundancy measures in Table 2, which can be shown using a slight modification of the proof of Theorem 6. For this gate, the measure marked with ∗ gave the same values as for the UNQ gate, which increased with the amount of correlation between $X_1$ and $X_2$ but were typically larger than .
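A quick way to see that $I_\cap^{GH}$ cannot vanish on the AND gate is to exhibit a single feasible Q with $I(Q;Y) > 0$. The sketch below (Python with NumPy; the choice $Q = X_1 \text{ OR } X_2$ is ours and is not claimed to be the maximizer referred to above) checks the conditional independence constraints $Q \perp Y \mid X_1$ and $Q \perp Y \mid X_2$, and evaluates $I(Q;Y) = h(1/4) - \tfrac{3}{4}h(1/3) \approx 0.1226$ bits, where h is the binary entropy. This value is therefore only a lower bound on $I_\cap^{GH}$ for this gate.

```python
import itertools
import numpy as np

# AND gate with the candidate Q = X1 OR X2 (our own choice of Q).
# Joint table p[x1, x2, y, q] over binary variables.
p = np.zeros((2, 2, 2, 2))
for x1, x2 in itertools.product((0, 1), repeat=2):
    p[x1, x2, x1 & x2, x1 | x2] = 0.25

def q_indep_of_y_given_x(p_xyq, atol=1e-12):
    """Check the constraint Q ⊥ Y | X from a table p_xyq[x, y, q]."""
    p_x = p_xyq.sum(axis=(1, 2))
    for x in range(p_xyq.shape[0]):
        if p_x[x] == 0:
            continue
        p_yq = p_xyq[x] / p_x[x]                         # p(y, q | x)
        product = np.outer(p_yq.sum(axis=1), p_yq.sum(axis=0))
        if not np.allclose(p_yq, product, atol=atol):
            return False
    return True

def mutual_information(p_ab):
    """I(A;B) in bits from a joint table p_ab[a, b]."""
    p_a = p_ab.sum(axis=1, keepdims=True)
    p_b = p_ab.sum(axis=0, keepdims=True)
    mask = p_ab > 0
    return float(np.sum(p_ab[mask] * np.log2(p_ab[mask] / (p_a * p_b)[mask])))

# Q must satisfy the conditional independence constraint for both sources.
feasible = (q_indep_of_y_given_x(p.sum(axis=1))       # table over (x1, y, q)
            and q_indep_of_y_given_x(p.sum(axis=0)))  # table over (x2, y, q)
value = mutual_information(p.sum(axis=(0, 1)))        # I(Q;Y) from p[y, q]
print(feasible, round(value, 4))   # True 0.1226
```

The constraint holds because, given $X_1 = 0$, Y is constant, while given $X_1 = 1$, Q is constant (and symmetrically for $X_2$).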

We also analyze several examples with three sources, with the results shown in Table 3. We considered those previously proposed measures which can be applied to more than two sources (we do not show $I_\cap^{GH}$, as our implementation was too slow for these examples).

Table 3.

Behavior of $I_\cap^\prec$ and other redundancy measures on three sources.

- Three-way AND gate: $Y = X_1 \text{ AND } X_2 \text{ AND } X_3$, where the sources are binary and uniformly and independently distributed.

- Three-way SUM gate: $Y = X_1 + X_2 + X_3$, where the sources are binary and uniformly and independently distributed.

- “Overlap” gate: we defined four independent uniformly distributed binary random variables, A, B, C, and D. These were grouped into three sources as $X_1 = (A, B)$, $X_2 = (A, C)$, $X_3 = (A, D)$. The target was the joint outcome of all three sources, $Y = (X_1, X_2, X_3)$. Note that the three sources overlap on a single random variable A, which suggests that the redundancy should be 1 bit.

7. Discussion and Future Work

In this paper, we proposed a new general framework for defining the partial information decomposition (PID). Our framework was motivated in several ways, including a formal analogy with intersections and unions in set theory as well as an axiomatic derivation.

We also used our general framework to propose concrete measures of redundancy and union information, which have clear operational interpretations based on Blackwell’s theorem. Other PID measures, such as synergy and unique information, can be computed from our measures of redundancy and union information via simple expressions.

One unusual aspect of our framework is that it provides separate measures of redundancy and union information. As we discuss above, most prior work on the PID assumed that redundancy and union information are related to each other via the so-called “inclusion-exclusion” principle. We argue that the inclusion-exclusion principle should not be expected to hold in the context of the PID, and in fact that it leads to counterintuitive behavior once 3 or more sources are present. This suggests that different information decompositions should be derived for redundancy vs. union information. This idea is related to a recent proposal in the literature, which argues that two different PIDs are needed, one based on redundancy and one based on synergy [41]. An interesting direction for future work is to relate our framework to the dual decompositions proposed in [41].

From a practical standpoint, an important direction for future work is to develop better schemes for computing our redundancy measure. This measure is defined in terms of a convex maximization problem, which in principle can be NP-hard (a similar convex maximization problem was proven to be NP-hard in [70]). Our current implementation, which enumerates the vertices of the feasible set, works well for relatively small state spaces, but we do not expect it to scale to situations with many sources, or where the sources have large cardinalities. However, the problem of convex maximization with linear constraints is a very active area of optimization research, with many proposed algorithms [63,71,72]. Investigating these algorithms, as well as various approximation schemes such as relaxations and variational bounds, is of interest.

Finally, we showed how our framework can be used to define measures of redundancy and union information in situations that go beyond the standard setting of the PID (e.g., when the probability distribution of the target is not specified). Our framework can even be applied in domains beyond Shannon information theory, such as algorithmic information theory and quantum information theory. Future work may exploit this flexibility to explore various new applications of the PID.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Acknowledgments

We thank Paul Williams, Alexander Gates, Nihat Ay, Bernat Corominas-Murtra, Pradeep Banerjee, and especially Johannes Rauh for helpful discussions and suggestions. We also thank the Santa Fe Institute for helping to support this research.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. PID Axioms

In developing the PID framework, Williams and Beer [11,12] proposed that any measure of redundancy should obey a set of axioms. In slightly modified form, these axioms can be written as follows:

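- Symmetry: $I_\cap(X_1;\dots;X_n \to Y)$ is invariant to the permutation of $X_1,\dots,X_n$.

- Self-redundancy: $I_\cap(X_1 \to Y) = I(X_1;Y)$.

- Monotonicity: $I_\cap(X_1;\dots;X_n \to Y) \le I_\cap(X_1;\dots;X_{n-1} \to Y)$.

- Deterministic equality: $I_\cap(X_1;\dots;X_n \to Y) = I_\cap(X_1;\dots;X_{n-1} \to Y)$ if $X_i = f(X_n)$ for some $i < n$ and deterministic function $f$.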

These axioms are based on intuitions regarding the behavior of intersection in set theory [12]. The Symmetry axiom is self-explanatory. Self-redundancy states that if only a single source is present, all of its information is redundant. Monotonicity states that redundancy should not increase when an additional source is considered (just as the size of a set intersection can only decrease as more sets are considered). Deterministic equality states that redundancy should remain the same when an additional source is added that contains all (or more) of the information already contained in an existing source (formalized as the condition $X_i = f(X_n)$).

Union information was considered in the original PID proposal [12,73], as well as in a more recent paper [17]. Ref. [17] proposed that any measure of union information should satisfy the following set of natural axioms, stated here in slightly modified form:

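- Symmetry: $I_\cup(X_1;\dots;X_n \to Y)$ is invariant to the permutation of $X_1,\dots,X_n$.

- Self-union: $I_\cup(X_1 \to Y) = I(X_1;Y)$.

- Monotonicity: $I_\cup(X_1;\dots;X_n \to Y) \ge I_\cup(X_1;\dots;X_{n-1} \to Y)$.

- Deterministic equality: $I_\cup(X_1;\dots;X_n \to Y) = I_\cup(X_1;\dots;X_{n-1} \to Y)$ if $X_n = f(X_i)$ for some $i < n$ and deterministic function $f$.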

These axioms are based on intuitions concerning the behavior of the union operator in set theory, and are the natural “duals” of the redundancy axioms mentioned above.
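Both axiom lists can be sanity-checked numerically on simple measures. The sketch below uses two illustrative measures that are not the ones proposed in this paper: the “minimum mutual information” redundancy $\min_i I(X_i;Y)$ and its dual, $\max_i I(X_i;Y)$, as a union measure. Both satisfy the four axioms listed for them; Deterministic equality follows from the data-processing inequality. The joint distribution and all function names are hypothetical.

```python
import itertools
import math
from collections import defaultdict


def marginal(p, idx):
    """Marginalize a joint pmf {(y, x_1, ..., x_n): prob} onto coordinates idx."""
    out = defaultdict(float)
    for key, prob in p.items():
        out[tuple(key[j] for j in idx)] += prob
    return out


def mi_bits(p, i):
    """I(X_i; Y) in bits, with Y at coordinate 0 and X_i at coordinate i."""
    p_yx, p_y, p_x = marginal(p, (0, i)), marginal(p, (0,)), marginal(p, (i,))
    return sum(v * math.log2(v / (p_y[(y,)] * p_x[(x,)]))
               for (y, x), v in p_yx.items() if v > 0)


def redundancy_mmi(p, sources):
    """Illustrative redundancy: the minimum of I(X_i; Y) over the sources."""
    return min(mi_bits(p, i) for i in sources)


def union_max(p, sources):
    """Illustrative union information: the maximum of I(X_i; Y) over the sources."""
    return max(mi_bits(p, i) for i in sources)


# Hypothetical joint distribution over (Y, X1, X2, X3, X4): X1, X2 are
# independent fair bits, Y = X1 AND X2, X3 = (X1, X2) so that X1 = f(X3),
# and X4 = 0 is a (constant) function of X1.
p = {}
for x1, x2 in itertools.product((0, 1), repeat=2):
    p[(x1 & x2, x1, x2, (x1, x2), 0)] = 0.25

# Symmetry
assert redundancy_mmi(p, [1, 2]) == redundancy_mmi(p, [2, 1])
# Self-redundancy and self-union
assert redundancy_mmi(p, [1]) == mi_bits(p, 1) == union_max(p, [1])
# Monotonicity
assert redundancy_mmi(p, [1, 2, 3]) <= redundancy_mmi(p, [1, 2])
assert union_max(p, [1, 2, 3]) >= union_max(p, [1, 2])
# Deterministic equality: adding X3 (X1 = f(X3)) leaves redundancy unchanged,
# and adding X4 (X4 = f(X1)) leaves union information unchanged
assert math.isclose(redundancy_mmi(p, [1, 2, 3]), redundancy_mmi(p, [1, 2]))
assert math.isclose(union_max(p, [1, 2, 4]), union_max(p, [1, 2]))
print(round(mi_bits(p, 1), 4), round(mi_bits(p, 3), 4))  # 0.3113 0.8113
```

Passing these checks on one distribution does not prove the axioms; it only illustrates what each one requires.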

Appendix B. Uniqueness Proofs

Proof of Theorem 1.

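Assume there is a redundancy measure $I_\cap$ that obeys the five axioms stated in the theorem. We will show that $I_\cap(X_1;\dots;X_n \to Y) = I_\cap^{\sqsubset}(X_1;\dots;X_n \to Y)$, as defined in Equation (7).

Given Equation (7) and the definition of the supremum, for any $\epsilon > 0$ there exists a random variable $Q$ such that $Q \sqsubset X_i$ for $i \in \{1,\dots,n\}$ and
$$I(Q;Y) \ge I_\cap^{\sqsubset}(X_1;\dots;X_n \to Y) - \epsilon. \tag{A1}$$

By Order equality, $I_\cap(Q;X_1;\dots;X_n \to Y) = I_\cap(Q;X_1;\dots;X_{n-1} \to Y)$, since $Q \sqsubset X_n$. Induction gives
$$I_\cap(Q;X_1;\dots;X_n \to Y) = I_\cap(Q \to Y) = I(Q;Y) \ge I_\cap^{\sqsubset}(X_1;\dots;X_n \to Y) - \epsilon,$$

where we used Self-redundancy and Equation (A1). We also have $I_\cap(X_1;\dots;X_n \to Y) \ge I_\cap(Q;X_1;\dots;X_n \to Y)$ by Symmetry and Monotonicity. Combining gives
$$I_\cap(X_1;\dots;X_n \to Y) \ge I_\cap^{\sqsubset}(X_1;\dots;X_n \to Y) - \epsilon.$$

We now show that $I_\cap^{\sqsubset}$ is the largest measure that satisfies Existence. Let $Q$ be a random variable that obeys $Q \sqsubset X_i$ for all $i$ and $I_\cap(X_1;\dots;X_n \to Y) = I(Q;Y)$. Since $Q$ falls within the feasible set of the optimization problem in Equation (7),
$$I_\cap(X_1;\dots;X_n \to Y) = I(Q;Y) \le I_\cap^{\sqsubset}(X_1;\dots;X_n \to Y).$$

Combining gives
$$I_\cap^{\sqsubset}(X_1;\dots;X_n \to Y) - \epsilon \le I_\cap(X_1;\dots;X_n \to Y) \le I_\cap^{\sqsubset}(X_1;\dots;X_n \to Y).$$

Since this holds for all $\epsilon > 0$, taking the limit $\epsilon \to 0$ gives $I_\cap(X_1;\dots;X_n \to Y) = I_\cap^{\sqsubset}(X_1;\dots;X_n \to Y)$. □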
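To make the optimization in Equation (7) concrete, the sketch below instantiates it for one simple order, chosen purely for illustration and not the Blackwell order used later: $Q \sqsubset X_i$ iff $Q$ is a deterministic function of $X_i$. Under this assumption, two support points of the joint distribution must receive the same value of $Q$ whenever they share the value of any source, so every feasible $Q$ is a function of the connected component of the support (the Gács-Körner common part of the sources). That component label is itself feasible, so by the data-processing inequality it attains the supremum. The function name `common_part_redundancy` and the example distributions are hypothetical.

```python
import math
from collections import defaultdict


def common_part_redundancy(p):
    """sup I(Q;Y) over Q that are deterministic functions of every source.

    Illustrative order (an assumption): Q below X_i iff Q = f_i(X_i).
    p maps outcome tuples (y, x_1, ..., x_n) to probabilities.
    """
    support = [key for key, prob in p.items() if prob > 0]
    parent = list(range(len(support)))

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving
            a = parent[a]
        return a

    # Support points sharing the value of any source must get the same Q,
    # because Q = f_i(X_i); merge them with union-find.
    n_sources = len(support[0]) - 1
    for i in range(1, n_sources + 1):
        first_seen = {}
        for idx, key in enumerate(support):
            if key[i] in first_seen:
                parent[find(idx)] = find(first_seen[key[i]])
            else:
                first_seen[key[i]] = idx

    # Q = connected-component label; return I(Q;Y) in bits.
    p_qy, p_q, p_y = defaultdict(float), defaultdict(float), defaultdict(float)
    for idx, key in enumerate(support):
        q, y, prob = find(idx), key[0], p[key]
        p_qy[(q, y)] += prob
        p_q[q] += prob
        p_y[y] += prob
    return sum(v * math.log2(v / (p_q[q] * p_y[y])) for (q, y), v in p_qy.items())


copy = {(b, b, b): 0.5 for b in (0, 1)}                         # X1 = X2 = Y
and_gate = {(a & b, a, b): 0.25 for a in (0, 1) for b in (0, 1)}  # Y = X1 AND X2
print(common_part_redundancy(copy))      # 1.0
print(common_part_redundancy(and_gate))  # 0.0
```

For the AND gate the support is connected, so the only common function is a constant and this illustrative redundancy is zero; the Blackwell-based measure of Equation (27) uses a weaker order and need not behave the same way.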

Proof of Theorem 2.

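Assume there is a union information measure $I_\cup$ that obeys the five axioms stated in the theorem. We will show that $I_\cup(X_1;\dots;X_n \to Y) = I_\cup^{\sqsubset}(X_1;\dots;X_n \to Y)$, as defined in Equation (8).

Given Equation (8) and the definition of the infimum, for any $\epsilon > 0$ there exists a random variable $Q$ such that $X_i \sqsubset Q$ for $i \in \{1,\dots,n\}$ and
$$I(Q;Y) \le I_\cup^{\sqsubset}(X_1;\dots;X_n \to Y) + \epsilon. \tag{A2}$$

By Order equality, $I_\cup(Q;X_1;\dots;X_n \to Y) = I_\cup(Q;X_1;\dots;X_{n-1} \to Y)$, since $X_n \sqsubset Q$. Induction gives
$$I_\cup(Q;X_1;\dots;X_n \to Y) = I_\cup(Q \to Y) = I(Q;Y) \le I_\cup^{\sqsubset}(X_1;\dots;X_n \to Y) + \epsilon,$$

where we used Self-union and Equation (A2). We also have $I_\cup(X_1;\dots;X_n \to Y) \le I_\cup(Q;X_1;\dots;X_n \to Y)$ by Symmetry and Monotonicity. Combining gives
$$I_\cup(X_1;\dots;X_n \to Y) \le I_\cup^{\sqsubset}(X_1;\dots;X_n \to Y) + \epsilon.$$

We now show that $I_\cup^{\sqsubset}$ is the smallest measure that satisfies Existence. Let $Q$ be a random variable that obeys $X_i \sqsubset Q$ for all $i$ and $I_\cup(X_1;\dots;X_n \to Y) = I(Q;Y)$. Since $Q$ falls within the feasible set of the optimization problem in Equation (8),
$$I_\cup(X_1;\dots;X_n \to Y) = I(Q;Y) \ge I_\cup^{\sqsubset}(X_1;\dots;X_n \to Y).$$

Combining gives
$$I_\cup^{\sqsubset}(X_1;\dots;X_n \to Y) \le I_\cup(X_1;\dots;X_n \to Y) \le I_\cup^{\sqsubset}(X_1;\dots;X_n \to Y) + \epsilon.$$

Since this holds for all $\epsilon > 0$, taking the limit $\epsilon \to 0$ gives $I_\cup(X_1;\dots;X_n \to Y) = I_\cup^{\sqsubset}(X_1;\dots;X_n \to Y)$. □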

Appendix C. Computing $I_\cap^{\preceq}$

Here we consider the optimization problem that defines our proposed measure of redundancy, Equation (27). We first prove a bound on the required cardinality of Q.

Theorem A1.

For optimizing Equation (27), it suffices to consider $Q$ with cardinality $|\mathcal{Q}| = \sum_{i=1}^{n} (|\mathcal{X}_i| - 1) + 1$.

Proof.

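Consider any random variable $Q$ with outcome set $\mathcal{Q}$ which satisfies $Q \preceq X_i$ for all $i$. We show that whenever $Q$ has full support on more than $\sum_i (|\mathcal{X}_i| - 1) + 1$ outcomes, there is another random variable $Q'$ which achieves $I(Q';Y) \ge I(Q;Y)$, while satisfying $Q' \preceq X_i$ for all $i$ and having support on at most $\sum_i (|\mathcal{X}_i| - 1) + 1$ outcomes.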

To begin, let indicate the set of random variables over outcomes , such that all satisfy:

Since , by Equation (23) there exist channels that satisfy Now write the conditional distribution over and Y as

where we used Equation (A3) and defined the channel as

(Note this is a kind of double Bayesian inverse, given Equation (A4)). Equation (A5) implies that for all i.

We now show that there is that achieves and has support on at most outcomes in . Write the mutual information between any and Y as

where is the Kullback-Leibler divergence. We consider the maximum of this mutual information across , . Using Equations (A4) and (A6), this maximum can be written as

where is the set of all distributions over . By conservation of probability, , so we can eliminate a constraint for one of the outcomes of each source i. Thus, is the maximum of a linear function over , subject to hyperplane constraints.
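The step above writes mutual information as an average of Kullback-Leibler divergences. In its standard form, $I(Q;Y) = \sum_q p_Q(q)\, D(p_{Y|Q=q} \,\|\, p_Y)$, which is linear in $p_Q$ once the conditionals $p_{Y|Q=q}$ and the marginal $p_Y$ are held fixed; this linearity is what makes the maximization a linear program over distributions. As a minimal check, on an arbitrary hypothetical joint distribution, both forms agree numerically:

```python
import numpy as np

rng = np.random.default_rng(0)
p_qy = rng.random((4, 3))
p_qy /= p_qy.sum()                     # hypothetical joint p(q, y)
p_q = p_qy.sum(axis=1)                 # marginal p(q)
p_y = p_qy.sum(axis=0)                 # marginal p(y)

# Direct definition: sum_{q,y} p(q,y) log2[p(q,y) / (p(q) p(y))]
mi_direct = np.sum(p_qy * np.log2(p_qy / np.outer(p_q, p_y)))

# KL form: sum_q p(q) D(p(y|q) || p(y)), a weighted sum that is linear in p(q)
p_y_given_q = p_qy / p_q[:, None]
kl = np.sum(p_y_given_q * np.log2(p_y_given_q / p_y[None, :]), axis=1)
mi_kl = np.dot(p_q, kl)

print(np.isclose(mi_direct, mi_kl))  # True
```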

The feasible set is compact, and the maximum will be achieved at one of the extreme points of the feasible set. By Dubins’ theorem [74], any extreme point of this feasible set can be expressed as a convex combination of at most $\sum_i (|\mathcal{X}_i| - 1) + 1$ extreme points of the set of all distributions over $\mathcal{Q}$. In other words, the maximum in Equation (27) is achieved by a random variable with support on at most $\sum_i (|\mathcal{X}_i| - 1) + 1$ values of $\mathcal{Q}$. This random variable satisfies

where the last inequality comes from the fact that Q is an element of . □

We now return to the optimization problem in Equation (27). Given Theorem A1 and the definition of the Blackwell order in Equation (23), it can be rewritten as

where the optimization is over channels with of cardinality . The notation indicates the mutual information that arises from the marginal distribution and the conditional distribution ,

Equation (A7) involves maximizing a convex function over the convex polytope defined by the following system of linear (in)equalities:

We do not place a constraint on in Equation (A12) because that would be redundant with the constraints Equations (A10) and (A11). Also, note that we replaced the sup in Equation (27) with a max in Equation (A7). This is justified because we are maximizing a continuous function over a finite-dimensional, closed, and bounded region, so the supremum is always achieved.

The maximum of a convex function over a convex polytope is attained at one of the vertices of the polytope. To find the solution to Equation (A7), we use a computational geometry package to enumerate the vertices of the feasible polytope, evaluate the objective at each vertex, and pick the maximum value. This procedure also yields the optimal conditional distributions. Code is available at [64].
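The vertex-enumeration procedure can be sketched in a few dozen lines for tiny examples. This is not the code at [64]. It assumes that the variables are $p(q|y)$ and the channels $\kappa_i(q|x_i)$, constrained by nonnegativity, $\sum_q p(q|y) = 1$, $\sum_q \kappa_i(q|x_i) = 1$, and the Blackwell condition $p(q|y) = \sum_{x_i} \kappa_i(q|x_i)\, p(x_i|y)$ (kept for every $q$, since redundant rows are harmless here). It takes $|\mathcal{Q}| = \sum_i (|\mathcal{X}_i| - 1) + 1$ following Theorem A1, and it replaces the computational geometry package with brute-force enumeration of basic feasible solutions, which scales combinatorially. All function names are hypothetical; each `p_x_given_y` array has shape $|\mathcal{X}_i| \times |\mathcal{Y}|$.

```python
import itertools
import numpy as np


def mutual_info_bits(p_y, p_q_given_y):
    """I(Q;Y) in bits from p(y) and p(q|y), given as an array of shape (|Q|, |Y|)."""
    joint = np.clip(p_q_given_y, 0.0, None) * p_y[None, :]   # p(q, y)
    indep = joint.sum(axis=1, keepdims=True) * p_y[None, :]  # p(q) p(y)
    mask = joint > 1e-15
    return float(np.sum(joint[mask] * np.log2(joint[mask] / indep[mask])))


def blackwell_constraints(p_y, p_x_given_y, n_q):
    """Build A z = b for z = [p(q|y), kappa_1(q|x_1), ..., kappa_n(q|x_n)]."""
    n_y = len(p_y)
    offsets = np.cumsum([0, n_q * n_y] + [n_q * px.shape[0] for px in p_x_given_y])
    n_vars = int(offsets[-1])
    rows, rhs = [], []

    def p_idx(q, y):                       # position of p(q|y) in z
        return q * n_y + y

    def k_idx(i, q, x):                    # position of kappa_i(q|x) in z
        return int(offsets[i + 1]) + q * p_x_given_y[i].shape[0] + x

    def add_row(entries, value):
        row = np.zeros(n_vars)
        for j, coef in entries:
            row[j] += coef
        rows.append(row)
        rhs.append(value)

    for y in range(n_y):                   # sum_q p(q|y) = 1
        add_row([(p_idx(q, y), 1.0) for q in range(n_q)], 1.0)
    for i, px in enumerate(p_x_given_y):
        for x in range(px.shape[0]):       # sum_q kappa_i(q|x) = 1
            add_row([(k_idx(i, q, x), 1.0) for q in range(n_q)], 1.0)
        for q in range(n_q):               # p(q|y) = sum_x kappa_i(q|x) p(x|y)
            for y in range(n_y):
                add_row([(p_idx(q, y), 1.0)]
                        + [(k_idx(i, q, x), -px[x, y]) for x in range(px.shape[0])], 0.0)
    return np.array(rows), np.array(rhs)


def vertices(A, b, tol=1e-9):
    """Yield the vertices of {z : A z = b, z >= 0} as basic feasible solutions."""
    r = int(np.linalg.matrix_rank(A))
    for basis in itertools.combinations(range(A.shape[1]), r):
        cols = list(basis)
        A_b = A[:, cols]
        if np.linalg.matrix_rank(A_b) < r:
            continue                       # columns do not form a basis
        z_b = np.linalg.lstsq(A_b, b, rcond=None)[0]
        if np.linalg.norm(A_b @ z_b - b) > tol or z_b.min() < -tol:
            continue                       # basic solution is infeasible
        z = np.zeros(A.shape[1])
        z[cols] = z_b
        yield z


def blackwell_redundancy(p_y, p_x_given_y):
    """Max of the convex objective I(Q;Y) over the polytope's vertices (tiny cases only)."""
    p_y = np.asarray(p_y, dtype=float)
    n_q = sum(px.shape[0] - 1 for px in p_x_given_y) + 1   # cardinality from Theorem A1
    A, b = blackwell_constraints(p_y, p_x_given_y, n_q)
    best = 0.0
    for z in vertices(A, b):
        p_q_given_y = z[: n_q * len(p_y)].reshape(n_q, len(p_y))
        best = max(best, mutual_info_bits(p_y, p_q_given_y))
    return best


p_y = np.array([0.5, 0.5])
identity = np.eye(2)            # p(x|y): the source copies Y
noise = np.full((2, 2), 0.5)    # p(x|y): the source is independent of Y
print(round(blackwell_redundancy(p_y, [identity, identity]), 6))  # 1.0
print(round(blackwell_redundancy(p_y, [identity, noise]), 6))     # 0.0
```

With two sources that both copy a uniform binary $Y$, the sketch returns 1 bit; if one source is independent of $Y$, it returns 0, since any $Q$ below that source in the Blackwell order is itself independent of $Y$.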

Appendix D. Continuity of $I_\cap^{\preceq}$

To prove the continuity of , we begin by considering the feasible set of the optimization problem in Equation (A7), as specified by the system of (in)equalities in Equations (A8) to (A12). For convenience, write this system of (in)equalities in matrix notation,

where is a vector representation of , the matrix A encodes the left-hand side of Equations (A10) to (A12), and the vector a is filled with 1s and 0s, as appropriate.

We first prove the following lemma.

Lemma A1.

The matrix A defined in Equation (A13) is full rank if $n-1$ or more of the pairwise conditional distributions $p_{X_i|Y}$ have $\operatorname{rank} p_{X_i|Y} = |\mathcal{Y}|$.

Proof.

Without loss of generality, assume that has full support (otherwise none of the pairwise marginals can achieve rank ). Write A in block matrix form as , where the matrix B has rows and encodes the constraints of Equations (A10) and (A11), and the matrix C has rows and encodes the constraints of Equation (A12).

Each row in B has a 1 in some column which is zero in every other row of B and every row of C. This column corresponds either to for a particular y (for constraints like Equation (A10)), or to for a particular i and (for constraints like Equation (A11)). These columns are 0 in C because is omitted from Equation (A12). This means that no row of B is a linear combination of other rows in B or C, and that no row in C is a linear combination of any set of other rows that includes a row in B. Therefore, if the rows of A are linearly dependent, it must be that the rows of C are linearly dependent.

Next, let indicate the row of C that represents the constraints in Equation (A12) for some source i and outcomes . Any such row has a column for each with value (at the same index as the row in that represents ). Since for at least one , one of these columns must be non-zero. At the same time, these columns are zero in every row where or . This means that row can only be a linear combination of other rows in C if, for all , is a linear combination of for . In linear algebra terms, this can be stated as .

The previous argument shows that if A is linearly dependent, there must be at least one source i with and some row which is a linear combination of other rows from C. Observe that this row has a column with value (at the same index as the row in that represents ). This column is zero in every other row for or . This means that is a linear combination of a set of other rows in C that include some row for . This implies that is also a linear combination of other rows in C, which means that .

We have shown that if the rows of A are linearly dependent, there must be at least two pairwise conditionals $p_{X_i|Y}$ with $\operatorname{rank} p_{X_i|Y} < |\mathcal{Y}|$. □
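Lemma A1's sufficient condition can be checked directly on the pairwise conditionals. The sketch below assumes, as the proof above indicates, that the condition is that at least $n-1$ of the matrices $p_{X_i|Y}$ (arranged as $|\mathcal{X}_i| \times |\mathcal{Y}|$ arrays, with $p_Y$ of full support) have rank $|\mathcal{Y}|$. The function name and example matrices are hypothetical, and NumPy's default numerical tolerance stands in for exact rank.

```python
import numpy as np


def lemma_a1_condition(p_x_given_y_list):
    """True if at least n-1 of the matrices p(x_i|y) (shape |X_i| x |Y|) have rank |Y|."""
    n = len(p_x_given_y_list)
    n_full = sum(
        int(np.linalg.matrix_rank(np.asarray(m, dtype=float)) == np.shape(m)[1])
        for m in p_x_given_y_list
    )
    return n_full >= n - 1


identity = np.eye(2)            # source copies a binary Y: rank 2
noise = np.full((2, 2), 0.5)    # source independent of Y: rank 1
print(lemma_a1_condition([identity, noise]))         # True
print(lemma_a1_condition([noise, noise]))            # False
print(lemma_a1_condition([identity, noise, noise]))  # False
```

When the check fails, Lemma A1 is silent: the matrix A may still be full rank.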

We are now ready to prove Theorem 5.

Proof of Theorem 5.

For the case of a single source ($n = 1$), $I_\cap^{\preceq}$ reduces to the mutual information $I(X_1;Y)$, which is continuous (Section 2.3, [75]). Thus, without loss of generality, we assume that $n \ge 2$.

Next, we define some notation. Note that the optimum value () and the feasible set of the optimization problem in Equation (A7) are functions of the pairwise marginal distributions . We write for the set of all pairwise marginal distributions which have the same marginal over Y:

For any , let indicate the corresponding optimum value in Equation (A7), given the marginals in r, and let indicate the feasible set of the optimization problem, as defined in Equation (A13).

Note that the matrix A in Equation (A13) depends on the choice of r, which we indicate by writing it as the matrix-valued function . Given any and feasible solution , let indicate the corresponding mutual information , where the marginal distribution over Y is specified by r and the conditional distribution of Q given Y is specified by . Using this notation, .

Below, we show that is continuous if r is rank regular [76], which means that there is a neighborhood of r such that for all . Then, to prove the theorem, we assume that is full rank. Given Lemma A1, this is true as long as or more of the pairwise conditionals have . Note that a matrix M is full rank iff the singular values are all strictly positive. Since is full rank, and and are continuous, there is a neighborhood U of r such that the singular values are all strictly positive for all , therefore all have full rank. This shows that r is rank regular and so is continuous at r.

We now prove that is continuous if is rank regular. To do so, we will use Hoffman’s Theorem [77,78]. In our case, it states that for any pair of marginals and a feasible solution , there exists a feasible solution such that

where is a constant that does not depend on or . (In the notation of [78], we take , and , and use that the norm of is bounded, given that it is finite dimensional and has entries in ). We will also use Daniel’s theorem (Theorem 4.2, [78]), which states that for any such that , and any feasible solution , there exists such that

where is a constant that doesn’t depend on (in the notation of [78], and again use that have a bounded norm).

Now consider also any sequence that converges to a marginal . Let indicate an optimal solution of Equation (A7) for , so that . Given Equation (A14), there is a corresponding sequence such that

Since is continuous and converges to r, we have and therefore . This implies

where we first used continuity of mutual information, and .

Now assume that r is rank regular. Since converges to r, for all sufficiently large i. Let be an optimal solution of Equation (A7) for r, so that . Given Equation (A15), for all sufficiently large i there exists such that

As before, we have and , which implies

where we first used continuity of mutual information, , and .

Finally, note that is a real analytic function of r. This means that almost all r are rank regular, because those r which are not rank regular form a proper analytic subset of (which has measure zero) [76]. Thus, is continuous almost everywhere. □

We finish our analysis of the continuity of by showing global continuity when the target is a binary random variable.

Corollary A1.

is continuous everywhere when Y is a binary random variable.

Proof.

In an overloading of notation, let and indicate and the mutual information , respectively, for the joint distribution . By Theorem 5, can only be discontinuous at the joint distribution if there is a source with . However, if source has rank 1, then the conditional distributions are the same for all , so and (since ). Finally, consider any sequence of joint distributions for that converges to . We have

where we used the continuity of mutual information. This shows that , proving continuity. □

Appendix E. Behavior of $I_\cap^{\preceq}$ on Gaussian Random Variables