1. Introduction

Extensions of the maximum entropy principle based on non-standard entropic functionals [1,2,3,4] have proven to be useful for the study of diverse problems in physics and elsewhere, particularly in connection with complex systems [5,6]. These lines of enquiry were greatly stimulated by research into a generalized thermostatistics advanced in the late 1980s, in which the canonical probability distributions optimize the power-law, non-additive $S_q$ entropies [7]. The $S_q$ thermostatistics has been successfully applied to the analysis of a wide range of systems and processes in physics, astronomy, biology, economics, and other fields [8,9,10,11]. Motivated by the work on the $S_q$ entropies, researchers also explored the properties and possible applications of several other entropic measures, such as those introduced by Borges and Roditi [12], by Anteneodo and Plastino [13], by Kaniadakis [14], and by Obregón [15]. Recent reviews of these and other entropic forms can be found in [16,17]. These developments, in turn, led to the investigation of general properties of entropic variational principles. The aim was to elucidate which features are shared by large families of entropic forms (or are even universal) and, conversely, which features characterize specific entropies, such as the $S_q$ ones. Several aspects of general entropic variational principles have been studied along those lines. These include the Legendre transform structure [18,19,20], the maximum entropy–minimum norm approach to inverse problems [21], the implementation of dynamical thermostatting schemes [22,23], the interpretation of superstatistics in terms of entropic variational prescriptions [24], and the derivation of generalized maximum-entropy phase-space densities from Liouville dynamics [25].

Of all the thermostatistics associated with generalized entropic forms, the one derived from the $S_q$ entropies has been the most intensively studied and fruitfully applied. The $S_q$-thermostatistics exhibits some intriguing structural similarities with the standard Boltzmann–Gibbs one. The aim of the present contribution is to explore one of these similarities, within the context of thermostatistical formalisms based on general entropic functionals.

As is well known, the Boltzmann–Gibbs entropy of a normalized probability distribution can be expressed as minus the mean value of the logarithms of the probabilities or, alternatively, as the mean value of the logarithms of the inverse probabilities. On the other hand, the probability distribution that optimizes this entropy under the constraints imposed by normalization and by the energy mean value has an exponential form. This exponential is the inverse function of the above-mentioned logarithm. In a nutshell: the entropy is the mean value of a logarithm (evaluated on the inverse probabilities), while the maximum-entropy probabilities are given by an exponential function, which is the inverse function of the logarithm. This structure turns out to be nontrivial and, up to a duality condition, is shared by the $S_q$-thermostatistics. Indeed, it is possible to define a q-logarithm function and its inverse function, a q-exponential, both parameterized by q. The entropy $S_q$ is then the mean value of a q-logarithm (evaluated on the inverse probabilities), while the probability distribution optimizing $S_q$ has a q-exponential form. The alluded duality condition, however, requires that the value of the q-parameter associated with the q-logarithm in general differ from the value associated with the q-exponential. Both q-values are connected via the duality relation $q \leftrightarrow 2-q$, which is ubiquitous in the $S_q$-thermostatistics.
In the present work, we shall explore which entropic measures generate similar structures, linking the entropic functional, regarded as the mean value of a generalized logarithm, with the form of the maximum-entropy distributions.

This paper is organized as follows. In Section 2, we provide a brief review of the $S_q$-thermostatistical formalism, focusing on the q-logarithm duality relation. In Section 3, we explore which entropic functionals give rise to structures, and duality relations, similar to those characterizing the $S_q$-thermostatistics. More general scenarios are considered in Sections 3.3 and 3.4. Finally, some conclusions are drawn in Section 4.

2. Entropies, q-Logarithms, and q-Exponential Maximum-Entropy Probability Distributions

The $S_q$-thermostatistics is constructed on the basis of the non-additive, power-law entropy $S_q$ [5], defined as

$$S_q = k\,\frac{1-\sum_{i=1}^{W} p_i^{\,q}}{q-1}, \tag{1}$$

where $q \in \mathbb{R}$ is a parameter characterizing the degree of non-additivity exhibited by the entropy, and $k$ is a constant chosen once and for all, determining the dimensions and the units in which the entropy is measured. Finally, $\{p_i\}_{i=1}^{W}$ is an appropriately normalized probability distribution for a system admitting
$W$ microstates. In what follows, we shall assume that $k = 1$. The limit $q \to 1$ corresponds to the standard Boltzmann–Gibbs (BG) entropy, that is,

$$S_1 = S_{BG} = -k\sum_{i=1}^{W} p_i \ln p_i .$$

The power-law entropy $S_q$ constitutes a distinguished and founding member of the club of generalized entropies, which is nowadays the focus of intensive research activity [3,4,16].

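The q-logarithm function is given by

$$\ln_q x = \frac{x^{1-q}-1}{1-q} \qquad (x>0;\ \ln_1 x = \ln x). \tag{2}$$

Its inverse function is the q-exponential,

$$\exp_q x = \left[1+(1-q)\,x\right]_+^{1/(1-q)} \qquad (\exp_1 x = e^x), \tag{3}$$

where $[z]_+ = \max\{z,0\}$. These two functions constitute essential ingredients of the $S_q$ thermostatistical formalism. For the sake of completeness, it is worth mentioning that the alternative notation $e_q^x$ is sometimes used for the q-exponential. The q-logarithm and the q-exponential functions arise naturally when one considers the constrained optimization of the entropy $S_q$ [5,9]. Moreover, it is central to the q-thermostatistical theory that the $S_q$ entropy itself can be expressed in terms of q-logarithms,

$$S_q = k\sum_{i=1}^{W} p_i \ln_q\!\left(\frac{1}{p_i}\right). \tag{4}$$

Note that, for $q=1$, the above equation reduces to the well-known one, $S_{BG} = k\sum_{i=1}^{W} p_i \ln(1/p_i)$.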
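The following is a minimal numerical sketch of Equations (1)–(4), assuming $k = 1$. The function names `ln_q`, `exp_q`, `tsallis_entropy`, and `tsallis_entropy_via_ln_q` are illustrative only and are not taken from any library. The sketch checks that the q-exponential inverts the q-logarithm, and that the two expressions (1) and (4) for $S_q$ agree.

```python
import math


def ln_q(x: float, q: float) -> float:
    """q-logarithm of Eq. (2); equals math.log(x) for q == 1."""
    if q == 1.0:
        return math.log(x)
    return (x ** (1.0 - q) - 1.0) / (1.0 - q)


def exp_q(y: float, q: float) -> float:
    """q-exponential of Eq. (3), using the cut-off [z]_+ = max(z, 0)."""
    if q == 1.0:
        return math.exp(y)
    base = 1.0 + (1.0 - q) * y
    if base <= 0.0:
        if q < 1.0:
            return 0.0  # cut-off region of the q-exponential
        raise ValueError("exp_q is not defined here for q > 1")
    return base ** (1.0 / (1.0 - q))


def tsallis_entropy(p, q):
    """S_q from Eq. (1) with k = 1 (Boltzmann-Gibbs form for q == 1)."""
    if q == 1.0:
        return -sum(pi * math.log(pi) for pi in p if pi > 0.0)
    return (1.0 - sum(pi ** q for pi in p)) / (q - 1.0)


def tsallis_entropy_via_ln_q(p, q):
    """S_q from Eq. (4): the mean value of ln_q(1/p_i)."""
    return sum(pi * ln_q(1.0 / pi, q) for pi in p if pi > 0.0)


if __name__ == "__main__":
    p = [0.5, 0.3, 0.2]
    for q in (0.5, 1.0, 1.5):
        print(q, tsallis_entropy(p, q), tsallis_entropy_via_ln_q(p, q))
```

Both entropy functions should print the same value for each q. The q == 1 branches reproduce the Boltzmann–Gibbs case exactly rather than through a limit.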

The gist of the $S_q$ thermostatistics is the optimization of $S_q$ under suitable constraints. The $S_q$ entropic variational problem can be formulated using standard linear constraints or nonlinear constraints based on escort probability distributions [26,27]. When working with more general entropic functionals, it is not well understood which escort mean values are appropriate, and few or no concrete applications of escort mean values to particular problems have been developed. Consequently, to investigate and clarify the distinguishing features of the $S_q$ formalism within the context of more general entropic formalisms, it is convenient to restrict our considerations to the optimization of the $S_q$ entropy under linear constraints. The main instance of the $S_q$ variational problem is the one yielding the generalized canonical probability distribution. It corresponds to the optimization of $S_q$ under the normalization constraint,

$$\sum_{i=1}^{W} p_i = 1, \tag{5}$$

and the mean-energy constraint. We assume that the $i$th microstate of the system under consideration, which has probability $p_i$, has energy $\varepsilon_i$. The mean energy is then

$$\langle \varepsilon \rangle = \sum_{i=1}^{W} p_i\,\varepsilon_i. \tag{6}$$

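Introducing the Lagrange multipliers $\alpha$ and $\beta$, corresponding to the constraints of normalization (5) and mean energy (6), the optimization of $S_q$ leads to the variational problem

$$\frac{\partial}{\partial p_i}\left[S_q - \alpha\sum_{j=1}^{W} p_j - \beta\sum_{j=1}^{W} p_j\,\varepsilon_j\right] = 0, \tag{7}$$

This yields the stationarity condition (8), which fixes $p_i^{\,q-1}$ as an affine function of $\alpha + \beta\varepsilon_i$.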

For later comparison with thermostatistical formalisms based on general entropic forms, it will prove convenient to recast this condition as Equation (9), in terms of two parameters, $A$ and $B$. At first glance, introducing these parameters might seem cumbersome, since within the $S_q$-thermostatistics they are simple functions of the entropic parameter q. The new parameters, however, will prove essential in two respects: when exploring the duality properties exhibited by thermostatistical formalisms based on other generalized entropies, and when comparing those properties with the ones corresponding to the $S_q$ entropy. In those scenarios, the parameters $A$ and $B$ take other values, depending on the parameterized form of the relevant entropic functionals. Using $A$ and $B$, the maximum $S_q$ entropy probability distribution can be expressed in terms of a q-exponential of index $q^*$, Equation (10), where

$$q^* = 2 - q. \tag{11}$$

Comparing now Equation (4) for the entropy with Equation (10) for the probabilities optimizing the entropy, we see the following. The $S_q$ entropy can be expressed in terms of a q-logarithm function, while the optimal probabilities are given by an inverse q-logarithm function (that is, by a q-exponential function). However, the value of the q-parameter appearing in the q-logarithm associated with the entropy does not coincide with the one appearing in the inverse q-logarithm defining the optimal probabilities, denoted by $q^*$. This pair of q-values satisfies the duality relation (11). It is important to emphasize that the duality relation (11) has the property

$$(q^*)^* = q. \tag{12}$$

In other words, the dual of the dual of q is equal to q itself. Note also that, in the Boltzmann–Gibbs limit, $q = 1$, the duality relation reduces to $q^* = q = 1$. The Boltzmann–Gibbs thermostatistics, regarded as a particular member of the $S_q$-thermostatistical family, is therefore self-dual. The duality relation (11) between the values of the q-parameters characterizing two q-logarithm functions can be reformulated as a duality relation between the q-logarithms themselves. Indeed, one has that

$$\ln_{q^*}(x) = -\ln_q\!\left(\frac{1}{x}\right). \tag{13}$$

For $q = 1$, the self-dual condition $q^* = q = 1$ is obtained, and relation (13) reduces to the well-known property of the standard logarithm, $\ln x = -\ln(1/x)$.
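The duality relations (11)–(13) can be checked numerically with the short sketch below. The helper names `dual` and `duality_residual` are illustrative, not part of any established library. The sketch verifies that the map $q \mapsto 2-q$ is an involution, and that $\ln_{q^*}(x) + \ln_q(1/x)$ vanishes up to rounding.

```python
import math


def ln_q(x: float, q: float) -> float:
    """q-logarithm of Eq. (2)."""
    if q == 1.0:
        return math.log(x)
    return (x ** (1.0 - q) - 1.0) / (1.0 - q)


def dual(q: float) -> float:
    """Additive duality q -> q* = 2 - q of Eq. (11)."""
    return 2.0 - q


def duality_residual(x: float, q: float) -> float:
    """ln_{q*}(x) + ln_q(1/x); Eq. (13) states that this vanishes."""
    return ln_q(x, dual(q)) + ln_q(1.0 / x, q)


if __name__ == "__main__":
    for q in (0.5, 1.0, 1.7):
        print(q, dual(dual(q)), duality_residual(3.0, q))
```

The case q = 1 is mapped to itself, which is the self-duality of the Boltzmann–Gibbs case noted above.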

3. Generalized Entropies and Logarithms

Now, we shall consider a generic trace-form entropy $S_G$. It can always be written in the form

$$S_G = \sum_{i=1}^{W} p_i \ln_G\!\left(\frac{1}{p_i}\right), \tag{14}$$

expressed in terms of an appropriate generalized logarithm function $\ln_G$. The specific form of $\ln_G$ depends on which particular thermostatistical formalism one is considering. For example, in the case of the $S_q$-based thermostatistics, $\ln_G$ is the q-logarithm, $\ln_G = \ln_q$. Note that the subindex “G” stands for “generalized”, and it does not represent a numerical parameter. To lead to a sensible entropy, the function $\ln_G$ must meet three requirements. It has to be continuous and twice differentiable. It has to comply with $\ln_G(x) > 0$ for $x > 1$ and $\ln_G(1) = 0$. Finally, it has to satisfy the concavity requirement $\ln_G''(x) < 0$.
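The concavity requirement can be motivated by differentiating a single term of (14) twice: $\frac{d^2}{dp^2}\left[p\ln_G(1/p)\right] = \ln_G''(1/p)/p^3$. Each summand is therefore concave in $p$ exactly when $\ln_G''(x) < 0$ at $x = 1/p$. The sketch below checks this identity by finite differences for $\ln_G = \ln_q$, using $\ln_q''(x) = -q\,x^{-q-1}$. The helper names and the step size `h` are illustrative choices.

```python
import math


def ln_q(x: float, q: float) -> float:
    """q-logarithm of Eq. (2)."""
    if q == 1.0:
        return math.log(x)
    return (x ** (1.0 - q) - 1.0) / (1.0 - q)


def summand_curvature(p: float, q: float, h: float = 1e-4) -> float:
    """Central finite-difference estimate of d^2/dp^2 [p ln_q(1/p)]."""
    f = lambda t: t * ln_q(1.0 / t, q)
    return (f(p + h) - 2.0 * f(p) + f(p - h)) / h ** 2


def predicted_curvature(p: float, q: float) -> float:
    """ln_q''(1/p) / p**3, with ln_q''(x) = -q x**(-q - 1)."""
    x = 1.0 / p
    return -q * x ** (-q - 1.0) / p ** 3


if __name__ == "__main__":
    for q in (-0.5, 0.5, 2.0):
        print(q, summand_curvature(0.3, q), predicted_curvature(0.3, q))
```

For q > 0 both numbers are negative (a concave summand). For the negative value q = -0.5 they are positive, so the $\ln_q$ requirement $\ln_G'' < 0$ fails there.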

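One can optimize the entropic measure (14) under the constraints imposed by normalization (5) and by the energy mean value (6). The corresponding variational problem reads

$$\frac{\partial}{\partial p_i}\left[S_G - \alpha\sum_{j=1}^{W} p_j - \beta\sum_{j=1}^{W} p_j\,\varepsilon_j\right] = 0, \tag{15}$$

where $\alpha$ and $\beta$ are the Lagrange multipliers corresponding to the normalization and the mean energy constraints. The solution to the variational problem is given by a probability distribution complying with the equations

$$\ln_G(x_i) - x_i\,\ln_G'(x_i) = \alpha + \beta\,\varepsilon_i, \qquad i = 1,\dots,W, \tag{16}$$

where $x_i = 1/p_i$.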
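Here is a minimal sketch of how the $q^*$-exponential arises for $\ln_G = \ln_q$. It assumes that the stationarity condition has the form $\ln_G(x_i) - x_i\ln_G'(x_i) = \alpha + \beta\varepsilon_i$ with $x_i = 1/p_i$. This is what term-by-term differentiation of (14) gives when the multipliers enter as $-\alpha\sum_j p_j - \beta\sum_j p_j\varepsilon_j$. For $\ln_q$ the condition reads $(q\,p_i^{\,q-1} - 1)/(1-q) = \alpha + \beta\varepsilon_i$, and solving for $p_i$ gives $p_i = \exp_{2-q}\!\big(-(\alpha+\beta\varepsilon_i+1)/q\big)$. The affine argument depends on this sign convention and need not coincide with the paper's constants A and B. The values of `alpha` and `beta` are illustrative. The resulting $p_i$ are not normalized, since normalization would fix $\alpha$.

```python
import math


def ln_q(x: float, q: float) -> float:
    """q-logarithm of Eq. (2)."""
    if q == 1.0:
        return math.log(x)
    return (x ** (1.0 - q) - 1.0) / (1.0 - q)


def d_ln_q(x: float, q: float) -> float:
    """Derivative of the q-logarithm: d/dx ln_q(x) = x**(-q)."""
    return x ** (-q)


def exp_q(y: float, q: float) -> float:
    """q-exponential of Eq. (3), restricted to a positive base."""
    if q == 1.0:
        return math.exp(y)
    base = 1.0 + (1.0 - q) * y
    if base <= 0.0:
        raise ValueError("argument outside the range used in this sketch")
    return base ** (1.0 / (1.0 - q))


def stationarity_lhs(p: float, q: float) -> float:
    """ln_q(x) - x ln_q'(x), evaluated at x = 1/p."""
    x = 1.0 / p
    return ln_q(x, q) - x * d_ln_q(x, q)


def p_from_power_law(rhs: float, q: float) -> float:
    """Solve (q p**(q-1) - 1)/(1 - q) = rhs directly for p (q != 1)."""
    return ((1.0 + (1.0 - q) * rhs) / q) ** (1.0 / (q - 1.0))


def p_as_dual_exponential(rhs: float, q: float) -> float:
    """The same p written as exp_{2-q}(-(rhs + 1)/q)."""
    return exp_q(-(rhs + 1.0) / q, 2.0 - q)


if __name__ == "__main__":
    alpha, beta, q = 0.2, 0.7, 1.4  # illustrative values only
    for eps in (0.0, 0.5, 1.0):
        rhs = alpha + beta * eps
        print(eps, p_from_power_law(rhs, q), p_as_dual_exponential(rhs, q))
```

The two columns printed for each energy coincide. This shows that, under the stated convention, the optimal $p_i$ are $(2-q)$-exponentials of an affine function of $\alpha + \beta\varepsilon_i$.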

Equation (16) arises from a generic entropy optimization problem: the optimization of any trace-form entropy leads to an equation of the form (16). Here, we want to consider a particular family of entropies, leading to maximum entropy distributions that satisfy a special symmetry requirement. We want the maximum entropy distribution $\{p_i\}$ to be of the form

$$p_i = \ln_{G^*}^{-1}(\gamma_i), \tag{17}$$

where $\gamma_i$ is determined by $\alpha + \beta\varepsilon_i$ together with two appropriate constants, $A$ and $B$. Here $\ln_{G^*}^{-1}$ is the inverse of a generalized logarithmic function $\ln_{G^*}$, which is related to $\ln_G$ through a duality relationship. A few clarifying remarks are now in order. First, $\gamma_i$ is, up to the additive and multiplicative constants $A$ and $B$, equal to the right-hand side of (16). Second, the constants $A$ and $B$ depend only on the form of the entropy (14). They do not depend on any details of the system under consideration, such as the number of microstates $W$, the values of the microstates' energies $\varepsilon_i$, or the values of the Lagrange multipliers $\alpha$ and $\beta$. Last, the duality relation connecting the functions $\ln_G$ and $\ln_{G^*}$ is such that the dual of the dual is equal to the original function, that is,

$$\left(\ln_{G^*}\right)^{*} = \ln_G. \tag{18}$$

Combining Equations (16) and (17), one obtains Equation (19). Introducing two auxiliary constants, this equation can be cast in the more convenient form (20). For a given duality relation $\ln_G \to \ln_{G^*}$, and given values of the parameters $A$ and $B$, Equation (20) can be regarded as a differential equation that has to be obeyed by the generalized logarithmic function $\ln_G$. To solve this differential equation, one needs an initial condition, given by the value $\ln_G(x_0)$ adopted by the generalized logarithm at some particular point $x_0$. We shall assume, as an initial condition, that $\ln_G(1) = 0$.

Different forms of the duality relation are compatible with different forms of the generalized logarithm, and with different forms of the generalized entropy. In what follows, we shall explore some instances of duality relations, in order to determine which entropic forms are compatible with them.

3.1. The Duality Condition Satisfied by the $S_q$ Thermostatistics

Motivated by the $S_q$-based thermostatistics, we shall first adopt the duality condition

$$\ln_{G^*}(x) = -\ln_G\!\left(\frac{1}{x}\right), \tag{21}$$

which is precisely the relation (13) satisfied by the $S_q$-thermostatistics. Equation (20) then takes the form (22). Therefore, to find the form of $\ln_G$, we have to solve the differential equation (23) with the initial condition $\ln_G(1) = 0$. The (unique) solution of Equation (23) is given by (24). We see that, up to the multiplicative constant $A$, the only generalized logarithmic function leading to an entropy optimization scheme compatible with the duality condition (21) is the q-logarithm (25), that is, $\ln_G = A\ln_q$. The parameter $B$ appearing in (22) coincides with the parameter q of the $S_q$-thermostatistics.
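The following is a minimal sketch of why the solution is a multiple of a q-logarithm. It assumes, for illustration only, that Equation (23) is a linear first-order equation of the form $x\,y'(x) = c_1\,y(x) + c_2$ with $y(1) = 0$, where $y$ stands for $\ln_G$. The constants `c1` and `c2` are hypothetical stand-ins for combinations of A and B; the exact form of (23) is not reproduced here. Under that assumption, the unique solution is $c_2\ln_{1-c_1}(x)$, that is, a multiple of a q-logarithm with $q = 1 - c_1$. The code integrates the equation with a hand-written fourth-order Runge–Kutta scheme and compares the result with that closed form.

```python
import math


def ln_q(x: float, q: float) -> float:
    """q-logarithm of Eq. (2)."""
    if q == 1.0:
        return math.log(x)
    return (x ** (1.0 - q) - 1.0) / (1.0 - q)


def integrate_linear_ode(c1: float, c2: float, x_end: float,
                         steps: int = 2000) -> float:
    """Classical RK4 for dy/dx = (c1*y + c2)/x, starting from y(1) = 0."""
    def rhs(x, y):
        return (c1 * y + c2) / x

    h = (x_end - 1.0) / steps  # negative step if x_end < 1
    x, y = 1.0, 0.0
    for _ in range(steps):
        k1 = rhs(x, y)
        k2 = rhs(x + h / 2, y + h * k1 / 2)
        k3 = rhs(x + h / 2, y + h * k2 / 2)
        k4 = rhs(x + h, y + h * k3)
        y += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        x += h
    return y


if __name__ == "__main__":
    c1, c2 = 0.4, 1.5  # hypothetical values
    for x_end in (0.4, 3.0):
        print(x_end, integrate_linear_ode(c1, c2, x_end),
              c2 * ln_q(x_end, 1.0 - c1))
```

The numerical and closed-form values agree to many digits. The multiplicative constant `c2` plays the role that the constant A plays in the text.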

3.2. The Simplest Duality Relation

We shall now consider the simplest possible duality relation, namely,

$$\ln_{G^*}(x) = \ln_G(x). \tag{26}$$

In spite of its simplicity, this duality relation is worth considering, because it includes the standard logarithm (and the corresponding Boltzmann–Gibbs scenario) as a particular case. It is interesting, therefore, to explore which entropic forms are compatible with the simplest conceivable condition (26), even if this exploration is not a priori motivated by a generalized entropy of known physical relevance.

Combining the general Equation (20) with the duality relation (26), one obtains Equation (27). We then have to solve the ordinary differential equation (28) or, equivalently, (29), with the condition $\ln_G(1) = 0$. At first sight, Equation (29) may look like a standard ordinary differential equation. It has, however, a peculiarity: in the right-hand side of (29), the unknown function $\ln_G$ is evaluated at two different values of its argument, $x$ and $1/x$. This situation is similar to the one that occurs, for instance, with differential equations describing dynamical systems with delay. In the case of (29), this difficulty can be removed by recasting the equation as a pair of coupled ordinary differential equations. To this end, we introduce the two auxiliary functions defined in (30).

The differential Equation (28) can be reformulated as the two coupled differential equations (31), with initial conditions inherited from $\ln_G(1) = 0$. To find a solution for (31), we propose the ansatz (32). Inserting the ansatz (32) into the differential Equations (31), one can verify that (32) constitutes a solution, provided that conditions (33) and (34) are satisfied. It follows from (33) and (34) that relation (35) holds. The relations (33)–(35), together with the initial conditions, lead to the solution given by Equations (36) and (37). The solution to the system of differential Equations (31) is completely determined by these initial conditions. Therefore, given these conditions, and for admissible parameter values, the solution (36) and (37) is unique. The entropy compatible with the duality relation (26) is then the trace-form entropy (14), with $\ln_G$ given by this solution. One obtains Equation (38), which, after some algebra, can be recast in the more convenient form (39).

Introducing now two entropic indices, $q_1$ and $q_2$, the entropy (39) can be expressed as a linear combination of two $S_q$ entropies, $S_{q_1}$ and $S_{q_2}$, Equation (40), with the coefficients given in (41). In the limit in which both $q_1$ and $q_2$ tend to 1, the generalized entropy (40) is, up to a multiplicative constant, equal to the Boltzmann–Gibbs entropy $S_{BG}$.
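To illustrate how a linear combination of two $S_q$ entropies can arise, suppose that the generalized logarithm has the form $\ln_G(x) = c + a_1x^{\lambda} + a_2x^{-\lambda}$, with $c = -(a_1 + a_2)$ so that $\ln_G(1) = 0$. This form is hypothetical: it is an assumption made for illustration, not a reproduction of Equations (36)–(39). Using $\sum_i p_i^{\,1\mp\lambda} = 1 \pm \lambda S_{1\mp\lambda}$, the trace-form entropy (14) becomes $\lambda\left[a_1 S_{1-\lambda} - a_2 S_{1+\lambda}\right]$, that is, a combination with $q_1 = 1-\lambda$ and $q_2 = 1+\lambda$. The sketch checks this identity numerically. It also shows that, for the particular choice $a_1 = -a_2 = 1/(2\lambda)$, the function $\ln_G(x)$ tends to $\ln x$ as $\lambda \to 0$, and the entropy approaches $S_{BG}$.

```python
import math


def tsallis(p, q):
    """S_q of Eq. (1) with k = 1; Boltzmann-Gibbs entropy for q == 1."""
    if q == 1.0:
        return -sum(pi * math.log(pi) for pi in p)
    return (1.0 - sum(pi ** q for pi in p)) / (q - 1.0)


def make_generalized_log(a1, a2, lam):
    """Hypothetical ln_G(x) = c + a1 x**lam + a2 x**(-lam), with ln_G(1) = 0."""
    c = -(a1 + a2)
    return lambda x: c + a1 * x ** lam + a2 * x ** (-lam)


def trace_form_entropy(p, ln_g):
    """Eq. (14): S_G = sum_i p_i ln_G(1/p_i)."""
    return sum(pi * ln_g(1.0 / pi) for pi in p)


def two_index_combination(p, a1, a2, lam):
    """lam * [a1 S_{1-lam} - a2 S_{1+lam}]."""
    return lam * (a1 * tsallis(p, 1.0 - lam) - a2 * tsallis(p, 1.0 + lam))


if __name__ == "__main__":
    p = [0.5, 0.3, 0.2]
    a1, a2, lam = 0.7, -0.2, 0.3  # illustrative values
    print(trace_form_entropy(p, make_generalized_log(a1, a2, lam)),
          two_index_combination(p, a1, a2, lam))
```

Both expressions give the same value. This is why an entropy built from two powers of $x$ reduces to a combination of $S_{q_1}$ and $S_{q_2}$.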

3.3. More General Duality Relations

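It is possible to consider duality relations more general than the ones discussed previously. One can consider scenarios where the relation between a generalized logarithm and its dual is defined in terms of a pair of functions $g_1$ and $g_2$, as

$$\ln_{G^*}(x) = g_1\!\left(\ln_G\!\left(g_2(x)\right)\right), \tag{42}$$

where the functions $g_{1,2}$ satisfy

$$g_1\!\left(g_1(x)\right) = x, \qquad g_2\!\left(g_2(x)\right) = x. \tag{43}$$

For example, the duality relation associated with the $S_q$ entropy corresponds to $g_1(x) = -x$ and $g_2(x) = 1/x$. The duality relation (26), associated with the entropy (40), corresponds to $g_1(x) = g_2(x) = x$.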

Other duality relations can be constructed, for instance, in terms of the Moebius transformations

$$g(x) = \frac{a x + b}{c x + d}, \tag{44}$$

with $ad - bc \neq 0$. The inverse of (44) is

$$g^{-1}(x) = \frac{d x - b}{-c x + a}. \tag{45}$$

Self-inverse Moebius transformations, that is, transformations that coincide with their own inverse ($g^{-1} = g$), are candidates for the functions $g_{1,2}$ from which possible duality relations for generalized logarithmic functions can be constructed. Examples of self-inverse Moebius transformations are those of the form

$$g(x) = \frac{a x + b}{c x - a}, \tag{46}$$

which have $d = -a$ and are non-degenerate provided that $a^2 + bc \neq 0$. Notice that, for $c \neq 0$, the above form of $g$ depends on only two parameters: $g(x) = (a'x + b')/(x - a')$, with $a' = a/c$ and $b' = b/c$. Another self-inverse Moebius transformation, not included in the family (46), is the identity function, $g(x) = x$, corresponding to $b = c = 0$ and $a = d$ (see also [28]). The duality relations corresponding to the entropic measures $S_q$ and (40) are both constructed in terms of particular instances of Moebius transformations. The duality relation associated with the entropy $S_q$ is constructed with $g_1(x) = -x$ and $g_2(x) = 1/x$. These are the self-inverse Moebius transformations corresponding, respectively, to $a = -1$, $b = 0$, and $c = 0$, and to $a = 0$ and $b = c$. The duality relation for the entropy (40) is constructed with $g_1(x) = g_2(x) = x$, which corresponds to $b = c = 0$ and $a = d$.
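The Moebius construction can be illustrated with the short sketch below. The helper names `moebius`, `self_inverse_moebius`, and `dual_log` are illustrative and do not come from any library. The sketch builds the self-inverse family (46), checks that $g(g(x)) = x$, and confirms that the choice $g_1(x) = -x$, $g_2(x) = 1/x$ in (42) maps $\ln_q$ onto $\ln_{2-q}$, in agreement with (13).

```python
import math


def ln_q(x: float, q: float) -> float:
    """q-logarithm of Eq. (2)."""
    if q == 1.0:
        return math.log(x)
    return (x ** (1.0 - q) - 1.0) / (1.0 - q)


def moebius(a: float, b: float, c: float, d: float):
    """g(x) = (a x + b)/(c x + d), Eq. (44); requires a d - b c != 0."""
    if a * d - b * c == 0.0:
        raise ValueError("degenerate Moebius transformation")
    return lambda x: (a * x + b) / (c * x + d)


def self_inverse_moebius(a: float, b: float, c: float):
    """Family (46): d = -a, so that g(g(x)) = x whenever a**2 + b*c != 0."""
    return moebius(a, b, c, -a)


def dual_log(ln_g, g1, g2):
    """Duality relation (42): ln_{G*}(x) = g1(ln_G(g2(x)))."""
    return lambda x: g1(ln_g(g2(x)))


g1 = self_inverse_moebius(-1.0, 0.0, 0.0)  # g1(x) = -x
g2 = self_inverse_moebius(0.0, 1.0, 1.0)   # g2(x) = 1/x
identity = moebius(1.0, 0.0, 0.0, 1.0)     # g(x) = x

if __name__ == "__main__":
    q = 0.7
    ln_star = dual_log(lambda x: ln_q(x, q), g1, g2)
    print(ln_star(2.5), ln_q(2.5, 2.0 - q))
```

Replacing `g1` and `g2` by `identity` reproduces the simplest duality relation (26), in which the dual logarithm equals the original one.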

Consider a generalized logarithmic function $\ln_G$ defining a trace-form entropy (14), for which the associated entropic optimization principle leads to the duality relation (42). Such a function must satisfy the differential equation (47) with the condition $\ln_G(1) = 0$. For expression (14) to represent a sensible (i.e., concave) entropy, the generalized logarithm satisfying (47) has to comply with the requirement (48). For duality relations more general than the two analyzed in detail here (corresponding to the entropies $S_q$ and (40)), the associated differential Equation (47) presumably has to be treated numerically.

3.4. Duality Relations: The Inverse Problem

One can also consider the following inverse problem. We are given a parameterized family of non-negative, monotonically increasing functions $f$, depending on one or more parameters, which we collectively denote by $\theta$. The question is whether the inverse function $f^{-1}$ is related to a generalized logarithmic function that defines a sensible entropy (14) and satisfies a duality relation (42) for appropriate functions $g_{1,2}$. In other words: for the inverse function $f^{-1}$, determine whether suitable functions $g_{1,2}$ exist, and identify them. We assume that the integral (49) converges.

To formulate this inverse problem, we consider a thermostatistical formalism based on a generalized entropy, which yields maximum-entropy canonical probability distributions of the form (50). In (50), $f$ is evaluated at an expression involving $\alpha$, $\beta$, $\varepsilon_i$, and two constants. Here, $\alpha$ and $\beta$ are, as usual, the Lagrange multipliers associated with normalization and mean energy. The two constants may depend on the parameters $\theta$ characterizing the function $f$.

The associated entropy can be expressed as in Equation (51), where the auxiliary function appearing in (51) is defined by the integral (52). This function satisfies the properties (53), which guarantee that the entropy defined by (51) is a sensible entropy. When $f$ is the exponential function, this entropy coincides with the Boltzmann–Gibbs entropy. Comparing expression (51) with expression (14) for a generalized entropy written in terms of a generalized logarithm, we find that the generalized logarithm associated with $f$ is given by (54) or, equivalently, by (55). On the other hand, comparing the form (17) for a generalized canonical distribution with the form (50) corresponding to the function $f$, we obtain (56).

The present inverse problem consists of determining what type of duality relation, if any, exists between the functions (55) and (56). This appears to be a difficult problem, which has to be tackled on a case-by-case basis. As an intriguing example of this inverse problem, we can consider the one posed by probability distributions related to the Mittag-Leffler function $E_{a,b}$ (see [29] and references therein). The Mittag-Leffler function is given, for a general complex argument $z$, by the power series expansion

$$E_{a,b}(z) = \sum_{k=0}^{\infty} \frac{z^k}{\Gamma(a k + b)}, \tag{57}$$

with $\operatorname{Re}(a) > 0$. Notice that, in the literature [29], the two parameters $a$ and $b$ characterizing the Mittag-Leffler function are often denoted by $\alpha$ and $\beta$.
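A minimal sketch for evaluating the series (57) at real arguments is given below. It assumes $a > 0$ and $b > 0$, and the function name `mittag_leffler` and the fixed number of terms are illustrative choices. Each term is computed through `math.lgamma` to avoid overflow of $\Gamma(ak+b)$. A truncated power series like this is only adequate for moderate $|z|$: large negative arguments suffer from cancellation, and dedicated algorithms are preferable there. The sketch checks three classical special cases: $E_{1,1}(z) = e^z$, $E_{2,1}(t^2) = \cosh t$, and $E_{1,2}(z) = (e^z - 1)/z$.

```python
import math


def mittag_leffler(z: float, a: float, b: float = 1.0, terms: int = 400) -> float:
    """Truncated series (57): E_{a,b}(z) = sum_k z**k / Gamma(a k + b).

    Real z and a, b > 0 only. Terms are evaluated as
    sign**k * exp(k log|z| - lgamma(a k + b)) to avoid overflow.
    """
    if a <= 0.0 or b <= 0.0:
        raise ValueError("this sketch assumes a > 0 and b > 0")
    if z == 0.0:
        return 1.0 / math.gamma(b)
    log_abs_z = math.log(abs(z))
    sign = -1.0 if z < 0.0 else 1.0
    total = 0.0
    for k in range(terms):
        total += sign ** k * math.exp(k * log_abs_z - math.lgamma(a * k + b))
    return total


if __name__ == "__main__":
    print(mittag_leffler(1.5, 1.0), math.exp(1.5))
    print(mittag_leffler(1.3 ** 2, 2.0), math.cosh(1.3))
```

Each printed pair should agree to roughly machine precision.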

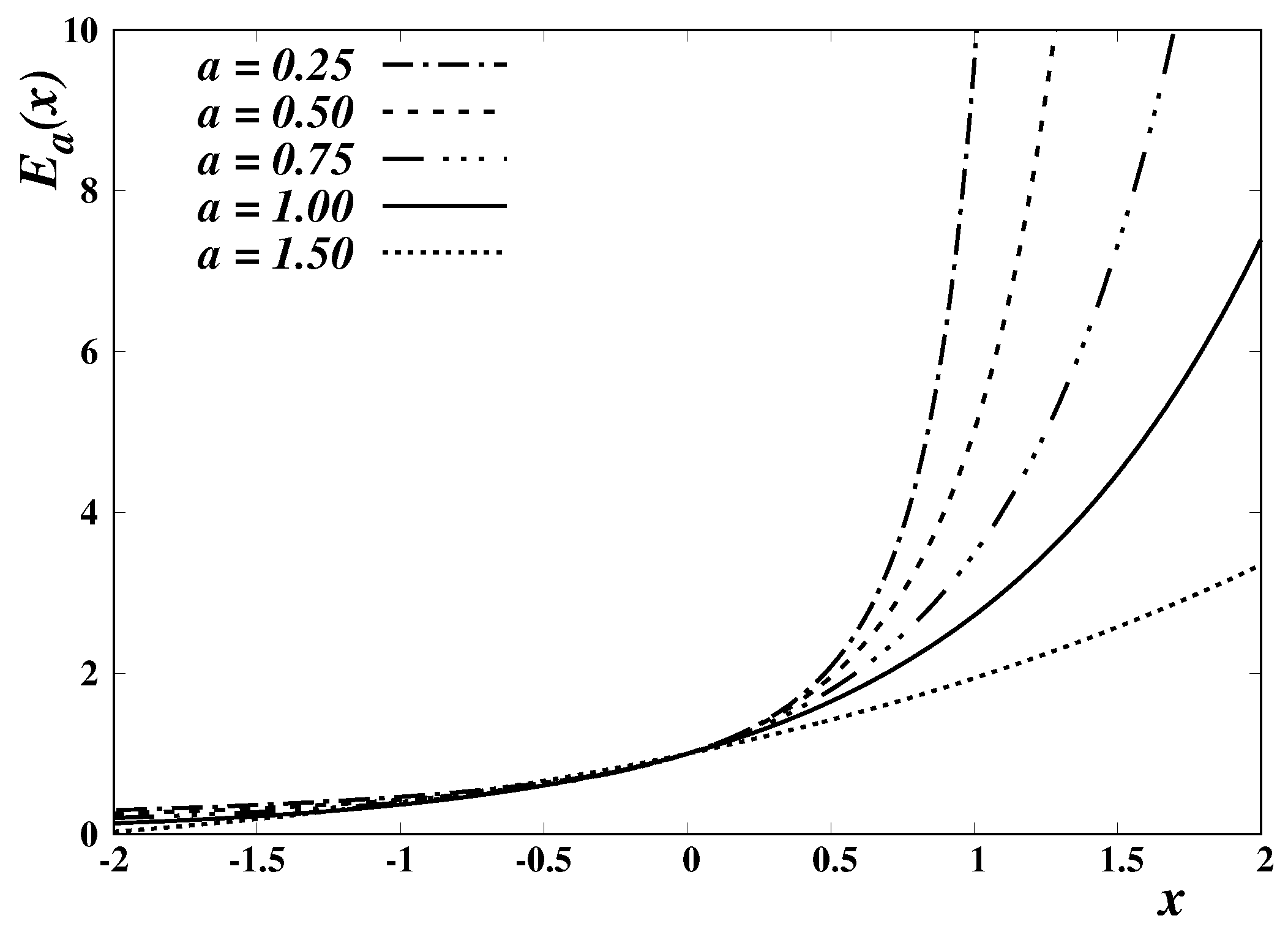

The Mittag-Leffler function has several applications in physics and other fields. In particular, it plays a distinguished role in the study of non-standard diffusion processes involving fractional calculus operators [29]. In the present context, we consider only real values of the parameters $a$ and $b$, and real arguments. A few examples of the Mittag-Leffler function and of its inverse are depicted in Figure 1 and Figure 2, respectively, for a fixed value of $b$ and different values of the parameter $a$.

In the context of a Mittag-Leffler-based thermostatistical formalism, some possible choices for the function $f$ are given in (58), where $\theta = (a, b)$ is the set of parameters characterizing the Mittag-Leffler function. For each of these choices, provided that the parameters $\theta$ fulfill the appropriate conditions, one can explore the existence of functions $g_{1,2}$ for which the Mittag-Leffler-related generalized logarithms (55) and (56) satisfy a differential equation of the form (47). For each such choice of $f$, the corresponding generalized entropy (51) is defined in terms of the auxiliary function given by (52). A few examples of this function, which we obtained by numerically evaluating the integrals (49) and (52) for particular values of the parameter $a$, are plotted in Figure 3.

4. Conclusions

Several generalizations or extensions of the notion of entropy have been advanced and enthusiastically investigated in recent years. The associated entropic optimization problems seem to provide valuable tools for the study of diverse problems in physics and other fields, particularly when applied to the analysis of complex systems. Among the growing number of entropic forms that have been advanced, the non-additive, power-law $S_q$ entropies exhibit the largest number of successful applications. It is clear by now, however, that the $S_q$ entropies are not universal: some systems or processes seem to be described by entropic forms not belonging to the $S_q$ family. Given this state of affairs, it is imperative to investigate in detail the properties of the various entropies, and of the associated thermostatistics, in order to elucidate the deep reasons that make them suitable for treating specific problems. In particular, the structural features of the $S_q$ thermostatistics are certainly worthy of close scrutiny. In the present work, we investigated one of these features: the q-exponential function describing the maximum-entropy probability distributions is linked, via a duality relation, with the q-logarithm function in terms of which the entropy itself can be defined. We investigated which entropic functionals lead to this kind of structure and explored the corresponding duality relations.

The main take-home message of the present work is that there is a close connection between the aforementioned duality relations and the forms of the entropic measures. The $S_q$ thermostatistics exhibits a particular duality connection, which, in the limit of the Boltzmann–Gibbs thermostatistics, reduces to a self-duality. We proved that there is no other entropic functional satisfying the duality relation associated with $S_q$, namely, Equation (21). This constitutes what may be regarded as a new uniqueness theorem leading to $S_q$, in addition to those already existing, such as the Enciso–Tempesta theorem [30] and those indicated therein. Assuming other types of duality relation, it is possible to formulate differential equations that lead to new entropic measures complying with the assumed duality. We studied in detail a duality relation leading to a differential equation that admits closed analytical solutions. This relation corresponds to a new generalized entropy, given by Equation (40). The duality relations characterizing the entropies $S_q$ and (40) seem to be exceptional, in that the concomitant differential equations can be solved analytically. In many other cases, the differential equations resulting from duality relations have to be treated numerically. It would certainly be worthwhile to investigate these equations, associated with thermostatistical scenarios that differ from, or generalize, those based on the entropies $S_q$ and (40). It would also be valuable to identify new duality relations admitting an analytical treatment. The exploration of the ensuing thermostatistical scenarios may suggest interesting new applications of generalized entropies. Another promising direction for future research is to extend the present study to scenarios involving non-trace-form entropies [31], or involving escort mean values [27,32]. We would be delighted to see further advances along these or related lines.