Abstract

In this paper, we propose DiLizium: a new lattice-based two-party signature scheme. Our scheme is constructed from a variant of the Crystals-Dilithium post-quantum signature scheme. This allows for more efficient two-party implementation compared with the original but still derives its post-quantum security directly from the Module Learning With Errors and Module Short Integer Solution problems. We discuss our design rationale, describe the protocol in full detail, and provide performance estimates and a comparison with previous schemes. We also provide a security proof for the two-party signature computation protocol against a classical adversary. Extending this proof to a quantum adversary is subject to future studies. However, our scheme is secure against a quantum attacker who has access to just the public key and not the two-party signature creation protocol.

1. Introduction

Ever since Peter Shor proposed, in the 1990s, an algorithm able to efficiently break most classical asymmetric cryptographic primitives such as RSA and ECDSA [1,2], research has been conducted to find quantum-resistant replacements. This work has recently been coordinated by the U.S. National Institute of Standards and Technology (NIST). In 2016, NIST announced an effort to standardise some of the proposed public key encryption algorithms, key-establishment algorithms, and signature schemes [3].

The primary focus of NIST is to obtain drop-in replacements for the current standardised primitives in order to ease the transition. However, not all of the current application areas are covered by the standardisation process.

One important class of examples is threshold signatures. In a $(t, n)$-threshold scheme, the secret key is shared between n users/devices. To create a valid signature, a subset of t users/devices should collaborate and use their secret key shares. Over the years, threshold versions of a number of major cryptographic algorithms including RSA and (EC)DSA have been studied [4,5,6,7,8]. Recent interest in threshold versions of ECDSA has been influenced by applications in blockchains. However, our motivation stems more from server-assisted RSA signatures, as proposed in the scheme by Buldas et al. [9]. This scheme has been implemented in a Smart-ID mobile application and was recognised as a qualified signature creation device (QSCD) in November 2018 [10]. In 2021, the number of Smart-ID users in the Baltic countries was estimated at 2.9 million [11].

In 2019, D. Cozzo and N. Smart analysed a number of NIST post-quantum standardisation candidates and concluded that none of them provide a threshold implementation that would be comparable in efficiency to threshold RSA or even ECDSA [12].

The goal of this research is to find a suitable version of one of the NIST signature candidates (Crystals-Dilithium) that would allow for a more efficient two-party implementation but would still provide post-quantum security. In this paper, we propose a concrete two-party signature scheme and prove its security.

To compute the private key or a signature forgery with access only to the public key, the attacker would need to solve the Module Learning With Errors, Rejected Module Learning With Errors, and Module Short Integer Solution problems. These are considered hard even for quantum computers. We present a security proof for the two-party signing protocol itself; however, it only provides security against a classical attacker. Extending the proof to cover quantum attackers is a task for future work.

Contributions

In this paper, we construct DiLizium, a lattice-based two-party signature scheme that follows the Fiat–Shamir with Aborts (FSwA) paradigm, and prove the security of the proposed scheme in the classical random oracle model. The security of the proposed signature scheme relies on the hardness of solving the Module-LWE and Module-SIS problems. Our two-party signature protocol is based on the scheme described in the paper by Kiltz et al., Appendix B [13]. Initially, we attempted to construct a two-party version of the Crystals-Dilithium digital signature submitted to the NIST PQC competition. However, we concluded that there is no straightforward way to modify it into a distributed version. The solutions we considered require a two-party computation protocol, which increases not only the signing time but also the communication complexity. The simplified version of the Crystals-Dilithium scheme from [13] is easier to work with because it uses no additional bit decomposition algorithms. A bit decomposition step would require an additional secure two-party computation protocol that allows the client and server to jointly compute high-order bits without revealing their private intermediate values. That would lead to more communication rounds and to additional security assumptions.

Additionally, the DiLizium scheme does not require sampling from a discrete Gaussian distribution. We decided to use the scheme with a uniform distribution because Gaussian sampling is known to be hard to implement securely [14,15]. Our work follows the approach from [16], but instead of homomorphic commitments, we use a homomorphic hash function. We wanted to find an alternative to the commitment scheme introduced in [16] to increase the computational efficiency of the signature scheme. Due to the way our scheme is constructed, the security proof relies on rejected Module-LWE, which is a non-standard security assumption that is not used in [16].

2. Related Work

In 2020, NIST announced fifteen third-round candidates for the PQC competition, of which seven were selected as finalists and eight were selected as alternate candidates [17]. The finalists in the digital signature category were Crystals-Dilithium [18], Falcon [19], and Rainbow [20]. Crystals-Dilithium and Falcon are both lattice-based signature schemes. Falcon has better performance and smaller signature and key sizes; however, its implementation is more complex, as it requires sampling from the Gaussian distribution and it utilises floating-point numbers to implement an optimised polynomial multiplication [19]. Crystals-Dilithium is slightly slower, and its signature and key sizes are larger than those of Falcon; however, the signature scheme itself has a simpler structure [18]. Rainbow is a multivariate signature scheme with fast signing and verifying processes. The Rainbow signature is the shortest among the finalists; however, its public key is the largest [20].

Due to the interest aroused by the PQC competition, several works were proposed that introduce lattice-based threshold signatures and lattice-based multisignatures. The works [21,22,23,24,25,26] focused on creating multisignatures that followed the FSwA paradigm. These schemes use rejection sampling, due to which the signing process is repeated until a valid signature is created. Additionally, intermediate values produced during the signature generation process need to be kept secret until the rejection sampling has been completed. There are currently no known techniques to prove the security of FSwA signatures if intermediate values are published before the rejection sampling is performed [16]. In multisignatures [21,22,23,24,25], intermediate values are published before the rejection sampling is completed, which leads to incomplete security proofs in these works [16]. The work by M. Fukumitsu and S. Hasegawa [26] solves the problem with aborted executions of the protocol by introducing a non-standard hardness assumption (rejected Module-LWE).

In 2019, D. Cozzo and N. Smart analysed the second-round NIST PQC competition signature schemes to determine whether it is possible to create threshold versions of these signature schemes [12]. The authors proposed a possible threshold version for each of the schemes using only generic Multiparty Computation (MPC) techniques, such as linear secret sharing and garbled circuits. They concluded that the most suitable signature scheme is Rainbow, which belongs to the multivariate family. The authors described a threshold version of Crystals-Dilithium, which is estimated to take around 12 s to produce a single signature. The authors explained that the problems with performance arise from the fact that the signature scheme consists of both linear and nonlinear operations and that it is inefficient to switch between these representations using generic MPC techniques. However, the goal of the current work is to focus on the two-party scenario. This means that some of the difficulties described in the paper by D. Cozzo and N. Smart can be avoided.

R. Bendlin, S. Krehbiel, and C. Peikert proposed threshold protocols for generating a hard lattice with trapdoor and sampling from the discrete Gaussian distribution using the trapdoor [27]. These two protocols are the main building blocks for the Gentry–Peikert–Vaikuntanathan (GPV) signature scheme (based on hash-and-sign paradigm), where generating a hard lattice is needed for the key generation and Gaussian sampling is needed for the signing process. M. Kansal and R. Dutta proposed a lattice-based multisignature scheme with a single round signature generation that has key aggregation and signature compression in [28]. The underlying signature scheme follows neither the hash-and-sign nor FSwA paradigms, which are the main techniques used to construct lattice-based signature schemes.

In 2020, I. Damgård, C. Orlandi, A. Takahashi, and M. Tibouchi proposed lattice-based multisignature and distributed signing protocols that are based on the Dilithium-G signature scheme [16]. Dilithium-G is a version of the Crystals-Dilithium signature that requires sampling from a discrete Gaussian distribution [29]. The work contains complete classical security proofs for the proposed schemes. The work solves the problem with the aborted executions by using commitments such that, in the case of an abort, only the commitment is published and the intermediate value itself stays secret. The proposed distributed signature scheme could potentially fit the Smart-ID framework; however, some questions need to be addressed. More precisely, the scheme is based on a modified version of Crystals-Dilithium from the NIST PQC competition project and uses Gaussian sampling. It is known that generating samples from the Gaussian distribution is nontrivial, which means that an insecure implementation may lead to side-channel attacks [30]. The open question is whether it is possible to use a version of the scheme more similar to the one submitted to the NIST PQC competition.

3. Preliminaries

3.1. Notation

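- Let $\mathbb{Z}$ be the ring of all integers. $\mathbb{Z}_q$ denotes the ring of residue classes modulo q. $\mathbb{Z}[x]$ denotes the ring of polynomials in the variable x with integer coefficients.

- $R$ denotes the quotient ring $\mathbb{Z}[x]/(x^n + 1)$ and $R_q$ denotes the quotient ring $\mathbb{Z}_q[x]/(x^n + 1)$, where $n \in \mathbb{N}$.

- Polynomials are denoted in italic lowercase $p$. $p \in R_q$ is a polynomial of degree bounded by n: $p = p_0 + p_1 x + \dots + p_{n-1} x^{n-1}$. It can also be expressed in a vector notation through its coefficients $(p_0, p_1, \dots, p_{n-1})$.

- Vectors are denoted in bold lowercase $\mathbf{v}$. $\mathbf{v} \in R_q^n$ is a vector of dimension n: $\mathbf{v} = (v_1, \dots, v_n)$, where each element $v_i$ is a polynomial in $R_q$.

- Matrices are denoted in bold uppercase $\mathbf{A}$; the elements of a matrix are polynomials in $R_q$.

- For an even positive integer $\alpha$ and for every $x \in \mathbb{Z}$, define $x' = x \bmod^{\pm} \alpha$ as $x'$ in the range $-\frac{\alpha}{2} < x' \leq \frac{\alpha}{2}$ such that $x' \equiv x \pmod{\alpha}$. For an odd positive integer $\alpha$ and for every $x \in \mathbb{Z}$, define $x' = x \bmod^{\pm} \alpha$ as $x'$ in the range $-\frac{\alpha - 1}{2} \leq x' \leq \frac{\alpha - 1}{2}$ such that $x' \equiv x \pmod{\alpha}$. For any positive integer $\alpha$, define $x' = x \bmod^{+} \alpha$ as $x'$ in the range $0 \leq x' < \alpha$ such that $x' \equiv x \pmod{\alpha}$.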

- For an element , its norm is defined as .

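- For an element $x \in \mathbb{Z}_q$, its infinity norm is defined as $\|x\|_\infty = |x \bmod^{\pm} q|$, where $|x|$ denotes the absolute value of the element. For an element $p \in R_q$, $\|p\|_\infty = \max_i \|p_i\|_\infty$. Similarly for an element $\mathbf{v} \in R_q^n$, $\|\mathbf{v}\|_\infty = \max_i \|v_i\|_\infty$.

- $S_\eta$ denotes the set of all elements $p \in R$ such that $\|p\|_\infty \leq \eta$.

- $a \leftarrow A$ denotes sampling an element $a$ uniformly at random from the set A.

- $a \leftarrow \chi(A)$ denotes sampling an element $a$ from the distribution $\chi$ defined over the set A.

- $\lceil x \rceil$ denotes mapping x to the least integer greater than or equal to x (e.g., $\lceil 2.3 \rceil = 3$).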

- The symbol ⊥ is used to indicate a failure or rejection.
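The centred reduction and the infinity norm from the list above can be sketched in a few lines of Python. This is a minimal illustration, assuming the usual Crystals-Dilithium convention for the centred representative; the function names `mod_pm`, `mod_plus`, `inf_norm_poly`, and `inf_norm_vec` are hypothetical and polynomials are represented as plain coefficient lists.

```python
def mod_pm(x: int, alpha: int) -> int:
    """Centred representative of x modulo alpha.

    Even alpha: the result lies in (-alpha/2, alpha/2].
    Odd alpha:  the result lies in [-(alpha-1)/2, (alpha-1)/2].
    """
    r = x % alpha              # Python's % already returns a value in [0, alpha)
    if r > alpha // 2:
        r -= alpha
    return r


def mod_plus(x: int, alpha: int) -> int:
    """Non-negative representative of x modulo alpha, in [0, alpha)."""
    return x % alpha


def inf_norm_poly(coeffs, q):
    """Infinity norm of a polynomial given as a list of coefficients."""
    return max(abs(mod_pm(c, q)) for c in coeffs)


def inf_norm_vec(polys, q):
    """Infinity norm of a vector of polynomials (largest coefficient norm)."""
    return max(inf_norm_poly(p, q) for p in polys)
```

For example, `mod_pm(5, 8)` is `-3`, whereas `mod_plus(5, 8)` is `5`; the norm is always taken over the centred representatives.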

3.2. Definitions of Lattice Problems

Definition 1(Decisional Module-LWE ()).

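Let χ be an error distribution. Given a pair $(\mathbf{A}, \mathbf{t})$, decide whether it was generated uniformly at random or whether it was generated by sampling $\mathbf{A}$ uniformly at random, sampling $(\mathbf{s}_1, \mathbf{s}_2)$ from χ, and setting $\mathbf{t} := \mathbf{A}\mathbf{s}_1 + \mathbf{s}_2$.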

The advantage of adversary in breaking decisional Module-LWE for the set of parameters () can be defined as follows:

Definition 2(Computational Module-LWE ()).

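Let χ be an error distribution. Given a pair $(\mathbf{A}, \mathbf{t})$, where $\mathbf{A}$ is sampled uniformly at random, $(\mathbf{s}_1, \mathbf{s}_2)$ is sampled from χ, and $\mathbf{t} := \mathbf{A}\mathbf{s}_1 + \mathbf{s}_2$, find the vector $\mathbf{s}_1$.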

The advantage of adversary in breaking computational Module-LWE for the set of parameters () can be defined as follows:

Definition 3(Module-SIS ()).

Given a uniformly random matrix, find a vectorsuch thatand.

The advantage of adversary in breaking Module-SIS for the set of parameters () can be defined as follows:

Additionally, we define the rejected Module-LWE assumption adapted from [26].

Definition 4(Rejected Module-LWE ( )).

Let χ be an error distribution, and let C be a set of all challenges. Let , , , and . Assume that or hold. Given , decide whether was generated uniformly at random from or it was generated as .

The advantage of adversary in breaking the rejected Module-LWE for the set of parameters () can be defined as follows:

3.3. Forking Lemma

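The following forking lemma is adapted from [31]. $x$ can be viewed as a public key of the signature scheme, and $h_1, \dots, h_Q$ can be viewed as replies to the random oracle queries.

Lemma 1

(General forking lemma). Fix an integer $Q \geq 1$ to be the number of queries. Fix a set C of size $|C| \geq 2$. Let $\mathcal{B}$ be a randomised algorithm that takes as input $x, h_1, \dots, h_Q$, where $h_1, \dots, h_Q \in C$, and returns a pair with the first element being an index i (an integer in the range $\{0, \dots, Q\}$) and the second element being a side output $out$. Let $\mathsf{IG}$ be a randomised input generation algorithm. Let the accepting probability of $\mathcal{B}$ be denoted as $acc$. This is the probability that $i \geq 1$ in the following experiment:

$$x \leftarrow \mathsf{IG}; \quad h_1, \dots, h_Q \leftarrow C; \quad (i, out) \leftarrow \mathcal{B}(x, h_1, \dots, h_Q).$$

The forking algorithm $\mathcal{F}_{\mathcal{B}}$ connected with $\mathcal{B}$ is defined in Algorithm 1.

| Algorithm 1 $\mathcal{F}_{\mathcal{B}}(x)$ |

| 1: Pick random coins ρ for $\mathcal{B}$ |

| 2: $h_1, \dots, h_Q \leftarrow C$ |

| 3: $(i, out) \leftarrow \mathcal{B}(x, h_1, \dots, h_Q; \rho)$ |

| 4: If $i = 0$, then return $(0, \perp, \perp)$ |

| 5: Regenerate $h'_i, \dots, h'_Q \leftarrow C$ |

| 6: $(i', out') \leftarrow \mathcal{B}(x, h_1, \dots, h_{i-1}, h'_i, \dots, h'_Q; \rho)$ |

| 7: If $i = i'$ and $h_i \neq h'_i$, then return $(1, out, out')$ |

| 8: Otherwise, return $(0, \perp, \perp)$ |

Let us define the probability $frk$ as

$$frk = \Pr\left[b = 1 : x \leftarrow \mathsf{IG}; \ (b, out, out') \leftarrow \mathcal{F}_{\mathcal{B}}(x)\right].$$

Then,

$$frk \geq acc \cdot \left(\frac{acc}{Q} - \frac{1}{|C|}\right).$$

Alternatively,

$$acc \leq \frac{Q}{|C|} + \sqrt{Q \cdot frk}.$$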
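The control flow of the forking algorithm can be sketched as follows. This is an illustrative Python sketch, not part of the security proof: the function `fork`, the stand-in `toy_algorithm`, and the way random coins are modelled (a seed for a pseudorandom generator that is reused in both runs) are assumptions made for the example.

```python
import random


def fork(algorithm, x, num_queries, challenge_set, rng):
    """Run `algorithm` twice on shared coins, resampling replies from index i on.

    Returns (1, out, out2) on a successful fork and (0, None, None) otherwise.
    """
    coins = rng.getrandbits(64)            # random coins rho, reused in both runs
    h = [rng.choice(challenge_set) for _ in range(num_queries)]
    i, out = algorithm(x, h, random.Random(coins))
    if i == 0:                             # the first run did not accept
        return 0, None, None
    # Keep h_1..h_{i-1}; regenerate h_i..h_Q (list index i-1 holds h_i).
    h2 = h[:i - 1] + [rng.choice(challenge_set) for _ in range(num_queries - i + 1)]
    i2, out2 = algorithm(x, h2, random.Random(coins))
    if i2 == i and h[i - 1] != h2[i - 1]:
        return 1, out, out2
    return 0, None, None


def toy_algorithm(x, h, coins):
    """Hypothetical stand-in that always accepts with respect to the first reply."""
    return 1, (x, h[0])
```

Calling `fork(toy_algorithm, "pk", 4, list(range(1000)), random.Random(0))` returns two side outputs that share the input `x` but differ in the reply they depend on, unless both runs happen to draw the same reply.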

3.4. Lattice-Based Signature Scheme

Lattice-based cryptography is a promising candidate for the post-quantum public key cryptography standards. Among all of the submissions to the NIST PQC competition, the majority of schemes belong to the lattice-based family [17]. Many lattice-based signatures are constructed from the identification schemes using the Fiat–Shamir (FS) transform. The FS transform technique introduced in [32] allows for creating a digital signature scheme by combining an identification scheme with a hash function.

The following definition is adapted from [13].

Definition 5(Identification scheme).

An identification scheme is defined as a tuple of algorithms $\mathsf{ID} := (\mathsf{IGen}, P, V)$.

- The key generation algorithm $\mathsf{IGen}$ takes as input system parameters $par$ and returns the public key and secret key as output $(pk, sk)$. The public key defines the set of challenges C, the set of commitments W, and the set of responses Z.

- The prover algorithm $P = (P_1, P_2)$ consists of two sub-algorithms. $P_1$ takes as input the secret key and returns a commitment $w \in W$ and a state $st$. $P_2$ takes as input the secret key, a commitment, a challenge, and a state and returns a response $z \in Z$.

- The verifier algorithm V takes as input the public key and the conversation transcript and outputs a decision bit 1 (accepted) or 0 (rejected).

In a signature scheme that uses the FS transform, the signing algorithm generates a transcript $(w, c, z)$, where the challenge c is derived by hashing the commitment w together with the message to be signed m. The signature is valid if the transcript passes the verification algorithm V, that is, if V outputs 1. The publication [33] introduced a generalisation of this technique, called the Fiat–Shamir with aborts transformation, that takes aborting provers into consideration.

The following signature scheme (further referred to as the basic scheme) is a slightly modified version of the scheme [34]; the description below is based on a version described in [13] (Appendix B). The signature scheme makes use of a hash function, which produces a vector of size n with elements in $\{-1, 0, 1\}$ [18]. The hashing algorithm starts with applying a collision-resistant hash function (e.g., SHAKE-256) to the input to obtain a vector $\mathbf{s} \in \{0, 1\}^{\tau}$ from the first $\tau$ bits of the hash function's output. Then, the SampleInBall algorithm (Algorithm 2) is invoked to create a vector $\mathbf{c}$ in $\{-1, 0, 1\}^n$ with exactly $\tau$ nonzero elements. In each iteration of the for loop, the SampleInBall algorithm generates an element $j \in \{0, \dots, i\}$ using the output of a collision-resistant hash function. Then, the algorithm performs shuffling of the elements in the vector $\mathbf{c}$ and takes an element from the vector $\mathbf{s}$ to generate $-1$ or 1. For an in-depth overview of the algorithm, refer to the original paper [18].

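| Algorithm 2 SampleInBall. |

| 1: Initialise $\mathbf{c} = c_0 c_1 \dots c_{n-1}$ as zero vector of length n |

| 2: for $i := n - \tau$ to $n - 1$ |

| 1: $j \leftarrow \{0, 1, \dots, i\}$ |

| 2: take the next unused bit $s$ of the vector $\mathbf{s}$ |

| 3: $c_i := c_j$ |

| 4: $c_j := (-1)^s$ |

| 3: return $\mathbf{c}$ |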
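The shuffling idea behind SampleInBall can be sketched in Python with SHAKE-256 from the standard library. This is a simplified sketch under stated assumptions: the function name `sample_in_ball`, the byte-wise rejection sampling of the index, and the default values `n = 256` and `tau = 39` are illustrative, and the output is not meant to be bit-compatible with the reference implementation of [18].

```python
import hashlib


def sample_in_ball(seed: bytes, n: int = 256, tau: int = 39) -> list:
    """Return a length-n vector with exactly tau entries in {-1, +1}, rest 0."""
    assert 0 < tau <= n <= 256, "one byte per index candidate requires n <= 256"
    xof = hashlib.shake_256(seed)
    length = 1024
    stream = xof.digest(length)

    # The first tau bits of the XOF output decide the signs.
    sign_bytes = (tau + 7) // 8
    signs = [(stream[b // 8] >> (b % 8)) & 1 for b in range(tau)]

    c = [0] * n
    pos = sign_bytes                   # next unread byte of the stream
    for k, i in enumerate(range(n - tau, n)):
        # Rejection-sample j uniformly from {0, ..., i}.
        while True:
            if pos >= length:          # extend the stream; a XOF prefix is stable
                length *= 2
                stream = xof.digest(length)
            j = stream[pos]
            pos += 1
            if j <= i:
                break
        c[i] = c[j]                    # move whatever sat at j to the new slot i
        c[j] = 1 - 2 * signs[k]        # place +1 or -1 at position j
    return c
```

Each loop iteration increases the number of nonzero entries by exactly one, because slot `i` has not been touched before iteration `i`, so the result always has `tau` nonzero coefficients.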

All of the algebraic operations in the signature scheme are performed over the ring $R_q$. A formal definition of the key generation, signing, and verification is presented in Algorithms 3–5.

| Algorithm 3 KeyGen(). |

| 1: |

| 2: |

| 3: |

| 4: return |

| Algorithm 4 Sign(). |

| 1: |

| 2: while do: |

| 1: |

| 2. |

| 3. |

| 4. and |

| 5. if or , then |

| 3: return |

| Algorithm 5 Verify(). |

|

Correctness

Since , , . and , it holds that

Therefore, if a signature was generated correctly, it will successfully pass the verification.
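To make the structure of the basic scheme concrete, the following toy Python sketch implements key generation, signing with rejection sampling, and verification over a small ring. It is a sketch under stated assumptions, not the scheme's reference implementation: the parameter names and values (`Q`, `N`, `K`, `L`, `ETA`, `TAU`, `GAMMA`, `BETA`), the helper names, and the `challenge` function (a stand-in for the hash to a sparse ternary polynomial) are hypothetical, the relations $\mathbf{t} = \mathbf{A}\mathbf{s}_1 + \mathbf{s}_2$, $\mathbf{w} = \mathbf{A}\mathbf{y}_1 + \mathbf{y}_2$, and $\mathbf{z}_i = \mathbf{y}_i + c\,\mathbf{s}_i$ are assumed, and Python's `random` module is not a cryptographically secure source of randomness.

```python
import hashlib
import random

Q, N, K, L = 8380417, 8, 2, 2        # toy ring Z_Q[x]/(x^N + 1), K x L matrix
ETA, TAU, GAMMA = 2, 3, 1 << 10      # secret bound, challenge weight, mask bound
BETA = TAU * ETA                     # bounds every coefficient of c * s


def center(x):
    """Centred representative of x modulo Q."""
    r = x % Q
    return r - Q if r > Q // 2 else r


def pmul(a, b):
    """Schoolbook product in Z_Q[x]/(x^N + 1); x^N wraps around to -1."""
    out = [0] * N
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            sign = -1 if i + j >= N else 1
            out[(i + j) % N] = (out[(i + j) % N] + sign * ai * bj) % Q
    return out


def vadd(u, v):
    """Coefficient-wise sum of two vectors of polynomials."""
    return [[(x + y) % Q for x, y in zip(p, r)] for p, r in zip(u, v)]


def vsub(u, v):
    """Coefficient-wise difference of two vectors of polynomials."""
    return [[(x - y) % Q for x, y in zip(p, r)] for p, r in zip(u, v)]


def mat_vec(A, v):
    """Product of a K x L matrix of polynomials with a length-L vector."""
    rows = []
    for row in A:
        acc = [0] * N
        for a, x in zip(row, v):
            acc = [(s + t) % Q for s, t in zip(acc, pmul(a, x))]
        rows.append(acc)
    return rows


def small_vec(rng, size, bound):
    """Vector of `size` polynomials with coefficients uniform in [-bound, bound]."""
    return [[rng.randint(-bound, bound) % Q for _ in range(N)] for _ in range(size)]


def norm(v):
    """Infinity norm of a vector of polynomials."""
    return max(abs(center(c)) for p in v for c in p)


def challenge(msg, w):
    """Toy hash to a polynomial with TAU coefficients in {-1, 1} (not SampleInBall)."""
    rng = random.Random(hashlib.shake_256(msg + repr(w).encode()).digest(32))
    c = [0] * N
    for pos in rng.sample(range(N), TAU):
        c[pos] = rng.choice((1, Q - 1))          # Q - 1 represents -1
    return c


def keygen(rng):
    A = [[[rng.randrange(Q) for _ in range(N)] for _ in range(L)] for _ in range(K)]
    s1, s2 = small_vec(rng, L, ETA), small_vec(rng, K, ETA)
    t = vadd(mat_vec(A, s1), s2)                 # t = A*s1 + s2
    return (A, t), (A, t, s1, s2)


def sign(sk, msg, rng):
    A, _, s1, s2 = sk
    while True:                                  # Fiat-Shamir with aborts loop
        y1, y2 = small_vec(rng, L, GAMMA - 1), small_vec(rng, K, GAMMA - 1)
        c = challenge(msg, vadd(mat_vec(A, y1), y2))   # w = A*y1 + y2
        z1 = vadd(y1, [pmul(c, s) for s in s1])
        z2 = vadd(y2, [pmul(c, s) for s in s2])
        if norm(z1) < GAMMA - BETA and norm(z2) < GAMMA - BETA:
            return z1, z2, c                     # otherwise reject and retry


def verify(pk, msg, sig):
    A, t = pk
    z1, z2, c = sig
    w = vsub(vadd(mat_vec(A, z1), z2), [pmul(c, ti) for ti in t])  # A*z1 + z2 - c*t
    return (c == challenge(msg, w)
            and norm(z1) < GAMMA - BETA and norm(z2) < GAMMA - BETA)
```

A typical run is `pk, sk = keygen(rng)`, `sig = sign(sk, b"hello", rng)`, and `verify(pk, b"hello", sig)`. The toy parameters offer no security; they only make the algebra easy to follow.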

3.5. Homomorphic Hash Function

We decided to use a homomorphic hash function instead of a homomorphic commitment scheme as in [16].

Definition 6(Homomorphic hash function).

Let + be an operation defined over X, and let ⊕ be an operation defined over R. Let $x_1, x_2 \in X$ be any two inputs to the hash function. A hash function $f: X \rightarrow R$ is homomorphic if it holds that $f(x_1 + x_2) = f(x_1) \oplus f(x_2)$.

Definition 7(Regular hash function).

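Let $\mathcal{F} = \{f_a : X \rightarrow R\}$, where $a \in A$, be a collection of functions indexed by a set A. A family of hash functions $\mathcal{F}$ is called ϵ-regular if the statistical distance between its output distribution $\{(a, f_a(x)) : a \leftarrow A, x \leftarrow X\}$ and the uniform distribution $\{(a, r) : a \leftarrow A, r \leftarrow R\}$ is at most ϵ.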

One of the available homomorphic hash functions is SWIFFT; it is a special case of the function proposed in [35,36,37]. SWIFFT is a collection of compression functions that are provably one-way and collision-resistant [38]. Additionally, SWIFFT has several statistical properties that can be proven unconditionally: universal hashing, regularity, and randomness extraction. However, due to their linearity, SWIFFT functions are not pseudorandom. It follows that the function is not a suitable instantiation of a random oracle [38]. Therefore, in the security proofs of the two-party signature scheme, SWIFFT is not used as a random oracle. The security proof makes use of provable properties such as regularity and collision resistance.
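The homomorphic property can be illustrated with a toy linear map over $\mathbb{Z}_P$. The sketch below is not SWIFFT and makes no collision-resistance claim; the names `make_key`, `linear_hash`, and `add_mod` and the dimensions are hypothetical. It only shows why linearity lets two parties hash their shares separately and combine the outputs afterwards.

```python
import random

P, IN_LEN, OUT_LEN = 257, 16, 4     # toy modulus and dimensions (hypothetical)


def make_key(rng):
    """Sample a random OUT_LEN x IN_LEN matrix over Z_P; it indexes the function."""
    return [[rng.randrange(P) for _ in range(IN_LEN)] for _ in range(OUT_LEN)]


def linear_hash(key, x):
    """f_key(x) = key * x mod P, a linear (hence homomorphic) compression map."""
    return [sum(k * xi for k, xi in zip(row, x)) % P for row in key]


def add_mod(u, v):
    """The operation on hash outputs: coefficient-wise addition modulo P."""
    return [(a + b) % P for a, b in zip(u, v)]


rng = random.Random(0)
key = make_key(rng)
w_client = [rng.randrange(P) for _ in range(IN_LEN)]   # one party's share
w_server = [rng.randrange(P) for _ in range(IN_LEN)]   # the other party's share

# Hashing the sum of the shares equals combining the hashes of the shares.
combined_input = [a + b for a, b in zip(w_client, w_server)]
lhs = linear_hash(key, combined_input)
rhs = add_mod(linear_hash(key, w_client), linear_hash(key, w_server))
```

Here `lhs == rhs` holds for every choice of key and shares, which is exactly the identity $f(x_1 + x_2) = f(x_1) \oplus f(x_2)$ with ⊕ being addition modulo `P`.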

4. Proposed Two-Party Signature Scheme (DiLizium)

In the following section, we define and give a detailed description of our two-party signature scheme: DiLizium. We start by defining the distributed signature scheme; the following definition is adapted from [16].

Definition 8(Distributed signature protocol).

Distributed signature protocol is a protocol between parties that consists of the following algorithms:

- Generate public parameters using security parameter λ as input: Setup().

- Each party generates a key pair consisting of secret key share and a public key using interactive algorithm and public parameters as input: KeyGen for each .

- To sign a message m, each party runs an interactive signing algorithm using secret key share: Sign for each .

- To verify a signature, the verifier needs to check if Verify() = 1. If the signature was generated correctly, verification should always succeed.

4.1. Specification and Overview of DiLizium Signature Scheme

Table 1 describes the parameters used in the two-party signature scheme.

Table 1.

Parameters for the two-party protocol.

4.1.1. Parameter Setup

Let us assume that, before starting the key generation and signing protocols, the parties invoke a Setup() function that, based on the security parameter , outputs a set of public parameters that are described in Table 1.

4.1.2. Key Generation

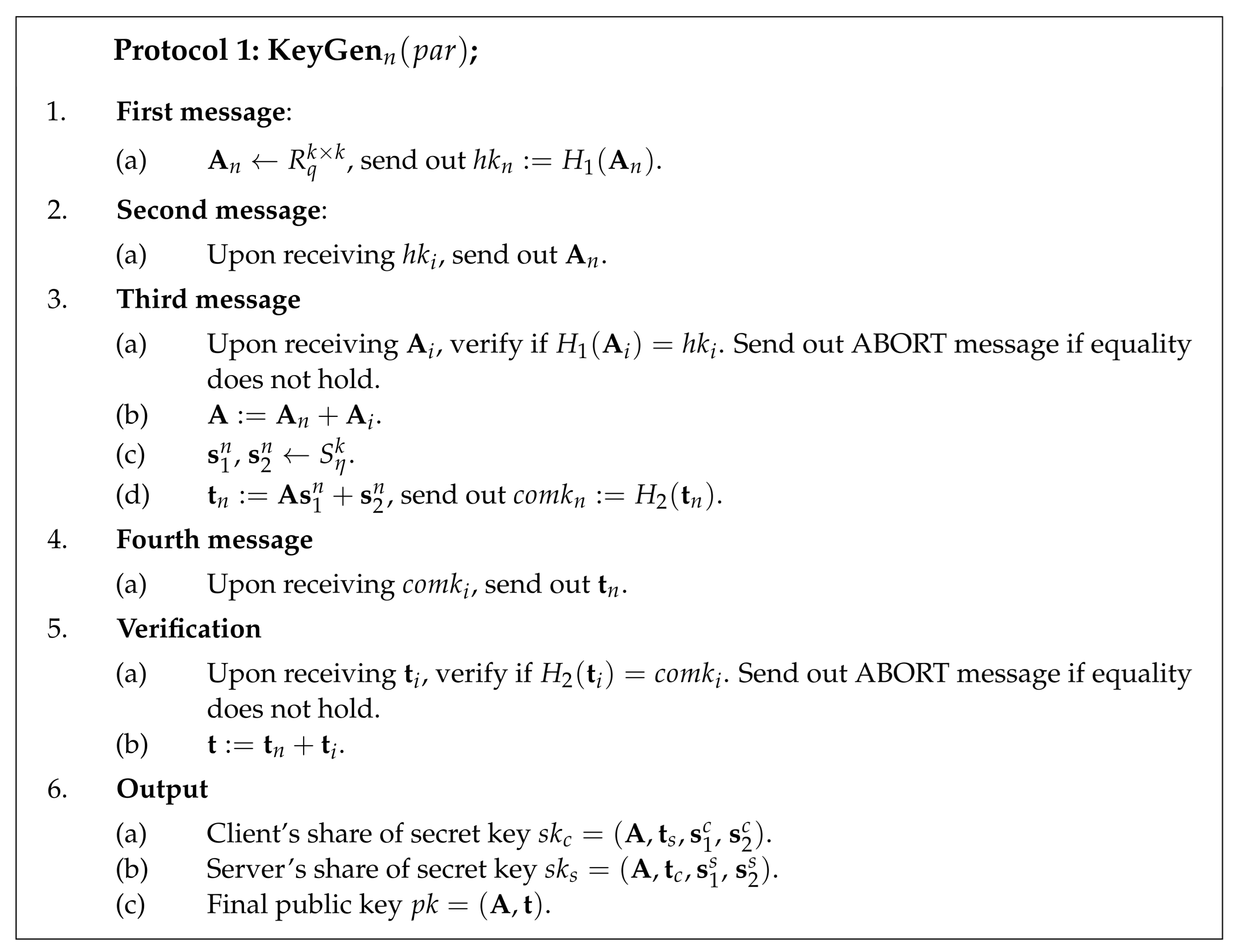

and are some collision-resistant hash functions. The key generation protocol is parametrised by the set of public parameters . The client begins the key generation process by sampling a share of matrix and by sending out the commitment to this share . The server generates its matrix share and sends commitment to the client. Upon receiving commitments, the client and server exchange matrix shares and check if the openings for the commitments were correct. If openings are successfully verified, the client and server locally compute composed matrix .

The client proceeds by generating two secret vectors (, ) and by computing its share of the public key . The client sends out a commitment to the public key share . The server samples its secret vectors (, ) and uses them to compute its public key share . Next, the server sends commitment to the public key share to the client.

Once the client and server have received commitments from each other, the client and server exchange public key shares. Next, the client and server both locally check if the commitments were opened correctly. If these checks succeed, the client and server locally compute the composed public key . The final public key consists of composed matrix and vector .

It is necessary to include the server’s public key share to the client’s secret key and vice versa. During the signing process, the client needs to use the server’s public key share to verify the correctness of a commitment.
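The commit-then-open exchange of matrix shares can be sketched as follows. This is a minimal illustration under stated assumptions: the commitment is modelled as a plain SHA3-256 hash of a 256-bit seed, the share is expanded from the seed with SHAKE-256, and the names `commit`, `check_opening`, `expand`, `combine_shares`, and the modulus `Q` are hypothetical. A bare hash only hides its input when that input has high entropy, as a random seed does.

```python
import hashlib
import hmac

Q = 8380417          # illustrative modulus (assumption)


def commit(share: bytes) -> bytes:
    """Hash-based commitment to a share; opening means revealing the share."""
    return hashlib.sha3_256(share).digest()


def check_opening(commitment: bytes, share: bytes) -> bool:
    """Recompute the commitment and compare in constant time."""
    return hmac.compare_digest(commitment, commit(share))


def expand(seed: bytes, count: int) -> list:
    """Expand a seed into `count` values in [0, Q) by rejection sampling."""
    xof = hashlib.shake_256(seed)
    length, pos, out = 6 * count, 0, []
    stream = xof.digest(length)
    while len(out) < count:
        if pos + 3 > length:               # ran out of bytes: request a longer prefix
            length *= 2
            stream = xof.digest(length)
        value = int.from_bytes(stream[pos:pos + 3], "little") & 0x7FFFFF
        pos += 3
        if value < Q:                      # keep only values below the modulus
            out.append(value)
    return out


def combine_shares(own_seed, peer_commitment, peer_seed, count):
    """Check the peer's opening, then add both expanded shares modulo Q."""
    if not check_opening(peer_commitment, peer_seed):
        raise ValueError("peer opened its commitment incorrectly")
    own, peer = expand(own_seed, count), expand(peer_seed, count)
    return [(a + b) % Q for a, b in zip(own, peer)]
```

Both parties first exchange `commit(seed)`, then the seeds themselves, and each calls `combine_shares` locally; since addition is commutative, both arrive at the same composed values.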

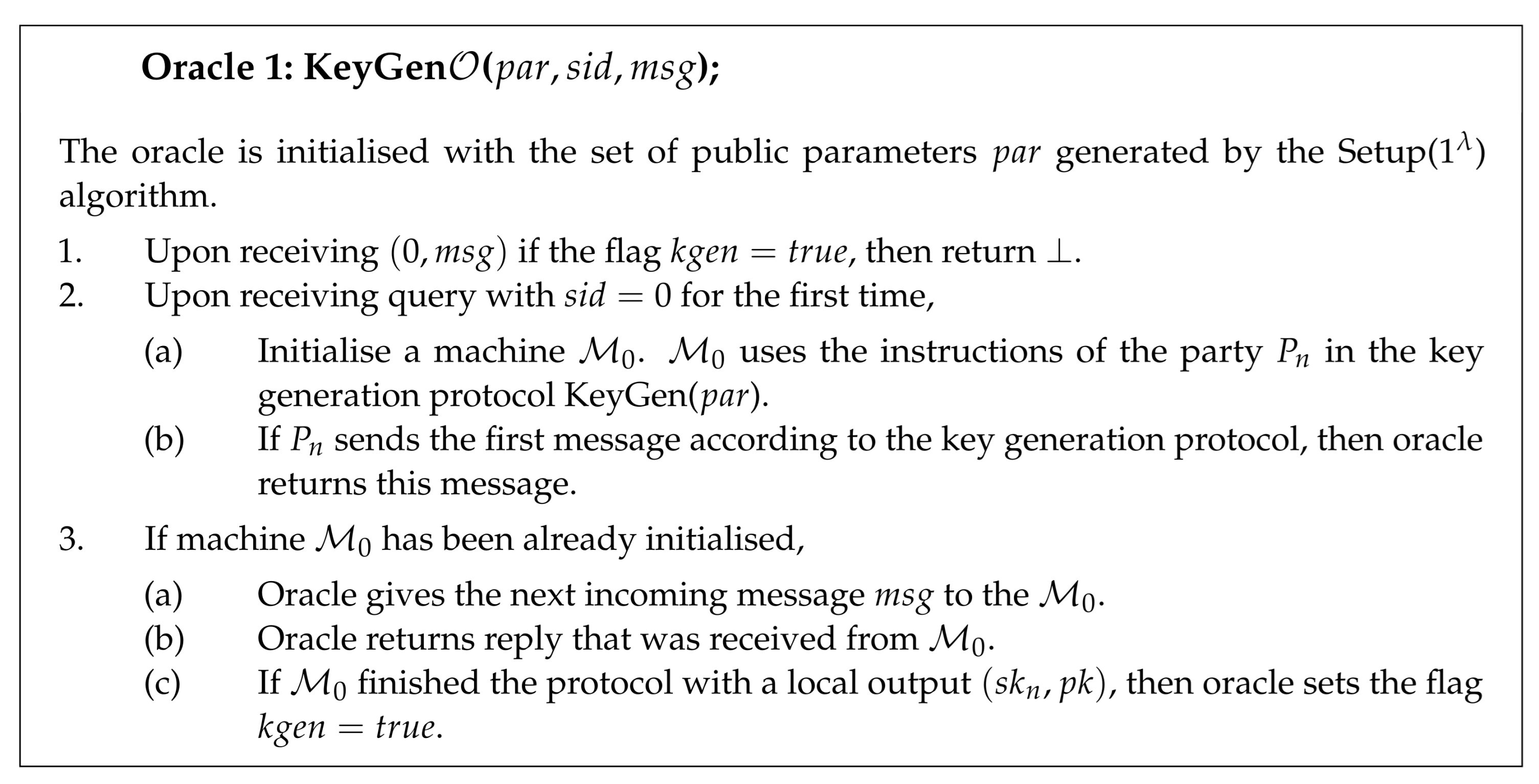

Protocol 1 describes two-party key generation between the parties in a more formal way. Instructions of the protocol are the same for the client and server. Therefore, Protocol 1 presents the behavior of the nth party, .

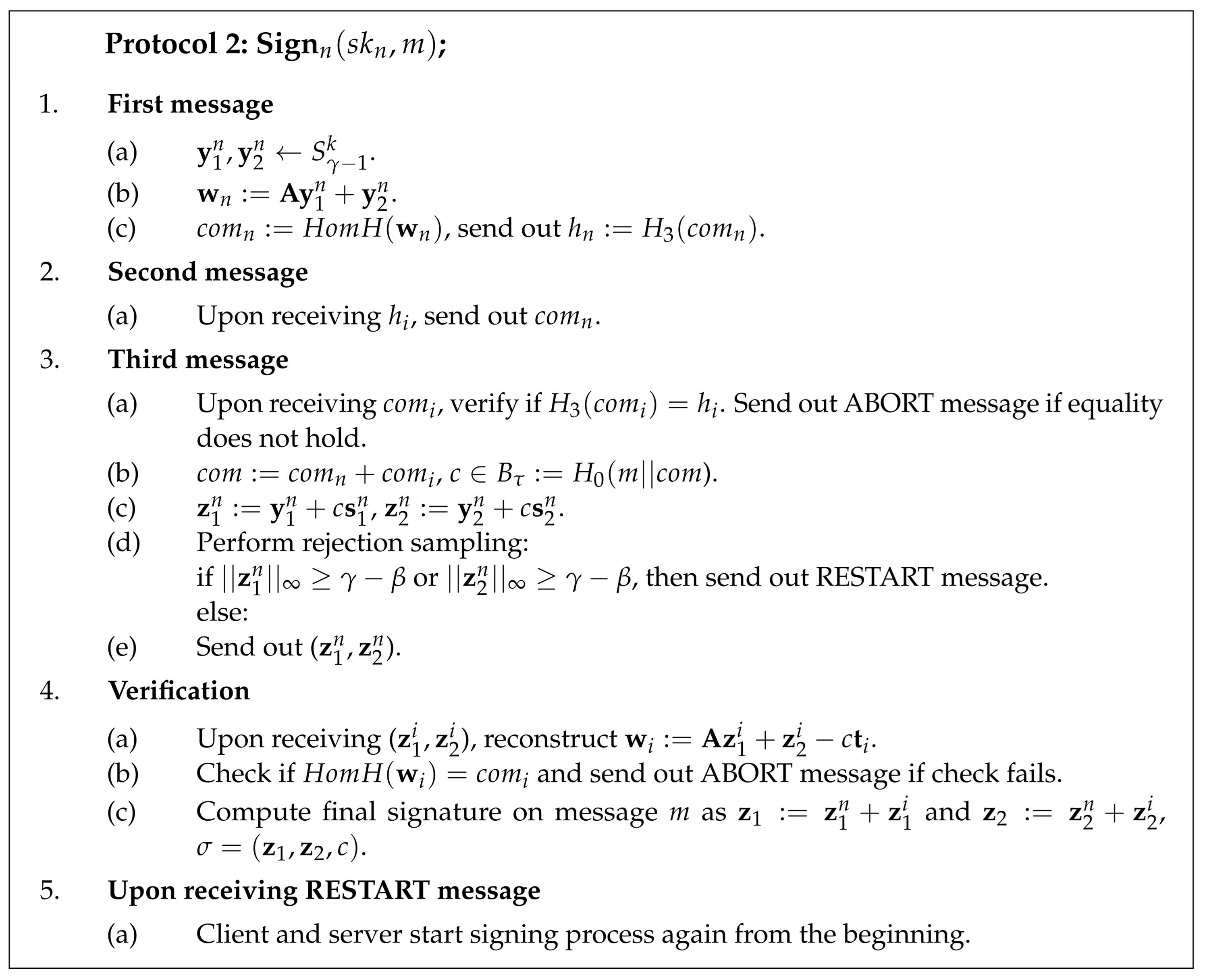

4.1.3. Signing

is a homomorphic hash function from the SWIFFT family. is a hash function that outputs a vector of length n with exactly coefficients being either or 1 and the rest being 0 as described in Algorithm 2. is a collision-resistant hash function.

The client starts the signing process by generating its shares of masking vectors () and by computing a share of . Next, the client uses a homomorphic hash function to compute and hashes it using some collision-resistant hash function . The composed output of the homomorphic hash function is later used to derive a challenge. Therefore, it is crucial to ensure that have not been chosen maliciously. Thus, before publishing these shares, the client and server should exchange commitments to the shares .

The server, in turn, generates its shares of masking vectors (), computes its share of , and sends commitment to . After receiving commitments from each other, the client and server open the commitments by sending out shares .

The client proceeds by checking if the server opened its commitment correctly. If the check succeeds, the client computes and derives challenge . Next, the client computes potential signature shares and performs rejection sampling. If all of the conditions in rejection sampling are satisfied, the client sends its signature share to the server.

The server checks if the client opened its commitment correctly. If the check succeeds, the server computes composed and derives challenge c. Next, the server computes its potential signature shares and performs rejection sampling. If all of the conditions in rejection sampling are satisfied, the server sends its signature share to the client.

Finally, the client performs verification if indeed contains . The client reconstructs as and checks if it is a valid opening for . If the check succeeds, the client computes the final signature . The server performs the same verification that indeed contains using and . If the check succeeds, the server computes and outputs the final signature.

Protocol 2 describes the two-party signing process in a more formal way.

4.1.4. Verification

Verification is almost the same as in the original scheme, except that the verifier needs to apply the homomorphic hash function to the reconstructed value in order to check the correctness of the challenge. Algorithm 6 describes verification in a more formal way.

4.1.5. Correctness

Since , , and it holds that:

.

Furthermore, by the triangle inequality, it holds that if and , then . The same holds for the second signature component . This means that can be defined as and . Therefore, if a signature was generated correctly, verification always succeeds.
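The correctness argument can be written out as a worked equation. The notation here is an assumption made for illustration: composed values are sums of the client and server shares, for example $\mathbf{y}_1 = \mathbf{y}_1^{c} + \mathbf{y}_1^{s}$, $\mathbf{s}_1 = \mathbf{s}_1^{c} + \mathbf{s}_1^{s}$, and $\mathbf{z}_1 = \mathbf{z}_1^{c} + \mathbf{z}_1^{s}$, both parties use the same composed matrix $\mathbf{A}$, and $B$ stands for the per-party rejection bound.

```latex
\begin{align*}
\mathbf{A}\mathbf{z}_1 + \mathbf{z}_2 - c\,\mathbf{t}
  &= \mathbf{A}(\mathbf{y}_1 + c\,\mathbf{s}_1) + (\mathbf{y}_2 + c\,\mathbf{s}_2)
     - c\,(\mathbf{A}\mathbf{s}_1 + \mathbf{s}_2) \\
  &= \mathbf{A}\mathbf{y}_1 + \mathbf{y}_2 = \mathbf{w}, \\[4pt]
\|\mathbf{z}_1\|_\infty
  = \|\mathbf{z}_1^{c} + \mathbf{z}_1^{s}\|_\infty
  &\leq \|\mathbf{z}_1^{c}\|_\infty + \|\mathbf{z}_1^{s}\|_\infty < 2B
  \quad \text{whenever } \|\mathbf{z}_1^{c}\|_\infty < B
  \text{ and } \|\mathbf{z}_1^{s}\|_\infty < B.
\end{align*}
```

The first identity shows that the verifier recomputes the same composed value that was hashed during signing; the second shows why the verification bound on the composed signature can be taken as twice the bound that each party enforces on its own share.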

| Algorithm 6 Verify() |

| 1: Compute . |

| 2: if H() and and : return 1 (success). |

| 3: else: return 0. |

Security

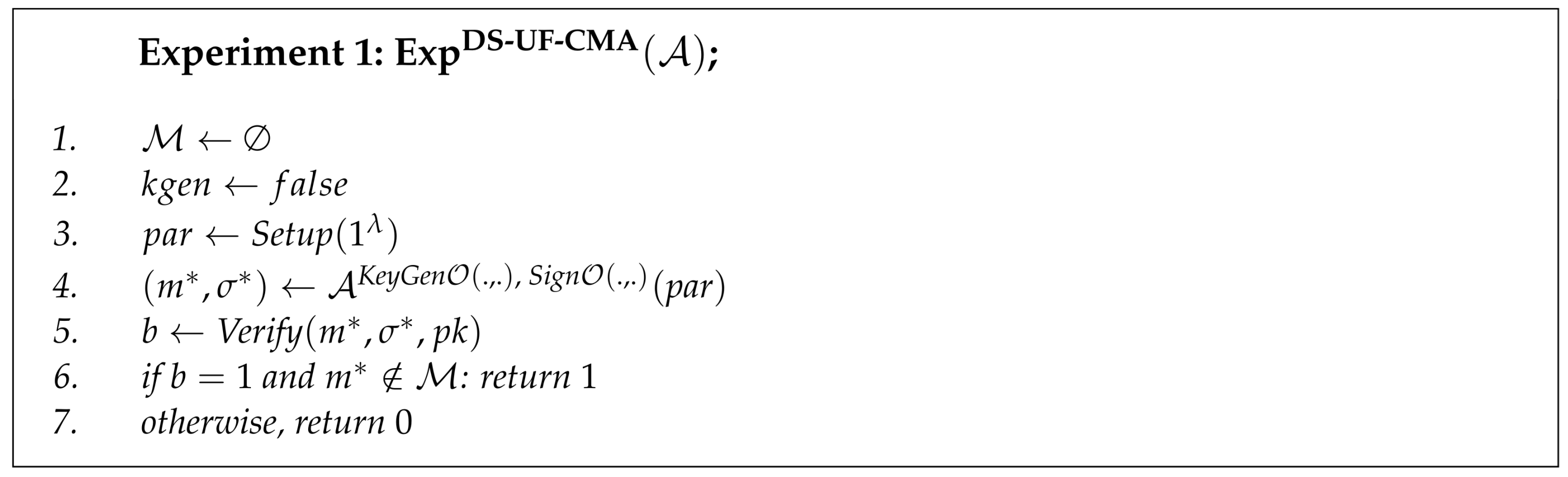

Definition 9 (Existential Unforgeability under Chosen Message Attack).

The distributed signature protocol is Existentially Unforgeable under Chosen Message Attack (DS-UF-CMA) if, for any probabilistic polynomial time adversary , its advantage of creating successful signature forgery is negligible. The advantage of adversary is defined as the probability of winning in the experiment :

Theorem 1.

Assume that the homomorphic hash function is provably collision-resistant and ϵ-regular. Then, for any probabilistic polynomial time adversary that makes a single query to the key generation oracle; queries to the signing oracle; and queries to the random oracles and , the distributed signature protocol is DS-UF-CMA secure in the random oracle model under the Module-LWE, rejected Module-LWE, and Module-SIS assumptions.

This section presents the main idea for the security proof of the proposed scheme; the full proof is given in Appendix A. The proof considers only the classical adversary and relies on the forking lemma. The idea of the proof is, given an adversary that succeeds in creating forgeries for the distributed signature protocol, to construct an algorithm around it that can be used to solve the Module-SIS problem or to break the collision resistance of the homomorphic hash function. Our proof builds on the proofs from [7,16,31,39].

The proof consists of two major steps. In the first step, we construct a simulator . Algorithm is constructed such that it fits all of the assumptions of the forking lemma. simulates the behavior of a single honest party without using its actual secret key share. In the second step, the forking algorithm is invoked to obtain two forgeries with distinct challenges and the same commitments.

4.1.6. Simulation

In the key-generation process, we need to simulate the way the matrix share and the public vector share are constructed. Due to the use of random oracle commitments, once the simulator obtains the adversary’s commitment , it can extract the matrix share . Next, the simulator computes its matrix share using a resulting random matrix and programs random oracle .

Due to the Module-LWE assumption, the public vector share of the honest party is indistinguishable from the uniformly random vector sampled from the ring . Using the same strategy as that for the matrix share, the simulator sets its public vector share after seeing the adversary’s commitment and programs the random oracle .

The signature share generation starts with choosing a random challenge from the set of all possible challenges. Then, the simulator proceeds with randomly sampling two signature shares from the set of all possible signature shares . The share of vector is computed from the signature shares, public vector share, and challenge as . Then, the simulator extracts value from the adversary’s commitment , computes the composed value , and programs random oracle .

4.1.7. Forking Lemma

The combined public key consists of matrix uniformly distributed in and vector uniformly distributed in . We want to replace it with the Module-SIS instance , where . The view of adversary does not change if we set .

In order to conclude the proof, we need to invoke the forking lemma to receive two valid forgeries from the adversary that are constructed using the same commitment but different challenges . Using these forgeries, it is possible to find a solution to the Module-SIS problem on input or to break the collision resistance of the homomorphic hash function.

As both forgeries and are valid, it holds that

If , then we found a collision for the homomorphic hash function. If , then it can be rearranged as and this in turn leads to

Considering that is an instance of Module-SIS problem, we found a solution for Module-SIS with parameters , where .

5. Performance

In this section, we analyse the performance of our scheme according to the following metrics:

- Number of communication rounds in key generation and signing protocols,

- Keys and signature sizes, and

- Number of rejection sampling rounds.

It should be noted that this section does not present the exact parameter choice for the scheme and does not argue the bit security of the scheme for these parameters. The parameter choice presented in this section is illustrative and is given to provide performance estimations of the proposed scheme. Choosing correct parameters for post-quantum schemes is a nontrivial multidimensional optimisation task: the parameters should yield small signature and key sizes while providing enough bits of security and an acceptable number of communication rounds. Additionally, the security of the proposed scheme relies on rejected Module-LWE, which is not a well-studied assumption, and therefore, it is difficult to estimate the bit security of the proposed scheme. The parameters presented in Table 2 are chosen based on parameters proposed in Crystals-Dilithium [18] so that the expected number of repetitions of the signing process is practical.

Table 2.

Illustrative parameters.

5.1. Number of Rejection Sampling Rounds

To estimate the number of rejection sampling rounds in the signing process, it is necessary to compute the probability that the following holds for both parties: and . Let be a coefficient of . If coefficients of are in the range , then the corresponding coefficients of are in the range . Therefore, the size of the correct coefficient range for is and the coefficients of have possibilities. Then, the probability that every coefficient of in the correct range is as follows:

As the client and server sample vectors independently in the beginning of the signing protocol, the probability that the check succeeds for both signature components on the client and server side is the following:

The expected number of repetitions can be estimated as .
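The estimate above can be reproduced numerically. The following Python sketch assumes the usual Crystals-Dilithium style counting argument: each masking coefficient is uniform over $2\gamma - 1$ values, of which $2(\gamma - \beta) - 1$ lead to acceptance, and all coefficients are independent. The names `n`, `len_z1`, `len_z2`, `gamma`, and `beta` are hypothetical placeholders for the ring degree, the lengths of the two signature components, the mask bound, and the bound on the coefficients of the challenge times the secret.

```python
import math


def acceptance_probability(n, len_z1, len_z2, gamma, beta, parties=2):
    """Probability that all parties pass rejection sampling in one attempt."""
    per_coefficient = (2 * (gamma - beta) - 1) / (2 * gamma - 1)
    per_party = per_coefficient ** (n * (len_z1 + len_z2))
    return per_party ** parties


def expected_repetitions(n, len_z1, len_z2, gamma, beta, parties=2):
    """Expected number of signing attempts (mean of a geometric distribution)."""
    return 1 / acceptance_probability(n, len_z1, len_z2, gamma, beta, parties)
```

Because the client and server sample independently, the single-party probability is raised to the power `parties`, so the expected number of repetitions grows quadratically compared with the single-signer case.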

5.2. Signature and Key Sizes

The public key consists of two components: matrix and vector . The matrix can be generated out of 256-bit seed using an extendable output function, as proposed in the Crystals-Dilithium signature scheme [18]. While using this approach, only the seed used to generate the matrix needs to be stored. As both parties need to generate their matrix share, two seeds should be stored to represent matrix . Each seed is converted to the matrix form using an extendable output function; after that, two matrix shares can be added together. The size of the public key in bytes is as follows:

The secret key of the party consists of two vectors , , matrix , and vector . It should be noted that vectors , may contain negative values as well, so one bit should be reserved for each coefficient to indicate the sign. Therefore, the size of the secret key in bytes can be computed as follows:

Finally, a signature consists of three components: , and with exactly coefficients being either or 1 and the rest being 0. All of the components may contain negative values, so for each coefficient of c one bit should be reserved to indicate the sign. With regard to storing c, it is possible to store only the positions of and 1 in c. Therefore, the size of the signature in bytes can be computed as

In order to better understand key and signature sizes, let us assume the choice of parameters defined in Table 2. The key and signature sizes corresponding to this choice of parameters are listed in Table 3.

Table 3.

Key and signature sizes and expected number of repetitions of the signing protocol.

5.3. Communication between Client and Server

In order to generate a key pair, four rounds of communication between the client and server are needed. Table 4 shows the sizes of messages that are exchanged between the client and server during the key generation process using illustrative parameters from Table 2. The first message is the output of a hash function (commitment to matrix ), which consists of 256 bits. The second message contains not the matrix share itself but the 256-bit seed that was used to generate it. The third message is the output of a hash function (commitment to vector ), which consists of 256 bits. The fourth message is the share of public key , the size of which is bytes.
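The commit-then-reveal pattern used in the first two key generation messages can be sketched as below. This is an illustration only: SHA3-256 is an assumed stand-in for the 256-bit hash function (the proof models it as a random oracle), and the function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def commit(value: bytes) -> bytes:
    """256-bit hash commitment to a value (here, a matrix seed)."""
    return hashlib.sha3_256(value).digest()


def verify_opening(commitment: bytes, opened_value: bytes) -> bool:
    """Check that the revealed value matches the commitment received earlier."""
    return hmac.compare_digest(commitment, commit(opened_value))


if __name__ == "__main__":
    # Round 1: each party sends only the commitment to its seed.
    client_seed = secrets.token_bytes(32)
    client_commitment = commit(client_seed)
    # Round 2: the seed is revealed and the other party checks it.
    assert verify_opening(client_commitment, client_seed)
    # A different seed must not open the same commitment.
    assert not verify_opening(client_commitment, secrets.token_bytes(32))
```

Committing before revealing prevents either party from choosing its share as a function of the other party's share; hashing the seed directly is only hiding because the seed itself has 256 bits of entropy.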

Table 4.

Message sizes in the key generation process.

The number of communication rounds during the signing process depends on the number of rejections E. If there are no rejections, the signature generation process requires three rounds of communication between the client and server. For E rejections, the number of communication rounds equals . Table 5 shows the sizes of messages exchanged between the client and server during the signing process using illustrative parameters from Table 2. The first message is the output of a hash function (commitment to ), which consists of 256 bits. The size of the second message in the signing process is determined by the structure of the SWIFFT hash function. To calculate , the vector that consists of elements is divided into 15 input blocks of 256 bytes each. The output produced by the homomorphic hash function consists of 15 blocks of 128 bytes each. The third message consists of the signature shares , the size of which is bytes.
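The sizes of the first two signing messages follow directly from the block counts stated above. The short sketch below only restates that arithmetic; the function names are hypothetical, and the block sizes are the ones quoted in the text for the illustrative parameters.

```python
def hash_commitment_bytes(output_bits: int = 256) -> int:
    """Size of a hash commitment in bytes."""
    return output_bits // 8


def swifft_input_bytes(num_blocks: int, input_block_bytes: int = 256) -> int:
    """Total input consumed by the block-wise homomorphic hash."""
    return num_blocks * input_block_bytes


def swifft_digest_bytes(num_blocks: int, output_block_bytes: int = 128) -> int:
    """Total output of the block-wise homomorphic hash (size of the second message)."""
    return num_blocks * output_block_bytes


if __name__ == "__main__":
    print(hash_commitment_bytes())   # first message
    print(swifft_input_bytes(15))    # bytes hashed
    print(swifft_digest_bytes(15))   # second message
```

With 15 blocks, 3840 input bytes are compressed to a 1920-byte second message, so the homomorphic hash halves the size of what would otherwise have to be sent.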

Table 5.

Message sizes in the signing process.

6. Comparison to Prior Work

Table 6 presents the comparison of our scheme DiLizium with other lattice-based threshold signature schemes [16,27,40]. Column “Rounds” shows the number of communication rounds in the signing protocol; for the schemes with rejection sampling, it is assumed that rejection sampling passes on the first attempt. We also provide a more detailed comparison with [16], because both works are based on variants of Crystals-Dilithium and have a similar structure. We leave out the comparison with publications [21,22,23,24,25,26], as these discuss multisignatures rather than threshold signatures.

Table 6.

Comparison with prior work.

The threshold signature schemes from [16] are based on Dilithium-G, which is a version of Crystals-Dilithium that uses sampling from a discrete Gaussian distribution for the generation of secret vectors. Using a Gaussian distribution helps to decrease the number of rejections in signature schemes that follow the FSwA paradigm [16]. However, implementing sampling from a discrete Gaussian distribution in a manner secure against side-channel attacks is considered difficult. Therefore, in our scheme, we decided to use sampling from a uniform distribution.

Due to the structure of our scheme, we use a non-standard security assumption, rejected Module-LWE, that was introduced in [26]. In future work, we aim to modify the security proof so that it no longer needs to rely on rejected Module-LWE. The security of the threshold signature scheme from [16] relies only on standard problems: Module-LWE and Module-SIS.

Additionally, we compare the message sizes of Damgård et al. [16] with our scheme. Since [16] does not provide an instantiation of parameters, we use the recommended parameters for Dilithium-G from [29] for the signature scheme. We only changed the modulus q, since by Theorem 4 [16], q should satisfy . We selected parameters for the homomorphic commitment scheme based on the third parameter set from Baum et al. [42] (Table 2) such that the conditions from Lemma 5 and Lemma 7 are satisfied. Table 7 and Table 8 present parameters that are needed to compute message sizes. We only provide a comparison of messages sent during the signing process because messages exchanged during the key generation process are similar in both schemes (Table 9).

Table 7.

Illustrative parameters for Damgård et al. [16].

Table 8.

Illustrative parameters for the statistically binding commitment scheme of Damgård et al. [16].

Table 9.

Illustrative comparison of DiLizium and Damgård et al. message sizes.

The first message is the output of a hash function (commitment to ), which consists of 256 bits. The second message is a commitment . The homomorphic commitment scheme defined in Damgård et al. [16] (Figure 7) describes a commitment to a single ring element . In order to commit to a vector , it is proposed to commit to each vector element separately. Therefore, the byte size of the second message can be computed as . The third message consists of a signature share and an opening for the commitment . We know that, for a valid signature share, it holds that and we know that . The signature share may contain negative values, so for each coefficient of , one bit should be reserved to indicate the sign. Therefore, the approximate byte size of the signature share is . For a valid opening, it holds that . The value may also contain negative values, so for each coefficient of , one bit should be reserved to indicate the sign. The approximate byte size of the commitment opening is .

From Table 9, we can see that the second and third messages in the scheme of Damgård et al. are much larger than those in DiLizium. The reason is that the scheme of Damgård et al. uses lattice-based homomorphic commitments, and for the signature scheme to be secure, the commitment scheme is required to be statistically binding. Parameters that guarantee statistical binding are not very practical; however, a more efficient parameter choice may exist.

Currently, it is not possible to provide a more detailed comparison of the efficiency of these schemes. The main reason is that neither of the works has a reference implementation yet. Therefore, we leave the implementation of the proposed scheme and a detailed comparison for future research.

7. Conclusions

Nowadays, threshold signature schemes have a variety of practical applications. There are several efficient threshold versions of the RSA and (EC)DSA signature schemes that are used in practice. However, threshold instantiations of post-quantum signature schemes are less researched. Previous research has demonstrated that creating threshold post-quantum signatures is a highly non-trivial task. Some of the proposed schemes yield inefficient implementations, while others have incomplete security proofs.

In this work, we presented a new lattice-based two-party signature scheme: DiLizium. Our construction uses the SWIFFT homomorphic hash function to compute the commitment in the signing process. We provide a security proof for our scheme in the classical random oracle model under the Module-LWE, rejected Module-LWE, and Module-SIS assumptions. The proposed scheme can potentially substitute distributed RSA and ECDSA signature schemes in authentication applications such as Smart-ID [11]. This would allow for using these applications even in the quantum computing era.

Compared with the scheme proposed in [16], this work does not use sampling from the discrete Gaussian distribution and does not use a lattice-based homomorphic commitment scheme. In the key generation and signing processes, our scheme uses uniform sampling, which facilitates secure implementations in the future.

The security proof of the proposed scheme is based on a non-standard security assumption: rejected Module-LWE. Removing this assumption from the security proof is an important part of future work. Furthermore, the concept of homomorphic hash functions is new and has not been properly studied yet. We aim to research the properties and usage of homomorphic hash functions more deeply in future work. The implementation of the proposed scheme and the exact choice of parameters for this implementation are left for future research, which may also involve optimisation of the key and signature sizes and a security proof against a quantum adversary.

Author Contributions

Conceptualisation, J.V.; formal analysis and scheme design, J.V. and N.S.; security proof, J.V.; performance analysis, J.V. and N.S.; writing—original draft preparation, J.V. and N.S.; writing—review and editing, J.V., N.S. and J.W.; visualisation, J.V.; supervision, J.W.; project administration, J.W.; funding acquisition, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This paper has been supported by the Estonian Personal Research grant number 920 and European Regional Development Fund through the grant number EU48684.

Acknowledgments

The authors are grateful to Ahto Buldas and Alisa Pankova for their support throughout the process of research.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| RSA | Rivest–Shamir–Adleman cryptosystem |
| DSA | Digital Signature Algorithm |
| ECDSA | Elliptic Curve Digital Signature Algorithm |
| NIST | National Institute of Standards and Technology |
| PQC | Post-Quantum Cryptography |
| QSCD | Qualified Electronic Signature Creation Device |
| LWE | Learning with Errors |
| SIS | Short Integer Solution |
| FSwA | Fiat–Shamir with Aborts |
| MPC | Multiparty Computation |
| GPV | Gentry–Peikert–Vaikuntanathan |
| FS | Fiat–Shamir |
| SHAKE | Secure Hash Algorithm and KECCAK |
| DS-UF-CMA | Distributed Signature Unforgeability Against Chosen Message Attacks |
| naHVZK | no-abort Honest-Verifier Zero-Knowledge |

Appendix A. Full Security Proof

This section presents a detailed security proof for the two-party signature scheme.

Definition A1(no-abort Honest-Verifier Zero-Knowledge).

An identification scheme is said to be -naHVZK if there exists a probabilistic expected polynomial-time algorithm that is given only the public key and that outputs such that the following holds:

- The distribution of the simulated transcript produced by () has a statistical distance at most from the real transcript produced by the transcript algorithm .

- The distribution of c from the output conditioned on is uniformly random over the set C.

Theorem A1.

Assume a homomorphic hash function is provably collision-resistant and ϵ-regular; then for any probabilistic polynomial time adversary that makes a single query to the key generation oracle, queries to the signing oracle, and queries to the random oracles , the distributed signature protocol is DS-UF-CMA secure in the random oracle model under Module-LWE, rejected Module-LWE, and Module-SIS assumptions.

Proof.

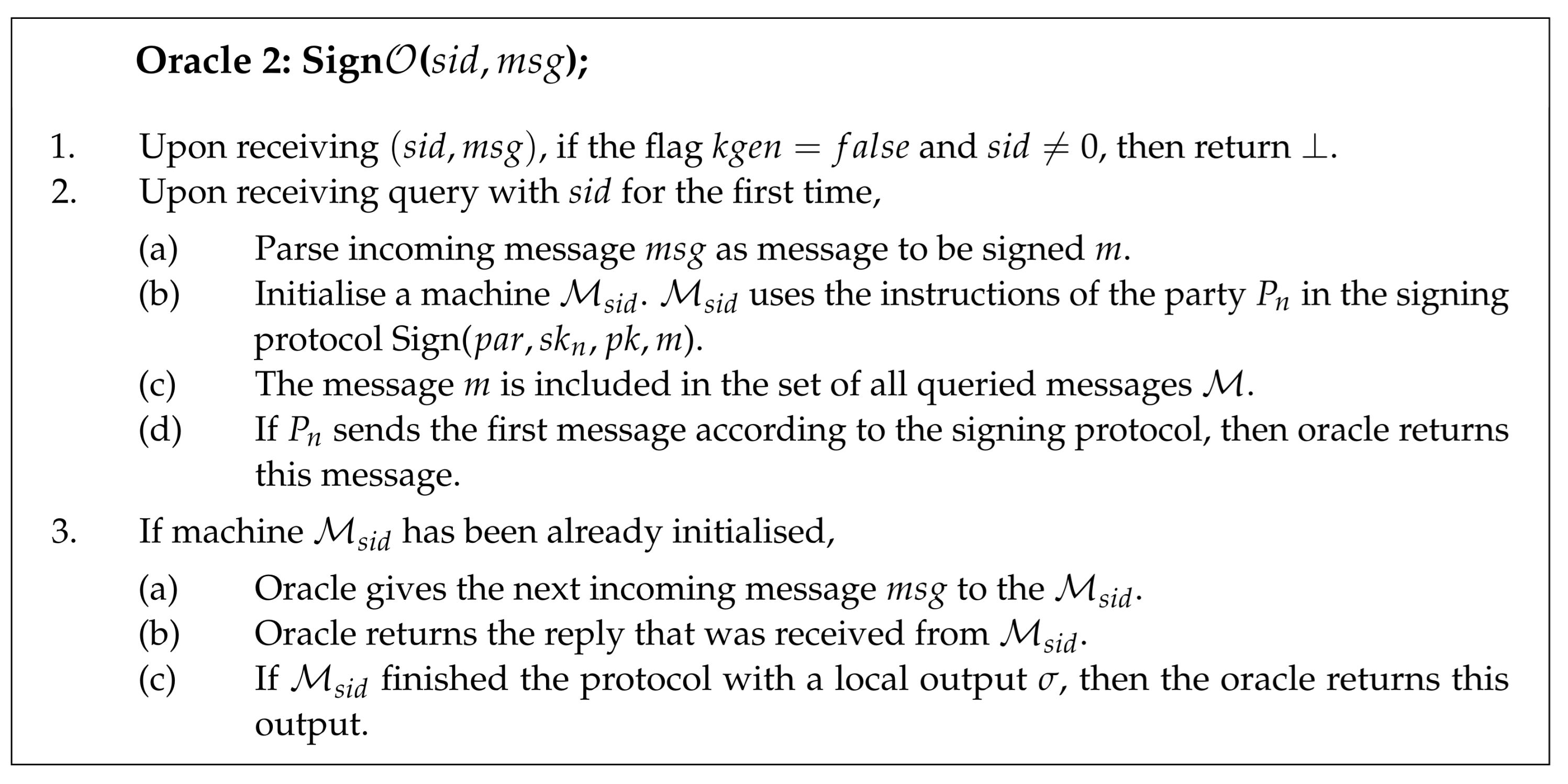

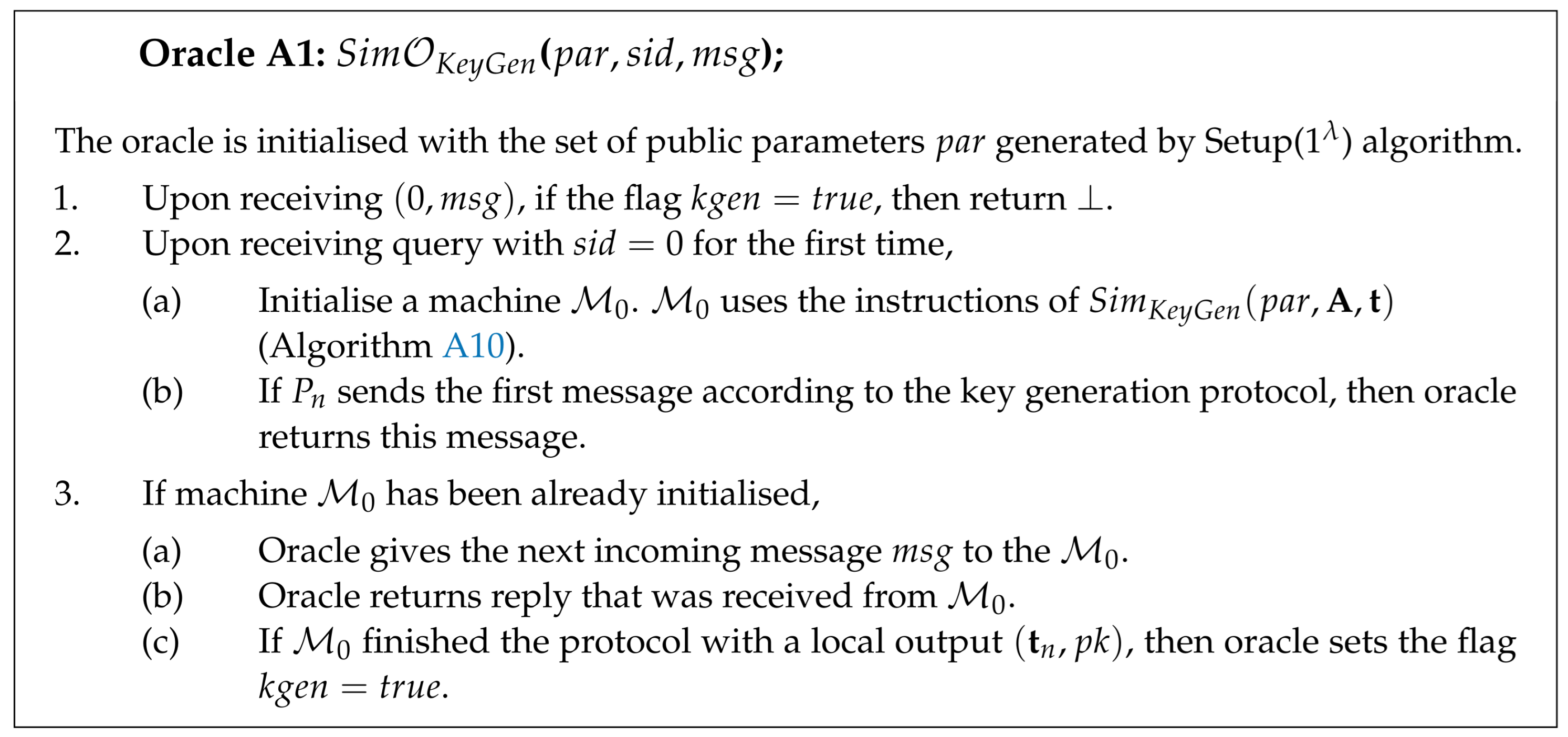

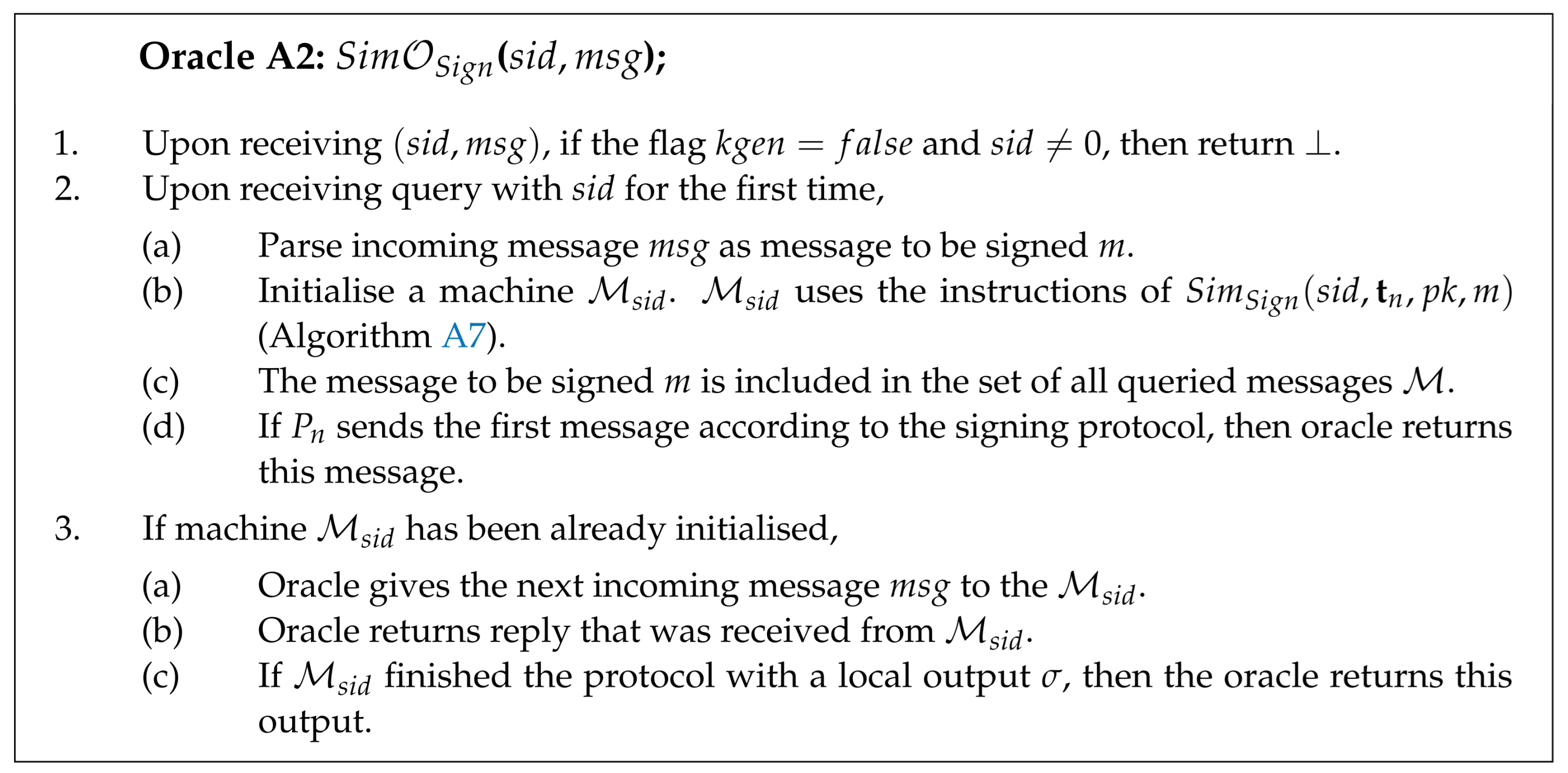

Given an adversary that succeeds in breaking the distributed signature protocol with advantage , a simulator is constructed. simulates the behaviour of the single honest party without using honestly generated secret keys for the computation. Algorithm is constructed such that it fits all the assumptions of the forking lemma. By the definition of forking algorithm, it was required that is given a public key and a random oracle query replies as input. simulates the behaviour of the honest party , and the party is corrupted by the adversary. The algorithm is defined in Algorithm A1.

| Algorithm A1(). |

| 1: Create empty hash tables for . |

| 2: Create a set of queried messages . |

3: Simulate the honest party oracle as follows:

|

4: Simulate random oracles as follows:

|

5: Upon receiving a forgery on message from :

|

Appendix A.1. Random Oracle Simulation

There are several random oracles that need to be simulated:

- [C is a set of all vectors in with exactly nonzero elements]

All of the random oracles are simulated as described in Algorithm A2. Additionally, there is a searchHash() algorithm for searching entries from the hash table defined in Algorithm A3.

| Algorithm A2. |

| is a hash table that is initially empty. |

| 1: On a query x, return element if it was previously defined. |

| 2: Otherwise, sample output y uniformly at random from the range of and return |

| Algorithm A3 searchHash() |

| 1: For value h, find its preimage m in the hash table such that . |

| 2: If preimage of value h does not exist, set flag and set preimage . |

| 3: If for value h more than one preimage exists in hash table , set flag . |

| 4: Output: |
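The lazily sampled random oracle of Algorithm A2 and the lookup of Algorithm A3 can be sketched as follows. This is a minimal illustration, not the simulator from the proof: the class and method names, the flag names `alert` and `bad`, and the 32-byte output length are assumptions made for the example. The `program` method mirrors the oracle-programming steps used later by the simulators, which fail when the entry has already been set.

```python
import secrets


class LazyRandomOracle:
    """Random oracle simulated with a hash table that is filled on demand."""

    def __init__(self, output_bytes: int = 32):
        self.output_bytes = output_bytes
        self.table = {}  # maps each queried input to its fixed response

    def query(self, x: bytes) -> bytes:
        # Return the stored response if x was queried before; otherwise sample a fresh one.
        if x not in self.table:
            self.table[x] = secrets.token_bytes(self.output_bytes)
        return self.table[x]

    def program(self, x: bytes, y: bytes) -> bool:
        # Force the response for x to be y; report failure if x is already defined.
        if x in self.table:
            return False
        self.table[x] = y
        return True

    def search_hash(self, h: bytes):
        # Look up the preimage of h among all recorded queries.
        preimages = [m for m, y in self.table.items() if y == h]
        alert = len(preimages) == 0  # no preimage recorded
        bad = len(preimages) > 1     # more than one preimage recorded (collision)
        preimage = preimages[0] if preimages else None
        return preimage, alert, bad


if __name__ == "__main__":
    oracle = LazyRandomOracle()
    digest = oracle.query(b"message")
    print(oracle.search_hash(digest))
```

Keeping every query in a table is what lets the simulator extract the value an adversary committed to: a commitment with no recorded preimage raises the first flag, and a commitment with several recorded preimages raises the second.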

Simulators for the key generation and signing processes were constructed using several intermediate games. The goal was to remove the usage of the actual secret key share of the party from both processes. Let Pr[] denote the probability that does not output (0,⊥) in the game . This means that the adversary must have created a valid forgery (as defined in Algorithm A1). Then, Pr[] . In Game 0, simulates the honest party behaviour using the same instructions as in the original KeyGen and Sign protocols.

Appendix A.2. Game 1

In Game 1, only the signing process is changed with respect to the previous game. The simulator for the signing process in Game 1 is described in Algorithm A4. Challenge c is now sampled uniformly at random, and the signature shares are computed without communicating with the adversary. Changes with respect to the previous game are highlighted.

| Algorithm A4. |

| 1: . |

| 2: . |

| 3: . |

| 4: and . |

| 5: , send out . |

| 6: Upon receiving , search for . |

| 7: If the flag is set, then simulation fails with output . If the flag is set, then send out . |

| 8: . |

| 9: Program random oracle to respond queries with c. Set . If has been already set, set flag and the simulation fails with output . |

10: Send out . Upon receiving :

|

| 11: Otherwise, run rejection sampling, if it did not pass: send out RESTART and go to the step 1. |

| 12: Otherwise, send out . Upon receiving RESTART, go to step 1. |

| 13: Upon receiving , reconstruct and check that , if not: send out ABORT. |

| 14: Otherwise, set , and output composed signature . |

Game 0 → Game 1:

The difference between Game 0 and Game 1 can be expressed using the events that can happen with the following probabilities:

- Pr[] is the probability that at least one collision occurs during at most queries to the random oracle made by adversary or simulator. This means that two values were found such that . As all of the responses of are chosen uniformly at random from and there are at most queries to the random oracle , the probability of at least one collision occurring can be expressed as , where is the length of output.

- Pr[] is the probability that programming random oracle fails at least once during queries. This event can happen in the following two cases: was previously queried by the adversary or it was not queried by the adversary:


- Case 1: has already been asked by the adversary during at most queries to . This means that the adversary knows and may have queried before. This event corresponds to guessing the value of . Let the uniform distribution over be denoted as X and the distribution of output be denoted as Y. As is -regular (for some negligibly small ), it holds that SD . Then, for any subset T of , by definition of statistical distance, it holds that . Therefore, for a uniform distribution X, the probability of guessing Y by T is bounded by . Since was produced by in the beginning of the signing protocol completely independently from , the probability that queried is at most for each query.


- Case 2: has been set by adversary or simulator by chance during at most prior queries to the . Since has not queried before, adversary does not know and the view of is completely independent from . The probability that occurred by chance in one of the previous queries to is at most .

- Pr[] is the probability that the adversary predicted at least one of two outputs of the random oracle without making a query to it. In this case, there is no record in the hash table that corresponds to the preimage . This can happen with probability at most for each signing query.

Therefore, the difference between two games is
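The bounds in the list above are of two standard kinds: a birthday-style bound on collisions among random oracle outputs, and a guessing bound on predicting a uniformly random output. The following sketch evaluates both with exact rational arithmetic. It shows the generic textbook forms under the assumption of uniformly random outputs of a fixed bit length; the exact expressions used in the proof may differ by constant factors, and the function names are hypothetical.

```python
from fractions import Fraction


def collision_bound(num_queries: int, output_bits: int) -> Fraction:
    """Union bound over all pairs of queries: each pair collides with probability 2^-output_bits."""
    pairs = num_queries * (num_queries - 1) // 2
    return min(Fraction(1), Fraction(pairs, 2 ** output_bits))


def guessing_bound(num_attempts: int, output_bits: int) -> Fraction:
    """Probability of hitting one fixed uniformly random output in num_attempts tries."""
    return min(Fraction(1), Fraction(num_attempts, 2 ** output_bits))


if __name__ == "__main__":
    # Hypothetical query budget of 2^64 against a 256-bit oracle.
    print(float(collision_bound(2 ** 64, 256)))
    print(float(guessing_bound(2 ** 64, 256)))
```

For a 256-bit output, both quantities stay negligible for any realistic number of queries, which is why these terms do not dominate the final advantage bound.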

Appendix A.3. Game 2

In Game 2, when the signature share gets rejected, the simulator commits to a uniformly random vector from the ring instead of committing to a vector computed as . The simulator for the signing process in Game 2 is described in Algorithm A5.

Game 1 → Game 2:

The difference between Game 1 and Game 2 can be expressed with the probability that the adversary can distinguish simulated commitment with random from the real one when the rejection sampling algorithm does not pass. If the signature shares are rejected, it means that or .

Let us assume that there exists an adversary who succeeds in distinguishing the simulated commitment with random from the real one with non-negligible probability:

Then, the adversary can be used to construct an adversary who solves the rejected Module-LWE for parameters , where U is the uniform distribution. The adversary is defined in Algorithm A6.

| Algorithm A5. |

| 1: . |

| 2:. |

| 3: and . |

| 4: Run rejection sampling; if it does not pass, proceed as follows: |

| 1. . |

| 2. , send out . |

| 3. Upon receiving , search for . |

| 4. If the flag is set, then simulation fails with output . If the flag is set, then send out . |

| 5. . |

| 6. Program random oracle to respond queries with c. Set . If has been already set, set flag and the simulation fails with output . |

7. Send out . Upon receiving :

|

| 8. Otherwise, send out RESTART and go to step 1. |

| 5: If rejection sampling passes, proceed as follows: |

| 1. . |

| 2. , send out . |

| 3. Upon receiving , search for . |

| 4. If the flag is set, then simulation fails with output . If the flag is set, then continue. |

| 5. . |

| 6. Program random oracle to respond queries with c. Set . If has been already set, set flag and the simulation fails with output . |

7. Send out .Upon receiving :

|

| 8. Otherwise, send out . Upon receiving RESTART, go to step 1. |

| 9. Upon receiving , reconstruct and check that , if not: send out ABORT. |

| 10. Otherwise, set , and output composed signature . |

| Algorithm A6. |

| 1: |

| 2: |

| 3: return |

As a consequence, the difference between the two games is bounded by the following:

Appendix A.4. Game 3

In Game 3, the simulator does not generate the signature shares honestly and thus does not perform rejection sampling honestly. Rejection sampling is simulated as follows:

- Rejection case: with probability simulator generates commitment to the random as in the previous game.

- Otherwise, sample signature shares from the set and compute out of it.
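The two-branch simulation of rejection sampling can be sketched as follows. This is a toy illustration only: the acceptance probability `p_accept`, the coefficient bound, the vector length, and the function name are hypothetical, responses are plain integer lists rather than ring elements, and the step that recomputes the commitment from the sampled response is omitted. A seeded `random.Random` is used for reproducibility; it is not a cryptographic generator.

```python
import random


def simulate_attempt(p_accept: float, bound: int, length: int, rng: random.Random):
    """Simulate one signing attempt without a secret key.

    Returns None for a simulated rejection; otherwise returns a response whose
    coefficients are sampled uniformly from the accepted range [-bound, bound].
    """
    if rng.random() >= p_accept:
        # Rejection branch: the simulator would commit to a uniformly random value.
        return None
    # Acceptance branch: sample the response directly from the accepted set.
    return [rng.randint(-bound, bound) for _ in range(length)]


if __name__ == "__main__":
    rng = random.Random(2021)
    outcomes = [simulate_attempt(0.25, 1000, 8, rng) for _ in range(1000)]
    accepted = sum(outcome is not None for outcome in outcomes)
    print(accepted / len(outcomes))
```

The point of the construction is that the accepted responses have the same distribution whether they come from honest signing followed by rejection sampling or from direct sampling, so the secret key is no longer needed in this step.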

The simulator for the signing process in Game 3 is described in Algorithm A7.

Game 2 → Game 3:

The signature shares generated in Algorithm A7 are indistinguishable from the real ones because of the -naHVZK property of the underlying identification scheme from [13], appendix B. Therefore, the difference between Game 2 and Game 3 can be defined as follows:

According to the proof from [13], for the underlying identification scheme.

| Algorithm A7. |

1: With probability , proceed as follows:

|

2: Otherwise, proceed as follows:

|

Appendix A.5. Game 4

Now, the signing process does not rely on the actual secret key share of the honest party . In the next games, the key generation process is changed so that it does not use secret keys as well. In this game, the simulator is given a predefined uniformly random matrix , and the simulator defines its own matrix share out of it. By definition, the algorithm (Algorithm A1) receives a pre-generated public key as the input. Therefore, the simulator in Game 4 is given a predefined matrix , and in the later games, the simulator is changed so that it receives the entire public key and uses it to compute its shares . The simulator for the key generation process in Game 4 is described in Algorithm A8.

| Algorithm A8. |

| 1: Send out |

| 2: Upon receiving : |

|

| 3: Program random oracle to respond queries with . Set . If has been already set, then set the flag and the simulation fails with output . |

4: Send out . Upon receiving :

|

| 5: (, ) . |

| 6: , send out . |

| 7: Upon receiving , send out . |

| 8: Upon receiving , check that . If not: send out ABORT. |

| 9: Otherwise, , and . |

Game 3 → Game 4:

The distribution of public matrix does not change between Game 3 and Game 4. The difference between Game 3 and Game 4 can be expressed using events that happen with the following probabilities:

- Pr[] is the probability that at least one collision occurs during at most queries to the random oracle made by adversary or simulator. This can happen with probability at most , where is the length of output.

- Pr[] is the probability that programming random oracle fails, which happens if has been previously asked by the adversary during at most queries to the random oracle . This event corresponds to guessing random ; for each query, the probability of this event is bounded by .

- Pr[] is the probability that adversary predicted at least one of two outputs of the random oracle without making a query to it. This can happen with probability at most .

Therefore, the difference between the two games is

Appendix A.6. Game 5

In Game 5, the simulator picks public key share randomly from the ring instead of computing it using secret keys. The simulator for the key generation process in Game 5 is described in Algorithm A9.

| Algorithm A9. |

| 1: Send out . |

2: Upon receiving :

|

| 3: Program random oracle to respond queries with . Set . If has been already set, then set the flag and the simulation fails with output . |

4: Send out . Upon receiving :

|

| 5: , send out . |

| 6: Upon receiving , send out . |

| 7: Upon receiving , check that . If not: send out ABORT. |

| 8: Otherwise, , . |

Game 4 → Game 5:

In Game 5, public key share is sampled uniformly at random from instead of computing it as , where are random elements from . As matrix follows the uniform distribution over , if adversary can distinguish between Game 4 and Game 5, this adversary can be used as a distinguisher that breaks the decisional Module-LWE problem for parameters , where U is the uniform distribution.

Therefore, the difference between two games is bounded by the advantage of adversary in breaking decisional Module-LWE:

Appendix A.7. Game 6

In Game 6, the simulator uses as input a random resulting public key to compute its own share . The simulator for the key generation process in Game 6 is described in Algorithm A10.

Game 5 → Game 6:

The distributions of do not change with respect to Game 5. The difference between Game 5 and Game 6 can be expressed using events that happen with the following probabilities:

- Pr[] is the probability that at least one collision occurs during at most queries to the random oracle made by adversary or simulator. This can happen with probability at most , where is the length of output.

- Pr[] is the probability that programming random oracle fails, which happens if was previously asked by the adversary during at most queries to the random oracle . This event corresponds to guessing a uniformly random ; for each query, the probability of this event is bounded by .

- Pr[] is the probability that adversary predicted at least one of two outputs of the random oracle without making a query to it. This can happen with probability at most .

Therefore, the difference between the two games is

| Algorithm A10. |

| 1: Send out . |

2: Upon receiving :

|

| 3: Program random oracle to respond queries with . Set . If has been already set, then set the flag and the simulation fails with output . |

4: Send out . Upon receiving :

|

| 5: Send out . |

| 6: Upon receiving , search for . |

| 7: If the flag is set, then simulation fails with output . |

8: Compute public key share:

|

| 9: Program random oracle to respond queries with . Set . If has been already set, set flag and the simulation fails with output . |

10: Send out . Upon receiving :

|

| 11: Otherwise, , . |

Appendix A.8. Forking Lemma

Now, neither key generation nor signing relies on the actual secret key share of the honest party . To conclude the proof, the forking lemma is invoked to obtain two valid forgeries that are constructed using the same commitment but different challenges .

Currently, the combined public key consists of matrix uniformly distributed in and vector uniformly distributed in . We want to replace it with Module-SIS instance , where . The view of adversary will not be changed if we set .

Let us define an input generation algorithm such that it produces the following input: for the . Now, let us construct around the previously defined simulator . invokes the forking algorithm on the input .

As a result, with probability two valid forgeries and are obtained. Here, by the construction of , it holds that , . The probability satisfies the following:

Since both signatures are valid, it holds that

Let us examine the following cases:

Case 1 :

, and is able to break the collision resistance of the hash function (that is hard under the worst-case difficulty of finding short vectors in cyclic/ideal lattices), as was proven in [35,36].

Case 2 :

. It can be rearranged as , and this, in turn, leads to

Now, recall that is an instance of Module-SIS problem; this means that we found a solution for Module-SIS with parameters , where .

Therefore, the probability is the following:

Finally, taking into account that the underlying identification scheme has perfect naHVZK (i.e., ), the advantage of the adversary is bounded by the following:

☐

References

- Shor, P.W. Polynomial-Time Algorithms for Prime Factorization and Discrete Logarithms on a Quantum Computer. SIAM J. Comput. 1997, 26, 1484–1509. [Google Scholar] [CrossRef] [Green Version]

- Shor, P.W. Algorithms for quantum computation: Discrete logarithms and factoring. In Proceedings of the 35th Annual Symposium on Foundations of Computer Science, Santa Fe, NM, USA, 20–22 November 1994; pp. 124–134. [Google Scholar] [CrossRef]

- Chen, L.; Jordan, S.; Liu, Y.K.; Moody, D.; Peralta, R.; Perlner, R.; Smith-Tone, D. Report on Post-Quantum Cryptography; Technical Report; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2016. [Google Scholar] [CrossRef]

- Shoup, V. Practical Threshold Signatures. In Proceedings of the International Conference on the Theory and Applications of Cryptographic Techniques; Springer: Berlin/Heidelberg, Germany, 2000; Volume 1807, pp. 207–220. [Google Scholar] [CrossRef] [Green Version]

- Damgård, I.; Koprowski, M. Practical Threshold RSA Signatures without a Trusted Dealer. In International Conference on the Theory and Applications of Cryptographic Techniques; Springer: Berlin/Heidelberg, Germany, 2001; Volume 2045, pp. 152–165. [Google Scholar] [CrossRef] [Green Version]

- MacKenzie, P.D.; Reiter, M.K. Two-party generation of DSA signatures. Int. J. Inf. Sec. 2004, 2, 218–239. [Google Scholar] [CrossRef]

- Lindell, Y. Fast Secure Two-Party ECDSA Signing. In Proceedings of the Annual International Cryptology Conference; Katz, J., Shacham, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2017; Volume 10402, pp. 613–644. [Google Scholar] [CrossRef]

- Doerner, J.; Kondi, Y.; Lee, E.; Shelat, A. Secure Two-party Threshold ECDSA from ECDSA Assumptions. In Proceedings of the 2018 IEEE Symposium on Security and Privacy, San Francisco, CA, USA, 20–24 May 2018; pp. 980–997. [Google Scholar] [CrossRef]

- Buldas, A.; Kalu, A.; Laud, P.; Oruaas, M. Server-Supported RSA Signatures for Mobile Devices. In Proceedings of the Computer Security– ESORICS 2017, Oslo, Norway, 11–15 September 2017; pp. 315–333. [Google Scholar]

- SK ID Solutions. eID Scheme: SMART-ID; Public Version, 1.0. 2019. Available online: https://www.ria.ee/sites/default/files/content-editors/EID/smart-id_skeemi_kirjeldus.pdf (accessed on 29 July 2021).

- Solutions, S.I. Smart-ID Is a Smart Way to Identify Yourself. 2021. Available online: https://www.smart-id.com/ (accessed on 15 April 2021).

- Cozzo, D.; Smart, N.P. Sharing the LUOV: Threshold Post-quantum Signatures. In Proceedings of the Cryptography and Coding—17th IMA International Conference, IMACC 2019, Oxford, UK, 16–18 December 2019; Volume 11929, pp. 128–153. [Google Scholar] [CrossRef]

- Kiltz, E.; Lyubashevsky, V.; Schaffner, C. A Concrete Treatment of Fiat-Shamir Signatures in the Quantum Random-Oracle Model. Cryptology ePrint Archive, Report 2017/916. 2017. Available online: https://eprint.iacr.org/2017/916 (accessed on 29 July 2021).

- Bruinderink, L.G.; Hülsing, A.; Lange, T.; Yarom, Y. Flush, Gauss, and Reload—A Cache Attack on the BLISS Lattice-Based Signature Scheme. In Proceedings of the Cryptographic Hardware and Embedded Systems—CHES 2016—18th International Conference, Santa Barbara, CA, USA, 17–19 August 2016; Volume 9813, pp. 323–345. [Google Scholar] [CrossRef] [Green Version]

- Espitau, T.; Fouque, P.; Gérard, B.; Tibouchi, M. Side-Channel Attacks on BLISS Lattice-Based Signatures: Exploiting Branch Tracing against strongSwan and Electromagnetic Emanations in Microcontrollers. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, CCS 2017, Dallas, TX, USA, 30 October–3 November 2017; pp. 1857–1874. [Google Scholar] [CrossRef] [Green Version]

- Damgård, I.; Orlandi, C.; Takahashi, A.; Tibouchi, M. Two-round n-out-of-n and Multi-Signatures and Trapdoor Commitment from Lattices. Cryptology ePrint Archive, Report 2020/1110. 2020. Available online: https://eprint.iacr.org/2020/1110 (accessed on 29 July 2021).

- Moody, D.; Alagic, G.; Apon, D.C.; Cooper, D.A.; Dang, Q.H.; Kelsey, J.M.; Liu, Y.K.; Miller, C.A.; Peralta, R.C.; Perlner, R.A.; et al. Status Report on the Second Round of the NIST Post-Quantum Cryptography Standardization Process; Technical Report; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2020. [Google Scholar] [CrossRef]

- Lyubashevsky, V.; Ducas, L.; Kiltz, E.; Lepoint, T.; Schwabe, P.; Seiler, G.; Stehle, D.; Bai, S. CRYSTALS-Dilithium. Algorithm Specifications and Supporting Documentation. 2020. Available online: https://csrc.nist.gov/projects/post-quantum-cryptography/round-3-submissions (accessed on 29 July 2021).

- Prest, T.; Fouque, P.A.; Hoffstein, J.; Kirchner, P.; Lyubashevsky, V.; Pornin, T.; Ricosset, T.; Seiler, G.; Whyte, W.; Zhang, Z. Falcon: Fast-Fourier Lattice-Based Compact Signatures over NTRU. 2020. Available online: https://csrc.nist.gov/projects/post-quantum-cryptography/round-3-submissions (accessed on 29 July 2021).

- Ding, J.; Chen, M.S.; Petzoldt, A.; Schmidt, D.; Yang, B.Y.; Kannwischer, M.; Patarin, J. Rainbow—Algorithm Specification and Documentation. The 3rd Round Proposal. 2020. Available online: https://csrc.nist.gov/projects/post-quantum-cryptography/round-3-submissions (accessed on 29 July 2021).

- Bansarkhani, R.E.; Sturm, J. An Efficient Lattice-Based Multisignature Scheme with Applications to Bitcoins. In Proceedings of the Cryptology and Network Security—15th International Conference, CANS 2016, Milan, Italy, 14–16 November 2016; Volume 10052, pp. 140–155. [Google Scholar] [CrossRef]

- Fukumitsu, M.; Hasegawa, S. A Tightly-Secure Lattice-Based Multisignature. In Proceedings of the 6th on ASIA Public-Key Cryptography Workshop, APKC@AsiaCCS 2019, Auckland, New Zealand, 8 July 2019; pp. 3–11. [Google Scholar] [CrossRef]

- Tso, R.; Liu, Z.; Tseng, Y. Identity-Based Blind Multisignature From Lattices. IEEE Access 2019, 7, 182916–182923. [Google Scholar] [CrossRef]

- Ma, C.; Jiang, M. Practical Lattice-Based Multisignature Schemes for Blockchains. IEEE Access 2019, 7, 179765–179778. [Google Scholar] [CrossRef]

- Toluee, R.; Eghlidos, T. An Efficient and Secure ID-Based Multi-Proxy Multi-Signature Scheme Based on Lattice. Cryptology ePrint Archive, Report 2019/1031. 2019. Available online: https://eprint.iacr.org/2019/1031 (accessed on 29 July 2021).

- Fukumitsu, M.; Hasegawa, S. A Lattice-Based Provably Secure Multisignature Scheme in Quantum Random Oracle Model. In Proceedings of the Provable and Practical Security—14th International Conference, ProvSec 2020, Singapore, 29 November–1 December 2020; Volume 12505, pp. 45–64.

- Bendlin, R.; Krehbiel, S.; Peikert, C. How to Share a Lattice Trapdoor: Threshold Protocols for Signatures and (H)IBE. In Proceedings of the Applied Cryptography and Network Security—11th International Conference, ACNS 2013, Banff, AB, Canada, 25–28 June 2013; Volume 7954, pp. 218–236.

- Kansal, M.; Dutta, R. Round Optimal Secure Multisignature Schemes from Lattice with Public Key Aggregation and Signature Compression. In Proceedings of the Progress in Cryptology—AFRICACRYPT 2020—12th International Conference on Cryptology in Africa, Cairo, Egypt, 20–22 July 2020; Volume 12174, pp. 281–300.

- Ducas, L.; Lepoint, T.; Lyubashevsky, V.; Schwabe, P.; Seiler, G.; Stehle, D. CRYSTALS—Dilithium: Digital Signatures from Module Lattices. Cryptology ePrint Archive, Report 2017/633. 2017. Available online: https://eprint.iacr.org/2017/633 (accessed on 29 July 2021).

- Pessl, P.; Bruinderink, L.G.; Yarom, Y. To BLISS-B or not to be: Attacking strongSwan’s Implementation of Post-Quantum Signatures. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, CCS 2017, Dallas, TX, USA, 30 October–3 November 2017; pp. 1843–1855.

- Bellare, M.; Neven, G. Multi-signatures in the plain public-key model and a general forking lemma. In Proceedings of the 13th ACM Conference on Computer and Communications Security—CCS’06, Alexandria, VA, USA, 30 October–3 November 2006.

- Fiat, A.; Shamir, A. How to Prove Yourself: Practical Solutions to Identification and Signature Problems. In Proceedings of the Advances in Cryptology—CRYPTO’86, Santa Barbara, CA, USA, 11–15 August 1986; Volume 263, pp. 186–194.

- Lyubashevsky, V. Fiat-Shamir with Aborts: Applications to Lattice and Factoring-Based Signatures. In Proceedings of the Advances in Cryptology—ASIACRYPT 2009, 15th International Conference on the Theory and Application of Cryptology and Information Security, Tokyo, Japan, 6–10 December 2009; Volume 5912, pp. 598–616.

- Güneysu, T.; Lyubashevsky, V.; Pöppelmann, T. Practical Lattice-Based Cryptography: A Signature Scheme for Embedded Systems. In Proceedings of the Cryptographic Hardware and Embedded Systems—CHES 2012—14th International Workshop, Leuven, Belgium, 9–12 September 2012; Volume 7428, pp. 530–547.

- Lyubashevsky, V.; Micciancio, D. Generalized Compact Knapsacks Are Collision Resistant. In Proceedings of the Automata, Languages and Programming, 33rd International Colloquium, ICALP 2006, Venice, Italy, 10–14 July 2006; Volume 4052, pp. 144–155.

- Micciancio, D. Generalized Compact Knapsacks, Cyclic Lattices, and Efficient One-Way Functions from Worst-Case Complexity Assumptions. In Proceedings of the 43rd Symposium on Foundations of Computer Science (FOCS 2002), Vancouver, BC, Canada, 16–19 November 2002; pp. 356–365.

- Peikert, C.; Rosen, A. Efficient Collision-Resistant Hashing from Worst-Case Assumptions on Cyclic Lattices. In Proceedings of the Theory of Cryptography, Third Theory of Cryptography Conference, TCC 2006, New York, NY, USA, 4–7 March 2006; Volume 3876, pp. 145–166.

- Lyubashevsky, V.; Micciancio, D.; Peikert, C.; Rosen, A. SWIFFT: A Modest Proposal for FFT Hashing. In Proceedings of the Fast Software Encryption, 15th International Workshop, FSE 2008, Lausanne, Switzerland, 10–13 February 2008; Volume 5086, pp. 54–72.

- Bellare, M.; Neven, G. New Multi-Signature Schemes and a General Forking Lemma. 2005. Available online: https://soc1024.ece.illinois.edu/teaching/ece498ac/fall2018/forkinglemma.pdf (accessed on 29 July 2021).

- Boneh, D.; Gennaro, R.; Goldfeder, S.; Jain, A.; Kim, S.; Rasmussen, P.M.R.; Sahai, A. Threshold Cryptosystems from Threshold Fully Homomorphic Encryption. In Proceedings of the Advances in Cryptology—CRYPTO 2018, Santa Barbara, CA, USA, 19–23 August 2018; pp. 565–596.

- Gentry, C.; Peikert, C.; Vaikuntanathan, V. Trapdoors for Hard Lattices and New Cryptographic Constructions. Cryptology ePrint Archive, Report 2007/432. 2007. Available online: https://eprint.iacr.org/2007/432 (accessed on 29 July 2021).

- Baum, C.; Damgård, I.; Lyubashevsky, V.; Oechsner, S.; Peikert, C. More Efficient Commitments from Structured Lattice Assumptions. In Security and Cryptography for Networks; Catalano, D., De Prisco, R., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 368–385.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).