1. Introduction

In the information age, massive amounts of data have been generated over time. These data are closely related to many studies. In mathematics, a time series is a series of data points indexed in time order. Most commonly, a time series [

1] is a sequence taken at successive equally spaced points in time. Time series contains information on time dimension and data dimension, and it exists in many fields such as economy, life science, military science, space science, geology and meteorology, and industrial automation. Time series classification [

2,

3,

4] is an essential task that has attracted widespread attention. Normally, time series classification refers to assign time series patterns to a specific category, for example, judge whether it will rain or not through a series of temperature data [

5] or determine whether the patient has Parkinson’s disease through a period of physiological data [

6,

7]. Dau et al. [

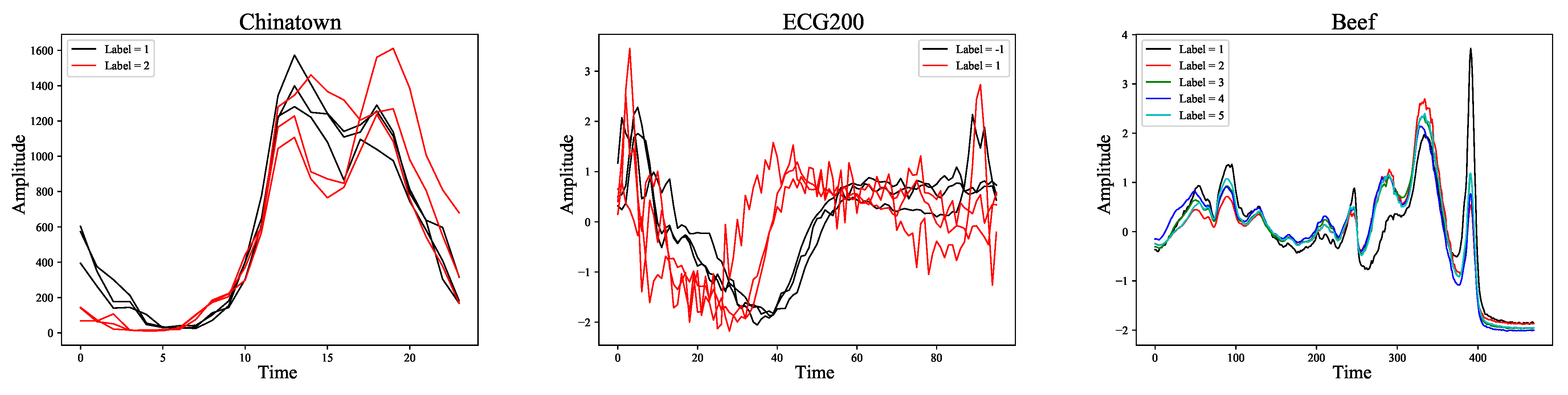

8] proposed UCR Time Series Classification Archive (UCR) for this task, including 128 datasets from different fields such as ECG, Sensor, and Image. In order to understand TSC more intuitively,

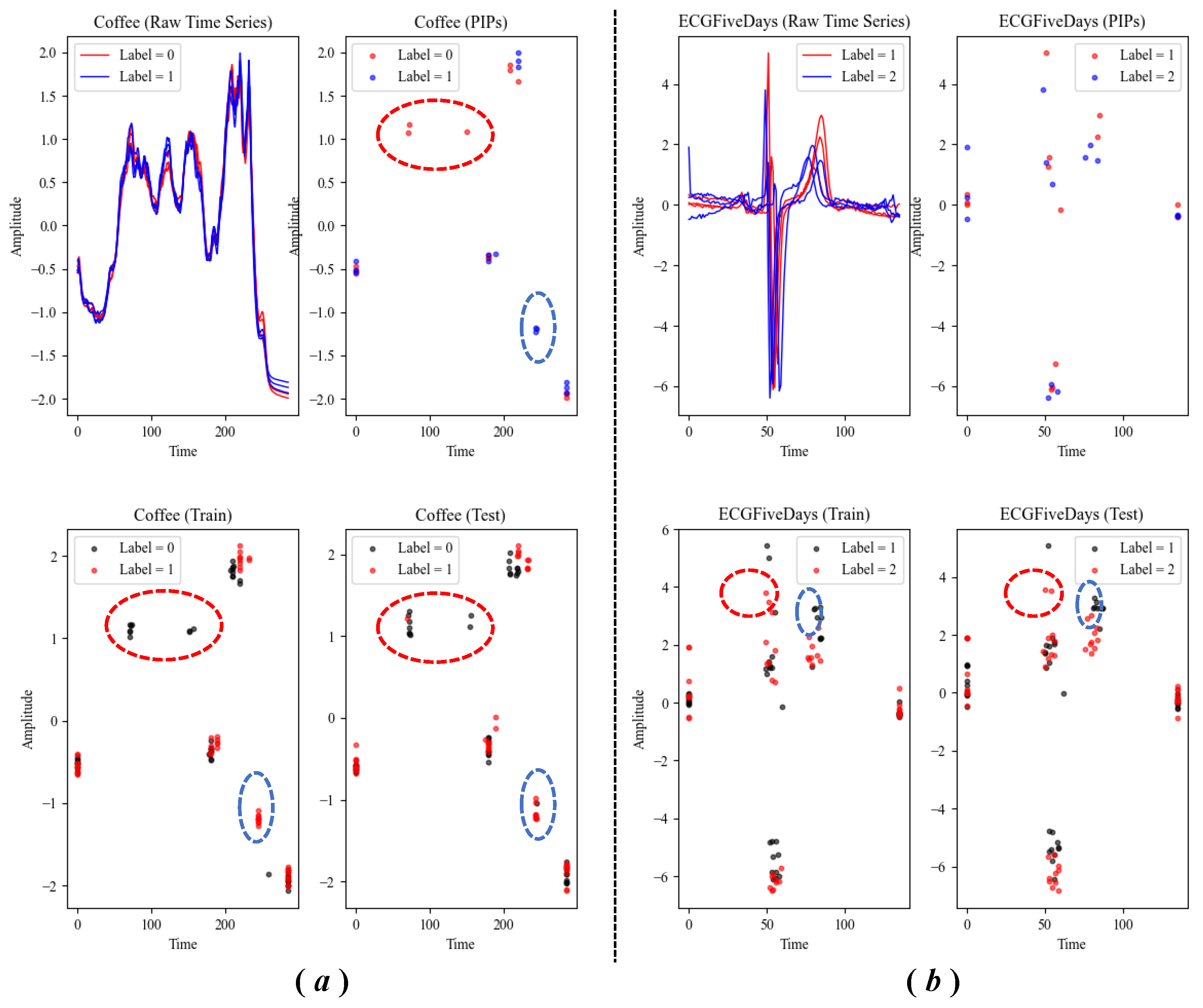

Figure 1 shows some representative datasets in UCR. These datasets almost cover the existing TSC tasks, show the morphological structure of various time series, and lay the foundation for researchers to explore general classification methods. In order to solve this problem, many methods have been proposed, which can be divided into five categories according to their different cores: dictionary-based, distance-based, interval-based, shapelet-based, and kernel-based.

The dictionary-based method borrows ideas from natural language processing. Researchers regard a time series as a special sentence composed of discrete characters or words, so the first issue to consider is how to segment the time series and map it to characters. There are three main time series representation methods used for symbolization: Piecewise Aggregate Approximation (PAA) [9,10], Symbolic Aggregate approXimation (SAX) [11,12], and Symbolic Fourier Approximation (SFA) [13]. Subsequently, the Bag-of-SFA Symbols (BOSS) method, based on the bag-of-words model, was proposed [14]. This method records high-frequency symbol features and uses them to distinguish different types of time series samples. Matthew et al. [15] and James et al. [16] further proposed Contract BOSS (cBOSS) and Spatial BOSS (S-BOSS). In addition, Word Extraction for Time Series Classification (WEASEL) [17] is another typical dictionary-based method, composed of a supervised symbolic time series representation for discriminative word generation and the Bag of Patterns (BOP) [18] model for building a discriminative feature vector.
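To make the symbolization step concrete, a minimal Python sketch of PAA followed by SAX is given below. It is an illustration under our own assumptions (z-normalization before PAA, a series length divisible by the number of segments, and equiprobable breakpoints of the standard normal distribution obtained with `statistics.NormalDist`), not the reference implementation of [9,10,11,12]; the function names are hypothetical.

```python
from statistics import NormalDist, fmean, pstdev


def znormalize(series):
    """Rescale a series to zero mean and unit (population) standard deviation."""
    mu, sigma = fmean(series), pstdev(series)
    if sigma == 0:
        return [0.0 for _ in series]
    return [(v - mu) / sigma for v in series]


def paa(series, segments):
    """Piecewise Aggregate Approximation: the mean of each equal-width segment.

    Assumes len(series) is divisible by `segments` to keep the sketch short.
    """
    width = len(series) // segments
    return [fmean(series[i * width:(i + 1) * width]) for i in range(segments)]


def sax(series, segments, alphabet="abcd"):
    """Map the PAA of a z-normalized series to letters via Gaussian breakpoints."""
    a = len(alphabet)
    # a - 1 breakpoints split N(0, 1) into `a` equiprobable regions.
    breakpoints = [NormalDist().inv_cdf(k / a) for k in range(1, a)]
    word = []
    for value in paa(znormalize(series), segments):
        index = sum(value > b for b in breakpoints)  # breakpoints lying below value
        word.append(alphabet[index])
    return "".join(word)
```

For a steadily increasing series such as `[1, 2, 3, 4, 5, 6, 7, 8]` with four segments and a four-letter alphabet, `sax` returns the word `"abcd"`.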

Many TSC methods focus on the distance between time series. Generally, a time series can be regarded as a point in a multi-dimensional space whose dimension equals the length of the time series. Time series of different types tend to gather in different regions of this space, so distance is an effective way to distinguish them. K-Nearest Neighbors (KNN) and the Elastic Ensemble (EE) [19] are two commonly used methods. Ben et al. [20] proposed Proximity Forest, a forest of decision trees that uses distance measures to partition the data. Note that since most distance calculations compare series point by point (“one to one”), the samples must have equal length. For sequences of unequal length, dynamic time warping (DTW) [21,22,23] is a robust calculation method that tolerates differences in length and local shifts in shape. Combining KNN and DTW takes advantage of both at the same time [24,25].
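A compact sketch of DTW combined with 1-NN classification illustrates why DTW handles unequal lengths: the dynamic-programming table lets every point of one series align with one or more points of the other. This is the textbook formulation with a squared-difference cost and no warping window, chosen here for brevity rather than taken from a specific method in [21,22,23,24,25]; the function names are hypothetical.

```python
import math


def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) dynamic time warping with squared-difference cost."""
    n, m = len(a), len(b)
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = (a[i - 1] - b[j - 1]) ** 2
            cost[i][j] = d + min(cost[i - 1][j],      # a[i-1] also matches b[j-2]
                                 cost[i][j - 1],      # b[j-1] also matches a[i-2]
                                 cost[i - 1][j - 1])  # one-to-one step
    return math.sqrt(cost[n][m])


def knn_dtw_predict(train, labels, query):
    """1-nearest-neighbour classification under DTW; series may differ in length."""
    best = min(range(len(train)), key=lambda k: dtw_distance(train[k], query))
    return labels[best]
```

`dtw_distance([0, 1, 2], [0, 1, 1, 2])` is 0 because the repeated 1 can be matched twice, whereas a point-by-point distance is not even defined for these two lengths.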

In reality, different types of time series may have precisely the same statistical characteristics, such as mean, variance, and standard deviation [26]. To avoid this problem, the interval-based method focuses on local features rather than overall features. Deng et al. [27] proposed a Time Series Forest (TSF) model that converts time series into statistical features of sub-sequences and uses a random forest for classification. Cabello et al. [28] further constructed the Supervised Time Series Forest (STSF), an ensemble of decision trees built on intervals selected through a supervised process. Random Interval Spectral Ensemble (RISE) is a popular variant of time series forest [29]. RISE differs from time series forest in two ways. First, it uses a single time series interval per tree. Second, it is trained using spectral features extracted from the series instead of summary statistics. Since RISE relies on frequency information extracted from the time series, it can be defined as a frequency-based classifier.

The shapelet-based method draws inspiration from pattern recognition. Shapelets are defined in [30,31] as “subsequences that are in some sense maximally representative of a class”. Informally, in a binary classification setting, a shapelet is discriminant if it is present in most series of one class and absent from the series of the other class. However, any subsequence may be discriminative and its length is arbitrary, which means that all samples and their subsequences need to be checked through a sliding window, so the search space for shapelets is enormous. In response to this problem, Ji et al. [32,33] proposed a fast shapelet selection algorithm.
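The size of the shapelet search space can be seen from a small sketch. It computes the usual subsequence distance (the minimum Euclidean distance between a candidate and every window of a series, here without the z-normalization that many shapelet methods apply) and counts the candidate subsequences in one series; both function names are hypothetical.

```python
import math


def subsequence_distance(series, shapelet):
    """Minimum Euclidean distance between `shapelet` and every window of `series`.

    A window of the shapelet's length slides over the series one step at a time.
    """
    length = len(shapelet)
    best = math.inf
    for start in range(len(series) - length + 1):
        window = series[start:start + length]
        d = math.sqrt(sum((w - s) ** 2 for w, s in zip(window, shapelet)))
        best = min(best, d)
    return best


def candidate_count(n, min_len=1):
    """Number of subsequences with length >= min_len in one series of length n."""
    return sum(n - length + 1 for length in range(min_len, n + 1))
```

A single series of length 100 already yields `candidate_count(100) == 5050` candidates, and each one must be compared against every window of every training series, which is the cost that fast selection algorithms such as [32,33] aim to reduce.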

Building on the recent success of convolutional neural networks for time series classification, Dempster et al. [34] observed that simple linear classifiers using random convolutional kernels achieve state-of-the-art accuracy at a fraction of the computational expense of existing methods. They therefore proposed ROCKET, a kernel-based time series classification method. This opens a new direction for TSC that can both reduce computational complexity and improve accuracy.

By analyzing the five categories of classification methods, we realized that the existing algorithms essentially try to find efficient distinguishing features by learning all of the original information in a sample, which leads to high computational complexity and resource consumption. In fact, humans do not need all of the information to distinguish time series. Instead, we pay attention to only a few critical data points, which are enough to describe the approximate outline of a time series sample and present a significant distribution. This paper proposes a classification framework based on perceptual features (PFC), which extracts the support points of the morphological structure from the original time series and further obtains interval-level and point-level features for classifiers such as decision trees. The contributions of our work are described below.

An improved algorithm called globally restricted matching perceptually important points (GRM-PIPs) is proposed, which avoids the redundancy caused by sequential matching in traditional important point extraction.

How many data points are necessary to describe complete information? We conducted in-depth research on this question and verified our opinions through mathematical proofs and experiments.

The data points extracted by GRM-PIPs can divide the time series into sub-sequences similar to shapelets. We used statistical features such as mean, standard deviation, slope, skewness, and kurtosis to enhance discrimination further.

Most classifiers learn from the information in the original time series, which makes them unsuitable for perceptual features. Therefore, we matched suitable classifiers to these features and proposed a complete perceptual features-based framework.

The remainder of this paper is organized as follows. Section 2 presents related work on PIPs, decision trees, random forests, and gradient boosting decision trees. Section 3 describes PFC in detail, including GRM-PIPs, perceptual feature extraction, and classifier adaptation. Section 4 presents the experimental setup, the performance of the proposed approach, and comparisons with other methods, together with a discussion of the differences in the experimental results. Finally, Section 5 gives the conclusions and directions for future research.

2. Related Work

2.1. Perceptually Important Points

For time series, avoiding point-to-point local comparison is the key to reducing computational complexity. In time series pattern mining, unique and frequently occurring patterns can be abstractly represented by several critical points. It is precisely through these points with important visual impact that humans remember specific time series patterns [35]. The definition of perceptually important points was first introduced in [36]. The PIPs algorithm retains the key turning points in a time series, and its ability to capture these critical points has been verified in time series segmentation and pattern recognition [37,38,39].

Interestingly, PIPs have been widely used in research on stock time series. Fu et al. [40] used PIPs as a new time series segmentation method to extract uptrend and downtrend patterns. Mojtaba et al. [41] regarded PIPs as a dimensionality reduction method similar to PCA and combined them with support vector regression to predict stock market trends. Turning points in stock time series indicate substantial changes in the market, and PIPs are sensitive to these dividing points, which is an advantage of PIPs.

In general, we define a time series as $T = \{t_1, t_2, \ldots, t_n\}$. This is the classic one-dimensional definition, which treats a time series as a string of data arranged chronologically. However, a one-dimensional data sequence has no explicit morphological structure and cannot be displayed on a two-dimensional plane. Therefore, we extend the traditional one-dimensional definition to two dimensions to explain the calculation process of PIPs. By introducing the time dimension, the two-dimensional definition of a time series is $T = \{(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)\}$, where $x_i$ represents the position of the current data point in the entire time series and $y_i$ corresponds to its amplitude. PIPs use a concise idea to extract the important points in the morphological structure of a time series. The process is as follows.

Definition 1. Perceptually Important Points.

Given a time series sample $T = \{(x_1, y_1), \ldots, (x_n, y_n)\}$, an empty list $P$ is used to save the extracted perceptually important points. When extracting m important points, the following steps are followed.

Step 1: Put the first point $(x_1, y_1)$ and the last point $(x_n, y_n)$ in T into $P$ as the initial two PIPs.

Step 2: For each point in T, calculate its distance to the first two PIPs. Choose the point with the largest distance as the third PIP and save it in $P$.

Step 3: The fourth PIP is the point that maximizes its distance to its adjacent PIPs (which are either the first and the third, or the third and the second PIP). Save the fourth PIP into $P$.

Step 4: For each new PIP, use the same method as for the fourth PIP, repeating Step 3 until the length of $P$ equals m.

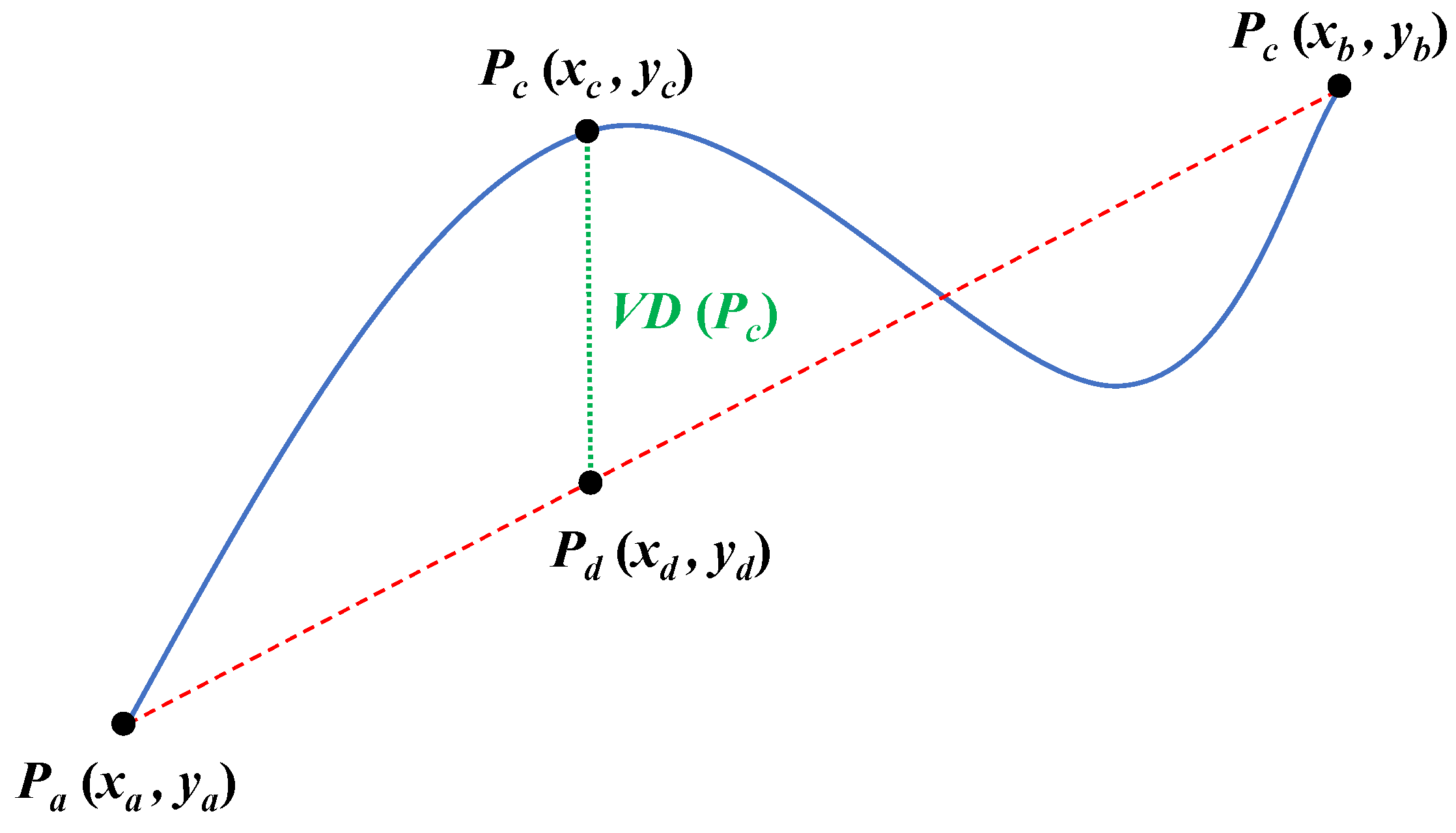

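For PIPs, there are three distance measures: the Euclidean distance (ED), the perpendicular distance (PD), and the vertical distance (VD). The vertical distance between a point $p_3 = (x_3, y_3)$ and the line connecting $p_1 = (x_1, y_1)$ and $p_2 = (x_2, y_2)$ is calculated by Formula (1) and illustrated in Figure 2.

$$VD(p_3, \overline{p_1 p_2}) = \left| y_1 + (y_2 - y_1)\frac{x_3 - x_1}{x_2 - x_1} - y_3 \right| \quad (1)$$

We use VD to show the calculation process of PIPs through a simple example.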

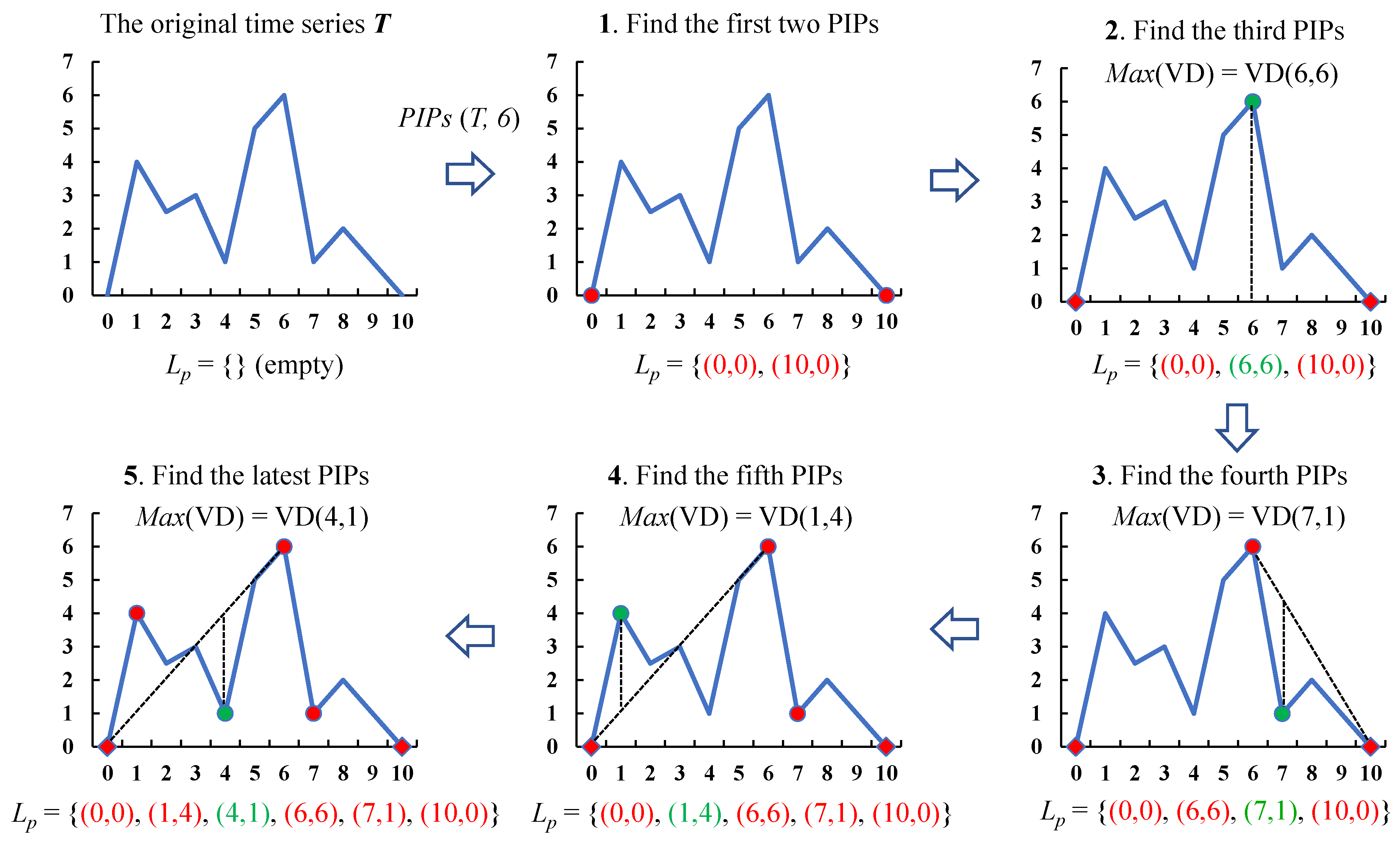

We define the extraction of m PIPs from the sample T as . For example, if the one-dimensional representation of T is , its corresponding two-dimensional representation is as below. The process of finding six PIPs from T is shown in Figure 3.

It should be noted that when there are two points with the same maximum vertical distance, the first calculated point is usually set as the new PIP.
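Definition 1 and Formula (1) can be combined into a short sketch of PIPs extraction with VD. We assume 0-based positions as the x-coordinates (a constant offset does not change VD), search all gaps between adjacent PIPs for the global maximum at each step, and keep the first point examined when distances tie, following the remark above; the function names are hypothetical.

```python
def vertical_distance(p1, p2, p3):
    """Vertical distance (VD) from p3 to the line through p1 and p2, as in Formula (1)."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    y_on_line = y1 + (y2 - y1) * (x3 - x1) / (x2 - x1)
    return abs(y_on_line - y3)


def extract_pips(values, m):
    """Return the sorted 0-based indices of m PIPs (assumes len(values) >= 2, m >= 2)."""
    n = len(values)
    points = list(enumerate(values))      # two-dimensional form (x_i, y_i)
    m = min(m, n)
    pips = [0, n - 1]                     # Step 1: first and last points
    while len(pips) < m:                  # Steps 2-4
        pips.sort()
        best_idx, best_dist = None, -1.0
        # Examine every point between each pair of adjacent PIPs.
        for left, right in zip(pips, pips[1:]):
            for k in range(left + 1, right):
                d = vertical_distance(points[left], points[right], points[k])
                if d > best_dist:         # strict '>' keeps the first maximum on ties
                    best_idx, best_dist = k, d
        if best_idx is None:              # no interior points left
            break
        pips.append(best_idx)
    return sorted(pips)
```

On `[0, 0, 10, 0, 0]` the peak is found first; the fourth PIP is then a tie between positions 1 and 3, and the sketch keeps position 1 because it is examined first.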

2.2. Decision Tree and Ensemble Methods

A decision tree (DT) is a decision support tool that uses a tree-like model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility. It is one way to display an algorithm that contains only conditional control statements. DT is a non-parametric supervised learning method used for classification and regression. Its purpose is to create a model that predicts the value of a target variable by learning simple decision rules from the data features [42]. DTs are commonly used in operations research, specifically in decision analysis, to help identify the strategy most likely to reach a goal, and they are also a popular tool in machine learning [43].

DT is a predictive model in machine learning that represents a mapping between object attributes and object values. Each node in the tree represents an object, each branch represents a possible attribute value, and each leaf node corresponds to the value of the object represented by the path from the root node to that leaf. A decision tree has only a single output; to handle multiple outputs, an independent decision tree can be built for each output [44]. DT is also a frequently used technique in data mining, where it can be used both to analyze data and to make predictions [45].

Applications of decision trees to TSC mainly follow three directions: pattern recognition, shapelet transformation, and feature selection. Pierre [4] argued that many time series classification problems can be solved by detecting and combining local properties or patterns in time series, and he proposed a DT-based technique to find patterns that are useful for classification. Qiu et al. [46] forecast the Shanghai Composite Index based on fuzzy time series and improved C-fuzzy decision trees. Willian et al. [47] explored shapelet transformation for time series classification in decision trees and developed strategies to improve the representation quality of the shapelet transformation. In essence, a DT learns the data through “if-then-else” rules, and the deeper the rules are applied, the more closely the tree fits the data.

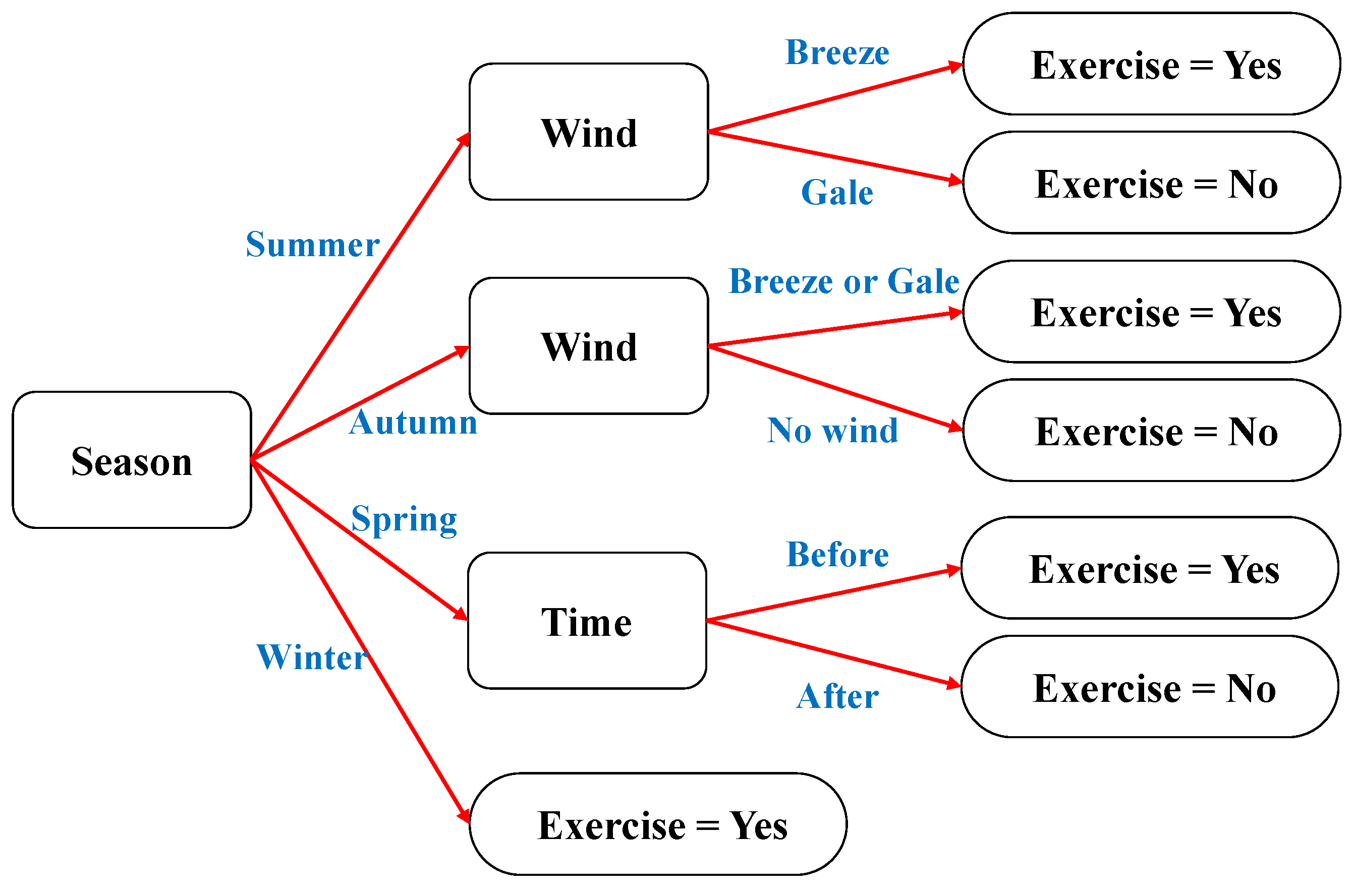

The literature on DTs is extensive, so we do not review it in detail here; instead, a simple example introduces DT. Consider a scenario with three factors: season, wind, and time. In this scenario, we record whether someone does morning exercises, as shown in Table 1. This is a typical classification task, and the decision tree constructed from Table 1 is shown in Figure 4.

Ensemble methods are a higher-level application in which the decision tree is regarded as a basic (weak) estimator. The goal of ensemble methods is to combine the predictions of multiple basic estimators to achieve better generalization or robustness than a single estimator. Ensemble methods generally fall into three categories:

Bagging Method. This method usually considers homogeneous weak estimators, learns them independently and in parallel, and combines them according to a deterministic averaging process [48]. In general, the combined estimator is better than a single estimator because its variance is reduced. Random forest (RF) [49] is a typical bagging method; it builds a large number of decision trees to filter features and obtain the best decision rule set.

Boosting Method. This method also combines homogeneous weak estimators. It learns them sequentially in a highly adaptive way (each basic estimator attempts to reduce the bias of the combined estimator) and combines them according to a deterministic strategy. Popular boosting methods include AdaBoost and Gradient Tree Boosting. Freund and Schapire proposed the former in 1999 [50]. Its core idea is to train a series of weak estimators by repeatedly modifying the weights of the data [51]. Gradient Tree Boosting [52], on the other hand, generalizes boosting to arbitrary differentiable loss functions. It can be used for classification and regression and has been applied in various fields, including web search ranking and ecology [53,54].

Stacking Method. Unlike the previous methods, stacking uses heterogeneous estimators, learns them in parallel, and combines them by training a “meta-model” that outputs a final result from their different predictions [55].

3. Perceptual Features-Based Framework

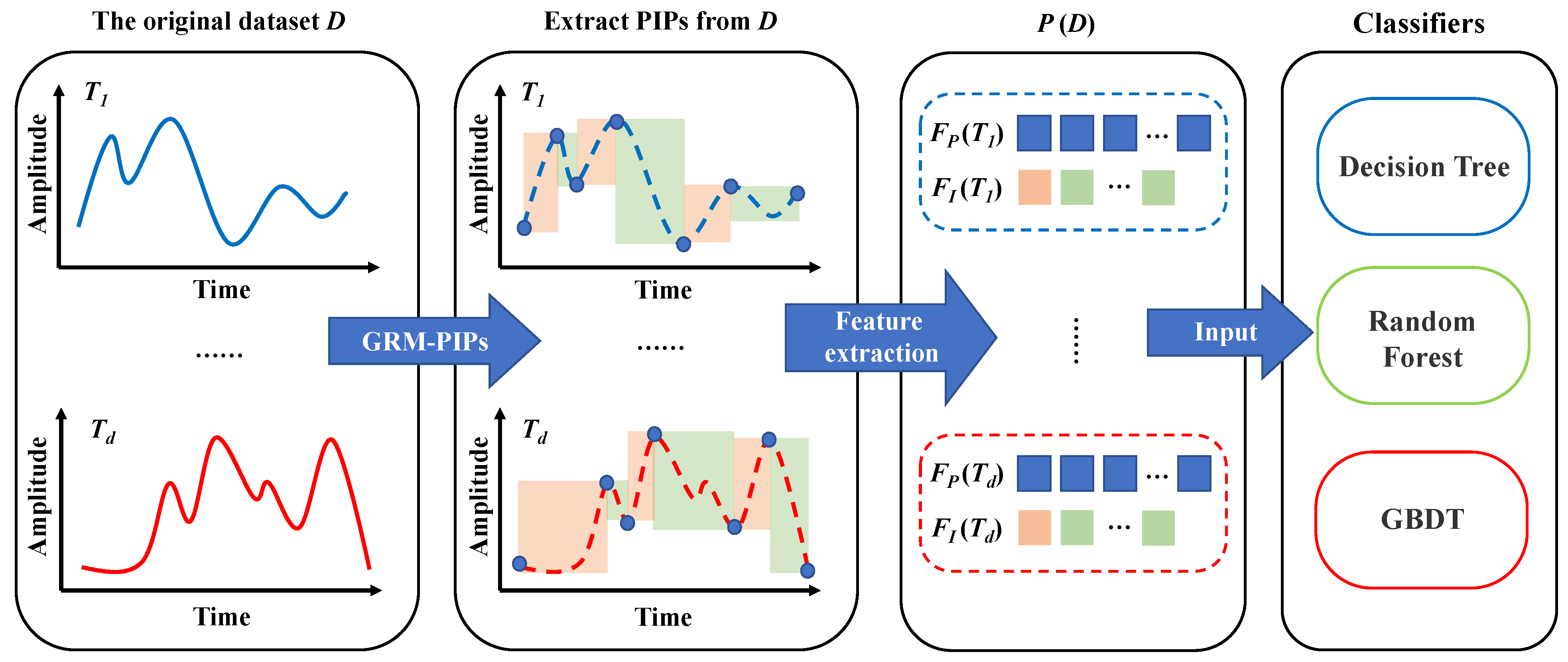

This section introduces the perceptual features-based framework (PFC) in detail. It consists of three parts, time series preprocessing with GRM-PIPs, feature extraction, and classification, which are applied in a fixed order.

3.1. Time Series Preprocessing with GRM-PIPs

The purpose of this part is to traverse the time series and extract a certain number of PIPs. Building on the traditional PIPs algorithm, we adopt a global optimal selection strategy and propose a restrictive selection method. The relevant definition is as follows.

Definition 2. Globally Restricted Matching Perceptually Important Points.

Given a time series sample $T = \{(x_1, y_1), \ldots, (x_n, y_n)\}$, an empty list $P$ is used to save the extracted perceptually important points, and the minimum length of the interval between adjacent PIPs is defined as δ. Commonly, when the number of extracted PIPs m is large enough ($m \ge n$), all points in T will be identified as PIPs; if the parameter δ is considered, however, the upper limit on the number of PIPs is further restricted. The calculation steps of GRM-PIPs are as follows.

Step 1: Put the first point $p_1 = (x_1, y_1)$ and the last point $p_n = (x_n, y_n)$ in T into $P$ as the initial two PIPs.

Step 2: Set a temporary PIP $p_k$, which can be any point in T, and calculate the vertical distance between $p_k$ and the line $\overline{p_1 p_n}$. $p_k$ divides the sequence $\{p_1, \ldots, p_n\}$ into two subsequences, $\{p_1, \ldots, p_k\}$ and $\{p_k, \ldots, p_n\}$. If the length of either subsequence is less than δ, the current $p_k$ is not considered, and another point is taken as $p_k$. The calculation continues until the $p_k$ that maximizes the vertical distance while keeping the length of every subsequence at least δ is found; this $p_k$ is saved in $P$ as the third PIP.

Step 3: The fourth PIP is the point that maximizes the vertical distance to its adjacent PIPs (which are either the first and the third, or the third and the second PIP) while keeping the length of every segmented subsequence at least δ. Save the fourth PIP into $P$.

Step 4: For each new PIP, use the same method as for the fourth PIP, repeating Step 3 until the length of $P$ equals m or no remaining point satisfies the length restriction.
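The steps of Definition 2 can be sketched in Python as follows. This is a hedged illustration, not the authors' code: we assume 0-based positions as x-coordinates, count the length of a subsequence inclusively (both delimiting PIPs are members, as in $\{p_1, \ldots, p_k\}$), accept a candidate only if both resulting subsequences have at least δ points, and break ties toward the first candidate examined. The name `grm_pips` is hypothetical.

```python
def vertical_distance(p1, p2, p3):
    """Vertical distance from p3 to the line through p1 and p2."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    return abs(y1 + (y2 - y1) * (x3 - x1) / (x2 - x1) - y3)


def grm_pips(values, m, delta=4):
    """Sketch of GRM-PIPs returning sorted 0-based indices.

    Like plain PIPs, but every subsequence between adjacent PIPs, counted
    inclusively, must keep at least `delta` points (assumes len(values) >= 2).
    """
    n = len(values)
    points = list(enumerate(values))
    pips = [0, n - 1]                       # Step 1
    while len(pips) < m:                    # Steps 2-4
        pips.sort()
        best_idx, best_dist = None, -1.0
        for left, right in zip(pips, pips[1:]):
            for k in range(left + 1, right):
                # Both new subsequences {left..k} and {k..right} need >= delta points.
                if k - left + 1 < delta or right - k + 1 < delta:
                    continue
                d = vertical_distance(points[left], points[right], points[k])
                if d > best_dist:           # first maximum wins ties
                    best_idx, best_dist = k, d
        if best_idx is None:                # upper limit reached: no admissible point
            break
        pips.append(best_idx)
    return sorted(pips)
```

On `[0, 0, 10, 0, 0, 0, 0, 0]` with δ = 4 the sketch skips the sharp peak at position 2 that plain PIPs would pick first, because that split would leave a subsequence of only three points; on a flat series of length 80 it stops at 27 PIPs.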

GRM-PIPs ensures a well-spread distribution of PIPs over the entire time series by adding a restriction on the interval length. Figure 5 shows a simple example that distinguishes traditional PIPs from the proposed GRM-PIPs.

In this example, we set a time series sample T in (7) with the length .

Figure 5 shows that the morphological structure of T consists of two peaks and one trough. Seven PIPs were extracted from it. There is an apparent difference between the results of GRM-PIPs and PIPs, which are highlighted by red and green circles, respectively. Traditional PIPs easily fall into local optima because there is no interval constraint, and the selected PIPs contribute little to depicting the overall structure. GRM-PIPs avoids this problem and accurately extracts PIPs that better generalize the structural features.

In GRM-PIPs, the number of extracted PIPs has an upper limit because of the interval length δ. To calculate this upper limit, we first define the “quotient”. Given two integers $a$ and $b$ with $b > 0$, there is a unique pair of integers $q$ and $r$ satisfying $a = qb + r$ with $0 \le r < b$; $q$ is called the quotient of $a$ divided by $b$, abbreviated as $q = \lfloor a / b \rfloor$. In this way, the number of extracted PIPs can be calculated as follows:

The upper limit is clearly closely related to δ. In our research, we set δ = 4 because this value is determined by the subsequent feature extraction, as explained in detail in Section 3.2.
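As a hedged cross-check of this upper limit, suppose (our assumption) that the length of a subsequence counts both of its delimiting PIPs, so that a subsequence of at least δ points spans at least δ − 1 steps along the time axis. Since m PIPs create m − 1 such subsequences that together cover the n − 1 steps of a series of length n, the number of PIPs is bounded as follows, where $\lfloor \cdot \rfloor$ denotes the integer quotient:

$$(m-1)(\delta-1) \le n-1 \quad\Longrightarrow\quad m \le \left\lfloor \frac{n-1}{\delta-1} \right\rfloor + 1$$

For instance, with δ = 4 a series of length n = 80 admits at most ⌊79/3⌋ + 1 = 27 PIPs under this convention. This is our own derivation and may differ in detail from the formula used by the authors.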

3.2. Feature Extraction

In this paper, we extract two kinds of features from time series: point-level features and interval-level features.

The point-level features are straightforward: they are the coordinates of the PIPs. We found that for different classes of time series, the distributions of PIPs in the two-dimensional space are also significantly different. Most importantly, these distributions are consistent between the training set and the test set. Therefore, point-level features are both distinctive and consistent and deserve attention. Some representative UCR datasets, shown in Figure 6, confirm this view.

On the other hand, PIPs also produce an excellent segmentation of the time series. For many datasets, the distributions of PIPs do not differ significantly between classes. In this case, interval-level features are needed to help the classifier further distinguish samples of different categories. We use five interval-level features:

Arithmetic mean. The arithmetic mean (or simply mean) $\mu$ of a sequence with amplitudes $y_1, \ldots, y_n$ is the sum of all of the amplitudes divided by the length of the sequence n. This rough feature describes the average level of all data in the sequence. The arithmetic mean is calculated by Formula (4):

$$\mu = \frac{1}{n}\sum_{i=1}^{n} y_i \quad (4)$$

Standard deviation. In statistics, the standard deviation σ is a measure of the amount of variation or dispersion of a set of values. A low standard deviation indicates that the values tend to be close to the mean of the set, while a high standard deviation indicates that the values are spread out over a wider range. The standard deviation plays an important role in distinguishing frequently fluctuating series from steadily changing series. The calculation of this feature is shown below.

Slope. In mathematics, the slope or gradient of a line is a number that describes both the direction and the steepness of the line. Slope is calculated as the ratio of the “vertical change” to the “horizontal change” between any two distinct points on a line. We can likewise abstract any subsequence as a straight line connecting two adjacent PIPs and judge its trend by calculating the slope of the interval. For a sequence $S = \{(x_1, y_1), \ldots, (x_n, y_n)\}$, the slope can be calculated according to the following formula:

$$k = \frac{y_n - y_1}{x_n - x_1}$$

Kurtosis. In probability theory and statistics, kurtosis is a measure of the “tailedness” of the probability distribution of a real-valued random variable. The standard measure of a distribution’s kurtosis is a scaled version of the fourth moment of the distribution. Strictly speaking, kurtosis is not the same as peakedness. Higher kurtosis means that the data contain large deviations or extreme outliers far from the mean. However, in most cases, when the amplitude within a period of the time series is high, the corresponding kurtosis is also high. To calculate the kurtosis K, we use the standard unbiased estimator, where $\bar{y}$ is the mean of the amplitudes $y_1, \ldots, y_n$:

$$K = \frac{(n+1)\,n\,(n-1)}{(n-2)(n-3)} \cdot \frac{\sum_{i=1}^{n}(y_i - \bar{y})^4}{\left(\sum_{i=1}^{n}(y_i - \bar{y})^2\right)^2} - 3\,\frac{(n-1)^2}{(n-2)(n-3)}$$

Note that n represents the number of samples and that the formula contains the factor $(n-2)(n-3)$. Because this factor is part of the denominator, it must be nonzero, which means that n must be a positive integer greater than 3. This is why we require the parameter δ to be equal to 4.

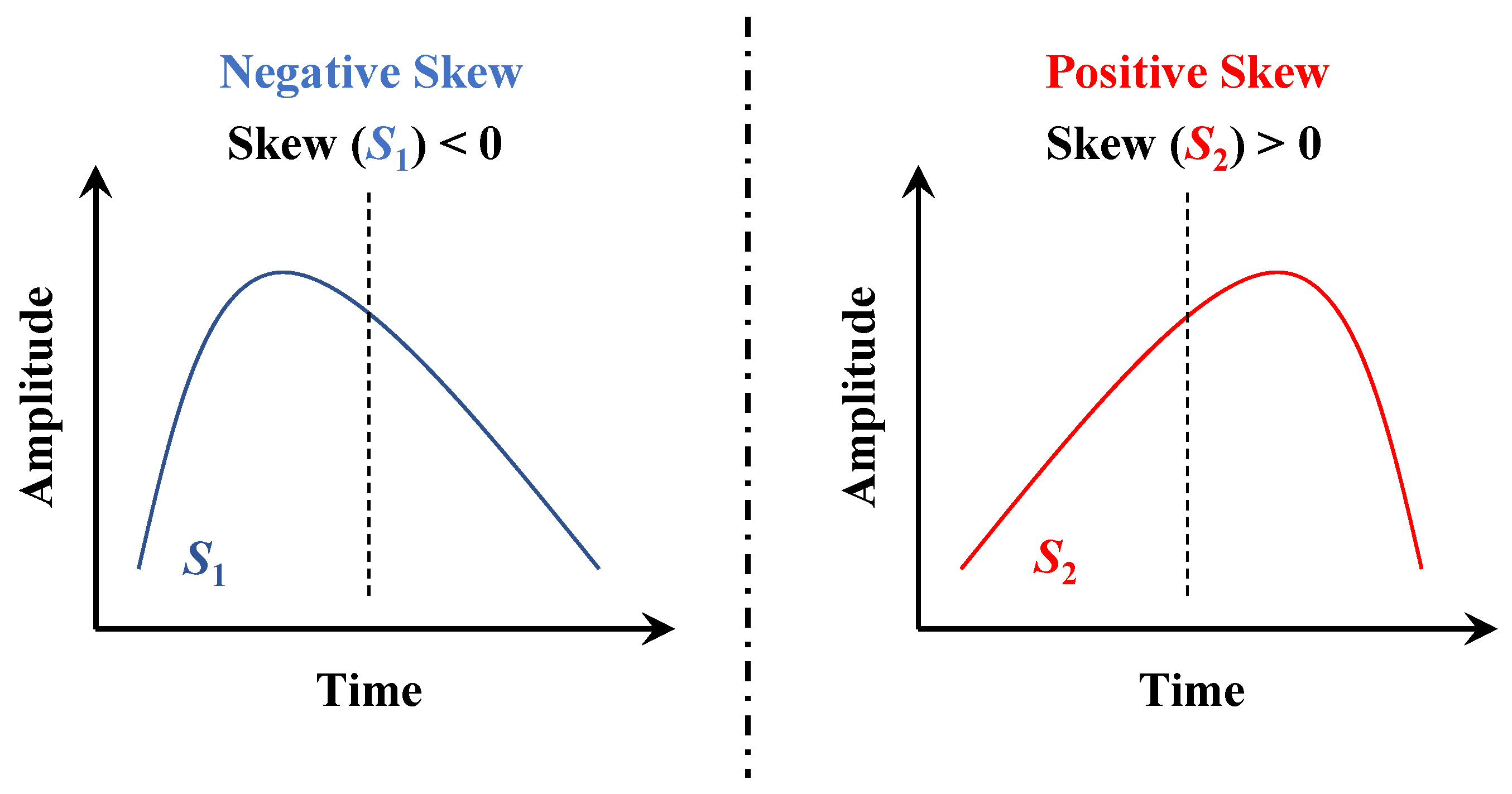

Skewness. In probability theory and statistics, skewness is a measure of the asymmetry of the probability distribution of a real-valued random variable about its mean. Skewness can be understood visually as the degree to which the shape of the distribution leans to the left or right. For example, of the two sequences shown in Figure 7, one is almost a mirror image of the other, a situation that the mean, standard deviation, slope, and kurtosis cannot distinguish. Skewness makes up for this deficiency. Its formula is similar to that of kurtosis: skewness is a scaled version of the third central moment.
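The five interval-level features can be sketched for a single subsequence as below. The choices the text leaves open are our assumptions: the population standard deviation, the endpoint slope between the two delimiting PIPs, the adjusted Fisher-Pearson estimator for skewness, and the unbiased estimator of excess kurtosis (the "standard unbiased estimator" named above); constant subsequences, for which both moments are undefined, are mapped to 0. The function name `interval_features` is hypothetical.

```python
import math
from statistics import fmean, pstdev


def interval_features(points):
    """Return (mean, std, slope, skewness, kurtosis) for a list of (x, y) points.

    Assumptions: population std, endpoint slope, bias-corrected skewness and
    excess kurtosis; constant intervals get skewness = kurtosis = 0.
    """
    n = len(points)
    if n < 4:
        raise ValueError("the kurtosis estimator needs at least 4 points (delta = 4)")
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    mean = fmean(ys)
    std = pstdev(ys)
    slope = (ys[-1] - ys[0]) / (xs[-1] - xs[0])   # line through the two end PIPs
    s2 = sum((y - mean) ** 2 for y in ys)
    s3 = sum((y - mean) ** 3 for y in ys)
    s4 = sum((y - mean) ** 4 for y in ys)
    if s2 == 0:
        return mean, std, slope, 0.0, 0.0
    # Adjusted Fisher-Pearson skewness: G1 = sqrt(n(n-1)) / (n-2) * g1.
    g1 = (s3 / n) / (s2 / n) ** 1.5
    skewness = math.sqrt(n * (n - 1)) / (n - 2) * g1
    # Unbiased excess kurtosis G2; (n-2)(n-3) in the denominator forces n > 3.
    kurtosis = ((n + 1) * n * (n - 1) / ((n - 2) * (n - 3)) * s4 / s2 ** 2
                - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3)))
    return mean, std, slope, skewness, kurtosis
```

For the points (0, 1), (1, 2), (2, 3), (3, 4), the sketch returns a mean of 2.5, a slope of 1.0, a skewness of 0, and an excess kurtosis of about -1.2. Flipping a subsequence vertically changes the sign of its skewness but leaves its standard deviation and kurtosis unchanged, which is why skewness is the feature that separates such mirrored shapes.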

3.3. Classifier and the PFC Framework

In a TSC dataset, the data consist of d time series and their corresponding labels. We extract m PIPs from each series through GRM-PIPs and obtain m − 1 intervals, thereby converting the original dataset into the corresponding feature set. Subsequently, the training part of the feature set is input into the classifier, and the test part is used for verification.

We regard the feature set as a high-level representation of the raw data: essentially a combination of many features and an explicit expression of morphological information. Therefore, we prefer classifiers that are well suited to feature processing. In the PFC framework, we select classifiers at three levels: the decision tree as the basic estimator, the random forest based on bagging, and the gradient boosting decision tree based on boosting.

There are many ways to implement decision trees, such as ID3, C4.5, and CART. Since CART generally performs better than the other methods, we implement CART. We chose RF and GBDT because both are classifiers developed from decision trees. RF learns jointly by constructing a large number of decision trees and integrating all of their classification results, giving all basic estimators equal weight. GBDT, in contrast, builds a strong classifier from weak ones by iteratively adding trees, each fitted to the negative gradient of the loss of the current ensemble.

A schematic diagram of the PFC framework is shown in Figure 8. The innovations of our work are GRM-PIPs, the combination of point-level and interval-level features, and the use of suitable classifiers to form a framework for TSC tasks. Since we aim to explore the effect of the framework as a whole, we made no special optimizations to the classifiers, all of which use standard implementations. Further improvement of the classifiers is left for future work.

4. Performance Evaluation and Discussion

4.1. Experimental Design

The UCR archive has been widely used as a benchmark for evaluating TSC algorithms [8] (see details at http://www.timeseriesclassification.com, accessed on 1 May 2021). It currently contains 128 datasets, 15 of which have unequal lengths, 15 of which contain missing values, and one of which (Fungi) has a single instance per class in the training file. Given this situation, we evaluate PFC on a subset of the UCR datasets. Since the classes in two-category datasets are mutually exclusive, we divide the verification into two types: two-category and hybrid.

In the two-category verification, we selected all the two-category datasets in the UCR archive except the two with many missing values, leaving 40 datasets for the comparison experiments. Since PFC is a fast and straightforward classification method, comparing it with methods that use neural networks or consume substantial computing resources and time would be unfair. Therefore, we exclude such computationally expensive benchmarks, for example the deep learning model ResNet and the large heterogeneous ensemble HIVE-COTE. The following five classification algorithms were selected for comparison: word extraction for time series classification (WEASEL), bag of symbolic-Fourier approximation symbols (BOSS), time series forest (TSF), random interval spectral ensemble (RISE), and canonical time-series characteristics (Catch22). The results of these algorithms have been officially published.

In the hybrid verification, we compare PFC with several recently published methods: extreme-SAX (E-SAX, 2020) [56], interval feature transformation (IFT, 2020) [57], and discriminative virtual sequences learning (DVSL, 2020) [58]. PFC is tested on the same datasets as these methods, which include both two-category and multi-category datasets.

In addition, by analyzing the experimental results, we seek answers to the following questions:

All experiments strictly follow UCR’s division into training and test sets. Classification accuracy is uniformly adopted as the metric; where a method reports classification error, we convert it to accuracy. Let $N_c$ denote the number of correctly classified time series and $N_t$ the total number of time series in the test set. The classification accuracy ($Acc$) and error ($Err$) are calculated as follows:

$$Acc = \frac{N_c}{N_t}, \qquad Err = 1 - Acc = \frac{N_t - N_c}{N_t}$$
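The accuracy and error used here amount to simple counting. A minimal sketch, where $N_c$ is the number of correctly classified test series and $N_t$ the size of the test set (the function name is hypothetical):

```python
def accuracy_and_error(y_true, y_pred):
    """Return (Acc, Err) with Acc = N_c / N_t and Err = 1 - Acc."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label lists of equal length")
    n_correct = sum(t == p for t, p in zip(y_true, y_pred))  # N_c
    n_total = len(y_true)                                    # N_t
    acc = n_correct / n_total
    return acc, 1 - acc
```

Converting a published error of 0.25 into accuracy is the same relation read backwards: $Acc = 1 - 0.25 = 0.75$.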

Because RF and GBDT are randomized, each final experimental result is the average of 50 runs with the same parameters. We perform no particular parameter optimization for DT, RF, or GBDT. DT uses information gain to measure the quality of a split, and nodes are expanded until all leaves are pure. RF uses 600 trees, and GBDT performs 600 boosting stages.
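The classifier settings above map naturally onto scikit-learn, which we assume here because the text does not name a library. Only the parameters stated in the text are set; everything else, including the learning rate and tree depth of GBDT, is left at the library defaults, and the helper names are hypothetical.

```python
def make_classifiers(seed=None):
    """Return (DT, RF, GBDT) configured as described in the text (needs scikit-learn)."""
    from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
    from sklearn.tree import DecisionTreeClassifier

    # CART tree split by information gain (entropy); max_depth=None expands nodes
    # until leaves are pure or hold fewer than min_samples_split samples.
    dt = DecisionTreeClassifier(criterion="entropy", max_depth=None, random_state=seed)
    rf = RandomForestClassifier(n_estimators=600, random_state=seed)        # 600 trees
    gbdt = GradientBoostingClassifier(n_estimators=600, random_state=seed)  # 600 stages
    return dt, rf, gbdt


def mean_accuracy(make_model, X_train, y_train, X_test, y_test, runs=50):
    """Average test accuracy of a randomized model over `runs` different seeds."""
    scores = []
    for seed in range(runs):
        model = make_model(seed)
        model.fit(X_train, y_train)
        scores.append(model.score(X_test, y_test))  # mean accuracy on the test set
    return sum(scores) / runs
```

For example, `mean_accuracy(lambda s: make_classifiers(s)[1], X_tr, y_tr, X_te, y_te)` would average the RF accuracy over 50 seeds, where `X_tr`, `y_tr`, `X_te`, and `y_te` stand for the feature matrices and labels produced by the PFC feature extraction.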

4.2. The Verification of Two-Category

Information on the 40 two-category datasets in the UCR archive is listed in Table 2. These datasets cover various situations, such as short-sequence classification (Chinatown and ItalyPowerDemand), long-sequence classification (HandOutlines, HouseTwenty, and SemgHandGenderCh2), imbalanced sizes of training and test sets (ECGFiveDays and FreezerSmallTrain), and so on.

The classification accuracy of the five benchmark methods and PFC on these datasets is shown in Table 3. Not all datasets have public results for the five benchmark methods: the results for two datasets (FordB and HandOutlines) are missing. These two datasets were excluded when counting how often each method obtained the best accuracy, so the results on the remaining 38 datasets were considered.

The PFC framework achieved the best accuracy on 13 of the 38 UCR datasets. Interestingly, with DT and with GBDT as the classifier, PFC achieves the best accuracy 6 times, fewer than the 10 times achieved with RF. Nevertheless, these variants still outperform RISE, TSF, and Catch22.

This seems counter-intuitive: GBDT, the most complex classifier, did not achieve the best results. However, this can be explained. We noticed that the number of PIPs extracted by GRM-PIPs when the best results are obtained differs significantly between classifiers (see Appendix A for details). DT and GBDT achieve their best results with almost the same number of PIPs, whereas RF requires more PIPs to reach higher accuracy. This suggests that RF has the highest performance ceiling of the three classifiers. This may be because no parameter optimization was performed: GBDT and DT usually rely on parameter tuning to improve accuracy, whereas RF is less sensitive to its parameters, and its large number of randomized decisions can effectively compensate for untuned parameters.

We analyze the experimental results in Table 3 in depth from two aspects:

The impact of time series length on accuracy. We sort all the datasets by length. The first group contains the 11 datasets shorter than 100; the second group contains 11 datasets with lengths greater than 100 but less than 300; the third group covers 15 datasets with lengths from 300 to 1000; and the remaining three datasets, whose lengths exceed 1000, form the fourth group. From the first group to the fourth, PFC achieves the best accuracy 3, 6, 4, and 0 times, respectively. The results show that PFC is good at distinguishing time series samples whose lengths range from 100 to 1000. For samples shorter than 100, GRM-PIPs can extract at most 27 PIPs and generate 26 intervals, which makes the feature dimension much larger than the original sequence dimension; this redundant information prevents the classifier from obtaining robust decision rules. On the other hand, since the experiments extract at most 30 PIPs, the features of samples longer than 1000 may be incompletely extracted.
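The claim that short series yield more features than raw values can be checked with a quick count. Assuming that each PIP contributes its two coordinates as point-level features and each of the m − 1 intervals contributes the five interval-level features (the exact feature layout is our assumption), m PIPs give 2m + 5(m − 1) features:

```python
def feature_dimension(m, coords_per_pip=2, stats_per_interval=5):
    """Hypothetical feature count: point-level coordinates plus interval statistics."""
    return coords_per_pip * m + stats_per_interval * (m - 1)
```

Under this layout, 27 PIPs give 54 + 130 = 184 features, more than the length of any series in the first group (all shorter than 100), while the 30-PIP cap used in the experiments gives 205 features.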

Does the imbalance between the training set and the test set affect the accuracy of PFC? According to the current results, it does not: the relative sizes of the training and test sets do not appear to affect accuracy.

4.3. The Hybrid Verification

In the hybrid verification, we compare PFC with the TSC methods from three recently published papers. Since each of these methods was validated on a different set of datasets, we compare against them one at a time, using the same datasets as the respective paper.

First, we compare PFC with DVSL. Abhilash et al. [58] argued that existing VSML methods employ fixed virtual sequences, which might not be optimal for subsequent classification tasks. They therefore proposed DVSL, which learns a set of discriminative virtual sequences that help separate time series samples in a feature space, and validated it on 15 UCR datasets. The results of the comparison are shown in Table 4.

On these 15 UCR datasets, PFC achieves higher accuracy than DVSL on 12. However, its accuracy is much lower than that of DVSL on some datasets, such as Beef.

Figure 9 shows the distribution of PIPs in Beef. Only one class (represented by the red dots) has a clearly distinguishable distribution, while the distributions of the other classes are highly similar. We believe that PFC can separate samples with clearly distinguishing characteristics, but when these characteristics are highly similar across several classes, PFC fails. Although such cases are occasional, PFC relies on morphological perception information, so it struggles with samples whose shapes differ only slightly.

The second comparison method is IFT [57], which also uses PIPs. Unlike PFC, IFT selects interval features based on information gain, which effectively makes the whole method a special kind of decision tree. Since both PFC and IFT exploit the importance of morphological features, this is a meaningful comparison. IFT was validated on 22 UCR datasets, and we tested PFC on the same datasets. The comparison results are shown in Table 5.
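Information-gain-based selection can be illustrated with the textbook entropy criterion used by decision trees. The sketch below is a generic illustration, not IFT's actual procedure from [57]: it assumes each candidate interval feature is a single numeric column, scores it by its best binary threshold split, and keeps the highest-scoring column. `entropy`, `best_threshold`, and `select_feature` are hypothetical names, and the data are toy values.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a sequence of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def best_threshold(values, labels):
    """Return (gain, threshold) of the best binary split on one feature.

    Candidate thresholds are midpoints between consecutive distinct values.
    """
    pairs = sorted(zip(values, labels), key=lambda p: p[0])
    n = len(pairs)
    base = entropy([y for _, y in pairs])
    best_gain, best_thr = 0.0, None
    for k in range(1, n):
        if pairs[k - 1][0] == pairs[k][0]:
            continue  # no threshold fits between equal values
        left = [y for _, y in pairs[:k]]
        right = [y for _, y in pairs[k:]]
        gain = base - (k / n) * entropy(left) - ((n - k) / n) * entropy(right)
        if gain > best_gain:
            best_gain = gain
            best_thr = (pairs[k - 1][0] + pairs[k][0]) / 2
    return best_gain, best_thr


def select_feature(features, labels):
    """Index of the feature column (e.g. one interval feature) with the highest gain."""
    gains = [best_threshold(column, labels)[0] for column in zip(*features)]
    return max(range(len(gains)), key=gains.__getitem__)


# Toy data: rows are samples, columns are candidate interval features.
X = [[0.1, 5.0], [0.2, 1.0], [0.9, 4.0], [1.0, 2.0]]
y = ["a", "a", "b", "b"]
print(select_feature(X, y))  # 0
```

Selecting features greedily this way, and then recursing on each side of the split, is what turns such a method into a decision tree.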

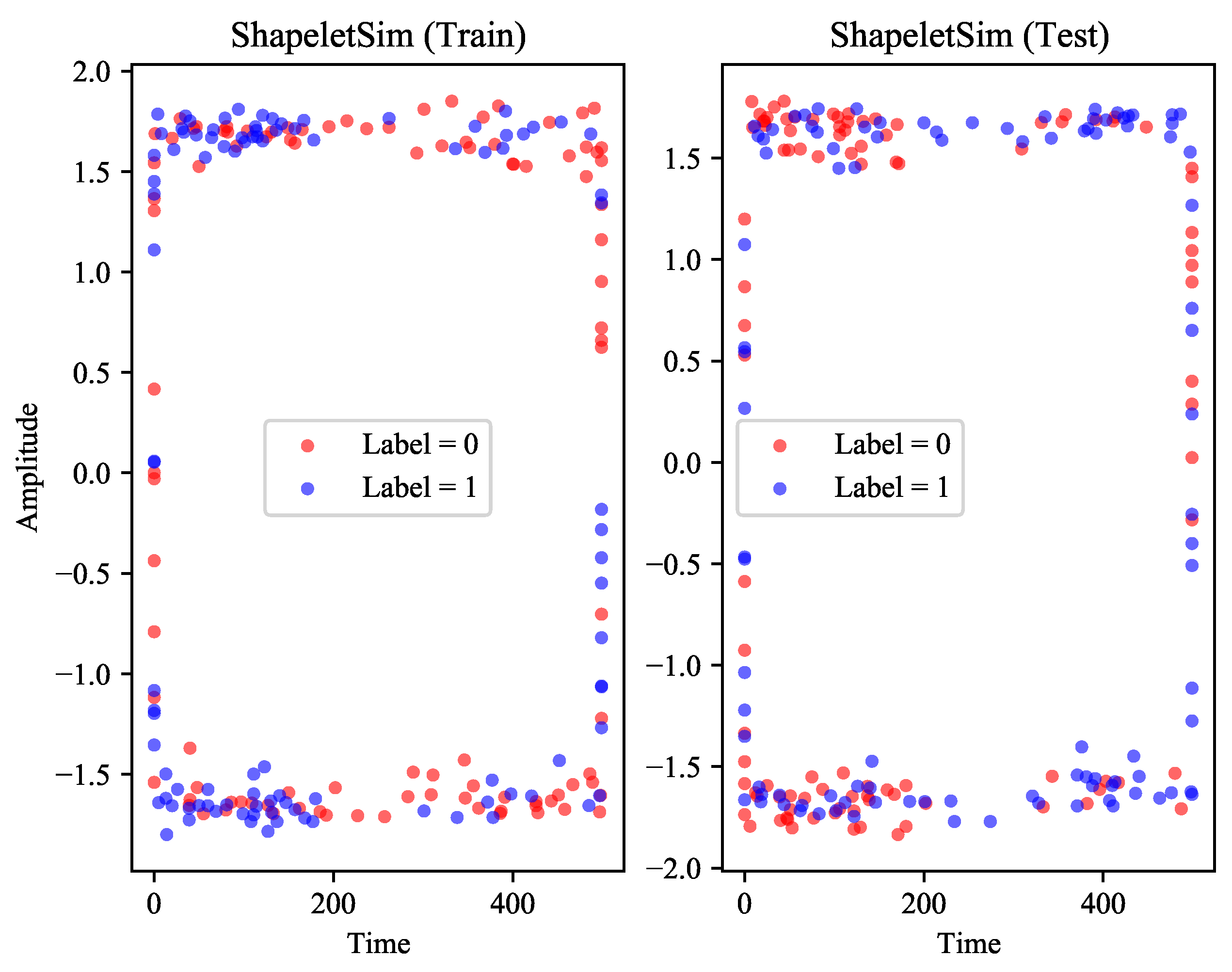

PFC outperforms IFT on almost all of these datasets. The one exception is ShapeletSim, where the accuracies of PFC and IFT differ greatly. The samples in ShapeletSim resemble high-frequency sinusoidal signals, so most PIPs fall on the peaks and troughs. As a result, the PIPs describe only the boundary of the sample, a rectangle in Figure 10. The crux of the problem is not just that these distributions are abnormal; they also lack the necessary discriminative power. IFT, by contrast, performs almost perfectly on this dataset, possibly because its feature selection differs from ours and these distinctive features play an important role in classification.

Finally, we turn to E-SAX. One of the most popular dimensionality reduction techniques for time series data is Symbolic Aggregate Approximation (SAX), which is inspired by algorithms from text mining and bioinformatics. E-SAX uses only the extreme points of each segment to represent the time series [56]. Like PIPs, SAX is essentially a way to reduce the dimensionality of a time series. For these reasons, we chose E-SAX as a comparison method.
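To make the SAX idea concrete, here is a minimal sketch of classic SAX: z-normalization, Piecewise Aggregate Approximation (PAA), then equiprobable Gaussian breakpoints. It is not E-SAX; the rule by which E-SAX represents each segment by its extreme points is described in [56] and is not reproduced here. The function names are hypothetical, and the sketch assumes the series length is a multiple of the word length `w`.

```python
from bisect import bisect_right
from statistics import NormalDist, fmean, pstdev


def znormalize(series):
    """Rescale a series to zero mean and unit (population) standard deviation."""
    mu, sigma = fmean(series), pstdev(series)
    if sigma == 0:
        return [0.0] * len(series)  # flat series: every value maps to the mean
    return [(x - mu) / sigma for x in series]


def paa(series, w):
    """Piecewise Aggregate Approximation: means of w equal-length segments.

    Assumes len(series) is a multiple of w, to keep the sketch short.
    """
    n = len(series)
    if w <= 0 or n % w:
        raise ValueError("length must be a positive multiple of w")
    size = n // w
    return [fmean(series[i * size:(i + 1) * size]) for i in range(w)]


def sax_breakpoints(alphabet_size):
    """Cut points that split N(0, 1) into equiprobable regions."""
    nd = NormalDist()
    return [nd.inv_cdf(k / alphabet_size) for k in range(1, alphabet_size)]


def sax(series, w, alphabet_size=4):
    """Map a series to a SAX word of w letters drawn from 'a', 'b', ..."""
    cuts = sax_breakpoints(alphabet_size)
    return "".join(chr(ord("a") + bisect_right(cuts, v))
                   for v in paa(znormalize(series), w))


print(sax([1, 2, 3, 4, 5, 6, 7, 8], w=4))  # abcd
```

An 8-point series becomes a 4-letter word here, which is the same kind of dimensionality reduction that PIP extraction performs with m points.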

We used 45 UCR datasets for this comparison, and all results are listed in Table 6. Note that E-SAX was originally evaluated by classification error. To facilitate comparison, we convert its classification error into classification accuracy (ACC) according to formula (9).
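The conversion and the per-dataset comparison can be sketched as follows. This assumes that formula (9) is the usual complement relation, accuracy = 1 - error, with both expressed as fractions; `error_to_accuracy` and `count_wins` are hypothetical helpers, and the numbers are toy values, not entries from Table 6.

```python
import math


def error_to_accuracy(error):
    """Convert a classification error rate in [0, 1] to accuracy.

    Assumes formula (9) is ACC = 1 - error.
    """
    if not 0.0 <= error <= 1.0:
        raise ValueError("error must be a fraction in [0, 1]")
    return 1.0 - error


def count_wins(acc_a, acc_b, tol=1e-9):
    """Return (wins_a, ties, wins_b) over per-dataset accuracies paired by index."""
    if len(acc_a) != len(acc_b):
        raise ValueError("accuracy lists must be paired by dataset")
    wins_a = ties = wins_b = 0
    for a, b in zip(acc_a, acc_b):
        if math.isclose(a, b, abs_tol=tol):
            ties += 1
        elif a > b:
            wins_a += 1
        else:
            wins_b += 1
    return wins_a, ties, wins_b


pfc_acc = [0.90, 0.75, 0.60]    # toy PFC accuracies
esax_err = [0.20, 0.25, 0.30]   # toy E-SAX error rates
esax_acc = [error_to_accuracy(e) for e in esax_err]
print(count_wins(pfc_acc, esax_acc))  # (1, 1, 1)
```

Counting ties separately avoids crediting either method when the converted accuracies are equal up to rounding.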

As shown in Table 6, PFC achieves the best accuracy on 34 of the 45 datasets. These comprise 17 two-class and 28 multi-class datasets, and PFC performs best on 13 of the two-class and 21 of the multi-class datasets. Although PFC is still at a disadvantage on some datasets, its results there are very close to those of E-SAX, even though we did not optimize any parameters or the model structure. We believe that PFC still has room for improvement.

This comparison uses almost the same number of datasets as the earlier two-class verification; in effect, part of the two-class datasets is replaced by a large number of multi-class datasets. In this setting, the number of datasets on which PFC with RF achieves the best accuracy increases greatly, far exceeding DT and GBDT. This demonstrates the advantage of RF on multi-class tasks, which it can handle by relying on a large number of decision trees.

4.4. Discussion on the Number of PIPs

This discussion is worthwhile because most current papers overlook this problem. Regardless of the subsequent processing, the first step is usually to extract m PIPs from the original time series. Two questions then need to be answered: first, how should m be chosen for a given dataset; and second, does the same m have the same effect on different classifiers?

The second question is relatively easy to answer. The data listed in Appendix A show that the same m has different effects on different classifiers. RF and GBDT generally require a large number of PIPs to achieve high accuracy, whereas DT is less demanding. As ensemble methods, RF and GBDT are better suited to larger feature sets, but on some simple datasets, DT can outperform them using only a few PIPs.

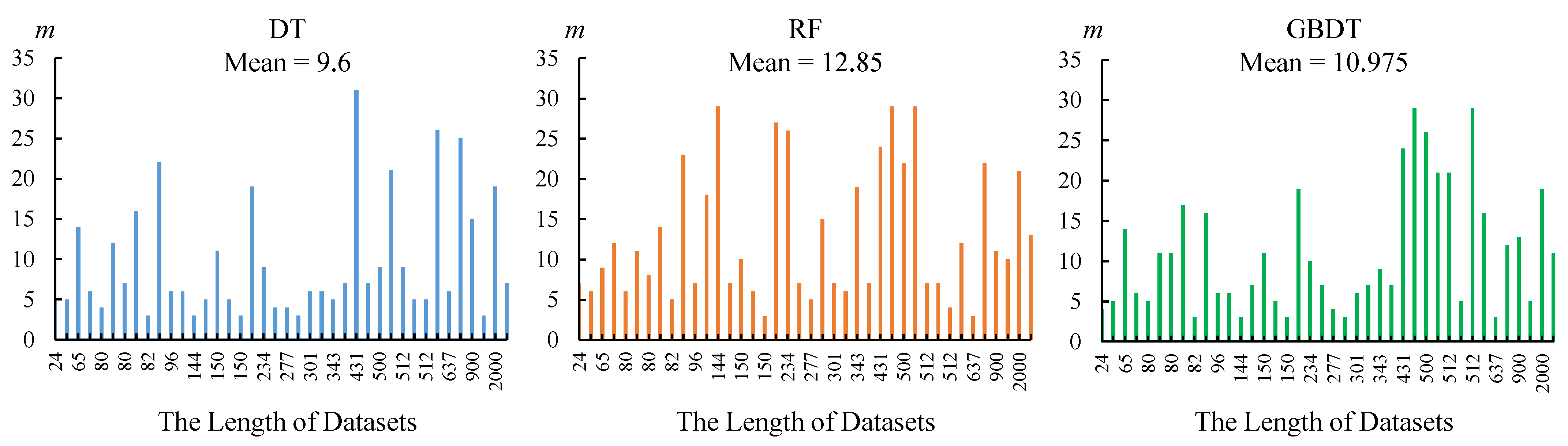

The first question is the most difficult to answer. Figure 11 plots, against dataset length on the horizontal axis, the number of PIPs at which the best accuracy is achieved on each dataset. The three distributions are similar, but RF and GBDT need more PIPs than DT.

On the other hand, for a given dataset, a larger m is not necessarily better. Across a large number of experimental records, we found no specific rule. When time series differ markedly in morphology, a small number of PIPs is enough to highlight their differences, and more PIPs may instead introduce redundancy and confusion. When the morphology of the time series is complex, the opposite holds: more PIPs are needed to describe the characteristics of the sample.
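For readers who want to experiment with m, the following sketch implements the classic PIP procedure with vertical distance: start from the two endpoints and repeatedly add the point farthest (vertically) from the straight line joining its two neighbouring PIPs. It is not GRM-PIPs, which additionally controls the interval length; `extract_pips` and `pip_intervals` are hypothetical names, and the series is a toy example.

```python
from bisect import insort


def extract_pips(series, m):
    """Return the sorted indices of m perceptually important points.

    Classic PIP procedure with vertical distance (not GRM-PIPs): start with
    both endpoints, then repeatedly add the point with the largest vertical
    distance to the line joining its adjacent PIPs. Ties keep the earliest index.
    """
    n = len(series)
    if not 2 <= m <= n:
        raise ValueError("m must satisfy 2 <= m <= len(series)")
    pips = [0, n - 1]
    while len(pips) < m:
        best_idx, best_dist = -1, -1.0
        for left, right in zip(pips, pips[1:]):
            slope = (series[right] - series[left]) / (right - left)
            for i in range(left + 1, right):
                line_y = series[left] + slope * (i - left)
                dist = abs(series[i] - line_y)
                if dist > best_dist:
                    best_idx, best_dist = i, dist
        insort(pips, best_idx)  # keep PIP indices in time order
    return pips


def pip_intervals(pips):
    """Consecutive PIP pairs: m PIPs give m - 1 intervals."""
    return list(zip(pips, pips[1:]))


series = [0, 1, 0, 3, 0, 1, 0]
print(extract_pips(series, 3))  # [0, 3, 6]
print(extract_pips(series, 4))  # [0, 2, 3, 6]
```

Because each added PIP splits one interval in two, increasing m directly increases the number of interval-level features, which is consistent with the redundancy effect described above for small m on short series.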

5. Conclusions

Introducing morphological structure features is an important improvement for time series classification. Drawing on human visual cognition, many studies have shown that the shape of a time series can be described by a sequence of important turning points. Inspired by these studies, we proposed GRM-PIPs, which controls the interval length. We then used the PIPs to segment the time series and extracted a combination of interval-level and point-level features. Three classifiers, DT, RF, and GBDT, complete the perceptual feature-based framework. Finally, we compared our method with five benchmark methods and three recently published methods on a large number of UCR datasets. The experimental results show that our approach achieves strong performance on the TSC task. In addition, we demonstrated the threshold of the interval length and discussed the influence of the number of PIPs, filling gaps in existing work on these aspects.

In future work, we plan to incorporate other types of classifiers and optimize them, and to further improve feature extraction.