Abstract

Ordinal pattern dependence is a multivariate dependence measure based on the co-movement of two time series. In strong connection to ordinal time series analysis, the ordinal information is taken into account to derive robust results on the dependence between the two processes. This article deals with ordinal pattern dependence for a long-range dependent time series, including mixed cases of short- and long-range dependence. We investigate the limit distributions of estimators of ordinal pattern dependence. In doing so, we point out the differences that arise when the underlying time series have different dependence structures. Depending on these assumptions, central and non-central limit theorems are proven. The limit distributions in the non-central case belong to the class of multivariate Rosenblatt processes. Finally, a simulation study is provided to illustrate our theoretical findings.

1. Introduction

The concept of ordinal patterns originates in the theory of dynamical systems. The idea is to consider the order of the values within a data vector instead of the full metric information. The ordinal information is encoded as a permutation (cf. Section 3). Already in the first papers on the subject, the authors considered entropy concepts related to this ordinal structure (cf. [1]). There is an interesting relationship between these concepts and the well-known Kolmogorov–Sinai entropy (cf. [2,3]). Additionally, an ordinal version of the Feigenbaum diagram has been studied, e.g., in [4]. In [5], ordinal patterns were used in order to estimate the Hurst parameter in long-range dependent time series. Furthermore, the authors of [6] proposed a test for independence between time series (cf. also [7]). Hence, the concept made its way into the area of statistics. In this new framework, rather short patterns have been considered instead of long ones (or instead of letting the pattern length tend to infinity). Furthermore, ordinal patterns have been used in the context of ARMA processes [8] and change-point detection within one time series [9]. In [10], ordinal patterns were used for the first time in order to analyze the dependence between two time series. Limit theorems for this new concept were proven in a short-range dependent framework in [11]. Ordinal pattern dependence is a promising tool, which has already been applied to financial, biological and hydrological data sets; in this context, see also [12] for an analysis of the co-movement of time series focusing on symbols. Hydrological data sets in particular are known to be long-range dependent. Therefore, it is important to also have limit theorems available in this framework. We close this gap in the present article.

All of the results presented in this article have been established in the Ph.D. thesis of I. Nüßgen written under the supervision of A. Schnurr.

The article is structured as follows: in the subsequent section, we provide the mathematical framework, focusing on (multivariate) long-range dependence. In Section 3, we recall the concept of ordinal pattern dependence and prove our main results. We present a simulation study in Section 4 and close the paper with a short outlook in Section 5.

2. Mathematical Framework

We consider a stationary d-dimensional Gaussian time series (for ), with:

such that and for all and . Furthermore, we require the cross-correlation function to fulfil for and , where the component-wise cross-correlation functions are given by for each and . For each random vector , we denote the covariance matrix by , since it is independent of j due to stationarity. Therefore, we have .

We specify the dependence structure of and turn to long-range dependence: we assume that for the cross-correlation function for each , it holds that:

with for finite constants with , where the matrix has full rank, is symmetric and positive definite. Furthermore, the parameters are called long-range dependence parameters. Therefore, is multivariate long-range dependent in the sense of [13], Definition 2.1.

The processes we want to consider have a particular structure, namely for , we obtain for fixed :

The following relation holds between the extended process and the original process . For all , we have:

where . Note that the process is still a centered Gaussian process since all finite-dimensional marginals of follow a normal distribution. Stationarity is also preserved since for all , and , the cross-correlation function of the process is given by

and the last line does not depend on j. The covariance matrix of has the following structure:

Hence, we arrive at:

where , . Note that and , since we are studying cross-correlation functions.
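A minimal Python sketch of this block structure, for illustration only: it assumes that the extended vector stacks h consecutive observations of each component (all of component 1 first, then component 2, and so on), and it feeds in a hypothetical bivariate fractional Gaussian noise model whose autocorrelations equal the fGn correlation ρ_H and whose cross-correlations equal λ·ρ_H. The function names and the values H = 0.8, λ = 0.6 are our own choices, not taken from the paper.

```python
import numpy as np

def fgn_corr(k, H):
    """Correlation of fractional Gaussian noise at integer lag k."""
    k = np.abs(np.asarray(k, dtype=float))
    return 0.5 * ((k + 1) ** (2 * H) - 2 * k ** (2 * H) + np.abs(k - 1) ** (2 * H))

def extended_cov(r, d, h):
    """Covariance of (Y_j^(1),...,Y_{j+h-1}^(1), ..., Y_j^(d),...,Y_{j+h-1}^(d)).

    r(p, q, k) must return E[Y_j^(p) Y_{j+k}^(q)] (components indexed from 0).
    Every entry depends only on the lag t - s, so the result is the same for all j.
    """
    S = np.empty((d * h, d * h))
    for p in range(d):
        for q in range(d):
            for s in range(h):
                for t in range(h):
                    S[p * h + s, q * h + t] = r(p, q, t - s)
    return S

# Hypothetical example: two fGn components with cross-correlation lam * rho_H(k).
H, lam = 0.8, 0.6

def r_example(p, q, k):
    return (1.0 if p == q else lam) * fgn_corr(k, H)

Sigma = extended_cov(r_example, d=2, h=3)
np.linalg.cholesky(Sigma)  # raises LinAlgError if Sigma is not positive definite
print(np.round(Sigma, 3))
```

The Cholesky call doubles as a positive-definiteness check of the assembled matrix.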

Therefore, it remains to show that, based on the assumptions on , the extended process is still long-range dependent.

Hence, we have to consider the cross-correlations again:

since and , with , and .

Let us remark that .

Therefore, we are still dealing with a multivariate long-range dependent Gaussian process. As the proofs of the following limit theorems show, the crucial parameters determining the asymptotic distribution are the long-range dependence parameters , of the original process; therefore, we omit a detailed description of the parameters here.

It is important to remark that the extended process is also long-range dependent in the sense of [14], p. 2259, since:

with:

and can be chosen as any nonzero constant, so for simplicity, we assume without loss of generality , and therefore, , since the condition in [14] only requires convergence to a finite constant . Hence, we may apply the results in [14] in the following.

We define the following set, which is needed in the proofs of the theorems of this section.

and denote the corresponding long-range dependence parameter to each by

We briefly recall the concept of Hermite polynomials as they play a crucial role in determining the limit distribution of functionals of multivariate Gaussian processes.

Definition 1.

(Hermite polynomial, [15], Definition 3.1)

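The j-th Hermite polynomial $H_j$, $j \in \mathbb{N}_0$, is defined as

$$H_j(x) := (-1)^j \exp\left(\frac{x^2}{2}\right) \frac{d^j}{dx^j} \exp\left(-\frac{x^2}{2}\right), \quad x \in \mathbb{R}.$$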

Their multivariate extension is given by the subsequent definition.

Definition 2.

(Multivariate Hermite polynomial, [15], p. 122)

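Let $x = (x_1,\ldots,x_d) \in \mathbb{R}^d$ and $k = (k_1,\ldots,k_d) \in \mathbb{N}_0^d$. We define the d-dimensional Hermite polynomial as

$$H_k(x) := \prod_{i=1}^{d} H_{k_i}(x_i),$$

where $H_{k_i}$ denotes the $k_i$-th univariate Hermite polynomial from Definition 1.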

Let us remark that the case is excluded here due to the assumption .

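Analogously to the univariate case, the family of multivariate Hermite polynomials from Definition 2,

$$\{H_k : k \in \mathbb{N}_0^d\},$$

forms an orthogonal basis of $L^2(\mathbb{R}^d, \varphi_{I_d})$, which is defined as

$$L^2(\mathbb{R}^d, \varphi_{I_d}) := \left\{ f\colon \mathbb{R}^d \to \mathbb{R} \;:\; \int_{\mathbb{R}^d} f^2(x_1,\ldots,x_d)\, \varphi(x_1) \cdots \varphi(x_d)\, dx_1 \cdots dx_d < \infty \right\}.$$

Here, $\varphi_{I_d}$ denotes the density of the d-dimensional standard normal distribution, which is already written as the product of the univariate standard normal densities $\varphi$ in the formula above.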
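The following sketch evaluates the probabilists' Hermite polynomials of Definition 1 through the standard three-term recurrence H_{j+1}(x) = x H_j(x) − j H_{j−1}(x), builds their multivariate products as in Definition 2, and checks the orthogonality relation E[H_j(X) H_k(X)] = j! if j = k (and 0 otherwise) for X ~ N(0, 1). The expectation is computed with NumPy's Gauss–Hermite quadrature `numpy.polynomial.hermite_e.hermegauss`, whose weight function is exp(−x²/2). The helper names are hypothetical.

```python
import math

import numpy as np
from numpy.polynomial.hermite_e import hermegauss

def hermite(j, x):
    """Probabilists' Hermite polynomial H_j(x) via H_{i+1} = x H_i - i H_{i-1}."""
    x = np.asarray(x, dtype=float)
    h_prev, h = np.ones_like(x), x.copy()
    if j == 0:
        return h_prev
    for i in range(1, j):
        h_prev, h = h, x * h - i * h_prev
    return h

def multi_hermite(k, x):
    """d-dimensional Hermite polynomial: product of univariate ones."""
    return np.prod([hermite(kj, xj) for kj, xj in zip(k, x)], axis=0)

def gauss_expectation(g, n_nodes=40):
    """E[g(X)] for X ~ N(0, 1); the quadrature weights sum to sqrt(2 pi)."""
    nodes, weights = hermegauss(n_nodes)
    return np.sum(weights * g(nodes)) / math.sqrt(2 * math.pi)

# Gram matrix of H_0, ..., H_4: should be diag(0!, 1!, 2!, 3!, 4!).
gram = np.array([[gauss_expectation(lambda x: hermite(j, x) * hermite(k, x))
                  for k in range(5)] for j in range(5)])
print(np.round(gram, 8))
```

The quadrature is exact for polynomials up to degree 2·40 − 1, so the small products used here are integrated without truncation error.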

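We denote the Hermite coefficients by

$$C(f, H_k) := \int_{\mathbb{R}^d} f(x)\, H_k(x)\, \varphi_{I_d}(x)\, dx, \quad k \in \mathbb{N}_0^d,$$

where $H_k$ is the multivariate Hermite polynomial of Definition 2 and $\varphi_{I_d}$ the d-dimensional standard normal density. The Hermite rank of f with respect to the distribution $\mathcal{N}(0, I_d)$ is defined as the largest integer m, such that:

$$C(f, H_k) = 0 \quad \text{for all } k \in \mathbb{N}_0^d \text{ with } 0 < |k| < m, \qquad |k| := k_1 + \cdots + k_d.$$

Having these preparatory results in mind, we derive the multivariate Hermite expansion given by

$$f(x) = \sum_{k \in \mathbb{N}_0^d} \frac{C(f, H_k)}{k_1! \cdots k_d!}\, H_k(x),$$

where the series converges in $L^2(\mathbb{R}^d, \varphi_{I_d})$.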

We focus on the limit theorems for functionals with Hermite rank 2. First, we introduce the matrix-valued Rosenblatt process. This plays a crucial role in the asymptotics of functionals with Hermite rank 2 applied to multivariate long-range dependent Gaussian processes. We begin with the definition of a multivariate Hermitian–Gaussian random measure with independent entries given by

where is a univariate Hermitian–Gaussian random measure as defined in [16], Definition B.1.3. The multivariate Hermitian–Gaussian random measure satisfies:

and:

where denotes the Hermitian transpose of . Thus, following [14], Theorem 6, we can state the spectral representation of the matrix-valued Rosenblatt process , as

where each entry of the matrix is given by

The double prime in excludes the diagonals , in the integration. For details on multiple Wiener–Itô integrals, see [17].

The following results are taken from [18], Section 3.2. The corresponding proofs are deferred to Appendix A.

Theorem 1.

Let be a stationary Gaussian process as defined in (1) that fulfils (2) for , . For we fix:

with and as described in (6). Let be a function with Hermite rank 2 such that the set of discontinuity points is a null set with respect to the -dimensional Lebesgue measure. Furthermore, we assume that f fulfills . Then:

where:

The matrix is a normalizing constant; see [18], Corollary 3.6. Moreover, is a multivariate Hermitian–Gaussian random measure with and L as defined in (2). Furthermore, is a normalizing constant and:

where for each and and:

where C denotes the matrix of second order Hermite coefficients, given by

It is possible to soften the assumptions in Theorem 1 to allow for mixed cases of short- and long-range dependence.

Corollary 1.

Instead of demanding in the assumptions of Theorem 1 that (2) holds for with the addition that for all we have , we may use the following condition.

We assume that:

with as given in (2), but we no longer assume for all ; instead, we soften the assumption to and, for , we allow for . Then, the statement of Theorem 1 remains valid.

However, under a mild technical assumption on the covariances of the one-dimensional marginal Gaussian processes, which is often fulfilled in applications, there is another way of normalizing the partial sum on the right-hand side in Theorem 1, this time explicitly for the case and , such that the limit can be expressed in terms of two standard Rosenblatt random variables. This makes it possible to study the dependence structure between these two random variables further. In the following theorem, we assume for the reader’s convenience.

Theorem 2.

Under the same assumptions as in Theorem 1 with and and the additional condition that , for , and , it holds that:

with being the same normalizing factor as in Theorem 1, and . Note that and are both standard Rosenblatt random variables whose covariance is given by

3. Ordinal Pattern Dependence

Ordinal pattern dependence is a multivariate dependence measure that compares the co-movement of two time series based on ordinal information. It was first introduced in [10] to analyze financial time series, and a mathematical framework including structural breaks and limit theorems for functionals of absolutely regular processes was built in [11]. In [19], the authors used the so-called symbolic correlation integral in order to detect dependence between the components of a multivariate time series. Their approach, which focuses on testing independence between two time series, is also based on ordinal patterns. They provide limit theorems in the i.i.d. case and otherwise use bootstrap methods. In contrast, the present article focuses on asymptotic distributions of an estimator of ordinal pattern dependence for an underlying bivariate Gaussian time series, while allowing for several dependence structures. As it turns out in the following, this yields central as well as non-central limit theorems.

We start with the definition of an ordinal pattern and the basic mathematical framework that we need to build up the ordinal model.

Let $S_h$ denote the set of permutations of $\{0,\ldots,h\}$, which we express as $(h+1)$-dimensional tuples, ensuring that each tuple contains each of the numbers above exactly once. In mathematical terms, this yields:

$$S_h = \left\{ (\pi_0,\ldots,\pi_h) \in \{0,\ldots,h\}^{h+1} : \pi_i \neq \pi_k \text{ for all } i \neq k \right\},$$

see [11], Section 2.1.

The number of permutations in $S_h$ is $(h+1)!$. In order to get a better intuitive understanding of the concept of ordinal patterns, we have a closer look at the following example before turning to the formal definition.

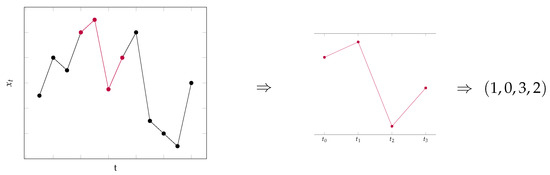

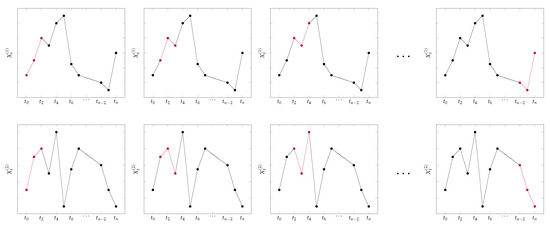

Example 1.

Figure 1 illustrates how an ordinal pattern is extracted from a data set. The data points of interest are colored in red, and we consider a pattern of length $h = 3$, which means that $h + 1 = 4$ data points have to be taken into consideration. We fix the points in time , , and and extract the corresponding data points from the time series. Then, we search for the point in time at which the largest of these values occurs and write down the corresponding time index. In this example, it was given by . We order the data points by writing the time position of the largest value as the first entry, the time position of the second largest value as the second entry, etc. Hence, the observed values are ordered from largest to smallest, and the resulting sequence of time positions is the ordinal pattern of the considered data points.

Figure 1.

Example of the extraction of an ordinal pattern of a given data set.

Formally, the procedure described above can be defined as follows (see [11], Section 2.1).

Definition 3.

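As the ordinal pattern of a vector $x = (x_0,\ldots,x_h) \in \mathbb{R}^{h+1}$, we define the unique permutation $\pi = (\pi_0,\ldots,\pi_h)$ of $\{0,\ldots,h\}$,

$$\Pi(x) = \Pi(x_0,\ldots,x_h) := (\pi_0,\ldots,\pi_h),$$

such that:

$$x_{\pi_0} \geq x_{\pi_1} \geq \cdots \geq x_{\pi_h},$$

with $\pi_{k-1} > \pi_k$ if $x_{\pi_{k-1}} = x_{\pi_k}$, $k = 1,\ldots,h$.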

The last condition ensures that the ordinal pattern is unique if there are ties in the data. In particular, this condition is necessary when real-world data are considered.
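A short Python sketch of this definition: the time indices are sorted by decreasing value. We assume that ties are broken by listing the later time index first; if a different tie convention is intended, only the sort key changes. The function name `ordinal_pattern` is ours.

```python
def ordinal_pattern(x):
    """Ordinal pattern of (x_0, ..., x_h): time indices sorted by decreasing value.

    Ties are broken by listing the later time index first (assumed convention).
    """
    x = list(x)
    # Sort key: larger value first; among equal values, larger index first.
    return tuple(sorted(range(len(x)), key=lambda i: (-x[i], -i)))

print(ordinal_pattern([0.3, 1.2, -0.5, 0.7]))   # (1, 3, 0, 2)
print(ordinal_pattern([1.0, 1.0, 0.0]))          # (1, 0, 2)
```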

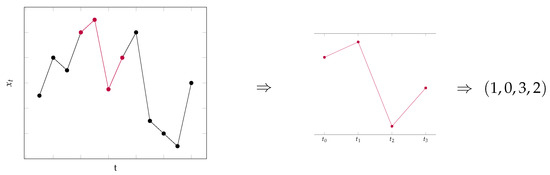

In Figure 2, all ordinal patterns of length are shown. From a practical point of view, a highly desirable property of ordinal patterns is that they are not affected by strictly monotonically increasing transformations; see [5], p. 1783.

Figure 2.

Ordinal patterns for .

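Mathematically, this means that if $f\colon \mathbb{R} \to \mathbb{R}$ is strictly monotonically increasing, then the ordinal pattern of $(f(x_0),\ldots,f(x_h))$ coincides with the ordinal pattern of $(x_0,\ldots,x_h)$ for every $(x_0,\ldots,x_h) \in \mathbb{R}^{h+1}$.

In particular, this includes linear transformations $x \mapsto ax + b$, with $a > 0$ and $b \in \mathbb{R}$.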
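The invariance can be checked numerically. This sketch (with an assumed tie rule and hypothetical helper names) compares the pattern of a random vector with the patterns of its images under a few strictly increasing maps, and it also shows that the strictly decreasing map x ↦ −x reverses the pattern rather than preserving it.

```python
import numpy as np

def ordinal_pattern(x):
    """Time indices of x ordered from the largest to the smallest value."""
    x = np.asarray(x, dtype=float)
    idx = np.arange(len(x))
    # np.lexsort sorts by the last key first: primary key -x, secondary key -index.
    return tuple(int(i) for i in np.lexsort((-idx, -x)))

rng = np.random.default_rng(1)
x = rng.standard_normal(5)
transforms = {
    "exp": np.exp,
    "affine (a=2, b=-3)": lambda v: 2.0 * v - 3.0,
    "cube": lambda v: v ** 3,
}
for name, f in transforms.items():
    print(name, ordinal_pattern(f(x)) == ordinal_pattern(x))   # True
# A strictly decreasing map reverses the pattern instead of preserving it.
print(ordinal_pattern(-x) == ordinal_pattern(x)[::-1])           # True (no ties)
```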

Following [11], Section 1, the minimal requirement on the data sets used for ordinal analysis in the time series context, i.e., for ordinal pattern probabilities as well as for ordinal pattern dependence later on, is ordinal pattern stationarity (of order h). This property means that the probability of observing a certain ordinal pattern of length h remains the same when the moving window is shifted through the entire time series, i.e., it does not depend on the specific points in time. Throughout this work, the time series in which the ordinal patterns occur either have stationary increments or are even stationary themselves. Both properties imply ordinal pattern stationarity. Why stationary increments are a sufficient condition is explained next.

One fundamental property of ordinal patterns is that they are uniquely determined by the increments of the considered time series. As Example 1 suggests, knowing the increments between the data points is sufficient to obtain the corresponding ordinal pattern. In mathematical terms, we can define another mapping , which assigns the corresponding ordinal pattern to each vector of increments; see [5], p. 1783.
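To see that the increments suffice, one can rebuild the levels from the increments by cumulative summation, starting from an arbitrary value (here 0), and compute the pattern of the result; adding a constant to all values does not change the pattern. A minimal Python sketch with hypothetical helper names, assuming the same largest-first convention as in Example 1:

```python
import numpy as np

def ordinal_pattern(x):
    """Time indices of x ordered from the largest to the smallest value
    (ties: later index first, an assumed convention)."""
    x = np.asarray(x, dtype=float)
    idx = np.arange(len(x))
    return tuple(int(i) for i in np.lexsort((-idx, -x)))

def pattern_from_increments(y):
    """Ordinal pattern rebuilt from increments y_k = x_k - x_{k-1}, k = 1..h.

    The levels are recovered up to the unknown starting value x_0 by
    cumulative summation; the shift by x_0 does not change the pattern.
    """
    levels = np.concatenate(([0.0], np.cumsum(y)))
    return ordinal_pattern(levels)

rng = np.random.default_rng(7)
x = rng.standard_normal(4).cumsum() + 10.0    # a path (x_0, ..., x_3), h = 3
y = np.diff(x)                                # its h = 3 increments
print(ordinal_pattern(x), pattern_from_increments(y))
```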

Definition 4.

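We define for $h \in \mathbb{N}$ the mapping $\tilde{\Pi}$ from $\mathbb{R}^h$ to the set of permutations of $\{0,\ldots,h\}$ that assigns to a vector of increments $(y_1,\ldots,y_h)$ the ordinal pattern of its vector of partial sums:

$$\tilde{\Pi}(y_1,\ldots,y_h) := \Pi\left(0,\, y_1,\, y_1 + y_2,\, \ldots,\, y_1 + \cdots + y_h\right),$$

where $\Pi$ denotes the ordinal pattern from Definition 3, such that for $x = (x_0,\ldots,x_h) \in \mathbb{R}^{h+1}$ and $y_k := x_k - x_{k-1}$, $k = 1,\ldots,h$, we obtain:

$$\Pi(x_0,\ldots,x_h) = \tilde{\Pi}(y_1,\ldots,y_h).$$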

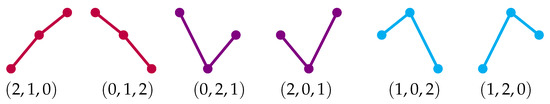

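We define the following two mappings, following [5], p. 1784: for $\pi = (\pi_0,\ldots,\pi_h)$, let

$$\bar{\pi} := (\pi_h, \pi_{h-1}, \ldots, \pi_0), \qquad \pi^* := (h - \pi_0, h - \pi_1, \ldots, h - \pi_h).$$

An illustrative understanding of these mappings is given as follows. The mapping $\pi \mapsto \bar{\pi}$, the spatial reversion of the pattern π, corresponds to the reflection of the pattern on a horizontal line, while $\pi \mapsto \pi^*$, the time reversal of π, corresponds to its reflection on a vertical line, as one can observe in Figure 3.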

Figure 3.

Space and time reversion of the pattern .
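Under the convention that a pattern lists time indices from the largest to the smallest value, the spatial reversion of π = (π_0, …, π_h) is the reversed tuple (π_h, …, π_0), and the time reversal is (h − π_0, …, h − π_h). These closed forms are our own derivation; the sketch below (hypothetical helper names) verifies them against the patterns of −x and of the time-reversed vector.

```python
import numpy as np

def ordinal_pattern(x):
    """Time indices ordered from the largest to the smallest value (ties: later index first)."""
    x = np.asarray(x, dtype=float)
    idx = np.arange(len(x))
    return tuple(int(i) for i in np.lexsort((-idx, -x)))

def spatial_reversal(pi):
    """Reflection on a horizontal line: pattern of -x, i.e. (pi_h, ..., pi_0)."""
    return tuple(reversed(pi))

def time_reversal(pi):
    """Reflection on a vertical line: pattern of (x_h, ..., x_0), i.e. (h - pi_0, ..., h - pi_h)."""
    h = len(pi) - 1
    return tuple(h - p for p in pi)

x = np.array([0.2, 1.5, 0.9, -0.4])
pi = ordinal_pattern(x)
print(pi, spatial_reversal(pi), time_reversal(pi))
# (1, 2, 0, 3) (3, 0, 2, 1) (2, 1, 3, 0)
```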

Based on the spatial reversion, we define a possibility to divide into two disjoint sets.

Definition 5.

We define as a subset of with the property that for each permutation π, either π or its spatial reversion is contained in the set, but not both.

Note that this definition does not determine the set uniquely.

Example 2.

We consider the case again and want to divide into a possible choice of and the corresponding spatial reversals. We choose , and therefore, . Note that is also a possible choice. The only condition that has to be satisfied is that if a permutation is chosen for , then its spatial reversal must not be an element of this set.
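A set as in Definition 5 can be constructed greedily: walk through all (h+1)! permutations of {0, …, h} in lexicographic order and keep a permutation unless its spatial reversion has already been kept. This is only one admissible choice, not necessarily the one made in the example above; the helper names are hypothetical.

```python
from itertools import permutations
from math import factorial

def spatial_reversal(pi):
    """Spatial reversion (pi_h, ..., pi_0) of a pattern listed from largest to smallest value."""
    return tuple(reversed(pi))

def split_by_spatial_reversal(h):
    """Keep each permutation of {0, ..., h} (lexicographic order) unless its spatial
    reversion was already kept. For h >= 1 no permutation equals its own reversion,
    so every permutation lands in exactly one of the two returned lists."""
    chosen, complement, seen = [], [], set()
    for pi in permutations(range(h + 1)):
        if pi in seen:
            continue
        rev = spatial_reversal(pi)
        chosen.append(pi)
        complement.append(rev)
        seen.update((pi, rev))
    return chosen, complement

S2 = list(permutations(range(3)))
print(len(S2), factorial(3))   # 6 6
A, B = split_by_spatial_reversal(2)
print(A)   # [(0, 1, 2), (0, 2, 1), (1, 0, 2)]
print(B)   # [(2, 1, 0), (1, 2, 0), (2, 0, 1)]
```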

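We follow the formal definition of ordinal pattern dependence as proposed in [11], Section 2.1. The considered moving window consists of $h + 1$ data points and, hence, $h$ increments. Writing $\Pi(x)$ for the ordinal pattern of a vector x as in Definition 3, and $(X_j^{(1)})_{j \in \mathbb{Z}}$ and $(X_j^{(2)})_{j \in \mathbb{Z}}$ for the two time series whose ordinal patterns are compared, we define:

$$p := P\left(\Pi\big(X_0^{(1)},\ldots,X_h^{(1)}\big) = \Pi\big(X_0^{(2)},\ldots,X_h^{(2)}\big)\right)$$

and:

$$q := \sum_{\pi} P\left(\Pi\big(X_0^{(1)},\ldots,X_h^{(1)}\big) = \pi\right) P\left(\Pi\big(X_0^{(2)},\ldots,X_h^{(2)}\big) = \pi\right),$$

where the sum runs over all permutations π of $\{0,\ldots,h\}$. Then, we define ordinal pattern dependence as

$$\mathrm{OPD} := \frac{p - q}{1 - q}. \tag{17}$$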

The parameter q is the probability of coincident patterns in the hypothetical case of independence between the two time series. In this case, p and q coincide, and therefore, ordinal pattern dependence equals zero. At the other extreme, if both processes coincide or one is a strictly monotonically increasing transformation of the other, we obtain the value 1. However, in the following, we assume and .

Note that the definition of ordinal pattern dependence in (17) only measures positive dependence. This is no restriction in practice, because negative dependence can be investigated analogously by considering . If one is interested in both types of dependence simultaneously, the authors of [11] propose to use . To keep the notation simple, we focus on as it is defined in (17).

We compare whether the ordinal patterns in coincide with the ones in . Recall that it is an essential property of ordinal patterns that they are uniquely determined by the increment process. Therefore, we have to consider the increment processes as defined in (1) for , where , . Hence, we can also express p and q (and consequently ) as a probability that only depends on the increments of the considered vectors of the time series. Recall the definition of for , given by

such that with as given in (6).

In the course of this article, we focus on the estimation of p. For a detailed investigation of the limit theorems for estimators of , we refer to [18]. We define the estimator of p, the probability of coincident patterns in both time series in a moving window of fixed length, by

where:

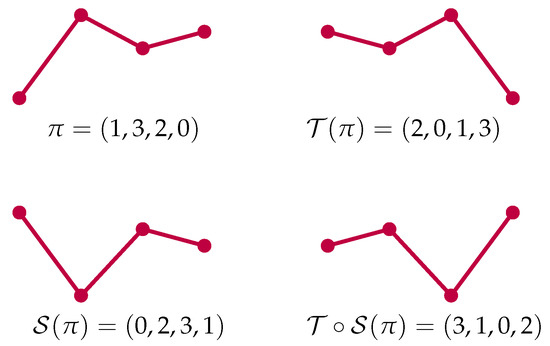

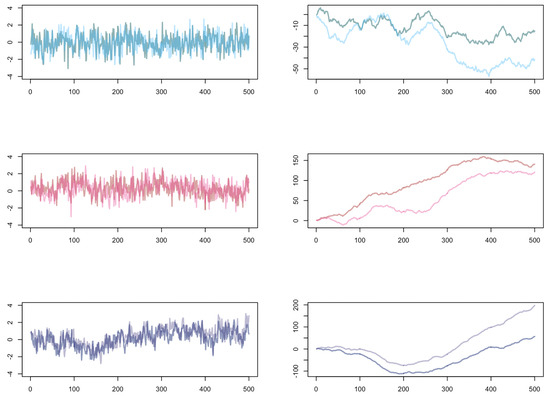

Figure 4 illustrates the way ordinal pattern dependence is estimated by . The patterns of interest that are compared in each moving window are colored in red.

Figure 4.

Illustration of estimation of ordinal pattern dependence.
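The estimator can be sketched in a few lines of Python: it counts, over all moving windows of h + 1 consecutive points, how often the patterns in both paths coincide. For illustration, we additionally compute a plug-in estimate of q from the marginal pattern frequencies and the resulting estimate of (p − q)/(1 − q); this plug-in step is our assumption and is not the estimator analyzed in this article. The toy data use i.i.d. correlated Gaussian increments rather than a long-range dependent model, and all names and parameter values are hypothetical.

```python
from collections import Counter

import numpy as np

def ordinal_pattern(x):
    """Time indices ordered from the largest to the smallest value (ties: later index first)."""
    x = np.asarray(x, dtype=float)
    idx = np.arange(len(x))
    return tuple(int(i) for i in np.lexsort((-idx, -x)))

def window_patterns(x, h):
    """Ordinal patterns of all moving windows (x_j, ..., x_{j+h})."""
    return [ordinal_pattern(x[j:j + h + 1]) for j in range(len(x) - h)]

def estimate_opd(x1, x2, h):
    """Return (p_hat, q_hat, opd_hat) for two paths of equal length."""
    pat1, pat2 = window_patterns(x1, h), window_patterns(x2, h)
    n = len(pat1)
    # p_hat: relative frequency of windows in which both patterns coincide.
    p_hat = sum(a == b for a, b in zip(pat1, pat2)) / n
    # q_hat (assumed plug-in): sum over patterns of the product of marginal frequencies.
    f1, f2 = Counter(pat1), Counter(pat2)
    q_hat = sum(f1[pi] * f2[pi] for pi in f1) / n**2
    return p_hat, q_hat, (p_hat - q_hat) / (1 - q_hat)

rng = np.random.default_rng(42)
n, h, lam = 5000, 2, 0.7
z1, z2 = rng.standard_normal((2, n))
y1, y2 = z1, lam * z1 + np.sqrt(1 - lam**2) * z2   # correlated increments
x1, x2 = np.cumsum(y1), np.cumsum(y2)              # paths whose patterns are compared
print(estimate_opd(x1, x2, h))
```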

Having emphasized the crucial importance of the increments, we define the following conditions on the increment process : let be a bivariate, stationary Gaussian process with , :

- (L)

- We assume that fulfills (2) with in . We allow for to be in the range .

- (S)

- We assume such that the cross-correlation function of fulfills for : with and holds.

Furthermore, in both cases, it holds that for and to exclude ties.

We begin with the investigation of the asymptotics of . First, we calculate the Hermite rank of , since the Hermite rank determines for which ranges of the estimator is still long-range dependent. Depending on this range, different limit theorems may hold.

Lemma 1.

The Hermite rank of with respect to is equal to 2.

Proof.

Following [20], Lemma 5.4, it is sufficient to show the following two properties:

- (i)

- ,

- (ii)

- .

Note that the conclusion is not trivial, because in general ; see [15], Lemma 3.7. Lemma 5.4 in [20] can be applied due to the following reasoning. Since ordinal patterns are not affected by scaling, the technical condition that is positive semidefinite is fulfilled in our case. We can scale the standard deviation of the random vector by any positive real number, since for all we have:

To show property , we need to consider a multivariate random vector:

with covariance matrix . We fix . We divide the set into two disjoint sets, namely into , as defined in Definition 5, and the complementary set . Note that:

holds. This implies:

for . Hence, we arrive at:

for .

Consequently, .

In order to prove , we consider:

to be a random vector with independent distributed entries. For and such that , we obtain:

since for all . This was shown in the proof of Lemma 3.4 in [20].

All in all, we derive and hence, have proven the lemma. □
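The two properties can be illustrated in the simplest case h = 1 with independent standard normal increments, where the Hermite coefficients with respect to the standard normal distribution reduce to plain moments (this special case is chosen only for illustration). For h = 1, the patterns coincide if and only if both increments have the same sign, so f(y) = f(−y) and all first-order coefficients vanish. The mixed second-order coefficient E[f(Y)Y₁Y₂] = E[(Y₁Y₂)⁺] = E|Y₁Y₂|/2 = 1/π is nonzero, so the Hermite rank is exactly 2 in this case. A Monte Carlo sketch in Python:

```python
import numpy as np

rng = np.random.default_rng(2021)
y1, y2 = rng.standard_normal((2, 1_000_000))   # independent N(0, 1) increments

# h = 1: the patterns of both series coincide iff the increments have the same sign.
f = (np.sign(y1) == np.sign(y2)).astype(float)

first_order = {"(1,0)": np.mean(f * y1), "(0,1)": np.mean(f * y2)}
second_order = {
    # Vanishes: f depends on y1 only through its sign, which is independent of |y1|.
    "(2,0)": np.mean(f * (y1**2 - 1)),
    # Equals E|Y1 Y2| / 2 = (2 / pi) / 2 = 1 / pi.
    "(1,1)": np.mean(f * y1 * y2),
    "(0,2)": np.mean(f * (y2**2 - 1)),
}
print({k: round(v, 3) for k, v in first_order.items()})
print({k: round(v, 3) for k, v in second_order.items()}, round(1 / np.pi, 3))
```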

The case exhibits the property that the standard range of the long-range dependence parameter has to be divided into two different sets. If , the transformed process is still long-range dependent; see [16], Table 5.1. If , the transformed process is short-range dependent, which means by definition that its autocorrelations are summable; see [13], Remark 2.3. Therefore, two different asymptotic distributions have to be considered for the estimator of coincident patterns.
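To see where the threshold 1/4 comes from, consider the simplified situation of a single long-range dependence parameter d ∈ (0, 1/2) and correlations of the form r(k) ≈ L k^{2d−1} with L ≠ 0 as k → ∞ (an assumption made only for this sketch). For a functional of Hermite rank 2, the correlations of the transformed process are governed by r(k)², so summability reduces to a p-series condition:

$$\sum_{k=1}^{\infty} r(k)^2 < \infty \iff \sum_{k=1}^{\infty} k^{2(2d-1)} < \infty \iff 2(2d-1) < -1 \iff d < \tfrac{1}{4}.$$

With the Hurst parameter H = d + 1/2, this is the familiar threshold H = 3/4.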

3.1. Limit Theorem for the Estimator of p in Case of Long-Range Dependence

First, we restrict ourselves to the case that at least one of the two parameters and is in . This ensures . We explicitly include mixed cases where the process corresponding to is allowed to be long-range as well as short-range dependent.

Note that this setting includes the pure long-range dependence case, which means that for , we have , or even . In general, however, the assumptions are weaker: we only require for either or , and the other parameter is also allowed to be in or .

We can, therefore, apply the results of Corollary 1 and obtain the following asymptotic distribution for :

Theorem 3.

Under the assumption in (L), we obtain:

with as given in Theorem 1 for and being a normalizing constant. We have:

for each and and , where the variable:

denotes the matrix of second order Hermite coefficients.

Proof.

The proof of this theorem is an immediate application of Corollary 1 and Lemma 1. Note that is square integrable with respect to and that its set of discontinuity points is a null set with respect to the -dimensional Lebesgue measure. This is shown in [18], Equation (4.5). □

Following Theorem 2, we can also express the limit distribution above in terms of two standard Rosenblatt random variables by modifying the weighting factors in the limit distribution. Note that this requires slightly stronger assumptions than in Theorem 1.

Theorem 4.

Let (L) hold with . Additionally, we assume that , for , and . Then, we obtain:

with and as given in Theorem 3. Note that and are both standard Rosenblatt random variables, whose covariance is given by

Remark 1.

Following [18], Corollary 3.14, if additionally and are fulfilled for all , then the two limit random variables in Theorem 4, which follow a standard Rosenblatt distribution, are independent. Note that, due to the considerations in [21], Equation (10), the sum of two independent standard Rosenblatt random variables is not standard Rosenblatt distributed. Nevertheless, the representation in Theorem 4 yields a computational benefit, since the standard Rosenblatt distribution can be simulated efficiently; for details, see [21].

We turn to an example that deals with the asymptotic variance of the estimator of p in Theorem 3 in the case .

Example 3.

We focus on the case and consider the underlying process . It is possible to determine the asymptotic variance depending on the correlation between these two increment variables.
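For h = 1, the probability p itself has a closed form: the patterns coincide if and only if both increments have the same sign, and Sheppard's orthant formula P(Y₁ > 0, Y₂ > 0) = 1/4 + arcsin(ρ)/(2π) for a standard bivariate normal vector with correlation ρ gives p = 1/2 + arcsin(ρ)/π. Since both patterns of length 1 have probability 1/2, q = 1/2 and the ordinal pattern dependence equals 2·arcsin(ρ)/π. The sketch compares the formula with a Monte Carlo estimate; ρ = 0.5 and the helper names are arbitrary choices.

```python
import numpy as np

def p_closed_form(rho):
    """p for h = 1: P(both increments have the same sign), via Sheppard's formula."""
    return 0.5 + np.arcsin(rho) / np.pi

def p_monte_carlo(rho, n=1_000_000, seed=0):
    """Monte Carlo estimate of the same probability for a standard bivariate normal."""
    rng = np.random.default_rng(seed)
    z1, z2 = rng.standard_normal((2, n))
    y1, y2 = z1, rho * z1 + np.sqrt(1 - rho**2) * z2
    return np.mean(np.sign(y1) == np.sign(y2))

rho = 0.5                      # arbitrary example value
p = p_closed_form(rho)         # 0.5 + (pi / 6) / pi = 2/3
q = 0.5                        # each of the two patterns of length 1 has probability 1/2
opd = (p - q) / (1 - q)        # = 2 * arcsin(rho) / pi = 1/3 here
print(p, p_monte_carlo(rho), opd)
```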

We start with the calculation of the second order Hermite coefficients in the case . This corresponds to the event , which yields:

and:

Due to , we have and therefore, . We identify the second order Hermite coefficients as the ones already calculated in [20], Example 3.13, although two consecutive increments of a univariate Gaussian process are considered there. Since the corresponding values depend only on the correlation between the Gaussian variables, we can simply replace the autocorrelation at lag 1 by the cross-correlation at lag 0. Hence, we obtain:

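Recall that the inverse of the correlation matrix of , with r denoting the cross-correlation of the two increment variables at lag 0, is given by

$$\frac{1}{1 - r^2} \begin{pmatrix} 1 & -r \\ -r & 1 \end{pmatrix}.$$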

By using the formula for obtained in [18], Equation (4.23), we derive:

Plugging the second order Hermite coefficients and the entries of the inverse of the covariance matrix depending on into the formulas, we arrive at:

and:

Therefore, in the case , we obtain the following factors in the limit variance in Theorem 3:

Remark 2.

It is not possible to determine the limit variance analytically for , as this involves orthant probabilities of a four-dimensional Gaussian distribution. According to [22], no closed-form expressions are available for these probabilities. However, fast algorithms are available that calculate the limit variance efficiently, and the symmetry properties of the multivariate Gaussian distribution can be exploited to keep their computational cost low. For details, see [18], Section 4.3.1.

3.2. Limit Theorem for the Estimator of p in Case of Short-Range Dependence

In this section, we focus on the case of . If , we are still dealing with a long-range dependent multivariate Gaussian process . However, the transformed process is no longer long-range dependent, since we are considering a function with Hermite rank 2, see also [16], Table 5.1. Otherwise, if , the process itself is already short-range dependent, since the cross-correlations are summable. Therefore, we obtain the following central limit theorem by applying Theorem 4 in [14].

Theorem 5.

Under the assumptions in (S), we obtain:

with:

We close this section with a brief summary of the results obtained. We established limit theorems for the estimator of p, the probability of coincident patterns in both time series and hence the most important parameter in the context of ordinal pattern dependence. The long-range dependent case as well as the mixed case of short- and long-range dependence were considered. Finally, we provided a central limit theorem for a multivariate Gaussian time series that is short-range dependent if transformed by . In the subsequent section, we provide a simulation study that illustrates our theoretical findings. In doing so, we shed light on the Rosenblatt distribution and the distribution of the sum of Rosenblatt distributed random variables.

4. Simulation Study

We begin with the generation of a bivariate long-range dependent fractional Gaussian noise series .

First, we simulate two independent fractional Gaussian noise processes and , generated with the R package “longmemo”, for a fixed parameter in both time series. For the reader’s convenience, we express the long-range dependence parameter d through $H = d + \frac{1}{2}$, as is common when dealing with fractional Gaussian noise and fractional Brownian motion. We refer to H as the Hurst parameter, tracing back to the work of [23]. For and we generate samples, for , we choose . We denote the correlation function of univariate fractional Gaussian noise by $\rho_H(k) = \frac{1}{2}\left(|k+1|^{2H} - 2|k|^{2H} + |k-1|^{2H}\right)$, $k \in \mathbb{Z}$. Then, we obtain for :

for .

Note that this yields the following properties for the cross-correlations of the two processes for :

We use and to obtain unit variance in the second process.
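The simulation above uses the R package “longmemo”. As an illustration only, the following Python sketch instead draws exact fGn paths via a Cholesky factorization of the n × n correlation matrix built from ρ_H (a swapped-in technique with O(n³) cost, suitable only for moderate n). It assumes that the second increment process is obtained as λ₁ times the first plus λ₂ times an independent fGn path, with the hypothetical weights λ₁ = 0.6 and λ₂ = 0.8 (so that λ₁² + λ₂² = 1 gives unit variance), and computes the estimator of p for h = 2. The construction details and all names are our assumptions.

```python
import numpy as np

def fgn_corr(k, H):
    """Correlation rho_H(k) of fractional Gaussian noise at integer lag k."""
    k = np.abs(np.asarray(k, dtype=float))
    return 0.5 * ((k + 1) ** (2 * H) - 2 * k ** (2 * H) + np.abs(k - 1) ** (2 * H))

def simulate_fgn(n, H, rng, size=1):
    """`size` independent exact fGn paths of length n (Cholesky method, O(n^3))."""
    lags = np.subtract.outer(np.arange(n), np.arange(n))
    L = np.linalg.cholesky(fgn_corr(lags, H))
    return rng.standard_normal((size, n)) @ L.T   # each row has covariance L L^T

def ordinal_pattern(x):
    """Time indices ordered from the largest to the smallest value."""
    idx = np.arange(len(x))
    return tuple(int(i) for i in np.lexsort((-idx, -np.asarray(x, dtype=float))))

def p_hat(x1, x2, h):
    """Relative frequency of coincident patterns over all moving windows."""
    return np.mean([ordinal_pattern(x1[j:j + h + 1]) == ordinal_pattern(x2[j:j + h + 1])
                    for j in range(len(x1) - h)])

rng = np.random.default_rng(3)
n, H, h = 1000, 0.8, 2
lam1, lam2 = 0.6, 0.8                           # hypothetical weights, lam1**2 + lam2**2 = 1
y1, y_tilde = simulate_fgn(n, H, rng, size=2)   # two independent fGn paths
y2 = lam1 * y1 + lam2 * y_tilde                 # second increment process, unit variance
x1, x2 = np.cumsum(y1), np.cumsum(y2)           # fBm-type paths whose patterns are compared
print(p_hat(x1, x2, h))
```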

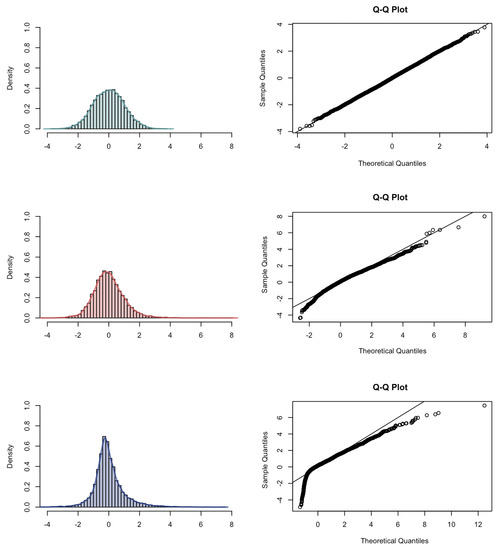

Note that we chose the same Hurst parameter in both processes to obtain better simulation results. The simulated processes and are visualized in Figure 5. On the left-hand side, the fractional Gaussian noises for the different Hurst parameters H are displayed. They represent the stationary long-range dependent Gaussian increment processes required for the limit theorems derived in Section 3. The processes in which we compare the coincident ordinal patterns, namely and , are shown on the right-hand side of Figure 5. The long-range dependence of the increment processes is clearly visible in these paths: roughly speaking, they become smoother as the Hurst parameter increases.

Figure 5.

Plots of 500 data points of one path of two dependent fractional Gaussian noise processes (left) and the paths of the corresponding fractional Brownian motions (right) for different Hurst parameters: (top), (middle), (bottom).

We turn to the simulation results for the asymptotic distribution of the estimator . The first limit theorem is given in Theorem 3 for and . In the case of , a different limit theorem holds; see Theorem 5. The simulation results for the asymptotic distribution of the estimator of p are shown in Figure 6 for pattern length . The asymptotic normality in the case can be clearly observed. For and , we interpret the simulated distribution of as the weighted sum of the sample (cross-)correlations: in the Q–Q plot for , the samples in the upper and lower tails deviate from the reference line. For , a similar behavior is observed in the Q–Q plot.

Figure 6.

Histogram, kernel density estimation and Q–Q plot with respect to the normal distribution () or to the Rosenblatt distribution of with for different Hurst parameters: (top); (middle); (bottom).

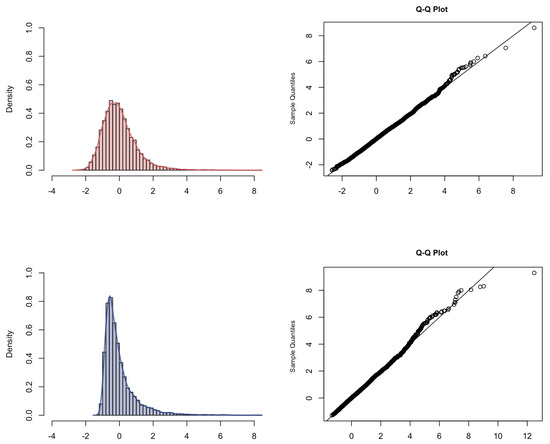

We want to verify the result in Theorem 4 that it is possible, by a different weighting, to express the limit distribution of as the distribution of the sum of two independent standard Rosenblatt random variables. The simulated convergence result is provided in Figure 7. We observed the standard Rosenblatt distribution.

Figure 7.

Histogram, kernel density estimation and Q–Q plot with respect to the Rosenblatt distribution of for different Hurst parameters: (top); (bottom).

5. Conclusions and Outlook

We considered limit theorems in the context of the estimation of ordinal pattern dependence in the long-range dependence setting. Pure long-range dependence, as well as mixed cases of short- and long-range dependence, were considered alongside the transformed short-range dependent case. In this way, we complemented the asymptotic results in [11]. Hence, we made ordinal pattern dependence applicable to long-range dependent data sets as they arise, e.g., in neurology [24] or artificial intelligence [25]. Since these kinds of data have already been investigated using ordinal patterns (see, for example, [26]), this emphasizes the large practical impact of the ordinal approach in analyzing the dependence structure of multivariate time series and opens up various opportunities for future research in these fields.

Our results rely on the assumption that the considered multivariate time series is Gaussian. When comparing coincident ordinal patterns in a stationary long-range dependent bivariate time series, we benefit greatly from the fact that ordinal patterns are not affected by strictly increasing transformations: it is possible to transform the data set to the Gaussian framework without losing the necessary ordinal information. In applications, this property is highly desirable. In the more general setting of stationary increments, however, the underlying mathematical theory becomes considerably more complex, which marks the limitations of our results. A crucial argument used in the proofs of the results in Section 2 is the Reduction Theorem, originally proven in Theorem 4.1 in [27] in the univariate case and extended to the multivariate setting in Theorem 6 in [14]. For further details, we refer the reader to Appendix A. However, this result only holds in the Gaussian case. Limit theorems for the sample cross-correlation process of multivariate linear long-range dependent processes with Hermite rank 2 have recently been proven in Theorem 4 in [28]. This may be an interesting starting point for adapting the proofs in Appendix A to this larger class of processes without requiring Gaussianity. Since our setting is based on a discrete-time bivariate time series, another interesting extension would be continuous-time processes together with discretization techniques that preserve the ordinal perspective. Going even further beyond our scope, a generalization to categorical data is conceivable and constitutes an interesting open research question.

Author Contributions

Conceptualization, I.N. and A.S.; methodology and mathematical theory, I.N.; simulations, I.N.; validation, I.N. and A.S.; writing—original draft preparation, I.N.; writing—review and editing, A.S.; funding acquisition, A.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the German Research Foundation (DFG) grant number SCHN 1231/3-2.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author, Ines Nüßgen, upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Technical Appendix

All proofs in this appendix were taken from [18], Chapter 3.

Appendix A.1. Preliminary Results

Before turning to limit theorems, we introduce a way of decomposing the d-dimensional Gaussian process by means of the Cholesky decomposition (see [29]). Based on the definition of the multivariate normal distribution (see [30], Definition 1.6.1), we find an upper triangular matrix , such that . Then, it holds that:

where is a d-dimensional Gaussian process such that each has independent and identically distributed entries. We want to ensure that preserves the long-range dependence structure of . Since we know from (2) that:

the process has to fulfill:

with .
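The existence of an upper triangular factor can be illustrated numerically. This is a minimal NumPy sketch, assuming the convention that the factor `A` satisfies `A @ A.T == sigma` for a covariance matrix `sigma`; the helper name `upper_cholesky` and the covariance values are hypothetical. Since `np.linalg.cholesky` returns a lower triangular factor, the sketch obtains an upper triangular one by reversing the order of the coordinates with the exchange matrix P, and then checks that A^{-1} X_j has (approximately) identity covariance.

```python
import numpy as np


def upper_cholesky(sigma):
    """Return an upper triangular matrix A with A @ A.T == sigma.

    If P is the exchange (reversal) matrix and P sigma P = L L^T with L lower
    triangular, then A = P L P is upper triangular and A A^T = sigma.
    """
    sigma = np.asarray(sigma, dtype=float)
    n = sigma.shape[0]
    P = np.eye(n)[::-1]                      # exchange matrix: P = P^T = P^{-1}
    L = np.linalg.cholesky(P @ sigma @ P)    # lower triangular factor
    return P @ L @ P


# Hypothetical covariance matrix of a single X_j (unit variances).
sigma = np.array([[1.0, 0.5, 0.2],
                  [0.5, 1.0, 0.3],
                  [0.2, 0.3, 1.0]])
A = upper_cholesky(sigma)

rng = np.random.default_rng(0)
X = rng.multivariate_normal(np.zeros(3), sigma, size=200_000)  # rows are draws of X_j
U = np.linalg.solve(A, X.T).T                                  # U_j = A^{-1} X_j
print(np.round(np.cov(U, rowvar=False), 2))                    # close to the identity
```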

Then, it holds for all that:

Note that the assumption in (A2) is only well-defined because we assumed for and in (1). This becomes clear in the following considerations. In the proofs of the theorems in this appendix, we need a decomposition not only of , but also of . As is still a multivariate Gaussian process, the covariance matrix of , given by , is positive definite. Hence, it is possible to find an upper triangular matrix A such that . It holds that:

for:

The random vector consists of independent standard normally distributed random variables. Note the different structure of compared to : while for consecutive j the entries in are all different, there are identical entries, for example, in and . This complicates our aim of ensuring that:

holds.

The special structure of , namely that it consists of h consecutive entries of each marginal process , , together with the dependence between two random vectors in the process , has to be reflected in the covariance matrix of . Hence, we need to check whether such a vector exists, i.e., whether there is a positive semi-definite matrix that fulfills these conditions. We define as a block diagonal matrix with A as main-diagonal blocks and all off-diagonal blocks equal to zero matrices of the same size.

We denote the covariance matrix of by and define the following matrix:

We know that is positive semi-definite for all because is a Gaussian process. Mathematically, this means that:

for all . We conclude:

Therefore, is a positive semi-definite matrix for all and the random vector:

exists and (A4) holds. Note that we do not have any further information on the dependence structure within the process ; in general, this process exhibits neither long-range dependence nor independence nor stationarity.
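The positive semi-definiteness argument can be mirrored numerically in a toy setting. The sketch below is an illustration under explicit assumptions of our own: a bivariate process (d = 2) with separable covariances Cov(X_j^(p), X_k^(q)) = R_pq γ(j − k), where γ is the autocovariance of fractional Gaussian noise; vectors Y_j that collect h consecutive values of each component; and, for brevity, NumPy's lower triangular Cholesky factor instead of an upper triangular one, which does not affect the argument. Because consecutive Y_j share entries, the stacked covariance matrix of (Y_1, ..., Y_n) is singular, so the transformed covariance matrix is positive semi-definite but not positive definite.

```python
import numpy as np


def fgn_autocov(k, H):
    """Autocovariance of unit-variance fractional Gaussian noise with Hurst index H."""
    k = np.abs(np.asarray(k, dtype=float))
    return 0.5 * ((k + 1) ** (2 * H) - 2 * k ** (2 * H) + np.abs(k - 1) ** (2 * H))


H, rho = 0.8, 0.4                 # illustrative values: d = H - 1/2 = 0.3
R = np.array([[1.0, rho], [rho, 1.0]])
d, h, n = 2, 2, 20

# Coordinate (j, p, i) of the stacked vector (Y_1, ..., Y_n) is X^(p) at time j + i.
coords = [(j, p, i) for j in range(n) for p in range(d) for i in range(h)]
times = np.array([j + i for j, _, i in coords])
comps = np.array([p for _, p, _ in coords])
Gamma = R[np.ix_(comps, comps)] * fgn_autocov(times[:, None] - times[None, :], H)

Sigma_Y = Gamma[: d * h, : d * h]          # covariance matrix of a single Y_j
A = np.linalg.cholesky(Sigma_Y)           # A @ A.T == Sigma_Y (lower triangular here)
B = np.kron(np.eye(n), np.linalg.inv(A))  # block diagonal matrix with blocks A^{-1}
Cov_U = B @ Gamma @ B.T                   # covariance matrix of (U_1, ..., U_n)

eig = np.linalg.eigvalsh(Cov_U)
print(eig.min(), eig.max())               # smallest eigenvalue is numerically zero
```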

We continue with two preparatory results that are also necessary for proving Theorem 2.1.

Lemma A1.

Let be a d-dimensional Gaussian process as defined in (1) that fulfills (2) with , such that:

Let be a normalization constant:

and let be an upper triangular matrix, such that:

Furthermore, for we have:

Then, for it holds that:

where has the spectral domain representation:

where:

and is a multivariate Hermitian–Gaussian random measure as defined in (12).

Proof.

First, we can use (A1):

such that is a multivariate Gaussian process with and is still long-range dependent, as can be seen in (A2). It is possible to decompose the sample cross-covariance matrix with respect to at lag l given by

to:

where we define the sample cross-covariance matrix with respect to at lag l by

Each entry of:

is given by

Following [31], proof of Lemma 7.4, the limit distribution of:

is equal to the limit distribution of:

We recall the assumption that for all . Following [14], Theorem 6, we use the Cramér–Wold device: Let . We are interested in the asymptotic behavior of:

We consider the function:

and can apply Theorem 6 in [14]. Using the Hermite decomposition of f as given in (11), we observe that f, and therefore , , only affect the Hermite coefficients. Indeed, using [15], Lemma 3.5, the Hermite coefficients reduce to for each summand on the right-hand side of (A7). Hence, we can state:

where has the spectral domain representation:

where:

and is an appropriate multivariate Hermitian–Gaussian random measure. Thus, we have proven convergence in distribution of the sample cross-correlation matrix:

We take a closer look at the covariance matrix of . Following [28], Lemma 5.7, we observe:

with as defined in (A3), where ⊗ denotes the Kronecker product. Furthermore, denotes the commutation matrix that transforms into for . Further details can be found in [32].
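The commutation matrix can be constructed explicitly. A short NumPy sketch, using the column-stacking vec operator; the helper names `vec` and `commutation_matrix` are hypothetical. It checks the defining property K_{m,n} vec(M) = vec(M^T) for an m × n matrix M, the identity K_{m,n}^T = K_{n,m}, and the fact that commutation matrices swap the factors of a Kronecker product: K_{p,m}(A ⊗ B)K_{n,q} = B ⊗ A for A of size m × n and B of size p × q.

```python
import numpy as np


def vec(M):
    """Stack the columns of M into a single vector."""
    return np.asarray(M).reshape(-1, order="F")


def commutation_matrix(m, n):
    """K_{m,n}: the permutation matrix with K_{m,n} @ vec(M) == vec(M.T) for m x n M."""
    K = np.zeros((m * n, m * n))
    for i in range(m):
        for j in range(n):
            # M[i, j] sits at position j*m + i of vec(M) and i*n + j of vec(M.T).
            K[i * n + j, j * m + i] = 1.0
    return K


M = np.arange(6.0).reshape(2, 3)
K = commutation_matrix(2, 3)
assert np.allclose(K @ vec(M), vec(M.T))
assert np.allclose(K.T, commutation_matrix(3, 2))          # K_{m,n}^T = K_{n,m}

# Kronecker factors can be swapped: K_{p,m} (A kron B) K_{n,q} = B kron A.
rng = np.random.default_rng(0)
A, B = rng.standard_normal((2, 3)), rng.standard_normal((4, 5))  # m, n, p, q = 2, 3, 4, 5
lhs = commutation_matrix(4, 2) @ np.kron(A, B) @ commutation_matrix(3, 5)
assert np.allclose(lhs, np.kron(B, A))
```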

Hence, the covariance matrix of the vector of the sample cross-covariances is fully specified by the knowledge of as it arises in the context of long-range dependence in (A3).

We obtain a relation between L and , since:

Both:

and:

hold and we obtain:

We study the covariance matrix of:

Let be an upper triangular matrix, such that:

We know that such a matrix exists because is positive definite. Analogously, we define :

Then, it holds that:

We arrive at:

where has the spectral domain representation:

where:

and is a multivariate Hermitian–Gaussian random measure as defined in (12). Note that the standardization on the left-hand side is appropriate since the covariance matrix of is given by

by denoting:

We observe:

following [28], (27). Neither the case nor has to be incorporated as the diagonals are excluded in the integration in (A12). □

Corollary A1.

Under the assumptions of Lemma A1, there is a different representation of the limit random vector. For , we obtain:

where has the spectral domain representation:

The matrix is a diagonal matrix:

and is such that:

Furthermore, is a multivariate Hermitian–Gaussian random measure that fulfills:

Proof.

The proof is an immediate consequence of Lemma A1 using with and as defined in (12). □

Appendix A.2. Proof of Theorem 2.1

Proof.

Without loss of generality, we assume . Following the argumentation in [20], Theorem 5.9, we first remark that with and A as described in (A4) and (A5). We now study the asymptotic behavior of the partial sum where . Since (see [15], Lemma 3.7), we know by [14], Theorem 6, that these partial sums are dominated by the second-order terms in the Hermite expansion of :

This follows from the multivariate extension of the Reduction Theorem as proven in [14]. We obtain:

since . This results in:

Note that:

since the entries of are independent for fixed j and identically distributed. Thus, the subtrahend in (A14) equals the expected value of the minuend.
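To make Hermite coefficients and the Hermite rank concrete, the following sketch computes c_k = E[f(Z) He_k(Z)] / k! for a standard normal Z by Gauss–Hermite quadrature, where He_k are the probabilists' Hermite polynomials from NumPy's `hermite_e` module. This normalization is one common convention and is assumed here. It is a univariate toy example: the test function cos is our choice, not the functional of the paper. Since cos is even, its centered version has Hermite rank 2, and the exact coefficients are c_k = E[f^(k)(Z)] / k!, for example c_2 = −e^(−1/2)/2. The helper names are hypothetical.

```python
import math

import numpy as np
from numpy.polynomial import hermite_e as He


def hermite_coefficients(f, max_order, n_nodes=80):
    """c_k = E[f(Z) He_k(Z)] / k! for Z ~ N(0, 1), via Gauss-Hermite quadrature."""
    x, w = He.hermegauss(n_nodes)          # nodes/weights for the weight exp(-x^2/2)
    w = w / math.sqrt(2 * math.pi)         # normalize to the standard normal density
    coeffs = []
    for k in range(max_order + 1):
        basis = np.zeros(k + 1)
        basis[k] = 1.0                      # coefficient vector selecting He_k
        coeffs.append(np.sum(w * f(x) * He.hermeval(x, basis)) / math.factorial(k))
    return np.array(coeffs)


def hermite_rank(coeffs, tol=1e-10):
    """Smallest k >= 1 with a non-vanishing coefficient (Hermite rank of f - E f)."""
    nonzero = [k for k in range(1, len(coeffs)) if abs(coeffs[k]) > tol]
    return nonzero[0] if nonzero else None


c = hermite_coefficients(np.cos, 4)
print(np.round(c, 6), hermite_rank(c))     # odd coefficients vanish; rank is 2
```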

Define with:

since we are considering a stationary process. We obtain:

Hence, we can state the following:

where we define , with as the matrix of second-order Hermite coefficients, now, in contrast to B, with respect to the originally considered process .

Recalling the special structure of , namely that , , we can see that:

where we divide:

with such that for each and .

We now split the sum in (A17) such that it can afterwards be expressed in terms of sample cross-covariances. To this end, we define the sample cross-covariance at lag l by

for .
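A direct implementation of a sample cross-covariance matrix at a non-negative lag can look as follows. This is a sketch assuming a zero-mean series and the normalization 1/n; the exact normalization and summation range should be taken from the definition above, and `sample_cross_cov` is a hypothetical helper. For a negative lag, the matrix is the transpose of the one at the corresponding positive lag.

```python
import numpy as np


def sample_cross_cov(X, lag):
    """Sample cross-covariance matrix at lag >= 0 of a zero-mean series X (shape n x d).

    Entry (p, q) equals (1/n) * sum_{j=0}^{n-lag-1} X[j, p] * X[j + lag, q].
    """
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    if not 0 <= lag < n:
        raise ValueError("lag must satisfy 0 <= lag < n")
    return X[: n - lag].T @ X[lag:] / n


X = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
print(sample_cross_cov(X, 1))        # equals [[18, 22], [26, 32]] / 3
```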

Note that in the case , it follows directly that:

The case has to be regarded separately, too, and we obtain:

Note that for each of the terms labeled by ★, the following holds for :

We use this property later on when dealing with the asymptotics of the term in (A17). Finally, we consider the term in (A17) for and arrive at:

Again for each of the terms labeled by ★ it holds for :

since each ★ describes a sum whose number of summands is finite and independent of n. Therefore, in what follows, we denote the terms labeled by ★ by .

With these calculations, we can re-express the partial sum of interest in terms of the sample cross-correlations of the original long-range dependent process .

Taking the parts containing the sample cross-correlations into account, we derive:

We take a closer look at the impact of each long-range dependence parameter , on the convergence of this sum. The setting we are considering does not allow for a separate normalization depending on p and q for each cross-correlation , ; instead, we need a common normalization for all . Hence, we need to recall the set and the parameter , such that for each , we have . For each with and , we conclude that:

since .
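The bookkeeping behind this step, namely which pairs (p, q) determine the normalization, can be sketched as follows. We assume here (this is our reading of the long-range dependence condition) that the cross-covariance of components p and q decays like k^(d_p + d_q − 1), and we take d* = max_p d_p together with the set P* of components attaining it; the function names and the example parameters are hypothetical. Pairs outside P* × P* then have a strictly smaller exponent, so their weight relative to a dominating pair decays like k^(d_p + d_q − 2d*).

```python
import itertools

import numpy as np


def dominating_pairs(d_params, tol=1e-12):
    """Return d* = max_p d_p, the components attaining it, and the pairs in P* x P*."""
    d = np.asarray(d_params, dtype=float)
    d_star = float(d.max())
    p_star = [p for p in range(len(d)) if abs(d[p] - d_star) <= tol]
    return d_star, p_star, list(itertools.product(p_star, repeat=2))


def relative_decay(d_params, p, q, k):
    """k^(d_p + d_q - 1) / k^(2 d* - 1): weight of pair (p, q) relative to a dominating pair."""
    d = np.asarray(d_params, dtype=float)
    return float(k) ** (d[p] + d[q] - 2 * d.max())


d_params = [0.30, 0.40, 0.40]          # hypothetical long-range dependence parameters
d_star, p_star, pairs = dominating_pairs(d_params)
print(d_star, p_star, pairs)
print([relative_decay(d_params, 0, 1, k) for k in (10, 1_000, 100_000)])  # slowly tends to 0
```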

This implies that:

Hence, by Slutsky’s theorem, the crucial parameters that determine the normalization, and therefore the limit distribution of (A27), are given in . The long-range dependence parameter is the same for all . Applying Lemma A1 and using the symmetry in of the cross-correlation function for , we obtain the following:

by defining . Applying the continuous mapping theorem given in [33], Theorem 2.3, to the result in Corollary A1, we arrive at:

where:

The matrix is given in Corollary A1. Moreover, is a multivariate Hermitian–Gaussian random measure with and L as defined in (2). □

Appendix A.3. Proof of Corollary 2.2

Proof.

We assumed , because otherwise we would leave the long-range dependent setting: since we are studying functionals with Hermite rank 2, the transformed process would no longer be long-range dependent, and limit theorems for functionals of short-range dependent processes would hold (see Theorem 4 in [14]). This choice of ensures that the multivariate generalization of the Reduction Theorem, as used in the proof of Theorem 2.1, still holds under these relaxed assumptions, as explained in (8).
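A standard fact behind restrictions of this kind can be seen numerically. For a functional with Hermite rank 2, the relevant covariances are essentially squared covariances of the underlying process, and (k^(2d−1))^2 = k^(4d−2) is summable exactly when d < 1/4. The sketch below uses fractional Gaussian noise (with d = H − 1/2) as a hypothetical example and compares partial sums of its squared autocovariances for d = 0.1 and d = 0.4; the parameter values and helper names are illustrative only.

```python
import numpy as np


def fgn_autocov(k, H):
    """Autocovariance of unit-variance fractional Gaussian noise with Hurst index H."""
    k = np.abs(np.asarray(k, dtype=float))
    return 0.5 * ((k + 1) ** (2 * H) - 2 * k ** (2 * H) + np.abs(k - 1) ** (2 * H))


def squared_cov_growth(H, n_small=1_000, n_large=100_000):
    """Ratio of the partial sums of squared autocovariances up to n_large and n_small."""
    sq = fgn_autocov(np.arange(n_large), H) ** 2
    return sq.sum() / sq[:n_small].sum()


# d = H - 1/2: 0.1 (below 1/4, summable) versus 0.4 (above 1/4, non-summable)
for H in (0.6, 0.9):
    print(f"d = {H - 0.5:.1f}: growth factor {squared_cov_growth(H):.2f}")
```

For d = 0.1 the partial sums have essentially converged, while for d = 0.4 they keep growing, roughly like n^(4d−1).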

We turn to the asymptotics of . We obtain for all , i.e., excluding and for all as in (A26), that:

since .

This implies that:

Applying Slutsky’s theorem, we observe that only have an impact on the convergence behavior as it is given in (A27) and hence, the result in Theorem 2.1 holds. □

Appendix A.4. Proof of Theorem 2.3

Proof.

We follow the proof of Theorem 2.1 until (A27), in order to obtain a limit distribution that can be expressed by the sum of two standard Rosenblatt random variables:

Recall that for and . Since is the inverse of the covariance matrix of , it is a symmetric matrix. The matrix of second-order Hermite coefficients C has the representation and therefore, for each . Then, is also a symmetric matrix, since . We can now show that is a symmetric matrix, i.e., . To this end, we define such that only if , . Then, we obtain:

We now apply the new assumption that , for and show with the symmetry features of the multivariate normal distribution discussed in (2.2) and in (2.3) in [18], since , .

We have to study:

Since is a symmetric and persymmetric matrix, we have and for . Then, we obtain:

Note that:

Then, we arrive at:

Therefore, we are dealing with a special type of matrix, since the original matrix in formula (A27), namely , has now been reduced to .

Finally, recall that any real-valued symmetric matrix A can be decomposed via diagonalization into an orthogonal matrix V and a diagonal matrix D whose diagonal entries are the eigenvalues of A (for details, see [34], p. 327).
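As an illustration, assume (hypothetically, for the smallest case) that the matrix to be diagonalized is a 2 × 2 matrix that is both symmetric and persymmetric, i.e., of the form [[a, b], [b, a]]. Its diagonalization is then explicit: V = (1/√2)[[1, 1], [1, −1]] and D = diag(a + b, a − b). The sketch checks this against `numpy.linalg.eigh`; the helper names and the values of a and b are arbitrary choices.

```python
import numpy as np


def is_persymmetric(M):
    """True if M is symmetric about its anti-diagonal, i.e. J M J == M.T (J: exchange matrix)."""
    J = np.eye(M.shape[0])[::-1]
    return np.allclose(J @ M @ J, M.T)


def diagonalize_sym_persym_2x2(a, b):
    """Explicit V, D with [[a, b], [b, a]] == V @ D @ V.T and V orthogonal."""
    V = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2.0)
    D = np.diag([a + b, a - b])
    return V, D


a, b = 2.0, 0.5                    # arbitrary illustrative entries
M = np.array([[a, b], [b, a]])
V, D = diagonalize_sym_persym_2x2(a, b)

assert np.allclose(M, M.T) and is_persymmetric(M)
assert np.allclose(V @ V.T, np.eye(2))
assert np.allclose(V @ D @ V.T, M)
# The eigenvalues agree with a generic symmetric eigensolver (eigh sorts them ascending).
assert np.allclose(np.linalg.eigh(M)[0], sorted([a + b, a - b]))
```

Applied to a correlation matrix [[1, ρ], [ρ, 1]], the same V maps two equicorrelated standard normal variables to the uncorrelated pair (X1 + X2)/√2 and (X1 − X2)/√2 with variances 1 + ρ and 1 − ρ; this illustrates how such a rotation can produce a vector with independent entries for fixed j.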

We can explicitly give formulas for the entries of these matrices here:

such that:

Therefore, continuing with (A29), we now have the representation:

with and .

Now, note that:

Therefore, we created a bivariate long-range dependent Gaussian process, since:

with cross-covariance function:

Note that the covariance functions have the following asymptotic behavior:

and analogously:

We can now apply the result of [14], Theorem 6, since we created a bivariate Gaussian process with independent entries for fixed j. Note that for the function we apply here, namely the weighting factors in [14], Theorem 6, reduce to and . These weighting factors fit into the result in [14], (3.6) and (3.7), which even yields the joint convergence of the vector of both univariate summands, , suitably normalized to a vector of two (dependent) Rosenblatt random variables. Since the long-range dependence property in [13], Definition 2.1 is more specific than in [14], p. 2259, (3.1) (see the considerations in (8)), we are able to scale the variances of each Rosenblatt random variable to 1 and give the covariance between them, by using the normalization given in [15], Theorem 4.3. We obtain:

with being the same normalizing factor as in Theorem 2.1.

We observe that and are both standard Rosenblatt random variables. Following Corollary A1, their covariance is given by

Note that is fulfilled since . □

References

- Bandt, C.; Pompe, B. Permutation entropy: A natural complexity measure for time series. Phys. Rev. Lett. 2002, 88, 174102.

- Keller, K.; Sinn, M. Kolmogorov–Sinai entropy from the ordinal viewpoint. Phys. D Nonlinear Phenom. 2010, 239, 997–1000.

- Gutjahr, T.; Keller, K. Ordinal Pattern Based Entropies and the Kolmogorov–Sinai Entropy: An Update. Entropy 2020, 22, 63.

- Keller, K.; Sinn, M. Ordinal analysis of time series. Phys. A Stat. Mech. Its Appl. 2005, 356, 114–120.

- Sinn, M.; Keller, K. Estimation of ordinal pattern probabilities in Gaussian processes with stationary increments. Comput. Stat. Data Anal. 2011, 55, 1781–1790.

- Matilla-García, M.; Ruiz Marín, M. A non-parametric independence test using permutation entropy. J. Econom. 2008, 144, 139–155.

- Caballero-Pintado, M.V.; Matilla-García, M.; Marín, M.R. Symbolic correlation integral. Econom. Rev. 2019, 38, 533–556.

- Bandt, C.; Shiha, F. Order patterns in time series. J. Time Ser. Anal. 2007, 28, 646–665.

- Unakafov, A.M.; Keller, K. Change-Point Detection Using the Conditional Entropy of Ordinal Patterns. Entropy 2018, 20, 709.

- Schnurr, A. An ordinal pattern approach to detect and to model leverage effects and dependence structures between financial time series. Stat. Pap. 2014, 55, 919–931.

- Schnurr, A.; Dehling, H. Testing for Structural Breaks via Ordinal Pattern Dependence. J. Am. Stat. Assoc. 2017, 112, 706–720.

- López-García, M.N.; Sánchez-Granero, M.A.; Trinidad-Segovia, J.E.; Puertas, A.M.; Nieves, F.J.D.l. Volatility Co-Movement in Stock Markets. Mathematics 2021, 9, 598.

- Kechagias, S.; Pipiras, V. Definitions and representations of multivariate long-range dependent time series. J. Time Ser. Anal. 2015, 36, 1–25.

- Arcones, M.A. Limit theorems for nonlinear functionals of a stationary Gaussian sequence of vectors. Ann. Probab. 1994, 22, 2242–2274.

- Beran, J.; Feng, Y.; Ghosh, S.; Kulik, R. Long-Memory Processes; Springer: Berlin/Heidelberg, Germany, 2013.

- Pipiras, V.; Taqqu, M.S. Long-Range Dependence and Self-Similarity; Cambridge University Press: Cambridge, UK, 2017; Volume 45.

- Major, P. Non-central limit theorem for non-linear functionals of vector valued Gaussian stationary random fields. arXiv 2019, arXiv:1901.04086.

- Nüßgen, I. Ordinal Pattern Analysis: Limit Theorems for Multivariate Long-Range Dependent Gaussian Time Series and a Comparison to Multivariate Dependence Measures. Ph.D. Thesis, University of Siegen, Siegen, Germany, 2021.

- Caballero-Pintado, M.V.; Matilla-García, M.; Rodríguez, J.M.; Ruiz Marín, M. Two Tests for Dependence (of Unknown Form) between Time Series. Entropy 2019, 21, 878.

- Betken, A.; Buchsteiner, J.; Dehling, H.; Münker, I.; Schnurr, A.; Woerner, J.H. Ordinal Patterns in Long-Range Dependent Time Series. arXiv 2019, arXiv:1905.11033.

- Veillette, M.S.; Taqqu, M.S. Properties and numerical evaluation of the Rosenblatt distribution. Bernoulli 2013, 19, 982–1005.

- Abrahamson, I. Orthant probabilities for the quadrivariate normal distribution. Ann. Math. Stat. 1964, 35, 1685–1703.

- Hurst, H.E. Long-term storage capacity of reservoirs. Trans. Amer. Soc. Civil Eng. 1951, 116, 770–808.

- Karlekar, M.; Gupta, A. Stochastic modeling of EEG rhythms with fractional Gaussian noise. In Proceedings of the 2014 22nd European Signal Processing Conference (EUSIPCO), Lisbon, Portugal, 1–5 September 2014; pp. 2520–2524.

- Shu, Y.; Jin, Z.; Zhang, L.; Wang, L.; Yang, O.W. Traffic prediction using FARIMA models. In Proceedings of the 1999 IEEE International Conference on Communications (Cat. No.99CH36311), Vancouver, BC, Canada, 6–10 June 1999; Volume 2, pp. 891–895.

- Keller, K.; Lauffer, H.; Sinn, M. Ordinal analysis of EEG time series. Chaos Complex. Lett. 2007, 2, 247–258.

- Taqqu, M.S. Weak convergence to fractional Brownian motion and to the Rosenblatt process. Probab. Theory Relat. Fields 1975, 31, 287–302.

- Düker, M.C. Limit theorems in the context of multivariate long-range dependence. Stoch. Process. Appl. 2020, 130, 5394–5425.

- Golub, G.H.; Van Loan, C.F. Matrix Computations; JHU Press: Baltimore, MD, USA, 2013; Volume 3.

- Brockwell, P.J.; Davis, R.A. Time Series: Theory and Methods; Springer-Verlag New York, Inc.: New York, NY, USA, 1991.

- Düker, M.C. Limit Theorems for Multivariate Long-Range Dependent Processes. arXiv 2017, arXiv:1704.08609v3.

- Magnus, J.R.; Neudecker, H. The commutation matrix: Some properties and applications. Ann. Stat. 1979, 7, 381–394.

- Van der Vaart, A.W. Asymptotic Statistics; Cambridge University Press: Cambridge, UK, 2000; Volume 3.

- Beutelspacher, A. Lineare Algebra; Springer: Berlin/Heidelberg, Germany, 2003.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).