1. Introduction

The Bayes factor, defined as a ratio of the marginal likelihoods for the two models being compared, is an important quantity in Bayesian model comparison and hypothesis testing, see, e.g., in [

1]. The posterior odds ratio, used for comparing two competing models, is equal to the product of the prior odds and the Bayes’ factor. Therefore, one of the challenges for Bayesians is to accurately estimate the factor, especially in models with a large number of parameters and/or latent variables. Most popular methods of calculating the Bayes factor, based on estimation of the marginal likelihoods of each model separately, are very time-consuming (or even computationally infeasible) in high-dimensional cases. Therefore, methods for direct estimation of the Bayes factor, instead of estimating two marginal likelihoods, have been devised. There already exist different approaches, such as the product-space approach, reversible jump MCMC, and path sampling (see, e.g., in [

2,

3,

4,

5]), which make it possible to estimate the Bayes factor without calculation of the marginal densities of the data, but in many empirical cases (especially in the case of a large number of model parameters) they require extremely complicated and extensively time-consuming operations, with no general guarantees of success.

Note that for nested models with a large number of latent variables such as stochastic volatility models, Jacquier et al. [

6] have shown how to estimate the Bayes factor directly using Markov Chain Monte Carlo (MCMC) simulations from the posterior distribution. They pointed out that the Bayes factor can be expressed as the expected value of the ratio of corresponding densities with respect to the posterior distribution of parameters and latent variables. This expected value can be estimated by averaging over the MCMC draws. Thus, using the special structure of stochastic volatility models and exploiting prior assumptions about parameters, they have estimated Bayes factors for comparing the basic stochastic volatility model to the models with fat tails and correlated errors. Unfortunately, the finite sample properties of the estimator have not been analyzed by the authors. In this paper, we will show that even in very simple models it does not perform well, but it can be improved by trimming the space of parameters and latent variables.

Now, the issue of a direct estimation of the Bayes factor will be described more formally. Let us consider a Bayesian model, in which

- (1)

the space of parameters is denoted by and is a prior density function of the parameters collected in ,

- (2)

the space of latent variables is denoted by H and is a prior density function of the latent variables collected in , and

- (3)

y is a vector of observations.

The marginal data density,

, is defined as the integral (calculated over the whole space of parameters and latent variables,

) of the conditional data density given the vector of parameters and latent variables,

, with respect to the prior distribution of the parameters and latent variables:

In the majority of models it is impossible to analytically integrate out parameters and latent variables from the joint distribution for

y,

h, and

,

. It very often results from the number of dimensions of the space of the latent variables and parameters being too large. Furthermore, a correct assessment of the marginal likelihood is computationally challenging (see, e.g., in [

7,

8,

9], where summaries of various methods can be found). In the presence of a large number of latent variables (e.g., in stochastic volatility models) popular methods of Monte Carlo estimation of

for the observed vector

y are computationally extremely intensive, and often they turn out to be infeasible. Fortunately, we can consider the ratio of marginal data densities instead of

. In fact, the main criterion of comparison of two competing models

and

is the posterior odds ratio between the two models:

where

is the posterior probability of the model

,

is the prior probability of the model

, and

is the marginal data density value (the marginal likelihood) in the model

The ratio

is referred to as the Bayes factor in favor of the model

against the model

, in turn, the ratio

is the prior odds ratio. Thus, if the prior odds ratio is equal to 1 (i.e., both models are equally probable a priori,

, the posterior odds ratio between the two models is then equal to the Bayes factor. Moreover, if we assume that the set of models

is exhaustive, Bayes factors can be used to obtain the posterior model probabilities:

for

. By choosing

, we obtain

for

.

Thus, the Bayes factors can be used instead of the marginal data density values. Note that in many situations it is easier to estimate the Bayes factor than the marginal likelihood. In this paper, we show how to estimate the Bayes factor in models with a large number of parameters and/or latent variables, in which calculation of the marginal data density value is numerically impossible. It will be shown that the Bayes factor is equal to the posterior mean, restricted to a certain subset D of the parameter and latent variable space, of the ratio of conditional densities of the corresponding quantities times the reciprocal of the posterior probability of the subset D. This fact leads the researcher to using arithmetic mean estimator of the ratio based on simulation from the posterior distribution restricted to the subset D.

It is well known that the Savage–Dickey density ratio and its generalizations (see in [

10,

11,

12]) are relatively simple and widely used methods for computing Bayes factors for nested models. The Savage–Dickey method requires an estimate of the value of the posterior distribution at a single point. It is not reliable when this point lies in the tail of the posterior distribution. As has already been mentioned, the Savage–Dickey method can be used only for nested models. Our method can be applied for both nested and non-nested ones.

Note that although the posterior odds principle is fundamental, there are other Bayesian approaches to model comparison or hypothesis testing, e.g., the so-called Lindley type test (see [

13]) or the Full Bayesian Significance Test (FBST), introduced by Pereira and Stern [

14] as a Bayesian significance test for precise (sharp) hypotheses. A solid theoretical background of the FBST can be found in [

15]. Detailed discussions and extensions of the Pereira–Stern test as well as of its applications are presented in, e.g., [

16,

17,

18,

19,

20,

21]. Our approach to Bayesian model comparison (or comparing hypotheses) is based on posterior probabilities associated with models (or hypotheses). This leads directly to the so-called posterior odds approach. Motivations for the formal Bayesian approach to model selection and for the use of Bayes factors are discussed in [

22]. First of all, the Bayes factors and posterior model probabilities are easy to understand. Moreover, this approach is consistent, penalizes model complexity, remains conceptually the same regardless of the number of models (or hypotheses) under consideration, and does not require nested models or regular asymptotics. This approach allows one not only to test hypotheses, but also to compare them—“the process of revising prior probabilities associated with alternative hypotheses does not necessarily involve a decision to reject or accept these hypotheses” (see [

1], p. 291). Furthermore, the Bayes factors make it possible to compare models whose prior distributions are different. Finally, the posterior probabilities of models or the Bayes factors can be used in the so-called Bayesian pooling approach (or Bayesian model averaging, see, e.g., in [

23]). This paper is organized as follows. In

Section 2, our new method is presented.

Section 3 contains the simulation study. In

Section 4, we present results of comparison of hybrid MSV-MGARCH models. The last section contains conclusions and direction of further research.

2. New Estimators of the Bayes Factor

It is easy to show that the Bayes factor in favor of the model

against the model

can be expressed as an integral:

where

,

, and

denote the conditional probability density function of the vector of observations (conditional sampling density), the conditional probability density function of latent variables, and the prior density of parameters in the model

, respectively. Note that when the two competing models

and

have the same parameter vector and the vector of latent variables (i.e.,

,

,

,

, then the Bayes factor can be computed in a relatively straightforward way which does not require estimation of marginal data density values for each model separately. Of course, it is possible only if draws from one of the posterior distributions are available (see in [

24]). We have

where

Therefore, given the sample

from the posterior distribution

, an estimator of

can be expressed as

As was pointed out in [

24], it is crucial that the variability of the ratio

is small under the posterior distribution

; otherwise, estimates of

might be driven by few values of

and

, and thus an extremely large simulation sample could be required to obtain adequate result. To deal with this problem, we propose a certain modification of the estimator (

7). The idea of this modification is based on trimming the posterior sample to a certain subset of parameter and latent variable space, similarly to the idea of the correction of arithmetic mean estimator, proposed in [

25]. Let us assume that

and

. The equality

implies that

We can see immediately that

thus

Equality (

9) means that the Bayes factor can be expressed as a product of the reciprocal of the posterior probability of the subset

D in the model

,

, and of the expected value of the indicator function of subset

D times the ratio

,

. This expected value is calculated with respect to the posterior distribution of the model parameters and latent variables in the model

. Therefore, given the sample

from the posterior distribution

, an estimator of

can be expressed as

where

is an assessment of the posterior probability of the subset

D in the model

. Unfortunately, this assessment requires also sampling from the posterior distribution in the model

.

Note that equality (

9) can obviously be written as

provided that

. This equality suggests that the Bayes factor can be estimated by the product of the ratio of posterior probabilities of the subset

D and the sample arithmetic mean of the ratio

:

based on

drawn from the posterior distribution given by

, truncated to the subset

D, that is,

.

As a by-product of our considerations, we have just shown that if a Monte Carlo sampler only visits a subset of the support of the posterior distribution, the correction of the sample arithmetic mean of the ratio , is needed.

Now, we will extend our analysis to models which contain two groups of parameters: the parameters common to both models and parameters specific to only one of them. Moreover, additional assumptions pertaining to conditional probability density functions will be discussed.

Let us assume that

- (i)

denotes all the parameters of the model (since called , while contains the parameters common to both models: and (since called , and the vector denotes specific parameters of ;

- (ii)

, i.e., the random vectors and are a priori independent;

- (iii)

, i.e., the prior distribution for the vector of parameters is proper; and

- (iv)

; , i.e., competing models have the same vector of latent variables.

2.1. Different Prior Distributions of Latent Variables in Both Models

In this section, we additionally assume that

- (v)

, i.e., competing models have the same conditional sampling density and

- (vi)

, i.e., in both models, the common parameters have the same prior distribution.

Under above assumptions the Bayes factor in favor of the model

versus the model

is given by the following integral:

Thus, the estimator of the Bayes factor takes the form

where

are drawn from the posterior distribution of parameters and latent variables in the model

, i.e., from the distribution given by

.

On the other hand, it is easy to show that the Bayes factor in favor of the model

against the model

is as follows:

and consequently

where

and

are drawn from the posterior distribution

and from the prior distribution,

, respectively. However, if the dimension of the vector

is high, then the estimators

and

tend to suffer from the so-called “simulation pseudo-bias”, similarly to the harmonic mean estimator (see in [

26]). This “simulation pseudo-bias” of the estimators will be illustrated on the basis of simulation studies in the next section. To deal with the problem of “pseudo-bias”, we propose drawing from the posterior and prior distributions restricted to a subset of the space of parameters and latent variables with non-zero posterior probability, and correcting the arithmetic mean of the ratio by the posterior probability of the subset visited by the sampler.

Let us assume that and , , .

Starting from the identity

we obtain

On the basis of the fact that

and under assumptions (i)–(vi), we have

Identity (

18) naturally leads to the following estimator of the Bayes factor:

where

are drawn from

, or equivalently

where

are drawn from

truncated to the subset

. Because the posterior simulation support is the subset

of the parameter and latent variable space, most of the

have “similar” values of the ratio of the conditional densities of latent variables, so that, having probabilities

,

,

, the simulation process will be numerically more efficient than in the case of unrestricted space of parameters and latent variables. The estimates will not be dominated by few values of the ratio. Therefore, a smaller simulation sample will be required to obtain adequate precision in

.

Now suppose that

and

,

. This time we start with the identity

and we obtain

In this case,

and under assumptions (i)–(vi)

Given a sample

,

from the distributions

and

, respectively, then, as results from (

22), an estimator of the Bayes factor for the model

against the model

can be

Additionally, if

, then

where

,

are drawn from the distributions determined by

and

, respectively, restricted to the subset

D.

2.2. Different Prior Distributions for Common Parameters in Both Models

Now, let us assume that

- (v)

, i.e., competing models have the same sampling density and

- (vii)

, i.e., in both models, the latent variables have the same prior distribution, instead of (vi) .

Then, it is easy to show that in the first case

and in the second case

Basing on identities (

25) and (

26), the following estimators of the Bayes factors can be formulated:

where

are drawn from

,

where

,

are drawn from

and

, respectively.

2.3. Different Conditional Sampling Distributions of the Vector of Observations

Now we assume that conditions (i)–(iv), (vi), and (vii) hold. Thus, the conditional distribution of the observable vector can be different, but the prior distributions for vector of latent variables and common parameters are the same in both competing models. Then, it is easy to show that

and for the reciprocal of this Bayes factor we have

Similarly to the above, basing on identities (

29) and (

30), the following estimators of the Bayes factors can be formulated:

where

are drawn from

, and

where

,

are drawn from

and

, respectively.

Note that the estimators (

19), (

22), (

27), (

28), (

31), and (

32) are based on the arithmetic mean of the ratio of densities times the indicator function of an arbitrary subset of the space of model parameters and latent variables. Additionally, this arithmetic mean is corrected by the reciprocal of the posterior probability of the subset.

3. Simulation Study

In this section, we present a simple simulation study for models with latent variables in which, after having integrated out latent variables analytically, the true values of the marginal likelihoods can easily be assessed using, e.g., the corrected arithmetic mean estimator (CAME [

25]). These assessments will be applied to obtain estimates of Bayes factors used as benchmark values for evaluation of the performance of the new method proposed.

Let us consider the Student t model

where

denotes the Student t distribution with mode

, precision 1, and

v degrees of freedom.

denotes the Normal distribution with mean

and variance

, in turn,

stands for the Exponential distribution with mean

and variance

. The symbol

iid stands for independent and identically distributed. Thus, the random variables

are independent and have the same Student t distribution.

The Student t distribution can be expressed as a scale mixture of Gaussian distributions by introducing a random variable

that is inverse-gamma distributed. The model can be written as

where

denotes the gamma distribution with shape

and rate

.

To simulate datasets we generated samples of size

, data points from model (

33) with

, and

.

In turn, to simulate from the posterior distribution the Gibbs algorithm was used and run for 20,000 iterations. The conditional posterior distribution for

is Normal, i.e.,

where

, while the conditional posterior distribution for

is Gamma:

An easy computation shows that the conditional posterior distribution of

v is nonstandard:

where

.

However, reliable numerical methods for generating from this distribution do exist. We use one of them, the rejection sampling proposed by [

27].

In the model under consideration,

,

, and

; thus, the number of latent variables is equal to the number of observations: 500, 1000, and 2000, respectively. The direct computation of the marginal likelihood for model (

34) is intensive and unstable due to the presence of latent variables. However, by integrating out the latent variables from the joint distribution of parameters, latent variables, and data,

, the conditional density of the data given parameters only can be written in the following form:

Unfortunately, the marginal density of the data (i.e.,

cannot be presented in closed form. However, due to the lack of latent variables as well as thanks to the small number of parameters, the marginal data density value can easily and precisely be evaluated by using the corrected arithmetic mean estimator (CAME [

25]). Estimates obtained by the use of the CAME are treated as benchmark values.

In order to show performance of our new estimators of the Bayes factor, we assume that the subset

D is an intersection of the space of parameters and latent variables, obtained from the condition that the ratio of conditional density functions

and

is between the lowest and the highest values of the ratio evaluated on the basis of pseudo-random sample

in both models, and the hyper-cuboid limited by the range of the sampler output, i.e.,

where

In the restricted models (

, it is assumed that

, whereas in the unrestricted model (

)

. The ratio of density functions of the conditional distributions of the observable vector is as follows:

In

Table 1,

Table 2 and

Table 3, we present results obtained by using newly proposed estimators of Bayes factors (i.e., the corrected arithmetic means of the ratio of the densities of the conditional distributions of the observable vector,

and

and uncorrected arithmetic means of the ratios

and

.

Table 1,

Table 2 and

Table 3 present means, standard deviations, root mean square errors and average errors (relative to the CAM estimates) of the decimal logarithm of estimates obtained in models under consideration. As mentioned earlier, closed-form expressions for the marginal likelihoods do not exist. Therefore, the root mean square errors and average errors are determined relative to the CAM estimates obtained for each marginal likelihood separately.

To estimate

,

, and

, we use simulation from appropriate posterior or prior distributions, e.g.,

where

are drawn from the posterior distribution

. The remaining probabilities are calculated in a similar manner. Furthermore, we consider uncorrected arithmetic means of the ratios

and

to investigate sampling properties of these estimators.

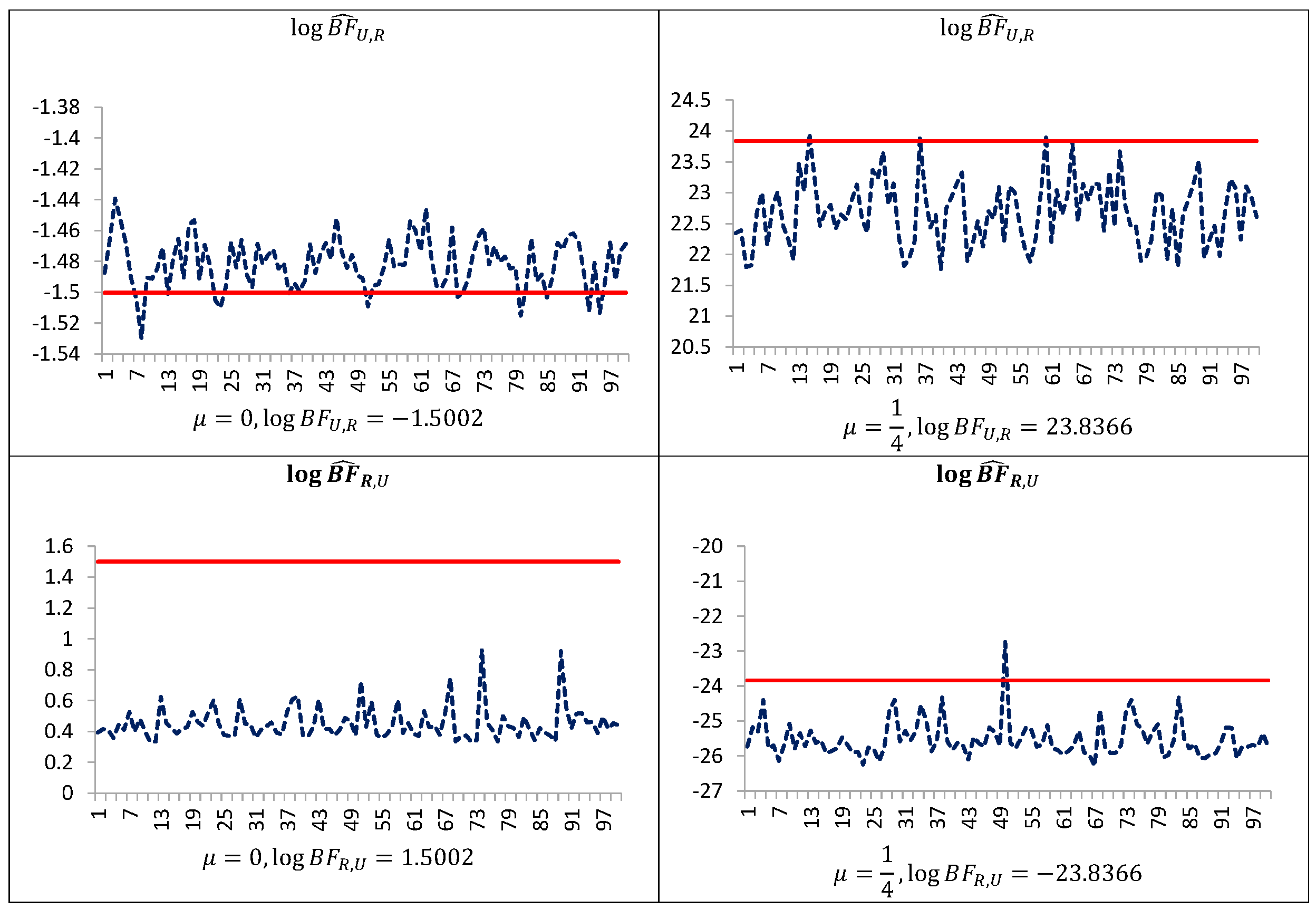

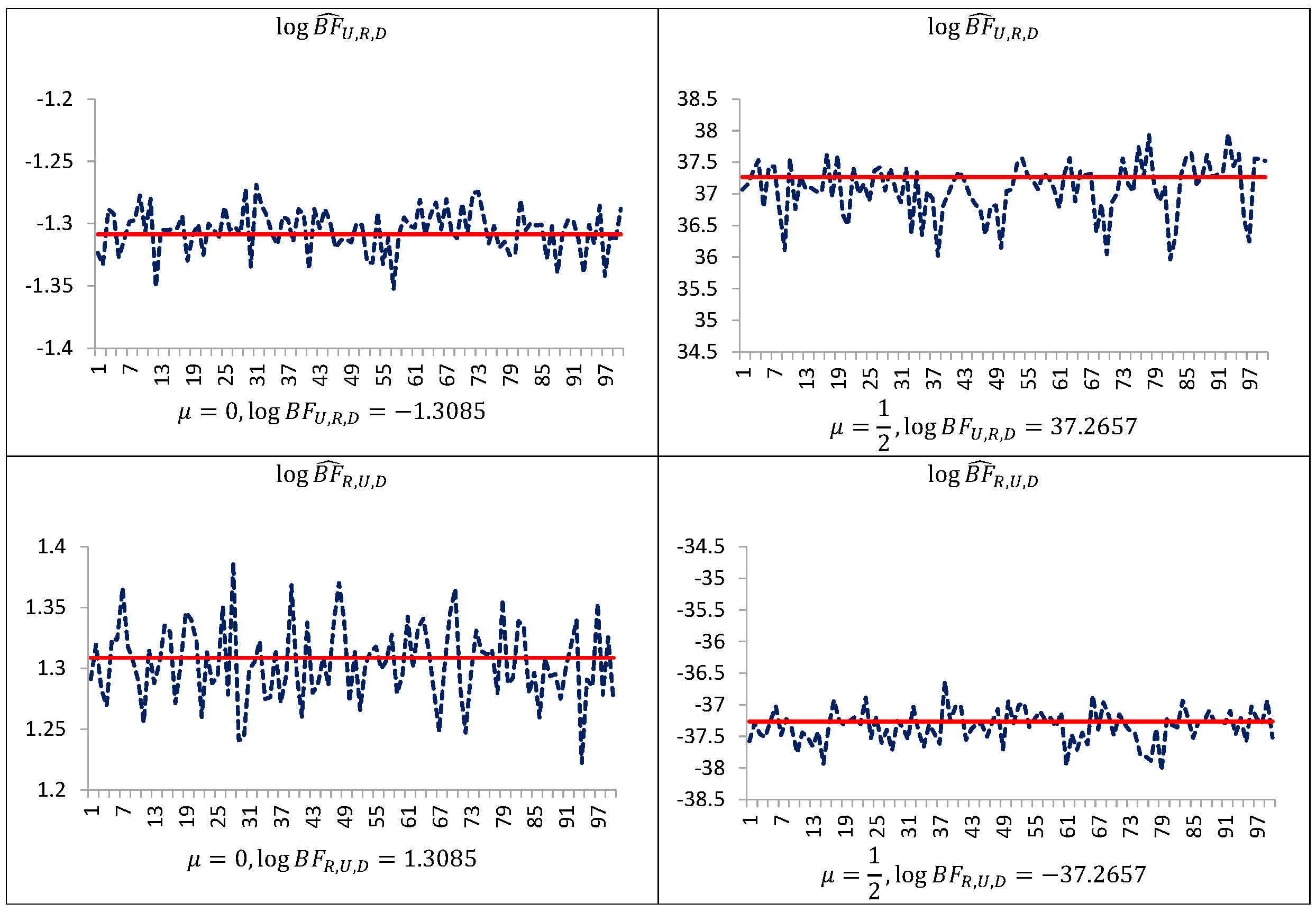

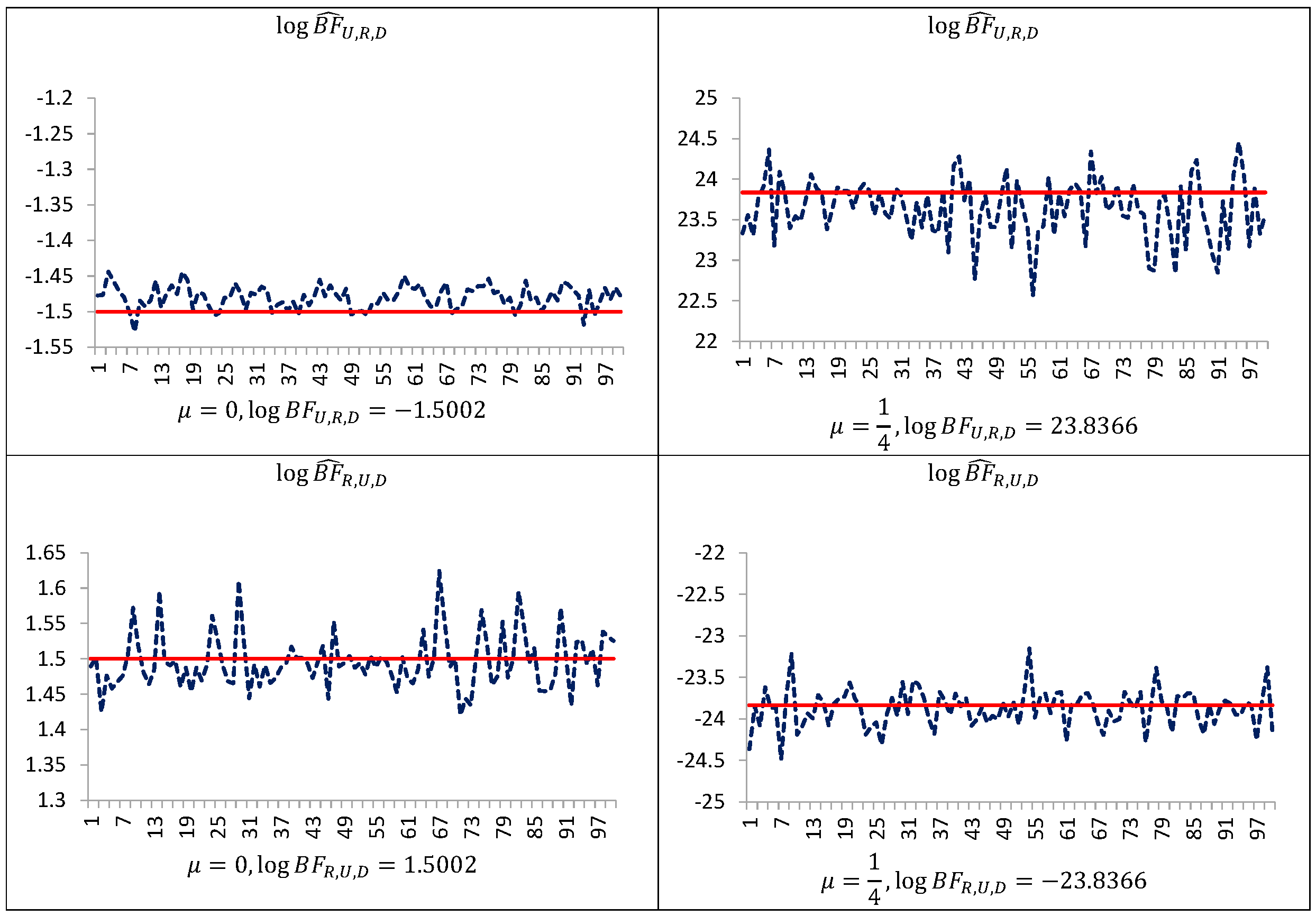

Figure 1 indicates that even in such simple models performance of the uncorrected estimators are not satisfactory. The estimator

is downwardly “pseudo-biased” (respective average errors are negative). On the other hand, our simulation study demonstrates that the performance of the estimators

and

can be improved by trimming the posterior simulation support to a given subset of the space of latent variables and parameters, and next making the correction of the arithmetic mean of the ratios using the posterior probabilities of the subset. As can be seen from

Table 1,

Table 2 and

Table 3 and

Figure 1,

Figure 2 and

Figure 3, corrected estimators of the Bayes factors perform very well in comparison to uncorrected ones. The estimators

and

produce estimates which are very close to those obtained on the basis of the CAME, while the estimators

and

, based on uncorrected arithmetic mean, provide biased estimates.

Finally, note that by using both estimators for the ratio of densities and their reciprocals, i.e., and , one can check the accuracy of assessments and of adequate (from numerical point of view) selection of the subset D. This is because, roughly speaking, the equality implies that it is natural to use and to estimate . Different estimates for can indicate numerical inadequacy of selection of the subset D and/or too small simulation sample.

4. Empirical Illustration: Formal Bayesian Comparison of Hybrid MSV-MGARCH Models

In this section, the new method will be applied in order to formally compare the hybrid Multivariate Stochastic Volatility–Multivariate Generalized Autoregressive Conditional Heteroskedasticity (MSV-MGARCH) models and thus to show that it can be used in practice. The hybrid MSV-MGARCH models were proposed in [

28,

29,

30] for modeling financial time series. These hybrid models are characterized by relatively simple model structures that exploit advantages of both model classes: flexibility of the Multivariate Stochastic Volatility (MSV) class, where volatility is modeled by latent stochastic processes, and relative simplicity of the Multivariate GARCH (MGARCH) class. The simplest specification of MSV-MGARCH model (called LN-MSF-SBEKK) is based on one latent process (Multiplicative Stochastic Factor (MSF)) and on the scalar BEKK [

31] covariance structure. The LN-MSF-SBEKK structure is obtained by multiplying the SBEKK conditional covariance matrix

by a scalar random variable

such that

is a Gaussian AR(1) latent process with autoregression parameter

. The hybrid LN-MSF-SBEKK specification has been recognized in the literature [

32,

33,

34,

35] and proved to be useful in multivariate modeling of financial time series as well as of macroeconomic data [

36,

37,

38,

39,

40,

41]. The drawback of the LN-MSF-MGARCH process is that it cannot be treated as a direct extension of the MGARCH process with the Student t conditional distribution. When

, the LN-MSF-SBEKK process reduces itself to the SBEKK process with the conditional distribution being a continuous mixture of multivariate normal distributions with covariance matrices

and

log-normally distributed. However, the multivariate Student t distribution can be expressed as a scale mixture of normal distributions with the inverted gamma as a mixing distribution. This fact was exploited in [

42,

43], where the IG-MSF-SBEKK specification was proposed as a natural hybrid extension of the SBEKK process with the Student

t conditional distribution (t-SBEKK). In the new specification, the latent process

remains an autoregressive process of order one, but it is non-Gaussian. For

the latent variables

(where

) are independent and have inverted gamma (IG) distribution. Unfortunately, for

the unconditional distribution of the latent variables

is unknown. To summarize, the IG-MSF-SBEKK model is obtained by multiplying the SBEKK conditional covariance matrix

by a scalar random variable

coming from such latent process (with autoregression parameter

) that, for

,

has an inverted gamma distribution. Thus,

leads to the t-SBEKK specification, in which the conditional distribution is represented as a continuous mixture of multivariate normal distributions with covariance matrices

, where

is inverted gamma distributed. If

, the latent variables

(

) are dependent, so (in comparison to the t-SBEKK model) in the IG-MSF-SBEKK model there exists an additional source of dependence.

Let us consider a two-dimensional autoregressive process

defined by the equation

where

and

are, respectively,

and

matrix parameters, and

T is the length of the observed time series. The hybrid MSF-MGARCH specification for the disturbance term

is defined by the following equality:

where

is a Gaussian white noise sequence with mean vector zero and unit covariance matrix;

is a sequence of independent positive random variables;

is inverted gamma distributed with mean

for

, i.e.,

,

for all

,

;

and

are positive scalar parameters such that

; and

A is a free symmetric positive definite matrix of order 2. Under (

41) and (

42), the conditional distribution of

(given the past of

and the current latent variable

is Normal with mean vector

and covariance matrix

. For

(this value is excluded in the definition of the hybrid models under consideration)

, so the distribution of

is an inverted gamma. In this case,

in (

41) is, given its past, an IG mixture of two-variate normal distributions

, i.e., it has the two-variate Student t distribution.

The assumptions presented so far determine the conditional distribution of the observations and latent variables given the parameters. Thus, it remains to formulate the prior distributions of parameters. We use the same prior distributions as in [

42,

43]. Six elements of

are assumed to be a priori independent of other parameters, with the

prior. Matrix

A has an inverted Wishart prior distribution such that

has the Wishart prior distribution with mean

; the elements of

are jointly uniformly distributed over the unit simplex. As regards the initial conditions for

, we take

and treat

as an additional parameter, a priori exponentially distributed with mean 1;

has the uniform distribution over (−1, 1), and for

v we assume the gamma distribution with mean

, truncated to

. We assume that

with two cases:

and

(note that

leads to exponential distribution for

v).

Furthermore, we use the same bivariate data sets as those modeled in [

30,

42,

43]. The first data set consists of the official daily exchange rates of the National Bank of Poland (NBP fixing rates) for the US dollar and German mark in the period 1 February 1996–31 December 2001. The length of the modeled time series of their daily growth rates (logarithmic return rates) is 1482. The second data set consists of the daily quotations of the main index of the Warsaw Stock Exchange (WIG) and the S&P500 index of NYSE—1727 logarithmic returns are modeled from the period 8 January 1999–1 February 2006.

In order to obtain pseudo-random sample from the posterior distribution of parameters and latent variables, we use MCMC simulation techniques described in [

42,

44] and implemented within the GAUSS programming environment.

Using fully Bayesian methods, we want to answer the question whether the IG-MSF-SBEKK model can be reduced to the t-SBEKK case. Thus, we consider the hypothesis that a scalar parameter (the t-SBEKK model, and the alternative hypothesis that (the IG-MSF-SBEKK model, . For the exchange rate data, the posterior probability that is approximately only and is included in the highest posterior density (HPD) interval of probability content as high as . Thus, Lindley’s procedure leads to the conclusion that the t-SBEKK is inadequate. But for the stock data, the posterior probability that is for and for , and is included in the HPD interval of probability content or , depending on the prior hyperparameter . In the case of the stock data, Lindley’s testing procedure yields results that the t-SBEKK model cannot be rejected by the data.

Now, our new estimators of the Bayes factors will be used. We start with the assumption that the subset

D is an intersection of the subspace of parameters and latent variables, obtained by the condition that the ratio of conditional density functions

and

is between the lowest and the highest values of the ratio evaluated at pseudo-random sample

in both models, and the hyper-cuboid limited by the range of the sampler output, i.e.,

, where

The ratio of the densities of the conditional distributions of the vector of latent variables is as follows:

In

Table 4, we present results obtained by using newly proposed estimators of Bayes factors—the corrected arithmetic means of the ratio of the densities of the conditional distributions of latent variables,

and

; the subscript

h is omitted for convenience. Results obtained on the basis of our new method confirm that in the case of exchange rates, the t-SBEKK model is strongly rejected by the data, whereas it seems that the t-SBEKK specification can be adequate for stock indices. Under equal prior model probabilities, the IG-MSF-SBEKK model for exchange rates is about 14–15 times more probable

a posteriori than the t-SBEKK model, indicating a strong (but not very strong) evidence against the t-SBEKK model. In turn, for stock indices the decimal logarithm of the Bayes factor of t-SBEKK relative to IG-MSF-SBEKK depends on prior distribution of the number of degrees of freedom,

v. The Bayes factor in favor of t-SBEKK is equal to about

and

in models with

and

, respectively. Of course, according to scale of Kass and Raftery (1995) these results indicate evidence for a given model that is negligible: “not worth more than a bare of mention”.

For the sake of comparison, in the last row of the

Table 4 the estimates of the Savage–Dickey ratio is presented. The marginal posterior density of

is evaluated at

on the basis of the histogram of the sampled values of

from the posterior distribution. The main conclusions pertaining to the validity of the reduction of the IG-MSF-SBEKK model to the t-SBEKK one are very similar.

Additionally, it is very important to stress that our method makes it possible to compare IG-MSF-SBEKK models whose prior distributions are different (e.g., in respect of the number of degrees of freedom). As can be seen from

Table 5, for exchange rates the IG-MSF-SBEKK and t-SBEKK models with exponential distribution for

v are more probable a posteriori than those with the gamma distribution for

v with the hyperparameter

. In contrary, for stock indices the IG-MSF-SBEKK model with the hyperparameter

is more probable a posteriori than the same model with

, but this improvement is negligible.