1. Introduction

Image segmentation has been one of the most challenging problems in image processing and computer vision for the last three decades. It is different from image classification, as an image classification algorithm has to classify the object which has a particular label, but an ideal image segmentation algorithm has to segment even the unknown objects [

1]. Good image segmentation is expected to have uniform and homogeneous segmented regions, but achieving a desired segmented image is a challenging task [

2]. Numerous image segmentation algorithms have been developed and reported in the literature. The traditional image segmentation algorithms are generally based on clustering technology, and the Markov model is one of the most well-known approaches. In general, the traditional image segmentation algorithms based on digital image processing and mathematical morphology are susceptible to noise, and require a lot of interaction between a human and computer for accurate segmentation [

3,

4,

5,

6,

7,

8,

9,

10,

11,

12,

13]. Amongst image segmentation algorithms, Deep Learning (DL)-based image segmentation has created a new generation of image segmentation models, and surpassed traditional image segmentation methods in many aspects [

14,

15]. DL-based image segmentation aims to predict a category label for every image pixel, which is an important yet challenging task [

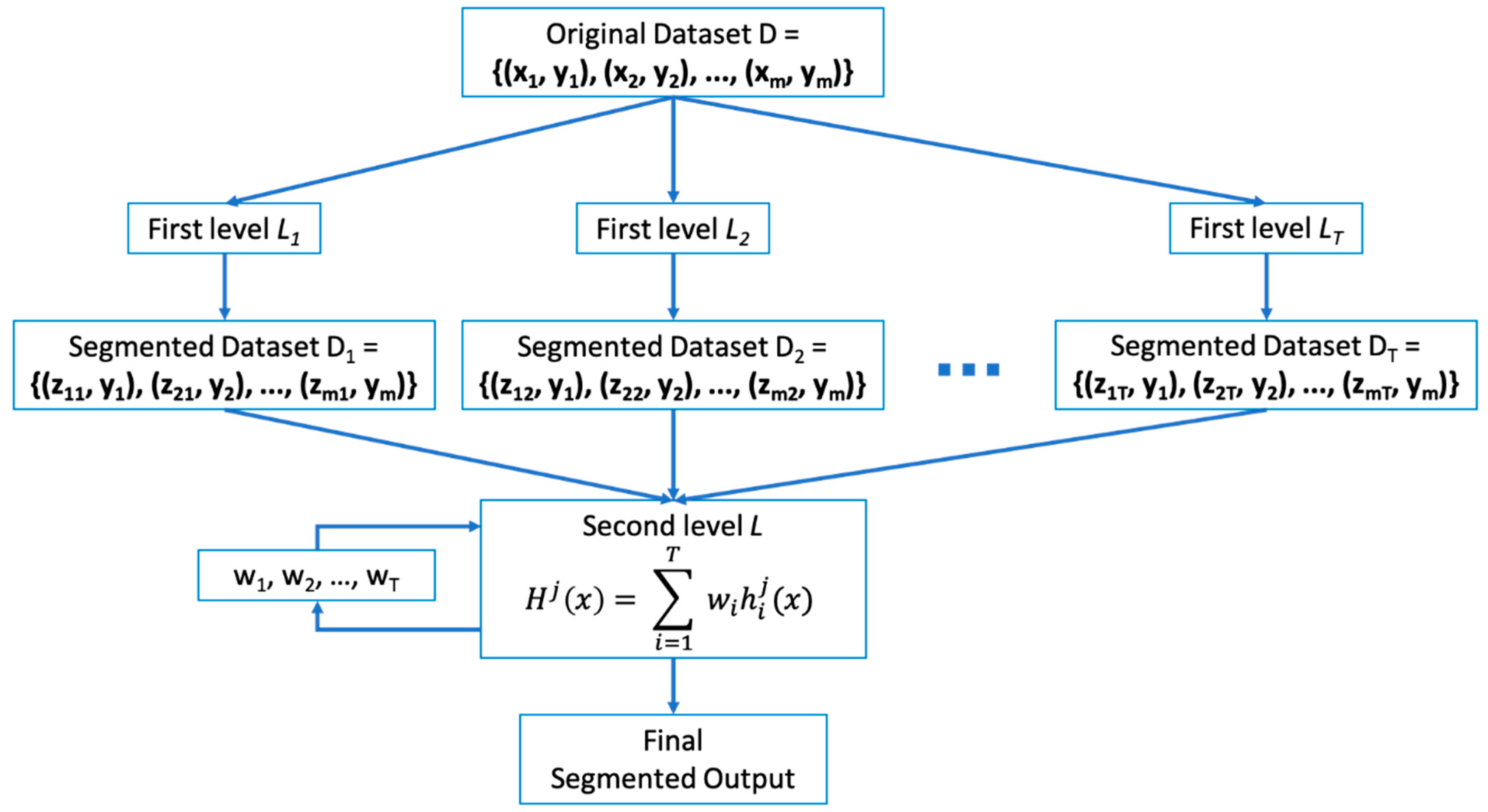

16]. Ensemble methods can be used for improving prediction performance, and the idea of ensemble method is to build an effective model by integrating multiple models [

17]. Model averaging is a general strategy amongst many ensemble methods in machine learning, and its idea is to train several different models separately, and then all the models vote on the output of each model. It is known that neural networks provide a wide variety of solutions, and can benefit from model averaging, even if all of the models are trained on the same dataset [

18].

Integrating multiple models to enhance prediction performance has been studied and analyzed by several researchers in machine learning: Breiman explained a method to improve accuracy by combining several models [19]; Warfield et al. presented an algorithm for combining segmentations and estimating segmentation performance [20]; Rohlfing et al. introduced a new way to combine multiple segmentations and enhance performance [21]; Hansen and Salamon suggested that an ensemble of similarly functioning and similarly configured networks improved on the predictive performance of an individual network [22]; Singh et al. reported that ensemble segmentation resulted in better performance than individual segmentation [23]; Yan Zou et al. introduced a rapid ensemble learning method that combines deep convolutional networks and random forests to reduce learning time using limited training data [24]; and Andrew Holliday et al. suggested a model compression technique to speed up DL segmentation using an ensemble. The authors combined the strengths of different architectures while maintaining the real-time performance of segmentation [25]. Ishan Nigam et al. proposed ensemble knowledge transfer to improve aerial segmentation. The authors trained multiple models by progressive fine-tuning and combined the collections of models to improve performance [26]. D. Marmanis et al. suggested an ensemble approach using Fully Convolutional Network (FCN) models for the semantic segmentation of aerial images. That work showed that ensembles of several networks achieve excellent results [27]. Machine learning contests are usually won by methods using model averaging, for example the Netflix Grand Prize [28]. Y. W. Kim et al. introduced an ensemble approach that combines three DL-based portrait segmentation models, and showed a significant improvement in segmentation accuracy [29].

Semantic image segmentation plays a central role in a broad range of applications such as medical image analysis, autonomous vehicles, video surveillance and Augmented Reality (AR) [14,30]. Portrait segmentation, which is a subset of semantic image segmentation, is generally used to segment a human’s upper body in an image, but is not necessarily limited to the upper body. The use of portrait segmentation technology is growing with the popularity of “selfies” (self-portrait photographs). Portrait segmentation is widely used as a pre-processing step in multiple applications such as security systems, entertainment applications, video conferencing and AR. In portrait segmentation, the precise segmentation of the human body is crucial but challenging [31]. A substantial number of DL-based portrait segmentation approaches have been developed, since the performance and accuracy of semantic image segmentation have improved significantly with the recent introduction of DL technology [32,33,34,35,36,37,38,39,40,41]. However, studies related to these approaches focus on a single portrait segmentation model.

In this paper, we propose a novel approach that uses an ensemble method to combine multiple heterogeneous DL-based portrait segmentation models. The experimental results show that the proposed approach can improve performance over single DL-based portrait segmentation models. The major contributions of this research are as follows:

- (1) A novel approach of combining multiple heterogeneous DL-based portrait segmentation models was introduced. To the best of our knowledge, there are no reported works on portrait segmentation using an ensemble of multiple heterogeneous DL models.

- (2) The efficiency rate of memory and computing power was measured to evaluate the efficiency of portrait segmentation models. We attempted to measure the cost efficiency of five state-of-the-art DL-based portrait segmentation models and of the proposed ensemble approach. To the best of our knowledge, and based on our survey of the relevant literature, there are no reported works measuring cost efficiency for DL-based portrait segmentation models.

- (3) Intersection over Union (IoU), IoU standard deviation, False Negative Rate (FNR), False Discovery Rate (FDR), FNR + FDR and |FNR - FDR| were used to measure the accuracy, variance and bias error of segmentation results. We used the IoU standard deviation to measure the variance error of the evaluated models, FNR + FDR to measure the bias error and |FNR - FDR| to measure the balance of the bias error. This combination of metrics is a new approach to evaluating the performance of DL-based portrait segmentation models.
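The metrics listed in contribution (3) can be illustrated with a minimal sketch. It assumes binary masks and the standard confusion-matrix definitions IoU = TP / (TP + FP + FN), FNR = FN / (TP + FN) and FDR = FP / (TP + FP). The function names, the flat-list mask representation, the handling of empty masks and the use of the population standard deviation are illustrative assumptions, not details taken from this paper.

```python
from statistics import pstdev


def confusion_counts(pred, truth):
    """Count TP, FP and FN over two flat binary masks (1 = portrait pixel)."""
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    return tp, fp, fn


def segmentation_metrics(pred, truth):
    """Return IoU, FNR, FDR, FNR + FDR and |FNR - FDR| for one image."""
    tp, fp, fn = confusion_counts(pred, truth)
    # Assumption: two empty masks count as a perfect match.
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 1.0
    fnr = fn / (tp + fn) if (tp + fn) else 0.0  # missed portrait pixels
    fdr = fp / (tp + fp) if (tp + fp) else 0.0  # wrongly predicted portrait pixels
    return {
        "iou": iou,
        "fnr": fnr,
        "fdr": fdr,
        "fnr_plus_fdr": fnr + fdr,       # used in the text as the bias error
        "fnr_fdr_gap": abs(fnr - fdr),   # used in the text as the balance of bias error
    }


def iou_std(pairs):
    """Standard deviation of per-image IoU over (pred, truth) pairs.

    Assumption: population standard deviation; the sample version may be
    the appropriate choice instead.
    """
    return pstdev([segmentation_metrics(p, t)["iou"] for p, t in pairs])


# Toy example: 6-pixel masks with TP = 2, FP = 1, FN = 1.
pred = [1, 1, 1, 0, 0, 0]
truth = [1, 1, 0, 1, 0, 0]
print(segmentation_metrics(pred, truth)["iou"])  # 0.5
```

In this sketch a low FNR + FDR means few wrong pixels overall, while a low |FNR - FDR| means that missed pixels and spurious pixels occur at similar rates.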

In addition to these major contributions, the minor contributions of our research work are presented below:

- (4)

Six state-of-the-art DL-based portrait segmentation models were experimented on and compared.

- (5)

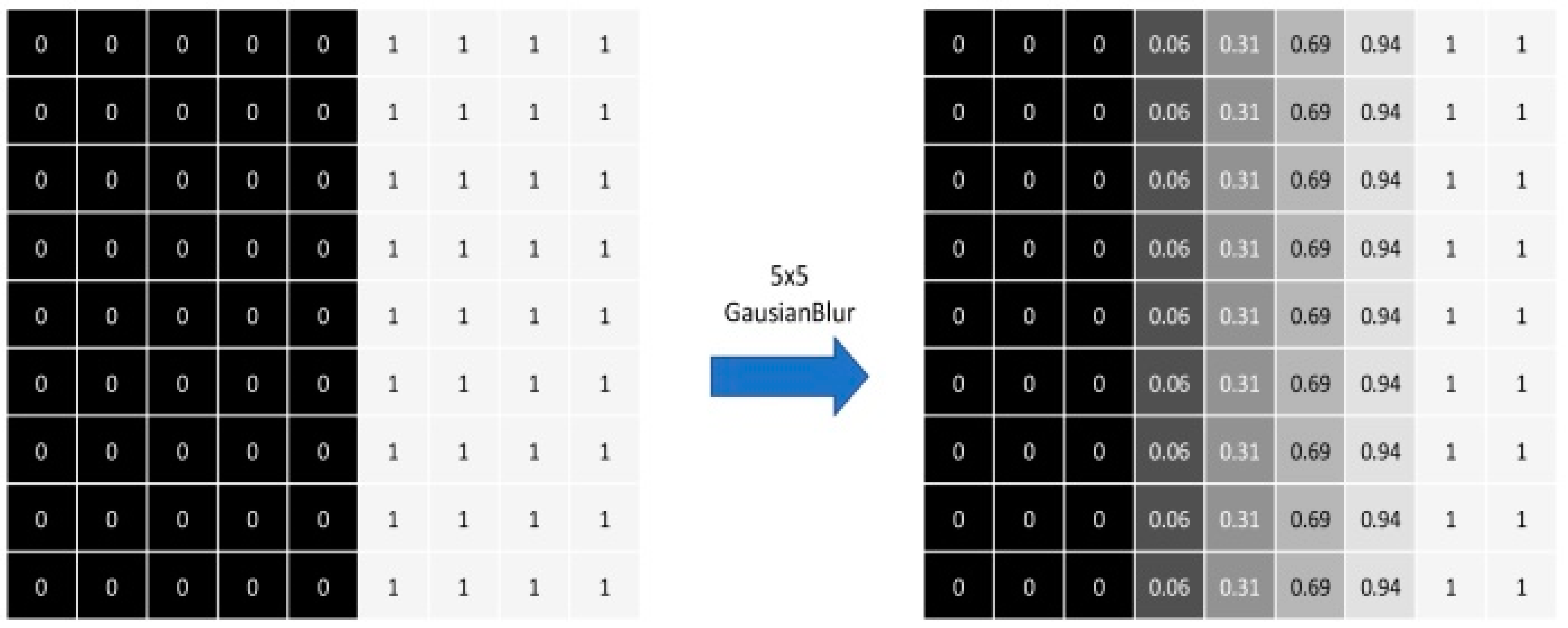

The Simple Soft Voting method and Weighted Soft Voting method were used to make an ensemble of individual portrait segmentation models.

- (6)

A simple and efficient way to combine the output results of individual portrait segmentation models was introduced.

- (7)

A quantitative experiment was used to evaluate the performance of portrait segmentation models.
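The two voting schemes named in contribution (5) can be sketched as follows. This is an illustration under stated assumptions, not the implementation used in the experiments: each model is assumed to output a per-pixel foreground probability, the fused probability is the (weighted) mean across models, and the final mask is obtained by thresholding. The function name `soft_vote`, the 0.5 threshold and the example weights are hypothetical.

```python
def soft_vote(prob_maps, weights=None, threshold=0.5):
    """Fuse per-model foreground probabilities by (weighted) averaging.

    prob_maps: one flat list of probabilities per model, all the same length.
    weights:   one non-negative weight per model; None means simple soft
               voting, i.e. equal weights.
    Returns (fused probabilities, binary mask).
    """
    if not prob_maps:
        raise ValueError("at least one model output is required")
    if weights is None:
        weights = [1.0] * len(prob_maps)
    if len(weights) != len(prob_maps):
        raise ValueError("one weight per model is required")
    n_pixels = len(prob_maps[0])
    if any(len(pm) != n_pixels for pm in prob_maps):
        raise ValueError("all probability maps must have the same size")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")

    fused = [
        sum(w * pm[i] for w, pm in zip(weights, prob_maps)) / total
        for i in range(n_pixels)
    ]
    mask = [1 if p >= threshold else 0 for p in fused]
    return fused, mask


# Toy example: three models, three pixels (values are made up).
model_a = [0.9, 0.3, 0.2]
model_b = [0.7, 0.7, 0.1]
model_c = [0.2, 0.6, 0.6]

_, simple_mask = soft_vote([model_a, model_b, model_c])
_, weighted_mask = soft_vote([model_a, model_b, model_c], weights=[3, 1, 1])
print(simple_mask)    # [1, 1, 0]
print(weighted_mask)  # [1, 0, 0]
```

In the toy example the second pixel flips from foreground to background once the first model is given a larger weight, which shows how weighting lets a more trusted model dominate disputed pixels.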

Section 2 gives a brief introduction to portrait segmentation and the soft voting method. Section 3 explains the portrait segmentation models used in the experiment, the concept of an ensemble, the approach to combining portrait segmentation models, the evaluation metrics and the experiment environment. Section 4 presents the results of the experiment, followed by a brief discussion of the results in Section 5. The summary of the experiment and the contributions of this work are covered in Section 6.

6. Conclusions

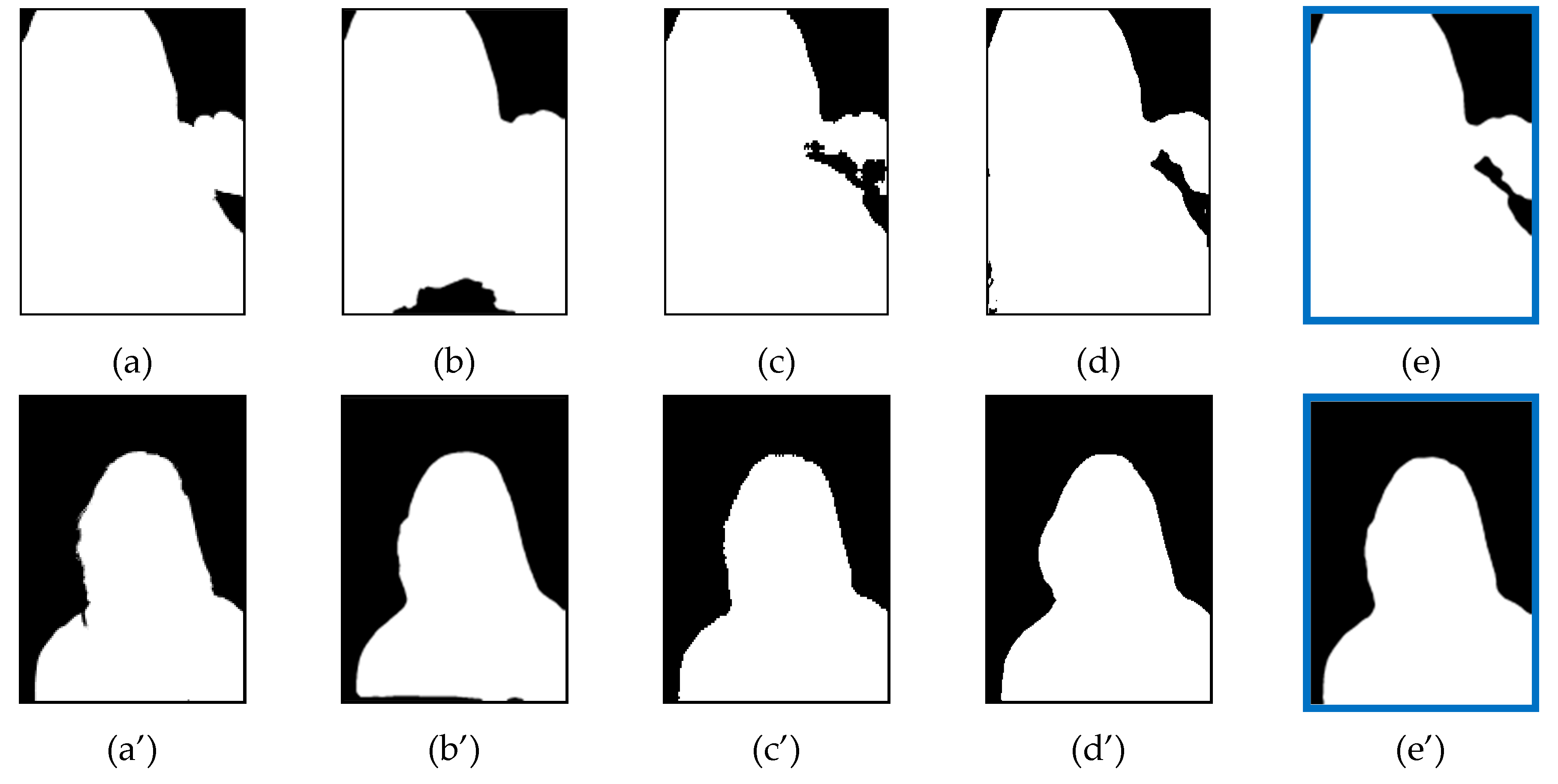

In this paper, simple and efficient ensemble approaches for portrait segmentation using six state-of-the-art DL-based portrait segmentation models were proposed, and the experimental results of the Two-Models and Three-Models ensembles were presented and analyzed. The simple soft voting method and the weighted soft voting method were used as a Meta-Classifier in a stacking ensemble to combine the individual portrait segmentation models. The images from the validation set of EG1800 and the CDI dataset were used for the experiment. The IoU metric was used to evaluate the accuracy of the single models and the proposed ensemble approach. The IoU standard deviation and false prediction rate were analyzed to evaluate the improvement in variance and bias errors. The efficiency rate was analyzed to evaluate the efficiency of the single models and the proposed ensemble approach. The experimental results showed that the IoU value, IoU standard deviation and false prediction rate of the single models were significantly improved by the ensemble. The ensemble using the weighted soft voting method showed better accuracy than the one using the simple soft voting method. It was also observed that the Three-Models ensemble showed better results than the Two-Models ensemble in terms of accuracy, variance and bias errors. The analysis of cost efficiency showed that an ensemble of DL-based portrait segmentation models typically increased the use of memory and computing power. However, it also showed that an ensemble of DL-based portrait segmentation models can be more efficient than a single DL-based portrait segmentation model, achieving higher accuracy while using less memory and less computing power.

In this paper, we evaluated six state-of-the-art DL-based portrait segmentation models and compared them with the proposed ensemble approach. We also introduced methods to improve the performance of DL-based portrait segmentation models using the ensemble approach, as well as methods to evaluate the performance of DL-based portrait segmentation models and their ensembles. We hope these findings will benefit other researchers.