Distributed Learning for Dynamic Channel Access in Underwater Sensor Networks

Abstract

1. Introduction

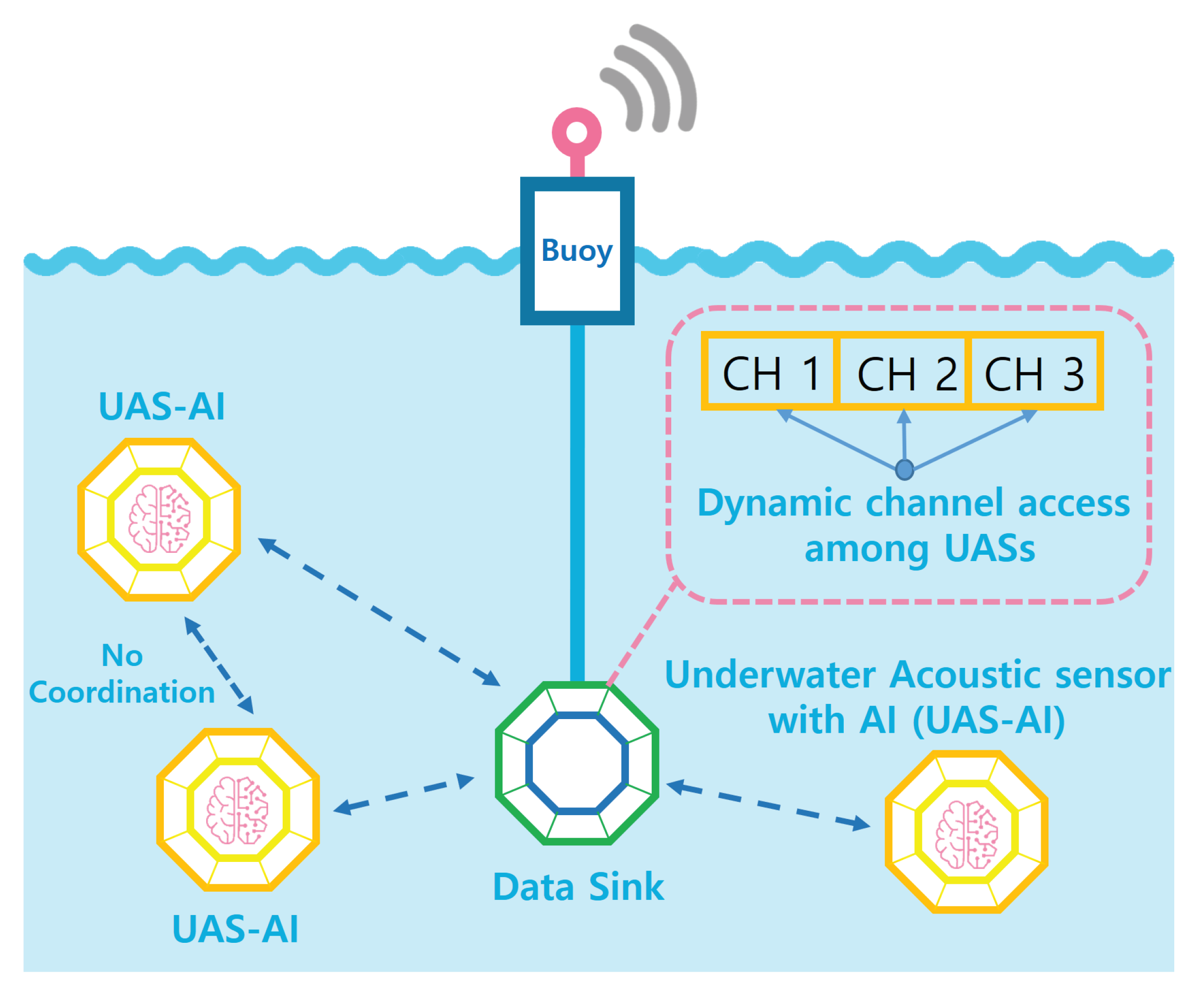

- We formulate the dynamic channel access problem in underwater acoustic sensor networks (UASNs) as a multi-agent Markov decision process (MDP), wherein each underwater sensor is an agent whose objective is to maximize the total network throughput without coordinating or exchanging messages with the other underwater sensors.

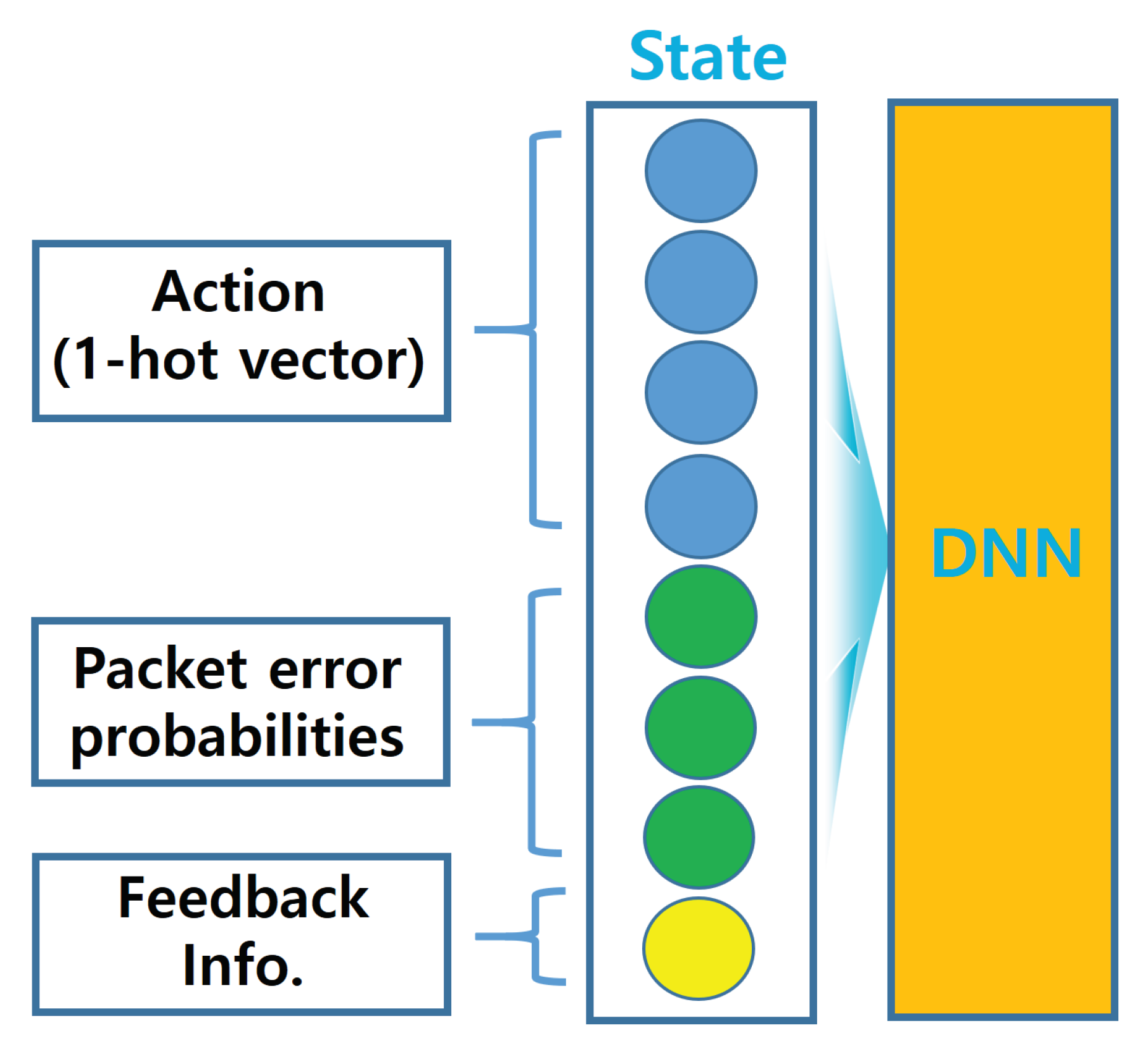

- We propose a dynamic channel access algorithm for UASNs based on deep Q-learning. In the proposed algorithm, each agent (i.e., underwater sensor) exploits only partial information, namely the feedback information between a data sink and that particular underwater sensor, instead of complete information on the actions of all other agents. From this feedback, the agent learns not only the behaviors (i.e., actions) of the other sensors but also the physical features of its available acoustic channels, i.e., their channel error probability (CEP). This property ensures that each underwater sensor can implement the proposed algorithm in a distributed manner, i.e., there is no need for cooperation between agents.

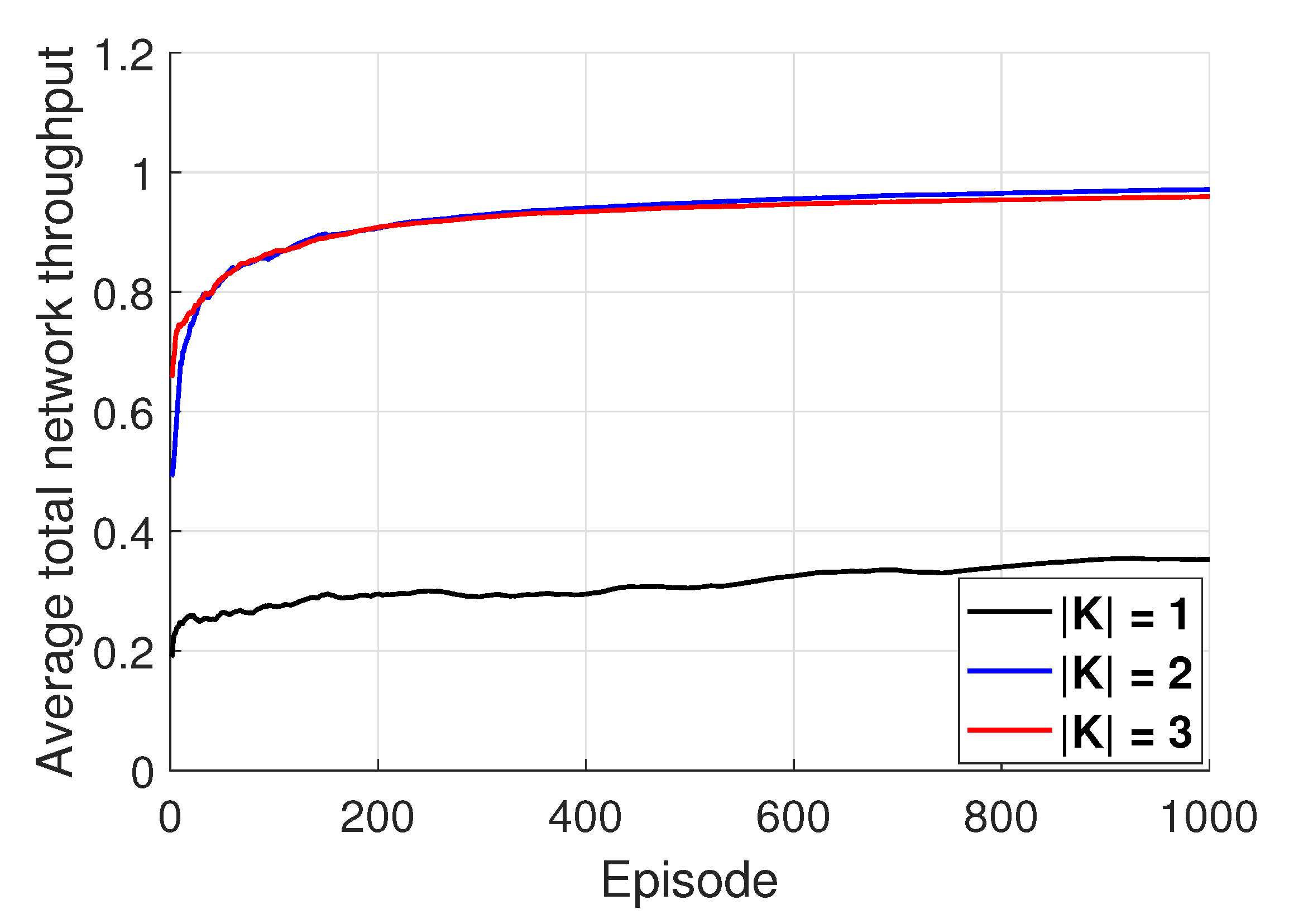

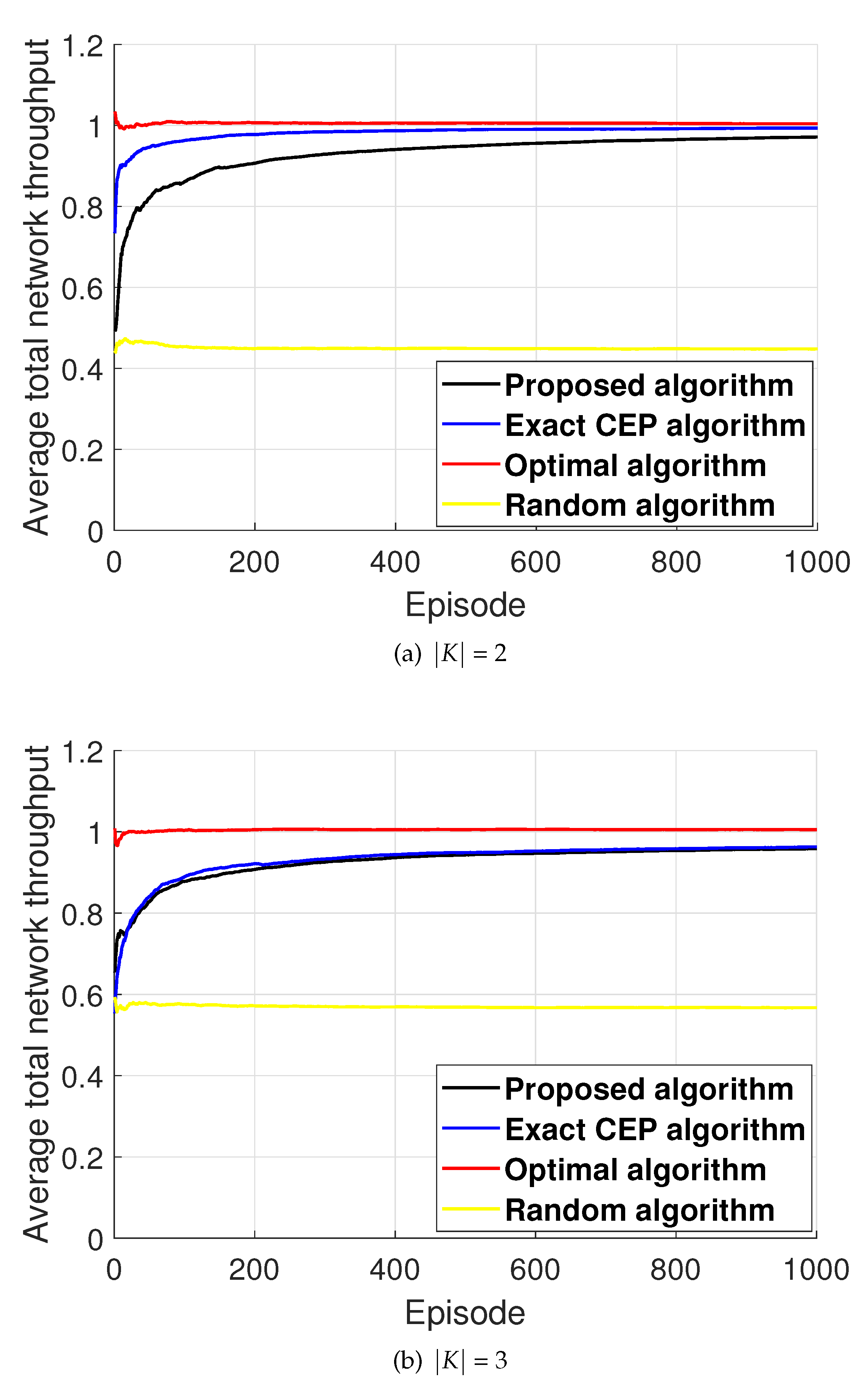

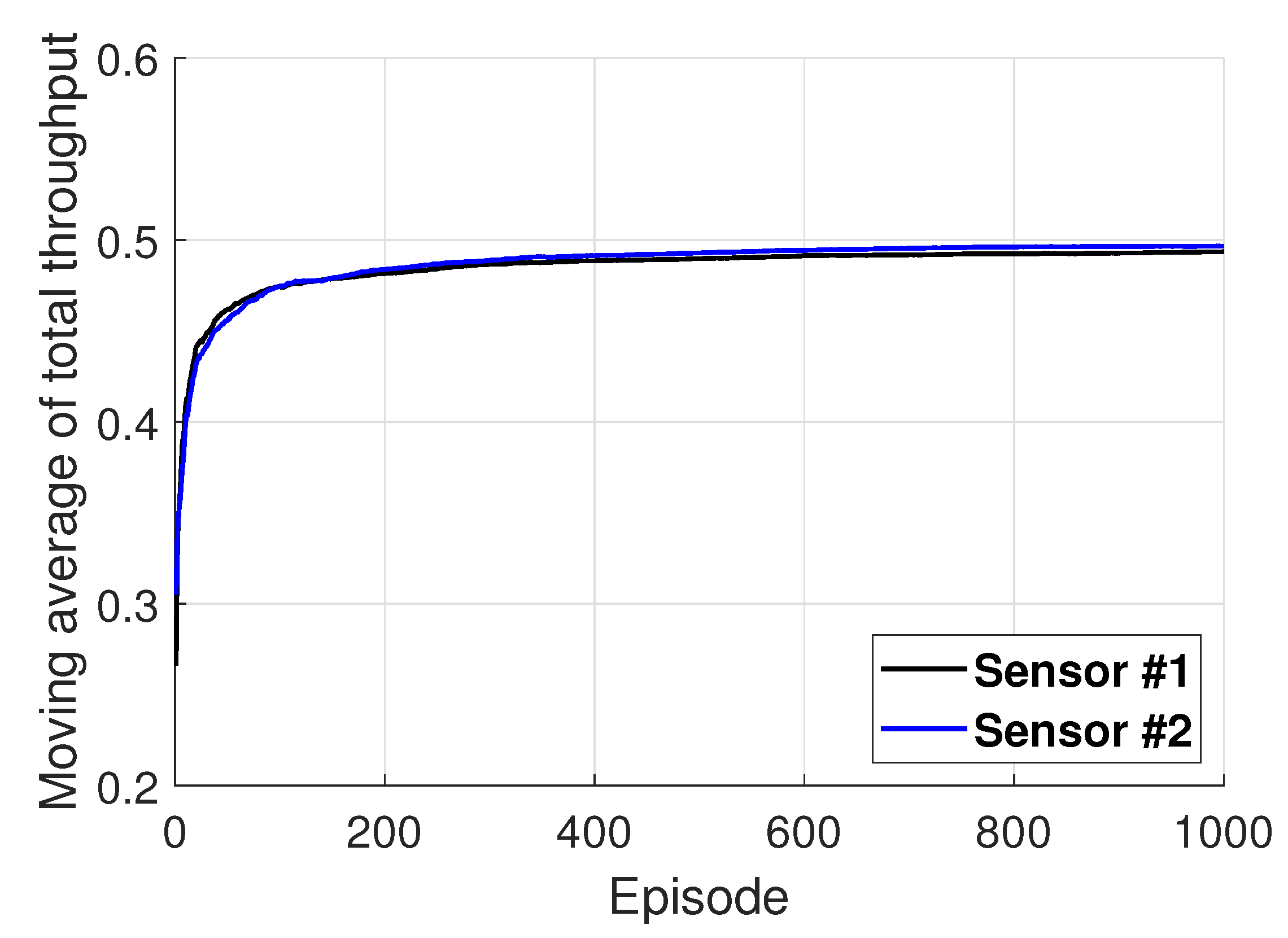

- Through performance evaluations, we demonstrate that the performance gap between the proposed algorithm and the centralized algorithms is small, even though the proposed algorithm is implemented in a distributed manner. Moreover, the proposed algorithm performs much better than the random algorithm.
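To make the notion of partial information in the contributions above concrete, the following minimal sketch simulates one time slot. It rests on assumptions that are not stated in this text: a transmission is taken to succeed only if no other sensor chose the same channel and no channel error occurred, and each sensor receives a single ACK/no-ACK bit from the data sink. The function names `slot_outcome` and `network_throughput` and all CEP values are hypothetical.

```python
import random


def slot_outcome(actions, cep, rng):
    """Return one ACK flag per sensor for a single time slot.

    ASSUMED model (not taken from the paper): a transmission succeeds only
    if no other sensor picked the same channel and no channel error occurs.
    actions[i] is the channel index chosen by sensor i; cep[k] is the
    channel error probability of channel k.
    """
    # Count how many sensors picked each channel.
    counts = {}
    for ch in actions:
        counts[ch] = counts.get(ch, 0) + 1

    acks = []
    for ch in actions:
        collided = counts[ch] > 1
        errored = rng.random() < cep[ch]
        acks.append(not collided and not errored)
    return acks


def network_throughput(acks):
    """Number of successful transmissions in the slot."""
    return sum(acks)


rng = random.Random(0)
# Hypothetical CEP values for three channels.
acks = slot_outcome([0, 1], cep=[0.2, 0.1, 0.3], rng=rng)
# Sensor i only ever observes acks[i]; it never sees the other entries.
print(acks, network_throughput(acks))
```

Under this assumed model a sensor cannot tell from a missing ACK alone whether it collided or hit a channel error, which is why learning both the other sensors' behavior and the CEP from the same feedback is the hard part.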

2. System Model

3. Problem Formulation with MDP

4. Background on Q-Learning and Deep Reinforcement Learning

5. Proposed Algorithm

| Algorithm 1 DQN-based dynamic channel access algorithm for each underwater sensor |
|---|
| 1: Establish a trained DQN with weights and a target DQN with weights |
| 2: Initialize and set |
| 3: In time slot , the agent randomly selects an action a and executes the action, and then observes the reward r and new state |
| 4: Store in replay buffer |
| 5: : |
| 6: for to T do |
| 7: In each time slot t, the agent chooses action by following the distribution described in Equation (11) |
| 8: Execute and observe reward , feedback information and new state |
| 9: Store in replay buffer |
| 10: Update the estimation of corresponding to the chosen action using (2) with feedback information |
| 11: The agent randomly samples a minibatch with Z experiences from replay buffer , and then updates weights for the trained DQN |
| 12: In every predetermined time slot, the agent updates the weights for the target DQN with |
| 13: end for |
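The control flow of Algorithm 1 (replay buffer, minibatch update, periodic target update) can be sketched as follows. This is a dependency-free illustration under several loudly stated assumptions, not the authors' implementation: a linear Q-function stands in for the deep network, plain SGD replaces Adam, epsilon-greedy replaces the distribution of Equation (11), an empirical no-ACK frequency replaces the estimator of Equation (2), and the state encoding, toy environment, learning rate, and target update period are all hypothetical. Only the batch size, replay size, discount factor, and number of channels come from the parameter tables.

```python
import random
from collections import deque

N_CHANNELS = 3             # from the network parameter table
BATCH_Z = 6                # batch size from the hyperparameter table
BUFFER_SIZE = 1000         # experience replay size from the table
GAMMA = 0.99               # discount factor from the table
LR = 0.05                  # ASSUMPTION: learning rate is not given here
EPSILON = 0.1              # ASSUMPTION: epsilon-greedy replaces Eq. (11)
SYNC_EVERY = 50            # ASSUMPTION: target update period
TOY_CEP = [0.5, 0.1, 0.3]  # ASSUMPTION: made-up channel error probabilities


def q_values(weights, state):
    """Linear Q-function, one weight row per action (stand-in for the DQN)."""
    return [sum(w * x for w, x in zip(row, state)) for row in weights]


def encode(action, ack):
    """ASSUMED state: one-hot of the last action plus the last ACK bit."""
    s = [0.0] * (N_CHANNELS + 1)
    s[action] = 1.0
    s[-1] = 1.0 if ack else 0.0
    return s


def toy_step(action, rng):
    """Hypothetical environment: reward 1 on ACK, 0 on channel error."""
    ack = rng.random() >= TOY_CEP[action]
    return (1.0 if ack else 0.0), ack


def run(T=300, seed=0):
    rng = random.Random(seed)
    dim = N_CHANNELS + 1
    # Steps 1-2: trained weights and an identical copy as target weights.
    theta = [[rng.uniform(-0.1, 0.1) for _ in range(dim)]
             for _ in range(N_CHANNELS)]
    theta_target = [row[:] for row in theta]
    buffer = deque(maxlen=BUFFER_SIZE)
    tries, fails = [0] * N_CHANNELS, [0] * N_CHANNELS

    # Steps 3-4: one random action to seed the replay buffer.
    state = [0.0] * dim
    a = rng.randrange(N_CHANNELS)
    r, ack = toy_step(a, rng)
    nxt = encode(a, ack)
    buffer.append((state, a, r, nxt))
    state = nxt

    for t in range(1, T + 1):                       # step 6
        if rng.random() < EPSILON:                  # step 7 (swap-in policy)
            a = rng.randrange(N_CHANNELS)
        else:
            q = q_values(theta, state)
            a = q.index(max(q))
        r, ack = toy_step(a, rng)                   # step 8
        nxt = encode(a, ack)
        buffer.append((state, a, r, nxt))           # step 9
        tries[a] += 1                               # step 10 (swap-in estimate)
        fails[a] += 0 if ack else 1
        batch = rng.sample(list(buffer), min(BATCH_Z, len(buffer)))  # step 11
        for s, b, rew, s2 in batch:                 # per-sample SGD updates
            target = rew + GAMMA * max(q_values(theta_target, s2))
            err = q_values(theta, s)[b] - target
            for i in range(dim):
                theta[b][i] -= LR / len(batch) * err * s[i]
        if t % SYNC_EVERY == 0:                     # step 12
            theta_target = [row[:] for row in theta]
        state = nxt

    cep_hat = [f / n if n else 0.0 for f, n in zip(fails, tries)]
    return theta, theta_target, buffer, cep_hat


theta, theta_target, buffer, cep_hat = run()
print(len(buffer), cep_hat)
```

In this toy setting a missing ACK can only mean a channel error, so `cep_hat` is a plain failure frequency; with other sensors present, the estimator would have to separate collisions from channel errors, which this sketch does not attempt.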

6. Performance Evaluations

6.1. Network Environment

6.2. Learning Environment

6.3. Baseline Schemes

6.4. Performance Evaluations

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Network Parameter | Value |
|---|---|
| Number of active sensors and data sinks | 2, 1 |
| Surface height (depth) | 100 m |
| Height of sensors and data sink | 10 m |
| Transmit power of sensors | 20 W |
| Number of available acoustic channels | 3 |
| Minimum frequencies of available channels | [10, 30, 50] kHz |
| Bandwidth of each channel | 10 kHz |
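As a small worked example of the channel parameters in the table above, the snippet below derives the frequency band of each channel from its minimum frequency and bandwidth and checks the spacing between adjacent bands. The variable names are illustrative only.

```python
MIN_FREQ_KHZ = [10, 30, 50]   # minimum frequencies from the table
BANDWIDTH_KHZ = 10            # bandwidth of each channel from the table

# Each channel spans [f_min, f_min + bandwidth].
bands = [(f, f + BANDWIDTH_KHZ) for f in MIN_FREQ_KHZ]

# Gap between the top of one band and the bottom of the next.
gaps = [next_lo - hi for (_, hi), (next_lo, _) in zip(bands, bands[1:])]

print(bands)  # [(10, 20), (30, 40), (50, 60)]
print(gaps)   # [10, 10]
```

With these values the three channels occupy 10 to 20 kHz, 30 to 40 kHz, and 50 to 60 kHz, so adjacent channels do not overlap in frequency.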

| Hyperparameter | Agent |
|---|---|
| Batch size | 6 |
| Optimizer | Adam |
| Activation function | ReLU |
| Learning rate | |
| Experience replay size | 1000 |
| Discount factor | 0.99 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shin, H.; Kim, Y.; Baek, S.; Song, Y. Distributed Learning for Dynamic Channel Access in Underwater Sensor Networks. Entropy 2020, 22, 992. https://doi.org/10.3390/e22090992
