1. Introduction

In recent years, convolutional neural networks have shown excellent performance in visual tasks. Starting from AlexNet [1], many successful convolutional neural network models have been developed, such as VGG [2], GoogLeNet [3], ResNet [4], and DenseNet [5]. With the help of hierarchical convolution kernels and nonlinear computation, deep neural networks can extract more discriminative local features for visual representations. Reference [6] shows that the convolution features are a set of local features related to objects, which together describe the visual characteristics of the objects. These rich feature descriptions have drawn great research interest in deep convolution features. To enhance feature learning, many aggregation operations based on deep convolution features have been proposed, such as max pooling [7], cross-dimensional pooling [8], sum pooling [6], and bilinear pooling [9]. These operations work in high-dimensional Euclidean spaces.

In the past few years, the SPD (symmetric positive definite) matrix has drawn considerable attention because of its powerful representation ability. Owing to its non-Euclidean Riemannian geometry, the SPD matrix is well suited to capturing the desired properties of a data distribution. The SPD matrix manifold is widely used in medical imaging [10], sonar signal processing [11], radar signal processing [12], face recognition [13,14], action recognition [15,16], object or image classification [17,18], and transfer learning [19]. The nonlinear distribution of SPD matrices on the Riemannian manifold can be measured on a non-Euclidean scale, based on the geodesic distance.

Transforming convolution features into SPD matrices is a problem of converting first-order data into second-order statistical information. The Gaussian distribution and the covariance matrix are widely used SPD matrix representations that transform first-order features into second-order statistical information [20,21]. However, the dimension of the convolution features is often higher than the number of features. In this case, the covariance matrix, and with it the Gaussian representation, is singular, so the data no longer lie on the SPD matrix manifold, and it is inappropriate to use Riemannian metrics to measure the differences between samples. To solve this problem, a small perturbation can be added to the covariance [22]. Besides, kernel functions can characterize nonlinear relationships in data. In [23], a positive definite kernel based on the Gaussian radial basis function (RBF) is defined on the manifold, and with its help Euclidean-space algorithms such as the support vector machine (SVM) and principal component analysis (PCA) are extended to Riemannian manifolds. With the advent of deep matrix learning [24,25,26], reference [27] proposes a deep SPD matrix learning model, which exploits the RBF kernel function to aggregate convolution features into SPD matrices. Its ultimate goal is to map the SPD matrix from one Riemannian manifold to another, more discriminative manifold.

Based on the above observations, we introduce a method that transforms spectral convolution features into SPD matrices through an RBF kernel function, and then detects the target signal using a deep SPD matrix learning network. The method consists of two modules. The first module uses a nonlinear function to transform the convolution features of the spectrum into SPD matrices, which are then processed by the second module. The second module uses SPDnet [25], a deep matrix learning network consisting of an SPD matrix transformation layer, an SPD matrix nonlinear processing layer, a log-Euclidean projection layer, and fully connected (FC) layers. SPD matrices become more compact and discriminative after passing through the transformation layer and the nonlinear processing layer. After the log-Euclidean metric is applied, the differences in geodesic distance between SPD matrices generated by different samples are projected into Euclidean space, and the binary classification problem on the Riemannian manifold is solved by the FC layer in Euclidean space. The convolution features of the spectrogram amount to an advance annotation of the information in the spectrogram. Theoretically, SPD matrices based on such pre-processed samples can have more significant differences. The experimental results show that, compared with the spectrum-based signal detection method, our method obtains a gain of 0.5–2 dB on the simulation data set and a gain of 0.5–1 dB on the semi-physical simulation data set.

The rest of this paper is organized as follows. In Section 2, we describe how the convolutional neural network processes input samples and introduce the relevant background on convolution features. In Section 3, we introduce the nonlinear kernel function and the RBF kernel function used in this paper. In Section 4, we present the spectrum SPD matrix learning method based on a deep network for signal detection. In Section 5, we first evaluate the performance of the method on the simulation data set, with K-distribution clutter as the disturbance, and explore the influence of its hyperparameters; we then compare performance on the semi-physical simulation data set, with measured sea clutter as the disturbance. Finally, we conclude in Section 6.

2. Acquisition of Convolution Features

Convolution features are derived from the convolutional neural network, which originates from the neocognitron proposed in 1980 [28]; the first model, LeNet, appeared in 1998 [29]. Since 2012, research on convolutional neural networks has made significant progress. The main advantage of convolutional neural networks over their predecessors is that they can automatically detect the important features of the input data without any human supervision. For example, in image classification, the convolutional neural network can automatically learn the differences between different categories of images. Additionally, in semantic segmentation, through continuous iteration, the convolutional neural network can discover the features of different types of regions in the image, and identify different targets in the image according to the features learned.

Although models of the convolutional neural network vary widely, they all follow some similar principles.

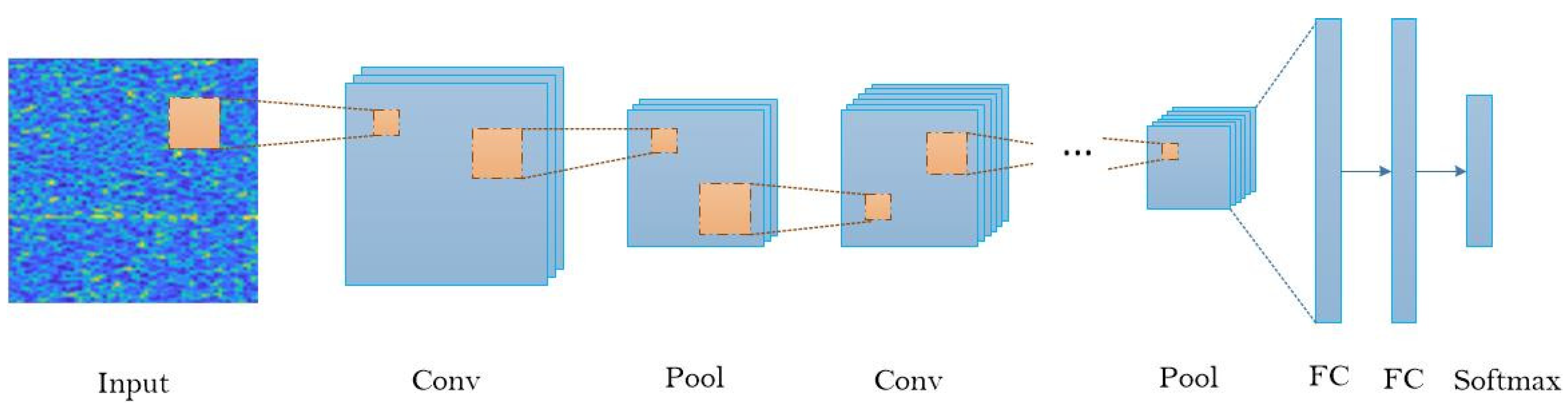

Figure 1 shows the general architecture of a convolutional neural network for a classification task.

When an image is fed to the convolutional neural network, it is processed by a series of convolutional layers and pooling layers. The image is progressively reduced in dimension and finally vectorized and passed to the fully connected layers to obtain the decision result.

Figure 1 omits layers that perform particular calculations, such as nonlinear layers and normalization layers. They usually come immediately after a convolutional layer and are not distributed throughout the network. Convolution and pooling are the main layers of a convolutional neural network. Below, we introduce the theoretical basis and process by which convolutional layers extract image convolution features, and the role of pooling in convolution feature extraction.

An image is represented by a matrix of integers, and each pixel corresponds to one element of the matrix. A monochromatic image is a two-dimensional matrix, while an RGB image is a three-dimensional matrix.

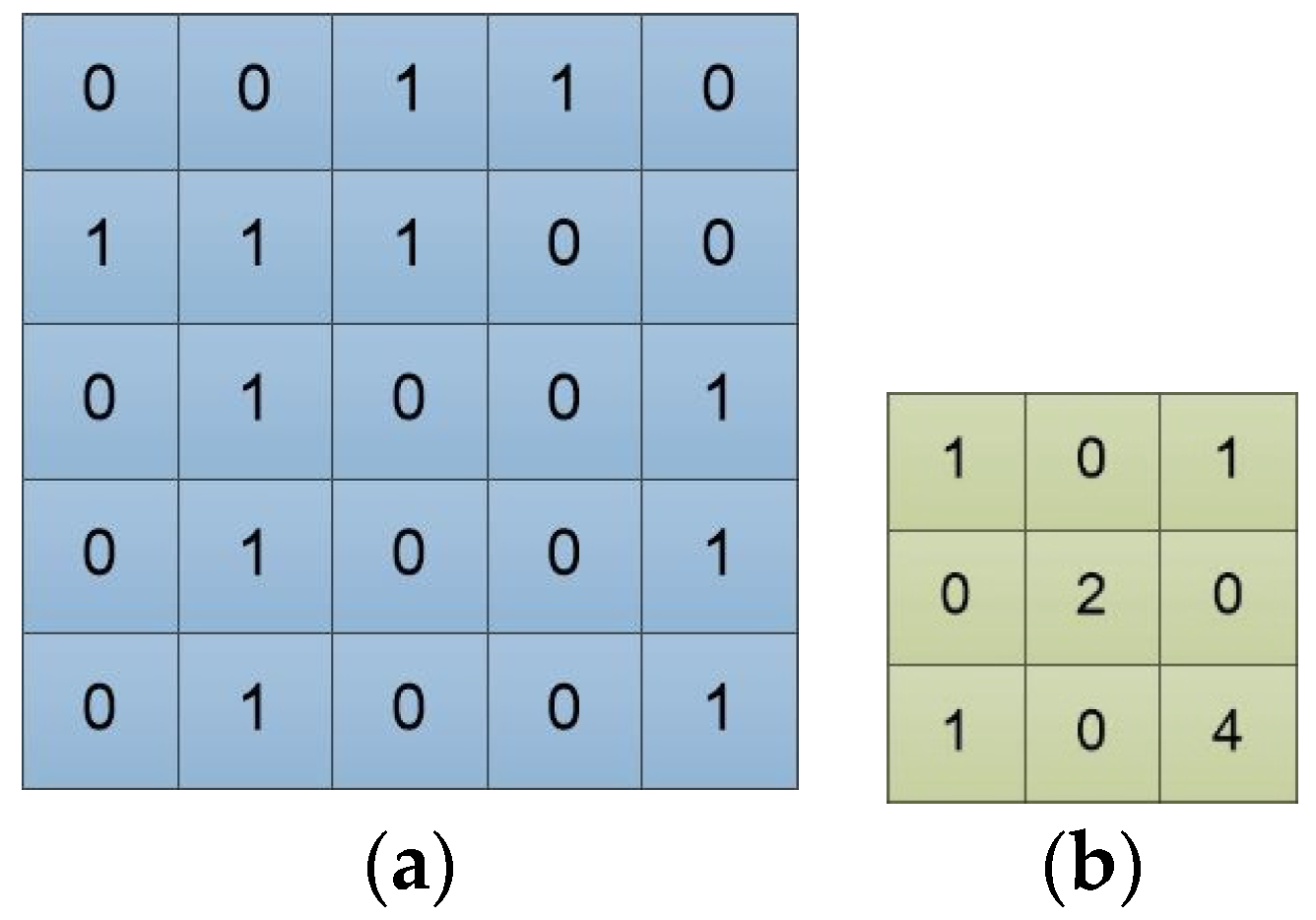

Figure 2a is an input example, and can be viewed as a single-channel image. The “0” and “1” represent pixel values.

Figure 2b is a 3 × 3 convolution filter. Each of its element values represents the weight of the position. The feature extraction ability of the convolution filter is closely related to its size and weights.

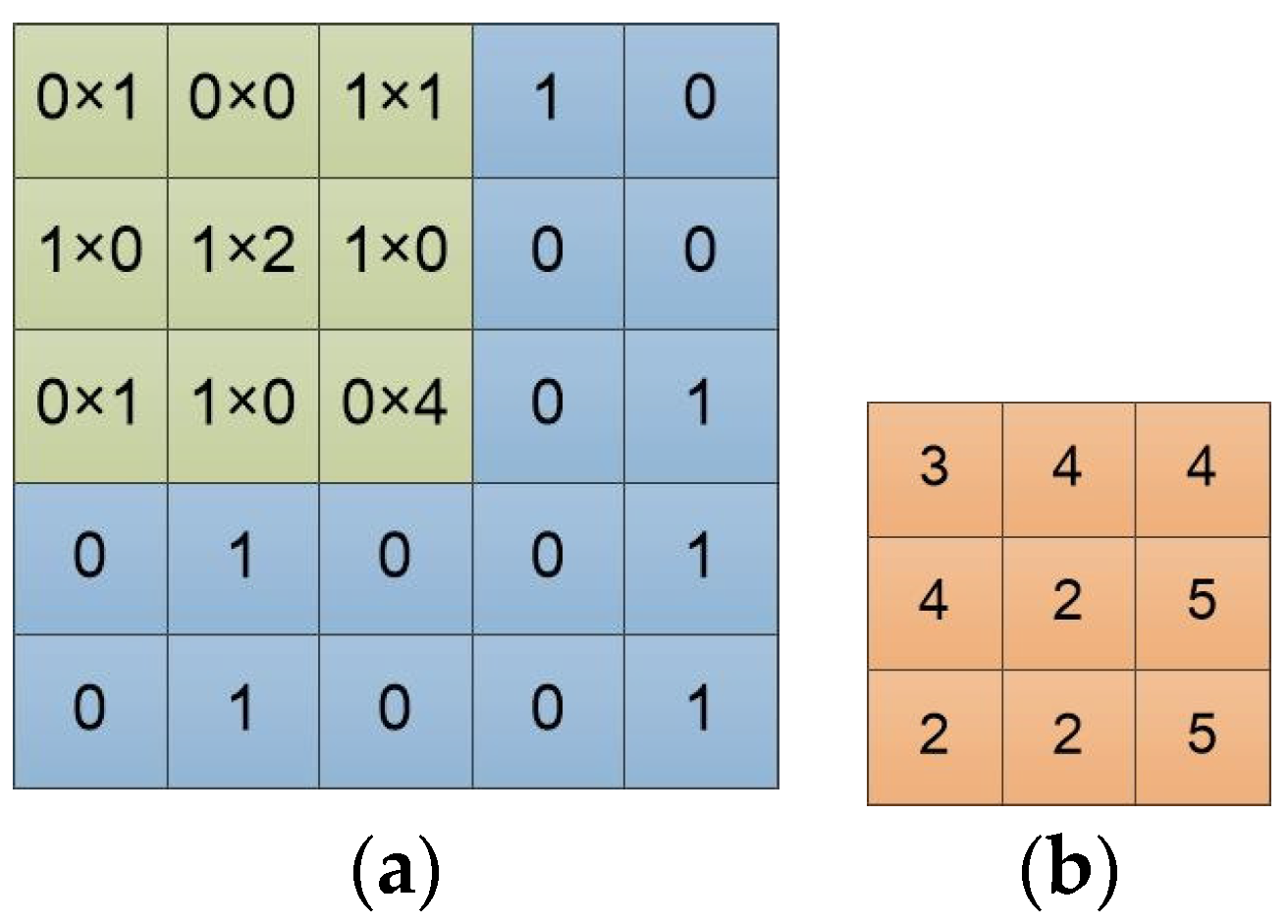

In mathematics, convolution is an operation on two functions that produces a third function expressing how the shape of one is modified by the other. The convolution operation in the convolutional neural network adds each element of the image (or feature map) to its local neighbors, weighted by the convolution filter, to produce a new feature map. The computational relationship between the convolution filter and the image in Figure 2 is shown in Figure 3a. We slide the convolution filter over the input data. As Figure 3b shows, an element-wise multiplication is performed at every location, and the products are summed into the corresponding element of the feature map.
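The sliding weighted sum described above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming stride 1 and no padding; the 5 × 5 binary image and the 3 × 3 filter are hypothetical values, not those of Figure 2. As in most deep learning frameworks, the filter is applied without flipping.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and sum the products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    feature_map = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            patch = image[r:r + kh, c:c + kw]          # local neighborhood
            feature_map[r, c] = np.sum(patch * kernel)  # weighted sum
    return feature_map

# Hypothetical single-channel input and filter (illustration only).
image = np.array([[1, 1, 1, 0, 0],
                  [0, 1, 1, 1, 0],
                  [0, 0, 1, 1, 1],
                  [0, 0, 1, 1, 0],
                  [0, 1, 1, 0, 0]], dtype=float)
kernel = np.array([[1, 0, 1],
                   [0, 1, 0],
                   [1, 0, 1]], dtype=float)

feature_map = conv2d_valid(image, kernel)
print(feature_map)
# [[4. 3. 4.]
#  [2. 4. 3.]
#  [2. 3. 4.]]
```

The 5 × 5 input shrinks to a 3 × 3 feature map because the filter only visits positions where it fits entirely inside the image.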

The weighted sum between the convolution filter and the image enhances the effects of some local features of the image and suppresses the rest. The convolution features are enhancements of some features of the original image. Different convolution filters can extract different features, and these filters are learnable. Typically, the output feature maps are enhanced with specific features. The feature map will be used as the input of the next convolutional filter, to generate a more abstract feature map with a lower dimension.

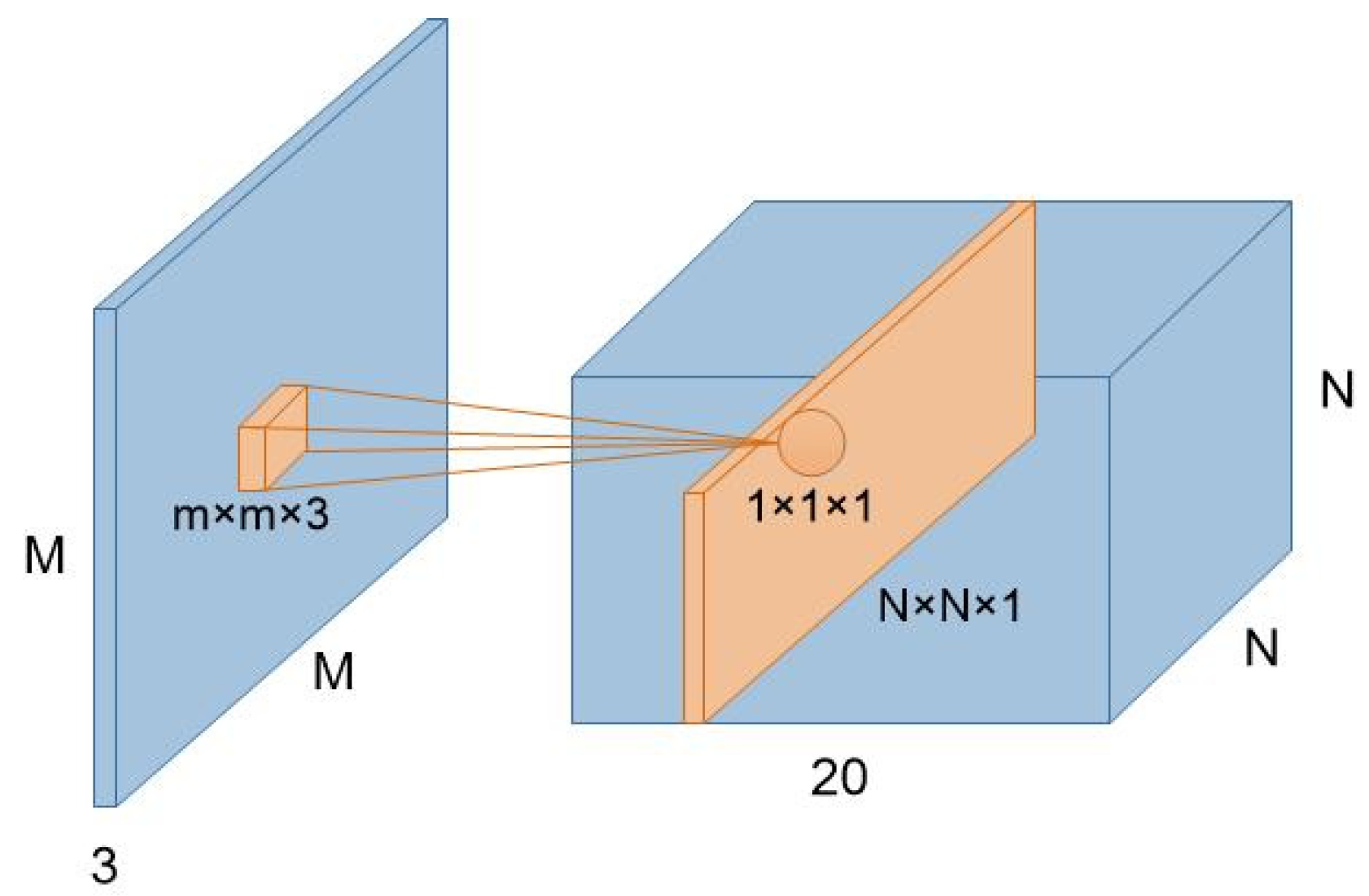

An image is represented as a three-dimensional matrix, with dimensions of height, width, and depth (color channels). The convolution filter therefore also has a depth dimension, and it covers the entire depth of its input. Note also that multiple convolutions are performed on the input, each using a different filter and resulting in a distinct feature map. The final output of the convolution layer is the stack of all feature maps.

Figure 4 shows the convolution computation of an input image by a convolution filter. Twenty convolution filters process the input separately, so the generated feature map has a depth of 20.
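The shape bookkeeping of such a layer can be checked with a small sketch. The input size (32 × 32 × 3) and the filter size (5 × 5) are assumptions made for illustration; only the number of filters, twenty, comes from the text.

```python
import numpy as np

def conv_layer(image, filters):
    """image: (H, W, D); filters: (N, kh, kw, D). Returns (H-kh+1, W-kw+1, N)."""
    n, kh, kw, depth = filters.shape
    assert depth == image.shape[2], "each filter must span the full input depth"
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w, n))
    for r in range(out_h):
        for c in range(out_w):
            patch = image[r:r + kh, c:c + kw, :]  # (kh, kw, D)
            # One weighted sum over height, width, and depth per filter.
            out[r, c, :] = np.tensordot(filters, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out

rng = np.random.default_rng(0)
rgb_image = rng.random((32, 32, 3))            # hypothetical RGB input
filters = rng.standard_normal((20, 5, 5, 3))   # twenty filters, each 5 x 5 x 3
feature_maps = conv_layer(rgb_image, filters)
print(feature_maps.shape)  # (28, 28, 20)
```

Each filter collapses the depth of its input into a single map, so the output depth equals the number of filters rather than the number of input channels.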

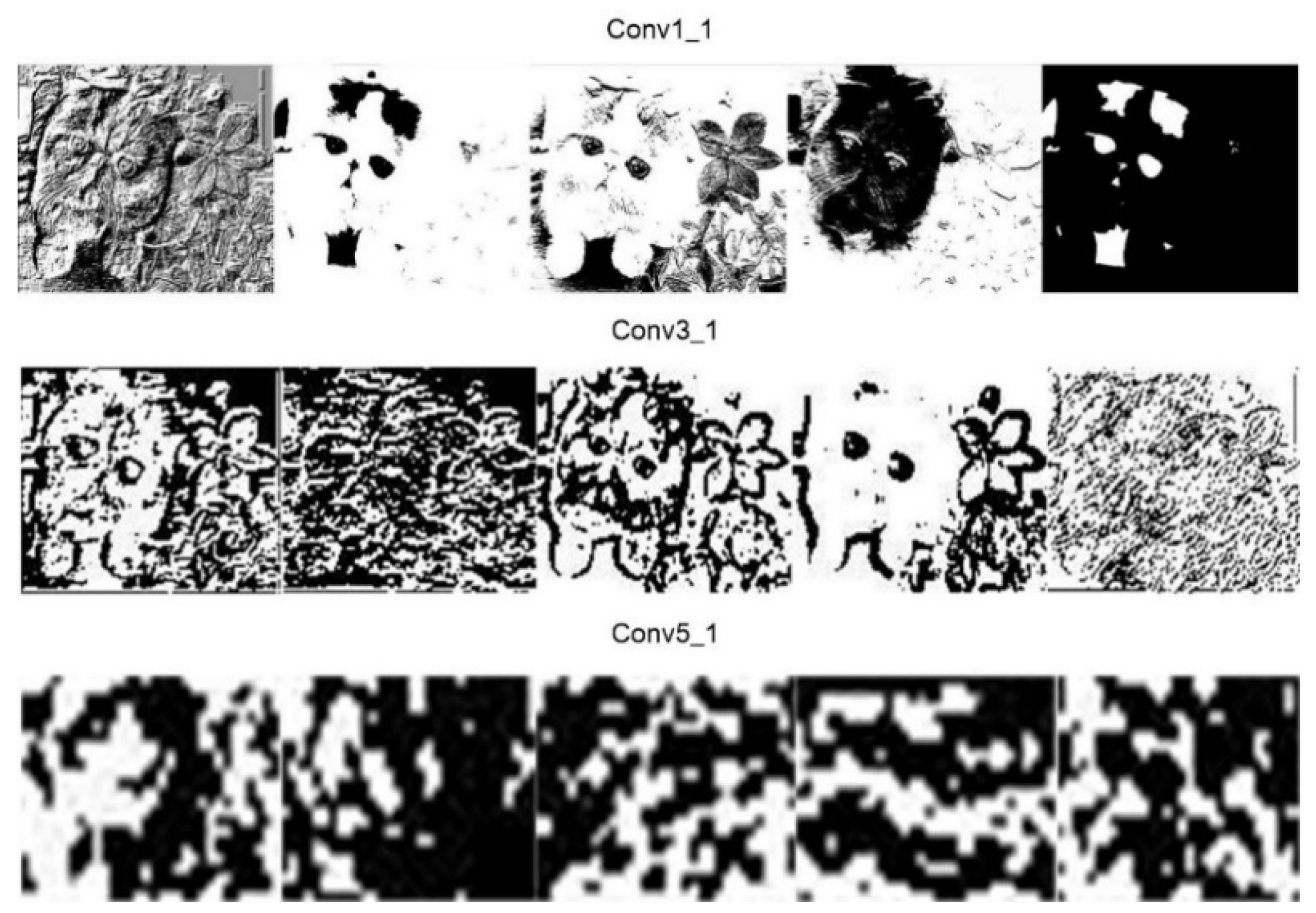

Figure 5 shows some feature maps output by different convolution layers of VGG19. From the Conv1_1 layer to the Conv5_1 layer, as the depth of the network increases, the extracted convolution features become more abstract, the number of feature maps generated by a layer increases, and their dimension decreases.

The pooling layer also plays a role in the generation of convolution features. This section provides a brief introduction to the most commonly used pooling: max pooling.

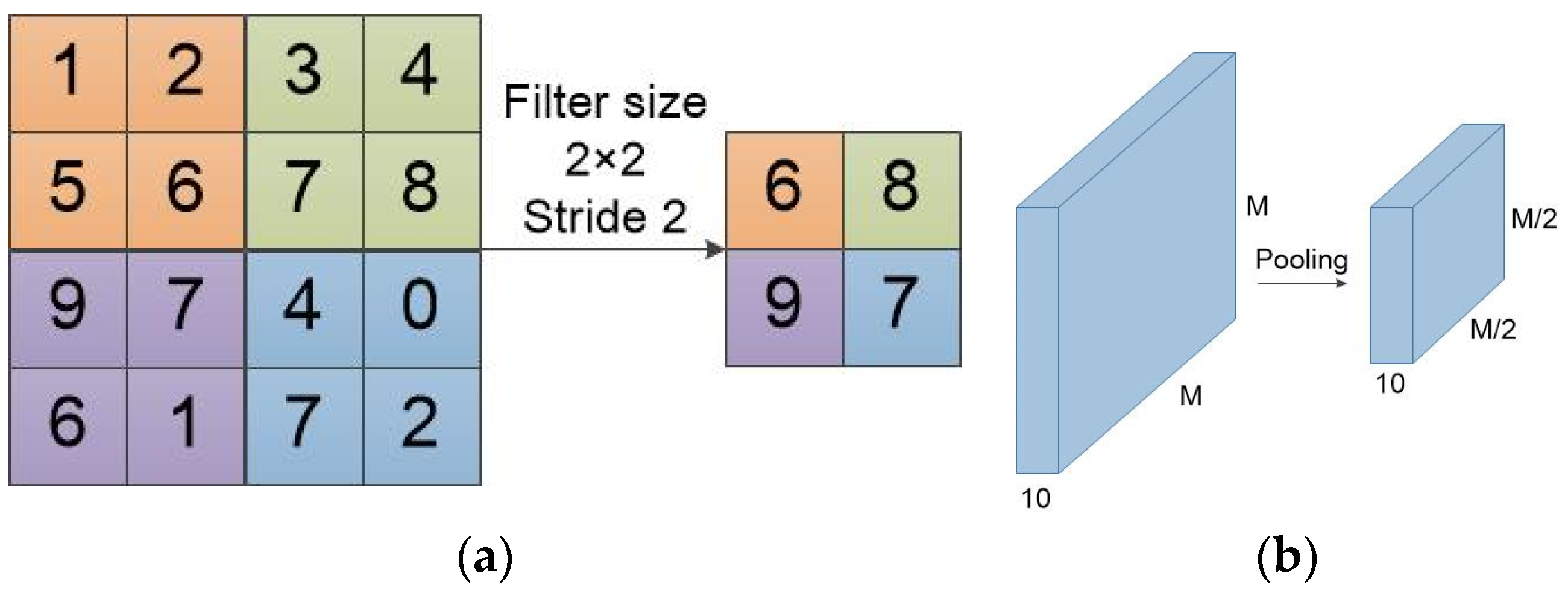

As shown in Figure 6, the calculation process of max pooling is similar to that of convolution. However, max pooling retains only the maximum value in each calculation region. The output of max pooling is the image that retains the most prominent convolution features. Max pooling reduces the dimension of the feature maps but does not change their number.
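A minimal sketch of max pooling follows, assuming a 2 × 2 window with stride 2 (a common choice, not stated in the text) and a toy 4 × 4 single-channel input.

```python
import numpy as np

def max_pool2d(feature_maps, size=2, stride=2):
    """feature_maps: (H, W, C). Keeps the maximum of each size x size window."""
    h, w, c = feature_maps.shape
    out_h = (h - size) // stride + 1
    out_w = (w - size) // stride + 1
    pooled = np.zeros((out_h, out_w, c))
    for r in range(out_h):
        for col in range(out_w):
            window = feature_maps[r * stride:r * stride + size,
                                  col * stride:col * stride + size, :]
            pooled[r, col, :] = window.max(axis=(0, 1))  # per-channel maximum
    return pooled

x = np.arange(16, dtype=float).reshape(4, 4, 1)  # toy input, values 0..15
pooled = max_pool2d(x)
print(pooled[:, :, 0])
# [[ 5.  7.]
#  [13. 15.]]
```

The height and width are halved while the channel count stays at one, which matches the statement that pooling changes the dimension but not the number of feature maps.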

3. Construction of SPD Matrix Based on Convolution Feature

Although the covariance matrix has been used to model features with gratifying results, two issues remain. One is that the covariance matrix may be singular, which usually occurs when the dimension of the local features is larger than the number of local features extracted from the image area. The other is that the covariance matrix cannot capture the nonlinear correlation of features, which hinders the modeling of convolution features. To settle these two issues, we exploit a nonlinear kernel function to construct the SPD matrix.
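The singularity issue can be reproduced numerically. The sketch below uses hypothetical sizes (16 random features of dimension 64) and shows that the sample covariance has rank at most 15, so it cannot be positive definite; the last lines apply the small diagonal perturbation mentioned in the Introduction, with an arbitrarily chosen value of 1e-3.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, n_features = 64, 16   # feature dimension exceeds the number of features
features = rng.standard_normal((n_features, dim))

cov = np.cov(features, rowvar=False)   # 64 x 64 sample covariance
rank = np.linalg.matrix_rank(cov)
print(rank)                            # at most n_features - 1

# A small perturbation on the diagonal restores positive definiteness.
cov_reg = cov + 1e-3 * np.eye(dim)     # 1e-3 is an arbitrary illustrative value
min_eig = np.linalg.eigvalsh(cov_reg).min()
print(min_eig > 0)
```

The perturbation fixes the rank deficiency but still captures only linear correlation, which is the second issue the kernel construction addresses.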

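We set $F \in \mathbb{R}^{C \times H \times W}$ as the set of all convolution features of one layer in the convolutional neural network. $C$ is the number of feature maps, and $H$ and $W$ are the height and width of the feature map, respectively. $f_i \in \mathbb{R}^{C}$ is the $i$-th local feature and $m_i \in \mathbb{R}^{HW}$ is the $i$-th feature map, written as a vector. The modeling of the nonlinear kernel function revolves around the feature maps $m_i$.

Elements in the SPD matrix $K \in \mathbb{R}^{C \times C}$ generated by the nonlinear kernel function can be defined as:

$$K_{ij} = \langle \phi(m_i), \phi(m_j) \rangle, \quad (1)$$

where $\phi(\cdot)$ is the implicit mapping and $\langle \cdot, \cdot \rangle$ is the pairwise inner product.

In this paper, we exploit the RBF kernel function to construct SPD matrices. It is expressed as:

$$K_{ij} = \exp\left(-\frac{\|m_i - m_j\|^2}{2\sigma^2}\right), \quad (2)$$

where $\sigma$ is the mean Euclidean distance of all feature maps and ‖ · ‖ is the Euclidean norm.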

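The RBF kernel function guarantees the positive definiteness of the matrix. The proof is as follows.

To simplify the proof, we rewrite Formula (2) as:

$$k(x_i - x_j) = \exp\left(-\frac{\|x_i - x_j\|^2}{2\sigma^2}\right), \quad (3)$$

where $x_1, \ldots, x_C \in \mathbb{R}^{n}$ are used to denote the $C$ vectorized feature maps for the purpose of simplification.

With the Fourier transform convention $k(x) = \int_{\mathbb{R}^n} \hat{k}(\omega)\, e^{\mathrm{i}\omega^{\top}x}\, d\omega$, the Fourier transform of Formula (3) is:

$$\hat{k}(\omega) = \left(\frac{\sigma^2}{2\pi}\right)^{n/2} \exp\left(-\frac{\sigma^2\|\omega\|^2}{2}\right). \quad (4)$$

Then, we prove that the quadratic form of the kernel matrix $K$, with entries $K_{jl} = k(x_j - x_l)$, is always greater than 0. Let $c = (c_1, \ldots, c_C)^{\top}$ be an arbitrary non-zero real vector. The quadratic form is obtained as:

$$c^{\top} K c = \sum_{j=1}^{C}\sum_{l=1}^{C} c_j c_l\, k(x_j - x_l) = \int_{\mathbb{R}^n} \hat{k}(\omega) \left| \sum_{j=1}^{C} c_j e^{\mathrm{i}\omega^{\top}x_j} \right|^2 d\omega. \quad (5)$$

In Formula (5), $\hat{k}(\omega)$ is positive and continuous, so the quadratic form is 0 only on the condition that $\sum_{j} c_j e^{\mathrm{i}\omega^{\top}x_j} = 0$ for all $\omega$. However, the complex exponentials are linearly independent when the $x_j$ are distinct, so this cannot hold for a non-zero $c$. Thus, the quadratic form is always greater than 0, and the matrix $K$ is positive definite.

This completes the proof of the positive definiteness of the RBF kernel function. The use of the RBF kernel function to construct the SPD matrix is essentially a correlation calculation between feature maps.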

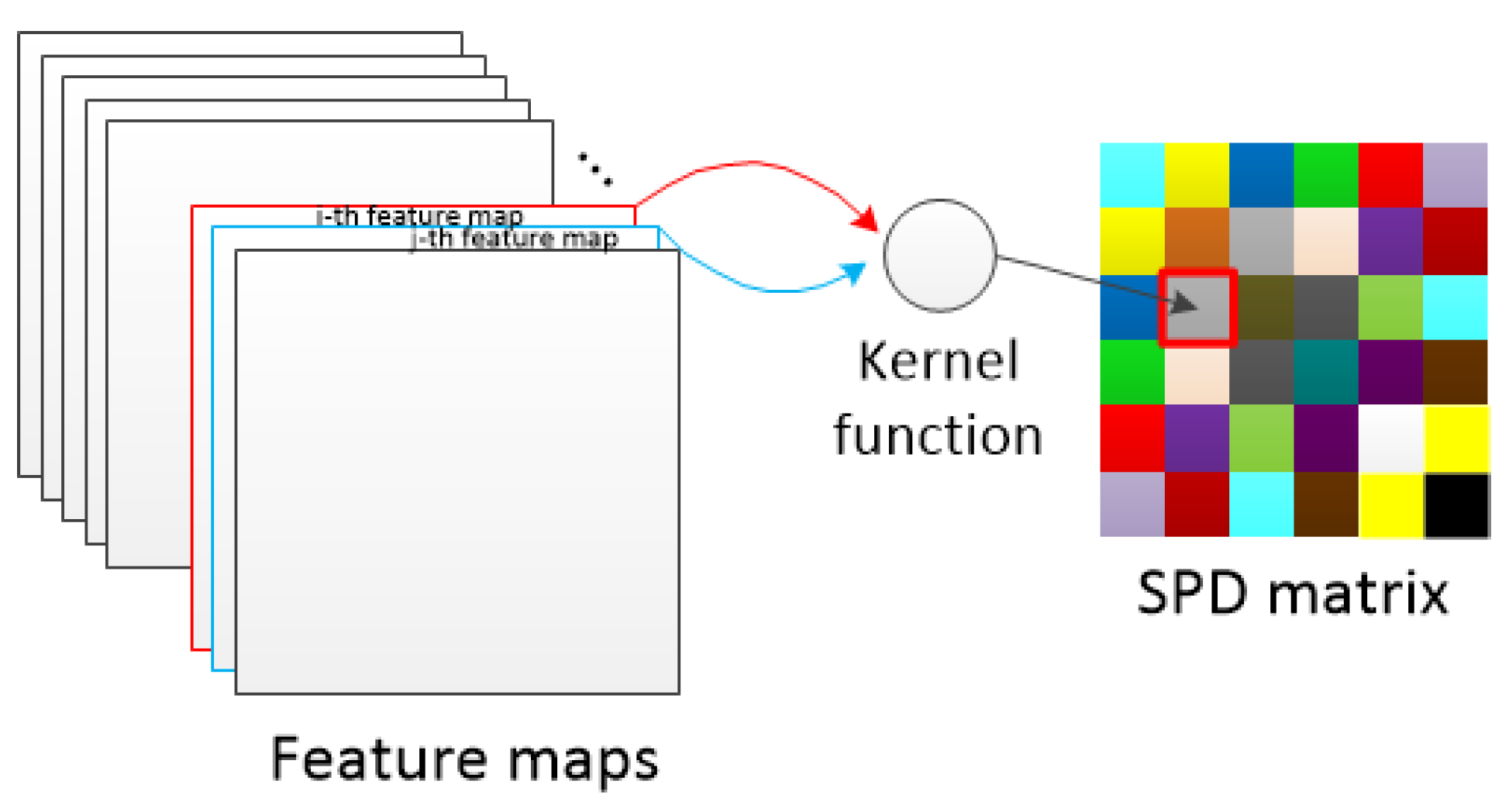

Figure 7 shows the calculation process of the correlation operation.

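Formula (2) reveals the nonlinear relationship between convolution features. With a proper modification, it can be calculated faster by means of matrix operations.

The convolution features $F \in \mathbb{R}^{C \times H \times W}$ can be reshaped to a matrix $M \in \mathbb{R}^{C \times N}$ with $N = HW$. Each feature map can be expanded into vector form in a certain order, giving the row $m_i^{\top}$ of $M$. Moreover, $\|m_i - m_j\|^2$ is equivalent to:

$$\|m_i - m_j\|^2 = m_i^{\top} m_i - 2\, m_i^{\top} m_j + m_j^{\top} m_j. \quad (6)$$

The three terms on the right of Formula (6) can be computed for all pairs $(i, j)$ by matrix multiplication:

$$X_1 = (M \circ M)\,\mathbf{1}, \qquad X_2 = M M^{\top}, \qquad X_3 = \mathbf{1}^{\top} (M \circ M)^{\top}, \quad (7)$$

where $\circ$ stands for the Hadamard product and $\mathbf{1} \in \mathbb{R}^{N \times C}$ is the matrix whose elements are all “1”.

With the three matrices in Formula (7) named $X_1$, $X_2$, and $X_3$, Formula (2) is modified to the following:

$$K = \exp\left(-\frac{X_1 - 2X_2 + X_3}{2\sigma^2}\right), \quad (8)$$

where $\exp(A)$ is the exponential operation applied to each element in the matrix $A$.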
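The matrix form of the RBF construction can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: the kernel is taken as exp(−‖m_i − m_j‖² / (2σ²)), σ is assumed to be the mean pairwise Euclidean distance between distinct feature maps, and the input is random data standing in for a 64-channel layer output.

```python
import numpy as np

def rbf_spd_matrix(feature_maps):
    """feature_maps: (C, H, W). Returns the C x C RBF kernel matrix."""
    c = feature_maps.shape[0]
    m = feature_maps.reshape(c, -1)                # row i is the vectorized map m_i
    sq_norms = np.sum(m * m, axis=1)               # ||m_i||^2 for every i
    x1 = np.tile(sq_norms[:, None], (1, c))        # entry (i, j) = ||m_i||^2
    x2 = m @ m.T                                   # entry (i, j) = m_i^T m_j
    x3 = x1.T                                      # entry (i, j) = ||m_j||^2
    sq_dist = np.maximum(x1 - 2.0 * x2 + x3, 0.0)  # clip tiny negative round-off
    # Assumption: sigma is the mean pairwise distance over distinct pairs.
    sigma = np.sqrt(sq_dist)[np.triu_indices(c, k=1)].mean()
    return np.exp(-sq_dist / (2.0 * sigma ** 2))

rng = np.random.default_rng(0)
maps = rng.standard_normal((64, 14, 14))  # hypothetical stand-in for layer output
K = rbf_spd_matrix(maps)
eigvals = np.linalg.eigvalsh(K)
print(K.shape, eigvals.min() > 0)
```

The result is a 64 × 64 symmetric matrix with ones on the diagonal and strictly positive eigenvalues, regardless of how many pixels each feature map has.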

In this paper, we use VGG19 and GoogLeNet-V3 to extract convolution features. For VGG19, we use the feature maps output by its pool1, pool2, and pool3 layers. These three layers are located in layer 5, layer 10, and layer 19 of VGG19, and their depths are 64, 128, and 256, respectively. This also means that the SPD matrices they build are 64 × 64, 128 × 128, and 256 × 256, respectively. For GoogLeNet-V3, we use the feature maps output by its Maxpool_5a_3 × 3 layer, which is located in layer 6 of GoogLeNet-V3 and has a depth of 192.

4. SPDnet: A Deep Matrix Learning Method

SPDnet is characterized by embedding bilinear mapping (BiMap) and eigenvalue rectification (ReEig) into the algorithm in the form of layers of a deep learning network. Their effect on SPD matrices is analogous to that of the convolution layer and the nonlinear layer of a convolutional neural network: both aim to make the objects being processed more compact and discriminative.

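The computation expression for a BiMap layer to perform bilinear mapping is

$$X_k = W_k X_{k-1} W_k^{\top}, \quad (9)$$

where $X_{k-1}$ is the input matrix of the $k$-th layer, $W_k \in \mathbb{R}^{d_k \times d_{k-1}}$ is a transformation matrix, which is row full-rank, and $X_k$ is the output matrix.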
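A minimal sketch of the bilinear mapping, assuming the usual form W X Wᵀ with a full-row-rank W. Here W is built with orthonormal rows, which is one convenient way to guarantee full row rank; the sizes (8 → 4) are hypothetical.

```python
import numpy as np

def bimap(x, w):
    """Bilinear mapping W X W^T, with W of shape (d_out, d_in)."""
    return w @ x @ w.T

rng = np.random.default_rng(0)
a = rng.standard_normal((8, 8))
x_prev = a @ a.T + 1e-3 * np.eye(8)   # hypothetical 8 x 8 SPD input

# Assumption: W has orthonormal rows, which implies full row rank.
q, _ = np.linalg.qr(rng.standard_normal((8, 4)))  # q has orthonormal columns
w = q.T                                            # shape (4, 8)
x_next = bimap(x_prev, w)
print(x_next.shape, np.linalg.eigvalsh(x_next).min() > 0)
```

Because W has full row rank, the 4 × 4 output stays symmetric positive definite, so the layer reduces the matrix size without leaving the SPD manifold.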

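The computation expression for a ReEig layer to perform eigenvalue rectification is

$$X_k = U_{k-1} \max(\epsilon I, \Sigma_{k-1})\, U_{k-1}^{\top}, \quad (10)$$

where $U_{k-1}$ and $\Sigma_{k-1}$ are obtained by singular value decomposition, $X_{k-1} = U_{k-1} \Sigma_{k-1} U_{k-1}^{\top}$, $\epsilon$ indicates a threshold, $I$ indicates an identity matrix, and $\max(\epsilon I, \Sigma_{k-1})$ means retaining the diagonal elements in $\Sigma_{k-1}$ that are greater than $\epsilon$ and replacing the rest with $\epsilon$.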
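Eigenvalue rectification can be sketched as below. The sketch uses `numpy.linalg.eigh` rather than an SVD routine; for a symmetric positive definite input the two decompositions yield the same factors. The threshold 1e-4 and the diagonal test matrix are hypothetical.

```python
import numpy as np

def reeig(x, eps=1e-4):
    """Eigenvalue rectification: raise eigenvalues below eps up to eps."""
    eigvals, eigvecs = np.linalg.eigh(x)   # x = U diag(eigvals) U^T
    rectified = np.maximum(eigvals, eps)
    return (eigvecs * rectified) @ eigvecs.T

x = np.diag([2.0, 1e-6, 0.5])   # one eigenvalue lies below the threshold
y = reeig(x, eps=1e-4)
print(np.diag(y))               # the small eigenvalue is lifted to 1e-4
```

Only the eigenvalue below the threshold changes, which parallels the way a ReLU leaves large activations untouched while clamping small ones.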

Exploiting singular value decomposition instead of eigenvalue decomposition to analyze the SPD matrix is an efficient approximation method. The computational speed of singular value decomposition is faster than that of eigenvalue decomposition. As with eigenvalues, the singular values are arranged from large to small in the diagonal matrix generated by the decomposition. In most cases, the sum of the largest 10% of the singular values accounts for around 99% of the sum of all singular values. Based on the square matrix property of the SPD matrix, the approximate reconstruction by the ReEig layer is reasonable.

The LogEig layer is used to perform the Riemannian calculation on the SPD matrices generated after multiple bilinear mappings and eigenvalue rectifications. The log-Euclidean Riemannian metric endows the Riemannian manifold of SPD matrices with a Lie group structure, and the SPD matrix manifold is reduced to a flat space. The log-Euclidean metric is described in detail below.

The space of SPD matrices forms a Riemannian manifold $Sym_d^{+}$. It has a globally defined differential structure, which makes it possible to define the derivatives of curves on the SPD matrix manifold. The tangent space $T_S Sym_d^{+}$ at a point $S$ on the manifold can be constructed by exploiting the logarithm map $\log_S$, and is equipped with an inner product $\langle \cdot, \cdot \rangle_S$. The family of inner products on all tangent spaces of the Riemannian manifold is called the Riemannian metric of the manifold. In the framework of the Riemannian metric, the geodesic distance between two points $S_1$, $S_2$ on the SPD matrix manifold can be expressed as $\langle \log_{S_1}(S_2), \log_{S_1}(S_2) \rangle_{S_1}^{1/2}$.

The log-Euclidean metric of the SPD matrix manifold corresponds to the Euclidean metric in the SPD matrix logarithmic domain. Thus, the scalar product between $T_1$ and $T_2$ in the tangent space at the point $S$ is

$$\langle T_1, T_2 \rangle_S = \langle D_S\log.T_1,\; D_S\log.T_2 \rangle, \quad (11)$$

where $D_S\log.T$ is the directional derivative of the matrix logarithm at $S$ along $T$.

The logarithmic and exponential maps related to the metric can be expressed as

$$\log_{S_1}(S_2) = D_{\log(S_1)}\exp.\big(\log(S_2) - \log(S_1)\big), \qquad \exp_{S_1}(T_2) = \exp\big(\log(S_1) + D_{S_1}\log.T_2\big), \quad (12)$$

where $D_{\log(S)}\exp = (D_S\log)^{-1}$ follows from the differentiation of the equality $\log \circ \exp = I$, and $I$ is the identity matrix.

From Formulas (11) and (12), the geodesic distance obtained through the log-Euclidean metric is

$$d(S_1, S_2) = \langle \log_{S_1}(S_2), \log_{S_1}(S_2) \rangle_{S_1}^{1/2} = \|\log(S_1) - \log(S_2)\|_F. \quad (13)$$

Formula (13) is the Euclidean distance in the logarithmic domain. Under the log-Euclidean metric, the distance between any two points on the SPD matrix manifold is obtained by propagating, by translation, the scalar product in the tangent space at the identity matrix. The space of SPD matrices is thereby reduced to a flat Riemannian space.
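The log-Euclidean distance, that is, the Frobenius norm of the difference of the matrix logarithms, can be computed with an eigendecomposition. The sketch below is a minimal illustration; the two diagonal matrices are hypothetical and chosen so that the result is easy to check by hand.

```python
import numpy as np

def spd_log(s):
    """Matrix logarithm of an SPD matrix via its eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(s)
    return (eigvecs * np.log(eigvals)) @ eigvecs.T

def log_euclidean_distance(s1, s2):
    """Frobenius norm of log(s1) - log(s2)."""
    return np.linalg.norm(spd_log(s1) - spd_log(s2), ord="fro")

s1 = np.eye(2)                       # log(s1) = diag(0, 0)
s2 = np.diag([np.e, np.e ** 2])      # log(s2) = diag(1, 2)
d = log_euclidean_distance(s1, s2)
print(d)                             # sqrt(1^2 + 2^2) = sqrt(5)
```

Since the logarithm only needs to be taken once per matrix, pairwise distances afterwards cost no more than ordinary Euclidean distances, which is the low computational complexity the text refers to.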

The disadvantage of the log-Euclidean metric is that it is not affine invariant, so it cannot fully reflect the geodesic distance between two points on the Riemannian manifold. However, the log-Euclidean metric has low computational complexity, and it is widely used in Riemannian manifold-based algorithms. For more details about the log-Euclidean metric, please refer to [30].

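The computation expression for the LogEig layer is

$$X_k = \log(X_{k-1}) = U_{k-1} \log(\Sigma_{k-1})\, U_{k-1}^{\top}, \quad (14)$$

where $X_{k-1} = U_{k-1} \Sigma_{k-1} U_{k-1}^{\top}$ and $\log(\Sigma_{k-1})$ is the diagonal matrix of eigenvalue logarithms. After the LogEig layer, linear classification methods in Euclidean space can be used to perform the task.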

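We mainly exploit the following SPDnet model with 8 layers: $X_0 \to f_b \to f_r \to f_b \to f_r \to f_b \to f_l \to f_f \to f_s$, where $X_0$, $f_b$, $f_r$, $f_l$, $f_f$, and $f_s$ are the input SPD matrix to the model, the BiMap layer, the ReEig layer, the LogEig layer, the fully connected layer, and the softmax log-loss layer, respectively.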
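A forward pass through such an 8-layer stack (three BiMap layers, two ReEig layers, one LogEig layer, one fully connected layer, and a softmax) can be sketched as follows. Everything numeric here is an assumption made for illustration: the matrix sizes (128 → 64 → 32 → 16), the random untrained weights, the threshold, and the two output classes. It is not the trained model of this paper.

```python
import numpy as np

def bimap(x, w):
    return w @ x @ w.T

def reeig(x, eps=1e-4):
    vals, vecs = np.linalg.eigh(x)
    return (vecs * np.maximum(vals, eps)) @ vecs.T

def logeig(x):
    vals, vecs = np.linalg.eigh(x)
    return (vecs * np.log(vals)) @ vecs.T

def semi_orthogonal(rows, cols, rng):
    """Random (rows x cols) matrix with orthonormal rows, hence full row rank."""
    q, _ = np.linalg.qr(rng.standard_normal((cols, rows)))
    return q.T

def spdnet_forward(x0, bimap_weights, fc_weight, fc_bias):
    x = x0
    last = len(bimap_weights) - 1
    for i, w in enumerate(bimap_weights):
        x = bimap(x, w)
        if i < last:                          # ReEig follows all but the last BiMap
            x = reeig(x)
    features = logeig(x).reshape(-1)          # flatten for the FC layer
    logits = fc_weight @ features + fc_bias
    shifted = np.exp(logits - logits.max())   # numerically stable softmax
    return shifted / shifted.sum()

rng = np.random.default_rng(0)
a = rng.standard_normal((128, 128))
x0 = a @ a.T / 128 + 1e-3 * np.eye(128)       # hypothetical 128 x 128 SPD input
sizes = [128, 64, 32, 16]                     # hypothetical layer sizes
weights = [semi_orthogonal(sizes[i + 1], sizes[i], rng) for i in range(3)]
fc_w = 0.01 * rng.standard_normal((2, 16 * 16))  # two classes: target / no target
fc_b = np.zeros(2)
probs = spdnet_forward(x0, weights, fc_w, fc_b)
print(probs)
```

In a trained network the BiMap weights would be learned under a full-rank constraint; the random semi-orthogonal matrices above only serve to show how the shapes flow through the layers.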

5. Results

5.1. Experimental Analysis of Simulation Data

In this part, we compare two signal detection methods based on deep neural networks: a deep network based on the time-frequency spectrum, and a deep SPD matrix learning network based on spectrum convolution features. The data come from a simulated signal data set, with simulated K-distribution clutter as interference. The K-distribution is a widely accepted sea clutter simulation model. Compared with the Rayleigh, lognormal, and Weibull distributions, it considers the correlation between echo pulses and better fits the amplitude distribution of sea clutter. The probability density of the K-distribution is expressed through the modified Bessel function of the second kind and the Gamma function. Its scale parameter, which represents the mean value of the clutter, and its shape parameter, which affects the shape of the distribution curve, are both set to 1 in this paper.

The target signal is generated by simulation. Its steering vector is determined by the number of pulses of the signal, set to 2048, and the normalized Doppler frequency, set to 0.15.

The normalized Doppler frequency is defined in terms of the Doppler frequency of the simulated target, the relative velocity of the target (set to 5 m/s), the speed of the electromagnetic wave ($3 \times 10^{8}$ m/s), the carrier frequency of the radar (set to 9.39 GHz), and the pulse repetition rate of the radar (set to 1000 Hz).
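One way to generate a sample of this kind is sketched below. Several choices are assumptions rather than details given in the text: the clutter is drawn from the compound-Gaussian model (gamma-distributed texture multiplying complex Gaussian speckle, whose amplitude is K-distributed) and normalized to unit mean power, the steering vector is taken as p[k] = exp(j2π f_d k), and the SCR is defined as the ratio of target power to measured clutter power.

```python
import numpy as np

def k_clutter(n, shape, rng):
    """Complex clutter with K-distributed amplitude and unit mean power."""
    texture = rng.gamma(shape, 1.0 / shape, size=n)   # gamma texture, mean 1
    speckle = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2.0)
    return np.sqrt(texture) * speckle

def steering_vector(n, fd):
    """Assumed form: p[k] = exp(j * 2 * pi * fd * k) for k = 0..n-1."""
    return np.exp(1j * 2.0 * np.pi * fd * np.arange(n))

rng = np.random.default_rng(0)
n_pulses, fd, scr_db = 2048, 0.15, -5.0
clutter = k_clutter(n_pulses, shape=1.0, rng=rng)
clutter_power = np.mean(np.abs(clutter) ** 2)

# Scale the target so that 10*log10(target power / clutter power) equals scr_db.
amplitude = np.sqrt(clutter_power * 10.0 ** (scr_db / 10.0))
target = amplitude * steering_vector(n_pulses, fd)
sample = target + clutter
measured_scr_db = 10.0 * np.log10(np.mean(np.abs(target) ** 2) / clutter_power)
print(measured_scr_db)
```

A time-frequency spectrum of `sample` would then serve as the network input; the transform used for that step is not specified here.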

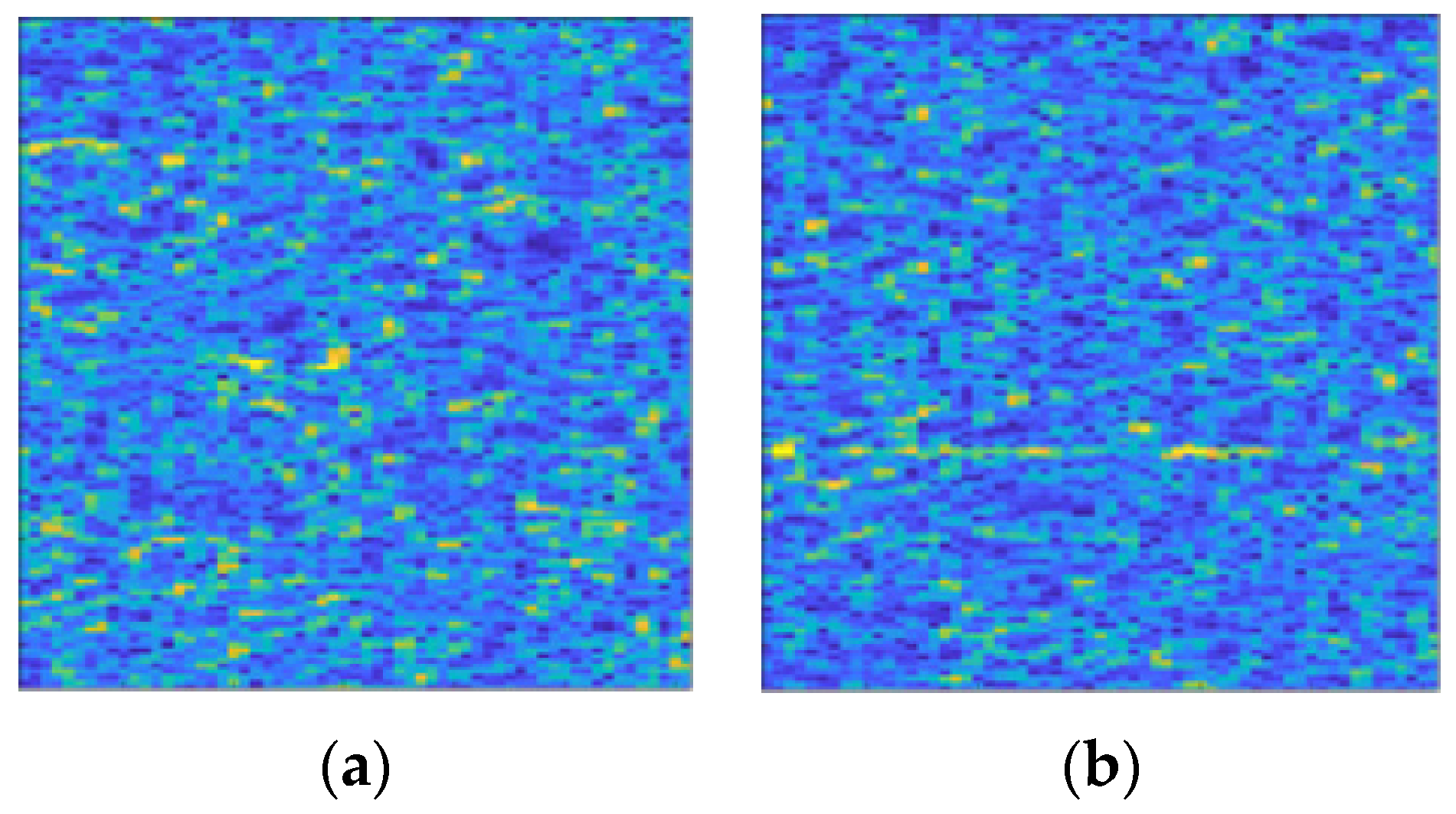

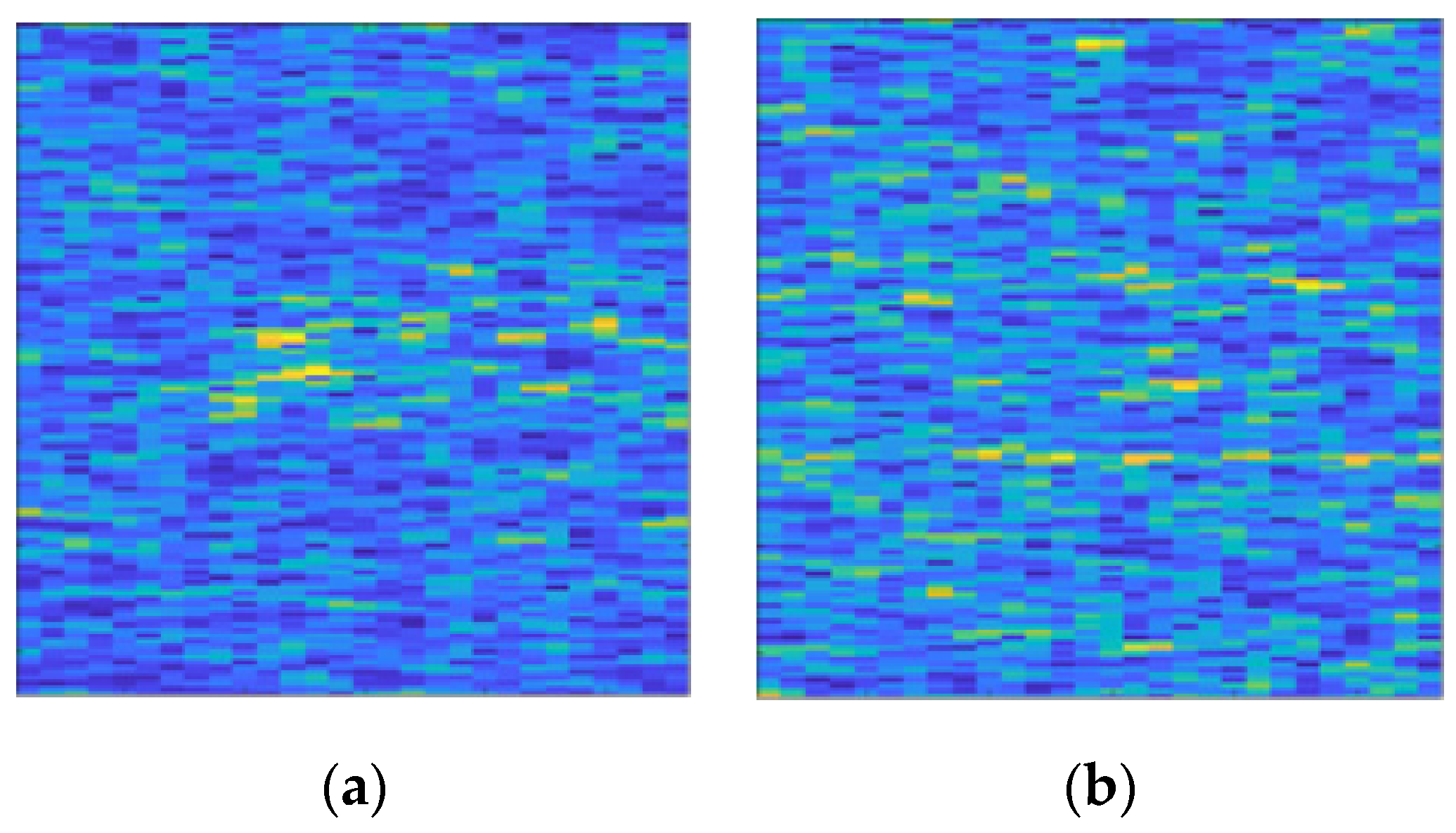

There are 11,000 samples that contain only clutter and no target signal. The signal-to-clutter ratio (SCR) of the clutter-interfered samples containing the target signal ranges from −5 dB to −20 dB at 1 dB intervals, with 4000 samples for each SCR. The time-frequency spectra used in this section, rendered as color maps, are displayed in Figure 8.

5.1.1. Comparison with Convolutional Neural Networks

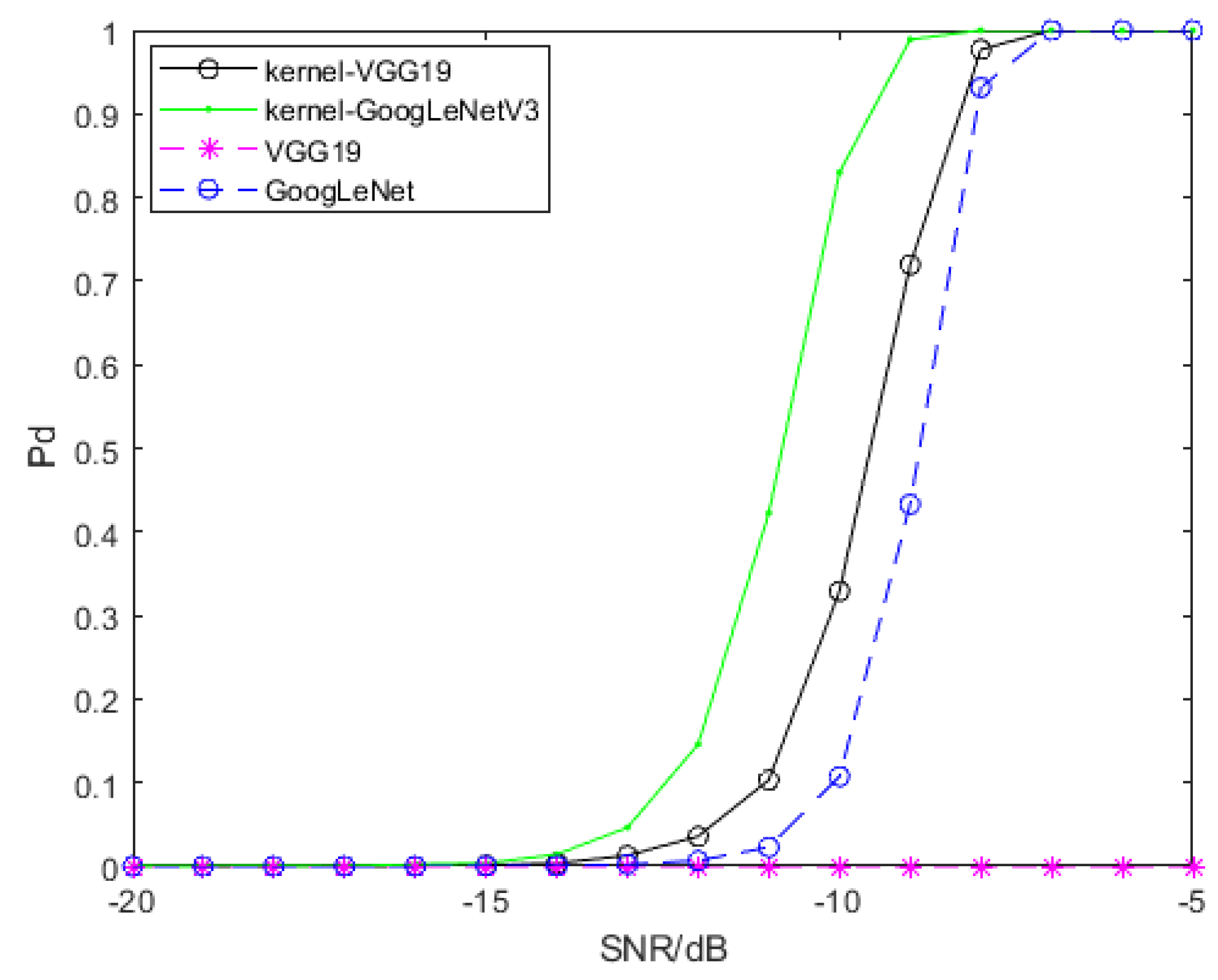

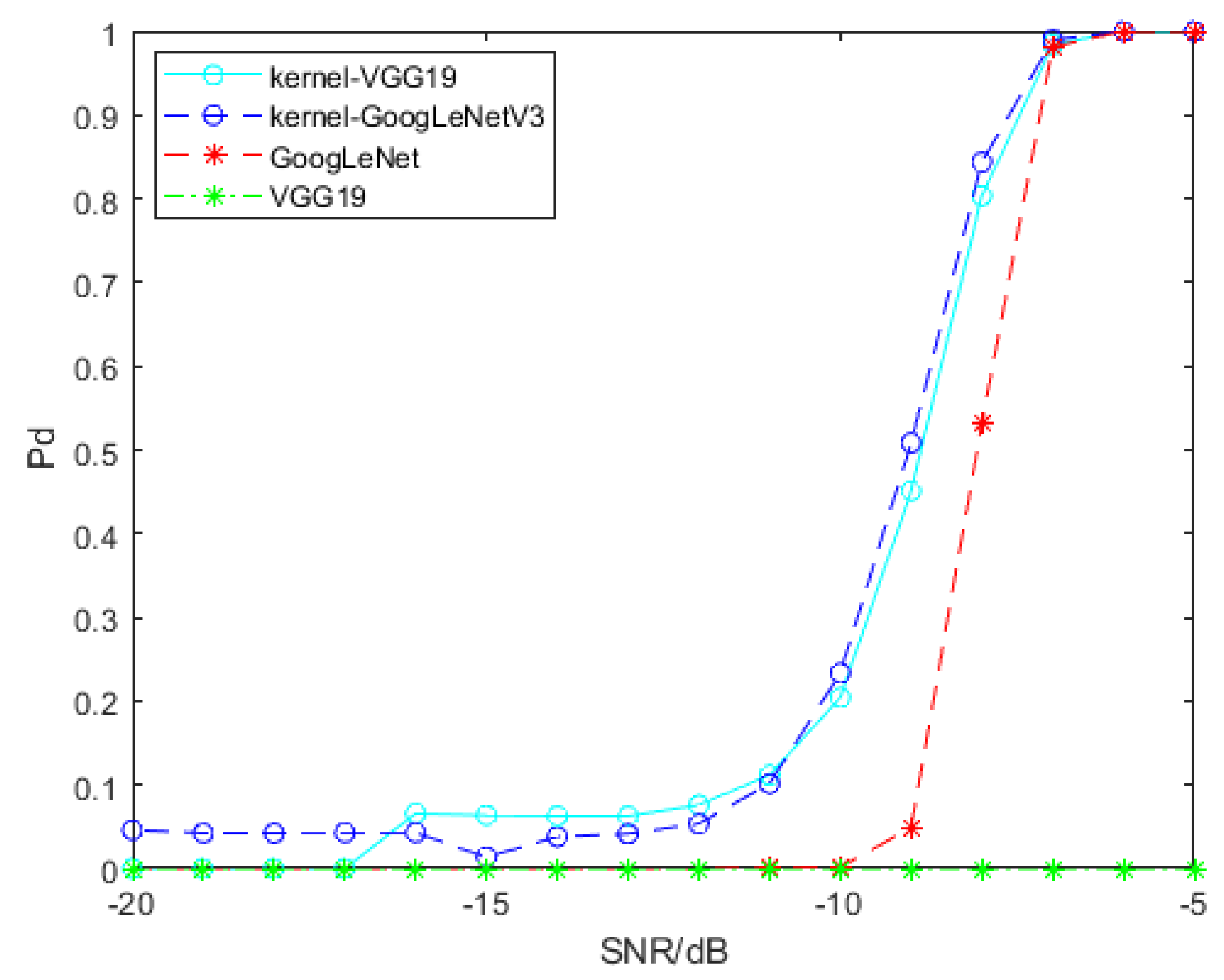

For the purpose of signal detection, SPDnet is used to process the SPD matrices generated from convolution features, and GoogLeNet and VGG19 are used to process the time-frequency spectra. Feature maps of the time-frequency spectra are generated and output by TensorFlow 1.8 and then processed by the RBF kernel function to generate SPD matrices. We use transfer learning to adapt GoogLeNet and VGG19 to our signal detection problem, and retrain the SPDnet. The samples used for training all models are pure clutter samples and clutter-interfered signal samples with an SCR of −5 dB, −10 dB, or −15 dB. In every training session, we use 1000 pure clutter samples and 1000 clutter-interfered signal samples, all of the latter having the same SCR. The remaining samples are used to evaluate the detection performance of each model. Model training involves some preset parameters that are not updated during iteration; we call them hyperparameters. The hyperparameters involved in this paper and their values are as follows: batch size, 20; learning rate, 0.001; weight decay, 0.0005; number of epochs for SPDnet, 500; number of epochs for GoogLeNet and VGG19, 800. All models have been fully trained. We trained SPDnet on an i7-8565U CPU, while GoogLeNet and VGG19 were trained on an Nvidia GeForce GTX 1080 Ti GPU. The detection probability of the above models is shown in Figure 9. The SCR of the signal samples used for training is −5 dB.

Figure 9 shows that the two SPD matrix learning networks based on convolution features have advantages in the detection problem. On the whole, their detection performance is better than that of GoogLeNet, and VGG19 cannot detect the target signal. The gain of the SPD matrix network trained on SPD matrices from the pool2 convolution features of VGG19 relative to GoogLeNet is about 0.6 dB when the detection probability of both models reaches 70%, and the gain of the SPD matrix network trained on SPD matrices from the convolution features of GoogLeNetV3 relative to GoogLeNet is 2 dB when the detection probability of both models reaches 80%.

Table 1 compares the false alarm probability of the different models involved in this paper. Their false alarm probability is not greater than

when the SCR is −5 dB. As the SCR decreases, the false alarm probability of all models increases, but that of the two SPD matrix network models increases greatly.

5.1.2. The Effect of Hyperparameters

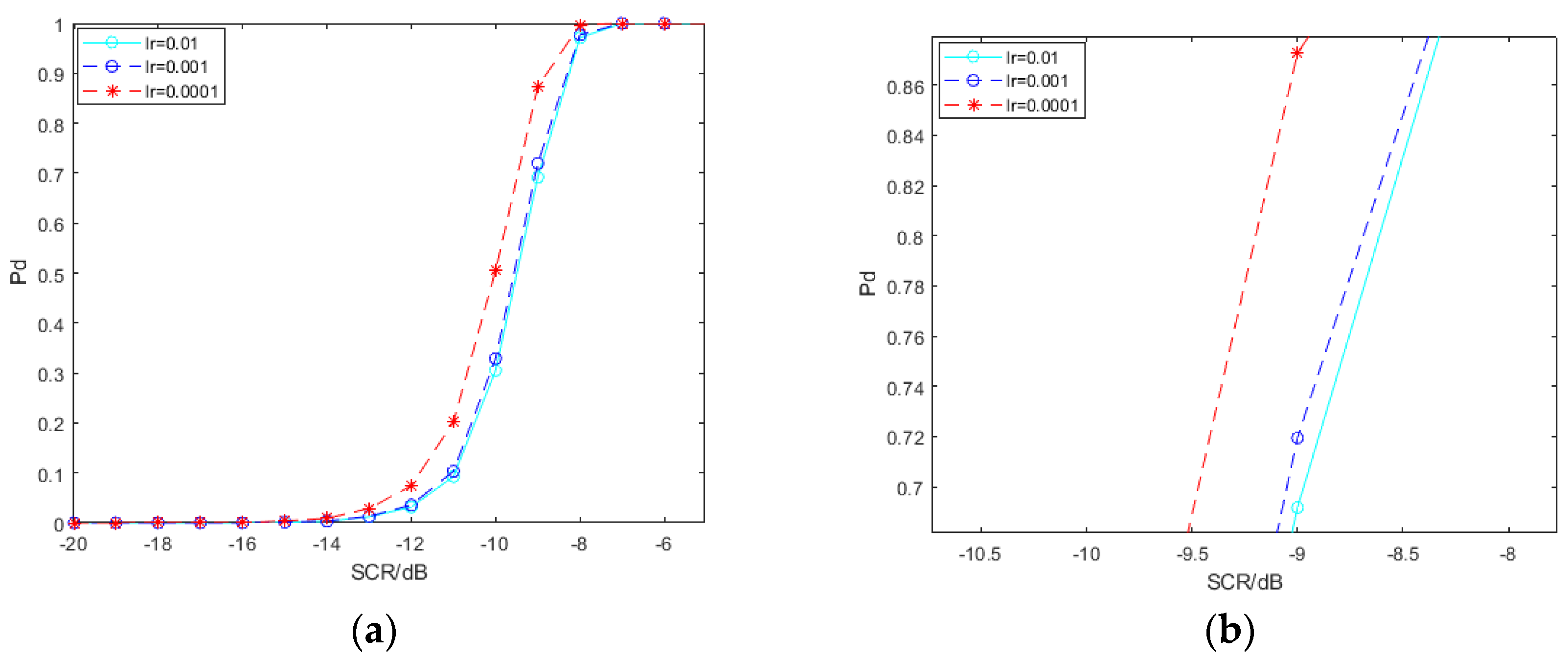

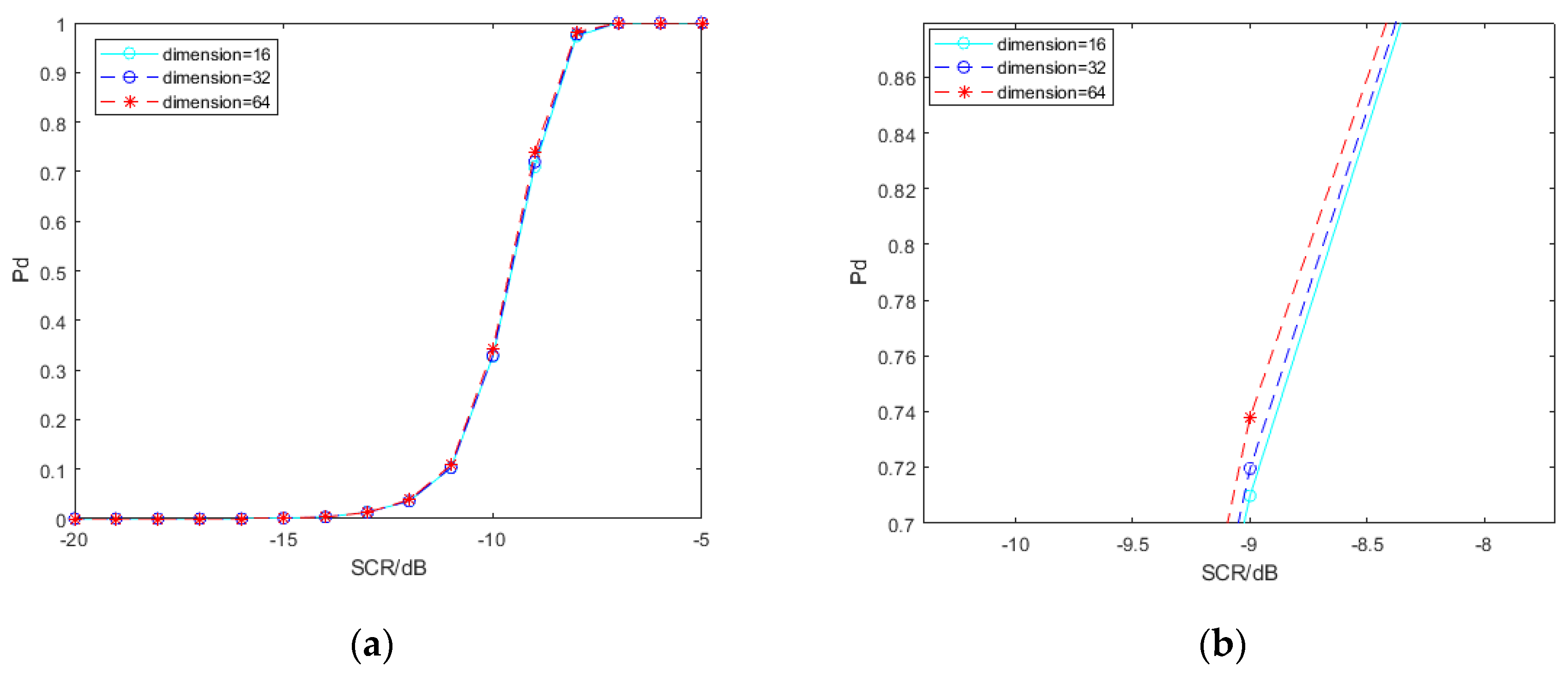

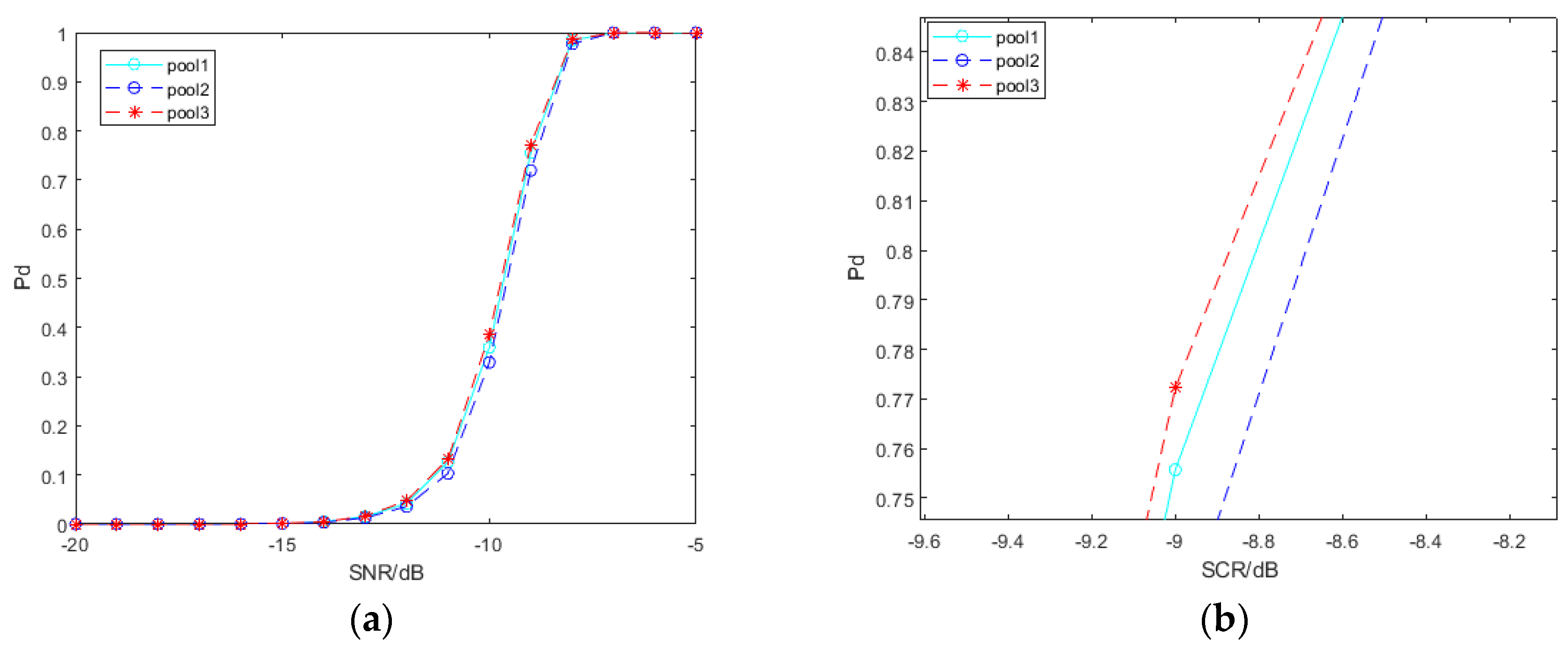

The learning effect of deep neural networks is influenced by hyperparameters. We explore the impact of the learning rate, the output layer of the convolution features, and the dimension of the SPD matrix before it enters the LogEig layer on the performance of the proposed SPD matrix learning method for signal detection. To simplify the work, we use only the SPD matrix network based on the pool2 convolution features of VGG19. The clutter-interfered signal samples used by the network models in Figure 10, Figure 11 and Figure 12 all have an SCR of −5 dB.

The detection probability of the SPD matrix learning network trained on SPD matrices from the pool2 convolution features of VGG19 under different learning rates is shown in Figure 10. The results show that a low learning rate improves the detection probability. With the learning rate set to 0.0001, the SPD matrix learning method obtains a gain of about 0.5 dB over the other settings at a detection probability of 80%. In the backpropagation algorithm of a neural network, the learning rate is the step length of each iteration in the search for a local optimum. A high learning rate may skip over the optimum. If the training time is sufficient, a small learning rate helps find the local optimum.
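The effect of the step length can be seen on a toy problem. The sketch below runs plain gradient descent on f(x) = x², which is unrelated to the networks in this paper and only illustrates how a large learning rate steps over the optimum while a small one approaches it.

```python
def gradient_descent(lr, steps=50, x0=1.0):
    """Minimize f(x) = x^2 with a fixed learning rate."""
    x = x0
    for _ in range(steps):
        x -= lr * 2.0 * x   # the gradient of x^2 is 2x
    return x

small = gradient_descent(lr=0.1)   # each step shrinks x toward the optimum at 0
large = gradient_descent(lr=1.1)   # each step overshoots and |x| grows
print(abs(small) < 1e-3, abs(large) > 1.0)
```

With the small rate the iterate contracts by a factor of 0.8 per step; with the large rate it is multiplied by −1.2 per step, so it jumps across the optimum and moves away from it.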

In this paper, “dimension” refers to the size of the SPD matrix before it enters the LogEig layer. The SPD matrix becomes more compact and discriminative through bilinear mapping and eigenvalue rectification. In theory, however, reducing the matrix size is bound to lose some features. The results shown in Figure 11 confirm this conjecture: the detection probability improves slightly as the dimension increases. It is important to note that the overall effect is small.

As Figure 5 shows, the deeper the network layer, the more abstract the extracted convolution features. In theory, SPD matrices constructed from the convolution features of different layers therefore differ. We select the convolution features output by three layers of VGG19 to construct SPD matrices: pool1, pool2, and pool3. The influence of the convolution features generated by different layers is shown in Figure 12. As illustrated, the detection performance of SPD matrices based on the convolution features of pool1 and pool3 is better than that based on the convolution features of pool2. This may be related to the characteristics of the convolution features and of the SPD matrices.

On the one hand, the deeper the layer, the more abstract the extracted convolution features, which may lead to a loss of information for signal detection. The convolution features extracted at shallow layers retain more information, but there are fewer feature maps, so the generated SPD matrix is smaller. On the other hand, although the convolution features extracted at deeper layers are more abstract, there are more feature maps and a more comprehensive description of the samples, which yields an SPD matrix with a larger dimension.

The false alarm probability of the above models under different learning rates, dimensions, and output layers of the convolution features is shown in Table 2, Table 3 and Table 4. In general, the false alarm probability increases rapidly as the SCR decreases. Only when the SCR is −5 dB is the false alarm probability within the allowed range.

5.2. Experimental Analysis of Semi-Physical Simulation Data

In this section, we use part of the IPIX (Ice Multiparameter Imaging X-Band) lake radar echo data as the interfering clutter [31]. Table 5 and Table 6 list the data files used and their basic parameters. Because the data contain no clear target information, we added one target signal. The number of pulses per sample is 1000, and the other parameters are consistent with Section 5.1. The time-frequency spectra used in this section, rendered as color maps, are shown in Figure 13.

The false alarm probability of the different models is shown in Table 7. As the SCR decreases, the false alarm probability of all models increases rapidly, which may be related to the uneven distribution of real sea clutter. The false alarm probability of the two convolutional neural network models increases more slowly than that of the two models based on SPD matrix learning.

The detection performance of the SPD matrix learning networks and the convolutional neural networks is shown in Figure 14. Compared with Section 5.1, the detection performance has changed significantly. The detection performance of the two SPD matrix learning networks based on convolution features is better than that of GoogLeNet and VGG19. Their advantage over GoogLeNet is obvious when the SCR is low.

5.3. Complexity Analysis

In this section, we analyze the complexity of the models from two aspects: training time and the storage space occupied by the models. The comparisons of training time and of storage space are shown in Table 8 and Table 9, respectively. Note that no GPU is used for training the SPD matrix networks, as mentioned in Section 5.1.1. The comparison shows that our model has low complexity.

6. Conclusions

We present a deep SPD matrix method for signal detection based on spectral convolution features. By transforming convolution features into SPD matrices through a nonlinear kernel function and feeding them to a deep SPD matrix learning network, the method can detect a target signal under low SCR. The advantages of this approach are that it combines convolution features with the SPD matrix, converts the original samples to the SPD matrix space, and exploits the Riemannian manifold property of that space to enhance the discriminability between different samples. At the same time, the use of deep learning improves the signal detection capacity. We evaluate the performance of our method on a simulation data set with K-distribution clutter as interference and a semi-physical simulation data set with real sea clutter as interference. The results show that the method is effective: it obtains a gain of 0.5–2 dB on the simulation data set and a gain of 0.5–1 dB on the semi-physical simulation data set. We also explored the impact of the hyperparameters associated with our method. Because interference in real environments is non-uniform, our method may have poor detection ability in such conditions. Our next goal is to enhance the anti-interference ability of our method and reduce the possibility of false alarms.