1. Introduction

The variable selection problem in regression analysis consists of finding a suitable set of predictors for the response variable y among the p columns of a fixed design matrix $X$ with n rows (observations). In a Bayesian setting we have to evaluate the posterior probability of each regression model $M_\gamma$, where, with the usual notation, $\gamma = (\gamma_1, \ldots, \gamma_p)$ with $\gamma_j = 1$ if column $j$ is in model $M_\gamma$ and $\gamma_j = 0$ otherwise. The aim of this paper is to estimate this posterior probability, $\pi(\gamma \mid y)$, for all $\gamma$ in the model space $\Gamma$. The main point is that the cardinality of $\Gamma$ is of order $2^p$, which is huge even for moderate values of $p$, even if it were $p < n$. In what follows, whatever p is, we only evaluate the evidence for models whose size is no larger than n, including the intercept and the regression error variance.

Each posterior probability $\pi(\gamma \mid y)$ can be calculated exactly (not just estimated) by means of all Bayes Factors (BF) $BF_{\gamma 0}$, $\gamma \in \Gamma$, where the marginal distribution of model $M_\gamma$ is compared against that of a common null nested model $M_0$. The reference null model considered here is the regression model with the intercept only. To obtain $\pi(\gamma \mid y)$, a prior on the model space, $\pi(\gamma)$, must be chosen.

Different priors on the model space, $\pi(\gamma)$, have been proposed, starting from the discrete uniform prior $\pi(\gamma) = 2^{-p}$, which fulfills the requirements of the principle of insufficient reason, up to the hierarchical uniform prior [1], $\pi(\gamma) \propto \binom{p}{|\gamma|}^{-1}$, in which $|\gamma|$ indicates the size of model $M_\gamma$. Under the hierarchical uniform prior, models of the same size receive the same prior probability, and this also controls the false discovery rate in declaring a covariate important when it is not. This is relevant for large p and when the model is sparse [1].

Rather than comparing different definitions of the model prior $\pi(\gamma)$, we consider different definitions of the BF induced by the prior on the model parameters. In particular, we focus on the semi-conjugate multivariate normal prior on the regression coefficients, which leads to a closed-form expression of the BF; this is important for computational feasibility. For instance, the conventional prior approach in [2] has suitable properties (or desiderata). An important one is the so-called predictive matching property, which assures that $BF_{\gamma 0} = 1$ if there is not enough information in the data to distinguish between $M_\gamma$ and $M_0$. This is obtained by using the concept of effective sample size, the number used to rescale the prior covariance matrix to obtain a unit information prior, that is, a prior that bears the same information as one sample. The other approach is that of the non-local priors detailed in [3]. These priors are non-local in the sense that the minimum of the prior density is at the null hypothesis, in contrast to other usual approaches. The effect is that, asymptotically in n, such priors recognize the true set of important covariates at a faster rate (the so-called learning rate) than the conventional prior approach, although they do not meet the predictive matching desideratum. In the sequel, we refer to these two sets of priors as the conventional and non-local prior approaches; these are the only two definitions of BFs considered in this work.

Beyond the choice of the prior on model parameters and/or the prior on models, the point of this work is that, even with a closed-form expression of the BFs, an exhaustive exploration of the model space $\Gamma$ is not feasible, and thus a stochastic model search must be employed to estimate the posterior model probabilities $\pi(\gamma \mid y)$. When estimating $\pi(\gamma \mid y)$ with the intention of interpreting the nature of the true underlying model, a summary of $\pi(\gamma \mid y)$ of particular interest here is the inclusion probability of a covariate j, denoted by $\pi_j$ and defined by marginalizing $\pi(\gamma \mid y)$ over all $\gamma$ such that $\gamma_j = 1$. The inclusion probability $\pi_j$ is necessary to define the median probability model [4], defined as the model containing the covariates that have $\pi_j \ge 1/2$. If such a model exists, it has been shown to be very close to the true model even under strong collinearity [5].
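As an illustration of the marginalization just described, the following Python sketch computes inclusion probabilities from a table of model probabilities and then extracts the median probability model. The function names, the toy probabilities, and the threshold of 1/2 are assumptions made for this example only; they are not taken from the paper.

```python
from itertools import product


def inclusion_probabilities(model_probs, p):
    """Sum the probability of every model that contains covariate j."""
    incl = [0.0] * p
    for gamma, prob in model_probs.items():
        for j, g in enumerate(gamma):
            if g == 1:
                incl[j] += prob
    return incl


def median_probability_model(incl, threshold=0.5):
    """Keep the covariates whose inclusion probability reaches the threshold."""
    return tuple(1 if q >= threshold else 0 for q in incl)


# Toy posterior over all 2^3 models (hypothetical weights, for illustration).
p = 3
weights = {gamma: 1.0 for gamma in product((0, 1), repeat=p)}
weights[(1, 0, 1)] = 6.0
weights[(1, 0, 0)] = 3.0
total = sum(weights.values())
model_probs = {gamma: w / total for gamma, w in weights.items()}

incl = inclusion_probabilities(model_probs, p)
mpm = median_probability_model(incl)
```

With p = 3 the full enumeration is trivial; the difficulty discussed in the text is that the same sum has $2^p$ terms in general, so it cannot be evaluated exhaustively.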

When the model space $\Gamma$ cannot be fully explored, a stochastic model search is employed in place of heuristic algorithms, as it allows the posterior model probabilities to be approximated. In the case of the normal linear regression model, a popular stochastic approach is the well-known Gibbs-sampling in [6]. Such an algorithm implements a Markov chain that jumps from one model to another based on the marginal distribution of the data (the same that appears in the Bayes Factors). This algorithm returns a dependent sequence $\gamma^{(1)}, \ldots, \gamma^{(S)}$, assumed to be a sample of size S from the posterior distribution over models. The Gibbs-sampling operates on the space $\Gamma$, using the fact that a closed-form expression of the marginal distributions is available for the normal regression model with the above-mentioned sets of conjugate priors (conventional and non-local). The main advantage of Gibbs-sampling over heuristic approaches is that the theory assures that, as the number of steps $S \to \infty$, all of $\Gamma$ is properly explored, whereas heuristic methods (like step-wise methods) may stop at local maxima and may not properly account for posterior model uncertainty.
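To make the model-to-model jumps concrete, the following Python sketch implements a generic component-wise Gibbs sampler over inclusion indicators. It is not the algorithm of [6] as implemented in any package: `log_score` is a hypothetical user-supplied function standing for the log of (marginal likelihood times model prior) up to a constant, and the toy target used here is an assumption chosen so that the correct answer is known.

```python
import math
import random


def gibbs_model_search(log_score, p, n_steps, rng, start=None):
    """Component-wise Gibbs sampler over inclusion vectors gamma in {0,1}^p."""
    gamma = list(start) if start is not None else [0] * p  # null model by default
    draws = []
    for _ in range(n_steps):
        for j in range(p):
            gamma[j] = 1
            log_in = log_score(gamma)
            gamma[j] = 0
            log_out = log_score(gamma)
            # Conditional probability of including j given the other indicators.
            prob_in = 1.0 / (1.0 + math.exp(log_out - log_in))
            gamma[j] = 1 if rng.random() < prob_in else 0
        draws.append(tuple(gamma))
    return draws


# Hypothetical target in which covariates enter independently with
# probabilities q_j, so the true inclusion probabilities are known.
q = (0.9, 0.1, 0.5)


def toy_log_score(gamma):
    return sum(g * math.log(qj / (1.0 - qj)) for g, qj in zip(gamma, q))


rng = random.Random(1)
draws = gibbs_model_search(toy_log_score, p=3, n_steps=5000, rng=rng)
freq = [sum(g[j] for g in draws) / len(draws) for j in range(3)]
```

In a real application `log_score` would be built from the closed-form marginal distributions mentioned above, and the conditional probabilities would depend on the current state of the other indicators.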

The aim of this paper is to use the Gibbs-sampling output to obtain an estimate of the inclusion probabilities, and in particular the two new estimators defined later. Different estimators derived from the Gibbs-sampling algorithm have been studied in [7] by applying known concepts of sampling theory. In particular, two estimators have been fully characterized:

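- (i)

The intuitive and popular empirical proportion of the sampled models containing covariate j, $\frac{1}{S} \sum_{s=1}^{S} 1(\gamma_j^{(s)} = 1)$, where $1(\cdot)$ is the usual indicator function for the scalar $\gamma_j^{(s)}$ in the vector $\gamma^{(s)}$. This estimator is called the empirical estimator;

- (ii)

The renormalized proportion of the sampled models containing covariate j, that is, the sum of the unnormalized posterior probabilities (Bayes factor times model prior) of the distinct visited models that contain covariate j, divided by the same sum over all distinct visited models, called the renormalized estimator.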
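The difference between the two estimators can be seen in a small Python sketch. The function names and the toy numbers are assumptions made for this example; `weight` stands for the unnormalized posterior probability of a model (Bayes factor times model prior), which is available in closed form in the setting described here.

```python
def empirical_estimator(draws, j):
    """Proportion of sampled models (with repetitions) that contain covariate j."""
    return sum(g[j] for g in draws) / len(draws)


def renormalized_estimator(draws, j, weight):
    """Renormalize the unnormalized posterior over the distinct visited models."""
    visited = set(draws)
    total = sum(weight(g) for g in visited)
    return sum(weight(g) for g in visited if g[j] == 1) / total


# Hypothetical Gibbs output with p = 2 and hypothetical unnormalized weights.
draws = [(1, 0), (1, 0), (1, 1), (0, 0)]
toy_weights = {(0, 0): 1.0, (1, 0): 4.0, (0, 1): 2.0, (1, 1): 1.0}

emp = empirical_estimator(draws, 0)                      # uses visit frequencies
ren = renormalized_estimator(draws, 0, toy_weights.get)  # uses weights of visited models
```

The empirical estimator depends on how often each model is visited, whereas the renormalized estimator depends only on which models are visited; the model (0, 1) is never sampled here and therefore contributes to neither.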

According to [

7] both are consistent estimators of

for

and that the error of

is the sum of two components: the error of

plus (or minus) a term that depends on the correlation between posterior probability of a

and its probability to be a visited model for the Gibbs-sampling algorithm. Basically, if the Gibbs-sampling moves very little around a model

just because it has a high posterior probability (but not necessarily the largest one),

will be more biased than

[

7] otherwise it will be more precise than

. Moreover, if the model is sparse, the models visited for finite S depend on the initial state of the chain (for instance, the null model or, for $p < n$, the full model).

The idea of this paper is to rethink all these estimators of the inclusion probabilities and use a proper Bayesian approach to analyze the sequence of sampled models. In practice, each sampled model can be viewed as a vector of non-ordinal categorical variables with two levels, so that the sample can be represented as a very sparse contingency table with $2^p$ cells, whose cell probabilities are the posterior model probabilities. Therefore, estimating the cell probabilities is equivalent to estimating the posterior model probabilities. The main observation here is that this is the same setup as in [8], except that the samples are dependent instead of independent as assumed in [8], although for large S, and given that this is a Gibbs-sampling, the dependence becomes mild. Deriving the posterior distribution of the cell probabilities is the same as deriving an estimate of the posterior model probabilities and thus of the inclusion probabilities. That is, from this posterior distribution we define one of the proposed estimators, which we will compare against the empirical and renormalized estimators, and which also allows us to properly account for the uncertainty around the obtained estimate.
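The contingency-table view can be illustrated with a short Python sketch that stores only the occupied cells. The function name and the toy draws are assumptions made for this example.

```python
from collections import Counter


def sparse_cell_counts(draws):
    """Count how often each visited cell (model) appears; unvisited cells are omitted."""
    return Counter(tuple(g) for g in draws)


# Hypothetical Gibbs output with p = 4 covariates and S = 5 draws.
draws = [(1, 0, 0, 1), (1, 0, 0, 1), (0, 0, 0, 0), (1, 1, 0, 1), (1, 0, 0, 1)]
counts = sparse_cell_counts(draws)
p = len(draws[0])
occupied_fraction = len(counts) / 2 ** p
```

Since at most S cells can be occupied out of $2^p$, the table is necessarily sparse whenever S is much smaller than $2^p$, which is the situation that motivates a model-based estimate of the cell probabilities.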

Section 2 illustrates the Bayesian model for analyzing the Gibbs-sampling output and the approximation used to obtain the two proposed estimators (specified below). Model implementation, a simulation study, and a real data application on Travel and Tourism data are considered in Section 3. Conclusions and final remarks are left for Section 4.

2. The Dirichlet Process Mixture Model for Estimating Posterior Model Probabilities

The Dirichlet process prior model was initially considered for sparse contingency tables in [

8] and here it is applied to the more specific context of covariate selection and consequent estimation of covariate inclusion probabilities.

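The parameters of interest are $\pi = \{\pi_{c_1 \cdots c_p}\}$, the probability tensor on the space $\{0, 1\}^p$ of size $2^p$. These are the joint probabilities of all covariates, and thus of all models in the model space, namely the cell probabilities $\pi_{c_1 \cdots c_p} = \Pr(\gamma_1 = c_1, \ldots, \gamma_p = c_p)$, where every $c_j \in \{0, 1\}$.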

In the present setup, we attempt to estimate these probabilities from the Gibbs-sampling output,

regarded here as the data used to estimate

. Specifically, a sample

is a dichotomous unordered categorical variable and we denote the two categories of

, by

. The key idea in the Dirichlet process mixture model is representing probability

by decomposing it as an additive mixture of

k (possibly infinite) sets of probabilities,

where

is the probability vector of the

sets of distributions

, with

, where

is the probability for covariate

j to be included into the set of predictors given the probability distribution over all

labeled by

h.
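For reference, the decomposition described in this paragraph can be written in the product-mixture form commonly used for sparse contingency tables, specialized to binary indicators. The symbols $\nu_h$ (mixture weights) and $\psi_{hj}$ (inclusion probability of covariate j in component h) are notation assumed here for illustration and may differ from the paper's own.

```latex
\Pr(\gamma_1 = c_1, \ldots, \gamma_p = c_p)
  = \sum_{h=1}^{k} \nu_h \prod_{j=1}^{p} \psi_{hj}^{c_j} (1 - \psi_{hj})^{1 - c_j},
\qquad c_j \in \{0, 1\}, \quad \nu_h \ge 0, \quad \sum_{h=1}^{k} \nu_h = 1 .

% Summing over all c_l with l \neq j gives the implied inclusion probability of covariate j:
\Pr(\gamma_j = 1) = \sum_{h=1}^{k} \nu_h \, \psi_{hj} .
```

The second line follows because, within each component, the indicators are independent, so every factor other than the j-th sums to one.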

It is important to note that is the j covariate inclusion probability and its estimator is also an estimator of if the distribution h has high posterior probability given . In particular, if sets of probabilities are ordered according to their posterior probabilities, and then estimation of could be a good candidate for being a conditional (to ) estimator of .

For this purpose, we define the estimator

understood for all

j, given that the first component of the mixture has the highest probability of

.

The likelihood of the two parameters

and

given

is

Introducing the latent class indicator , the conditional probability to a specific set h is and we have that s are the inclusion probabilities of covariates conditional to the latent class indicator.

Thus the marginal distribution of

is obtained by marginalizing over all latent class indicators, namely

, is the parameter of interest which leads to the definition of our estimator

The larger the

k is, the better is the representation of

, thus we allow for

by using the following non-parametric prior:

where

corresponds to a Dirichlet measure and

Q to a Dirichlet process.

For the usual stick-breaking stochastic representation of this model, we have

where the Multinomial notation is more general than strictly needed here, as

observations are indicator variables. Parameters

induce non informative

a priori information about the probabilities of each covariate being in the model.

is the usual concentration parameter on the space of latent classes of model probability distributions. That is, for small values of

, the probability of having many classes of different probability distributions decreases. In what follows we will consider either

fixed or under another layer of uncertainty by setting a gamma prior with shape

and scale

with

and

as in [

8].
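The role of the concentration parameter can be illustrated with a minimal Python sketch of truncated stick-breaking weights. This is a generic construction under stated assumptions (truncation at k classes, Beta(1, alpha) stick proportions), not code from [8]; the function and variable names are hypothetical.

```python
import random


def stick_breaking_weights(alpha, k, rng):
    """Draw k mixture weights from a stick-breaking construction truncated at k."""
    weights, remaining = [], 1.0
    for _ in range(k - 1):
        v = rng.betavariate(1.0, alpha)  # proportion broken off the remaining stick
        weights.append(v * remaining)
        remaining *= 1.0 - v
    weights.append(remaining)  # truncation: the last class takes what is left
    return weights


rng = random.Random(0)
weights = stick_breaking_weights(alpha=1.0, k=10, rng=rng)
```

Small values of alpha make the first proportions close to one, so most of the mass falls on a few latent classes, in line with the interpretation of the concentration parameter given in the text.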

With this model at hand, we can obtain a justification for the popular empirical estimator of the inclusion probabilities. That is, for large S the Bayesian estimate approaches the empirical one, as this is a regular Bayesian model in which the prior information is washed out by the sample size S. This is an asymptotic, although not Bayesian, justification for using the empirical estimator instead of the proposed marginal one. In this comparison, the estimator based on the first mixture component instead plays the role of a more robust estimate, as it is based on the distribution with the largest probability. Therefore, comparing the marginal estimator against the first-component estimator is the same as comparing means against modes, except that the underlying randomness is on probability distributions instead of random variables. Finally, comparing the empirical and renormalized estimators against the two proposed ones is the same as comparing sampling-based estimators against Bayesian ones, which incorporate the shrinkage effect of the prior. The claim here is that such an effect can be arbitrarily large for finite S and arbitrarily large p.

2.1. Variational Algorithm for Approximate

The above stochastic representation suggests obtaining the posterior distribution by means of another Gibbs-sampling, described in [8], where it was used to obtain simulations from the posterior distribution of the mixture weights and of the component-specific probabilities. However, this algorithm can be very slow for large p, and the benefits of the proposed Bayesian approach could be matched by simply calculating the empirical or the renormalized estimator over larger values of S (if these could be obtained).

To avoid this drawback of the proposed modelling approach, we make use of a recent and faster variational algorithm illustrated in [9] and implemented in the R package mixdir. The algorithm relies on approximating distributions, q, for the posterior of the mixture weights and of the component-specific probabilities. Such distributions are derived by applying mean-field theory to variational inference (see [10]). The approximating q distributions of the variational approach turn out to be a mixture of Dirichlet distributions. For more details, see [9]. This algorithm is much faster than the initial Gibbs-sampling in [8] and thus may compensate for the need of a larger S to obtain good estimates of the inclusion probabilities.

3. Implementation and Examples

The R implementation of the proposed model is straightforward and does not need an ad hoc appendix, because the main packages are already available. In particular, the Gibbs-sampling step requires one of the following packages: BayesVarSel for BFs based on the conventional prior approach [2] and/or mombf for BFs based on non-local priors [11]. Finally, the package mixdir contains the variational Bayes method sketched above in Section 2.1.

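The minimal implementation for a response y and a design matrix X with p columns requires two steps:

- (i)

Obtain a sample of models using:

for the conventional prior: gammas <- GibbsBvs(y ~ ., data = data.frame(y = y, X))$modelslogBF[, -(p + 1)]

for the non-local prior: gammas <- modelSelection(y, X)$postSample

Note the parentheses in -(p + 1): the last column of modelslogBF contains the log BF and must be dropped, whereas -p+1 would drop column p - 1 instead.

- (ii)

Estimate the cell probabilities by cellsprob <- mixdir(gammas) and calculate, for a generic covariate j, the inclusion probabilities in each component of the mixture, pp <- unlist(cellsprob$category_prob[[j]]). Then the proposed estimators are computed from pp.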

In the examples and simulation studies illustrated below, we mainly vary the number of rows S of the matrix gammas calculated above.
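The final computation in the second step above can be sketched in a language-agnostic way. The following Python example takes mixture weights and component-specific inclusion probabilities as plain lists (hypothetical values, standing in for the output of a fitted mixture) and computes two summaries: a weighted average over components and the value in the most probable component. The function names are assumptions for this example, and the exact definitions of the estimators are those given in Section 2.

```python
def marginal_estimator(weights, psi, j):
    """Average the component-specific inclusion probabilities over the mixture."""
    return sum(w * comp[j] for w, comp in zip(weights, psi))


def top_component_estimator(weights, psi, j):
    """Inclusion probability of covariate j in the most probable component."""
    h_star = max(range(len(weights)), key=lambda h: weights[h])
    return psi[h_star][j]


# Hypothetical fitted mixture with k = 3 components and p = 2 covariates.
weights = [0.7, 0.2, 0.1]
psi = [[0.90, 0.05],
       [0.50, 0.50],
       [0.10, 0.90]]

marginal = [marginal_estimator(weights, psi, j) for j in range(2)]
top = [top_component_estimator(weights, psi, j) for j in range(2)]
```

The first summary behaves like a mean over probability distributions and the second like a mode, which is the contrast drawn in Section 2.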

3.1. Riboflavin Simulation Study

In what follows we consider the Riboflavin dataset (see [12]), related to riboflavin production by Bacillus subtilis. We have $n = 71$ observations, $p = 4088$ predictors (gene expressions), and a one-dimensional response (riboflavin production), y. We assume that the BF is obtained from either the conventional prior or the non-local prior. The prior on models, $\pi(\gamma)$, is the uniform prior.

We use the Riboflavin dataset only to fix the design matrix X, and we simulate the response vector y of size n 10,000 times from a linear model in which only three columns of X, picked at random for each simulated response, have an effect on y (the remaining columns of X are assumed to have no effect on y).

The Gibbs algorithm starts at the null model and is run for several values of S. The goal is to compare the proposed estimators against the existing ones.

Results regarding the estimation of

over all simulations with the Conventional and Non-Local priors are shown in

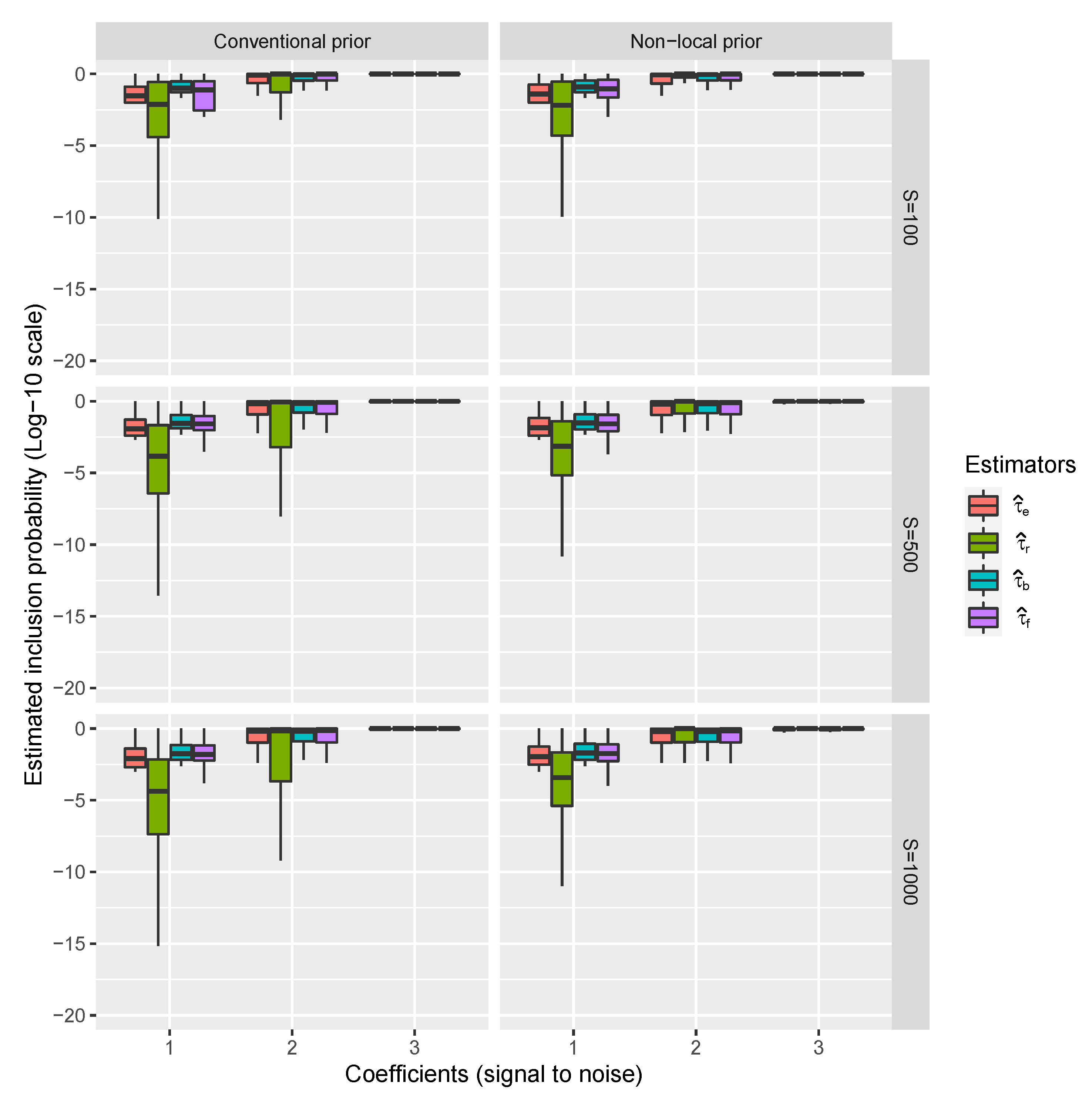

Figure 1.

When the signal in the data is small (coefficients equal to 1 or 2) and S is not large enough, the proposed estimators perform better than the existing ones, while the renormalized estimator performs better under the non-local prior approach. This is because, as mentioned above, the non-local prior approach favors the model learning rate, which is reflected in the values of the BFs used in the renormalization.

However, such improvements depend on the specific simulation setting, that is, the design matrix, the noise in the response (here, two standard deviations), the specific values of the assumed coefficients (i.e., 1, 2 and 3), and also the values of S. In particular, the improvement seems to disappear when the value of the coefficient is high (i.e., 2 or 3). To generalize these results with respect to the choice of specific values of the coefficients, the regression error standard deviation, and S, we analyze the results using probit regressions (one for each prior). In particular, we transform the 10,000 estimates to the probit scale and regress them on the signal in the data (the value of the coefficient) and the estimator type (four in total), with interactions among them (in total we have 12 probit regression coefficients: main effects plus interactions).

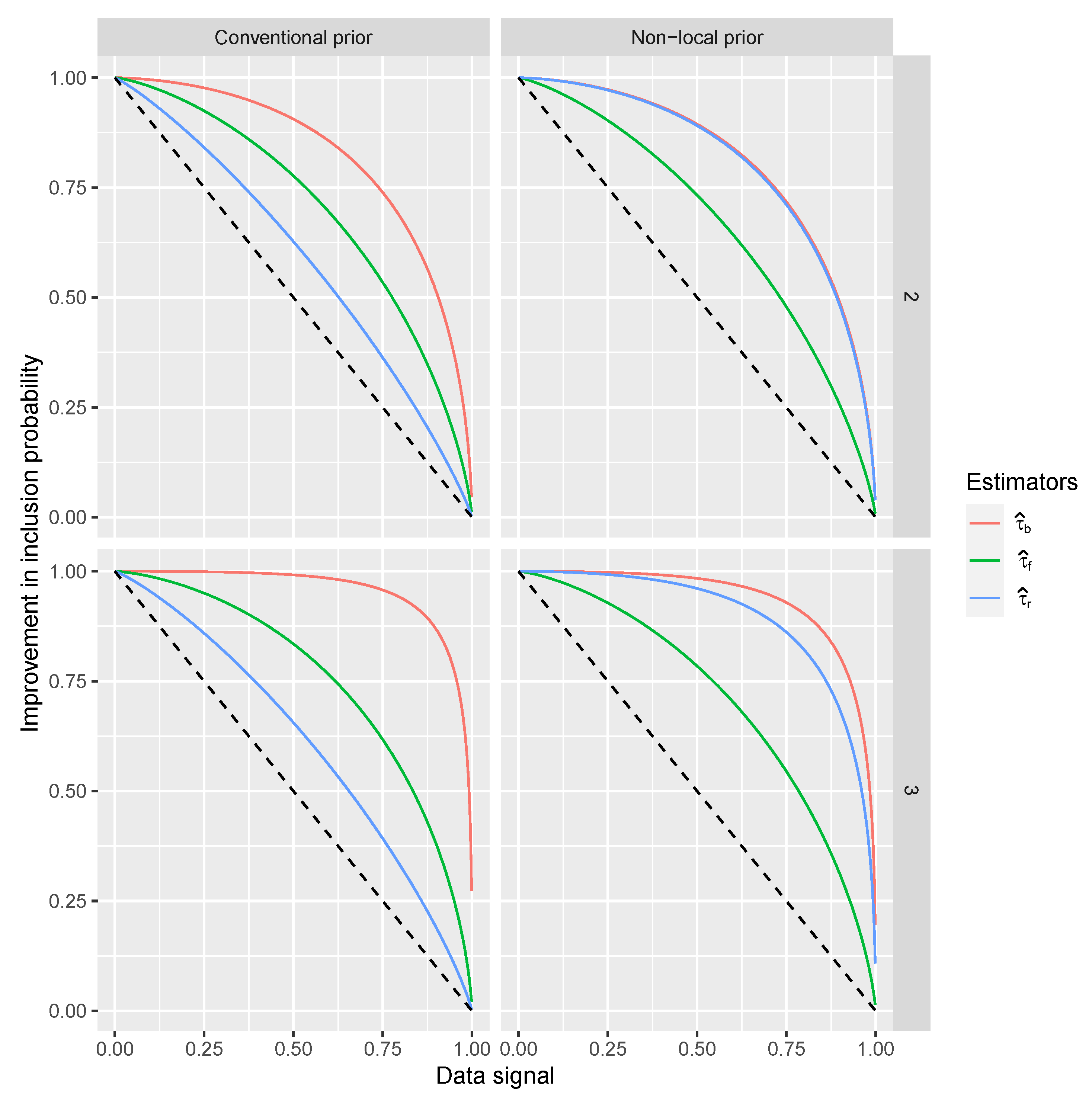

The resulting regression coefficients that we focus on indicate the improvement with respect to the

and the signal when the coefficient is 1. Such an improvement is an increment in the probit scale of the probability of having

for each combination of coefficient and estimator type. These increments are between 0.62 and 2.14 (all highly significant) and are important if the original point in the probit scale is low, while they are negligible if the point on the probit scale is large. Such an initial point corresponds to a signal in the data and the value of

S. The increment on

and the signal in the data is reported in

Figure 2.
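The remark that a given increment matters mostly when the starting point on the probit scale is low can be checked numerically. The following Python sketch applies the smallest reported increment (0.62) to two hypothetical baseline probabilities; the baselines and the function name are assumptions made for this example.

```python
from statistics import NormalDist

_std_normal = NormalDist()  # standard normal, used for the probit link


def shift_on_probit_scale(prob, increment):
    """Map a probability to the probit scale, add an increment, and map back."""
    return _std_normal.cdf(_std_normal.inv_cdf(prob) + increment)


low_before, high_before = 0.10, 0.99          # hypothetical baseline probabilities
low_after = shift_on_probit_scale(low_before, 0.62)
high_after = shift_on_probit_scale(high_before, 0.62)

gain_low = low_after - low_before
gain_high = high_after - high_before
```

The same increment more than doubles the low baseline probability, while it changes the high one by less than one percentage point.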

From

Figure 2 we can see that the proposed estimators

and

generally outperform the existing estimator and popular

as the increment on

is larger when the signal in the data is lower. The

perform better than

under the non-local prior as the corresponding BFs are very informative for detecting variables.

3.2. Travel and Tourism Data Set

Tourism worldwide is threatened by the Covid-19 spread and it represents an important source of income for many countries. For instance, in Spain, Mexico, and France, according to The Organization for Economic Co-operation and Development (OECD) in 2016, tourism represented more than 7% of the Gross Domestic Product. The total number of covariates considered here is fully observed in countries.

We apply the analysis using Conventional and non-local priors assuming that the number of latent probability distributions on the model space is and a fixed concentration parameter to be as parsimonious as possible on determining the median probability model for and a burn-in of 100 Gibbs-sampling steps.

It is important to note that, to reach substantial agreement between the existing estimators (empirical and renormalized) on one side and the newly proposed ones on the other, it is necessary to have S = 10,000, which requires about seven times the computational time used to obtain Table 1, which reports the estimated median probability models.

The most important factor is precisely the one most affected by Covid-19, namely the number of operating airlines. The other factors are less related to Covid-19 but are still important. One is the organization of T&T government monitoring, represented by the timeliness of providing data on T&T. There are also variables related to country features: infrastructure (connection and power), internet searches for T&T resources, and the presence of natural resources (total known species). As observed above, non-local priors lead to more complex models than the conventional prior, but the proposed estimators seem to be more robust with respect to the choice of the prior on model parameters; in fact, they report almost the same covariates regardless of the BF definition.