MRI Brain Classification Using the Quantum Entropy LBP and Deep-Learning-Based Features

Abstract

1. Introduction

2. Related Work

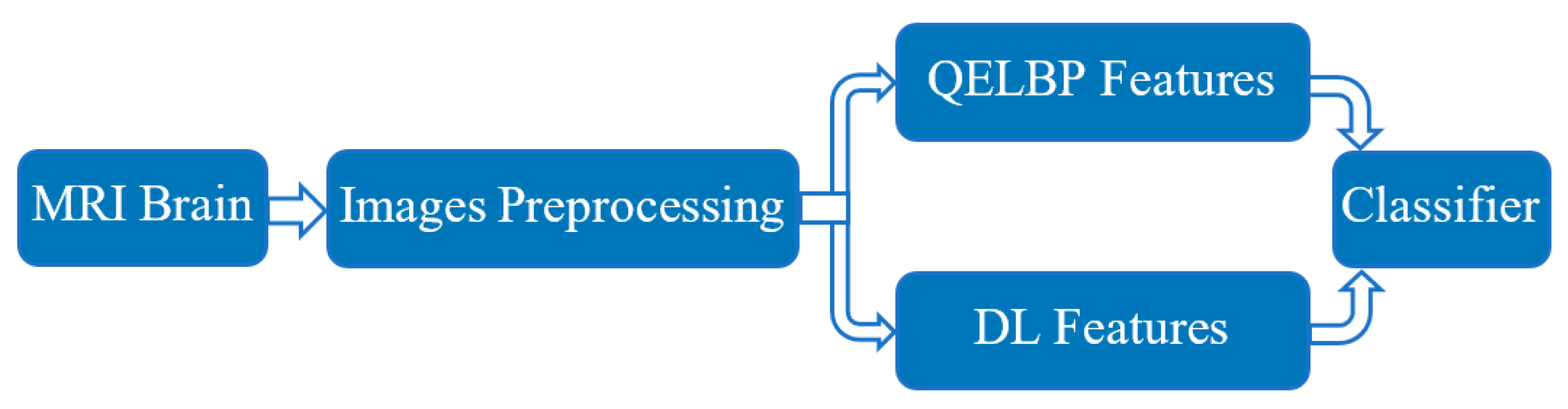

- The proposed QELBP features as a texture descriptor;

- The DL features as a deep feature extractor;

- The 154 MRI brain scans collected from Al-Kadhimiya Medical City, Iraq.

3. Proposed QELBP–DL Model

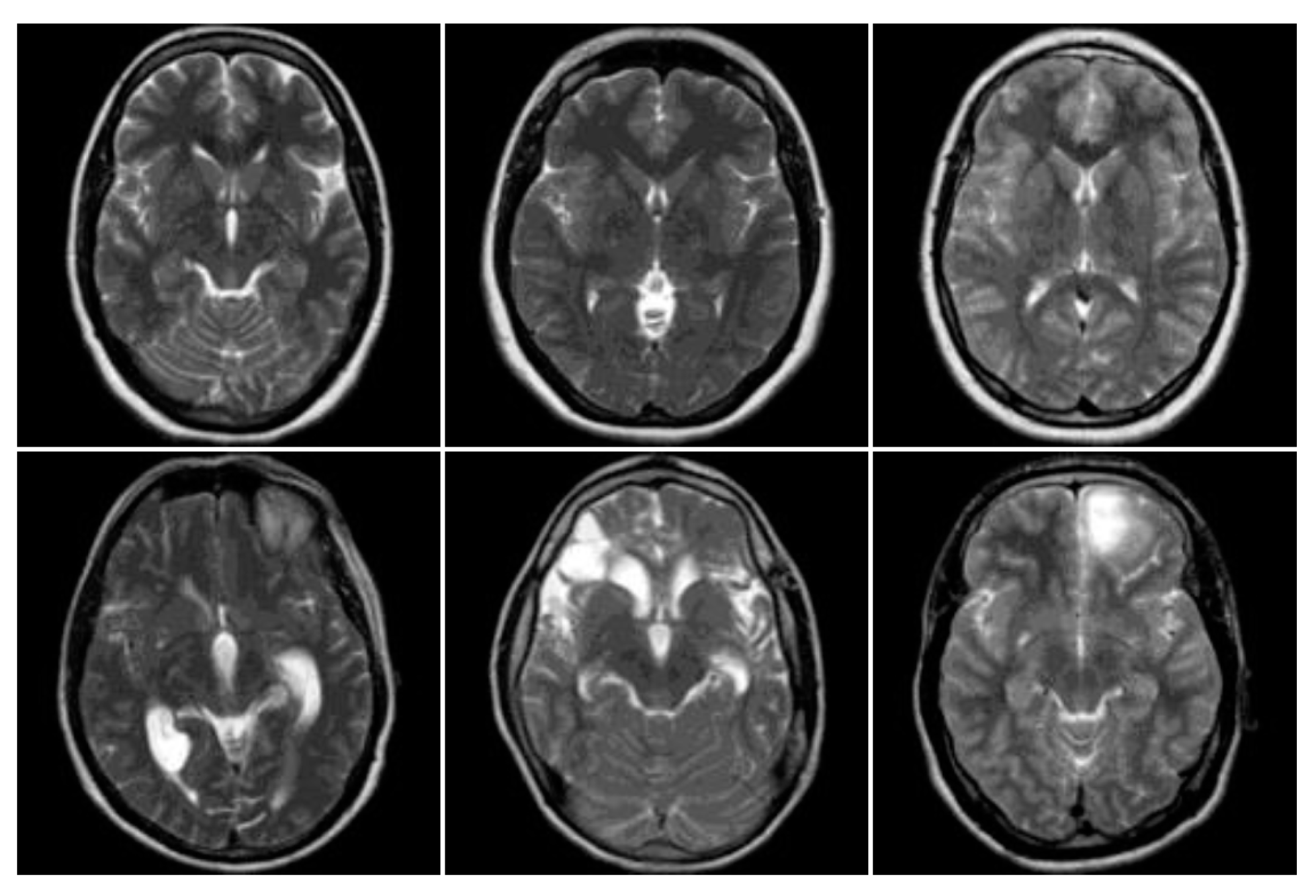

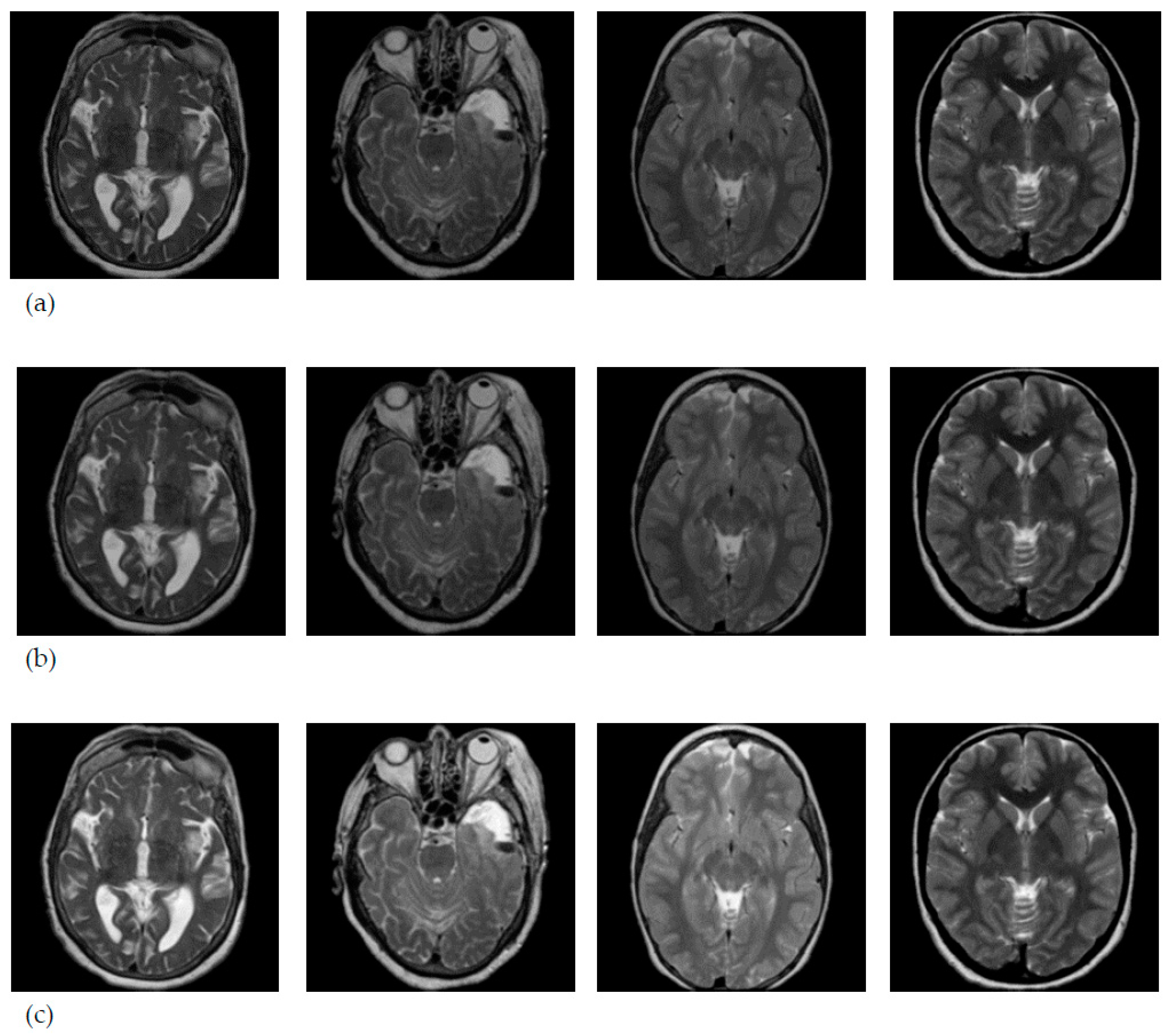

3.1. Data Collection

3.2. MRI Brain Scan Preprocessing

3.3. QELBP Feature Extraction

Algorithm 1: QELBP

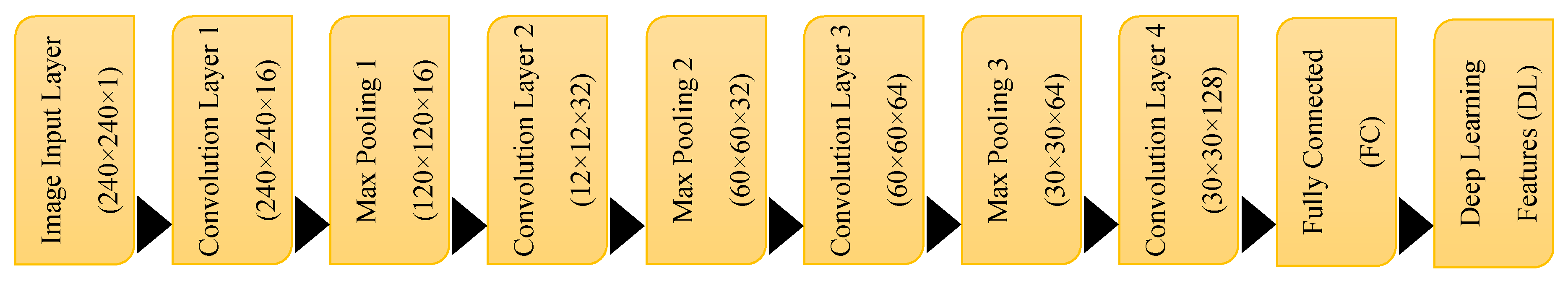

3.4. CNN Architecture for Feature Extraction

3.5. LSTM Classifier

3.6. Evaluation Metrics

4. Experimental Results

4.1. Performance Evaluation of MRI Brain Classification

4.2. Comparative Analysis of MRI Brain Classification

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Rosenberg, R.N. Atlas of Clinical Neurology; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2019.

- Işın, A.; Direkoğlu, C.; Şah, M. Review of MRI-based brain tumor image segmentation using deep learning methods. Procedia Comput. Sci. 2016, 102, 317–324.

- Jalab, H.A.; Hasan, A. Magnetic resonance imaging segmentation techniques of brain tumors: A review. Arch. Neurosci. 2019, 6.

- Hasan, A.M.; Meziane, F.; Jalab, H.A. Performance of grey level statistic features versus Gabor wavelet for screening MRI brain tumors: A comparative study. In Proceedings of the 2016 6th International Conference on Information Communication and Management (ICICM), Hertfordshire, UK, 29–31 October 2016; pp. 136–140.

- Nabizadeh, N.; Kubat, M. Brain tumors detection and segmentation in MR images: Gabor wavelet vs. statistical features. Comput. Electr. Eng. 2015, 45, 286–301.

- Liang, H.; Li, Q. Hyperspectral imagery classification using sparse representations of convolutional neural network features. Remote Sens. 2016, 8, 99.

- Sachdeva, J.; Kumar, V.; Gupta, I.; Khandelwal, N.; Ahuja, C.K. A package-SFERCB-“Segmentation, feature extraction, reduction and classification analysis by both SVM and ANN for brain tumors”. Appl. Soft Comput. 2016, 47, 151–167.

- Hasan, A.M.; Jalab, H.A.; Meziane, F.; Kahtan, H.; Al-Ahmad, A.S. Combining deep and handcrafted image features for MRI brain scan classification. IEEE Access 2019, 7, 79959–79967.

- Yang, X.; Fan, Y. Feature extraction using convolutional neural networks for multi-atlas based image segmentation. In Proceedings of the Medical Imaging 2018: Image Processing, Houston, TX, USA, 11–13 February 2018; p. 1057439.

- Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep feature extraction and classification of hyperspectral images based on convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251.

- Lai, Z.; Deng, H. Medical Image Classification Based on Deep Features Extracted by Deep Model and Statistic Feature Fusion with Multilayer Perceptron. Comput. Intell. Neurosci. 2018, 2018, 2061516.

- Despotović, I.; Goossens, B.; Philips, W. MRI segmentation of the human brain: Challenges, methods, and applications. Comput. Math. Methods Med. 2015, 2015, 450341.

- Hasan, A.M. An Automated System for the Classification and Segmentation of Brain Tumours in MRI Images based on the Modified Grey Level Co-Occurrence Matrix. Ph.D. Thesis, University of Salford, Salford, UK, 2017.

- Hasan, A.M.; Meziane, F.; Aspin, R.; Jalab, H.A. MRI brain scan classification using novel 3-D statistical features. In Proceedings of the Second International Conference on Internet of things, Data and Cloud Computing, Cambridge, UK, 22 March 2017; pp. 1–5.

- Toudjeu, I.T.; Tapamo, J.-R. Circular Derivative Local Binary Pattern Feature Description for Facial Expression Recognition. Adv. Electr. Comput. Eng. 2019, 19, 51–57.

- Umarov, S.; Tsallis, C.; Steinberg, S. On a q-central limit theorem consistent with nonextensive statistical mechanics. Milan J. Math. 2008, 76, 307–328.

- Marsaglia, G.; Bray, T.A. A convenient method for generating normal variables. SIAM Rev. 1964, 6, 260–264.

- Alom, M.Z.; Taha, T.M.; Yakopcic, C.; Westberg, S.; Sidike, P.; Nasrin, M.S.; Hasan, M.; Van Essen, B.C.; Awwal, A.A.; Asari, V.K. A state-of-the-art survey on deep learning theory and architectures. Electronics 2019, 8, 292.

- Lundervold, A.S.; Lundervold, A. An overview of deep learning in medical imaging focusing on MRI. Zeitschrift für Medizinische Physik 2019, 29, 102–127.

- Palangi, H.; Deng, L.; Shen, Y.; Gao, J.; He, X.; Chen, J.; Song, X.; Ward, R. Deep sentence embedding using long short-term memory networks: Analysis and application to information retrieval. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 24, 694–707.

- Sak, H.; Senior, A.W.; Beaufays, F. Long short-term memory recurrent neural network architectures for large scale acoustic modeling. In Proceedings of the 15th Annual Conference of the International Speech Communication Association (INTERSPEECH 2014), Singapore, 14–18 September 2014; pp. 338–342.

- Le, X.-H.; Ho, H.V.; Lee, G.; Jung, S. Application of long short-term memory (LSTM) neural network for flood forecasting. Water 2019, 11, 1387.

- Loizou, C.P.; Pantziaris, M.; Seimenis, I.; Pattichis, C.S. Brain MR image normalization in texture analysis of multiple sclerosis. In Proceedings of the 2009 9th International Conference on Information Technology and Applications in Biomedicine, Larnaca, Cyprus, 5–7 November 2009; pp. 1–5.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Zhou, B.; Lapedriza, A.; Torralba, A.; Oliva, A. Places: An image database for deep scene understanding. J. Vis. 2017, 17, 1–12.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2017, arXiv:1602.07360.

- Anitha, V.; Murugavalli, S. Brain tumour classification using two-tier classifier with adaptive segmentation technique. IET Comput. Vis. 2016, 10, 9–17.

- Sultan, H.H.; Salem, N.M.; Al-Atabany, W. Multi-classification of brain tumor images using deep neural network. IEEE Access 2019, 7, 69215–69225.

- Badža, M.M.; Barjaktarović, M.Č. Classification of Brain Tumors from MRI Images Using a Convolutional Neural Network. Appl. Sci. 2020, 10, 1999.

- Raja, P.S. Brain tumor classification using a hybrid deep autoencoder with Bayesian fuzzy clustering-based segmentation approach. Biocybern. Biomed. Eng. 2020, 40, 440–453.

| Actual Class | Predicted: Abnormal | Predicted: Normal |
|---|---|---|
| Abnormal (positive) | TP | FN |
| Normal (negative) | FP | TN |
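
The four cells of the confusion matrix above are enough to derive the accuracy, sensitivity, specificity, and precision figures reported in the comparison tables. The sketch below uses the standard textbook definitions, which are assumed (not confirmed by the surviving text) to be the ones used in Section 3.6. The class name `ConfusionMatrix` and the example counts are hypothetical and are not results from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Binary confusion matrix with 'abnormal' as the positive class."""
    tp: int  # abnormal scans predicted abnormal
    fn: int  # abnormal scans predicted normal
    fp: int  # normal scans predicted abnormal
    tn: int  # normal scans predicted normal

    def accuracy(self) -> float:
        # Fraction of all scans that were classified correctly.
        return (self.tp + self.tn) / (self.tp + self.fn + self.fp + self.tn)

    def sensitivity(self) -> float:
        # True-positive rate: abnormal scans correctly flagged.
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # True-negative rate: normal scans correctly cleared.
        return self.tn / (self.tn + self.fp)

    def precision(self) -> float:
        # Fraction of 'abnormal' predictions that were truly abnormal.
        return self.tp / (self.tp + self.fp)


# Hypothetical counts for illustration only (not taken from the paper).
cm = ConfusionMatrix(tp=45, fn=5, fp=10, tn=40)
print(f"accuracy={cm.accuracy():.2%}  sensitivity={cm.sensitivity():.2%}  "
      f"specificity={cm.specificity():.2%}  precision={cm.precision():.2%}")
```

Each method divides by a count that is zero when the corresponding class or prediction is absent, so a production version would need to guard against empty denominators.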

| Methods | Accuracy (%) | TP (%) | TN (%) | AUC |
|---|---|---|---|---|
| QELBP | 89.50 | 94 | 85 | 0.8489 |
| DL | 93.50 | 98 | 89 | 0.9259 |
| Proposed QELBP–DL | 98.80 | 99 | 97.80 | 0.9864 |

| Method | Accuracy (%) | Feature Dimension | TP (%) | TN (%) |
|---|---|---|---|---|
| AlexNet [24] | 92 | 4096 | 92 | 86 |
| GoogLeNet [25] | 90 | 1000 | 96 | 83 |
| SqueezeNet [26] | 94 | 1000 | 97 | 88 |
| Proposed QELBP–DL | 98.80 | 12 | 99 | 97.80 |

| Methods | Dataset Used | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | TP (%) | TN (%) |
|---|---|---|---|---|---|---|---|
| Anitha and Murugavalli, 2016 [27] | Custom dataset-2 | 96.60 | 88 | 33 | 96 | 96 | 100 |
| Sachdeva et al., 2016 [7] | Institute of Medical Education and Research, Chandigarh, India | 91 | x | x | x | x | x |
| Sultan et al., 2019 [28] | Tianjin Medical University, China | 96.13 | 93 | 97 | 95 | 93 | 97 |
| Badža and Barjaktarović, 2020 [29] | Tianjin Medical University, China | 96.56 | 97 | 96 | 94 | 96 | 95 |
| Raja, 2020 [30] | BRATS 2015 database | 98.50 | 96 | 99 | 96 | 98 | 96 |
| Proposed | Custom datasets | 98.80 | 98 | 99 | 97 | 99 | 97.8 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hasan, A.M.; Jalab, H.A.; Ibrahim, R.W.; Meziane, F.; AL-Shamasneh, A.R.; Obaiys, S.J. MRI Brain Classification Using the Quantum Entropy LBP and Deep-Learning-Based Features. Entropy 2020, 22, 1033. https://doi.org/10.3390/e22091033
