Abstract

This paper uses quantitative eye tracking indicators to analyze the relationship between images of paintings and human viewing. First, we build the eye tracking fixation sequences through areas of interest (AOIs) into an information channel, the gaze channel. Although this channel can be interpreted as a generalization of a first-order Markov chain, we show that the gaze channel is fully independent of this interpretation, and stands even when first-order Markov chain modeling would no longer fit. The entropy of the equilibrium distribution and the conditional entropy of a Markov chain are extended with additional information-theoretic measures, such as joint entropy, mutual information, and conditional entropy of each area of interest. Then, the gaze information channel is applied to analyze a subset of Van Gogh paintings. Van Gogh artworks, classified by art critics into several periods, have been studied under computational aesthetics measures, which include the use of Kolmogorov complexity and permutation entropy. The gaze information channel paradigm allows the information-theoretic measures to analyze both individual gaze behavior and clustered behavior from observers and paintings. Finally, we show that there is a clear correlation between the gaze information channel quantities that come from direct human observation, and the computational aesthetics measures that do not rely on any human observation at all.

1. Introduction

The eye is one of the most important organs through which human beings perceive the external world and transmit information. An eye tracking system can track the trajectory of the eye, thereby obtaining eye movement indicators such as fixation position, number of fixations, and fixation duration. By analyzing eye movement data, the observer’s subjective view can be obtained, so that we can expect to improve our ability to measure an individual’s understanding of an image or a scene.

With more and more researchers using eye tracking technology as a research tool, eye tracking has become a promising method in academic and industrial research. It has the potential to provide insights into many issues in the visual and cognitive fields: education [1,2,3], medicine [4,5,6,7], assistive technology for people with a variety of debilitating conditions [8,9,10], better interface design [11,12,13], marketing and media [14,15,16], and human–computer interaction methods for making decisions [17,18,19]. Furthermore, eye movement provides a new perspective and experimental method for cognitive research [20,21,22].

Thus, there is an increasingly urgent need for quantitative comparison of eye movement indicators [23]. The scanpath map [24,25,26,27], heat map [28,29], and transition matrix [30] are several important methods for analyzing fixation sequences. The scanpath map represents the fixations as an ordered sequence, and vector- and character-based editing methods have been applied to calculate the similarity and difference of scanpaths. The heat map represents the eye movement data as a Gaussian mixture model, but because this method loses the sequence information of the fixations, an index based on the heat map can only reflect the similarity of different regions of the observed image, and ignores the order of fixations.

Compared with the heat map, modeling the gaze transitions as a first-order Markov chain transition matrix between areas of interest (AOIs) preserves the gaze switch information. Thus, quantitative analysis based on the transition matrix, of which gaze entropy (the entropy of the Markov chain) is one of the most important measures, has been used in recent years. Gaze entropy was first applied to flight simulation in [31], although it is only in recent years that it has attracted growing interest from researchers. Shiferaw et al. have recently reviewed and discussed gaze entropy in [32].

As a first-order Markov chain can be interpreted as an information channel, we proposed the gaze information channel for the first time in [33], and applied it to study the artwork of Van Gogh. In addition to incorporating the stationary entropy and gaze transition entropy, the gaze information channel paradigm allows for additional information-theoretic measures to analyze the gaze behavior. The new informational measures include joint entropy, mutual information, and normalized mutual information. The gaze channel was further explored in [34], where the cognition of scientific posters was studied from the perspective of the gaze channel.

In this paper, we expand our previous work in [33,34] in several lines:

- Unlike in [33,34], the gaze channel here does not depend on the gaze sequences being interpreted as a first-order Markov chain.

- We study images (artworks from Van Gogh) as in [33], versus posters containing text plus images in [34].

- We study 12 Van Gogh artworks versus only three artworks, and 10 observers versus three observers in [33].

- We use nine AOIs, versus only three in [33] and up to six in [34].

- We use a regular grid division into AOIs, versus the predetermined AOIs in [34].

- We compare vertical division vs. horizontal division, allowing us an intuitive explanation of mutual information.

- We present and interpret the evolution of gaze channel quantities with observation time.

- We compare and relate our results with informational aesthetics measures described in the literature.

The rest of the paper is organized as follows. In Section 2, we present previous work on eye tracking data analysis based on transition matrices. In Section 3, we model the gaze sequences between AOIs as an information channel. In Sections 4 and 5, we present the experimental design and the analysis of results. Conclusions and future work are presented in Section 6.

2. Background

Vandeberg et al. [35] used a multi-level Markov modeling approach to analyse gaze switch patterns. After modeling the individuals’ gaze as Markov chains, Krejtz et al. [36,37] calculated the entropy of the stationary distribution and the transition or conditional entropy to interpret the overall distribution of attention over AOIs, as the Markov chain transition probability matrix has a dual interpretation as a conditional probability matrix. Raptis et al. [38] asked participants to complete recognition tasks of varying complexity and then applied gaze entropy measures to the eye tracking analysis; the results revealed quantitative differences in visual search patterns among individuals. They stated that eye gaze measures, including gaze entropies, fixation duration, and number of fixations, can reflect personal differences in cognitive styles.

Zhong et al. [39] modeled the relationship between image features and saliency as a Markov chain, and in order to predict the transition probabilities of the Markov chain, they trained a support vector regression (SVR) model on real eye tracking data. Finally, given the stationary distribution of this chain, a saliency map predicting the user’s attention can be obtained.

Huang [40] used gaze data from women browsing apparel retailers’ web pages to study how their attention was influenced by visual content composition and slot position in personalized banner ads. Gu et al. [30] used heatmap entropy (visual attention entropy (VAE)) and its improved version, relative VAE (rVAE), to analyze eye tracking data from observing web pages; the results showed that VAE and rVAE correlate with perceived aesthetics. Hwang et al. [41] stated that it is important to notice that scenes consist of objects representing not only low-level visual information, but also higher-level semantic data, and they presented a transitional semantic guidance computation to estimate gaze transitions.

Ma et al. [33] introduced the gaze information channel using Van Gogh paintings and, based on preliminary results, observed that the channel quantities can be given a coherent interpretation to classify both the observers and the artworks. Hao et al. [34] tracked observers’ eye movements while reading scientific posters, which contain both text and data, and modeled eye tracking fixation sequences between AOIs as a Markov chain and subsequently as an information channel to find quantitative links between eye movements and cognitive comprehension. The AOIs were determined by the design of the poster.

3. Methodology

3.1. Gaze Information Channel

Given an image I divided into s AOIs, where the set of AOIs is $S = \{1, 2, \ldots, s\}$, let us build a matrix C of successively visited AOIs. Element $c_{ij}$ of matrix C is the number of times AOI j has been visited immediately after AOI i, that is, the number of direct transitions from i to j. This information is extracted from the recorded gaze sequences. Observe that the row total $c_{i\cdot} = \sum_{j} c_{ij}$ gives the total number of times AOI i was visited. Observe also that if we consider an additional fictional AOI, say AOI number “0”, that represents both the initial state before our gaze lands on the painting and the final state when our gaze leaves the painting, then the number of exits from and the number of entries into any state have to be the same, that is, $c_{i\cdot} = c_{\cdot i}$ for all i, where $c_{\cdot i} = \sum_{j} c_{ji}$. If the trajectories are not short, $c_{i\cdot} \approx c_{\cdot i}$ even without AOI “0”, so for practical purposes we can consider them equal and ignore AOI “0”.

Observe that matrix C can be considered as the realization of a joint occurrence of random variables X and Y, $(X, Y)$, where each pair $(x, y)$ represents the occurrence of the gaze entering AOI x and leaving for AOI y. Let $c_{i\cdot} = \sum_{j} c_{ij}$, $c_{\cdot j} = \sum_{i} c_{ij}$, and $c = \sum_{i}\sum_{j} c_{ij}$; then the joint probabilities can be constructed as $p(i, j) = c_{ij}/c$, the conditional probabilities matrix P as $p_{ij} = p(j|i) = c_{ij}/c_{i\cdot}$, and the marginal probabilities $\pi$ as $\pi_{i} = c_{i\cdot}/c$. Observe that by construction $\sum_{i} \pi_{i}\, p_{ij} = \pi_{j}$. We have thus built an information channel [42] from the set S of areas of interest to itself. Observe that this information channel can also be considered as a first-order Markov chain with equilibrium distribution $\pi$ and transition matrix P. In our previous work [33,34], we introduced the gaze information channel from the first-order Markov chain, whereas here we introduce the information channel first. The difference is not trivial: when introducing the information channel directly, it does not matter whether the gaze sequences follow a first-order or a higher-order Markov chain.

However, even if the gaze follows a Markov chain of order higher than one, the construction above still allows us to model gaze sequences as a first-order Markov chain. In that case, the transition probabilities between states should be understood as average ones: given AOI i, $p_{ij}$ gives the average transition probability to AOI j, as the actual transition probabilities would depend on the instant within the total observation time, and might change from the first seconds of observation to later ones. Previous work has considered the gaze transitions as a first-order Markov chain [32].
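As a minimal sketch of the construction above, the following Python code builds the count matrix, the joint and conditional probabilities, and the marginal distribution from a sequence of visited AOIs. The function name `gaze_channel`, the 0-based AOI indices, and the example sequence are illustrative assumptions and are not taken from the experiments; consecutive fixations in the same AOI are assumed to count as a transition from that AOI to itself.

```python
def gaze_channel(aoi_sequence, s):
    """Return (counts, joint, conditional, marginal) for a sequence of AOI indices 0..s-1."""
    counts = [[0] * s for _ in range(s)]
    # Count direct transitions i -> j between successive fixations.
    for i, j in zip(aoi_sequence, aoi_sequence[1:]):
        counts[i][j] += 1
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("at least two fixations are needed")
    row_totals = [sum(row) for row in counts]
    # Joint probabilities p(i, j) = c_ij / c.
    joint = [[c / total for c in row] for row in counts]
    # Conditional probabilities p(j | i) = c_ij / (row total of i); empty rows stay at zero.
    conditional = [
        [c / row_totals[i] if row_totals[i] else 0.0 for c in row]
        for i, row in enumerate(counts)
    ]
    # Marginal probabilities pi_i from the normalized row totals.
    marginal = [r / total for r in row_totals]
    return counts, joint, conditional, marginal


# Hypothetical trajectory over 3 AOIs that starts and ends in the same AOI.
sequence = [0, 1, 2, 1, 0, 0, 2, 0]
counts, joint, conditional, marginal = gaze_channel(sequence, 3)
```

Because this example trajectory is closed, the row and column totals of the count matrix coincide, so the marginal distribution is exactly stationary under the conditional matrix. For open trajectories this holds only approximately, as discussed in the text.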

AOIs are mainly defined following one of two strategies: content-dependent AOIs or grid AOIs. In this paper, grid AOIs are used.
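A grid division can be sketched as a simple mapping from fixation coordinates to a cell index. The function below is a hypothetical helper, not the software used in the experiment; the image size in the example, the row-major numbering of the cells, and the treatment of fixations outside the image are all assumptions made for illustration.

```python
def grid_aoi(x, y, width, height, rows=3, cols=3):
    """Return the row-major index of the grid cell containing (x, y), or None if outside."""
    if not (0 <= x < width and 0 <= y < height):
        return None  # fixation outside the image area
    col = int(x * cols / width)
    row = int(y * rows / height)
    return row * cols + col


# Hypothetical 900 x 600 pixel image divided into a 3 x 3 grid.
center_cell = grid_aoi(450, 300, 900, 600)          # middle cell
last_cell = grid_aoi(899, 599, 900, 600)            # bottom-right cell
# Three horizontal bands or three vertical strips use the same helper.
band = grid_aoi(450, 599, 900, 600, rows=3, cols=1)
strip = grid_aoi(899, 300, 900, 600, rows=1, cols=3)
```

Setting `rows=3, cols=1` or `rows=1, cols=3` gives three-AOI divisions into horizontal bands or vertical strips with the same code.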

3.2. Gaze Information Channel Measures

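In this section, Shannon’s information measures [42] for the gaze information channel are introduced. In addition to the gaze stationary entropy and gaze transition entropy used in previous work [36], the gaze information channel makes it possible to introduce more informational measures to study the eye movement data. In what follows, $\pi_i$ denotes the equilibrium probability of AOI i and $p_{ij}$ the conditional probability of a transition from AOI i to AOI j. In the information channel, the stationary entropy $H_s$ is defined as

$$H_s = H(X) = H(Y) = -\sum_{i=1}^{s} \pi_i \log \pi_i \tag{1}$$

and gives the uncertainty of the distribution of the gaze between the AOIs.

The entropy of row i, $H(Y|i)$, is defined as

$$H(Y|i) = -\sum_{j=1}^{s} p_{ij} \log p_{ij} \tag{2}$$

and gives the uncertainty about the next AOI when the current gaze location is the i-th AOI.

The conditional entropy $H_t$ of the information channel is given by the weighted average of the values $H(Y|i)$,

$$H_t = H(Y|X) = \sum_{i=1}^{s} \pi_i H(Y|i) = -\sum_{i=1}^{s} \pi_i \sum_{j=1}^{s} p_{ij} \log p_{ij} \tag{3}$$

and represents the randomness or uncertainty of the next gaze transition for all AOIs.

The joint entropy $H(X,Y)$ of the information channel is the entropy of the joint distribution of X and Y,

$$H(X,Y) = -\sum_{i=1}^{s} \sum_{j=1}^{s} \pi_i p_{ij} \log (\pi_i p_{ij}) \tag{4}$$

and measures the total uncertainty of the information channel. Observe that, being for the gaze information channel $H(X) = H(Y) = H_s$, then $H(X|Y) = H(Y|X) = H_t$, and as $H(X,Y) = H(X) + H(Y|X)$ then $H(X,Y) = H_s + H_t$.

The mutual information $I(X;Y)$, given by

$$I(X;Y) = H(Y) - H(Y|X) = H_s - H_t = \sum_{i=1}^{s} \sum_{j=1}^{s} \pi_i p_{ij} \log \frac{p_{ij}}{\pi_j} \tag{5}$$

indicates the total correlation, or information shared, between the AOIs.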
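The measures of this section can be sketched directly from a matrix of transition counts. The function name `channel_measures`, the dictionary keys, and the use of base-2 logarithms (bits) are assumptions made for illustration. The sketch computes the mutual information as the stationary entropy minus the conditional entropy, which presumes that the row and column totals of the count matrix are (approximately) equal, as argued in Section 3.1.

```python
from math import log2


def channel_measures(counts):
    """Stationary, conditional and joint entropy plus mutual information, in bits."""
    total = sum(map(sum, counts))
    # Stationary entropy from the normalized row totals.
    marginal = [sum(row) / total for row in counts]
    h_s = -sum(p * log2(p) for p in marginal if p > 0)
    # Conditional entropy: -sum_ij p(i, j) * log p(j | i).
    h_t = 0.0
    for row in counts:
        row_total = sum(row)
        for c in row:
            if c > 0:
                h_t -= (c / total) * log2(c / row_total)
    return {"H_s": h_s, "H_t": h_t, "H_xy": h_s + h_t, "I": h_s - h_t}


# Two hypothetical extreme cases with two AOIs.
alternating = channel_measures([[0, 2], [2, 0]])  # next AOI fully determined
uniform = channel_measures([[1, 1], [1, 1]])      # next AOI independent of the current one
```

In the alternating case the conditional entropy vanishes and all the stationary entropy is shared as mutual information; in the uniform case the mutual information vanishes.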

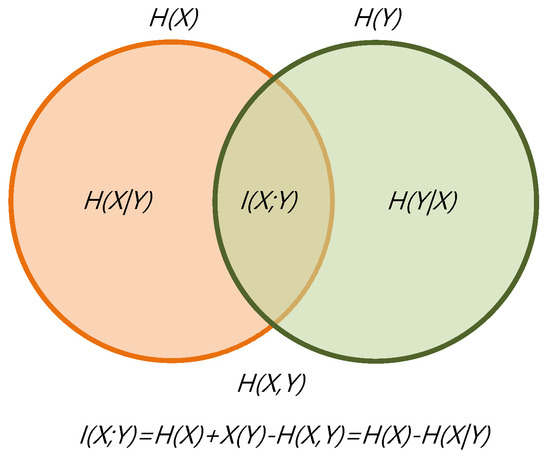

The relationships among Shannon’s information measures can be illustrated by a Venn diagram, as shown in Figure 1.

Figure 1.

The information diagram represents the relationship between information channel measures.

3.3. Informational Aesthetics Measures

To study the evolution of Van Gogh’s style, Rigau et al. [43,44,45,46,47] used a quantitative approach based on aesthetic measures, including the palette-based relative redundancy $M_B$, the Kolmogorov complexity-based redundancy $M_K$, and the number of regions needed for a given ratio of mutual information, $M_s^{-1}$.

Given a color image I of N pixels, where C represents the palette distribution (RGB with $2^{24}$ colors or luminance with $2^{8}$ values), the palette entropy $H(C)$ stands for the uncertainty of a pixel, and the maximum entropy $H_{\max}$ is 24 bits (RGB) and 8 bits (luminance), respectively. The relative redundancy is defined as

$$M_B = \frac{H_{\max} - H(C)}{H_{\max}} \tag{6}$$

where $M_B$ ranges in [0, 1] and represents the reduction of pixel uncertainty due to the choice of a palette with a given color probability distribution instead of a uniform distribution. Observe that $M_B$ is similar to the redundancy per character of a natural language [48] and corresponds to Bense’s information theoretic interpretation [49] of Birkhoff’s aesthetic measure [50].

From the perspective of Kolmogorov complexity, an image’s order or regularity can be measured by the difference between the image size $N H_{\max}$ and its Kolmogorov complexity $K(I)$. The normalization of the order gives us the aesthetic measure

$$M_K = \frac{N H_{\max} - K(I)}{N H_{\max}} \tag{7}$$

where $M_K$ ranges in [0, 1] and represents the degree of order of the image without any prior knowledge of the palette. Note that the higher the order of the image, the higher the compression ratio.
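Both redundancy measures can be sketched for an 8-bit luminance image given as a flat list of pixel values. The function names are hypothetical, and `zlib` is used here only as a convenient stand-in for the compressor that approximates the Kolmogorov complexity; it is an assumption of this sketch, not the compressor used in the cited work. With a real compressor the estimate can even become slightly negative for very noisy data, since the compressed file may exceed the raw size.

```python
import zlib
from collections import Counter
from math import log2


def palette_redundancy(pixels, h_max=8.0):
    """Relative redundancy of the palette: (H_max - H(C)) / H_max."""
    n = len(pixels)
    h_c = -sum((k / n) * log2(k / n) for k in Counter(pixels).values())
    return (h_max - h_c) / h_max


def compression_redundancy(pixels, h_max=8.0):
    """Order of the image, with Kolmogorov complexity approximated by compressed size."""
    raw_bits = len(pixels) * h_max
    compressed_bits = 8 * len(zlib.compress(bytes(pixels), 9))
    return (raw_bits - compressed_bits) / raw_bits


# Hypothetical images: a flat gray image and one using all 256 values equally.
flat = [128] * 10000
all_values = list(range(256))
flat_palette = palette_redundancy(flat)          # single color, maximal redundancy
spread_palette = palette_redundancy(all_values)  # uniform palette, no redundancy
flat_order = compression_redundancy(flat)        # highly compressible image
```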

We can segment an image into regions. The coarsest segmentation is to consider the whole image as a single region, and the finest segmentation would be to consider as many segments as pixels in the image. Given a segmentation, we represent by R the normalized areas of the regions. A given region can contain pixels of different colors from the palette C. Thus, an information channel between colors C and regions R can be established. The mutual information between C and R is given by

$$I(C;R) = \sum_{c \in C} p(c) \sum_{r \in R} p(r|c) \log \frac{p(r|c)}{p(r)} \tag{8}$$

where $p(c)$ is the probability of color c, $p(r)$ is the normalized area of region r, and $p(r|c)$ is the conditional probability of region r given color c.

For a decomposition of an image into n regions, the ratio of mutual information is defined by

$$M_s(n) = \frac{I(C;R)}{H(C)} \tag{9}$$

where $H(C)$ is the entropy of the palette distribution. The ratio ranges from 0 to 1. When we have one single region the mutual information is 0, and thus the ratio is 0. When we have as many regions as pixels we have captured the whole correlation of the image, and the ratio is 1. We are interested in how many regions n we need to divide the image into to reach a given ratio of mutual information. This is given by the inverse function

$$M_s^{-1}\!\left(\frac{I(C;R)}{H(C)}\right) = n \tag{10}$$

and is interpreted as a measure of image compositional complexity.
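The two limiting cases of the mutual information ratio can be checked with a short sketch that takes, for each pixel, its color and the label of the region it belongs to. The function name `mi_ratio` and the toy data are assumptions for illustration; the procedure used in [53] to choose the successive partitions is not reproduced here.

```python
from collections import Counter
from math import log2


def mi_ratio(colors, regions):
    """Ratio I(C;R) / H(C) for per-pixel color values and region labels."""
    n = len(colors)
    color_counts = Counter(colors)
    region_counts = Counter(regions)
    pair_counts = Counter(zip(colors, regions))
    h_c = -sum((k / n) * log2(k / n) for k in color_counts.values())
    if h_c == 0:
        raise ValueError("a single-color image has zero palette entropy")
    # I(C;R) = sum over (c, r) of p(c, r) * log( p(c, r) / (p(c) p(r)) ).
    mi = sum(
        (k / n) * log2(k * n / (color_counts[c] * region_counts[r]))
        for (c, r), k in pair_counts.items()
    )
    return mi / h_c


# Hypothetical 4-pixel image with two colors.
colors = [0, 0, 1, 1]
one_region = mi_ratio(colors, [0, 0, 0, 0])    # coarsest segmentation
pixel_regions = mi_ratio(colors, [0, 1, 2, 3]) # finest segmentation
```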

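In addition to the above measures, Sigaki et al. [51] presented a quantitative analysis of art by estimating the permutation entropy and the statistical complexity of a painting, considered, as in the above measures, as an array of pixel values. Given an image as a two-dimensional array, subarrays of size $d_x \times d_y$ are each considered as a single sequence of $d_x d_y$ components, and the order of the values of each sequence is classified into one of the $(d_x d_y)!$ possible orderings. For instance, for $d_x = d_y = 2$ we have $4! = 24$ possible orderings. All possible overlapping subarrays are considered, and after normalization we obtain a distribution P that represents the order of neighboring pixel values in the image, $P = \{p_i;\ i = 1, \ldots, n\}$ with $n = (d_x d_y)!$. The only parameters of the method are the values $d_x$ and $d_y$, also called embedding dimensions (for a more formal description, please refer to the work in [51,52]).

Then, the normalized permutation entropy is calculated by dividing the Shannon entropy of the distribution P,

$$S(P) = -\sum_{i=1}^{n} p_i \log p_i \tag{11}$$

by its maximum possible value $\log n$,

$$H(P) = \frac{S(P)}{\log n} \tag{12}$$

Sigaki et al. [51] argue that although the value of $H$ is a good measure of randomness, it cannot fully capture the degree of structural complexity present in the image matrix. Therefore, they further calculated the so-called statistical complexity

$$C(P) = D(P,U)\, H(P) \tag{13}$$

where $D(P,U)$ is a relative entropic measure (the Jensen–Shannon divergence) between P and the uniform distribution $U = \{u_i = 1/n;\ i = 1, \ldots, n\}$, computed as

$$D(P,U) = \frac{1}{D^{*}} \left[ S\!\left(\frac{P+U}{2}\right) - \frac{S(P)}{2} - \frac{S(U)}{2} \right] \tag{14}$$

where $\frac{P+U}{2} = \left\{ \frac{p_i + 1/n}{2};\ i = 1, \ldots, n \right\}$ and

$$D^{*} = -\frac{1}{2} \left[ \frac{n+1}{n} \log (n+1) + \log n - 2 \log (2n) \right] \tag{15}$$

is a normalization constant obtained by calculating the maximum of the bracketed term in Equation (14), which is reached when a single component of P equals one and all the others are zero.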
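The permutation entropy and statistical complexity described above can be sketched for a grayscale image stored as a list of rows. This is an illustrative reading of the method, not the reference implementation of [51]: the function names, the row-by-row flattening of each subarray, and the rule that ties are broken by position are assumptions. Natural logarithms are used; the base cancels in both normalized quantities.

```python
from collections import Counter
from math import factorial, log


def shannon(probabilities):
    """Shannon entropy with natural logarithm, ignoring zero entries."""
    return -sum(p * log(p) for p in probabilities if p > 0)


def permutation_entropy_complexity(image, dx=2, dy=2):
    """Return (normalized permutation entropy H, statistical complexity C)."""
    rows, cols = len(image), len(image[0])
    n = factorial(dx * dy)  # number of possible orderings
    patterns = Counter()
    # Slide over all overlapping dy x dx subarrays.
    for r in range(rows - dy + 1):
        for c in range(cols - dx + 1):
            window = [image[r + a][c + b] for a in range(dy) for b in range(dx)]
            # Ordinal pattern: positions sorted by pixel value (stable, so ties keep position order).
            order = tuple(sorted(range(len(window)), key=window.__getitem__))
            patterns[order] += 1
    total = sum(patterns.values())
    p = [k / total for k in patterns.values()]
    p += [0.0] * (n - len(p))  # orderings that never occur
    h = shannon(p) / log(n)
    # Normalized Jensen-Shannon divergence between P and the uniform distribution.
    mixture = [(p_i + 1.0 / n) / 2 for p_i in p]
    d_star = -0.5 * ((n + 1) / n * log(n + 1) + log(n) - 2 * log(2 * n))
    d = (shannon(mixture) - shannon(p) / 2 - log(n) / 2) / d_star
    return h, d * h


# Hypothetical 5 x 5 gradient image: every 2 x 2 window has the same ordering.
gradient = [[10 * r + c for c in range(5)] for r in range(5)]
h_gradient, c_gradient = permutation_entropy_complexity(gradient)
```

For the fully ordered gradient image a single ordering occurs, so both the normalized permutation entropy and the complexity vanish.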

4. Experimental Design

4.1. Participants

Twelve Master’s students from Tianjin University were selected to take part in the experiment. All participants had normal or corrected-to-normal vision. During the twenty minutes before the start of the experiment, participants were not allowed to use mobile phones or to read, as these activities may cause visual fatigue, and were asked instead to do eye exercises or other activities that relax the eyes, mind, and body. The data from two participants had to be excluded because their eye tracking rate was below 98%. In the end, eye movement data of 10 students (6 females, 4 males, average age 24.8) were available for the study.

4.2. Stimuli

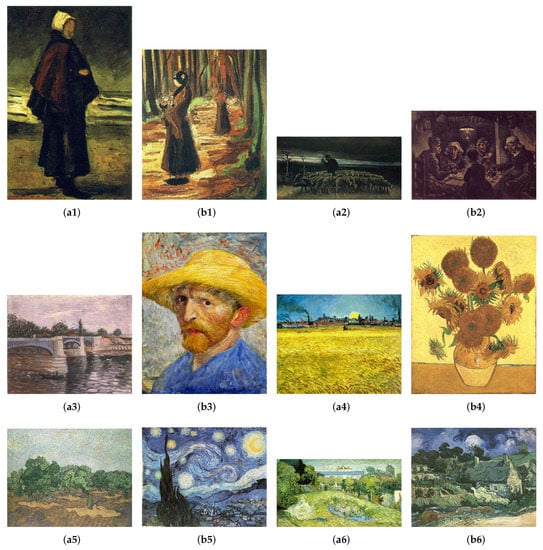

The stimuli are 12 paintings by Vincent Van Gogh in digital format. Van Gogh’s paintings are classified into six periods, in chronological order: Earliest Paintings (1881–1883), Nuenen/Antwerp (1883–1886), Paris (1886–1888), Arles (1888–1889), Saint-Remy (1889–1890), and Auvers-sur-Oise (1890). The paintings are divided into two groups (a and b), as shown in Figure 2. Each group includes six representative paintings, one from each period (periods numbered from 1 to 6). We have considered the two groups of paintings used by Feixas et al. [53], who give the values of the informational aesthetics measures of Section 3.3 for the 12 paintings. The 12 paintings were downloaded from The Vincent Van Gogh Gallery of David Brooks, http://www.Vggallery.com, a website that remains the most thorough and comprehensive Van Gogh resource on the World Wide Web.

Figure 2.

Groups a and b of representative paintings of each period (chronologically ordered from period 1: (a1 & b1) to period 6: (a6 & b6); Copyright 1996–2010 David Brooks). The values of the palette-based redundancy, the Kolmogorov complexity-based redundancy, and the number of regions for a given ratio of mutual information (Section 3.3) are given in parentheses for each painting. (a1) Fisherman’s Wife on the Beach, 1882 (0.418, 0.759, 1264). (b1) Two Women in the Woods, 1882 (0.310, 0.650, 2020). (a2) Shepherd with a Flock of Sheep, 1884 (0.463, 0.739, 875). (b2) The Potato Eaters, 1885 (0.575, 0.850, 1417). (a3) The Seine with the Pont de la Grande Jette, 1887 (0.385, 0.718, 1396). (b3) Self-Portrait with Straw Hat, 1887 (0.295, 0.726, 1272). (a4) Sunset: Wheat Fields Near Arles, 1888 (0.345, 0.697, 1648). (b4) Vase with Fifteen Sunflowers, 1888 (0.349, 0.581, 2736). (a5) Olive Grove: Pale Blue Sky, 1889 (0.339, 0.593, 2456). (b5) Starry Night, 1889 (0.322, 0.594, 1758). (a6) Daubigny’s Garden, 1890 (0.315, 0.714, 2375). (b6) Thatched Cottages at Cordeville, 1890 (0.312, 0.592, 2095).

4.3. Apparatus

The experiment used a mobile eye tracking device, the SMI ETG 2w, produced by the German company SMI. The eye tracker’s two non-contact infrared cameras (60/120 Hz) capture images of the observer’s eyes, and eye movements are calculated in real time based on the pupil and corneal reflection principle. Another camera of the eye tracker records the scene that the observer is viewing. In addition, the eye tracker is equipped with a USB cable to transfer the data collected by the cameras to the eye tracking control system.

The eye tracking control system is a high-performance workstation with the IView X software installed. The video data collected by the eye tracker is transferred to the workstation for image data analysis after MPEG coding. The eye movement data acquisition software IView X performs the fixation point calibration before the formal observation. In our work, we adopted three-point calibration for higher accuracy. After the data collection is completed, the Begaze software is used to generate the fixation positions.

4.4. Procedure

The calibration picture and the 12 Van Gogh paintings used in the formal experiment were presented on a computer monitor ( resolution; 23.8-inch LCD). Each participant was invited to sit in a chair in front of the monitor, with their eyes about 60 to 80 cm away from the screen and their chin resting on a fixed bracket. Then, the staff used the IView X software to perform a 3-point calibration for each participant. After calibration, the Van Gogh paintings were displayed in full screen, in random order. Each painting was observed for 45 s, followed by 10 s of rest, and the viewing mode was free viewing, that is, no viewing task was assigned to the observer. Before the observation, the researcher did not disclose any information about the paintings to the participant, in order to reduce the influence of top-down factors and facilitate the analysis of the relationship between human eye behavior and the painting content itself.

5. Result Analysis

5.1. Channel Measures Analysis with 9 AOIs

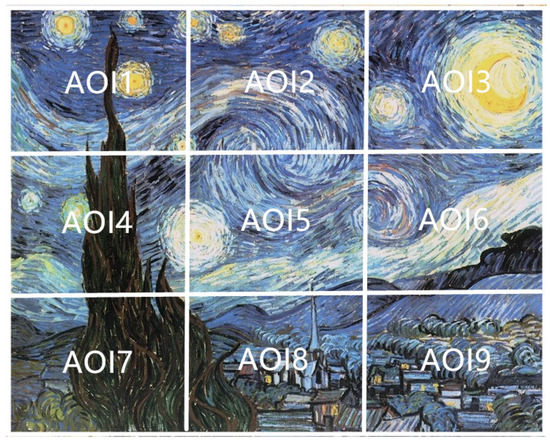

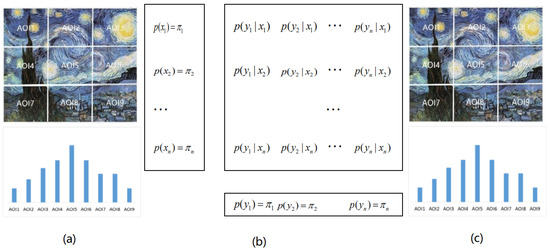

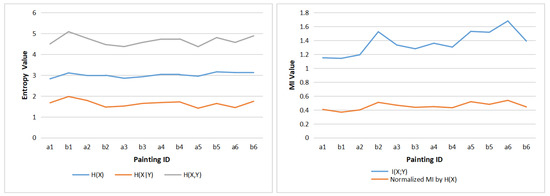

Each painting was divided into nine AOIs (as shown in Figure 3). This number of AOIs is a compromise between the level of detail sought in the analysis and the sparseness of the transition matrices. To show the differences between paintings, for each painting and each AOI we add the fixations of the 10 observers together, and then use the gaze information channel (as shown in Figure 4) to compute the clustered entropies and MI. The clustered values for all observers are shown in Appendix A, Tables A1–A12. We have built the equilibrium distribution by normalizing the row totals. Table 1 shows the values of entropy, MI, normalized MI, and the aesthetics measures from Section 3.3 for the 12 paintings. The validity of the clustering strategy was shown in [34]. From Figure 5 (left), we can observe that there is little variation of the stationary entropy $H_s$, while there is an important variation of the joint entropy $H(X,Y)$, mainly due to the variation of the conditional entropy $H_t$ (as $H(X,Y) = H_s + H_t$). The values of $H_t$ tend to decrease from left to right, following the chronological order of the paintings. From Figure 5 (right), we observe an increase in mutual information, attenuated in the case of the normalized one. Recalling that the paintings are ordered according to the evolution in time of Van Gogh’s style, and the interpretation of $H_t$ as randomness and of $I(X;Y)$ as correlation between the AOIs of the painting, the evolution of Van Gogh’s style towards maturity, with richer compositions, is reflected in an increase of mutual information in the gaze channel.

Figure 3.

An example painting divided in the 9 AOIs.

Figure 4.

The gaze information channel for painting b5 with 9 AOIs, between the AOIs with equilibrium distribution (a,c) and information channel (b). Observe that the input (a) and output (c) distributions are the same.

Table 1.

The clustered entropies, MI, normalized MI, and the informational aesthetics measures of Section 3.3 for the 12 paintings.

Figure 5.

The clustered entropies (left) and clustered MI values (right) of 12 paintings.
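The clustering step can be sketched as the sum of the transition counts of several recordings, followed by normalization of the row totals. The function names and the toy sequences are hypothetical, and the sketch assumes that transitions are counted only within each recording, never across the boundary between two observers’ sequences.

```python
def clustered_counts(sequences, s):
    """Sum the AOI transition counts of several recordings (AOI indices 0..s-1)."""
    counts = [[0] * s for _ in range(s)]
    for sequence in sequences:
        # Transitions are counted inside one recording only.
        for i, j in zip(sequence, sequence[1:]):
            counts[i][j] += 1
    return counts


def equilibrium(counts):
    """Equilibrium distribution obtained by normalizing the row totals."""
    total = sum(map(sum, counts))
    return [sum(row) / total for row in counts]


# Hypothetical AOI sequences of three observers looking at the same painting (2 AOIs).
observers = [[0, 1, 0], [1, 0, 1], [0, 0, 1]]
counts = clustered_counts(observers, 2)
pi = equilibrium(counts)
```

The same helper applies when clustering over paintings for one observer: the list then holds that observer’s sequence for each painting.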

Next, in order to study the individual differences between observers, the clustered entropy and MI for each observer are computed: for each AOI, we add the fixations over the 12 paintings together, and then use the gaze information channel to compute the clustered entropy and MI for each observer. Table 2 shows the clustered entropy and MI for the 10 observers, and Figure 6 plots these values.

Table 2.

The clustered entropies and MI for 10 observers.

Figure 6.

The clustered entropies (left) and clustered MI values (right) for 10 observers.

Comparing Figure 6 with Figure 5, it can be inferred that there is not as much difference between observers as there is between paintings. In Table 2, as in Table 1, the standard deviation of the stationary entropy $H_s$ is the lowest among the $H_s$, $H_t$, and $H(X,Y)$ values; thus, the differences arise more in the gaze switching among AOIs (given by the conditional entropy $H_t$) than in the attention distribution among AOIs (given by $H_s$). Moreover, from Figure 6 (left), it can be observed that $H_s$, $H_t$, and $H(X,Y)$ take close values for the different observers. Figure 6 (right) shows that the main differences between observers are found in the values of mutual information, with basically two kinds of observers: those with lower MI, around 1.2, and those with higher MI, around 1.4. These differences are smoothed out when considering normalized MI.

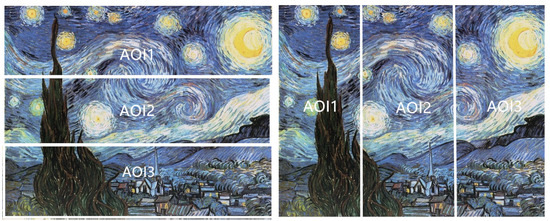

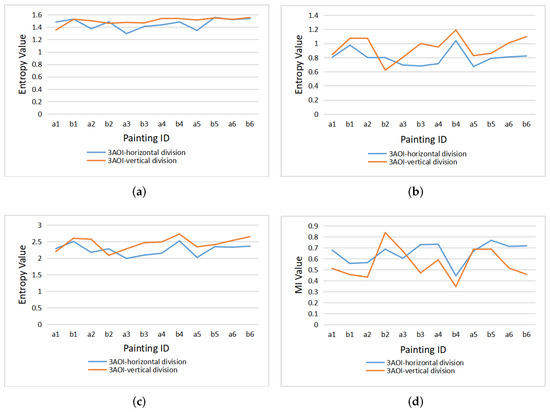

5.2. Comparison of Horizontal with Vertical Division

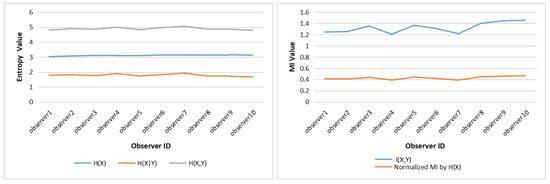

When reading text, human eye movement behavior is greatly affected by the direction of the text layout. For example, if the text is arranged, as usual, in horizontal lines, our eyes will move in the horizontal direction during reading. However, the direction of eye movement is more unpredictable when viewing images or paintings. Because of the differences in the content of the paintings, the observer’s attention distribution over different areas will differ. Therefore, to study how the observer’s gaze switching and attention distribution depend on the division into AOIs, we compared the gaze entropy and mutual information values obtained with horizontal and vertical divisions into three AOIs, see Figure 7.

Figure 7.

An example of horizontal division (left) and vertical division (right) with 3 AOIs.

As done for nine AOIs, the gaze sequences of the 12 paintings are integrated, and gaze channel measures of each observer are calculated under horizontal and vertical divisions, respectively, to analyze the characteristics of eye movement behavior of each observer. Similarly, to obtain the gaze measures for each painting, the gaze sequences of the 10 observers are firstly integrated, and then processed with the gaze information channel based on horizontal and vertical division AOIs.

Figure 8 gives the clustered gaze information measures $H_s$, $H_t$, $H(X,Y)$, and $I(X;Y)$ for all observers under the horizontal and vertical divisions. For the vertical division, the entropies ($H_s$, $H_t$, and $H(X,Y)$) are higher than for the horizontal division, while the mutual information is in general lower under the vertical division, except for observer 1. The larger mutual information reflects a stronger correlation of the gaze among the AOIs of the horizontal division, so it can be concluded that gaze shifts are more likely to occur in the horizontal direction.

Figure 8.

The clustered gaze measures for all observers under horizontal and vertical division: (a) $H_s$, (b) $H_t$, (c) $H(X,Y)$, (d) $I(X;Y)$.

Figure 9 presents the clustered gaze information measures $H_s$, $H_t$, $H(X,Y)$, and $I(X;Y)$ of the 12 paintings under the horizontal and vertical divisions. As in Figure 8, for most paintings the entropy measures $H_s$, $H_t$, and $H(X,Y)$ obtained with the vertical division are higher than with the horizontal division, while the mutual information is lower. However, paintings a1 and b2 do not follow this rule: for painting a1, two of the entropy measures are higher in the horizontal than in the vertical division, and for another measure the difference between the horizontal and vertical divisions is the smallest of all paintings. For painting b2, all four measures ($H_s$, $H_t$, $H(X,Y)$, and $I(X;Y)$) behave in the opposite way to the other paintings.

Figure 9.

The clustered gaze measures for all paintings under horizontal and vertical division: (a) $H_s$, (b) $H_t$, (c) $H(X,Y)$, (d) $I(X;Y)$.

For the differences in gaze measures between the two division types in Figures 8 and 9, we can put forward the following explanation. On the one hand, the difference between the results of horizontal and vertical division is related to people’s inherent reading mode: when the AOIs are divided horizontally, the number of gaze switches between different AOIs is relatively small, that is, the value of the conditional entropy $H_t$ is low. On the other hand, the painting content also has an important impact on the eye movement mode. For paintings a2 and a4 (as shown in Figure 2), the horizontal division coherently separates the sky from the field, giving higher mutual information, while the vertical division cuts across the continuous scene, resulting in higher entropy measures and lower mutual information. For paintings a1 and b2 (as shown in Figure 2), the main subject of the picture is the human figure. During observation, people tend to follow coherent content continuously, so gaze shifts occur more in the vertical direction.

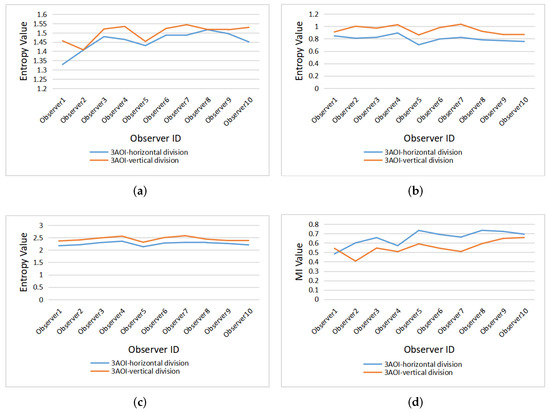

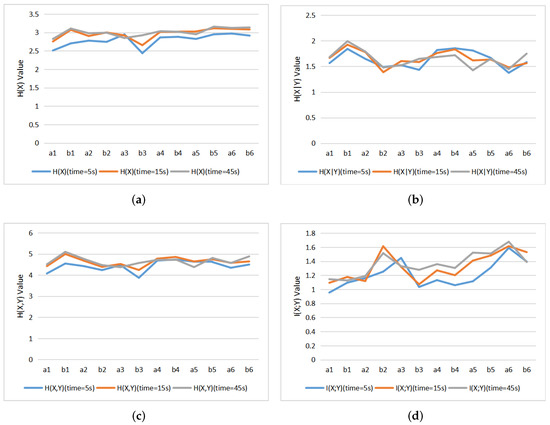

5.3. Comparison with Different Observation Times

Figure 10 shows line charts of the entropies and MI of the 12 paintings for different observation times. It can be seen that $H_s$, $H(X,Y)$, and $I(X;Y)$ basically show an increasing trend until a stable value is reached. First, the global scanning of the image over time is illustrated by the change of the stationary entropy $H_s$, which gradually increases and tends to stabilize, indicating that the fixation points become more evenly distributed among the different AOIs. This increase in $H_s$ pushes the increase of the joint entropy $H(X,Y)$. On the other hand, the increase in the mutual information $I(X;Y)$ tends to correspond to a decrease in the conditional entropy $H_t$. The more we explore the image, the more correlation, or mutual information, we can discover, and the lower the uncertainty in exploration, given by $H_t$. We could thus divide observation behavior into two stages. In the first stage, the observer scans the image globally, without a specific aim or plan; after that, the observer focuses on more details and on correlations within the image. This fits with the observations by Locher et al. [54,55].

Figure 10.

The line charts of the 12 paintings with entropies and MI for different observation times: (a) $H_s$, (b) $H_t$, (c) $H(X,Y)$, (d) $I(X;Y)$.
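The evolution with observation time can be sketched by truncating a timestamped fixation sequence at several cutoffs and recomputing the entropies on each prefix. The function names, the representation of fixations as `(time_in_seconds, aoi)` pairs, the rule that a fixation belongs to a prefix when its start time does not exceed the cutoff, and the base-2 logarithm are assumptions of this sketch; the default cutoffs are only an example.

```python
from math import log2


def entropies(sequence, s):
    """Stationary and conditional entropy (bits) of an AOI sequence, or None if too short."""
    counts = [[0] * s for _ in range(s)]
    for i, j in zip(sequence, sequence[1:]):
        counts[i][j] += 1
    total = sum(map(sum, counts))
    if total == 0:
        return None
    h_s = h_t = 0.0
    for row in counts:
        row_total = sum(row)
        if row_total:
            h_s -= row_total / total * log2(row_total / total)
            h_t -= sum(c / total * log2(c / row_total) for c in row if c)
    return h_s, h_t


def measures_over_time(fixations, s, cutoffs=(5, 15, 45)):
    """Entropies of the fixation prefix observed up to each cutoff (seconds)."""
    result = {}
    for t_max in cutoffs:
        prefix = [aoi for t, aoi in fixations if t <= t_max]
        result[t_max] = entropies(prefix, s)
    return result


# Hypothetical fixations alternating between two AOIs.
fixations = [(1, 0), (2, 1), (6, 0), (10, 1), (20, 0)]
evolution = measures_over_time(fixations, 2)
```

For very short prefixes the row and column totals of the count matrix can differ noticeably, so the earliest cutoffs should be read with the caveat on short trajectories given in Section 3.1.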

5.4. Comparison with Aesthetic Measures

In this section, we study the relationship of the gaze channel measures with the informational aesthetics measures from Section 3.3. Table 1 gives the values of entropy, MI, normalized MI, and the aesthetics measures for the 12 paintings.

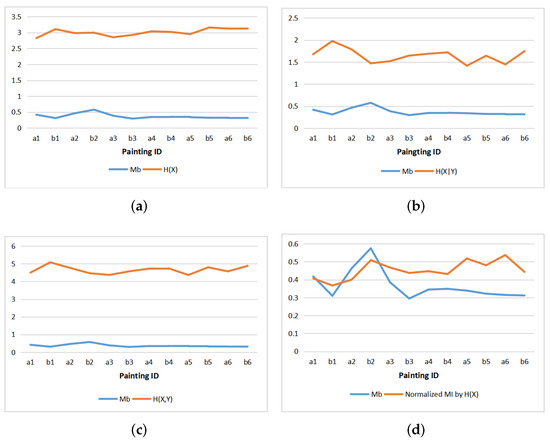

5.4.1. Comparison with Palette Redundancy $M_B$

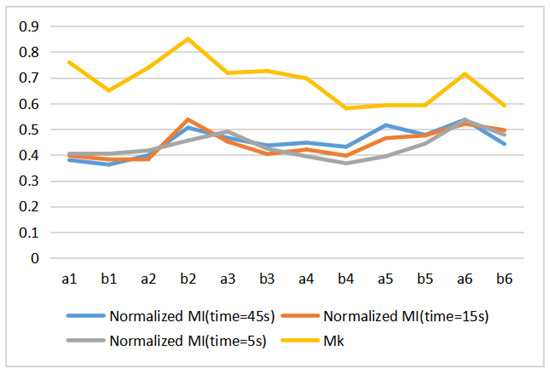

Figure 11 shows the line charts of the palette-based relative redundancy $M_B$ together with the entropies and normalized MI for the 12 paintings. We see that the behavior of $M_B$ is rather opposite to the behavior of the entropies, and presents some similarity with normalized mutual information. This can be interpreted as the measure $M_B$ representing correlation or redundancy in the scene. In fact, from its definition, $M_B$ is the normalized difference between the maximum entropy of the color histogram and the entropy of the color histogram used in the painting, and thus represents the redundancy existing in the palette used, giving a certain measure of correlation. However, $M_B$ does not take into account any spatial order, thus we cannot expect any accurate correlation.

Figure 11.

Comparing $M_B$ with the entropies and normalized mutual information of the paintings: (a) $M_B$ and $H_s$, (b) $M_B$ and $H_t$, (c) $M_B$ and $H(X,Y)$, (d) $M_B$ and normalized MI.

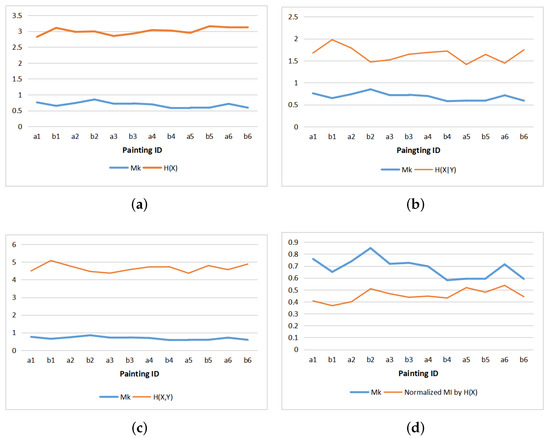

5.4.2. Comparison with Kolmogorov Complexity-Based Redundancy $M_K$

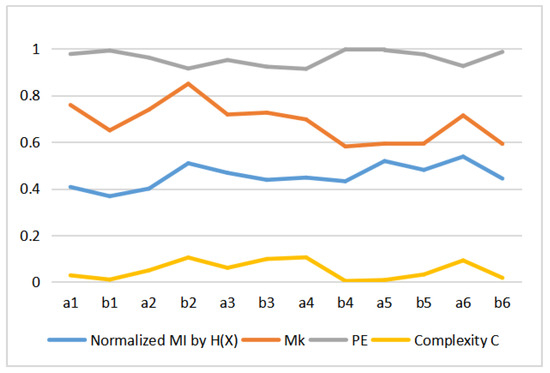

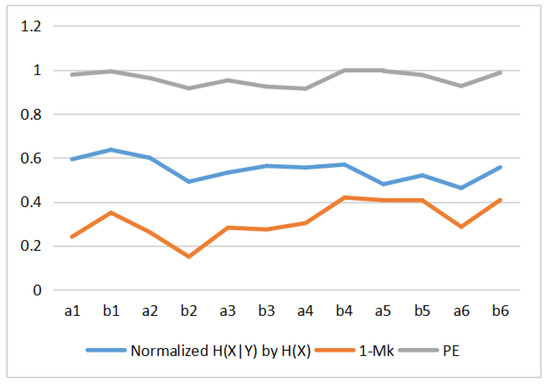

From an information theory perspective, the positive correlation shown in Figure 12d between normalized MI and the Kolmogorov complexity-based redundancy $M_K$ can be explained by the theoretical correspondence between the entropy rate, expressed by the conditional entropy $H_t$, and the Kolmogorov complexity, approximated by the file length of the compressed image. Recall that $M_K$ is given by $1 - K(I)/(N H_{\max})$, where $K(I)$ is the Kolmogorov complexity of the image, N its number of pixels, and $H_{\max}$ the maximum palette entropy, and that normalized MI is given by $I(X;Y)/H_s = 1 - H_t/H_s$. Thus, instead of analyzing the correlation between normalized MI and $M_K$, we can equivalently analyze the relationship between the entropy rate and the Kolmogorov complexity.

Figure 12.

Comparing $M_K$ with the entropies and normalized mutual information of the paintings: (a) $M_K$ and $H_s$, (b) $M_K$ and $H_t$, (c) $M_K$ and $H(X,Y)$, (d) $M_K$ and normalized MI.

Both measures express, from two different perspectives, the notion of compression. On the one hand, the entropy rate of a communication process quantifies the irreducible randomness in sequences produced by a source and also measures the size, in bits per symbol, of the optimal binary compression of the source [56]. Thus, a highly random process is difficult to compress. On the other hand, as mentioned above, the Kolmogorov complexity represents the difficulty in compressing an image, expressed by a set of bits which describes both its regularities and its random part. In our case, these measures are normalized in order to carry out a comparative study.

Figure 13 also shows how the normalized mutual information changes as the viewing time increases from 5 s to 15 s and then to 45 s.

Figure 13.

Comparison and normalized MI by at different observation times.

5.4.3. Relationship with

We can find an indirect relationship of the gaze information channel measures with . Looking in [53] at the spatial division triggered by the color-to-region channel, we can see that the first divisions, which give the maximum increase in mutual information, are horizontal. This is fully consistent with the findings in Section 5.2.

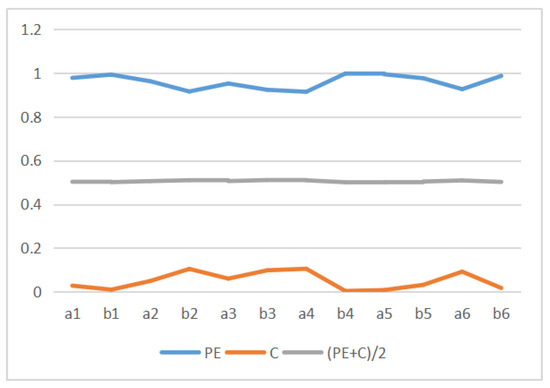

5.4.4. Comparison with and C

Table 3 shows the values of MI, , permutation entropy , and complexity C for the 12 Van Gogh paintings considered. These values are displayed in Figure 14. We can observe that the MI, and complexity C follow similar curve patterns, whereas the permutation entropy behaves differently. In fact, we can observe that C behavior is , see Figure 15. This can be explained as follows. The behavior of the normalized Jensen–Shannon distance is similar to , and thus C, defined by Equation (13), can be approximated by , and for values of near 1, as in our case, . This is illustrated in Figure 15. We display in Figure 16 the normalized (normalized + normalized =1), and . The correspondence between normalized and is the same as between normalized and . However, observe now the correspondence with too. The correspondence between the Kolmogorov complexity measured by compressibility, , and the normalized permutation entropy, was to be expected, since measures the degree of disorder in the pixel arrangement of an image [51]: the more disorder, the lower the compressibility and the larger the compressed file, and vice versa.
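To make the two image measures concrete, the following sketch computes a normalized permutation entropy and a statistical complexity for a small two-dimensional array. It assumes the usual construction from [51,52]: ordinal patterns of 2×2 sliding windows, entropy normalized by the logarithm of the number of patterns, and complexity taken as the product of that entropy and a Jensen–Shannon divergence to the uniform distribution, normalized by its maximum. Whether this matches Equation (13) term by term is an assumption, and the function name and test arrays are hypothetical.

```python
import math
import random
from collections import Counter
from itertools import permutations

def shannon(probs):
    """Shannon entropy in nats; zero-probability terms are skipped."""
    return sum(-p * math.log(p) for p in probs if p > 0)

def jensen_shannon(a, b):
    """Jensen-Shannon divergence between two distributions of equal length."""
    mix = [(x + y) / 2 for x, y in zip(a, b)]
    return shannon(mix) - shannon(a) / 2 - shannon(b) / 2

def permutation_entropy_complexity(img):
    """Return (H, C) for a 2D list of numbers using 2x2 sliding windows."""
    counts = Counter()
    for i in range(len(img) - 1):
        for j in range(len(img[0]) - 1):
            w = (img[i][j], img[i][j + 1], img[i + 1][j], img[i + 1][j + 1])
            # Ordinal pattern: indices of the window sorted by value (stable for ties).
            counts[tuple(sorted(range(4), key=w.__getitem__))] += 1
    total = sum(counts.values())
    states = list(permutations(range(4)))           # the 24 possible patterns
    p = [counts.get(s, 0) / total for s in states]
    uniform = [1 / len(states)] * len(states)
    delta = [1.0] + [0.0] * (len(states) - 1)       # maximizes the divergence
    h = shannon(p) / math.log(len(states))
    d = jensen_shannon(p, uniform) / jensen_shannon(delta, uniform)
    return h, d * h

# Hypothetical inputs: a perfectly ordered gradient and seeded random noise.
gradient = [[i * 8 + j for j in range(8)] for i in range(8)]
rng = random.Random(1)
noise = [[rng.random() for _ in range(64)] for _ in range(64)]
print(permutation_entropy_complexity(gradient))
print(permutation_entropy_complexity(noise))
```

The ordered gradient produces a single ordinal pattern, so both values vanish, while the noise image has entropy close to one and a small complexity.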

Table 3.

The MI, , permutation entropy and complexity C for 12 paintings.

Figure 14.

Comparison of normalized MI by , , permutation entropy and complexity C.

Figure 15.

Illustration of the dependency of C vs for the values corresponding to the 12 paintings.

Figure 16.

Comparing normalized by , and permutation entropy of paintings.

6. Conclusions and Future Work

This paper uses quantitative indicators based on the gaze information channel to study the relationship between Van Gogh artworks and human viewing. The eye tracking fixation sequences through areas of interest (AOIs) are modeled as an information channel, which extends the Markov chain modeling of those sequences.

For our study, we have used 12 Van Gogh paintings, two from each of the six periods into which critics classify Van Gogh's artwork. We have first shown that, with nine AOIs, the measures discriminate better between the different paintings than between the different observers. Then we have compared the values obtained with horizontal and vertical division into three AOIs, and found that in general the mutual information is higher for horizontal division. This can be put in correspondence with the semantic content of the painting.

Finally, we have compared previously defined computational measures for studying artworks with the measures derived from the information channel paradigm. We have shown the relationship between the computational measures, which are independent of any observer, and the information channel measures, which come from the eye trajectories of human observers. In particular, we have found a striking visual correlation between the measure which is related to the compressibility and the normalized mutual information MI, and inversely, between the normalized entropy of the channel, , and the permutation entropy, used recently to classify artworks.

Although promising, this paper has some limitations, such as the small number of participants and paintings. With more data we could study the correlations quantitatively as well as visually. Moreover, a larger variety of persons (e.g., laypersons vs. experts as in [57]) or painting styles (e.g., abstract vs. representational as in [58]) can be considered, as well as the aesthetic evaluation of the paintings by the observer. In the future, we will continue to explore the unique significance of human visual search patterns, which need to be paired with behavioral or cognitive metrics.

Author Contributions

Q.H. wrote the original draft, and performed visualization, formal analysis and investigation. L.M. performed the experiments and an initial data analysis. M.S. and M.F. provided the conceptualization and edited the draft. J.Z. provided supervision and funding acquisition. All authors have read and approved the final manuscript.

Funding

This work is supported by the National Natural Science Foundation of China under grant No. 61702359, and by grant TIN2016-75866-C3-3-R from the Spanish Government.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

The (unnormalized) transition matrices () of painting a1.

| a1 | AOI1 | AOI2 | AOI3 | AOI4 | AOI5 | AOI6 | AOI7 | AOI8 | AOI9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| AOI1 | 11 | 6 | 2 | 5 | 0 | 0 | 0 | 0 | 0 | 24 |
| AOI2 | 7 | 114 | 8 | 5 | 30 | 2 | 1 | 2 | 0 | 169 |
| AOI3 | 2 | 8 | 12 | 0 | 3 | 7 | 0 | 0 | 0 | 32 |
| AOI4 | 3 | 3 | 1 | 64 | 20 | 9 | 4 | 1 | 1 | 106 |
| AOI5 | 1 | 27 | 0 | 16 | 134 | 15 | 1 | 23 | 0 | 217 |
| AOI6 | 0 | 8 | 9 | 2 | 11 | 86 | 0 | 3 | 5 | 124 |
| AOI7 | 0 | 0 | 0 | 6 | 1 | 1 | 28 | 14 | 0 | 50 |
| AOI8 | 1 | 2 | 0 | 6 | 18 | 1 | 14 | 117 | 7 | 166 |
| AOI9 | 0 | 0 | 0 | 1 | 0 | 4 | 2 | 7 | 13 | 27 |
| Total | 25 | 168 | 32 | 105 | 217 | 125 | 50 | 167 | 26 | 915 |
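As an illustration of how information-theoretic quantities can be read off such a count matrix, the sketch below computes entropies and mutual information for the counts of Table A1. It is a simplification under stated assumptions: it uses the empirical joint distribution obtained by dividing the counts by their grand total, and the empirical row and column marginals, instead of the row-normalized transition matrix with its equilibrium distribution, so the values need not coincide with those reported in the paper. Logarithms are taken in base 2, and normalizing by H(Y) is our choice so that the two normalized terms add up to one.

```python
import math

# Transition counts of painting a1, copied from Table A1
# (rows: source AOI1..AOI9, columns: destination AOI1..AOI9; totals omitted).
counts_a1 = [
    [11, 6, 2, 5, 0, 0, 0, 0, 0],
    [7, 114, 8, 5, 30, 2, 1, 2, 0],
    [2, 8, 12, 0, 3, 7, 0, 0, 0],
    [3, 3, 1, 64, 20, 9, 4, 1, 1],
    [1, 27, 0, 16, 134, 15, 1, 23, 0],
    [0, 8, 9, 2, 11, 86, 0, 3, 5],
    [0, 0, 0, 6, 1, 1, 28, 14, 0],
    [1, 2, 0, 6, 18, 1, 14, 117, 7],
    [0, 0, 0, 1, 0, 4, 2, 7, 13],
]

def entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

def channel_measures(counts):
    """Entropies and mutual information of the empirical joint distribution."""
    total = sum(sum(row) for row in counts)
    p_x = [sum(row) / total for row in counts]         # source marginal
    p_y = [sum(col) / total for col in zip(*counts)]   # destination marginal
    h_x, h_y = entropy(p_x), entropy(p_y)
    h_xy = entropy(n / total for row in counts for n in row)  # joint entropy
    h_y_given_x = h_xy - h_x                           # chain rule
    mi = h_y - h_y_given_x                             # mutual information
    return {"h_x": h_x, "h_y": h_y, "h_xy": h_xy,
            "h_y_given_x": h_y_given_x, "mi": mi}

m = channel_measures(counts_a1)
for name, value in m.items():
    print(f"{name}: {value:.3f} bits")
print(f"normalized MI: {m['mi'] / m['h_y']:.3f}")
print(f"normalized H(Y|X): {m['h_y_given_x'] / m['h_y']:.3f}")
```

The same function can be applied to any of the other count matrices in this appendix by copying the nine inner rows and columns of the corresponding table.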

Table A2.

The (unnormalized) transition matrices () of painting a2.

| a2 | AOI1 | AOI2 | AOI3 | AOI4 | AOI5 | AOI6 | AOI7 | AOI8 | AOI9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| AOI1 | 17 | 1 | 1 | 9 | 1 | 1 | 1 | 0 | 0 | 31 |
| AOI2 | 7 | 12 | 1 | 2 | 0 | 10 | 0 | 1 | 0 | 33 |
| AOI3 | 0 | 8 | 8 | 0 | 0 | 0 | 2 | 0 | 0 | 18 |
| AOI4 | 4 | 0 | 1 | 60 | 10 | 9 | 0 | 4 | 0 | 88 |
| AOI5 | 1 | 1 | 0 | 9 | 34 | 1 | 0 | 8 | 2 | 56 |
| AOI6 | 1 | 6 | 1 | 10 | 5 | 73 | 6 | 12 | 5 | 119 |
| AOI7 | 0 | 0 | 5 | 1 | 0 | 10 | 22 | 0 | 3 | 41 |
| AOI8 | 0 | 2 | 0 | 2 | 4 | 8 | 2 | 40 | 11 | 69 |
| AOI9 | 1 | 1 | 1 | 1 | 2 | 2 | 8 | 6 | 42 | 64 |
| Total | 31 | 31 | 18 | 94 | 56 | 114 | 41 | 71 | 63 | 519 |

Table A3.

The (unnormalized) transition matrices () of painting a3.

| a3 | AOI1 | AOI2 | AOI3 | AOI4 | AOI5 | AOI6 | AOI7 | AOI8 | AOI9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| AOI1 | 45 | 8 | 0 | 14 | 3 | 0 | 0 | 0 | 0 | 70 |
| AOI2 | 8 | 25 | 7 | 1 | 16 | 2 | 1 | 0 | 0 | 60 |
| AOI3 | 0 | 6 | 20 | 0 | 0 | 4 | 0 | 0 | 0 | 30 |
| AOI4 | 10 | 4 | 0 | 118 | 16 | 1 | 12 | 5 | 1 | 167 |
| AOI5 | 3 | 15 | 1 | 23 | 186 | 30 | 3 | 14 | 1 | 276 |
| AOI6 | 1 | 1 | 0 | 1 | 33 | 168 | 0 | 2 | 10 | 216 |
| AOI7 | 2 | 0 | 0 | 7 | 6 | 1 | 50 | 16 | 1 | 83 |
| AOI8 | 1 | 1 | 0 | 2 | 16 | 3 | 17 | 90 | 4 | 134 |
| AOI9 | 0 | 0 | 2 | 0 | 0 | 8 | 0 | 6 | 22 | 38 |
| Total | 70 | 60 | 30 | 166 | 276 | 217 | 83 | 133 | 39 | 1074 |

Table A4.

The (unnormalized) transition matrices () of painting a4.

| a4 | AOI1 | AOI2 | AOI3 | AOI4 | AOI5 | AOI6 | AOI7 | AOI8 | AOI9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| AOI1 | 115 | 22 | 2 | 17 | 2 | 0 | 4 | 0 | 1 | 163 |
| AOI2 | 24 | 120 | 17 | 3 | 15 | 2 | 0 | 0 | 3 | 184 |
| AOI3 | 0 | 22 | 160 | 3 | 4 | 5 | 1 | 0 | 2 | 197 |
| AOI4 | 14 | 1 | 1 | 44 | 19 | 1 | 7 | 2 | 2 | 91 |
| AOI5 | 3 | 16 | 5 | 15 | 74 | 17 | 2 | 3 | 2 | 137 |
| AOI6 | 0 | 2 | 7 | 3 | 13 | 41 | 1 | 3 | 6 | 76 |
| AOI7 | 2 | 1 | 2 | 3 | 1 | 1 | 41 | 12 | 2 | 65 |
| AOI8 | 0 | 0 | 1 | 2 | 5 | 2 | 8 | 29 | 15 | 62 |
| AOI9 | 0 | 0 | 1 | 1 | 4 | 7 | 2 | 13 | 48 | 76 |
| Total | 158 | 184 | 196 | 91 | 137 | 76 | 66 | 62 | 81 | 1051 |

Table A5.

The (unnormalized) transition matrices () of painting a5.

| a5 | AOI1 | AOI2 | AOI3 | AOI4 | AOI5 | AOI6 | AOI7 | AOI8 | AOI9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| AOI1 | 22 | 10 | 0 | 6 | 3 | 0 | 2 | 3 | 3 | 49 |
| AOI2 | 7 | 26 | 7 | 1 | 8 | 0 | 1 | 0 | 1 | 51 |
| AOI3 | 1 | 7 | 34 | 0 | 2 | 6 | 0 | 0 | 0 | 50 |
| AOI4 | 10 | 0 | 1 | 101 | 15 | 0 | 8 | 0 | 0 | 135 |
| AOI5 | 2 | 3 | 0 | 21 | 139 | 18 | 4 | 5 | 8 | 200 |
| AOI6 | 1 | 1 | 5 | 0 | 19 | 206 | 0 | 1 | 20 | 253 |
| AOI7 | 0 | 2 | 0 | 4 | 4 | 1 | 87 | 13 | 1 | 112 |
| AOI8 | 0 | 0 | 2 | 0 | 7 | 1 | 11 | 60 | 14 | 95 |
| AOI9 | 5 | 0 | 0 | 0 | 3 | 23 | 0 | 15 | 73 | 119 |
| Total | 48 | 49 | 49 | 133 | 200 | 255 | 113 | 97 | 120 | 1064 |

Table A6.

The (unnormalized) transition matrices () of painting a6.

| a6 | AOI1 | AOI2 | AOI3 | AOI4 | AOI5 | AOI6 | AOI7 | AOI8 | AOI9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| AOI1 | 73 | 7 | 4 | 0 | 1 | 13 | 0 | 0 | 0 | 98 |
| AOI2 | 10 | 85 | 22 | 0 | 9 | 2 | 0 | 0 | 1 | 129 |
| AOI3 | 1 | 29 | 148 | 9 | 6 | 13 | 3 | 3 | 13 | 225 |
| AOI4 | 0 | 1 | 15 | 109 | 17 | 0 | 0 | 21 | 0 | 163 |
| AOI5 | 1 | 6 | 2 | 25 | 111 | 0 | 0 | 0 | 1 | 146 |
| AOI6 | 13 | 2 | 13 | 0 | 1 | 99 | 14 | 0 | 2 | 144 |
| AOI7 | 0 | 1 | 2 | 1 | 1 | 16 | 81 | 0 | 13 | 115 |
| AOI8 | 0 | 0 | 2 | 19 | 0 | 0 | 0 | 71 | 12 | 104 |
| AOI9 | 0 | 0 | 17 | 0 | 0 | 1 | 16 | 8 | 91 | 133 |
| Total | 98 | 131 | 225 | 163 | 146 | 144 | 114 | 103 | 133 | 1257 |

Table A7.

The (unnormalized) transition matrices () of painting b1.

| b1 | AOI1 | AOI2 | AOI3 | AOI4 | AOI5 | AOI6 | AOI7 | AOI8 | AOI9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| AOI1 | 88 | 8 | 18 | 19 | 3 | 1 | 2 | 0 | 3 | 142 |
| AOI2 | 5 | 40 | 13 | 2 | 7 | 15 | 0 | 1 | 1 | 84 |
| AOI3 | 16 | 15 | 61 | 1 | 12 | 3 | 0 | 0 | 1 | 109 |
| AOI4 | 22 | 2 | 3 | 67 | 6 | 0 | 15 | 3 | 0 | 118 |
| AOI5 | 4 | 9 | 10 | 7 | 54 | 8 | 0 | 5 | 0 | 97 |
| AOI6 | 0 | 8 | 4 | 1 | 6 | 28 | 1 | 2 | 14 | 64 |
| AOI7 | 1 | 0 | 0 | 13 | 0 | 2 | 35 | 16 | 3 | 70 |
| AOI8 | 0 | 1 | 0 | 5 | 6 | 2 | 14 | 27 | 10 | 65 |
| AOI9 | 1 | 1 | 0 | 0 | 3 | 8 | 3 | 11 | 34 | 61 |
| Total | 137 | 84 | 109 | 115 | 97 | 67 | 70 | 65 | 66 | 810 |

Table A8.

The (unnormalized) transition matrices () of painting b2.

| b2 | AOI1 | AOI2 | AOI3 | AOI4 | AOI5 | AOI6 | AOI7 | AOI8 | AOI9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| AOI1 | 55 | 11 | 0 | 13 | 2 | 0 | 1 | 0 | 0 | 82 |
| AOI2 | 12 | 122 | 8 | 4 | 19 | 2 | 0 | 0 | 1 | 168 |
| AOI3 | 0 | 11 | 50 | 0 | 1 | 12 | 0 | 1 | 1 | 76 |
| AOI4 | 13 | 3 | 1 | 123 | 22 | 1 | 16 | 6 | 1 | 186 |
| AOI5 | 2 | 18 | 0 | 26 | 248 | 10 | 2 | 39 | 3 | 348 |
| AOI6 | 0 | 0 | 15 | 1 | 12 | 109 | 0 | 2 | 18 | 157 |
| AOI7 | 0 | 1 | 0 | 13 | 5 | 2 | 54 | 4 | 0 | 79 |
| AOI8 | 0 | 0 | 0 | 6 | 36 | 2 | 6 | 155 | 15 | 220 |
| AOI9 | 0 | 0 | 2 | 0 | 3 | 19 | 0 | 15 | 83 | 122 |
| Total | 82 | 166 | 76 | 186 | 348 | 157 | 79 | 222 | 122 | 1438 |

Table A9.

The (unnormalized) transition matrices () of painting b3.

| b3 | AOI1 | AOI2 | AOI3 | AOI4 | AOI5 | AOI6 | AOI7 | AOI8 | AOI9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| AOI1 | 38 | 14 | 4 | 8 | 1 | 1 | 0 | 0 | 0 | 66 |
| AOI2 | 11 | 51 | 18 | 2 | 5 | 4 | 0 | 0 | 0 | 91 |
| AOI3 | 6 | 16 | 45 | 0 | 2 | 11 | 0 | 0 | 0 | 80 |
| AOI4 | 9 | 2 | 0 | 51 | 24 | 0 | 6 | 0 | 0 | 92 |
| AOI5 | 1 | 3 | 1 | 22 | 212 | 30 | 0 | 23 | 3 | 295 |
| AOI6 | 0 | 3 | 12 | 0 | 28 | 121 | 1 | 9 | 7 | 181 |
| AOI7 | 0 | 0 | 0 | 7 | 0 | 0 | 32 | 14 | 3 | 56 |
| AOI8 | 0 | 0 | 0 | 3 | 22 | 3 | 14 | 70 | 15 | 127 |
| AOI9 | 0 | 1 | 0 | 0 | 1 | 10 | 3 | 12 | 27 | 54 |
| Total | 65 | 90 | 80 | 93 | 295 | 180 | 56 | 128 | 55 | 1042 |

Table A10.

The (unnormalized) transition matrices () of painting b4.

| b4 | AOI1 | AOI2 | AOI3 | AOI4 | AOI5 | AOI6 | AOI7 | AOI8 | AOI9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| AOI1 | 81 | 0 | 14 | 4 | 0 | 23 | 0 | 0 | 0 | 122 |
| AOI2 | 1 | 32 | 6 | 3 | 0 | 0 | 0 | 14 | 3 | 59 |
| AOI3 | 7 | 9 | 53 | 32 | 0 | 2 | 1 | 1 | 1 | 106 |
| AOI4 | 4 | 7 | 28 | 179 | 15 | 17 | 8 | 17 | 7 | 282 |
| AOI5 | 0 | 1 | 0 | 21 | 84 | 3 | 27 | 5 | 7 | 148 |
| AOI6 | 23 | 0 | 2 | 13 | 2 | 140 | 21 | 0 | 0 | 201 |
| AOI7 | 2 | 0 | 0 | 5 | 32 | 17 | 84 | 1 | 1 | 142 |
| AOI8 | 2 | 9 | 0 | 22 | 6 | 0 | 2 | 99 | 14 | 154 |
| AOI9 | 0 | 1 | 1 | 2 | 9 | 0 | 0 | 17 | 32 | 62 |
| Total | 120 | 59 | 104 | 281 | 148 | 202 | 143 | 154 | 65 | 1276 |

Table A11.

The (unnormalized) transition matrices () of painting b5.

| b5 | AOI1 | AOI2 | AOI3 | AOI4 | AOI5 | AOI6 | AOI7 | AOI8 | AOI9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| AOI1 | 113 | 24 | 3 | 20 | 3 | 1 | 3 | 0 | 0 | 167 |
| AOI2 | 27 | 87 | 21 | 6 | 13 | 4 | 1 | 0 | 0 | 159 |
| AOI3 | 3 | 22 | 149 | 0 | 2 | 16 | 0 | 0 | 1 | 193 |
| AOI4 | 17 | 3 | 1 | 71 | 27 | 2 | 18 | 0 | 0 | 139 |
| AOI5 | 6 | 20 | 4 | 16 | 99 | 16 | 2 | 17 | 4 | 184 |
| AOI6 | 0 | 3 | 13 | 1 | 17 | 92 | 0 | 4 | 14 | 144 |
| AOI7 | 0 | 0 | 0 | 21 | 7 | 0 | 87 | 15 | 0 | 130 |
| AOI8 | 1 | 0 | 1 | 5 | 14 | 3 | 19 | 105 | 18 | 166 |
| AOI9 | 0 | 1 | 0 | 0 | 2 | 9 | 1 | 24 | 114 | 151 |
| Total | 167 | 160 | 192 | 140 | 184 | 143 | 131 | 165 | 151 | 1433 |

Table A12.

The (unnormalized) transition matrices () of painting b6.

| b6 | AOI1 | AOI2 | AOI3 | AOI4 | AOI5 | AOI6 | AOI7 | AOI8 | AOI9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| AOI1 | 62 | 5 | 15 | 2 | 12 | 2 | 0 | 0 | 1 | 99 |
| AOI2 | 4 | 78 | 17 | 0 | 3 | 2 | 0 | 9 | 0 | 113 |
| AOI3 | 13 | 15 | 105 | 2 | 4 | 14 | 0 | 2 | 1 | 156 |
| AOI4 | 4 | 1 | 2 | 75 | 15 | 1 | 20 | 3 | 1 | 122 |
| AOI5 | 8 | 0 | 5 | 21 | 103 | 15 | 1 | 2 | 1 | 156 |
| AOI6 | 4 | 1 | 9 | 5 | 15 | 132 | 12 | 22 | 3 | 203 |
| AOI7 | 0 | 1 | 0 | 12 | 2 | 15 | 67 | 5 | 23 | 125 |
| AOI8 | 0 | 9 | 0 | 3 | 2 | 17 | 7 | 86 | 18 | 142 |
| AOI9 | 0 | 1 | 3 | 3 | 0 | 4 | 18 | 15 | 74 | 118 |
| Total | 95 | 111 | 156 | 123 | 156 | 202 | 125 | 144 | 122 | 1234 |

References

1. Was, C.; Sansosti, F.; Morris, B. Eye-Tracking Technology Applications in Educational Research; IGI Global: Hershey, PA, USA, 2016.
2. Prieto, L.P.; Sharma, K.; Wen, Y.; Dillenbourg, P. The Burden of Facilitating Collaboration: Towards Estimation of Teacher Orchestration Load Using Eye-tracking Measures; International Society of the Learning Sciences, Inc. (ISLS): Albuquerque, NM, USA, 2015.
3. Ellis, E.M.; Borovsky, A.; Elman, J.L.; Evans, J.L. Novel Word Learning: An Eye-tracking Study. Are 18-month-old Late Talkers Really Different From Their Typical Peers? J. Commun. Disord. 2015, 58, 143–157.
4. Fox, S.E.; Faulkner-Jones, B.E. Eye-Tracking in the Study of Visual Expertise: Methodology and Approaches in Medicine. Frontline Learn. Res. 2017, 5, 29–40.
5. Jarodzka, H.; Boshuizen, H.P. Unboxing the Black Box of Visual Expertise in Medicine. Frontline Learn. Res. 2017, 5, 167–183.
6. Fong, A.; Hoffman, D.J.; Zachary Hettinger, A.; Fairbanks, R.J.; Bisantz, A.M. Identifying Visual Search Patterns in Eye Gaze Data; Gaining Insights into Physician Visual Workflow. J. Am. Med. Inform. Assoc. 2016, 23, 1180–1184.
7. McLaughlin, L.; Bond, R.; Hughes, C.; McConnell, J.; McFadden, S. Computing Eye Gaze Metrics for the Automatic Assessment of Radiographer Performance During X-ray Image Interpretation. Int. J. Med. Inform. 2017, 105, 11–21.
8. Holzman, P.S.; Proctor, L.R.; Hughes, D.W. Eye-tracking Patterns in Schizophrenia. Science 1973, 181, 179–181.
9. Pavlidis, G.T. Eye Movements in Dyslexia: Their Diagnostic Significance. J. Learn. Disabil. 1985, 18, 42–50.
10. Zhang, L.; Wade, J.; Bian, D.; Fan, J.; Swanson, A.; Weitlauf, A.; Warren, A.; Sarkar, N. Cognitive Load Measurement in A Virtual Reality-based Driving System for Autism Intervention. IEEE Trans. Affect. Comput. 2017, 8, 176–189.
11. Vidal, M.; Bulling, A.; Gellersen, H. Pursuits: Spontaneous Eye-based Interaction for Dynamic Interfaces. GetMobile Mob. Comput. Commun. 2015, 18, 8–10.
12. Strandvall, T. Eye Tracking in Human-computer Interaction and Usability Research. In Human-Computer Interaction—INTERACT 2009, Proceedings of the 12th IFIP TC 13 International Conference, Uppsala, Sweden, 24–28 August 2009; Springer: Berlin/Heidelberg, Germany, 2010; pp. 936–937.
13. Wang, Q.; Yang, S.; Liu, M.; Cao, Z.; Ma, Q. An Eye-tracking Study of Website Complexity from Cognitive Load Perspective. Decis. Support Syst. 2014, 62, 1–10.
14. Schiessl, M.; Duda, S.; Tholke, A.; Fischer, R. Eye tracking and Its Application in Usability and Media Research. MMI-Interakt. J. 2003, 6, 41–50.
15. Steiner, G.A. The People Look at Commercials: A Study of Audience Behavior. J. Bus. 1966, 39, 272–304.
16. Lunn, D.; Harper, S. Providing Assistance to Older Users of Dynamic Web Content. Comput. Hum. Behav. 2011, 27, 2098–2107.
17. Stuijfzand, B.G.; Van der Schaaf, M.F.; Kirschner, F.C.; Ravesloot, C.J.; Van der Gijp, A.; Vincken, K.L. Medical Students’ Cognitive Load in Volumetric Image Interpretation: Insights from Human-computer Interaction and Eye Movements. Comput. Hum. Behav. 2016, 62, 394–403.
18. Ju, U.; Kang, J.; Wallraven, C. Personality Differences Predict Decision-making in An Accident Situation in Virtual Driving. In Proceedings of the 2016 IEEE Virtual Reality, Greenville, SC, USA, 19–23 March 2016; pp. 77–82.
19. Chen, X.; Starke, S.D.; Baber, C.; Howes, A. A Cognitive Model of How People Make Decisions through Interaction with Visual Displays. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 1205–1216.
20. Van Gog, T.; Scheiter, K. Eye Tracking as A Tool to Study and Enhance Multimedia Learning; Elsevier: Amsterdam, The Netherlands, 2010.
21. Navarro, O.; Molina, A.I.; Lacruz, M.; Ortega, M. Evaluation of Multimedia Educational Materials Using Eye Tracking. Procedia-Soc. Behav. Sci. 2015, 197, 2236–2243.
22. Van Wermeskerken, M.; Van Gog, T. Seeing the Instructor’s Face and Gaze in Demonstration Video Examples Affects Attention Allocation but not Learning. Comput. Educ. 2017, 113, 98–107.
23. Duchowski, A.T.; Driver, J.; Jolaoso, S.; Tan, W.; Ramey, B.N.; Robbins, A. Scanpath Comparison Revisited. In Proceedings of the 2010 Symposium on Eye-Tracking Research & Applications, Austin, TX, USA, 22–24 March 2010; pp. 219–226.
24. De Bruin, J.A.; Malan, K.M.; Eloff, J.H.P. Saccade Deviation Indicators for Automated Eye Tracking Analysis. In Proceedings of the 2013 Conference on Eye Tracking South Africa, Cape Town, South Africa, 29–31 August 2013; pp. 47–54.
25. Peysakhovich, V.; Hurter, C. Scanpath visualization and comparison using visual aggregation techniques. J. Eye Mov. Res. 2018, 10, 1–14.
26. Mishra, A.; Kanojia, D.; Nagar, S.; Dey, K.; Bhattacharyya, P. Scanpath Complexity: Modeling Reading Effort Using Gaze Information. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.
27. Li, A.; Zhang, Y.; Chen, Z. Scanpath Mining of Eye Movement Trajectories for Visual Attention Analysis. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; pp. 535–540.
28. Grindinger, T.; Duchowski, A.T.; Sawyer, M. Group-wise Similarity and Classification of Aggregate Scanpaths. In Proceedings of the 2010 Symposium on Eye-Tracking Research & Applications, Austin, TX, USA, 22–24 March 2010; pp. 101–104.
29. Isokoski, P.; Kangas, J.; Majaranta, P. Useful Approaches to Exploratory Analysis of Gaze Data: Enhanced Heatmaps, cluster Maps, and Transition Maps. In Proceedings of the 2018 ACM Symposium on Eye Tracking Research & Applications, Warsaw, Poland, 14–17 June 2018; p. 68.
30. Gu, Z.; Jin, C.; Dong, Z.; Chang, D. Predicting Webpage Aesthetics with Heatmap Entropy. arXiv 2018, arXiv:1803.01537.
31. Ellis, S.R.; Stark, L. Statistical Dependency in Visual Scanning. Hum. Factors 1986, 28, 421–438.
32. Shiferaw, B.; Downey, L.; Crewther, D. A review of gaze entropy as a measure of visual scanning efficiency. Neurosci. Biobehav. Rev. 2019, 96, 353–366.
33. Ma, L.J.; Sbert, M.; Xu, Q.; Feixas, M. Gaze Information Channel. In Pacific Rim Conference on Multimedia; Springer: Cham, Switzerland, 2018; pp. 575–585.
34. Hao, Q.; Sbert, M.; Ma, L. Gaze Information Channel in Cognitive Comprehension of Poster Reading. Entropy 2019, 21, 444.
35. Vandeberg, L.; Bouwmeester, S.; Bocanegra, B.R.; Zwaan, R.A. Detecting cognitive interactions through eye movement transitions. J. Mem. Lang. 2013, 69, 445–460.
36. Krejtz, K.; Duchowski, A.; Szmidt, T.; Krejtz, I.; Gonzalez Perilli, F.; Pires, A.; Vilaro, A.; Villalobos, N. Gaze Transition Entropy. ACM TAP 2015, 13, 4.
37. Krejtz, K.; Szmidt, T.; Duchowski, A.; Krejtz, I.; Perilli, F.G.; Pires, A.; Vilaro, A.; Villalobos, N. Entropy-based Statistical Analysis of Eye Movement Transitions. In Proceedings of the 2014 Symposium on Eye Tracking Research and Applications, Safety Harbor, FL, USA, 26–28 March 2014; pp. 159–166.
38. Raptis, G.E.; Fidas, C.A.; Avouris, N.M. On Implicit Elicitation of Cognitive Strategies using Gaze Transition Entropies in Pattern Recognition Tasks. In Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 1993–2000.
39. Zhong, M.; Zhao, X.; Zou, X.C.; Wang, J.Z.; Wang, W. Markov chain based computational visual attention model that learns from eye tracking data. Pattern Recognit. Lett. 2014, 49, 1–10.
40. Huang, Y.T. The female gaze: Content composition and slot position in personalized banner ads, and how they influence visual attention in online shoppers. Comput. Hum. Behav. 2018, 82, 1–15.
41. Hwang, A.D.; Wang, H.C.; Pomplun, M. Semantic Guidance of Eye Movements in Real-world Scenes. Vis. Res. 2011, 51, 1192–1205.
42. Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley and Sons: Hoboken, NJ, USA, 1991; pp. 33–36.
43. Wallraven, C.; Cunningham, D.W.; Rigau, J.; Feixas, M.; Sbert, M. Aesthetic appraisal of art: From eye movements to computers. In Computational Aesthetics 2009: Eurographics Workshop on Computational Aesthetics in Graphics, Visualization and Imaging; Eurographics: Goslar, Germany, 2009; pp. 137–144.
44. Rigau, J.; Feixas, M.; Sbert, M. Conceptualizing Birkhoff’s Aesthetic Measure Using Shannon Entropy and Kolmogorov Complexity. In Computational Aesthetics; Eurographics: Goslar, Germany, 2007; pp. 105–112.
45. Rigau, J.; Feixas, M.; Sbert, M. Informational dialogue with Van Gogh’s paintings. In Computational Aesthetics’08: Proceedings of the Fourth Eurographics Conference on Computational Aesthetics in Graphics, Visualization and Imaging; Eurographics: Goslar, Germany, 2008; pp. 115–122.
46. Rigau, J.; Feixas, M.; Sbert, M. Informational aesthetics measures. IEEE Comput. Graph. Appl. 2008, 28, 24–34.
47. Rigau, J.; Feixas, M.; Sbert, M.; Wallraven, C. Toward Auvers Period: Evolution of Van Gogh’s Style. In Computational Aesthetics; Eurographics: Goslar, Germany, 2010; pp. 99–106.
48. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.
49. Bense, M. Einführung in die informationstheoretische Ästhetik. Grundlegung und Anwendung in der Texttheorie (Introduction to the Information-theoretical Aesthetics. Foundation and Application in the Text Theory); Rowohlt Taschenbuch Verlag GmbH: Hamburg, Germany, 1969.
50. Birkhoff, G.D. Aesthetic Measure; Harvard University Press: Cambridge, MA, USA, 1933.
51. Sigaki, H.Y.; Perc, M.; Ribeiro, H.V. History of art paintings through the lens of entropy and complexity. Proc. Natl. Acad. Sci. USA 2018, 115, E8585–E8594.
52. Ribeiro, H.V.; Zunino, L.; Lenzi, E.K.; Santoro, P.A.; Mendes, R.S. Complexity-entropy causality plane as a complexity measure for two-dimensional patterns. PLoS ONE 2012, 7, e40689.
53. Feixas, M.; Bardera, A.; Rigau, J.; Xu, Q.; Sbert, M. Information theory tools for image processing. Synth. Lect. Comput. Graph. Animat. 2014, 6, 1–164.
54. Locher, P.; Krupinski, E.A.; Mello-Thoms, C.; Nodine, C.F. Visual interest in pictorial art during an aesthetic experience. Spat. Vis. 2007, 21, 55–77.
55. Locher, P.J. The usefulness of eye movement recordings to subject an aesthetic episode with visual art to empirical scrutiny. Psychol. Sci. 2006, 48, 106.
56. Crutchfield, J.; Feldman, D. Regularities Unseen, Randomness Observed: Levels of Entropy Convergence. Chaos Interdiscip. J. Nonlinear Sci. 2003, 13, 25–54.
57. Vogt, S.; Magnussen, S. Expertise in pictorial perception: Eye-movement patterns and visual memory in artists and laymen. Perception 2007, 36, 91–100.
58. Pihko, E.; Virtanen, A.; Saarinen, V.-M.; Pannasch, S.; Hirvenkari, L.; Tossavainen, T.; Haapala, A.; Hari, R. Experiencing art: The influence of expertise and painting abstraction level. Front. Hum. Neurosci. 2011, 5, 94.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).