1. Introduction

Methods of search and detection address various problems of finding hidden objects and chasing after targets [1]. Studies in this field were initiated in 1942 as a part of the mission to detect submarines in the Atlantic [2] and were later distributed among various applications and scenarios.

In particular, the search problem addresses the activity of the searcher up to hunting the target in its location, and often results in an optimal search policy or in an effective movement trajectory of the searcher. The detection problem, in contrast, focuses on the recognition of the target’s location without necessarily reaching its physical location and results, usually, in a cost-effective distribution of the search efforts [2]. For an overview of the field and related problems, see, e.g., [3,4,5]. In the last decades, with the development of mobile robots and multi-robot systems, methods of search and detection were extended to apply to groups of autonomous agents, so the current studies also include considerations of communication and collective decision-making under uncertainties [6,7].

In the paper, we consider the problem of detection of multiple targets by a group of mobile agents. This problem is a direct extension of the classical Koopman setting that aims at the detection of hidden objects [2,5,8]. However, in contrast to Koopman’s formulation, we assume that the detection process is conducted by a small finite number of indivisible agents that start in certain locations, move over the domain, and explore it until all the targets are detected. Also, we assume that the agents are equipped with sensors that can detect the existence of a target in certain locations, yet with both false positive and false negative errors. A simple version of this problem was considered in 2012 by Israel et al. [9] in the framework of search in shadowed space. In the same year, Chernikhovsky et al. [10] considered a similar search problem with erroneous sensors and showed that the detection algorithm terminates in a finite number of steps.

The problem of search for static or moving targets by a finite and usually small number of agents appears in various applications, both military and civil. The standard taxonomies of this problem are often based on the targets’ and the search agents’ abilities, such as their mobility level, their knowledge about the activities of the other party, and their cooperation level [4,7,11]. Many search algorithms are classified with respect to the optimization principles that govern the motion of the agents in the group. In particular, since a global optimization of the agents’ motions requires unreasonable time and computation power, search algorithms are often implemented by using different heuristics, mostly informational ones or ones that mimic animal foraging [6,7,12].

In the paper, we consider a detection process with several assumptions, which are usually considered separately. Following the basic Koopman formulation, we consider a probabilistic search scheme, in which the search agents have knowledge only about the targets’ location probabilities, while assuming that Koopman’s exponential random search formula which defines those detection probabilities is applicable. At the same time, we assume that the search-agents’ group includes a finite small number of members and that the agents are indivisible; such an assumption implies that the problem cannot be considered solely as a search-efforts distribution problem, but also as a problem that requires methods of swarm navigation and control.

In practice, communication among the agents as well as information processing can be organized at different levels: from peer-to-peer networks to a scheme of a central station that receives information from all the agents in the group [6,7]. In the first case, each agent obtains information from its neighbors and makes decisions based on such local information, while in the second case, a central station defines the agents’ motion based on global information. Algorithms of swarm control use both approaches, although applications based on a central station are usually restricted by computation power and communication constraints. Also, in most military applications, the use of a central station is challenging for security reasons. In the suggested techniques, we assume the existence of a central station that holds a global probability map, which, on the one hand, can be used for theoretical consideration of the effectiveness of the suggested methods, while, on the other hand, is required for generating strict criteria for the termination of the detection process. Nonetheless, as we demonstrate in the paper, the use of a global map by a central station for the navigation of the group of search agents is less effective than the use of local maps accompanied by peer-to-peer communication.

Finally, we continue a line of practical research works [10,13], yet in contrast to some known methods of group search and detection, we consider a more realistic situation, in which both false positive and false negative detection errors exist. Another practical assumption is the one that considers a variety of sensors that can be used by each agent.

In this study, we consider the detection of a number of static targets; the developed algorithms allow further modification for the detection of mobile targets, but this is out of the scope of this paper. The objective of the presented research is to introduce methods of control of the mobile agents acting within a group such that detection of the targets is conducted in minimal time. Notice that the agents are not required to catch the targets, that is, to physically reach their locations, but rather to detect the locations of the targets using their on-board sensors.

The suggested solution follows the occupancy grid approach, where the map of the targets’ candidate points is created simultaneously with the detection process and the agents’ motion [14,15]. The implemented sensor-fusion scheme follows a general Bayesian scheme [16] with varying sensitivity of the sensors.

The algorithm implements three different levels of the agent’s knowledge about the targets’ location:

A global map that represents the information that is available to the group of agents and is obtained by fusion of information which is available to each agent.

A local map that represents the information available to every single agent and is obtained by fusion of information obtained by the agent’s sensors.

A sensor map which is obtained by a single sensor.

The above maps are also called probability maps since they provide the information on the target’s location by a probability distribution, often using a colored heat map to indicate the probability of the target being located in each cell of the grid.

The algorithm was trained with different decision-making objectives based on:

The expected information gain by the agent’s next step.

The location of the center of view, which indicates a future agent’s location that, given the sensors’ capabilities, is expected to yield a maximal modification of the probability map. Formally, this approach relies on the expected information gain procedure which is applied to the global map instead of focusing on the close neighborhood of the agent.

The location of the center of gravity of the map, which is the first moment of the targets’ location probabilities.

In the detection by a single agent, it was found that all three procedures provide similar results. However, since the center of view approach uses additional information about the sensors’ capabilities, in certain cases it demonstrates better performance than the other two algorithms. Notice also that if the sensors are errorless and equal, then the center of view and the center of gravity approaches result in the same detection times.

In a collective detection by the agents’ group, it was found that in all three algorithms, the use of an individual local map by each agent results in shorter detection times than the times when using the global map. Further studies of these scenarios demonstrated that, due to the similar decisions which are governed by the use of a global map, the agents move towards the same areas instead of dividing the efforts over the space to simultaneously investigate different areas. These results meet recent theoretical considerations of the altruistic and egoistic behavior of search agents in the groups [17] and form a basis for further considerations of the problem of “division of labor” in the groups of autonomous agents.

The algorithm was implemented in the Python programming language, and the code can be directly used for solving real-world tasks of detection of targets by groups of mobile agents.

2. Scenarios of Cooperative Detection

The considered detection problem follows the general Koopman scenario [2] (see also [5,8]) with additional consideration of the agents’ motion toward their destination locations. Formally, using the occupancy grid approach [14,15], the problem is defined as follows.

Let be a finite set of cells such that represents a grid over a closed two-dimensional domain, and consider a set of mobile agents , , searching for hidden targets in the domain. For simplicity, we assume that each agent, as well as each target, can occupy only a single cell of the grid.

The state of a cell

,

is defined as a discrete random variable taking values

, such that

implies that the cell

does not contain any target, while

implies that cell

contains a target. In case we need to stress the time

t of the sensing, we will use the notation

, otherwise, we omit it. Note that, at any time t and for each cell

, these events are mutually exclusive and complementary, i.e.,

Each agent

is equipped with a variety of sensors

,

, that provide, not necessarily accurate, information about the states of the cells

,

, relative to the agent’s distance with respect to Koopman’s exponential random search formula [2],

where

represents the search effort applied to the cell

with respect to the distance

between this cell

and the agent’s location

for observation period

. It is assumed that as the distance

gets shorter and as the period

gets longer, the higher the detection probability will be.
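As a rough illustration of the behavior described above, the following sketch evaluates a Koopman-type detection probability of the form 1 − exp(−effort). The function name, its parameters, and in particular the effort expression `sensitivity * period / (1 + distance)` are assumptions made for this example only; the text requires only that the detection probability grows as the distance gets shorter and as the observation period gets longer.

```python
import math


def koopman_detection_probability(distance, period, sensitivity=1.0):
    """Koopman-type detection probability 1 - exp(-effort).

    The effort expression is a hypothetical choice for illustration:
    it increases with the observation period and decreases with distance.
    """
    if distance < 0 or period < 0:
        raise ValueError("distance and period must be non-negative")
    effort = sensitivity * period / (1.0 + distance)  # assumed effort function
    return 1.0 - math.exp(-effort)


if __name__ == "__main__":
    # Closer cells and longer observation periods give higher probabilities.
    for d in (0, 2, 5):
        for tau in (1, 3):
            p = koopman_detection_probability(d, tau)
            print(f"distance={d}, period={tau}: p={p:.3f}")
```

Any other monotone effort function can be substituted without changing the exponential form of the detection probability.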

In order to formalize the possibility of both false positive and false negative detection errors, let us assume that the domain includes both true and dummy targets that broadcast signals indicating their presence in the domain cells. The signals sent by the true targets are considered as true alarms, and the signals that are sent by the dummy targets are considered as false alarms that represent the false-positive errors.

Then, with respect to Koopman’s search Formula (2), the probability of a perceived alarm is defined as follows:

where

is the sensitivity of the sensor

installed on agent

. The dependence of the detection probability at observation period

is considered in the updates of the probability map as defined below.

The probabilities of sending alarms from cells , , are defined by the probability map that represents the information about the targets’ locations in the domain. Moreover, we assume that the agents can share information about the targets’ locations as they have been perceived by the sensors.

The activity of the agents is outlined as follows. The agents start with some initial probability map

that defines initial probabilities

of detecting the targets in cells

,

, at time

.

At time , the agents , , are located in the cells and obtain the sent signals (that are either true or false alarms) from the cells in which the targets can be located. The probability of receiving the signal that was sent from the cell with probability is defined by the Koopman Formula (3).

After receiving the signals, the

th agent

updates the sensor probability maps,

,

where

The resulting sensor probability maps

,

, are combined into the probability map

where probabilities

of the target’s locations in the cells

,

, from the agent’s point of view are specified by fusion of the sensors’ probability maps

,

.

Finally, a global probability map

that defines the probabilities

of the target’s locations in the cells

,

, as they are known by the group of agents is obtained by the fusion of the agents’ probability maps

over all the agents

,

.

In the presented algorithms, the probability maps both at the sensors’ level and at the agents’ level are fused using a simple Bayesian scheme. Exact equations for calculating each probability map are presented in the next sections.

The general scenario of the targets’ detection by a group of mobile agents is outlined as follows. At each step, each agent makes a decision regarding its own next movement. The agent’s decision is based on either the local or the global probability maps (or both of them) as obtained at this step.

After taking its decision, the agent makes a movement step towards the chosen direction. At the completion of the step and arrival at the required cell, the agent observes the cells of the domain by utilizing its sensors, obtains true or false information about the target’s location, and updates the probability maps, respectively.

Then, the detection process continues following the updated probability maps and the agent’s current locations, in a step forward manner.

Our goal is to define the trajectories of the agents over the domain, such that all the targets will be detected in minimal time. Notice again that we do not require the agents to arrive physically to the exact targets’ locations, but rather to detect the locations of the targets at some level of certainty.

It is clear that the formulated problem follows the general Koopman scenario [2], as defined in the framework of probabilistic search [5,16]. Since, in the general case, the computational complexity of finding the optimal solution is

, we are interested in a practically computable near-optimal solution. In the next section, we consider several heuristic approaches and reasonable assumptions that lead to such a solution.

3. Sensor Fusion and Updating Schemes over the Probability Maps

As indicated above, we assume that each agent is equipped with several sensors , , that independently provide, not necessarily accurate, information about the cells states , . In the used framework of the occupancy grid, sensor fusion is conducted as follows.

Let for example

and

be two independent sensors installed on agent

and let

and

be the signals obtained by these sensors at time

. Then, the probability that the target is located in the cell

, that is the state

, is defined by Bayes’ rule as follows (see also [15]):

where the sum is taken over all possible values of

. In the considered case, these values are

.

An extension of this equation to

onboard sensors of the agent

results in the probabilities

of the targets’ locations in the cells

,

, as they are determined by the agent

using its sensors. This equation is based on the approach known as “independent opinion pool” [15] under the assumption that the sensors are conditionally independent and that their reliabilities and accuracies are equivalent.
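The independent opinion pool can be sketched for occupancy probabilities as follows. This is a minimal example, not the implementation used in the simulations: the function names are hypothetical, each map is a plain list of rows, and the fusion assumes conditionally independent sources and an uninformative (equal) prior for the two cell states.

```python
from math import prod


def fuse_cell(probabilities):
    """Fuse occupancy probabilities of one cell reported by independent sources.

    Assumes conditional independence and an equal prior for 'occupied' and
    'empty'; both assumptions are made for this illustration.
    """
    occupied = prod(probabilities)
    empty = prod(1.0 - p for p in probabilities)
    total = occupied + empty
    if total == 0.0:
        raise ValueError("sources are in total conflict for this cell")
    return occupied / total


def fuse_maps(maps):
    """Fuse equally shaped probability maps (lists of rows) cell by cell."""
    rows, cols = len(maps[0]), len(maps[0][0])
    return [
        [fuse_cell([m[r][c] for m in maps]) for c in range(cols)]
        for r in range(rows)
    ]


if __name__ == "__main__":
    sensor_1 = [[0.5, 0.8], [0.2, 0.6]]
    sensor_2 = [[0.5, 0.8], [0.8, 0.6]]
    for row in fuse_maps([sensor_1, sensor_2]):
        print([round(p, 3) for p in row])
```

The same function can be applied twice, first to the sensor maps of one agent and then to the agent maps of the group, which mirrors the hierarchical fusion described in the text.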

Similarly, the location probabilities of different agents can be fused to global probabilities,

of the targets’ locations in cells

,

, as determined by the group of the agents. Notice that such a definition requires a central unit that receives data from each agent and computes a global probability map using the obtained probabilities from all the agents.

The presented equations use the probabilities of the targets’ locations in the cells , , as determined by sensors , , installed on agents , . These probabilities form sensor probability maps that are updated as follows.

At the initial time the probabilities are specified with respect to some initial distribution; if no information is available, these probabilities can be drawn by a uniform distribution. Then, these probabilities are updated by using the Bayesian approach as follows.

As indicated above, let be the signal obtained about cell relying on sensor of agent at time . Recall that in the considered scenario, implies that cell is occupied by a target while implies that cell is empty, both based on sensor .

Then, the state probabilities of cell that are updated by the sensor outputs are:

if a signal is perceived that is

, considering that the static target was located at the cell at time

, then the true positive probability is

Otherwise, while

, the false positive probability is

These equations define an updating scheme of the probabilities map for the sensor given the new observations. They include the probabilities that the sensor perceives the signal given that the target is in the cell and the probability that the sensor does not perceive a signal from cell given that the target is in that cell.
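The Bayesian update of a single cell can be sketched as follows. This is a minimal example under stated assumptions: the function name and the two likelihood parameters (the probability of perceiving an alarm from an occupied cell and from an empty cell) are hypothetical, and in the considered setting their values would come from the sensor model rather than being fixed constants.

```python
def bayes_update(prior, alarm, p_alarm_if_target, p_alarm_if_empty):
    """Posterior probability that a cell is occupied after one observation.

    prior              -- occupancy probability before the observation
    alarm              -- True if the sensor perceived a signal from the cell
    p_alarm_if_target  -- assumed probability of an alarm from an occupied cell
    p_alarm_if_empty   -- assumed probability of a false alarm from an empty cell
    """
    if alarm:
        like_target, like_empty = p_alarm_if_target, p_alarm_if_empty
    else:
        like_target, like_empty = 1.0 - p_alarm_if_target, 1.0 - p_alarm_if_empty
    evidence = like_target * prior + like_empty * (1.0 - prior)
    if evidence == 0.0:
        return prior  # observation is impossible under the model; keep the prior
    return like_target * prior / evidence


if __name__ == "__main__":
    p = 0.5
    for alarm in (True, True, False, True):
        p = bayes_update(p, alarm, p_alarm_if_target=0.9, p_alarm_if_empty=0.1)
        print(f"alarm={alarm}: p(occupied)={p:.3f}")
```

A perceived alarm raises the occupancy probability and a missing alarm lowers it, while a sensor whose alarm rate is the same for occupied and empty cells leaves the probability unchanged.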

In order to define these probabilities, denote by

an alarm signal that is sent about cell

at time

. The value

implies, truly or not, that the cell is occupied and the value

implies, truly or not, that the cell is empty. Then, implementing Koopman’s Formula (3), one obtains the following

where, as above,

is the distance between the cell

and the agent’s location

and

is the sensitivity of the sensor

installed on the agent

.

These equations make it possible to calculate the occupation probabilities at each time given the probabilities at the previous time and the information obtained by the sensors at time . As indicated above, at the initial time , the probabilities are defined based on topographic data and prior information or, in the worst case, can be specified by a uniform distribution over the occupancy grid.

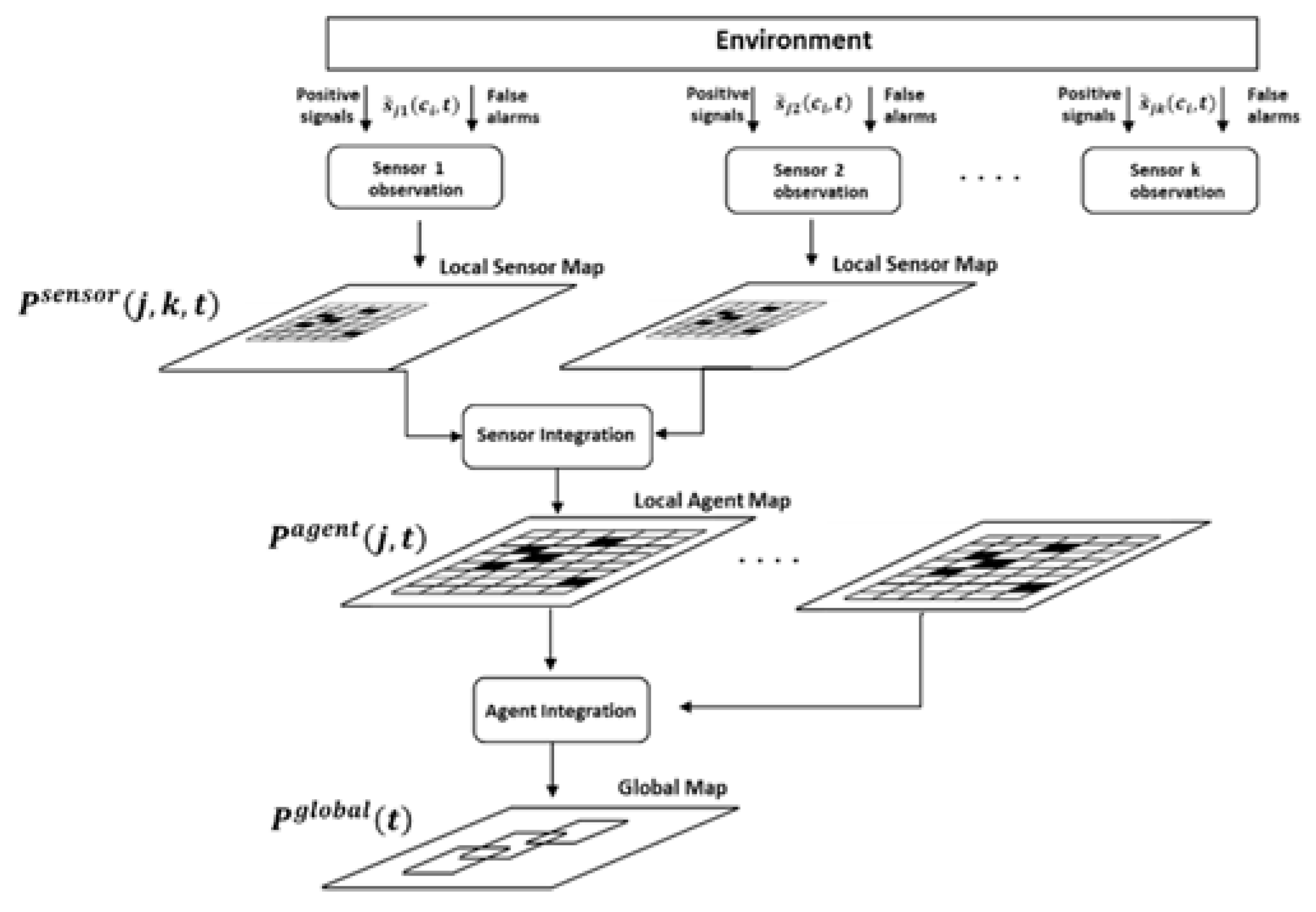

The above defined process of sensors’ fusion is illustrated in Figure 1. The sensors receive signals from the environment. Part of these signals are positive signals from the targets, indicating the real locations of the targets, while others are false alarms (i.e., false positive errors) that correspond to false locations of the targets. Based on the received signals for each sensor, a local sensor map is created (see Equations (7) and (8)). Then, each agent integrates its sensor maps into a local agent map (see Equation (5)). Finally, a global map is created by integrating the agent maps (see Equation (6)). Such a hierarchical structure allows us to consider the maps of each level separately and, consequently, to define a more effective calculation process that uses only the maps required for current computations.

4. Agents’ Policies and Decision Making

In this section, we define the behavior of the group of agents taking actions in a gridded domain aiming to detect hidden targets. The agents detect the targets by their on-board sensors such that the sensors can identify the targets from certain nonzero distances. The goal is to define the trajectories of the agents such that they detect the targets in minimal time.

Formally, this problem is defined as follows. Denote by the trajectory of the agent starting from its initial cell and up to the cell occupied at time . Located at cell the agent makes a decision regarding its next location, following a certain policy that prescribes how to choose the next cell given a probability map . For simplicity and tractability, we assume that policy for each agent does not depend on the time and for any is specified by the applied probability map that holds the aggregated information on the location of the targets as a function of past movements of the agents. The result of the application of the policy is an action that controls the agent movement from the current cell to the next cell . More precisely, the policy is a function , where is a set of possible actions of the agent , and an action is defined by a function that specifies the choice of the agent’s positions. Assuming that the actions provide an unambiguous choice of the agent’s cells, the required solution is to define the function .

Assume that there are targets, , distributed somewhat over the domain, and recall that the probability defined by Equation (6) is the probability of detecting the targets in cells , , by the group of the agents. Both the number of the targets and the global probability are unknown to the agents; in real situations, the former value either cannot be obtained, or its knowledge requires additional efforts, while the specification of the latter value requires a central unit that obtains data from all the agents, a requirement which can be practically challenging in many applications. However, we use these values as parameters for simulations and sensitivity analysis to demonstrate that better results can be provided by a separate usage of the agents’ probability maps, such that the use of a central unit is often unnecessary.

Denote by

the time required to detect the target

,

, with probability

given the agents’ policies

. Then, the goal is to define such policies that result in minimal time for detecting the last target, that is

Note that this is an NP-hard problem that cannot be solved directly by conventional linear or integer mathematical programming [4,6]. In order to approximate the policies in a tractable manner, we evaluate three different approaches:

Maximizing the expected information gain (EIG) locally, over the cells that are reachable by each of the agents in a single move;

Heading the agents toward the center of view (COV), that is, the point that provides the maximum expected information gain over all the cells in the domain;

Heading the agents toward the center of distribution, also known as the center of gravity (COG), which is defined by the first moment of the probability map.

Notice that the last approach is a greedy heuristic that requires minimal computation efforts, while the first two approaches are more complicated heuristics that require the computation of the possibilities in the local or the global neighborhoods of each agent.

For comparison, we also consider two reference cases: static agents that remain in their initial places, and an agent that accumulates the signals received from the targets while being governed by the brute force learning rule. We apply the latter to a single agent only, since this case is extremely demanding computationally.

The expected information gain

for each sensor

of the agent

at time

is defined by the sum of the Kullback–Leibler (KL) divergence measures between the sensor probability map

as obtained after executing a chosen action

by the agent

and the sensor probability map

obtained without execution of any action; where such a null action is denoted by

. Then, the expected information gain,

is defined as

where

and the logarithm is taken to base 2; thus, the distance

is represented by the average number of bits. Certainly, instead of the KL divergence, which is not a true metric in the space of probability distributions (it is neither symmetric nor does it satisfy the triangle inequality), other information-theoretic measures can be used. In particular,

can be defined by the Jensen–Shannon divergence as the average of the KL distances

, or by the use of Ornstein or Rokhlin metrics (for application of such metrics to search problems see [4]). Nevertheless, here we use the conventional definition of the

and since the heuristics do not need the metric properties of the probability distributions space, we do not require the distance function to be a formal metric.
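The KL-based distance between two probability maps can be sketched as follows. The example treats every cell as a two-state (occupied or empty) distribution and sums the per-cell KL divergences in bits; this per-cell reading, the function names, and the clipping constant `eps` are assumptions made for the illustration.

```python
from math import log2


def bernoulli_kl_bits(p, q, eps=1e-12):
    """KL divergence (in bits) between two-state distributions (p, 1-p) and (q, 1-q)."""
    p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
    q = min(max(q, eps), 1.0 - eps)
    return p * log2(p / q) + (1.0 - p) * log2((1.0 - p) / (1.0 - q))


def map_divergence_bits(map_p, map_q):
    """Sum of per-cell KL divergences between two equally shaped probability maps."""
    return sum(
        bernoulli_kl_bits(p, q)
        for row_p, row_q in zip(map_p, map_q)
        for p, q in zip(row_p, row_q)
    )


if __name__ == "__main__":
    after_action = [[0.5, 0.9], [0.1, 0.5]]
    null_action = [[0.5, 0.6], [0.4, 0.5]]
    print(f"{map_divergence_bits(after_action, null_action):.4f} bits")
```

The divergence is zero for identical maps and is not symmetric in its arguments, which is why the order of the two maps matters when comparing an action with the null action.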

At the agent’s level, the expected information gain

is defined by the sum of the expected information gains for each sensor

, that is

Similarly, for the group of

agents, the expected information gain

at time

is

Notice that instead of calculating the KL distances for each agent based on its own map as well as calculating the global probability maps over all agents (Equations (5) and (6), respectively), the expected information gains of higher levels are calculated by the sums of expected information gains of the lower levels. Thus, the EIG of the group is calculated as a sum of the EIGs of the agents, and the EIG of the agent is calculated as a sum of the EIGs of its sensors. Such a definition follows the additive property of information and leads to essentially simpler computations.

Using the EIGs, the agent’s decision-making follows the maximization of the EIG measure, that is

For example, while located in any cell an agent can choose one of nine movement possibilities: make a step forward, backward, left, right, left-forward, left-backward, right-forward, right-backward, or stay in its current cell. Then, by Equation (15) the agent chooses such a movement that results in obtaining the maximum expected information gain about the targets’ locations.
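The choice among the nine movement possibilities can be sketched as follows. The example is a hedged illustration only: `expected_gain` is a hypothetical callable that stands in for the expected information gain of a candidate cell, and the removal of moves that would leave the grid is an assumption, since the text does not specify the behavior at the borders of the domain.

```python
# The nine movement possibilities: eight neighbors plus staying in place.
MOVES = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]


def reachable_cells(cell, n_rows, n_cols):
    """Cells reachable in one step; moves leaving the grid are dropped (assumption)."""
    r, c = cell
    return [
        (r + dr, c + dc)
        for dr, dc in MOVES
        if 0 <= r + dr < n_rows and 0 <= c + dc < n_cols
    ]


def choose_next_cell(cell, n_rows, n_cols, expected_gain):
    """Pick the reachable cell with the maximal value of the supplied gain function."""
    return max(reachable_cells(cell, n_rows, n_cols), key=expected_gain)


if __name__ == "__main__":
    # Toy gain: cells closer to the corner (9, 9) are assumed more informative.
    def toy_gain(cell):
        return -(abs(cell[0] - 9) + abs(cell[1] - 9))

    print(choose_next_cell((5, 5), 10, 10, toy_gain))
```

In a full implementation, the gain function would evaluate the sensor probability maps expected after the move; here it is replaced by a toy function so that the selection logic can be run in isolation.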

The sensor probabilities

given the agent action

can be defined by using either the global probability map,

or by using the agent probability map,

. In the former case, the sensor probabilities are:

while in the latter case these probabilities are defined as follows

where

is the agent’s location after conducting the action

and

is the agent’s location if it decides to avoid conducting any action, i.e., staying in its current location.

A second approach to govern the agent’s action implements the center of view (COV) measure, aiming at the grid point that provides the maximum expected information gain over all the cells in the domain. In other words, the difference between the information that the agent obtains in its current position and the information that it expects to obtain while being located at the COV point reaches its maximum. Formally, it means that, in contrast to the EIG that is calculated over neighboring locations (thus, the eight points around the current agent’s location and its current point), the COV is based on an EIG calculated over all the points in the domain. Thus, instead of calculating the sensor probabilities by Equations (16)–(19) using distances

and, for the

calculation, the sensor probabilities are defined by using the distances

between the cell

and other points in the domain,

,

, that can be considered as candidate locations of the

. In parallel to Equations (16) and (18) in this case, we have

If the agent chooses to stay in its current location, then the distance is defined as above by and the sensor probabilities are calculated by Equations (17) and (19).

By the use of these sensor probabilities, the

is defined in parallel to the

:

and the

is the point, in which

reaches its maximum, that is

Finally, for the center of gravity (COG), which is the first moment of the probability map, the following calculations are used. In a two-dimensional domain, the location of each cell

,

, is defined by two coordinates,

. In addition, recall that

stands for the state of the cell

. Then, the coordinates of the

for the axes are

and the final location of the

is obtained by rounding the values

and

to the closest integers that is

Notice that since we consider only the states , the sum of the probabilities in the denominator differs from unity and varies with time and with the number of targets.

Since both here and in the previous case the desired points COV and COG can be located far from the agent’s current location, the agent moves toward these points step by step and changes its direction with respect to the changes of the coordinates of both COV and COG.
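The center of gravity computation and the stepwise motion toward it can be sketched as follows. The function names and the map layout (`prob_map[y][x]`, a list of rows) are assumptions made for this example; the normalization by the sum of the probabilities reflects the remark that this sum generally differs from one.

```python
from math import floor


def center_of_gravity(prob_map):
    """First moment of a probability map, rounded to the closest grid cell.

    prob_map[y][x] holds the occupancy probability of cell (x, y).
    Returns the (x, y) coordinates of the center of gravity.
    """
    total = sum(sum(row) for row in prob_map)
    if total == 0:
        raise ValueError("probability map is identically zero")
    x_bar = sum(x * p for row in prob_map for x, p in enumerate(row)) / total
    y_bar = sum(y * p for y, row in enumerate(prob_map) for p in row) / total
    # floor(v + 0.5) rounds halves up, unlike Python's round() (ties to even).
    return floor(x_bar + 0.5), floor(y_bar + 0.5)


def step_toward(position, target):
    """Move one cell (possibly diagonally) from position toward target."""
    def sign(v):
        return (v > 0) - (v < 0)

    (x, y), (tx, ty) = position, target
    return x + sign(tx - x), y + sign(ty - y)


if __name__ == "__main__":
    prob_map = [[0.1, 0.1, 0.1], [0.1, 0.1, 0.1], [0.1, 0.1, 0.9]]
    target = center_of_gravity(prob_map)
    print(target, step_toward((0, 0), target))
```

Because the map changes after every observation, the target point would be recomputed at each step and the agent would adjust its heading accordingly.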

5. Policies Control and Brute Force Learning

In order to control the obtained policies, we apply the simple look-backward method. Based on this method, the control of the agents’ policies is conducted as follows.

Let the global probability map at time

be

and assume that, following the chosen policies

,

, the agents made a decision and conducted corresponding actions. Then, at time

, each of the agents is located in a new cell and following the observations from these cells the global probability map

. The value

is the actual information gain that was obtained by the actions defined by the policy

.

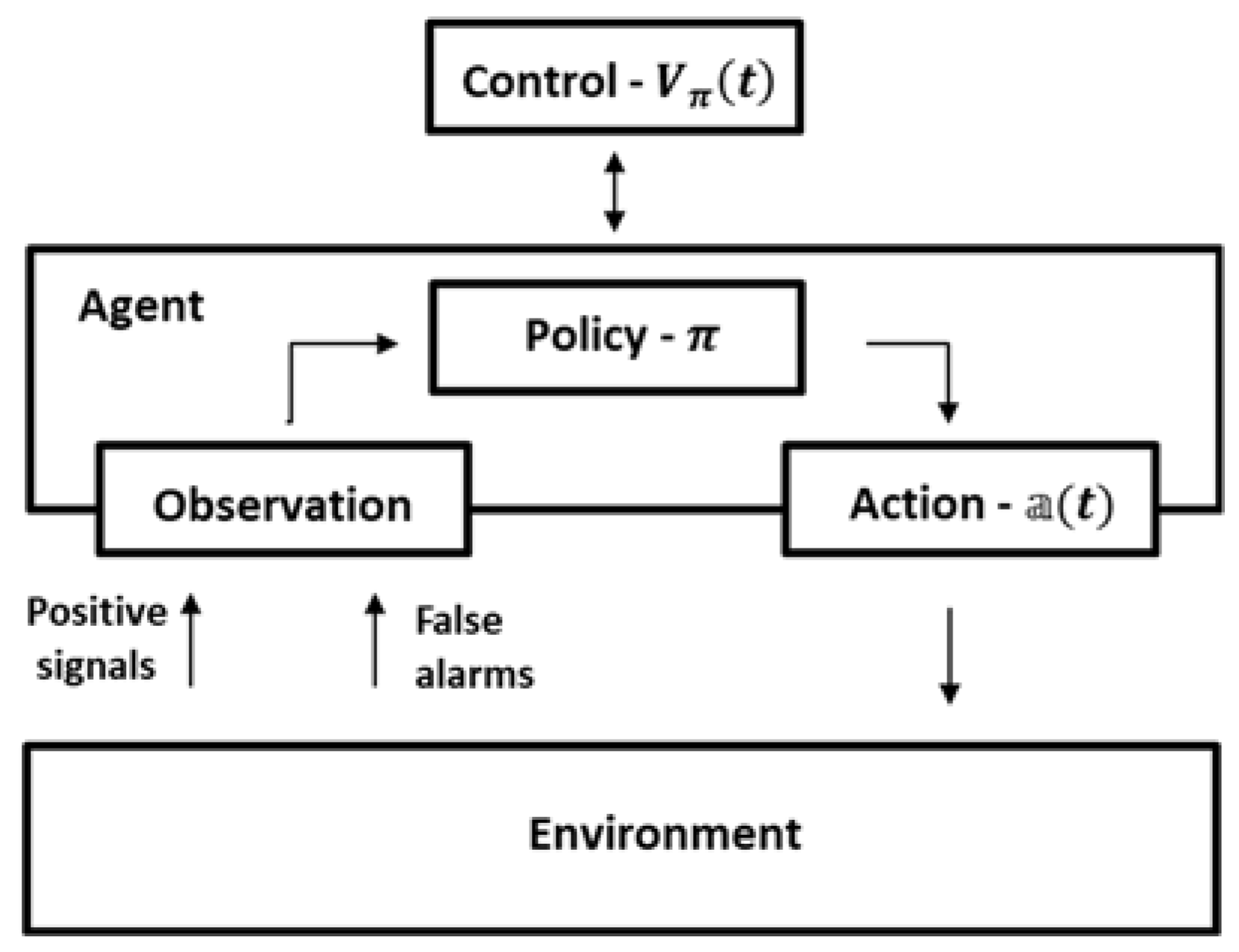

The defined decision-making process and control method are illustrated in Figure 2.

Based on the policy , at time each agent makes a decision regarding its action. After the action and the corresponding movement, the agent observes the environment, and following the obtained sensor maps, the agent map and the global map are refined. In parallel, in order to control the agent’s policy, a value of information gain is calculated.

The calculated information gain

indicates the efficiency of the applied policy, and it is used as a comparative measure of the agents’ decisions: its accumulated value up to some time

gets larger as the agents’ policies are more efficient in the sense of the quantity of the obtained information about the targets’ locations. Accordingly, the best policy can be defined as follows:

where

denotes the best policy among all the combinations of the agents’ policies,

,

, that can be applied to the available global maps till time

.

The learning measure is also based on the actual information gain, but in this case, it is defined over the actions

that were chosen by the agents, namely:

where

stands for the global probability map obtained after performing the actions of all the agents, and

is the global probability map, if all the agents stay at their current locations.

The selection of actions is conducted as follows. Assume that at time

the agents are at their locations and observe a certain global probability map

. Then, for each combination

of their actions and for the null action

, global maps

and

are obtained and the average information gain

for the combination

is specified. The best combination of actions is defined by the maximal value

, that is

It is clear that this is the brute force learning that requires a consideration of all possible combinations of actions for all the agents with a large number of iterations. Thus, in practice, such a policy cannot be performed for a large number of agents and actions. However, for a single agent, this learning step can be performed in a relatively short time by available computation resources.
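The growth of the brute force search can be illustrated with a short sketch. It enumerates all joint actions of the group and keeps the one with the largest value of a supplied gain function; the function names and the toy gain used in the example are hypothetical, and a real gain would require recomputing the global probability map for every combination.

```python
from itertools import product


def joint_action_count(n_actions, n_agents):
    """Number of joint actions that brute force has to evaluate in one step."""
    return n_actions ** n_agents


def best_joint_action(actions, n_agents, gain):
    """Exhaustively search all combinations of actions for the maximal gain."""
    return max(product(actions, repeat=n_agents), key=gain)


if __name__ == "__main__":
    actions = range(9)  # nine movement possibilities per agent
    for m in (1, 2, 3, 5):
        print(f"{m} agent(s): {joint_action_count(9, m)} joint actions per step")

    # Toy gain with a known maximizer, used only to exercise the search.
    def toy_gain(joint):
        return -sum((a - 4) ** 2 for a in joint)

    print(best_joint_action(actions, 3, toy_gain))
```

With nine actions per agent, the number of combinations grows as a power of the number of agents, which is consistent with restricting the brute force reference to a single agent.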

In the considered work, the brute force learning is used as a reference for the evaluation of the suggested methods.

6. Numerical Simulations and Analysis

Numerical simulations were implemented in the Python programming language and executed on a regular PC with an Intel i5-8265U processor. In all the cases, unless specified otherwise, the run times of the algorithms are given in numbers of iterations. Also, in all the tables, the policies are labeled with respect to the defined approaches: expected information gain (EIG), center of view (COV), and center of gravity (COG), as presented in Section 4.

In the simulations, the search is conducted over a gridded square domain of size cells, and it is assumed that each agent and each target can occupy only one cell in the domain. In the simulations, different setups included different numbers of the agents and different numbers of the targets. Also, we assume that there are two types of sensors while each agent is equipped with two sensors and of different types with corresponding sensitivities and . Both true alarms and false alarms are sent with respect to the sensors’ types and are perceived separately by each of the two sensor types.

Following the goal of finding such policies that result in a minimal time of detecting the last target (see Equation (11)), we determined the maximal simulation time by

where, as indicated above,

is the time required to detect the target

,

, with probability

. Then, the policies that result in a minimum time

given probability

are the best policies. In the simulations, we used the probability

.

To reduce systematic errors, the results presented below were obtained by averaging the outcomes of five repeated trials, each of which contained thirty sessions, executed with the same parameters, with the same initial locations of both the searchers and the targets. The true and false alarms in the sessions were generated by the same uniform distribution with a random seed for each trial.

6.1. Detection by a Single Agent

Let us start with a small illustrative example of detection by a single agent . To simulate the brute force learning defined by Equations (31) and (32), we considered a small domain of the size cells. The false alarms were broadcast uniformly over the domain, and the frequency of sending false alarms from all cells was false alarms per second for each type of sensor, that is, on average alarms per second from each cell to each type of sensor. The sensitivities of the sensors are .

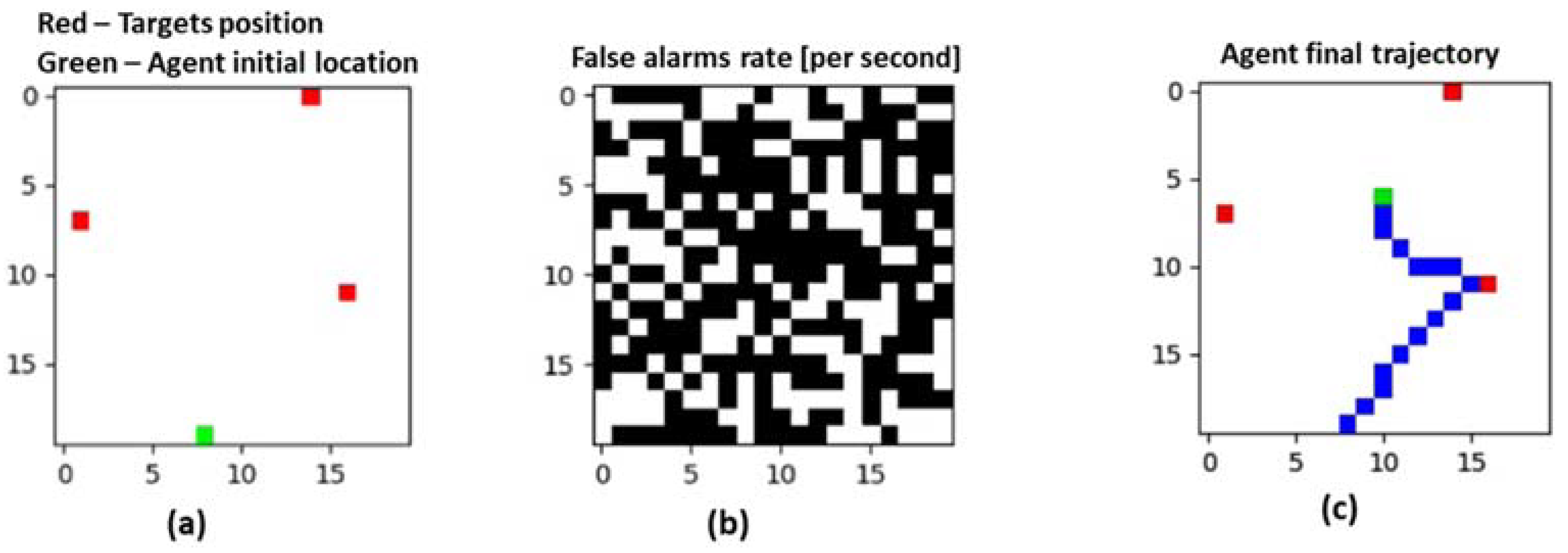

In the first setting, the single agent

was detecting

targets located in the cells with coordinates

,

and

; the starting position of the agent was

. The results of the simulation trials are summarized in

Table 1.

As expected, the best result, which leads to the minimum of is obtained by the brute force learning, while the times obtained by the policies based on the expected information gain (), the center of view (), and the center of gravity () are close to this best result. Notice that since the detection is conducted by a single agent, the results obtained by the and policies are equal.

Figure 3 illustrates the activity of a single agent detecting three targets using the center of view (

) policy.

The values of the accumulated information gain (see Equation (30))

that characterizes the effectiveness of the policy for the simulated policies are presented in

Table 2. The time

is the minimal time of detecting the last target based on the brute force policy.

With respect to the detection time, the maximal accumulated information gain is obtained by the brute force learning policy, while the , , and policies result in the accumulated information gains that are close to the maximum.

In the next simulation, a single agent

is detecting

targets located in the cells of the following coordinates:

,

,

,

and

; the starting position of the agent is

. The results of the simulation trials are summarized in

Table 3.

Similar to the previous case, the best result is obtained by the brute force learning, while the times obtained by the policies based on the center of view () and the center of gravity () are close to this best result. However, for this case with a larger number of targets, one can already notice that the policy based on the expected information gain () is worse than the first three policies.

The relations between the values of the accumulated information gain, in this case, are again similar to the case of the detection of three targets. The best result is provided by the brute force learning policy. Then, the results of the and the policies are close to those of the brute force, while the policy is less effective. The worst results are obtained by the static agent.

6.2. Detection by Multiple Agents

Now let us consider detection by a group of agents that can share information and consequently use probability maps of each other as well as a global probability map, which represents the knowledge of the group.

Since, in this case, we have not considered the brute force learning, in the simulations we used a larger domain of size cells. As mentioned above, the false alarms were broadcast uniformly over the domain. However, the frequency of sending false alarms from all cells was false alarms per second for each type of sensor, that is, alarms per second on average from each cell to each type of sensor. It is assumed that each agent is equipped with sensors of two types. The sensitivities of the sensors of each type are denoted by and .

Recall that an agent located in a cell can choose one of nine alternatives (moving to one of the eight neighboring cells or staying in its current cell), while for an agent located at the border of the domain the number of alternatives is smaller due to the boundary conditions of the map.
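The set of alternatives available to an agent can be enumerated as in the following minimal sketch. The function name `feasible_moves` and the zero-based `(row, col)` indexing are assumptions made for illustration; the paper does not specify an implementation.

```python
def feasible_moves(row, col, n_rows, n_cols):
    """Return the cells an agent at (row, col) can occupy at the next step.

    The agent may stay in place or move to any of the eight neighboring
    cells; cells outside the n_rows x n_cols grid are discarded.
    """
    moves = []
    for d_row in (-1, 0, 1):
        for d_col in (-1, 0, 1):
            r, c = row + d_row, col + d_col
            if 0 <= r < n_rows and 0 <= c < n_cols:
                moves.append((r, c))
    return moves


if __name__ == "__main__":
    print(len(feasible_moves(5, 5, 10, 10)))  # interior cell: 9 alternatives
    print(len(feasible_moves(0, 5, 10, 10)))  # edge cell: 6 alternatives
    print(len(feasible_moves(0, 0, 10, 10)))  # corner cell: 4 alternatives
```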

In addition to the considered policies that are based on (i) expected information gain (

), (ii) center of view (

), and (iii) center of gravity (

), in the following simulations we also distinguish decision-making along two dimensions: the map used, namely (a) the agent probability map versus (b) the global probability map, and the selection of actions, namely (a) by each agent separately versus (b) jointly by all the agents in the group. The use of the maps and the selection of the actions for the different policies are summarized in

Table 4.

The table presents the fact that when applying the policy, from its current cell the agent can either move to one of eight neighboring cells or stay in its current location. When applying the policy the agent can choose one of cells as a desired center of view, and when applying the policy the agent can consider only one cell as the cell.

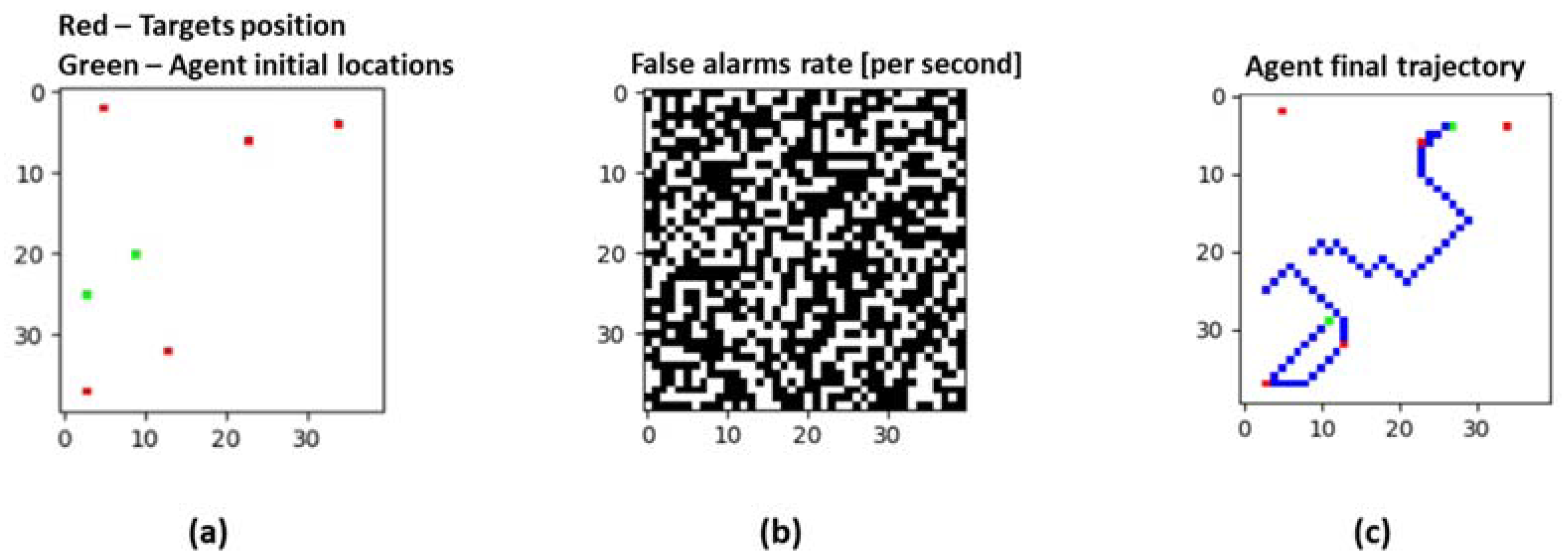

For consistency, let us start with the same setting as in the previous simulations, namely by detection of

targets located at the cells with coordinates

,

,

,

and

. These simulations are conducted for

agents, each of which is equipped with two sensors with respective sensitivities

,

. Initial positions of the agents are

and

. The results of the simulations when using different policies are presented in

Table 5 (cf.

Table 3).

Figure 4 illustrates the activity of two agents detecting five targets when using the expected information gain (

) with

policy.

As expected, the worst results are obtained by the static agents. The best results are provided by the policies based on the center of view () and center of gravity (). As indicated above, these policies result in the same detection times. Finally, the policy, as seen above, results in a longer search than the and the policies, yet a shorter one than the static agent policy.

In addition, notice that the decision-making policies that are based on the agent map provide significantly better results than the policies based on the global map. In other words, in the detection tasks, more information is not always better, unless actions between the agents can be synchronized. The reason for this result is the following. Relying on a single global map, all agents aim at the same preferable regions with the higher probabilities of detecting the targets while ignoring the regions with the lower probabilities. However, because of the existence of both false positive and false negative errors, the targets can appear in those ignored regions, to which the agents return only after the unsuccessful detection in the preferable regions. All these movements waste a lot of time. In contrast, while using the agent maps, each agent considers its local region and continues to the other regions only after unsuccessful detection in its close neighborhood. In such a manner, the agents divide the task and conduct the detection process in parallel in different regions. At the same time, the global map is used for terminating the detection for all the agents.

Finally, notice that a better choice of actions is provided by applying the group action. However, since it requires considerable computational power without a significant improvement of the detection time, this approach is less attractive for practical tasks.
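The computational cost of the group action can be illustrated by counting candidate actions. The sketch below assumes that a group action is chosen by exhaustively enumerating all combinations of the agents' individual moves; this enumeration scheme and the function names are assumptions made for illustration, since the exact selection procedure is defined elsewhere in the paper.

```python
from itertools import product


def separate_evaluations(moves_per_agent):
    """Number of candidates when each agent chooses its move on its own."""
    return sum(len(moves) for moves in moves_per_agent)


def joint_actions(moves_per_agent):
    """All combinations of individual moves, one move per agent."""
    return list(product(*moves_per_agent))


if __name__ == "__main__":
    # Two agents in interior cells, each with nine alternatives
    # (labeled 0..8 here instead of by cell coordinates).
    two_agents = [list(range(9)), list(range(9))]
    print(separate_evaluations(two_agents))  # 18
    print(len(joint_actions(two_agents)))    # 81
```

Under this assumption, the number of candidates grows linearly with the number of agents for separate selection but exponentially for joint selection, which is consistent with the higher computational demand noted above.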

The accumulated information gain for this simulated detection of

targets by

agents is presented in

Table 6; the CPU times required for such detection are presented in

Table 7.

The obtained results support the previous observations. The highest accumulated information gain is obtained by the and the policies, the policy obtains worse results, and the lowest gain is obtained by the group of static agents. Also, better results are achieved by the decision-making policies based on the agent maps, and a better choice of actions is provided by the use of group action.

In order to represent the relation between the detection efficiency and the sensors’ sensitivity, a similar detection scenario was simulated for the agents that are equipped with sensors with different sensitivities

and

,

.

Table 8 presents the detection times

and the accumulated information gains

for this scenario.

A comparison of the obtained times and information gains with the results presented in

Table 6 and

Table 8, respectively, shows that, in general, changing the sensors’ sensitivities preserves the already observed trends in the efficiencies of the policies. At the same time, it stresses an advantage of the group action relative to the actions by each agent separately.

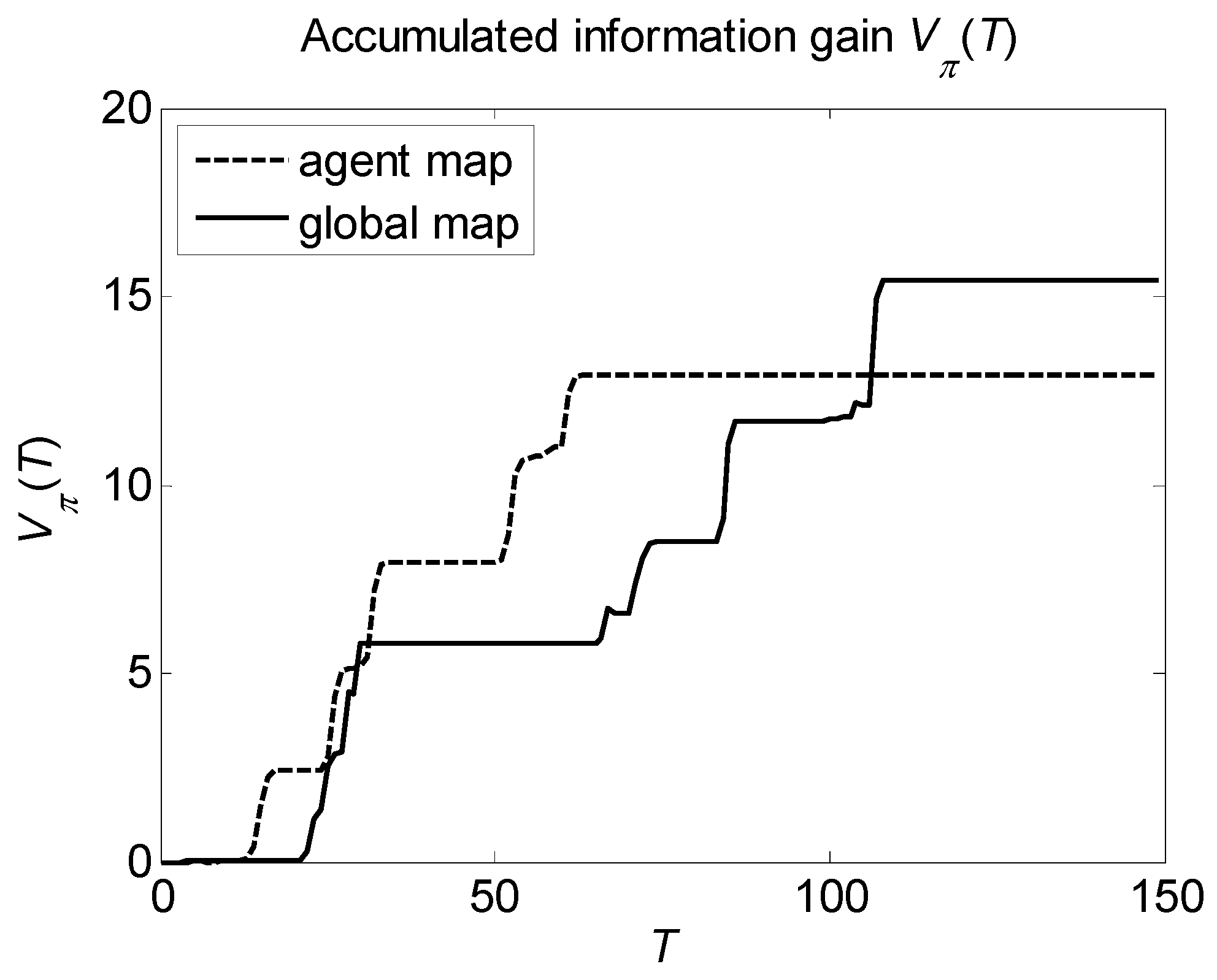

Finally, let us consider the dependence of the accumulated information gain on the detection time. An example of such functions is shown in

Figure 5, where we used the results of the previous simulation of detecting

targets by

agents with different sensor sensitivities

and

,

, following the

policy with agent action choice.

It is seen that at the beginning of the search process, the policy based on the agent map accumulates information faster than the policy based on the global map. However, as the search process continues, the accumulated information gain obtained by the global map policy converges to a value that is significantly greater than the one to which the agent map policy converges.

For validation of the presented results, further simulations were conducted for different settings. The obtained detection times and information gains demonstrate the same trends in the policies’ efficiency. In addition, it was found that as the number of agents grows, the difference between the best policy and the nearly best and policies increases.

7. Discussion

The paper presents three heuristic techniques for navigation of autonomous agents searching for hidden targets in the presence of false positive and false negative errors. Two of these heuristics are based on the expected information gain calculated over the local neighborhood of each agent ( policy) or relative to the center of view ( policy), and the third heuristic uses the center of gravity ( policy) of the targets’ location probabilities. In order to make decisions regarding the next movements, the agents use either their own probability maps or a global probability map.
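For illustration, the center of gravity of a probability map can be computed as in the sketch below. The exact definition used by the policy is the one given in Section 4; this sketch assumes the usual probability-weighted mean of the cell coordinates, and the function name and the list-of-lists map representation are hypothetical.

```python
def center_of_gravity(prob_map):
    """Probability-weighted mean of cell coordinates of a 2D map.

    prob_map is a list of rows; prob_map[i][j] is the probability that a
    target is located in cell (i, j). The map need not be normalized.
    """
    total = sum(sum(row) for row in prob_map)
    if total == 0:
        raise ValueError("probability map has zero total mass")
    row_center = sum(i * p for i, row in enumerate(prob_map) for p in row) / total
    col_center = sum(j * p for row in prob_map for j, p in enumerate(row)) / total
    return row_center, col_center


if __name__ == "__main__":
    uniform = [[1.0, 1.0, 1.0] for _ in range(3)]
    print(center_of_gravity(uniform))  # (1.0, 1.0): the middle of the grid
```

The result is generally a non-integer point, so an agent moving on the grid would head toward the cell nearest to it.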

The simulations show that in short-term detection processes, the policies based on the agent map outperform the policies based on the global map, both when the agents’ movements are not centrally synchronized (individual decision-making) and when the agents’ actions are explicitly synchronized (collective decision-making in the group). However, in long-term detection, the information gain accumulated during the search when using the global map policy was significantly larger than that accumulated when using the agents’ maps policy. A probable reason for this result is the following: when using the global map, the agents calculate the information gain while also taking into account irrelevant information based on false alarms, whereas when using the agents’ maps the influence of such alarms is lower and, consequently, the accumulated information gain is lower as well.

Detection using the policy demonstrated lower efficiency (in terms of detection time) than the and policies when using both the agents’ and the global probability maps. The main reason for such a result is the following. On the one hand, the policy does not always identify the next step, since the differences between the expected information gain obtained by staying in the current cell and that obtained by moving to a neighboring cell are extremely small and cannot be used for a reasonable selection of the actions. On the other hand, in order to reveal the center of view, the policy requires the agent to check all the cells in the domain and, consequently, succeeds in finding a significant change in the expected information gain.

When sensors with equal sensitivities are used, the and policies result in close or even equal detection times. Therefore, since the policy has a much lower computational complexity than the policy, it should be preferred when the agents are equipped with similar sensors. However, if the sensitivities of the sensors are different, then the policy is significantly better.

As expected, a decision-making heuristic that relies on group actions leads to better performance than one that relies on single agents’ actions, yet the former requires much greater computational effort. To shorten the running time, the number of calculations can be decreased by using a probability threshold: at each step of the computation, the cells with probabilities lower than the threshold are ignored, so the number of calculations is reduced without significantly influencing the quality of the search results.

Finally, notice that the results obtained with the presented techniques are close to the results obtained by the optimal brute-force learning method. This comparison both validates the suggested methods and demonstrates that, for detection over large domains, where brute force learning cannot be used because of intractable computational complexity, these methods can provide suboptimal results with reasonable computational effort and in reasonable running time.
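The probability threshold can be sketched as a simple filter over the probability map. The function name, the list-of-lists map representation, and the threshold value used in the example are hypothetical and serve only as an illustration.

```python
def candidate_cells(prob_map, threshold):
    """Return coordinates of cells whose probability is not below the threshold.

    Cells with probabilities lower than the threshold are ignored, so any
    later computation runs only over the returned cells.
    """
    return [
        (i, j)
        for i, row in enumerate(prob_map)
        for j, p in enumerate(row)
        if p >= threshold
    ]


if __name__ == "__main__":
    prob_map = [
        [0.01, 0.20],
        [0.50, 0.04],
    ]
    # With an illustrative threshold of 0.05, two of the four cells remain.
    print(candidate_cells(prob_map, 0.05))  # [(0, 1), (1, 0)]
```

The savings depend on how concentrated the probability map is: the more probability mass sits in a few cells, the fewer cells have to be evaluated at each step.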

8. Conclusions

In the paper, we considered the problem of detection of multiple targets by a group of mobile agents, which directly extends the classical Koopman search problem [

2]. In contrast to many known algorithms, we addressed detection with both false positive and false negative detection errors.

The suggested solution implements three different levels of the agent’s knowledge about the targets’ locations: information that is available to the group of agents, information available to a single agent, and information obtained by a single on-board sensor of an agent.

For these settings, we considered three decision-making policies based on different considerations of the expected information gain, which can be obtained by the agent in its next step. Namely, the policies considered a local neighborhood of the agent, a location of the “center of view” from which the agents can obtain maximum information using their sensors, and a location of the “center of gravity” of the targets’ probability map.

The results obtained using the suggested policies were compared against the results provided by the worst policy, in which the agents are static, as well as the best policy of the brute force learning when tractable.

Simulations of the suggested solutions demonstrate that the best results among the constructed policies are obtained by the policy based on the center of view. Close results are provided by the policy based on the center of gravity, and the worst results, yet sometimes satisfactory, were achieved by the policy based on the expected information gain over a local neighborhood of the agent.

In addition, it was found that in the considered problem including both false positive and false negative detection errors, decision-making policies based on the agent maps provide significantly better results than the policies based on the global map.

The best search policy under the considered settings was obtained by relying on group actions, but at the expense of high computational complexity and without significantly improving the detection time relative to the suggested heuristics. This observation makes the approach less attractive to implement.

Finally, it was demonstrated that the policies based on the agent map are more effective for the detection in a given short period of time, while in a long-term detection, the policies based on the use of a global map result in better outcomes in terms of accumulated information gain.

The constructed algorithms and software can form a basis for the further development of the proposed methods as well as other methods related to probabilistic search and detection. These methods can be used directly for practical applications in various fields, such as smart cities, military applications, and autonomous vehicles.