What Can Local Transfer Entropy Tell Us about Phase-Amplitude Coupling in Electrophysiological Signals?

Abstract

1. Introduction

2. Information Theory and Transfer Entropy
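Transfer entropy (TE) from a source Y to a target X is the time average of local (pointwise) values. The local value at time n+1 is t(n+1) = log2 [ p(x_{n+1} | x_n^(k), y_n) / p(x_{n+1} | x_n^(k)) ], where x_n^(k) denotes the target's past k samples.

The sketch below illustrates that definition. It is not the authors' estimator. It assumes:

- already-discretized (integer-coded) series,
- a source lag of one sample,
- plug-in (relative-frequency) probabilities.

The function name `local_transfer_entropy` is hypothetical.

```python
import numpy as np
from collections import Counter


def local_transfer_entropy(source, target, k=1):
    """Hypothetical helper: local TE (bits) from `source` to `target`.

    Assumes discrete (integer-coded) series, a target history of length k,
    a source lag of one sample, and plug-in (relative-frequency) probabilities.
    Returns one local value per predicted target sample.
    """
    source = np.asarray(source)
    target = np.asarray(target)
    n = len(target)

    # Each sample: (next target value, target history of length k, current source value)
    samples = [
        (target[t + 1], tuple(target[t - k + 1 : t + 1]), source[t])
        for t in range(k - 1, n - 1)
    ]

    # Joint and marginal counts needed for the two conditional probabilities
    c_next_hist_src = Counter(samples)
    c_hist_src = Counter((h, s) for _, h, s in samples)
    c_next_hist = Counter((x, h) for x, h, _ in samples)
    c_hist = Counter(h for _, h, _ in samples)

    local = np.empty(len(samples))
    for i, (x, h, s) in enumerate(samples):
        p_with_source = c_next_hist_src[(x, h, s)] / c_hist_src[(h, s)]
        p_without_source = c_next_hist[(x, h)] / c_hist[h]
        local[i] = np.log2(p_with_source / p_without_source)
    return local


# Toy check: x copies y with a one-sample delay
rng = np.random.default_rng(0)
y = rng.integers(0, 2, 10_000)
x = np.roll(y, 1)  # x[t] = y[t - 1]; x[0] wraps around to y[-1]
te_y_to_x = local_transfer_entropy(y, x, k=1)
te_x_to_y = local_transfer_entropy(x, y, k=1)
print(round(te_y_to_x.mean(), 2), round(te_x_to_y.mean(), 2))
```

In this toy system the local values from y to x sit near 1 bit, and the reverse direction averages near 0 bits. Individual local values can be negative. Those are the time points at which the source is misinformative about the target's next value. Plug-in estimates are biased upward for short series, so this sketch is only meant to make the definition concrete.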

3. Approaching PAC Estimation with Information Theory Local Measures
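Phase-amplitude coupling measures operate on two derived series:

- the instantaneous phase of a low-frequency band,
- the amplitude envelope of a high-frequency band.

The numpy-only sketch below shows one way to produce those series and discretize them for a discrete local estimator. It rests on several assumptions, none of them the paper's settings:

- an ideal (brick-wall) FFT band-pass combined with the FFT-based analytic signal, a simplification of the FIR/IIR filtering normally applied to recordings,
- illustrative function names, band edges, bin count and binning scheme.

```python
import numpy as np


def bandpass_analytic(x, fs, f_lo, f_hi):
    """Analytic signal of `x` restricted to [f_lo, f_hi] Hz (0 < f_lo < f_hi < fs/2).

    Ideal FFT band-pass: positive-frequency bins in the band are doubled,
    all other bins (including negative frequencies) are zeroed.
    The filter is circular, so edge effects appear unless x is periodic.
    """
    x = np.asarray(x, dtype=float)
    freqs = np.fft.fftfreq(len(x), d=1.0 / fs)
    gain = np.where((freqs >= f_lo) & (freqs <= f_hi), 2.0, 0.0)
    return np.fft.ifft(np.fft.fft(x) * gain)


def phase_amplitude_symbols(x, fs, phase_band, amp_band, n_bins=8):
    """Hypothetical helper: discretized low-band phase and high-band amplitude."""
    phase = np.angle(bandpass_analytic(x, fs, *phase_band))  # in [-pi, pi]
    amp = np.abs(bandpass_analytic(x, fs, *amp_band))
    # Phase: equal-width bins over [-pi, pi]; pi itself falls in the last bin
    phase_sym = np.floor((phase + np.pi) / (2 * np.pi) * n_bins)
    phase_sym = np.clip(phase_sym, 0, n_bins - 1).astype(int)
    # Amplitude: equal-count bins from the empirical quantiles
    edges = np.quantile(amp, np.linspace(0, 1, n_bins + 1)[1:-1])
    amp_sym = np.digitize(amp, edges)
    return phase_sym, amp_sym


# Synthetic PAC: an 80 Hz carrier whose amplitude follows a 6 Hz rhythm
fs = 1000.0
t = np.arange(20_000) / fs  # 20 s, so both rhythms complete whole cycles
slow = np.sin(2 * np.pi * 6 * t)
fast = (1 + 0.8 * np.cos(2 * np.pi * 6 * t)) * np.cos(2 * np.pi * 80 * t)
phase_sym, amp_sym = phase_amplitude_symbols(slow + fast, fs, (4, 8), (60, 100))
```

The two integer series could then be passed to a discrete local estimator, such as local TE from phase to amplitude or local mutual information. The brick-wall filter was chosen only to keep the sketch dependency-free. It rings over long stretches in time, so recordings would normally use a tapered filter design and discard samples near the edges.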

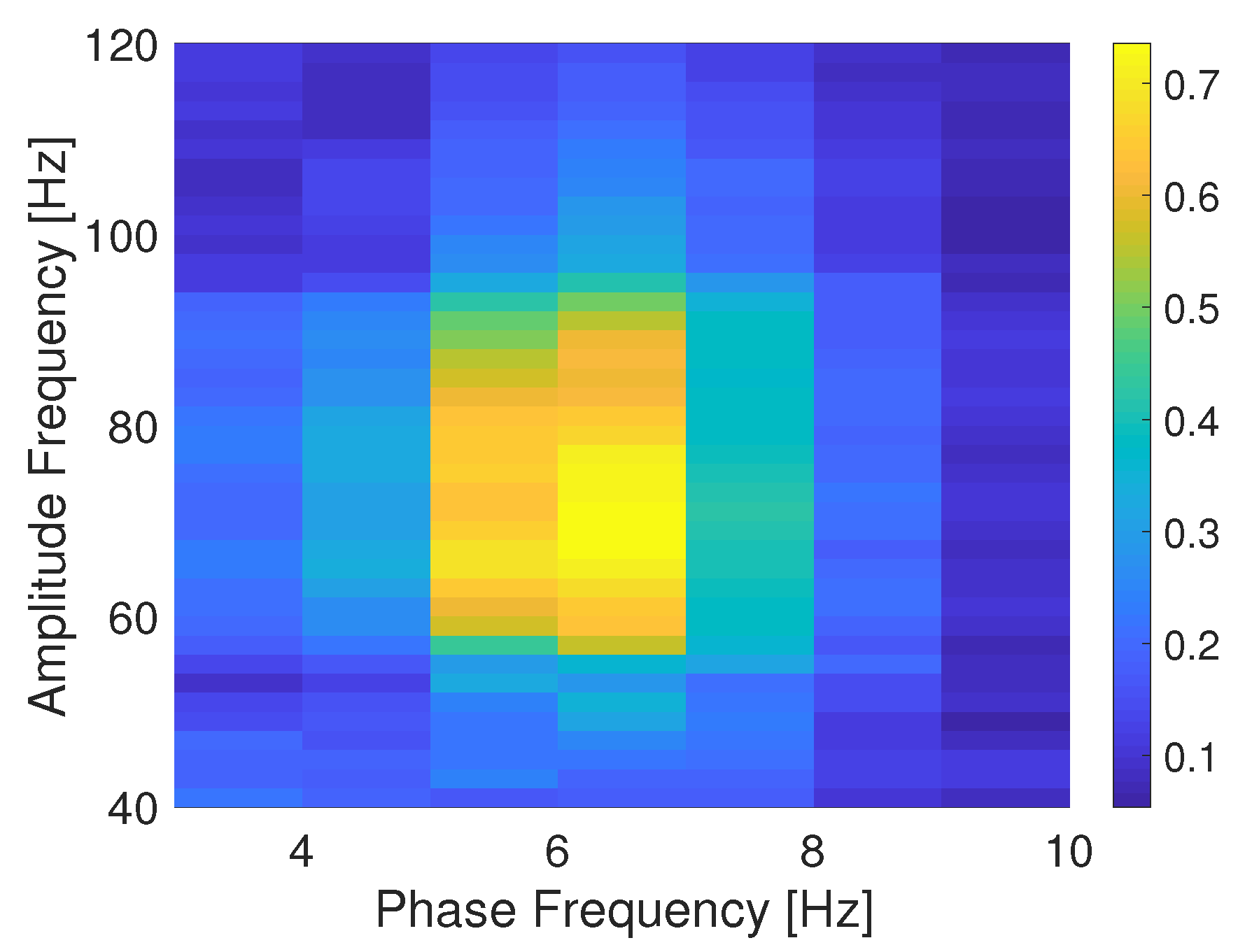

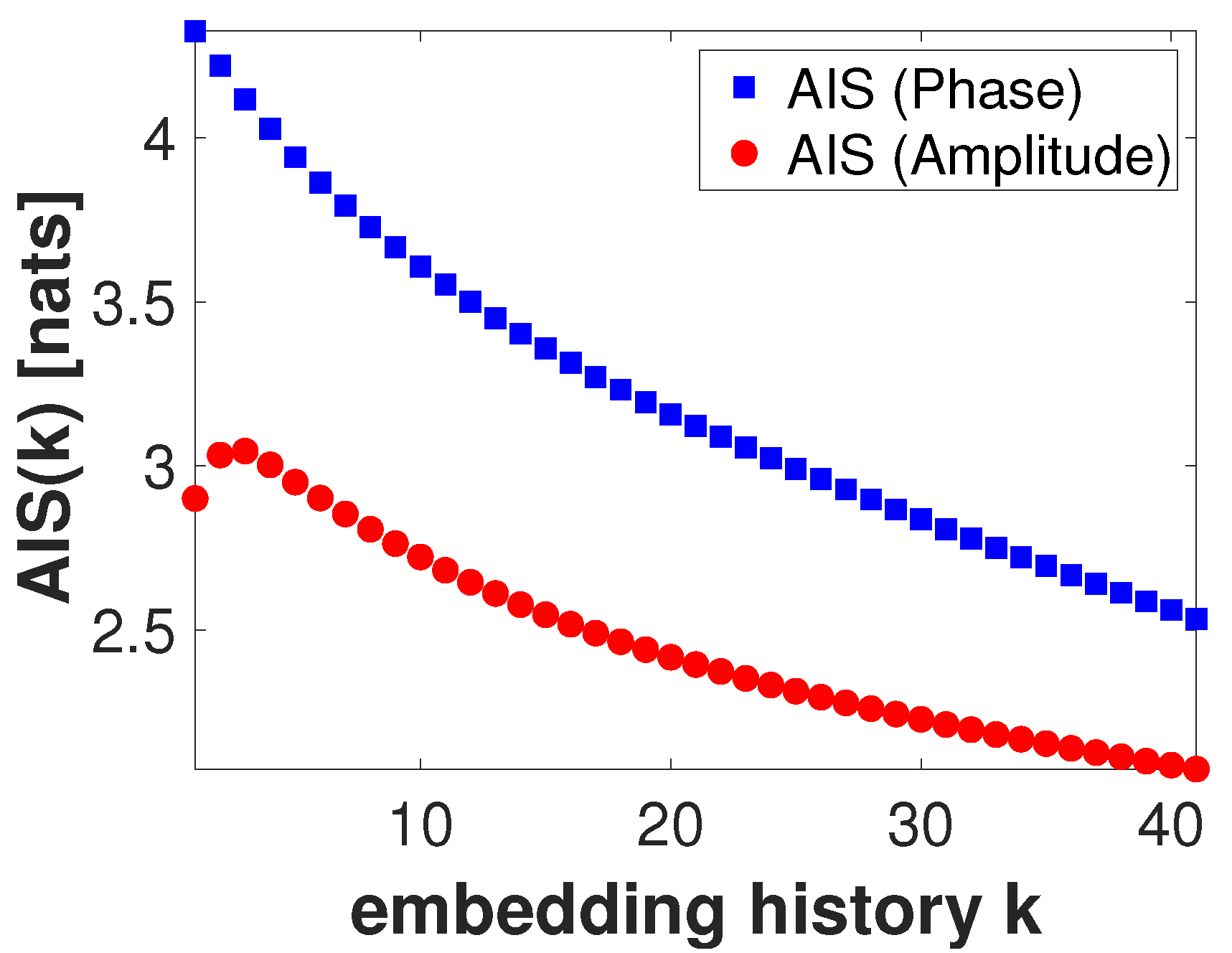

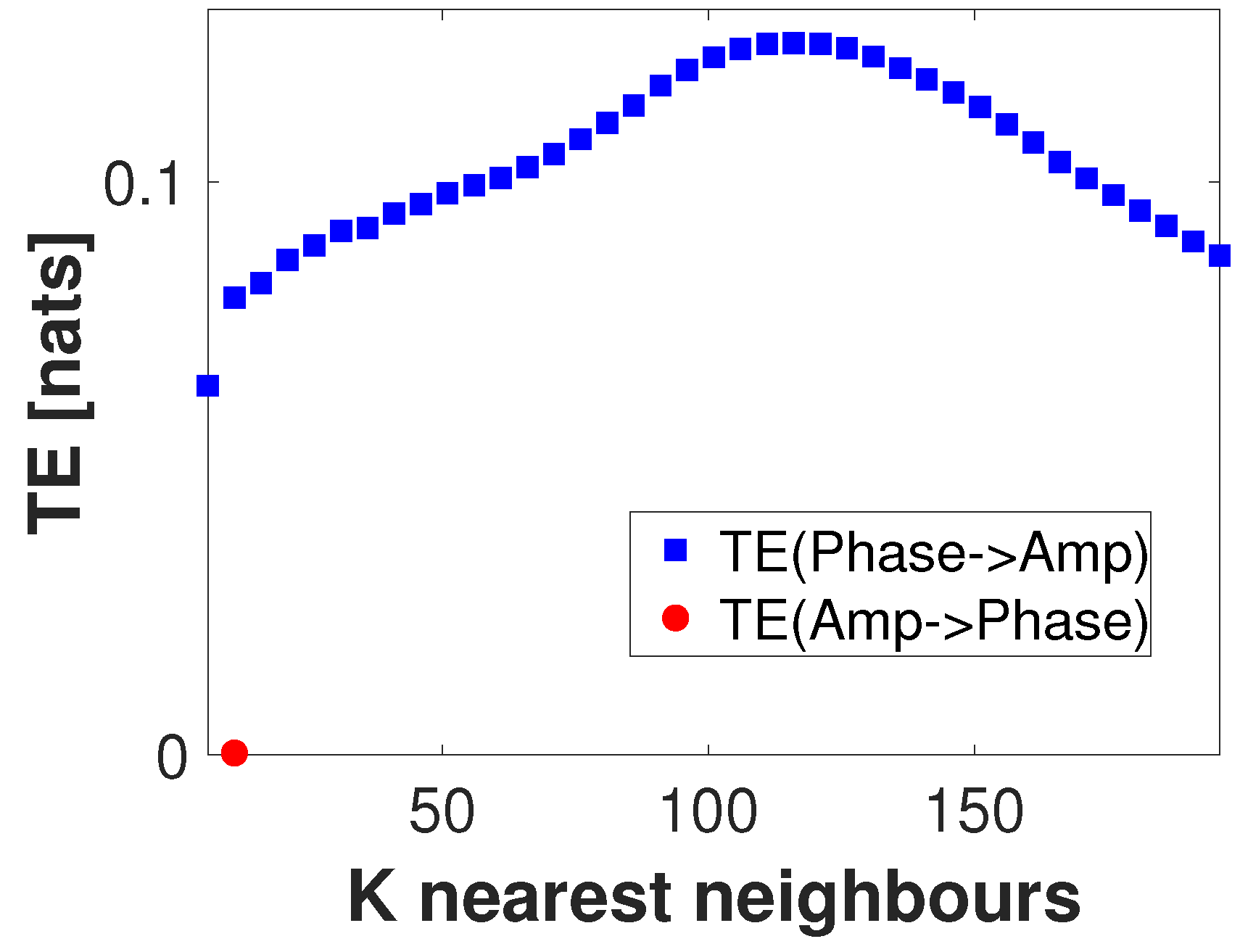

4. Simulation Results

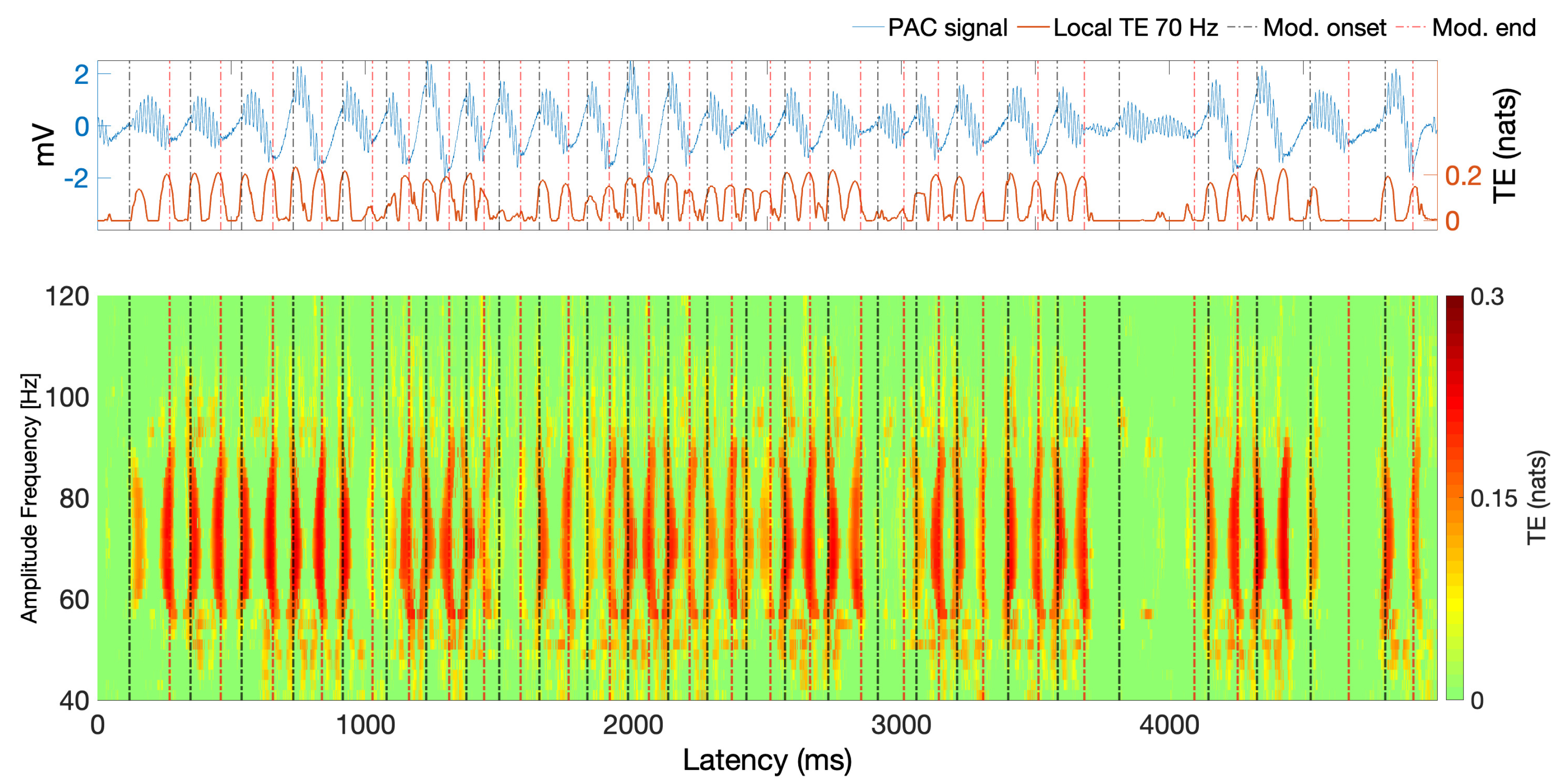

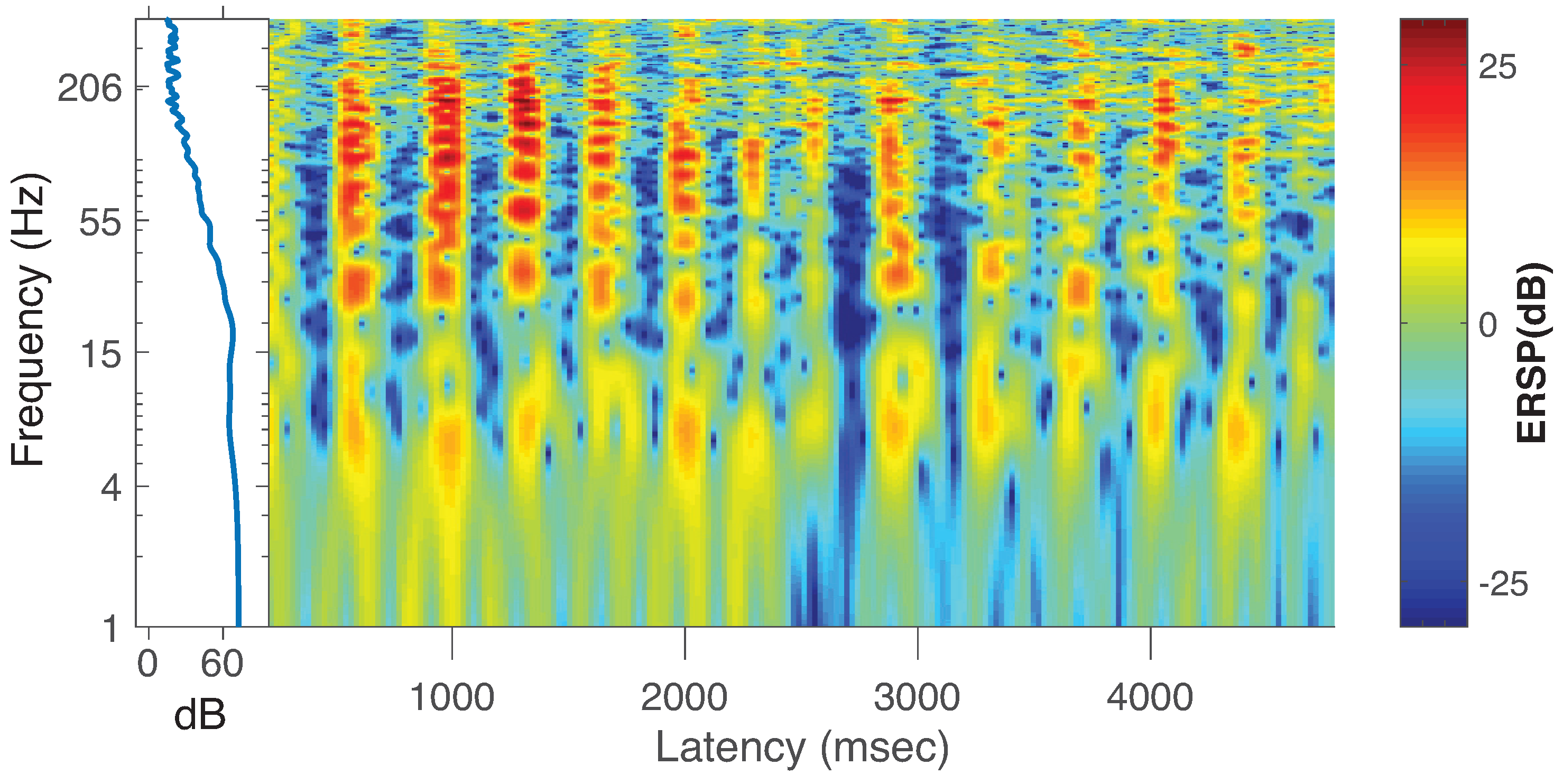

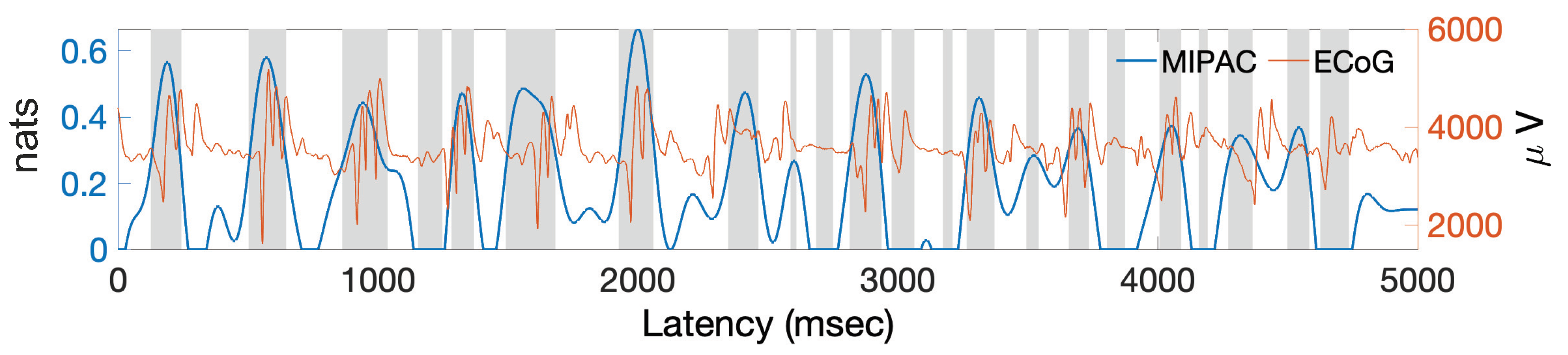

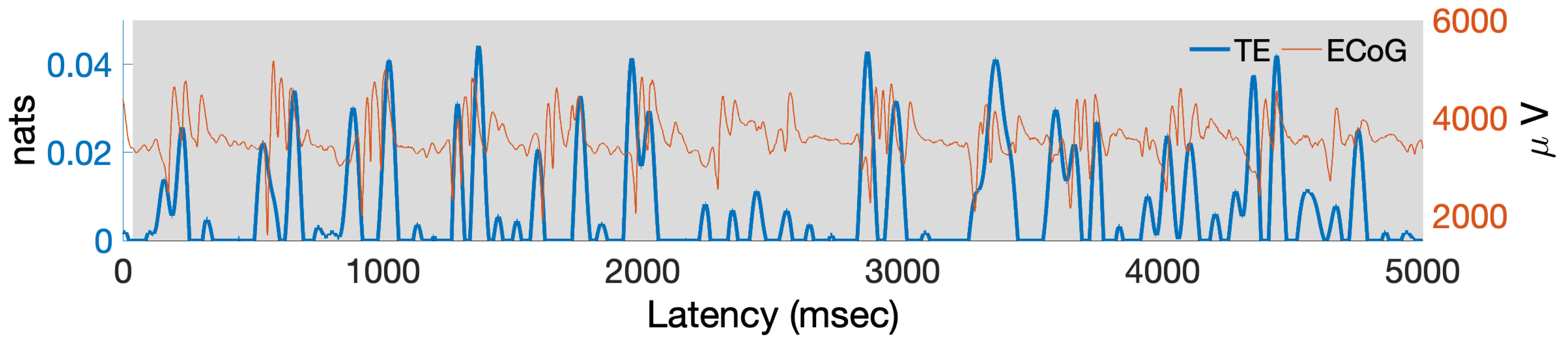

5. Estimating PAC with Transfer Entropy from Actual ECoG Data

6. Discussion

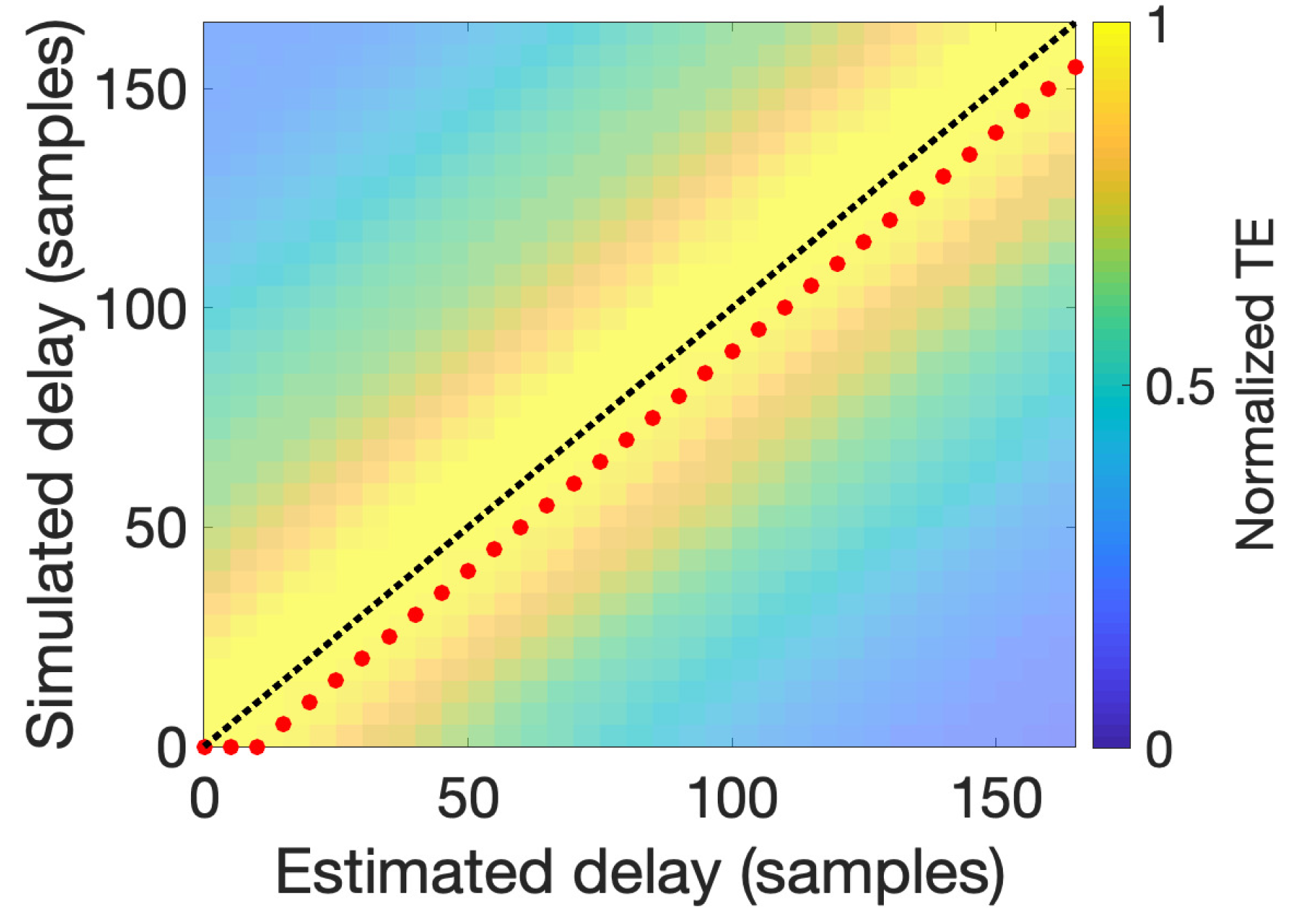

6.1. Computing TE and MIPAC in Simulated PAC Data

6.2. Estimating PAC with Local TE in Actual ECoG Data

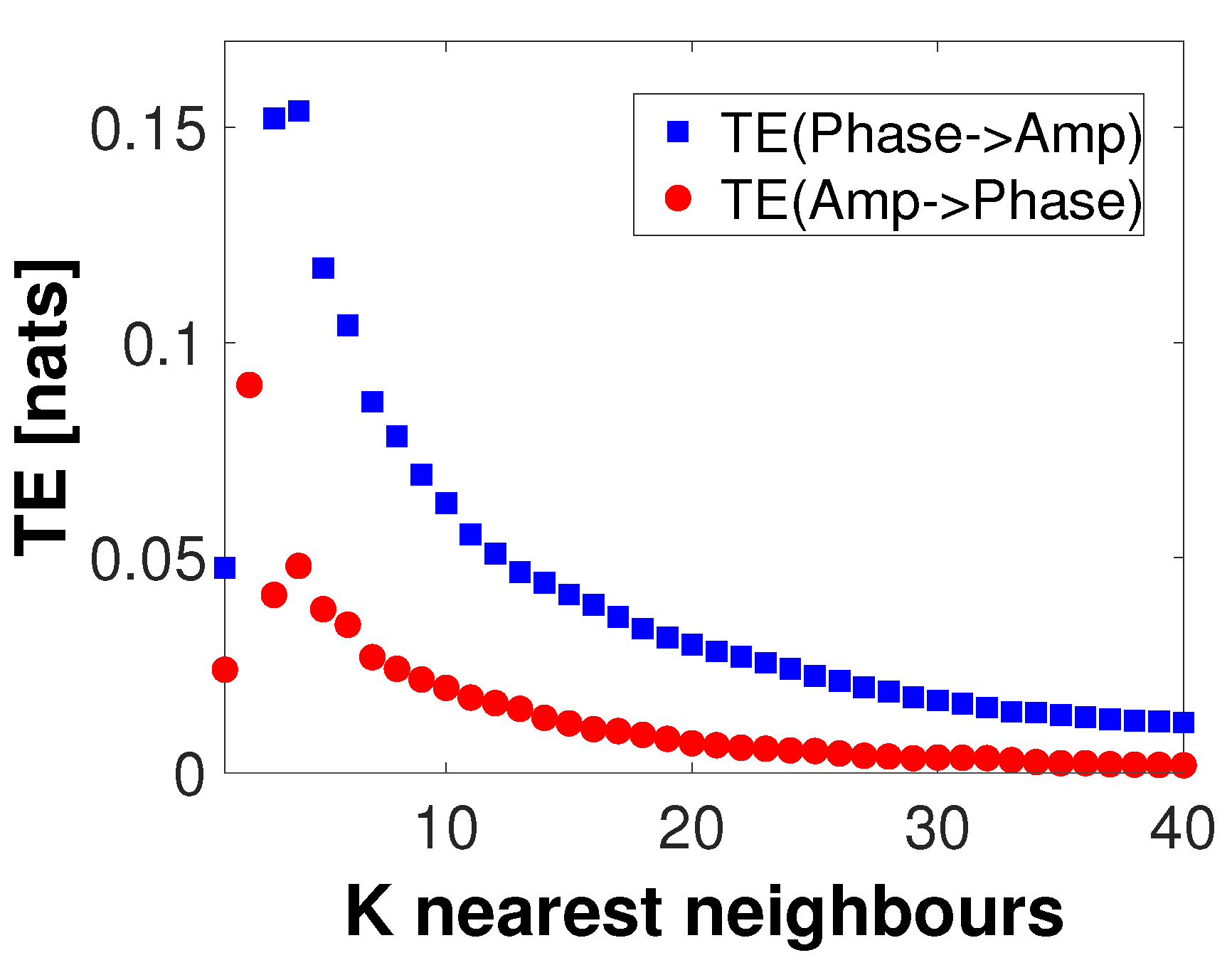

6.3. The Problem of Directional Causality in PAC

6.4. MI and TE, Two Faces of the PAC Process?

6.5. General Considerations for Approaching PAC Estimation Using Local Transfer Entropy

6.5.1. Parameters Selection for Estimating Local TE

6.5.2. Event-Related Data and TE

6.5.3. The Importance of the Filtering Strategy

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Martínez-Cancino, R.; Delorme, A.; Wagner, J.; Kreutz-Delgado, K.; Sotero, R.C.; Makeig, S. What Can Local Transfer Entropy Tell Us about Phase-Amplitude Coupling in Electrophysiological Signals? Entropy 2020, 22, 1262. https://doi.org/10.3390/e22111262