1. Introduction

The Skellam distribution is obtained by taking the difference between two independent Poisson distributed random variables. It was introduced for the case of different intensities $\lambda_1, \lambda_2$ in [1] and for equal means in [2]. For large values of $\lambda_1 + \lambda_2$, the distribution can be approximated by the normal distribution, and if $\lambda_2$ is very close to 0, then the distribution tends to a Poisson distribution with intensity $\lambda_1$. Similarly, if $\lambda_1$ tends to 0, the distribution tends to a Poisson distribution with non-positive integer values. The Skellam random variable is infinitely divisible, since it is the difference of two infinitely divisible random variables (see the corresponding proposition in [3]). Therefore, one can define a continuous time Lévy process for the Skellam distribution, which is called the Skellam process.

The Skellam process is an integer valued Lévy process and it can also be obtained by taking the difference of two independent Poisson processes. Its marginal probability mass function (pmf) involves the modified Bessel function of the first kind. The Skellam process has various applications in different areas, such as modeling the intensity difference of pixels in cameras (see [4]) and modeling the difference of the number of goals of two competing teams in a football game [5]. Models based on the difference of two point processes are proposed in [6,7,8,9].

Recently, the time-fractional Skellam process has been studied in [10]; it is obtained by time-changing the Skellam process with an inverse stable subordinator. Further, the authors of [10] applied the time-fractional Skellam process to model the arrivals of jumps in high frequency trading data. It is shown that the inter-arrival times between the positive and negative jumps follow a Mittag–Leffler distribution rather than the exponential distribution. Similar behavior is observed in the case of Danish fire insurance data (see [11]). Buchak and Sakhno, in [12], have also proposed the governing equations for time-fractional Skellam processes. Recently, [13] introduced the time-changed Poisson process of order k, which is obtained by time changing the Poisson process of order k (see [14]) by general subordinators.

In this paper, we introduce the Skellam process of order k and its running average. We also discuss the time-changed Skellam process of order k. In particular, we discuss the space-fractional Skellam process and the tempered space-fractional Skellam process, obtained via time changes of the Skellam process by an independent stable subordinator and tempered stable subordinator, respectively. We obtain closed form expressions for the marginal distributions of the considered processes and other important properties.

The Skellam process is used to model the difference between the numbers of goals scored by two teams in a football match. At the beginning, both teams have score 0, and at time t the score of team 1 is $N_1(t)$, which is the cumulative sum of arrivals (goals) of size 1 until time t with exponential inter-arrival times. Similarly, the score of team 2 at time t is $N_2(t)$. The difference between the numbers of goals at time t can be modeled using $S(t) = N_1(t) - N_2(t)$. Similarly, the Skellam process of order k can be used to model the difference between the numbers of points scored by two competing teams in a basketball match, where $k = 3$. Note that, in a basketball game, a free throw counts as one point, any basket from a shot taken from inside the three-point line counts for two points, and any basket from a shot taken from outside the three-point line counts for three points. Thus, a jump in the score of any team may be of size one, two, or three. Hence, a Skellam process of order 3 can be used to model the difference between the points scored.

In [10], it is shown that the fractional Skellam process is a better model than the Skellam process for the arrivals of the up and down jumps in tick-by-tick financial data. Equivalently, it is shown that the Mittag–Leffler distribution is a better model than the exponential distribution for the inter-arrival times between the up and down jumps. However, it is evident from Figure 3 of [10] that the fractional Skellam process also does not fit the arrivals of positive and negative jumps perfectly. We hope that a more flexible class of processes, like the time-changed Skellam process of order k (see Section 6) and the introduced tempered space-fractional Skellam process (see Section 7), would be better models for the arrivals of jumps. Additionally, see [8] for applications of integer-valued Lévy processes in financial econometrics. Moreover, distributions of order k are interesting for reliability theory [15]. The Fisher dispersion index is a widely used measure for quantifying the departure of any univariate count distribution from the equi-dispersed Poisson model [16,17,18]. The processes introduced in this article can be useful in modeling over-dispersed and under-dispersed data. Further, in (49), we present probabilistic solutions of some fractional equations.

The remainder of this paper proceeds as follows: in Section 2, we introduce all the relevant definitions and results. We also derive the Lévy density for space- and tempered space-fractional Poisson processes. In Section 3, we introduce and study the running average of the Poisson process of order k. Section 4 is dedicated to the Skellam process of order k. Section 5 deals with the running average of the Skellam process of order k. In Section 6, we discuss the time-changed Skellam process of order k. In Section 7, we determine the marginal pmf, governing equations for the marginal pmf, Lévy densities, and moment generating functions for the space-fractional Skellam process and the tempered space-fractional Skellam process.

3. Running Average of PPoK

In this section, we first introduce the running average of PPoK and its main properties. These results will be used further to discuss the running average of SPoK.

Definition 2 (Running average of PPoK).

We define the running average process $N_A^k(t)$, $t \geq 0$, by taking the time-scaled integral of the path of the PPoK (see [26]),

$$N_A^k(t) = \frac{1}{t}\int_0^t N^k(s)\,ds. \tag{31}$$

We can write the differential equation with initial condition $N_A^k(0) = 0$,

$$\frac{d}{dt}N_A^k(t) = \frac{1}{t}N^k(t) - \frac{1}{t^2}\int_0^t N^k(s)\,ds,$$

which shows that it has continuous sample paths of bounded total variation. We explore the compound Poisson representation and distributional properties of the running average of PPoK. The characteristic function of $N_A^k(t)$ is obtained using Lemma 1 of [26]. We recall Lemma 1 from [26] for ease of reference.

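Lemma 1. If $X_t$ is a Lévy process and its Riemann integral is defined by

$$Y_t = \int_0^t X_s\,ds,$$

then the characteristic function of $Y$ satisfies

$$\phi_{Y(t)}(u) = E\left[e^{iuY(t)}\right] = \exp\left(t\int_0^1 \log \phi_{X(1)}(tuz)\,dz\right), \quad u \in \mathbb{R}. \tag{32}$$

Proposition 3. The characteristic function of $N_A^k(t)$ is given by

$$\phi_{N_A^k(t)}(u) = \exp\left[-t\lambda\sum_{j=1}^{k}\left(1 - \frac{e^{iuj}-1}{iuj}\right)\right]. \tag{33}$$

Proof. Using Equation (10), we have

$$\int_0^1 \log \phi_{N^k(1)}(tuz)\,dz = -\lambda\sum_{j=1}^{k}\left(1 - \frac{e^{ituj}-1}{ituj}\right).$$

Using (32) and (31), we have

$$\phi_{N_A^k(t)}(u) = \phi_{Y(t)}(u/t) = \exp\left[-t\lambda\sum_{j=1}^{k}\left(1 - \frac{e^{iuj}-1}{iuj}\right)\right]. \quad □$$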

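Proposition 4. The running average process has a compound Poisson representation, such that

$$Y(t) = \sum_{i=1}^{N(t)} X_i,$$

where $X_i$, $i = 1, 2, \ldots$, are independent, identically distributed (iid) copies of the random variable $X$, independent of $N(t)$, and $N(t)$ is a Poisson process with intensity $k\lambda$. Subsequently,

$$Y(t) \stackrel{d}{=} N_A^k(t).$$

Further, the random variable $X$ has the following pdf

$$f_X(x) = \sum_{j=1}^{k} P(V = j)\, f_{U_j}(x) = \frac{1}{k}\sum_{j=1}^{k} f_{U_j}(x), \tag{35}$$

where $V$ follows the discrete uniform distribution over $\{1, \ldots, k\}$ and $U_j$ follows the continuous uniform distribution over $(0, j)$, $j = 1, \ldots, k$.

Proof. The pdf of $U_j$ is

$$f_{U_j}(x) = \frac{1}{j}, \quad 0 \leq x \leq j.$$

Using (35), the characteristic function of $X$ is given by

$$\phi_X(u) = \frac{1}{k}\sum_{j=1}^{k}\frac{e^{iuj}-1}{iuj}.$$

For fixed $t$, the characteristic function of $Y(t)$ is

$$\phi_{Y(t)}(u) = \exp\left[-k\lambda t\left(1 - \phi_X(u)\right)\right] = \exp\left[-t\lambda\sum_{j=1}^{k}\left(1 - \frac{e^{iuj}-1}{iuj}\right)\right],$$

which is equal to the characteristic function of the running average of PPoK given in (33). Hence, by the uniqueness of the characteristic function, the result follows. □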
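The compound Poisson representation can be checked numerically. The following is a minimal sketch, not the authors' code: it assumes the jump law is an equal-weight mixture of Uniform(0, j) variables, j = 1, ..., k, arriving at Poisson rate kλ, and compares the empirical mean and variance with the values k(k+1)λt/4 and k(k+1)(2k+1)λt/18 implied by that representation. The function names and parameter values are illustrative.

```python
import numpy as np

def sample_running_average_ppok(k, lam, t, size, rng):
    """Draw `size` samples of the compound Poisson sum sum_{i<=N(t)} X_i."""
    counts = rng.poisson(k * lam * t, size=size)   # N(t), intensity k*lam (assumed)
    total = int(counts.sum())
    # Each jump X: pick j uniformly from {1, ..., k}, then X ~ Uniform(0, j).
    j = rng.integers(1, k + 1, size=total)
    jumps = rng.random(total) * j
    # Add up the jumps that belong to each sample.
    owner = np.repeat(np.arange(size), counts)
    return np.bincount(owner, weights=jumps, minlength=size)

def closed_form_mean_var(k, lam, t):
    """Mean and variance implied by the compound Poisson representation."""
    mean = k * (k + 1) * lam * t / 4.0
    var = k * (k + 1) * (2 * k + 1) * lam * t / 18.0
    return mean, var

rng = np.random.default_rng(0)
samples = sample_running_average_ppok(k=3, lam=2.0, t=5.0, size=100_000, rng=rng)
mean, var = closed_form_mean_var(k=3, lam=2.0, t=5.0)
print("empirical mean/var:", samples.mean(), samples.var())
print("closed-form mean/var:", mean, var)
```

The empirical and closed-form values should agree up to Monte Carlo error; the ratio of variance to mean gives the Fisher index of dispersion of the running average.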

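Using the definition

$$m_r = E[X^r] = (-i)^r \frac{d^r \phi_X(u)}{du^r}\bigg|_{u=0}, \tag{37}$$

the first two moments of the random variable $X$ given in Proposition 4 are $m_1 = \frac{k+1}{4}$ and $m_2 = \frac{(k+1)(2k+1)}{18}$. Further, using the mean, variance, and covariance of a compound Poisson process, we have, in particular,

$$E[N_A^k(t)] = \frac{k(k+1)}{4}\lambda t, \qquad \mathrm{Var}[N_A^k(t)] = \frac{k(k+1)(2k+1)}{18}\lambda t.$$

Corollary 1. Putting $k = 1$, the running average of PPoK reduces to the running average of the standard Poisson process (see Appendix in [26]).

Corollary 2. The mean and variance of PPoK and the running average of PPoK satisfy $E[N_A^k(t)]/E[N^k(t)] = \frac{1}{2}$ and $\mathrm{Var}[N_A^k(t)]/\mathrm{Var}[N^k(t)] = \frac{1}{3}$.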

Remark 2. The Fisher index of dispersion for the running average of PPoK is given by $FI[N_A^k(t)] = \mathrm{Var}[N_A^k(t)]/E[N_A^k(t)] = \frac{2(2k+1)}{9}$. If $k = 1$, the process is under-dispersed, and for $k > 1$ it is over-dispersed.

Next we discuss the long-range dependence (LRD) property of running average of PPoK. We recall the definition of LRD for a non-stationary process.

Definition 3 (Long range dependence (LRD)).

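Let $X(t)$ be a stochastic process that has a correlation function $\mathrm{Corr}(X(s), X(t))$ for $s \leq t$, for fixed $s$, that satisfies

$$c_1(s)\,t^{-d} \leq \mathrm{Corr}(X(s), X(t)) \leq c_2(s)\,t^{-d}$$

for large $t$, $d > 0$, $c_1(s) > 0$, and $c_2(s) > 0$. For the particular case when $c_1(s) = c_2(s) = c(s)$, the above equation reduces to

$$\lim_{t \to \infty} \frac{\mathrm{Corr}(X(s), X(t))}{t^{-d}} = c(s).$$

We say that, if $d \in (0, 1)$, then $X(t)$ has the LRD property, and if $d \in (1, 2)$, it has the short-range dependence (SRD) property [27].

Proposition 5. The running average of PPoK has the LRD property.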

Proof. Let

, then the correlation function for running average of PPoK

is

Subsequently, for

, it follows

□

5. Running Average of SPoK

In this section, we introduce and study a new stochastic Lévy process, which is the running average of SPoK.

Definition 5. The following stochastic process, defined by taking the time-scaled integral of the path of the SPoK,

$$S_A^k(t) = \frac{1}{t}\int_0^t S^k(s)\,ds,$$

is called the running average of SPoK.

Next, we provide the compound Poisson representation of running average of SPoK.

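Proposition 10. The characteristic function of $S_A^k(t)$ is given by

$$\phi_{S_A^k(t)}(u) = \exp\left[-t\left(k(\lambda_1 + \lambda_2) - \lambda_1\sum_{j=1}^{k}\frac{e^{iuj}-1}{iuj} - \lambda_2\sum_{j=1}^{k}\frac{1-e^{-iuj}}{iuj}\right)\right]. \tag{43}$$

Proof. The result follows by applying Lemma 1 to Equation (40) and scaling by $1/t$. □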

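Remark 6. It is easily observable that Equation (43) has a removable singularity at $u = 0$. To remove that singularity, we can define $\phi_{S_A^k(t)}(0) = 1$.

Proposition 11. Let $Y(t)$ be a compound Poisson process

$$Y(t) = \sum_{n=1}^{N(t)} J_n,$$

where $N(t)$ is a Poisson process with rate parameter $k(\lambda_1 + \lambda_2)$ and $\{J_n\}_{n \geq 1}$ are iid random variables with mixed double uniform distribution function $p_{J_1}$, which are independent of $N(t)$. Subsequently,

$$Y(t) \stackrel{d}{=} S_A^k(t).$$

Proof. Rearranging $\phi_{S_A^k(t)}(u)$,

$$\phi_{S_A^k(t)}(u) = \exp\left[-k(\lambda_1 + \lambda_2)t\left(1 - \frac{1}{k}\sum_{j=1}^{k}\left(\frac{\lambda_1}{\lambda_1 + \lambda_2}\,\frac{e^{iuj}-1}{iuj} + \frac{\lambda_2}{\lambda_1 + \lambda_2}\,\frac{1-e^{-iuj}}{iuj}\right)\right)\right].$$

The random variable $J_1$, being mixed double uniformly distributed, has density

$$p_{J_1}(x) = \sum_{j=1}^{k} P(V = j)\, f_{U_j}(x) = \frac{1}{k}\sum_{j=1}^{k} f_{U_j}(x), \tag{45}$$

where $V$ follows the discrete uniform distribution over $\{1, \ldots, k\}$ with pmf $P(V = j) = \frac{1}{k}$, $j = 1, \ldots, k$, and $U_j$ are doubly uniform distributed random variables with density

$$f_{U_j}(x) = \frac{1-w}{j}\,\mathbb{1}_{[-j,0]}(x) + \frac{w}{j}\,\mathbb{1}_{[0,j]}(x), \quad -j \leq x \leq j.$$

Further, $0 < w < 1$ is a weight parameter and $\mathbb{1}(\cdot)$ is the indicator function. Here, we obtain the characteristic function of $J_1$ using the Fourier transform of (45),

$$\phi_{J_1}(u) = \frac{1}{k}\sum_{j=1}^{k}\left(w\,\frac{e^{iuj}-1}{iuj} + (1-w)\,\frac{1-e^{-iuj}}{iuj}\right).$$

The characteristic function of $Y(t)$ is

$$\phi_{Y(t)}(u) = \exp\left[-k(\lambda_1 + \lambda_2)t\left(1 - \phi_{J_1}(u)\right)\right];$$

putting the characteristic function $\phi_{J_1}(u)$ with $w = \lambda_1/(\lambda_1 + \lambda_2)$ in the above expression yields the characteristic function of $S_A^k(t)$, which completes the proof. □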

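Remark 7. The q-th order moments of $J_1$ can be calculated using (37) and also using the Taylor series expansion of the characteristic function $\phi_{J_1}(u)$ around 0, such that

$$\frac{e^{iuj}-1}{iuj} = 1 + \sum_{r=1}^{\infty}\frac{(iuj)^r}{(r+1)!}, \qquad \frac{1-e^{-iuj}}{iuj} = 1 + \sum_{r=1}^{\infty}\frac{(-iuj)^r}{(r+1)!}.$$

We have $m_1 = \frac{(k+1)(2w-1)}{4}$ and $m_2 = \frac{(k+1)(2k+1)}{18}$. Further, for the mean, variance, and covariance of the running average of SPoK we have, in particular,

$$E[S_A^k(t)] = \frac{k(k+1)}{4}(\lambda_1 - \lambda_2)t, \qquad \mathrm{Var}[S_A^k(t)] = \frac{k(k+1)(2k+1)}{18}(\lambda_1 + \lambda_2)t.$$

Corollary 3. For $\lambda_2 = 0$, the running average of SPoK is the same as the running average of PPoK, i.e., $S_A^k(t) = N_A^k(t)$.

Corollary 4. For $k = 1$, this process behaves like the running average of the Skellam process.

Corollary 5. The ratios of the mean and variance of the running average of SPoK to those of SPoK are $E[S_A^k(t)]/E[S^k(t)] = \frac{1}{2}$ and $\mathrm{Var}[S_A^k(t)]/\mathrm{Var}[S^k(t)] = \frac{1}{3}$, respectively.

Remark 8. For the running average of SPoK, when $\lambda_1 > \lambda_2$ and $k > 1$, the process is over-dispersed. Otherwise, it exhibits under-dispersion.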
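The ratios in Corollary 5 can be illustrated by integrating simulated paths directly. This is a hedged sketch under an assumption not stated in this section: the PPoK is taken to be a compound Poisson process whose jump sizes 1, ..., k each arrive at rate λ, and the SPoK is the difference of two such independent processes. A jump of size j at time T contributes j(t − T)/t to the time-scaled integral over [0, t], which is why uniform factors appear in the compound Poisson representation. All names and parameter values are illustrative.

```python
import numpy as np

def ppok_value_and_running_average(k, lam, t, size, rng):
    """Return (N^k(t), (1/t) * integral_0^t N^k(s) ds) for `size` independent paths."""
    counts = rng.poisson(k * lam * t, size=size)   # total number of jumps on [0, t]
    total = int(counts.sum())
    owner = np.repeat(np.arange(size), counts)     # path index of every jump
    sizes = rng.integers(1, k + 1, size=total)     # jump sizes in {1, ..., k}
    times = rng.uniform(0.0, t, size=total)        # jump times, uniform given the count
    value = np.bincount(owner, weights=sizes, minlength=size)
    # A jump of size j at time T adds j * (t - T) to the integral over [0, t].
    average = np.bincount(owner, weights=sizes * (t - times) / t, minlength=size)
    return value, average

rng = np.random.default_rng(1)
k, lam1, lam2, t, size = 3, 2.0, 1.0, 4.0, 100_000
v1, a1 = ppok_value_and_running_average(k, lam1, t, size, rng)
v2, a2 = ppok_value_and_running_average(k, lam2, t, size, rng)
spok, spok_avg = v1 - v2, a1 - a2                  # SPoK and its running average

mean_ratio = spok_avg.mean() / spok.mean()
var_ratio = spok_avg.var() / spok.var()
print("mean ratio (expected 1/2):", mean_ratio)
print("variance ratio (expected 1/3):", var_ratio)
```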

7. Space Fractional Skellam Process and Tempered Space Fractional Skellam Process

In this section, we introduce time-changed Skellam processes where the time changes are a stable subordinator and a tempered stable subordinator. These processes give the space-fractional version of the Skellam process, similar to the time-fractional version of the Skellam process introduced in [10].

7.1. The Space-Fractional Skellam Process

In this section, we introduce the space-fractional Skellam process (SFSP). Further, for the introduced process, we study the main results, such as the state probabilities and the governing difference-differential equations of the marginal pmf.

Definition 6 (SFSP).

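Let $N_1(t)$ and $N_2(t)$ be two independent homogeneous Poisson processes with intensities $\lambda_1 > 0$ and $\lambda_2 > 0$, respectively. Let $D_{\alpha_1}(t)$ and $D_{\alpha_2}(t)$ be two independent stable subordinators with indices $\alpha_1 \in (0, 1)$ and $\alpha_2 \in (0, 1)$, respectively. These subordinators are independent of the Poisson processes $N_1(t)$ and $N_2(t)$. The subordinated stochastic process

$$S_{\alpha_1,\alpha_2}(t) = N_1(D_{\alpha_1}(t)) - N_2(D_{\alpha_2}(t))$$

is called a SFSP.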

Next, we derive the mgf of SFSP. We use the expression for the marginal pmf of SFPP given in (17) to obtain the marginal pmf of SFSP.

In the next result, we obtain the state probabilities of the SFSP.

Theorem 3. The pmf of SFSP is given by for .

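Proof. Note that $N_1(D_{\alpha_1}(t))$ and $N_2(D_{\alpha_2}(t))$ are independent, hence

$$P(S_{\alpha_1,\alpha_2}(t) = n) = \sum_{m=0}^{\infty} P(N_1(D_{\alpha_1}(t)) = m + n)\,P(N_2(D_{\alpha_2}(t)) = m)\,\mathbb{1}_{n \geq 0} + \sum_{m=0}^{\infty} P(N_1(D_{\alpha_1}(t)) = m)\,P(N_2(D_{\alpha_2}(t)) = m + |n|)\,\mathbb{1}_{n < 0}.$$

Using (17), the result follows. □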
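The convolution step used in this proof applies to the difference of any two independent non-negative integer-valued random variables. As a minimal illustration, the sketch below uses plain Poisson marginals as a stand-in (the SFPP pmf from (17) is not reproduced here), truncates the infinite sum at a hypothetical number of terms, and checks that the resulting pmf sums to one with mean equal to the difference of the two Poisson means.

```python
import math

def poisson_pmf(n, mean):
    """Poisson pmf evaluated in log space to avoid overflow for large n."""
    return math.exp(n * math.log(mean) - mean - math.lgamma(n + 1))

def difference_pmf(n, pmf1, pmf2, terms=200):
    """P(X1 - X2 = n) for independent non-negative integer X1, X2 (truncated sum)."""
    if n >= 0:
        # X2 = m and X1 = m + n
        return sum(pmf1(m + n) * pmf2(m) for m in range(terms))
    # X1 = m and X2 = m + |n|
    return sum(pmf1(m) * pmf2(m - n) for m in range(terms))

mean1, mean2 = 3.0, 1.5          # illustrative values of lambda_1 * t and lambda_2 * t
support = range(-60, 61)
probs = [
    difference_pmf(n, lambda j: poisson_pmf(j, mean1), lambda j: poisson_pmf(j, mean2))
    for n in support
]
total = sum(probs)
mean = sum(n * p for n, p in zip(support, probs))
print("total probability:", total)
print("mean:", mean)
```

Replacing the two Poisson pmfs by the SFPP marginals would give the SFSP state probabilities in the same way, at the cost of evaluating the series in (17) for each term.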

In the next theorem, we discuss the governing differential-difference equation of the marginal pmf of SFSP.

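Theorem 4. The marginal distribution $R_n(t) = P(S_{\alpha_1,\alpha_2}(t) = n)$ of SFSP satisfies the following differential-difference equations

$$\frac{d}{dt}R_n(t) = -\lambda_1^{\alpha_1}(1 - B)^{\alpha_1}R_n(t) - \lambda_2^{\alpha_2}(1 - F)^{\alpha_2}R_n(t), \quad n \in \mathbb{Z},$$

with initial conditions $R_0(0) = 1$ and $R_n(0) = 0$ for $n \neq 0$, where $B$ and $F$ denote the backward and forward shift operators in $n$.

Proof. The proof follows by using the pgf. □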

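Remark 9. The mgf $M_\theta(t) = E[e^{\theta S_{\alpha_1,\alpha_2}(t)}]$ of the SFSP solves the differential equation

$$\frac{dM_\theta(t)}{dt} = -M_\theta(t)\left(\lambda_1^{\alpha_1}(1 - e^{\theta})^{\alpha_1} + \lambda_2^{\alpha_2}(1 - e^{-\theta})^{\alpha_2}\right).$$

Proposition 12. The Lévy density $\nu_S(x)$ of SFSP is given by

$$\nu_S(x) = \lambda_1^{\alpha_1}\sum_{n=1}^{\infty}(-1)^{n+1}\binom{\alpha_1}{n}\delta_n(x) + \lambda_2^{\alpha_2}\sum_{n=1}^{\infty}(-1)^{n+1}\binom{\alpha_2}{n}\delta_{-n}(x).$$

Proof. Substituting the Lévy densities $\nu_{N_{\alpha_1}}(x)$ and $\nu_{N_{\alpha_2}}(x)$ of $N_1(D_{\alpha_1}(t))$ and $N_2(D_{\alpha_2}(t))$, respectively, from Equation (24), we obtain

$$\nu_S(x) = \nu_{N_{\alpha_1}}(x) + \nu_{N_{\alpha_2}}(-x),$$

which gives the desired result. □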

7.2. Tempered Space-Fractional Skellam Process (TSFSP)

In this section, we present the tempered space-fractional Skellam process (TSFSP). We discuss the corresponding fractional difference-differential equations, marginal pmfs, and moments of this process.

Definition 7 (TSFSP).

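The TSFSP is obtained by taking the difference of two independent tempered space-fractional Poisson processes. Let $D_{\alpha_1,\mu_1}(t)$, $D_{\alpha_2,\mu_2}(t)$ be two independent TSS (see [28]) and $N_1(t)$, $N_2(t)$ be two independent Poisson processes that are independent of the TSS. Subsequently, the stochastic process

$$S_{\alpha_1,\alpha_2}^{\mu_1,\mu_2}(t) = N_1(D_{\alpha_1,\mu_1}(t)) - N_2(D_{\alpha_2,\mu_2}(t))$$

is called the TSFSP.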

Theorem 5. The PMF is given by when and similarly for , Proof. Because

and

are independent,

which gives the marginal pmf of TSFPP using (

26). □

Remark 10. We use this expression to calculate the marginal distribution of TSFSP. The mgf is obtained using the conditioning argument. Let be the density function of . Subsequently, Using (54), the mgf of TSFSP is Remark 11. We have Further, the covariance of TSFSP can be obtained by using (29) and Proposition 13. The Lévy density of TSFSP is given by Proof. By adding Lévy density

and

of

and

, respectively, from Equation (

30), which leads to

□

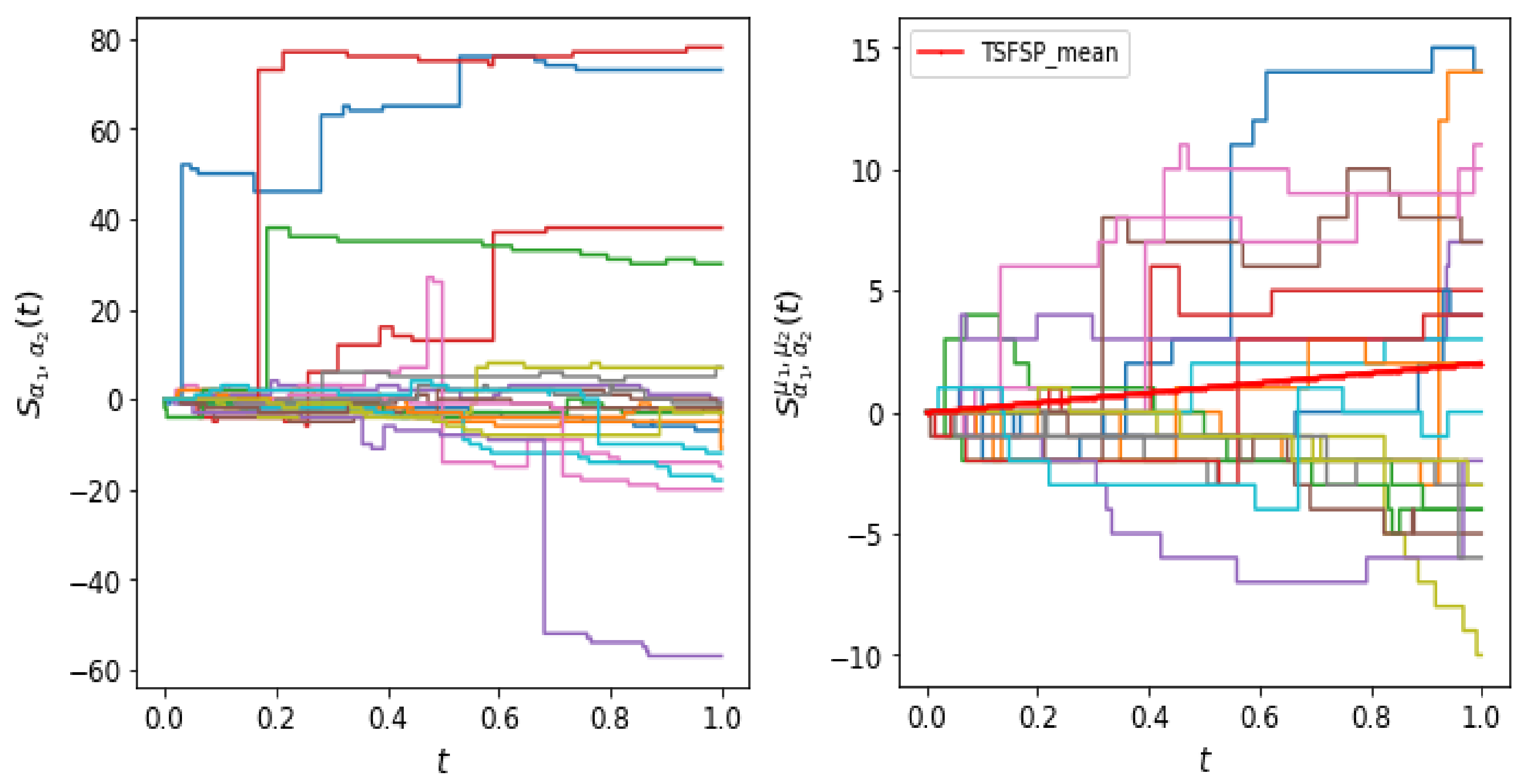

7.3. Simulation of SFSP and TSFSP

We present the algorithms to simulate the sample trajectories of SFSP and TSFSP. We use Python 3.7 and its libraries NumPy and Matplotlib for the simulation. It is worth mentioning that Python is open source and freely available software.

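Simulation of SFSP: fix the values of the parameters $\alpha_1$, $\alpha_2$, $\lambda_1$, and $\lambda_2$;

Step-1: generate independent and uniformly distributed random vectors U, V of size 1000 each in the interval $[0, 1]$;

Step-2: generate the increments of the $\alpha_1$-stable subordinator $D_{\alpha_1}(t)$ (see [29]) with pdf $f_{\alpha_1}(x, t)$, while using the relationship $D_{\alpha_1}(t + dt) - D_{\alpha_1}(t) \stackrel{d}{=} D_{\alpha_1}(dt) \stackrel{d}{=} (dt)^{1/\alpha_1} D_{\alpha_1}(1)$, where

$$D_{\alpha_1}(1) = \frac{\sin(\alpha_1 \pi U)\,[\sin((1 - \alpha_1)\pi U)]^{1/\alpha_1 - 1}}{[\sin(\pi U)]^{1/\alpha_1}\,|\log V|^{1/\alpha_1 - 1}};$$

Step-3: generate the increments of Poisson distributed rvs $N_1(D_{\alpha_1}(dt))$ with parameter $\lambda_1 (dt)^{1/\alpha_1} D_{\alpha_1}(1)$;

Step-4: the cumulative sum of the increments gives the sample trajectories of the space-fractional Poisson process $N_1(D_{\alpha_1}(t))$; and,

Step-5: similarly generate $N_2(D_{\alpha_2}(t))$ and subtract these to obtain the SFSP $S_{\alpha_1,\alpha_2}(t)$.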
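The five steps can be sketched in NumPy as follows. This is an illustrative sketch rather than the authors' script: it assumes the standard Kanter-type representation of a stable subordinator with Laplace transform exp(−t s^α) in Step-2, and the function names, grid size, and parameter values are hypothetical choices.

```python
import numpy as np

def stable_increments(alpha, dt, n, rng):
    """Steps 1-2: n iid increments D_alpha(dt) = dt**(1/alpha) * D_alpha(1)."""
    u = 1.0 - rng.random(n)   # uniform on (0, 1], avoids sin(0) in the denominator
    v = 1.0 - rng.random(n)   # uniform on (0, 1]; v == 1 has negligible probability
    d1 = (np.sin(alpha * np.pi * u)
          * np.sin((1.0 - alpha) * np.pi * u) ** (1.0 / alpha - 1.0)
          / (np.sin(np.pi * u) ** (1.0 / alpha)
             * np.abs(np.log(v)) ** (1.0 / alpha - 1.0)))
    return dt ** (1.0 / alpha) * d1

def sfpp_path(lam, alpha, dt, n, rng):
    """Steps 3-4: Poisson increments over the random time steps, then cumulative sum."""
    time_steps = stable_increments(alpha, dt, n, rng)
    return np.cumsum(rng.poisson(lam * time_steps))

def sfsp_path(lam1, lam2, alpha1, alpha2, t_max, n, rng):
    """Step 5: difference of two independent space-fractional Poisson paths."""
    dt = t_max / n
    return sfpp_path(lam1, alpha1, dt, n, rng) - sfpp_path(lam2, alpha2, dt, n, rng)

rng = np.random.default_rng(2)
path = sfsp_path(lam1=5.0, lam2=5.0, alpha1=0.6, alpha2=0.9, t_max=10.0, n=1000, rng=rng)
print("final value of the simulated SFSP path:", path[-1])

# Sanity check of Step-2: E[exp(-s * D_alpha(dt))] should be close to exp(-dt * s**alpha).
check = stable_increments(0.7, 0.5, 200_000, rng)
print(np.exp(-1.3 * check).mean(), np.exp(-0.5 * 1.3 ** 0.7))
```

Because the stable increments are heavy-tailed, an occasional time step can be very large, which shows up as a large jump in the simulated path.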

We next present the algorithm for generating the sample trajectories of TSFSP.

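Simulation of TSFSP: fix the values of the parameters $\alpha_1$, $\alpha_2$, $\lambda_1$, $\lambda_2$, $\mu_1$, and $\mu_2$;

Use the first two steps of the previous algorithm for generating the increments of the $\alpha_1$-stable subordinator $D_{\alpha_1}(t)$.

Step-3: for generating the increments of the TSS $D_{\alpha_1,\mu_1}(t)$ with pdf $f_{\alpha_1,\mu_1}(x, t)$, we use the following steps, called the "acceptance-rejection method":

(a) generate the stable random variable $D_{\alpha_1}(dt)$;

(b) generate a uniform $(0, 1)$ rv $W$ (independent of $D_{\alpha_1}(dt)$);

(c) if $W \leq e^{-\mu_1 D_{\alpha_1}(dt)}$, then $D_{\alpha_1,\mu_1}(dt) = D_{\alpha_1}(dt)$ ("accept"); otherwise, go back to (a) ("reject"). Note that here we used that $f_{\alpha_1,\mu_1}(x, t) = e^{-\mu_1 x + \mu_1^{\alpha_1} t} f_{\alpha_1}(x, t)$, which implies $\frac{f_{\alpha_1,\mu_1}(x, dt)}{c\, f_{\alpha_1}(x, dt)} = e^{-\mu_1 x}$ for $c = e^{\mu_1^{\alpha_1} dt}$, and the ratio is bounded between 0 and 1;

Step-4: generate a Poisson distributed rv $N_1(D_{\alpha_1,\mu_1}(dt))$ with parameter $\lambda_1 D_{\alpha_1,\mu_1}(dt)$;

Step-5: the cumulative sum of the increments gives the sample trajectories of the tempered space-fractional Poisson process $N_1(D_{\alpha_1,\mu_1}(t))$; and,

Step-6: similarly generate $N_2(D_{\alpha_2,\mu_2}(t))$, then take the difference of these to get the sample paths of the TSFSP.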
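A possible NumPy sketch of the acceptance-rejection step and the resulting TSFSP path is given below. It is an illustration under assumptions, not the authors' implementation: the stable increments use the same Kanter-type representation as in the SFSP algorithm, the rejection loop is vectorized (rejected candidates are redrawn in batches), and all names and parameter values are hypothetical.

```python
import numpy as np

def stable_increments(alpha, dt, n, rng):
    """n iid increments of an alpha-stable subordinator over a time step dt."""
    u = 1.0 - rng.random(n)   # uniform on (0, 1]
    v = 1.0 - rng.random(n)   # uniform on (0, 1]
    d1 = (np.sin(alpha * np.pi * u)
          * np.sin((1.0 - alpha) * np.pi * u) ** (1.0 / alpha - 1.0)
          / (np.sin(np.pi * u) ** (1.0 / alpha)
             * np.abs(np.log(v)) ** (1.0 / alpha - 1.0)))
    return dt ** (1.0 / alpha) * d1

def tempered_stable_increments(alpha, mu, dt, n, rng):
    """Step 3: keep a stable candidate x with probability exp(-mu * x)."""
    out = np.empty(n)
    filled = 0
    while filled < n:
        cand = stable_increments(alpha, dt, n - filled, rng)   # (a) candidates
        w = rng.random(cand.size)                              # (b) uniform rvs
        accepted = cand[w <= np.exp(-mu * cand)]               # (c) accept or reject
        out[filled:filled + accepted.size] = accepted
        filled += accepted.size
    return out

def tsfsp_path(lam1, lam2, alpha1, alpha2, mu1, mu2, t_max, n, rng):
    """Steps 4-6: Poisson increments on tempered time steps, cumulative sums, difference."""
    dt = t_max / n
    n1 = np.cumsum(rng.poisson(lam1 * tempered_stable_increments(alpha1, mu1, dt, n, rng)))
    n2 = np.cumsum(rng.poisson(lam2 * tempered_stable_increments(alpha2, mu2, dt, n, rng)))
    return n1 - n2

rng = np.random.default_rng(5)
path = tsfsp_path(5.0, 5.0, 0.6, 0.9, 0.2, 0.5, t_max=10.0, n=1000, rng=rng)
print("final value of the simulated TSFSP path:", path[-1])

# Sanity check: E[exp(-s * D(dt))] should be close to exp(-dt * ((s + mu)**alpha - mu**alpha)).
check = tempered_stable_increments(0.7, 1.0, 0.5, 200_000, rng)
print(np.exp(-1.3 * check).mean(), np.exp(-0.5 * ((1.3 + 1.0) ** 0.7 - 1.0)))
```

The acceptance probability equals exp(−μ^α dt), so for small time steps almost every candidate is accepted and the loop typically finishes in a few passes.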

The tail probability of the $\alpha$-stable subordinator behaves asymptotically as (see e.g., [30])

$$P(D_\alpha(t) > x) \sim \frac{t\,x^{-\alpha}}{\Gamma(1 - \alpha)}, \quad x \to \infty.$$

For $\alpha_1 < \alpha_2$ and fixed $t$, it is more probable that the value of the rv $D_{\alpha_1}(t)$ is higher than the rv $D_{\alpha_2}(t)$. Thus, for the same intensity parameter $\lambda$ of the Poisson process, the process $N(D_{\alpha_1}(t))$ will generally have more arrivals than the process $N(D_{\alpha_2}(t))$ until time $t$. This is evident from the trajectories of the SFSP in Figure 1, because the trajectories are biased towards the positive side. The TSFPP is a finite mean process, whereas the SFPP is an infinite mean process; hence, SFSP paths are expected to have large jumps, since there could be a large number of arrivals in any interval.