1. Introduction

Generalizing the Boltzmann–Gibbs entropy has become an active field of research over the past 30 years. Indeed, many formulations extending the well-known formula have appeared in the literature (see e.g., [1,2,3,4]):

$$S = -\sum_{i} p_i \ln p_i. \qquad (1)$$

The (theoretical) approaches to generalizing Equation (1) vary considerably (see e.g., [1,2,3]). In this work we are particularly interested in the method first proposed by Abe in [1], whose basic idea is to rewrite Equation (1) as

$$S = -\left.\frac{d}{dx}\sum_{i} p_i^{x}\right|_{x=1}. \qquad (2)$$

Concretely, we replace the differential operator $\frac{d}{dx}$ in Equation (2) with a suitable fractional one (see Section 2 for the details) and then, after some calculations, we obtain a novel (at least to the best of our knowledge) formula, which depends on a parameter $\alpha$.
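As a quick numerical sanity check of Abe's rewriting, the identity $-\frac{d}{dx}\sum_i p_i^{x}\big|_{x=1} = -\sum_i p_i \ln p_i$ can be verified with a central finite difference. The Python sketch below is illustrative only: the helper names, the step size `h` and the example distribution are our own assumptions, not part of the method.

```python
import math


def shannon_entropy(p):
    """Boltzmann-Gibbs/Shannon entropy -sum_i p_i ln p_i (k = 1, with 0 ln 0 = 0)."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def abe_entropy(p, h=1e-5):
    """Approximate -d/dx sum_i p_i**x at x = 1 by a central finite difference."""
    def power_sum(x):
        return sum(pi ** x for pi in p if pi > 0)
    return -(power_sum(1 + h) - power_sum(1 - h)) / (2 * h)


if __name__ == "__main__":
    p = [0.5, 0.25, 0.125, 0.125]  # illustrative distribution, not data from the paper
    print(shannon_entropy(p), abe_entropy(p))
```

For this distribution both values agree to many decimal places with $1.75 \ln 2 \approx 1.2130$.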

The paper is structured as follows. Section 2 introduces the new entropy formulation and discusses its motivation. Section 3 analyzes the Dow Jones Industrial Average; additionally, the Jensen-Shannon divergence is adopted in conjunction with the hierarchical clustering technique for analyzing regularities embedded in the time series. Finally, Section 4 outlines the conclusions.

2. Motivation

Let us introduce the following entropy function:

where $\Gamma$ is the gamma function.

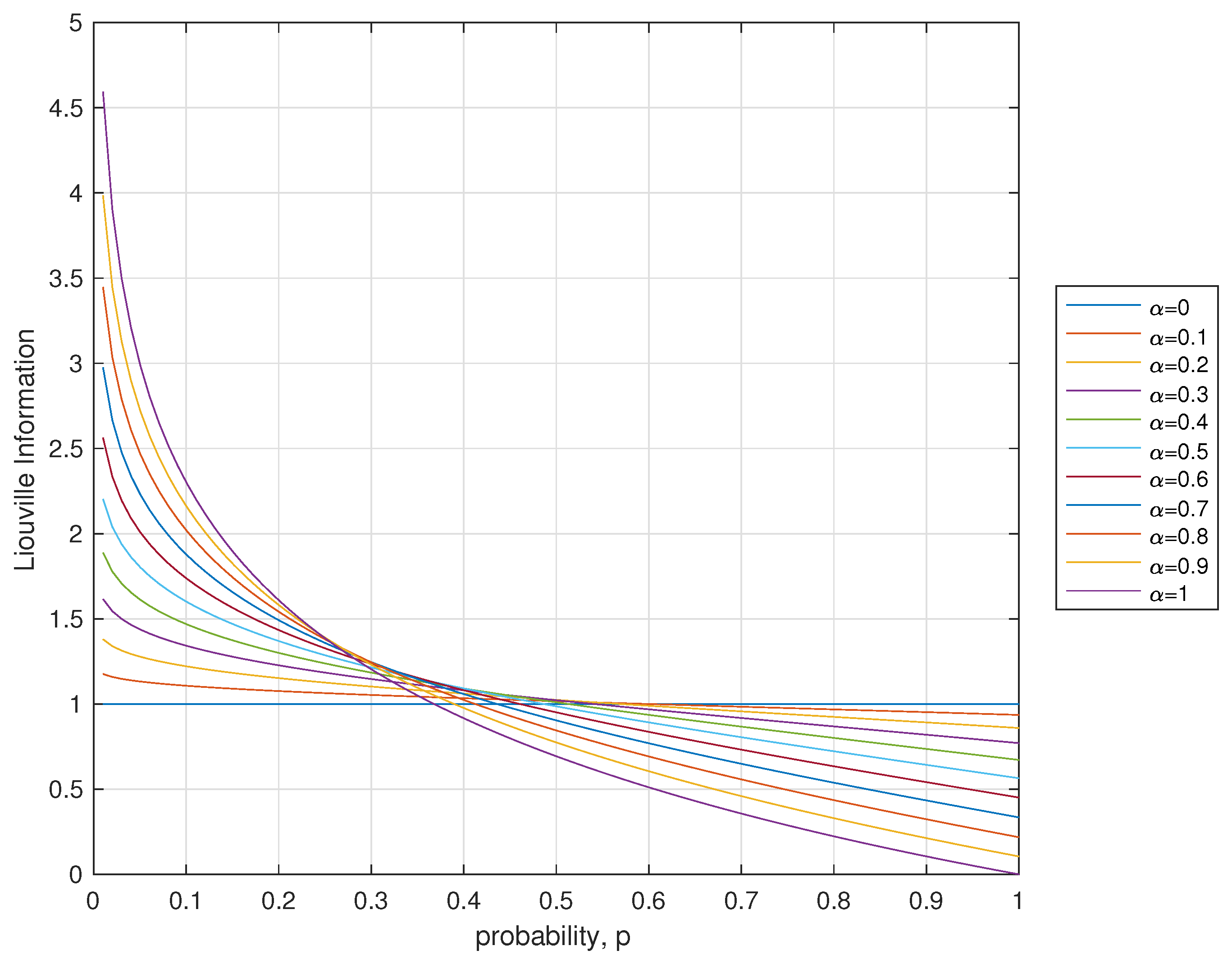

We define the quantity

as the Liouville information (see Figure 1).

Our motivation for defining the entropy function given by Equation (3) stems essentially from the works of Abe [1] and Ubriaco [4]. Indeed, in Section 3 of [4], the author notes (based on Abe’s work [1]) that the Boltzmann-Gibbs and the Tsallis entropies may be obtained by

and

respectively. From this, he replaces the above differential operator with a Liouville fractional derivative (see Section 2.3 in [5]) and then defines a fractional entropy (see (19) in [4]). With this in mind, and taking into account the generalization of the Liouville fractional derivative given by the “fractional derivative of a function with respect to another function” (see Section 2.5 in [5]), we use the latter to define a novel entropy. The Liouville fractional derivative of a function f with respect to another function g (with

) is defined by [5,6],

It is important to keep in mind that our goal is to obtain an explicit formula for the entropy. Therefore, a “good” candidate for g is the exponential function, because $\frac{d}{dx}e^{x} = e^{x}$ and because of the structure of Equation (4). We choose $g(x) = e^{x}$. Let us then calculate Equation (4) with

and

. We obtain

It follows that,

and after using the property

, we finally get

We have, therefore, motivated the definition provided in Equation (3).
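To illustrate the type of calculation behind this motivation, the following worked example is a sketch under stated assumptions, not a reproduction of the derivation above: we assume the left-sided derivative of [5] with lower terminal $-\infty$ and $0<\alpha<1$, take $g(x)=e^{x}$ and a test function $f(x)=e^{\beta x}$ with $\beta>-1$, and write $I^{1-\alpha}_{g}$ and $D^{\alpha}_{g}$ (our notation) for the associated fractional integral and derivative. Substituting $u=e^{t}$:

$$
\begin{aligned}
I^{1-\alpha}_{g} f(x) &= \frac{1}{\Gamma(1-\alpha)}\int_{-\infty}^{x}\frac{e^{t}\,e^{\beta t}}{\left(e^{x}-e^{t}\right)^{\alpha}}\,dt
= \frac{1}{\Gamma(1-\alpha)}\int_{0}^{e^{x}}\frac{u^{\beta}}{\left(e^{x}-u\right)^{\alpha}}\,du
= \frac{\Gamma(\beta+1)}{\Gamma(\beta+2-\alpha)}\,e^{(\beta+1-\alpha)x},\\
D^{\alpha}_{g} f(x) &= \frac{1}{e^{x}}\,\frac{d}{dx}\,I^{1-\alpha}_{g} f(x)
= \frac{(\beta+1-\alpha)\,\Gamma(\beta+1)}{\Gamma(\beta+2-\alpha)}\,e^{(\beta-\alpha)x}
= \frac{\Gamma(\beta+1)}{\Gamma(\beta+1-\alpha)}\,e^{(\beta-\alpha)x}.
\end{aligned}
$$

The middle integral is a Beta integral, and the last step uses $\Gamma(z+1)=z\,\Gamma(z)$ with $z=\beta+1-\alpha$. Letting $\alpha\to 1$ gives $\Gamma(\beta+1)/\Gamma(\beta)=\beta$, i.e., $e^{-x}\frac{d}{dx}e^{\beta x}=\beta\,e^{(\beta-1)x}$, as expected for an ordinary derivative with respect to $e^{x}$.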

Remark 1. We note that, if for some and , then in Equation (3) we have a division by zero. In this case we are obviously thinking about the limit of that function, i.e.,In addition, it is not hard to check that, for , we haveTherefore, we put in Equation (

3):

, with . Remark 2. The entropy function defined in Equation (

3)

brings interesting challenges. For instance, though numerically the functionfor seems to be concave (see Figure 2), a rigorous proof of that fact was not yet obtained. 3. An Example of Application

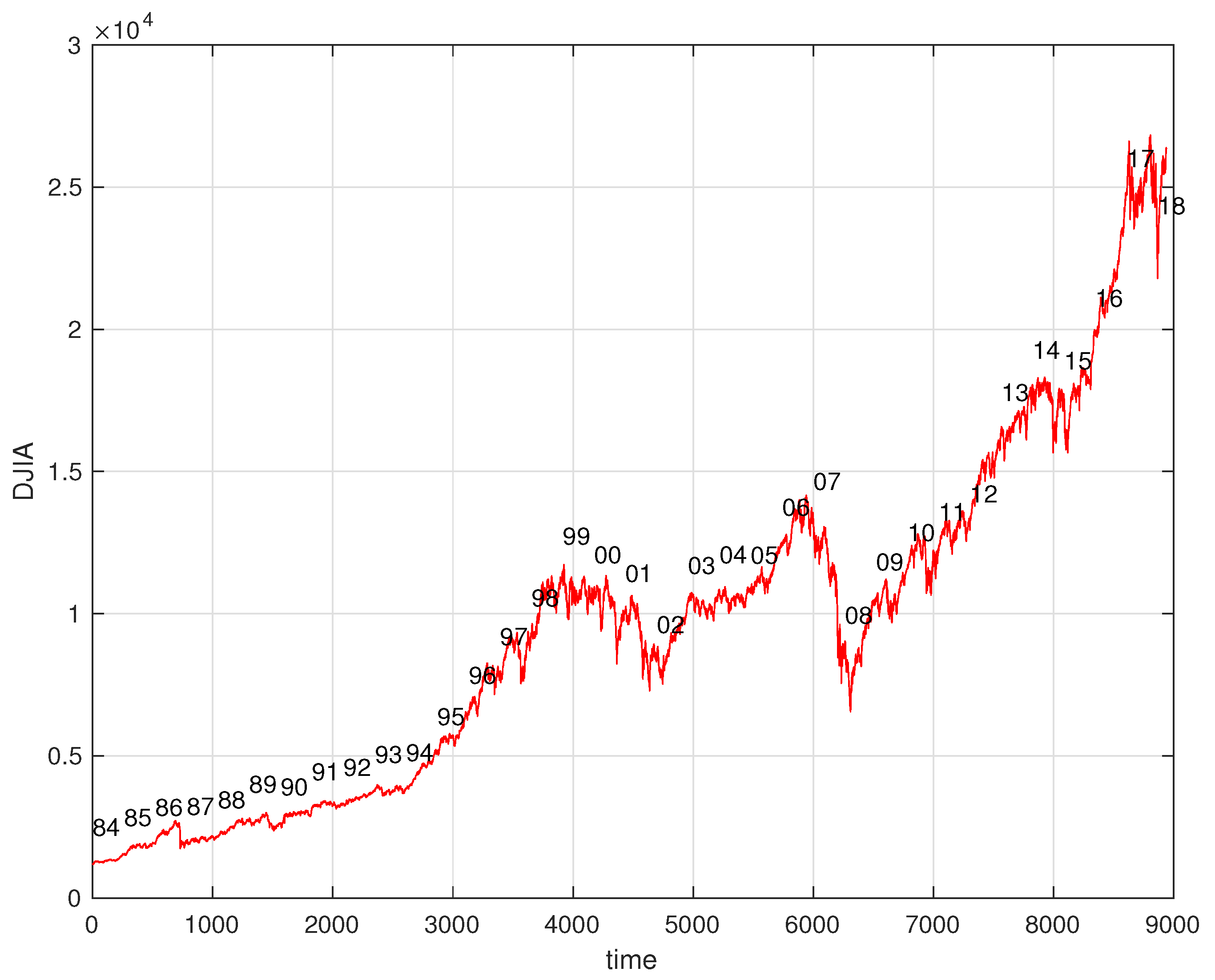

The Dow Jones Industrial Average (DJIA) is an index based on the value of 30 large companies from the United States traded in the stock market during time. The DJIA and other financial indices reveal a fractal nature and has been the topic of many studies using distinct mathematical and computational tools [

7,

8]. In this section we apply the previous concepts in the study of the DJIA in order to verify the variation of the new expressions with the fractional order. Therefore, we start by analyzing the evolution of daily closing values of the DJIA from January 1, 1985, to April 5, 2019, in the perspective of Equation (

3). All weeks include five days and missing values corresponding to special days are interpolated between adjacent values. For calculating the entropy we consider time windows of 149 days performing a total of

years.
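The preprocessing just described can be sketched in Python as follows. This is a minimal sketch under our own assumptions: missing days are marked as `None` and filled by linear interpolation, and the probabilities within each 149-day window are estimated with an equal-width histogram. The bin count `bins`, the window step `step` and all function names are hypothetical. For brevity the sketch computes the classical Shannon entropy; the fractional entropy of Equation (3) would take the place of `histogram_entropy`.

```python
import math


def fill_gaps(values):
    """Linearly interpolate missing entries (None) between known neighbours.

    Leading/trailing gaps are filled with the nearest known value (an assumption).
    """
    out = list(values)
    known = [i for i, v in enumerate(out) if v is not None]
    if not known:
        raise ValueError("series contains no known values")
    for a, b in zip(known, known[1:]):
        for i in range(a + 1, b):
            t = (i - a) / (b - a)
            out[i] = out[a] + t * (out[b] - out[a])
    for i in range(known[0]):
        out[i] = out[known[0]]
    for i in range(known[-1] + 1, len(out)):
        out[i] = out[known[-1]]
    return out


def histogram_entropy(window, bins=20):
    """Shannon entropy (nats) of an equal-width histogram of the window values."""
    lo, hi = min(window), max(window)
    if hi == lo:
        return 0.0  # all values fall into a single bin
    width = (hi - lo) / bins
    counts = [0] * bins
    for x in window:
        counts[min(int((x - lo) / width), bins - 1)] += 1
    n = len(window)
    return -sum(c / n * math.log(c / n) for c in counts if c > 0)


def windowed_entropy(values, window=149, step=1, bins=20):
    """Entropy of each window of `window` consecutive days, advancing `step` days."""
    series = fill_gaps(values)
    return [histogram_entropy(series[s:s + window], bins)
            for s in range(0, len(series) - window + 1, step)]
```

Given a list of daily closing values (with `None` for missing days), `windowed_entropy(values)` returns one entropy value per window position.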

Figures 3 and 4 show the time evolution of the DJIA and the corresponding value of

for

.

We verify that has a smooth evolution with that plays the role of a parameter for adjusting the sensitivity of the entropy index.

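The Jensen-Shannon divergence (JSD) measures the dissimilarity between two probability distributions P and Q and is given by

$$\mathrm{JSD}(P\|Q) = \frac{1}{2}D(P\|M) + \frac{1}{2}D(Q\|M),$$

where $M = \frac{1}{2}(P+Q)$, and $D(P\|M)$ and $D(Q\|M)$ represent the Kullback-Leibler divergence between distributions P and M, and Q and M, respectively.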
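A minimal Python sketch of these two definitions, for discrete distributions given as equal-length lists of probabilities and using natural logarithms (the function names are ours):

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence D(P||Q) = sum_i p_i ln(p_i / q_i), with 0 ln 0 = 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def jensen_shannon(p, q):
    """JSD(P||Q) = D(P||M)/2 + D(Q||M)/2 with M = (P + Q)/2."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same length")
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    # m_i > 0 wherever p_i > 0 or q_i > 0, so no division by zero occurs.
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)
```

With natural logarithms, the JSD is symmetric and lies between 0 (identical distributions) and $\ln 2$ (distributions with disjoint supports).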

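For the classical Shannon information $I(p_i) = -\ln p_i$, and denoting by $p_i$ and $q_i$ the probabilities of P and Q, the JSD can be calculated as:

$$\mathrm{JSD}(P\|Q) = \frac{1}{2}\sum_{i}\left[p_i \ln\frac{2p_i}{p_i+q_i} + q_i \ln\frac{2q_i}{p_i+q_i}\right].$$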

In the case of the Liouville information

the JSD can be calculated as:

For $\alpha = 1$ we obtain the Shannon formulation.

For processing the data produced by the JSD we adopt hierarchical clustering (HC). The main objective of HC is to group together (or to place far apart) objects that are similar (or different) [9,10,11,12]. HC receives as input a symmetric matrix D of distances (e.g., the JSD) between the n items under analysis and produces as output a graph, in the form of a dendrogram or a tree, where the length of the links represents the distance between data objects. There are two alternative algorithms, namely agglomerative and divisive clustering. In the first, each object starts in its own singleton cluster and, at each iteration of the HC scheme, the two most similar (in some sense) clusters are merged; the iterations stop when a single cluster contains all objects. In the second, all objects start in a single cluster and, at each step, HC removes the ‘outsiders’ from the least cohesive cluster; the iterations stop when each object is in its own singleton cluster. Both schemes require an appropriate metric (a measure of the distance between pairs of objects) and a linkage criterion, which defines the dissimilarity between clusters as a function of the pairwise distances between objects.
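The agglomerative scheme can be sketched in a few lines of Python. This naive version recomputes all inter-cluster distances at every iteration, which is adequate for a few dozen objects; it takes a symmetric distance matrix and returns the sequence of merges with their heights, which is the information a dendrogram displays. The function name and the default of average linkage are our assumptions, since the linkage criterion is not specified here.

```python
def agglomerative_clustering(dist, linkage="average"):
    """Naive agglomerative hierarchical clustering.

    dist    -- symmetric n x n matrix (list of lists) of pairwise distances
    linkage -- 'single', 'complete' or 'average'
    Returns a list of merges (cluster_a, cluster_b, height), where each cluster
    is a frozenset of item indices and height is the linkage distance.
    """
    n = len(dist)
    if any(len(row) != n for row in dist):
        raise ValueError("distance matrix must be square")

    def cluster_distance(a, b):
        pairs = [dist[i][j] for i in a for j in b]
        if linkage == "single":
            return min(pairs)
        if linkage == "complete":
            return max(pairs)
        if linkage == "average":
            return sum(pairs) / len(pairs)
        raise ValueError(f"unknown linkage: {linkage}")

    # Start with every object in its own singleton cluster.
    clusters = [frozenset([i]) for i in range(n)]
    merges = []
    while len(clusters) > 1:
        # Find the closest pair of clusters under the chosen linkage.
        best = None
        for x in range(len(clusters)):
            for y in range(x + 1, len(clusters)):
                d = cluster_distance(clusters[x], clusters[y])
                if best is None or d < best[0]:
                    best = (d, x, y)
        d, x, y = best
        a, b = clusters[x], clusters[y]
        merges.append((a, b, d))
        # Replace the two merged clusters by their union.
        clusters = [c for k, c in enumerate(clusters) if k not in (x, y)]
        clusters.append(a | b)
    return merges


if __name__ == "__main__":
    # Toy data: four points on a line at 0, 1, 5 and 6.
    xs = [0, 1, 5, 6]
    D = [[abs(u - v) for v in xs] for u in xs]
    for a, b, h in agglomerative_clustering(D):
        print(sorted(a), sorted(b), h)
```

For the toy matrix, the two close pairs are merged first at height 1, and the final merge joins {0, 1} and {2, 3} at average distance 5.0.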

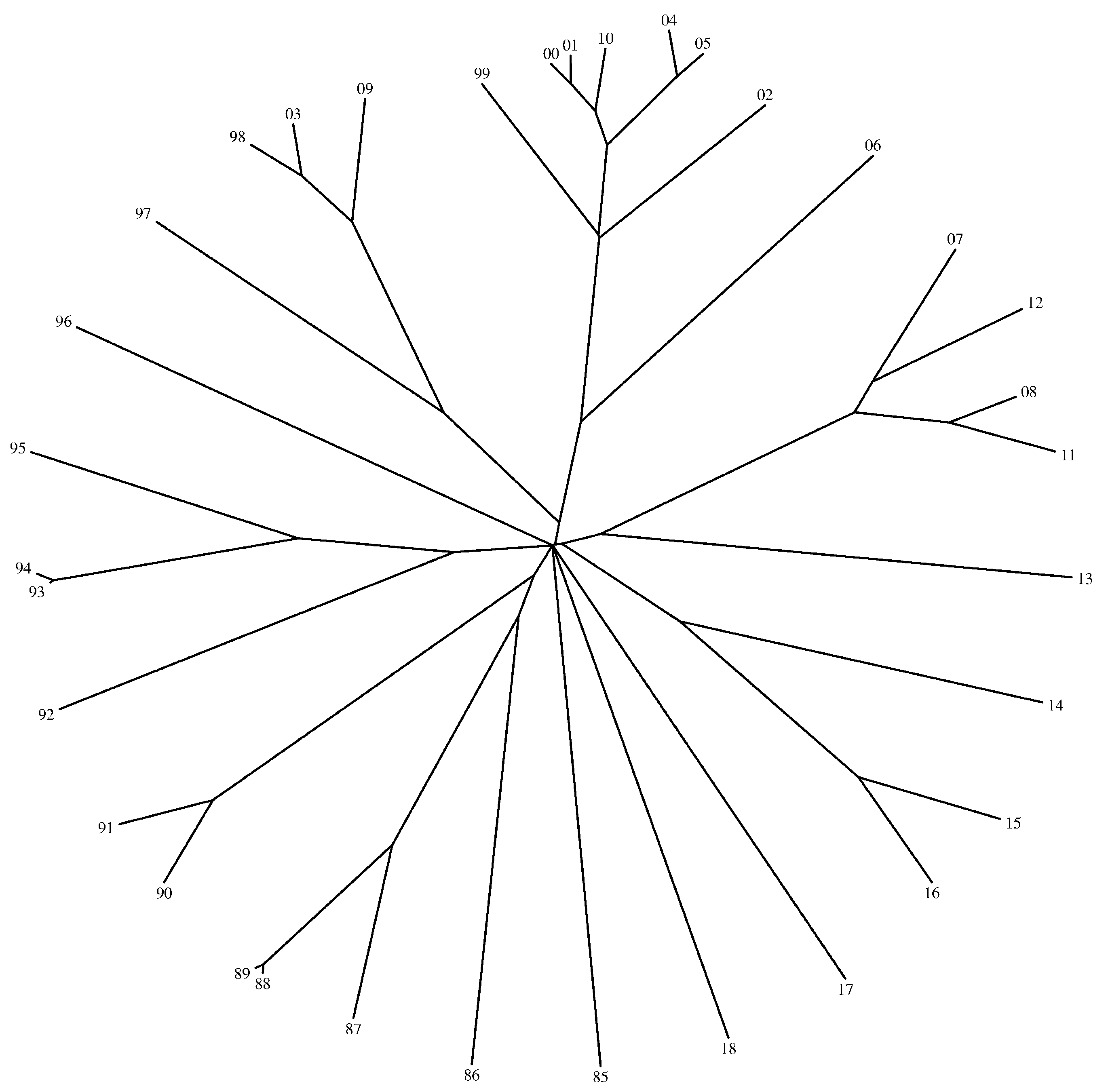

In our case the objects correspond to the years from January 1, 1985, to December 31, 2018, which are compared using the JSD; the resulting matrix D (with dimension 34 × 34) is then processed by means of HC.
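One possible way to build the matrix D is sketched below. It assumes that each year is represented by a histogram of its daily values on a common set of equal-width bins, so that the distributions of different years can be compared bin by bin; the binning, the helper names and the toy data are hypothetical, and only the Shannon JSD is implemented (the Liouville variant would require the corresponding information function).

```python
import math


def jensen_shannon(p, q):
    """Shannon JSD (nats) between two discrete distributions on the same bins."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(x):
        return sum(xi * math.log(xi / mi) for xi, mi in zip(x, m) if xi > 0)

    return 0.5 * kl(p) + 0.5 * kl(q)


def yearly_histograms(values_by_year, bins=20):
    """Normalized histograms of each year's values on common equal-width bins."""
    all_values = [v for vals in values_by_year.values() for v in vals]
    lo, hi = min(all_values), max(all_values)
    width = (hi - lo) / bins or 1.0  # degenerate case: all values equal
    hists = {}
    for year, vals in values_by_year.items():
        counts = [0] * bins
        for v in vals:
            counts[min(int((v - lo) / width), bins - 1)] += 1
        hists[year] = [c / len(vals) for c in counts]
    return hists


def jsd_matrix(values_by_year, bins=20):
    """Return (sorted years, symmetric matrix D with D[a][b] = JSD between years)."""
    hists = yearly_histograms(values_by_year, bins)
    years = sorted(hists)
    D = [[jensen_shannon(hists[a], hists[b]) for b in years] for a in years]
    return years, D
```

With one entry per year from 1985 to 2018 in `values_by_year`, this yields a 34 × 34 matrix that can be passed to any agglomerative HC routine.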

Figures 5, 6 and 7 show the trees generated by hierarchical clustering for the Shannon and the Liouville Jensen-Shannon divergence measures (with

), respectively. The 2-digit labels of the leaves of the trees denote the years.

We note that our goal is not to characterize the dynamics of the DJIA time evolution, since that is outside the scope of this paper. In fact, we adopt the DJIA simply as a prototype data series for assessing the effect of changing the value of $\alpha$ in the JSD and, consequently, in the tree generated by HC. We verify that, in general, the DJIA exhibits a strong similarity between consecutive years. Regarding the use of the Shannon versus the Liouville JSD, we observe that for $\alpha$ close to 1 both entropies lead to identical results, while for $\alpha$ close to 0 the Liouville JSD produces a distinct tree. Therefore, we conclude that the clustering can be adjusted by properly tuning the parameter $\alpha$.