Abstract

Model-free variable selection has attracted increasing interest recently due to its flexibility in algorithmic design and outstanding performance in real-world applications. However, most of the existing statistical methods are formulated under the mean square error (MSE) criterion, and susceptible to non-Gaussian noise and outliers. As the MSE criterion requires the data to satisfy Gaussian noise condition, it potentially hampers the effectiveness of model-free methods in complex circumstances. To circumvent this issue, we present a new model-free variable selection algorithm by integrating kernel modal regression and gradient-based variable identification together. The derived modal regression estimator is related closely to information theoretic learning under the maximum correntropy criterion, and assures algorithmic robustness to complex noise by replacing learning of the conditional mean with the conditional mode. The gradient information of estimator offers a model-free metric to screen the key variables. In theory, we investigate the theoretical foundations of our new model on generalization-bound and variable selection consistency. In applications, the effectiveness of the proposed method is verified by data experiments.

Keywords:

modal regression; maximum correntropy criterion; variable selection; reproducing kernel Hilbert space; generalization error MSC:

62J02; 68T05; 62F35

1. Introduction

Variable selection has attracted increasing attention in the machine learning community due to the massive requirements of high-dimensional data mining. Under different motivations, many variable selection methods have been constructed and shown promising performance in various applications. From the viewpoint of hypothesis function space, there are mainly two types of variable selection approaches with respect to linear assumption and nonlinear additive assumption, respectively. For the linear model assumption, variable selection algorithms are usually formulated based on the least-squares empirical risk and the sparsity-induced regularization, which include Least Absolute Shrinkage and Selection Operator (Lasso) [1], Group Lasso [2] and Elastic net [3] as special examples. For the nonlinear additive model assumption, various additive models have been developed to relax the linear restriction on regression function [4,5]. It is well known that additive models enjoy the flexibility and interpretability of their representation and can remedy the curse of dimensionality of high-dimensional nonparametric regression [6,7,8]. Typical examples of additive models include Sparse Additive Models (SpAM) [9], Component Selection and Smoothing Operator (COSSO) [10] and Group Sparse Additive Models (GroupSpAM) [11]. Most of the above approaches are formulated under Tikhonov regularization scheme with special hypothesis function space (e.g., linear function space, nonlinear function space with additive structure).

More recently, some works have been made in [12,13,14,15] to alleviate the restriction on the hypothesis function space, which just require that the regression function belongs to a reproducing kernel Hilbert space (RKHS). In contrast to the traditional structure assumption on regression function, these methods identify the important variable via the gradient of kernel-based estimator. There are two strategies to improve the model flexibility through the gradient information of predictor. One follows the learning gradient methods in [13,14,16], where the functional gradient is used to construct the loss function for forming the empirical risk. Under this strategy, two model-free variable selection methods are presented by combining the error metric associated with the gradient information of estimator and the coefficient-based -regularizer in [13] and -regularizer in [14], respectively. In particular, the variable selection consistency is also established based on the properties of RKHS and mild parameter conditions (e.g., the regularization parameter, the width of kernel). The other follows the structural sparsity issue in [15,17], where the functional gradient is employed to construct the sparsity-induced regularization term. Rosasco et al. in [17] proposes a least-squares regularization scheme with nonparametric sparsity, which can be solved by an iterative procedure associated with the theory of RKHS and proximal methods. Magda et al. [15] introduces a nonparametric structured sparsity by considering two regularizers based on partial derivatives and offers its optimization with the alternating direction method of multiples (ADMM) [18]. Moreover, to further improve the computation feasibility, a three-step variable selection algorithm is developed in [12] with the help of the three building blocks: kernel ridge regression, functional gradient in RKHS, and a hard threshold. Meanwhile, the effectiveness of the proposed algorithm in [12] is supported by theoretical guarantees on variable selection consistency and empirical verification on simulated data.

Despite the aforementioned methods showing promising performance for identifying the active variables, all of them rely heavily on the least-squares loss under the MSE criterion, which is sensitive to non-Gaussian noise [19,20], e.g., the heavy-tailed noise, the skewed noise, and outliers. In essence, learning methods under MSE aim to find an approximator to the conditional mean based on empirical observations. When the data are contaminated by a complex noise without zero mean, the mean-based estimator is difficult to reveal with the intrinsic regression function. This motivates us to formulate a new variable selection strategy in terms of other criterion with respect to different statistical metric (e.g., the conditional mode). Following the research line in [12,19], we consider a new robust variable selection method by integrating the issues of modal regression (for estimating the conditional mode function) and variable screening based on functional derivatives. To the best of our knowledge, this is the first paper to address robust model-free variable selection.

Statistical models for learning the conditional mode can be traced back to [21,22], which include the local modal regression in [23,24] and the global modal regression in [25,26,27]. Recently, the idea of modal regression has been successfully incorporated into machine learning methods from theoretical analysis [19] and application-oriented studies (e.g., cognitive impairment prediction [20] and cluster estimation [28]). Particularly, Feng et al. [19] considers a learning theory approach to modal regression and illustrates some relations between modal regression and learning under the maximum correntropy criterion [29,30,31]. In addition, Wang et al. [20] formulates a regularized modal regression (RMR) under modal regression criterion (MRC), and establishes its theoretical characteristics on generalization ability, robustness, and sparsity. It is natural to extend the RMR under linear regression assumption to general model-free variable selection setting.

Inspired by recent works in [12,19], we propose a new robust gradient-based variable selection method (RGVS) by integrating the RMR in RKHS and the model-free strategy for variable screening. Here, the kernel-based RMR is used to construct the robust estimator, which can reveal the truly conditional mode, even when facing data with non-Gaussian noise and outliers. Moreover, we evaluate the information quantity of each input variable by computing the corresponding gradient of estimator. Finally, a hard threshold is used to identify the truly active variables after offering the empirical norm of each gradient associated with hypothesis function. The above three steps assure the robustness and flexibility of our new approach.

To better highlight the novelty of RGVS, we present Table 1 to illustrate its relation with other related methods, e.g., linear models (Lasso [1], RMR [20]), additive models (SpAM [9], COSSO [10]), and General variable selection Method (GM) [12].

Table 1.

Properties of different regression algorithms.

Our main contributions can be summarized as follows.

- We formulate a new RGVS method by integrating the RMR in RKHS and the model-free strategy for variable screening. This algorithm can be implemented via the half-quadratic optimization [32]. To our knowledge, this algorithm is the first one for robust model-free variable selection.

- In theory, the proposed method enjoys statistical consistency on regression estimator under much general conditions on data noise and hypothesis space. In particular, the learning rate with polynomial decay is obtained, which is faster than in [20] for linear RMR. It should be noted that our work is established under the MRC, while all previous model-free methods are formulated under the MSE criterion. In addition, variable selection consistency is obtained for our approach under a self-calibration condition.

- In application, the proposed RGVS shows the empirical effectiveness on both simulated and real-world data sets. In particular, our approach can achieve much better performance than the model-free algorithm in [12] for complex noise data, e.g., containing Chi-square noise, Exponential noise, and Student noise. Experimental results together with theoretical analysis support the effectiveness of our approach.

The rest of this paper is organized as follows. After recalling the preliminaries of modal regression, we formulate the RGVS algorithm in Section 2. Then, theoretical analysis, optimization algorithm, and empirical evaluation are provided from Section 3 to Section 5 respectively. Finally, we conclude this paper in Section 6.

2. Gradient-Based Variable Selection in Modal Regression

Let and be a compact input space and an output space, respectively. We consider the following data-generating setting

where , and is a random noise. For the feasibility of theoretical analysis, we denote the intrinsic distribution of generated in (1) as . Let be empirical observations drawn independently according to the unknown distribution . Unlike sparse methods with certain model assumption (e.g., Lasso [1], SpAM [9]), the gradient-based sparse algorithms [12,13] mainly aim at screening out the informative variables according to the gradient information of intrinsic function. For input vector , the variable information is characterized by the gradient function . Clearly, implies that the j-th variable is uninformative [12,17]. Considering an -norm measure on the partial derivatives, we denote the true active set as

where and is the marginal distribution of .

Indeed, all the gradient-based variable selection algorithms [12,13,17] are constructed under Tikhonov regularization scheme in RKHS [33,34]. The RKHS associated with the Mercer kernel K is the closure of the linear span of . Such a Mercer kernel is a symmetric and positive semi-definite function. Denote as the inner product in , the reproducing properties of RKHS means .

2.1. Gradient-Based Variable Selection Based on Kernel Least-Squares Regression

In this subsection, we recall the gradient-based variable selection algorithm in [12] associated with least-squares error metric. When the noise in (1) satisfies (i.e., Gaussian noise), the regression function equals to the conditional mean, which can be represented by

Here denotes the conditional distribution of Y given x. Theoretically, the regression function in (3) is the minimizer of expected least-squares risk

As is unknown in practice, we cannot get directly by minimizing over certain hypothesis space. Given training samples , the empirical risk with respect to the expected risk is denoted by

The gradient-based variable selection algorithm in [12] depends on the estimator defined as below:

where is the regularization parameter and is the kernel-norm of f. The properties of RKHS [33] assure that

where and . Denote and , the closed-form solution is

Following Lemma 1 in [12], for any , we have , where

After imposing the empirical norm on , i.e.,

we get the estimated active set

where is a pre-configured constant for variable selection.

The general variable selection method has shown some theoretical advantages in [12], e.g., the representation flexibility and the computation feasibility. However, the gradient-based method [12] may result in a degraded performance for real-world data without the zero-mean noise condition. Inspired by the modal regression [19,35] to learn the conditional mode, we propose a new robust gradient-based variable selection method under much general noise condition.

2.2. Robust Gradient-Based Variable Selection Based on Kernel Modal Regression

Unlike the traditional zero-mean noise assumption [12,17], the modal regression requires that the conditional mode of random noise is zero for any , i.e.,

where is the conditional density of conditioned on X. In fact, this assumption imposes no restrictions on conditional mean, and can include the heavy-tailed noise, the skewed noise, and outliers. Then, we can verify that the mode-regression function

where denotes the conditional density of Y conditioned on . It is worth noting that is assumed to be unique and existing here. As shown in [19,20], in (6) is the maximizer of the MRC over all measurable functions, which is defined as

The maximizer of is difficult to be obtained since both and are unknown. Fortunately, Theorem 5 of [19] has proved that , where is the density function of at 0 and which can be easily approximated by the kernel density method [20]. With the help of modal kernel for the density estimation, we can formulate an empirical kernel density estimator at 0

Setting , we get the corresponding expected version

In addition, the modal regression also can be interpreted by minimizing a mode-induced error metric [19]. When for any , the mode-induced loss can be defined as

which is related closely with the correntropy-induced loss in [19,36]. Given training samples , we can formulate the RMR in RKHS as

where is a turning parameter that controls the complexity of the hypothesis space, and is the kernel-norm of .

Denote , and . From the representer theorem of kernel methods, we can deduce that

with

From Lemma 1 in [12], we know that for any and ,

The empirical measure on gradient function is

Then, the identified active set can be written as

where is a positive threshold selected under the sample-adaptive tuning framework [37].

3. Generalization-Bound and Variable Selection Consistency

This section establishes the theoretical guarantees on generalization ability and variable selection for the proposed RGVS. Firstly, we introduce some necessary assumptions.

Assumption 1.

The representing function ϕ associated with modal kernel satisfies: ϕ is bounded with , and ; is differentiable with and .

Observe that some smoothing kernels meet Assumption 1, such as Gaussian kernel and Logistic kernel, etc.

Assumption 2.

The conditional density function is second-order differentiable and is bounded.

Assumption 2 has been used in [19,20], which assures upper bound on together with Assumption 1.

Assumption 3.

Let be a space of s-times continuous differentiable functions. Assume that with with , and for a given constant M, the target function satisfies with .

Assumption 3 has been used extensively in learning theory literatures, see, e.g., [38,39,40,41,42,43,44]. In particular, the Gaussian kernel belongs to .

Our error analysis begins with the following inequality in [19], where the relationship between and is provided.

Lemma 1.

Under Assumptions 1–2, there holds

for any measurable function f: , where .

This indicates us to bound the excess risk via estimating . To be specific, we further make an error decomposition as follows.

Lemma 2.

Under Assumptions 1–3, there holds

Proof.

According to the definition of in (9), we have

Then, we can deduce that

This together with Lemma 1 yields the desired result. □

Observe that characterizes the divergence between the data-free risk and the empirical risk . To establish its uniform estimation, we need to give the upper bound of firstly.

According to the definition of , we have

Then,

Lemma 3.

For in (9), there holds

Lemma 3 tells us that with for any , where . This motivates us to measure the capacity of through the empirical covering number [45].

Definition 1.

Suppose that is a set of functions on with the -empirical metric . Then, the -empirical covering number of function set is defined as

where

with

Next, we introduce a concentration inequality established in [46].

Lemma 4.

Let be a function set associated with function t. Suppose that there are some constants and satisfying , for any . If for and , then for any and given , there holds

where is a constant only depending on θ and

Theorem 1.

Under Assumptions 1–3, taking and , we have for any

with confidence at least , where and

Proof.

Denote a function-based random variable set by

Under Assumption 1, for any , we have

Combining the above inequality and the properties of empirical covering number [40,41], we have

where is defined in (13).

According to Assumption 1, there exists . Furthermore, we get

where and are the bounded constants.

Recalling (14) and (15), we know Lemma 4 holds true for with , , , and . That is to say, for any and , with confidence

Combining Lemma 2 and (16) with , we have with confidence at least

where is positive constants independently of .

Setting and , we have and . Putting these selected parameters into (17), we get the desired estimation. □

Theorem 1 provides the upper bound to the excess risk of under the MRC, which extends the previous ERM-based analysis in [19] to the regularized learning scheme. In addition, we can further bound after imposing Assumption 3 in [19].

Corollary 1.

Let the conditions of Theorem 1 be true. Assume that , we have

with confidence at least .

The learning rate derived in Corollary 1 is faster than for the linear regularized modal regression [20]. Meanwhile, it should be noted that some kernel functions meet , e.g., Gaussian kernel, Sigmoid kernel, and Logistic kernel.

Since the proposed RGVS employs the non-convex mode-induced loss, our variable selection analysis is completely different from kernel method with least-squares loss [12]. Here, we introduce the following self-calibration inequality, which addresses that a weak convergence on risk implies a strong convergence in kernel-norm under certain conditions.

Assumption 4.

For any given σ and with , there exists a universal constant such that

Assumption 4 characterizes the concentration of our estimator near with the kernel-norm metric. Indeed, the current restriction is related to Assumption 4 in [12], Theorem 2.7 in [47] for quartile regression, and the so-called RNI condition in [48,49] as well.

In addition, the following condition is required, which implies that the gradient function associated with truly informative variables is separated well from zero. Similar assumptions can also be found in [12,50]. For simplicity, we denote .

Assumption 5.

There exists some constant such that

Proof.

As shown in [12], by direct computation, there holds

where denotes the Hilbert-Schmidt operator on , , , and is a positive constant. The concentration inequality for kernel operator in [17] states that

with confidence .

Meanwhile, with the similar proof of Theorem 1, we can deduce that

with confidence at least , where is a positive constant. Setting and , we further get

with confidence . This excess risk estimation together with Assumption 4 implies that

with confidence , where is a positive constant.

Now we turn to investigate the relationship between in (12) and in (2). Firstly, we suppose there exists some but . That is to say . By Assumption 5 with , we have

which contradicts with (21). This implies that with confidence .

Secondly, we suppose there exists some but . This means and . Then

which contradicts with (21) with confidence . Therefore, the desired property follows by combining these two results. □

Theorem 2 demonstrates that the identified variables are consistent with truly informative variables with probability 1 as . This result guarantees the variable selection performance of our approach, provided that the active variables have enough gradient signal. In the future, it is necessary to further investigate the self-calibration assumption for RMR in RKHS.

When choosing Gaussian kernel as the modal kernel, the modal regression is consistent with regression under the maximum correntropy criterion (MCC) [36]. In terms of the breakdown point theory, Theorem 24 in [19] established the robustness characterization of kernel regression under MCC and Theorem 3 in [36] provided robust analysis for RMR. These results imply the robustness of our approach.

4. Optimization Algorithm

With the help of half-quadratic (HQ) optimization [32], the maximization problem (9) can be transformed into a weighted least-squares problem, and then get the estimator via the ADMM [18]. Indeed, the kernel-based RMR (9) can be implemented directly by the optimization strategy in [36,51] for Gaussian kernel-based modal representation, and in [20] for Epanechnikov kernel-based modal representation. For completeness, we provide the optimization steps of (9) associated with Logistic kernel-based density estimation.

Consider a convex function

As illustrated in [52], a convex function and its convex conjugate function satisfy

According to the Logistic-based representation and (22), we have

Applying (23) into (10), we can obtain the augmented objective function

where , and is the auxiliary vector. Then the maximization problem (24) can be solved by the following iterative optimization algorithm.

According to Theorem 1 in [20], we have . Then, for a fixed , can be updated by . While b is settled down, update via

For and , the problem (25) can be rewritten as

where is an operator that transforms the vector into a diagonal matrix. By setting , we have

When is obtained from (26), we can calculate the gradient-based measure by (11) directly. Then we apply a pre-specified threshold to identify the truly active set . Here, the threshold is selected by the stability-based criterion [37], which include two steps as below. Firstly, the training samples are randomly divided into two subsets, and the identified active variable sets and are obtained under given for the k-th splitting of training samples. Then, the threshold is updated by maximizing the Cohen kappa statistical measure .

The optimization steps of RGVS are summarized in Algorithm 1.

| Algorithm 1: Optimization algorithm of RGVS with Logistic kernel |

| Input: Samples , the modal representation (Logistic kernel), Mercer kernel K; |

| Initialization: , , bandwidth , Max-iter , ; |

| Obtain in RKHS: |

| While not converged and Max-iter; |

| 1. Fixed , update ; |

| 2. Fixed , update ; |

| 3. Check the convergence condition: ; |

| 4. ; |

| End While |

| Output: ; |

| Variable Selection: }. |

| Output: |

5. Empirical Assessments

This section assesses the empirical performance of our proposed method on simulated and real-world datasets. Three variable selection methods are introduced as the baselines, which include Least Absolute Shrinkage and Selection Operator (Lasso) [1], Sparse Additive Models (SpAM) [9], and General Variable Selection Method (GM) [12].

In all experiments, the RKHS associated with Gaussian kernel is employed as the hypothesis function space. For simplicity, we denote and as the proposed RGVS method with Gaussian modal kernel and Logistic modal kernel, respectively. In the simulated experiments, we generate three datasets (with identical sample size) independently as the training set, the validation set, and the testing set, respectively. The hyper-parameters are tuned via grid research on validation set, and the corresponding grids are displayed as follows: the regularization parameter : ; the bandwidth and h: ; the threshold : }.

5.1. Simulated Data

Now we evaluate our approach on two synthetic data used in [12,13]. The first example is a simple additive function and the second one is a function that includes interaction terms.

Example 1.

We generate the p-dimension input by , where both and are extracted from the uniform distribution and . The output is generated by , where and is a random noise. Here, we consider the Gaussian noise , the Chi-square noise , the Student noise , and the Exponential noise , respectively.

Example 2.

This example follows the way of Example 1 to generate data. The differences are that and are extracted from the same distribution and the true function .

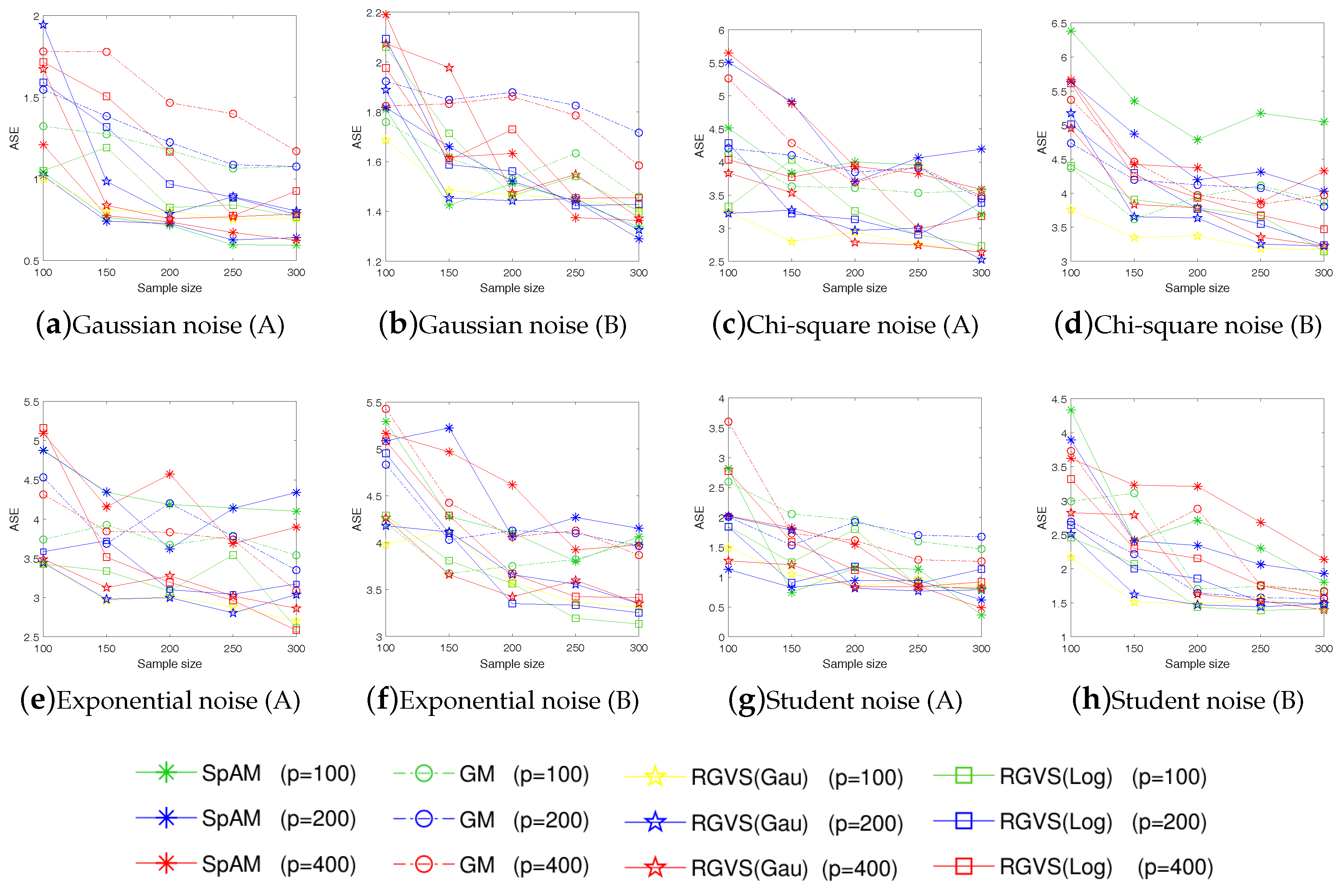

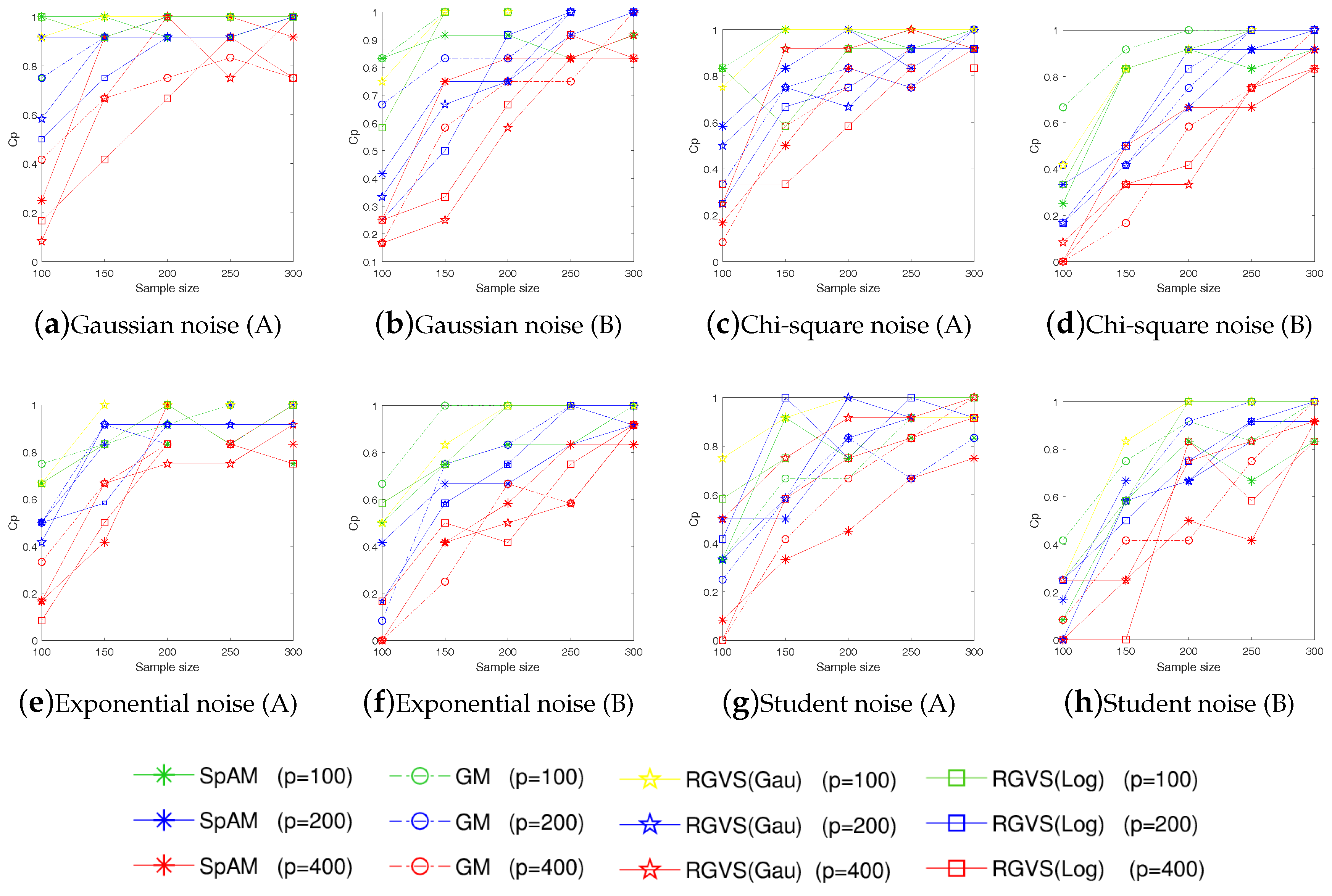

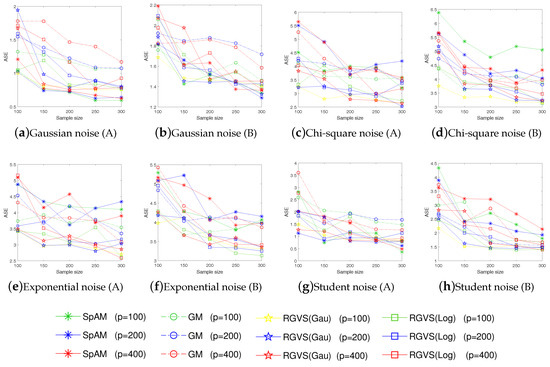

For each evaluation, we consider training set with different size and dimension . To make sure the results are reliable, each evaluation is repeated 50 times. Since the truly informative variables are usually unknown in practice, we evaluate the algorithmic performance according to the average squares error(ASE) defined as . To better evaluate the algorithmic performance, we also adopt some metrics used in [12,13] to measure the quality of model regression, e.g., Cp (correct-fitting), SIZE (the average number of selected variables), TP (the average number of the selected true informative variables), FP (the average number of the selected uninformative variables), Up (under-fitting probability), Op (over-fitting probability). The detail result is summarized in Table 2 and Table 3. To further support the competitive performance of the proposed method, we also provide the experimental results on ASE in Figure 1 and Cp in Figure 2 with and . Figure 1 and Figure 2 show that our method has always performed well with different n.

Table 2.

The averaged performance on simulated data in Example 1 (left) and Example 2 (right).

Table 3.

The averaged performance with simulated data in Example 1.

Figure 1.

The average squares error (ASE) vs. the sample size n under different noise (A and B represent Example 1. and Example 2 respectively).

Figure 2.

The correct-fitting probability (Cp) vs. the sample size n under different noise (A and B represent Example 1. and Example 2 respectively).

Empirical evaluations on simulated examples verify the promising performance of RGVS on variable selection and regression estimation, even for data with non-Gaussian noises (e.g., the Chi-square noise , the Student noise , and the Exponential noise ). Meanwhile, GM and RGVS have similar performance under the Gaussian noise setting, which is consistent with our motivation for algorithmic design.

5.2. Real-World Data

We now evaluate our RGVS on Auto-Mpg and Requirements of buildings, which are all collected from UCI. Since the variable number is very limited for the current datasets, 100 irrelative variables are added, which are generated from the distribution of .

Auto-Mpg data describes the mile per gallon of automobile (MPG). It contains 398 samples and 7 variables, including Cylinders, Displacement, Horsepower, Weight, Acceleration, Model year, and Origin. The second real data sets is obtained to assess the heating load and cooling load requirements of buildings which contains 768 samples and 8 input variables, including Relative Compactness, Surface Area, Wall Area, Roof Area, Overall Height, Orientation, Glazing Area, and Glazing Area Distribution. In particular, it has two response variables (heating load and cooling load).

Now, we use the 5-fold cross validation to tune the hyper-parameters and employ the relative sum of the squared errors (RSSE) to measure learning performance. Here , where is the estimator of f and denotes the average value of f on the test set . Experimental results are reported in Table 4 and Table 5.

Table 4.

Learning performance on Auto-Mpg.

Table 5.

Learning performance on Heating Load (UP) and Cooling Load (DOWN).

As shown in Table 4, our method identifies similar variables as GM, but can achieve the smaller RSSE. At same time, SpAM and Lasso tend to select less variables than GM and RGVS, which may discard the truly informative variable for regression estimation. Table 5 shows RGVS has better performance for both the Heating Load data and the Cooling Load data. All these empirical evaluations validate the effectiveness of our learning strategy consistently.

6. Conclusions

This paper proposes a new RGVS method rooted in kernel modal regression. The main advantages of RGVS are its flexibility on mimicking the decision function and adaptivity on screening the truly active variables. The proposed approach is evaluated by the theoretical analysis on the generalization error and variable selection, and by the empirical results on data experiments. In theory, our method can achieve the polynomial decay rate with . In applications, our model has shown the competitive performance for data with non-Gaussian noises.

Author Contributions

Methodology, B.S., H.C.; software, C.G., Y.W.; validation, B.S., H.X. and Y.W.; formal analysis, C.G., H.C.; writing—original draft preparation, C.G.,Y.W.; writing—review and editing, H.C. and H.X.

Funding

This research was funded in part by the National Natural Science Foundation of China (NSFC) under Grants 11601174 and 11671161.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Tibshirani, R. Regression shrinkage and delection via the Lasso. J. R. Stat. Soc. Ser. B 1996, 58, 267–288. [Google Scholar]

- Yuan, M.; Lin, Y. Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. 2006, 68, 49–67. [Google Scholar] [CrossRef]

- Zou, H.; Hastie, T. Regularization and variable selection via the elastic net. J. R. Stat. Soc. Ser. B 2005, 67, 301–320. [Google Scholar] [CrossRef]

- Stone, C.J. Additive regression and other nonparametric models. Ann. Stat. 1985, 13, 689–705. [Google Scholar] [CrossRef]

- Hastie, T.J.; Tibshirani, R.J. Generalized Additive Models; Chapman and Hall: London, UK, 1990. [Google Scholar]

- Kandasamy, K.; Yu, Y. Additive approximations in high dimensional nonparametric regression via the SALSA. In Proceedings of the International Conference on Machine Learning (ICML), New York, NY, USA, 19–24 June 2016. [Google Scholar]

- Kohler, M.; Krzyżak, A. Nonparametric regression based on hierarchical interaction models. IEEE Trans. Inf. Theory 2017, 63, 1620–1630. [Google Scholar] [CrossRef]

- Chen, H.; Wang, X.; Huang, H. Group sparse additive machine. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 198–208. [Google Scholar]

- Ravikumar, P.; Liu, H.; Lafferty, J.; Wasserman, L. SpAM: Sparse additive models. J. R. Stat. Soc. Ser. B 2009, 71, 1009–1030. [Google Scholar] [CrossRef]

- Lin, Y.; Zhang, H.H. Component selection and smoothing in multivariate nonparametric regression. Ann. Stat. 2007, 34, 2272–2297. [Google Scholar] [CrossRef]

- Yin, J.; Chen, X.; Xing, E.P. Group sparse additive models. In Proceedings of the International Conference on Machine Learning (ICML), Edinburgh, UK, 26 June–1 July 2012. [Google Scholar]

- He, X.; Wang, J.; Lv, S. Scalable kernel-based variable selection with sparsistency. arXiv 2018, arXiv:1802.09246. [Google Scholar]

- Yang, L.; Lv, S.; Wang, J. Model-free variable selection in reproducing kernel Hilbert space. J. Mach. Learn. Res. 2016, 17, 1–24. [Google Scholar]

- Ye, G.; Xie, X. Learning sparse gradients for variable selection and dimension reduction. Mach. Learn. 2012, 87, 303–355. [Google Scholar] [CrossRef]

- Gregorová, M.; Kalousis, A.; Marchand-Maillet, S. Structured nonlinear variable selection. arXiv 2018, arXiv:1805.06258. [Google Scholar]

- Mukherjee, S.; Zhou, D.X. Analysis of half-quadratic minimization methods for signal and image recovery. J. Mach. Learn. Res. 2006, 7, 519–549. [Google Scholar]

- Rosasco, L.; Villa, S.; Mosci, S.; Santoro, M.; Verri, A. Nonparametric sparsity and regularization. J. Mach. Learn. Res. 2013, 14, 1665–1714. [Google Scholar]

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122. [Google Scholar] [CrossRef]

- Feng, Y.; Fan, J.; Suykens, J.A.K. A statistical learning approach to modal regression. arXiv 2017, arXiv:1702.05960. [Google Scholar]

- Wang, X.; Chen, H.; Cai, W.; Shen, D.; Huang, H. Regularized modal regression with applications in cognitive impairment prediction. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 1448–1458. [Google Scholar]

- Parzen, E. On estimation of a probability density function and mode. Ann. Math. Stat. 1962, 33, 1065–1076. [Google Scholar] [CrossRef]

- Chernoff, H. Estimation of the mode. Ann. Inst. Stat. Math. 1964, 16, 31–41. [Google Scholar] [CrossRef]

- Yao, W.; Lindsay, B.G.; Li, R. Local modal regression. J. Nonparametr. Stat. 2012, 24, 647–663. [Google Scholar] [CrossRef]

- Chen, Y.C.; Genovese, C.R.; Tibshirani, R.J.; Wasserman, L. Nonparametric modal regression. Ann. Stat. 2014, 44, 489–514. [Google Scholar] [CrossRef]

- Collomb, G.; Härdle, W.; Hassani, S. A note on prediction via estimation of the conditional mode function. J. Stat. Plan. Inference 1986, 15, 227–236. [Google Scholar] [CrossRef]

- Lee, M.J. Mode regression. J. Econom. 1989, 42, 337–349. [Google Scholar] [CrossRef]

- Sager, T.W.; Thisted, R.A. Maximum likelihood estimation of isotonic modal regression. Ann. Stat. 1982, 10, 690–707. [Google Scholar] [CrossRef]

- Li, J.; Ray, S.; Lindsay, B. A nonparametric statistical approach to clustering via mode identification. J. Mach. Learn. Res. 2007, 8, 1687–1723. [Google Scholar]

- Liu, W.; Pokharel, P.P.; Príncipe, J.C. Correntropy: Properties and applications in non-Gaussian signal processing. IEEE Trans. Signal Process. 2007, 55, 5286–5298. [Google Scholar] [CrossRef]

- Príncipe, J.C. Information Theoretic Learning: Rényi’s Entropy and Kernel Perspectives; Springer: New York, NY, USA, 2010. [Google Scholar]

- Feng, Y.; Huang, X.; Shi, L.; Yang, Y.; Suykens, J.A.K. Learning with the maximum correntropy criterion induced losses for regression. J. Mach. Learn. Res. 2015, 16, 993–1034. [Google Scholar]

- Nikolova, M.; Ng, M.K. Analysis of half-quadratic minimization methods for signal and image recovery. SIAM J. Sci. Comput. 2005, 27, 937–966. [Google Scholar] [CrossRef]

- Aronszajn, N. Theory of Reproducing Kernels. Trans. Am. Math. Soc. 1950, 68, 337–404. [Google Scholar] [CrossRef]

- Cucker, F.; Zhou, D.X. Learning Theory: An Approximation Theory Viewpoint; Cambridge University Press: Cambridge, UK, 2007. [Google Scholar]

- Yao, W.; Li, L. A new regression model: Modal linear regression. Scand. J. Stat. 2013, 41, 656–671. [Google Scholar] [CrossRef]

- Chen, H.; Wang, Y. Kernel-based sparse regression with the correntropy-induced loss. Appl. Comput. Harmon. Anal. 2018, 44, 144–164. [Google Scholar] [CrossRef]

- Sun, W.; Wang, J.; Fang, Y. Consistent selection of tuning parameters via variable selection stability. J. Mach. Learn. Res. 2012, 14, 3419–3440. [Google Scholar]

- Zou, B.; Li, L.; Xu, Z. The generalization performance of ERM algorithm with strongly mixing observations. Mach. Learn. 2009, 75, 275–295. [Google Scholar] [CrossRef]

- Guo, Z.C.; Zhou, D.X. Concentration estimates for learning with unbounded sampling. Adv. Comput. Math. 2013, 38, 207–223. [Google Scholar] [CrossRef]

- Shi, L.; Feng, Y.; Zhou, D.X. Concentration estimates for learning with ℓ1-regularizer and data dependent hypothesis spaces. Appl. Comput. Harmon. Anal. 2011, 31, 286–302. [Google Scholar] [CrossRef]

- Shi, L. Learning theory estimates for coefficient-based regularized regression. Appl. Comput. Harmon. Anal. 2013, 34, 252–265. [Google Scholar] [CrossRef]

- Chen, H.; Pan, Z.; Li, L.; Tang, Y. Error analysis of coefficient-based regularized algorithm for density-level detection. Neural Comput. 2013, 25, 1107–1121. [Google Scholar] [CrossRef]

- Zou, B.; Xu, C.; Lu, Y.; Tang, Y.Y.; Xu, J.; You, X. k-Times markov sampling for SVMC. IEEE Trans. Neural Networks Learn. Syst. 2018, 29, 1328–1341. [Google Scholar] [CrossRef] [PubMed]

- Li, L.; Li, W.; Zou, B.; Wang, Y.; Tang, Y.Y.; Han, H. Learning with coefficient-based regularized regression on Markov resampling. IEEE Trans. Neural Networks Learn. Syst. 2018, 29, 4166–4176. [Google Scholar]

- Steinwart, I.; Christmann, A. Support Vector Machines; Springer Science and Business Media: Berlin/Heidelberg, Germany, 2008. [Google Scholar]

- Wu, Q.; Ying, Y.; Zhou, D.X. Multi-kernel regularized classifiers. J. Complex. 2007, 23, 108–134. [Google Scholar] [CrossRef]

- Steinwart, I.; Christmann, A. Estimating conditional quantiles with the help of the pinball loss. Bernoulli 2011, 17, 211–225. [Google Scholar] [CrossRef]

- Belloni, A.; Chernozhukov, V. ℓ1-penalized quantile regression in high dimensional sparse models. Ann. Stat. 2009, 39, 82–130. [Google Scholar] [CrossRef]

- Kato, K. Group Lasso for high dimensional sparse quantile regression models. arXiv 2011, arXiv:1103.1458. [Google Scholar]

- Lv, S.; Lin, H.; Lian, H.; Huang, J. Oracle inequalities for sparse additive quantile regression in reproducing kernel Hilbert space. Ann. Stat. 2018, 46, 781–813. [Google Scholar] [CrossRef]

- Wang, Y.; Tang, Y.Y.; Li, L. Correntropy matching pursuit with application to robust digit and face recognition. IEEE Trans. Cybern. 2017, 47, 1354–1366. [Google Scholar] [CrossRef] [PubMed]

- Rockafellar, R.T. Convex Analysis; Princeton Univ. Press: Princeton, NJ, USA, 1997. [Google Scholar]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).